Abstract

This paper considers the estimation of population and domain means under model-design approaches. Population elements belong to domains at random. A joint distribution of the variable under study and an auxiliary variable is assumed. Data are observed in a sample selected from a fixed population, and the partition of the sample elements into the domains of the population is also known. Outside the sample, the values of the auxiliary variable are known, but their partition among the domains is not. The domain means are estimated from the likelihood function of the data observed in the sample and outside it. The maximum likelihood method yields regression-type estimators of the domain means of the variable under study, which depend on the posterior probabilities that observations of the auxiliary variable belong to particular domains. Moreover, weighted means of the domain mean estimators are used to estimate the population mean. The accuracy of the derived estimators and of the ordinary estimator is compared in a simulation study. The results of this paper could be useful in economic, demographic and sociological surveys.



1 Introduction

Domains are usually treated as fixed, mutually disjoint subsets of the population. We consider the case in which a population element belongs to a domain with some probability; therefore, the size of a domain is random. Our problem is to estimate the domain means and the population mean of a variable under study y on the basis of a random sample selected from the whole population. All values of an auxiliary variable x are known in the population, while values of y are observed only in the sample. Domains are identifiable in the sample, but not outside of it. Under these assumptions, consider the following motivating example. Let the population consist of firms that have taken out bank loans for investments. The values of the granted loans are observations of the variable y, while the observations of the variable x are the values of the companies' capital. Each company may default on its loan with probability \(p_h\), \(h=1, \ldots ,H\), where the index h identifies the domain consisting of companies classified at approximately the same credit risk. The joint distribution of the variables x and y in the population is treated as a mixture of the distributions of these variables in the domains, weighted by the probabilities \(p_h\). Section 3.2 presents further empirical examples.

Żądło [14] assumed that population elements belong to domains at random and presented several examples. For instance, he considered estimating the income of enterprises that randomly belonged to different investment intervals. Elections provide another example of domains: a domain consists of the people who vote for a specific party. The choice of a particular party is often random, because many voters are not committed to any particular party. The model generating accounting errors (see [13]) also illustrates random domains. The observed value of an accounting document is treated as the outcome of a random variable whose distribution is a mixture of two distribution functions. One of them generates the true accounting amount, and the other generates an accounting amount contaminated with an error. Documents without errors belong to the first domain, and documents with accounting errors belong to the second. Hence, documents belong to the domains at random. This idea, which is based on distribution mixtures, is developed in this paper.

Many auxiliary variables are usually observed during national censuses. Moreover, variables under study observed during a census can be used as auxiliary variables in survey sampling on a subsequent occasion, so we can expect them to be highly correlated with the variables under study. Apart from the above examples, there are many populations in which all values of the auxiliary variable are observed; they can be found in economic, demographic, agricultural and other official registers.

In this paper, the model or model-randomization approaches are taken into account (see, e.g., [8] or [9]). Estimation of domain means is usually more accurate when it is supported by data on auxiliary variables observed outside the sample, but usually under the assumption that their distribution among the domains is known (see the monographs on small area estimation, e.g., [10]). The models formulated in this paper are close to those considered by Chambers and Skinner [2]. Estimators of domain averages are derived by the maximum pseudo-likelihood method; more precisely, a variant of the likelihood method based on incomplete data on the variable under study is adopted to estimate the parameters of the distribution mixture. Our analysis is mainly based on [4, 6, 7].

The most important results of the paper are as follows:

- The pseudo-likelihood function is formulated for estimating the parameters of a distribution mixture when the data are observed in a sample selected with preassigned, possibly unequal, inclusion probabilities (Sect. 2.2).
- On the basis of this function, regression- and ratio-type estimators of domain means are derived for bivariate normal components of the distribution mixture (Sect. 3.1 and Appendix).
- These results are generalized to the case of a multidimensional auxiliary variable (Sect. 3.1).
- A linear combination of the regression (ratio) estimators is used to estimate the population mean (Sect. 3.1).
- The estimation accuracy is examined in a simulation study (Sect. 3.2).

2 General Results

2.1 Model-Design Approach

Let U denote a population of size N partitioned into H mutually disjoint domains \(U_h\), \(h=1, \ldots ,H\), \(1<H<N\). Let \([y_k,\textbf{x}_k,\textbf{z}_{k*}]\) be the k-th observation of the variable under study, the auxiliary variable vector and the vector identifying the domains, respectively, where \(\textbf{x}_k=[x_{k,1} \ldots x_{k,m}]\), \(1\le m<N\), and \(\textbf{z}_{k*}=[z_{k,1} \ldots z_{k,h} \ldots z_{k,H}]\), \(k=1, \ldots ,N\). Let \(\textbf{z}^{(h)}\) be the row vector whose H elements are all equal to zero except the h-th element, which is equal to one; it identifies the h-th domain. When \(\textbf{z}_{k*}=\textbf{z}^{(h)}\), the k-th population element is in the h-th domain.

Let us assume that \([y_k \textbf{x}_k \textbf{z}_{k*}]\) is an observation of a random vector \([Y_k \textbf{X}_k \textbf{Z}_{k*}]\) attached to the k-th population element, where \(\textbf{X}_k=[X_{k,1} \ldots X_{k,m}]\) and \(\textbf{Z}_{k*}=[Z_{k,1} \ldots Z_{k,H}]\). The random vectors \([Y_k \textbf{X}_k \textbf{Z}_{k*}]\), \(k\in U\), are independent and identically distributed. Let \(P(\textbf{Z}_{k*}=\textbf{z}^{(h)})=p_h\), \(h=1, \ldots ,H\), \(\sum _{h=1}^Hp_h=1\). The random vector \(\textbf{Z}_{k*}\) has a multinomial distribution with parameters \((1,p_1, \ldots ,p_H)\) (see, e.g., [7]). The event \(\{Y_k<y_k,\textbf{X}_k<\textbf{x}_k\}\) together with the feature \(\{\textbf{Z}_{k*}=\textbf{z}^{(h)}\}\), written as \(\{Y_k<y_k,\textbf{X}_k<\textbf{x}_k,\textbf{Z}_{k*}=\textbf{z}^{(h)}\}\), concerns the h-th domain, and
\[P(Y_k<y_k,\textbf{X}_k<\textbf{x}_k,\textbf{Z}_{k*}=\textbf{z}^{(h)})=p_hF(y_k,\textbf{x}_k|\textbf{Z}_{k*}=\textbf{z}^{(h)}).\]

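The events \(\{Y_k<y_k,\textbf{X}_k<\textbf{x}_k,\textbf{Z}_{k*}=\textbf{z}^{(h)}\}\) and \(\{Y_k<y_k,\textbf{X}_k<\textbf{x}_k,\textbf{Z}_{k*}=\textbf{z}^{(t)}\}\) are mutually exclusive for all \(h\ne t\), \(h,t=1, \ldots ,H\), and, because \(\textbf{Z}_{k*}\) always equals exactly one of the vectors \(\textbf{z}^{(h)}\), their union over h is the event \(\{Y_k<y_k,\textbf{X}_k<\textbf{x}_k\}\). This and the law of total probability let us write the following:
\[F(y_k,\textbf{x}_k)=P(Y_k<y_k,\textbf{X}_k<\textbf{x}_k)=\sum _{h=1}^Hp_hF(y_k,\textbf{x}_k|\textbf{Z}_{k*}=\textbf{z}^{(h)}),\]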

where \(F(y_k,\textbf{x}_k|\textbf{Z}_{k*}=\textbf{z}^{(h)})\) is the conditional distribution function. When the variables \([Y_k\textbf{X}_k]\) are continuous, we have:
\[f(y_k,\textbf{x}_k)=\sum _{h=1}^Hp_hf(y_k,\textbf{x}_k|\textbf{Z}_{k*}=\textbf{z}^{(h)}),\]

where \(f(y_k,\textbf{x}_k|\textbf{Z}_{k*}=\textbf{z}^{(h)})\), \(h=1, \ldots ,H\), \(k\in U\), are density functions. Because the population elements are independent, our model defines the joint distribution function \(F(y,\textbf{x})=\prod _{k\in U}F(y_k,\textbf{x}_k)\) or, equivalently, the density function \(f(y,\textbf{x})=\prod _{k\in U}f(y_k,\textbf{x}_k)\).
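The mixture density and the product form of the likelihood can be evaluated numerically. The following Python sketch assumes bivariate normal components (the case treated in Sect. 3), each parameterized as (mu_y, mu_x, var_y, var_x, rho); the function names and parameter values are illustrative only and are not taken from the paper.

```python
from math import exp, log, pi, sqrt


def bivariate_normal_pdf(y, x, mu_y, mu_x, var_y, var_x, rho):
    """Component density f(y, x | Z = z^(h)) of a bivariate normal distribution."""
    zy = (y - mu_y) / sqrt(var_y)
    zx = (x - mu_x) / sqrt(var_x)
    q = (zy * zy - 2.0 * rho * zy * zx + zx * zx) / (1.0 - rho * rho)
    return exp(-0.5 * q) / (2.0 * pi * sqrt(var_y * var_x * (1.0 - rho * rho)))


def mixture_pdf(y, x, p, components):
    """Mixture density f(y, x) = sum_h p_h f(y, x | Z = z^(h))."""
    return sum(p_h * bivariate_normal_pdf(y, x, *theta_h)
               for p_h, theta_h in zip(p, components))


def log_likelihood(data, p, components):
    """ln prod_k f(y_k, x_k) = sum_k ln f(y_k, x_k); valid because the elements are independent."""
    return sum(log(mixture_pdf(y, x, p, components)) for y, x in data)


# Hypothetical two-domain example: p = (0.4, 0.6), components (mu_y, mu_x, var_y, var_x, rho).
p = [0.4, 0.6]
components = [(4.0, 8.0, 1.0, 1.0, 0.5), (11.2, 14.0, 1.0, 1.0, 0.8)]
print(log_likelihood([(4.1, 7.9), (11.0, 14.3)], p, components))
```

Working on the log scale turns the product over \(k\in U\) into a sum, which is the form maximized in Sect. 2.2.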

Under the assumptions of this model, the random vector \(\sum _{k=1}^N\textbf{Z}_{k*}\) has a multinomial distribution with parameters \([N,p_1, \ldots ,p_H]\). Moreover, the column vector \(\textbf{Z}_{*h}=[Z_{1,h} \ldots Z_{N,h}]^T\) identifies the h-th domain of size \(N_h=\sum _{k\in U}Z_{k,h}\), where \(0\le N_h\le N\). Because \(N_h\) has a binomial distribution with parameters \((N, p_h)\), the expected domain sizes are \(E(N_h)=Np_h\), \(h=1, \ldots ,H\). Hence, both the sizes and the compositions of the domains are random: the multinomial model partitions the population into disjoint subsets called domains, and each realization of this partition may be different.
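The random partition can be simulated directly. This sketch, using only Python's standard library, draws an independent multinomial\((1,p_1,\ldots,p_H)\) label for every element and checks by Monte Carlo that the average domain sizes are close to \(Np_h\); the values of N, p and the seed are arbitrary illustrative choices.

```python
import random
from collections import Counter


def draw_domain_labels(n_units, probs, rng):
    """Independent multinomial(1, p_1, ..., p_H) draw for each element: returns a domain index h (0-based)."""
    return rng.choices(range(len(probs)), weights=probs, k=n_units)


def domain_sizes(labels, n_domains):
    """N_h = number of elements assigned to domain h."""
    counts = Counter(labels)
    return [counts.get(h, 0) for h in range(n_domains)]


def average_domain_sizes(n_units, probs, replications, seed=1):
    """Monte Carlo mean of (N_1, ..., N_H) over independent random partitions of the population."""
    rng = random.Random(seed)
    totals = [0] * len(probs)
    for _ in range(replications):
        sizes = domain_sizes(draw_domain_labels(n_units, probs, rng), len(probs))
        totals = [a + b for a, b in zip(totals, sizes)]
    return [a / replications for a in totals]


# With N = 1500 and p = (0.2, 0.3, 0.5) the averages should be near N * p_h = (300, 450, 750).
print(average_domain_sizes(1500, [0.2, 0.3, 0.5], replications=500))
```

Each replication yields a different partition, which is exactly the sense in which domain sizes and compositions are random here.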

Our main aim is to estimate the expected (domain mean) value \(\mu _h=E(Y_k|\textbf{Z}_{k*}=\textbf{z}^{(h)})\) and the probabilities \(p_h\), \(h=1, \ldots ,H\). Additionally, estimators of the expected value (population mean) \(\mu =\sum _{h=1}^Hp_h\mu _h\) are proposed.

To this end, a sample s of size \(n\le N\) is selected from the population U according to a sampling design \(P(s)\ge 0\), \(s\in {{\mathscr {S}}}\), where \({{\mathscr {S}}}\) is the sampling space and \(\sum _{s\in {{\mathscr {S}}}}P(s)=1\). The inclusion probabilities of the sampling design are \(\pi _k=\sum _{\{s: k\in s,s\in {{\mathscr {S}}}\}}P(s)\), \(k=1, \ldots ,N\). Let \(\underline{s}=U-s\) be the complement of s in U. Moreover, let \(s=\bigcup _{h=1}^Hs_h\), where \(s_h\subseteq U_h\) has size \(n_h\), so that \(n=\sum _{h=1}^Hn_h\) is the size of s. We assume that \(1<n_h\le N_h\) for \(h=1, \ldots ,H\). If \(s=U\), then \(\underline{s}\) is the empty set.
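The definition of \(\pi _k\) as a sum of \(P(s)\) over the samples containing unit k can be checked by enumeration on a toy population. The sketch below assumes a design stored as a dictionary from samples (frozensets of unit labels) to probabilities; this representation and the toy designs are illustrative choices.

```python
from itertools import combinations
from math import comb


def inclusion_probabilities(population, design):
    """pi_k = sum of P(s) over all samples s that contain unit k.

    `design` maps each sample (a frozenset of unit labels) to its probability P(s)."""
    pi = {k: 0.0 for k in population}
    for sample, prob in design.items():
        for k in sample:
            pi[k] += prob
    return pi


def srswor_design(population, n):
    """Simple random sampling without replacement: each of the C(N, n) samples has P(s) = 1 / C(N, n)."""
    p_s = 1.0 / comb(len(population), n)
    return {frozenset(s): p_s for s in combinations(population, n)}


U = list(range(1, 7))                      # toy population with N = 6
design = srswor_design(U, 2)               # sampling space of C(6, 2) = 15 samples
print(sum(design.values()))                # 1, up to floating-point rounding
print(inclusion_probabilities(U, design))  # every pi_k equals n / N = 1/3, up to rounding
```

Full enumeration is only feasible for tiny populations, but it makes the definition concrete and gives \(\pi _k=n/N\) for simple random sampling without replacement.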

2.2 Maximum Likelihood Estimation

A domain can be identified only after the variable \(\textbf{Z}_{k*}\) has been observed, that is, in the sample s. The density function of the conditional distribution of \([Y_k \textbf{X}_k]\) given \(\textbf{Z}_{k*}=\textbf{z}^{(h)}\) will be denoted by \(f_h(y_k,\textbf{x}_k,\theta _h)\), \(h=1, \ldots ,H\), where \(\theta _h=[\theta _{h,1} \ldots \theta _{h,m}]\), \(\theta _h\subseteq R^m\), \(\theta =[\theta _1 \cdots \theta _h \cdots \theta _H]\). Therefore, the distribution of the variables in the whole population is the following distribution mixture:
\[f(y_k,\textbf{x}_k,\Theta )=\sum _{h=1}^Hp_hf_h(y_k,\textbf{x}_k,\theta _h),\]

where \(\Theta =\{\textbf{p}\cup \theta \}\), \(\textbf{p}=[p_1 \ldots p_H]\). We assume that only the values \(\textbf{x}_1, \ldots , \textbf{x}_k, \ldots ,\textbf{x}_N\) are observed in the whole population before the sample is selected. The marginal distribution of \(\textbf{X}_k\) is as follows:
\[g(\textbf{x}_k,\Theta _x)=\sum _{h=1}^Hp_hg_h(\textbf{x}_k,\theta _{x,h}),\]

where \(g_h(\textbf{x}_k,\theta _{x,h})=\int _{R}f_h(y_k,\textbf{x}_k,\theta _{h})\textrm{d}y_k\), \(\theta _{x,h}\subseteq \theta _{h}\) and \(\Theta _x\subseteq \Theta \), with \(\theta _x=[\theta _{x,1} \cdots \theta _{x,H}]\) and \(\Theta _x=\{\theta _x,\textbf{p}\}\).

The sample contains the observations \([y_k\; \textbf{x}_k\; \textbf{z}_{k*}]\) of the random vectors \([Y_k\; \textbf{X}_k\; \textbf{Z}_{k*}]\), \(k\in s\). Let \({\textbf {d}}_s=\{[y_k\; {\textbf {x}}_{k*}\; {\textbf {z}}_{k*}],k\in s\}\) and \({\textbf {x}}_{\underline{s}}=\{{\textbf {x}}_{k*},k\in \underline{s}\}\). Hence, the sample contains complete data on the distribution mixture, while the data outside the sample are incomplete.

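When the sample is selected according to preassigned inclusion probabilities, the pseudo-likelihood approach (see [3, 8, 12]) leads to the following function:
\[l({\textbf {d}}_s,{\textbf {x}}_{\underline{s}})=l_1({\textbf {d}}_s)+l_2({\textbf {x}}_{\underline{s}}),\]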

where the complete and incomplete log-likelihood functions are as follows, respectively:

where \(n_h\) is the size of the sub-sample \(s_h\subseteq U_h\) of \(s=\bigcup _{h=1}^Hs_h\), \(N_h\ge n_h>1\) and \(n=\sum _{h=1}^Hn_h\). It is easy to show that \(E_P(l_1({\textbf {d}}_s))=l_1({\textbf {d}}_U)\) and \(E_P(l_2({\textbf {x}}_{\underline{s}}))=l_2({\textbf {x}}_{U})\), where

This means that the sample log-likelihood functions \(l_1({\textbf {d}}_s)\) and \(l_2({\textbf {x}}_{\underline{s}})\) are design-unbiased estimators of the population log-likelihood functions \(l_1({\textbf {d}}_U)\) and \(l_2({\textbf {x}}_{U})\), respectively.
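Design-unbiasedness of this kind rests on the Horvitz-Thompson argument: a unit's term weighted by the inverse of the probability that the unit appears in the summed set has design expectation equal to the unweighted term. The sketch below checks this by full enumeration under simple random sampling without replacement. It assumes that each sample term is weighted by \(1/\pi _k\) and, purely for illustration, that each out-of-sample term is weighted by \(1/(1-\pi _k)\), the probability that unit k falls in \(\underline{s}\); these weights are assumptions, and the per-unit contributions are hypothetical numbers.

```python
from itertools import combinations
from math import comb, log


def design_expectations(values, n):
    """Enumerate all SRSWOR samples of size n (so pi_k = n / N) and return the design expectations of
    the in-sample sum  sum_{k in s} v_k / pi_k  and the out-of-sample sum  sum_{k not in s} v_k / (1 - pi_k)."""
    N = len(values)
    pi = n / N
    p_s = 1.0 / comb(N, n)
    e_in = e_out = 0.0
    for s in combinations(range(N), n):
        in_s = set(s)
        e_in += p_s * sum(values[k] / pi for k in in_s)
        e_out += p_s * sum(values[k] / (1.0 - pi) for k in range(N) if k not in in_s)
    return e_in, e_out


# Hypothetical per-element log-likelihood contributions ln f(y_k, x_k) for N = 7 elements.
contrib = [log(v) for v in (0.30, 0.10, 0.25, 0.05, 0.40, 0.20, 0.15)]
e_in, e_out = design_expectations(contrib, n=3)
print(e_in, e_out, sum(contrib))  # the three numbers agree up to floating-point error
```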

Maximizing the log-likelihood function \(l({\textbf {d}}_s,{\textbf {x}}_{\underline{s}})\) directly is usually complicated and admits no exact closed-form solution, so an approximation method has to be applied. We therefore use the simpler iterative method known as the EM algorithm (see [4, 6, 7]). According to this method, the function \( l({\textbf {d}}_s,{\textbf {x}}_{\underline{s}})\) is replaced with the following:

where

\(\sum _{h=1}^H\hat{\tau }_{h,k}^{(t)}=1\), and \(\hat{\tau }^{(t)}_{h,k}\) is the posterior probability that the k-th element (\(k\in \underline{s}\)) belongs to the h-th domain. Moreover, \(\hat{\tau }_h^{(t)}\) is the estimator of the expected size of the h-th domain in the set \(\underline{s}\). The Appendix outlines how to obtain the optimal parameter values \(\hat{\Theta }_x^{(t+1)}\) and the following estimators of the probabilities \(p_h\):
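Posterior probabilities of this kind have the usual E-step form for finite mixtures (see [7]): the current \(\hat{p}_h^{(t)}\) times the component density of \(\textbf{x}_k\), normalized over h. The sketch below assumes a single auxiliary variable with univariate normal component densities \(g_h\); the current estimates are hypothetical inputs, and any design weighting that may enter the paper's \(\hat{\tau }_h^{(t)}\) is not included.

```python
import math


def normal_pdf(x, mean, sd):
    """Univariate normal density, used here as the marginal component g_h(x)."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def posterior_probabilities(x_out, p, means, sds):
    """E-step: tau[k][h] = p_h g_h(x_k) / sum_j p_j g_j(x_k) for each element k outside the sample."""
    tau = []
    for x in x_out:
        joint = [p_h * normal_pdf(x, m, s) for p_h, m, s in zip(p, means, sds)]
        total = sum(joint)
        tau.append([j / total for j in joint])
    return tau


def expected_domain_counts(tau):
    """tau_h = sum_k tau[k][h]: expected number of out-of-sample elements in domain h."""
    return [sum(row[h] for row in tau) for h in range(len(tau[0]))]


# Hypothetical current estimates p^(t), means and standard deviations of x in three domains.
tau = posterior_probabilities([7.5, 14.2, 19.8], p=[1/3, 1/3, 1/3], means=[8, 14, 20], sds=[1, 1, 1])
print(expected_domain_counts(tau))  # the counts add up to 3, the number of out-of-sample elements
```

Each row of tau sums to one, which mirrors the constraint \(\sum _{h}\hat{\tau }_{h,k}^{(t)}=1\) stated above.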

where

The statistics \(\hat{N}\) and \(\hat{\tau }^{(t)}\) are estimators of N. In general, \(\hat{\Theta }^{(t+1)}\) can be obtained as roots of the first subsystem of the equation system (21). Moreover, \(\tilde{N}^{(t)}_h=N\hat{p}_h^{(t)}\) is the estimator of the expected domain size \(Np_h\). The initial values of \(\hat{\Theta }^{(t)}\) and \(\hat{p}^{(t)}_h\) are, respectively, the roots of the system \(\frac{\partial l^{(t)}({\textbf {d}}_s,{\textbf {x}}_{\underline{s}})}{\partial p_h}={\textbf {0}}\) and \(\hat{p}^{(t)}_h=\frac{\hat{N}_h}{\hat{N}}\), \(h=1, \ldots ,H\).

When \(\pi _k\), \(k\in U\), depend on variables from \({\textbf {X}}\), the likelihood function conditional on \({\textbf {X}}={\textbf {x}}\) needs to be considered. Several aspects of this problem were discussed by Pfeffermann [8], who reviewed an extensive literature. To simplify our considerations, we therefore assume that the inclusion probabilities \(\pi _k\), \(k\in U\), as well as \(p_h\), \(h=1, \ldots ,H\), may depend on a non-random auxiliary variable that is different from the variables in \({\textbf {X}}\).

Simple random sampling without replacement does not depend on the auxiliary variables. In this case \(\pi _k=\frac{n}{N}\) for \(k\in U\), and the estimator (7) simplifies to the following form:

3 Estimation for a Bivariate Normal Model

3.1 Estimators

We assume that the components of the distribution mixture are two-dimensional normal distributions with parameters \(N(\mu _{y,h},\mu _{x,h},\sigma ^2_{y,h},\sigma ^2_{x,h},\rho _h)\), \(h=1, \ldots ,H\).

In the Appendix, we derive estimators of the domain means \(\mu _{y,h}\) and of the fractions \(p_h\) of population elements in the domains, \(h=1, \ldots ,H\), according to the EM algorithm and expressions (4)–(8). From expressions (6) and (7), we write for \(t=0,1,2, \ldots \) the following:

where \(\hat{p}_h\) and \(p_h^{(0)}=\bar{p}\) are explained by expressions (7) and (8).

The following regression-type estimators of \(\mu _{y,h}\) are derived in the Appendix:

where \(t=0,1,2, \ldots \),

When the intercept of the linear regression of y on x is approximately zero, the following ratio-type estimator can be used:

In particular, for a simple random sample drawn without replacement, when \(\pi _k=\frac{n}{N}\) for all \(k\in U\), we have:

The proposed regression-type estimators generalize to the case of a multidimensional auxiliary variable as follows. Let

Let \(\mathbf {I_a}\) be the identity matrix of order a and \(\mathbf {J_a}\) the a-element column vector whose elements are all equal to one. The rows of the matrix \(\textbf{X}\) can be rearranged in such a way that

These let us generalize the estimators defined by expressions (12) and (13) as follows:

where \(t=0,1,2, \ldots \) and \(\bar{\textbf{x}}_{\underline{s},h}^{(0)}=\bar{\textbf{x}}_{s_h}\), \(\hat{\Sigma }_{xx,h}^{(0)}=\Sigma _{xx,s_h}\).
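The multivariate estimators remain of regression type. As a hedged illustration, the sketch below computes the classical multiple-regression form \(\bar{y}_{s_h}+(\bar{\textbf{x}}_{target}-\bar{\textbf{x}}_{s_h})\textbf{b}_h\), with \(\textbf{b}_h=\textbf{S}_{xx}^{-1}\textbf{s}_{xy}\) estimated from the domain sub-sample \(s_h\); that the paper's expressions reduce exactly to this form is an assumption. The argument xbar_target stands for an estimate of the domain mean of x, such as a posterior-weighted mean like \(\bar{\textbf{x}}_{\underline{s},h}^{(t)}\), and is supplied by the caller. Only the standard library is used, so a small Gaussian-elimination solver is included.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def regression_estimate(y_s, X_s, xbar_target):
    """ybar_s + (xbar_target - xbar_s) . b, where b = S_xx^{-1} s_xy from the domain sub-sample."""
    n, m = len(y_s), len(X_s[0])
    ybar = sum(y_s) / n
    xbar = [sum(row[j] for row in X_s) / n for j in range(m)]
    dx = [[row[j] - xbar[j] for j in range(m)] for row in X_s]  # centered x values
    dy = [v - ybar for v in y_s]                                 # centered y values
    sxx = [[sum(d[i] * d[j] for d in dx) for j in range(m)] for i in range(m)]
    sxy = [sum(d[i] * e for d, e in zip(dx, dy)) for i in range(m)]
    b = solve(sxx, sxy)  # the common factor 1/(n - 1) cancels, so it is omitted
    return ybar + sum((xbar_target[j] - xbar[j]) * b[j] for j in range(m))


# Hypothetical sub-sample with two auxiliary variables and an exact linear relation y = 1 + 2 x1 + 3 x2.
X_s = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
y_s = [1 + 2 * a + 3 * c for a, c in X_s]
print(regression_estimate(y_s, X_s, xbar_target=[3.0, 2.0]))  # 13, up to rounding
```

When y is an exact linear function of x, the estimate reproduces that function at xbar_target, which is a convenient sanity check.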

Usually, the estimation process is stopped when the number of iterations t reaches a preassigned level T. Other stopping rules are discussed, e.g., in [6, 7]. These works also discuss procedures for assessing the accuracy of the estimators, such as bootstrap methods.
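Both kinds of stopping rule fit a small driver loop. In the sketch below, run_em stops after T iterations or, optionally, once successive iterates differ by less than a tolerance. The step function is a hypothetical, simplified EM update for the mixing proportions only (component means and a common standard deviation treated as known, sample labels observed); it is the textbook unweighted update and not the paper's design-weighted expression (7).

```python
import math


def run_em(step, theta0, max_iter, tol=None, distance=None):
    """Iterate theta^(t+1) = step(theta^(t)).

    Stops after max_iter = T iterations or, if tol is given,
    as soon as distance(theta^(t+1), theta^(t)) < tol."""
    theta = theta0
    for t in range(1, max_iter + 1):
        new = step(theta)
        if tol is not None and distance(new, theta) < tol:
            return new, t
        theta = new
    return theta, max_iter


def make_proportion_step(x_out, n_in_sample, means, sd=1.0):
    """Hypothetical EM step for the mixing proportions only (means and a common sd treated as known).

    E-step: posterior tau_{h,k} for each out-of-sample x_k (normal constants cancel for a common sd);
    M-step: p_h = (n_h + sum_k tau_{h,k}) / (n + number of out-of-sample elements)."""
    total = sum(n_in_sample) + len(x_out)

    def step(p):
        counts = [float(c) for c in n_in_sample]
        for x in x_out:
            w = [p_h * math.exp(-0.5 * ((x - m) / sd) ** 2) for p_h, m in zip(p, means)]
            s = sum(w)
            for h in range(len(p)):
                counts[h] += w[h] / s
        return [c / total for c in counts]

    return step


def max_abs_diff(a, b):
    return max(abs(u - v) for u, v in zip(a, b))


step = make_proportion_step([0.1, 0.2, 3.9, 4.1, 4.0], n_in_sample=[2, 3], means=[0.0, 4.0])
p_hat, iterations = run_em(step, [0.5, 0.5], max_iter=100, tol=1e-10, distance=max_abs_diff)
print(p_hat, iterations)  # approximately [0.4, 0.6]; stops well before T = 100
```

Passing only max_iter reproduces the fixed-T rule described in the text; the tolerance rule is one common alternative.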

Finally, the estimators given by expressions (12)–(14), (16), (18), (19), (7) and (8) let us construct the following estimators of the population mean:

where \(t=0,1,2, \ldots \).
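The population-mean estimators combine domain estimates with the estimated domain probabilities, mirroring \(\mu =\sum _{h=1}^Hp_h\mu _h\). The sketch below uses the classical regression and ratio estimator forms for a single auxiliary variable; whether expressions (12)–(19) coincide exactly with these forms is an assumption, and xbar_target is a hypothetical input standing for the estimated domain mean of x.

```python
def _mean(v):
    return sum(v) / len(v)


def regression_estimate(y_s, x_s, xbar_target):
    """Classical regression-type estimator ybar_s + b (xbar_target - xbar_s), b = s_xy / s_x^2."""
    ybar, xbar = _mean(y_s), _mean(x_s)
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(x_s, y_s))
    sxx = sum((x - xbar) ** 2 for x in x_s)
    return ybar + (sxy / sxx) * (xbar_target - xbar)


def ratio_estimate(y_s, x_s, xbar_target):
    """Classical ratio-type estimator ybar_s * xbar_target / xbar_s (suited to a near-zero intercept)."""
    return _mean(y_s) * xbar_target / _mean(x_s)


def population_mean(p_hat, domain_estimates):
    """mu_hat = sum_h p_hat_h * mu_hat_h, the sample analogue of mu = sum_h p_h mu_h."""
    return sum(p * m for p, m in zip(p_hat, domain_estimates))


# Hypothetical domain sub-samples (x values, y values) and target x-means.
samples = {1: ([7.1, 8.0, 8.9], [3.5, 4.1, 4.4]), 2: ([13.2, 14.1, 15.0], [10.4, 11.3, 12.0])}
targets = {1: 8.2, 2: 13.8}
p_hat = [0.45, 0.55]
reg = [regression_estimate(ys, xs, targets[h]) for h, (xs, ys) in samples.items()]
print(population_mean(p_hat, reg))
```

Swapping regression_estimate for ratio_estimate in the list comprehension gives the ratio-type version of the population-mean estimator.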

3.2 Simulation Study

Let simple random samples \(\{s_j,j=1, \ldots ,M\}\) be drawn independently and without replacement from the whole population of size N. We assume that each of them is partitioned among the H domains in such a way that \(s_j=s_{1,j}\cup \ldots \cup s_{h,j}\cup \ldots \cup s_{H,j}\) and \(2\le n_h\le n-2(H-1)\), \(h=1, \ldots ,H\). The relative efficiency coefficient of an estimator \(t_{s_h}\) of the mean in the h-th domain, \(h=1, \ldots ,H\), is defined as the following ratio (in percent):
\[e(t_{s_h})=\frac{mse(t_{s_h})}{v(\bar{y}_{s_h})}\cdot 100\%,\]

where \(mse(t_{s_h})=\frac{1}{M}\sum _{j=1}^{M}(t_{s_{h,j}}-\bar{y}_h)^2\), \(v(\bar{y}_{s_h})=\frac{1}{M}\sum _{j=1}^{M}(\bar{y}_{s_{h,j}}-\bar{y}_h)^2\), \(\bar{y}_h=\frac{1}{N_h}\sum _{k\in U_h}y_k\), \(h=1, \ldots ,H\). The relative bias of an estimator is defined as follows:
\[rb(t_{s_h})=\frac{\left|\frac{1}{M}\sum _{j=1}^{M}t_{s_{h,j}}-\bar{y}_h\right|}{\sqrt{mse(t_{s_h})}}\cdot 100\%.\]

We assume that \(M=10\,000\).
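The accuracy measures used in the simulation study, namely the relative efficiency coefficient (the ratio of the mean square error of an estimator to that of the sub-sample mean, in percent) and the relative bias (the absolute bias divided by the square root of the mean square error, in percent), can be computed from the M replicates as sketched below. The replicate values are hypothetical.

```python
from math import sqrt


def mse(estimates, true_value):
    """(1/M) sum_j (t_j - true_value)^2 over the M simulated samples."""
    return sum((t - true_value) ** 2 for t in estimates) / len(estimates)


def relative_efficiency(estimates, sample_means, true_value):
    """e(t) = 100% * mse(t) / v(ybar_s); values below 100% favour the estimator t."""
    return 100.0 * mse(estimates, true_value) / mse(sample_means, true_value)


def relative_bias(estimates, true_value):
    """rb(t) = 100% * |mean_j t_j - true_value| / sqrt(mse(t))."""
    bias = sum(estimates) / len(estimates) - true_value
    return 100.0 * abs(bias) / sqrt(mse(estimates, true_value))


# Hypothetical replicates (M = 4) of an estimator and of the sub-sample mean for a domain with true mean 10.
t_j = [9.8, 10.1, 10.3, 9.9]
ybar_j = [9.5, 10.6, 10.4, 9.3]
print(relative_efficiency(t_j, ybar_j, 10.0), relative_bias(t_j, 10.0))
```

The variance term for the sub-sample mean uses the same squared-deviation formula as mse, because both are measured around the true domain mean.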

Example 1

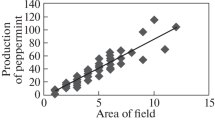

Consider the following simple data set on a two-dimensional random variable generated from two-dimensional normal distributions. The population of size 1500 is divided into three domains of equal size 500. The data in the h-th domain are generated according to the normal distribution \(N(\mu _{x,h},\mu _{y,h},v_{x,h},v_{y,h},\rho _h)\), with domain parameters N(8, 4, 1, 1, 0.5), N(14, 11.2, 1, 1, 0.8) and N(20, 19, 1, 1, 0.95). The scatter of the artificially generated data is shown in Fig. 1.
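A population like the one in this example can be regenerated as sketched below. The sketch assumes the parameter order stated above and uses the standard construction of correlated normal pairs from two independent standard normal draws; the seed is arbitrary, so the generated values will not match those behind Fig. 1 and Table 1.

```python
import random
from math import sqrt

# Domain parameters of Example 1 in the order used there: (mu_x, mu_y, v_x, v_y, rho).
DOMAINS = [(8, 4, 1, 1, 0.5), (14, 11.2, 1, 1, 0.8), (20, 19, 1, 1, 0.95)]


def bivariate_normal_draw(rng, mu_x, mu_y, v_x, v_y, rho):
    """One (x, y) pair: x = mu_x + sd_x z1, y = mu_y + sd_y (rho z1 + sqrt(1 - rho^2) z2)."""
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    x = mu_x + sqrt(v_x) * z1
    y = mu_y + sqrt(v_y) * (rho * z1 + sqrt(1.0 - rho * rho) * z2)
    return x, y


def example1_population(size_per_domain=500, seed=2023):
    """List of (h, x, y) triples: three domains of equal size, N = 3 * size_per_domain."""
    rng = random.Random(seed)
    population = []
    for h, params in enumerate(DOMAINS, start=1):
        for _ in range(size_per_domain):
            population.append((h, *bivariate_normal_draw(rng, *params)))
    return population


pop = example1_population()
print(len(pop))  # 1500
```

Keeping the domain label h with each pair allows the simulation to draw samples from the whole population and still know the domain of every sampled element.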

A simple random sample \(s=s_1\cup s_2\cup s_3\) is drawn without replacement from the whole population of size N. We assume that the size of each \(s_{h,j}\) satisfies \(2\le n_h\le n-2(H-1)\), \(h=1,2,3\), \(j=1, \ldots ,M\). In the second column of Table 1, the domains are identified by the integers 1, 2 and 3. Columns 3–6 give the values of the relative efficiency coefficient e(.) for the domains.

The estimators \(\bar{y}_{s_h}\), \(\tilde{y}_h^{(t)}\) and \(\check{y}_h^{(t)}\) are less accurate than \(\hat{y}^{(t)}_h\). In the second domain, the estimators \(\tilde{y}_h^{(t)}\) and \(\check{y}_h^{(t)}\) are more accurate than \(\bar{y}_{s_h}\) and comparable in accuracy with \(\hat{y}_h^{(t)}\) for \(n=75, 150\); the same holds in the third domain for \(n=150\). All considered estimators are practically unbiased, because their relative biases (the absolute value of the bias divided by the square root of the mean square error of the estimator) do not exceed \(0.1\%\); therefore, the biases are not shown in Table 1. The accuracy of the estimators increases with the sample size. It also increases with the correlation coefficient between the auxiliary variable and the variable under study in a given domain. The regression estimator \(\hat{y}^{(t)}_h\) is significantly more accurate than the ordinary sub-sample mean \(\bar{y}_{s_h}\). The statistic \(\hat{y}^{(t)}_h\) seems to be the most universal of the considered estimators and should therefore be preferred.

The relative biases of \(\hat{p}_h^{(t)}\), \(h=1, \ldots ,H\), do not exceed \(0.5\%\). Their accuracy also increases with the sample size, and they are more accurate than the ordinary sample frequencies \(\bar{p}_h\) for \(n\ge 45\). Hence, the procedure can also be used to estimate the probabilities \(p_h\), \(h=1, \ldots ,H\), of distribution mixtures. The last rows of Table 1 show that all three estimators of the population mean are significantly better than the simple sample mean, and the ratio-type estimator is the most accurate.

Example 2

The second population consists of data on Swedish municipalities published in [11]. We consider three variables: REV84 (real estate values in 1984), RMT85 (revenues from municipal taxation in 1985) and ME84 (number of municipal employees in 1984). The largest outliers are excluded, so the size of the considered population is 281. The population was partitioned into three domains by the 30% and 70% quantiles of REV84, which gave the domain sizes \(N_1=86\), \(N_2=109\) and \(N_3=86\). Real estate valuation depends on market fluctuations, so the same property may belong to the first domain today and to a different domain tomorrow. Therefore, membership in a domain can be treated as random.

The distributions of RMT85 and ME84 are strongly right-skewed and differ markedly from the normal distribution. Therefore, we used their logarithms; their scatter is shown in Fig. 2. The domain means of logRMT85 were \(\mu _1=6.704\), \(\mu _2=7.520\) and \(\mu _3=8.528\). The simulation of estimation accuracy was based on simple random samples drawn without replacement, with sample sizes 8 (2.85% of the population size), 14 (4.98%) and 28 (9.96%). Table 2 shows only the accuracy of estimating the population mean, because the estimators of the domain means were less accurate than the simple random sample mean. Table 2 shows that all the considered estimators of the population mean are more accurate than the simple random sample mean. The second regression estimator is the most accurate, and its relative bias is also the smallest.

Example 3

Consider data on the current and starting salaries of employees that are available as an example dataset in the SPSS statistical package. The set consists of 474 observations, and two domains are identified: the first domain of 390 observations is the set of clerks, and the second consists of 84 managers. In general, an employee belongs to one of these domains at random, because one day he could be a manager and the next day a clerk, and vice versa. The mean starting and current salaries in the first domain (clerks) are $14164 and $28054, respectively; in the second domain (managers) they are $28091 and $63978, respectively. The scatter of the data partitioned into domains is shown in Fig. 3. Samples of sizes 15 (3.2%), 24 (5.1%) and 48 (10.1%) were considered. The results of the simulation are shown in Table 3. As in Example 2, this table shows only the accuracy of estimating the population mean, because the estimators of the domain means were less accurate than the simple random sample mean.

Table 3 shows that, except for the ratio estimator, all estimators are more accurate than the simple sample mean only for sample sizes \(n>24\); the ratio estimator is more accurate for all sample sizes, since its deff coefficients are below 100% throughout. The accuracy of the ratio estimator decreases as the sample size increases. The relative biases of the estimators are quite large.

Analysis of all the tables and figures suggests that the domain means can be estimated accurately only when the data observed in the domains are well separated. A more optimistic conclusion is that, in all the considered cases in which the sample size was at least 5% of the population size, the proposed estimators of the population mean were more accurate than the simple random sample mean. Their biases are also acceptable. Of these estimators of the population mean, the second regression estimator and the ratio estimator performed best and could be used in practical research.

The presented simulation analyses will be continued in a wider scope in a subsequent article. In particular, mixtures of at least three-dimensional probability distributions will be considered, and modifications of the estimators used here will be proposed to estimate the domain averages more accurately.

4 Conclusions

The three proposed estimators of domain means use auxiliary data to improve estimation accuracy. The properties of the maximum likelihood method let us derive new estimators of domain and population means. The simulation analysis indicates the best estimator when the domains are sufficiently well separated. For estimating the population mean, this separation need not be very pronounced: in the considered cases, all estimators of the population mean were better than the simple random sample mean. The estimation method also lets us estimate the probabilities of the distribution mixture. A generalization of the regression estimators to a multidimensional auxiliary variable was also presented.

Other generalizations or modifications of the estimation procedure are possible. Auxiliary variables observed in censuses or in official registers can be used to improve the efficiency of estimating means. Distributions other than the normal can be considered as components of the mixture; for instance, expenditures or incomes in domains could be modeled by asymmetric distributions such as the lognormal or gamma distributions.

References

[1] Cassel CM, Särndal CE, Wretman JH (1977) Foundations of inference in survey sampling. Wiley, New York

[2] Chambers RL, Skinner CJ (2003) Analysis of survey data. Wiley, Chichester

[3] Chen J, Sitter RR (1999) A pseudo empirical likelihood approach to the effective use of auxiliary information in complex surveys. Stat Sin 9:385–406

[4] Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm (with discussion). J R Stat Soc Ser B 39:1–38

[5] Kendall MG, Stuart A (1961) The advanced theory of statistics. Vol. 2: inference and relationship. Charles Griffin & Company Limited, London

[6] McLachlan G, Krishnan T (1997) The EM algorithm and extensions. Wiley, New York

[7] McLachlan G, Peel D (2000) Finite mixture models. Wiley, New York

[8] Pfeffermann D (1993) The role of sampling weights when modelling survey data. Int Stat Rev 61(2):317–337

[9] Skinner CJ, Holt D, Smith TMF (1989) Analysis of complex surveys. Wiley, New York

[10] Rao JNK, Molina I (2015) Small area estimation. Wiley, Hoboken

[11] Särndal CE, Swensson B, Wretman J (1992) Model assisted survey sampling. Springer, New York

[12] Thompson ME (1997) Theory of sample surveys. Chapman & Hall, London

[13] Wywiał JL (2018) Application of two gamma distribution mixture to financial auditing. Sankhya B 80(1):1–18

[14] Żądło T (2006) On prediction of total value in incompletely specified domains. Aust N Z J Stat 48(3):269–283

Acknowledgements

This paper is the result of a grant supported by the National Science Centre, Poland, No. 2016/21/B/HS4/00666.


Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.


Appendix


1.1 Derivation of parameters \(\hat{\Theta }_x^{(t+1)}\)

The estimates \(\hat{\Theta }_x^{(t+1)}\) maximize the log-likelihood function \(l^{(t)}({\textbf {d}}_s,{\textbf {x}}_{\underline{s}})\) [given by expression (4)] subject to \(\sum _{h=1}^Hp_h=1\). Equivalently, \(\hat{\Theta }_x^{(t+1)}\) maximize the Lagrangian function \(\psi _x^{(t)}(\Theta )=l^{(t)}({\textbf {d}}_s,{\textbf {x}}_{\underline{s}})+\lambda (1-\sum _{h=1}^Hp_h)\). According to the pseudo-likelihood approach (see, e.g., [8, 12]), \(\hat{\Theta }_x^{(t+1)}\) is the solution of the following system of pseudo-log-likelihood estimating equations:

where

These equations give \(\hat{N}_h+\hat{\tau }_h^{(t)}=\lambda p_h\) for \(h=1, \ldots ,H\). Summing over h and using \(\sum _{h=1}^Hp_h=1\) yields \(\lambda =\sum _{h=1}^H(\hat{N}_h+\hat{\tau }_h^{(t)})\), which leads to expression (7).
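The closed-form update implied by these Lagrangian conditions is easy to check numerically. In the sketch below, taking \(\hat{N}_h\) as the Horvitz-Thompson sum \(\sum _{k\in s_h}1/\pi _k\) is an assumption made for illustration, and the inclusion probabilities and \(\hat{\tau }_h^{(t)}\) values are hypothetical.

```python
def update_mixing_proportions(n_hat, tau_hat):
    """p_h = (N_hat_h + tau_hat_h) / lambda with lambda = sum_h (N_hat_h + tau_hat_h),
    i.e. the condition N_hat_h + tau_hat_h = lambda p_h combined with sum_h p_h = 1."""
    lam = sum(a + b for a, b in zip(n_hat, tau_hat))
    return [(a + b) / lam for a, b in zip(n_hat, tau_hat)]


def horvitz_thompson_sizes(pi_by_domain):
    """Hypothetical N_hat_h = sum_{k in s_h} 1 / pi_k for each domain sub-sample."""
    return [sum(1.0 / pi for pi in pis) for pis in pi_by_domain]


# Hypothetical inputs: inclusion probabilities of the sampled units per domain and current tau_hat_h.
n_hat = horvitz_thompson_sizes([[0.1] * 30, [0.1] * 50, [0.1] * 20])  # [300, 500, 200]
tau_hat = [100.0, 100.0, 300.0]
print(update_mixing_proportions(n_hat, tau_hat))  # proportions summing to 1
```

Because \(\lambda \) is just the normalizing total, the update never needs a numerical optimizer for the \(p_h\) part of the M-step.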

1.2 Derivation of estimators under an assumed normal distribution mixture

Under an assumed mixture of normal distributions, the components of the model expressed by (1) are as follows:

where \(\theta _h=[\mu _{y,h}\;\mu _{x,h}\;\sigma _{y,h}\;\sigma _{x,h}\;\sigma _{xy,h}]\), \(\rho _h=\frac{\sigma _{xy,h}}{\sigma _{x,h}\sigma _{y,h}}\) and

The log-likelihood functions defined by expressions (3)–(6) take the following forms:

where

The first derivatives of the likelihood function expressed by (27) in the case of normal distribution, as given by (25), are evaluated as follows (see Kendall and Stuart [5], page 57):

The first derivatives of the likelihood function expressed by (29) in the case of normal distribution are as follows:

where \(\tau ^{(t)}_{h,k}\), \(\tau ^{(t)}_h\), \(\bar{x}_{\underline{s},h}^{(t)}\) and \(\sigma _{*x,\underline{s},h}\) are given by expressions (9), (11) and (30).

Equations \(\frac{\partial l^{(t)}(\textbf{x}_U)}{\partial \mu _{x,h}}=\frac{\partial l_1(\textbf{d}_s)}{\partial \mu _{x,h}}+\frac{\partial l_2^{(t)}(\textbf{x}_{\underline{s}})}{\partial \mu _{x,h}}=0\) and \(\frac{\partial l^{(t)}(\textbf{x}_U)}{\partial \mu _{y,h}}=\frac{\partial l_1(\textbf{d}_s)}{\partial \mu _{y,h}}=0\) are equivalent to the following, respectively:

and

When in (38) we replace \(\frac{\bar{y}_{s_h}-\mu _{y,h}}{\sigma _{y,h}}\) with the right side of equation (39), then, after simplification, we have:

This leads to the iterative estimator \(\hat{x}_{\underline{s},h}^{(t+1)}\), given by (10) and (11).

Equation \(\frac{\partial l_1(\textbf{d}_s)}{\partial \mu _{y,h}}=0\) is equivalent to the following:

After replacing \(\mu _{x,h}\) with \(\hat{x}_{\underline{s},h}^{(t+1)}\), we have:

Now, when we replace \(\sigma _{xy,h}\) and \(\sigma _{x,h}^2\) with \(\sigma _{xy,s_h}\) and \(\sigma _{x,s_h}^2\), respectively, we obtain the estimator \(\tilde{y}^{(t+1)}_h\) expressed by (13).

Equations \(\frac{\partial l^{(t)}(\textbf{x}_U)}{\partial \sigma ^2_{y,h}}=\frac{\partial l_1(\textbf{d}_s)}{\partial \sigma ^2_{y,h}}=0\), \(\frac{\partial l^{(t)}(\textbf{x}_U)}{\partial \sigma ^2_{x,h}}=\frac{\partial l_1(\textbf{d}_s)}{\partial \sigma ^2_{x,h}}+\frac{\partial l_2^{(t)}(\textbf{x}_{\underline{s}})}{\partial \sigma ^2_{x,h}}=0\) are equivalent to the following:

and after multiplying \(\frac{\partial l_1(\textbf{d}_s)}{\partial \rho _h}=0\) [see equation (35)] by \(\frac{1-\rho ^2_h}{n_h\rho _h}\) we have:

As in Kendall and Stuart [5], pp. 57–58, we add Eqs. (41) and (42) and then subtract Eq. (43) from this sum, which gives:

The above equation is multiplied by \(\frac{\rho _h^2}{1-\rho _h^2}\) and simplified to the form:

In Eq. (42) we replace \(\rho _h\frac{\sigma _{*xy,s_h}}{\sigma _{x,h}\sigma _{y,h}}\) with the right side of the above equation. After some simplification, this lets us write:

In expressions (28) and (30), the mean \(\mu _h\) is replaced by \(\bar{x}_{s_h}\) and \(\bar{x}_{\underline{s}_h}^{(t)}\), respectively. This leads to expressions (14) and (12).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wywiał, J.L. On the Maximum Likelihood Estimation of Population and Domain Means. J Stat Theory Pract 17, 40 (2023). https://doi.org/10.1007/s42519-023-00337-4
