Abstract

Classical reliability models consider the failure modes of a multicomponent system as independent phenomena, even if these modes concern only one component or neighbouring components. In fact, when a failure mode occurs on a given system, causes may be related to external events or to the physical degradation of any component in the system. In both cases, failure modes can partially or totally share some root causes, which may compromise the principle of independence of failure modes. This paper reviews the failure interaction models that characterise the effect of any failure mode of a component on the failure modes of its neighbors and on the entire system. Three relevant groups of interaction models are reviewed: reliability indexes’ interaction, state-based interaction and copula-based interaction. All these models share the hypothesis of stochastic dependence between failure modes, also referred to as failure interaction. They differ in their fundamental modeling concepts. Advantages and limits of each of them are emphasized in a comparative study dealing with dependency concepts, modeling methods and application domains.

1 Introduction

Risk management of industrial systems involves both an intelligent optimization of maintenance resources and an ability to anticipate the potential failures of their components [5]. Failure analysis based on reliability engineering methods is at the core of this subject. Common methods used in this area, such as Failure Mode and Effects Analysis (FMEA), reliability block diagrams and stress-strength analysis, assume that failure modes occur independently even if they concern only one component or neighbouring components [26, 8, 41]. Even when multiple failure modes are observed on a given system within a relatively short operation time, they are treated as stochastically independent. Although this fundamental assumption simplifies failure analysis and risk assessment, it may be quite restrictive and unrealistic because of the mutual influence or interaction of multiple failure modes within the system. This is especially true considering that many modern systems (reverse osmosis membranes, semiconductors, etc.) have become increasingly complex. Their functional decomposition shows multiple components that interact. Understanding the interactivity of failures within a multicomponent system has become a critical and complex challenge for reliability analysis.

This paper reviews failure interaction modeling in the context of multiple failure modes and provides a framework for identifying and selecting the proper models for the relevant technical matters and applications. The reviewed models share the principle of stochastic dependence between failure modes, also called failure interaction. While stochastic models are diverse and numerous, we chose to limit our review to failure interaction models. They seek to characterise the effect of any failure mode of a component on the failure modes of its neighbors and on the entire system. This is critical to understanding and predicting how and why a system fails. Practical implications such as maintenance methods and strategies can then be addressed through these models. They are classified into three approaches: reliability indexes’ interaction, state-based interaction and copula-based interaction. Based on their fundamental modeling hypotheses, the advantages and limits of each approach are emphasized in a comparative study dealing with dependency concepts, modeling methods and application domains.

The remainder of the paper is organized as follows. Section 2 presents the concepts of failure interaction as apprehended in the literature on failure analysis. Interactive failure models are discussed according to each proposed approach. Modeling equations are described in detail to show the main differences between the models reviewed. Section 3 presents a comparative study of the reviewed interactive failure models based on their concepts, methods and applications related to the subject matter. Section 4 contains some concluding remarks.

2 Concepts of interactive failures

Cho and Parlar [10] present the interdependences of a system’s components in three categories: economic, structural and stochastic. Economic dependence exists whenever the combination of diverse maintenance actions, if performed simultaneously, can have a significant impact on the overall cost of the system’s maintenance. Structural dependence relates to the architecture of the system. Some components may inseparably share a specific function. In this case, the maintenance of a component requires that the other components be stopped or dismounted. For example, the principle of cascading failures considers a system within which components fail successively in a specific pattern, subject to the architecture. The concepts of interactive failures or failure interaction presented in this review go beyond economic and structural considerations. They are defined as stochastic dependences. Stochastic dependence appears when the failure or the degradation of a component influences the state of another component. This category of failure dependence establishes a relation between the states of a system’s components, regardless of its architecture. The inherent principle suggests that the propagation of a component’s failure alters the reliability of other components and, consequently, of the overall system. In this case, relationships are determined between the components’ ages, failure rates and failure times. This stochastic dependence implies that the states of components interact, in which case the terms interactive failures and failure interaction become relevant.

Murthy and Nguyen [43, 44] present two types of failure interaction:

- Type I, induced failures: a component causes the instantaneous failure of another component with a probability \(p\) or has no effect with a probability \(1 - p\);

- Type II, failure rate or shock damage interaction: a component’s failure changes the failure rate of another component; in other words, a component’s failure acts as a shock on another component and the accumulation of shocks accelerates the failure rate.
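The two interaction types listed above can be illustrated with a minimal Python sketch. The function names and the multiplicative form of the type II effect are illustrative assumptions, not the formulation of Murthy and Nguyen [43, 44]; the reviewed models each use their own functional form.

```python
import random


def induced_failure(p, rng):
    """Type I: return True if a failure of one component instantly
    fails the other component (probability p), False otherwise."""
    return rng.random() < p


def shocked_rate(base_rate, n_shocks, factor):
    """Type II (assumed multiplicative form): every shock received
    scales the failure rate of the affected component by `factor`."""
    return base_rate * factor ** n_shocks


if __name__ == "__main__":
    rng = random.Random(0)
    draws = [induced_failure(0.3, rng) for _ in range(10_000)]
    print("empirical induced-failure frequency:", sum(draws) / len(draws))
    print("rate after 3 shocks:", shocked_rate(0.01, 3, 1.5))
```

With `factor = 1` the type II effect vanishes and the independent case is recovered, which is a convenient sanity check when fitting such a model.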

The aforementioned types of failure interactions are largely reused, updated and extended in most models presented by this review. For example, Nakagawa and Murthy [45] consider shock damage interactions to find the optimal replacement number to minimize the expected cost of maintenance. Failure interaction can be defined as a gradual, immediate, unidirectional or bidirectional phenomenon. The most relevant works pertaining to the subject of interactive failures or failure interaction can be classified into 3 groups of models:

- Reliability indexes’ interaction: Failure rates of diverse components and/or failure modes are linked by an analytical function, which in general has an incremental effect on the system’s overall failure rate. Structural and economic dependencies within the system greatly influence the choice of the function. As a consequence, these models generally address simple architectures (series, parallel, etc.). Such models are built from failure times in maintenance logs and do not explicitly describe physical degradation processes;

- State-based interaction: A relationship is established between the diverse degradation processes within the system. Monitoring of state variables (temperature, wear, etc.) is important in this case, since the interaction is defined from the variations in the degradation rates of a system’s components;

- Copula-based interaction: These models are created regardless of any assumptions one might have on the evolution of the degradation processes and/or the failure times. A function (copula) that links components’ reliabilities is selected based solely on the likelihood of the data.
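To make the copula-based group concrete, the sketch below couples two component reliabilities through a Clayton copula. The choice of the Clayton family, the parameter `theta` and the function names are assumptions made for illustration only; the text above does not prescribe a particular copula.

```python
def clayton_copula(u, v, theta):
    """Clayton copula C(u, v) for theta > 0 and u, v in (0, 1]."""
    if theta <= 0:
        raise ValueError("theta must be positive")
    return (u ** -theta + v ** -theta - 1.0) ** (-1.0 / theta)


def joint_survival(r1, r2, theta):
    """Joint reliability of two components whose marginal reliabilities
    r1 and r2 are linked by an (assumed) Clayton survival copula."""
    return clayton_copula(r1, r2, theta)


if __name__ == "__main__":
    r1, r2 = 0.9, 0.8
    print("independent:", r1 * r2)
    print("dependent  :", joint_survival(r1, r2, theta=2.0))
```

Under this family the joint reliability is larger than the independent product `r1 * r2`, reflecting positive dependence between the two lifetimes; as `theta` tends to zero the product is recovered.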

Table 1 summarizes the general criteria that led to the classification of the failure interaction models.

2.1 Reliability indexes’ interaction

Reliability indexes’ interaction models are the most common in the literature. They establish an analytical relationship of dependence between the reliability indexes or parameters of a system’s components. Practically, they establish a mathematical dependence between failure rates or failure times regardless of any physical degradation parameter. They rely solely on the accuracy of the maintenance logs. They combine the concepts proposed by Murthy and Nguyen [43, 44] for systems with two components or more.

2.1.1 Failure interaction of two components

Satow and Osaki [50] base their study on the hypothesis defined by Murthy and Nguyen [43, 44]. In their work, component 1 causes the instant failure of component 2 (type I interaction) or causes the failure of component 2 through the accumulation of multiple shocks (type II interaction). The idea is that, within physical systems, a component’s failure can be precipitated by the failure of neighbouring components through random phenomena such as vibration, friction, etc. The failures of component 1 are assumed to follow a non-homogeneous Poisson process of intensity \(h\left( t \right)\). The system has to be replaced at the \(N\)-th failure of component 1 or whenever component 2 fails. In the case of induced failures (type I interaction), \(p_{j}\) denotes the probability that component 2 fails instantaneously at the \(j\)-th failure of component 1. The mean time to replacement is:

where \(H\left( t \right) = \mathop \smallint \limits_{0}^{t} h\left( u \right)du\). In the case of shock damage interaction (type II interaction), component 1 causes damage with a distribution \(G\left( x \right)\) to component 2 that fails when the total damages reach a threshold level \(Z\).

where \(G^{\left( k \right)} \left( x \right)\) is the \(k\)-fold convolution function of \(G\left( x \right)\) with itself, \(G^{\left( 0 \right)} \left( x \right) = 0\) and \(k = 1,2, \ldots\) These models are used to determine a replacement policy that minimizes the cost with respect to the system age \(\left( t \right)\) and a threshold \(\left( Z \right)\) of shocks accumulated by component 2. The main difficulty of this method is the definition of the threshold and the damage distribution. Since the primarily available data are failure times from maintenance logs, one might additionally need to rely on experimental data or a physical model.
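As an illustration of the type I replacement rule described above (replace at the \(N\)-th failure of component 1 or as soon as component 2 is induced to fail), the sketch below computes the mean time to replacement in a simplified special case. It assumes a homogeneous Poisson process with constant rate `rate` instead of the general intensity \(h\left( t \right)\), so it is not the expression of Satow and Osaki [50]; the function and parameter names are hypothetical.

```python
def mean_time_to_replacement(rate, p, n_max):
    """Mean time to replacement under a type I interaction.

    Assumptions (simplifications for illustration):
      * component 1 fails according to a homogeneous Poisson process
        with constant intensity `rate`;
      * p[j - 1] is the probability that component 2 fails instantly
        at the j-th failure of component 1;
      * the system is replaced at failure number n_max at the latest.
    """
    survived = 1.0            # P(component 2 survived failures 1..j-1)
    expected_failures = 0.0   # E[number of failures until replacement]
    for j in range(1, n_max + 1):
        expected_failures += survived      # P(replacement index >= j)
        survived *= 1.0 - p[j - 1]
    # Each inter-failure time has mean 1 / rate in the homogeneous case.
    return expected_failures / rate


if __name__ == "__main__":
    print(mean_time_to_replacement(rate=2.0, p=[0.5, 0.5, 0.5], n_max=3))
```

Setting every `p[j]` to zero reduces the result to `n_max / rate`, the mean time to the \(N\)-th failure without interaction.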

Wang and Zhang [58] associate a maintenance technician with a system of two dissimilar components. Considering the case of a type II interaction, component 1 causes random shocks on component 2. These internal shocks accumulate until the system fails. The failure of component 2 causes the instant failure of component 1. Component 1 is replaced upon failure and component 2 can be repaired. As in the previous model, one component is assumed to be critical to the system’s primary function. Moreover, it requires extensive repairs upon failure, while the other part can be easily replaced but can still upset the stability of the system as a whole through chain reactions or random phenomena in the shared environment. Diverse quantities are then studied for a renewal process: \(X_{i}\) the time between failures of the \(i\)-th component 1, \(Y_{i}\) the damage caused to component 2 by shocks due to the failure of the \(i\)-th component 1, \(Z_{n}\) the repair time of component 2 in the \(n\)-th cycle of a replacement policy. A geometric model is chosen to characterize the threshold of shocks accumulated by component 2. Another geometric model represents the evolution of the repair time \(\Delta _{n}\) in the \(n\)-th cycle of a replacement policy. The distribution functions of \(X_{i}\), \(Y_{i}\) and \(Z_{n}\) are denoted respectively by \(F\left( t \right)\), \(H\left( t \right)\) and \(G_{n} \left( t \right)\) so that:

where \(a\) and \(b\) \(\left( { 0 < b \le 1, 0 < a \le 1 } \right)\) are the geometric ratios. Wang and Zhang [58] define \(T_{i}\) and \(v_{i}\) as, respectively, the time between failures and the number of component 1 failures in the \(i\)-th operation cycle. \(Z\left( i \right)\) denotes the total amplitude of accumulated shocks due to component 2 failures. Equation (4) illustrates the model.

where \(H^{\left( k \right)} \left(\Delta \right)\) is the \(k\)-fold convolution function of \(H\left( t \right)\) and \(k = 1,2, \ldots\) In reality, the length of repair time cannot be described as strictly geometric. There is in fact high randomness due to the human factor in the repair process. But, the model (4) succeeds in determining a relation between the age of a system and the repair time while including shock damage interaction.

Lai and Yan [33] base their work on type II interactions, which are also extensively studied in related earlier articles by Lai and Chen [31, 32] and Lai [30]. Model (5) considers a repairable component 2 and a non-repairable component 1. The main point of this model is to introduce a proportionality in the failure rate interaction, which is assumed on intuitive grounds. The potential for chain reactions and the shared environment contribute to an acceleration of the failure rate. The failures of component 2 follow a non-homogeneous Poisson process of intensity \(h_{2} \left( t \right)\). Every failure of component 2 increases the failure rate of component 1. Conversely, if component 1 fails, component 2 instantly fails. This hypothesis can be restrictive since no retroactive effect is accounted for. The failure rate of component 1 is:

where \(N_{2} \left( t \right)\) is the number of failures of component 2. Lai and Yan [33] apply the concept of minimal repairs to component 2. They take into account the economic dependences within a system and determine a replacement policy with an optimal number of minimal repairs for a maintenance cycle of optimal duration \(T\).

Golmakani and Moakedi [16] use the same model (5) but consider two types of failures. Component 1 is subject to soft failures following a non-homogeneous Poisson process. Component 2 is subject to hard failures following a homogeneous Poisson process. Hard failures have an instantly detectable effect and require immediate intervention when they occur. Soft failures can only be detected by a scheduled inspection because they do not stop the system but decrease its performance. Generally, hard failures pertain to the inability to perform a primary function while soft failures are related to secondary functions. A component’s hard failure can be the root cause of another component’s soft failure if this component serves a secondary function (e.g. protective apparel) for a more critical component. Model (6) is based on the assumption that a hard failure of component 2 increases the failure rate of component 1. Conversely, a failure of component 1 has no effect on component 2. A coefficient \(p\) represents the percentage increase of the failure rate of component 1 due to hard failures.

where \(h_{1}^{0} \left( t \right)\) is the initial failure rate of component 1. The estimation of the coefficient \(p\) would rely on experimental data or the availability of a physical model. Yet considering a constant incremental effect (\(p\)) suggests that the interaction has little to no variability. Nevertheless, defining the failures with respect to the time of detection and inspection allows the authors to characterize the effects of interaction in a maintenance strategy based on the optimal number of inspections in a cycle.

Sung et al. [56] combine the concepts of failure rate interaction (type II) and external shocks. The effect of external shocks is particularly important for mechanical systems, which often have a protective external component exposed to the surrounding environment. Whether a system is well designed or not, it is nearly impossible to perfectly prevent an interaction with external factors. This interaction has an internal consequence as well. The external shocks occur following a non-homogeneous Poisson process of intensity \(r\left( t \right)\), which suggests randomness. They cause minor failures with a probability \(p\) and catastrophic failures with a probability \(1 - p\), exclusively to component 2. The failures of component 2 also act as internal shocks and increase the failure rate of component 1. The entire system fails if component 1 fails. The number of component 2 failures \(N_{2} \left( t \right)\) follows a non-homogeneous Poisson process of intensity \(\delta \left( t \right) = h_{2} \left( t \right) + p\,r\left( t \right)\) where \(h_{2} \left( t \right)\) is the failure rate independent of the external shocks. Thus the failure rate of the system depends on the failure rate of the dominant component 1 and the number of external shocks endured by the other component. Equation (7) gives the failure rate of the dominant component:

The survival function of the system is given by:

This method allows the effect of the environment on the system to be included in an analytical model, but the starting hypothesis remains restrictive since the model only addresses systems with a single dominant component. The authors derive a long-term replacement policy as a function of an optimal number of minimal repairs, which minimizes the overall cost of maintenance.
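The combined intensity \(\delta \left( t \right) = h_{2} \left( t \right) + p\,r\left( t \right)\) of component 2 failures in the model of Sung et al. [56] can be explored numerically. The sketch below integrates \(\delta\) to obtain the expected number of component 2 failures and draws failure times with the standard thinning method for non-homogeneous Poisson processes. The specific intensities, the bound `rate_bound` and all function names are assumptions chosen for illustration.

```python
import random


def delta(t, h2, r, p):
    """Combined intensity of component 2 failures: h2(t) + p * r(t)."""
    return h2(t) + p * r(t)


def expected_failures(h2, r, p, horizon, steps=1000):
    """Trapezoidal approximation of the integral of delta on [0, horizon],
    i.e. the expected number of component 2 failures up to `horizon`."""
    dt = horizon / steps
    total = 0.0
    for k in range(steps):
        a, b = k * dt, (k + 1) * dt
        total += 0.5 * (delta(a, h2, r, p) + delta(b, h2, r, p)) * dt
    return total


def simulate_nhpp(intensity, horizon, rate_bound, rng):
    """Thinning: candidate events from a homogeneous process of rate
    `rate_bound` are kept with probability intensity(t) / rate_bound.
    `rate_bound` must dominate the intensity on [0, horizon]."""
    t, events = 0.0, []
    while True:
        t += rng.expovariate(rate_bound)
        if t > horizon:
            return events
        if rng.random() * rate_bound <= intensity(t):
            events.append(t)


if __name__ == "__main__":
    h2 = lambda t: 0.2 * t   # assumed intrinsic failure rate of component 2
    r = lambda t: 1.0        # assumed external shock intensity
    p = 0.3                  # probability that a shock causes a minor failure
    print("expected failures:", expected_failures(h2, r, p, 10.0))
    times = simulate_nhpp(lambda t: delta(t, h2, r, p), 10.0, 2.3,
                          random.Random(1))
    print("simulated failures:", len(times))
```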

2.1.2 Failure interaction in a multicomponent system

Models are relatively abundant for systems with two components, but they become less representative for multicomponent systems. Models for multicomponent systems are scarcer in the literature because the interaction becomes harder to understand as the studied system grows more complex. Jhang and Sheu [22] compare maintenance strategies for a system with \(N\) components. Each component \(i\) can be subject to a minor failure with a probability \(1 - p_{i}\) or cause a major failure of all other components, stopping the system, with a probability \(p_{i}\) (type I interaction). This is similar to the reasoning behind hard/soft failures but, here, a retroactive effect on the set of affected components is accounted for. Minimal repairs are used in the case of minor failures. All failures are assumed to follow a non-homogeneous Poisson process of intensity \(h_{i} \left( t \right)\). The authors simulate the failure times by random draws. \(Y = \hbox{min} \left\{ {Y_{1,i} , 1 \le i \le N} \right\}\) is defined as the time until the first system replacement, where \(Y_{1,i}\) is the time until a failure of component \(i\) stops the system. The reliability function of \(Y_{1,i}\) is:

The survival function of \(Y\) is:

The interactive model (10) is considered with age and block replacement policies, two common maintenance strategies that are treated separately. This shows the flexibility of the approach. Yet building this model from failure times in maintenance logs would require substantial data on all components, while in reality only critical components are properly observed, and for a limited period. The main results show that accounting for the interactivity of failures in the developed models helps to better anticipate the maintenance costs.
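The structure \(Y = \hbox{min} \left\{ {Y_{1,i} } \right\}\) used by Jhang and Sheu [22] can be sketched numerically under two explicit assumptions that are not taken from the source: each failure of component \(i\) is major independently with a constant probability \(p_{i}\), and the components fail independently of one another. Under these assumptions the major failures of component \(i\) form a thinned Poisson process, so the time to its first major failure has survival function \(\exp \left( { - p_{i} H_{i} \left( t \right)} \right)\), with \(H_{i}\) the cumulative intensity, and the survival function of the minimum is the product over components. The helper names are hypothetical.

```python
import math


def survival_first_major(t, p_i, cum_intensity):
    """P(no major failure of one component before t), assuming a thinned
    non-homogeneous Poisson process with cumulative intensity H_i(t)."""
    return math.exp(-p_i * cum_intensity(t))


def system_survival(t, components):
    """Survival of Y = min_i Y_{1,i} for independent components.
    `components` is a list of (p_i, cumulative-intensity function)."""
    result = 1.0
    for p_i, cum_intensity in components:
        result *= survival_first_major(t, p_i, cum_intensity)
    return result


if __name__ == "__main__":
    components = [
        (0.5, lambda t: 2.0 * t),   # constant intensity 2
        (0.25, lambda t: t * t),    # linearly increasing intensity 2t
    ]
    print(system_survival(2.0, components))  # equals exp(-3) here
```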

Lai [29] broadens the scope of earlier work on type II interactions in systems with two components and studies type II interactions for multicomponent systems. He considers a system consisting of \(N\) components. One of them is assumed to be dominant and non-repairable, while the others are secondary components and can be repaired. They are mutually independent and follow non-homogeneous Poisson processes. The hypothesis of dominance is plausible if the primary function in a system is guaranteed by a single component. However, as mentioned above, industrial systems tend to be more and more complex, and multiple components can share a critical function or have more than one critical function. The failure of a secondary component increases the failure rate of the primary component. Lai [29] proposes a replacement policy based on the system’s age, considering that secondary failures are corrected by minimal repairs. Li et al. [35] use the same model with \(n + 1\) components. One of them is dominant and non-repairable and the others are secondary and repairable. The secondary components are mutually independent and follow exponential laws of parameters \(h_{n} \left( t \right) < h_{n - 1} \left( t \right) < \cdots < h_{2} \left( t \right) < h_{1} \left( t \right)\). The system fails when \(m\) out of the \(n\) secondary components have failed (voting structure). Such an approach adds more randomness to the model since, unlike in the model of Lai [29], the set of components interacting and failing can vary. The failures of secondary components increase the failure rate of the primary component denoted by \(h_{N} \left( t \right)\):

where \(k_{i}\) is the number of failures \(N_{i} \left( t \right)\) of component \(i\). \(S_{ik} \left( {k = 0,1,2, \ldots } \right)\) is defined as the random failure time of component \(i\). The probability that \(k\) or more secondary components fail is:

where \(R_{i} \left( t \right) = \int_{0}^{t} {h_{i} \left( x \right)dx}\) is the survival function of a single component. \(h_{N} \left( t \right)\) depends on the conditional probability \(h_{{Nk_{i} }} \left( t \right) = P\left( {t | N_{1} \left( t \right) = k_{1} , \ldots , N_{n} \left( t \right) = k_{n} } \right)\) and:

Estimating the probability of failure of the dominant component is complex and the authors propose the use of Markov processes. A transition matrix helps to determine the performance and reliability parameters of the system. However, the use of Markov processes adds a certain complexity to the model because the number of states of the dominant component will be directly correlated to the number of secondary components.

As mentioned above, there are cases that are consistent with the dominance of a single component and with unilateral interactions. Yet these hypotheses are restrictive for systems with a more complex structure.

Zhang et al. [69, 70] also use Markov processes to characterize the states of a system with \(n\) components. They integrate the concept of interactivity in a more general manner. There is no predetermined dominant component since, in most systems, the primary function is performed by a set of components with the same level of criticality. This shared level of criticality influences the maintenance actions, which is why the authors include opportunistic maintenance concepts in their study. This lets the model capture the dynamics between failure effects and maintenance actions. A component is assumed to have a finite set of states: \(\varPsi = \left\{ {0, \ldots ,m} \right\}\) where state \(0\) is the initial state, and states \(1 , \ldots , m - 1\) reflect the deteriorating conditions. The component is subject to corrective maintenance when it reaches \(m\). Markov chains are used to model the state transition of a component with a transition rate \(h\). The deterioration process of a component speeds up when other components fail. Maintenance opportunities from other components are assumed to follow a Poisson process of intensity \(\mu = h_{f} + \mu_{p}\) where \(h_{f}\) is the failure rate of other components and \(\mu_{p}\) is the rate of preventive maintenance on other components. A variable \(a\) identifies the maintenance actions by:

- \(a = 0\): no maintenance;

- \(a = 1\): conducting maintenance.

\(S = \{ \left( {i,\omega } \right)|0 \le i \le m;0 \le \omega \le 2\}\) is defined as a space where \(i\) is the state of a component and \(\omega\) is the occurrence of maintenance opportunity given by:

- \(\omega = 0\): no maintenance opportunity;

- \(\omega = 1\): maintenance opportunity due to failures of other components;

- \(\omega = 2\): maintenance opportunity due to other components’ preventive maintenance.

The transition probability from state \(s \in S\) to another \(s^{{\prime }} \in S\) is defined by \(P_{{ss^{{\prime }} }} \left( a \right)\) in the following manner:

where \(p_{ij}\) is a transition probability that a component deteriorates from state \(i\) to state \(j\) and \(q_{ij}\) is defined as another transition probability induced by other components’ failures. The authors propose the method of Hidden Markov Chains to determine the transition matrix according to observed data. This suggests a certain abundance of reliable observational data on each component’s failure. By applying the concept of opportunistic maintenance to their model, they take into account the economic dependences.
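The role of the two families of transition probabilities \(p_{ij}\) (own deterioration) and \(q_{ij}\) (deterioration induced by other components’ failures) can be illustrated with a small discrete-time sketch. The blending rule below, in which the induced matrix applies with a fixed probability `pi_fail` at each step, is an assumption made purely for illustration and is not the transition law of Zhang et al. [69, 70]; the matrices and names are hypothetical.

```python
def blend(P, Q, pi_fail):
    """Effective one-step matrix: with probability pi_fail another
    component has failed and the accelerated matrix Q applies,
    otherwise the baseline deterioration matrix P applies."""
    return [
        [(1.0 - pi_fail) * p + pi_fail * q for p, q in zip(row_p, row_q)]
        for row_p, row_q in zip(P, Q)
    ]


def step(dist, M):
    """Propagate a state distribution one step through matrix M."""
    n = len(M)
    return [sum(dist[i] * M[i][j] for i in range(n)) for j in range(n)]


if __name__ == "__main__":
    # States 0 (new), 1 (degraded), 2 (failed, absorbing).
    P = [[0.9, 0.1, 0.0], [0.0, 0.8, 0.2], [0.0, 0.0, 1.0]]
    Q = [[0.7, 0.3, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]]
    M = blend(P, Q, pi_fail=0.5)
    dist = [1.0, 0.0, 0.0]
    for _ in range(2):
        dist = step(dist, M)
    print(dist)  # state distribution after two steps
```

Because each row of the blended matrix is a convex combination of two probability rows, it remains a valid transition matrix for any `pi_fail` between 0 and 1.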

Liu et al. [39] represent the interactivity by a probability matrix \(P = \left( {p_{ij} } \right)\) considering type I interactions as defined by Murthy and Nguyen [43]. Thus, a component \(i\) causes the instant failure of a component \(j\) with a probability \(p_{ij}\) and has no effect with a probability \(1 - p_{ij}\). Failure interaction decreases the reliability of a system. By accounting for the interactivity, Liu et al. [39] identify some issues in the early stages of a system’s design that could affect the manufacturer’s warranty. This shows that, in the long run, failure interaction not only has the physical implications of a failure but also accelerates the decrease of a system’s economic value. They define \(w\) as a system’s period of warranty, \(p_{i} \left( w \right)\) the probability of failure for a component \(i\) during \(w\), \(\alpha_{i} \left( w \right)\) the probability that a failure of component \(i\) will cause the system failure, and \(F_{S} \left( w \right)\) the distribution function of the system:

where \(Y_{i} = \hbox{min} \left( {T_{j} , \forall j \in \varOmega , j \ne i} \right), \varOmega = \left\{ {1,2, \ldots ,n} \right\}\). The number of failures \(N_{ij}\) of a component \(j\) caused by a component \(i\) is:

The authors apply their model to series and parallel architectures to adjust the warranty cost considering the number of failures due to type I interactions. This approach could be improved by including type II interactions. Zhang et al. [66] also tackle the issue of product warranty by considering what they call type III interactions, which we classify in this paper as type II interactions. They base their model on Satow and Osaki [50] with two components in series. However, the methods mentioned above perform poorly on complex architectures, because their core concepts revolve around key assumptions on some dependencies.
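The probability matrix \(P = \left( {p_{ij} } \right)\) of Liu et al. [39] lends itself to a short numerical illustration. The sketch below computes, for a failing component \(i\), the expected number of directly induced failures and the probability that at least one other component is induced to fail. It assumes that inductions towards different components are independent and ignores second-order cascades; both are simplifying assumptions, and the function names are hypothetical.

```python
def expected_induced(P, i):
    """Expected number of components directly failed by component i."""
    return sum(p for j, p in enumerate(P[i]) if j != i)


def prob_any_induced(P, i):
    """Probability that a failure of component i induces at least one
    other failure, assuming independent inductions."""
    none = 1.0
    for j, p in enumerate(P[i]):
        if j != i:
            none *= 1.0 - p
    return 1.0 - none


if __name__ == "__main__":
    # Hypothetical interaction matrix for three components.
    P = [
        [0.0, 0.5, 0.2],
        [0.1, 0.0, 0.0],
        [0.0, 0.0, 0.0],
    ]
    for i in range(len(P)):
        print(i, expected_induced(P, i), prob_any_induced(P, i))
```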

2.1.3 Interaction of failure modes from a system point-of-view

A system can be subject to diverse failure modes. These modes can influence each other, mutually or not. The models considered thus far are based on assumptions of failure interaction between the components of a system. They do not explicitly identify the dependences between diverse failure modes of the system as a whole. They could be applied in such cases by considering the modes as components in series, but the interactivity of failure modes can have a more complex form. Some recent papers intend to model this dependence.

Zequeira and Bérenguer [65] distinguish two types of failure modes: maintainable and non-maintainable. The modes are distinguished by whether the deterioration they cause can be corrected by maintenance. Preventive maintenance corrects the deterioration due to maintainable modes. Non-maintainable modes can only be corrected by a complete overhaul of the system. Moreover, minimal repairs are considered in the case of failures. The model is applied to components in series. It puts the stochastic dependence on display by considering that the failure rate of maintainable modes \(h_{m} \left( t \right)\) depends on the failure rate of non-maintainable modes \(h_{nm} \left( t \right)\). There is a certain similarity to the hard/soft failures reasoning but, here, only maintainability distinguishes the two types of failures. A term \(p\left( t \right)\) is defined as the probability that a non-maintainable mode will automatically cause a maintainable mode. This probability is estimated by considering the physical and structural characteristics of the system. In other words, a supporting physical model or conclusive experimental data are needed to determine how the modes are linked. Preventive maintenance is performed at periods \(kT\) where \(k = 0,1,2, \ldots\) and \(T > 0\). During a period \(\left[ {kT,\left( {k + 1} \right)T} \right[\) the maintainable failure rate is \(h_{m} \left( {t - kT} \right) + p\left( t \right)h_{nm} \left( t \right)\). Then, the system’s failure rate during period \(\left[ {kT,\left( {k + 1} \right)T} \right[\) is given by (17):

The authors use a mechanical coupling as an illustrative example, which shows applicability of the model on real-life systems. However, not all maintainable failure modes have the same impact or repair-time. Considering them as a single category alters the comprehension of a system’s deterioration process. They also propose an imperfect preventive maintenance policy to estimate an optimal complete revision period \(T\) and an optimal number of preventive replacements \(N\) that minimize the overall cost.

Castro [7] proposes an improvement on the model by Zequeira and Bérenguer [65]. He suggests that the occurrence of maintainable failures is correlated with the number of non-maintainable failures denoted by \(N_{2} \left( t \right)\) aggregated following the installation of a system. This choice is motivated by the fact that the number of non-maintainable failures in a specific period is easier to comprehend than a failure rate to estimate. The failure rate of maintainable modes \(h_{1,k} \left( t \right)\) during period \(\left[ {kT,\left( {k + 1} \right)T} \right[\) is then defined as a doubly stochastic Poisson process or a Cox process. An adjustment factor \(a > 1\) quantifies the effect of cumulative degradation due to non-maintainable failures in such a model:

where \(h_{1,0} \left( t \right)\) is the failure rate of maintainable modes before the first preventive maintenance operation. The number of failures of each distinct mode is integrated into a periodic preventive maintenance strategy. With minimal repairs used as troubleshooting actions, the strategy estimates the optimal number of minimal repairs \(N\) before a complete revision at period \(T\) that minimizes the overall cost. Models (17) and (18) share the same principle of unidirectional interactivity. Neither takes into account that two maintainable modes could be significantly distinct and interact in a bidirectional manner.

Fan et al. [13] propose a model with two failure modes that have bidirectional stochastic dependence. Their model is based on the works of Murthy and Nguyen [43, 44], Zequeira and Bérenguer [65] and Castro [7]. This is important since the assumption of failure interaction regardless of architecture suggests the possibility of a retroactive effect. Most of the previous models consider this effect as immediate, instant or total (on the whole system) while it could be gradual. The failure rates are defined as doubly stochastic Poisson processes or Cox processes. The authors consider that the failure rate of mode \(i\) depends on the number of failures of the other mode \(\bar{\iota }\) and vice versa. A performance variable is associated with each failure mode. In this model, \(t\) is defined as a function of the number of elapsed preventive maintenance cycles. In fact \(t = t_{k} + t_{L}\) where \(t_{k}\) is the time from the system’s installation to the \(k\)-th maintenance operation and \(t_{L}\) is the operating time since the last preventive action. \(h_{0,i} \left( t \right)\) is the failure rate before the first preventive maintenance. At the \(l\)-th preventive maintenance cycle, the failure rate for the mode \(i\) is:

where \(a_{i}^{{\bar{N}_{{\bar{\iota },l}}^{Z} }}\) and \(a_{i}^{{N_{{\bar{\iota },l}}^{Z} (t)}}\) are adjustment factors representing the effect of mode \(\bar{\iota }\). \(\bar{N}_{{\bar{\iota },l}}^{Z}\) is the number of failures by mode \(\bar{\iota }\) before \(t_{l}\). \(N_{{\bar{\iota },l}}^{Z} (t)\) is the number of failures by mode \(\bar{\iota }\) estimated at \(t\). \(y_{i,l}^{ + }\) is the effective age of the mode \(i\) right after the \(l\)-th preventive maintenance. \(y_{i,l}^{ + } \left( t \right)\) is the predicted effective age of the mode \(i\) right after the \(\left( {l - k} \right)\)-th preventive maintenance at \(t\). This approach is suitable for repairable systems. As an application, Fan et al. [13] develop a Cooperative Predictive Maintenance Model that relies on minimal repairs and imperfect maintenance with restricted resources. A subsequent cooperative maintenance strategy for both modes is proposed and takes into account the system’s effective age and the bidirectional interactions.

2.1.4 General interactive failure models

All the above-mentioned models share some restrictiveness due to the numerous hypotheses formulated on the structure of the system. They define a dominant component or consider a specific architecture for the overall system. Therefore, they cannot be generalized for all cases.

Sun et al. [54, 55] identify the interactions with an immediate effect and the interactions with a gradual effect. They assume the hypothesis of a gradual effect accurately represents physical systems. In a system with \(N\) components, they distinguish an independent failure rate denoted by \(h_{Ii} \left( t \right)\) inherent to each component \(i \left( {i = 1,2, \ldots ,N} \right)\) and a dependent or interactive failure rate denoted by \(h_{i} \left( t \right)\). The interactive failure rate of one component integrates the influence of other components:

\(\vec{h}_{{j_{i} }} \left( t \right)_{B}\) is the vector of failure rates before any interaction influencing component \(i\). Sun et al. [55] use the Taylor expansion to establish a parametric expression of the dependent failure rate of one component as a function of the independent failure rates of all components. The resulting analytical expression updates a failure rate from independent to dependent through a linear combination with coefficients denoted by \(\theta_{{ij_{i} }}\):

The authors call the \(\theta_{{ij_{i} }}\) interactive coefficients since they represent the weighted effect of the failure rate of one component or failure mode on another. \(\theta_{{ij_{i} }}\) lies between 0 (no interaction) and 1 (perfect interaction and immediate/simultaneous failure). A matrix of the coefficients can consequently be built. Such an approach characterizes the interactivity independently of any assumption on the architecture of the system. Yet, building the matrix is not straightforward and relies on substantial experimentation and expert opinion. The estimation of the interactive coefficients requires a partition of the failure times in order to fit independent failure rates for each component or failure mode. This requires abundant observational data for each of the \(\frac{{N^{2} }}{2}\) possible interaction scenarios. Zhao et al. [71] build on the model by Sun et al. [55]. They present an alternative to the classical method of Failure Modes and Effects Analysis (FMEA) that accounts for the interactivity in a complex system. They apply their model to a gyroscope and demonstrate that the concept of interactive failures is indeed applicable and has a significant influence on the overall reliability of a system subject to multiple failure modes. Wang and Li [59] also build on the analytic model of Sun et al. [55] and propose a redundancy allocation method for system design. They demonstrate that the effect of interactive failures can be minimized if they are properly modeled in the system design process. The parameters pertaining to reliability indexes’ interaction are summed up in Table 2.
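As a rough illustration of the linear update described above, the following sketch assumes the simplified form \(h_{i} = h_{Ii} + \sum_{j \ne i} \theta_{ij} h_{Ij}\). This form, the function name `interactive_failure_rates` and all numerical values are assumptions made for illustration; they are not taken from Sun et al. [55].

```python
from typing import List


def interactive_failure_rates(h_independent: List[float],
                              theta: List[List[float]]) -> List[float]:
    """Update independent failure rates into interactive ones.

    Assumed form (illustrative only, not the authors' exact expression):
        h_i = h_Ii + sum over j != i of theta[i][j] * h_Ij
    theta[i][j] in [0, 1] weights the effect of component j on component i.
    """
    n = len(h_independent)
    if len(theta) != n or any(len(row) != n for row in theta):
        raise ValueError("theta must be an n x n matrix")
    if any(not 0.0 <= value <= 1.0 for row in theta for value in row):
        raise ValueError("interactive coefficients must lie in [0, 1]")
    rates = []
    for i in range(n):
        influence = sum(theta[i][j] * h_independent[j]
                        for j in range(n) if j != i)
        rates.append(h_independent[i] + influence)
    return rates


# Hypothetical three-component system (failure rates per hour).
h_I = [1e-4, 2e-4, 5e-5]
theta = [
    [0.0, 0.3, 0.0],  # component 1 is influenced by component 2
    [0.0, 0.0, 0.0],  # component 2 is not influenced by the others
    [0.1, 0.5, 0.0],  # component 3 is influenced by components 1 and 2
]
h = interactive_failure_rates(h_I, theta)
```

A zero matrix returns the independent rates unchanged, which corresponds to the classical independence assumption.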

2.2 State-based interaction

In the context of condition-based maintenance, the interactivity can be represented with a system’s state variables. Sensors collect data on the system’s degradation, which helps to provide a physical interpretation of interactive failures. Keizer et al. [27] review diverse condition-based maintenance policies for systems with multiple dependent components. They consider structural, stochastic and resource dependences. Among models with stochastic dependences, they distinguish failure interaction models as follows:

- Failure induced damage: the failure of one component causes immediate damages to other components by causing an immediate failure or increasing the deterioration level;
- Load sharing: a component fails but the system keeps operating as the other components need to work harder;
- Common-mode deterioration: many components fail simultaneously.

We consider a slightly different classification since some of the models reviewed by Keizer et al. [27] are copula-based. The following approaches are not. Chen et al. [9] distinguish the natural failure of a system caused by its degradation process from traumatic failures due to external shocks caused by the environment. The degradation follows a stochastic process \(D\left( t \right)\) and a failure threshold \(D_{f}\) is fixed. The shocks occur independently of the degradation process and follow a Poisson process of intensity \(\lambda\). The traumatic failures are assumed to occur with a probability \(p\) that depends on the degradation level. The system is more prone to failure as the degradation level increases. This is particularly true for mechanical systems subject to degradation. The overall system becomes more vulnerable to its environment as it ages (e.g. proneness to corrosion, vibration, physical shocks etc.). The internal components and their mechanical links are in turn subject to the propagation of external shocks.

where \(\alpha\) is a parameter of the dependence.

As a matter of fact, the traumatic failure rate \(h_{s} \left( t \right)\) depends on the probability \(p\):

The moment of a natural failure is denoted by \(T_{d}\) and the moment of a traumatic failure by \(T_{s}\). The system’s failure times are defined as \(T = \hbox{min} \left\{ {T_{d} ,T_{s} } \right\}\). The authors consider an example with linear degradation \(D\left( t \right) = Bt\) where \(B\) is a random variable so that \(B = \beta\) for traumatic failures. Thus, the survival function of the system is:

The authors apply renewal theory to the proposed model and derive an inspection policy that minimizes the long-term cost of maintenance. In this approach, the cumulative effect of shocks and the variability of their amplitude are not accounted for because a shock either causes a traumatic failure or has no effect.

Huynh et al. [20, 21] aim to combine the competing effects of degradation, internal and external shocks. They adopt the concept of natural and traumatic failures and define the degradation as a continuous stochastic process \(X\left( t \right)\). A Gamma distribution is selected to model the degradation since it satisfactorily characterizes diverse phenomena such as erosion, corrosion, etc. The shocks follow a non-homogeneous Poisson process. The system’s failure rate depends on the degradation level. Above a fixed degradation level threshold \(M\), the system’s failure rate \(h\left( t \right)\) is altered. It means that the system becomes more vulnerable to internal and external shocks. It also suggests that there is a specific moment when the interaction becomes more significant. As an example, a component with a regulation or protective function could fail in an electronic system, and cause heating that would accelerate the system’s failure.

where \({\mathbf{1}}_{\left( . \right)}\) is an indicator function; \(h_{1} \left( t \right)\) and \(h_{2} \left( t \right)\) are two continuous and non-decreasing failure rates following the relation \(h_{1} \left( t \right) \le h_{2} \left( t \right), \forall t \ge 0\). The number of failures due to shocks \(N_{s} \left( t \right)\) is a Cox process. The authors define the following variable: \(\sigma_{s} = \inf \left\{ {t \ge 0, N_{s} \left( t \right) = 1} \right\}\). The survival function of the system is then:

\(F_{{\sigma_{M} }}\) (resp. \(f_{{\sigma_{M} }}\)) is the distribution function (resp. p.d.f.) of the time when \(M\) is reached. \(\bar{F}_{1} \left( t \right)\) is the survival function associated with \(h_{1} \left( t \right)\). Huynh et al. [20, 21] suggest that the parameters of this model, such as the threshold \(M\), \(h_{1} \left( t \right)\) and \(h_{2} \left( t \right)\), can be estimated by classical statistical methods. They also apply the principle of minimal repairs and renewal theory and obtain an inspection and maintenance policy that minimizes the long-term cost as a function of the age and the degradation level.
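The threshold-dependent failure rate described for the model of Huynh et al. [20, 21] can be illustrated with a minimal sketch: the rate equals \(h_{1} \left( t \right)\) while the degradation level is below \(M\) and \(h_{2} \left( t \right)\) once \(M\) is reached. The helper name `switching_failure_rate`, the linear forms chosen for \(h_{1}\) and \(h_{2}\) and the numerical values are hypothetical.

```python
from typing import Callable


def switching_failure_rate(h1: Callable[[float], float],
                           h2: Callable[[float], float],
                           threshold_m: float) -> Callable[[float, float], float]:
    """Return h(t, x): h1(t) while degradation x < M, h2(t) once x >= M."""
    def h(t: float, x: float) -> float:
        return h1(t) if x < threshold_m else h2(t)
    return h


# Hypothetical non-decreasing rates satisfying h1(t) <= h2(t) for all t >= 0.
def h1(t: float) -> float:
    return 0.01 * t


def h2(t: float) -> float:
    return 0.05 * t + 0.02


h = switching_failure_rate(h1, h2, threshold_m=10.0)
rate_before = h(2.0, 5.0)   # degradation below M: uses h1
rate_after = h(2.0, 10.0)   # degradation at M: uses h2
```

Keeping the degradation level as an explicit argument makes the state dependence visible: the same age \(t\) yields two different rates depending on whether \(M\) has been crossed.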

Do et al. [11] consider a system consisting of two dependent components that are connected in series. This means that the two components are critical to the functioning of the system. Their dependence is expressed by the relationship of their degradation processes. The accumulation of wear is described by a scalar random variable \(X_{t}^{i}\) and a component \(i\) is assumed to have failed when a threshold \(L_{i}\) is reached. The accumulation of wear at \(t + 1\) becomes:

where \(\Delta X^{i}\) is the independent random increment of the deterioration level, \(f\left( {X_{t}^{j} } \right)\) represents the impact of a component \(j\) on \(i\), and \(\sigma^{j}\), \(\mu^{j}\) are positive parameters quantifying the influence of a component \(j\) on \(i\). The parameters of the acceleration of the degradation processes \(\sigma^{j}\), \(\mu^{j}\) would have to be estimated through experimental methods or abundant condition-based maintenance historical data. Assaf et al. [3, 4] present a model based on Do et al. [11] involving similar issues for multicomponent systems in the form of degradation rate-state interactions. The interaction is defined as an acceleration of the wear indicators caused to a component by neighbor components.
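To make the degradation interaction of Do et al. [11] more concrete, the sketch below simulates two wear paths under an assumed additive update \(X_{t + 1}^{i} = X_{t}^{i} + \Delta X^{i} + f\left( {X_{t}^{j} } \right)\) with \(f\left( x \right) = \mu^{j} x^{{\sigma^{j} }}\). The additive form, the power form of \(f\), the gamma-distributed increments and every numerical value are assumptions made for illustration only.

```python
import random
from typing import List, Optional, Tuple


def simulate_two_components(steps: int, mu: Tuple[float, float],
                            sigma: Tuple[float, float],
                            seed: int = 0) -> List[Tuple[float, float]]:
    """Simulate wear paths (X^1_t, X^2_t) with state-dependent interaction.

    mu[j] and sigma[j] quantify the influence of component j on the other
    component through the assumed function f(x) = mu[j] * x ** sigma[j].
    """
    rng = random.Random(seed)
    x1, x2 = 0.0, 0.0
    path = [(x1, x2)]
    for _ in range(steps):
        dx1 = rng.gammavariate(0.5, 1.0)  # independent increment, component 1
        dx2 = rng.gammavariate(0.5, 1.0)  # independent increment, component 2
        # Tuple assignment: both updates use the states at time t.
        x1, x2 = (x1 + dx1 + mu[1] * x2 ** sigma[1],
                  x2 + dx2 + mu[0] * x1 ** sigma[0])
        path.append((x1, x2))
    return path


def first_passage(path: List[Tuple[float, float]], component: int,
                  threshold: float) -> Optional[int]:
    """First step at which the wear of a component reaches its threshold L_i."""
    for step, state in enumerate(path):
        if state[component] >= threshold:
            return step
    return None


# Same seed, hence same independent increments, with and without interaction.
independent = simulate_two_components(50, (0.0, 0.0), (1.0, 1.0), seed=1)
dependent = simulate_two_components(50, (0.01, 0.02), (1.0, 1.0), seed=1)
```

Because both runs share the same random increments, any difference between the two paths is due to the interaction term alone, so the threshold is reached no later in the dependent case.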

Song et al. [52, 53] work on multicomponent systems with the hypothesis that each component has two failure modes that are stochastically dependent. Each component is subject to degradation and shocks. This approach is motivated by the fact that a component’s degradation is often easier to evaluate experimentally than the interactive effects of other components. It would, for example, be hard to evaluate with precision how the corrosion of one component could have caused the deformation of another. Components are then assumed to cause random shocks on each other. A resistance limit to shocks \(D_{i}\) specific to the component \(i \left( {i = 1,2, \ldots ,n} \right)\) is fixed. A component degrades following a process \(X_{i} \left( t \right)\) with a failure threshold \(H_{i}\). Contrary to the two aforementioned models, the cumulative effects of shocks are calculated and this increases the degradation level. \(W_{ij}\), \(Y_{ij}\) and \(S_{i} \left( t \right)\) are respectively defined as the amplitude of the \(j\)-th shock on component \(i\), the proportion of the \(j\)-th shock impact on component \(i\) and the cumulative sum of the \(Y_{ij}\). The survival function of the system depends on \(F_{{W_{i} }} \left( \omega \right)\) and \(F_{{X_{i} }} \left( {x_{i} , t} \right)\) that are respectively the cumulative distribution functions of \(W_{ij}\) and \(X_{{S_{i} }} \left( t \right) = X_{i} \left( t \right) + S_{i} \left( t \right)\). The reliability of a single component depends on the survival probability in case of a single shock and the probability that the failure threshold is not reached by the accumulated shocks:

where \(G_{i} \left( t \right)\) is the cumulative distribution function of \(X_{i} \left( t \right)\) and \(f_{{Y_{i} }}^{k} \left( u \right)\) is the distribution function of the sum of \(k\) independent and identically distributed variables \(Y_{ij}\). The survival function of the system is then determined for standard architectures (series, parallel, series–parallel). For example, the reliability of a system with components in series is:

A maintenance strategy that minimizes the cost is derived and an optimal inspection period is estimated. It is important to note, however, that the proposed model considers a number of shocks common to all components. The failure threshold is fixed and has no variability. The amplitude of shocks is not considered a random process when in reality it could be.

One of the more elaborate approaches is that of Rafiee et al. [48], which links the concept of interactive failures with a changing degradation rate. This work results from earlier collaborative research on multiple competing failure modes, changing failure limits and shock damage interaction: Feng and Coit [15], Peng et al. [47], Jiang et al. [24], Arab et al. [2]. The proposed approach considers two conditionally independent events: \(NHF_{t}\), meaning that no hard failure occurs by time \(t\), and \(NSF_{t}\), meaning that no soft failure occurs by time \(t\). Hard failures are due to random shocks exceeding a threshold \(D_{1}\). These shocks occur following a Poisson process of intensity \(\lambda\). The \(k\)-th random shock is denoted by \(W_{k}\) with a c.d.f. \(F_{W} \left( w \right)\) and the number of shocks is denoted by \(N\left( t \right)\). Soft failures are caused by the degradation. A threshold \(H\) is defined and can be reached by the combined effect of random shocks and natural degradation. The natural degradation is defined as \(X\left( t \right) = \varphi + \beta t + \varepsilon\), where the initial degradation \(\varphi\) and the degradation rate \(\beta\) are random variables, and the random error \(\varepsilon\) follows a normal distribution. The degradation rate \(\beta\) can change from \(\beta_{1}\) to \(\beta_{2}\) due to a trigger shock, the \(J\)-th shock, and become:

where \(T_{J}\) is the transition time, the time when the rate \(\beta\) changes. \(Y_{k}\) and \(S\left( t \right)\) denote respectively the \(k\)-th shock’s damage size and the total damage size due to all random shocks. The overall degradation is represented by \(X_{S} \left( t \right) = X\left( t \right) + S\left( t \right)\) where \(S\left( t \right) = \mathop \sum \limits_{k = 1}^{N\left( t \right)} Y_{k}\) if \(N\left( t \right) > 0\) and \(S\left( t \right) = 0\) otherwise. The survival function of a device subject to two dependent competing failure processes is denoted by \(R\left( t \right)\). Equation (31) sums up the proposed model:

where \(f_{{T_{j} }} \left( {t_{j} |j} \right)\) is the p.d.f. of \(T_{J}\) for \(J = j\).

This approach is of particular interest since it compares four distinct models of shock interaction and proposes an application to micro-electromechanical systems (MEMS). The study also contains a sensitivity analysis to provide a physical interpretation of the interactivity. The shock processes considered are:

- The generalized extreme shock model: a single shock above a critical threshold value changes the degradation rate of a system.
- The generalized \(\delta\)-shock model: the degradation rate changes when the interval between two sequential shocks above a critical threshold value is shorter than a limit \(\delta\).
- The generalized \(m\)-shock model: an accumulation of \(m\) shocks above a critical threshold value changes the degradation rate.
- The generalized consecutive shocks model: a succession of \(n\) shocks above a critical threshold value changes the degradation rate.
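The four trigger rules listed above can be sketched as simple searches for the index of the trigger shock in a recorded shock sequence. This follows the verbal descriptions given here rather than the formal definitions of Rafiee et al. [48]; the function names, the 0-based indexing and the sample data are hypothetical.

```python
from typing import List, Optional


def trigger_extreme(magnitudes: List[float], critical: float) -> Optional[int]:
    """Index of the first shock above the critical value."""
    for k, w in enumerate(magnitudes):
        if w > critical:
            return k
    return None


def trigger_delta(times: List[float], magnitudes: List[float],
                  critical: float, delta: float) -> Optional[int]:
    """Index of the first above-critical shock that arrives less than delta
    after the previous above-critical shock."""
    previous = None
    for k, (t, w) in enumerate(zip(times, magnitudes)):
        if w > critical:
            if previous is not None and t - previous < delta:
                return k
            previous = t
    return None


def trigger_m_shock(magnitudes: List[float], critical: float,
                    m: int) -> Optional[int]:
    """Index of the m-th shock above the critical value."""
    count = 0
    for k, w in enumerate(magnitudes):
        if w > critical:
            count += 1
            if count == m:
                return k
    return None


def trigger_consecutive(magnitudes: List[float], critical: float,
                        n: int) -> Optional[int]:
    """Index closing the first run of n consecutive above-critical shocks."""
    run = 0
    for k, w in enumerate(magnitudes):
        run = run + 1 if w > critical else 0
        if run == n:
            return k
    return None


# Hypothetical shock record: arrival times and magnitudes.
times = [1.0, 2.5, 3.0, 6.0, 6.4, 7.0]
magnitudes = [0.4, 1.2, 0.3, 1.5, 1.1, 1.3]
critical = 1.0
```

Each function returns `None` when the rule is never satisfied, in which case the degradation rate would keep its initial value \(\beta_{1}\) over the observed record.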

Liu et al. [40] propose an extension to this model by including the damage due to self-regulation in self-healing systems.

These diverse models of shock processes represent the internal failure interactions as well as the external effects of a system’s environment. Other models considering a changing degradation rate are available in the literature such as Bian and Gebraeel [6], Rasmekomen and Parlikad [49], Hao et al. [14, 18, 51]. Yet, for all of these models, the idea that the amplitude of the internal and external shocks can vary or be random needs to be studied further. In fact, the changing degradation rate is in most cases subject to a constant factor.

Liang et al. [36] build on the previous work of Liang and Parlikad [37] and Rasmekomen and Parlikad [49] to deal with the issue of fault propagation. They consider that the phenomena of dependences, induced or inherent to a system, can interact and lead to a cooperative acceleration of dependent failures and cause new dependences. They model the deterioration of a multicomponent system as a multi-layered vector-valued continuous-time Markov chain. They distinguish non-critical components from critical components. For a system with \(\nu\) critical components, the normal deterioration is \(\left\{ {X_{0} \left( t \right)} \right\} = \left\{ {X_{1,0} \left( t \right), \ldots , X_{\nu ,0} \left( t \right)} \right\}\). They establish the inherent deterioration rate of a critical component \(l\):

where \(r_{l,0}\) is the intrinsic deterioration rate and \(g\left( . \right)\) is the affected deterioration rate, which is a linear function of other critical components’ conditions \(X_{j,0} \left( t \right)\). Non-critical components also influence the deterioration of the critical components by affecting their state. After the \(h\)-th malfunction of a non-critical component, the inherent deterioration of a critical component \(l\) becomes:

The transition rate between those two states is:

Liang et al. [36] remark that the current knowledge of fault propagation relies heavily on expert opinion. This suggests a potential bias which remains to be addressed.

The parameters pertaining to state-based interaction are summed up in Table 3.

2.3 Copula-based interaction model

Copula-based interaction models differ from the above models in the sense that they rely on little to no assumption on interaction mechanisms. They define the reliability indexes and/or the state variables of components as random variables and model the dependence analytically. Copulas are used to build multivariate probability distributions which, in most cases, consider that the marginal probability distribution of each variable is uniform. A copula \(C\) can be defined as the joint distribution of \(p\) random variables \(U_{1} , U_{2} , \ldots ,U_{p}\), each of which is marginally uniformly distributed, so that the joint cumulative distribution function of such a distribution is:

$$C\left( {u_{1} , \ldots ,u_{p} } \right) = P\left( {U_{1} \le u_{1} , \ldots ,U_{p} \le u_{p} } \right)$$(35)

The copulas are parametric functions. Their parameters govern the intensity of the dependence. There is a large number of copulas. The most commonly used are Archimedean and Gaussian copulas. Archimedean copulas have a closed form. A unique parameter represents the intensity of the dependence. They have the advantage of being applicable to cases of high dimensionality. Some examples of widely known Archimedean copulas are Ali-Mikhail-Haq, Clayton, Frank and Gumbel copulas. Gaussian copulas are determined following the matrix of correlation of the considered random variables. Kolev et al. [28] provide useful information regarding copulas in their review.
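As a small illustration of the closed form of Archimedean copulas mentioned above, the sketch below implements the standard bivariate Clayton and Frank copulas. The function names and the evaluation points are chosen for illustration only.

```python
import math


def clayton(u: float, v: float, theta: float) -> float:
    """Bivariate Clayton copula, theta > 0 (dependence grows with theta)."""
    if theta <= 0:
        raise ValueError("theta must be positive")
    if u == 0.0 or v == 0.0:
        return 0.0
    return (u ** -theta + v ** -theta - 1.0) ** (-1.0 / theta)


def frank(u: float, v: float, theta: float) -> float:
    """Bivariate Frank copula, theta != 0."""
    if theta == 0:
        raise ValueError("theta must be non-zero")
    numerator = math.expm1(-theta * u) * math.expm1(-theta * v)
    return -math.log1p(numerator / math.expm1(-theta)) / theta


# Joint probability that both uniform variables fall below 0.5.
independent_value = 0.5 * 0.5
clayton_value = clayton(0.5, 0.5, theta=2.0)
frank_value = frank(0.5, 0.5, theta=5.0)
```

With a positive parameter, both copulas return a joint probability above the independent product 0.25, which is how the single parameter encodes the intensity of the dependence.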

While it could be argued that some of the following models have hybrid traits resembling reliability-index and state-based interaction models, we classify them as copula-based since the interactivity between components and/or failure modes is modelled through candidate copulas which are the focus of these approaches.

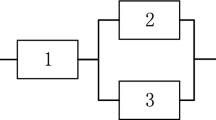

Limbourg et al. [38] explore the influence of spatial dependencies on a multi-component system’s reliability. They study diverse architectures, especially voting systems. Mostly, they emphasize that neighboring components show dependent failures. A Gaussian copula \(C\) is used to model the dependent failure probabilities among neighboring components. Given a state variable \(x\), the dependent failure probability \(F\left( x \right)\) of a system with \(n\) components is:

where \(F_{i} \left( x \right)\) \(\left( {i = 1, \ldots n} \right)\) is the failure probability of each component.

A copula can directly link reliability indexes. For example, Jiayin et al. [25] use a copula \(C\) to model the dependence of \(n\) components in a system. They propose a characterization with a copula following the type of architecture: series or parallel. The reliability of the system is written:

- For a system with \(n\) components in parallel:

$$R_{parel} \left( t \right) = 1 - C_{n} \left( {1 - R_{1} \left( t \right), \ldots , 1 - R_{n} \left( t \right)} \right)$$(37a)

- For a series system:

$$R_{S} \left( t \right) = \Delta_{{1 - R_{1} \left( t \right)}}^{1} \ldots \Delta_{{1 - R_{n} \left( t \right)}}^{1} C_{n} \left( {u_{1} , u_{2} , \ldots , u_{n} } \right)$$(37b)

where \(\Delta_{{x_{1} }}^{{x_{2} }} f\left( x \right) = f\left( {x_{2} } \right) - f\left( {x_{1} } \right)\) and \(u_{i} = 1 - R_{i}\) is the failure probability of a single component \(i\). The authors choose the Gumbel copula. The latter is Archimedean and has a unique parameter θ that represents the degree of correlation of the random variables. This parameter can be estimated by the maximum likelihood method.
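Equations (37a) and (37b) can be evaluated numerically, as in the sketch below. It uses the standard \(n\)-dimensional Gumbel copula and expands the difference operators of (37b) as a signed sum over the corners of the box \(\left[ {1 - R_{i} , 1} \right]\). The function names and the component reliabilities are hypothetical, and the code is not taken from Jiayin et al. [25].

```python
import math
from itertools import product
from typing import Sequence


def gumbel_copula(u: Sequence[float], theta: float) -> float:
    """n-dimensional Gumbel copula, theta >= 1 (theta = 1 is independence)."""
    if theta < 1:
        raise ValueError("theta must be >= 1")
    if any(x <= 0.0 for x in u):
        return 0.0
    total = sum((-math.log(x)) ** theta for x in u)
    return math.exp(-total ** (1.0 / theta))


def parallel_reliability(r: Sequence[float], theta: float) -> float:
    """Eq. (37a): 1 - C(1 - R_1, ..., 1 - R_n)."""
    return 1.0 - gumbel_copula([1.0 - x for x in r], theta)


def series_reliability(r: Sequence[float], theta: float) -> float:
    """Eq. (37b): C-volume of the box [1 - R_1, 1] x ... x [1 - R_n, 1]."""
    total = 0.0
    for corner in product((0, 1), repeat=len(r)):
        # corner[i] == 1 selects the lower bound 1 - R_i and flips the sign.
        point = [1.0 - x if c else 1.0 for x, c in zip(r, corner)]
        total += (-1) ** sum(corner) * gumbel_copula(point, theta)
    return total


# Hypothetical component reliabilities at a fixed time t.
r = [0.9, 0.8]
series_indep = series_reliability(r, theta=1.0)      # product of R_i
series_dep = series_reliability(r, theta=2.0)
parallel_indep = parallel_reliability(r, theta=1.0)  # 1 - product of (1 - R_i)
parallel_dep = parallel_reliability(r, theta=2.0)
```

In this example, positive dependence raises the series reliability and lowers the parallel reliability relative to the independent case. The corner sum has \(2^{n}\) terms, so this direct expansion is only practical for small \(n\).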

Similarly, Jia et al. [23] demonstrate how a copula can be used to evaluate reliability indexes for multicomponent systems with failure interactions among the components. They provide an illustration through the Clayton copula. They especially address the survival function, the failure rate and the mean time to failure for series, parallel, and k-out-of-n systems. Eryilmaz [12] also studies k-out-of-n systems, considering \(n\) dependent components with different weights and diverse failure distributions. Examples with Clayton and Gumbel copulas are included in the paper. This shows the wide applicability of copula-based models. Yet, even though the proposed methods could be applied, usability is another issue: the mathematical complexity calls for a certain expertise or supporting algorithms. There is also a limitation in the assumption that diverse components would all interact with each other following the same mechanism and a single interaction copula. In response, Navarro and Durante [46] go a step further in the determination of a joint reliability function of residual lifetimes for a multicomponent system. Their study of coherent systems leads them to define special cases of dependence mechanisms:

- All the components of the system are working;
- Some components have failed at given times;
- Some components have failed at some unknown failure times.

A distortion function allows the distinction of such cases and is the copula linking the failure functions of all the components. Yet, even though the parameters of the chosen function are tailored to specific cases, it is supposed that all the components involved follow the same failure interaction mechanism.

Xu et al. [62] study a multistate manufacturing system and emphasize that in most cases not all components in a system are stochastically dependent. They define the failure interaction function as follows:

where \(C_{i} \left( x \right)\) is the general failure rate based on a copula of components with failure interaction, \(P_{j} \left( x \right)\) is the failure rate of a dependent component \(j\), and \(\partial_{i}\) is the interaction coefficient of component \(i\). For high accuracy and to deal with problems of small data, Xu et al. [62] suggest that the Grey system theory be used for the estimation of the parameters rather than classical methods like the Moment Estimation Method or Maximum Likelihood Estimation Method. Yet, the issue of the mathematical complexity of the overall model remains and is combined with the empirical nature of the Grey system theory. It limits the physical interpretation of the interaction mechanisms.

Copulas can also link degradation processes in diverse ways. Guo et al. [17] aim to determine the joint reliability \(R\) of two degradation processes that cause dependent competing risks. They propose a reliability model based on a copula \(C\). The latter models the interdependence of the two phenomena and integrates the reliabilities \(R_{1}\) and \(R_{2}\) associated with each degradation process. The authors suggest two distinct definitions of the copula.

\(\theta\) is a vector of the copula parameters. It governs the strength of the dependence. Simulated data are used in this study. \(\theta\) is estimated by the maximum likelihood method. Gumbel, Clayton, t- and Gaussian copulas are comparatively applied in the model. The goodness of fit is evaluated by the following criteria: Log-likelihood (LL), Akaike information criterion (AIC), Bayesian information criterion (BIC). Moreover, the models obtained are compared with each other using the average relative error (ARE). Guo et al. [17] identify the Gaussian copula as the best candidate function for both copula definitions since it gives relatively more precise results.
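The selection criteria named above follow the standard definitions \(AIC = 2k - 2LL\) and \(BIC = k\ln \left( n \right) - 2LL\), where \(k\) is the number of estimated parameters and \(n\) the sample size. The sketch below ranks candidate copulas accordingly; the candidate labels and log-likelihood values are placeholders and do not reproduce any result of Guo et al. [17].

```python
import math
from typing import Dict, List, Tuple


def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike information criterion (lower is better)."""
    return 2.0 * n_params - 2.0 * log_likelihood


def bic(log_likelihood: float, n_params: int, n_obs: int) -> float:
    """Bayesian information criterion (lower is better)."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


def rank_candidates(fits: Dict[str, Tuple[float, int]],
                    n_obs: int) -> List[Tuple[str, float, float]]:
    """Return (name, AIC, BIC) tuples sorted by increasing AIC.

    fits maps a candidate name to (maximized log-likelihood, parameter count).
    """
    table = [(name, aic(ll, k), bic(ll, k, n_obs))
             for name, (ll, k) in fits.items()]
    return sorted(table, key=lambda row: row[1])


# Placeholder fits for three unnamed candidates (not real results).
fits = {
    "copula_A": (-100.0, 1),
    "copula_B": (-98.5, 2),
    "copula_C": (-104.0, 1),
}
ranking = rank_candidates(fits, n_obs=100)
best_by_aic = ranking[0][0]
```

Because BIC penalizes additional parameters more heavily than AIC for samples of this size, the two criteria can disagree, which is one reason several criteria are reported together.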

Wang and Pham [60] study competing dependent risks related to degradation and random shocks. The survival function \(R\left( t \right)\) of a system with \(n\) components is determined as a function of the number of probable fatal shocks \(N\left( t \right)\) through a copula \(C\).

Constant copulas (Normal, Plackett, Gumbel, Clayton, t-, Gaussian, etc.) and time-varying copulas (Normal, Rotated-Gumbel, Symmetrized Joe-Clayton) are comparatively considered as candidates. The goodness of fit is evaluated according to diverse criteria: Log-likelihood (LL), Akaike information criterion (AIC), Bayesian information criterion (BIC). Wang and Pham [60] demonstrate that time-varying copulas produce better results than constant copulas because they better fit the simulated data. This shows that there is a possible variability in the phenomenon of failure interaction. An and Sun [1] follow the same line of thought as Wang and Pham [60] and develop a similar model for dependent degradation processes and shock loads above a certain level. This model is tested on a MEMS application with diverse candidate copulas.

Xi et al. [61] use a distinctive approach that accounts for the interactivity within a complex system. They develop a sampling method involving a copula in the context of Residual-Useful-Life (RUL) prediction. Their method consists of two steps: first, statistical learning of the historical data and second real-time RUL prediction. A copula \(C\) is used to model the dependences between failure times \(T_{i}\) and degradation levels \(T_{N}\) of the system considered:

where \(F\) is an \(N\)-dimensional distribution function with marginal functions \(F_{1} , \ldots ,F_{N}\). The copula is selected following the Bayesian approach of Huard et al. [19]. While this method does not explicitly model the dependency between failure modes, it builds a relation between degradation levels and any other type of failure. This represents how the degradation of the system makes it more prone to its diverse failure modes.

Mercier and Pham [42] take a different direction. They consider a system with two units and model the failure interaction by a deterioration process following a bivariate non-decreasing Lévy process: \(\left( {X_{t} = \left( {X_{t}^{\left( 1 \right)} ,X_{t}^{\left( 2 \right)} } \right)} \right)_{t > 0}\), a process with range \({\mathbb{R}}_{ + }^{2}\) starting from \(\left( {0, 0} \right)\). Tankov [57] introduced Lévy copulas to model the dependencies between components of a multidimensional spectrally positive Lévy process. The system is considered as failed when it reaches a failure zone \({\mathcal{L}} \subset {\mathbb{R}}_{ + }^{2}\). The failure time is:

Considering \(L_{1} > 0\) and \(L_{2} > 0\) as the respective failure thresholds of units 1 and 2, three situations are studied:

- Units set in series: \({\mathcal{L}} = {\mathbb{R}}_{ + }^{2} \backslash \left( {\left[ {0, L_{1} } \right[ \times \left[ {0, L_{2} } \right[} \right)\);
- Units set in parallel: \({\mathcal{L}} = \left[ {L_{1} , \infty } \right[ \times \left[ {L_{2} , \infty } \right[\);
- Both components of \(\left( {X_{t} } \right)_{t > 0}\), standing for different wear indicators of a single system.
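The series and parallel failure zones above translate into simple membership tests, and the failure time is the first time the wear path enters the zone. The sketch below applies this to a discretely sampled path, which only approximates the hitting time of a continuous-time process. The function names and the sample path are hypothetical, and the third case (two wear indicators of a single system) is not covered.

```python
from typing import Iterable, Optional, Tuple


def in_failure_zone(x1: float, x2: float, l1: float, l2: float,
                    structure: str) -> bool:
    """Membership test for the failure zone of a two-unit system."""
    if structure == "series":
        # Complement of [0, L1[ x [0, L2[: at least one unit has failed.
        return x1 >= l1 or x2 >= l2
    if structure == "parallel":
        # [L1, inf[ x [L2, inf[: both units have failed.
        return x1 >= l1 and x2 >= l2
    raise ValueError("structure must be 'series' or 'parallel'")


def first_failure_time(path: Iterable[Tuple[float, float, float]],
                       l1: float, l2: float,
                       structure: str) -> Optional[float]:
    """First sampled time t at which (X1, X2) lies in the failure zone."""
    for t, x1, x2 in path:
        if in_failure_zone(x1, x2, l1, l2, structure):
            return t
    return None


# Hypothetical non-decreasing wear path sampled as (t, X1, X2).
path = [(0.0, 0.0, 0.0), (1.0, 0.4, 0.2), (2.0, 0.9, 0.5),
        (3.0, 1.1, 0.8), (4.0, 1.6, 1.2)]
t_series = first_failure_time(path, 1.0, 1.0, "series")
t_parallel = first_failure_time(path, 1.0, 1.0, "parallel")
```

On the same path the series system always fails no later than the parallel one, since the parallel failure zone is contained in the series failure zone.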

Since the increments in a Lévy process are supposed to be independent, there is no specific closed form that represents the interactivity. The wear indicators contribute collaboratively to the assessment of the system’s overall degradation. The studied system is assumed to be continuously monitored so that, upon failure, a signal is sent to trigger an instantaneous perfect repair. Based on this model, the authors propose a preventive maintenance policy assessed through a cost function on an infinite time horizon. The determined policy performs better than a simple periodic replacement policy. Likewise, Li et al. [34] model the stochastic dependence between the degradation of two components due to a common environment with the Clayton-Lévy copula. Yet, the lack of traceability and the complexity of the Lévy process limit the understanding of failure mechanisms and the proposal of pragmatic maintenance actions.

Furthermore, determining the relationship between maintenance and failure interaction is a work in progress. Yang et al. [64] establish a joint survival function for a repairable multicomponent system. The system is subject to partially perfect repair: since only one component at a time fails and causes the system to fail, only the failed component is fully repaired. The latent age to failure of each component \(i\), denoted by \(d_{i}\), is then critical in the calculation of the system’s survival function. The latter is defined as a multivariate Weibull distribution constructed via Archimedean (Gumbel–Hougaard copula) or Gaussian copulas. For a system with \(K\) components, this joint distribution is denoted by \(S\left( {d_{1} , \ldots , d_{K} ,\theta } \right)\) where \(\theta\) is the vector of parameters of the model estimated by Maximum Likelihood. Furthermore, the authors use hypothesis testing to test the statistical dependency of component failures. Yet, even though this paper offers some insight into a relationship between maintenance actions (partially perfect repair) and failure dependencies, it remains limited since maintenance operations can be quite diverse (minimal repair, perfect repair, etc.). Zhang and Yang [67] and Yang et al. [63] rely on this model to develop a maintenance approach that involves renewal theory. They specifically use Clayton and Gaussian copulas. However, the complexity of the resulting model requires a simulation-based optimization approach with stochastic approximation.

Zhang et al. [68] focus their interest on Accelerated Life Tests. Considering \(k\) different stress levels \(S_{i}\) \(\left( {i = 1, \ldots ,k} \right)\), they aim to define a joint survival function for a system with \(p\) failure modes by using a copula \({\hat{\text{C}}}_{p}\). They do so by making the assumptions that the distribution families, the mechanism of each competing failure mode and the copula used to construct the joint survival function will not change under different stress levels. In reality, the interaction mechanisms might be affected by the difference of stress levels. The survival copula \(\widehat{\text{C}}_{p} \left( {R_{i1} (t), \ldots ,R_{ip} \left( t \right) |\theta_{C} } \right)\), where \(R_{ij} \left( t \right)\) is the reliability considering failure mode \(j\) under \(S_{i}\) and \(\theta_{C}\) is the parameter vector, can be Archimedean as suggested by the authors. In fact, they use the Gumbel–Hougaard copula due to its relative simplicity.

The parameters pertaining to copula-based interaction are summed up in Table 4.

3 Comparative study of failure interaction models

The above-mentioned approaches share the general advantage of accounting for the phenomenon of failure interaction. They are therefore more realistic than classical techniques that consider failures as independent. Their other advantages and limitations are discussed below under three aspects: concepts, methods and applications.

3.1 Concepts

The conceptual differences between the models relate to their starting assumptions and to the meaning given to the concepts used to justify the failure dependence hypothesis. These aspects are summed up in Table 5.

One can notice that reliability indexes’ interaction models rely on elaborate assumptions about the system’s structure. The dependence is one-sided and, in most cases, some entities are assumed beforehand to be dominant. Moreover, these models are only applied to simple architectures (series, parallel, etc.). The main issue with state-based interaction models is that the interactivity within complex systems is rarely measurable by a finite set of state variables. The interactivity itself is rarely observable since only components critical to the system’s primary function are monitored, whereas the interactions could affect more than just the components equipped with sensors. There is therefore a risk of significant bias in the interpretation of results. Copula-based interaction models are quite useful when there is little prior information about the system. However, they rely on rigid and complex analytical functions selected from a finite set, and the failure interaction might not follow the form of the preselected functions. Moreover, some specificities of the architecture can be distinguished through qualitative and experimental information. This information would be left out if a solely analytical approach were used.

3.2 Methods

The differences in terms of methodology relate to the techniques and the study process used to develop the starting hypotheses. Table 6 sums up the issues related to methodology.

The methods used for copula-based interaction models have significant mathematical complexity in comparison to other methods. It is also difficult to associate a physical explanation with their parameters, even though they draw on a large array of well-known statistical learning methods and tests. Reliability indexes’ interaction models are applicable to diverse preventive maintenance policies. State-based interaction models are limited to the domain of condition-based maintenance but have the best interpretability. Most models in the literature rely on experimental designs and simulated data, although real systems may exhibit higher variability.

3.3 Applications

The applications are numerous when it comes to interactive failures. But how efficient are they? The criteria to consider include the performance of the methods employed in terms of financial savings or availability improvement, and the level of expertise and complexity required for the application. Research is often subject to fewer practical constraints than field applications. Industrial companies are usually limited in experimental capability, expertise and time. It is therefore important to see which approaches can be applied in a general setting with basic or generic tools. Table 7 emphasizes the issue of applicability.

Copula-based interaction models are the least practical as standalone applications due to their analytical complexity. Reliability indexes’ interaction models are analytically simpler but still difficult to apply due to their high computational cost. This issue is amplified by the lack of dedicated software for reliability models that integrate the interactivity. State-based interaction models would provide the best results in industry. Yet, they require the highest economic investment in order to be applied.

4 Conclusion

The interactivity of failure modes is a concept presented in the literature in the context of stochastic dependence. This failure interaction can be defined as a gradual, immediate, unidirectional or bidirectional phenomenon. Diverse models are reviewed and classified into three distinct categories: reliability indexes’ interaction, state-based interaction, and copula-based interaction.

All of the models presented are more realistic than classical methods that overlook the interactivity or assume it to be negligible. Yet, they have a few limitations. Copula-based interaction models are data-driven and based on a limited set of analytical functions. Their complexity makes them impractical for industry, especially since the interpretation of these models can be ambiguous. Reliability indexes’ interaction models can be paired with numerous classical statistical learning and preventive maintenance methods. However, they rely on restrictive hypotheses about a system’s structure. State-based interaction models have the best interpretability. However, condition-based maintenance can be costly and the state variables might capture only partial aspects of the phenomenon studied.

Another general observation is that all models lack a representation of the variability in the interactivity phenomenon. In most cases, the parameters, factors or coefficients used to account for the interactivity are defined as constant numbers or effects rather than random variables. In future work, it would be useful to put forth an interaction model that integrates this variability more comprehensively into the chosen coefficients or parameters of interaction.

References

An Z, Sun D (2017) Reliability modeling for systems subject to multiple dependent competing failure processes with shock loads above a certain level. Reliab Eng Syst Saf 157:129–138

Arab A, Keedy E, Feng Q (2013) Reliability analysis for implanted multi-stent systems with stochastic dependent competing risk processes. In: Proceedings of the IIE annual conference

Assaf R, Do P, Scarf P, Nefti-Meziani S (2017) Diagnosis for systems with multi-component wear interactions. In: 2017 IEEE international conference on prognostics and health management (ICPHM). IEEE, pp 96–102

Assaf R, Do P, Scarf P, Nefti-Meziani S (2016) Wear rate-state interaction modelling for a multi-component system: models and an experimental platform. IFAC Pap OnLine 49(28):232–237

Blanchard BS, Fabrycky WJ (1990) Systems engineering and analysis, vol 4. Prentice Hall, New Jersey

Bian L, Gebraeel N (2014) Stochastic modeling and real-time prognostics for multi-component systems with degradation rate interactions. IIE Trans 46(5):470–482

Castro I (2009) A model of imperfect preventive maintenance with dependent failure modes. Eur J Oper Res 196(1):217–224

Čepin M (2011) Assessment of power system reliability: methods and applications. Springer, London

Chen L, Ye Z, Huang B (2011) Condition-based maintenance for systems under dependent competing failures. In: 2011 IEEE international conference on industrial engineering and engineering management (IEEM)

Cho DI, Parlar M (1991) A survey of maintenance models for multi-unit systems. Eur J Oper Res 51(1):1–23

Do P, Scarf P, Iung B (2015) Condition-based maintenance for a two-component system with dependencies. IFAC Pap OnLine 48(21):946–951

Eryilmaz S (2014) Multivariate copula based dynamic reliability modeling with application to weighted-k-out-of-n systems of dependent components. Struct Saf 51:23–28

Fan H, Hu C, Chen M, Zhou D (2011) Cooperative predictive maintenance of repairable systems with dependent failure modes and resource constraint. IEEE Trans Reliab 60(1):144–157

Fan M, Zeng Z, Zio E, Kang R (2017) Modeling dependent competing failure processes with degradation-shock dependence. Reliab Eng Syst Saf 165:422–430

Feng Q, Coit DW (2010) Reliability analysis for multiple dependent failure processes: an MEMS application. Int J Perform Eng 6(1):100

Golmakani HR, Moakedi H (2012) Periodic inspection optimization model for a two-component repairable system with failure interaction. Comput Ind Eng 63(3):540–545

Guo C, Wang W, Guo B, Peng R (2013) Maintenance optimization for systems with dependent competing risks using a copula function. Eksploatacja i Niezawodność 15:9–17

Hao S, Yang J, Ma X, Zhao Y (2017) Reliability modeling for mutually dependent competing failure processes due to degradation and random shocks. Appl Math Model 51:232–249

Huard D, Evin G, Favre A-C (2006) Bayesian copula selection. Comput Stat Data Anal 51(2):809–822

Huynh KT, Barros A, Berenguer C, Castro IT (2011) A periodic inspection and replacement policy for systems subject to competing failure modes due to degradation and traumatic events. Reliab Eng Syst Saf 96(4):497–508

Huynh KT, Castro IT, Barros A, Berenguer C (2012) Modeling age-based maintenance strategies with minimal repairs for systems subject to competing failure modes due to degradation and shocks. Eur J Oper Res 218(1):140–151

Jhang J-P, Sheu S-H (2000) Optimal age and block replacement policies for a multi-component system with failure interaction. Int J Syst Sci 31(5):593–603

Jia X, Wang L, Wei C (2014) Reliability research of dependent failure systems using copula. Commun Stat Simul Comput 43(8):1838–1851

Jiang L, Feng Q, Coit DW (2012) Reliability and maintenance modeling for dependent competing failure processes with shifting failure thresholds. IEEE Trans Reliab 61(4):932–948

Jiayin T, Ping H, Qin W (2009) Copulas model for reliability calculation involving failure correlation. In: 2009 international conference on computational intelligence and software engineering (CiSE)

Johnson RA (1988) Stress-strength models for reliability. Handb Stat 7:27–54

Keizer MCO, Flapper SDP, Teunter RH (2017) Condition-based maintenance policies for systems with multiple dependent components: a review. Eur J Oper Res 261(2):405–420

Kolev N, Anjos UD, Mendes BVDM (2006) Copulas: a review and recent developments. Stoch Models 22(4):617–660

Lai M-T (2007) Periodical replacement model for a multi-unit system subject to failure rate interaction. Qual Quant 41(3):401–411

Lai M-T (2009) A discrete replacement model for a two-unit parallel system subject to failure rate interaction. Qual Quant 43(3):471–479

Lai M-T, Chen Y-C (2006) Optimal periodic replacement policy for a two-unit system with failure rate interaction. Int J Adv Manuf Technol 29(3–4):367–371

Lai M-T, Chen Y-C (2008) Optimal replacement period of a two-unit system with failure rate interaction and external shocks. Int J Syst Sci 39(1):71–79

Lai M-T, Yan H (2014) Optimal number of minimal repairs with cumulative repair cost limit for a two-unit system with failure rate interactions. Int J Syst Sci (ahead-of-print):1–8

Li H, Deloux E, Dieulle L (2016) A condition-based maintenance policy for multi-component systems with Lévy copulas dependence. Reliab Eng Syst Saf 149:44–55

Li Z, Gao Q, Liu S, Liu G (2013) Reliability analysis for a multi-unit system with failure rate interaction. In: 2013 international conference on quality, reliability, risk, maintenance, and safety engineering (QR2MSE)

Liang Z, Parlikad AK, Srinivasan R, Rasmekomen N (2017) On fault propagation in deterioration of multi-component systems. Reliab Eng Syst Saf 162:72–80

Liang Z, Parlikad AK (2015) A condition-based maintenance model for assets with accelerated deterioration due to fault propagation. IEEE Trans Reliab 64(3):972–982

Limbourg P, Kochs H, Echtle K, Eusgeld I (2007) Reliability prediction in systems with correlated component failures: an approach using copulas. In: 2007 20th international conference on architecture of computing systems (ARCS). VDE, pp 1–8

Liu B, Wu J, Xie M (2015) Cost analysis for multi-component system with failure interaction under renewing free-replacement warranty. Eur J Oper Res 243(3):874–882

Liu H, Yeh RH, Cai B (2017) Reliability modeling for dependent competing failure processes of damage self-healing systems. Comput Ind Eng 105:55–62

Liu HC, Liu L, Liu N (2013) Risk evaluation approaches in failure mode and effects analysis: a literature review. Expert Syst Appl 40(2):828–838

Mercier S, Pham HH (2012) A preventive maintenance policy for a continuously monitored system with correlated wear indicators. Eur J Oper Res 222(2):263–272

Murthy D, Nguyen D (1985) Study of a multi-component system with failure interaction. Eur J Oper Res 21(3):330–338

Murthy D, Nguyen D (1985) Study of two-component system with failure interaction. Naval Res Logist Q 32(2):239–247

Nakagawa T, Murthy D (1993) Optimal replacement policies for a two-unit system with failure interactions. Revue française d’automatique, d’informatique et de recherche opérationnelle. Recherche opérationnelle 27(4):427–438

Navarro J, Durante F (2017) Copula-based representations for the reliability of the residual lifetimes of coherent systems with dependent components. J Multivar Anal 158:87–102

Peng H, Feng Q, Coit DW (2010) Reliability and maintenance modeling for systems subject to multiple dependent competing failure processes. IIE Trans 43(1):12–22

Rafiee K, Feng Q, Coit DW (2014) Reliability modeling for dependent competing failure processes with changing degradation rate. IIE Trans 46(5):483–496

Rasmekomen N, Parlikad AK (2016) Condition-based maintenance of multi-component systems with degradation state-rate interactions. Reliab Eng Syst Saf 148:1–10

Satow T, Osaki S (2003) Optimal replacement policies for a two-unit system with shock damage interaction. Comput Math Appl 46(7):1129–1138

Shen J, Elwany A, Cui L (2018) Reliability analysis for multi-component systems with degradation interaction and categorized shocks. Appl Math Model 56:487–500

Song S, Coit DW, Feng Q, Peng H (2012) Component reliability with competing failure processes and dependent shock damage. In: Proceedings of the IIE annual conference

Song S, Coit DW, Feng Q, Peng H (2014) Reliability analysis for multi-component systems subject to multiple dependent competing failure processes. IEEE Trans Reliab 63(1):331–345

Sun Y, Ma L (2010) Estimating interactive coefficients for analysing interactive failures. Maint Reliab 2(46):67–72

Sun Y, Ma L, Mathew J, Zhang S (2006) An analytical model for interactive failures. Reliab Eng Syst Saf 91(5):495–504