Abstract

In modern terminology, the term “organoids” refers to cells grown in a specific three-dimensional (3D) environment in vitro that share structural features with their source organs or tissues. Observing the morphology or growth characteristics of organoids under a microscope is a common method of organoid analysis. However, screening and analyzing organoids manually is difficult, time-consuming, and inaccurate, and traditional technology offers no easy solution. Artificial intelligence (AI) has proven effective in many biological and medical research fields, especially in the analysis of single cells and hematoxylin and eosin (H&E)-stained tissue sections. Applied to organoids, AI should likewise provide faster, more accurate, and quantitative solutions. In this review, we first briefly outline the application areas of organoids and then discuss the shortcomings of traditional organoid measurement and analysis methods. Second, we summarize the development from machine learning to deep learning and the advantages of the latter, and describe how convolutional neural networks can be used to address the challenges of organoid observation and analysis. Finally, we discuss the limitations of current AI used in organoid research, as well as opportunities and future research directions.



Introduction

Organoids are stem-cell-derived or self-organized three-dimensional (3D) cell/tissue constructs [1]. They can replicate the cell types, composition, structure, and function of different tissues. Compared with traditional two-dimensional (2D) cell culture, organoids more closely resemble physiological cell composition and behavior, have a relatively stable genome, and are suitable for a range of biological applications and for high-throughput screening [1, 2]. Compared with animal models, human-derived organoids are expected to recapitulate human organ development and disease more accurately; they also allow real-time imaging and meet experimental ethics requirements [3, 4]. After years of rapid development, scientists now widely culture embryonic stem cells and induced pluripotent stem cells so that they differentiate and self-assemble into various 3D structures resembling human tissues, producing most types of non-tumor-derived organoids, such as skin [5], brain [6], liver [7], intestine [8], prostate [9], lung [10], and pancreas [11]. Tumor spheroids can also be obtained by taking part of a patient’s tumor tissue by needle biopsy or surgical resection and culturing it in Matrigel for weeks to months. Generally speaking, complex clusters of organ-specific cells composed of stem or progenitor cells are called organoids. Organoids can grow into microscopic models of their parent organs that can be used for 3D studies [12]. Spheroids, on the other hand, are simple clusters of cells from a broad range of sources, such as tumor tissue, embryoid bodies, hepatocytes, neural tissue, or mammary glands. Spheroids have low structural complexity and do not require scaffolding to form 3D cultures. Therefore, spheroids are easy-to-use and popular models for drug screening [13].

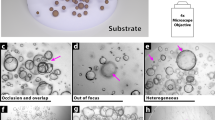

Artificial intelligence (AI) is facilitating high-throughput analysis of image data across biomedical imaging disciplines. In particular, the growth of deep-learning (DL) research has led to the widespread application of computer vision techniques in biomedical image analysis and data mining [14, 15]. Continued advances in data collection and aggregation, computing power, DL algorithms, and convolutional neural networks (CNNs) have improved the accuracy of cell detection and segmentation. Automated algorithms can mine quantifiable 2D and spatial cellular features from microscope images, giving researchers greater insight into cells and tissues under different conditions [16]. Imaging and image analysis are crucial for evaluating the characteristics and physiological functions of organoids under normal or specific culture conditions. However, quantifying organoids’ morphological and growth characteristics by eye alone is time-consuming and challenging, especially in high-throughput drug screening. Traditional machine learning (ML) performs poorly on high-dimensional data such as images, speech, and video [17]. DL makes up for this shortcoming: it removes the need for manual feature engineering, supports end-to-end training, and is good at extracting deep structural features of objects [18]. Advances in DL have also broadened its application to biological and medical studies. However, we found that there are still very few reported cases combining DL with organoid research, even as a growing number of researchers seek methods to quantify and analyze organoids with AI (Figs. 1a–1f). Given the rapid rise of AI in organoid studies and the lack of review articles on the topic, we wrote this review to fill the gap.
We believe that this paper provides useful information for biological researchers interested in understanding AI and will help promote the application of DL-based algorithms in organoid research.

Current representative research on artificial intelligence (AI) applied to organoids: a automatic ventricular segmentation using U-net (adapted and modified from Ref. [101], Copyright 2020, with permission from the authors); b predicting retinal differentiation in retinal organoids (adapted and modified from Ref. [100], Copyright 2020, with permission from the authors); c detection and tracking of organoids (adapted and modified from Ref. [31], Copyright 2021, with permission from Elsevier Ltd.); d classification of human lung epithelial spheroids (adapted and modified from Ref. [70], Copyright 2020, with permission from The Royal Society of Chemistry); e automatic segmentation of tumor organoids (adapted and modified from Ref. [48], Copyright 2021, with permission from Elsevier Ltd.); f identification and quantification of human intestinal organoids (adapted and modified from Ref. [29], Copyright 2019, with permission from the authors)

Organoids, a new approach to disease modeling

Application areas of organoids

The COVID-19 pandemic, caused by SARS-CoV-2, swept the world beginning in early 2020. Research into the virus’s infection mechanism was urgently needed, and organoids made outstanding contributions as infection models for COVID-19, providing indispensable tools for studying the virus’s cell tropism and pathogenic mechanism and for subsequent drug development [19, 20]. This is just one representative example of organoid use. In recent years, besides serving as models for infectious diseases, organoids have been widely used in many other key fields, including the study of human development, disease modeling, precision medicine, toxicology, and regenerative medicine, and they have very promising application prospects. Organoids can be used as models of human development [21]. With recent breakthroughs in genome engineering and various omics technologies, organoid technology makes possible human biology research that previously seemed infeasible. Retinal organoids derived from human stem cells allow detailed studies of differentiated human retinal cells [22]. In brain research, cerebral organoids provide an excellent way to study brain development and neurological diseases [3]. Organoids can also be used as tools for studying gene function and cell development [23]: mouse genetics can be combined with the versatility of 3D culture systems to study gene function, cell function, and development [2, 23]. Organoids can also serve as a research basis for disease modeling and precision medicine [21]. Their ability to reproduce disease pathology for analysis is one of their significant advantages in disease modeling. For example, intestinal organoids are widely used to study ulcerative colitis, intestinal injury and regeneration, colon cancer, and many other intestinal diseases [24]. Last but not least, organoids can be used for drug screening and development [2].
Many drugs have been withdrawn from the market primarily because they produced adverse side effects in humans that traditional cell or animal models could not predict. Compared with animal and cell models, organoids avoid interspecies differences and can simulate the layered cell structure and microenvironment of a patient’s body [4].

Disadvantages of current organoid-analysis approaches

Life-science research spans a wide range of areas, such as cell biology, biochemistry, genomics, proteomics, transcriptomics, and systems biology. While all of these disciplines can provide essential information about health and disease mechanisms, microscopy is one of the central techniques for imaging vital cellular processes. Thus, cell detection and evaluation are fundamental tasks in biomedical research and clinical applications [25]. Accurate assessment of cellular changes depends on accurate and effective cell detection, as well as on evaluation of morphology and its changes. However, manual evaluation is usually visually guided, labor-intensive, and time-consuming, and it can produce high variability between observers [26]. Andrion et al. [27] assessed the reproducibility of histopathological diagnoses of 88 pleural malignant mesothelioma cases by comparing the observations of five pathologists; only 70% of the diagnoses were consistently reproduced on panel review. With the rapid advancement of imaging technologies for biological samples, the demands on image analysis have also increased significantly, and much more data need to be quantified. Sun et al. [28] summarized the applications, feasibility, and research directions of DL for analyzing large-scale cellular optical images, and explored the promise of DL for high-throughput screening of cell images acquired with high-throughput optical imaging techniques. Because of the hugely increased amount of data acquired, biologists must ultimately depend on computers to analyze the data and verify the correctness of results.
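Inter-observer variability of this kind is often summarized with a chance-corrected statistic rather than raw percent agreement. As a minimal illustration (not the analysis used in [27], which compared five pathologists), the sketch below computes raw agreement and Cohen’s kappa for two observers; the labels are hypothetical, and multi-rater variants such as Fleiss’ kappa exist for larger panels.

```python
from collections import Counter


def percent_agreement(a, b):
    """Fraction of items to which two observers assign the same label."""
    if len(a) != len(b) or not a:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    n = len(a)
    p_observed = percent_agreement(a, b)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: both observers independently pick the same label.
    p_expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if p_expected == 1.0:
        # Both observers used one identical label throughout; kappa is undefined,
        # and this sketch reports perfect agreement by convention.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)


# Hypothetical diagnoses of 10 cases by two observers.
obs1 = ["meso", "meso", "meso", "other", "meso", "other", "meso", "meso", "other", "meso"]
obs2 = ["meso", "other", "meso", "other", "meso", "meso", "meso", "meso", "other", "meso"]
print(percent_agreement(obs1, obs2))       # 0.8
print(round(cohens_kappa(obs1, obs2), 3))  # 0.524
```

Here the two observers agree on 80% of cases, yet kappa is only about 0.52, because much of that agreement would be expected by chance given how often each label is used.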

To analyze the morphology or growth characteristics of organoids, researchers usually observe them under a fluorescence microscope. At present, the primary analysis methods for these 3D organoid images include counting cells, measuring the intensity of markers in a specific area, and morphological measurement (size, shape, etc.). However, these traditional approaches have difficulty detecting and evaluating organoids that are highly overlapping, over-illuminated, or partially obscured by noise [29]. Most current studies analyze only organoid size, shape, and cell viability [30], and even these parameters are usually not tracked continuously; moreover, there is no reliable automatic segmentation technology for locating and quantifying organoids in a 3D culture environment [31]. Because organoids are usually cultured in a 3D environment, it is hard to locate them with a single Z-axis image [32]. Scientists usually have to move through the Z-axis during imaging by manually adjusting the stage position and focal plane, record the corresponding image data with an optical microscope, and then perform analysis based on subjective measurements, essentially hand-drawing each organoid’s boundary. This process is complicated and error-prone: protocols may vary from person to person, and human-induced errors may enter the analysis. To avoid these ambiguities, computer-aided analysis is essential. Computers can not only make decisions more quickly but also reduce disagreements between experts, thus saving analysis time and improving diagnostic results [33].
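Two of the manual steps described above, choosing an in-focus Z plane and measuring organoid size, are simple to automate once a segmentation mask exists. The NumPy sketch below is illustrative only: it scores sharpness with the variance of a discrete Laplacian (one common focus heuristic, not a method from the cited studies), simulates defocus with a box blur, assumes the organoid mask is already given, and reports an equivalent-circle diameter that presumes a roughly round cross-section.

```python
import numpy as np


def laplacian_variance(img: np.ndarray) -> float:
    """Sharpness score: variance of a 4-neighbour discrete Laplacian (higher = sharper)."""
    img = img.astype(float)
    lap = (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
           - 4.0 * img[1:-1, 1:-1])
    return float(lap.var())


def best_focus_slice(stack: np.ndarray) -> int:
    """Index of the sharpest plane in a (Z, H, W) image stack."""
    return int(np.argmax([laplacian_variance(plane) for plane in stack]))


def mask_morphology(mask: np.ndarray, pixel_size_um: float = 1.0) -> dict:
    """Area and equivalent-circle diameter of one binary organoid mask."""
    area_um2 = float(np.count_nonzero(mask)) * pixel_size_um ** 2
    return {"area_um2": area_um2,
            "equiv_diameter_um": float(2.0 * np.sqrt(area_um2 / np.pi))}


def box_blur(img: np.ndarray, iterations: int) -> np.ndarray:
    """Repeated 3x3 mean filter, used here only to simulate defocus."""
    out = img.astype(float)
    for _ in range(iterations):
        p = np.pad(out, 1, mode="edge")
        out = sum(p[i:i + out.shape[0], j:j + out.shape[1]]
                  for i in range(3) for j in range(3)) / 9.0
    return out


# Synthetic organoid: a disk of radius 10 px; plane 2 is in focus, the others are blurred.
yy, xx = np.mgrid[:64, :64]
disk = ((yy - 32) ** 2 + (xx - 32) ** 2 <= 10 ** 2).astype(float)
stack = np.stack([box_blur(disk, k) for k in (3, 1, 0, 1, 3)])
print(best_focus_slice(stack))  # 2
print(mask_morphology(disk > 0, pixel_size_um=2.0))
```

The equivalent diameter comes out close to the true 40 µm; the small excess reflects pixelation of the disk edge.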

The development of artificial intelligence (AI) and its advantages

Machine learning and deep learning

Machine learning (ML) is now the key to AI and the fundamental way to make computers intelligent, allowing machines to recognize patterns and make decisions with minimal human intervention [34]. ML combines computer science and statistics [35]. In organoid analysis, ML teaches computers to identify organoid phenotypes, allowing programs to process large amounts of biomedical data without manual parameter tuning [36]. One research group developed MOrgAna, which uses ML to segment, quantify, and visualize morphological and fluorescence information from large numbers of organoid images in a short time; it also has a user-friendly interactive interface that requires no programming experience [37]. Kong et al. [38] adopted a novel ML-based approach to identify documented drug biomarkers from pharmacogenomic data of 3D organoid models, overcoming the unreliability of traditional ML in identifying robust biomarkers from preclinical models. Many more practical applications of ML exist in clinical diagnosis, precision therapy, drug screening, health monitoring, and other settings [39]. A traditional ML system usually consists of a feature extractor and a classifier. First, an expert with multidomain knowledge designs a feature extractor that determines which properties of the data are the most important features and converts them into feature vectors. The machine then learns from these feature vectors to find the corresponding patterns. Finally, the classifier automatically detects or classifies new inputs based on their feature vectors. However, traditional ML techniques are limited in their ability to process raw data [17].
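The two-stage structure just described (hand-designed features, then a classifier) can be sketched in a few lines. In this hypothetical example the “expert-designed” features are an organoid’s mask area and mean intensity, and a nearest-centroid rule stands in for classifiers such as an SVM; the feature choice, the “early”/“mature” labels, and the synthetic disks are all illustrative assumptions.

```python
import numpy as np


def extract_features(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Hand-designed feature vector: [area in pixels, mean intensity inside the mask]."""
    area = float(mask.sum())
    mean_intensity = float(image[mask].mean()) if area > 0 else 0.0
    return np.array([area, mean_intensity])


class NearestCentroidClassifier:
    """Assigns a feature vector to the class with the closest mean training vector."""

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        self.classes_ = np.unique(y)
        # Divide by each feature's spread so pixel area does not swamp intensity.
        self.scale_ = X.std(axis=0) + 1e-12
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_]) / self.scale_
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float) / self.scale_
        dists = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[dists.argmin(axis=1)]


def make_organoid(radius, intensity, size=40):
    """Synthetic image and mask of a uniform disk (a stand-in for a segmented organoid)."""
    yy, xx = np.mgrid[:size, :size]
    mask = (yy - size // 2) ** 2 + (xx - size // 2) ** 2 <= radius ** 2
    return intensity * mask.astype(float), mask


train = [make_organoid(r, i) for r, i in [(5, 0.3), (6, 0.35), (12, 0.8), (13, 0.75)]]
X_train = [extract_features(img, m) for img, m in train]
y_train = ["early", "early", "mature", "mature"]
clf = NearestCentroidClassifier().fit(X_train, y_train)
X_test = [extract_features(*make_organoid(7, 0.4)), extract_features(*make_organoid(11, 0.7))]
print(clf.predict(X_test))  # ['early' 'mature']
```

The weakness the text points to is visible here: the classifier can only be as good as the two features a person chose to compute.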

Deep learning (DL) is a subfield of ML that uses a cascade of multiple layers of nonlinear processing units (i.e., neural networks) for feature extraction and transformation, achieving multilevel feature representation and abstract concept learning [17]. DL does not require experts to design complex features manually; instead, raw data are fed directly into the neural network, which enables end-to-end training and performs especially well with big data. As a result, DL is more flexible than traditional ML models such as decision trees (DT), logistic regression (LR), and support vector machines (SVMs) [40, 41]. With the rapid development of AI, DL and computer vision have become closely integrated, producing a series of excellent algorithms with a remarkable ability to extract detailed information and interpret image content. These DL-based algorithms are now being applied to biological images, helping biologists analyze and interpret imaging data [25]. Li et al. [42] reviewed DL-based analysis of organs-on-chips (OoCs) and summarized how combining OoCs with DL supports data analysis, automation, and image digitization. In addition, computerized systems incorporating DL algorithms are essential for processing large-scale image datasets and providing rigorous image-feature measurements for disease modeling, comparative research, and personalized medicine [43]. DL includes many different types of neural networks. In biomedical data analysis, the most commonly used are the deep neural network (DNN), convolutional neural network (CNN), recurrent neural network (RNN), and generative adversarial network (GAN) [44,45,46]. The CNN is currently the most widely used and most mature of these networks. For example, accurate classification of retinal arteries and veins (A/V) is essential in the analysis of diabetic retinopathy and the diagnosis of cardiovascular disease.
Traditional methods fail to incorporate vascular topological information. Mishra et al. [47] proposed a CNN-based model, VTG-NET (vascular topology network), which integrates vascular topology information into retinal A/V classification and outperforms state-of-the-art methods. CNNs are currently a vital DL research direction in computer vision and perform well in image classification, segmentation, and object detection. Generally speaking, a CNN consists of one input layer, multiple convolutional (conv) layers, multiple pooling layers, one or more fully connected layers, and one output layer. Figure 2 shows the process of obtaining the output image from an input organoid image.
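To make this layer sequence concrete, the NumPy sketch below runs one forward pass through a toy CNN: a convolutional layer, ReLU, 2×2 max pooling, a fully connected layer, and a softmax output. The weights are random placeholders and the sizes (one 8×8 grayscale input, four 3×3 filters, two output classes) are arbitrary assumptions; a real organoid model would be trained and far deeper.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv2d(x, kernels, bias):
    """Valid (unpadded) 2D convolution, computed as cross-correlation as in most DL
    frameworks, of an (H, W) image with (K, kh, kw) kernels -> (K, H-kh+1, W-kw+1)."""
    k, kh, kw = kernels.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((k, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[i:i + kh, j:j + kw]
            out[:, i, j] = (kernels * patch).sum(axis=(1, 2)) + bias
    return out


def relu(x):
    return np.maximum(x, 0.0)


def max_pool2x2(x):
    """2x2 max pooling with stride 2 over a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


image = rng.random((8, 8))                                   # input layer: an 8x8 crop
kernels, bias = rng.normal(size=(4, 3, 3)), np.zeros(4)      # conv layer: four 3x3 filters
features = max_pool2x2(relu(conv2d(image, kernels, bias)))   # conv -> ReLU -> pool: (4, 3, 3)
flat = features.reshape(-1)                                  # flatten to a 36-element vector
w_fc, b_fc = rng.normal(size=(2, flat.size)), np.zeros(2)    # fully connected layer
probs = softmax(w_fc @ flat + b_fc)                          # output layer: class probabilities
print(features.shape, probs)
```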

Main tasks of deep learning

Object detection (which combines classification and localization) and image segmentation are the two most important applications of DL in organoid analysis. Object detection can help quantify the number, location, and distribution of organoids, while image segmentation can help analyze the area, boundaries, and other properties of organoids [48, 49]. Even more complex organoid image-analysis tasks, such as 3D organoid reconstruction, still depend on fast and accurate object detection and image segmentation [28, 50].
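The difference between the two tasks shows up in what each output lets one measure. Assuming a binary segmentation mask is already available (for example, from a segmentation network), the pure-Python sketch below labels connected regions, yielding the count and location (bounding box, centroid) that detection provides as well as the per-object area that segmentation adds. The 4-connectivity rule and the toy mask are illustrative assumptions.

```python
from collections import deque


def label_objects(mask):
    """Label 4-connected foreground regions in a 2D list of 0/1 values.

    Returns one dict per object with its area, bounding box, and centroid.
    """
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    objects = []
    for r0 in range(h):
        for c0 in range(w):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill from an unvisited foreground pixel.
            queue, pixels = deque([(r0, c0)]), []
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < h and 0 <= nc < w and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            rows = [p[0] for p in pixels]
            cols = [p[1] for p in pixels]
            objects.append({
                "area": len(pixels),
                "bbox": (min(rows), min(cols), max(rows), max(cols)),  # r_min, c_min, r_max, c_max
                "centroid": (sum(rows) / len(rows), sum(cols) / len(cols)),
            })
    return objects


toy_mask = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
]
objs = label_objects(toy_mask)
print(len(objs))                  # 2
print([o["area"] for o in objs])  # [4, 5]
```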

Traditional object-detection algorithms mostly use sliding windows or image segmentation to first generate a large number of candidate bounding boxes, then extract features (histogram of oriented gradients [51], local binary patterns [52], Haar-like features [53]) from the boxes and pass these features to a classifier (SVM [54], random forest [55], or AdaBoost [56]). Finally, the classifier outputs the discrimination result [57]. However, the detection accuracy of these traditional methods is relatively low, and they are slow. Early image recognition and classification techniques [58] mostly relied on features designed by people. For different scenes or objects, such as organoids, domain experts must design handcrafted features, which depend heavily on the designer’s prior knowledge. Features must be coded manually for specific data types and domain characteristics, which makes it difficult to process massive amounts of data. In addition, handcrafted feature design supports only a limited number of parameters, and extracting too few features directly degrades system performance and can easily lead to large differences in experimental results. In today’s big-data era, relying on manual feature extraction is impractical, so traditional object-detection algorithms struggle to meet current requirements. Varoquaux et al. [59] analyzed problems in the application of ML to medical imaging and proposed directions for improvement. Castiglioni et al. [60] reviewed the application of AI to medical images and discussed how medical image analysis has changed in the shift from ML to DL. At present, research on DL-based detection algorithms is very mature [57].
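The traditional pipeline can be illustrated with a deliberately simple sketch: windows slide over the image, each window is described by a basic local binary pattern (LBP) histogram, and a fixed rule stands in for a trained classifier. The window size, stride, and the `looks_like_object` threshold are hypothetical choices for illustration; a real system would learn the decision rule with an SVM, random forest, or AdaBoost.

```python
import numpy as np

# The 8 neighbours of a pixel, in a fixed clockwise order starting at the top-left.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(img):
    """Basic 3x3 local binary pattern: one 8-bit code (0-255) per interior pixel."""
    img = img.astype(float)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dr, dc) in enumerate(NEIGHBOURS):
        neighbour = img[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        codes |= (neighbour >= center).astype(np.int64) << bit
    return codes


def lbp_histogram(window):
    """Normalised 256-bin LBP histogram: the hand-crafted feature vector of a window."""
    hist = np.bincount(lbp_codes(window).ravel(), minlength=256).astype(float)
    return hist / hist.sum()


def sliding_windows(img, size, stride):
    """Yield (row, col, window) for every window position (the candidate boxes)."""
    for r in range(0, img.shape[0] - size + 1, stride):
        for c in range(0, img.shape[1] - size + 1, stride):
            yield r, c, img[r:r + size, c:c + size]


def looks_like_object(hist, flat_fraction=0.9):
    """Hypothetical stand-in for a trained classifier: reject windows in which almost
    every pixel has code 255 (all neighbours >= centre, i.e. flat background)."""
    return hist[255] < flat_fraction


rng = np.random.default_rng(1)
img = np.zeros((32, 32))
img[8:16, 8:16] = rng.random((8, 8))  # one textured "organoid" on a flat background
detections = [(r, c) for r, c, win in sliding_windows(img, 8, 8)
              if looks_like_object(lbp_histogram(win))]
print(detections)  # [(8, 8)]
```

Every choice here (window size, feature, threshold) was made by hand, which is exactly the dependence on prior knowledge that the paragraph above criticizes.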

In recent years, DL-based semantic segmentation of medical images has attracted considerable attention. Image segmentation divides an image into several specific, distinct regions and marks objects of interest [61]. Early semantic segmentation methods mainly used shallow, manually extracted features, such as edge-based [62] and threshold-based [63] features. However, these methods cannot achieve satisfactory segmentation of complex scenes, especially in medical imaging. Through the continued efforts of many researchers, CNN-based semantic segmentation has made excellent progress. Several evaluation indicators are indispensable for measuring an algorithm’s performance in object detection and semantic segmentation (see Table 1). Here, we need to introduce the confusion matrix of machine learning, which tabulates a model’s predictions against the ground truth for binary or multiclass classification problems and is used to judge the accuracy of a model [64]. Using the indicators in Table 1, the performance of a model in object detection and image segmentation can be easily quantified, allowing researchers to evaluate and compare the strengths and weaknesses of different models on the same dataset.
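Commonly used indicators of this kind, such as precision, recall, F1 score, intersection over union (IoU), and the Dice coefficient, follow directly from the counts in a binary confusion matrix. The NumPy sketch below computes them pixel-wise from a predicted mask and a ground-truth mask; the toy masks are invented for illustration, and the sketch is not a reproduction of Table 1.

```python
import numpy as np


def confusion_counts(pred, truth):
    """Pixel-wise TP, FP, FN, TN for two binary masks of the same shape."""
    pred, truth = np.asarray(pred, dtype=bool), np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    """Common indicators derived from the binary confusion matrix."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    union = tp + fp + fn
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "iou": tp / union if union else 1.0,  # Jaccard index
        "dice": 2 * tp / (2 * tp + fp + fn) if union else 1.0,
    }


truth = np.zeros((4, 4), dtype=bool)
truth[0:2, 0:2] = True  # ground-truth organoid: 4 pixels
pred = np.zeros((4, 4), dtype=bool)
pred[0:2, 1:3] = True   # prediction shifted right by one pixel
print(confusion_counts(pred, truth))  # (2, 2, 2, 10)
print(segmentation_metrics(pred, truth))
```

For a single binary mask, Dice equals F1, and IoU = Dice / (2 - Dice); here Dice is 0.5 while IoU is 1/3, so the two indicators should not be compared directly across papers.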

Classical convolutional neural networks

Since 2015, the accuracy and speed of CNNs in object detection, image segmentation, object tracking, and other tasks have approached or even exceeded human performance [66]. During this period, a large number of CNN algorithms for cellular analysis have been developed and have achieved excellent results [67,68,69]. After optimization and improvement, some of these algorithms can also be applied to organoid analysis [48, 70].

YOLO network

The YOLO [71] algorithm treats object detection as a regression problem, so the YOLO network is a CNN with a regression output. It predicts the positions and categories of multiple bounding boxes in a single pass, in real time. The YOLO network comprises an input layer, a stack of convolutional and pooling layers, two fully connected layers, and an output layer (Fig. 3a). The input layer takes a sample image that has undergone simple preprocessing (such as cropping, resizing to a uniform scale, grayscale conversion, or normalization). The convolutional layers have two functions: (1) extracting features of the input image and (2) increasing or decreasing the number of channels. The pooling layers enlarge the receptive field, compress features, reduce the dimensions of the feature maps, and simplify the network [72]. The fully connected layers usually appear between the last pooling layer and the output layer; they transform the 2D feature maps into a one-dimensional (1D) feature vector for the output layer. The output layer is the last layer of the CNN and produces the predictions from this 1D feature vector. In the original YOLO, the image is divided into an S × S grid, and for each grid cell the output encodes B bounding boxes (four coordinates and a confidence score each) and C class probabilities, i.e., an S × S × (B × 5 + C) tensor. However, the YOLO model still has many problems. Compared with other object-detection algorithms, YOLO’s detection accuracy is low; it is prone to localization errors, performs poorly on overlapping and small objects, and generalizes relatively weakly. Researchers therefore continued to improve the YOLO network, developing more advanced versions such as YOLOv2 [73], YOLOv3 [74], YOLOv4 [75], and YOLOv5 [76]. The YOLO series is now among the most widely used object-detection algorithms in biomedical engineering.
For example, induced pluripotent stem cells (iPSCs) show great promise in many studies, but automatically identifying iPSCs without cell staining remains difficult. Wang et al. [77] established an accurate, noninvasive iPSC colony-detection method based on a YOLO network, achieving an F1 score of 0.867 and an average accuracy of 0.898. YOLO series algorithms are fast and accurate and have been widely used in many fields; applying them to the identification and localization of organoids is also likely to produce good results.
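The regression formulation becomes concrete when the output tensor of the original YOLO (v1) is decoded. In [71], each grid cell predicts box centers relative to the cell, widths and heights relative to the whole image (the network regresses their square roots), and class probabilities; with S = 7, B = 2, and C = 20 on a 448 × 448 input this gives a 7 × 7 × 30 tensor. The sketch below decodes such a tensor into image-space boxes. The per-cell channel order and the 0.5 score threshold are assumptions of this sketch, and later YOLO versions use anchor-based layouts that differ from this one.

```python
import numpy as np


def decode_yolo_v1(output, S=7, B=2, C=20, img_size=448, score_thresh=0.5):
    """Turn an (S, S, B*5 + C) YOLOv1-style output tensor into image-space boxes.

    Assumed per-cell channel layout (implementation-specific, chosen for this sketch):
    B blocks of (x, y, sqrt_w, sqrt_h, confidence), then C class probabilities.
    """
    if output.shape != (S, S, B * 5 + C):
        raise ValueError(f"expected shape {(S, S, B * 5 + C)}, got {output.shape}")
    cell = img_size / S
    detections = []
    for row in range(S):
        for col in range(S):
            class_probs = output[row, col, B * 5:]
            cls = int(np.argmax(class_probs))
            for b in range(B):
                x, y, sqrt_w, sqrt_h, conf = output[row, col, b * 5:(b + 1) * 5]
                score = float(conf * class_probs[cls])  # class-specific confidence
                if score < score_thresh:
                    continue
                cx, cy = (col + x) * cell, (row + y) * cell  # x, y: offsets inside the cell
                w, h = sqrt_w ** 2 * img_size, sqrt_h ** 2 * img_size  # relative to the image
                detections.append((float(cx - w / 2), float(cy - h / 2),
                                   float(cx + w / 2), float(cy + h / 2), cls, score))
    return detections


out = np.zeros((7, 7, 30))
out[3, 3, 0:5] = [0.5, 0.5, 0.5, 0.5, 0.9]  # box 0 of the centre cell
out[3, 3, 10 + 1] = 1.0                      # class 1 with probability 1
print(decode_yolo_v1(out))  # [(168.0, 168.0, 280.0, 280.0, 1, 0.9)]
```

Because every box comes out of one forward pass and one decoding loop, there is no separate proposal stage, which is the source of YOLO’s speed.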

Typical network structures in object detection and image segmentation: a YOLO network structure (adapted and modified from Ref. [71], Copyright 2016, with permission from IEEE); b Faster-RCNN network structure (adapted and modified from Ref. [78], Copyright 2017, with permission from IEEE); c U-net network structure (adapted and modified from Ref. [85], Copyright 2015, with permission from Springer International Publishing Switzerland)

Faster-RCNN network

The Faster-RCNN algorithm [78] has become one of the mainstream object-detection algorithms because of its higher detection accuracy. Compared with the YOLO series, Faster-RCNN has a higher mean average precision but a somewhat lower speed [79]. The algorithm can usually be divided into four parts: a feature-extraction network, a region-proposal network (RPN), a region-of-interest (RoI) pooling layer, and a classification layer (Fig. 3b). First, the feature-extraction network extracts features from the preprocessed input image. This network can be custom-designed from convolutional layers, pooling layers, and activation functions, or it can reuse an existing architecture such as VGG-16, ResNet [80], or Inception [81]. The RPN then generates many candidate bounding boxes (proposals) from the feature map and classifies each according to whether it contains an object. The feature map and these proposals are then passed to the RoI pooling layer, which generates a fixed-size feature map for each proposal. Finally, classification and regression are performed on these fixed-size feature maps to obtain the object type and location. This model is also widely used in biomedical engineering. Li et al. [82] proposed an automatic cryo-electron tomography (cryo-ET) image-analysis algorithm based on Faster-RCNN to locate and identify cellular structures and their differences; the model has high precision and robustness and can be readily applied to detect other cell structures. Li et al. [83] proposed a framework combining Faster-RCNN with feature pyramid networks (FPN) [84] to detect abnormal cervical cells in cytological images from cancer screening tests; its mean average precision was 6%–9% higher than that of previous methods. Owing to the advantages of the Faster-RCNN network, OrgaQuant, a model for quantifying organoids, also adopted its architecture [29].
Many instance-segmentation models, such as Mask-RCNN, are derived from Faster-RCNN. With such instance-segmentation models, the area and location of each organoid in an image can be calculated automatically.
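RoI pooling is what lets proposals of different sizes feed the same classification head. The NumPy sketch below max-pools one proposal on a single-channel feature map into a fixed k × k grid. It assumes the box is already in feature-map coordinates with integer corners and is at least k cells in each direction; it follows the bin-splitting idea of RoI pooling in simplified form and is not the exact Faster-RCNN implementation.

```python
import numpy as np


def roi_max_pool(feature_map, box, output_size=2):
    """Max-pool box = (r0, c0, r1, c1), inclusive and in feature-map coordinates,
    of a 2D feature map into a fixed output_size x output_size grid."""
    r0, c0, r1, c1 = box
    region = feature_map[r0:r1 + 1, c0:c1 + 1]
    h, w = region.shape
    if h < output_size or w < output_size:
        raise ValueError("region is smaller than the pooled output in this sketch")
    # Split the region into output_size x output_size roughly equal bins.
    row_edges = np.linspace(0, h, output_size + 1).astype(int)
    col_edges = np.linspace(0, w, output_size + 1).astype(int)
    pooled = np.empty((output_size, output_size), dtype=feature_map.dtype)
    for i in range(output_size):
        for j in range(output_size):
            pooled[i, j] = region[row_edges[i]:row_edges[i + 1],
                                  col_edges[j]:col_edges[j + 1]].max()
    return pooled


fmap = np.arange(64).reshape(8, 8)       # toy single-channel feature map
print(roi_max_pool(fmap, (1, 2, 5, 6)))  # 5x5 proposal, pooled to [[19, 22], [43, 46]]
print(roi_max_pool(fmap, (0, 0, 3, 3)))  # 4x4 proposal, pooled to [[9, 11], [25, 27]]
```

Both proposals, though different in size, produce a 2 × 2 output, which is the property the classification and regression layers rely on.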

U-net

In 2015, Ronneberger et al. [85] introduced the U-net architecture, a breakthrough for DL in medical image segmentation. U-net builds on the fully convolutional network (FCN) and is named for its “U-shaped” structure [86]. The model is a roughly symmetric encoder-decoder network composed of convolution, down-sampling, up-sampling, and concatenation (splicing) operations (Fig. 3c). Its contracting (encoder) path extracts important image features while reducing image resolution and consists of four blocks, each containing two 3×3 convolutional layers, each followed by a rectified linear unit (ReLU), and one max-pooling layer. The expanding (decoder) path also includes four blocks, each with two 3×3 convolutional layers, two ReLU layers, and one up-sampling layer. Up-sampling decodes the abstract features obtained during down-sampling back toward the original image size: each up-sampling operation doubles the height and width of the feature map and halves its number of channels. In addition, each up-sampled feature map is merged with the feature map at the same level of the contracting path on the left side of the U. Because the 3×3 convolutions are unpadded, the output is smaller than the input; for the 572×572-pixel input tile used in the original paper, the output segmentation map is 388×388 pixels. Unlike FCN, which merges features by summation [87], U-net crops the contracting-path feature map at the same level to the size of the expanding-path feature map and concatenates the two, which helps restore information lost during down-sampling. Since its publication, U-net’s encoder-decoder structure with skip connections has inspired a large number of medical image segmentation networks.
As DL technology has developed, researchers have introduced improvements such as attention mechanisms [88], dense modules [89], transformer modules [90], feature enhancement [91], and residual blocks [92] into U-net to meet the requirements of different types of biomedical image segmentation. For example, research teams have improved the encoder-decoder structure [90, 93, 94], extended U-net to 3D images [95], improved the network’s generalization ability [96, 97], and more. U-net combines contextual information, trains quickly, and does not require a large amount of data to achieve accurate segmentation. These characteristics have made it widely used in medical image segmentation [98]. Prangemeier et al. [99] demonstrated a U-net method for multiclass segmentation of individual yeast cells in microstructured environments. Another group used an improved U-net to segment clear organoid boundaries for further study [48]. Given U-net’s excellent segmentation ability, future work should make full use of this model to explore more quantifiable evaluation parameters and indicators for different organoids in two or three dimensions.
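Because the original U-net uses unpadded convolutions, the size of every feature map, and therefore the amount of cropping before each concatenation, follows from simple arithmetic. The sketch below traces spatial sizes for the four-level configuration of [85] (572×572 input, two 3×3 convolutions per level, 2×2 pooling and 2×2 up-convolution) and shows the center-crop-and-concatenate step on toy arrays. The function names are hypothetical and the channel counts in the example are arbitrary placeholders.

```python
import numpy as np


def conv3x3_valid(size: int) -> int:
    """An unpadded 3x3 convolution trims one pixel from each border."""
    return size - 2


def unet_sizes(input_size=572, depth=4):
    """Trace the spatial size through a U-net with unpadded convolutions.

    Returns the output size and, for each decoder level, the pair
    (encoder feature-map size, size it is center-cropped to before concatenation).
    """
    size, skips = input_size, []
    for _ in range(depth):                     # contracting path
        size = conv3x3_valid(conv3x3_valid(size))
        skips.append(size)
        if size % 2:
            raise ValueError("2x2 max pooling needs an even feature-map size")
        size //= 2                             # 2x2 max pooling, stride 2
    size = conv3x3_valid(conv3x3_valid(size))  # bottom of the "U"
    crops = []
    for skip in reversed(skips):               # expanding path
        size *= 2                              # 2x2 up-convolution doubles H and W
        crops.append((skip, size))
        size = conv3x3_valid(conv3x3_valid(size))
    return size, crops                         # a final 1x1 conv keeps the size


def crop_and_concat(skip, up):
    """Center-crop a (C1, H1, W1) encoder map to (H2, W2) and stack it with (C2, H2, W2)."""
    dh = (skip.shape[1] - up.shape[1]) // 2
    dw = (skip.shape[2] - up.shape[2]) // 2
    cropped = skip[:, dh:dh + up.shape[1], dw:dw + up.shape[2]]
    return np.concatenate([cropped, up], axis=0)


print(unet_sizes())  # (388, [(64, 56), (136, 104), (280, 200), (568, 392)])
print(crop_and_concat(np.zeros((64, 64, 64)), np.zeros((64, 56, 56))).shape)  # (128, 56, 56)
```

The trace reproduces the 572 to 388 reduction described above and shows why cropping is needed: at the top level, a 568×568 encoder map must be trimmed to 392×392 before it can be concatenated.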

Deep learning in organoid images and potential integrations

There have already been several studies combining AI with organoids. Researchers have achieved identification and counting of human intestinal organoids [29], automatic discrimination of retinal organoid differentiation [100], automatic evaluation of tumor spheroid behavior [48], multiscale 3D phenotype analysis of brain organoids [101], organoid tracking [31], and other tasks (see Table 2). Compared with manual analysis and quantification of organoids, many of these solutions have greatly improved accuracy or speed [2, 48, 100]. Figure 4a shows the number of publications with the term “cells” plus AI-related terms (such as “deep learning,” “machine learning,” or “convolutional neural network”), and with “organoids” plus AI-related terms, in their title or abstract from 2015 to 2021, based on a Web of Science search. These numbers indicate that applying AI in biology and medicine is both a current and a future trend. Figure 4b shows the number of publications with the term “organoid” and AI-related terms in their title or abstract from 2015 to 2021, based on searches in PubMed and Web of Science. Although the number of papers started small, it has increased rapidly year over year.

The development trend over time of artificial intelligence (AI) applied to cells and organoids (2015–2021). a In recent years, the number of articles on application of AI to cells and organoids has grown rapidly. The former is in the process of rapid development, while the latter is still in its infancy and has promising prospects. b The rapid increase in the number of articles on the application of AI to organoids in recent years confirms that the task of automatically and intelligently analyzing and quantifying organoids is gradually becoming more highly valued

In this section, we will discuss the application of DL in organoid image analysis, including image classification, image segmentation, object tracking, and microscope enhancement [25]. We will describe in detail how these DL methodologies were applied for organoid analysis in each specific case and their pros and cons.

Object classification

Object classification is a major research area in medical image analysis, and DL-based methods have contributed greatly by providing automated, efficient, and accurate solutions [103]. In biological applications, object classification and detection involve quantifying very subtle changes in the morphology of cells, tissues, or organoids, which is challenging [70]. For example, retinal organoids, which resemble the retina in vivo, are mostly differentiated from mouse or human pluripotent stem cells [104, 105]. However, the differentiation process is largely stochastic and difficult to control: retinal differentiation varies greatly among organoids in the same batch, let alone across cell lines used at different times [106]. In addition, retinal tissue is selected for further growth and maturation primarily on the basis of subjective morphological observation and features visible in bright-field imaging. Manually selecting and distinguishing features under a bright-field microscope is tedious and inefficient, and because the classification criteria are relatively subjective, results vary widely between observers. Kegeles et al. [100] therefore developed an automated, noninvasive method that uses a CNN to identify and predict retinal organoid differentiation (Fig. 5a). The DL algorithm predicted differentiation significantly better than human experts, with an accuracy of 0.84 vs. 0.67±0.06. This study also had limitations. The authors framed the problem as a classification task rather than a regression task, but classifying retinal organoid differentiation into only three categories is imprecise; one category, which they called “satisfactory,” had no clearly separable retinal areas but only a sparse or scattered fluorescence pattern.
In addition, retinal differentiation would be difficult to predict by relying only on two experts’ subjective labels (a large portion of the organoids were not decided in common by these two experts). Thus, we believe that the result dataset and labeling still leave a lot of room for improvement (Fig. 5b). Bian et al. [102] proposed DL-based OrgaNet (Fig. 5c), an organoid viability assessment model based on imaging, which is a direct and reproducible method for organoid viability assessment. It avoids the disadvantage that traditional adenosine triphosphate bioluminescence cannot quantify organoid activity over time. The task of object detection is to find all the objects of interest from the image and identify their category and location [57]. Since organoids are usually in a 3D culture environment, the images are affected by many imaging artifacts, which make it difficult to evaluate the morphology and growth characteristics of these cultures; and manual measurement and counting of these organoids is also a very inefficient process. To solve these problems, Kassis et al. [29] developed an algorithm based on CNN, OrgaQuant, which can measure the localization and quantification of human intestinal organoids (Fig. 6a). OrgaQuant is also an end-to-end training neural network that can analyze thousands of images completely automatically without parameter adjustment. The experimental results showed that the diameter of organoids measured by OrganQuant was consistent with that measured by human (Fig. 6b). Still, the recognition speed is faster than that of humans (Fig. 6c). The model recognizes all intestinal organoids in an image in only 30 s with the NVIDIA Quadro P5000 GPU. Abdul et al. [70] developed an algorithm called Deep-LUMEN, which can detect and identify morphological changes in lung epithelial spheroids in bright-field images. 
The algorithm can track the changes in the lumen structure of the tissue spheroids and distinguish between polarized and non-polarized lung epithelial spheroids. Deep-LUMEN had an 83% mean average precision (Fig. 6d), and the recognition speed was much faster than that of humans (Fig. 6e). Furthermore, they observed that the confidence score corresponded to the degree of lumen development (Fig. 6f). However, the average accuracy of the model is obtained when the intersection over union (IOU) is equal to 0.5. Because the IOU setting is too small, this does not mean that the recognition effect of the model is very good. It is also necessary to make IOU take on some larger values between 0.5 and 1, and then measure several sets of average accuracy data. In addition, the number of images in the training datasets was nearly 4000, while the test set only had 197 images, so the proportion of the test dataset in the whole dataset was too small.
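The IoU concern above can be made concrete. The following is a minimal pure-Python sketch, not Deep-LUMEN's evaluation code. It computes IoU between two boxes and single-class average precision (AP) at any IoU threshold. The box format (x_min, y_min, x_max, y_max), the greedy highest-confidence-first matching, and all-point interpolation are our assumptions. COCO's official evaluator, for example, uses 101-point interpolation and pools detections across images.

```python
from typing import Dict, List, Sequence, Tuple

# Boxes are (x_min, y_min, x_max, y_max) in pixels (assumed convention).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: Sequence[Tuple[float, Box]],
                      gts: Sequence[Box], thr: float) -> float:
    """AP for one class at one IoU threshold (all-point interpolation).

    preds: (confidence, box) pairs; gts: ground-truth boxes.
    Predictions are processed in descending confidence order. Each one is
    matched to the still-unmatched ground truth it overlaps most, and it
    counts as a true positive only if that IoU is >= thr.
    """
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    for k, (_, box) in enumerate(sorted(preds, key=lambda p: -p[0]), start=1):
        best, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap > best:
                best, best_j = overlap, j
        if best_j >= 0 and best >= thr:
            matched[best_j] = True
            tp += 1
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Make precision monotonically non-increasing, then integrate over recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap


def ap_per_threshold(preds, gts, thresholds=None) -> Dict[float, float]:
    """AP at each IoU threshold; default 0.50, 0.55, ..., 0.95 (COCO-style)."""
    if thresholds is None:
        thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]
    return {t: average_precision(preds, gts, t) for t in thresholds}


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31))]  # 2nd box IoU ~0.68
    per_t = ap_per_threshold(preds, gts)
    print(per_t[0.5], per_t[0.7], sum(per_t.values()) / len(per_t))
```

In the toy example, the second prediction overlaps its target with an IoU of about 0.68. AP is therefore 1.0 at a threshold of 0.5 but falls to 0.5 at 0.7. Reporting a single AP at 0.5 would hide this loss of localization precision.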

Experimental process and expert prediction of retinal differentiation. a Experimental outline of retinal differentiation. The neural network was fed bright-field images on day 5 and fluorescence images on day 9. Fluorescence images of representative organoids from each class, classified by experts as “retina,” “non-retina,” or “satisfactory.” b Prediction results for different classes which can be assigned after combining the votes from two experts. c The overall pipeline of the OrgaNet model. First, the feature extractor based on the convolutional neural network (CNN) is used to extract the organoid representation, and then the multi-head classifier is used to simulate expert evaluation. Finally, the scoring function is used to process the feature-space data and output the organoid viability score

Recognition results for organoids. a–c Automated quantification of human intestinal organoids using OrgaQuant (adapted and modified from Ref. [29], Copyright 2019, with permission from the authors). d–f Automated quantification of lung epithelial spheroids using Deep-LUMEN (adapted and modified from Ref. [70], Copyright 2020, with permission from The Royal Society of Chemistry). a OrgaQuant recognition process. b There is no difference between human and OrgaQuant measurements. c OrgaQuant versus human recognition speed. d Mean average precision of Deep-LUMEN (model 6) compared with model 5. e Deep-LUMEN (model 6) versus human recognition speed. f The confidence score of Deep-LUMEN reflects the degree of lumen formation
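The label-quality problem raised for the retinal-organoid classifier can be quantified with Cohen's kappa. In that study, two experts often disagreed, and kappa corrects their raw agreement for the agreement expected by chance. The sketch below is our own illustration. The six labels are hypothetical and do not come from Kegeles et al. [100].

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa between two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in labels) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical labels for six organoids (not data from the cited study).
expert_1 = ["retina", "retina", "non-retina", "satisfactory", "retina", "non-retina"]
expert_2 = ["retina", "satisfactory", "non-retina", "retina", "retina", "non-retina"]
kappa = cohens_kappa(expert_1, expert_2)
```

Here the experts agree on 4 of 6 organoids (67%), but kappa is only about 0.45 once chance agreement is removed. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.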

Image segmentation

Image segmentation is the technique and process of dividing an image into specific regions with distinct properties and extracting objects of interest [25, 107]. Because they serve as biomimetic tumor models, tumor spheroids and organoids are critical to advancing anti-cancer drug research and development. It is essential to quantify and analyze the behavior and growth of tumor cells effectively and to provide a more accurate physiological model for evaluating drug effects [48]. However, because effective automated methods for analyzing 3D tumor cell proliferation and invasion are lacking, tumor spheroids have not been widely used in preclinical research. Existing 3D tumor invasion analysis methods measure only the tumor size and the invasion distance in a specific direction [108,109,110], which can be inaccurate in some applications. The tumor is not necessarily spherical, and because the extracellular matrix is heterogeneous, the invasion distance of a tumor spheroid often differs between directions. In addition, relying on manual tumor imaging and manual drawing of tumor-spheroid boundaries [109,110,111,112] is very time-consuming and error-prone. To solve these problems, our team [48] designed an AI-based recognition system for automatic tumor-spheroid segmentation (Fig. 7a) that can be used to analyze the behavior of tumor spheroids. It integrates automatic recognition, automatic focusing, and an improved CNN algorithm (PSP-U-net) to detect the boundary of each tumor spheroid intelligently (Fig. 7b). Segmentation became more precise as the number of training iterations increased. After 125,000 training iterations, the algorithm achieved the highest accuracy among the five methods compared, with an F-value above 0.95.

In addition, our team developed two comprehensive parameters for analyzing tumor invasion: the excess perimeter index (EPI) and the multiscale entropy index (MSEI). Experiments showed that these two parameters describe the invasiveness of 3D tumors better than the parameters used in traditional analysis methods.
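Segmentation accuracy, such as the F-value reported above, is usually computed pixel by pixel against a manually drawn mask. The exact definition used in [48] is not given here. The following NumPy sketch therefore assumes the standard pixel-wise F-measure. It also shows that, for binary masks, the F1 score equals the Dice coefficient.

```python
import numpy as np


def mask_scores(pred, truth, beta: float = 1.0):
    """Pixel-wise precision, recall and F-beta for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if pred.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    tp = int(np.count_nonzero(pred & truth))   # spheroid pixels found
    fp = int(np.count_nonzero(pred & ~truth))  # background labelled as spheroid
    fn = int(np.count_nonzero(~pred & truth))  # spheroid pixels missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    b2 = beta * beta
    f_beta = (1 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, f_beta


def dice(pred, truth) -> float:
    """Dice coefficient 2*|A & B| / (|A| + |B|); equals F1 for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = np.count_nonzero(pred) + np.count_nonzero(truth)
    return 2 * np.count_nonzero(pred & truth) / denom if denom else 1.0


# Toy example: a predicted square shifted by one pixel from the ground truth.
truth = np.zeros((10, 10), dtype=bool)
truth[2:8, 2:8] = True
pred = np.zeros((10, 10), dtype=bool)
pred[3:9, 3:9] = True
precision, recall, f1 = mask_scores(pred, truth)
```

Because F1 and Dice coincide for binary masks, the same function also applies to Dice-based evaluations such as the ventricle segmentation discussed next.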

Segmentation results for organoids. a, b Equipment and processing workflow for tumor-spheroid segmentation (adapted and modified from Ref. [48], Copyright 2021, with permission from Elsevier Ltd.). c Demonstration of automated segmentation using SCOUT (adapted and modified from Ref. [101], Copyright 2020, with permission from the authors). a A comprehensive system for automated tumor imaging and analysis (1: condenser with light source; 2: sample plate; 3: motorized X and Y stages; 4: motorized Z-axis module; 5: objective wheel; 6: filter wheel; 7: CCD; 8: software interface of the system). b The process of segmenting tumor-spheroid images. c Automated U-net segmentation of the ventricles and 3D rendering of the segmented ventricles

Image-segmentation techniques based on DL are also applied to the analysis of organoid components. Brain organoids are cultures differentiated from pluripotent stem cells under 3D culture conditions into brain-like cell compositions and anatomical structures. They can reflect brain-like developmental processes as well as physiological, pathological, and pharmacological characteristics [113, 114]. At present, brain organoid analysis relies mainly on single-cell transcriptomics and 2D histology. Because these methods lose 3D spatial information, system-level single-cell analysis of brain organoids remains unexplored. Albanese et al. [101] therefore proposed SCOUT, a computational pipeline for automated multiscale comparative analysis of intact brain organoids. Combining conventional image-analysis algorithms with CNNs, SCOUT extracts hundreds of molecular, spatial, cell-structural, and organoid-level features from fluorescence microscopy images. It thus provides a framework for comparative analysis of emerging 3D in vitro models (Fig. 7c). The authors used U-net to detect SOX2-lined ventricles. The model achieved a Dice coefficient of 97.2% for ventricle segmentation and enabled basic morphological analysis of the 3D ventricles (volume, axial ratio, etc.). However, the study did not evaluate the accuracy of the quantities derived from the U-net segmentations, nor did it include comparative experiments with other methods.
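Once a ventricle is segmented, basic 3D morphology follows directly from its mask. The sketch below assumes the mask holds a single segmented object. It computes volume as the voxel count times the voxel volume, and it derives an axial ratio from a principal-component analysis of the voxel coordinates. This is an assumed definition for illustration; SCOUT's own implementation may define the axial ratio differently.

```python
import numpy as np


def volume_and_axial_ratio(mask, voxel_size=(1.0, 1.0, 1.0)):
    """Volume and principal-axis ratio of a 3D binary mask.

    mask: boolean array indexed (z, y, x); voxel_size: physical size of one
    voxel along (z, y, x), e.g. in micrometres. The axial ratio is taken here
    as sqrt(largest / smallest eigenvalue) of the voxel-coordinate covariance,
    i.e. the ratio of the longest to the shortest principal axis.
    """
    mask = np.asarray(mask, dtype=bool)
    if mask.ndim != 3:
        raise ValueError("expected a 3D mask")
    spacing = np.asarray(voxel_size, dtype=float)
    coords = np.argwhere(mask) * spacing  # physical coordinates of each voxel
    if coords.shape[0] < 2:
        raise ValueError("mask must contain at least two voxels")
    volume = coords.shape[0] * float(np.prod(spacing))
    eigvals = np.linalg.eigvalsh(np.cov(coords, rowvar=False))  # ascending order
    smallest = max(float(eigvals[0]), 0.0)
    largest = float(eigvals[-1])
    axial_ratio = np.sqrt(largest / smallest) if smallest > 0 else float("inf")
    return volume, float(axial_ratio)


# Toy example: a 4 x 4 x 8 voxel box elongated along x.
mask = np.zeros((10, 10, 12), dtype=bool)
mask[2:6, 3:7, 1:9] = True
volume, ratio = volume_and_axial_ratio(mask)
```

Anisotropic confocal stacks should pass their real z, y, and x spacings as `voxel_size`. Otherwise, both the volume and the axial ratio are distorted.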

Object tracking

Object tracking is a popular application of DL. It involves obtaining a set of initial detections, assigning a unique identifier to each, and then following the detected objects through subsequent video frames [115, 116]. Organoids are regularly observed in microscope images to obtain their morphological and growth characteristics. Because organoid growth and drug responses are longitudinal and dynamic processes, researchers must observe them continuously with optical microscopes, and long-term manual tracking is difficult and time-consuming. Moreover, a single field of view (FOV) cannot cover the entire culture, so the overall picture is lost [117]. Researchers must therefore adjust the position and focal plane manually to monitor each area. They must also observe specific organoids continuously to capture how each organoid's growth characteristics change. Because organoids move, finding the correct FOV to track pre-selected organoids is also not easy. In addition, organoid density in the culture varies greatly, and the image may be out of focus. Organoid shapes and sizes vary widely, illumination conditions may introduce artifacts and noise, and the position and shape of the same organoid change over time. These factors make continuous observation of organoids challenging. Bian et al. [31] proposed a deep neural network (DNN) that effectively detects organoids and dynamically tracks them throughout the culture process (Fig. 8a). They divided the solution into two steps. First, high-throughput image sequences are processed frame by frame to detect all organoids. Second, the similarity between organoids in adjacent frames is calculated, and organoids are matched pairwise between frames. With their proposed dataset, the model offers fast and high-precision organoid detection and tracking (Figs. 8b and 8c), effectively reducing the burden on researchers. However, some problems remain. The article does not clearly explain how the distance matrix used to match organoids in adjacent frames is calculated. In addition, some air bubbles in Fig. 8b were mistakenly identified as organoids.
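The distance matrix used by Bian et al. [31] is not specified. The following is therefore only one plausible way to link detections between adjacent frames, not their method. It builds a Euclidean distance matrix between organoid centroids, solves the one-to-one minimum-cost assignment with `scipy.optimize.linear_sum_assignment`, and rejects matches beyond a distance gate. The centroid input and the `max_distance` value are assumptions, and a learned appearance similarity could replace the distance matrix.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_frames(prev_xy, curr_xy, max_distance):
    """Match organoid centroids between two adjacent frames.

    Builds the pairwise Euclidean distance matrix (rows: previous frame,
    columns: current frame), solves the minimum-cost one-to-one assignment,
    and discards pairs farther apart than max_distance (pixels).
    """
    prev = np.asarray(prev_xy, dtype=float).reshape(-1, 2)
    curr = np.asarray(curr_xy, dtype=float).reshape(-1, 2)
    if len(prev) == 0 or len(curr) == 0:
        return []
    dist = np.linalg.norm(prev[:, None, :] - curr[None, :, :], axis=-1)
    rows, cols = linear_sum_assignment(dist)
    return [(int(r), int(c)) for r, c in zip(rows, cols) if dist[r, c] <= max_distance]


def track(frames, max_distance=20.0):
    """Give each organoid a persistent ID across a sequence of frames.

    frames: list of per-frame centroid lists [(x, y), ...] from a detector.
    Returns one list of IDs per frame; unmatched detections get new IDs.
    """
    next_id = 0
    all_ids = []
    prev_xy, prev_ids = [], []
    for curr_xy in frames:
        ids = [-1] * len(curr_xy)
        for r, c in match_frames(prev_xy, curr_xy, max_distance):
            ids[c] = prev_ids[r]  # continue an existing track
        for i, value in enumerate(ids):
            if value == -1:  # organoid not matched to the previous frame
                ids[i] = next_id
                next_id += 1
        all_ids.append(ids)
        prev_xy, prev_ids = curr_xy, ids
    return all_ids


# Toy sequence: two organoids drift slightly; a third appears in frame 3.
frames = [
    [(0, 0), (10, 10)],
    [(10.5, 10), (0.5, 0.2)],
    [(11, 10.5), (1, 0.3), (50, 50)],
]
ids_per_frame = track(frames, max_distance=5.0)
```

The distance gate also offers a simple guard against spurious links. Transient false detections, such as the air bubbles noted above, that jump far from any existing track start new, short-lived tracks rather than corrupting existing ones.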

Alveolar organoid detection and tracking (adapted and modified from Ref. [31], Copyright 2021, with permission from Elsevier Ltd.). a The network structure, divided into a detection part, which uses a single shot multibox detector (SSD) model, and a tracking part. b Identification and quantification of alveolar organoids using the SSD. c Localization results of organoid tracking over time

Augmented microscopy

Augmented microscopy refers to technologies that extract more latent information from biological images, and it lends itself well to combination with DL [118,119,120]. Many transient, millisecond-scale cellular processes occur in 3D tissues, while experiments span long time scales. A persistent challenge in on-chip measurement is therefore to extract more spatiotemporal information from a sample with sufficiently high throughput, and traditional methods offer no good solution [121,122,123]. Zhu et al. [124] combined microfluidics with light-field microscopy to achieve high-speed four-dimensional (4D, space–time) imaging of moving nematodes on a chip. By pairing DL-enabled light-field microscopy (LFM) with on-chip sample manipulation, the system can continuously record the instantaneous 3D positions of nematodes and screen large numbers of worms on a high-throughput chip (Fig. 9a). Volumetric imaging of dynamic signals in large, moving, light-scattering samples is a research hotspot but remains difficult to achieve. Chen et al. [125] combined a digital light-sheet illumination strategy, a microfluidic chip, and a DL-based image-restoration algorithm. With this setup, they achieved 3D functional imaging of freely moving fruit fly larvae at single-cell resolution and video rates of up to 20 Hz on a common inverted microscope (Fig. 9b). In summary, augmented microscopy shows great potential for many types of lab-on-a-chip biological research, including high-throughput organoid screening and growth analysis. In the future, deploying DL algorithms in microscopes or high-content imaging and analysis devices will be a promising direction for automated organoid data analysis.

Combination of microscope and deep learning (DL). a 3D trajectories and speeds of six moving wild-type worms (1–6) and six uncoordinated-type mutant worms (7–12) over 450 ms (adapted and modified from Ref. [124], Copyright 2021, with permission from Elsevier B.V.). b Image restoration procedure based on DL (adapted and modified from Ref. [125], Copyright 2021, with permission from The Royal Society of Chemistry)

Discussion and conclusions

The limitations in current artificial intelligence (AI) analysis of organoids

1. DL algorithms require large amounts of data [126]. As the literature reviewed above shows, most state-of-the-art DL methods use supervised learning, particularly CNN-based learning. To achieve good accuracy, neural networks usually require many annotated samples for training. Collecting high-quality annotated datasets containing tens or hundreds of thousands of images for supervised learning is usually very difficult, and manually annotating these images is tedious and expensive. The construction of standardized databases for different organoids is therefore urgently needed [127, 128].

2. DL is prone to overfitting [61]. A model that is too complex relative to its training data generalizes poorly, so overfitting is closely tied to insufficient data. The standardized databases proposed above would partly alleviate this problem.

3. DL requires substantial computing hardware [129]. Images used for model training are typically a few hundred pixels on a side, whereas organoid images acquired under a microscope have high resolution (several thousand pixels on a side). Currently available hardware cannot support large-scale training of DL algorithms on such high-resolution images. Many researchers therefore reduce the input size by dividing each original image into smaller tiles. However, this often causes objects in the image to be lost, particularly objects cut by tile boundaries [29, 48].

4. DL applied in organoid research still lacks interpretability [130]. Although DL can provide more accurate results, humans do not understand the features it learns [43]. If researchers could make full use of DL to explore and mine organoid growth and differentiation data and explain the results clearly, this might eventually lead to new biological discoveries.

5. DL-based organoid analysis is still relatively simple [29, 48, 70, 100]. Most studies that combine AI with organoids only recognize and segment organoids or classify them into two or at most three categories. Many other meaningful organoid indicators could still be discovered and quantified with the help of AI.
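A common way to limit the object loss described in item 3 is to tile with overlap. The sketch below computes overlapping tile offsets so that any object no larger than the overlap along each axis falls entirely inside at least one tile. The tile size of 512 pixels and the overlap of 128 pixels are arbitrary assumptions, and the cited studies may tile differently.

```python
import numpy as np


def tile_origins(length: int, tile: int, overlap: int):
    """Start offsets of overlapping tiles along one axis.

    Consecutive tiles share `overlap` pixels, and the last tile is placed
    flush with the image border, so any object no larger than `overlap`
    along this axis lies entirely inside at least one tile.
    """
    if tile >= length:
        return [0]
    stride = tile - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the tile size")
    origins = list(range(0, length - tile + 1, stride))
    if origins[-1] != length - tile:
        origins.append(length - tile)  # final tile ends exactly at the border
    return origins


def tile_image(image, tile=512, overlap=128):
    """Split a large 2D microscope image into overlapping tile-sized crops."""
    h, w = image.shape[:2]
    return [((y, x), image[y:y + tile, x:x + tile])
            for y in tile_origins(h, tile, overlap)
            for x in tile_origins(w, tile, overlap)]


# A hypothetical 1000 x 1200 pixel image becomes nine 512 x 512 tiles.
tiles = tile_image(np.zeros((1000, 1200)), tile=512, overlap=128)
```

Detections from each tile must then be shifted by the tile offset and merged. Duplicates in overlapping regions should be removed, for example by non-maximum suppression.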

Conclusions

At present, in vitro observation of organoids focuses mainly on morphological changes. Optical, electrochemical, and other methods that can detect multiple indicators in living organoids are still lacking. Multidisciplinary studies are therefore an important future direction [131, 132]. One promising approach is to use DL methods such as object detection and image segmentation to evaluate multiple organoid indicators. Organoid research also faces other problems at this stage. For example, repeatability and consistency remain major bottlenecks in organoid culture. These problems are largely caused by a lack of process control and industry standards. Human factors have an excessive influence on organoid culture, and the degree of automation is low, which makes human error inevitable. The development of more automated, intelligent, and integrated systems is therefore the future trend [133, 134]. Such systems can use DL-based object detection to obtain useful information, make intelligent judgments, and carry out operations according to the recognition results. This is an important way of using technology to avoid human interference. We also anticipate more combinations of DL algorithms with high-content analysis. We expect a “self-learning microscope” system that combines automation, high speed, high throughput, and high repeatability. Such a system could help biologists collect large amounts of live-cell data and extract reliable and accurate scientific results [25, 124]. Another shortcoming of organoid systems is the lack of communication between tissues. For example, modeling of communication between organoid cells and stromal cell populations, and the development of vasculature in organoid systems, remain to be elucidated. Most organoid research aims to simulate a part of the human body rather than the whole.
Currently, most organoid studies are limited to reproducing organ-specific or tissue-specific physiology [135, 136]. We are glad to see that some research institutions have already begun to tackle these difficulties. For example, Southeast University’s “Digital Clone Human” program uses advanced biomedical data-acquisition equipment to collect high-quality, cross-scale biomedical data. These data cover the full life cycle and span molecules, cells, tissues, organs, and human systems. Further information is available at http://117.73.8.164:8083/. We believe that by using AI, virtual reality, and other information technologies, researchers will be able to build personalized, self-deductive digital humans that simulate human life processes. This could even lead to the replacement of clinical trials in life-science research and the biomedical industry. The prospects for organoids are promising, but organoid research must first overcome many obstacles before it can realize this potential. Fortunately, more and more biological and medical researchers are now devoted to organoid research. We believe that readers will see a significant expansion in the scope of DL-based organoid analysis in the near future.

References

Tuveson D, Clevers H (2019) Cancer modeling meets human organoid technology. Science 364(6444):952–955. https://doi.org/10.1126/science.aaw6985

Shariati L, Esmaeili Y, Javanmard SH et al (2021) Organoid technology: current standing and future perspectives. Stem Cells 39(12):1625–1649. https://doi.org/10.1002/stem.3379

Wang Z, Wang SN, Xu TY et al (2017) Organoid technology for brain and therapeutics research. CNS Neurosci Ther 23(10):771–778. https://doi.org/10.1111/cns.12754

Davies JA (2012) Replacing animal models: a practical guide to creating and using culture-based biomimetic alternatives. Wiley-Blackwell. https://doi.org/10.1002/9781119940685

Lee J, Rabbani CC, Gao H et al (2020) Hair-bearing human skin generated entirely from pluripotent stem cells. Nature 582(7812):399–404. https://doi.org/10.1038/s41586-020-2352-3

Pham MT, Pollock KM, Rose MD et al (2018) Generation of human vascularized brain organoids. NeuroReport 29(7):588–593. https://doi.org/10.1097/WNR.0000000000001014

Ramachandran SD, Schirmer K, Münst B et al (2015) In vitro generation of functional liver organoid-like structures using adult human cells. PLoS ONE 10(10):e0139345. https://doi.org/10.1371/journal.pone.0139345

Cruz-Acuna R, Quiros M, Farkas AE et al (2017) Synthetic hydrogels for human intestinal organoid generation and colonic wound repair. Nat Cell Biol 19(11):1326–1335. https://doi.org/10.1038/ncb3632

Drost J, Karthaus WR, Gao D et al (2016) Organoid culture systems for prostate epithelial and cancer tissue. Nat Protoc 11(2):347–358. https://doi.org/10.1038/nprot.2016.006

Dye BR, Hill DR, Ferguson MA et al (2015) In vitro generation of human pluripotent stem cell derived lung organoids. eLife 4:e05098. https://doi.org/10.7554/eLife.05098

Broutier L, Andersson-Rolf A, Hindley CJ et al (2016) Culture and establishment of self-renewing human and mouse adult liver and pancreas 3D organoids and their genetic manipulation. Nat Protoc 11(9):1724–1743. https://doi.org/10.1038/nprot.2016.097

Zanoni M, Cortesi M, Zamagni A et al (2020) Modeling neoplastic disease with spheroids and organoids. J Hematol Oncol 13(1):97. https://doi.org/10.1186/s13045-020-00931-0

Gunti S, Hoke ATK, Vu KP et al (2021) Organoid and spheroid tumor models: techniques and applications. Cancers 13(4):874. https://doi.org/10.3390/cancers13040874

Born J, Beymer D, Rajan D et al (2021) On the role of artificial intelligence in medical imaging of COVID-19. Patterns 2(6):100269. https://doi.org/10.1016/j.patter.2021.100269

Thwaites D, Moses D, Haworth A et al (2021) Artificial intelligence in medical imaging and radiation oncology: opportunities and challenges. J Med Imaging Radiat Oncol 65(5):481–485. https://doi.org/10.1111/1754-9485.13275

Durkee MS, Abraham R, Clark MR et al (2021) Artificial intelligence and cellular segmentation in tissue microscopy images. Am J Pathol 191(10):1693–1701. https://doi.org/10.1016/j.ajpath.2021.05.022

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

van der Laak J, Litjens G, Ciompi F (2021) Deep learning in histopathology: the path to the clinic. Nat Med 27(5):775–784. https://doi.org/10.1038/s41591-021-01343-4

Han Y, Duan X, Yang L et al (2021) Identification of SARS-CoV-2 inhibitors using lung and colonic organoids. Nature 589(7841):270–275. https://doi.org/10.1038/s41586-020-2901-9

Zhao B, Ni C, Gao R et al (2020) Recapitulation of SARS-CoV-2 infection and cholangiocyte damage with human liver ductal organoids. Protein Cell 11(10):771–775. https://doi.org/10.1007/s13238-020-00718-6

Rossi G, Manfrin A, Lutolf MP (2018) Progress and potential in organoid research. Nat Rev Genet 19(11):671–687. https://doi.org/10.1038/s41576-018-0051-9

Bell CM, Zack DJ, Berlinicke CA (2020) Human organoids for the study of retinal development and disease. Ann Rev Vision Sci 6(1):91–114. https://doi.org/10.1146/annurev-vision-121219-081855

Artegiani B, Clevers H (2018) Use and application of 3D-organoid technology. Human Mol Genet 27(R2):R99–R107. https://doi.org/10.1093/hmg/ddy187

Hentschel V, Seufferlein T, Armacki M (2021) Intestinal organoids in co-culture: redefining the boundaries of gut mucosa ex vivo modeling. Am J Physiol Gastrointest Liver Physiol 321(6):G693–G704. https://doi.org/10.1152/ajpgi.00043.2021

Moen E, Bannon D, Kudo T et al (2019) Deep learning for cellular image analysis. Nat Methods 16(12):1233–1246. https://doi.org/10.1038/s41592-019-0403-1

Höfener H, Homeyer A, Weiss N et al (2018) Deep learning nuclei detection: a simple approach can deliver state-of-the-art results. Comput Med Imaging Graphics 70:43–52. https://doi.org/10.1016/j.compmedimag.2018.08.010

Andrion A, Magnani C, Betta PG et al (1995) Malignant mesothelioma of the pleura: interobserver variability. J Clin Pathol 48(9):856–860. https://doi.org/10.1136/jcp.48.9.856

Sun J, Tarnok A, Su X (2020) Deep learning-based single-cell optical image studies. Cytometry A 97(3):226–240. https://doi.org/10.1002/cyto.a.23973

Kassis T, Hernandez-Gordillo V, Langer R et al (2019) OrgaQuant: human intestinal organoid localization and quantification using deep convolutional neural networks. Sci Rep 9(1):12479. https://doi.org/10.1038/s41598-019-48874-y

Paulauskaite-Taraseviciene A, Sutiene K, Valotka J et al (2019) Deep learning-based detection of overlapping cells. In: Proceedings of the 3rd International Conference on Advances in Artificial Intelligence, pp 217–220. https://doi.org/10.1145/3369114.3369120

Bian X, Li G, Wang C et al (2021) A deep learning model for detection and tracking in high-throughput images of organoid. Comput Biol Med 134:104490. https://doi.org/10.1016/j.compbiomed.2021.104490

Bonda U, Jaeschke A, Lighterness A et al (2020) 3D quantification of vascular-like structures in z stack confocal images. STAR Protoc 1(3):100180. https://doi.org/10.1016/j.xpro.2020.100180

Roy M, Chakraborty S, Mali K et al (2017) Cellular image processing using morphological analysis. In: IEEE 8th Annual Ubiquitous Computing, Electronics and Mobile Communication Conference, pp 237–241. https://doi.org/10.1109/UEMCON.2017.8249037

Pushpanathan K, Hanafi M, Mashohor S et al (2021) Machine learning in medicinal plants recognition: a review. Artif Intell Rev 54(1):305–327. https://doi.org/10.1007/s10462-020-09847-0

Clarke SL, Parmesar K, Saleem MA et al (2022) Future of machine learning in paediatrics. Arch Dis Child 107(3):223–228. https://doi.org/10.1136/archdischild-2020-321023

Jordan MI, Mitchell TM (2015) Machine learning: trends, perspectives, and prospects. Science 349(6245):255–260. https://doi.org/10.1126/science.aaa8415

Gritti N, Lim JL, Anlas K et al (2021) MOrgAna: accessible quantitative analysis of organoids with machine learning. Development 148(18):dev199611. https://doi.org/10.1242/dev.199611

Kong J, Lee H, Kim D et al (2020) Network-based machine learning in colorectal and bladder organoid models predicts anti-cancer drug efficacy in patients. Nat Commun 11(1):5485. https://doi.org/10.1038/s41467-020-19313-8

Goecks J, Jalili V, Heiser LM et al (2020) How machine learning will transform biomedicine. Cell 181(1):92–101. https://doi.org/10.1016/j.cell.2020.03.022

Safavian SR, Landgrebe D (1991) A survey of decision tree classifier methodology. IEEE Trans Syst Man Cybern 21(3):660–674. https://doi.org/10.1109/21.97458

Wu J, Ji Y, Zhao L et al (2016) A mass spectrometric analysis method based on PPCA and SVM for early detection of ovarian cancer. Comput Math Methods Med 2016:6169249. https://doi.org/10.1155/2016/6169249

Li J, Chen J, Bai H et al (2022) An overview of organs-on-chips based on deep learning. Research 2022:9869518. https://doi.org/10.34133/2022/9869518

Rahaman MM, Li C, Wu X et al (2020) A survey for cervical cytopathology image analysis using deep learning. IEEE Access 8:61687–61710. https://doi.org/10.1109/ACCESS.2020.2983186

Goodfellow IJ, Pouget-Abadie J, Mirza M et al (2014) Generative adversarial nets. Adv Neural Inform Process Syst 27:2672–2680

Krizhevsky A, Sutskever I, Hinton GE (2017) ImageNet classification with deep convolutional neural networks. Commun ACM 60(6):84–90. https://doi.org/10.1145/3065386

DiPietro R, Hager GD (2020) Deep learning: RNNs and LSTM. In: Zhou SK, Rueckert D, Fichtinger G (eds) Handbook of medical image computing and computer assisted intervention, pp 503–519. Academic Press, Elsevier. https://doi.org/10.1016/B978-0-12-816176-0.00026-0

Mishra S, Wang YX, Wei CC et al (2021) VTG-Net: a CNN based vessel topology graph network for retinal artery/vein classification. Front Med 8:750396. https://doi.org/10.3389/fmed.2021.750396

Chen Z, Ma N, Sun X et al (2021) Automated evaluation of tumor spheroid behavior in 3D culture using deep learning-based recognition. Biomaterials 272:120770. https://doi.org/10.1016/j.biomaterials.2021.120770

Bian X, Li G, Wang C et al (2021) OrgaNet: a deep learning approach for automated evaluation of organoids viability in drug screening. In: International Symposium on Bioinformatics Research and Applications, pp 411–423. https://doi.org/10.1007/978-3-030-91415-8_35

Caicedo JC, Cooper S, Heigwer F et al (2017) Data-analysis strategies for image-based cell profiling. Nat Methods 14(9):849–863. https://doi.org/10.1038/nmeth.4397

Dalal N, Triggs B (2005) Histograms of oriented gradients for human detection. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp 886–893. https://doi.org/10.1109/CVPR.2005.177

Ojala T, Pietikainen M, Maenpaa T (2002) Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Patt Anal Mach Intell 24(7):971–987. https://doi.org/10.1109/TPAMI.2002.1017623

Lienhart R, Maydt J (2002) An extended set of Haar-like features for rapid object detection. In: International Conference on Image Processing. https://doi.org/10.1109/ICIP.2002.1038171

Cristianini N, Shawe-Taylor J (2000) An introduction to support vector machines and other kernel-based learning methods. Cambridge University Press. https://doi.org/10.1017/CBO9780511801389

Svetnik V, Liaw A, Tong C et al (2003) Random forest: a classification and regression tool for compound classification and QSAR modeling. J Chem Inform Comput Sci 43(6):1947–1958. https://doi.org/10.1021/ci034160g

Freund Y, Schapire RE (1996) Experiments with a new boosting algorithm. In: Proceedings of the 13th International Conference on Machine Learning, pp 148–156

Zhao ZQ, Zheng P, Xu ST et al (2019) Object detection with deep learning: a review. IEEE Trans Neur Netw Learn Syst 30(11):3212–3232. https://doi.org/10.1109/TNNLS.2018.2876865

Fu KS, Rosenfeld A (1976) Pattern recognition and image processing. IEEE Trans Comput C-25(12):1336–1346. https://doi.org/10.1109/TC.1976.1674602

Varoquaux G, Cheplygina V (2022) Machine learning for medical imaging: methodological failures and recommendations for the future. NPJ Digit Med 5(1):48. https://doi.org/10.1038/s41746-022-00592-y

Castiglioni I, Rundo L, Codari M et al (2021) AI applications to medical images: from machine learning to deep learning. Phys Med 83:9–24. https://doi.org/10.1016/j.ejmp.2021.02.006

Hesamian MH, Jia W, He X et al (2019) Deep learning techniques for medical image segmentation: achievements and challenges. J Digit Imaging 32(4):582–596. https://doi.org/10.1007/s10278-019-00227-x

Canny J (1986) A computational approach to edge detection. IEEE Trans Patt Anal Mach Intell PAMI-8(6):679–698. https://doi.org/10.1109/TPAMI.1986.4767851

Prewitt JM (1970) Object enhancement and extraction. Picture Process Psychopict 10(1):15–19

Foody GM (2002) Status of land cover classification accuracy assessment. Remote Sens Environ 80(1):185–201. https://doi.org/10.1016/S0034-4257(01)00295-4

Yue Y, Finley T, Radlinski F et al (2007) A support vector method for optimizing average precision. In: Proceedings of the 30th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp 271–278. https://doi.org/10.1145/1277741.1277790

Kusumoto D, Yuasa S (2019) The application of convolutional neural network to stem cell biology. Inflamm Regen 39:14. https://doi.org/10.1186/s41232-019-0103-3

Anwar SM, Majid M, Qayyum A et al (2018) Medical image analysis using convolutional neural networks: a review. J Med Syst 42(11):226. https://doi.org/10.1007/s10916-018-1088-1

Brinker TJ, Hekler A, Utikal JS et al (2018) Skin cancer classification using convolutional neural networks: systematic review. J Med Internet Res 20(10):e11936. https://doi.org/10.2196/11936

Jiang Y, Li C (2020) Convolutional neural networks for image-based high-throughput plant phenotyping: a review. Plant Phenomics 2020:4152816. https://doi.org/10.34133/2020/4152816

Abdul L, Rajasekar S, Lin DSY et al (2020) Deep-LUMEN assay – human lung epithelial spheroid classification from brightfield images using deep learning. Lab Chip 20(24):4623–4631. https://doi.org/10.1039/d0lc01010c

Redmon J, Divvala S, Girshick R et al (2016) You only look once: unified, real-time object detection. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 779–788. https://doi.org/10.1109/CVPR.2016.91

Ajit A, Acharya K, Samanta A (2020) A review of convolutional neural networks. In: International Conference on Emerging Trends in Information Technology and Engineering, pp 1–5. https://doi.org/10.1109/ic-ETITE47903.2020.049

Redmon J, Farhadi A (2017) YOLO9000: better, faster, stronger. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 6517–6525. https://doi.org/10.1109/CVPR.2017.690

Redmon J, Farhadi A (2018) YOLOv3: an incremental improvement. https://doi.org/10.48550/arXiv.1804.02767

Bochkovskiy A, Wang CY, Liao HY (2020) YOLOv4: optimal speed and accuracy of object detection. https://arxiv.org/abs/2004.10934

Snegireva D, Perkova A (2021) Traffic sign recognition application using YOLOv5 architecture. In: International Russian Automation Conference, pp 1002–1007. https://doi.org/10.1109/RusAutoCon52004.2021.9537355

Wang X, Liao J, Yue G et al (2021) Induced pluripotent stem cells detection via ensemble Yolo network. In: 43rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp 3738–3741. https://doi.org/10.1109/EMBC46164.2021.9629744

Ren S, He K, Girshick RB et al (2017) Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Patt Anal Mach Intell 39(6):1137–1149. https://doi.org/10.1109/TPAMI.2016.2577031

Jaderberg M, Simonyan K, Zisserman A (2015) Spatial transformer networks. Adv Neural Inform Process Syst 28:2017–2025

He K, Zhang X, Ren S et al (2016) Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 770–778. https://doi.org/10.1109/CVPR.2016.90

Szegedy C, Ioffe S, Vanhoucke V et al (2017) Inception-v4, Inception-ResNet and the impact of residual connections on learning. In: 31st AAAI Conference on Artificial Intelligence, pp 4278–4284

Li R, Zeng X, Sigmund SE et al (2019) Automatic localization and identification of mitochondria in cellular electron cryo-tomography using faster-RCNN. BMC Bioinform 20(Suppl 3):132. https://doi.org/10.1186/s12859-019-2650-7

Li X, Xu Z, Shen X et al (2021) Detection of cervical cancer cells in whole slide images using deformable and global context aware faster RCNN-FPN. Curr Oncol 28(5):3585–3601. https://doi.org/10.3390/curroncol28050307

Lin TY, Dollár P, Girshick R et al (2017) Feature pyramid networks for object detection. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 936–944. https://doi.org/10.1109/CVPR.2017.106

Ronneberger O, Fischer P, Brox T (2015) U-net: convolutional networks for biomedical image segmentation. In: 18th International Conference on Medical Image Computing and Computer-assisted Intervention, pp 234–241. https://doi.org/10.1007/978-3-319-24574-4_28

Anand V, Gupta S, Koundal D et al (2022) Modified U-NET architecture for segmentation of skin lesion. Sensors 22(3):867. https://doi.org/10.3390/s22030867

Long J, Shelhamer E, Darrell T (2015) Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 3431–3440

Lin D, Li Y, Nwe TL et al (2020) RefineU-Net: improved U-Net with progressive global feedbacks and residual attention guided local refinement for medical image segmentation. Patt Recognit Lett 138:267–275. https://doi.org/10.1016/j.patrec.2020.07.013

Wu Y, Wu J, Jin S et al (2021) Dense-U-net: dense encoder–decoder network for holographic imaging of 3D particle fields. Optics Commun 493:126970. https://doi.org/10.1016/j.optcom.2021.126970

Zhou HY, Guo J, Zhang Y et al (2021) nnFormer: interleaved transformer for volumetric segmentation. https://arxiv.org/abs/2109.03201

Zhang B, Li W, Hui Y et al (2020) MFENet: multi-level feature enhancement network for real-time semantic segmentation. Neurocomputing 393:54–65. https://doi.org/10.1016/j.neucom.2020.02.019

Li D, Rahardja S (2021) BSEResU-Net: an attention-based before-activation residual U-Net for retinal vessel segmentation. Comput Methods Programs Biomed 205:106070. https://doi.org/10.1016/j.cmpb.2021.106070

Kohl SAA, Romera-Paredes B, Maier-Hein K et al (2019) A hierarchical probabilistic U-Net for modeling multi-scale ambiguities. https://arxiv.org/abs/1905.13077

Wang W, Chen J, Zhao J et al (2019) Automated segmentation of pulmonary lobes using coordination-guided deep neural networks. In: IEEE 16th International Symposium on Biomedical Imaging, pp 1353–1357. https://doi.org/10.1109/ISBI.2019.8759492

Milletari F, Navab N, Ahmadi S (2016) V-Net: fully convolutional neural networks for volumetric medical image segmentation. In: Fourth International Conference on 3D Vision, pp 565–571. https://doi.org/10.1109/3DV.2016.79

Huang C, Han H, Yao Q et al (2019) 3D U²-Net: a 3D universal U-Net for multi-domain medical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, pp 291–299

Yan W, Wang Y, Gu S et al (2019) The domain shift problem of medical image segmentation and vendor-adaptation by Unet-GAN. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, pp 623–631

Leng J, Liu Y, Zhang T et al (2019) Context-aware U-Net for biomedical image segmentation. In: IEEE International Conference on Bioinformatics and Biomedicine, pp 2535–2538. https://doi.org/10.1109/BIBM.2018.8621512

Prangemeier T, Wildner C, Francani AO et al (2021) Yeast cell segmentation in microstructured environments with deep learning. Biosystems 211:104557. https://doi.org/10.1016/j.biosystems.2021.104557

Kegeles E, Naumov A, Karpulevich EA et al (2020) Convolutional neural networks can predict retinal differentiation in retinal organoids. Front Cell Neurosci 14:171. https://doi.org/10.3389/fncel.2020.00171

Albanese A, Swaney JM, Yun DH et al (2020) Multiscale 3D phenotyping of human cerebral organoids. Sci Rep 10(1):21487. https://doi.org/10.1038/s41598-020-78130-7

Bian X, Li G, Wang C et al (2021) OrgaNet: a deep learning approach for automated evaluation of organoids viability in drug screening. In: International Symposium on Bioinformatics Research and Applications, pp 411–423. https://doi.org/10.1007/978-3-030-91415-8_35

Zawadzka-Gosk E, Wołk K, Czarnowski W (2019) Deep learning in state-of-the-art image classification exceeding 99% accuracy. In: World Conference on Information Systems and Technologies, pp 946–957

McCauley HA, Wells JM (2017) Pluripotent stem cell-derived organoids: using principles of developmental biology to grow human tissues in a dish. Development 144(6):958–962. https://doi.org/10.1242/dev.140731

Hallam D, Hilgen G, Dorgau B et al (2018) Human-induced pluripotent stem cells generate light responsive retinal organoids with variable and nutrient-dependent efficiency. Stem Cells 36(10):1535–1551. https://doi.org/10.1002/stem.2883

Cowan CS, Renner M, De Gennaro M et al (2020) Cell types of the human retina and its organoids at single-cell resolution. Cell 182(6):1623–1640.e34. https://doi.org/10.1016/j.cell.2020.08.013

He K, Gkioxari G, Dollár P et al (2017) Mask R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision, pp 2961–2969

Berger AJ, Linsmeier KM, Kreeger PK et al (2017) Decoupling the effects of stiffness and fiber density on cellular behaviors via an interpenetrating network of gelatin-methacrylate and collagen. Biomaterials 141:125–135. https://doi.org/10.1016/j.biomaterials.2017.06.039

Sutherland RM, Durand RE (1984) Growth and cellular characteristics of multicell spheroids. Recent Results Cancer Res 95:24–49. https://doi.org/10.1007/978-3-642-82340-4_2

Kunz-Schughart LA, Kreutz M, Knuechel R (1998) Multicellular spheroids: a three-dimensional in vitro culture system to study tumour biology. Int J Exp Pathol 79(1):1–23. https://doi.org/10.1046/j.1365-2613.1998.00051.x

Ziolkowska K, Stelmachowska A, Kwapiszewski R et al (2013) Long-term three-dimensional cell culture and anticancer drug activity evaluation in a microfluidic chip. Biosens Bioelectron 40(1):68–74. https://doi.org/10.1016/j.bios.2012.06.017

Park SE, Georgescu A, Huh D (2019) Organoids-on-a-chip. Science 364(6444):960–965. https://doi.org/10.1126/science.aaw7894

Lancaster MA, Renner M, Martin CA et al (2013) Cerebral organoids model human brain development and microcephaly. Nature 501(7467):373–379. https://doi.org/10.1038/nature12517

Chiaradia I, Lancaster MA (2020) Brain organoids for the study of human neurobiology at the interface of in vitro and in vivo. Nat Neurosci 23(12):1496–1508. https://doi.org/10.1038/s41593-020-00730-3

Tang C, Wu Z, Wang S et al (2021) Industrial object detection method based on improved CenterNet. In: International Conference on Computer Engineering and Artificial Intelligence, pp 121–125. https://doi.org/10.1109/ICCEAI52939.2021.00023

Russakovsky O, Deng J, Su H et al (2015) ImageNet large scale visual recognition challenge. Int J Comput Vision 115(3):211–252. https://doi.org/10.1007/s11263-015-0816-y

Yang Y, Zhao L, Chen X et al (2018) Reduced field of view single-shot spiral perfusion imaging. Magn Reson Med 79(1):208–216. https://doi.org/10.1002/mrm.26664

Chen PC, Gadepalli K, MacDonald R et al (2019) An augmented reality microscope with real-time artificial intelligence integration for cancer diagnosis. Nat Med 25(9):1453–1457. https://doi.org/10.1038/s41591-019-0539-7

Christiansen EM, Yang SJ, Ando DM et al (2018) In silico labeling: predicting fluorescent labels in unlabeled images. Cell 173(3):792–803.e19. https://doi.org/10.1016/j.cell.2018.03.040

Ounkomol C, Seshamani S, Maleckar MM et al (2018) Label-free prediction of three-dimensional fluorescence images from transmitted-light microscopy. Nat Methods 15(11):917–920. https://doi.org/10.1038/s41592-018-0111-2

Huisken J, Swoger J, Del Bene F et al (2004) Optical sectioning deep inside live embryos by selective plane illumination microscopy. Science 305(5686):1007–1009. https://doi.org/10.1126/science.1100035

Keller PJ, Schmidt AD, Wittbrodt J et al (2008) Reconstruction of zebrafish early embryonic development by scanned light sheet microscopy. Science 322(5904):1065–1069. https://doi.org/10.1126/science.1162493

Ding Y, Ma J, Langenbacher AD et al (2018) Multiscale light-sheet for rapid imaging of cardiopulmonary system. JCI Insight 3(16):e121396. https://doi.org/10.1172/jci.insight.121396

Zhu T, Zhu L, Li Y et al (2021) High-speed large-scale 4D activities mapping of moving C. elegans by deep-learning-enabled light-field microscopy on a chip. Sens Actuat B Chem 348:130638. https://doi.org/10.1016/j.snb.2021.130638

Chen X, Ping J, Sun Y et al (2021) Deep-learning on-chip light-sheet microscopy enabling video-rate volumetric imaging of dynamic biological specimens. Lab Chip 21(18):3420–3428. https://doi.org/10.1039/d1lc00475a

Marcus G (2018) Deep learning: a critical appraisal. https://arxiv.org/abs/1801.00631

Wells WM (2016) Medical image analysis – past, present, and future. Med Image Anal 33:4–6. https://doi.org/10.1016/j.media.2016.06.013

Madabhushi A, Lee G (2016) Image analysis and machine learning in digital pathology: challenges and opportunities. Med Image Anal 33:170–175. https://doi.org/10.1016/j.media.2016.06.037

Sang J (2018) Deep learning interpretation. In: Proceedings of the 26th ACM International Conference on Multimedia, pp 2098–2100. https://doi.org/10.1145/3240508.3241472

Li X, Xiong H, Li X et al (2021) Interpretable deep learning: interpretation, interpretability, trustworthiness, and beyond. https://arxiv.org/abs/2103.10689

Bhat SM, Badiger VA, Vasishta S et al (2021) 3D tumor angiogenesis models: recent advances and challenges. J Cancer Res Clin Oncol 147(12):3477–3494. https://doi.org/10.1007/s00432-021-03814-0

Chang M, Bogacheva MS, Lou YR (2021) Challenges for the applications of human pluripotent stem cell-derived liver organoids. Front Cell Dev Biol 9:748576. https://doi.org/10.3389/fcell.2021.748576

Li Y, Yang X, Plummer R et al (2021) Human pluripotent stem cell-derived hepatocyte-like cells and organoids for liver disease and therapy. Int J Mol Sci 22(19):10471. https://doi.org/10.3390/ijms221910471

Palano G, Foinquinos A, Mullers E (2021) In vitro assays and imaging methods for drug discovery for cardiac fibrosis. Front Physiol 12:697270. https://doi.org/10.3389/fphys.2021.697270

Kim J, Koo BK, Knoblich JA (2020) Human organoids: model systems for human biology and medicine. Nat Rev Mol Cell Biol 21(10):571–584. https://doi.org/10.1038/s41580-020-0259-3

Xu X, Li L, Luo L et al (2021) Opportunities and challenges of glioma organoids. Cell Commun Signal 19(1):102. https://doi.org/10.1186/s12964-021-00777-0

Acknowledgements