Abstract

Recent meta-analyses and meta-analytic reviews of the most common approaches to cognitive training broadly converge in describing a lack of transfer effects beyond the trained task. This also extends to more recent attempts to use video games to improve cognitive abilities, calling into question whether such games have any true effect on cognitive functioning at all. Despite this, video game training studies are slowly accumulating and beginning to provide evidence of replicable improvements. Our study aimed to train non-video game players in the real-time strategy video game StarCraft II in order to observe any subsequent changes in perceptual, attentional, and executive functioning. Thirty hours of StarCraft II training resulted in improvements to perceptual and attentional abilities, but not to executive functioning. This pattern of results is in line with previous research on the more frequently investigated “action” video games. By splitting the StarCraft II training group into “fixed” and “variable” training conditions, we were also able to demonstrate that manipulating the video game environment produces measurable differences in the amount of cognitive improvement. Lastly, by extracting in-game behavior features from recordings of each participant’s gameplay, we were able to show a direct correlation between in-game behavior change and cognitive performance change after training. These findings highlight and support the growing trend toward more finely detailed and methodologically rigorous approaches to studying the relationship between video games and cognitive functioning.

Introduction

The past two decades have seen a rapid increase in research on cognitive training, that is, targeted efforts by scientists to improve cognitive ability through behavioral interventions. This focus is both warranted and unsurprising given how important cognitive ability is for life success (e.g., Strenze, 2007; Watkins et al., 2007; Lynn & Yadav, 2015), and some of the inspiration for training approaches can be traced to the observation that performing certain tasks seems to be related to improved cognition. For instance, chess players (Burgoyne et al., 2016; Sala et al., 2017) and musicians (Schellenberg, 2011; Swaminathan et al., 2017) have repeatedly been shown to outperform individuals without such experience on tests of intelligence.

Despite these observations, demonstrating a causal link between performing those tasks and enhanced cognitive ability has proven very difficult. Even though the cross-sectional evidence is strong, both chess and musical training (Gliga & Flesner, 2014; Sala & Gobet, 2017a; Sala & Gobet, 2017b) fail to show benefits to cognitive ability in training studies, especially when an active control group is used as the reference. Although training the domain-specific skills related to those tasks undeniably improves the task performance itself, there appears to be no transfer to domain-general cognitive abilities.

This is mirrored by the second notable approach to improving domain-general cognitive ability, i.e., training those abilities directly (Taatgen, 2016). A good example is the attempt to improve fluid intelligence by training one of its strong correlates, working memory (Conway et al., 2003; Chuderski, 2013). Even though the initial results were promising (Jaeggi et al., 2008), it has since been repeatedly shown that working memory training has near-zero effects on intelligence (again, particularly when compared to active control groups: Melby-Lervåg et al., 2016). Brain training games are similarly unimpressive. Although marketing claims abound that playing these games can improve domain-general cognition (and, by way of transfer, general cognitive ability), supporting evidence has failed to materialize. As in working memory training, improvements after brain training games seem to be limited to the training task or very closely related tasks (near-transfer); there is little evidence of any far-transfer effects to general cognitive ability (Simons et al., 2016).

These failures and others have led some researchers to go so far as to conclude that cognitive training produces no appreciable benefits (Sala & Gobet, 2019), and such a conclusion is not unwarranted given the available literature. However, there is still one form of training that continues to show promise: video game training. Evidence of an association between video game experience and cognitive ability has mounted rapidly. The meta-analytic data converge to consistently demonstrate that video game players (VGPs) outperform non-video game players (NVGPs) on a wide range of cognitive tasks at medium effect sizes (Powers et al., 2013; Powers & Brooks, 2014; Bediou et al., 2018; Sala et al., 2018; for review, see: Green & Bavelier, 2015). Despite some of the methodological concerns that have been raised about this body of literature (e.g., Boot et al., 2011; Unsworth et al., 2015; Sala et al., 2018), its volume and relative consistency in showing positive effects offers the hope of a causal link between playing video games and improved cognitive abilities.

Although not as numerous as the cross-sectional comparisons, video game training studies have also begun to accumulate. The most recent meta-analyses on such cognitive interventions in healthy adults report that across all of the various domains of cognition under consideration, training NVGPs using video games results in small to medium effects of cognitive improvement (Powers et al., 2013; Powers & Brooks, 2014; Wang et al., 2016; Bediou et al., 2018). Bediou et al. (2018) note that while most of the cognitive domains they included in their research were shown to benefit from video gaming, they did not benefit equally. The largest effect sizes were for the domains of spatial cognition (e.g., mental rotation, spatial working memory), top-down attention (e.g., complex search, multiple object tracking), and perception (e.g., contrast sensitivity, lateral masking). These three domains also showed the largest effects in both cross-sectional and training studies, which is a notable consistency.

Most importantly, the effects remain even when considering only training studies that used an active control group (Bediou et al., 2018), which provides a higher standard of evidence than the typically employed passive control groups. That being said, conclusions about the efficacy of video game training are not all positive. A recent review of these results and their meta-analyses argues the true effect of video game training on overall cognitive ability is near-zero after correcting for modelling flaws and publication bias (Sala et al., 2018). Even so, the fact that the effects remain when using an active reference distinguishes the literature on video game training from the examples mentioned earlier.

Although the meta-analyses cited above include varying types of video games, the primary focus is clearly on what the broader literature refers to as “action” video games. They tend to contain features that are considered key to impacting cognition, such as: “(a) a fast pace; (b) a high degree of perceptual and motor load, but also working memory, planning and goal setting; (c) an emphasis on constantly switching between a highly focused state of attention and a more distributed state of attention; and (d) a high degree of clutter and distraction” (Bediou et al., 2018). This definition potentially encompasses several genres of video games, but the literature reflects that most researchers have focused on first-person shooter (FPS) and third-person shooter (TPS) action video games. However, there is a third genre that seemingly fits the description above: real-time strategy (RTS).

Unlike FPS and TPS video games, real-time strategy video games are played from a top-down (allocentric) perspective, somewhat resembling classic strategy games like chess. As in chess, players are given control over a set of specialized pieces (units) and must deploy them across a game board (map) in order to defeat their opponent. One critical added element is that players are able to create more units to deploy onto the game map, typically by a mechanic of gathering and spending resources. Combined with the need to react to the opponent’s actions in real time, this leads to a dynamic of executing a strategy as quickly as possible while also being flexible enough to adapt to changing circumstances. Furthermore, because action can occur anywhere on the game map, players must often switch their focus between individual tasks and map locations while also maintaining an overall picture of the game state.

Without delving into the specifics of RTS mechanics too deeply, it can be argued that RTS video games are a good fit for the definition of action video games proposed by Bediou et al. (2018). Indeed, the same paper notes that some of the gameplay mechanics that were rather specific to FPS and TPS video games in the past can now be found in several other genres, including RTS. However, since most previous studies either excluded RTS players from their “action” video game player samples or treated them as NVGPs, the evidence for their potential to impact cognition is much more limited than in the case of FPS and TPS games. Only a few studies have examined both FPS and RTS players (Dobrowolski et al., 2015; Dale & Green, 2017; Klaffehn et al., 2018), with results suggesting similar but not necessarily equivalent (task-dependent) performance advantages in comparison to NVGP controls. More importantly, two training studies have also used RTS games. Boot et al. (2008) trained NVGPs using the RTS “Rise of Nations” over a period of 21.5 h and found no effect of training across a large battery of cognitive tasks. Glass et al. (2013) trained participants using the RTS game “StarCraft,” focusing on the assessment of cognitive flexibility; 40 h of training produced significant gains in the StarCraft trainees over and above those of an active control group (who played the life simulator “The Sims”). One major difference between these two studies is that Rise of Nations is less representative of an “action” video game due to its slower pace, while StarCraft is one of the first RTS games to resemble the definition outlined by Bediou et al. (2018).

Our study aimed to test the efficacy of RTS video game training in improving both perceptual/attentional and executive cognitive functioning. We chose to use the RTS game StarCraft II (SC2), the sequel to the original StarCraft used by Glass et al. (2013). This choice was motivated primarily by its previous use and description in the scientific literature as a tool for studying complex skill learning (see: Thompson et al., 2013), and also by the possibility of modifying in-game parameters to provide varying types of training experiences. As this is an efficacy study, we implemented two different SC2 training regimes alongside one active and one passive control group. The SC2 training had two conditions: Fixed, in which the player repeatedly faced an AI opponent that always used the same strategy, and Variable, in which the AI opponent and its strategy were randomly selected for each match. This is similar to the approach taken by Glass et al. (2013), who created two training conditions with the goal of manipulating the need for cognitive flexibility. Here, our intent was to manipulate the predictability of the training in order to observe its impact on post-training cognitive changes.

We assumed that a less predictable and less repetitive training experience would lead to slower automatization of in-game behavior, and hence impose greater overall task demands over the course of training. If this assumption holds and SC2 training does improve cognitive performance overall, we would expect to see more improvement in the “variable” SC2 condition than in the “fixed” condition. In addition to standard comparisons against the passive and active control groups, demonstrating a difference in efficacy between the two SC2 training conditions would provide more direct evidence of a link between RTS gameplay and cognitive functioning. In summary, the goals of this study are twofold: (1) to demonstrate the effects of RTS video game training on a range of cognitive abilities in a large and well-controlled study and (2) to test whether manipulating the predictability of the training experience (within the same video game) can affect the efficacy of the training in terms of cognitive outcomes. Our hypotheses regarding the study groups were: equivalent improvement in the passive and active control groups (the null hypothesis), greater cognitive improvement after StarCraft II training than in the control groups, and greater cognitive improvement after StarCraft II “Variable” training than after “Fixed” training.

Methods

Participants

A total of 90 participants took part in the study, pseudo-randomly split across four groups: passive control (n = 15; 8 females), active control (n = 15; 8 females), StarCraft II Fixed (n = 30; 15 females), and StarCraft II Variable (n = 30; 16 females). These participants were recruited primarily through Facebook advertising campaigns, which invited individuals to participate in a longitudinal study of complex skill learning. Potential participants were screened online using the VGQ (Video Game Questionnaire; Sobczyk et al., 2015) in order to assess their video game playing history. Only individuals who reported playing action video games (first-person shooter, third-person shooter, real-time strategy, open-world action games, and multi-player online battle arenas) less than 5 h per week over the past 6 months were accepted to the study and invited for laboratory measures. Further, only respondents indicating no previous experience with real-time strategy games and StarCraft (I and II) were considered. We also asked directly about previous FPS experience, with only one participant reporting FPS play in the past. None of our participants declared any action video game playing in the 6 months prior to recruitment.

Written informed consent was obtained from each participant before training began, and participants were informed of their rights as voluntary study participants in line with the Declaration of Helsinki. Participants who entered the study were asked to refrain from playing any other video games for its duration. During the study, a total of eight participants (one passive control, two active control, two SC Fixed, and two SC Variable) either chose to drop out before beginning their training (six) or were removed for failing to follow the training protocol (two). Six of these participants were replaced while the study was still in open recruitment, and two (one passive control and one SC Variable) could not be replaced before the study concluded. Participants received approximately 250 USD upon completing the study.

The groups were balanced for age, with average ages of 24.87 (SD = 2.87), 24.73 (SD = 4.09), 24.67 (SD = 2.99), and 24.47 (SD = 2.95) for the passive control, active control, SC Fixed, and SC Variable groups, respectively. In the same order, the average years of education were 15.40 (SD = 2.58), 16.53 (SD = 2.38), 16.07 (SD = 2.24), and 16.30 (SD = 2.15). One-way ANOVAs indicated that the groups did not differ in either age (p = .980) or years of education (p = .543). Further, the general cognitive ability of our participants was measured before training using Raven’s Progressive Matrices (Raven, 1936) and the Operation Span task (Unsworth et al., 2005). Raven’s matrices were administered with a 30-min time limit. No significant differences were found in raw Raven’s scores (passive control: 47.43, SD = 20.31; active control: 48.40, SD = 21.43; StarCraft Fixed: 42.56, SD = 17.71; StarCraft Variable: 48.86, SD = 17.28), p = .546. OSPAN scores were compared only for participants with at least 80% math accuracy; three participants from the StarCraft Fixed group were excluded from this analysis on that basis. The groups (passive control: 54.86, SD = 4.27; active control: 56.07, SD = 3.14; StarCraft Fixed: 54.04, SD = 7.92; StarCraft Variable: 55.93, SD = 3.21) did not differ significantly in OSPAN score (p = .605).
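Group comparisons like these can be checked from the reported summaries alone. A one-way ANOVA F statistic can be recovered from the group means, standard deviations, and sizes, and the Python sketch below does this. It assumes the group sizes listed under Participants (15, 15, 30, 30), which may differ slightly from the analyzed samples because of the dropouts described above. It leaves the p-value to an F-distribution survival function (e.g., SciPy's `scipy.stats.f.sf`). The function name is illustrative.

```python
from math import fsum


def one_way_anova_from_summary(means, sds, ns):
    """Return (F, df_between, df_within) for a one-way ANOVA computed from
    per-group means, sample SDs (n - 1 denominator), and group sizes."""
    if not (len(means) == len(sds) == len(ns)) or len(means) < 2:
        raise ValueError("need matching summaries for at least two groups")
    k = len(means)
    n_total = sum(ns)
    grand_mean = fsum(n * m for n, m in zip(ns, means)) / n_total
    # Between-group sum of squares: size-weighted squared deviations of group means.
    ss_between = fsum(n * (m - grand_mean) ** 2 for n, m in zip(ns, means))
    # Within-group sum of squares recovered from each group's sample variance.
    ss_within = fsum((n - 1) * s ** 2 for n, s in zip(ns, sds))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Age summaries reported above (passive, active, SC Fixed, SC Variable).
F, df1, df2 = one_way_anova_from_summary(
    means=[24.87, 24.73, 24.67, 24.47],
    sds=[2.87, 4.09, 2.99, 2.95],
    ns=[15, 15, 30, 30],
)
print(f"F({df1}, {df2}) = {F:.3f}")  # F(3, 86) = 0.060
```

With these inputs the statistic is very small, which is in line with the non-significant age difference reported above.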

Measures

We applied a battery of tasks selected to cover the major domains of cognition typically implicated in action video game play, i.e., perception, attention, and cognitive control. The battery included: (1) the Visual Motion Direction Discrimination task, measuring perceptual ability; (2) the Attentional Window task, measuring attentional breadth; (3) the Stop Signal task, measuring inhibitory control; (4) the Flanker Switching task, measuring mental flexibility; and (5) the Memory Updating task, measuring memory maintenance and updating. All measures except the paper-and-pencil Raven’s matrices were administered on a stationary computer in a laboratory setting. Procedures were displayed on a 24-inch BenQ XL2411P monitor at a resolution of 1920 × 1080 pixels and a refresh rate of 100 Hz. Each procedure was programmed with the PsychoPy experiment-building package (Peirce et al., 2019). Participants were seated 60 cm from the monitor in all procedures except the Attentional Window task, for which they were seated at 40 cm.

Raven’s Progressive Matrices

In this 60-item non-verbal test of eductive ability (Raven, 1936), participants are presented with a series of eight patterns and must make a multiple-choice response to fill in the final missing pattern. The items increase in difficulty sequentially. A raw Raven’s score is computed as the total number of correct responses.

Operation Span

Based on Unsworth et al. (2005), participants first completed a practice session. This consisted of a simple letter span task (two 3-letter and two 4-letter sequences, fixed order), in which they were asked to recall presented letters in the correct order, and a series of 15 math operations (e.g., “(1x2) + 1 = ?”). After these tasks were practiced individually, they were practiced in tandem by interspersing the presentation of letters with math operations (three trials). Finally, the main task was performed over a series of 75 letters/math problems. These were divided into sets of 3–7 letters (set size being the number of letters in the span) and presented in random order. Participants were encouraged to be both fast and accurate in both subtasks. The primary measures were the OSPAN score (based on sets recalled perfectly and in the correct order) and math accuracy.
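The scoring and inclusion logic can be sketched in Python. This is an illustration only. It assumes the absolute OSPAN score of Unsworth et al. (2005), i.e., the summed size of every set recalled perfectly and in order. It also applies the 80% math-accuracy criterion mentioned under Participants, treating the cutoff as inclusive (an assumption). The names `SpanSet`, `ospan_absolute_score`, `math_accuracy`, and `passes_math_criterion` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpanSet:
    presented: List[str]  # letters shown in this set, in order
    recalled: List[str]   # letters the participant reported, in order


def ospan_absolute_score(sets: List[SpanSet]) -> int:
    """Sum of set sizes over sets recalled perfectly (every letter, correct order)."""
    return sum(len(s.presented) for s in sets if s.recalled == s.presented)


def math_accuracy(math_correct: List[bool]) -> float:
    """Proportion of math operations answered correctly."""
    return sum(math_correct) / len(math_correct)


def passes_math_criterion(math_correct: List[bool], cutoff: float = 0.80) -> bool:
    """Inclusion rule: at least `cutoff` math accuracy (inclusive bound assumed)."""
    return math_accuracy(math_correct) >= cutoff


sets = [
    SpanSet(list("FKP"), list("FKP")),      # perfect: contributes 3
    SpanSet(list("QRSL"), list("QRLS")),    # order error: contributes 0
    SpanSet(list("JNHTY"), list("JNHTY")),  # perfect: contributes 5
]
print(ospan_absolute_score(sets))                   # 8
print(passes_math_criterion([True] * 9 + [False]))  # True (90% accuracy)
```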

Visual Motion Direction Discrimination

Similar to the procedures used by Britten et al. (1992), Palmer et al. (2005), and Shadlen and Newsome (2001), each trial began with the presentation of a red fixation circle (0.4 deg. in diameter) in the center of a 5-deg.-diameter aperture (light gray background) for 1000 ms. This was followed by a motion display in which dots (0.1-deg. squares) moved at approximately 5 deg. per second, at a density of 16.7 dots per square degree per second. In this display, dots moved either randomly or coherently toward the left or right side of the aperture. Five levels of motion coherence (the percentage of dots moving coherently) were used: 1.6%, 3.2%, 6.4%, 12.8%, and 25.6%. Participants were asked to indicate the direction in which the coherent dots were moving (leftward and rightward motion were evenly split across trials). The motion display continued for 2 s or until a response was made. The fixation circle then changed color for 1000 ms to indicate whether the response was correct, incorrect, or timed out. Participants completed 10 practice trials at each coherence level followed by 75 trials at each coherence level, totaling 425 trials. The primary measures for this task are response accuracy and reaction time at each level of coherence.
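The notion of motion coherence can be made concrete with a simplified single-frame update of a random-dot display, sketched in Python below. It is not the PsychoPy code used in the study, and it may differ from the procedures of the cited studies. Dot lifetime, the number of dots per frame, and the replotting rule are not specified above. The sketch therefore assumes that signal dots are re-drawn at random on every frame and that dots leaving the aperture reappear at a random location inside it. The per-frame step follows from the reported speed and the 100 Hz refresh rate (5 deg/s ÷ 100 frames/s = 0.05 deg per frame). `step_dots` is a hypothetical name.

```python
import math
import random


def step_dots(dots, coherence, direction_deg, step_deg, radius_deg, rng):
    """Advance a random-dot display by one frame.

    A randomly chosen fraction `coherence` of the dots moves `step_deg` in the
    signal direction (0 = rightward, 180 = leftward); the remaining dots move
    the same distance in independent random directions. Dots that end up
    outside the circular aperture are replotted at a random position inside it.
    """
    n_signal = round(coherence * len(dots))
    signal = set(rng.sample(range(len(dots)), n_signal))
    moved = []
    for i, (x, y) in enumerate(dots):
        if i in signal:
            theta = math.radians(direction_deg)
        else:
            theta = rng.uniform(0.0, 2.0 * math.pi)
        x, y = x + step_deg * math.cos(theta), y + step_deg * math.sin(theta)
        if math.hypot(x, y) > radius_deg:
            # sqrt keeps replotted dots uniformly distributed over the disc.
            r = radius_deg * math.sqrt(rng.random())
            phi = rng.uniform(0.0, 2.0 * math.pi)
            x, y = r * math.cos(phi), r * math.sin(phi)
        moved.append((x, y))
    return moved


STEP_DEG = 5.0 / 100  # 5 deg/s at 100 Hz
RADIUS_DEG = 2.5      # 5-deg.-diameter aperture
rng = random.Random(1)
dots = [(0.0, 0.0)] * 10
moved = step_dots(dots, coherence=1.0, direction_deg=0, step_deg=STEP_DEG,
                  radius_deg=RADIUS_DEG, rng=rng)
print(moved[0])  # (0.05, 0.0)
```

In this scheme, a coherence level such as 25.6% would be passed as `coherence=0.256`.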

Attentional Window

Based on Hüttermann et al. (2012), but adapted from the original projection-screen format, each trial began with a 1000 ms fixation cross, followed by the presentation of two white cueing circles (2 deg. diameter) for 200 ms. These circles always mirrored each other’s positions on the screen and could appear along either the horizontal (x) or the vertical (y) axis. The cues were replaced by a blank screen for 200 ms, after which the target stimuli were presented in the cued positions for 300 ms. The stimuli on each side consisted of four shapes (circles or squares) arranged in a 2.5 × 2.5-deg. square grid, each colored either light gray or dark gray. After the targets disappeared, participants were asked to indicate how many light gray circles had appeared in each stimulus set. Correct answers for both stimulus sets increased the distance between the two sets by 1 deg. for the subsequent trial on that axis. Incorrect answers for both stimulus sets decreased that distance by 1 deg.

The stimulus sets began at a distance of 10 deg. from each other (the minimum distance), i.e., 5 deg. from the screen center. The number of targets in each set ranged from 0 to 3 across 288 trials in four blocks of 72, with a pseudo-random distribution. Target set position (x- or y-axis) was evenly split across trials. A total of 16 practice trials (two at 10, 20, 30, and 40 deg. of eccentricity for each axis) were completed first. The primary measure in this procedure is the average visual eccentricity (in deg.) on each axis.
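The per-axis adaptive rule can be sketched as a small staircase object in Python. Several details are assumptions rather than reported facts:

- Trials with one correct and one incorrect set leave the separation unchanged.
- The separation never drops below the 10-deg. starting minimum.
- Eccentricity is taken as half the separation, averaged over all trials on that axis.

`AxisStaircase` is a hypothetical name.

```python
from statistics import mean

MIN_SEPARATION_DEG = 10.0  # starting (and minimum) distance between the two sets
STEP_DEG = 1.0


class AxisStaircase:
    """Separation staircase for one axis (x or y) of the Attentional Window task."""

    def __init__(self):
        self.separation = MIN_SEPARATION_DEG
        self.history = []  # separation used on each trial of this axis

    def update(self, set_a_correct, set_b_correct):
        """Record one trial and set the separation for the next trial on this axis."""
        self.history.append(self.separation)
        if set_a_correct and set_b_correct:
            self.separation += STEP_DEG
        elif not set_a_correct and not set_b_correct:
            self.separation = max(MIN_SEPARATION_DEG, self.separation - STEP_DEG)
        # One correct and one incorrect set: separation unchanged (assumption).

    def mean_eccentricity(self):
        """Average eccentricity of each set, i.e., half the mean separation."""
        return mean(self.history) / 2


stairs = {"x": AxisStaircase(), "y": AxisStaircase()}
for a, b in [(True, True), (True, True), (False, False), (True, False)]:
    stairs["x"].update(a, b)
print(stairs["x"].separation, stairs["x"].mean_eccentricity())  # 11.0 5.5
```

Keeping one staircase per axis matches the description that each adjustment applies "for the subsequent trial on that axis".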

Stop Signal

Based on the specification provided by Verbruggen et al. (2008), each trial began with a fixation cross (2 deg. in size) presented on a gray background for 250 ms. This was followed by the primary task stimulus, either a white circle (10 deg. diameter) or a white square (10 × 10 deg.), which remained on screen for 1250 ms or until a response was made. Participants were asked to identify the shape of the target stimulus as quickly as possible. However, on some of the trials (stop trials), the target shape changed color from white to red shortly after being presented. On these trials, participants were to inhibit their response. The delay between target presentation and color change (stop signal delay, SSD) was initially 250 ms and was adjusted across trials using an adaptive staircase procedure. Successfully inhibiting a response on a stop trial increased the SSD by 50 ms, while a failure to inhibit decreased the SSD by 50 ms. Participants initially completed 32 practice trials, followed by three blocks of 64 trials; 25% of the trials in each block were stop trials, and the SSD value carried over across blocks. SSD is the primary measure in this procedure.
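The SSD tracking rule and the block composition can be sketched in Python. The text does not state a lower bound for the SSD, so the 0-ms floor here is an assumption. The names `StopSignalStaircase` and `make_block` are hypothetical. In the example run, stop-trial outcomes are simulated as placeholders for real responses.

```python
import random


class StopSignalStaircase:
    """Tracks the stop-signal delay (SSD) across stop trials; carries over between blocks."""

    def __init__(self, start_ms=250, step_ms=50, floor_ms=0):
        self.ssd = start_ms
        self.step = step_ms
        self.floor = floor_ms  # assumed lower bound; not specified in the text
        self.history = []      # SSD used on each stop trial

    def record_stop_trial(self, inhibited):
        self.history.append(self.ssd)
        if inhibited:
            # Successful stop: lengthen the delay, making the next stop harder.
            self.ssd += self.step
        else:
            # Failed stop: shorten the delay, making the next stop easier.
            self.ssd = max(self.floor, self.ssd - self.step)


def make_block(rng, n_trials=64, stop_fraction=0.25):
    """Shuffled list of 'go'/'stop' labels with the given proportion of stop trials."""
    n_stop = round(n_trials * stop_fraction)
    trials = ["stop"] * n_stop + ["go"] * (n_trials - n_stop)
    rng.shuffle(trials)
    return trials


rng = random.Random(0)
staircase = StopSignalStaircase()
for _ in range(3):  # three main blocks; the same staircase object carries the SSD over
    for trial in make_block(rng):
        if trial == "stop":
            # Placeholder outcome; in the task this comes from the participant's response.
            staircase.record_stop_trial(inhibited=rng.random() < 0.5)
print(len(staircase.history), staircase.ssd)
```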

Flanker Switching

Based on the specification provided by Cain et al. (2012), each trial began with the presentation of a central fixation point (white circle, 1 deg. diameter) on a gray background for 1800–2200 ms. This was followed by a centrally presented array of three arrows (3 deg. × 1.4 deg., separated by .4 deg.), which remained on screen for 1300 ms. The arrow array could be blue or yellow, and each arrow in the array could point either left or right. Participants were asked to respond as quickly as possible to the central arrow only, while ignoring the two flanking arrows. If the array was blue, the task was to indicate the direction in which the arrow was pointing (pro trials). If it was yellow, the task was to indicate the direction opposite to that pointed by the arrow (anti trials). Array color was evenly split across trials, as was the direction of the target arrow and its flankers. A total of eight practice trials and one block of 321 trials were completed. Switch trials were those in which the previous trial was of a different response type, i.e., pro-response trials preceded by anti-response trials and anti-response trials preceded by pro-response trials. Repeated trials were those in which the previous trial’s response type was the same (pro-pro or anti-anti). Reaction times for such sequences were only considered if both trials received a correct response. Switch cost was calculated as Switch trial RT − Repeated trial RT and is the primary measure in this procedure.
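The switch-cost computation can be sketched in Python. The sketch assumes that per-condition RTs are summarized by their means (the text does not specify mean or median). `Trial` and `switch_cost` are hypothetical names. The first trial of the block has no predecessor and therefore contributes to neither condition.

```python
from statistics import mean
from typing import NamedTuple


class Trial(NamedTuple):
    rule: str      # "pro" (blue array) or "anti" (yellow array)
    correct: bool
    rt_ms: float


def switch_cost(trials):
    """Mean RT on switch trials minus mean RT on repeated trials.

    Each trial after the first is classified by comparing its rule with the
    preceding trial's rule; the pair is used only if both responses were correct.
    """
    switch_rts, repeat_rts = [], []
    for prev, cur in zip(trials, trials[1:]):
        if not (prev.correct and cur.correct):
            continue
        target = switch_rts if cur.rule != prev.rule else repeat_rts
        target.append(cur.rt_ms)
    return mean(switch_rts) - mean(repeat_rts)


trials = [
    Trial("pro", True, 400.0),    # first trial: no predecessor, not classified
    Trial("pro", True, 420.0),    # repeat
    Trial("anti", True, 520.0),   # switch
    Trial("anti", False, 600.0),  # error: excluded
    Trial("pro", True, 480.0),    # preceded by an error: excluded
    Trial("pro", True, 430.0),    # repeat
    Trial("anti", True, 540.0),   # switch
]
print(switch_cost(trials))  # 105.0
```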

Memory Updating

Adapted from the specification provided by Salthouse et al. (1991), each trial began with the presentation of a square 2 × 2 matrix (10 deg. in width/height) on a gray background. In the first phase, four random digits (from 0 to 9) appeared sequentially (but in pseudo-random spatial order) in each of the matrix cells for 1 s. In the next phase, depending on the load condition, new digits began to appear in pseudo-random locations. Updating could occur from 0 to 5 times in a trial, and a cell was updated twice only in the case of load 5. After updating, a single cell was probed at random. The participant’s task was to remember the initial values that appeared in the first phase. They then had to update each cell’s value by simple addition/subtraction whenever a new digit appeared in it. The value of each update was chosen pseudo-randomly, with the constraints that the update could never be 0 and could never cause the cell value to fall below 0 or exceed 9. Participants responded to the probe by entering the value they had most recently ascribed to the probed cell. Six practice trials (sequentially increasing in load) and three blocks of 30 trials each were completed. Load was evenly split and randomized within each block. Response accuracy is the primary measure in this procedure.
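A trial generator that respects the constraints above can be sketched in Python. It is an illustrative reconstruction, not the study's PsychoPy code. Timing and display are omitted. The following details are assumptions:

- the cell numbering 0–3;
- the uniform choice among admissible update values;
- the hypothetical names `make_trial` and `make_block`.

```python
import random


def make_trial(load, rng):
    """Generate one memory-updating trial for a 2 x 2 matrix (cells 0-3).

    Returns (initial_digits, updates, probed_cell, correct_answer), where
    `updates` is a list of (cell, delta) pairs applied in order.
    """
    if not 0 <= load <= 5:
        raise ValueError("load must be between 0 and 5")
    values = [rng.randint(0, 9) for _ in range(4)]
    initial = list(values)
    # Loads 0-4 update distinct cells; at load 5 one cell must be updated twice.
    cells = rng.sample(range(4), min(load, 4))
    if load == 5:
        cells.append(rng.choice(cells))
        rng.shuffle(cells)
    updates = []
    for cell in cells:
        current = values[cell]
        # Admissible deltas: non-zero and keeping the cell value within 0-9.
        deltas = [d for d in range(-current, 10 - current) if d != 0]
        delta = rng.choice(deltas)
        values[cell] = current + delta
        updates.append((cell, delta))
    probe = rng.randrange(4)
    return initial, updates, probe, values[probe]


def make_block(rng, trials_per_load=5):
    """One block of 30 trials: loads 0-5, five trials each, in random order."""
    loads = [load for load in range(6) for _ in range(trials_per_load)]
    rng.shuffle(loads)
    return [make_trial(load, rng) for load in loads]


rng = random.Random(42)
initial, updates, probe, answer = make_trial(3, rng)
print(initial, updates, probe, answer)
```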

Training Groups

Passive Control

Participants in this group were tested in our laboratory before and after a 4-week wait period. They were also asked to refrain from playing video games during this time.

Hearthstone

Participants in the active control group trained using the free-to-play online card game Hearthstone. We chose this title because it is not an action video game, yet it shares two primary structural features with StarCraft II: (1) the game is played through individual 1 vs. 1 matches and (2) a matchmaking scheme constantly tries to match the player with a skill-appropriate opponent. Each player begins with a deck of 30 cards (including minions, items, and magical effects), which they take turns using to attack their opponent’s health points while defending their own. The gameplay encourages the strategic use of cards, and each player’s turn is limited to 75 s. A match ends when either the player or their opponent runs out of health points, and the first to do so loses. Participants were introduced to the game in the laboratory through self- and experimenter-guided materials, played a series of introductory matches (supervised by an experimenter), and were then helped to install the game onto their own computer (approximately 2 h total). A third-party stat tracking application (Hearthstone Deck Tracker) was also installed to gain access to match details and replays.

They were then asked to continue playing the game on their own for a total of 30 h over a period of 4 weeks, with the restriction of playing no more than 5 h in any given day and 10 h in any given week. Although not all of the training took place in the laboratory, these participants were asked to visit the laboratory for one 2-h session each week. An online platform was created and used to track each participant’s progress. It also informed participants about the progress of their training (hours trained, days left, next appointment time, etc.) and whether they were approaching their daily/weekly limits. Training time was recorded by a timer on the platform that participants started before each match and stopped after it. After each match, participants also had to indicate whether the match was won, lost, or ended early due to technical/external factors. During the last week of training, we calculated the actual time spent in game from the gathered match data and informed each participant of their exact remaining time.
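The limit checks performed by the online platform can be illustrated with a short Python sketch. This is a hypothetical reconstruction rather than the platform's actual code. In particular, it assumes that weeks are counted from each participant's start date rather than by calendar week. The names `check_limits`, `DAILY_CAP_H`, `WEEKLY_CAP_H`, and `TARGET_H`, and the example dates, are illustrative.

```python
from collections import defaultdict
from datetime import date, timedelta

DAILY_CAP_H = 5.0
WEEKLY_CAP_H = 10.0
TARGET_H = 30.0


def check_limits(sessions, start):
    """Summarize logged play time against the training rules.

    sessions: iterable of (date, hours) pairs, one per timed match.
    start: the participant's first training day; weeks are counted from it.
    Returns (days over the daily cap, week indices over the weekly cap, hours remaining).
    """
    per_day = defaultdict(float)
    per_week = defaultdict(float)
    for day, hours in sessions:
        per_day[day] += hours
        per_week[(day - start).days // 7] += hours
    over_days = sorted(d for d, h in per_day.items() if h > DAILY_CAP_H)
    over_weeks = sorted(w for w, h in per_week.items() if h > WEEKLY_CAP_H)
    remaining = max(0.0, TARGET_H - sum(per_day.values()))
    return over_days, over_weeks, remaining


start = date(2024, 1, 1)  # illustrative start date
sessions = [
    (start, 2.5),
    (start, 3.0),                      # same day: 5.5 h, over the daily cap
    (start + timedelta(days=1), 4.0),
    (start + timedelta(days=8), 2.0),  # falls in the second week
]
over_days, over_weeks, remaining = check_limits(sessions, start)
print(over_days, over_weeks, remaining)
```

The same bookkeeping would apply to the StarCraft II groups, which used the same platform and limits.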

StarCraft Fixed and Variable

StarCraft II is a real-time strategy game in which players face off on a game map, viewed from the top-down perspective. Each player begins with a base structure, the ability to construct new buildings, and several worker units. These workers can gather resources (available across the game map), which can be used to construct new buildings or produce units. Buildings give access to the production of different types of military units and to research upgrades for those units (spending resources to improve their abilities). Military units are used to defend your base and attack your opponent, while worker units gather resources and construct new buildings. The goal of the game is to defeat your opponent by destroying their units and structures. This requires coordinating both the economic (e.g., expanding your base, producing units, and gathering resources) and combat (e.g., issuing attack/move commands to your military units) aspects.

All of our StarCraft II participants underwent the same introductory training procedure. Participants were invited to the laboratory and first provided with a self-guided online set of materials that introduced them to StarCraft II and described some of its key concepts. Next, participants played through the tutorial stages of StarCraft II under the supervision of an experimenter. The experimenter guided the participant through the tutorial following a standard script. The experimenter was also allowed to interject if the participant clearly did not understand SC2’s core mechanics. Finally, the introductory training was completed after the participant played three supervised matches against artificial intelligence opponents of increasing difficulty. The entire introductory procedure lasted approximately 3 h.

Participants were then asked to complete 30 h of StarCraft II gameplay over a period of 4 weeks, with the restriction of playing no more than 5 h in any given day and 10 h in any given week. All training sessions took place within our laboratory. The same online platform that was used for Hearthstone was also used to track each SC2 participant’s progress, as well as to provide them with needed information (match settings, hours trained, days left, etc.). The training sessions themselves consisted of 1 vs. 1 matches (which typically last around 20 min) against an artificial intelligence (AI). The difficulty level of this AI was adjusted using a staircase procedure in which three match wins resulted in a difficulty increase, and one match loss resulted in a difficulty decrease. The matches were played across a randomly selected subset of the standard maps that are available in StarCraft II, with a new map being randomly selected for each match. As in the Hearthstone group, we calculated the actual time spent in game from the match replays and informed each participant about their exact remaining time in the last week of their training. The training of our SC Fixed and Variable groups differed in only two aspects: the opponent type and the opponent’s strategy. Both of these are options that are set at the start of each match.
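The difficulty rule is a win/loss staircase (three wins to step up, one loss to step down), which can be sketched in Python. The text leaves several details open. The sketch therefore assumes that the three wins must be consecutive, that the win counter resets after any difficulty change, and that difficulty is an integer index clamped to a fixed range of the game's AI settings. `DifficultyStaircase` is a hypothetical name.

```python
class DifficultyStaircase:
    """Win/loss staircase over an ordered range of AI difficulty levels.

    Assumptions not stated in the text: the three wins must be consecutive,
    the win counter resets after every level change, and the level is
    clamped to [lowest, highest].
    """

    def __init__(self, start_level, lowest, highest, wins_to_step_up=3):
        self.level = start_level
        self.lowest = lowest
        self.highest = highest
        self.wins_to_step_up = wins_to_step_up
        self.win_streak = 0

    def record(self, won):
        """Update the level after one match and return the level for the next match."""
        if won:
            self.win_streak += 1
            if self.win_streak == self.wins_to_step_up:
                self.level = min(self.highest, self.level + 1)
                self.win_streak = 0
        else:
            self.level = max(self.lowest, self.level - 1)
            self.win_streak = 0
        return self.level


staircase = DifficultyStaircase(start_level=2, lowest=1, highest=7)
results = [True, True, True, True, False, True, True, True]
print([staircase.record(won) for won in results])  # [2, 2, 3, 3, 2, 2, 2, 3]
```

Under these assumptions, the rule tends to hold a player near the difficulty at which three consecutive wins occur about half of the time. This corresponds to winning roughly 79% of matches, since 0.79³ ≈ 0.5.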

StarCraft II allows players to choose from three factions (Terran, Zerg, Protoss), each of which contains a unique set of units (along with their abilities) that shapes how the faction is played. Terran units favor a balanced and flexible playstyle, Zerg an aggressive playstyle with quick and low-cost unit production, and Protoss a slower playstyle that employs fewer but more powerful units. Nevertheless, the approach taken in a match is still fully determined by the player. Both SC Fixed and SC Variable trainees were limited to using the Terran faction during training. We chose Terran because of its flexible playstyle relative to the other factions. This decision took into account StarCraft II’s relatively steep learning curve and the general lack of video game experience within our sample.

As for the opponent, SC Fixed trainees always paired off against a Terran AI, while SC Variable trainees were randomly assigned an opponent out of the three factions at the start of each match. The effect of this manipulation is that SC Variable trainees were provided with a wider range of experience in terms of what they could expect from their opponent’s units. This is relevant to the gameplay due to the “rock, paper, scissors” nature of StarCraft II. Units are balanced so that they each have a weakness (i.e., units they are weak against) and a strength (i.e., units they are strong against), making it important to be able to correctly recognize your opponent’s unit composition; doing so allows the player to plan an effective counter strategy. For the opponent strategy, there were five possibilities:

1. Economic focus: AI focuses on base/economy expansion and development, tending to defend and launch counterattacks.
2. Timing attack: AI times its attacks to coincide with the completion of unit upgrades, which are intended to give it an advantage over the opponent.
3. Aggressive push: AI uses a timing attack that pushes with all its units at once, attempting to engage the opponent before they have time to research their own upgrades.
4. Full rush: AI attempts to end the match as quickly as possible by focusing on unit buildup instead of researching upgrades.
5. Straight to air: AI focuses on building air units; this strategy is designed to practice air battles.

The SC Fixed group always faced the economic focus strategy. We chose this strategy because it is the least aggressive option and is therefore more forgiving of mistakes and/or slow play; it should provide the most predictable and least time-pressured experience. The SC Variable group was randomly assigned one of the five opponent strategies at the beginning of each match. In practice, this means that SC Variable trainees faced a less predictable threat from match to match. This could take the form of increased time pressure, as with the full rush strategy, or of a specific unit composition that requires an appropriate counter. It is important to note that our SC trainees knew their opponent’s strategy before the start of each match, which should in theory limit their need to create counter strategies on the fly, so long as they were able to apply knowledge from their past matches.
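The difference between the two training conditions comes down to how the opponent settings are chosen before each match. The sketch below expresses that rule in code. It is hypothetical: it assumes the Variable group's opponent faction and strategy were drawn independently and uniformly at random for each match, and it omits the random map selection shared by both groups. The names `MatchSettings` and `draw_match_settings` are illustrative only.

```python
import random
from dataclasses import dataclass

FACTIONS = ("Terran", "Zerg", "Protoss")
STRATEGIES = (
    "Economic focus",
    "Timing attack",
    "Aggressive push",
    "Full rush",
    "Straight to air",
)


@dataclass(frozen=True)
class MatchSettings:
    player_faction: str
    opponent_faction: str
    opponent_strategy: str


def draw_match_settings(condition: str, rng: random.Random) -> MatchSettings:
    """Opponent settings for one match in the 'fixed' or 'variable' condition."""
    if condition == "fixed":
        # Always Terran vs. a Terran AI using the least aggressive strategy.
        return MatchSettings("Terran", "Terran", "Economic focus")
    if condition == "variable":
        # Assumed: faction and strategy drawn independently and uniformly per match.
        return MatchSettings("Terran", rng.choice(FACTIONS), rng.choice(STRATEGIES))
    raise ValueError(f"unknown condition: {condition!r}")
```

Passing a seeded `random.Random` makes a simulated training schedule reproducible, for example `[draw_match_settings("variable", random.Random(0)) for _ in range(3)]`.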

Results

Behavioral Data Analysis

The data from our five cognitive tasks (Table 1) were analyzed by computing planned contrasts within ANCOVA models, with pre-training performance as a covariate and post-training performance as the dependent variable. An initial one-way ANOVA of pre-training performance across our four groups did not reveal any significant differences on the cognitive tasks (ps > .263; tasks with multiple levels were averaged into a single indicator). Three contrasts were applied: (1) passive control vs. Hearthstone, (2) combined control groups vs. combined StarCraft II groups, and (3) SC Fixed training vs. SC Variable training. Two additional contrasts comparing the combined SC2 groups with each control group separately were planned in case contrast (1) was significant; as this was not the case for any of the tested models, they are not reported here. No filtering was applied to the raw data, and participants were excluded on an individual task basis. It should be noted that while the ANCOVA models described below were performed as planned comparisons, the remaining analyses (replay data, regression, and moderation) are exploratory in nature.
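As a rough illustration of how one planned contrast can be tested inside an ANCOVA of this kind, the sketch below regresses post-training scores on one indicator per group plus the pre-training covariate (a common slope across groups) and forms an F(1, N − 5) statistic for a contrast over the covariate-adjusted group means. It is a minimal NumPy sketch, not the authors' analysis code; the group labels, the weight vectors chosen for contrasts (1)–(3), and the name `ancova_contrast` are illustrative assumptions.

```python
import numpy as np

# Hypothetical group labels, in the order used by the contrast weights.
GROUPS = ("passive", "hearthstone", "sc_fixed", "sc_variable")

# Contrast weights over the covariate-adjusted group means.
CONTRASTS = {
    "passive_vs_hearthstone": np.array([1.0, -1.0, 0.0, 0.0]),  # contrast (1)
    "controls_vs_sc": np.array([1.0, 1.0, -1.0, -1.0]),         # contrast (2)
    "fixed_vs_variable": np.array([0.0, 0.0, 1.0, -1.0]),       # contrast (3)
}


def ancova_contrast(pre, post, group, weights):
    """Return (F, (df_effect, df_error)) for one planned contrast.

    Model: post = group-specific intercept + common slope * pre + error.
    """
    pre = np.asarray(pre, dtype=float)
    post = np.asarray(post, dtype=float)
    group = np.asarray(group)
    # Cell-means coding: one 0/1 column per group, plus the covariate column.
    X = np.column_stack([(group == g).astype(float) for g in GROUPS] + [pre])
    beta, *_ = np.linalg.lstsq(X, post, rcond=None)
    resid = post - X @ beta
    n, k = X.shape
    df_error = n - k  # N minus 4 group intercepts minus 1 common slope
    mse = (resid @ resid) / df_error
    c = np.append(weights, 0.0)  # the contrast does not involve the slope
    estimate = c @ beta
    variance = mse * (c @ np.linalg.inv(X.T @ X) @ c)
    return float(estimate**2 / variance), (1, df_error)
```

The error degrees of freedom come out as N − 5, which is the pattern of the F(1, ·) values reported below. A p-value could then be obtained from the F distribution, e.g., with `scipy.stats.f.sf(F, 1, df_error)`.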

Visual Motion Direction Discrimination

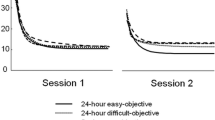

As the initial model did not reveal a significant group × coherence level interaction (p = .355), motion discrimination accuracy was averaged across the five coherence levels (1.6%, 3.2%, 6.4%, 12.8%, and 25.6%) and used as the dependent variable in the analysis. Ten participants (1 passive control, 1 Hearthstone, 6 SC Fixed, 2 SC Variable) were excluded from the analyses due to chance-level responses that did not vary with coherence level. Contrast (1) revealed no significant difference in post-training performance between the passive control and Hearthstone groups (p = .0535). Contrast (2) revealed a significant difference between the SC and control groups, F(1, 71) = 5.71, p = .010, ηp² = .074: SC trainees showed significantly higher post-training accuracy than the combined control groups (Fig. 1). Contrast (3) revealed a significant difference in accuracy between SC Fixed and SC Variable trainees, F(1, 71) = 3.65, p = .030, ηp² = .049: participants trained in the SC Variable condition had significantly higher post-training accuracy than those in the SC Fixed condition. The same contrasts performed on reaction time data revealed no significant differences in post-training reaction time (ps = .681, .520, and .175, respectively).
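The partial eta squared (ηp²) values reported in this section follow from each F statistic and its degrees of freedom through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short check below, included only as a reader's aid, reproduces the two values reported for Visual Motion Direction Discrimination; the function name is our own.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F statistic: F*df1 / (F*df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error, reported value) for the two significant VMDD contrasts.
REPORTED = [
    (5.71, 1, 71, 0.074),  # contrast (2): SC vs. combined controls
    (3.65, 1, 71, 0.049),  # contrast (3): SC Fixed vs. SC Variable
]

for f, df1, df2, reported in REPORTED:
    eta = partial_eta_squared(f, df1, df2)
    print(f"F({df1}, {df2}) = {f}: eta_p^2 = {eta:.3f} (reported {reported})")
```

The same identity applies to every F(1, df) effect size in this section, so it offers a quick consistency check when reading the reported statistics.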

Fig. 1. Box and scatter plots of the combined control (passive + active) and StarCraft II (Fixed + Variable) groups, depicting change in Visual Motion Direction Discrimination and Attentional Window performance after the study period. Note: VMDD data represent the average of all coherence levels, while Attentional Window data represent vertical trials only.

Attentional Window

The dependent variables in this analysis were average eccentricity on horizontal and on vertical trials. No participants were excluded. Analysis of horizontal eccentricity did not reveal significant effects for any of the three tested contrasts (ps > .220). For vertical eccentricity, contrast (1) revealed no significant difference in post-training performance between the passive control and Hearthstone groups (p = .485). Contrast (2) revealed a significant difference between the SC and control groups, F(1, 82) = 3.53, p = .032, ηp² = .041: SC trainees were significantly more accurate post-training (Fig. 1). Contrast (3) also revealed a significant difference between the SC Fixed and SC Variable groups, F(1, 82) = 4.98, p = .014, ηp² = .057: SC Variable trainees were significantly more accurate post-training than SC Fixed trainees.

Stop Signal

Stop signal delay and go-trial reaction time were the dependent variables in this analysis. Five participants (2 Hearthstone, 1 SC Fixed, 2 SC Variable) were excluded from the analyses due to low go-trial accuracy (< 75%) and/or failure to monitor for stop trials (< 30% accuracy on stop trials).

Stop Signal Delay

Contrast (1) revealed no significant difference between the passive control and Hearthstone groups (p = .341). Contrast (2) indicated a significant difference between the combined SC training and control groups, F(1, 78) = 4.71, p = .0165, ηp² = .057; however, the effect was in the direction opposite to that predicted: the combined control groups had significantly higher post-training stop signal delay values than the combined SC groups (Fig. 2). Contrast (3) indicated no difference between the SC Fixed and SC Variable groups (p = .378).

Go Trial RT

Contrast (1) revealed no significant difference between the passive control and Hearthstone groups (p = .311). Contrast (2) indicated a significant difference between the combined SC training and control groups, F(1, 78) = 6.17, p = .015, ηp² = .073: SC trainees had significantly faster post-training reaction times than control participants. Contrast (3) indicated no difference in performance between the SC Fixed and SC Variable groups (p = .103).

Flanker Switching

Switch cost was the dependent variable in this analysis. Six participants (1 passive control, 1 Hearthstone, 4 SC Fixed) were excluded from the analyses because their average task accuracy (judging arrow direction) dropped below 75%. Contrasts (1) and (3) were non-significant (ps > .459). Contrast (2) did not reveal a significant difference in post-training switch costs between the SC and control groups (Fig. 2), although a trend was evident, F(1, 76) = 2.67, p = .053, ηp² = .034.
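A switch cost is conventionally the difference between mean performance on task-switch trials and on task-repeat trials. The sketch below assumes an RT-based cost computed on correct trials only and applies the 75% accuracy exclusion criterion stated above; the study's exact scoring is not specified here, and the trial structure (`Trial`, `rt_ms`, `is_switch`) is hypothetical.

```python
from statistics import mean
from typing import NamedTuple, Optional, Sequence

MIN_ACCURACY = 0.75  # exclusion threshold on overall task accuracy


class Trial(NamedTuple):
    rt_ms: float      # response time in milliseconds
    correct: bool     # arrow direction judged correctly
    is_switch: bool   # assumed coding: task rule changed since the previous trial


def switch_cost(trials: Sequence[Trial]) -> Optional[float]:
    """Mean correct-trial RT on switch trials minus mean RT on repeat trials.

    Returns None when the participant falls below the accuracy criterion.
    """
    accuracy = mean(1.0 if t.correct else 0.0 for t in trials)
    if accuracy < MIN_ACCURACY:
        return None  # participant excluded from this task
    switch_rts = [t.rt_ms for t in trials if t.correct and t.is_switch]
    repeat_rts = [t.rt_ms for t in trials if t.correct and not t.is_switch]
    return mean(switch_rts) - mean(repeat_rts)
```

A positive value means slower responses after a rule change; a smaller post-training switch cost would indicate more efficient task switching.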

Memory Updating

As the initial model did not reveal a significant group × load level interaction (p = .743), response accuracy was averaged across loads (0–5) and used as the dependent variable in this analysis. No participants were excluded. Contrast (1) revealed no significant difference between the passive control and Hearthstone groups (p = .335). Contrast (2) also revealed no significant difference between the SC and control groups (p = .139). Contrast (3) revealed a significant difference between the SC Fixed and SC Variable groups, F(1, 83) = 2.85, p = .0475, ηp² = .033: SC Variable trainees had significantly higher post-training accuracy than SC Fixed trainees (Fig. 2).

Fig. 2. Box and scatter plots of change in performance on the Stop Signal, Flanker Switching, and Memory Updating tasks after the training period. Stop Signal and Flanker Switching data compare the combined control (passive + active) and StarCraft II (Fixed + Variable) groups, while Memory Updating data compare the SC Fixed and SC Variable groups. Note: Memory Updating data represent the average of all load levels.

StarCraft II Replay Data Analysis

Each match played by our participants was recorded as a replay by the SC2 game engine, which gave us access to in-game behavioral data over time. The Python utility “sc2reader” (https://github.com/ggtracker/sc2reader) and custom scripts were used to extract the replay data. Replay indicators were constructed based on the descriptions provided by Thompson et al. (2013), and replays showing no player activity were removed to ensure that all analyzed replays represented actual gameplay (two such replays were removed). The following set of indicators was extracted:

(1) Total matches: total number of SC2 matches played during the training.
(2) Match length: the exact duration of each match, based on timestamps within each replay.
(3) AI difficulty: the difficulty setting of the AI across matches (1–10).
(4) Perception Action Cycles (PACs) per minute: the number of PACs per minute, where a PAC consists of one shift of the in-game map focus (the player's screen, or "viewport") together with all the actions (selecting, building, producing, giving move/attack orders to units, etc.) performed before the next shift. PACs resemble saccades, as players can only see a fraction of the game map at one time and therefore must move their viewport around the map to perform actions (see Thompson et al., 2013 for details on PAC extraction).
(5) PAC latency: the time (in seconds) between the start of a PAC and the first action issued within it.
(6) PAC gap: the time (in seconds) between consecutive PACs.
(7) Actions per minute (APM): the number of actions (selecting, building, producing, etc.) performed per minute.
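To make the PAC-based indicators concrete, the sketch below computes APM, PACs per minute, PAC latency, and PAC gap from a simplified, time-ordered stream of viewport moves and player actions. It is a simplified reading of the definitions above, not the study's extraction scripts: the `Event` representation is hypothetical (in practice such events would first be pulled from replay files, e.g., with sc2reader, which is not shown), PAC gap is assumed to run from the last action of one PAC to the start of the next, and any additional thresholds used by Thompson et al. (2013) are ignored.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Literal, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Event:
    time_s: float                      # seconds since match start
    kind: Literal["camera", "action"]  # viewport move or player command


@dataclass(frozen=True)
class ReplayIndicators:
    apm: float
    pacs_per_min: float
    pac_latency_s: float
    pac_gap_s: float


def replay_indicators(events: Sequence[Event], match_length_s: float) -> ReplayIndicators:
    minutes = match_length_s / 60.0
    ordered = sorted(events, key=lambda e: e.time_s)
    n_actions = sum(1 for e in ordered if e.kind == "action")

    # Each camera move opens a new fixation; a fixation with >= 1 action is a PAC.
    pacs: List[Tuple[float, List[float]]] = []  # (fixation start, action times)
    start: Optional[float] = None
    actions: List[float] = []
    for e in ordered:
        if e.kind == "camera":
            if start is not None and actions:
                pacs.append((start, actions))
            start, actions = e.time_s, []
        elif start is not None:  # actions before the first camera move join no PAC
            actions.append(e.time_s)
    if start is not None and actions:
        pacs.append((start, actions))

    latencies = [acts[0] - t0 for t0, acts in pacs]
    # Assumed gap definition: last action of one PAC to the start of the next PAC.
    gaps = [nxt[0] - prev[1][-1] for prev, nxt in zip(pacs, pacs[1:])]
    nan = float("nan")
    return ReplayIndicators(
        apm=n_actions / minutes,
        pacs_per_min=len(pacs) / minutes,
        pac_latency_s=mean(latencies) if latencies else nan,
        pac_gap_s=mean(gaps) if gaps else nan,
    )
```

In this representation a viewport move with no following action before the next move does not count as a PAC, which mirrors the idea that a PAC pairs a shift of attention with at least one action.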

We compared our SC2 groups on these indicators using a multivariate ANOVA to see how our training manipulation affected in-game behavior (see Table 2 for test values). The effect of group was significant, F(9, 49) = 3.48, p = .002, ηp² = .390. Follow-up univariate tests (Bonferroni corrected) revealed significant differences on four indicators: (1) participants in the SC Fixed group played significantly fewer matches than those in the SC Variable group, F(1, 57) = 7.35, p = .009, ηp² = .114; (2) consistent with this, SC Fixed participants also played significantly longer matches, F(1, 57) = 9.43, p = .003, ηp² = .142; (3) SC Fixed participants collected more resources on average, F(1, 57) = 14.63, p < .001, ηp² = .204; and (4) SC Fixed participants also used more resources on average, F(1, 57) = 10.83, p = .002, ηp² = .160.

Regression Analyses

We also conducted multiple linear regression analyses to examine the relationships between changes in our participants’ cognitive abilities and changes in their in-game performance. All values were taken as difference scores: post-test − pre-test for cognitive data, and fourth quarter − first quarter of recordings for in-game data. Cognitive data with multiple levels (VMDD and Memory Updating) were averaged into a single performance indicator. In-game indicators (PACs, PAC latency, PAC gap, APM) and a general indicator of change in performance (AI difficulty) were used as predictors in five linear regression models (stepwise method), one for each cognitive indicator. This analysis was conducted separately for each group. A significant regression model was obtained for Stop Signal data in the SC Variable group only, F(1, 26) = 8.96, p = .006, and it retained a single predictor, actions per minute (R² = .264). As the improvement in actions per minute increased, the improvement in inhibitory ability (as measured by change in stop signal delay) decreased (β = −.934).
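The in-game change scores used as predictors can be computed as the mean of an indicator over the last quarter of a participant's recordings minus its mean over the first quarter. The sketch below assumes that quarters are defined by the chronological order of matches and that each quarter contains n // 4 matches when the match count is not divisible by four; the study does not state how quarters were split, and the function name is illustrative.

```python
from statistics import mean
from typing import Sequence


def quarter_change(values: Sequence[float]) -> float:
    """Mean of the last quarter of matches minus mean of the first quarter.

    `values` holds one in-game indicator (e.g., APM) per match, in the order
    the matches were played. Assumption: each quarter holds n // 4 matches.
    """
    q = len(values) // 4
    if q == 0:
        raise ValueError("need at least four matches")
    return mean(values[-q:]) - mean(values[:q])
```

For example, a player whose APM rose steadily from 1 to 8 over eight matches would receive a change score of `quarter_change([1, 2, 3, 4, 5, 6, 7, 8])`, i.e., 7.5 − 1.5 = 6.0.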

We followed up this analysis with a simple moderation analysis (PROCESS Model 1; Hayes, 2017) to determine whether the relationship between change in APM (X; post − pre) and change in stop signal delay (Y; post − pre) is moderated by StarCraft training group (M; SC Fixed vs. Variable). The overall model for average player APM change was significant, F(3, 53) = 3.69, p = .017, R² = .173. Change in APM was a significant predictor of change in stop signal delay, b = 1.44, t(53) = 2.41, p = .019, while group was not (p = .052). The APM change × group interaction was significant, indicating a moderating effect of group, b = −1.26, t(53) = −2.67, p = .009. Simple slope analysis revealed a significant effect of X on Y for the SC Variable group, b = −1.08, t(53) = −2.56, p = .013, 95% CI [−1.90, −0.25], but no such effect for the SC Fixed group (p = .398) (Fig. 3).
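In a PROCESS Model 1 analysis the outcome is modeled as Y = b0 + b1·X + b2·M + b3·X·M, so the simple slope of X at a given value of M is b1 + b3·M. The sketch below plugs in the reported b1 = 1.44 and b3 = −1.26. With group coded 1 for SC Fixed and 2 for SC Variable, it reproduces the reported SC Variable slope of −1.08 and gives a small slope of 0.18 for SC Fixed, in line with the non-significant effect there. That coding is an inference from the reported numbers, not something the text states.

```python
def conditional_slope(b_x: float, b_xm: float, m: float) -> float:
    """Slope of Y on X at moderator value m in Y = b0 + b_x*X + b_m*M + b_xm*X*M."""
    return b_x + b_xm * m


B_APM = 1.44           # reported coefficient for change in APM
B_APM_X_GROUP = -1.26  # reported APM change x group interaction

# Assumed coding (inferred, not stated): 1 = SC Fixed, 2 = SC Variable.
for label, m in (("SC Fixed", 1), ("SC Variable", 2)):
    print(f"{label}: slope = {conditional_slope(B_APM, B_APM_X_GROUP, m):+.2f}")
```

Only the point estimates are reconstructed here; the standard errors and confidence interval of each simple slope also depend on the coefficient covariance matrix, which is not reported.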

Discussion

This training study, which aimed to investigate the efficacy of improving attentional, perceptual, and executive functioning through training with the real-time strategy video game StarCraft II, resulted in several novel and important findings. Alongside the demonstration of SC2’s efficacy in improving cognitive abilities, we were able to show the first direct relationship between improving at a specific facet of the game and improvement to a specific cognitive function. Further, we were able to show that this relationship, as well as the amount of change to perceptual and attentional abilities, was dependent on the experimental manipulation of the StarCraft II training paradigm. These results and their implications are discussed in turn below.

First of all, it is clear that training in StarCraft II for a period of 30 h is sufficient to improve some of the tested abilities over and above both a standard test-retest improvement and equivalent training in a card-based video game (Hearthstone). We observed these improvements on tasks of attention (Attentional Window) and perception (Visual Motion Direction Discrimination), but not of cognitive control (though a trend was visible for task switching), which is in line with previously reported training effects from more typical action video games (Powers et al., 2013; Powers & Brooks, 2014; Bediou et al., 2018; Wang et al., 2016).

Green et al. (2010) previously used the Visual Motion Direction Discrimination task in a training experiment based on two first-person shooter games (Unreal Tournament 2004 and Call of Duty 2) and an active control (The Sims 2), finding a significant post-training decrease in RT for the first-person shooter condition but no change in accuracy. While a direct comparison of that result to our own is confounded by the difference in genre, it is interesting to note that we found the opposite pattern for real-time strategy, i.e., no significant change in reaction time but a significant improvement in accuracy for our SC2 trainees relative to the control groups.

It is also worth noting that our Attentional Window effects were limited to the vertical axis, with greater average eccentricity for SC2 trainees compared with the combined control group, and for participants in the SC Variable condition compared with the SC Fixed condition. This effect may be tied to SC2’s user interface, which places HUD elements such as the building queue/options and unit/resource counts at the top and bottom of the game screen. Our SC2 novices may have needed frequent, brief glances (brief because of competing attentional demands) at these elements to maintain and update their picture of the game state, leading to the observed attentional enhancement on the vertical axis.

One task that did not show a significant improvement relative to controls, yet provided data worth investigating, was the Stop Signal task, which reflects inhibitory control. Previous studies have reported effects on inhibitory control to be among the weakest in both cross-sectional and training designs (Bediou et al., 2018). In our study, StarCraft trainees failed to improve at response inhibition, while the control groups showed significantly greater improvement. This result suggests that playing SC2 may have a negative effect on inhibitory control, as the learning effects shown by our control groups did not appear in StarCraft II trainees.

Such a conclusion is strongly supported by the finding of a significant negative relationship between change in stop signal performance and change in actions per minute within the game. As StarCraft II trainees increased their in-game actions per minute (a crucial component of successful performance in RTS video games), their ability to inhibit responses on the basis of visual stimuli decreased. Furthermore, our moderation analysis confirmed that this relationship was moderated by SC group: it held for the SC Variable group but not the Fixed group, suggesting that the change in training conditions resulting from our manipulation led to a need for response disinhibition specific to the Variable group. To the best of our knowledge, this is the first report of a direct correlation between a specific in-game behavior and change in cognitive performance. It should be added that the highest-level indicator of performance, i.e., change in AI difficulty, was not related to performance changes in any of the measured cognitive functions. This, together with the rather high specificity of the observed effects, suggests that simply improving at the game in terms of overall outcome is not necessarily related to improvement in any given cognitive ability. However, that is not to say that performance on individual tests of cognition is unrelated to video game performance, as recently demonstrated in a study of current competitive video game players (Large et al., 2019).

The significant differences observed between two conditions of the same game are another novel and important result of our study. SC Variable trainees improved their Visual Motion Direction Discrimination, Attentional Window, and Memory Updating performance significantly more than SC Fixed trainees. Further, the relationship between stop signal performance and actions per minute was moderated by group, appearing for SC Variable but not for SC Fixed trainees; more generally, the correlational relationships between in-game and cognitive data appeared only for the SC Variable group. This is consistent with the results of Glass et al.’s (2013) StarCraft study on cognitive flexibility, in which a flexibility-oriented training condition produced significant gains in flexibility compared with an active control group. However, they were not able to detect any significant differences between the two StarCraft sub-conditions themselves.

It is tempting to conclude that the differences found here result from our seemingly minor manipulation of opponent strategy and type between the two SC groups. However, in practice, this manipulation likely caused differences in the training experience that we were not able to capture with the basic data available here. Of the in-game data we were able to capture (see Table 2), our manipulation led the two SC groups to differ in their total number of matches, average match length, and resource collection and use. While these differences do confirm that the in-game experience of our SC groups was not identical, they generally did not relate to cognitive outcomes. Further, they can be explained by the fact that the SC Fixed group played exclusively against the economic AI strategy, which leads to longer games because the AI never attempts to end the game with early attacks. Even so, the effects described here suggest that improvement in cognitive performance after video game training is tied to the specific activities performed within the game.

It is also notable that the above-described results were obtained in reference to a combined control group of passive and active participants. Our active control training in Hearthstone produced an observable, though non-significant (p = .0535), advantage over passive control participants in the Visual Motion Direction Discrimination task. Although we did not predict any differences between these two groups, there is some evidence that non-action video games (e.g., logical and puzzle games) can also improve aspects of cognitive functioning, albeit to a smaller degree (Powers et al., 2013; Oei & Patterson, 2014). Furthermore, Hearthstone bears much more resemblance to action video games, and specifically to StarCraft II, than the non-action titles that have previously been used as active controls (e.g., The Sims). Namely, it is a competitive video game played via head-to-head matchups against other players, contains a system for variable adjustment of difficulty, is time pressured (albeit not heavily), and requires flexibility in planning and execution. The most obvious differences between Hearthstone and StarCraft II are that Hearthstone requires neither high actions per minute nor manual dexterity, which may hint at the importance of these elements for cognitive improvement. These features make Hearthstone a suitable active control task for our study and increase the validity of the obtained results.

Although the replay data allowed some insight into the relationship between in-game actions and cognitive performance, only the most basic conclusions can be drawn from them, because cognitive ability was measured at only two points (pre- and post-training). A linear rate of improvement between these two points is unlikely, as some cognitive functions may be more heavily engaged or more easily improved by training and would accordingly reach asymptotes at different times during the study. Future training studies that test cognitive abilities at several time points during training would allow these improvements and their dynamics to be properly assessed. Further, any interpretation of these results must be tempered by the low to moderate effect sizes we obtained and by the general difficulty of reproducing cognitive training effects.

In conclusion, our study contributes to the literature on video game training by: (1) demonstrating the efficacy of improving cognitive functioning through training in a real-time strategy video game, (2) showing that improvement in cognitive functioning can be related to specific in-game performance changes, and (3) experimentally inducing greater cognitive improvement in one of two groups by manipulating the gameplay experience. These results not only demonstrate that playing real-time strategy video games can lead to cognitive advantages similar to those found in previous studies but also support the growing notion (Bediou et al., 2018; Dale et al., 2020) that a more fine-grained approach is needed to properly study the rapidly evolving field of video games and how they affect cognition. More (and higher quality) experimental evidence is needed before any definitive conclusions can be drawn regarding the impact of real-time strategy games like StarCraft II on cognitive ability.

References

Bediou, B., Adams, D. M., Mayer, R. E., Tipton, E., Green, C. S., & Bavelier, D. (2018). Meta-analysis of action video game impact on perceptual, attentional, and cognitive skills. Psychological Bulletin, 144(1), 77–110.

Boot, W. R., Kramer, A. F., Simons, D. J., Fabiani, M., & Gratton, G. (2008). The effects of video game playing on attention, memory, and executive control. Acta Psychologica, 129(3), 387–398.

Boot, W. R., Blakely, D. P., & Simons, D. J. (2011). Do action video games improve perception and cognition? Frontiers in Psychology, 2, 226.

Britten, K. H., Shadlen, M. N., Newsome, W. T., & Movshon, J. A. (1992). The analysis of visual motion: A comparison of neuronal and psychophysical performance. The Journal of Neuroscience, 12(12), 4745–4765.

Burgoyne, A. P., Sala, G., Gobet, F., Macnamara, B. N., Campitelli, G., & Hambrick, D. Z. (2016). The relationship between cognitive ability and chess skill: A comprehensive meta-analysis. Intelligence, 59, 72–83.

Cain, M. S., Landau, A. N., & Shimamura, A. P. (2012). Action video game experience reduces the cost of switching tasks. Attention, Perception & Psychophysics, 74(4), 641–647.

Chuderski, A. (2013). When are fluid intelligence and working memory isomorphic and when are they not? Intelligence, 41(4), 244–262.

Conway, A. R. A., Kane, M. J., & Engle, R. W. (2003). Working memory capacity and its relation to general intelligence. Trends in Cognitive Sciences, 7(12), 547–552.

Dale, G., & Green, C. S. (2017). Associations between avid action and real-time strategy game play and cognitive performance: A pilot study. Journal of Cognitive Enhancement, 1(3), 295–317.

Dale, G., Joessel, A., Bavelier, D., & Green, C. S. (2020). A new look at the cognitive neuroscience of video game play. Annals of the New York Academy of Sciences.

Dobrowolski, P., Hanusz, K., Sobczyk, B., Skorko, M., & Wiatrow, A. (2015). Cognitive enhancement in video game players: The role of video game genre. Computers in Human Behavior, 44, 59–63.

Glass, B. D., Maddox, W. T., & Love, B. C. (2013). Real-time strategy game training: Emergence of a cognitive flexibility trait. PloS One, 8(8), e70350.

Gliga, F., & Flesner, P. I. (2014). Cognitive benefits of chess training in novice children. Procedia-Social and Behavioral Sciences, 116(21), 962–967.

Green, C. S., & Bavelier, D. (2015). Action video game training for cognitive enhancement. Current Opinion in Behavioral Sciences, 4, 103–108.

Green, C. S., Pouget, A., & Bavelier, D. (2010). Improved probabilistic inference as a general learning mechanism with action video games. Current Biology, 20(17), 1573–1579.

Hayes, A. F. (2017). Introduction to mediation, moderation, and conditional process analysis, second edition: A regression-based approach. Guilford Publications.

Hüttermann, S., Bock, O., & Memmert, D. (2012). The breadth of attention in old age. Ageing Research, 3(1), e10. https://doi.org/10.4081/ar.2012.e10

Jaeggi, S. M., Buschkuehl, M., Jonides, J., & Perrig, W. J. (2008). Improving fluid intelligence with training on working memory. Proceedings of the National Academy of Sciences of the United States of America, 105(19), 6829–6833.

Klaffehn, A. L., Schwarz, K. A., Kunde, W., & Pfister, R. (2018). Similar task-switching performance of real-time strategy and first-person shooter players: Implications for cognitive training. Journal of Cognitive Enhancement, 2(3), 240–258.

Large, A. M., Bediou, B., Cekic, S., Hart, Y., Bavelier, D., & Green, C. S. (2019). Cognitive and behavioral correlates of achievement in a complex multi-player video game. Media and Communication, 7(4), 198.

Lynn, R., & Yadav, P. (2015). Differences in cognitive ability, per capita income, infant mortality, fertility and latitude across the states of India. Intelligence, 49, 179–185.

Melby-Lervåg, M., Redick, T. S., & Hulme, C. (2016). Working memory training does not improve performance on measures of intelligence or other measures of “far transfer”: Evidence from a meta-analytic review. Perspectives on Psychological Science, 11(4), 512–534.

Oei, A. C., & Patterson, M. D. (2014). Playing a puzzle video game with changing requirements improves executive functions. Computers in Human Behavior, 37, 216–228.

Palmer, J., Huk, A. C., & Shadlen, M. N. (2005). The effect of stimulus strength on the speed and accuracy of a perceptual decision. Journal of Vision, 5(5), 376–404.

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., Kastman, E., & Lindeløv, J. K. (2019). PsychoPy2: Experiments in behavior made easy. Behavior Research Methods, 51(1), 195–203.

Powers, K. L., & Brooks, P. J. (2014). Evaluating the specificity of effects of video game training. Learning by Playing: Video Gaming in Education, 302.

Powers, K. L., Brooks, P. J., Aldrich, N. J., Palladino, M. A., & Alfieri, L. (2013). Effects of video-game play on information processing: A meta-analytic investigation. Psychonomic Bulletin & Review, 20(6), 1055–1079.

Raven, J. C. (1936). Mental tests used in genetic studies: The performance of related individuals on tests mainly educative and mainly reproductive. Unpublished Master’s Thesis, University of London.

Sala, G., & Gobet, F. (2017a). When the music’s over. Does music skill transfer to children’s and young adolescents’ cognitive and academic skills? A meta-analysis. Educational Research Review, 20, 55–67.

Sala, G., & Gobet, F. (2017b). Does chess instruction improve mathematical problem-solving ability? Two experimental studies with an active control group. Learning & Behavior, 45(4), 414–421.

Sala, G., & Gobet, F. (2019). Cognitive training does not enhance general cognition. Trends in Cognitive Sciences, 23(1), 9–20.

Sala, G., Burgoyne, A. P., Macnamara, B. N., Hambrick, D. Z., Campitelli, G., & Gobet, F. (2017). Checking the “Academic Selection” argument. Chess players outperform non-chess players in cognitive skills related to intelligence: A meta-analysis. Intelligence, 61, 130–139.

Sala, G., Tatlidil, K. S., & Gobet, F. (2018). Video game training does not enhance cognitive ability: A comprehensive meta-analytic investigation. Psychological Bulletin, 144(2), 111–139.

Salthouse, T. A., Babcock, R. L., & Shaw, R. J. (1991). Effects of adult age on structural and operational capacities in working memory. Psychology and Aging, 6(1), 118–127.

Schellenberg, E. G. (2011). Music lessons, emotional intelligence, and IQ. Music Perception: An Interdisciplinary Journal, 29(2), 185–194.

Shadlen, M. N., & Newsome, W. T. (2001). Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. Journal of Neurophysiology, 86(4), 1916–1936.

Simons, D. J., Boot, W. R., Charness, N., Gathercole, S. E., Chabris, C. F., Hambrick, D. Z., & Stine-Morrow, E. A. L. (2016). Do “brain-training” programs work? Psychological Science in the Public Interest, 17(3), 103–186.

Sobczyk, B., Dobrowolski, P., Skorko, M., Michalak, J., & Brzezicka, A. (2015). Issues and advances in research methods on video games and cognitive abilities. Frontiers in Psychology, 6, 1451.

Strenze, T. (2007). Intelligence and socioeconomic success: A meta-analytic review of longitudinal research. Intelligence, 35(5), 401–426.

Swaminathan, S., Schellenberg, E. G., & Khalil, S. (2017). Revisiting the association between music lessons and intelligence: Training effects or music aptitude? Intelligence, 62, 119–124.

Taatgen, N. A. (2016). Theoretical models of training and transfer effects. In T. Strobach & J. Karbach (Eds.), Cognitive training: An overview of features and applications (pp. 19–29). Springer International Publishing.

Thompson, J. J., Blair, M. R., Chen, L., & Henrey, A. J. (2013). Video game telemetry as a critical tool in the study of complex skill learning. PloS One, 8(9), e75129.

Unsworth, N., Heitz, R. P., Schrock, J. C., & Engle, R. W. (2005). An automated version of the operation span task. Behavior Research Methods, 37(3), 498–505.

Unsworth, N., Redick, T. S., McMillan, B. D., Hambrick, D. Z., Kane, M. J., & Engle, R. W. (2015). Is playing video games related to cognitive abilities? Psychological Science, 26(6), 759–774.

Verbruggen, F., Logan, G. D., & Stevens, M. A. (2008). STOP-IT: Windows executable software for the stop-signal paradigm. Behavior Research Methods, 40(2), 479–483.

Wang, P., Liu, H.-H., Zhu, X.-T., Meng, T., Li, H.-J., & Zuo, X.-N. (2016). Action video game training for healthy adults: A meta-analytic study. Frontiers in Psychology, 7, 907.

Watkins, M. W., Lei, P.-W., & Canivez, G. L. (2007). Psychometric intelligence and achievement: A cross-lagged panel analysis. Intelligence, 35(1), 59–68.

Funding

This research was supported by the Polish National Science Centre (grant number 2013/10/E/HS6/00186), as well as by stipends awarded to N. Kowalczyk by the Foundation for Polish Science and the Kosciuszko Foundation.

Ethics declarations

Ethical Approval

All study participants were informed of their rights as voluntary participants in accordance with the Declaration of Helsinki. The study plan was approved by the SWPS University of Social Sciences and Humanities Ethics Committee.

Informed Consent

Written informed consent was obtained from all study participants prior to participation in the study.

Conflict of Interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dobrowolski, P., Skorko, M., Myśliwiec, M. et al. Perceptual, Attentional, and Executive Functioning After Real-Time Strategy Video Game Training: Efficacy and Relation to In-Game Behavior. J Cogn Enhanc 5, 397–410 (2021). https://doi.org/10.1007/s41465-021-00211-w

DOI: https://doi.org/10.1007/s41465-021-00211-w