Abstract

The research potential in the field of mobile mapping technologies is often hindered by several constraints. These include the need for costly hardware to collect data, limited access to target sites with specific environmental conditions or the collection of ground truth data for a quantitative evaluation of the developed solutions. To address these challenges, the research community has often prepared open datasets suitable for developments and testing. However, the availability of datasets that encompass truly demanding mixed indoor–outdoor and subterranean conditions, acquired with diverse but synchronized sensors, is currently limited. To alleviate this issue, we propose the MIN3D dataset (MultI-seNsor 3D mapping with an unmanned ground vehicle for mining applications) which includes data gathered using a wheeled mobile robot in two distinct locations: (i) textureless dark corridors and outside parts of a university campus and (ii) tunnels of an underground WW2 site in Walim (Poland). MIN3D comprises around 150 GB of raw data, including images captured by multiple co-calibrated monocular, stereo and thermal cameras, two LiDAR sensors and three inertial measurement units. Reliable ground truth (GT) point clouds were collected using a survey-grade terrestrial laser scanner. By openly sharing this dataset, we aim to support the efforts of the scientific community in developing robust methods for navigation and mapping in challenging underground conditions. In the paper, we describe the collected data and provide an initial accuracy assessment of some visual- and LiDAR-based simultaneous localization and mapping (SLAM) algorithms for selected sequences. Encountered problems, open research questions and areas that could benefit from utilizing our dataset are discussed. Data are available at https://3dom.fbk.eu/benchmarks.

1 Introduction

1.1 Mobile Mapping

Mobile mapping (Chiang et al. 2021; Elhashash et al. 2022) is a widely used technique for various applications, such as documenting and inventorying scenes (Vallet and Mallet 2016; Di Stefano et al. 2021), integration of airborne surveying (Toschi et al. 2017), creating computer models for simulations or decisions (Tak et al. 2021; Feng et al. 2022), and guiding robots for navigation (Funk et al. 2021). The integration of localization and mapping into a single process, known as simultaneous localization and mapping (SLAM), is crucial for accurate spatial positioning and navigation. A variety of sensors and devices can be used for mapping, including 2D and 3D LiDAR scanners, cameras, and depth sensors. SLAM algorithms perform well in indoor environments, such as factories and warehouses, allowing for autonomous operation of robots. However, mapping in outdoor and uncontrolled scenarios presents challenges for SLAM algorithms, such as uneven terrain, limited range of 2D LiDAR sensors, dynamic objects and more sources of sensor noise, which can potentially degrade the quality of mapping and render some popular assumptions useless (e.g., presence of the flat ground). In such scenarios, 3D LiDAR scanners and camera-based systems (V-SLAM) are more effective. In open spaces, global navigation satellite systems (GNSS) can provide a reliable location, but in areas where satellite signals are not available, such as tunnels, caves and mines, a more sophisticated SLAM algorithm is needed.

Research and development of mobile mapping solutions for such environments can be traced back to the early works of Thrun et al. (2003), which showcased the use of laser scanners mounted on a robot to carry out a volumetric 3D survey of an underground mine. Subsequent advances in field robotics and the increasing availability of open-source solutions led to the development of a wide selection of robotic (Kanellakis and Nikolakopoulos 2016; Nüchter et al. 2017; Ren et al. 2019; Trybała 2021; Yang et al. 2022), handheld (Zlot and Bosse 2013; Trybała et al. 2023), and wearable (Masiero et al. 2018; Blaser et al. 2019) solutions of varying complexity for mapping subterranean spaces. However, the problem of performing robust SLAM in challenging environments still cannot be considered fully solved (Ebadi et al. 2022).

1.2 Open Datasets

To advance research in the field of SLAM, multiple open datasets have been collected and made publicly available by scientists from the robotics and geomatics communities (Geiger et al. 2012; Liu et al. 2021; Macario Barros et al. 2022; Helmberger et al. 2022). These datasets allow researchers to investigate various mapping approaches and easily test and evaluate in-house, commercial, or open-source software solutions without the need for access to expensive data acquisition platforms, particularly for robotic systems. The popularity of these datasets has led to the creation of benchmarks, where automated systems evaluate the accuracy of processing methods using standardized metrics and rank them among other submitted solutions.

This approach enables an objective comparison of different SLAM algorithms through use of common metrics, such as absolute and relative trajectory errors (ATE and RTE), to assess localization accuracy. However, there are various other strategies for evaluating the quality of 3D mapping, such as using different metrics for measuring the compliance of point clouds with ground truth (GT), and for aligning the resulting spatial data with reference data, such as using global or local registration methods.
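As an illustration of the trajectory metrics mentioned above, the following is a minimal sketch and not a reference implementation. It assumes that the estimated and reference trajectories are already time-associated and expressed in the same coordinate frame, and it considers positions only; the function names `ate_rmse` and `rte_rmse` are hypothetical. Full benchmark implementations typically align the trajectories first and compute relative errors on complete poses.

```python
import math


def ate_rmse(estimated, reference):
    """RMSE of position differences between two pre-aligned, time-associated trajectories."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [
        sum((e - r) ** 2 for e, r in zip(p_est, p_ref))
        for p_est, p_ref in zip(estimated, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))


def rte_rmse(estimated, reference, delta=1):
    """RMSE of the difference in displacement over `delta` steps (translation only).

    Simplification: displacements are compared in the common global frame,
    which is only meaningful when both trajectories are already aligned.
    """
    if len(estimated) != len(reference) or len(estimated) <= delta:
        raise ValueError("trajectories must be of equal length and longer than delta")
    squared = []
    for i in range(len(estimated) - delta):
        d_est = [b - a for a, b in zip(estimated[i], estimated[i + delta])]
        d_ref = [b - a for a, b in zip(reference[i], reference[i + delta])]
        squared.append(sum((x - y) ** 2 for x, y in zip(d_est, d_ref)))
    return math.sqrt(sum(squared) / len(squared))


# Toy example: the estimate drifts sideways by 0.1 m per step.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
estimated = [(0.0, 0.0, 0.0), (1.0, 0.1, 0.0), (2.0, 0.2, 0.0)]
ate = ate_rmse(estimated, reference)
rte = rte_rmse(estimated, reference, delta=1)
```

In this toy case the absolute error grows with the accumulated drift, whereas the relative error stays constant per step, which is why the two metrics are usually reported together.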

Among the key events that greatly accelerated progress in mobile mapping research were the competitions organized by the US-based Defense Advanced Research Projects Agency (DARPA), such as the DARPA Grand Challenges (starting from 2004) (Seetharaman et al. 2006) and the Subterranean Challenge (held in 2017–2021) (Chung et al. 2023). The former primarily focused on the needs of the automotive industry, such as localization, mapping, and perception in open, urban areas, and the latter on robot autonomy, perception, and SLAM in underground environments. Through dedicated funding, clear goals, and reliable evaluation methods, these events enabled teams from around the world to collaborate and develop innovative SLAM solutions. The by-products of these challenges are also open datasets and benchmarks, which were collected and formed during the field trials of the competitions. Although there are numerous publicly available datasets dedicated to evaluating SLAM algorithms, the diversity of real-world environments in which these algorithms are applied, as well as the various sensor configurations for which mapping solutions are developed, results in a constant need for acquiring more data to evaluate method performance under different conditions. This issue is becoming increasingly critical as learning-based methods gain popularity. Providing them with well-diversified training data with reliable reference data is crucial for their generalization, adaptability, and, in consequence, usability in real-world scenarios. Furthermore, the universality and uniqueness of a dataset are determined not only by the environment in which the data were collected, but also by the set of sensors used, which is often limited.
The use of multiple sensors to simultaneously acquire different types of data not only facilitates the development and testing of data fusion methods, but also provides the most objective way to compare methods based on different sensors, such as visual SLAM (V-SLAM) with LiDAR-based approaches. In recent years, the AMICOS and VOT3D EIT Raw Materials projects, among others, tackled the use of ground/wheeled/handheld robotic platforms, equipped with various imaging and LiDAR devices, to inspect underground mining scenarios and technical infrastructures. Multi-sensor robots (Trybała et al. 2022) or portable stereo-vision systems (Torresani et al. 2021) can be used to search for hot idlers in a conveyor belt, map underground spaces, or automatically search for humans or damaged components (Szrek et al. 2020; Menna et al. 2022; Dabek et al. 2022).

1.3 Paper Contribution

A common aspect of robotics platforms and mobile mapping solutions is the accuracy and robustness evaluation of localization and mapping methods in harsh conditions (Nocerino et al. 2017; Trybała et al. 2023). Despite the availability of various robotic datasets collected in different environments, most of the available datasets do not have redundant sensor suites or accurate and complete 3D ground truth.

To address the above-mentioned issues, we propose a novel set of data collected in (i) an indoor man-made environment (University buildings) and (ii) an underground facility in Walim (Poland) using a wheeled mobile robot (UGV) equipped with multiple low-cost sensors. The dataset comprises data from an exhaustive, redundant sensor system, including two sets of different stereo cameras, inertial measurement units (IMUs), and two independent LiDAR scanners: a spinning Velodyne VLP-16 with an actuator and a solid-state Livox Horizon. To facilitate the evaluation of mapping results by the users, we also provide reliable GT data in the form of a survey-grade point cloud acquired with a Riegl time-of-flight-based terrestrial laser scanner and the parameters of the external calibration of the sensors mounted on the robot. The collected data are processed and a preliminary accuracy assessment of the results obtained with selected SLAM methods, utilizing various sensors, is presented.

The structure of the article is as follows. First, related works and available datasets for testing SLAM methods are discussed. Then, the characteristics of the in-house mobile robot, the dataset structure, and the ground truth data acquisition methodology are presented. The eight collected and shared sequences are reported in Sect. 3, together with some results on the performance of selected state-of-the-art SLAM algorithms. Finally, directions for challenging research areas and an outlook on future developments in the context of utilizing the presented dataset close the paper.

2 Related Works

2.1 SLAM Datasets: Common Scenarios

In the general research area of mobile mapping, numerous open datasets have been published, often featuring a dedicated benchmark. The most prominent research groups involved in these studies focus on applications in the automotive industry, photogrammetry, surveying, and robotics. In the early days of 3D SLAM development for autonomous systems, the published datasets were dominated by car-based systems in urban areas and did not focus on benchmarking and metrological evaluation of mapping. Thus, they did not provide accurate reference data for mapping, but only the raw data from sensor systems usually consisting of camera(s), LiDAR scanners, and an inertial measurement unit (IMU) (Smith et al. 2009; Blanco-Claraco et al. 2014; Cordts et al. 2015).

The prime example is the Massachusetts Institute of Technology (MIT) DARPA Grand Challenge 2007 dataset (Huang et al. 2010). Despite the lack of full GT, it still marks an important moment of publicly releasing a huge amount of image and point cloud data, acquired with sensors relevant to the automotive industry applications, enabling a wide audience to work on robotic perception-related solutions. Similarly, two Korea Advanced Institute of Science and Technology (KAIST) datasets (Choi et al. 2018; Jeong et al. 2019) include only GNSS-derived trajectories as the reference data, but provide additional data, recorded at day and at night, and extend the sensor selection by a thermal camera.

Among the most influential SLAM datasets, constituting arguably the most popular benchmark, is the Karlsruhe Institute of Technology and Toyota Technological Institute (KITTI) dataset (Geiger et al. 2012, 2013). The data were collected with a sensor system mounted on a roof of a Volkswagen car in the urban area of Karlsruhe, Germany. Apart from providing image, LiDAR point clouds and IMU data, it includes GNSS-based trajectory and an automated benchmarking system for evaluating the performance of submitted solutions. Although it does not contain a reliable reference for mapping, subsequent developments added other challenges to the benchmark suite, such as image depth prediction, object detection, or semantic segmentation. A recent survey of SLAM algorithms (Liu et al. 2021) highlighted the problem of providing the reliable GT for mapping in open SLAM datasets: only 35 out of 97 investigated datasets include 3D reference data for mapping quality evaluation.

In the robotic and photogrammetric communities, three distinct classes of SLAM datasets can be distinguished, based on whether they were acquired with an unmanned aerial vehicle (UAV), unmanned ground vehicle (UGV), or a handheld system. An example of UAV-based research is the European Robotics Challenge (EuRoC) dataset (Burri et al. 2016), which features visual–inertial system data with GT data consisting of trajectories acquired with a motion capture (MC) system, total station (TS) tracking and a terrestrial laser scanner (TLS) point cloud for mapping evaluation. However, the dataset lacks LiDAR scanning data. For UGV systems, the Technical University of Munich (TUM) RGB-D (Sturm et al. 2012) and Mobile Autonomous Robotic Systems lab (MARS) Mapper (Chen et al. 2020) datasets should be mentioned. The former features only the data from depth cameras and only an MC GT trajectory (further extended by an IMU in a handheld system in the TUM VI dataset (Schubert et al. 2018)), while the latter contains data from multiple LiDAR devices, a stereo camera and an IMU, as well as a trajectory obtained with a tracking system and a TLS point cloud. In the scope of the ETH3D dataset (Schops et al. 2017), multiple image sequences obtained with a monocular camera and a stereo visual–inertial handheld system are shared. Most of them are recorded indoors, with GT provided by an MC system. For a few scenes mapped outside, GT is only reconstructed by a structure-from-motion (SfM) approach. Among the most recent developments, SLAM datasets have started to include novel sensors such as event cameras (Klenk et al. 2021) and to utilize simulation environments to address the need for data acquisition in different conditions, especially for learning-based methods (Wang et al. 2020b).
Nevertheless, the final evaluation of SLAM method performance should be assessed on real datasets, since multiple noise sources and possible failure factors present in the real world are extremely hard to reproduce in a simulated environment.

2.2 SLAM Datasets: Challenging Environments

All the above-mentioned datasets are, however, captured in relatively easy, feature-rich environments. For mobile mapping of underground sites, especially industrial facilities, conditions are much harder and include a multitude of potential noise sources, such as dust, variable humidity, uneven lighting, lack of distinct visual and geometrical features, vibrations, and uneven ground. These factors undermine common assumptions in SLAM algorithms, e.g., the presence of planar features in the surveyed data. Dynamic conditions of the working machinery and possible rockfalls further contribute to the unpredictability of the environment and to the dangers that need to be recognized by a mobile robot working in such a facility. Thus, SLAM datasets acquired in such harsh conditions were investigated further: the HILTI (Helmberger et al. 2022) and ConSLAM (Trzeciak et al. 2023) datasets from a construction site and the S3LI dataset (Giubilato et al. 2022) from Mount Etna in Italy, providing data of the featureless, bare rock surface of the volcanic landscape. The HILTI dataset comprises multiple releases from different editions, which vary in terms of the sensor suites used. The ConSLAM dataset was collected with a similar, prototypical sensor setup, but it provides data from periodically repeated measurements at the same construction site. Another challenging natural environment, a botanic garden, was investigated by Liu et al. (2023), who share a dataset collected with a wheeled mobile robot with a rich sensor selection and a reliable ground truth 3D point cloud. A different challenging case arises for mapping systems operating in areas with unreliable, partial GNSS signal coverage. An example of a dataset focusing on such conditions is BIMAGE (Blaser et al. 2021), which provides raw data from a mobile mapping system collected in urban canyons and forest areas, supplemented by ground control points surveyed with a total station.

The first underground SLAM dataset (Leung et al. 2017) was published in 2017 and featured data acquired in a Chilean underground mine using a Clearpath UGV equipped with a radar, a stereo camera, and a Riegl TLS. The TLS was used in two ways: as a reference sensor, performing static scans when the robot was not moving, and, similarly to an industrial-grade 3D LiDAR system, continuously scanning during the robot’s movement. However, utilizing such an expensive instrument is not common, since it greatly increases the cost of the measurement system, is not suitable for flying units due to its weight, and has a low scan frequency (6 s for one full scan).

The most suitable datasets for evaluating robotic SLAM solutions for mining-related applications were acquired during the DARPA Subterranean (SubT) Challenge. Many teams shared data they collected during at least one of the events, which included a tunnel circuit, a power plant site and a cave system, all of which are relevant to the subject of our study. Datasets were published by the DARPA Army Research Lab (Rogers et al. 2020) and teams: Cerberus (Tranzatto et al. 2022), CoSTAR (Koval et al. 2022; Reinke et al. 2022), MARBLE (Kasper et al. 2019; Kramer et al. 2022), CTU-Cras-Norlab (Petracek et al. 2021; Krátký et al. 2021), Explorer (Wang et al. 2020a). They include UGV- and UAV-based data from stereo cameras, IMUs, and industrial-grade LiDAR scanners, seldom supplemented by thermal cameras and radars. The TLS-based GT was provided by DARPA. Although useful, those datasets usually feature expensive platforms (e.g., Boston Dynamics Spot) and do not have redundant sensors (multiple stereo cameras, LiDAR scanners, IMUs), making them solution dependent. A summary of the above-mentioned relevant open SLAM datasets is presented in Table 1.

To move beyond a simple comparison between different SLAM algorithms working on the data from the same sensor, we decided to extend this approach so that results from SLAM running on different sensors, acquired during the same sequence, can be compared (e.g., solid-state and spinning LiDAR sensors, stereo and RGB-D cameras). Moreover, by utilizing low-cost solutions popular in the robotic community and sharing survey-grade, TLS-based ground truth data, we allow researchers to evaluate SLAM software and hardware solutions that they can then use to create affordable systems for popularizing mobile mapping methods in underground applications.

3 The MIN3D Dataset

The data were collected in interior and exterior areas of the Wrocław University of Science and Technology (Fig. 1) and within some tunnels of the underground facility “Rzeczka”, which is a part of the “Riese” complex (Fig. 2), constructed during World War II (Stach et al. 2014). Both sites feature varying surfaces and environments, which pose a challenge for SLAM algorithms as they must adapt to varying structural conditions, illumination changes, and an inconsistent presence of distinct visual features.

3.1 Employed Robotic System and Sensor Configuration

The data were collected using a mobile robot equipped with a multi-sensory measuring column (Fig. 3). The robot was equipped with various sensors, as well as a data recording computer, power batteries, and lighting, which featured an adjustable intensity to adapt to the environmental conditions and enable the recording of image data in low-light environments. The list of utilized devices includes:

- A Velodyne LiDAR scanner, mounted at the top of the measuring column through an additional rotation module, which increases the resolution of the acquired data by rotating the sensor around the horizontal axis.
- An Intel RealSense D455 depth camera, placed below the Velodyne.
- Monocular RGB and IR cameras installed in the middle pair and featuring a similar optical system and field of view.
- A synchronized Basler stereo-rig.
- A Livox LiDAR scanner located at the bottom of the column.
- A NGIMU inertial measurement unit mounted at the robot base.

Fig. 3 The mobile robot with its sensors placed along the vertical column bar with the example data frames (adapted from: Trybała et al. 2022)

The data are supplemented by two IMU sensors integrated with an Intel RealSense camera and the Livox LiDAR system as well as an independent NGIMU. The robot was controlled manually from a remote operator panel. Control signals were transmitted in the 2.4 GHz frequency band, while data acquisition control telemetry was obtained from a tablet connected to a computer placed on the robot via a Wi-Fi network. The block diagram of the connected sensors is shown in Fig. 4, while the remote visualization and control panel are shown in Fig. 5. A methodology for system calibration, i.e., estimation of the relative orientation between sensors, was presented in Trybała et al. (2022).

Fig. 4 Block diagram of a robotic multi-sensory measurement system (Trybała et al. 2022)

Fig. 5 Remote visualization and control panel (Trybała et al. 2022)

3.2 Data Acquisition

A total of eight sequences were acquired in two different settings:

- Three sequences inside and around the research building of the Faculty of Geoengineering, Mining and Geology at Wrocław University of Science and Technology (Poland).
- Five sequences in the underground site “Rzeczka”, part of the “Riese” underground complex (Walim, Poland).

The conditions of the university dataset resemble an industrial environment, with mostly monochromatic colors, scarce visual features, long and uniform corridors, and the presence of reflective surfaces. During the acquisitions in the subterranean environment, the robot was equipped with its own lighting system due to the absence of illumination in a large part of the mine. These real underground conditions illustrate the challenges often encountered in an industrial mine, where irregular tunnels carved or blasted in rock are mixed with reinforced, more structured areas.

To further increase the level of difficulty for SLAM algorithms, the acquisitions featured illumination changes (robot driving from indoor to outdoor or through a room with the light turned off) and frequent revisiting of the same area, often from different perspectives. The specific aims of each acquisition, together with the resulting data size, are summarized in Table 2. Approximate robot trajectories for each sequence, drawn on a 2D projection of the ground truth point cloud cross sections, are shown in Figs. 6–12.

Due to some problems with the reliability of the RealSense internal IMU, an additional NGIMU sensor was added to the measurement system. However, we still provide the incomplete data from the RealSense device, since it may allow some interesting analyses and developments in terms of multi-IMU systems, as discussed further in Sect. 4. Similarly, probably due to the challenging environmental conditions, the Basler stereo camera rig was not able to maintain the desired frame rate during the tunnel tests. Direct processing of these data will yield worse results than for images from the other cameras, but might facilitate the development and evaluation of, e.g., AI-based image denoising and frame rate interpolation methods for making mobile robotic applications more robust in challenging environments.

3.3 Dataset Structure

The data were recorded using an Intel NUC machine and, during the measurements, saved in the .rosbag file format using ROS (Robot Operating System) Melodic (Quigley et al. 2009) and common driver packages. In the post-processing operations, the data were unpacked and converted to open formats suitable for further analyses, as shown in Table 3.

The files are named <ROS timestamp>.<extension>, since all data were time-stamped in a centralized manner, according to the ROS master node clock. However, the time stamps of some of the data were already pre-synchronized by the respective device drivers. The time stamps of the RealSense RGB images, IR images, depth maps, and IMU readings are synchronized to each other, as are those of the Basler stereo pairs and those of the Livox point clouds and its internal IMU. The general structure of the dataset is explained in Fig. 13.
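Because every file name carries its time stamp, data from different sensors can be associated without any additional index. The sketch below illustrates one possible way to do this; it is not part of the dataset tooling. The exact textual form of the time stamp is not specified here, so two forms are assumed (integer nanoseconds, or decimal seconds), and the helper names `stamp_to_ns` and `match_nearest` as well as the example file names are hypothetical.

```python
from bisect import bisect_left


def stamp_to_ns(filename):
    """Return the time stamp encoded in '<ROS timestamp>.<extension>' as integer nanoseconds.

    Assumption: the stamp is either an integer number of nanoseconds
    or decimal seconds such as '1650000000.5'.
    """
    stem = filename.rsplit(".", 1)[0]  # drop the extension only
    if "." in stem:
        secs, frac = stem.split(".", 1)
        return int(secs) * 1_000_000_000 + int(frac.ljust(9, "0")[:9])
    return int(stem)


def match_nearest(query_ns, target_ns, max_gap_ns):
    """Pair each query stamp with the nearest target stamp, if within max_gap_ns."""
    targets = sorted(target_ns)
    pairs = []
    for q in query_ns:
        i = bisect_left(targets, q)
        candidates = targets[max(i - 1, 0):i + 1]  # neighbours on both sides
        if not candidates:
            continue
        best = min(candidates, key=lambda t: abs(t - q))
        if abs(best - q) <= max_gap_ns:
            pairs.append((q, best))
    return pairs


# Hypothetical file names, used only to exercise the parser.
image_stamp = stamp_to_ns("1650000000.5.png")
cloud_stamp = stamp_to_ns("1650000000123456789.pcd")
pairs = match_nearest([100, 250], [90, 120, 300], max_gap_ns=20)
```

The `max_gap_ns` threshold discards pairs that are too far apart in time, which matters for sensors that dropped frames during acquisition.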

3.4 Ground Truth and Evaluation Methodology

Reference data were acquired using a RIEGL VZ-400i terrestrial laser scanner (Fig. 14). The scanner features a laser pulse repetition rate of 100–1200 kHz, a maximum effective measurement rate of 500,000 points/s, and a measurement range of 0.5 m to 800 m. The field of view is 100° vertically and up to 360° horizontally. The manufacturer’s stated accuracy of the resulting single-point 3D position is 5 mm and the declared precision is 3 mm (Riegl datasheet 2019).

For both test sites, the distance between consecutive scan positions was about 5–15 m. The scanning parameters used were a laser pulse repetition rate of 1200 kHz, a scanning resolution of 0.05°, and a point cloud resolution characterized by point-to-point distance of 17.5 mm at a distance of 20 m.
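The stated point spacing follows directly from the angular resolution and the range, using the small-angle arc length (spacing ≈ range × angular step in radians). The short check below is only a worked example of that relation; the function name `point_spacing_mm` is hypothetical.

```python
import math


def point_spacing_mm(angular_step_deg, range_m):
    """Arc length between neighbouring beams at a given range, in millimetres."""
    return math.radians(angular_step_deg) * range_m * 1000.0


spacing = point_spacing_mm(0.05, 20.0)
print(f"{spacing:.2f} mm")  # prints "17.45 mm"
```

The result of about 17.45 mm agrees with the 17.5 mm point-to-point distance quoted for a range of 20 m.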

The acquired TLS data were processed in the dedicated RiSCAN PRO software (RIEGL Laser Measurement Systems GmbH 2019), which was used for point cloud filtering and scan registration. The preliminary scan registration was performed using an automatic registration method based on voxel extraction and fitting. To improve the registration, the scans were then aligned using the multi station adjustment (MSA) procedure. The position and orientation of each scan position were adjusted in a bundle adjustment (BA), which included several iterations to minimize the position error between overlapping planes and determine the best fit.

The alignment process resulted in an error (i.e., scanner position standard deviation after BA) of ca. 2 mm for both test sites. A check of the alignment of the overlapping first and last positions, which create a loop, showed a spatial match within 5 mm. This quality control was omitted only for the GT point cloud of the first university sequence, which does not include a loop. The resulting point clouds of the building test site and the underground facility are shown in Figs. 15 and 16, respectively.

To facilitate the proper matching of measurement data with GT, reference points in the form of white spheres with a diameter of 100 mm were placed in the area of interest of both test sites (Fig. 17). The reference targets were selected to be properly visible by all the optical sensors mounted on the robot.

4 Processing and Analyses

The MIN3D dataset could support evaluations of 3D mapping methods, including SLAM. As multiple approaches to assess the quality of the mapping results exist, we do not provide a dedicated benchmarking tool and leave the decision of selecting an appropriate workflow to the readers. Pipelines developed for ETH3D (Schops et al. 2017), 3D Tanks and Temples (Knapitsch et al. 2017), as well as a more sophisticated analysis presented by Toschi et al. (2015) could be mentioned as examples of sound methodologies for carrying out quality evaluation of the 3D reconstruction for the results achieved from processing MIN3D data.

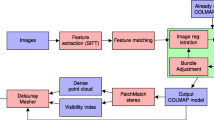

An overview of current state-of-the-art strategies of tackling common problems, based on the research and experiences from the DARPA Subterranean Challenge, in applying SLAM in underground environments can be found in Ebadi et al. (2022). Moreover, we also envision a MIN3D contribution toward the development of specific algorithms for challenging mining environments (Fig. 18), which includes, e.g., dedicated methods for dealing with various structurization levels of geometry, loop closure detection in mostly featureless conditions, or point cloud filtering and optimization approaches.

4.1 Underground Mobile Mapping Accuracy

During the preliminary evaluation of SLAM on our dataset, comparisons of the point clouds obtained with example SLAM algorithms were carried out on representative sequences of the dataset. One sequence has been selected from the indoor part of the dataset (University 2) and two sequences were chosen from the underground tunnels (Underground 1 and 3). Based on the state-of-the-art research, we chose one V-SLAM algorithm, one LiDAR-inertial algorithm, and one pure-LiDAR method. We processed:

- RealSense RGB-D data with ORB-SLAM3 (Campos et al. 2021).
- Livox LiDAR scanner and IMU data with FAST-LIO SLAM (Kim et al. 2021; Xu et al. 2022).
- Actuated Velodyne LiDAR scanner data with SC-A-LOAM (Kim et al. 2022).

As representative statistics summarizing the mapping performance of each of those methods, we have chosen the mean, standard deviation, and (−3σ, 3σ) range of the cloud-to-cloud distance distributions, calculated as signed distances using the M3C2 plugin of the CloudCompare software (Lague et al. 2013).

Point clouds based on the simultaneously acquired data from three different sensors were obtained using the previously mentioned state-of-the-art mobile mapping methods. They were then registered to the GT model using iterative closest point (ICP; Besl and McKay 1992) with an initial manual alignment. Mapping errors were estimated as the distances between the point clouds and the local GT model and signed according to their estimated normals. The error distributions were analyzed together with the qualitative analysis of the results (long- and short-term drifts, topology correctness). Summary statistics of the quantitative analysis of the mapping error distributions are presented in Table 4.
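To make the error definition concrete, the following sketch computes signed distances of mapped points to a reference cloud and the summary statistics listed above. It is a deliberately simplified stand-in, not the M3C2 algorithm used in the paper: each point is compared with its nearest GT point by brute force and the offset is projected onto that point's normal. The clouds are assumed to be already registered, the GT normals are assumed to be given, and the function names are hypothetical. The (−3σ, 3σ) range is interpreted here as an interval around zero; centring it on the mean is an equally plausible reading.

```python
import math
import statistics


def signed_distances(points, gt_points, gt_normals):
    """Signed point-to-plane distance of each point to its nearest GT point.

    Brute-force nearest neighbour search, suitable only for small examples.
    The sign follows the direction of the GT normal.
    """
    distances = []
    for p in points:
        j = min(range(len(gt_points)), key=lambda k: math.dist(p, gt_points[k]))
        offset = [a - b for a, b in zip(p, gt_points[j])]
        distances.append(sum(o * n for o, n in zip(offset, gt_normals[j])))
    return distances


def summarize(distances):
    """Mean, standard deviation and the (-3 sigma, 3 sigma) range of the distances."""
    std = statistics.pstdev(distances)
    return {
        "mean": statistics.fmean(distances),
        "std": std,
        "range_3sigma": (-3.0 * std, 3.0 * std),
    }


# Toy example: a flat GT patch with upward normals and two noisy mapped points.
gt_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
gt_normals = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]
mapped = [(0.0, 0.0, 0.1), (1.0, 0.0, -0.1)]
stats = summarize(signed_distances(mapped, gt_points, gt_normals))
```

For real point clouds a spatial index (e.g., a k-d tree) would be needed instead of the brute-force search, and M3C2 additionally averages points within a projection cylinder, which makes it less sensitive to noise and roughness than this nearest-point variant.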

The obtained results show that the tested methods were appropriate for 3D mapping of the examined areas. Using a common color-coding scheme (Fig. 19), we compare the results of the accuracy evaluation of the three tested SLAM approaches (Figs. 20, 21, 22). Qualitatively, the resulting point clouds present the “correct” topology compared to the GT data. However, in-depth analysis often revealed inconsistencies such as a lack of proper loop closures, high short-term rotational drifts, long-term rotational drift around the robot’s roll axis, and increased noise near reflective surfaces. These resulted in point cloud errors such as “ghosting”, i.e., misaligned point clouds of areas measured multiple times, or dimension deformation, i.e., shortening of the tunnel length. The above-mentioned issues occurred in both the indoor and underground datasets, and example visualizations are presented in Fig. 23. It is worth mentioning that no algorithm was able to properly recover, using loop closure detection, from the simulated kidnapped robot problem between sequences Underground 3 and 4. Thus, Underground 3 was analyzed only as a standalone sequence.

4.2 Multi-sensor Signal Analysis

Apart from the core issue of improving robustness and reliability of various mobile mapping and localization approaches, we encourage using the proposed MIN3D dataset also for other purposes, such as image enhancement. Conditions in which the data were acquired can challenge state-of-the-art image processing methods. Such methods include, but are not limited to, image deblurring and upscaling, frame interpolation, depth estimation from monocular camera (or improving the quality of depth obtained with the stereo images), and application of different 3D geometry reconstruction methods. Evaluations of deep learning-based techniques are foreseen, since scarcity of the training data from unique, underground conditions may seriously hinder their performance on the MIN3D dataset.

Furthermore, the simultaneous acquisition of data from various sensors allows the exploration of novel data fusion methods, including methods that improve data quality by using multiple devices. As an example, we show a proof of concept that uses two IMUs, in the context of possible developments in positioning methods based on multiple inertial devices. We compared signals from the IMUs installed in Livox and RealSense, focusing on acceleration data. Figure 24 presents raw data from the sensors expressed in g units.

First, the moving average of the absolute value of the signals was calculated for every channel with a window of 1 s (see Fig. 25). This way, the average variability of vibration strength can be visualized and compared per axis. The main visible difference between the devices is slightly stronger vibration in the horizontal plane for RealSense compared to Livox. This can be explained by the fact that RealSense was mounted higher on the sensor column, and angular movements of the entire column, with respect to the pivot point at the bottom of the column, translate to stronger readings of the RealSense IMU.
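The first step can be sketched as a sliding mean of the rectified signal. This is an illustrative sketch only: the sampling rate, the sample values and the function name `moving_mean_abs` are assumptions, and only fully covered windows are returned.

```python
def moving_mean_abs(signal, fs_hz, window_s=1.0):
    """Mean of |x| over a sliding window of window_s seconds.

    Only windows that lie fully inside the signal are returned.
    """
    n = max(1, int(round(fs_hz * window_s)))  # window length in samples
    abs_sig = [abs(x) for x in signal]
    if len(abs_sig) < n:
        return []
    # A running sum keeps the cost linear in the signal length.
    total = sum(abs_sig[:n])
    out = [total / n]
    for i in range(n, len(abs_sig)):
        total += abs_sig[i] - abs_sig[i - n]
        out.append(total / n)
    return out

fs_hz = 4  # hypothetical sampling rate, kept small for readability
# Hypothetical one-axis acceleration in g: weak, then stronger vibration.
accel_x = [0.1, -0.1, 0.1, -0.1, 0.3, -0.3, 0.3, -0.3]
envelope = moving_mean_abs(accel_x, fs_hz)
```

In this toy signal the envelope rises from about 0.1 g to about 0.3 g as the window slides from the weak to the strong segment, which is the kind of per-axis trend the comparison relies on.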

In the second step, a moving variance was calculated for all channels, also with a window length of 1 s (see Fig. 26). Comparison of vibrations in the XY directions shows a visible proportional relation between the vibration energies of the two devices. This could be explained by the fact that the devices are mounted at different heights on the column, and the angular nature of the column's vibrations amplifies vibrations in the lateral plane as a function of height along the column.
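The moving variance can be sketched in the same way. The sketch assumes a population variance per window; the text does not state which estimator was used, and the sampling rate and sample values are hypothetical.

```python
from statistics import pvariance

def moving_variance(signal, fs_hz, window_s=1.0):
    """Population variance over each fully covered sliding window."""
    n = max(1, int(round(fs_hz * window_s)))  # window length in samples
    return [pvariance(signal[i:i + n]) for i in range(len(signal) - n + 1)]

fs_hz = 4  # hypothetical sampling rate, kept small for readability
# Hypothetical one-axis acceleration in g: weak, then stronger vibration.
accel_y = [0.1, -0.1, 0.1, -0.1, 0.3, -0.3, 0.3, -0.3]
energy = moving_variance(accel_y, fs_hz)
```

Because the variance grows with the square of the amplitude, tripling the vibration amplitude in this toy signal raises the windowed variance by a factor of nine.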

We attempted to evaluate the relation between the vibration energies of the two devices. The best fit achieved was a linear model with a ratio of 2.93 at R² = 0.91 (Fig. 27). It shows that RealSense, at its mounting point, experiences almost 3× more energetic vibration in the horizontal plane than Livox due to the angular vibrations of the column. Additionally, the linear nature of the model and the coefficient of 2.93 are consistent with the mounting geometry: Livox is mounted 30 cm above the pivot point (bottom mount of the column) and RealSense 88 cm above it, i.e., 2.933 times higher.
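A fit of this kind can be sketched with ordinary least squares. The sketch assumes a zero-intercept model y = a·x, which the text does not confirm, and it uses synthetic variance values that are not taken from the dataset. Only the two mounting heights come from the text.

```python
def fit_ratio(x, y):
    """Least-squares slope of y = a * x (no intercept) and its R^2."""
    a = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - a * xi) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return a, 1.0 - ss_res / ss_tot

# Synthetic windowed variances (NOT values from the dataset).
var_low = [0.010, 0.020, 0.030, 0.040]    # lower-mounted device
var_high = [0.029, 0.060, 0.087, 0.118]   # higher-mounted device
ratio, r2 = fit_ratio(var_low, var_high)

# Mounting heights above the pivot point, as stated in the text (cm).
height_ratio = 88 / 30
```

Comparing `ratio` with `height_ratio` mirrors the check made in the text, where the fitted coefficient of 2.93 is set against the height ratio of 2.933.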

5 Conclusions

The paper presented a novel UGV-based dataset for developing and testing mobile mapping solutions (e.g., SLAM) in challenging GNSS-denied conditions, common in mining applications or textureless indoor spaces. We provide data sequences collected simultaneously with multiple sensors, including different LiDAR scanners, cameras, and inertial units. The test environments were selected to challenge state-of-the-art data processing algorithms; they feature changing illumination, varying complexity of geometry, and textureless areas. Acquisitions were carried out inside a university building and in an underground historical tunnel, which also allows the performance degradation of developed methods between simulated and real conditions to be evaluated. Such analysis would be especially valuable for learning-based approaches.

We presented quantitative evaluations of selected SLAM methods utilizing data from different sensors (cameras, LiDAR devices, IMU) and showed some shortcomings in their performance when applied to the MIN3D data. Additionally, an analysis of data from the multi-IMU system was performed to showcase the possible directions of research of multi-sensor data fusion.

In summary, we envisage that the MIN3D dataset can accelerate advances in multiple research domains within robotics, computer vision and geomatics. The following list is not exhaustive:

- Testing and improving mapping approaches (visual, LiDAR, fusion) in challenging underground or indoor conditions.
- Robustifying V-SLAM in environments with changing illumination.
- Estimating depth with a monocular camera.
- Developing visual and LiDAR-based loop closure detection algorithms in degraded environments.
- Using multi-sensor odometry and mapping approaches (multi-IMU, multi-camera, multi-LiDAR).
- Online calibration and utilization of multi-sensor suites, including cameras with different spectral responses (e.g., RGB and thermal).

Data Availability

Datasets have been published at https://3dom.fbk.eu/benchmarks.

References

Besl PJ, McKay ND (1992) Method for registration of 3-D shapes. In: Sensor fusion IV: control paradigms and data structures. pp 586–606

Blanco-Claraco J-L, Moreno-Duenas F-A, González-Jiménez J (2014) The Málaga urban dataset: high-rate stereo and LiDAR in a realistic urban scenario. Int J Rob Res 33:207–214

Blaser S, Nebiker S, Wisler D (2019) Portable image-based high performance mobile mapping system in underground environments-system configuration and performance evaluation. ISPRS Ann Photogramm Remote Sens Spatial Inform Sci 4:255–262

Blaser S, Meyer J, Nebiker S (2021) Open urban and forest datasets from a high-performance mobile mapping backpack—a contribution for advancing the creation of digital city twins. Int Arch Photogramm Remote Sens Spat Inf Sci 43:125–131

Burri M, Nikolic J, Gohl P, Schneider T, Rehder J, Omari S, Achtelik MW, Siegwart R (2016) The EuRoC micro aerial vehicle datasets. Int J Rob Res 35:1157–1163

Campos C, Elvira R, Rodriguez JJG, Montiel JMM, Tardós JD (2021) ORB-SLAM3: an accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans Rob 37:1874–1890

Chen H, Yang Z, Zhao X, Weng G, Wan H, Luo J, Ye X, Zhao Z, He Z, Shen Y, Schwertfeger S (2020) Advanced mapping robot and high-resolution dataset. Rob Auton Syst 131:103559

Chiang KW, Tsai G-J, Zeng JC (2021) Mobile mapping technologies. Springer

Choi Y, Kim N, Hwang S, Kibaek P, Yoon JS, An K, Kweon IS (2018) KAIST multi-spectral day/night data set for autonomous and assisted driving. IEEE Trans Intell Transp Syst 19:934–948

Chung TH, Orekhov V, Maio A (2023) Into the robotic depths: analysis and insights from the DARPA subterranean challenge. Annu Rev Control Robot Auton Syst 6:477–502

Cordts M, Omran M, Ramos S, Scharwächter T, Enzweiler M, Benenson R, Franke U, Roth S, Schiele B (2015) The Cityscapes Dataset. In: CVPR Workshop on The Future of Datasets in Vision

Dabek P, Szrek J, Zimroz R, Wodecki J (2022) An automatic procedure for overheated idler detection in belt conveyors using fusion of infrared and RGB images acquired during UGV robot inspection. Energies 15:601. https://doi.org/10.3390/en15020601

Di Stefano F, Torresani A, Farella EM, Pierdicca R, Menna F, Remondino F (2021) 3D surveying of underground built heritage: opportunities and challenges of mobile technologies. Sustainability 13:13289

Ebadi K, Bernreiter L, Biggie H, Catt G, Chang Y, Chatterjee A, Denniston CE, Deschênes S-P, Harlow K, Khattak S, Nogueira L, Palieri M, Petráček P, Petrlík M, Reinke A, Krátký V, Zhao S, Agha-mohammadi A, Alexis K, Carlone L (2022) Present and future of SLAM in extreme underground environments. arXiv preprint arXiv:2208.01787

Elhashash M, Albanwan H, Qin R (2022) A review of mobile mapping systems: from sensors to applications. Sensors 22:4262

Feng Y, Xiao Q, Brenner C, Peche A, Yang J, Feuerhake U, Sester M (2022) Determination of building flood risk maps from LiDAR mobile mapping data. Comput Environ Urban Syst 93:101759. https://doi.org/10.1016/j.compenvurbsys.2022.101759

Funk N, Tarrio J, Papatheodorou S, Popović M, Alcantarilla PF, Leutenegger S (2021) Multi-resolution 3D mapping with explicit free space representation for fast and accurate mobile robot motion planning. IEEE Robot Autom Lett 6:3553–3560

Geiger A, Lenz P, Urtasun R (2012) Are we ready for autonomous driving? The KITTI vision benchmark suite. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition. IEEE

Geiger A, Lenz P, Stiller C, Urtasun R (2013) Vision meets robotics: the KITTI dataset. Int J Rob Res 32:1231–1237

Giubilato R, Sturzl W, Wedler A, Triebel R (2022) Challenges of SLAM in extremely unstructured environments: the DLR planetary stereo, solid-state LiDAR, inertial dataset. IEEE Robot Autom Lett 7:8721–8728

Helmberger M, Morin K, Berner B, Kumar N, Cioffi G, Scaramuzza D (2022) The Hilti SLAM challenge dataset. IEEE Robot Autom Lett 7:7518–7525

Huang A, Antone M, Olson E, Fletcher L, Moore D, Teller S, Leonard J (2010) A high-rate, heterogeneous data set from the Darpa urban challenge. Int J Robot Res 29:1595–1601. https://doi.org/10.1177/0278364910384295

Jeong J, Cho Y, Shin Y-S, Roh H, Kim A (2019) Complex urban dataset with multi-level sensors from highly diverse urban environments. Int J Rob Res 38:642–657

Kanellakis C, Nikolakopoulos G (2016) Evaluation of visual localization systems in underground mining. In: 2016 24th Mediterranean Conference on Control and Automation (MED). pp 539–544

Kasper M, McGuire S, Heckman C (2019) A benchmark for visual-inertial odometry systems employing onboard illumination. In: 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE

Kim G, Choi S, Kim A (2021) Scan context++: structural place recognition robust to rotation and lateral variations in urban environments. IEEE Trans Rob 38:1856–1874

Kim G, Yun S, Kim J, Kim A (2022) SC-LiDAR-SLAM: a front-end agnostic versatile LiDAR SLAM system. In: 2022 International Conference on Electronics, Information, and Communication (ICEIC). pp 1–6

Klenk S, Chui J, Demmel N, Cremers D (2021) TUM-VIE: the TUM stereo visual-inertial event dataset. In: 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). pp 8601–8608

Knapitsch A, Park J, Zhou Q-Y, Koltun V (2017) Tanks and temples: benchmarking large-scale scene reconstruction. ACM Trans Graph (ToG) 36:1–13

Koval A, Karlsson S, Mansouri SS, Kanellakis C, Tevetzidis I, Haluska J, Agha-mohammadi A, Nikolakopoulos G (2022) Dataset collection from a SubT environment. Rob Auton Syst 155:104168. https://doi.org/10.1016/j.robot.2022.104168

Kramer A, Harlow K, Williams C, Heckman C (2022) ColoRadar: the direct 3D millimeter wave radar dataset. Int J Rob Res 41:351–360

Krátký V, Petráček P, Báča T, Saska M (2021) An autonomous unmanned aerial vehicle system for fast exploration of large complex indoor environments. J Field Robot 38:1036–1058

Lague D, Brodu N, Leroux J (2013) Accurate 3D comparison of complex topography with terrestrial laser scanner: application to the Rangitikei canyon (NZ). ISPRS J Photogramm Remote Sens 82:10–26

Leung K, Lühr D, Houshiar H, Inostroza F, Borrmann D, Adams M, Nüchter A, del Solar J (2017) Chilean underground mine dataset. Int J Rob Res 36:16–23

Liu Y, Fu Y, Chen F, Goossens B, Tao W, Zhao H (2021) Simultaneous Localization and Mapping related datasets: a comprehensive survey. arXiv preprint arXiv:2102.04036

Liu Y, Fu Y, Qin M, Xu Y, Xu B, Chen F, Goossens B, Yu H, Liu C, Chen L, Tao W, Zhao H (2023) BotanicGarden: A high-quality and large-scale robot navigation dataset in challenging natural environments. arXiv preprint arXiv:2306.14137

Macario Barros A, Michel M, Moline Y, Corre G, Carrel F (2022) A comprehensive survey of visual SLAM algorithms. Robotics. https://doi.org/10.3390/robotics11010024

Masiero A, Fissore F, Guarnieri A, Pirotti F, Visintini D, Vettore A (2018) Performance evaluation of two indoor mapping systems: low-cost UWB-aided photogrammetry and backpack laser scanning. Appl Sci. https://doi.org/10.3390/app8030416

Menna F, Torresani A, Battisti R, Nocerino E, Remondino F (2022) A modular and low-cost portable VSLAM system for real-time 3D mapping: from indoor and outdoor spaces to underwater environments. Int Arch Photogramm Remote Sens Spat Inf Sci 48:153–162

Nocerino E, Menna F, Remondino F, Toschi I, Rodríguez-Gonzálvez P (2017) Investigation of indoor and outdoor performance of two portable mobile mapping systems. In: Videometrics, Range Imaging, and Applications XIV. pp 125–139

Nüchter A, Elseberg J, Janotta P (2017) Towards Mobile Mapping of Underground Mines. In: Benndorf J, Buxton M (eds) Proceedings of Real Time Mining - International Raw Materials Extraction Innovation Conference. TU Bergakademie Freiberg, Amsterdam, pp 27–37

Petracek P, Kratky V, Petrlik M, Baca T, Kratochvil R, Saska M (2021) Large-scale exploration of cave environments by unmanned aerial vehicles. IEEE Robot Autom Lett 6:7596–7603

Quigley M, Conley K, Gerkey B, Faust J, Foote T, Leibs J, Wheeler R, Ng AY et al (2009) ROS: an open-source Robot Operating System. In: ICRA workshop on open source software. pp 5–10

Reinke A, Palieri M, Morrell B, Chang Y, Ebadi K, Carlone L, Agha-Mohammadi A-A (2022) LOCUS 2.0: robust and computationally efficient lidar odometry for real-time 3D mapping. IEEE Robot Autom Lett 7:9043–9050

Ren Z, Wang L, Bi L (2019) Robust GICP-based 3D LiDAR SLAM for underground mining environment. Sensors 19:2915

RIEGL Laser Measurement Systems GmbH (2019) RiSCAN PRO Operating & Processing Software

Riegl VZ-400i Datasheet (2019) http://www.riegl.com/uploads/tx_pxpriegldownloads/RIEGL_VZ-400i_Datasheet_2022-09-27.pdf. Accessed 30 Aug 2023

Rogers JG, Gregory JM, Fink J, Stump E (2020) Test Your SLAM! The SubT-Tunnel dataset and metric for mapping. In: 2020 IEEE International Conference on Robotics and Automation (ICRA). IEEE

Schops T, Schonberger JL, Galliani S, Sattler T, Schindler K, Pollefeys M, Geiger A (2017) A multi-view stereo benchmark with high-resolution images and multi-camera videos. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 3260–3269

Schubert D, Goll T, Demmel N, Usenko V, Stuckler J, Cremers D (2018) The TUM VI benchmark for evaluating visual-inertial odometry. In: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE

Seetharaman G, Lakhotia A, Blasch EP (2006) Unmanned vehicles come of age: the DARPA grand challenge. Computer 39:26–29

Smith M, Baldwin I, Churchill W, Paul R, Newman P (2009) The new college vision and laser data set. Int J Rob Res 28:595–599

Stach E, Pawłowska A, Matoga Ł (2014) The development of tourism at military-historical structures and sites–a case study of the building complexes of project riese in the owl mountains. Pol J Sport Tour 21:36–41

Sturm J, Engelhard N, Endres F, Burgard W, Cremers D (2012) A benchmark for the evaluation of RGB-D SLAM systems. In: 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE

Szrek J, Wodecki J, Błażej R, Zimroz R (2020) An inspection robot for belt conveyor maintenance in underground mine: infrared thermography for overheated idlers detection. Appl Sci 10:4984. https://doi.org/10.3390/app10144984

Tak AN, Taghaddos H, Mousaei A, Bolourani A, Hermann U (2021) BIM-based 4D mobile crane simulation and onsite operation management. Autom Constr 128:103766. https://doi.org/10.1016/j.autcon.2021.103766

Thrun S, Hahnel D, Ferguson D, Montemerlo M, Triebel R, Burgard W, Baker C, Omohundro Z, Thayer S, Whittaker W (2003) A system for volumetric robotic mapping of abandoned mines. In: 2003 IEEE International Conference on Robotics and Automation (Cat. No. 03CH37422). pp 4270–4275

Torresani A, Menna F, Battisti R, Remondino F (2021) A V-SLAM guided and portable system for photogrammetric applications. Remote Sens 13:2351. https://doi.org/10.3390/rs13122351

Toschi I, Rodríguez-Gonzálvez P, Remondino F, Minto S, Orlandini S, Fuller A (2015) Accuracy evaluation of a mobile mapping system with advanced statistical methods. Int Arch Photogramm Remote Sens Spatial Inform Sci. https://doi.org/10.5194/isprsarchives-XL-5-W4-245-2015

Toschi I, Ramos MM, Nocerino E, Menna F, Remondino F, Moe K, Poli D, Legat K, Fassi F et al (2017) Oblique photogrammetry supporting 3D urban reconstruction of complex scenarios. Int Arch Photogramm Remote Sens Spatial Inform Sci 42:519–526

Tranzatto M, Dharmadhikari M, Bernreiter L, Camurri M, Khattak S, Mascarich F, Pfreundschuh P, Wisth D, Zimmermann S, Kulkarni M, Reijgwart V, Casseau B, Homberger T, De Petris P, Ott L, Tubby W, Waibel G, Nguyen H, Cadena C, Buchanan R, Wellhausen L, Khedekar N, Andersson O, Zhang L, Miki T, Dang T, Mattamala M, Montenegro M, Meyer K, Wu X, Briod A, Mueller M, Fallon M, Siegwart R, Hutter M, Alexis K (2022) Team CERBERUS wins the DARPA Subterranean Challenge: Technical overview and lessons learned. arXiv preprint arXiv:2207.04914

Trybała P (2021) LiDAR-based Simultaneous Localization and Mapping in an underground mine in Złoty Stok, Poland. In: IOP Conference Series. Earth and Environmental Science

Trybała P, Szrek J, Remondino F, Wodecki J, Zimroz R (2022) Calibration of a multi-sensor wheeled robot for the 3D mapping of underground mining tunnels. Int Arch Photogramm Remote Sens Spatial Inform Sci. https://doi.org/10.5194/isprs-archives-xlviii-2-w2-2022-135-2022

Trybała P, Kasza D, Wajs J, Remondino F (2023) Comparison of low-cost handheld lidar-based slam systems for mapping underground tunnels. Int Arch Photogramm Remote Sens Spat Inf Sci 48:517–524

Trzeciak M, Pluta K, Fathy Y, Alcalde L, Chee S, Bromley A, Brilakis I, Alliez P (2023) ConSLAM: construction data set for SLAM. J Comput Civ Eng 37:4023009

Vallet B, Mallet C (2016) Urban scene analysis with mobile mapping technology. In: Baghdadi N, Zribi M (eds) Land surface remote sensing in urban and coastal areas. Elsevier, pp 63–100

Wang C, Wang W, Qiu Y, Hu Y, Scherer S (2020a) Visual memorability for robotic interestingness via unsupervised online learning. Computer vision—ECCV 2020. Springer International Publishing, Cham, pp 52–68

Wang W, Zhu D, Wang X, Hu Y, Qiu Y, Wang C, Hu Y, Kapoor A, Scherer S (2020b) TartanAir: A dataset to push the limits of visual SLAM. In: 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE

Xu W, Cai Y, He D, Lin J, Zhang F (2022) FAST-LIO2: fast direct LiDAR-inertial odometry. IEEE Trans Rob 38:2053–2073

Yang X, Lin X, Yao W, Ma H, Zheng J, Ma B (2022) A robust LiDAR SLAM method for underground coal mine robot with degenerated scene compensation. Remote Sens 15:186

Zlot R, Bosse M (2013) Efficient large-scale 3D mobile mapping and surface reconstruction of an underground mine. In: Field and service robotics: Results of the 8th international conference. pp 479–493

Acknowledgements

The authors offer special thanks to Walimskie Drifts in Walim (https://sztolnie.pl) for making the facility available for data acquisition.

Funding

This work was partly supported by EIT RawMaterials GmbH within the activities of the AMICOS—Autonomous Monitoring and Control System for Mining Plants—project (Agreement No. 19018) and VOT3D—project (Agreement No. 21119). The work was also partly supported by the FAIR project, Piano Nazionale di Ripresa e Resilienza.

Author information

Contributions

Conceptualization, PT, JS, JW and FR; methodology, PT, JS, FR; software, PT; validation, PT, JS, JW and PK; investigation, PT, JS, JW and PK; resources, FR, JB and RZ; data curation, PT, JS and JW; writing—original draft preparation, PT, JS; writing—review and editing, PK, JW, FR, JB and RZ; visualization, PT, PK and JW; supervision, FR, JB and RZ; project administration, FR, JB and RZ; funding acquisition, FR, JB and RZ. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of Interest

The authors declare no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Trybała, P., Szrek, J., Remondino, F. et al. MIN3D Dataset: MultI-seNsor 3D Mapping with an Unmanned Ground Vehicle. PFG 91, 425–442 (2023). https://doi.org/10.1007/s41064-023-00260-0

DOI: https://doi.org/10.1007/s41064-023-00260-0