Abstract

Navigating mobile robots in crowded environments poses a significant challenge and is essential for the coexistence of robots and humans in future intelligent societies. As a pragmatic data-driven approach, deep reinforcement learning (DRL) holds promise for addressing this challenge. However, current DRL-based navigation methods have possible improvements in understanding agent interactions, feedback mechanism design, and decision foresight in dynamic environments. This paper introduces the model inductive bias enhanced deep reinforcement learning (MIBE-DRL) method, drawing inspiration from a fusion of data-driven and model-driven techniques. MIBE-DRL extensively incorporates model inductive bias into the deep reinforcement learning framework, enhancing the efficiency and safety of robot navigation. The proposed approach entails a multi-interaction network featuring three modules designed to comprehensively understand potential agent interactions in dynamic environments. The pedestrian interaction module can model interactions among humans, while the temporal and spatial interaction modules consider agent interactions in both temporal and spatial dimensions. Additionally, the paper constructs a reward system that fully accounts for the robot’s direction and position factors. This system's directional and positional reward functions are built based on artificial potential fields (APF) and navigation rules, respectively, which can provide reasoned evaluations for the robot's motion direction and position during training, enabling it to receive comprehensive feedback. Furthermore, the incorporation of Monte-Carlo tree search (MCTS) facilitates the development of a foresighted action strategy, enabling robots to execute actions with long-term planning considerations. Experimental results demonstrate that integrating model inductive bias significantly enhances the navigation performance of MIBE-DRL. Compared to state-of-the-art methods, MIBE-DRL achieves the highest success rate in crowded environments and demonstrates advantages in navigation time and maintaining a safe social distance from humans.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

In recent years, the operational landscapes for mobile robots have progressively transitioned from static to dynamic environments teeming with dense crowds, such as shopping malls, hospitals, and restaurants. In these settings, pedestrians take center stage in decision-making, exhibiting highly unpredictable behaviors with implicit and intricate impacts on robots and fellow pedestrians. Therefore, robots are expected to have good decision foresight to effectively plan their paths, predict and avoid potential collisions, and achieve smooth and efficient navigation. Furthermore, constructing effective robot navigation strategies in such dynamic and complex environments also requires a thorough consideration of potential interactions between intelligent agents, encompassing both robot-human and human–human dynamics, to fully understand the complex behavioral patterns of these dynamic, intelligent agents.

Research on robot navigation methods has undergone initial exploration, with these methods broadly classified into two categories: model-driven and data-driven approaches. Specifically, the Artificial Potential Field (APF) [1] computes the change in the gradient of the virtual potential field to obtain the virtual force, including attractive and repulsive forces to guide the robot's movement so that the robot can be attracted to the target location and avoid obstacles. In subsequent developments, several variations and improvements of the APF have emerged. For instance, Abdalla et al. [2] combined APF with the fuzzy logic controller to enhance the robot's adaptability to complex navigation scenarios. Orozco-Rosas et al. [3] integrated the membrane-inspired evolutionary algorithm with APF to optimize the parameters for generating feasible and safe paths, showing significant improvements in the length of their navigation paths. Apart from APF, Helbing et al. [4] proposed the social force model (SFM), which directly describes the interactions between pedestrians, obstacles, and target locations using forces and calculates the resulting acceleration for guiding the robot to avoid obstacles while reaching the target. Reciprocal velocity obstacle (RVO) [5] assumes that other agents move at constant velocities or that all agents collectively work to avoid collisions and then calculate a joint collision-free velocity based on interactions with other agents to plan collision-free paths. Optimal reciprocal collision avoidance (ORCA) [6] formulates the search for the locally optimal velocity in the next time step as a linear programming problem, providing better stability at a slightly higher complexity than RVO. These methods intuitively and rigorously comply with navigation rules and possess good interpretability. However, these methods mainly rely on pre-designed models to react to the current state and local predictions and lack deeper analysis and understanding of complex pedestrian environments, resulting in robots that are less capable of foreseeing potential collisions or other safety hazards ahead of time when confronted with dynamic environments. Therefore, they are more prone to short-sightedness or even collisions than data-driven methods.

Given the stochastic and complex nature of pedestrian trajectories, data-driven methods divide the task of mobile robot navigation in crowded environments into two independent subtasks: pedestrian trajectory prediction and path planning. These methods leverage networks trained on extensive pedestrian motion datasets to predict accurate trajectory information, selecting appropriate navigation paths based on the predicted trajectories [7,8,9,10]. However, computational complexities associated with pedestrian trajectory prediction models and issues related to re-planning due to prediction errors often hinder these methods from meeting real-time requirements. Moreover, these methods may encounter freezing robot issues in densely crowded environments.

Deep reinforcement learning (DRL) employs deep networks to approximate value or policy functions, optimizing agent decision-making through environmental feedback, which is an exemplary data-driven approach [11]. Current DRL-based navigation methods in crowded environments consider robot-human potential interactions, designing reward functions based on collision avoidance and social distance maintenance rules. This approach has yielded impressive results in navigating mobile robots within crowded environments. For instance, collision avoidance with deep reinforcement learning (CADRL) [12] encodes the estimated time to reach the goal, enabling learning implicit cooperative behavior in dynamic environments. Its reward function includes penalties for collisions and violations of social distancing, encouraging the robot to plan high-quality navigation paths while maintaining a safe social distance from pedestrians. Social Attention Reinforcement Learning (SARL) [13] models the relative importance of humans to the robot using self-attention mechanisms, facilitating a comprehensive understanding of collective behavior in dynamic environments. Graph-based deep reinforcement learning method (SG-DQN) [14] utilizes a graph attention network (GAT) [15] to efficiently process agent states in dynamic environments and employs a dueling deep Q network (dueling DQN) [16] for high-quality learning of robot navigation strategies. Martinez-Baselga et al. [17] address exploration and exploitation challenges in DRL-based navigation methods in crowded environments, designing exploration strategies based on state uncertainty to foster the robot's curiosity about future states and enhance navigation performance.

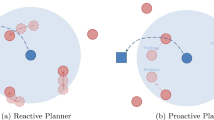

Although many studies have explored DRL-based navigation methods, there is still room for improvement in these approaches. The first potential improvement lies in understanding agent interactions in dynamic environments. In control tasks involving multiple agents, information exchange between agents can enrich their knowledge, enhance learning speed, and improve decision quality [18]. In the context of robot navigation in dynamic crowded environments addressed in this research, interactions among agents mainly include robot-human interactions and human–human interactions, which are crucial for a comprehensive understanding of complex behavioral patterns in dynamic navigation environments and can enable robots to adapt to dynamic environmental changes, thereby improving navigation performance and safety. However, existing DRL-based navigation methods such as CADRL [12] and SARL [13] primarily focus on potential interactions between robots and humans in the spatial domain while lacking sufficient consideration of human–human interactions and the effective utilization of temporal information. Therefore, there is potential for improvement in DRL-based navigation methods regarding the understanding of interactions among agents in dynamic environments. The second potential improvement lies in the design of feedback mechanisms. A perfect feedback mechanism can provide practical guidance to the robot to make reasonable decisions in complex environments and improve navigation performance [17]. However, current feedback mechanisms in some DRL-based navigation methods only consider positional factors, resulting in relatively simple feedback during navigation strategy learning [12,13,14]. Therefore, there is still room for improvement in the design of feedback mechanisms. The third potential improvement lies in decision foresight. In robot navigation tasks in static obstacle environments, robots plan paths by predicting future waypoints, so foresight is required [19]. In the dynamic, crowded environments considered in this research, pedestrian movements are highly randomized, demanding higher levels of foresight from robots to flexibly avoid potential collisions and promptly adjust navigation actions to adapt to dynamic environmental changes. In conclusion, as shown on the left side of Fig. 1a, there are potential areas for improvement in DRL-based navigation methods regarding understanding agent interactions, feedback mechanism design, and decision foresight.

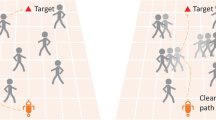

a Left and right illustrate the principal limitations of navigation methods based on DRL and the critical focal points of this study. For clarity, this subplot showcases only three pedestrians. b Left and right delineate navigation outcomes indicating robot collision and safety, corresponding to scenarios without/with model inductive bias. In the indicated navigation scenario, the robot is required to navigate from the starting point (0, − 4) to the endpoint (0, 4) at the fastest speed while maintaining a safe distance from pedestrians. The black line represents the robot's trajectory, while the lines of different colors represent the trajectories of pedestrians. The numbers along the lines indicate the time the robot or pedestrians took to reach those positions, marked every 4 s, with time indicators also applied at the final positions. The initial positions of pedestrians are marked with a pentagon, and their turning positions are marked with a square

Moreover, as a data-driven approach, DRL is adept at gathering data through interactions with the environment and refining strategies through continuous trial and error. Conversely, models excel at the induction and summarization of domain rules or prior knowledge, offering intuitive, rigorous, and highly interpretable characteristics. Therefore, combining DRL with models is a worthwhile idea to improve DRL, allowing DRL to fully incorporate the inductive biases of the models while learning from interactions with the environment, thereby achieving significantly enhanced navigation performance. The potential of this combination has been explored to some extent in existing DRL research and applications in three ways: DRL-based model improvement [20, 21], improving DRL's learning process with models [22,23,24], and improving DRL's components with models [25,26,27]. Among these ways, DRL-based model improvement refers to DRL leveraging its learning capacity to refine the components or parameters of models, thereby enhancing models. Improving DRL's learning process with models involves using models to collect experience or perform data augmentation, significantly boosting the efficiency and quality of DRL learning. The approach of improving DRL's components with models incorporates structured models into various aspects, such as state representation, network design, reward function construction, and action policy formulation, allowing DRL to directly benefit from the inductive biases of the model. In this study, given the existing potential for improvement in understanding agent interactions, feedback mechanism design, and decision foresight in DRL-based navigation methods, we adopt the approach of improving DRL's components with models to involve integrating the state-value network, reward function, and action policy of DRL with models to benefit from the model's inductive bias directly, thus achieving improvements in the three areas above.

Specifically, considering potential improvements in understanding agent interactions in DRL-based navigation methods, we integrate the angular pedestrian grid (APG) [28] and the gated recurrent unit (GRU) [29] into the design process of the DRL state value network to fully utilize the advantages of APG in representing interactions among pedestrians and GRU in capturing temporal information, thereby achieving a comprehensive understanding of potential interactions between agents in crowded environments. More specifically, we introduce a multi-interaction network to fully understand intelligent agent interactions in crowded environments, which consists of a pedestrian interaction module, a temporal interaction module, and a spatial interaction module. The pedestrian and temporal interaction modules incorporate the inductive biases of the APG and GRU, respectively, enabling the modeling of potential interactions among pedestrians and utilizing temporal information. Next, considering potential improvements in the design of feedback mechanisms in DRL-based navigation methods, we construct a reward system that fully accounts for the robot’s direction and position factors. The directional reward function in this system is built based on the angle between the resultant force acting on the robot from the APF and the actual motion direction of the robot, and the positional reward function is derived from navigation rules, which can provide reasoned evaluations for the robot's motion direction and position during the training process and enable it to receive comprehensive feedback. Additionally, recognizing potential room for improvement in decision foresight in DRL-based navigation methods, we incorporate Monte-Carlo tree search (MCTS) [30,31,32] into the action policy formulation of DRL to facilitate the long-term planning of robot actions and enhance the forward-looking nature of decision-making. Finally, by integrating the improvements above in understanding agent interactions, feedback mechanism design, and decision foresight, we propose a model ınductive bias enhanced deep reinforcement learning method (MIBE-DRL) to promote safe and efficient navigation of robots in dynamic crowd environments. The highlights of this paper are illustrated on the right side of Fig. 1a, while Fig. 1b compares robot navigation trajectories before and after enhancing model inductive bias. The contributions of this paper are summarized as follows:

-

1.

Considering potential improvements in understanding agent interactions in DRL-based navigation methods, we construct a multi-interaction network that benefits from the inductive biases of the APG and GRU, aiming to model the mutual influence among pedestrians and effectively leverage temporal information, thereby achieving a comprehensive understanding of potential interactions between agents in crowded environments;

-

2.

Recognizing potential improvements in feedback mechanism design and decision foresight in DRL-based navigation methods, we establish a reward system that fully accounts for the robot's direction and position factors, along with a forward-looking action strategy, to improve the feedback mechanism and encourage the execution of actions with long-term planning;

-

3.

By integrating the improvements in understanding agent interactions, feedback mechanism design, and decision foresight, we propose a novel DRL-based navigation method in crowded environments called MIBE-DRL, achieving safe and efficient robot navigation in dynamic environments;

-

4.

The results of a series of comparative experiments show that MIBE-DRL achieves the highest success rate compared to state-of-the-art methods and has advantages regarding navigation time and maintaining a safe social distance from humans. Ablation studies have demonstrated the effectiveness of improvements in intelligent agent interaction understanding, feedback mechanism design, and action foresight.

The subsequent sections of this paper are organized as follows: next section explains the problem formulation. Following section provides a detailed description of our methodology. Next section presents the experiments and analysis conducted to validate the effectiveness of the proposed approach. Following section is to give a detailed discussion of challenges, limitations, and future work. Last section concludes the paper.

Problem formulation

The navigation problem for robots can be understood as enabling a robot to avoid collisions with pedestrians in a dynamic environment while striving to maintain a safe social distance and simultaneously minimizing the time required to reach the goal. This study conceptualizes the problem as a sequential decision challenge within reinforcement learning, representing it as a Markov decision process (MDP) composed of the tuple \(\left\langle {S,A,P,R,\gamma } \right\rangle\), where \(S\) represents the state space, \(A\) denotes the action space, \(P\) is the state transition function, \(R\) is the reward function, and \(\gamma\) is the discount factor.

In this study, following the settings of the CrowdNav simulation environment [14, 17], both the robot and pedestrians are abstracted as circles with radius of \(r_{0}\) and \(r_{i}\), respectively. Considering that both the robot's state and the states of other pedestrians in crowded environments will influence the final decision, we use the joint state \(s^{t} = [s_{0}^{t} ,s_{1}^{t} ,...,s_{i}^{t} ,...,s_{N}^{t} ]\) as the representation of the state at time \(t\). Here, \(s_{0}^{t}\) represents the robot's state at time \(t\), \(s_{i}^{t}\) is the state of the pedestrian \(i\) at time \(t\), and \(N\) is the number of pedestrians. Specifically, the robot's state \(s_{0}^{t}\) is composed of its position \(p_{0}^{t} = [p_{0,x}^{t} ,p_{0,y}^{t} ]\), velocity \(v_{0}^{t} = [v_{0,x}^{t} ,v_{0,y}^{t} ]\), radius \(r_{0}\), goal \(g^{t} = [g_{x}^{t} ,g_{y}^{t} ]\), preset velocity \(v_{pres.}\), and motion direction \(\theta_{0}^{t}\), which can be expressed as:

The state \(s_{i}^{t}\) of pedestrian \(i\) at time \(t\) is composed of its position \(p_{i}^{t} = [p_{i,x}^{t} ,p_{i,y}^{t} ]\), velocity \(v_{i}^{t} = [v_{i,x}^{t} ,v_{i,y}^{t} ]\), and radius \(r_{i}\) and can be represented as follows:

For pedestrians, the states of other pedestrians are known, while the state of the robot remains unknown. Consequently, pedestrians exhibit a specific proactive collision avoidance capability among themselves but are unable to avoid the robot. This configuration imposes a significant demand on the navigation performance of the robot.

The robot's action at time \(t\) primarily consists of velocity \(v^{t}\) and direction \(\omega^{t}\), denoted as \(a^{t} = [v^{t} ,\omega^{t} ]\). Here, \(\omega^{t}\) can take 16 values, evenly distributed between 0 and \(2\pi\) radians. \(v^{t}\) can take five values within the range of 0 to \(v_{pres.}\). Actions with both velocity and direction set to 0 are also included in the action set, resulting in a final collection of 81 discrete actions.

This research employs the DQN [33] to learn robot navigation strategies in crowded environments. Specifically, at time \(t\), the robot interacts with the environment, obtaining state \(s^{t}\). It then selects and executes a corresponding action \(a^{t}\) based on a given policy \(\pi\), transitions to a new state \(s^{t + 1}\), and receives immediate reward. Ultimately, we aim to identify a deterministic optimal policy \(\pi^{*}\) that maximizes the expected discounted return. This policy can be shown as below:

where \(Q^{*} (s^{t} ,a^{t} )\) is the corresponding optimal state-action function, defined by the Bellman optimality equation:

where \(P(s^{t + 1} |s^{t} ,a^{t} )\) represents the probability of transitioning from state \(s^{t}\) to state \(s^{t + 1}\) after executing action \(a^{t}\). \(r(s^{t} ,a^{t} ,s^{t + 1} )\) is the reward from state \(s^{t}\) to state \(s^{t + 1}\) after executing action \(a^{t}\). \(\gamma\) is the discount factor, normalized by preset velocity \(v_{pres.}\) and time step \(\Delta t\).

Methodology

This section provides an overall description of MIBE-DRL, followed by detailed explanations of how model inductive bias enhances network design, reward function construction, and action strategy formulation within MIBE-DRL.

Overview

As depicted in Fig. 2, the proposed MIBE-DRL leverages the enhancement of model inductive biases in three main aspects: network design, reward function construction, and action policy formulation to understand agent interactions comprehensively, obtain comprehensive reward feedback, and make foresighted action decisions. In terms of network design, we introduce the multi-interaction network, consisting of the pedestrian interaction module, temporal interaction module, and spatial interaction module, which can benefit from the inductive biases of models like the APG and GRU to enable the modeling of pedestrian interactions and the utilization of temporal information, promoting a comprehensive understanding of potential interactions among agents in the environment. For reward function construction, we designed a reward system that fully considers the robot's orientation and position factors. It provides reasoned evaluations for the robot's motion direction and position during training, enabling it to obtain timely and informative feedback. Additionally, we integrated MCTS into the action policy formulation of MIBE-DRL to encourage the execution of actions with long-term planning, thus enhancing decision foresight. These operations promote a comprehensive understanding of the interaction among agents in crowded environments, develop feedback mechanisms, and facilitate the foresighted action strategy to achieve safe and efficient robot navigation in crowded environments.

Multi-interaction network

The primary challenge in robot navigation within crowded environments lies in understanding agents' interactions in dynamic settings [34]. Current navigation methods based on DRL have predominantly overlooked the impact of interactions among pedestrians on navigation tasks and have failed to adequately capture agents' temporal changes. In response, we propose a multi-interaction network to comprehensively consider interactions among agents in crowded environments. As illustrated in Fig. 2a, this network comprises a pedestrian interaction module, a temporal interaction module, and a spatial interaction module. The pedestrian interaction module in this network benefits from the inductive biases of the APG and can explicitly model the interactions among humans. The temporal and spatial interaction modules are designed based on the GRU and GAT, respectively, and can consider agent interactions within the environments in both temporal and spatial dimensions.

Pedestrian interaction module

Intuitively, interactions among pedestrians correlate with pedestrian decision-making, thereby indirectly influencing the robot's navigation in dynamic environments. In environments where pedestrians are sparse, the effects of interactions between pedestrians may be negligible. However, the lack of consideration for pedestrian interactions may decrease the robot's navigation performance in crowded environments. Given that the APG [28] can represent the mutual influence among humans, we utilize this model to construct the pedestrian interaction module, enabling MIBE-DRL to benefit from the APG's inductive biases and better understand the potential interactions among pedestrians.

As illustrated in Fig. 3, for pedestrian \(i\) at time \(t\), we initially define a circular region with a radius \(d_{huma.}\) to represent the pedestrian's influence range. The pedestrian's range of influence is then divided equally into \(U\) sectors and the closest distance to pedestrian \(i\) within each sector is selected as the encoded value for that sector. Subsequently, a one-dimensional vector \(I_{i}^{t} = [I_{i,1}^{t} ,I_{i,2}^{t} ,...,I_{i,u}^{t} ,...,I_{i,U}^{t} ]\) is obtained as the encoded output for pedestrian \(i\) at time \(t\), reflecting the interaction information with other humans within its influence area. Taking the uth sector region as an example, \(I_{i,u}^{t}\) represents the interaction information between pedestrian \(i\) and the other pedestrians in this sector region, according to the following equation:

where \((\alpha_{i,j}^{t} ,\beta_{i,j}^{t} )\) denotes the relative polar coordinates of pedestrian \(j\) in the polar coordinate system centered on pedestrian \(i\). From the two equations above, \(I_{i}^{t}\) is derived from the relative polar coordinates between pedestrians and is solely dependent on the positions of the pedestrians. Since this study focuses on navigation methods, the positional information of pedestrians is directly considered as known. In practical pedestrian navigation environments, robots can obtain surrounding pedestrians' positional and other status information by employing carried depth or binocular vision sensors in conjunction with well-trained visual perception algorithms. By expanding the state \(s_{i}^{t}\) of pedestrian \(i\) through encoding output result \(I_{i}^{t}\), we can obtain a new state \(\tilde{s}_{i}^{t} = [p_{i}^{t} ,v_{i}^{t} ,r_{i} ,I_{i}^{t} ]\) that incorporates a description of the interaction information among the surrounding pedestrians and pedestrian \(i\).

Schematic diagram of the angular pedestrian grid. This angular grid can encode the positional information of pedestrians to portray the interaction between pedestrian i and pedestrians within its influence range. Given that this study focuses primarily on navigation methods, we consider the positional information of pedestrians to be known. In practical pedestrian navigation environments, surrounding pedestrians' positional and status information can be obtained through carried depth or binocular vision sensors in conjunction with well-trained visual perception algorithms

In summary, the state \(\tilde{s}_{i}^{t}\) encoded through the pedestrian interaction module can capture changes in radial distance with continuous resolution, providing an intuitive representation of human–human interactions. Additionally, for two pedestrians closer in the distance, the pedestrian interaction module captures their angle changes more accurately, aligning with the real-world observation that individuals tend to pay more detailed attention to pedestrians nearby.

Temporal interaction module

The pedestrian interaction module can encode interactions among pedestrians using the APG, effectively representing the mutual interactions among pedestrians. Furthermore, considering the gating mechanism in the GRU [29], which controls the flow of information and determines whether to retain or discard information within a sequence, we leverage the GRU to design the temporal interaction module and make MIBE-DRL benefit from the inductive biases of the GRU.

As illustrated in Fig. 2a, for the robot's state \(s_{0}^{t}\) and the encoded state \(\tilde{s}_{i}^{t}\) of pedestrian \(i\) from the pedestrian interaction module at time t, the temporal interaction module transforms them into intermediate \(M_{0}^{t}\) and \(M_{i}^{t}\), respectively. The transformation process can be denoted as:

where \(M_{0}^{t}\) and \(M_{i}^{t}\) are the intermediate states of the robot and pedestrian \(i\) at time \(t\), \(F( \cdot )\) represents a fully connected network, and \(G( \cdot , \cdot )\) signifies the processing carried out by the GRU. Notably, the temporal interaction module addresses memory-related issues and resolves the problem of disparate dimensions between the robot's and pedestrians' states.

Spatial interaction module

In addition to the pedestrian and temporal interaction modules, the proposed multi-interaction network also includes the spatial interaction module. This module mainly comprises the GAT [15], which can encode interactions among agents in the spatial dimension. Initially, a graph is established with agents as nodes and connections between agents as edges. Subsequently, more advanced feature representations are obtained for the intermediate states through query matrix \(Q\) and key matrix \(K\). These features are concatenated to obtain attention coefficients between each pair of edges in the graph. Taking nodes \(m\) and \(n\) as an example, the calculation of attention coefficients from node \(m\) to node \(n\) is provided by the follow equation:

where \(q_{m} = f_{Q} (M_{m}^{t} )\) and \(k_{n} = f_{K} (M_{n}^{t} )\) represent advanced feature representations corresponding to intermediate states \(M_{0}^{t}\) and \(M_{i}^{t}\), respectively. \(||\) denotes the feature concatenation operation, \(f_{atte.} ( \cdot )\) is the attention function, and LeakyRELU is the activation function. Subsequently, attention coefficients are normalized to obtain attention weights:

where \(N_{m}\) is the neighborhood of node \(m\) in the graph, we consider all pedestrians in the dynamic environment in this research. Finally, by integrating the influences from all other agents within the neighborhood, the feature response \(h_{m}^{t}\) for node \(m\) can be derived:

Figure 4 illustrates the calculation process of the feature response \(h_{m}^{t}\). By estimating Q-values based on this feature response, the multi-interaction network encourages the robot to execute appropriate navigation actions.

Our multi-interaction network in MIBE-DRL is constructed from pedestrian, temporal, and spatial interaction modules. It integrates the inductive biases of the APG, GRU, and GAT to enable the comprehensive encoding of interactions among intelligent agents in dynamic environments, facilitating the understanding and inference of potential relationships among agents in complex dynamic systems.

Reward system

Accurate avoidance of pedestrians and prompt arrival at the goal are crucial for the robot navigation task in crowded environments. Therefore, establishing a rational reward feedback mechanism is essential. However, some current DRL-based navigation methods have only considered positional factors in their feedback mechanisms, resulting in relatively simple feedback during navigation policy learning [12,13,14]. In this study, we build a reward system that comprehensively considers the robot's orientation and position factors. The directional reward function in this system is constructed based on the angle between the resultant force acting on the robot from the APF [1] and the actual motion direction of the robot, and the positional reward function is derived from navigation rules, which can provide reasoned evaluations for the robot's motion direction and position during the training process and enable it to receive comprehensive feedback.

Direction reward function

Incorporating APF in the design of reward functions for DRL is a common method widely applied in mobile robot navigation, unmanned aerial vehicle trajectory planning, and manipulator trajectory planning. Specifically, in the early stages of training for mobile robot navigation, Wang et al. [35] utilized APF to predict the robot's next position and designed a reward function based on the distance between the predicted and actual positions to assist the robot's efficient exploration. For unmanned aerial vehicle track planning tasks, Zhou et al. [36] developed three artificial potential fields: the target gravitational potential field, threat source repulsive potential field, and altitude repulsive field, which are aimed at guiding the drone to approach the target, avoid threat sources, and maintain an appropriate altitude, respectively. Addressing manipulator trajectory planning tasks, Zheng et al. [37] proposed a combined reward function incorporating APF and time-energy function, consisting of target attraction reward, obstacle rejection reward, energy reward, and time reward. The first two were based on APF design, encouraging the manipulator to approach the target and navigate around obstacles. The latter two were designed based on the time-energy function to minimize energy consumption and complete the task quickly.

The works above utilize APF in designing reward functions and focus solely on positional factors. However, in mobile robot navigation tasks, the robot's direction significantly influences effective navigation. We notice that the direction of the resultant force acting on the robot in APF, to some extent, reflects the desired motion direction of the robot. Therefore, by using the angle between the direction of the resultant force and the actual motion direction of the robot, we construct a directional reward function to provide a reasonable evaluation of the robot's motion direction during training.

Following the theory of artificial potential fields, the robot experiences an attractive force in the potential field at the goal and a repulsive force in the potential field generated by obstacles (i.e., pedestrians). Specifically, the attractive potential field function at goal and the repulsive potential field function for pedestrian \(i\) can be represented as:

where \(\delta\) is the attractive potential field constant, \(\phi\) is the repulsive potential field constant, \(d_{g}^{t}\) is the distance between the robot and the goal, \(d_{i}^{t}\) is the distance between the robot and pedestrian \(i\), and \(d_{huma.}\) represents the radius of the influence range of pedestrians. Furthermore, the magnitudes of the attractive and repulsive forces, respectively, are the negative gradients of the attractive and repulsive potential fields, as shown below:

As illustrated in Fig. 5, the resultant force acting on the robot in its workspace is the vector sum of the attractive and repulsive forces, expressed as:

We use the resultant force's direction \(\theta_{resu.}^{t}\) to represent the expected direction and characterize the degree of fit between the robot's expected and actual directions by the magnitude of the angle between the robot's expected direction and the actual direction \(\theta_{0}^{t}\). Thus, the direction reward function \(r_{dire.}^{t}\) can be constructed, denoted as:

where \(\zeta\) represents a hyperparameter. The expected direction \(\theta_{resu.}^{t}\) and the actual direction \(\theta_{0}^{t}\) are represented in radians. We normalize the directional reward function using \(\pi\) to maintain a similar range as position reward function functions and promote a balanced overall learning process.

Position reward function

In addition to the above direction reward function, we pay full attention to the relative positions of the robot to the goal and pedestrians and combine the requirements of the robot to avoid obstacles and quickly reach the goal of designing a position reward function.

This reward function consists of the goal-guidance term and the obstacle-avoidance term. Specifically, the goal-guidance term guides the robot to reach the goal quickly, including the reward obtained when the robot reaches the goal and the continuous reward generated when the robot approaches the goal during navigation, as shown in the following equation:

where \(\varsigma\) is the reward value when the robot reaches the goal. \(d_{g}^{t - 1}\) and \(d_{g}^{t}\) represent the distance between the robot and the goal at time \(t - 1\) and \(t\), respectively. \(\vartheta\) is the scaling factor for the continuous reward. The obstacle-avoidance term alerts the robot to avoid collisions with pedestrians and maintain a certain safety distance from them, which can be shown below:

where \(r_{coll.}^{t}\) and \(r_{safe}^{t}\) represent the reward when a collision occurs between the robot and a pedestrian and the reward when the distance between the robot and pedestrian \(i\) is less than the safety distance. Their calculation methods are as follows:

where \(\tau\) is the penalty when a collision occurs between the robot and a pedestrian, \(d_{i}^{t}\) is the distance between the robot and pedestrian \(i\) at time \(t\), and \(d_{safe}\) is the preset social safety distance. The position reward function at time \(t\) can be expressed as:

Integrating the direction reward function and the position reward function can construct a reward system that simultaneously considers position and direction factors. This system provides comprehensive reward feedback for the robot during the learning process, represented as:

Action strategy

In navigation tasks within crowded environments, pedestrians closely interact with the robot and display highly stochastic behavior. The robot's navigation actions are expected to demonstrate foresight in order to effectively avoid collisions and execute natural navigation behaviors within crowded environments. To achieve this, we introduce MCTS [30,31,32] to formulate the action policy in DRL. By expanding the search tree, MCTS helps the system generate high-quality decision choices, enhancing the robot's navigation actions to be more forward-looking.

Specifically, as illustrated in Fig. 6, we initially treat the original Q-value estimation process as the first planning step. We then select the top \(w\) Q-values based on the original Q-value estimation, each corresponding to \(w\) optimal candidate actions. Subsequently, leveraging the current state and candidate actions, along with the environmental model, we plan the subsequent states and corresponding rewards to achieve a deeper level of planning. In this research, we utilize the crowd forecasting framework from relational graph learning (RGL) [32] to learn the environmental model from simulated trajectories. Furthermore, when the planning step is \(d\), the Q-value expansion is given by:

where the planning step \(d\) represents the search depth, which can balance the consideration of current and future states. The w denotes the search width. Increasing the tree's width enables MIBE-DRL to consider states more meticulously. Based on the expanded Q-values, actions with long-term planning can be achieved, imparting foresight to the action strategy. When \(d = 1\), the Q-value estimation degenerates to the original Q-value estimation result, at which point the action strategy lacks foresight. Compared to the original action strategy, the integration of MCTS endows the action strategy with more vital foresight, enabling better adaptation to dynamic and variable navigation environments.

Experiments

Simulation setup

The experimental environment for this study is established based on the CrowdNav simulation environment [14, 17]. The robot's navigation space is a square area of \({10} \times {10}\;{\text{m}}^{2}\), and the distance between the initial position and the goal is 8 m. The experiments are configured with two navigation scenarios: general dense and dense. In the general dense scenarios, the number of pedestrians \(N\) is set to 5, with their initial position and goal located on a circular path with a radius of 4 m. These pedestrians are controlled by the ORCA [6], allowing them to interact near the center of the circle around 4 s and reach their goals in approximately 8 s. In dense scenarios, the number of pedestrians \(N\) increases to 10. Apart from the five pedestrians with initial and target positions on the circular path, five additional pedestrians randomly sample their initial positions and goals within the navigation space. In the general dense or dense scenarios, pedestrians randomly reset a new goal within the square after reaching their respective goals, with the previous target position recorded as a turning point. Additionally, the robot is not visible to pedestrians. Thus, pedestrians do not have any avoidance strategies toward the robot. This necessitates the robot to have more anticipatory collision avoidance strategies.

Implementation details

Our experiments were built through the PyTorch framework, and the proposed MIBE-DRL was updated by Adam [38]. The learning rate was set to 0.001 during the experiments, and the discount factor \(\gamma\) was 0.9. The robot's preset velocity \(v_{pres.}\) was 1 m/s, and both the robot and pedestrians have a radius of 0.3 m. The safety distance \(d_{safe}\) between the robot and pedestrians was set to 0.2 m, and the radius \(d_{huma.}\) of the pedestrian's influence range was chosen as 3 m. The number of sectors \(U\) of the pedestrian interaction module was set to 12. In the reward system, the attraction potential field constant \(\delta\), the repulsion potential field constant \(\phi\), and the reward value \(\varsigma\) when the robot reaches the goal were all set to 1. The hyperparameter \(\zeta\) and the scaling factor for the continuous reward \(\vartheta\) were all 0.1. The penalty \(\tau\) was set to − 0.25. In the action policy, the width \(w\) and depth \(d\) of the MCTS were 2 and 5, respectively.

Quantitative evaluation

The proposed MIBE-DRL in this study extensively integrates the idea of combining data-driven and model-driven. It incorporates model-induced biases into network design, reward function construction, and action policy formulation in the deep reinforcement learning structure. To thoroughly assess the performance of the method, we conducted comparisons with six existing methods: ORCA [6], CADRL [12], long short-term memory reinforcement learning (LSTM-RL) [39], SARL [13], RGL [32], and SG-DQN [14]. Among them, ORCA is a classical model-driven method. CADRL is an early DRL-based navigation method that initially considers the interaction among agents using a shallow neural network. LSTM-RL incorporates long short-term memory (LSTM) [40] into the DRL framework, enabling a deeper understanding of the interactions among agents by focusing on the sequential information in the navigation environment. SARL incorporates the social attention mechanism into the DRL framework, taking into account the social aspects of pedestrians. RGL and SG-DQN adopt advanced graph convolutional network (GCN) [41] and GAT designs within the DRL framework, respectively, allowing for an adequate representation of the interactions among agents in crowded environments. Each method underwent 500 random tests in general and dense scenarios.

Throughout the experiments, metrics such as "Succ. rate" (success rate, probability of the robot reaching the goal without collisions), "Coll. rate" (collision rate, probability of collisions between the robot and humans), "Navi. time" (navigation time, time taken for the robot to reach the goal in seconds), "Safe. rate" (safety rate, ratio of steps where the robot maintains a safe social distance to the total steps), and "Stan. devi." (standard deviation of navigation times for successful navigation, representing the stability of the navigation strategy) were collected and reported in Tables 1 and 2.

Table 1 illustrates that ORCA exhibits the highest collision rate and performs relatively mediocrely regarding navigation time, safety rate, and navigation time standard deviation. This is because this model-driven approach is designed based on a direct understanding of navigation rules and cannot adequately consider the potential interactions among agents in dynamic environments. CADRL utilizes a shallow network to account for potential interactions in congested environments. It overlooks the mutual influence among pedestrians and lacks consideration in the temporal dimension, resulting in subpar navigation performance. LSTM-RL and SARL, utilizing long short-term memory (LSTM) and socially attentive network, respectively, for understanding potential interactions in navigation environments. Due to the excellent performance of these networks, their navigation performance outperforms CADRL with shallow networks. However, a considerable gap remains compared to MIBE-DRL, which incorporates model inductive bias. RGL and SG-DQN, while recognizing the potential influence among pedestrians in understanding pedestrian interactions, fail to capture the long-term dependency relationships of agent states in the temporal dimension. Consequently, their understanding of the interactions among agents in the navigation environment remains limited, leading to inferior navigation performance compared to MIBE-DRL.

Our MIBE-DRL, incorporating model inductive bias into deep reinforcement learning structures, comprehensively considers the interactions between pedestrians in the temporal and spatial dimensions, and the reward feedback mechanism and action policy of MIBE-DRL are also refined to a certain extent. As a result, MIBE-DRL has the highest navigation success rate, and the navigation time is reduced by at least 0.235 s. Additionally, MIBE-DRL demonstrates a navigation safety rate of 0.971 and a navigation time standard deviation of 1.603, both superior to all comparative methods, indicating that MIBE-DRL encourages the robot to maintain a socially comfortable distance from humans and exhibits a more stable navigation strategy. Overall, benefiting from the enhanced effects of model-induced biases, MIBE-DRL outperforms comparative methods in success rate, navigation time, safety rate, and navigation time standard deviation.

Table 2 reports the metrics for MIBE-DRL and comparative methods in dense scenarios. MIBE-DRL performs well, achieving a higher navigation success rate and a faster navigation time than the model-driven ORCA method. Furthermore, MIBE-DRL surpasses CADRL and LSTM-RL, two DRL-based methods, in success rate, navigation time, safety rate, and navigation time standard deviation. Notably, CADRL, relying on a shallow network to understand agent interactions, exhibits a higher collision rate than ORCA, indicating its poor adaptability to dense scenarios. Apart from a slightly lower navigation time standard deviation than RGL, MIBE-DRL outperforms RGL and SG-DQN in all metrics, demonstrating that MIBE-DRL provides an efficient, safe, and stable navigation strategy for robots in dense pedestrian environments, enabling the robot to reach the target quickly while successfully avoiding pedestrians.

Qualitative evaluation

To showcase qualitative experiment results, we depict the motion trajectories of robots driven by different methods in general dense and dense scenarios, recorded in Figs. 7 and 8. In these figures, a black line represents the robot’s trajectory, and pedestrian trajectories are depicted in other colored lines. Circles in the lines indicate integer-time positions, and numbers near the trajectory denote the time of that position, marked every 4 s, with a final time marking at the goal. Additionally, pedestrians’ initial and turning positions are marked with a pentagon and square, respectively. These figures serve as an effective tool for understanding the dynamics of robot navigation in the presence of pedestrians.

Qualitative experimental results of robot navigation in general dense scenarios. The black line in the figure represents the navigation trajectory of the robot, and the lines of different colors represent the trajectories of pedestrians. The numbers along the lines indicate the time the robot or pedestrians take to reach those positions, marked every 4 s, with time indicators also applied at the final positions. The initial positions of pedestrians are marked with a pentagon, and their turning positions are marked with a square

Qualitative experimental results of robot navigation in dense scenarios. The black line represents the robot’s trajectory, while the lines of other colors represent the pedestrians’ trajectories. The numbers around the lines indicate the time taken for the robot or pedestrians to reach those positions, marked every 4 s, with the final positions also marked with time indicators. Additionally, the initial and turning positions of the pedestrians are marked with a pentagon and a square, respectively

Figure 7 displays qualitative experiment results for navigation in general dense scenarios by various methods. Due to the limitations of model-driven methods in considering potential interactions among agents in dynamic environments, the ORCA exhibits longer navigation times and trajectories. In contrast, reinforcement learning methods show shorter navigation times than the model-driven ORCA. The CADRL tends to make significant turns only when very close to pedestrians, while the LSTM-RL method-driven robot begins turning around 1 s, resulting in better navigation performance. SARL, RGL, and SG-DQN method-driven robots achieve navigation times of 10.5 s, 10.5 s, and 10.0 s, respectively, significantly lower than ORCA, CADRL, and LSTM-RL, and they start turning around 1 s to avoid pedestrians. The proposed MIBE-DRL has an overall navigation time of 9.5 s, notably superior to the methods above, and its trajectory is smoother with no significant turns. Additionally, MIBE-DRL exhibits avoidance behavior towards pedestrians from the beginning.

Figure 8 illustrates qualitative experiment results for robot navigation in dense scenarios. Due to the limitations of model-driven methods, the ORCA experiences collisions during navigation. Both CADRL and LSTM-RL successfully drive the robot to the target position, but they make significant turns only around 3 s and 2 s, respectively, to avoid pedestrians. Furthermore, SARL, RGL, and SG-DQN methods achieve navigation times within 12 s, while the proposed MIBE-DRL takes only 10.2 s to reach the goal, faster than SARL, RGL, and SG-DQN by 1.6 s, 1.3 s, and 0.3 s, respectively. Overall, our method demonstrates excellent navigation performance in these scenarios.

Ablation study

A detailed ablation study was conducted to explore the enhancing effects of these components on robot navigation tasks in pedestrian environments, and the results are recorded in Tables 3 and 4. In the baseline of the ablation study, only fundamental spatial interactions between the robot and pedestrians are considered, and the robot is solely driven by an essential position reward function with no foresight in action selection. “Network,” “Reward,” and “Action” in tables represent the multi-interaction network, reward system, and action strategy, respectively, in MIBE-DRL, enhanced by model induction bias. Furthermore, the results for general dense and dense scenarios are depicted in Fig. 9a, b, respectively. In these figures, the left column represents the navigation results of MIBE-DRL without model induction bias enhancement, and the right column represents the navigation results of the proposed MIBE-DRL.

Comparison of robot navigation trajectories before and after model inductive bias enhancement. The black line in the figure is the navigation trajectory of the robot, while the lines of different colors are the trajectories of pedestrians. The numbers surrounding the lines indicate the time the robot or pedestrians take to reach those positions. Time markers are placed every 4 s along the trajectories, including the final positions. Additionally, the initial positions of pedestrians are marked with a pentagon, and their turning positions are marked with a square

Table 3 illustrates the enhancement effects of various model induction biases in general dense scenarios. Specifically, incorporating the multi-interaction network reduces the robot's navigation time by 0.693 s, and other metrics also show specific improvements. This suggests that careful consideration of interactions among intelligent agents in pedestrian environments enhances robot navigation performance. Introducing model induction bias into the reward function further enhances navigation performance, emphasizing the positive role of refining reward feedback mechanisms in improving robot navigation tasks. With the thorough integration of model induction bias into the deep reinforcement learning network, reward function, and action policy, the robot achieves optimal performance in all navigation aspects except the navigation time standard deviation, with the navigation time standard deviation being only 0.017 higher than the optimal value. This powerfully demonstrates model induction bias's positive impact on enhancing reinforcement learning's navigation performance. Table 4 also reflects similar experimental results. As the model induction bias is introduced separately into the network, reward function, and action policy, the robot's navigation time is reduced by 0.875 s, 0.194 s, and 0.788 s, respectively. Furthermore, there is an improvement in navigation success rate, navigation safety rate, and navigation time standard deviation. This indicates that introducing model induction bias significantly enhances the navigation performance of MIBE-DRL in dense scenarios.

As depicted in Fig. 9, introducing model induction bias leads to a noticeable reduction in robot navigation time, accompanied by smoother navigation trajectories without substantial turns. Further comparison of the trajectories reveals that MIBE-DRL without model induction bias enhancement tends to make turns only when close to pedestrians, whereas MIBE-DRL with model induction bias exhibits avoidance actions at earlier times. It can be seen that introducing model induction bias improves the navigation performance of MIBE-DRL, contributing positively to enhancing robot navigation performance in pedestrian environments.

Discussion

In this work, we introduce the model inductive biases into the design process of relevant components in DRL, including the value network, reward function, and action policy, which allows these components to benefit from the model inductive biases, leading to improvements in three key aspects: understanding agent interactions, feedback mechanism design, and action foresight. Our work not only enhances the navigation efficiency and safety of robots but also serves as a compelling example of how incorporating model inductive biases can elevate the performance of DRL.

However, the introduction of model-induced biases into DRL has its challenges. One key challenge is effectively embedding the model's inductive bias into the DRL framework, which demands meticulous algorithm architecture design. Second, it is a challenge to determine whether model inductive biases align with the specific tasks or environments. Different tasks and environments may require different rules and knowledge, necessitating the careful selection of models to ensure their effectiveness and applicability. Lastly, introducing model-induced biases may increase the complexity of DRL, underscoring the need to strike a balance between method complexity and effectiveness, a crucial consideration in practical operations.

Furthermore, our work has certain limitations that need further improvement and optimization. First, although MIBE-DRL achieves the highest navigation success rate compared to state-of-the-art methods and has advantages in maintaining a safe social distance from humans, there is still a certain probability of collision. In practical environments, collisions can be dangerous and lead to significant losses, even if the probability is minimal. Second, while MIBE-DRL benefits from the learning capabilities of data-driven methods and the inductive biases of models, the fusion of these operations increases the complexity of the method. Finally, the complexity of real-world navigation environments could present even more significant challenges for mobile robots, and the experimental scenarios in this study are relatively structured.

In our future research, we will strongly emphasize the safety of robot navigation tasks, aiming to reduce the probability of collisions significantly. We will also optimize the complexity of navigation methods and validate them in more unstructured and complex scenarios. Additionally, we will explore the integration of DRL and models in a broader range of robot tasks, thereby expanding the applicability and impact of our research.

Conclusion

This study introduces MIBE-DRL by introducing the model inductive biases into network design, reward function construction, and action policy formulation of DRL to enhance DRL-based navigation in crowded environments. The multi-interaction network in MIBE-DRL, encompassing pedestrian, temporal, and spatial modules, provides a nuanced understanding of interactions among agents. A reward system that comprehensively considers the robot's orientation and position factors allows for reasoned evaluations of its motion direction and position during training, enabling it to receive timely and informative feedback. The inclusion of MCTS promotes foresighted action strategies. Experimental results highlight the effectiveness of model induction biases, positioning MIBE-DRL with superior success rates, navigation times, and social distance maintenance compared to existing methods.

Data availability

The data supporting the findings of this study are available within the article.

References

Khatib O (1986) Real-time obstacle avoidance for manipulators and mobile robots. Int J Robot Res 5:90–98. https://doi.org/10.1177/027836498600500106

Abdalla TY, Abed AA, Ahmed AA (2017) Mobile robot navigation using PSO-optimized fuzzy artificial potential field with fuzzy control. IFS 32:3893–3908. https://doi.org/10.3233/IFS-162205

Orozco-Rosas U, Montiel O, Sepúlveda R (2019) Mobile robot path planning using membrane evolutionary artificial potential field. Appl Soft Comput 77:236–251. https://doi.org/10.1016/j.asoc.2019.01.036

Helbing D, Molnár P (1995) Social force model for pedestrian dynamics. Phys Rev E 51:4282–4286. https://doi.org/10.1103/PhysRevE.51.4282

Van Den Berg J, Lin M, Manocha D (2008) Reciprocal velocity obstacles for real-time multi-agent navigation. 2008 IEEE international conference on robotics and automation. IEEE, Pasadena, pp 1928–1935

Van Den Berg J, Guy SJ, Lin M, Manocha D (2011) Reciprocal n-body collision avoidance. In: Pradalier C, Siegwart R, Hirzinger G (eds) Robotics research. Springer, Berlin, pp 3–19

Alahi A, Goel K, Ramanathan V, Robicquet A, Fei-Fei L, Savarese S (2016) Social LSTM: human trajectory prediction in crowded spaces. 2016 IEEE conference on computer vision and pattern recognition (CVPR). IEEE, Las Vegas, pp 961–971

Katyal KD, Hager GD, Huang C-M (2020) Intent-aware pedestrian prediction for adaptive crowd navigation. 2020 IEEE international conference on robotics and automation (ICRA). IEEE, Paris, pp 3277–3283

Sun J, Jiang Q, Lu C (2020) Recursive social behavior graph for trajectory prediction. 2020 IEEE/CVF conference on computer vision and pattern recognition (CVPR). IEEE, Seattle, pp 657–666

He Z, Sun H, Cao W, He HZ (2022) Multi-level context-driven interaction modeling for human future trajectory prediction. Neural Comput Appl 34:20101–20115. https://doi.org/10.1007/s00521-022-07562-1

Mnih V, Kavukcuoglu K, Silver D, Rusu AA, Veness J, Bellemare MG, Graves A, Riedmiller M, Fidjeland AK, Ostrovski G, Petersen S, Beattie C, Sadik A, Antonoglou I, King H, Kumaran D, Wierstra D, Legg S, Hassabis D (2015) Human-level control through deep reinforcement learning. Nature 518:529–533. https://doi.org/10.1038/nature14236

Chen YF, Liu M, Everett M, How JP (2017) Decentralized non-communicating multiagent collision avoidance with deep reinforcement learning. 2017 IEEE international conference on robotics and automation (ICRA). IEEE, Singapore, pp 285–292

Chen C, Liu Y, Kreiss S, Alahi A (2019) Crowd-robot interaction: crowd-aware robot navigation with attention-based deep reinforcement learning. 2019 international conference on robotics and automation (ICRA). IEEE, Montreal, pp 6015–6022

Zhou Z, Zhu P, Zeng Z, Xiao J, Lu H, Zhou Z (2022) Robot navigation in a crowd by integrating deep reinforcement learning and online planning. Appl Intell 52:15600–15616. https://doi.org/10.1007/s10489-022-03191-2

Veličković P, Cucurull G, Casanova A, Romero A, Liò P, Bengio Y (2018) Graph attention networks. arXiv:1710.10903

Wang Z, Schaul T, Hessel M, van Hasselt H, Lanctot M, de Freitas N (2016) Dueling network architectures for deep reinforcement learning. arXiv:1511.06581

Martinez-Baselga D, Riazuelo L, Montano L (2023) Improving robot navigation in crowded environments using intrinsic rewards. 2023 IEEE international conference on robotics and automation (ICRA). IEEE, London, pp 9428–9434

Wang T, Peng X, Wang T, Liu T, Xu D (2024) Automated design of action advising trigger conditions for multiagent reinforcement learning: a genetic programming-based approach. Swarm Evol Comput 85:101475. https://doi.org/10.1016/j.swevo.2024.101475

Zhang L, Hou Z, Wang J, Liu Z, Li W (2023) Robot navigation with reinforcement learned path generation and fine-tuned motion control. IEEE Robot Autom Lett 8:4489–4496. https://doi.org/10.1109/LRA.2023.3284354

Zhou SK, Le HN, Luu K, Nguyen VH, Ayache N (2021) Deep reinforcement learning in medical imaging: a literature review. Med Image Anal 73:102193. https://doi.org/10.1016/j.media.2021.102193

Lyu J, Zhang Y, Huang Y, Lin L, Cheng P, Tang X (2022) AADG: automatic augmentation for domain generalization on retinal image segmentation. IEEE Trans Med Imaging 41:3699–3711. https://doi.org/10.1109/TMI.2022.3193146

Liao X, Shi J, Li Z, Zhang L, Xia B (2020) A model-driven deep reinforcement learning heuristic algorithm for resource allocation in ultra-dense cellular networks. IEEE Trans Veh Technol 69:983–997. https://doi.org/10.1109/TVT.2019.2954538

Wang Y, Jia Y, Zhong Y, Huang J, Xiao J (2023) Balanced incremental deep reinforcement learning based on variational autoencoder data augmentation for customer credit scoring. Eng Appl Artif Intell 122:106056. https://doi.org/10.1016/j.engappai.2023.106056

Liu T, Chen H, Hu J, Yang Z, Yu B, Du X, Miao Y, Chang Y (2024) Generalized multi-agent competitive reinforcement learning with differential augmentation. Expert Syst Appl 238:121760. https://doi.org/10.1016/j.eswa.2023.121760

Chen Z, Li J, Wu J, Chang J, Xiao Y, Wang X (2022) Drift-proof tracking with deep reinforcement learning. IEEE Trans Multimed 24:609–624. https://doi.org/10.1109/TMM.2021.3056896

Wang S, Khan A, Lin Y, Jiang Z, Tang H, Alomar SY, Sanaullah M, Bhatti UA (2023) Deep reinforcement learning enables adaptive-image augmentation for automated optical inspection of plant rust. Front Plant Sci 14:1142957. https://doi.org/10.3389/fpls.2023.1142957

Cai W, Wang T, Wang J, Sun C (2023) Learning a world model with multitimescale memory augmentation. IEEE Trans Neural Netw Learn Syst 34:8493–8502. https://doi.org/10.1109/TNNLS.2022.3151412

Pfeiffer M, Paolo G, Sommer H, Nieto J, Siegwart R, Cadena C (2018) A data-driven model for interaction-aware pedestrian motion prediction in object cluttered environments. 2018 IEEE international conference on robotics and automation (ICRA). IEEE, Brisbane, pp 1–8

Dey R, Salem FM (2017) Gate-variants of gated recurrent unit (GRU) neural networks. 2017 IEEE 60th international Midwest symposium on circuits and systems (MWSCAS). IEEE, Boston, pp 1597–1600

Lu Q, Tao F, Zhou S, Wang Z (2021) Incorporating actor-critic in Monte Carlo tree search for symbolic regression. Neural Comput Appl 33:8495–8511. https://doi.org/10.1007/s00521-020-05602-2

Hong H, Jiang M, Yen GG (2023) Improving performance insensitivity of large-scale multiobjective optimization via Monte Carlo tree search. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2023.3265652

Chen C, Hu S, Nikdel P, Mori G, Savva M (2020) Relational graph learning for crowd navigation. 2020 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, Las Vegas, pp 10007–10013

Arulkumaran K, Deisenroth MP, Brundage M, Bharath AA (2017) Deep reinforcement learning: a brief survey. IEEE Signal Process Mag 34:26–38. https://doi.org/10.1109/MSP.2017.2743240

Liu S, Chang P, Huang Z, Chakraborty N, Hong K, Liang W, McPherson DL, Geng J, Driggs-Campbell K (2023) Intention aware robot crowd navigation with attention-based interaction graph. 2023 IEEE international conference on robotics and automation (ICRA). IEEE, London, pp 12015–12021

Wang W, Wu Z, Luo H, Zhang B (2022) Path planning method of mobile robot using improved deep reinforcement learning. J Electr Comput Eng 2022:1–7. https://doi.org/10.1155/2022/5433988

Zhou Y, Shu J, Hao H, Song H, Lai X (2024) UAV 3D online track planning based on improved SAC algorithm. J Braz Soc Mech Sci Eng 46:12. https://doi.org/10.1007/s40430-023-04570-7

Zheng L, Wang Y, Yang R, Wu S, Guo R, Dong E (2023) An efficiently convergent deep reinforcement learning-based trajectory planning method for manipulators in dynamic environments. J Intell Robot Syst 107:50. https://doi.org/10.1007/s10846-023-01822-5

Kingma DP, Ba J (2017) Adam: a method for stochastic optimization. arXiv:1412.6980

Everett M, Chen YF, How JP (2018) Motion planning among dynamic, decision-making agents with deep reinforcement learning. 2018 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, Madrid, pp 3052–3059

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9:1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735

Kipf TN, Welling M (2017) Semi-supervised classification with graph convolutional networks. arXiv:1609.02907

Acknowledgements

The authors would like to thank the anonymous reviewers and editors for their selfless help to improve our manuscript.

Funding

This research received no external funding.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, M., Huang, Y., Wang, W. et al. Model inductive bias enhanced deep reinforcement learning for robot navigation in crowded environments. Complex Intell. Syst. (2024). https://doi.org/10.1007/s40747-024-01493-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40747-024-01493-1