Abstract

This paper addresses individual consistency and group consensus in decision making with hesitant fuzzy preference relations (HFPRs). The worst consistency index (WCI) is used to measure the individual consistency level. The envelope of an HFPR (EHFPR) is proposed for use in the consensus reaching process (CRP). Two algorithms are proposed. The first improves the WCI by revising only one pair of elements in each iteration, which preserves the decision makers’ (DMs’) original information as much as possible. The second improves the consensus in the CRP. To aggregate individual EHFPRs into one group HFPR, a new induced ordered weighted averaging (IOWA) operator, called envelope HFPR-IOWA (EHFPR-IOWA), is presented; it aggregates the experts' preferences in such a way that the most consistent ones are given more weight. Finally, an illustrative example and comparisons with existing methods are provided to show the effectiveness of the proposed method.

Introduction

In the actual decision-making process, a decision maker (DM) is usually required to provide preference values over a set of alternatives. The preference relation is a useful tool for expressing DMs’ preferences; it has been widely used in decision making and has received much attention over the past decades. Many types of preference relations have been proposed, such as the fuzzy preference relation (FPR) [1,2,3], multiplicative preference relation [4, 5], linguistic preference relation [6, 7], interval-valued preference relation [8], and intuitionistic preference relation [9,10,11]. Fuzzy sets are widely used [12], but they allow only one value to express the DM’s preference. To overcome this limitation, Torra [13] defined the hesitant fuzzy set (HFS), which employs several values to express the membership degree of an element and has since been widely investigated [14, 15]. Based on the concepts of HFS and FPR, Xia and Xu [16] defined the hesitant fuzzy preference relation (HFPR).

Consistency plays an essential role in decision making because it guarantees that the information provided by the DMs is rational [17,18,19]. In an HFPR, each hesitant fuzzy element (HFE) is a set of preference values that denote the hesitant degrees to which one alternative is preferred over another. If two HFEs have different numbers of values, two opposite normalization principles can be applied: (1) α-normalization [20], which removes elements from the longer HFE, and (2) β-normalization [21, 22], which adds elements to the shorter HFE. Zhu [23] proposed α-normalization and β-normalization for HFPRs. Subsequently, an FPR with a high consistency level was obtained using α-normalization and a regression method, and its consistency level was taken as the consistency level of the HFPR. Zhu et al. [22] first applied the α-normalization and β-normalization methods and then used a distance measure to quantify the consistency of the HFPR, i.e., the distance between the original HFPR and the HFPR that achieved acceptable consistency. Xu et al. [24] proposed an estimation-measure normalization method to measure consistency based on additive consistency. Zhang et al. [21] used the β-normalization method to convert all the HFEs in the original HFPR to the same length, measured the consistency through a distance, and proposed an automatic iterative algorithm to improve the consistency.

Consensus is a significant problem that has been investigated widely in recent years. Currently, consensus reaching processes (CRPs) include interactive CRPs [24,25,26,27,28] and automatic CRPs [29, 30]. Gathering preferences, computing the agreement level, consensus control, and feedback generation are the primary aspects of an iteration-based CRP [31]. In gathering preferences, the ordered weighted average (OWA) operator and the induced ordered weighted average (IOWA) operator are widely used to aggregate preference relations into a collective one. Chen et al. [32] proposed an improved OWA operator generation algorithm and applied it to multicriteria decision-making. Jin et al. [33] proposed some standard and general forms of the IOWA operator, which take the OWA weight vector as the inductive information. In the CRP, consensus checking and consensus improvement are two important processes. When DMs make decisions on the same issue, one needs to check whether their opinions satisfy the consensus requirements. If they do not, the moderator guides the experts to change their preferences until consensus is achieved. Chen et al. [34] used a large-scale group decision-making method, k-means clustering, and a consensus reaching process to determine the final satisfaction level and ranking of passenger demands. Xu et al. [24] proposed an interaction mechanism and automatic mechanisms to achieve the predetermined consistency and consensus through a normalization approach based on additive consistency. He and Xu [35] proposed a consensus model implemented by a selection process and a consensus improvement process. Li et al. [36] proposed a consensus measure based on extracting priority weight vectors and constructed a model to reach the predetermined consensus. Xu et al. [37] proposed a group decision making (GDM) model that dynamically and automatically adjusts the weights of the decision makers and uses an iterative consensus algorithm to improve the group consensus degree. The algorithm stops when both the individual consistency index and the group consensus index meet their thresholds. Wu and Xu [38] proposed a reciprocal preference relation-based consensus support model for GDM, designed a consistency adjustment process to bring inconsistent reciprocal preference relations to acceptable consistency, and used an interactive method to carry out the consensus reaching process. Zhang et al. [39] developed a model to improve the consistency index, but it did not consider the consensus problem.

Based on the above literature review, there are still some limitations:

(1) The papers [20,21,22,23,24,25] use α-normalization, β-normalization, or other normalization methods to calculate the consistency and consensus of HFPRs. These methods force all HFEs to have the same number of elements. However, they overlook the fact that normalization distorts the DMs’ original information or causes information loss, which makes the decision result inaccurate.

(2) In the study of consistency and consensus under HFPRs, some scholars considered only consistency and ignored consensus, while others adjusted the consensus index based on dynamic expert contributions in GDM without considering the consistency index. The consensus achieved by such adjustment may not be accurate enough because it ignores the validity of the preference information provided by individuals. Some studies investigated both consistency and consensus, but they do not make clear whether the final individual consistency reaches an acceptable level. They ignored the consistency index during consensus adjustment, so the consistency index may decrease, or even become unacceptable, by the time consensus is reached.

To overcome these limitations, this paper uses a non-normalization method to study the consistency and consensus of HFPRs, and on this basis proposes two algorithms to improve consistency and consensus. Specifically, the main work of the paper is twofold:

(1) The worst consistency index (WCI) is used to measure the consistency degree of an HFPR. This paper uses a 0–1 linear programming model [40, 41] to obtain the WCI of an HFPR. An iterative algorithm is proposed to improve the WCI. In each iteration, only the one pair of preference values that is farthest from the consistent FPR is revised. In this way, the original information of the DMs is preserved as much as possible.

(2) As different HFEs have different numbers of values, it is hard to aggregate individual HFPRs into a group HFPR. To solve this problem, the envelope of an HFPR is proposed, a new IOWA operator called envelope HFPR-IOWA (EHFPR-IOWA) is presented, and the CRP is then carried out. The results indicate that consensus can be reached while preserving the DMs’ original information as much as possible.

The rest of the paper is organized as follows. Section “Preliminaries” introduces some basic knowledge related to HFPRs. Section “Individual consistency of HFPRs” introduces the definition of the WCI, presents a 0–1 programming model to obtain the WCI, and proposes an algorithm to improve the WCI. In section “Consensus building for HFPRs”, a CRP algorithm is devised to help the DMs reach consensus. In section “An illustrative example and comparative analysis”, some numerical examples and a comparative analysis are provided to illustrate the effectiveness of the proposed models. Finally, some conclusions are drawn in section “Conclusion”.

Preliminaries

For the sake of completeness, some basic concepts are reviewed.

FPRs

FPRs are the most common tools to express DMs’ preferences over alternatives and widely used in decision-making. The definition of FPRs can be represented as follows.

Definition 1

([42]). Let \(X = \{ x_{1} ,x_{2} ,...,x_{n} \}\) be a finite set of alternatives and \(N = \{ 1,2,...,n\}\). An FPR on X is represented by a matrix \(R = (r_{ij} )_{n \times n} \subset X \times X\) in which \(r_{ij} = \mu (x_{i} ,x_{j} ):X \times X \to\) \([0,1]\), with R assumed to be reciprocal in the following sense: \(r_{ij} + r_{ji} = 1\) and \(r_{ii} = 0.5\) for all \(i,j \in N\).

\(r_{ij}\) represents the degree or intensity of preference of the alternative \(x_{i}\) over \(x_{j}\): \(r_{ij}\) = 1/2 indicates indifference between \(x_{i}\) and \(x_{j}\), \(r_{ij}\) = 1 indicates that \(x_{i}\) is absolutely preferred to \(x_{j}\), and \(r_{ij}\) > 1/2 indicates that \(x_{i}\) is preferred to \(x_{j}\). DMs only need to provide preferences for the upper triangular positions; the remaining elements can be obtained from the reciprocal property.

Definition 2

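([3, 42]). An FPR \(R = (r_{ij} )_{n \times n}\) is additively consistent if the following additive transitivity is satisfied:

\(r_{ij} = r_{ik} - r_{jk} + 0.5,\quad \forall \, i,j,k \in N.\) (1)

It shows that for a consistent reciprocal preference relation, the difference between any two rows is a constant. Since \(r_{kk} = 0.5\), Eq. (1) can be written as \(r_{ij} = r_{ik} - r_{jk} + r_{kk}\). Summing both sides over all k ∈ N and dividing by n, one obtains

\(r_{ij} = \frac{1}{n}\sum\nolimits_{k = 1}^{n} {(r_{ik} - r_{jk} )} + 0.5.\) (2)

If R is a consistent reciprocal FPR, Eqs. (1) and (2) are equivalent. For any reciprocal FPR \(R = (r_{ij} )_{n \times n}\), one can use Eq. (2) to construct a consistent FPR \(A = (a_{ij} )_{n \times n}\), where

\(a_{ij} = \frac{1}{n}\sum\nolimits_{k = 1}^{n} {(r_{ik} - r_{jk} )} + 0.5.\) (3)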

It means that A is additively consistent. If R is not a consistent reciprocal FPR, some elements \(a_{ij}\) may fall outside [0,1] but lie in [− q, 1 + q], where q > 0. In such a case, Herrera-Viedma et al. [3] proposed a method to transform the matrix \(A = (a_{ij} )_{n \times n}\) into another matrix \(A^{\prime} = (a^{\prime}_{ij} )_{n \times n}\), where \(a^{\prime}_{ij} = (a_{ij} + q)/(1 + 2q)\).

\(A^{\prime}\) is an FPR with additive consistency, and \(a^{\prime}_{ij} \in [0,1]\).
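The construction just described can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes the row-mean form \(a_{ij} = \frac{1}{n}\sum_{k}(r_{ik} - r_{jk}) + 0.5\) obtained by summing the additive transitivity condition over k, and it assumes that the rescaling of [3] takes the form \((a_{ij} + q)/(1 + 2q)\). The function names and the matrix `R` are hypothetical.

```python
def consistent_fpr(r):
    """Build A with a_ij = (1/n) * sum_k (r_ik - r_jk) + 0.5 (assumed form of Eq. (3))."""
    n = len(r)
    row_mean = [sum(row) / n for row in r]
    return [[row_mean[i] - row_mean[j] + 0.5 for j in range(n)] for i in range(n)]


def rescale_to_unit_interval(a):
    """Map entries lying in [-q, 1 + q] into [0, 1] via (a_ij + q) / (1 + 2q) (assumed form)."""
    lowest = min(min(row) for row in a)
    highest = max(max(row) for row in a)
    q = max(0.0, -lowest, highest - 1.0)  # smallest q such that all entries lie in [-q, 1 + q]
    return [[(x + q) / (1 + 2 * q) for x in row] for row in a]


# Hypothetical reciprocal FPR (r_ij + r_ji = 1, r_ii = 0.5); not taken from the paper.
R = [
    [0.5, 0.9, 0.9],
    [0.1, 0.5, 0.9],
    [0.1, 0.1, 0.5],
]
A = consistent_fpr(R)                  # A[0][2] exceeds 1, so rescaling is needed
A_prime = rescale_to_unit_interval(A)  # all entries back in [0, 1]
```

Because the rescaling is affine and maps 0.5 to 0.5, reciprocity and additive transitivity survive it, which is why \(A^{\prime}\) remains an additively consistent FPR.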

Based on Definition 2, Wu et al. [43] defined the additive CI of an FPR R as follows.

Definition 3

([43]). Let \(R = (r_{ij} )_{n \times n} \subset X \times X\) be an FPR, then the CI(R) is

Obviously, the higher the value of CI(R), the more consistent R is. If CI(R) = 1, then R is perfectly consistent. However, the initial preferences expressed by the DM rarely guarantee perfect consistency, so a threshold \(\overline{CI}\) is set beforehand. If the current consistency level is lower than the threshold, i.e., \(CI(R) < \overline{CI}\), the DM needs to revise their preferences. If \(CI(R) \ge \overline{CI}\), acceptable consistency is achieved and the decision result given by the DM is reasonable.
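The threshold test can be sketched as follows. The exact expression of CI(R) is not reproduced in this text, so the sketch assumes a commonly used additive form, \(CI(R) = 1 - \frac{4}{n(n-1)(n-2)}\sum_{i<j<k} |r_{ij} + r_{jk} - r_{ik} - 0.5|\) for n ≥ 3; this normalization is an assumption and should be checked against [43]. The names `consistency_index`, `needs_revision` and the matrix `R` are hypothetical.

```python
from itertools import combinations


def consistency_index(r):
    """Assumed CI: 1 - 4 / (n(n-1)(n-2)) * sum over i<j<k of |r_ij + r_jk - r_ik - 0.5|."""
    n = len(r)  # requires n >= 3
    total = sum(
        abs(r[i][j] + r[j][k] - r[i][k] - 0.5)
        for i, j, k in combinations(range(n), 3)
    )
    return 1 - 4 * total / (n * (n - 1) * (n - 2))


def needs_revision(r, threshold=0.9):
    """True when CI(R) is below the predefined threshold."""
    return consistency_index(r) < threshold


# Hypothetical reciprocal FPR; not taken from the paper.
R = [
    [0.5, 0.9, 0.9],
    [0.1, 0.5, 0.9],
    [0.1, 0.1, 0.5],
]
ci = consistency_index(R)
revise = needs_revision(R)
```

Under this assumed form, an additively consistent FPR has every triple deviation equal to zero and therefore CI = 1.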

HFSs and HFPRs

Due to the complexity of the decision problems and the lack of expertise of DMs, they may hesitate in decision-making process, and give several preference values. To address this situation, Torra [13] introduced the concept of HFSs.

Definition 4

([13]). Let X be a fixed set. An HFS on X is defined in terms of a function h that, when applied to X, returns a subset of [0,1].

For ease of understanding, Xia and Xu [44] expressed the HFS by the mathematical symbol \(E = \{ < x,h_{E} (x) > |x \in X\}\),

where \(h_{E} (x)\) is a set of values in [0,1], which denotes the possible membership degrees of the element \(x \in X\) to the set E. For convenience, \(h_{E} (x)\) is called a hesitant fuzzy element (HFE).

Xia and Xu [16] combined HFS with FPR and defined the HFPR. Later, Xu et al. [24] revised their definition that the elements do not need to be sorted in ascending or descending order.

Definition 5

([24]). Let \(X = \{ x_{1} ,x_{2} ,...,x_{n} \}\) be a fixed set, then an HFPR H on X is presented by a matrix \(H = (h_{ij} )_{n \times n} \subset X \times X\) where \(h_{ij} = \{ h_{ij}^{s} |s = 1,2,...,\# h_{ij} \}\) (\(\# h_{ij}\) is the number of elements in \(h_{ij}\)) is an HFE indicating all the possible preference degree(s) of the alternative \(x_{i}\) over \(x_{j}\). Moreover, \(h_{ij}\) should satisfy the following conditions:

where \(h_{ij}^{s}\) and \(h_{ji}^{s}\) are the sth elements in \(h_{ij}\) and \(h_{ji}\), respectively.

In the process of decision-making, DMs may be unsure of a single value and hesitate among several values. The concept of hesitancy degree is defined as follows.

Definition 6

([45]). Let h be an HFS on \(X = \{ x_{1} ,x_{2} ,...,x_{n} \}\), and for any \(x_{i} \in X\), let \(l(h(x_{i} ))\) be the length of \(h(x_{i} )\). Denote

\(Hd(h(x_{i} ))\) is called the hesitancy degree of \(h(x_{i} )\), and Hd(H) the hesitancy degree of H. The larger the value of Hd(H), the more hesitant the DM. If Hd(H) = 1, the DM is completely hesitant and finds it difficult to determine the membership value.

Definition 7

([46]). Let \(h_{i}\) (i = 1, 2, …, n) be a collection of HFS, and let \(h^{ + } = \mathop {\max }\limits_{{h_{i} \in h}} \left( {\{ h_{i} \} } \right)\), \(h^{ - } = \mathop {\min }\limits_{{h_{i} \in h}} \left( {\{ h_{i} \} } \right)\) and \(env(h) = [h^{ - } ,h^{ + } ]\). Then \(h^{ - }\), \(h^{ + }\) and env(h) are, respectively, called the lower bound, the upper bound and the envelope of h.

Example 1.

Let \(h = \{ 0.2,0.3,0.4,0.5,0.6\}\) be an HFS. Then \(h^{ - } = \min \{ 0.2,0.3,0.4,0.5,0.6\} = 0.2\), \(h^{ + } = \max \{ 0.2,0.3,0.4,0.5,0.6\} = 0.6\), and its envelope is \(env(h) = [0.2,0.6]\).

Definition 8

([47]). Let \(h_{1} = [h_{1}^{ - } ,h_{1}^{ + } ]\) and \(h_{2} = [h_{2}^{ - } ,h_{2}^{ + } ]\), then the degree of possibility of \(h_{1} \ge h_{2}\) is formulated by

and, for intervals \(h_{1} ,h_{2} ,...,h_{n}\), one can construct an FPR \(P = (p_{ij} )_{n \times n}\) where \(p_{ij} = p(h_{i} \ge h_{j} )\), \(p_{ij} \ge 0\), \(p_{ij} + p_{ji} = 1\), \(p_{ii} = 0.5\), i, j = 1, 2, …, n.

IOWA aggregation operator

Let \(X = \{ x_{1} ,...,x_{n} \}\) be a finite set of n alternatives and \(E = \{ e_{1} ,...,e_{m} \}\) be a set of m DMs. \(H_{v} = (h_{ij,v} )_{n \times n}\) is an HFPR matrix given by DM \(e_{v} \in E\), \(v = 1,2,...,m\), where \(h_{ij,v}\) represents \(e_{v}\)’s preference degree of the alternative \(x_{i}\) over \(x_{j}\).

Yager [48] proposed a procedure to evaluate the overall satisfaction of Q important (\(u_{v}\)) criteria (or experts) (\(e_{v}\)) by the alternative \(x_{j}\). In this procedure, once the satisfaction values to be aggregated have been ordered, the weighting vector associated with an OWA operator using a linguistic quantifier Q is calculated by the following expression

being \(T = \sum\nolimits_{v = 1}^{m} {u_{\sigma (v)} }\) the total sum of importance, and σ the permutation used to produce the ordering of the values to be aggregated. In our case, the consistency levels of the HFPRs are used to derive the “importance” values associated with the experts.

The IOWA operator was introduced by Yager and Filev [49] as an extension of the OWA operator to allow for a varied sequencing of the aggregated values.

Definition 9

([49]). An IOWA operator of dimension n is a function \(\Phi_{W} :(R \times R)^{n} \to R\), to which a set of weights is associated, \(W = (w_{1} ,...,w_{n} )^{T}\) with \(w_{i} \in [0,1]\), \(\sum\nolimits_{i} {w_{i} = 1}\), and it is defined to aggregate the set of second arguments of a list of n two-tuples \(\{ < u_{1} ,p_{1} > ,..., < u_{n} ,p_{n} > \}\). The expression is as follows:

being σ a permutation of \(\{ 1,2,...,n\}\) such that \(u_{\sigma (i)} \ge u_{\sigma (i + 1)}\),\(\forall \, i = 1,...,n - 1\), i.e., \(< u_{\sigma (i)} ,p_{\sigma (i)} >\) is the two-tuple with \(u_{\sigma (i)}\), the ith highest value in the set \(\{ u_{1} ,...,u_{n} \}\).

In the above definition, the reordering of the set of values to be aggregated \(\{ p_{1} ,...,p_{n} \}\) is induced by the reordering of the set of values \(\{ u_{1} ,...,u_{n} \}\) associated with them, which is based upon their magnitude. Due to this use of the set of values \(\{ u_{1} ,...,u_{n} \}\), Yager called them the values of an order inducing variable and \(\{ p_{1} ,...,p_{n} \}\) the values of the argument variable [48,49,50,51].

Yager [48] considers the parameterized family of regular increasing monotone quantifiers \(Q(r) = r^{a}\), \(a \ge 0\).

In general, when a fuzzy quantifier Q is used to compute the weight of IOWA operator.

Individual consistency of HFPRs

In this section, the WCI of an HFPR is introduced, and a method is proposed to obtain the FPR that attains the WCI of the HFPR. Then, an iterative algorithm is used to adjust this matrix until the predefined threshold is reached.

Worst consistency of HFPRs

For an HFPR H, the set \(\Omega_{H}\) of all possible FPRs can be represented as:

Since \(\# h_{ij}\) is the number of elements in \(h_{ij}\), where \(1 \le i < j \le n\), there are \(\prod\nolimits_{i = 1}^{n - 1} {\prod\nolimits_{j = i + 1}^{n} {\# h_{ij} } }\) possible FPRs in \(\Omega_{H}\). For convenience, let \(l = \prod\nolimits_{i = 1}^{n - 1} {\prod\nolimits_{j = i + 1}^{n} {\# h_{ij} } }\) and let all the possible FPRs be denoted by \(B^{q} = (b_{ij}^{q} )_{n \times n}\) \((q = 1,2,...,l)\).

First, the definition of the WCI of an HFPR is as follows:

Definition 10

([39]). Let H be an HFPR and let \(\Omega_{H}\) be the collection of all the possible FPRs associated with H. Then the WCI of H is \(WCI(H) = \mathop {\min }\limits_{{B \in \Omega_{H} }} CI(B)\).

WCI(H) is determined by the FPR with the lowest CI in \(\Omega_{H}\). It therefore provides a lower bound on the consistency level of an HFPR H. In addition, the larger the value of WCI(H), the more consistent H is.
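For small HFPRs, the WCI can be illustrated by plain enumeration of \(\Omega_{H}\). The sketch below is a brute-force stand-in, not the 0–1 programming model used in the paper, and it again assumes the CI form \(1 - \frac{4}{n(n-1)(n-2)}\sum_{i<j<k} |b_{ij} + b_{jk} - b_{ik} - 0.5|\). The HFPR `H_demo` (upper-triangular HFEs keyed by position) and all function names are hypothetical.

```python
from itertools import combinations, product


def consistency_index(b):
    """Assumed CI: 1 - 4 / (n(n-1)(n-2)) * sum over i<j<k of |b_ij + b_jk - b_ik - 0.5|."""
    n = len(b)
    total = sum(
        abs(b[i][j] + b[j][k] - b[i][k] - 0.5)
        for i, j, k in combinations(range(n), 3)
    )
    return 1 - 4 * total / (n * (n - 1) * (n - 2))


def worst_consistency(n, h, tol=1e-12):
    """Enumerate every FPR contained in the HFPR and return (WCI, list of FPRs attaining it)."""
    pairs = sorted(h)  # upper-triangular positions (i, j) with i < j
    wci, worst = None, []
    for choice in product(*(h[p] for p in pairs)):
        b = [[0.5] * n for _ in range(n)]
        for (i, j), value in zip(pairs, choice):
            b[i][j] = value
            b[j][i] = 1 - value  # reciprocity fills the lower triangle
        ci = consistency_index(b)
        if wci is None or ci < wci - tol:
            wci, worst = ci, [b]
        elif abs(ci - wci) <= tol:
            worst.append(b)
    return wci, worst


# Hypothetical 3 x 3 HFPR with 2 * 1 * 2 = 4 possible FPRs; not taken from the paper.
H_demo = {(0, 1): [0.3, 0.5], (0, 2): [0.6], (1, 2): [0.4, 0.7]}
wci, worst_fprs = worst_consistency(3, H_demo)
```

Enumeration grows as the product of the HFE lengths, so it is only practical for small cases; that growth is one reason an optimization model is preferable in general.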

In the actual decision-making process, due to differences in the knowledge background and technical ability of the DMs, the decision-making result of the DMs may not be the optimal solution. Therefore, based on Definition 10, Zhang et al. [39] provided a method to calculate WCI(H) as follows:

By introducing variables \(g_{ijk} = b_{ij} + b_{jk} - b_{ik} - 0.5\), \(|g_{ijk} | = f_{ijk}\), model (12) can be equivalently transformed into the following model:

Example 2.

Let H be an HFPR, which is shown as follows:

Based on \(l = \prod\nolimits_{i = 1}^{n - 1} {\prod\nolimits_{j = i + 1}^{n} {\# h_{ij} } }\), one can see that there are 72 possible FPRs in the original HFPR \(H = (h_{ij} )_{4 \times 4}\). Solving model (13), one obtains six FPRs that share the same consistency index WCI(H) = 0.7333, where

Definition 11: Let \(H = (h_{ij} )_{n \times n}\) be an HFPR and let \(\overline{WCI} \, (\overline{WCI} \ge 0)\) be the consistency threshold. If \(WCI(H) \ge \overline{WCI}\), H is called an acceptably consistent HFPR.

Obviously, when the WCI of a given HFPR \(H = (h_{ij} )_{n \times n}\) is acceptable, then all FPRs \(B = (b_{ij} )_{n \times n}\) belonging to the HFPR are acceptably consistent, where \(b_{ij} \in h_{ij}\). Controlling the worst consistency level of the original HFPR can ensure the rationality of the results since all FPRs of the original HFPR have been considered. Therefore, this paper believes that the consistency of an HFPR is acceptable only when its WCI meets the predefined consistency level.

Improving the WCI of HFPRs

This section details how to improve the WCI of an HFPR. When the WCI of an HFPR does not reach the predetermined threshold, experts need to revise their preferences or consider constructing a new preference relation.

First, one obtains an FPR B that attains the WCI of the HFPR. If WCI(H) is smaller than the predefined threshold \(\overline{WCI}\), matrix B is adjusted toward the predetermined threshold. Then the modified FPR is put back into the original HFPR, and the WCI is recalculated. To keep as much of the original information as possible, only one pair of elements is revised at a time during the adjustment.
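One adjustment step of this kind can be sketched as below. It is a hedged illustration rather than a transcription of Algorithm 1: it assumes that the deviation at position (i, j) is \(|b_{ij} - \tilde{b}_{ij}|\), that the consistent FPR \(\tilde{B}\) is built from row means as \(\tilde{b}_{ij} = \frac{1}{n}\sum_{k}(b_{ik} - b_{jk}) + 0.5\), and that the selected element is moved by \(\beta b_{ij} + (1 - \beta )\tilde{b}_{ij}\) with its reciprocal updated accordingly. The matrix `B` and the function names are hypothetical.

```python
def consistent_fpr(b):
    """Assumed consistent FPR: b~_ij = (1/n) * sum_k (b_ik - b_jk) + 0.5."""
    n = len(b)
    row_mean = [sum(row) / n for row in b]
    return [[row_mean[i] - row_mean[j] + 0.5 for j in range(n)] for i in range(n)]


def revise_one_pair(b, beta=0.6):
    """Revise only the upper-triangular entry farthest from the consistent FPR."""
    n = len(b)
    b_tilde = consistent_fpr(b)
    # Position with the largest absolute deviation from the consistent FPR.
    i, j = max(
        ((i, j) for i in range(n) for j in range(i + 1, n)),
        key=lambda ij: abs(b[ij[0]][ij[1]] - b_tilde[ij[0]][ij[1]]),
    )
    revised = [row[:] for row in b]
    revised[i][j] = beta * b[i][j] + (1 - beta) * b_tilde[i][j]
    revised[j][i] = 1 - revised[i][j]  # keep the matrix reciprocal
    return revised, (i, j)


# Hypothetical 4 x 4 reciprocal FPR; not taken from the paper.
B = [
    [0.5, 0.3, 0.6, 0.8],
    [0.7, 0.5, 0.4, 0.9],
    [0.4, 0.6, 0.5, 0.7],
    [0.2, 0.1, 0.3, 0.5],
]
B_next, position = revise_one_pair(B)
```

Repeating this step until the threshold is met changes one pair per iteration, which is what keeps the number of modified preferences small.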

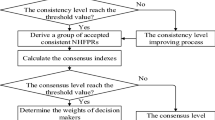

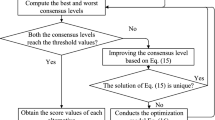

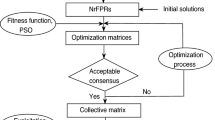

The WCI improving process for HFPRs is detailed in Algorithm 1.

Examples for consistency improvement

Example 3.

(Continued from Example 2) To demonstrate Algorithm 1, the HFPR of Example 2 is used again.

Set \(\overline{WCI} = 0.9\) and the consistency adjustment parameter β = 0.6.

Algorithm 1 is used to examine and improve the WCI of H.

Round 1. Using model (13), one has \(WCI(H^{(1)} ) = 0.7333\) and six FPRs that share this WCI, denoted as \(B^{(1)t}\)

As \(WCI(H^{(1)} ) < \overline{WCI}\), the consistent FPR \(\tilde{B}^{(1)1}\) is calculated by Eq. (3). Then Step 4 in Algorithm 1 is applied, from which the deviation matrix \(\theta_{1}^{(1)1}\) is obtained;

As \((i_{\tau } ,j_{\tau } ) = (1,2)\), the update rule \(b_{ij,f + 1}^{(p)t} = \beta b_{ij,f}^{(p)t} + (1 - \beta )\tilde{b}_{ij,f}^{(p)t}\) gives \(b_{12,2}^{(1)1} = 0.6b_{12,1}^{(1)1} + (1 - 0.6)\tilde{b}_{12,1}^{(1)1} = 0.41\). After 7 iterations, the modified element at position (1, 2) is 0.5711 and the modified element at position (1, 3) is 0.4655. The modified matrix \(H^{(2)}\) is

Due to \(WCI(H^{(2)} ) = 0.8667 < 0.9\), a second round is needed.

Round 2. Based on model (13), \(WCI(H^{(2)} ) = 0.8667\) and two FPRs which have the same WCI, denoted as \(B^{(2)t}\). The adjusted FPR \(B^{(2)1}\), corresponding consistent FPR \(\tilde{B}^{(2)1}\) and deviation matrix \(\theta_{1}^{(2)1}\) are as follows:

Obviously, \((i_{\tau } ,j_{\tau } ) = (1,2)\), and the update rule \(b_{ij,f + 1}^{(p)t} = \beta b_{ij,f}^{(p)t} + (1 - \beta )\tilde{b}_{ij,f}^{(p)t}\) gives \(b_{12,2}^{(2)1} = 0.6b_{12,1}^{(2)1} + (1 - 0.6)\tilde{b}_{12,1}^{(2)1} = 0.4435\). After 2 iterations, the modified element at position (1, 2) is 0.6018. The modified matrix \(H^{(3)}\) is:

Due to \(WCI(H^{(3)} ) = 0.8904 < 0.9\), a third round is needed.

Round 3. Based on model (13), \(WCI(H^{(3)} ) = 0.8904\) and two FPRs which have the same WCI, denoted as \(B^{(3)t}\). The adjusted FPR \(B^{(3)1}\), corresponding consistent FPR \(\tilde{B}^{(3)1}\) and deviation matrix \(\theta_{1}^{(3)1}\) are:

From \(\theta_{1}^{(3)1}\), \((i_{\tau } ,j_{\tau } ) = (1,2)\), and the update rule \(b_{ij,f + 1}^{(p)t} = \beta b_{ij,f}^{(p)t} + (1 - \beta )\tilde{b}_{ij,f}^{(p)t}\) gives \(b_{12,2}^{(3)1} = 0.6b_{12,1}^{(3)1} + (1 - 0.6)\tilde{b}_{12,1}^{(3)1} = 0.6134\). After 1 iteration, the modified element at position (1, 2) is 0.6134. The modified matrix \(H^{(4)}\) is:

Because \(WCI(H^{(4)} ) = 0.9 \ge 0.9\), Algorithm 1 terminates and one has \(\tilde{H} = H^{(4)}\). Only three elements in the upper triangular part of H are modified.

Calculating the hesitancy degree by Eqs. (5)–(6) gives \(Hd(H)\) = 17/72 and \(Hd(\tilde{H})\) = 17/72.

Zhang et al. [21] introduced a consistency improvement algorithm for HFPR. Using Algorithm 2 in Zhang et al. [21] to improve the consistency index, the adjusted HFPR \(\tilde{H}_{2}\) is

Table 1 compares the results of the proposed algorithm with those of Zhang et al.’s [21] method. Only 3 elements of the adjusted preference relation obtained using the proposed algorithm are changed. In the model of Zhang et al. [21], however, all of the elements are revised, and it is hard for the DM to adjust all of their preferences. Further, the proposed algorithm preserves the hesitancy degree: H and the adjusted matrix \(\tilde{H}\) have the same hesitancy degree. When the algorithm of Zhang et al. [21] is applied to adjust the HFPR H, the hesitancy degree becomes very large. As we can see, the HFPR obtained by this paper’s method preserves the original opinions of the DMs as much as possible.

Consensus building for HFPRs

In this section, the concept of EHFPRs is introduced, the measuring method of consensus index among group members is introduced, and then an algorithm of how to achieve group consensus is proposed.

Group consensus measure

Based on Definition 7, the concept of EHFPR is defined as follows:

Definition 12.

Let \(H = (h_{ij} )_{n \times n}\) be an HFPR. Then \(G = (g_{ij} )_{n \times n} = ([g_{ij}^{ - } ,g_{ij}^{ + } ])_{n \times n}\) is called the EHFPR of H, where \(g_{ij}^{ - }\) is the smallest value in \(h_{ij}\) and \(g_{ij}^{ + }\) is the largest value in \(h_{ij}\).
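Taking the envelope entry by entry is straightforward to express in code. The following minimal sketch represents each HFE as a list of values and each envelope as a (lower, upper) tuple; the representation, the function names and the matrix `H_demo` are assumptions made for illustration only.

```python
def envelope(hfe):
    """Envelope of an HFE: (smallest value, largest value)."""
    return (min(hfe), max(hfe))


def ehfpr(h):
    """Entry-wise envelope of an HFPR given as a matrix of HFEs (lists of values)."""
    return [[envelope(cell) for cell in row] for row in h]


# The HFS of Example 1.
example_1 = envelope([0.2, 0.3, 0.4, 0.5, 0.6])

# Hypothetical 3 x 3 HFPR; not taken from the paper.
H_demo = [
    [[0.5], [0.3, 0.5], [0.6]],
    [[0.7, 0.5], [0.5], [0.4, 0.7]],
    [[0.4], [0.6, 0.3], [0.5]],
]
G_demo = ehfpr(H_demo)
```

Every entry of the EHFPR is an interval regardless of how many values the original HFE holds, which is what allows HFPRs with HFEs of different lengths to be aggregated without normalization.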

The distance between two EHFPRs can be defined as follows.

Definition 13.

Let \(G_{1} = (g_{ij,1} )_{n \times n} = [g_{ij,1}^{ - } ,g_{ij,1}^{ + } ]\) and \(G_{2} = (g_{ij,2} )_{n \times n} = [g_{ij,2}^{ - } ,g_{ij,2}^{ + } ]\) be two EHFPRs. The distance between \(G_{1}\) and \(G_{2}\) is defined as

Two types of consensus measure are commonly used: one is the distance between the individual preference relation and the collective preference relation, and the other is the distance among all the DMs. The first type of consensus measure is adopted in this paper. In this study, the EHFPR-IOWA operator is proposed to aggregate the individual HFPRs.

Based on Definition 9, the EHFPR-IOWA operator is defined as:

Definition 14.

Let \(E = \{ e_{1} ,e_{2} ,...,e_{m} \}\) be a set of DMs, and \(H_{v} = (h_{ij,v} )_{n \times n}\), \(v = 1,2,...,m\) be the HFPRs provided by the DMs on a set of alternatives \(X = \{ x_{1} ,x_{2} ,...,x_{n} \}\). The EHFPR-IOWA operator of dimension m, \(\Phi_{W}^{EHFPR}\), is an IOWA operator whose set of order inducing values is the set of worst consistency index values, \(\{ WCI_{1} ,WCI_{2} ,...,WCI_{m} \}\), associated with the set of DMs. The collective HFPR is then obtained as follows:

where Q is the fuzzy quantifier used to implement the fuzzy majority concept, and Eq. (8) is used to compute the weighting vector of the \(\Phi_{W}^{EHFPR}\).

Based on Eq. (16), one can obtain the collective preference relation. The group consensus is defined as:

Definition 15.

Let \(G_{v}\) (\(v = 1,2,...,m\)) be m EHFPRs provided by m individuals, where \(G_{v} = (g_{ij,v} )_{n \times n} = [g_{ij,v}^{ - } ,g_{ij,v}^{ + } ]\), \(v = 1,2,...,m\). Suppose \(G_{c} = (g_{ij,c} )_{n \times n} = [g_{ij,c}^{ - } ,g_{ij,c}^{ + } ]\) is the group EHFPR aggregated by the EHFPR-IOWA operator. Then, the group consensus index (GCI) for \(G_{v}\) is

If \(GCI(G_{v} ) = 1\), then the vth expert has perfect consensus with the group preference. Otherwise, the higher the value of \(GCI(G_{v} )\), the closer that expert is to the group.

If

then, all the DMs reach the consensus.

In fact, the predefined consensus threshold \(\overline{GCI}\) indicates the deviation degree between the individual preference relation and the group preference relation. In addition, this paper believes that the consensus is acceptable only when its GCI meets the predefined consensus threshold.

Consensus reaching process

In the GDM process, the consensus process essentially means that a consensus model is applied to help the experts reach consensus. It requires that most individuals are willing to revise their original preference values. By Definition 15, one can identify the DMs whose consensus level has not been achieved.

In the following, an iterative procedure is proposed to achieve the consensus. The procedure continues until all the HFPRs reach the predefined acceptable consensus level or the maximum number of iterations is reached.

The detail of this consensus method is shown in Algorithm 2.

An illustrative example and comparative analysis

In this section, some examples are given to demonstrate the effectiveness of the proposed method.

An illustrative example

Supply Chain Management (SCM) is important for an industry. To reduce supply chain risk, maximize revenue, optimize business processes, and accomplish other goals, it is important to construct an SCM. Determining suitable suppliers is a crucial issue in SCM. The following example considers the selection of potential suppliers for a solar company with four potential suppliers (Zhang et al. [21]). Four managers were invited to provide their preference values for these four potential suppliers, and the four HFPRs \(H_{v}\), v = 1, 2, 3, 4 are:

Without loss of generality, let \(\overline{WCI} = 0.9\), \(\overline{GCI} = 0.9\), \(\varsigma = 0.6\).

Step 1. Let \(f = 0\), \(H_{v(f)} = (h_{ij,v(f)} )_{n \times n} = (h_{ij,v} )_{n \times n}\), v = 1, 2, …, m.

Step 2. Using model (13), the WCI of the four HFPRs are WCI(H1) = 0.8333, WCI(H2) = 0.6, WCI(H3) = 0.7333, WCI(H4) = 0.8677.

The WCIs of the four individual HFPRs are unsatisfactory. With the consistency adjustment parameter \(\beta = 0.6\), Algorithm 1 is applied to improve the consistency of these HFPRs, and the improved HFPRs are:

The WCIs of the adjusted HFPRs are \(WCI(\tilde{H}_{1} )\) = 0.9040, \(WCI(\tilde{H}_{2} )\) = 0.9, \(WCI(\tilde{H}_{3} )\) = 0.9, \(WCI(\tilde{H}_{4} )\) = 0.9.

Based on the concept of envelope in Definition 12, one can obtain the EHFPRs \(\tilde{G}_{v} ,v = 1,2,...,m\) from the HFPRs:

Step 3. The group preference relation is obtained by the EHFPR-IOWA operator. In this paper, \(Q(z) = z^{1/2}\) is used to represent fuzzy linguistic quantifier “most of”.

The detailed calculation steps are:

For example, the lower bound of the group preference at position (1, 2) is obtained as follows:

WCI1 = 0.904, WCI2 = 0.9, WCI3 = 0.9, WCI4 = 0.9.

\(\tilde{g}_{12,1}^{ - } = 0.228\),\(\tilde{g}_{12,2}^{ - } = 0.3\), \(\tilde{g}_{12,3}^{ - } = 0.6018\), \(\tilde{g}_{12,4}^{ - } = 0.4\).

\(\sigma (1)\) = 1, \(\sigma (2)\) = 2, \(\sigma (3)\) = 3, \(\sigma (4)\) = 4.

\(T = WCI_{1} + WCI_{2} + WCI_{3} + WCI_{4}\) = 3.6040.

Q(0) = 0, \(Q\left( {\frac{{WCI_{1} }}{T}} \right) = 0.5008\), \(Q\left( {\frac{{WCI_{1} + WCI_{2} }}{T}} \right) = 0.7075\), \(Q\left( {\frac{{WCI_{1} + WCI_{2} + WCI_{3} }}{T}} \right) = 0.8662\), \(Q\left( {\frac{{WCI_{1} + WCI_{2} + WCI_{3} + WCI_{4} }}{T}} \right) = Q(1) = 1\).

w1 = 0.5008, w2 = 0.2067, w3 = 0.1587, w4 = 0.1338.

\(g_{12,c}^{ - }\) = 0.5008·0.2280 + 0.2067·0.3 + 0.1587·0.6018 + 0.1338·0.4 = 0.3252.
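The arithmetic of this worked example can be reproduced with a short script. It is a sketch under the assumptions visible in the example itself: the experts are ordered by decreasing WCI (ties keep their original order), the weights are successive differences of \(Q(z) = z^{1/2}\) evaluated at the cumulative WCI shares, and the ordered lower bounds are combined linearly. The function names are hypothetical.

```python
import math


def iowa_weights(inducing, quantifier):
    """Return (order, weights): w_i = Q(S_i / T) - Q(S_{i-1} / T) over the induced order."""
    # Indices sorted by decreasing inducing value; Python's sort is stable for ties.
    order = sorted(range(len(inducing)), key=lambda v: -inducing[v])
    total = sum(inducing)
    weights, cumulative, previous = [], 0.0, quantifier(0.0)
    for v in order:
        cumulative += inducing[v]
        current = quantifier(min(cumulative / total, 1.0))
        weights.append(current - previous)
        previous = current
    return order, weights


def iowa_aggregate(inducing, values, quantifier):
    """Weighted sum of the values, reordered by the inducing variable."""
    order, weights = iowa_weights(inducing, quantifier)
    return sum(w * values[v] for w, v in zip(weights, order))


wci = [0.904, 0.9, 0.9, 0.9]             # WCI_1, ..., WCI_4 from the example
lower_bounds = [0.228, 0.3, 0.6018, 0.4]  # lower bounds at position (1, 2)
order, weights = iowa_weights(wci, math.sqrt)
g12_lower = iowa_aggregate(wci, lower_bounds, math.sqrt)
```

With these inputs the weights agree with the reported 0.5008, 0.2067, 0.1587 and 0.1338 to four decimals, and the aggregate rounds to 0.3252. Since Q is concave, the largest weight goes to the first expert in the induced order, i.e., the most consistent one.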

Other values can be obtained in a similar way, and the group consensus matrix is:

Step 4. Using Eq. (17) to calculate the GCI, one obtains \(GCI(\tilde{G}_{1} )\) = 0.8033, \(GCI(\tilde{G}_{2} )\) = 0.9125, \(GCI(\tilde{G}_{3} )\) = 0.8900, \(GCI(\tilde{G}_{4} )\) = 0.9226. As \(GCI(\tilde{G}_{1} )\) = 0.8033 < 0.9 and \(GCI(\tilde{G}_{3} )\) = 0.8900 < 0.9, go to Step 5.

Step 5. Find the position of the element with the largest distance between the expert preference matrix and the group matrix, and adjust it. One has:

Find the position of the element \(\theta_{{i_{\tau } j_{\tau } ,v(f)}}\), where \(\theta_{{i_{\tau } j_{\tau } ,v(f)}} = \max \{ \theta_{{i_{\tau } j_{\tau } ,v(f)}}^{ + } , \, \theta_{{i_{\tau } j_{\tau } ,v(f)}}^{ - } \}\). For \(\tilde{G}_{1(1)}\), \(\theta_{23,1(1)} = \max \{ \theta_{23,1(1)}^{ - } ,\theta_{23,1(1)}^{ + } \} = \max \{ 0.2073,0.2859\} = 0.2859 = \theta_{23,1(1)}^{ + }\). By Eq. (20), one has:

\(\tilde{g}_{23,1(2)}^{ + } = 0.6\tilde{g}_{23,1(1)}^{ + } + (1 - 0.6)g_{23,c(1)}^{ + }\) = 0.6⋅0.7863 + 0.4⋅0.5004 = 0.6719.
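The adjustment in Step 5 can be sketched as follows. The sketch assumes that only the bound with the larger deviation from the group value is revised and that the revision is the convex combination \(\varsigma \tilde{g} + (1 - \varsigma )g_{c}\) shown above. The upper bounds 0.7863 and 0.5004 are those of the example; the lower bounds 0.55 and 0.3427 are hypothetical values chosen only to reproduce a lower deviation of 0.2073, and the function name is hypothetical as well.

```python
def revise_interval(own, group, sigma=0.6):
    """Move the bound of `own` that deviates most from `group` toward the group value."""
    dev_lower = abs(own[0] - group[0])
    dev_upper = abs(own[1] - group[1])
    lower, upper = own
    if dev_upper >= dev_lower:
        upper = sigma * own[1] + (1 - sigma) * group[1]
    else:
        lower = sigma * own[0] + (1 - sigma) * group[0]
    return (lower, upper)


own_23 = (0.55, 0.7863)      # lower bound is hypothetical; upper bound from the example
group_23 = (0.3427, 0.5004)  # lower bound is hypothetical; upper bound from the example
revised_23 = revise_interval(own_23, group_23)
```

Here the upper deviation (0.2859) exceeds the lower one (0.2073), so only the upper bound moves, to approximately 0.6719, and the lower bound is left as provided by the DM.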

Similarly, other values can be obtained.

Step 6. Output the modified preference relations and group preference relation:

The consensus levels for the updated preference relations are \(GCI(\overline{H}_{1} )\) = 0.9082, \(GCI(\overline{H}_{2} )\) = 0.9085, \(GCI(\overline{H}_{3} )\) = 0.9001, \(GCI(\overline{H}_{4} )\) = 0.9225. The WCIs of these adjusted matrices are \(WCI(\overline{H}_{1} )\) = 0.9040, \(WCI(\overline{H}_{2} ) = 0.9\), \(WCI(\overline{H}_{3} )\) = 0.9, \(WCI(\overline{H}_{4} )\) = 0.9119.

As we can see, the consensus has been reached. Then the alternatives can be ranked with the following steps.

Step 7. Based on the AA operator, one can obtain the overall preference degree \(g_{i,c}\) (i = 1,2,3,4) of the alternative \(x_{i}\) (i = 1,2,3,4):

\(g_{1,c} = [0.4558,0.5162]\), \(g_{2,c} = [0.5136,0.5839]\), \(g_{3,c} = [0.5669,0.6158]\), \(g_{4,c} = [0.3389,0.4085]\).

Step 8. Based on Eq. (7), construct an FPR \(P = (p_{ij} )_{n \times n}\):

\(P = \left[ \begin{array}{cccc} 0.5 & 0.0188 & 0 & 1 \\ 0.9812 & 0.5 & 0.143 & 1 \\ 1 & 0.857 & 0.5 & 1 \\ 0 & 0 & 0 & 0.5 \end{array} \right]\).
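Eq. (7) is not reproduced in this part of the paper. The sketch below assumes it is the common possibility-degree formula for comparing two intervals, \(p(a \ge b) = \max \{ 1 - \max \{ (b^{+} - a^{-})/(l_{a} + l_{b}), 0\}, 0\}\), where \(l_{a}\) and \(l_{b}\) are the interval widths. The function name and the name `P_sketch` are hypothetical. Because the inputs are the rounded intervals from Step 7, some entries may differ from the printed matrix in the third or fourth decimal place.

```python
from typing import Tuple

Interval = Tuple[float, float]


def possibility_degree(a: Interval, b: Interval) -> float:
    """Possibility degree p(a >= b) for two intervals (assumed form of Eq. (7))."""
    width = (a[1] - a[0]) + (b[1] - b[0])
    if width == 0:
        # Degenerate case: both intervals are single points.
        return 1.0 if a[0] > b[0] else 0.5 if a[0] == b[0] else 0.0
    return max(1.0 - max((b[1] - a[0]) / width, 0.0), 0.0)


# Overall interval preference degrees g_{i,c} from Step 7.
g = [(0.4558, 0.5162), (0.5136, 0.5839), (0.5669, 0.6158), (0.3389, 0.4085)]

# Pairwise comparison of every alternative with every other one.
P_sketch = [[possibility_degree(a, b) for b in g] for a in g]

for row in P_sketch:
    print([round(p, 4) for p in row])
```

Under this assumed formula the diagonal entries are 0.5 and \(p_{ij} + p_{ji} = 1\), which matches the structure of the matrix P above.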

Step 9. Summing all elements in each row of the matrix P, i.e., \(p_{i} = \sum\nolimits_{j = 1}^{n} {p_{ij} }\), i = 1, 2, …, n, gives p1 = 1.5188, p2 = 2.6242, p3 = 3.357, p4 = 0.5, so that \(p_{3} > p_{2} > p_{1} > p_{4}\). Therefore, the ranking of the alternatives is \(x_{3} \succ x_{2} \succ x_{1} \succ x_{4}\), and the optimal alternative is \(x_{3}\).
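The row sums and the resulting ranking can be reproduced from the entries of P given in Step 8. This is a minimal sketch; the variable names are illustrative.

```python
# Fuzzy preference relation P from Step 8 (rows correspond to x1..x4).
P = [
    [0.5, 0.0188, 0.0, 1.0],
    [0.9812, 0.5, 0.143, 1.0],
    [1.0, 0.857, 0.5, 1.0],
    [0.0, 0.0, 0.0, 0.5],
]

# p_i is the sum of the i-th row.
row_sums = [round(sum(row), 4) for row in P]

# Rank alternatives by decreasing row sum.
order = sorted(range(len(P)), key=lambda i: row_sums[i], reverse=True)
ranking = [f"x{i + 1}" for i in order]

print(row_sums)             # [1.5188, 2.6242, 3.357, 0.5]
print(" > ".join(ranking))  # x3 > x2 > x1 > x4
```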

Comparisons and discussions

Zhang et al. [21] introduced a decision support model for GDM to achieve group consensus. To demonstrate the validity of the proposed method, a comparative study with the method of Zhang et al. [21] is conducted in this subsection.

Zhang et al. [21] used β-normalization to achieve the consensus. Let the consistency threshold be \(\overline{CI} = 0.9\), the consensus threshold \(\overline{GCI} = 0.9\), the consistency adjustment parameter λ = 0.6, and the consensus adjustment parameter θ = 0.6. Furthermore, in Algorithm 4 of Zhang et al. [21], the DMs’ weights are given in advance as \(w = (0.1,0.5,0.3,0.1)^{T}\). If Algorithm 4 of Zhang et al. [21] is applied, then the normalized HFPRs are:

Using Algorithm 4 of Zhang et al. [21], after two iterations, the adjusted HFPRs are:

With Algorithm 4 of Zhang et al. [21], all the values in these HFPRs are changed. This means that the DMs’ original information is greatly distorted.

Comparisons with several other related methods are also made:

(1) In Zhang et al. [21], the β-normalization method is used, which requires that the elements in the HFPRs have the same length; however, the normalized HFPRs differ from the original HFPRs because new values are added to the original elements. Further, the β-normalization-based approach considers only some of the possible FPRs. The approach of Xu et al. [37] does not consider individual consistency. Generally, the consistency of an HFPR reflects the inherent logic of the preferences in the HFPR; therefore, if the individual consistency level is unacceptable, the group decision derived by aggregating the individual preferences may not be reliable.

(2) Zhu and Xu [52] used α-normalization to reduce each individual HFPR to an FPR, and took the highest consistency level among the FPRs as the consistency of the HFPR. However, their method does not include a consistency adjustment process, and the use of α-normalization discards part of the DM's decision information.

(3) Zhang et al. [39] applied average consistency and best consistency indexes in their consistency control. They randomly generated some HFPRs and used a mixed 0–1 linear programming model to improve the consistency index. However, consensus is not considered.

(4) In this paper, both consistency and consensus are considered, whereas the methods of Zhang et al. [39] and Xu et al. [37] each consider only one of the two, which may lead to inaccurate decision results. In the method of Zhang et al. [39], the weight of each expert is given in advance, and the contribution of the expert in the decision-making process is not considered. In the method of Xu et al. [37], expert weights are dynamically adjusted in the process of achieving consensus, but consistency is not considered.

A brief comparison is provided in Table 2. In Table 2, ‘Consistency control’ means that all individual consistency levels still meet the predefined consistency level after the consensus process.

To summarize, Algorithm 1 considers all the possible FPRs associated with HFPRs without adding or deleting any values, and the WCI is introduced to guarantee that all possible FPRs are acceptably consistent. Then, Algorithm 2 is proposed to improve the consensus of the HFPRs.

Conclusion

Consistency and consensus play an important role in HFPRs. In this paper, two algorithms are proposed to improve the consistency and consensus. The main contributions of the paper are:

(1) A non-standardized approach is used to adjust the consistency and consensus process of HFPR.

(2) An iterative algorithm for adjusting the WCI of individual HFPRs is proposed. To maintain more original information, only the elements with the largest deviation values in the consistency matrix are adjusted in each iteration.

(3) An iterative algorithm for group consensus of HFPRs is proposed. While group consensus is being reached, the WCI is kept unchanged or improved. This avoids inaccurate decision results caused by preference relations from individuals that do not satisfy the consistency requirement.

Some problems still need to be investigated: the effects of different adjustment parameters on the consistency and consensus adjustment processes are not considered, and the consistency and consensus thresholds for HFPRs are set subjectively. In future work, we will study the impact of different adjustment parameters on both adjustment processes and search for a more intelligent method to determine the thresholds.

References

Orlovsky SA (1978) Decision-making with a fuzzy preference relation. Fuzzy Sets Syst 1:155–157

Xu YJ, Patnayakuni R, Wang HM (2013) The ordinal consistency of a fuzzy preference relation. Inf Sci 224:152–164

Herrera-Viedma E et al (2004) Some issues on consistency of fuzzy preference relations. Eur J Oper Res 154(1):98–109

Saaty TL (1980) The analytic hierarchy process. McGraw-Hill, New York

Xu YJ, Li KW, Wang HM (2013) Distance-based consensus models for fuzzy and multiplicative preference relations. Inf Sci 253:56–73

Xu YJ, Da QL, Liu XW (2010) Some properties of linguistic preference relation and its ranking in group decision making. J Syst Eng Electron 21(2):244–249

Xu ZS (2005) Deviation measures of linguistic preference relations in group decision making. Omega 33(3):249–254

Barrenechea E et al (2014) Construction of interval-valued fuzzy preference relations from ignorance functions and fuzzy preference relations. Application to decision making. Knowl-Based Syst 58:33–44

Kumar PS (2022) Computationally simple and efficient method for solving real-life mixed intuitionistic fuzzy 3D assignment problems. Int J Softw Sci Comput Intell 9(3)

Kumar PS (2020) Developing a new approach to solve solid assignment problems under intuitionistic fuzzy environment. Int J Fuzzy Syst Appl 9(1):1–34

Xu ZS (2012) An error-analysis-based method for the priority of an intuitionistic preference relation in decision making. Knowl-Based Syst 33:173–179

Kumar PS (2016) A simple method for solving Type-2 and Type-4 fuzzy transportation problems. Int J Fuzzy Logic Intell Syst 16(4):225–237

Torra V (2010) Hesitant fuzzy sets. Int J Intell Syst 25:529–539

Liu PD, Mahmood T, Ali Z (2021) The cross-entropy and improved distance measures for complex q-rung orthopair hesitant fuzzy sets and their applications in multi-criteria decision-making. Complex Intell Syst 8(2):1167–1186

Krishankumar R et al (2021) An integrated decision-making COPRAS approach to probabilistic hesitant fuzzy set information. Complex Intell Syst 7(5):2281–2298

Xia MM, Xu ZS (2013) Managing hesitant information in GDM problems under fuzzy and multiplicative preference relations. Int J Uncertain Fuzziness Knowl-Based Syst 21(06):865–897

Xu YJ et al (2021) Multiplicative consistency ascertaining, inconsistency repairing, and weights derivation of hesitant multiplicative preference relations. IEEE Trans Syst Man Cybern Syst. https://doi.org/10.1109/TSMC.2021.3099862

Xu YJ et al (2021) Algorithms to detect and rectify multiplicative and ordinal inconsistencies of fuzzy preference relations. IEEE Trans Syst Man Cybern Syst 51(6):3498–3511

Xu YJ et al (2022) Some models to manage additive consistency and derive priority weights from hesitant fuzzy preference relations. Inf Sci 586:450–467

Zhu B, Xu ZS (2014) Consistency measures for hesitant fuzzy linguistic preference relations. IEEE Trans Fuzzy Syst 22(1):35–45

Zhang ZM, Wang C, Tian XD (2015) A decision support model for group decision making with hesitant fuzzy preference relations. Knowl-Based Syst 86:77–101

Zhu B, Xu Z, Xu J (2014) Deriving a ranking from hesitant fuzzy preference relations under group decision making. IEEE Trans Cybern 44(8):1328–1337

Zhu B (2013) Studies on consistency measure of hesitant fuzzy preference relations. Procedia Comput Sci 17:457–464

Xu YJ, Cabrerizo FJ, Herrera-Viedma E (2017) A consensus model for hesitant fuzzy preference relations and its application in water allocation management. Appl Soft Comput 58:265–284

Wu ZB, Xu JP (2016) Managing consistency and consensus in group decision making with hesitant fuzzy linguistic preference relations. Omega 65:28–40

Chen Z-S et al (2021) Expertise-based bid evaluation for construction-contractor selection with generalized comparative linguistic ELECTRE III. Autom Constr 125

Lu YL et al (2022) Social network clustering and consensus-based distrust behaviors management for large-scale group decision-making with incomplete hesitant fuzzy preference relations. Appl Soft Comput 117:108373

Lu YL et al (2021) Consensus of large-scale group decision making in social network: the minimum cost model based on robust optimization. Inf Sci 547:910–930

Xu YJ et al (2018) Consistency and consensus models with local adjustment strategy for hesitant fuzzy linguistic preference relations. Int J Fuzzy Syst 20(7):2216–2233

Li MQ et al (2022) A trust risk dynamic management mechanism based on third-party monitoring for the conflict-eliminating process of social network group decision making. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2022.3159866

Labella Á, Estrella FJ, Martínez L (2017) AFRYCA 2.0: an improved analysis framework for consensus reaching processes. Prog Artif Intell 6(2):181–194

Chen Z-S et al (2019) An enhanced ordered weighted averaging operators generation algorithm with applications for multicriteria decision making. Appl Math Model 71:467–490

Jin L et al (2021) GnIOWA operators and some weights allocation methods with their properties. Int J Intell Syst 36(5):2367–2386

Chen Z-S et al (2021) Online-review analysis based large-scale group decision-making for determining passenger demands and evaluating passenger satisfaction: case study of high-speed rail system in China. Inf Fusion 69:22–39

He Y, Xu ZS (2017) A consensus reaching model for hesitant information with different preference structures. Knowl-Based Syst 135:99–112

Li J, Wang JQ, Hu JH (2019) Consensus building for hesitant fuzzy preference relations with multiplicative consistency. Comput Ind Eng 128:387–400

Xu YJ, Liu X, Xu LZ (2019) A dynamic expert contribution-based consensus model for hesitant fuzzy group decision making with an application to water resources allocation selection. Soft Comput 24(6):4693–4708

Wu ZB, Xu JP (2012) A concise consensus support model for group decision making with reciprocal preference relations based on deviation measures. Fuzzy Sets Syst 206:58–73

Zhang Z, Kou X, Dong Q (2018) Additive consistency analysis and improvement for hesitant fuzzy preference relations. Expert Syst Appl 98:118–128

Kumar PS (2020) Algorithms for solving the optimization problems using fuzzy and intuitionistic fuzzy set. Int J Syst Assurance Eng Manag 11(1):189–222

Kumar PS (2019) Intuitionistic fuzzy solid assignment problems: a software-based approach. Int J Syst Assurance Eng Manag 10(4):661–675

Tanino T (1984) Fuzzy preference orderings in group decision making. Fuzzy Sets Syst 12(2):117–131

Wu ZB, Jin BM, Xu JP (2018) Local feedback strategy for consensus building with probability-hesitant fuzzy preference relations. Appl Soft Comput 67:691–705

Xia MM, Xu ZS (2011) Hesitant fuzzy information aggregation in decision making. Int J Approx Reason 52(3):395–407

Li DQ, Zeng WY, Li JJ (2015) New distance and similarity measures on hesitant fuzzy sets and their applications in multiple criteria decision making. Eng Appl Artif Intell 40:11–16

Rodriguez RM, Martinez L, Herrera F (2012) Hesitant fuzzy linguistic term sets for decision making. IEEE Trans Fuzzy Syst 20(1):109–119

Xu ZS, Da QL (2002) The uncertain OWA operator. Int J Intell Syst 17(6):569–575

Yager RR (1996) Quantifier guided aggregation using OWA operators. Int J Intell Syst 11:49–73

Yager RR, Filev DP (1998) Operations for granular computing: Mixing words and numbers. In: IEEE International Conference on Fuzzy Systems at the World Congress on Computational Intelligence (WCCI 98), 123–128

Yager RR (2003) Induced aggregation operators. Fuzzy Sets Syst 137(1):59–69

Yager RR, Filev DP (1999) Induced ordered weighted averaging operators. IEEE Trans Syst Man Cybern-Part B Cybern 29(2):141–150

Zhu B, Xu ZS (2012) Regression methods for hesitant fuzzy preference relation. Technol Econ Dev Econ 19:S214–S227

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities under Grant Number 2019B69314.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interests to disclose.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Q., Liu, G., Zhang, T. et al. A consensus algorithm based on the worst consistency index of hesitant fuzzy preference relations in group decision-making. Complex Intell. Syst. 9, 1753–1771 (2023). https://doi.org/10.1007/s40747-022-00863-x


DOI: https://doi.org/10.1007/s40747-022-00863-x