Abstract

Background

Peer assessment of performance in the objective structured clinical examination (OSCE) is emerging as a learning instrument. While peers can provide reliable scores, there may be a trade-off with students’ learning. This study evaluates a peer-based OSCE both as a viable assessment instrument and as a means of promoting learning, and explores the interplay between these two roles.

Methods

A total of 334 medical students completed an 11-station OSCE from 2015 to 2016. Each station had one or two peer examiners (PE) and one faculty examiner (FE). Examinees were rated on a 7-point scale across 5 dimensions: Look, Feel, Move, Special Tests and Global Impression. Students participated in voluntary focus groups in 2016 to provide qualitative feedback on the OSCE. The authors analysed the assessment data and transcripts of the focus group discussions.

Results

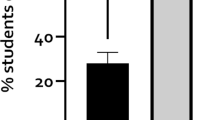

Overall, PE awarded higher ratings than FE; sources of variance were similar across the 2 years, with unique variance consistently the largest source; and reliability (rφ) was generally low. Focus group analysis revealed four themes: Conferring with Faculty Examiners, Difficulty Rating Peers, Insider Knowledge, and Observing and Scoring.

Conclusions

While peer assessment was not reliable for evaluating OSCE performance, peer examiners perceived it as beneficial for their learning. Insight gained into exam technique and self-appraisal of skills allows students to understand expectations in clinical situations and plan approaches to self-assessment of competence.

Funding

This project was financially supported by the Schulich Research Opportunities Program.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

Ethical approval for this study was granted by the Western University Research Ethics Board (REB: 106210). This research was performed in accordance with the ethical standards as laid down in the 1964 Declaration of Helsinki and its later amendments.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Khan, R., Chahine, S., Macaluso, S. et al. Impressions on Reliability and Students’ Perceptions of Learning in a Peer-Based OSCE. Med.Sci.Educ. 30, 429–437 (2020). https://doi.org/10.1007/s40670-020-00923-2

DOI: https://doi.org/10.1007/s40670-020-00923-2