Abstract

Purpose of review

The main research approaches in the field of addiction include qualitative studies, quantitative studies, and literature reviews. Researchers tend to have specific expertise in one, or perhaps two, of these approaches, but are frequently asked to peer review studies using approaches and methods in which they are less well versed. This review aims to provide guidance to peer reviewers by summarizing key issues to attend to when reviewing studies of each approach.

Recent findings

A diverse range of research approaches are utilised in the study of addiction including quantitative, qualitative, and literature reviews. In this paper, we outline reporting standards for each research approach, and summarize how data are collected, analyzed, reported, and interpreted, as a guide for peer-reviewers to assess the robustness of studies.

Summary

Providing a good peer review requires that careful attention is paid to the specific requirements of the methods used. General principles of clarity around an evidence-based rationale, data collection and analysis, and careful interpretation remain fundamental, regardless of the method used. Reviews should be balanced and fair and based on the research and associated reporting requirements for the method used.

Introduction

Research in addiction studies spans an increasingly diverse range of substances and behaviors, and a correspondingly large array of prevalence, determinants, harms, preventions, and intervention efforts. The research approach taken in these studies is determined by the nature of the research question, with the main approaches being qualitative, quantitative, and literature reviews [1]. Qualitative studies can address questions around subjective experiences such as how social context influences recovery, whereas quantitative research addresses questions on “what and how much”, such as what attitudes people hold towards addiction or the degree to which a particular intervention improves symptom severity. Given the vast and diverse accumulation of research and knowledge, there are also reviews that provide summaries or a synthesis of current knowledge in a particular area. Commonly, researchers and reviewers are well versed in one approach or various methods within one approach but are less familiar with other approaches or methods.

Maintaining sufficient knowledge to report or peer review research findings can be challenging when working with a less familiar method. We have provided editorial support or peer review for dozens of journals, and noticed there are common issues in the submitted articles, as well as key information that reviewers miss. The aim of this work is therefore to guide peer reviewers of such research on key issues related to data collection, analysis, and reporting. To guide this discussion, we searched PubMed, Google Scholar, and the author instructions of addiction journals for important guidelines pertaining to qualitative research, quantitative research, and literature reviews. We have provided a brief overview of each of these major approaches (Table 1) and their application in addiction studies, as well as an overview of reporting guidelines that are helpful to consider when conducting manuscript peer review. To aid readability, we have used a selection of examples that are focused on addictive substances and behaviors, but we expect that the content is relevant to any health-related field.

Section 1. Qualitative Research

There are many types of qualitative research designs including ethnography, grounded theory, narrative research, case studies, phenomenological analysis, action research, and community engaged research [2,3,4]. Qualitative research can be used to understand the process of assigning meaning to an experience or phenomenon as it occurs in a specific context or environment, and demonstrate changes in social, cultural, or environmental contexts [5]. Qualitative research is also used to explore new fields of study. For example, qualitative research was one of the first methodologies to demonstrate the role of social context on illicit drug use [6].

Data Collection in Qualitative Research

Key to peer reviewing qualitative research is determining the robustness of the data collection and analysis. The focus of qualitative research is to offer an in-depth look at experiences rather than gathering data to generalise to whole populations. Because qualitative research explores the experiences of participants, sample selection is highly important. Participants should have specific expertise related to the research questions. Sample size depends on the data generation approach: samples may be small (e.g., 5–10 people) when data are generated through interviews or when the experience is new or uncommon in the population, but samples of around 15–25 participants are typical [7]. Decisions on sample size are guided by data saturation, which is defined as the point at which new data collection is no longer adding substantially to insights on experience [8].

Data generation can be through interviews, observations, focus groups, visual or audio media, or analysis of archival or publicly available data or documents. In health care, inclusive of addiction studies, interviews or focus group discussions are the most common data collection techniques [4]. Interviews involve in-depth, semi-structured conversations about participants’ personal experiences and the meanings they assign to them. In contrast, focus groups usually consist of 4–12 people, with the aim of exploring specific issues such as attitudes towards the involvement of significant others in models of addiction recovery [9] through to policy options for vaping [10]. Participants answer facilitator questions and are encouraged to interact with each other, thereby generating data that reflect a shared and contextual perspective through group interaction.

Interviews and focus groups require research questions to be collated into an interview schedule that can be structured (i.e., the same question/s asked of every participant) or semi-structured (i.e., there is flexibility to follow discussions in unexpected directions within specified constraints) [4]. A good interview schedule includes a brief series of open-ended questions (not close-ended questions that prompt yes/no responses) that explore depth rather than breadth [5]. Questions are grounded in the literature so that they advance knowledge rather than being created in isolation for a particular project. Because the interview schedule shapes the range of participant responses and perceptions, it is summarised in the methods and/or included as a table, appendix, or supplementary file. Ideally, the interview schedule and interview process should be piloted or reviewed by a panel with lived experience of the topic to identify the optimal set of questions within the allocated interview time [4].

Data Analysis in Qualitative Research

When conducting peer review of qualitative research, attention is paid to the proposed approach to data analysis and the degree of coherence between the planned approach and eventual outcome. Broadly, qualitative analysis is undertaken by sorting data into meaningful groups, themes, or categories to address the research question, aims or objectives. The way data are grouped can be via deductive or inductive categorisation depending on the research design. An inductive approach means the researcher derives categories or a coding framework from the data, while a deductive approach means the researcher already has a theory or existing knowledge that will be applied to the data [11, 12]. Data analysis can be done manually using the transcripts or MS Excel, or via specific data analysis software such as NVivo.

Qualitative analyses generally require data familiarisation and initial coding, followed by grouping codes into similar categories and themes. Data analysis is more than grouping responses to questions; it should follow a specific analytic technique. Two commonly used data analytic techniques are Thematic Analysis and Content Analysis. Thematic Analysis is a method for systematically identifying, organizing, and interpreting patterns of meaning across a dataset, involving specific steps for undertaking the analysis [13]. For example, researchers might identify themes such as barriers and facilitators to the uptake of smoking cessation services [14] through to challenges experienced by family impacted by addiction [9]. In recent years, Thematic Analysis has been extended to acknowledge the active role of the researcher in theme development, an approach termed Reflexive Thematic Analysis [15, 16]. When reviewing a paper using Reflexive Thematic Analysis, one would expect evidence of an iterative approach to identifying, refining, and naming themes. Additionally, there should be a statement on the researcher’s role and an acknowledgment of how they might have influenced the data collection and analysis process [4]. In contrast, Qualitative Content Analysis [12, 17] systematically organizes larger amounts of text, visual, or other non-numeric data into themes and meanings via a pre-existing or emergent coding scheme or data dictionary [17, 18]. The data are then systematically organized into the coding scheme by two or more coders, and frequencies are reported to help identify patterns, relationships, and trends in the data.

Quality and trustworthiness of qualitative analysis can be improved by addressing potential biases that can influence how data are collected and analysed. Researchers can report potential bias by disclosing their own experiences of the subject matter or by checking whether their interpretation of experience is consistent with that of research participants and other members of the research team [3, 18]. For example, data analysis of research in gambling studies could be biased by the researcher receiving funding from the gambling industry. Equally, analysis can be biased when the researcher is involved in anti-gambling or advocacy groups, or receives funding to provide the service that is the focus of the research [19]. Content analysis generally requires two or more coders, and inter-coder agreement is reported. However, other approaches, like Reflexive Thematic Analysis, can involve just one coder who debriefs with peers and reaches agreement on themes, which can help demonstrate transparency and rigor [15].
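As an illustration of what a reported inter-coder agreement statistic may summarise, the sketch below computes Cohen's kappa for two coders. It assumes each coder assigned exactly one code to each excerpt; the code labels and the two lists of ratings are invented for illustration and are not drawn from any cited study. Other agreement statistics exist, and the source does not prescribe this one.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one code per excerpt."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must rate the same non-empty set of excerpts")
    n = len(coder_a)
    # Proportion of excerpts on which the two coders chose the same code.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each coder's marginal code frequencies.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned to six interview excerpts.
coder_a = ["barrier", "barrier", "facilitator", "barrier", "facilitator", "other"]
coder_b = ["barrier", "facilitator", "facilitator", "barrier", "facilitator", "other"]
kappa = cohens_kappa(coder_a, coder_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

Kappa corrects raw percentage agreement for the agreement expected by chance, which is why a reviewer may prefer it to a simple percentage when only a few codes are in use.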

The synthesis and interpretation of qualitative results varies by research design, subject discipline, and analytic approach. The main themes, interpretations and findings are presented alongside evidence, usually in the form of quotes from participants. For each broad theme, one or two illustrative quotes are sufficient and long strings of quotes should be avoided [2]. Quotes should be presented so that the person cannot be identified but include any participant information that is relevant to the research question such as age, gender, or symptom severity. Interpretation and implications should move beyond description of a phenomenon and connect to wider knowledge and literature to make an original contribution [3]. Depending on the research design, the development of a theory or model or comparison with the wider literature may occur in the results or discussion section of a manuscript.

Reporting Standards for Qualitative Research

There are a range of reporting standards for qualitative research that reviewers can use as a guide. These include the Standards for Reporting Qualitative Research (SRQR) [3], the 32-item Consolidated Criteria for Reporting Qualitative research (COREQ) for interviews and focus groups [4], and the Critical Appraisal Skills Programme [20]. Most addiction journals now accept qualitative studies and require adherence to one of these standards to indicate a commitment to quality and rigour in the research [21, 22]. As such, authors and reviewers should be familiar with these standards and carefully check the requirements of the relevant journal.

The most common issue identified in peer review of qualitative work is the lack of detail on study design and how the analysis was conducted [4, 21,22,23]. Information that is sometimes missing includes how the sample was recruited, the characteristics of the sample and their expertise on the topic (often presented as a participants characteristics table), what questions were asked, who collected the data, and how the data were analysed. Limitations reported in qualitative research can range from trustworthiness, sample selection, sample size, how the data were collected, and potential biases in data analysis [22].

Section 2. Quantitative Methods

Quantitative research can be employed to describe the characteristics of a population or phenomenon but also to examine associations between an exposure and outcome or relationships between variables. Quantitative studies can have experimental or observational research designs that are carried out in controlled or natural settings. In experimental studies, exposures are assigned to the participants by the researchers. Such studies may take the form of a randomised-controlled trial (RCT) where the exposure (i.e., an intervention) is assigned at random to one subsample of the study population while the other subsample receives the established standard of care, no care, or a placebo [24]. Random allocation in this type of study limits confounding by baseline variables and can provide strong evidence of causal relationships between the exposure and outcome [24, 25]. Alternatively, quasi-randomised controlled trials involve non-random allocation to intervention, which may be necessary because of ethical or practical concerns [26]. In contrast, observational studies examine the influence of real-life exposures without any manipulation of the exposure [27]. Examples include cohort studies, in which participants are followed over time and outcomes of interest may or may not become apparent during subsequent timepoints, and case-control studies, which do not follow participants forward in time but instead select participants based on the presence (i.e., the cases) or absence (i.e., controls) of an outcome and in which factors (i.e., the exposures) associated with the outcome are assessed retrospectively [28, 29]. Finally, cross-sectional studies are also observational in nature but there is no time component involved. Instead, any exposures and outcomes are measured at the same point in time, commonly making such studies less costly but unable to provide information on causal relationships [30]. Table 2 provides an overview of a selection of quantitative study designs.

Data Collection in Quantitative Research

A key task when reviewing a quantitative study is to assess the degree to which the study population and methods are appropriate to answer the research aims. Related to this are the external and internal validity of the study findings as a result of the study design. External validity refers to the extent to which the research findings are generalizable to the wider population or other settings [31, 32]. Sample sizes should be large enough to be representative of the population of interest and to detect an effect, while other considerations may be attrition in studies with follow-up evaluations and the number of variables to be included in the specified analysis [33, 34]. Researchers may use formal power calculations to justify the number of participants recruited into the study [32, 35]. Population studies may have a heterogeneous composition to be reflective of the general population to which they aim to generalize their findings. For example, a repeated cross-sectional study examining the rates of alcohol and marijuana abstinence, co-use and disorders in the United States used data from an annually conducted survey of 187,722 young adult students and non-students, resulting in a heterogeneous sample representative of the youth population [36]. However, other study designs may apply a strict set of selection criteria to limit confounding or enable generalization to a specific population. For example, a cross-sectional study which assessed predictors of alcohol misuse among Canadian Aboriginal youth had a smaller (191 youths) and less heterogeneous sample as the target population was more specific and it may not have been feasible or practical to recruit more participants [37].
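To make the idea of a formal power calculation concrete, the sketch below estimates the sample size per group needed to detect a standardized mean difference between two equal-sized groups. It is a simplified illustration under stated assumptions: a two-sided test, a normal approximation (which gives slightly smaller numbers than t-based software), and an effect size expressed as Cohen's d. The function names and the attrition adjustment are illustrative choices, not a method taken from the cited guidelines.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-group comparison of means.

    Uses the normal approximation n = 2 * ((z_alpha/2 + z_power) / d) ** 2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


def inflate_for_attrition(n, expected_attrition):
    """Recruitment target so that about n participants remain at follow-up."""
    if not 0 <= expected_attrition < 1:
        raise ValueError("expected_attrition must be in [0, 1)")
    return math.ceil(n / (1 - expected_attrition))


n_medium = n_per_group(0.5)  # a medium standardized effect
n_small = n_per_group(0.2)   # a small standardized effect
print(n_medium, n_small, inflate_for_attrition(n_medium, 0.20))
```

A reviewer can use this kind of back-of-envelope check to judge whether a reported sample size is plausible for the effect the authors say they expected, and whether anticipated attrition was taken into account.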

In contrast, internal validity refers to the degree to which relationships between exposure and outcome are a true effect which is not distorted by the influence of extraneous or confounding variables. High internal validity means there is low systematic error in the design, conduct or analysis of a study such as participant selection, randomization, treatment fidelity or participant attrition [32]. Experimental studies like RCTs may use a narrow set of selection criteria, resulting in a more homogeneous population to limit potential confounding of the association between exposure and outcome. Although internal validity is a prerequisite for external validity, increasing the former may affect the latter. For example, studies investigating interventions for alcohol addiction commonly have exclusion criteria related to comorbidity and factors that predict attrition, but applying such criteria makes a large proportion of the target population, often those with more severe mental health issues, ineligible for participation, thereby reducing generalizability of findings [38].

Quantitative research collects numerical data and relies on the use of systematic and standardised approaches to do so, such as questionnaires, experiments, and observations [39]. To ensure study findings reflect what researchers set out to measure, it is essential to incorporate clear definitions of all relevant concepts and operationalise concepts by stating how they are measured [40]. Where possible, best practice is to use well-established scales that have been assessed on their psychometric properties. Widely used standardized instruments, such as the Addiction Severity Index [41], have been validated in various settings and languages [42]. When a topic has not been extensively studied, standardized and validated instruments may not be available and researchers may opt to adapt an instrument which measures a related concept or construct a bespoke instrument. When an adapted or bespoke instrument is employed, researchers are required to indicate the points of adaptation or provide the instrument in the supplementary materials.

Data Analysis

When conducting peer review of quantitative research, it is helpful to keep in mind some key points. Data analysis should be fully described in the methods including how the data were cleaned, prepared for analysis, and the specific statistical techniques used, with justification in terms of the research question, the sample size, and the data in question. In essence, data analysis will assess the difference between groups on two or more variables or the relationship between variables. Most statistical models rely on one or more assumptions regarding the nature of the data, such as distribution of the data and presence of outliers, and models may produce misleading results when these are violated [43]. Missing data can also influence the results, especially when more than 10% of data are missing [44]. Data might be missing at random, but are more commonly missing not at random, as when those with certain individual characteristics are less likely to provide a response to specific survey items or be retained in a longitudinal study. For example, RCTs and cohort studies have shown higher drop-out from in-person substance use disorder treatment programs among individuals with lower income [45]. Similar to participant recruitment and selection, missing data can result in underrepresentation or overrepresentation of population groups, and systematic differences underlying the missing data might lead to biased results and impact generalizability of the findings [46]. There are various analytical approaches to control for confounding and address missing data [44, 47] and significant statistical expertise may be important to advise on the most appropriate analysis technique. Most journals have statistical experts who can review methodology and therefore when peer reviewing, it is important to alert the editor to your skill set and the need for expert statistical review, if required.
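The 10% figure mentioned above can be checked directly when a dataset or a missing-data table is available. The sketch below computes the proportion of missing values per variable and flags those above a threshold. The variable names and records are hypothetical, missing values are assumed to be stored as `None`, and the threshold is simply the figure discussed in the text rather than a universal rule.

```python
def missing_proportions(records, variables):
    """Proportion of records with a missing (None) value, per variable."""
    if not records:
        raise ValueError("records must not be empty")
    n = len(records)
    return {
        var: sum(1 for rec in records if rec.get(var) is None) / n
        for var in variables
    }


def flag_high_missingness(records, variables, threshold=0.10):
    """Variables whose share of missing values exceeds the threshold."""
    proportions = missing_proportions(records, variables)
    return sorted(var for var, p in proportions.items() if p > threshold)


# Hypothetical survey records: income is missing for 3 of 10 respondents,
# age for 1 of 10, and the symptom score for none.
records = [
    {"age": 20 + i, "income": None if i in (1, 4, 7) else 30000, "score": 5}
    for i in range(10)
]
records[0]["age"] = None

proportions = missing_proportions(records, ["age", "income", "score"])
flagged = flag_high_missingness(records, ["age", "income", "score"])
print(proportions, flagged)
```

A count like this only shows how much is missing. Whether the data are missing at random still has to be judged by comparing those who did and did not respond, which is where statistical advice may be needed.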

Reporting Standards in Quantitative Research

There are a range of reporting standards that provide guidance on the information that should be included in manuscripts describing quantitative research. Table 2 provides an overview of standards including the American Psychological Association Journal Article Reporting Standards (JARS) for quantitative research in psychology and its extensions [40], the Consolidated Standards of Reporting Trials (CONSORT) [32], and the Strengthening The Reporting of Observational studies in Epidemiology (STROBE) [35]. When conducting peer review, it is important to use the relevant reporting guideline to check that key information is present and logically presented. Quantitative studies are often published in journals with limited word counts, but the evidence-based rationale for the study should still be established clearly and concisely. There should be sufficient justification and description of how and when data were collected, what type of target population was involved, and who the participants were, in terms of their age, gender, race or ethnicity, and any clinical or social characteristics that might affect the generalisability of the study findings. Authors should also clearly justify the analyses undertaken and report the results clearly according to expected standards. Almost always, there should be a clear distinction between results and discussion with interpretation undertaken later in the discussion section. The discussion should consider the results and their implications in the context of existing research and theory, along with practice, policy, and future research implications, where relevant. Issues often noted by editors include authors simply restating the results in the discussion without linking them to other relevant literature, or extrapolating well beyond their results.

Risk of bias tools can also help pinpoint potential sources of bias. Risk of bias refers to the likelihood that a study may report misleading results; hence, risk of bias tools assess factors that can introduce bias, such as the methods of participant selection, allocation to groups, and outcome assessment and reporting. Risk of bias tools vary according to the study methodology because they assess specific components of studies. For example, there are specific tools to assess randomized [48] and non-randomised trials [49].

Section 3. Literature Reviews

Literature reviews provide a method to synthesise findings from quantitative and qualitative studies to answer specific questions about what is known about a particular topic. The value of literature reviews lies in their ability to provide a summary of current knowledge that is more comprehensive than any single study. Review purposes range from informing clinical decision-making to setting research priorities. Literature reviews can be undertaken to summarize, synthesize, appraise, or scope primary quantitative or qualitative published or unpublished literature. The current paper focuses on reviews that use a systematic search, as these reviews are increasingly prioritised for publication by journals. Table 3 provides an overview of common review types that use systematic searches. To reduce bias and increase transparency of data collection and analysis, reviews are prospectively registered (e.g., PROSPERO, Open Science Framework [50, 51]).

Key to peer review is checking there is a rationale for the selected review type. This selection is informed by the research aim, and each review type differs somewhat in its data collection, analysis, and synthesis. Systematic reviews use a systematic approach to identifying relevant studies and aim to explain or analyze the available evidence [52,53,54,55]. Examples include understanding the effectiveness of interventions like stigma reduction methods for substance use disorders [56] or understanding whether the concentration of THC in cannabis has changed over time [57]. Meta-analyses use a statistical approach to combine results from two or more studies. For example, meta-analyses can be used to determine the average rate of occurrence across settings or populations, such as the rates of help-seeking by people who gamble at different levels of gambling risk [58]. All meta-analyses are based on a systematic review but not all systematic reviews use a meta-analysis. Rapid reviews are pragmatic, time-sensitive, and emerge through stakeholder consultation [8, 59, 60]. Rapid reviews conducted during the recent pandemic have helped summarize the literature on topics such as the impact of COVID-19 public health responses on the risk for illicit drug overdose [61]. Scoping reviews use a systematic approach to data identification but are used to map the type and amount of evidence on a new or emerging area or to clarify definitions or concepts in the literature [62,63,64,65,66]. Unlike other review types, scoping reviews do not answer questions about feasibility, appropriateness, meaningfulness or effectiveness and, as such, should not be synthesising or reinterpreting the evidence [67]. An umbrella review, or review of systematic reviews, can be helpful when multiple systematic reviews have been conducted on the same topic. Umbrella reviews can explain and contrast similarities and differences across studies and synthesise the findings into a single summary [68, 69]. For example, there is a large body of evidence on the impact of advertising on gambling-related harm, and synthesizing this evidence has the potential to affect policy change [70].

Data Collection in Literature Reviews

Peer review of literature reviews includes careful examination of data collection methods. Data in literature reviews are secondary in that they are identified and extracted from a set of previous studies. Data are collected through systematic searches for published or unpublished literature. A systematic approach to identifying data is needed when the review purpose is to provide a comprehensive account of the available literature. The development of a systematic approach starts with finely developed eligibility criteria that are directly linked to the research aim and often relate to participants, interventions, comparisons, and outcomes [52, 54]. The inclusion and exclusion criteria are informed and justified by previous studies, such as restricting the review to prospective studies only or adolescent samples or solely unpublished studies. Eligibility can be narrow, like when the aim is to understand how marketing impacts binge drinking for adolescents [71] or broader, like the risk and protective factors for alcohol and tobacco-related disorders [72].

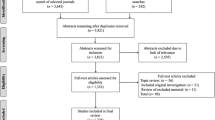

To locate relevant evidence, a search strategy is devised in conjunction with content or information management specialists [67, 73]. The search strategy details which databases will be searched and with which keywords. The selection of databases depends on the research aims and topic, but searching at least four databases helps ensure that relevant studies are located [73, 74]. This combination could include Web of Science Core Collection, PubMed, Embase, MEDLINE, and Google Scholar, as well as domain-specific resources like PsycINFO for psychological studies, CINAHL for health-focused reviews, and the Cochrane Registry database for clinical trials. Databases do not list exactly the same journals, and there is often a lag between publication and indexing, which is why the strategy starts with multiple databases [74]. The Preferred Reporting Items for Systematic reviews and Meta-Analyses literature search extension (PRISMA-S), a guide specifically developed for systematic searches, suggests a full list of unpublished sources (i.e., grey literature) to be searched, such as registries, and recommends that citation searches through reference lists are reported [75, 76]. Grey literature searches can include databases such as registries or repositories, Google searches, targeted websites, and consultation with experts [77]. Often in grey literature searches, there is no abstract, and instead, the executive summary or table of contents is screened. When conducting a Google or Google Scholar search, usually the number of records screened is limited (e.g., the first 100 records on a specific date), which should be justified and noted in the review [77].

Peer review should check that each step of the data collection process is documented in sufficient detail to enable replication of the study. Steps include extracting identified records into one of the purpose-built review or referencing systems (e.g., Covidence, Rayyan, EndNote) or a spreadsheet like Microsoft Excel and removing duplicates. The title and abstract of each record are assessed against the study eligibility criteria by one or more reviewers. A shortlist of studies that may meet the eligibility criteria is then fully screened by reviewing the full text. Data from studies meeting the eligibility criteria are extracted into a standardized spreadsheet or purpose-built review system (e.g., Covidence) reflective of the research aims, and commonly include study characteristics, such as population size, study focus, and setting, as well as data reflective of the research question, such as number of people with an outcome per group [67, 75]. More rigorous systematic reviews employ double data extraction by two researchers, with conflicts in decisions resolved through researcher discussion [78].
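The duplicate-removal step described above can be sketched in a few lines. This is a simplified illustration only: it assumes each record is a dictionary with optional `doi`, `title`, and `year` fields, and it matches on the DOI when present or otherwise on a normalized title plus year. The records, titles, and DOIs below are invented. Purpose-built tools use their own matching rules, and this sketch will miss a duplicate where one copy carries a DOI and the other does not.

```python
import re


def record_key(record):
    """Matching key: the DOI if present, otherwise normalized title plus year."""
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return ("doi", doi)
    # Lowercase the title and collapse punctuation and whitespace to spaces.
    title = re.sub(r"[^a-z0-9]+", " ", (record.get("title") or "").lower()).strip()
    return ("title", title, record.get("year"))


def deduplicate(records):
    """Keep the first record seen for each matching key, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = record_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical records exported from several databases.
records = [
    {"title": "Stigma reduction for substance use disorders", "year": 2020, "doi": "10.1000/ABC"},
    {"title": "Stigma Reduction for Substance Use Disorders.", "year": 2020, "doi": "10.1000/abc"},
    {"title": "Help-seeking among people who gamble", "year": 2021, "doi": ""},
    {"title": "Help seeking among people who gamble", "year": 2021, "doi": None},
    {"title": "THC concentration over time", "year": 2019, "doi": None},
]
unique = deduplicate(records)
print(len(records), "records identified;", len(unique), "after duplicates removed")
```

Whatever tool is used, the counts before and after this step are what a reviewer should expect to see reported.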

Data Analysis in Literature Reviews

When conducting peer review of literature reviews, it is helpful to examine how data have been synthesised and that the approach is consistent with the review type. Data analysis involves the synthesis or merging of data from included studies on each key variable through a narrative summary, critical appraisal, and, where appropriate, statistical calculation of the size of the effect via a meta-analysis [54, 79]. A narrative summary describes the results for each variable with a combination of frequency counts and thematic grouping, allowing for new insights. Systematic reviews, meta-analyses, rapid reviews, and reviews of reviews include a critical appraisal to assess the quality of the studies (see Table 3 for an overview). Assessing quality helps with the interpretation of findings as it provides an indicator of internal and external validity of included studies [80]. Meta-analyses produce a statistical summary of the included studies and use subgroup and sensitivity analyses to examine the effect with and without certain variables [54, 79, 81]. For example, in a study looking at global rates of self-exclusion from gambling venues using population prevalence data, an alternative rate was estimated by limiting the analysis to data extracted from higher-quality studies [82].
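The statistical summary and sensitivity analysis described above can be illustrated with a minimal inverse-variance, fixed-effect pooling sketch. It is one of several possible pooling models and is not presented as the approach used in any cited review. The effect sizes, standard errors, and quality labels are invented, and the function name is hypothetical. Random-effects models, which many meta-analyses use, add a between-study variance term that is omitted here.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Inverse-variance fixed-effect pooling with Cochran's Q and I-squared."""
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("effects and std_errors must be non-empty and equal length")
    weights = [1 / se ** 2 for se in std_errors]  # more precise studies weigh more
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    # Cochran's Q: weighted squared deviations of study effects from the pooled effect.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I-squared: share of variability beyond what chance alone would produce.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, pooled_se, q, i_squared


# Hypothetical study-level effects with standard errors and a quality rating.
studies = [
    {"effect": 0.2, "se": 0.1, "quality": "high"},
    {"effect": 0.4, "se": 0.2, "quality": "high"},
    {"effect": 0.6, "se": 0.1, "quality": "low"},
]

pooled_all = pool_fixed_effect(
    [s["effect"] for s in studies], [s["se"] for s in studies]
)

# Sensitivity analysis: repeat the pooling with higher-quality studies only.
high = [s for s in studies if s["quality"] == "high"]
pooled_high = pool_fixed_effect(
    [s["effect"] for s in high], [s["se"] for s in high]
)
print(pooled_all, pooled_high)
```

Comparing the two pooled estimates shows how much the summary depends on the lower-quality study, which is the kind of check a reviewer would look for in a sensitivity analysis.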

Reporting Standards in Literature Reviews

The role of peer review of literature reviews is to assess the quality of the manuscript against specific reporting standards. The main standard is PRISMA, which offers guidelines for systematic reviews and meta-analyses [83]. There are PRISMA extensions that guide reporting for different types of reviews, from diagnostic tests to health equity and mixed methods reviews that include both qualitative and quantitative data. There are also specific requirements for reporting systematic reviews that contain qualitative data only and that use qualitative methods for data analysis and synthesis [84]. Rapid reviews streamline the search strategy so transparent reporting is important [8, 59, 60, 85]. Presentation of the systematic search uses a flow chart that summarizes the count at each step [83]. The PRISMA flowchart differs according to the type of review and the sources used to identify studies, such as websites, organisations, and reference list searching. Data synthesis almost always involves a table of the characteristics of included studies that is discussed in the text, and where there is a meta-analysis, results are presented as a forest plot, a graphical presentation that summarizes individual and pooled study results [54, 79]. Given scoping reviews aim to map the available literature, findings can be presented as a numerical count in a chart, table, or graphic [67].
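Because the flow chart reports a count at each step, a reviewer can check that the numbers add up. The sketch below does this for a deliberately simplified flow with a single search stream; the field names and counts are hypothetical, and the full PRISMA diagram contains additional boxes (for example, reports that could not be retrieved) that are not modelled here.

```python
def check_flow_counts(flow):
    """Return a list of arithmetic inconsistencies in a simplified search flow."""
    problems = []

    expected_screened = flow["records_identified"] - flow["duplicates_removed"]
    if flow["records_screened"] != expected_screened:
        problems.append(
            f"screened: reported {flow['records_screened']}, expected {expected_screened}"
        )

    expected_full_text = flow["records_screened"] - flow["excluded_title_abstract"]
    if flow["full_text_assessed"] != expected_full_text:
        problems.append(
            f"full text: reported {flow['full_text_assessed']}, expected {expected_full_text}"
        )

    # Full-text exclusions are itemised by reason, so sum across the reasons.
    excluded_full_text = sum(flow["full_text_exclusions"].values())
    expected_included = flow["full_text_assessed"] - excluded_full_text
    if flow["studies_included"] != expected_included:
        problems.append(
            f"included: reported {flow['studies_included']}, expected {expected_included}"
        )
    return problems


# Hypothetical counts as they might be read off a flow chart.
flow = {
    "records_identified": 1250,
    "duplicates_removed": 250,
    "records_screened": 1000,
    "excluded_title_abstract": 900,
    "full_text_assessed": 100,
    "full_text_exclusions": {"wrong population": 40, "wrong outcome": 35},
    "studies_included": 25,
}
problems = check_flow_counts(flow)
problems_if_misreported = check_flow_counts({**flow, "studies_included": 30})
print(problems, problems_if_misreported)
```

A mismatch does not necessarily indicate an error in the review, since studies added from reference lists or other sources can enter at later steps, but it is a prompt to ask the authors to clarify.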

Reporting standards are important for replication and for assessing the quality of the evidence. For example, a review of the methodological quality of reporting in systematic reviews on e-cigarettes and cigarette smoking found that almost all reviews were of low quality because they omitted key content needed for replication, such as how the search was conducted, and because they lacked pre-registration [86]. Key points for peer reviewers to check are that the inclusion and exclusion criteria reflect the research aims and that a protocol or plan was made prior to starting the review.

A risk of bias tool, such as those mentioned in the section on quantitative research [48, 49], should also be applied in systematic reviews or meta-analyses that seek to conduct a comprehensive analysis of the quality of available literature or that aggregate data. Peer reviewers can also use risk of bias tools specifically designed for systematic reviews, such as AMSTAR 2 [87], to identify potential sources of bias within such reviews.

Conclusions

The best peer reviews are those that are balanced, fair, helpful, and directed towards the research. For this reason, peer review is most useful when it follows established guidelines, avoids personal preferences, and refers to ‘the research’ rather than ‘the author’. Furthermore, peer review is not about deciding whether a submission fits the journal scope, but instead involves providing the editor with an expert assessment of the submitted research. Best practice peer review identifies study strengths as well as weaknesses, such as poorly defined selection criteria that are not in line with the research aims.

Researchers are often called on to peer review studies on topics that are consistent with their background, but that use a research approach or methods with which they are less well acquainted. The purpose of this review was to provide information to support peer review in these situations, including useful links and resources to guide the reader across these three commonly used approaches. In addition to the presented content, peer reviewers may have to grapple with new methods like the use of AI or studies to develop consensus guidelines, but we believe that the same principles apply irrespective of the methods: consult the relevant reporting guidelines and consider the degree to which the submission has internal logic. That is, have the authors articulated a clear evidence-based rationale for the research and the methods used? The reviewer then considers the degree to which the research has been conducted as intended and whether the discussion advances knowledge and locates the new findings within what is already known.

Data Availability

No datasets were generated or analysed during the current study.

References

Montero I, León OG. A guide for naming research studies in psychology. Int J Clin Health Psychol. 2007;7(3):847–62.

Neale J, Allen D, Coombes L. Qualitative research methods within the addictions. Addiction. 2005.

O’Brien BC, Harris IB, Beckman TJ, Reed DA, Cook DA. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med. 2014;89(9):1245–51.

Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349–57. Guidelines for reporting qualitative studies that focus on interviews and focus groups.

Rhodes T, Coomber R. Qualitative methods and theory in addictions research. Addict Res Methods. 2010:59–78.

Maher L, Dertadian G. Qualitative research. Addiction. 2018;113(1):167–72.

Braun V, Clarke V. To saturate or not to saturate? Questioning data saturation as a useful concept for thematic analysis and sample-size rationales. Qualitative Res Sport Exerc Health. 2021;13(2):201–16.

King VJ, Stevens A, Nussbaumer-Streit B, Kamel C, Garritty C. Paper 2: performing rapid reviews. Syst Reviews. 2022;11(1):151.

Mardani M, Alipour F, Rafiey H, Fallahi-Khoshknab M, Arshi M. Challenges in addiction-affected families: a systematic review of qualitative studies. BMC Psychiatry. 2023;23(1):439.

Sanchez S, Kaufman P, Pelletier H, Baskerville B, Feng P, O’Connor S, et al. Is vaping cessation like smoking cessation? A qualitative study exploring the responses of youth and young adults who vape e-cigarettes. Addict Behav. 2021;113:106687.

Bijker R, Merkouris SS, Dowling NA, Rodda SN. The utility of ChatGPT in conducting qualitative content analysis: an analysis of web-based data. Under review. 2024.

Elo S, Kyngas H. The qualitative content analysis process. J Adv Nurs. 2008;62(1):107–15. https://doi.org/10.1111/j.1365-2648.2007.04569.x

Clarke V, Braun V. Thematic analysis. J Posit Psychol. 2017;12(3):297–8.

Iyahen EO, Omoruyi OO, Rowa-Dewar N, Dobbie F. Exploring the barriers and facilitators to the uptake of smoking cessation services for people in treatment or recovery from problematic drug or alcohol use: a qualitative systematic review. PLoS ONE. 2023;18(7):e0288409.

Braun V, Clarke V. Reflecting on reflexive thematic analysis. Qualitative Res Sport Exerc Health. 2019;11(4):589–97.

Braun V, Clarke V. One size fits all? What counts as quality practice in (reflexive) thematic analysis? Qualitative Res Psychol. 2021;18(3):328–52.

Mayring P. Qualitative content analysis: theoretical foundation, basic procedures and software solution. 2014.

Elo S, Kääriäinen M, Kanste O, Pölkki T, Utriainen K, Kyngäs H. Qualitative content analysis: a focus on trustworthiness. SAGE open. 2014;4(1):2158244014522633.

Shaffer PM, Ladouceur R, Williams PM, Wiley RC, Blaszczynski A, Shaffer HJ. Gambling research and funding biases. J Gambl Stud. 2019;35:875–86.

Long HA, French DP, Brooks JM. Optimising the value of the critical appraisal skills programme (CASP) tool for quality appraisal in qualitative evidence synthesis. Res Methods Med Health Sci. 2020;1(1):31–42.

Neale J, Hunt G, Lankenau S, Mayock P, Miller P, Sheridan J, et al. Addiction journal is committed to publishing qualitative research. Addiction. 2013;108(3):447–9.

Neale J, West R. Guidance for reporting qualitative manuscripts. Addiction (Abingdon England). 2015;110(4):549–50.

Clark JP. 15: How to peer review a qualitative manuscript. Peer Review in Health Sciences. BMJ Books, London, UK. 2003:219–35.

Schulz KF, Grimes DA. Generation of allocation sequences in randomised trials: chance, not choice. Lancet. 2002;359(9305):515–9. Explanation of randomised trials which is part of a plain language series of reviews on quantitative research methodology in the Lancet Journal.

Tucker JA, Roth DL. Extending the evidence hierarchy to enhance evidence-based practice for substance use disorders. Addiction. 2006;101(7):918–32.

McKee M, Britton A, Black N, McPherson K, Sanderson C, Bain C. Interpreting the evidence: choosing between randomised and non-randomised studies. BMJ. 1999;319(7205):312–5.

Lu CY. Observational studies: a review of study designs, challenges and strategies to reduce confounding. Int J Clin Pract. 2009;63(5):691–7.

Breslow N. Design and analysis of case-control studies. Annu Rev Public Health. 1982;3(1):29–54.

Song JW, Chung KC. Observational studies: cohort and case-control studies. Plast Reconstr Surg. 2010;126(6):2234–42.

Wang X, Cheng Z. Cross-sectional studies: strengths, weaknesses, and recommendations. Chest. 2020;158(1):S65–71.

Huebschmann AG, Leavitt IM, Glasgow RE. Making health research matter: a call to increase attention to external validity. Annu Rev Public Health. 2019;40(1):45–63.

Altman DG, Schulz KF, Moher D, Egger M, Davidoff F, Elbourne D, et al. The revised CONSORT statement for reporting randomized trials: explanation and elaboration. Ann Intern Med. 2001;134(8):663–94.

Young AF, Powers JR, Bell SL. Attrition in longitudinal studies: who do you lose? Aust N Z J Public Health. 2006;30(4):353–61.

Eysenbach G. The law of attrition. J Med Internet Res. 2005;7(1):e402.

Vandenbroucke JP, von Elm E, Altman DG, Gøtzsche PC, Mulrow CD, Pocock SJ, et al. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): explanation and elaboration. Ann Intern Med. 2007;147(8):W163–94.

McCabe SE, Arterberry BJ, Dickinson K, Evans-Polce RJ, Ford JA, Ryan JE, et al. Assessment of changes in alcohol and marijuana abstinence, co-use, and use disorders among US young adults from 2002 to 2018. JAMA Pediatr. 2021;175(1):64–72.

Mushquash CJ, Stewart SH, Mushquash AR, Comeau MN, McGrath PJ. Personality traits and drinking motives predict alcohol misuse among Canadian aboriginal youth. Int J Mental Health Addict. 2014;12:270–82.

Humphreys K, Weisner C. Use of exclusion criteria in selecting research subjects and its effect on the generalizability of alcohol treatment outcome studies. Am J Psychiatry. 2000;157(4):588–94.

Coghlan D, Brydon-Miller M. The SAGE encyclopedia of action research. Sage; 2014.

Appelbaum M, Cooper H, Kline RB, Mayo-Wilson E, Nezu AM, Rao SM. Journal article reporting standards for quantitative research in psychology: The APA Publications and Communications Board task force report. American Psychologist. 2018;73(1):3. Guidelines for reporting quantitative research for research in psychology.

McLellan AT, Luborsky L, Woody GE, O’Brien CP. An improved diagnostic evaluation instrument for substance abuse patients: the Addiction Severity Index. J Nerv Ment Dis. 1980;168(1):26–33.

Mäkelä K. Studies of the reliability and validity of the Addiction Severity Index. Addiction. 2004;99(4):398–410.

Baggio S, Iglesias K, Rousson V. Modeling count data in the addiction field: some simple recommendations. Int J Methods Psychiatr Res. 2018;27(1):e1585.

Bennett DA. How can I deal with missing data in my study? Aust N Z J Public Health. 2001;25(5):464–9.

Lappan SN, Brown AW, Hendricks PS. Dropout rates of in-person psychosocial substance use disorder treatments: a systematic review and meta‐analysis. Addiction. 2020;115(2):201–17.

Gray L. The importance of post hoc approaches for overcoming non-response and attrition bias in population-sampled studies. Soc Psychiatry Psychiatr Epidemiol. 2016;51:155–7.

D’Onofrio BM, Sjölander A, Lahey BB, Lichtenstein P, Öberg AS. Accounting for confounding in observational studies. Ann Rev Clin Psychol. 2020;16(1):25–48.

Higgins JP, Savović J, Page MJ, Elbers RG, Sterne JA. Assessing risk of bias in a randomized trial. Cochrane handbook for systematic reviews of interventions. 2019:205–28.

Sterne JA, Hernán MA, McAleenan A, Reeves BC, Higgins JP. Assessing risk of bias in a non-randomized study. Cochrane handbook for systematic reviews of interventions. 2019:621–41.

Pieper D, Rombey T. Where to prospectively register a systematic review. Syst Reviews. 2022;11(1):8.

Stewart L, Moher D, Shekelle P. Why prospective registration of systematic reviews makes sense. Syst Reviews. 2012;1:1–4.

Khan KS, Kunz R, Kleijnen J, Antes G. Five steps to conducting a systematic review. J R Soc Med. 2003;96(3):118–21.

Munn Z, Stern C, Aromataris E, Lockwood C, Jordan Z. What kind of systematic review should I conduct? A proposed typology and guidance for systematic reviewers in the medical and health sciences. BMC Med Res Methodol. 2018;18:1–9. Helpful information for determining the difference between systematic reviews and scoping reviews and the limitations of each method.

Uman LS. Systematic reviews and meta-analyses. J Can Acad Child Adolesc Psychiatry. 2011;20(1):57.

Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Inform Libr J. 2009;26(2):91–108.

Livingston JD, Milne T, Fang ML, Amari E. The effectiveness of interventions for reducing stigma related to substance use disorders: a systematic review. Addiction. 2012;107(1):39–50.

Freeman TP, Craft S, Wilson J, Stylianou S, ElSohly M, Di Forti M, et al. Changes in delta-9‐tetrahydrocannabinol (THC) and cannabidiol (CBD) concentrations in cannabis over time: systematic review and meta‐analysis. Addiction. 2021;116(5):1000–10.

Bijker R, Booth N, Merkouris SS, Dowling NA, Rodda SN. Global prevalence of help-seeking for problem gambling: a systematic review and meta‐analysis. Addiction. 2022;117(12):2972–85.

Garritty C, Gartlehner G, Nussbaumer-Streit B, King VJ, Hamel C, Kamel C, et al. Cochrane Rapid Reviews Methods Group offers evidence-informed guidance to conduct rapid reviews. J Clin Epidemiol. 2021;130:13–22.

Haby MM, Chapman E, Clark R, Barreto J, Reveiz L, Lavis JN. What are the best methodologies for rapid reviews of the research evidence for evidence-informed decision making in health policy and practice: a rapid review. Health Res Policy Syst. 2016;14:1–12.

Nguyen T, Buxton JA. Pathways between COVID-19 public health responses and increasing overdose risks: a rapid review and conceptual framework. Int J Drug Policy. 2021;93:103236.

Fu J, Li C, Zhou C, Li W, Lai J, Deng S, et al. Methods for analyzing the contents of social media for health care: scoping review. J Med Internet Res. 2023;25:e43349.

Munn Z, Peters MD, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018;18:1–7.

Peters MD, Godfrey CM, Khalil H, McInerney P, Parker D, Soares CB. Guidance for conducting systematic scoping reviews. JBI Evid Implement. 2015;13(3):141–6.

Peters MD, Marnie C, Tricco AC, Pollock D, Munn Z, Alexander L, et al. Updated methodological guidance for the conduct of scoping reviews. JBI Evid Synthesis. 2020;18(10):2119–26.

Tricco AC, Lillie E, Zarin W, O’Brien K, Colquhoun H, Kastner M, et al. A scoping review on the conduct and reporting of scoping reviews. BMC Med Res Methodol. 2016;16:1–10.

Pollock D, Peters MD, Khalil H, McInerney P, Alexander L, Tricco AC, et al. Recommendations for the extraction, analysis, and presentation of results in scoping reviews. JBI Evid Synthesis. 2023;21(3):520–32.

Gates M, Gates A, Pieper D, Fernandes RM, Tricco AC, Moher D, et al. Reporting guideline for overviews of reviews of healthcare interventions: development of the PRIOR statement. BMJ. 2022;378.

Pollock M, Fernandes RM, Pieper D, Tricco AC, Gates M, Gates A, et al. Preferred reporting items for overviews of reviews (PRIOR): a protocol for development of a reporting guideline for overviews of reviews of healthcare interventions. Syst Reviews. 2019;8:1–9.

McGrane E, Wardle H, Clowes M, Blank L, Pryce R, Field M, et al. What is the evidence that advertising policies could have an impact on gambling-related harms? A systematic umbrella review of the literature. Public Health. 2023;215:124–30.

Jernigan D, Noel J, Landon J, Thornton N, Lobstein T. Alcohol marketing and youth alcohol consumption: a systematic review of longitudinal studies published since 2008. Addiction. 2017;112:7–20.

Solmi M, Civardi S, Corti R, Anil J, Demurtas J, Lange S, et al. Risk and protective factors for alcohol and tobacco related disorders: an umbrella review of observational studies. Neurosci Biobehavioral Reviews. 2021;121:20–8.

Bramer WM, Rethlefsen ML, Kleijnen J, Franco OH. Optimal database combinations for literature searches in systematic reviews: a prospective exploratory study. Syst Reviews. 2017;6:1–12. Practical information on developing a comprehensive search strategy using combinations of databases for systematic searches.

Gusenbauer M, Haddaway NR. Which academic search systems are suitable for systematic reviews or meta-analyses? Evaluating retrieval qualities of Google Scholar, PubMed, and 26 other resources. Res Synthesis Methods. 2020;11(2):181–217.

Rethlefsen ML, Kirtley S, Waffenschmidt S, Ayala AP, Moher D, Page MJ, et al. PRISMA-S: an extension to the PRISMA statement for reporting literature searches in systematic reviews. Syst Reviews. 2021;10:1–19.

Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–73.

Godin K, Stapleton J, Kirkpatrick SI, Hanning RM, Leatherdale ST. Applying systematic review search methods to the grey literature: a case study examining guidelines for school-based breakfast programs in Canada. Syst Reviews. 2015;4:1–10.

Dowling N, Merkouris S, Dias S, Rodda S, Manning V, Youssef G, et al. The diagnostic accuracy of brief screening instruments for problem gambling: a systematic review and meta-analysis. Clin Psychol Rev. 2019;74:101784.

Lipsey MW, Wilson DB. Practical meta-analysis. SAGE publications, Inc; 2001.

Higgins JP, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343.

Egger M, Smith GD, Phillips AN. Meta-analysis: principles and procedures. BMJ. 1997;315(7121):1533–7.

Bijker R, Booth N, Merkouris SS, Dowling NA, Rodda SN. International prevalence of self-exclusion from gambling: a systematic review and meta-analysis. Curr Addict Rep. 2023;10(4):844–59.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372. Guidelines for reporting a systematic review, detailing each component expected in a submitted manuscript.

Tong A, Flemming K, McInnes E, Oliver S, Craig J. Enhancing transparency in reporting the synthesis of qualitative research: ENTREQ. BMC Med Res Methodol. 2012;12:1–8.

Hamel C, Michaud A, Thuku M, Skidmore B, Stevens A, Nussbaumer-Streit B, et al. Defining rapid reviews: a systematic scoping review and thematic analysis of definitions and defining characteristics of rapid reviews. J Clin Epidemiol. 2021;129:74–85.

Kim MM, Pound L, Steffensen I, Curtin GM. Reporting and methodological quality of systematic literature reviews evaluating the associations between e-cigarette use and cigarette smoking behaviors: a systematic quality review. Harm Reduct J. 2021;18:1–13.

Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358.

Acknowledgements

Thank you to Luke Clarke for his feedback on the article aims and objectives.

Funding

No funds, grants, or other support was received for this research.

Open Access funding enabled and organized by CAUL and its Member Institutions.

Author information

Contributions

SR led the study conception and design. All authors contributed to the literature search and data analysis. SR, ND and SM prepared the first draft and RB, JL and SR critically revised the work. All authors read and approved the final manuscript. The Section Editors for the topical collection Gambling are ND and SM. Please note that Section Editors ND and SM were not involved in the editorial process of this article as they are co-authors.

Ethics declarations

Conflict of Interest

The authors have no conflicts of interest to declare in relation to this article.

Declaration of Interest

The 3-year declaration of interest statement of this research team is as follows: In the last three years, SR, ND, SM and JL have received research and consultancy funding from multiple sources, including via hypothecated taxes from gambling revenue. SR has received research funding from the Health Research Council of New Zealand, New Zealand Ministry of Health, Ember Korowai Takitini and from international sources including Victorian Responsible Gambling Foundation, New South Wales Office of Responsible Gambling. ND has received research funding from the Victorian Responsible Gambling Foundation, New South Wales Office of Responsible Gambling, Tasmanian Department of Treasury and Finance, Gambling Research Australia, Svenska Spel’s Independent Research Council, Health Research Council of New Zealand, and New Zealand Ministry of Health. SM has received research funding from the Victorian Responsible Gambling Foundation, New South Wales Office of Responsible Gambling, Gambling Research Australia, Health Research Council of New Zealand and the New Zealand Ministry of Health. She has been the recipient of a New South Wales Office of Responsible Gambling Postdoctoral Research Fellowship. JL and CH have received funding from Ministry of Health, New Zealand.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rodda, S.N., Bijker, R., Merkouris, S.S. et al. How to Peer Review Quantitative Studies, Qualitative Studies, and Literature Reviews: Considerations from the ‘Other’ Side. Curr Addict Rep (2024). https://doi.org/10.1007/s40429-024-00594-8
