Abstract

In this study, a shape optimization problem for the two-dimensional stationary Navier–Stokes equations with an artificial boundary condition is considered. The fluid is assumed to flow through a rectangular channel, and the artificial boundary condition is formulated to avoid the possible ill-posedness caused by the usual do-nothing boundary condition. The goal of the optimization problem is to maximize the vorticity of the fluid by determining the shape of an obstacle inside the channel. The shape variation is limited by a perimeter functional and a volume constraint: the perimeter functional acts as a Tikhonov regularizer, and the volume constraint is added to rule out possible topological changes. The shape derivative of the objective functional is formulated using the rearrangement method, and this derivative is then used in gradient descent methods. Additionally, an augmented Lagrangian method and a class of solenoidal deformation fields are considered to enforce volume preservation. Lastly, numerical examples based on gradient descent and the volume preservation methods are presented.


1 Introduction

Partial differential equation (PDE) constrained optimization is among the most active fields in mathematics, mainly due to its applicability in engineering and physics. In particular, the Navier–Stokes system is the subject of many papers because of its ability to closely mimic physical fluid flow. For this reason, several authors, including Colin and Fabrie (2010), Desai and Ito (1994), and Fürsikov et al. (2000), have studied optimal control problems where the controls are designed to steer the fluid dynamics according to a given objective.

In this paper, we are interested in an optimization problem where the control is the shape of the domain rather than functionals. Several authors have considered such problems because of their applicability, for example, in aeronautics (Mohammadi and Pironneau 2004). Moubachir and Zolesio (2006) wrote an extensive introduction to shape optimization problems governed by fluid flows, which are mostly described through the Navier–Stokes equations and whose objectives are mostly formulated as tracking functionals. For a more applied approach to fluid shape design problems, we refer the reader to Mohammadi and Pironneau (2010). Meanwhile, Kasumba and Kunisch (2012) analyzed a vorticity minimization problem that steers the fluid flowing through a channel to exhibit laminar flow. In that work, the authors considered a bounded domain, which compels the imposition of an input function on one end of the channel and a do-nothing boundary condition on the other to capture outflow. However, due to the nonlinear nature of the Navier–Stokes equations, the outflow condition the authors considered can cause non-existence of weak solutions. For this reason, we propose an artificial boundary condition that captures the outflow while ensuring the well-posedness of the state equations.

We also mention that although most shape design problems involving fluid flow are formulated to minimize vorticity or drag, there are works where vorticity maximization is the goal. Such maximization problems are considered applicable, for example, in optimal mixing problems (Eggl and Schmid 2020; Mather et al. 2007). Recently, Goto et al. (2021) also showed that the emergence of vortices has interesting implications in the field of information theory.

With all these, we consider the following shape optimization problem

where D is a hold-all domain which is assumed to be a fixed bounded connected domain in \({\mathbb {R}}^2\), \(\Omega \subset D\) is an open bounded domain, \({\mathcal {G}}({\varvec{u}},\Omega ) : = J({\varvec{u}},\Omega ) + \alpha P(\Omega ),\) J and P are vortex and perimeter functionals, respectively, given by

\(m>0\) is a given constant, and \({|}\Omega {|}:= \int _\Omega {{\,\mathrm{d\!}\,}}x\). Here, \({\varvec{u}}\) is the velocity field of a fluid flowing through a channel with an obstacle. The flow is driven by an input function \({\varvec{g}}\) on the left end of the channel, denoted by \(\Gamma _{\mathrm{{in}}}\), whilst an outflow boundary condition is imposed on the right end of the channel, denoted by \(\Gamma _{\mathrm{{out}}}\). The boundary \(\Gamma _{\mathrm{f}}\) is the boundary of the obstacle submerged in the fluid. The remaining boundaries of the channel are denoted by \(\Gamma _{\mathrm{{w}}}\); on these, together with the obstacle boundary \(\Gamma _{\mathrm{f}}\), a no-slip boundary condition is imposed. For the condition on \(\Gamma _{\mathrm{out}}\), we point out that the usual candidate for capturing outflow is the so-called do-nothing condition; however, this condition is not sufficient to ensure the existence of solutions to the Navier–Stokes equations. Aside from the do-nothing condition, one formulation caught our attention: an artificial boundary condition called the directional do-nothing condition proves to be a good candidate for imposing outflow while ensuring well-posedness of the Navier–Stokes system. However, this condition generates complications for optimization problems, especially when an adjoint approach is considered. In this paper, we therefore consider a boundary condition which we call a convective boundary condition. These conditions are discussed further in the next section.

From here, when we talk about a domain \(\Omega \), we always consider it having the boundary \(\partial \Omega = {\overline{\Gamma }}_{\mathrm{in}}\cup {\overline{\Gamma }}_{\mathrm{w}}\cup {\overline{\Gamma }}_{\mathrm{out}}\cup {\overline{\Gamma }}_{\mathrm{f}}\), and that \(\mathrm {dist}(\partial \Omega \backslash \Gamma _{\mathrm{f}},\Gamma _{\mathrm{f}}) > 0\).

Due to the non-convexity of the vorticity functional J, possible non-existence of an optimal solution is a concern. Nevertheless, we shall show that J is sequentially continuous with respect to the state variables. Combining this with the continuity of the map \(\Omega \mapsto {{\varvec{u}}}\) with respect to some topology on the set of admissible domains, we circumvent the issue of existence of optimal shapes. We also propose a Tikhonov regularization in the form of the perimeter functional and a volume constraint to handle possible topological changes.

To numerically solve the optimization problem, we shall utilize a gradient descent method based on the first-order Eulerian derivative of the shape functional \({\mathcal {G}}\). There are several methods to determine the derivative with respect to shape variations; for example, Gao et al. (2008) utilized a minimax formulation and the chain rule based on the Piola transform (see also the work of Schmidt and Schulz (2011) for the computation of shape derivatives of general functionals). Here, the formulation of the shape derivative is aided by the so-called rearrangement method, which was formalized by Ito et al. (2008). However, this computation does not take into account the volume constraint. This compels us to utilize two methods: the first is the augmented Lagrangian method based on (Nocedal and Wright 2006, Framework 17.3), which was applied to shape optimization problems by Dapogny et al. (2018); the second uses solenoidal deformation fields. Comparing the solutions of the two methods, we recognize a common profile, a bean-shaped obstacle, although the shapes are visually different.

This paper is organized as follows: in the next section, we introduce the governing state equations and their corresponding variational formulation. In Sect. 3, we establish the existence of optimal shapes, where we shall use the \(L^\infty \)-topology on the set of characteristic functions. The sensitivity of the objective functional is analyzed in Sect. 4. Section 5 is dedicated to the numerical treatment, in which we make use of the derivative formulated in Sect. 4. In that section, we also show the convergence of numerical solutions to a manufactured exact solution with respect to the Hausdorff measure, and the \(H^1\) and \(L^2\) norms. Concluding remarks and possible future works are discussed in Sect. 6.

2 Preliminaries

2.1 Governing equations and necessary function spaces

Let \(\Omega \subset D\) be an open and bounded domain. The motion of the fluid is described by the velocity \({\varvec{u}}\) and the pressure p, which satisfy the stationary Navier–Stokes equations given by:

Here, \({\varvec{g}}\) is a divergence-free input function acting on the boundary \(\Gamma _{\mathrm{in}}\). The condition \({\varvec{u}}= 0\) on \(\Gamma _{\mathrm{{f}}}\cup \Gamma _{\mathrm{w}}\) corresponds to the no-slip boundary condition. On the boundary \(\Gamma _{\mathrm{out}}\), we choose a condition that models outflow and yields a good energy estimate, which is crucial both for showing the existence of the velocity \({\varvec{u}}\) and for showing continuity with respect to domain variations.

Usually, the condition imposed to capture fluid outflow is the so-called do-nothing boundary condition. Mathematically, this condition is written by setting the product of the stress tensor and the vector normal to the boundary \(\Gamma _{\mathrm{out}}\) equal to zero, i.e.,

$$\begin{aligned} -p{\varvec{n}} + \nu \partial _{{\varvec{n}}\!}{\varvec{u}} = 0 \quad \text {on } \Gamma _{\mathrm{out}}, \end{aligned}$$

where \(\partial _{{\varvec{n}}\!}{\varvec{u}}:= (\nabla {\varvec{u}}){\varvec{n}}\), and \({\varvec{n}}\) is the outward unit normal vector to \(\Gamma _{\mathrm{out}}\). This condition has been used as the outflow condition in many studies, including that of Gresho (1991).

This condition, however, does not guarantee a good energy estimate, let alone the existence of a weak solution. As such, several alternative weak formulations have been proposed to address this issue (Bruneau and Fabrie 1996; Heywood et al. 1992; Zhou and Saito 2016). To illustrate the severity of the issue, we note that when establishing the existence of a weak solution for the Navier–Stokes equations coupled with the do-nothing boundary condition, the computations give the following expression

Now, if \({\varvec{u}}\) and \({\varvec{v}}\) are identical, which is the case when establishing existence, specifically the coercivity of the left-hand side of the weak formulation, it is imperative to know the sign of the above quantity. This is challenging due to its cubic form. This problem was addressed and circumvented by Bruneau and Fabrie (1996) by introducing several versions of artificial boundary conditions for the Neumann condition. In this paper, we shall utilize one of the conditions introduced in that work, as well as establish its well-posedness.
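
To see where the cubic term comes from, the following sketch may help. It assumes, for simplicity only, homogeneous Dirichlet data on \(\partial \Omega \backslash \Gamma _{\mathrm{out}}\), a divergence-free field \({\varvec{u}}\), viscosity \(\nu \) and body force \({\varvec{f}}\); it also uses the identity stated later in Lemma 3(3). Testing the weak form of the do-nothing problem with \({\varvec{v}}={\varvec{u}}\) then gives

$$\begin{aligned} \nu \Vert \nabla {\varvec{u}}\Vert _{L^2(\Omega )}^2 + \frac{1}{2}\int _{\Gamma _{\mathrm{out}}} ({\varvec{u}}\cdot {\varvec{n}}){|}{\varvec{u}}{|}^2{{\,\mathrm{d\!}\,}}s = ({\varvec{f}},{\varvec{u}})_{\Omega }. \end{aligned}$$

The boundary integral is nonnegative where the fluid leaves the channel (\({\varvec{u}}\cdot {\varvec{n}}\ge 0\)) and negative wherever backflow occurs. Because it is cubic in \({\varvec{u}}\), it cannot be absorbed by the quadratic viscous term without a smallness assumption, so no a priori bound follows for general data.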

We consider the Sobolev spaces \(W^{k,p}(D)\) for any domain \(D\subset {\mathbb {R}}^2\), \(k\ge 0\) and \(p\ge 1\). Note that if \(p=2\), then \(H^k(D)=W^{k,2}(D)\). For any domain \(\Omega \subset {\mathbb {R}}^2\), letting \(\Gamma _0 := \partial \Omega \backslash \Gamma _{\mathrm{out}}\), we define

We denote by \({{\mathbf {H}}}_{\Gamma _{0}}^r(\Omega )\) the closure of \({\mathcal {W}}(\Omega )\) with respect to the norm in \(H^r(\Omega )^2\). By utilizing Meyers–Serrin type arguments it can be easily shown that

To deal with the incompressibility condition, we shall take into account the following solenoidal spaces

The space \({\mathbf {W}}(\Omega )\) is dense in \({\mathbf {V}}(\Omega )\), and the spaces \({\mathbf {V}}(\Omega )\) and \({\mathbf {H}}(\Omega )\) satisfy the Gelfand triple property, i.e., \({\mathbf {V}}(\Omega )\hookrightarrow {\mathbf {H}}(\Omega ) \hookrightarrow {\mathbf {V}}(\Omega )^*\), where the first embedding is dense, continuous and compact. Lastly, for simplicity of notation, we denote by \((\cdot ,\cdot )_{{\mathcal {D}}}\) the \(L^2({\mathcal {D}})\), \(L^2({\mathcal {D}})^2\), or \(L^2({\mathcal {D}})^{2\times 2}\) inner products, where \({\mathcal {D}}\) is any measurable set.

2.2 Weak formulation and existence of solutions

Before we begin, let us first settle the issue of the regularity of the domain. Although the assumption that \(\Omega \) is of class \({\mathcal {C}}^{1}\) is sufficient to ensure the existence of a weak solution to the Navier–Stokes equations, which we shall show later, the resulting solution is only guaranteed to have first-order weak derivatives. For this reason, if we want higher regularity for the solution, then additional regularity must be imposed on the domain. From here on, we say that the domain \(\Omega \) satisfies (\(\hbox {H}_{\Omega }\)) if either of the following assumptions is satisfied:

The reason for mentioning the above regularity assumptions on \(\Omega \) is twofold: one—as referred to before—is for the regularity of the weak solution; and the other reason is to ensure that the extension of the input function \({{\varvec{g}}}\) that satisfies the properties below exists

Of course, if we assume minimal regularity on the domain, e.g., \(\Omega \) is of class \({\mathcal {C}}^{1}\), then we can easily show, by virtue of (Girault and Raviart 1986, Lemma 2.2), that an extension of the input function \({{\varvec{g}}}\in H^{1/2}(\Gamma _{\mathrm{in}})^2\) that satisfies (2) exists and is in \(H^1(\Omega )^2\). However, this is not enough if we wish to have higher regularity of the extension. To be precise, if we wish to extend \({{\varvec{g}}}\in H^{3/2}(\Gamma _{\mathrm{in}})^2\) to \(\Omega \) so that the extension satisfies (2) and is in \(H^2(\Omega )^2\), then the minimal requirement is for the domain to satisfy (\(\hbox {H}_{\Omega })\). To simplify things later, we use the same notation for the input function and its extension. That is, when we refer to the assumption that \({{\varvec{g}}}\in H^m(\Omega )^2\) satisfies (2), we are implicitly saying that the input function is in \(H^{m - 1/2}(\Gamma _{\mathrm{in}})^2\).

By letting \({\tilde{{\varvec{u}}}} = {\varvec{u}}- {\varvec{g}}\), we consider the following variational problem: For a given domain \(\Omega \), find \({\tilde{{\varvec{u}}}}\in {\mathbf {V}}(\Omega )\) such that

where \({\mathbb {A}}:{{\mathbf {V}}}(\Omega )\times {{\mathbf {V}}}(\Omega )\times {{\mathbf {V}}}(\Omega )\rightarrow {\mathbb {R}}\) and \({\mathbb {C}}:{{\mathbf {V}}}(\Omega )\times {{\mathbf {V}}}(\Omega )\times {{\mathbf {V}}}(\Omega )\rightarrow {\mathbb {R}}\) are trilinear forms, respectively, defined as follows:

where the members of \({\mathbb {A}}\) are defined below:

(i) \(a(\cdot ,\cdot )_{\Omega }:{\mathbf {V}}(\Omega )\times {\mathbf {V}}(\Omega )\rightarrow {\mathbb {R}}\) is a bilinear form defined by

$$\begin{aligned} a({\varvec{u}},{\varvec{v}})_{\Omega }=\int _{\Omega }\nabla {\varvec{u}}:\nabla {\varvec{v}}{{\,\mathrm{d\!}\,}}x, \end{aligned}$$

(ii) \(b(\cdot ;\cdot ,\cdot )_\Omega : {\mathbf {V}}(\Omega )\times {\mathbf {V}}(\Omega )\times {\mathbf {V}}(\Omega )\rightarrow {\mathbb {R}}\) is a trilinear form given as

$$\begin{aligned} b({\varvec{w}};{\varvec{u}},{\varvec{v}})_\Omega = \int _\Omega [({\varvec{w}}\cdot \nabla ){\varvec{u}}]\cdot {\varvec{v}}{{\,\mathrm{d\!}\,}}x, \end{aligned}$$

and the action of \(\Phi \in {\mathbf {V}}(\Omega )^*\) is defined as

for all \({\varvec{\varphi }}\in {{\mathbf {V}}}(\Omega )\). Note that the variational equation (3) is achieved from assuming that \({{\varvec{u}}}\) satisfies the boundary condition

We further mention that this boundary condition is among the artificial conditions proposed by Bruneau and Fabrie (1996) for \(\Theta (a) = a\). As far as we are aware, this condition has never been analyzed, let alone considered in an optimization problem. The difficulty lies, as mentioned before, in showing the coercivity of the operators on the left-hand side of the variational equation, and in the non-homogeneous Dirichlet data on \(\Gamma _{\mathrm{in}}\). More straightforward formulations have been considered by several authors (Zhou and Saito 2016; Braack and Mucha 2014) by taking the nonlinear part to be \(({{\varvec{u}}}\cdot {{\varvec{n}}})_{-}{{\varvec{u}}}\), i.e.,

where the notation \((\cdot )_{-}\) is defined as

This automatically gives a coercive left-hand side, and hence the well-posedness of the state equations. However, this formulation is not easy to handle when a variational approach to the optimization problem is used, in particular in the numerical treatment of the adjoint problem.
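
The coercivity claim can be checked in one line. The sketch below assumes the convention \((a)_{-} = \min \{a,0\}\) and \((a)_{+} = \max \{a,0\}\), so that \(a = (a)_{+} + (a)_{-}\), a factor \(\frac{1}{2}\) in front of the boundary term, and homogeneous data on \(\partial \Omega \backslash \Gamma _{\mathrm{out}}\); the cited works may use a different sign convention or scaling. Under these assumptions, testing with the solution itself and using Lemma 3(3) gives

$$\begin{aligned} \nu \Vert \nabla {\varvec{u}}\Vert _{L^2(\Omega )}^2 + b({\varvec{u}};{\varvec{u}},{\varvec{u}})_{\Omega } - \frac{1}{2}\int _{\Gamma _{\mathrm{out}}} ({\varvec{u}}\cdot {\varvec{n}})_{-}{|}{\varvec{u}}{|}^2{{\,\mathrm{d\!}\,}}s = \nu \Vert \nabla {\varvec{u}}\Vert _{L^2(\Omega )}^2 + \frac{1}{2}\int _{\Gamma _{\mathrm{out}}} ({\varvec{u}}\cdot {\varvec{n}})_{+}{|}{\varvec{u}}{|}^2{{\,\mathrm{d\!}\,}}s \ge \nu \Vert \nabla {\varvec{u}}\Vert _{L^2(\Omega )}^2. \end{aligned}$$

A plausible reason for the difficulty in the adjoint problem is that the map \(a\mapsto (a)_{-}\) is not differentiable at \(a=0\), so linearizing the boundary term requires extra care.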

Fortunately, we shall prove a result analogous to (Girault and Raviart 1986, Lemma IV.2.3) that takes into account the boundary integral on \(\Gamma _{\mathrm{out}}\) and helps render the variational problem (3) well-posed. This analogue is stated in Lemma 4 and proven in the Appendix.

We start the analysis by showing—under appropriate conditions—that \(\Phi \in {{\mathbf {V}}}(\Omega )^*\).

Proposition 1

Let \(\Omega \subset {\mathbb {R}}^2\) be of class \({\mathcal {C}}^{1}\), \({{\varvec{f}}}\in L^2(\Omega )^2\) and \({{\varvec{g}}}\in H^1(\Omega )^2\) satisfy (2). Then \(\Phi \in {{\mathbf {V}}}(\Omega )^*\) and

for some constant \({c}>0.\)

Proof

The proof will utilize the compact embeddings \(H^1(\Omega )^2 \hookrightarrow L^q(\partial \Omega )^2\) and \(H^1(\Omega )^2 \hookrightarrow L^q(\Omega )^2\) for \(q\ge 2\) (see for example (Adams and Fournier 2003, Part I, Theorem 6.3)). Indeed, if \({\varvec{\varphi }}\in {{\mathbf {V}}}(\Omega )\), then

where \({c} = \max \{1,\nu , {\tilde{c}}_1/2\}\) and \({\tilde{c}}_1\) is dependent on the \(L^\infty \)-norm of the outward normal vector \({{\varvec{n}}}\) which is bounded due to the regularity assumptions on the domain. Lastly, the linearity follows from the linearity of the action itself. \(\square \)

Any function \({\tilde{{\varvec{u}}}}\in {\mathbf {V}}(\Omega )\) that solves the variational equation (3) is said to be a weak solution to the Navier–Stokes equations (SE) with the boundary condition (BC1). The existence of the solution \({\tilde{{\varvec{u}}}}\) is summarized below.

Theorem 2

Let \(\Omega \) be of class \({\mathcal {C}}^{1},\) \({\varvec{f}}\in L^2(\Omega )^2\), and \({\varvec{g}}\in H^1(\Omega )^2\) satisfy (2). Then there exists a solution \({\tilde{{\varvec{u}}}}\in {\mathbf {V}}(\Omega )\) to the variational problem (3) such that

for some constant \(c>0\).

The proof of the theorem will make use of the following lemmata whose proofs can be easily redone and can be based for example on (Temam 2001, Lemma II.1.8) and (Girault and Raviart 1986, Lemma IV.2.3).

Lemma 3

For \({\varvec{u}},{\varvec{v}},{\varvec{w}}\in {\mathbf {V}}(\Omega )\), the trilinear form b satisfies the following properties.

1. \({|}b({\varvec{u}};{\varvec{v}},{\varvec{w}})_{\Omega }{|} \le c\Vert {\varvec{u}}\Vert _{{\mathbf {H}}(\Omega )}^{1/2}\Vert {\varvec{u}}\Vert _{{\mathbf {V}}(\Omega )}^{1/2}\Vert {\varvec{v}}\Vert _{{\mathbf {H}}(\Omega )}^{1/2}\Vert {\varvec{v}}\Vert _{{\mathbf {V}}(\Omega )}^{1/2}\Vert {\varvec{w}}\Vert _{{\mathbf {V}}(\Omega )}\);

2. \(b({\varvec{u}};{\varvec{v}},{\varvec{w}})_{\Omega } + b({\varvec{u}};{\varvec{w}},{\varvec{v}})_{\Omega } = \displaystyle \int _{\Gamma _{\mathrm{out}}} ({\varvec{u}}\cdot {\varvec{n}})({\varvec{v}}\cdot {\varvec{w}}){{\,\mathrm{d\!}\,}}s\);

3. \(b({\varvec{u}};{\varvec{v}},{\varvec{v}})_{\Omega } = \displaystyle \frac{1}{2}\int _{\Gamma _{\mathrm{out}}} ({\varvec{u}}\cdot {\varvec{n}}){|}{\varvec{v}}{|}^2{{\,\mathrm{d\!}\,}}s\).
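
Properties 2 and 3 follow from a single integration by parts. As a sketch for smooth fields, assuming that \(\nabla \cdot {\varvec{u}}=0\) in \(\Omega \) and that the fields vanish on \(\partial \Omega \backslash \Gamma _{\mathrm{out}}\), as is expected of members of \({\mathbf {V}}(\Omega )\), one has

$$\begin{aligned} b({\varvec{u}};{\varvec{v}},{\varvec{w}})_{\Omega } + b({\varvec{u}};{\varvec{w}},{\varvec{v}})_{\Omega }&= \int _{\Omega } {\varvec{u}}\cdot \nabla ({\varvec{v}}\cdot {\varvec{w}}){{\,\mathrm{d\!}\,}}x \\&= -\int _{\Omega } (\nabla \cdot {\varvec{u}})({\varvec{v}}\cdot {\varvec{w}}){{\,\mathrm{d\!}\,}}x + \int _{\partial \Omega } ({\varvec{u}}\cdot {\varvec{n}})({\varvec{v}}\cdot {\varvec{w}}){{\,\mathrm{d\!}\,}}s \\&= \int _{\Gamma _{\mathrm{out}}} ({\varvec{u}}\cdot {\varvec{n}})({\varvec{v}}\cdot {\varvec{w}}){{\,\mathrm{d\!}\,}}s. \end{aligned}$$

Setting \({\varvec{w}}={\varvec{v}}\) gives property 3, and the general case follows by a density argument.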

Lemma 4

For any \(\gamma >0\) there exists \({{\varvec{w}}}_0 = {{\varvec{w}}}_0(\gamma )\in H^1(\Omega )^2\) such that

Remark 1

We note that the assumption that the input function \({{\varvec{g}}}\) is in \(H^1(\Omega )^2\) is valid due to Lemma 4. That is, \({{\varvec{w}}}_0 = {{\varvec{g}}}\) not only on \(\Gamma _0\) but also inside \(\Omega \). In particular, we also get the estimate

Furthermore, let us also point out that even though the input function is a fixed Dirichlet profile on the boundary \(\Gamma _{\mathrm{in}}\), its extension depends on the domain \(\Omega \). This implies that the extension in \(\Omega \), which we also denote by \({{\varvec{g}}}\), is sensitive to domain deformations.

Aside from the lemmata, it is worth pointing out that the trilinear form b is well defined, i.e., \([({\varvec{u}}\cdot \nabla ){\varvec{v}}]\cdot {\varvec{w}}\in L^1(\Omega )\) whenever \({\varvec{u}},{\varvec{v}},{\varvec{w}}\in {\mathbf {V}}(\Omega )\).

Proof of Theorem 2

The proof uses the Galerkin method with finite-dimensional subspaces \({\mathbf {V}}_n\subset {\mathbf {V}}(\Omega )\). We consider the projected problem on \({\mathbf {V}}_n\), i.e., we seek solutions \({\tilde{{\varvec{u}}}}_n\in {\mathbf {V}}_n\) that satisfy, for all \(\varvec{\varphi }\in {\mathbf {V}}_n\), the equation

To show the existence of solutions \(\tilde{{\varvec{u}}}_n\in {\mathbf {V}}_n\), we shall employ (Girault and Raviart 1986, Corollary IV.1.1), and assume for now the coercivity of \({\mathbb {B}}\) and its sequential weak continuity, which we shall prove later. Let us begin by introducing \(\Psi _n:{\mathbf {V}}_n\rightarrow {\mathbf {V}}_n\) defined by \((\Psi _n({{\varvec{u}}}), {\varvec{\varphi }}) = {\mathbb {B}}({{\varvec{u}}},{\varvec{\varphi }}) - \langle \Phi ,{\varvec{\varphi }} \rangle _{{{\mathbf {V}}}(\Omega )^*\times {{\mathbf {V}}}(\Omega )}\) for any \({\varvec{\varphi }}\in {\mathbf {V}}_n\), where the notation \((\cdot ,\cdot )\) is the inner-product in \({\mathbf {V}}_n\). From this operator, we notice that \(\tilde{{\varvec{u}}}_n\in {\mathbf {V}}_n\) is a solution to (8) if and only if \(\Psi _n(\tilde{{\varvec{u}}}_n) = 0\).

By the sequential weak continuity of \({\mathbb {B}}\) we get that \(\Psi _n\) is continuous. Since \({\mathbf {V}}_n\) is finite dimensional, we can use (Girault and Raviart 1986, Corollary IV.1.1) if we can find a constant \(\alpha > 0\) such that \((\Psi _n({{\varvec{u}}}), {{\varvec{u}}}) \ge 0\) whenever \({{\varvec{u}}}\in {\mathbf {V}}_n\) satisfies \(\Vert {{\varvec{u}}}\Vert _{{\mathbf {V}}(\Omega )} = \alpha \).

From the coercivity of \({\mathbb {B}}\), we get that

where \(c>0\) is the coercivity constant of \({\mathbb {B}}\). So, if we choose \(\alpha = \Vert \Phi \Vert _{{\mathbf {V}}(\Omega )^*}/c\), we get \((\Psi _n({{\varvec{u}}}), {{\varvec{u}}}) \ge 0\) whenever \(\Vert {{\varvec{u}}}\Vert _{{\mathbf {V}}(\Omega )} = \alpha \).
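
The choice of \(\alpha \) can be verified directly. For any \({{\varvec{u}}}\in {\mathbf {V}}_n\), coercivity of \({\mathbb {B}}\) and the definition of the dual norm give

$$\begin{aligned} (\Psi _n({{\varvec{u}}}), {{\varvec{u}}}) = {\mathbb {B}}({{\varvec{u}}},{{\varvec{u}}}) - \langle \Phi ,{{\varvec{u}}} \rangle _{{{\mathbf {V}}}(\Omega )^*\times {{\mathbf {V}}}(\Omega )} \ge c\Vert {{\varvec{u}}}\Vert _{{\mathbf {V}}(\Omega )}^2 - \Vert \Phi \Vert _{{\mathbf {V}}(\Omega )^*}\Vert {{\varvec{u}}}\Vert _{{\mathbf {V}}(\Omega )} = \Vert {{\varvec{u}}}\Vert _{{\mathbf {V}}(\Omega )}\left( c\Vert {{\varvec{u}}}\Vert _{{\mathbf {V}}(\Omega )} - \Vert \Phi \Vert _{{\mathbf {V}}(\Omega )^*}\right) . \end{aligned}$$

The right-hand side vanishes when \(\Vert {{\varvec{u}}}\Vert _{{\mathbf {V}}(\Omega )} = \Vert \Phi \Vert _{{\mathbf {V}}(\Omega )^*}/c\), so \((\Psi _n({{\varvec{u}}}), {{\varvec{u}}})\ge 0\) on that sphere, which is the sign condition needed.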

Let us now prove the coercivity of \({\mathbb {B}}\), i.e., for any \({\varvec{u}}\in {\mathbf {V}}(\Omega )\) there exists \(c>0\) such that

Indeed, by choosing \(\gamma =\frac{\nu }{2}\) in Lemma 4,

Furthermore,

These identities give us the coercivity

where \(c = \nu /2\). Using the coercivity above and Proposition 1, for any \(n\ge 1\) we have

This gives us the uniform estimate,

for some \(c:=c(\nu ,\Omega )>0\). Hence, there exists \({\tilde{{\varvec{u}}}}\in {\mathbf {V}}(\Omega )\) such that

Using the usual arguments (Gunzburger and Kim 1998; Kasumba and Kunisch 2012; Temam 2001; Girault and Raviart 1986), we can easily show that for any \(\varvec{\varphi }\in {\mathbf {V}}(\Omega )\), \({\mathbb {A}}({\tilde{{\varvec{u}}}}_n;{\tilde{{\varvec{u}}}}_n,\varvec{\varphi })_{\Omega }-\frac{1}{2}{\mathbb {C}}({\tilde{{\varvec{u}}}}_n;{\tilde{{\varvec{u}}}}_n,\varvec{\varphi }) \rightarrow {\mathbb {A}}({\tilde{{\varvec{u}}}};{\tilde{{\varvec{u}}}},\varvec{\varphi })_{\Omega }-\frac{1}{2}{\mathbb {C}}({\tilde{{\varvec{u}}}};{\tilde{{\varvec{u}}}},\varvec{\varphi }) \), i.e., \({\mathbb {B}}\) is sequentially weakly continuous.

Indeed, by virtue of Lemma 3(3), (8) can be written as

By following the proofs in the cited references, it can be shown that the following convergences hold

So, what remains for us to show is that

We focus on the first convergence, since the others can be handled similarly. Before we begin, we note that \(H^1(\Omega )\) is compactly embedded in \(L^q(\partial \Omega )\) for \(q\ge 2\) (see, for example, (Adams and Fournier 2003, Part I, Theorem 6.3)). This implies that \({\tilde{{\varvec{u}}}}_n\rightarrow {\tilde{{\varvec{u}}}}\) and \({\tilde{{\varvec{u}}}}_n\cdot {\varvec{n}}\rightarrow {\tilde{{\varvec{u}}}}\cdot {\varvec{n}}\) in \(L^q(\Gamma _{\mathrm{out}})^2\) and \(L^q(\Gamma _{\mathrm{out}})\), respectively. Hence, we have the following computation:

The right side approaches zero from the mentioned convergences in \(L^q(\Gamma _{\mathrm{out}})^2\) and \(L^q(\Gamma _{\mathrm{out}})\), which proves the claim. Therefore, \({\tilde{{\varvec{u}}}}\) solves (3) with the energy estimate

\(\square \)

Remark 2

(i) Even though we only assumed \({{\varvec{f}}}\in L^2(\Omega )^2\) and \({{\varvec{g}}}\in H^1(\Omega )^2\), as we shall see later, these functions can be extended to the hold-all domain. Furthermore, since \(\Omega \subset D\), the energy estimate can be extended to the hold-all domain D, i.e.,

and \(c>0\) is dependent on D, thanks to the Faber–Krahn inequality.

(ii) As previously mentioned, it can be shown that a more regular domain yields a more regular solution. In particular, if \(\Omega \) satisfies (\(\hbox {H}_{\Omega })\), then the weak solution to (3) satisfies \(\tilde{{\varvec{u}}}\in {\mathbf {V}}(\Omega )\cap \,H^2(\Omega )^2\), and as a consequence of the Rellich–Kondrachov embedding theorem (Evans 1998, Chapter 5, Theorem 6) the solution is in \(C(\overline{\Omega })^2\).

The existence of the distribution p is a direct consequence of De Rham’s theorem (Temam 2001, Proposition I.1.1) in such a way that \(p\in L^2(\Omega )\). Furthermore, if we assume that

then one can show that the solution is unique.

In strong form, if we assume appropriate regularity on \(\Omega \) and \({{\varvec{g}}}\in H^2(D)^2\) (a property which we shall implicitly assume from now on), and if \({\tilde{{\varvec{u}}}}\in {\mathbf {V}}(\Omega )\cap H^2(\Omega )^2\) solves the variational equation (3), then \({\varvec{u}}= \tilde{{\varvec{u}}}+{\varvec{g}}\in H^2(\Omega )^2\) is regarded as the solution to the equations,

Before closing this section, we look at some operators which will be useful in the investigation of sensitivities. First, let us introduce the Stokes operator \(A_\Omega :D(A_\Omega )\subset {\mathbf {H}}(\Omega )\rightarrow {\mathbf {H}}(\Omega ) \) defined by \(A_\Omega {\varvec{u}}= -P_\Omega \Delta {\varvec{u}}\) for \({\varvec{u}}\in D(A_\Omega )\). Here \(P_\Omega :L^2(\Omega )^2\rightarrow {\mathbf {H}}(\Omega )\) is the Leray projection that is associated with the decomposition \(L^2(\Omega )^2 = {\mathbf {H}}(\Omega )\oplus \nabla L^2(\Omega )\). As a direct consequence, if the domain \(\Omega \) satisfies (\(\hbox {H}_{\Omega })\) then \(D(A_\Omega ) = {\mathbf {V}}(\Omega )\cap H^2(\Omega )^2 \), hence item (ii) of the remark above. We mention, furthermore, that the Stokes operator is a self-adjoint positive operator with dense domain and compact inverse; this, in turn, gives us an orthonormal basis of \({\mathbf {H}}(\Omega )\). This orthonormal basis makes it possible to use the Galerkin method in the proof of Theorem 2. With this operator, we can write the first member of \({\mathbb {A}}\) as follows,

We also introduce the operator \(B_\Omega :{\mathbf {V}}(\Omega )\times {\mathbf {V}}(\Omega )\rightarrow {\mathbf {V}}(\Omega )^*\) defined by

For any \({\varvec{u}}\in {\mathbf {V}}(\Omega )\), we shall also use the notation \(B_\Omega {{\varvec{u}}} := B_\Omega ({{\varvec{u}}},{{\varvec{u}}})\). Lastly, we consider the boundary operator \(C:{\mathbf {V}}(\Omega )\times {\mathbf {V}}(\Omega )\rightarrow H^{-\frac{1}{2}}(\Gamma _{\mathrm{out}})^2\) defined by

The well-definedness and continuity of the operator C can be established by utilizing the Rellich–Kondrachov embedding (Adams and Fournier 2003, Part I, Theorem 6.3).
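
As an illustration of how the Stokes operator can supply the Galerkin subspaces used in the proof of Theorem 2, one possible construction is sketched below; the symbols \({\varvec{w}}_k\), \(\lambda _k\) and \(c_k^{n}\) are introduced here only for illustration. Since \(A_\Omega \) is self-adjoint and positive with compact inverse, there is a sequence of eigenpairs

$$\begin{aligned} A_\Omega {\varvec{w}}_k = \lambda _k {\varvec{w}}_k,\quad 0<\lambda _1\le \lambda _2\le \cdots ,\quad \lambda _k\rightarrow \infty ,\quad ({\varvec{w}}_j,{\varvec{w}}_k)_{\Omega } = \delta _{jk}, \end{aligned}$$

and one may take

$$\begin{aligned} {\mathbf {V}}_n := \mathrm {span}\{{\varvec{w}}_1,\ldots ,{\varvec{w}}_n\},\qquad \tilde{{\varvec{u}}}_n = \sum _{k=1}^{n} c_k^{n}\,{\varvec{w}}_k. \end{aligned}$$

With this choice, the projected problem becomes a system of n nonlinear algebraic equations for the coefficients \(c_k^{n}\).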

3 Existence of optimal shapes

The purpose of this section is to establish the existence of a solution to the shape optimization problem. To do this, a set of admissible domains \({\mathcal {O}}_{ad}\!\) is considered that ensures the existence of state solutions and ensures that the optimal shape, assuming it exists, is contained in the hold-all domain D. This set is endowed with a topology in which \({\mathcal {O}}_{ad}\!\) itself is compact. Using this compactness property, standard sequential arguments are then followed to establish the promised existence of the optimal shape.
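
The sequential argument mentioned above typically follows the direct method of the calculus of variations. As a sketch only, assume that the problem is posed as the minimization of \({\mathcal {G}}\) over \({\mathcal {O}}_{ad}\!\), that \({\mathcal {G}}\) is bounded from below there, and that \(\Omega \mapsto {\mathcal {G}}({\varvec{u}}(\Omega ),\Omega )\) is lower semicontinuous in the chosen topology; here \({\varvec{u}}(\Omega )\) is shorthand, used only in this sketch, for the state associated with \(\Omega \). For a minimizing sequence \(\{\Omega _n\}\subset {\mathcal {O}}_{ad}\!\), compactness yields a subsequence \(\Omega _{n_k}\rightarrow \Omega ^*\in {\mathcal {O}}_{ad}\!\), and

$$\begin{aligned} \inf _{\Omega \in {\mathcal {O}}_{ad}} {\mathcal {G}}({\varvec{u}}(\Omega ),\Omega ) \le {\mathcal {G}}({\varvec{u}}(\Omega ^*),\Omega ^*) \le \liminf _{k\rightarrow \infty } {\mathcal {G}}({\varvec{u}}(\Omega _{n_k}),\Omega _{n_k}) = \inf _{\Omega \in {\mathcal {O}}_{ad}} {\mathcal {G}}({\varvec{u}}(\Omega ),\Omega ), \end{aligned}$$

so that \(\Omega ^*\) attains the infimum.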

3.1 Cone property and the set of admissible domains

Before we begin, we assume that \(\Gamma _{\mathrm{out}}\subset \partial D\), which does not affect the generality of the problem since \(\Gamma _{\mathrm{out}}\) is not a part of the free boundary. Now, to define the topology on the set of admissible domains, we shall resort to the collection of domains that satisfy the cone property. Note that one way to define the topology on the set of admissible domains is by parametrizing the free boundary. Examples of such parametrizations are found in Rabago and Azegami (2019, 2020) and the references therein. However, defining the domains in such a way is problematic for the current problem, since the free-boundary parametrization might generate domains with varying volumes.

To overcome the challenge of preserving the volume, a classical way to define the topology on the set of admissible domains is to consider the collection of characteristic functions, a fact highlighted by Henrot and Privat (2010) and rigorously discussed by Delfour and Zolesio (2011) and Henrot and Pierre (2014). Before we delve into this topology, let us first look at the manner in which the domains are defined. In particular, we shall consider domains which satisfy the cone property as defined by Chenais (1975).

Definition 1

Let \(h>0\) and \(\theta \in [0,2\pi ]\), and \(\xi \in {\mathbb {R}}^2\) such that \(\Vert \xi \Vert = 1\).

(i.) The cone of angle \(\theta \), height h and axis \(\xi \) is defined as

where \((\cdot ,\cdot )\) and \(\Vert \cdot \Vert \) denote the inner product and Euclidean norm in \({\mathbb {R}}^2\), respectively.

(ii.) A set \(\Omega \subset {\mathbb {R}}^2\) is said to satisfy the cone property if and only if for all \(x\in \partial \Omega \), there exists \(C_x=C(\xi _x,\theta ,h)\), such that for all \(y\in B(x,r)\cap \Omega \) we have \(y+C_x \subset \Omega \).

From this definition, we now define the set of admissible domains as

A sequence \(\{\Omega _n\}\subset {\mathcal {O}}_{ad}\!\) is said to converge to \(\Omega \in {\mathcal {O}}_{ad}\!\) if

where the function \(\chi _A\) for a set \(A\subset {\mathbb {R}}^2\) refers to the characteristic function defined by

Remark 3

(i.) This set of admissible domains has been established to be non-empty (see the proof of Proposition 4.1.1 by Henrot and Pierre (2014)), so the analyses in the succeeding sections are not vacuous.

(ii.) Note that this convergence may seem too weak, in the sense that \(\hbox {weak}^*\) convergence only guarantees that the limit \(\chi _\Omega \) satisfies \(\chi _\Omega (x) \in [0,1]\). However, as pointed out by Henrot and Pierre (2014) in Proposition 2.2.1, this convergence holds in the space \(L^p_{loc}({\mathbb {R}}^2)\), and thus \(\chi _\Omega \) is almost everywhere a characteristic function. We refer the reader to (Delfour and Zolesio 2011, Chapter 5) and (Henrot and Pierre 2014, Chapter 2 Sect. 3) for a more detailed discussion on the topology of characteristic functions of finite measurable domains.
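
One reason this topology suits the volume constraint can be seen in a short computation. Assuming that the convergence is \(\hbox {weak}^*\) in \(L^\infty (D)\), and using that the constant function 1 belongs to \(L^1(D)\) because D is bounded, we get

$$\begin{aligned} {|}\Omega _n{|} = \int _D \chi _{\Omega _n}\cdot 1{{\,\mathrm{d\!}\,}}x \rightarrow \int _D \chi _{\Omega }\cdot 1{{\,\mathrm{d\!}\,}}x = {|}\Omega {|}. \end{aligned}$$

Hence, if \({|}\Omega _n{|} = m\) for every n, then the limit also satisfies \({|}\Omega {|} = m\).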

Before we mention the compactness of the set \({\mathcal {O}}_{ad}\!\), let us first look at an important implication of the cone property, i.e., the existence of a uniform extension operator. This is given by the lemma below.

Lemma 5

(Chenais (1975)) There exists \(K>0\) such that for all \(\Omega \in {\mathcal {O}}_{ad}\!\), there exist operators

which are linear and continuous such that \(\displaystyle \max _{\begin{array}{c} m = 0,1\\ d=1,2 \end{array}}\{ \Vert {\mathcal {E}}^d_\Omega \Vert _{{\mathcal {L}}_{m,d}}\} \le K\), where the operator norm is defined as

This lemma will be utilized on several occasions, for example when we show that the domain-to-state map is continuous. Meanwhile, the compactness of \({\mathcal {O}}_{ad}\!\) follows from the fact that it is closed and relatively compact, in the sense of Chenais (1975), with respect to the \(\hbox {weak}^*\)-\(L^\infty \) topology on \({\mathcal {U}}_{ad}:= \{\chi _\Omega :\Omega \in {\mathcal {O}}_{ad}\!\}\). One can also find the proof in (Henrot and Pierre 2014, Proposition 2.4.10). We shall not discuss the proof of these properties; nevertheless, they are summarized in the lemma below.

Lemma 6

The set \({\mathcal {O}}_{ad}\!\) is compact with respect to the topology on \({\mathcal {U}}_{ad}\).

Aside from these properties, it is worth mentioning that the admissible domains can be identified as Lipschitzian domains as well. Since the hold-all domain D is assumed to have a bounded boundary, a proof of this property can be found in (Henrot and Pierre 2014, Theorem 2.4.7).

3.2 Well-posedness of the optimization problem

Now that we have defined a good topology on the set of admissible domains, this subsection is dedicated to establishing the existence of the solution to the shape optimization problem.

Recall that the functional J is written as a function of the state solution \({\varvec{u}}\) and of the domain \(\Omega \). Fortunately, we point out that for each \(\Omega \in {\mathcal {O}}_{ad}\!\) there exists a solution \({\varvec{u}}\in {\mathbf {V}}(\Omega )\) to the weak formulation (3), hence the map \(\Omega \mapsto {\varvec{u}}\). This implies that the well-posedness of the optimization will depend on the continuity of the domain-to-state map, which we shall briefly establish shortly. For now, let us consider the velocity–pressure formulation of (3) given by finding \((\tilde{{\varvec{u}}},p)\in H_{\Gamma _0}^1(\Omega )^2\times L^2(\Omega )\) such that

for all \(({\varvec{\varphi }},q)\in H_{\Gamma _0}^1(\Omega )^2\times L^2(\Omega )\), where \(d(\cdot ,\cdot )_\Omega :H_{\Gamma _0}^1(\Omega )^2\times L^2(\Omega )\rightarrow {\mathbb {R}}\) is defined as \(d(\tilde{{\varvec{u}}},p)_\Omega = -(p,\mathrm {div}\tilde{{\varvec{u}}})_{\Omega }\). The existence of a pair \((\tilde{{\varvec{u}}},p)\in H_{\Gamma _0}^1(\Omega )^2\times L^2(\Omega )\) that satisfies (11) follows from the existence of a solution to (3) and from the fact that \(d(\cdot ,\cdot )_\Omega \) satisfies the inf-sup condition (Bertoluzza et al. 2017). Furthermore, the following energy estimate holds

The impetus for introducing the variational equation (11) is to make sure that the divergence-free property of the states will be preserved on any domain in \({\mathcal {O}}_{ad}\!\) and so that the said property will still be reflected when the uniform extension property in Lemma 5 is utilized.

Proposition 7

Let \(\{\Omega _n\}\subset {\mathcal {O}}_{ad}\!\) be a sequence that converges to \(\Omega \in {\mathcal {O}}_{ad}\!\). Suppose that for each \(\Omega _n\), \((\tilde{{\varvec{u}}}_n,p_n)\in H_{\Gamma _0}^1(\Omega _n)^2\times L^2(\Omega _n)\) is a solution of the variational equation (11) on the respective domain; then the extensions \((\overline{{\varvec{u}}}_n,{\overline{p}}_n):=({\mathcal {E}}_{\Omega _n}^2{\tilde{{\varvec{u}}}}_n, {\mathcal {E}}_{\Omega _n}^1p_n )\in H^1(D)^2\times L^2(D)\) converge to a state \((\overline{{\varvec{u}}},{\overline{p}} )\in H^1(D)^2\times L^2(D)\), such that \(({\tilde{{\varvec{u}}}},p )=(\overline{{\varvec{u}}},{\overline{p}} )\big \vert _{\Omega }\) is a solution to (11) in \(\Omega \).

Proof

From the uniform extension property, there exists \(K>0\) such that

Furthermore, from the energy estimate (12)

From the uniform boundedness of \(\{({\overline{{\varvec{u}}}}_n,{\overline{p}}_n )\}\) in \(H^1(D)^2\times L^2(D)\) and by virtue of the Rellich–Kondrachov and Banach–Alaoglu theorems, there exists a subsequence of \(\{({\overline{{\varvec{u}}}}_n,{\overline{p}}_n )\}\), which we denote in the same manner, and an element \((\overline{{\varvec{u}}},{\overline{p}} )\in H^1(D)^2\times L^2(D)\) such that

Passing to the limit. The next step is to show that \(({\tilde{{\varvec{u}}}},p )\) solves (11) in \(\Omega \).

By the assumed domain convergence, we have \(\chi _n \overset{*}{\rightharpoonup } \chi \) in \(L^\infty (D)\), where \(\chi _n\) and \(\chi \) denote the characteristic functions of \(\Omega _n\) and \(\Omega \), respectively. Furthermore, since \((\tilde{{\varvec{u}}}_n,p_n)\) solves (11) in \(\Omega _n\), for any \((\varvec{\psi },\phi )\in H^1(D)^2\times L^2(D)\) we have

Here, for any function \(\vartheta : D\rightarrow {\mathbb {R}}\), the trilinear form \({\mathcal {A}}_{\vartheta }(\cdot ,\cdot ,\cdot )_D\) can be dissected into several components, namely

where \(a_\vartheta ({\varvec{u}},{\varvec{v}})_D = \int _{D}\vartheta \nabla {\varvec{u}}:\nabla {\varvec{v}}{{\,\mathrm{d\!}\,}}x \), and \(\Phi _\vartheta \in [H^1(D)^2]^*\) is defined as

Our goal is to show that \(({\tilde{{\varvec{u}}}},p )=(\overline{{\varvec{u}}},{\overline{p}} )\big \vert _{\Omega }\) is a solution to (11) by establishing that the following system holds

by utilizing (13) and the weak-\(^*\) limit of the characteristic functions on (14).

Using the same arguments as in Theorem 2, it can be easily shown that

Furthermore, from the assumed convergence of the characteristic functions, we infer that

Lastly, since \(\overline{{\varvec{u}}}_n \rightharpoonup \overline{{\varvec{u}}}\) in \(H^1(D)^2\) and \({\overline{p}}_n \rightharpoonup {\overline{p}}\) in \(L^2(D)\), then

By letting \(({\varvec{\varphi }},q)\in H_{\Gamma _0}^1(\Omega )^2\times L^2(\Omega )\), and defining \(({\varvec{\psi }},\phi )\in H^1(D)^2\times L^2(D)\) by \(({\varvec{\psi }},\phi ) = ({\varvec{\varphi }},q)\) in \(\Omega \) and \(({\varvec{\psi }},\phi )=(0,0)\) in \(D\backslash \overline{\Omega }\), we get the variational equation (11) in \(\Omega \).

Before we end this proof, we note that on (16), a quite challenging and questionable inference is the convergence \(a_{\chi _n}(\overline{{\varvec{u}}}_n,{\varvec{\psi }}) \rightarrow a_{\chi }(\overline{{\varvec{u}}},{\varvec{\psi }})\). The problem with this convergence is that as of now, we were only able to mention the weak convergence of the velocity fields in \(H^1(D)^2\) and the \(\hbox {weak}^*\) convergence of the characteristic functions in \(L^\infty (D)\). Fortunately, we can actually show that \(\chi _n\nabla \overline{{\varvec{u}}}_n\) converges strongly to \(\chi \nabla \overline{{\varvec{u}}}\) in \(L^2(D)^{2\times 2}\), which establishes the convergence in question.

Indeed, by substituting \(({\varvec{\psi }},\phi ) = (\overline{{\varvec{u}}}_n,p)\) into equation (14), by recalling the strong convergence of \(\overline{{\varvec{u}}}_n\) to \(\overline{{\varvec{u}}}\) in \(L^2(D)^2\) and the convergence \(\chi _n \overset{*}{\rightharpoonup } \chi \) in \(L^\infty (D)\), and since \(\chi _n^2 = \chi _n\) and \(\chi ^2 = \chi \), we infer that

Here, we also used the implicit assumption that \({{\varvec{g}}}\in H^2(D)^2\); hence, the convergence on the second line. \(\square \)

To reiterate, Proposition 7 proves that the map \(\Omega \mapsto (\tilde{{\varvec{u}}},p)\) is continuous. This property will be instrumental in proving that an optimal shape exists. In fact, we shall use the fact that we can write the objective functional as a function that depends solely on the elements of \({\mathcal {O}}_{ad}\!\), and show that it is continuous with respect to this collection as well. To be precise, we prove the existence of a solution to the shape optimization problem in the theorem below. Before we begin, let us introduce the following notations, which are made possible by the well-definedness of the map \(\Omega \mapsto {{\varvec{u}}}\):

Theorem 8

Suppose that the assumptions for Theorem 2 together with the uniqueness assumption (9) hold; then, there exists \(\Omega ^*\in {\mathcal {O}}_{ad}\!\) such that

Proof

First, we show that the objective functional \({\mathcal {G}}\) is lower semicontinuous. This is done by showing that the component J is continuous (hence upper-semicontinuous) with respect to the state variable \({{\varvec{u}}} = \tilde{{\varvec{u}}} + {{\varvec{g}}}\), and using the lower-semicontinuity of the perimeter functional (Henrot and Pierre 2014, Proposition 2.3.7).

We shall then show that \({\mathcal {G}}\) is bounded from below, which will imply the existence of a minimizing sequence of domains whose evaluations will converge to an infimum value of the objective functional. Using the compactness of \({\mathcal {O}}_{ad}\!\), we shall then show that this sequence converges to a domain such that its evaluation coincides with the infimum.

Step 1: Lower semicontinuity of \({\mathcal {G}}\).

Note that we can estimate J by the \(H^1\) norm of the state \({\varvec{u}}\) by virtue of the following computation:

Furthermore, since Proposition 7 implies that the map \(\Omega \mapsto \tilde{{\varvec{u}}}+{\varvec{g}}\) is continuous, the map \(\Omega \mapsto J(\Omega )\) is also continuous, and hence upper-semicontinuous. Thus, the map \(\Omega \mapsto {\mathcal {G}}(\Omega )\) is lower-semicontinuous, i.e., for any sequence \(\{\Omega _n\}_n\subset {\mathcal {O}}_{ad}\!\) that converges to an element \(\Omega ^*\in {\mathcal {O}}_{ad}\!\), we have

Step 2: Existence of a minimizing sequence.

First, using estimate (17), we can show that J is uniformly bounded. Indeed, from (17) and the energy estimate (5), we get

Furthermore, since any domain \(\Omega \in {\mathcal {O}}_{ad}\!\) is bounded, the inner boundary \(\Gamma _{\mathrm{f}}\) is also bounded and its perimeter can be bounded below uniformly, say \(\alpha P(\Omega ) \ge P^*\) for any \(\Omega \in {\mathcal {O}}_{ad}\!\). Therefore, \({\mathcal {G}}\) is bounded from below, i.e., for any \(\Omega \in {\mathcal {O}}_{ad}\!\)

Hence, there exists a sequence \(\{\Omega _n\}_n\subset {\mathcal {O}}_{ad}\!\) such that

Step 3: Existence of a minimizer for \({\mathcal {G}}\).

Since \({\mathcal {O}}_{ad}\!\) is compact, the sequence \(\{\Omega _n\}_n\) from Step 2 has a subsequence—which we shall denote similarly—that converges to an element \(\Omega ^*\in {\mathcal {O}}_{ad}\!\). Hence, from the lower-semicontinuity of \({\mathcal {G}}\), we establish that \(\Omega ^*\) is the minimizer of \({\mathcal {G}}\):

\(\square \)

4 Shape sensitivity analysis

This section is dedicated to investigating the sensitivity of the objective functional \({\mathcal {G}}\) with respect to domain variations. We start this section by introducing the identity perturbation method, where we consider domain variations generated by a given autonomous velocity.

4.1 Identity perturbation

We consider a family of autonomous velocity fields \(\varvec{\theta }\) belonging to \({{\varvec{\Theta }}}:= \{\varvec{\theta }\in C^{1,1}({\overline{D}};{\mathbb {R}}^2): \varvec{\theta }= 0 \text { on }\partial D\cup \Gamma _{\mathrm{in}}\cup \Gamma _{\mathrm{w}}\}\). From an element \(\varvec{\theta }\in {{\varvec{\Theta }}}\) we can define an identity perturbation operator \(T_t:{\overline{D}}\rightarrow {\mathbb {R}}^2\) by \(T_t(x) = x + t\varvec{\theta }(x).\) We note that this operator is generated as a direct consequence of the velocity method discussed by Sokolowski and Zolesio (1992) and Henrot and Pierre (2014).

A given domain \(\Omega \subset D\) is perturbed by means of the identity perturbation operator so that for some \(t_0:=t_0(\varvec{\theta })>0\) we get the family of perturbed domains \(\{\Omega _t: 0<t<t_0 \}\) with \(\Omega _t: = T_t(\Omega )\). The parameter \(t_0>0\) is chosen so that for any \(t\in (0,t_0)\), \(\mathrm {det}\nabla T_t >0\) and \(J_tM_t(M_t^\top )\) is coercive, i.e., for some \(0<\alpha _1 <\alpha _2\)

where \(J_t = \mathrm {det}\nabla T_t\), \(M_t(x) = (\nabla T_t(x))^{-1}\), and \(\nabla T_t\) denotes the Jacobian matrix of the operator \(T_t\).

Since \(\varvec{\theta }\in {{\varvec{\Theta }}}\), we have \(\varvec{\theta }\equiv 0\) on \(\Gamma _{\mathrm{out}}\), \(\Gamma _{\mathrm{in}}\), and \(\Gamma _{\mathrm{w}}\), which implies that these boundaries remain part of the boundaries of the perturbed domains \(\Omega _t\). To be precise, we have

Additionally, a domain that has at most \({\mathcal {C}}^{1,1}\) regularity preserves this regularity under the transformation. This means that for \(0\le t\le t_0\), \(\Omega _t\) has \({\mathcal {C}}^{1,1}\) regularity provided that the initial domain \(\Omega \) is a \({\mathcal {C}}^{1,1}\) domain.

Before we move further in this exposition, let us look at some vital properties of \(T_t\).

Lemma 9

(Sokolowski and Zolesio (1992); Delfour and Zolesio (2011)) Let \(\varvec{\theta }\in {{\varvec{\Theta }}}\), then for sufficiently small \(t_0>0\), the identity perturbation operator \(T_t\) satisfies the following properties:

- \([t\mapsto T_t]\in C^1([0,t_0];C^{2,1}({\overline{D}},{\mathbb {R}}^2));\)

- \([t\mapsto T_t^{-1}]\in C([0,t_0];C^{2,1}({\overline{D}},{\mathbb {R}}^2));\)

- \([t\mapsto J_t]\in C^1([0,t_0];C^{1,1}({\overline{D}}));\)

- \(M_t,M_t^\top \in C^{1,1}({\overline{D}},{\mathbb {R}}^{2\times 2});\)

- \(\frac{d}{dt}J_t\big \vert _{t=0} = \mathrm {div}\varvec{\theta };\)

- \(\frac{d}{dt}M_t\big \vert _{t=0} = -\nabla \varvec{\theta }.\)
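As a quick sanity check of the last two identities in Lemma 9, the following sketch compares forward difference quotients of \(J_t\) and \(M_t\) at \(t=0\) with \(\mathrm {div}\varvec{\theta }\) and \(-\nabla \varvec{\theta }\) at a single point. The field \(\varvec{\theta }(x) = (x_1x_2, x_1^2-x_2)\), the evaluation point and all helper names are illustrative assumptions. In particular, this sample field is not required to vanish on any boundary, since only pointwise identities are tested.

```python
def grad_theta(x1, x2):
    """Jacobian of the sample field theta(x) = (x1*x2, x1**2 - x2).

    Entry [i][j] is the derivative of theta_i with respect to x_j.
    """
    return [[x2, x1], [2.0 * x1, -1.0]]


def jacobian_of_T(G, t):
    """Jacobian of T_t(x) = x + t*theta(x), that is, I + t*G."""
    return [[1.0 + t * G[0][0], t * G[0][1]],
            [t * G[1][0], 1.0 + t * G[1][1]]]


def det2(A):
    """Determinant of a 2x2 matrix."""
    return A[0][0] * A[1][1] - A[0][1] * A[1][0]


def inv2(A):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    d = det2(A)
    return [[A[1][1] / d, -A[0][1] / d],
            [-A[1][0] / d, A[0][0] / d]]


G = grad_theta(0.3, 0.7)      # gradient of theta at the sample point
t = 1e-6                      # small perturbation parameter
DT = jacobian_of_T(G, t)

# Forward difference quotients at t = 0 (J_0 = 1 and M_0 = I).
dJ = (det2(DT) - 1.0) / t
M = inv2(DT)
dM = [[(M[i][j] - (1.0 if i == j else 0.0)) / t for j in range(2)]
      for i in range(2)]

div_theta = G[0][0] + G[1][1]
print("dJ/dt at 0:", dJ, " div theta:", div_theta)
print("dM/dt at 0:", dM, " -grad theta:", [[-g for g in row] for row in G])
```

The difference quotients agree with the claimed derivatives up to an error of order \(t\), which is what a first-order expansion of \(\mathrm {det}(I+t\nabla \varvec{\theta })\) and \((I+t\nabla \varvec{\theta })^{-1}\) predicts.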

Let us recall Hadamard’s identity, which will be integral to deriving the necessary conditions.

Lemma 10

Let \(f\in C([0,t_0];W^{1,1}(D))\) and suppose that \(\frac{\partial }{\partial t}f(0)\in L^1(D)\), then

Proof

See Theorem 5.2.2 and Proposition 5.4.4 of Henrot and Pierre (2014). \(\square \)
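For intuition, the sketch below checks a commonly stated boundary form of Hadamard's identity in the simplest case \(f\equiv 1\), where it reduces to \(\frac{d}{dt}|\Omega _t|\big \vert _{t=0} = \int _{\partial \Omega }\varvec{\theta }\cdot {\varvec{n}}\,ds\). We assume here that the identity of Lemma 10 is of this type. The unit disk, the field \(\varvec{\theta }(x) = (x_1+\tfrac{1}{2}x_2^2, x_1x_2)\) and all function names are illustrative choices, not objects from the paper.

```python
import math


def theta(x1, x2):
    """Sample deformation field (an illustrative assumption)."""
    return (x1 + 0.5 * x2 ** 2, x1 * x2)


def polygon_area(pts):
    """Signed area of a polygon by the shoelace formula."""
    s = 0.0
    for (xa, ya), (xb, yb) in zip(pts, pts[1:] + pts[:1]):
        s += xa * yb - xb * ya
    return 0.5 * s


def perturbed_area(t, n=2000):
    """Area of T_t(Omega), with the unit disk Omega approximated by an n-gon."""
    pts = []
    for k in range(n):
        a = 2.0 * math.pi * k / n
        x1, x2 = math.cos(a), math.sin(a)
        th = theta(x1, x2)
        pts.append((x1 + t * th[0], x2 + t * th[1]))
    return polygon_area(pts)


def boundary_integral(n=2000):
    """Trapezoidal approximation of the integral of theta . n over the unit circle."""
    ds = 2.0 * math.pi / n
    total = 0.0
    for k in range(n):
        a = 2.0 * math.pi * k / n
        x1, x2 = math.cos(a), math.sin(a)   # on the unit circle, n(x) = x
        th = theta(x1, x2)
        total += (th[0] * x1 + th[1] * x2) * ds
    return total


t = 1e-4
volume_derivative = (perturbed_area(t) - perturbed_area(-t)) / (2.0 * t)
boundary_term = boundary_integral()
print(volume_derivative, boundary_term, math.pi)
```

Both quantities are close to \(\pi \), which is also the value of \(\int _\Omega \mathrm {div}\varvec{\theta }\,dx\) for this field, consistent with the divergence theorem.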

4.2 Rearrangement method

To investigate the sensitivity of the objective functional with respect to shape variations generated by the transformation \(T_t\), we shall resort to a variational approach formalized by Ito et al. (2008), which is known by many as the rearrangement method. This approach avoids the tedious process of first solving for the sensitivity of the state solutions and then solving for the shape derivative of the objective functional. Aside from the convenience the rearrangement method offers, we also mention that the usual methods (such as the chain rule, the min-max formulation, etc.) will not take into account the linearization of the state on the fixed boundary \(\Gamma _{\mathrm{out}}\). This in turn will render the linearized state and the adjoint equation ill-posed. This problem, thankfully, is resolved by the rearrangement method, which is focused on the Fréchet derivative of the state operator.

To start with, we consider a Hilbert space \(Y(\Omega )\) and an operator

where the equation \(\langle E(y,\Omega ), \phi \rangle _{Y(\Omega )^*\times Y(\Omega )} = 0\) corresponds to a variational problem in \(\Omega .\)

Suppose that the free boundary is denoted by \(\Gamma _{\mathrm{f}}\subset \partial \Omega \), and let \(g:Y(\Omega )\rightarrow {\mathbb {R}}\). The said method deals with the shape optimization problem

subject to

We define the Eulerian derivative of J at \(\Omega \) in the direction \(\varvec{\theta }\in {{\varvec{\Theta }}}\) by

where \(y_t\) solves the equation \(E(y_t,\Omega _t)=0\) in \(Y(\Omega _t)^*\). If \(d{\mathcal {J}}(y,\Omega )\varvec{\theta }\) exists for all \(\varvec{\theta }\in {{\varvec{\Theta }}}\) and that \(d{\mathcal {J}}(y,\Omega )\) defines a bounded linear functional on \({{\varvec{\Theta }}}\) then we say that \({\mathcal {J}}\) is shape differentiable at \(\Omega \).

The so-called rearrangement method is given as below:

Lemma 11

(cf Ito et al. (2008)) Suppose that the following assumptions hold

(A1) There exists an operator \({\tilde{E}}:Y(\Omega )\times [0,t_0]\rightarrow Y(\Omega )^*\) such that \(E(y_t,\Omega _t)=0\) in \(Y(\Omega _t)^*\) is equivalent to

$$\begin{aligned} {\tilde{E}}(y^t,t) = 0\text { in }Y(\Omega )^*, \end{aligned}\tag{20}$$

with \({\tilde{E}}(y,0) = E(y,\Omega )\) for all \(y\in Y(\Omega ).\)

(A2) Let \(y,v\in Y(\Omega )\). Then \(E_y(y,\Omega )\in {\mathcal {L}}(Y(\Omega ),Y(\Omega )^*)\) satisfies

$$\begin{aligned} \langle E(v,\Omega )-E(y,\Omega )-E_y(y,\Omega )(v-y),z\rangle _{Y(\Omega )^*\times Y(\Omega )} = {\mathcal {O}}(\Vert v-y \Vert _{Y(\Omega )}^2), \end{aligned}$$

for all \(z\in Y(\Omega ). \)

(A3) Let \(y\in Y(\Omega )\) be the unique solution of (19). Then for any \(f\in Y(\Omega )^*\) the solution of the following linearized equation exists:

$$\begin{aligned} \langle E_y(y,\Omega )\delta y, z\rangle _{Y(\Omega )^*\times Y(\Omega )} = \langle f, z\rangle _{Y(\Omega )^*\times Y(\Omega )} \text { for all }z\in Y(\Omega ). \end{aligned}$$

(A4) Let \(y^t,y\in Y(\Omega )\) be the solutions of (20) and (19), respectively. Then \({{\tilde{E}}}\) and E satisfy

$$\begin{aligned} \lim _{t\searrow 0}\frac{1}{t}\langle {{\tilde{E}}}(y^t,t) - {\tilde{E}}(y,t) -E(y^t,\Omega ) + E(y,\Omega ) ,z\rangle _{Y(\Omega )^*\times Y(\Omega )} = 0 \end{aligned}$$

for all \(z\in Y(\Omega ). \)

(A5) \(g\in C^{1,1}({\mathbb {R}}^2,{\mathbb {R}}).\)

Let \(y\in Y(\Omega )\) be the solution of (19), and suppose that the adjoint equation, for all \(z\in Y(\Omega )\)

has a unique solution \(p\in Y(\Omega )\). Then, the Eulerian derivative of J at \(\Omega \) in the direction \(\varvec{\theta }\in {{\varvec{\Theta }}}\) exists and is given by

where \(\kappa \) is the mean curvature of the surface \(\Gamma _{\mathrm{f}}\).

We note that one should be cautious when dealing with the variables \(y^t,y_t\) in (A1). In particular, one should keep in mind where these variables belong, i.e., \(y_t\in Y(\Omega _t)\) and \(y^t\in Y(\Omega )\). Furthermore, equation (20) is usually derived by pulling the equation

back into the domain \(\Omega \) by the change of variables induced by \(T_t^{-1}\). We also mention that originally, assumption (A3) is written as the Hölder continuity of solutions \(y^t\in Y(\Omega )\) to (20) with respect to the time parameter \(t\in [0,\tau ]\) (Ito et al. 2008). Fortunately, in the same paper, Ito et al. (2008) have shown that assumption (A3) implies the aforementioned continuity. We cite the said result in the following lemma.

Lemma 12

Suppose that \(y\in Y(\Omega )\) solves (19) and \(y^t\in Y(\Omega )\) is the solution to (20). Assume furthermore that (A3) holds. Then, \(\Vert y^t-y \Vert _{Y(\Omega )} = o(t^\frac{1}{2})\) as \(t\searrow 0\).

Proof

See (Ito et al. 2008, Proposition 2.1). \(\square \)

We begin applying this method by introducing the velocity-pressure operator \({\mathbb {E}}(\cdot )_\Omega :X(\Omega )\rightarrow X(\Omega )^* \) defined by

where \(X(\Omega ) := {\mathbf {H}}^1_{\Gamma _0}(\Omega )\times L^2(\Omega )\), and \({{\mathbf {H}}}^{-1}(\Omega )\) is the dual of \( {\mathbf {H}}^1_{\Gamma _0}(\Omega )\). It can be easily shown that \(d(\cdot ,\cdot )_\Omega \) satisfies the inf-sup condition, hence there exists \(({\tilde{{\varvec{u}}}}, p)\in X(\Omega )\) such that for any \((\varvec{\varphi }, \psi )\in X(\Omega )\)

The element \({\tilde{{\varvec{u}}}}\in {\mathbf {H}}^1_{\Gamma _0}(\Omega )\), in particular, solves the variational equation (3).

Notation: Moving forward we shall use the following notations \(X := X(\Omega )\), \(X_t := X(\Omega _t)\), and \({{\mathbf {V}}}:={{\mathbf {V}}}(\Omega )\).

Our goal is to characterize, and of course show the existence of, the Eulerian derivative of the objective functional

Now, from the deformation field \(T_t\), we let \(({\tilde{{\varvec{u}}}}_t,p_t)\in X_t\) be the solution of the equation

We perturb equation (25) back to the reference domain \(\Omega \) which gives us the operator \(\tilde{{\mathbb {E}}}:X\times [0,\tau ]\rightarrow X^*\) defined by

where—by denoting \((M_t^\top )_k\) the \(\hbox {k}^{th}\) row of \(M_t^\top \), and \({\varvec{v}}_k\) the \(\hbox {k}^{th}\) component of a vector \({\varvec{v}}\)—the components are defined as follows

and the element \(\Phi ^t\in {{\mathbf {H}}}^{-1}(\Omega ) \) is defined by

By construction, this operator satisfies (A1), in particular if \(({\tilde{{\varvec{u}}}}_t,p_t)\in X_t\) solves (25), then the translated element \((\tilde{{\varvec{u}}}^t,p^t)=({\tilde{{\varvec{u}}}}_t\circ T_t,p_t\circ T_t)\in X\) solves

Indeed, for sufficiently small values of \(t>0\) the coercivity of \({\mathbb {A}}_t(\cdot )-\frac{1}{2}{\mathbb {C}}(\cdot )\) can be easily verified, as well as the inf-sup condition for the bilinear form \(d_t\).

For property (A2), we introduce the linearization of the Stokes operator \(A_\Omega :{\mathbf {V}}\cap H^2(\Omega )^2 \rightarrow {\mathbf {V}}^*\), and of the bilinear operators \(B_\Omega :{\mathbf {V}}\times {\mathbf {V}}\rightarrow {\mathbf {V}}^*\) and \(C:{\mathbf {V}}\times {\mathbf {V}}\rightarrow H^{-\frac{1}{2}}(\Gamma _{\mathrm{out}})^2\) which were briefly discussed at the end of Sect. 2.

Proposition 13

The Fréchet derivatives of the operators \(A_\Omega ,\) \(B_\Omega \) and C at the point \({\varvec{u}}\in {\mathbf {V}}\) in the direction \(\delta \!{\varvec{u}}\in {\mathbf {V}}\) are given as follows:

1. \(\langle D_uA_\Omega ({\varvec{u}})\delta \!{\varvec{u}}, \varvec{\varphi }\rangle _{{\mathbf {V}}^*\times {\mathbf {V}}} = a(\delta \!{\varvec{u}},\varvec{\varphi })_{\Omega }\);

2. \(\langle D_uB_\Omega {\varvec{u}}\delta \!{\varvec{u}}, \varvec{\varphi }\rangle _{{\mathbf {V}}^*\times {\mathbf {V}}} = b({\varvec{u}};\delta \!{\varvec{u}},\varvec{\varphi })_{\Omega } +b(\delta \!{\varvec{u}};{\varvec{u}},\varvec{\varphi })_{\Omega }\);

3. \(\langle D_uB_\Omega ({\varvec{u}},{\varvec{v}})\delta \!{\varvec{u}}, \varvec{\varphi }\rangle _{{\mathbf {V}}^*\times {\mathbf {V}}} = b(\delta \!{\varvec{u}};{\varvec{v}},\varvec{\varphi })_{\Omega }\);

4. \(\langle D_uB_\Omega ({\varvec{v}},{\varvec{u}})\delta \!{\varvec{u}}, \varvec{\varphi }\rangle _{{\mathbf {V}}^*\times {\mathbf {V}}} = b({\varvec{v}};\delta \!{\varvec{u}},\varvec{\varphi })_{\Omega }\);

5. \(\langle D_u C\!{\varvec{u}}\delta \!{\varvec{u}},\varvec{\varphi }\rangle _{{\mathbf {H}}^{-\frac{1}{2}}\times {\mathbf {H}}^{\frac{1}{2}}} = \langle C({\varvec{u}},\delta \!{\varvec{u}}) + C(\delta \!{\varvec{u}},{\varvec{u}}),\varvec{\varphi }\rangle _{{\mathbf {H}}^{-\frac{1}{2}}\times {\mathbf {H}}^{\frac{1}{2}}};\)

6. \(\langle D_u C({\varvec{u}},{\varvec{v}})\delta \!{\varvec{u}},\varvec{\varphi }\rangle _{{\mathbf {H}}^{-\frac{1}{2}}\times {\mathbf {H}}^{\frac{1}{2}}} =\langle C(\delta \!{\varvec{u}},{\varvec{v}}),\varvec{\varphi }\rangle _{{\mathbf {H}}^{-\frac{1}{2}}\times {\mathbf {H}}^{\frac{1}{2}}};\)

7. \(\langle D_u C({\varvec{v}},{\varvec{u}})\delta \!{\varvec{u}},\varvec{\varphi }\rangle _{{\mathbf {H}}^{-\frac{1}{2}}\times {\mathbf {H}}^{\frac{1}{2}}} = \langle C({\varvec{v}},\delta \!{\varvec{u}}),\varvec{\varphi }\rangle _{{\mathbf {H}}^{-\frac{1}{2}}\times {\mathbf {H}}^{\frac{1}{2}}},\)

where we used the notations \(C\!{\varvec{u}}= C({\varvec{u}},{\varvec{u}})\), \({\mathbf {H}}^{\frac{1}{2}} = H^{\frac{1}{2}}(\Gamma _{\mathrm{out}})^2\) and \({\mathbf {H}}^{-\frac{1}{2}} = H^{-\frac{1}{2}}(\Gamma _{\mathrm{out}})^2\).

Proof

We only expose the parts where the nonlinearity occurs, i.e., we show that

and

Indeed, we have the following computations

and

In the last inequality, we used the Rellich–Kondrachov embedding \(H^1(\Omega )^2 \hookrightarrow L^q(\partial \Omega )^2\) for \(q\ge 2\). \(\square \)
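The structure behind the nonlinear items of Proposition 13 can be seen in finite dimensions. In the sketch below, a hypothetical bilinear map \(B\) on \({\mathbb {R}}^2\) stands in for the convective form; it is not the operator \(B_\Omega \) of the paper. The derivative of \(u\mapsto B(u,u)\) in the direction \(\delta u\) is \(B(u,\delta u)+B(\delta u,u)\), and the remainder equals \(B(\delta u,\delta u)\), which is quadratic in \(\delta u\).

```python
import math


def B(u, v):
    """A sample bilinear map on R^2 (hypothetical stand-in for a convective term)."""
    return (u[0] * v[1], u[1] * v[0] + u[0] * v[0])


def derivative(u, du):
    """Candidate derivative of u -> B(u, u) in the direction du."""
    first = B(u, du)
    second = B(du, u)
    return (first[0] + second[0], first[1] + second[1])


def remainder_norm(u, du):
    """Euclidean norm of B(u+du, u+du) - B(u, u) - derivative(u, du)."""
    up = (u[0] + du[0], u[1] + du[1])
    full = B(up, up)
    base = B(u, u)
    lin = derivative(u, du)
    return math.hypot(full[0] - base[0] - lin[0],
                      full[1] - base[1] - lin[1])


u = (1.0, 2.0)
direction = (0.3, -0.4)

# The remainder divided by s**2 should be independent of the step size s.
ratios = []
for s in (1e-1, 1e-2, 1e-3):
    du = (s * direction[0], s * direction[1])
    ratios.append(remainder_norm(u, du) / s ** 2)

expected = math.hypot(*B(direction, direction))
print(ratios, expected)
```

The ratio stays constant as the step shrinks, which is the finite-dimensional analogue of the \({\mathcal {O}}(\Vert \delta \!{\varvec{u}}\Vert ^2)\) remainder estimates used for (A2).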

With these derivatives, we can determine the Fréchet derivative of \({\mathbb {E}}(\cdot )_\Omega \) at an element \(({\varvec{u}},p)\in X\) in the direction \((\delta \!{\varvec{u}},\delta p)\in X\), which we shall denote as \({\mathbb {E}}'({\varvec{u}},p) \in {\mathcal {L}}(X,X^*)\), given by

This gives us (A2). Indeed, by following the proof of Proposition 13

Furthermore, by the same arguments done for the proof of Theorem 2 and since \(d(\cdot ,\cdot )\) satisfies the inf-sup condition, for any \({\mathcal {F}}\in X^*\), there exists a unique solution \((\delta \!{\varvec{u}},\delta p)\in X\) to

for all \((\varvec{\varphi },\psi )\in X.\) This implies (A3).

To verify (A4), we note that

Thus, by dividing the previous computation by t and by utilizing Lemmata 9 and 12, we infer that \({\mathbb {E}}(\cdot )_\Omega \) and \(\tilde{{\mathbb {E}}}\) satisfy (A4).

Having shown that the operators \({\mathbb {E}}(\cdot )_\Omega \) and \(\tilde{{\mathbb {E}}}\) satisfy (A1)-(A4), and since the objective functional mostly consists of squared norms, i.e., \(P,J\in C^\infty (X,{\mathbb {R}}),\) the last remaining task to ensure the existence of the Eulerian derivative of \({\mathcal {G}}\) is to establish the unique existence of the solution \(({\varvec{v}},\pi )\in X\) to the adjoint problem

where \(G'\) corresponds to the derivative of the integrand of \({\mathcal {G}}\) with respect to \({\varvec{u}}\) whose action is given as

Here, the two curls differ as follows: given a vector-valued function \({\varvec{\varphi }} = (\varphi _1,\varphi _2)\) we have \(\nabla \times {\varvec{\varphi }} = \frac{\partial \varphi _2}{\partial x_1} - \frac{\partial \varphi _1}{\partial x_2}\), while if \(\psi \) is a scalar-valued function, then \(\mathbf {\nabla }\times \psi = \left( \frac{\partial \psi }{\partial x_2}, -\frac{\partial \psi }{\partial x_1} \right) \).
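The two curl operators can be sketched with central finite differences as follows. The helper names, the step size and the sample function \(\psi (x) = \sin (x_1)\cos (2x_2)\) are illustrative assumptions. Composing the two operators gives \(\nabla \times (\mathbf {\nabla }\times \psi ) = -\Delta \psi \), which for this sample function equals \(5\psi \) and serves as a consistency check.

```python
import math

H = 1e-3  # finite difference step (illustrative choice)


def d1(f, x1, x2):
    """Central difference approximation of the derivative with respect to x1."""
    return (f(x1 + H, x2) - f(x1 - H, x2)) / (2.0 * H)


def d2(f, x1, x2):
    """Central difference approximation of the derivative with respect to x2."""
    return (f(x1, x2 + H) - f(x1, x2 - H)) / (2.0 * H)


def scalar_curl(phi1, phi2):
    """Curl of the vector field (phi1, phi2): a scalar-valued function."""
    return lambda x1, x2: d1(phi2, x1, x2) - d2(phi1, x1, x2)


def vector_curl(psi):
    """Curl of the scalar function psi: a pair of component functions."""
    return (lambda x1, x2: d2(psi, x1, x2),
            lambda x1, x2: -d1(psi, x1, x2))


def psi(x1, x2):
    """Sample scalar function with Laplacian equal to -5 * psi."""
    return math.sin(x1) * math.cos(2.0 * x2)


curl_curl_psi = scalar_curl(*vector_curl(psi))
point = (0.4, 0.3)
lhs = curl_curl_psi(*point)
rhs = 5.0 * psi(*point)      # equals -Laplacian(psi) at the point
print(lhs, rhs)
```

The scalar curl maps vector fields to scalars (the vorticity in two dimensions), while the vector curl maps scalars to vector fields, so the composition above is well defined only in this order.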

The unique existence of the adjoint variables \(({\varvec{v}},\pi )\in X\) can quite easily be established following the arguments of Theorem 2. Furthermore, from the regularity of the domain, the adjoint solution \({\varvec{v}}\) satisfies \({{\varvec{v}}}\in {{\mathbf {V}}}\cap H^2(\Omega )^2\), and that the solution can be looked at as the solution to the system

To finally characterize the shape derivative of \({\mathcal {G}}\), we start by evaluating

where \((\tilde{{\varvec{u}}},p),({{\varvec{v}}},\pi ) \in X\) solve (23) and (28), respectively. To do this, we write the operator \(\tilde{{\mathbb {E}}}\) with the pushed-forward form on \(\Omega _t\) and utilize Lemma 10, that is, we determine the derivative of

We note that in the expression of \(\tilde{{\mathbb {E}}}\) above, we added the term \(d({{\varvec{g}}}\circ T_t^{-1},\pi \circ T_t^{-1})_{\Omega _t}\) since the divergence of \({\varvec{g}}\) is zero in \(\Omega \). Denoting \(\varvec{\psi }_{{\tilde{u}}} = -(\nabla {\tilde{{\varvec{u}}}})^\top {\varvec{\theta }}\), \(\varvec{\psi }_{g} = -(\nabla {\varvec{g}})^\top {\varvec{\theta }}\), \(\varvec{\psi }_v = -(\nabla {\varvec{v}})^\top {\varvec{\theta }}\), \(\psi _p = -\nabla p\cdot {\varvec{\theta }}\), and \(\psi _{\pi } = -\nabla \pi \cdot {\varvec{\theta }}\) yields

Since \({\tilde{{\varvec{u}}}} = {\varvec{v}}= 0\) on \(\Gamma _{\mathrm{f}}\), and \(\mathrm {div}{\tilde{{\varvec{u}}}} = \mathrm {div}{\varvec{v}}= 0\), the integrals on the boundary \(\Gamma _{\mathrm{f}}\) vanish except for \((\nabla \tilde{{\varvec{u}}}:\nabla {{\varvec{v}}},{\varvec{\theta }}\cdot {{\varvec{n}}})_{\Gamma _{\mathrm{f}}}\) and \((\nabla {{\varvec{g}}}:\nabla {{\varvec{v}}},{\varvec{\theta }}\cdot {{\varvec{n}}})_{\Gamma _{\mathrm{f}}}\). Furthermore, since \(({{\varvec{u}}},p)=(\tilde{{\varvec{u}}}+{{\varvec{g}}},p)\), we get

To further simplify the expression above, we take into account the facts that \(({{\varvec{u}}},p)\) satisfies (10), and \(({{\varvec{v}}},\pi )\) on the other hand solves (29). We begin the simplification on the terms with \(\varvec{\psi }_v\) and \(\psi _{\pi }\), which we shall denote by \(I_1\).

The computation above utilized the divergence-free property of \(\tilde{{\varvec{u}}}\) and the identity \((\nabla {\varvec{v}})^\top {\varvec{\theta }} = \partial _{{\varvec{n}}}{{\varvec{v}}}({\varvec{\theta }}\cdot {{\varvec{n}}})\) on \(\Gamma _{\mathrm{f}}\). Furthermore, the boundary integral on \(\partial \Omega \) is simplified into just the integral on \(\Gamma _{\mathrm{f}}\) since \(\varvec{\theta }=0\) on \(\partial \Omega \backslash \Gamma _{\mathrm{f}}.\) Similarly, if we denote by \(I_2\) the terms containing \(\psi _u\) and \(\psi _p\), we have the following simplification,

We note that the last equality is achieved using Green’s curl identity (Monk 2003, Theorem 3.29). Combining the expressions obtained from simplifying \(I_1\) and \(I_2\), and since \({{\varvec{u}}}={{\varvec{v}}} = 0\) on \(\Gamma _{\mathrm{f}}\), then we can further simplify \({\mathcal {D}}_t\tilde{{\mathbb {E}}}\) as follows:

Since \(\mathrm {div}{{\varvec{u}}}=\mathrm {div}{{\varvec{v}}}=0\) in \(\Omega \), and \({{\varvec{u}}}={{\varvec{v}}} =0\) on \(\Gamma _{\mathrm{f}}\), by utilizing the definition of tangential divergence (Sokolowski and Zolesio 1992, p. 82) the integrals \((\pi {{\varvec{n}}}\cdot \partial _{{\varvec{n}}}{{\varvec{u}}},{\varvec{\theta }}\cdot {{\varvec{n}}})_{\Gamma _{\mathrm{f}}}\) and \((p{{\varvec{n}}}\cdot \partial _{{\varvec{n}}}{{\varvec{v}}},{\varvec{\theta }}\cdot {{\varvec{n}}})_{\Gamma _{\mathrm{f}}}\) both equate to zero. Hence, we get the following derivative,

With the computation of the derivative above, we finally characterize the Eulerian derivative of \({\mathcal {G}}\) which is shown in the following theorem.

Theorem 14

Let \(\Omega \subset D\) satisfy (\(\hbox {H}_{\Omega })\), \({{\varvec{f}}}\in L^2(\Omega )^2\), and \({{\varvec{g}}}\in H^2(\Omega )\) satisfying (2). Suppose furthermore that (9) holds, and that \((\tilde{{\varvec{u}}},p),({{\varvec{v}}},\pi )\in ({\mathbf {H}}_{\Gamma _0}^1(\Omega )\cap H^2(\Omega )^2)\times L^2(\Omega )\) are the unique solutions of (23) and (28), respectively. Then for any \({\varvec{\theta }}\in {{{\varvec{\Theta }}}}\), the Eulerian derivative of \({\mathcal {G}}\) exists and is characterized as

where \({{\varvec{u}}} = \tilde{{\varvec{u}}}+{{\varvec{g}}}\in H^2(\Omega )^2\), and \(\varvec{\tau }\) is the unit tangential vector on \(\Gamma _{\mathrm{f}}\).

Proof

The existence of the Eulerian derivative follows from properties (A1)–(A5). Substituting (30) into (22) with \({\tilde{E}}=\tilde{{\mathbb {E}}}\) and \(y = (\tilde{{\varvec{u}}},p)\), we get

where \(j({{\varvec{u}}})\) is such that

Since J is quadratic in nature, its Fréchet derivative at \({{\varvec{u}}}\in H^1(\Omega )^2\) in the direction \(\delta {{\varvec{u}}}\in H^1(\Omega )^2\) can be computed as

Furthermore, we note that

From the two previous identities, we rewrite the second line in (31) as follows:

Therefore, from the assumed regularity of the domain, and by employing the divergence theorem, we obtain

\(\square \)

Remark 4

(i) The shape derivative of \({\mathcal {G}}\) as we have formulated it agrees with the Zolesio–Hadamard Structure Theorem (Delfour and Zolesio 2011, Corollary 9.3.1), in that we were able to write the derivative in the form

In this case, we shall call \(\nabla G\) the shape gradient of the objective functional. Furthermore, this form gives us an intuitive gradient descent direction given by \({\varvec{\theta }} = -\nabla G\). This fact—together with the challenges with this chosen direction—will further be explored in the subsequent parts of the paper.

(ii) From the adjoint equation (29), we can see why (BC2) is not easily handled. In fact, if this condition were imposed instead of (BC1), it would be impossible to write the adjoint equation in its strong form. Furthermore, the weak form would include the term \(({\varvec{\varphi }}\cdot {{\varvec{n}}})_{-}\), where \({\varvec{\varphi }}\) is a test function. This expression is quite hard to treat numerically due to its discontinuous nature.

5 Numerical realization

We shall discuss the numerical implementation of the shape optimization problem in this section. We start by discussing how the nonlinearity in the state equations is resolved, then we proceed by introducing gradient descent methods based on the Eulerian derivative of the objective functional. The said gradient descent methods will include the rectification of the volume preservation issue, as required in the formulation of the problem. Lastly, we shall show the convergence, with respect to domain discretization, of the final shapes to a manufactured solution in terms of the final deformation fields and in terms of the Hausdorff distance.

5.1 Newton implementation of the Navier–Stokes equations

The nonlinearity is one of the challenges not only in the analysis but also in the numerical implementation of the Navier–Stokes equations. In this subsection, we shall discuss how this is resolved by means of Newton’s method.

We begin by reiterating the fact that if \((\tilde{{\varvec{u}}},p)\in X\) is the unique solution of (10), then for any \({\mathcal {F}}\in X^*\), there exists a unique solution \((\delta \!{\varvec{u}},\delta p)\in X\) to

This implies that \({\mathbb {E}}'(\tilde{{\varvec{u}}},p)\in {\mathcal {L}}(X,X^*)\) is an isomorphism. Furthermore, by the inverse function theorem there exists a closed ball \({\mathcal {X}}((\tilde{{\varvec{u}}},p);\varepsilon )\) centered at \((\tilde{{\varvec{u}}},p)\in X\) with radius \(\varepsilon >0\) such that \((\tilde{{\varvec{u}}},p)\) is an isolated nonsingular solution.

With all the facts presented above, we propose the following Newton’s algorithm: we start with an initial element \((\tilde{{\varvec{u}}}^0,p^0)\), and we generate the following sequence \(\{(\tilde{{\varvec{u}}}^k,p^k) \}_k\) using the difference equation

or equivalently, denoting \((\delta \tilde{{\varvec{u}}}^{k+1},\delta p^{k+1}) = (\tilde{{\varvec{u}}}^{k+1}-\tilde{{\varvec{u}}}^{k},p^{k+1}-p^{k})\),

for all \(({\varvec{\varphi }},\psi )\in X\). In strong form, by letting \({{\varvec{u}}}^k = \tilde{{\varvec{u}}}^k + {{\varvec{g}}}\), we can write (32) as

where \({\mathcal {D}}_{\times }({{\varvec{u}}},{{\varvec{v}}}):= ({{\varvec{u}}}\cdot \times ){{\varvec{v}}} + ({{\varvec{v}}}\cdot \times ){{\varvec{u}}}\) for \(\times \in \{{\nabla },{{\varvec{n}}}\}\).

For a given \({\varvec{f}}\in L^2(\Omega )^2\) and \({{\varvec{g}}}\in H^2(\Omega )^2\), one can show, by following the arguments of Girault and Raviart (1986), that if \({{\varvec{u}}}\in {{\mathbf {V}}}\cap H^2(\Omega )^2 \) is the solution to (10), then there exists \(c<1\) such that the following convergence estimate holds

Solving numerically, we shall approximate the solution to (10) using (33), with the stopping criterion \(\Vert {{\varvec{u}}}^{k+1} - {{\varvec{u}}}^k \Vert _{H^1(\Omega )^2} < \varepsilon \) for sufficiently small \(\varepsilon >0\).
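The structure of this iteration (solve a linearized problem for the increment, update, and stop once successive iterates are close) can be illustrated on a small algebraic system. The following is a minimal sketch only: the two-unknown residual with a quadratic term is a hypothetical stand-in for the discretized state equations, the Euclidean norm replaces the \(H^1(\Omega )^2\)-norm, and the names `newton`, `residual`, and `jacobian` are illustrative and not taken from the implementation used in this paper.

```python
import numpy as np


def newton(residual, jacobian, x0, eps=1e-12, max_iter=50):
    """Newton iteration with the stopping rule ||x^{k+1} - x^k|| < eps."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        # Linearized problem: J(x^k) dx = -F(x^k)
        dx = np.linalg.solve(jacobian(x), -residual(x))
        x = x + dx
        if np.linalg.norm(dx) < eps:
            return x, k
    raise RuntimeError("Newton iteration did not converge")


# Hypothetical toy problem: a linear part A x plus a quadratic term,
# loosely mimicking "diffusion + convection = forcing".
A = np.array([[2.0, -1.0], [-1.0, 2.0]])
f = np.array([1.0, 1.0])


def residual(x):
    return A @ x + 0.5 * x**2 - f


def jacobian(x):
    # Derivative of the residual: A + diag(x)
    return A + np.diag(x)


x_star, n_iter = newton(residual, jacobian, x0=np.zeros(2))
```

For this toy system both components of the solution equal \(\sqrt{3}-1\), and only a handful of iterations are needed from the zero initial guess, in line with the fast local convergence expected of Newton’s method.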

It is worth mentioning that Newton’s method for the Navier–Stokes equations with (BC1) is naturally constructed thanks to the absence of \((\cdot )_{-}\). On the other hand, the linearization is not easily handled if (BC2) is used.

5.2 Gradient descent methods for deformation fields

As mentioned before, in this part we discuss gradient methods based on the Eulerian derivative of the objective functional. Furthermore, due to the volume constraint we shall utilize two methods, namely the use of an augmented Lagrangian method based on Nocedal and Wright (2006), and of divergence-free deformation fields.

5.2.1 Augmented Lagrangian method

Note that the optimization problem can be written as the equality constrained optimization problem

For this reason, we formulate the augmented Lagrangian as

where \(\ell >0\) is a Lagrange multiplier, and \(b>0\) is a regularizing parameter. We note that the quadratic term, aside from its regularizing effect, acts as a stricter penalizing term compared to the usual Lagrangian methods. This method was formalized in the context of shape optimization by Dapogny et al. (2018). Thus, to minimize the objective functional while respecting the constraint \({\mathcal {F}} = 0\), we instead minimize the augmented Lagrangian \({\mathcal {L}}\).

By following the same arguments used to derive the Eulerian derivative of \({\mathcal {G}}\), the derivative of \({\mathcal {L}}\) can be computed as

where \(\nabla L = \nabla G - \ell + b({|}\Omega {|} - m).\)
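The stated expression for \(\nabla L\) is consistent with an augmented Lagrangian of the following form. This is a reconstruction under assumptions rather than a quotation of the authors' formula: we assume \({\mathcal {F}}(\Omega ) = {|}\Omega {|} - m\), the sign convention of Nocedal and Wright (2006), and that the derivative of \({\mathcal {G}}\) has the boundary form of Remark 4(i).

\[
{\mathcal {L}}(\Omega ) = {\mathcal {G}}(\Omega ) - \ell \,{\mathcal {F}}(\Omega ) + \frac{b}{2}\,{\mathcal {F}}(\Omega )^2 .
\]

Since \({\varvec{\theta }}\) vanishes on \(\partial \Omega \backslash \Gamma _{\mathrm{f}}\), the volume has the derivative \(d{|}\Omega {|}{\varvec{\theta }} = \int _{\Gamma _{\mathrm{f}}}{\varvec{\theta }}\cdot {{\varvec{n}}}\,ds\), and the chain rule gives

\[
d{\mathcal {L}}(\Omega ){\varvec{\theta }} = d{\mathcal {G}}(\Omega ){\varvec{\theta }} + \big (-\ell + b({|}\Omega {|} - m)\big )\int _{\Gamma _{\mathrm{f}}}{\varvec{\theta }}\cdot {{\varvec{n}}}\,ds ,
\]

so that the density multiplying \({\varvec{\theta }}\cdot {{\varvec{n}}}\) on \(\Gamma _{\mathrm{f}}\) is \(\nabla G - \ell + b({|}\Omega {|} - m)\), which matches the expression for \(\nabla L\).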

5.2.2 Smooth extensions of the deformation fields and the unit normal vector

As mentioned in Remark 4(i), an intuitive gradient descent direction on the boundary \(\Gamma _{\mathrm{f}}\) is either \({\varvec{\theta }} = -\nabla G\), or \({\varvec{\theta }}=-\nabla L\) if one chooses to minimize the augmented Lagrangian. However, this choice of \({\varvec{\theta }}\) may cause irregularities on the deformed boundary \(\Gamma _{\mathrm{f}}\). For this reason, methods for approximating the deformation field \({\varvec{\theta }}\) and extending it to the domain \(\Omega \) have been proposed; for example, Azegami and Takeuchi (2006) developed a seminal approach to such smoothing. In our current problem, we adapt this smoothing extension for minimizing the augmented Lagrangian. In particular, we seek \({\varvec{\theta }}\in H^1_{{{\varvec{\Theta }}}}(\Omega )^2 := \{{\varvec{\varphi }}\in H^1(\Omega )^2; {\varvec{\varphi }}=0\text { on }\partial \Omega \backslash \Gamma _{\mathrm{f}} \}\) that solves the Robin equation

Meanwhile, we shall also utilize the approach proposed by Simon and Notsu (2022b), which minimizes the objective functional itself but uses divergence-free deformation fields, i.e., we seek \(({\varvec{\theta }},\vartheta )\in H^1_{{{\varvec{\Theta }}}}(\Omega )^2\times L^2(\Omega )^2\) that solves the following Stokes equation

for all \(({\varvec{\varphi }},\psi )\in H^1_{{{\varvec{\Theta }}}}(\Omega )^2\times L^2(\Omega )^2\). We note that in both methods \(\varepsilon ,\varepsilon _1,\varepsilon _2>0\) are chosen sufficiently small, so that the boundary conditions \(-\varepsilon \partial _{{\varvec{n}}}{\varvec{\theta }}+{\varvec{\theta }} = -\nabla L\) and \(-\varepsilon _1\partial _{{\varvec{n}}}{\varvec{\theta }}+{\varvec{\theta }} + \varepsilon _2\vartheta {{\varvec{n}}} = -\nabla G\) on \(\Gamma _{\mathrm{f}}\) are respective approximations of \(\varvec{\theta }= -\nabla L\) and \(\varvec{\theta }= -\nabla G\) on \(\Gamma _{\mathrm{f}}\).

In the same manner as above, we shall determine a smooth extension of the unit normal vector \({\varvec{n}}\) on \(\Gamma _{\mathrm{f}}\), by finding the vector \({\varvec{N}}\) that solves

The impetus for solving for such an extension is the appearance of the mean curvature \(\kappa \) in the shape gradients. According to (Henrot and Pierre 2014, Proposition 5.4.8), the mean curvature of the surface \(\Gamma _{\mathrm{f}}\) may be determined using the identity \(\kappa = \mathrm {div}_{\Gamma _{\mathrm{f}}}{{\varvec{n}}}= \mathrm {div}{{\varvec{N}}}\) for any extension \({{\varvec{N}}}\), given that the surface is sufficiently smooth. Since our domain is \({\mathcal {C}}^{1,1}\)-regular, we may apply this identity.
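As a quick sanity check of this identity, consider a circle of radius \(r>0\) centered at the origin, which is the shape of the initial free boundary used later. The following worked example is an illustration only; the unit-length extension \({{\varvec{N}}}(x) = x/{|}x{|}\) and the choice of the normal pointing away from the center are assumptions made here for simplicity.

\[
\mathrm {div}\,{{\varvec{N}}}(x) = \sum _{i=1}^{2}\partial _{x_i}\!\left( \frac{x_i}{{|}x{|}}\right) = \sum _{i=1}^{2}\left( \frac{1}{{|}x{|}} - \frac{x_i^2}{{|}x{|}^3}\right) = \frac{2}{{|}x{|}} - \frac{1}{{|}x{|}} = \frac{1}{{|}x{|}},
\]

so that \(\kappa = \mathrm {div}\,{{\varvec{N}}} = 1/r\) on the circle, as expected. If the normal is oriented toward the center instead, the sign changes and \(\kappa = -1/r\).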

5.2.3 Gradient descent iterations

Having laid out the necessary ingredients, we finally write here the iterative scheme for approximating the shape solution. Recall that we introduced domain variations that utilize the identity perturbation operator. One of the reasons why we take advantage of such a perturbation is its usability and simplicity for iterative numerical methods. In particular, for a given initial domain \(\Omega _0\) we shall generate a sequence \(\{\Omega _k\}_k\) by means of the difference equation \(\Omega _{k+1} = \Omega _k + t^k{\varvec{\theta }}^k.\) In this iteration, we note that the only changing boundary is the free boundary \(\Gamma _{\mathrm{f}}\), so we denote the \(k\)th iteration of the mentioned boundary by \(\Gamma _{\mathrm{f}}^k\) and the \(k\)th iteration of \(\nabla G\) (or \(\nabla L\)) by \(\nabla G^k\) (resp. \(\nabla L^k\)), i.e., the states and the mean curvature are all evaluated in \(\Omega _k\) instead of \(\Omega \), and the deformation fields \({\varvec{\theta }}^k\) are determined by solving either (35) or (36), but with \(\Omega _k\), \(\Gamma _{\mathrm{f}}^k\) and \(\nabla G^k\) (resp. \(\nabla L^k\)) instead of \(\Omega \), \(\Gamma _{\mathrm{f}}\), and \(\nabla G\) (resp. \(\nabla L\)), respectively.

The time step on the other hand, just like most gradient descent methods, is determined using a line search method, i.e., for fixed parameters \(0<\sigma _1,\sigma _2 < 1\), \(j\in \{0,1,2,\ldots \}\), and by denoting

(or \({\mathcal {L}}(\Omega _k)\) for the case of the augmented Lagrangian), we take \(t^k = {\hat{t}}_j^k\), where j is the smallest integer such that \({\mathcal {G}}(\Omega _k + {\hat{t}}_j^k{\varvec{\theta }}^k) < {\mathcal {G}}(\Omega _k )\) (resp. \({\mathcal {L}}(\Omega _k + {\hat{t}}_j^k{\varvec{\theta }}^k) < {\mathcal {L}}(\Omega _k )\)), and such that the perturbed domain \(\Omega _k + {\hat{t}}_j^k{\varvec{\theta }}^k\) does not exhibit mesh degeneracy.
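The selection rule for \(t^k\) can be sketched as a simple backtracking loop. The code below is a minimal illustration under stated assumptions: the trial steps are taken as \(t_0\sigma _2^{\,j}\) with a user-supplied initial step \(t_0\) (the exact scaling of \({\hat{t}}_j^k\) used in this paper is not reproduced here), the "domain" is a plain coordinate vector, and `is_admissible` is a hypothetical placeholder for the mesh degeneracy check.

```python
import numpy as np


def backtracking_step(G, omega, theta, t0, sigma2=0.5,
                      is_admissible=lambda domain: True, max_reductions=30):
    """Return (t, j) with j the smallest index such that the trial domain
    omega + t*theta is admissible and strictly decreases G."""
    G_old = G(omega)
    for j in range(max_reductions + 1):
        t = t0 * sigma2**j
        trial = omega + t * theta
        if is_admissible(trial) and G(trial) < G_old:
            return t, j
    raise RuntimeError("no admissible descent step found")


# Hypothetical toy objective: squared distance to the origin.
def G(x):
    return float(np.sum(x**2))


omega = np.array([1.0, -2.0])
theta = -2.0 * omega            # negative gradient of the toy objective
t, j = backtracking_step(G, omega, theta, t0=2.0)
```

In this toy run the first two trial steps (\(t=2\) and \(t=1\)) do not give a strict decrease, and the step \(t = 0.5\) is accepted at \(j=2\).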

Lastly, the parameters \(\ell ,b>0\) in the augmented Lagrangian will also be updated iteratively based on (Nocedal and Wright 2006, Framework 17.3). Given initial parameters \(\ell _0,b_0>0\), we generate the iterates \(\ell _k,b_k\) using the following rules

where \(\tau >1\) and \({\overline{b}}>0\) are given parameters. See also the work of Allaire and Pantz (2006) for a detailed discussion of volume preserving Lagrange multiplier schemes.
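A multiplier update of this type can be sketched as follows. This is an assumed form in the spirit of (Nocedal and Wright 2006, Framework 17.3), written with the sign convention \(\nabla L = \nabla G - \ell + b({|}\Omega {|} - m)\): the multiplier is shifted by the current constraint violation, and the penalty parameter is increased by the factor \(\tau \) as long as it is below the cap \({\overline{b}}\). The function name `update_multipliers` and the sample values are hypothetical, and the rule actually used in this paper may differ in detail.

```python
def update_multipliers(ell, b, volume, m, tau, b_max):
    """One assumed augmented Lagrangian parameter update.

    ell    : current Lagrange multiplier
    b      : current penalty parameter
    volume : current volume |Omega_k|
    m      : target volume
    tau    : growth factor (> 1) for the penalty parameter
    b_max  : cap on the penalty parameter
    """
    violation = volume - m                     # F(Omega_k) = |Omega_k| - m
    ell_next = ell - b * violation             # first-order multiplier estimate
    b_next = tau * b if b < b_max else b       # grow b only while below the cap
    return ell_next, b_next


# Two consecutive updates with made-up values.
ell1, b1 = update_multipliers(ell=1.0, b=2.0, volume=1.5, m=1.0, tau=2.0, b_max=3.0)
ell2, b2 = update_multipliers(ell1, b1, volume=1.1, m=1.0, tau=2.0, b_max=3.0)
```

In the second call the penalty parameter is already above the cap and is therefore left unchanged, while the multiplier keeps adapting to the remaining volume violation.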

Summarizing these methods, the algorithm below provides the steps for computing an approximate shape solution:

- Initialization: Choose the parameters \(\nu ,\alpha , \gamma ,\varepsilon ,\varepsilon _1,\varepsilon _2,\varepsilon _3,\sigma _1,\sigma _2,\tau \) and \({\overline{b}}\); initialize the domain \(\Omega _0\), the solution \({{\varvec{u}}}_0\) (using Newton’s method (33)), and the parameters \(\ell _0\) and \(b_0\) (only if the augmented Lagrangian is being implemented).
- Step 1: Evaluate \({\mathcal {G}}(\Omega _k)\) (or \({\mathcal {L}}(\Omega _k)\)); solve for the adjoint variable \({{\varvec{v}}}_k\) from (29), the mean curvature \(\kappa _k\) from (37), and the deformation field \({\varvec{\theta }}^k\) using (36), or (35) in the case of the augmented Lagrangian method.
- Step 2: Set \(\Omega _{k+1} = \Omega _k + t^k{\varvec{\theta }}^k\), where \(t^k\) is chosen appropriately as discussed above.
- Step 3: Solve the new solution \({{\varvec{u}}}_{k+1}\) and evaluate \({\mathcal {G}}(\Omega _{k+1})\) (or \({\mathcal {L}}(\Omega _{k+1})\)); if \({|}{\mathcal {G}}(\Omega _{k+1})-{\mathcal {G}}(\Omega _{k}){|} < \texttt {tol}\) (resp. \({|}{\mathcal {L}}(\Omega _{k+1})-{\mathcal {L}}(\Omega _{k}){|} < \texttt {tol}\)) then \(\Omega _{k+1}\) is accepted as the approximate solution.
- Step 4: (Only for augmented Lagrangian method) Update the parameters \(\ell _{k+1},b_{k+1}\) using (39).

5.3 Numerical examples

This part shows examples of implementations of the algorithm previously discussed. We start by implementing the augmented Lagrangian method, and show its volume preserving limitations by simulating different values of the Lagrange multiplier \(\ell _0\). We then simulate examples using the divergence-free deformation fields, and compare the solutions with the augmented Lagrangian method based on the convergence rate (number of iterations) and the volume preserving property. Lastly, we end by showing convergence of shape solutions to a manufactured solution based on the Hausdorff distance between the boundaries and on the \(H^1\)-convergence of the deformation fields.
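For readers unfamiliar with the Hausdorff distance used in the last comparison, the following minimal sketch computes it for two boundaries represented by finite point sets. This is an illustration under assumptions, not the procedure used for the results of this paper: the boundaries are sampled at finitely many points, and the function name `hausdorff_distance` and the two test circles are hypothetical.

```python
import numpy as np


def hausdorff_distance(P, Q):
    """Symmetric Hausdorff distance between point sets P (n x 2) and Q (m x 2)."""
    # Pairwise Euclidean distances, shape (n, m)
    diff = P[:, None, :] - Q[None, :, :]
    dist = np.sqrt((diff**2).sum(axis=2))
    # Largest distance from a point of one set to the nearest point of the other
    return max(dist.min(axis=1).max(), dist.min(axis=0).max())


def sample_circle(radius, n=200):
    """n equally spaced points on a circle centered at the origin."""
    angles = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    return np.column_stack([radius * np.cos(angles), radius * np.sin(angles)])


d = hausdorff_distance(sample_circle(1.0), sample_circle(1.1))
```

For two concentric circles sampled at the same angles, the computed value equals the difference of the radii, here \(0.1\).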

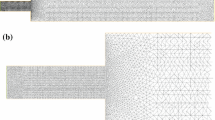

The simulations were all run using FreeFem++ (Hecht 2012) on an Intel Core i7 CPU @ 3.80 GHz with 64 GB RAM. The state and adjoint equations, as well as the divergence-free deformation fields, are solved using triangular Taylor–Hood (P2–P1) finite elements, while the mean curvature and the deformation fields of the augmented Lagrangian method are solved using P1 and P2 finite elements, respectively. All of the resulting systems are solved with the UMFPACK solver. The input function \({{\varvec{g}}}\) is a Poiseuille-like function given by \({\varvec{g}}=(1.2(0.25 - x_2^2),0)^\top \) on \(\Gamma _{\mathrm{in}}\). For simplicity, the external force is taken as \({{\varvec{f}}} = 0\), the viscosity parameter is chosen as \(\nu = 1/100\), and the Tikhonov parameter is chosen as \(\alpha = 7\) to ensure that \({\mathcal {G}}(\Omega ^*)\approx 0\) at the solution domain \(\Omega ^*\). As for the domain, we consider a rectangular outer boundary and a circular initial free (inner) boundary, as shown in Fig. 2.
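The inflow profile can be checked numerically with a few lines of code. The sketch below is illustrative only: it assumes that the inflow boundary spans \(x_2\in [-0.5,0.5]\), which is inferred from the zeros of the profile and is not stated explicitly above, and the function name `inflow` is hypothetical.

```python
import numpy as np


def inflow(x2):
    """Poiseuille-like inflow g = (1.2*(0.25 - x2^2), 0) evaluated at heights x2."""
    x2 = np.asarray(x2, dtype=float)
    return np.stack([1.2 * (0.25 - x2**2), np.zeros_like(x2)], axis=-1)


# Values at the (assumed) channel walls and at the centerline
g_walls = inflow([-0.5, 0.5])
g_center = inflow([0.0])

# Flow rate through the inflow boundary via a midpoint rule on [-0.5, 0.5]
n = 1000
h = 1.0 / n
midpoints = -0.5 + h * (np.arange(n) + 0.5)
flow_rate = h * inflow(midpoints)[:, 0].sum()
```

Under this assumption the profile vanishes at the walls, attains its maximum \(0.3\) at the centerline, and carries a flow rate of approximately \(0.2\).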

Meanwhile, the domain variation based on the deformation fields will be dealt with by using the movemesh command in FreeFem++, and the possible mesh degeneracy will be circumvented (aside from the choice of the step size) by utilizing the combination of the checkmovemesh and adaptmesh commands.

Lastly, we mention that for the first two subparts of this subsection the mesh is taken to be uniform with size \(h = 1/60\), i.e., the diameter of all the triangles in the domain triangulation is taken as 1/60. Furthermore, the stopping tolerance for the shape approximation is set to \(\texttt {tol}=10^{-4}\).

5.3.1 Augmented Lagrangian method