Abstract

There exist linear relations among tensor entries of low rank tensors. These linear relations can be expressed by multi-linear polynomials, which are called generating polynomials. We use generating polynomials to compute tensor rank decompositions and low rank tensor approximations. We prove that this gives a quasi-optimal low rank tensor approximation if the given tensor is sufficiently close to a low rank one.

1 Introduction

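Let m and \(n_1, \cdots , n_m\) be positive integers. A tensor \(\mathcal {F}\) of order m and dimension \(n_1 \times \cdots \times n_m\) can be labeled such that

$$\begin{aligned} \mathcal {F}\, = \, (\mathcal {F}_{i_1,\cdots ,i_m}), \quad 1 \leqslant i_1 \leqslant n_1, \, \cdots , \, 1 \leqslant i_m \leqslant n_m. \end{aligned}$$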

Let \(\mathbb {F}\) be a field (either the real field \(\mathbb {R}\) or the complex field \(\mathbb {C}\)). The space of all tensors of order m and dimension \(n_1 \times \cdots \times n_m\) with entries in \(\mathbb {F}\) is denoted as \(\mathbb {F}^{n_1\times \cdots \times n_m}\). For vectors \(v_i \in \mathbb {F}^{n_i}\), \(i=1,\cdots ,m\), their outer product \(v_1 \otimes \cdots \otimes v_m\) is the tensor in \(\mathbb {F}^{n_1\times \cdots \times n_m}\) such that

$$\begin{aligned} (v_1 \otimes \cdots \otimes v_m)_{i_1,\cdots ,i_m} \, = \, (v_1)_{i_1} \cdots (v_m)_{i_m} \end{aligned}$$

for all labels in the corresponding range. A tensor of the form \(v_1 \otimes \cdots \otimes v_m\) is called a rank-1 tensor. For every tensor \(\mathcal {F}\in \mathbb {F}^{n_1\times \cdots \times n_m}\), there exist vector tuples \((v^{s,1}, \cdots , v^{s,m})\), \(s=1,\cdots ,r\), \(v^{s,j} \in \mathbb {F}^{n_j}\), such that

$$\begin{aligned} \mathcal {F}\, = \, \sum _{s=1}^r v^{s,1} \otimes \cdots \otimes v^{s,m}. \end{aligned}$$(1)

The smallest such r is called the \(\mathbb {F}\)-rank of \(\mathcal {F}\), denoted \(\text {rank}_{\mathbb {F}}(\mathcal {F})\). When r is minimum, Eq. (1) is called a rank-r decomposition over the field \(\mathbb {F}\). In the literature, this rank is sometimes referred to as the Candecomp/Parafac (CP) rank. We refer to [1, 2] for various notions of tensor ranks. Recent work on tensor decompositions can be found in [3,4,5,6,7,8,9]. Tensors are closely related to polynomial optimization [10,11,12,13,14]. Tensor decompositions have been widely used in temporal tensor analysis, including discovering patterns [15], predicting evolution [16], and identifying temporal communities [17], and in multirelational data analysis, including collective classification [18], word representation learning [19], and coherent subgraph learning [20]. Tensor approximations have been explored in signal processing applications [21] and in multidimensional, multivariate data analysis [22]. Various other applications of tensors can be found in [23,24,25]. Throughout the paper, we use the Hilbert-Schmidt norm for tensors:

$$\begin{aligned} \Vert \mathcal {F}\Vert \, :=\, \Big ( \sum _{i_1,\cdots ,i_m} |\mathcal {F}_{i_1,\cdots ,i_m}|^2 \Big )^{1/2}. \end{aligned}$$

The low rank tensor approximation (LRTA) problem is to find a low rank tensor that is close to a given one. This is equivalent to a nonlinear least squares optimization problem. For a given tensor \(\mathcal {F}\in \mathbb {F}^{n_1\times \cdots \times n_m}\) and a given rank r, we look for r vector tuples \(v^{(s)}: = (v^{s,1}, \cdots , v^{s,m})\), \(s=1,\cdots ,r\), such that

$$\begin{aligned} \mathcal {F}\, \approx \, \sum _{s=1}^r v^{s,1} \otimes \cdots \otimes v^{s,m}. \end{aligned}$$

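This requires solving the following nonlinear least squares optimization:

$$\begin{aligned} \min _{v^{s,j} \in \mathbb {F}^{n_j}} \quad \bigg \Vert \mathcal {F}- \sum _{s=1}^r v^{s,1} \otimes \cdots \otimes v^{s,m} \bigg \Vert ^2 . \end{aligned}$$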

When \(r = 1\), a best rank-1 approximating tensor always exists, and computing it is equivalent to computing the spectral norm [26, 27]. When \(r>1\), a best rank-r tensor approximation may not exist [28]. Classical methods for low rank tensor approximations include the alternating least squares (ALS) method [29,30,31], higher-order power iterations [32], semidefinite relaxations [10, 12], SVD-based methods [33], and optimization-based methods [8, 34]. We refer to [3, 35] for recent work on low rank tensor approximations.

1.1 Contributions

In this paper, we extend the generating polynomial method in [7, 35] to compute tensor rank decompositions and low rank tensor approximations for nonsymmetric tensors. First, we estimate generating polynomials by solving linear least squares problems. Second, we find their approximate common zeros, which can be done by computing eigenvalue decompositions. Third, we get a tensor decomposition from these common zeros by solving linear least squares problems. To find a low rank tensor approximation, we first apply the decomposition method to obtain a low rank approximating tensor and then use nonlinear optimization methods to improve the approximation. Our major conclusion is that if the tensor to be approximated is sufficiently close to a low rank one, then the obtained low rank tensor is a quasi-optimal low rank approximation. The proof is based on perturbation analysis of linear least squares and eigenvalue decompositions.

The paper is organized as follows. In Sect. 2, we review some basic results about tensors. In Sect. 3, we introduce the concept of generating polynomials and study their relations to tensor decompositions. In Sect. 4, we give an algorithm for computing tensor rank decompositions for low rank tensors. In Sect. 5, we give an algorithm for computing low rank approximations, together with an approximation error analysis. In Sect. 6, we present numerical experiments. Conclusions are drawn in Sect. 7.

2 Preliminaries

2.1 Notation

The symbol \({\mathbb {N}}\) (resp., \({\mathbb {R}}\), \({\mathbb {C}}\)) denotes the set of nonnegative integers (resp., real, complex numbers). For an integer \(r>0\), denote the set \([r] :=\{1, \cdots , r\}\). Uppercase letters (e.g., A) denote matrices, and \(A_{ij}\) denotes the (i, j)th entry of the matrix A. Calligraphic letters (e.g., \({\mathcal {F}}\)) denote tensors, and \({\mathcal {F}}_{i_1,\cdots ,i_m}\) denotes the \((i_1,\cdots ,i_m)\)th entry of the tensor \({\mathcal {F}}\). For a complex matrix A, \(A^{\top }\) denotes its transpose and \(A^*\) denotes its conjugate transpose. The Kruskal rank of A, denoted \(\kappa _A\), is the largest number k such that every set of k columns of A is linearly independent. For a vector v, \((v)_i\) denotes its ith entry and \(\hbox {diag}(v)\) denotes the square diagonal matrix whose diagonal entries are given by the entries of v. The subscript notation \(v_{s:t}\) denotes the subvector of v with labels from s to t. For a matrix A, the subscript notations \(A_{:,j}\) and \(A_{i,:}\) denote its jth column and ith row, respectively. Similar subscript notation is used for tensors. For two matrices A, B, their classical Kronecker product is denoted as \(A \boxtimes B\). For a set S, its cardinality is denoted as |S|.

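For a tensor decomposition of \(\mathcal {F}\) such that

$$\begin{aligned} \mathcal {F}\, = \, \sum _{s=1}^r u^{s,1} \otimes \cdots \otimes u^{s,m}, \end{aligned}$$(3)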

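we denote the matrices

$$\begin{aligned} U^{(j)} \, :=\, \begin{bmatrix} u^{1,j}&\cdots&u^{r,j} \end{bmatrix} \in \mathbb {C}^{n_j \times r}, \quad j=1,\cdots ,m. \end{aligned}$$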

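The matrix \(U^{(j)}\) is called the jth decomposing matrix for \(\mathcal {F}\). For convenience of notation, we denote

$$\begin{aligned} U^{(1)} \circ \cdots \circ U^{(m)} \, :=\, \sum _{s=1}^r U^{(1)}_{:,s} \otimes \cdots \otimes U^{(m)}_{:,s}. \end{aligned}$$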

Then the above tensor decomposition is equivalent to \(\mathcal {F}= U^{(1)} \circ \cdots \circ U^{(m)}\).
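The notation \(U^{(1)} \circ \cdots \circ U^{(m)}\) and the Hilbert-Schmidt norm translate directly into array code. The following minimal NumPy sketch is only an illustration; the helper names compose and hs_norm are hypothetical and not part of the paper.

```python
import numpy as np
from functools import reduce


def compose(factors):
    """Return U^(1) o ... o U^(m) = sum_s U^(1)[:, s] (x) ... (x) U^(m)[:, s].

    `factors` is a list of m arrays; the j-th one has shape (n_j, r).
    """
    r = factors[0].shape[1]
    total = 0
    for s in range(r):
        # np.multiply.outer appends the index sets, giving shape (n_1, ..., n_m).
        total = total + reduce(np.multiply.outer, [U[:, s] for U in factors])
    return total


def hs_norm(F):
    """Hilbert-Schmidt norm: square root of the sum of |entry|^2 over all entries."""
    return np.sqrt(np.sum(np.abs(F) ** 2))


# Usage: the rank-1 tensor (1, 2) (x) (3, 4) (x) (1, 1) in R^{2 x 2 x 2}.
U1, U2, U3 = np.array([[1.0], [2.0]]), np.array([[3.0], [4.0]]), np.array([[1.0], [1.0]])
F = compose([U1, U2, U3])
print(F[1, 1, 0])   # 8.0, i.e. 2 * 4 * 1
print(hs_norm(F))   # about 15.81 = sqrt(250): squared entries 9, 16, 36, 64 each appear twice
```

The same compose function works for any order m, since the outer product is accumulated mode by mode.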

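For a matrix \(V \in \mathbb {C}^{p \times n_t}\), define the matrix-tensor product \(\mathcal {A}\) of V and \(\mathcal {F}\) along the tth mode: \(\mathcal {A}\) is a tensor in \(\mathbb {C}^{n_1\times \cdots \times n_{t-1} \times p \times n_{t+1} \times \cdots \times n_m}\) such that the ith slice of \(\mathcal {A}\) along the tth mode is

$$\begin{aligned} \mathcal {A}_{:,\cdots ,:,i,:,\cdots ,:} \, = \, \sum _{\ell =1}^{n_t} V_{i\ell } \, \mathcal {F}_{:,\cdots ,:,\ell ,:,\cdots ,:}, \quad i=1,\cdots ,p. \end{aligned}$$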

2.2 Flattening matrices

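We partition the dimensions \(n_1,n_2,\cdots ,n_m\) into two disjoint groups \(I_1\) and \(I_2\) such that the difference

$$\begin{aligned} \Big | \prod _{n_i \in I_1} n_i - \prod _{n_i \in I_2} n_i \Big | \end{aligned}$$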

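is minimum. Up to a permutation, we write that \(I_1 = \{n_{1}, \cdots , n_{k} \}\), \(I_2 = \{n_{k+1}, \cdots , n_{m} \}.\) For a tensor \(\mathcal {F}\in \mathbb {C}^{n_{1}\times \cdots \times n_{m}}\), the above partition gives the flattening matrix

$$\begin{aligned} \hbox {Flat}(\mathcal {F}) \, :=\, \big ( \mathcal {F}_{i_1,\cdots ,i_m} \big )_{(i_1,\cdots ,i_k),\,(i_{k+1},\cdots ,i_m)} \in \mathbb {C}^{(n_1\cdots n_k)\times (n_{k+1}\cdots n_m)}. \end{aligned}$$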

This gives the most square flattening matrix for \(\mathcal {F}\). Let \(\sigma _{r}\) denote the closure of all rank-r tensors in \(\mathbb {C}^{n_{1}\times \cdots \times n_{m}}\), under the Zariski topology (see [36]). The set \(\sigma _r\) is an irreducible variety of \(\mathbb {C}^{n_1 \times \cdots \times n_{m}}\). For a given tensor \(\mathcal {F}\in \sigma _r\), it is possible that \({\text {rank}}(\mathcal {F}) > r.\) This fact motivates the notion of border rank:

$$\begin{aligned} {\text {brank}}(\mathcal {F}) \, :=\, \min \{ r : \mathcal {F}\in \sigma _r \}. \end{aligned}$$

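For every tensor \(\mathcal {F}\in \mathbb {C}^{n_{1}\times \cdots \times n_{m}}\), one can show that

$$\begin{aligned} {\text {rank}}\hbox {Flat}(\mathcal {F}) \, \leqslant \, {\text {brank}}(\mathcal {F}) \, \leqslant \, {\text {rank}}(\mathcal {F}). \end{aligned}$$(6)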

A property \(\texttt{P}\) is said to hold generically on \(\sigma _{r}\) if \(\texttt{P}\) holds on a Zariski open subset T of \(\sigma _r\). For such a property \(\texttt{P}\), each \(u \in T\) is called a generic point. Interestingly, the above three ranks are equal for generic points of \(\sigma _r\) for a range of values of r.

Lemma 1

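Let s be the smaller dimension of the matrix \(\hbox {Flat}(\mathcal {F})\). For every \(r \leqslant s\), the equalities

$$\begin{aligned} {\text {rank}}(\mathcal {F}) \, = \, {\text {brank}}(\mathcal {F}) \, = \, {\text {rank}}\hbox {Flat}(\mathcal {F}) \, = \, r \end{aligned}$$(7)

hold for tensors \(\mathcal {F}\) in a Zariski open subset of \(\sigma _r\).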

Proof

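Let \(\phi _{1}, \cdots , \phi _{\ell }\) be the \(r \times r\) minors of the matrix

$$\begin{aligned} \hbox {Flat}\Big ( \sum _{i=1}^r x^{i,1} \otimes \cdots \otimes x^{i,m} \Big ). \end{aligned}$$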

They are homogeneous polynomials in \(x^{i, j}\) \((i=1, \cdots , r, \, j=1, \cdots , m)\). Let x denote the tuple \(\left( x^{1,1}, x^{1,2}, \cdots , x^{r, m}\right) .\) Define the projective variety in \(\mathbb {P}^{ r\left( n_{1}+\cdots +n_{m}\right) -1}\)

$$\begin{aligned} Z \, :=\, \{ x : \phi _1(x) = \cdots = \phi _{\ell }(x) = 0 \}. \end{aligned}$$

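Then \(Y:=\mathbb {P}^{r\left( n_{1}+\cdots +n_{m}\right) -1} \backslash Z\) is a Zariski open subset of full dimension. Consider the polynomial mapping \(\pi : Y \rightarrow \sigma _r\),

$$\begin{aligned} \pi (x) \, :=\, \sum _{i=1}^r x^{i,1} \otimes \cdots \otimes x^{i,m}. \end{aligned}$$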

The image \(\pi (Y)\) is dense in the irreducible variety \(\sigma _{r}.\) So, \(\pi (Y)\) contains a Zariski open subset \(\mathscr {Y}\) of \(\sigma _r\) (see [37]). For each \(\mathcal {F}\in {\mathscr {Y}}\), there exists \(u \in Y\) such that \(\mathcal {F}= \pi (u)\). Because \(u \notin Z\), at least one of \(\phi _{1}(u), \cdots , \phi _{\ell }(u)\) is nonzero, and hence \({\text {rank}}\hbox {Flat}(\mathcal {F}) \geqslant r .\) By (6), we know (7) holds for all \(\mathcal {F}\in \mathscr {Y}\) since \({\text {rank}}(\mathcal {F}) \leqslant r .\) Since \(\mathscr {Y}\) is a Zariski open subset of \(\sigma _r\), the lemma holds.

By Lemma 1, if \(r \leqslant s\) and \(\mathcal {F}\) is a generic tensor in \(\sigma _{r}\), we can use \({\text {rank}}\hbox {Flat}(\mathcal {F})\) to estimate \({\text {rank}}(\mathcal {F})\). However, for a generic \({\mathcal {F}} \in \mathbb {C}^{n_{1} \times \cdots \times n_{m}}\) such that \({\text {rank}}\hbox {Flat}(\mathcal {F})=r\), we cannot conclude \(\mathcal {F}\in \sigma _r\).
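Lemma 1 suggests estimating the rank of a generic tensor in \(\sigma _r\) by the rank of its most square flattening. A minimal NumPy sketch of this estimate follows. It assumes the partition of the modes is found by brute force over all subsets (adequate for small m) and that the numerical rank returned by np.linalg.matrix_rank with its default tolerance is reliable, which is reasonable for exact data but would require a chosen singular-value threshold for noisy tensors. The function names are hypothetical.

```python
import itertools
import numpy as np


def most_square_partition(dims):
    """Split mode labels into (I1, I2) minimizing |prod(dims[I1]) - prod(dims[I2])|."""
    m = len(dims)
    best = None
    for k in range(1, m):
        for I1 in itertools.combinations(range(m), k):
            I2 = tuple(i for i in range(m) if i not in I1)
            p1 = int(np.prod([dims[i] for i in I1]))
            p2 = int(np.prod([dims[i] for i in I2]))
            if best is None or abs(p1 - p2) < best[0]:
                best = (abs(p1 - p2), I1, I2)
    return best[1], best[2]


def flat(F):
    """Most square flattening: rows labeled by the modes in I1, columns by those in I2."""
    I1, I2 = most_square_partition(F.shape)
    rows = int(np.prod([F.shape[i] for i in I1]))
    return np.transpose(F, I1 + I2).reshape(rows, -1)


def estimate_rank(F):
    """rank Flat(F); by Lemma 1 it equals rank(F) for generic F in sigma_r with r <= s."""
    return np.linalg.matrix_rank(flat(F))


# Usage: a random (hence generic) rank-3 tensor in R^{4 x 4 x 4 x 4}.
rng = np.random.default_rng(0)
U = [rng.standard_normal((4, 3)) for _ in range(4)]
F = np.einsum('as,bs,cs,ds->abcd', *U)
print(flat(F).shape, estimate_rank(F))   # (16, 16) 3
```

As the remark after Lemma 1 warns, a flattening rank of r alone does not certify that a tensor lies in \(\sigma _r\); the estimate is only meaningful for generic points of \(\sigma _r\).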

2.3 Reshaping of tensor decompositions

A tensor \(\mathcal {F}\) of order greater than 3 can be reshaped into another tensor \(\widehat{\mathcal {F}}\) of order 3. Under certain conditions, a tensor decomposition of \(\widehat{\mathcal {F}}\) can be converted to a decomposition for \(\mathcal {F}\). In the following, we assume a given tensor \(\mathcal {F}\) has decomposition (3). Suppose the set \(\{1,\cdots ,m\}\) is partitioned into 3 disjoint subsets

$$\begin{aligned} \{1,\cdots ,m\} \, = \, I_1 \cup I_2 \cup I_3. \end{aligned}$$

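Let \(p_i := \prod _{j \in I_i} n_j\) for \(i=1,2,3\), and let \(\hbox {vec}(\cdot )\) denote the reshaping of a tensor into a vector. For the reshaped vectors

$$\begin{aligned} w^{s,i} \, :=\, \hbox {vec}\Big ( \bigotimes _{j \in I_i} u^{s,j} \Big ) \in \mathbb {C}^{p_i}, \quad i=1,2,3, \ s=1,\cdots ,r, \end{aligned}$$(11)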

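we get the following tensor decomposition for the reshaped tensor \(\widehat{\mathcal {F}} \in \mathbb {C}^{p_1 \times p_2 \times p_3}\):

$$\begin{aligned} \widehat{\mathcal {F}} \, = \, \sum _{s=1}^r w^{s,1}\otimes w^{s,2} \otimes w^{s,3}. \end{aligned}$$(12)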

Conversely, for a decomposition like (12) for \(\widehat{\mathcal {F}}\), if all \(w^{s,1}, w^{s,2}, w^{s,3}\) can be expressed as rank-1 products as in (11), then Eq. (12) can be reshaped to a tensor decomposition for \(\mathcal {F}\) as in (3). When the reshaped tensor \(\widehat{\mathcal {F}}\) satisfies certain conditions, its tensor decomposition is unique. In such a case, we can obtain a tensor decomposition for \(\mathcal {F}\) through decomposition (12). A classical uniqueness result is Kruskal’s criterion [38].
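The reshaping in (11) and (12) can be checked numerically. In the sketch below (hypothetical helper names, 0-based mode labels), the tensor is permuted so that the modes in \(I_1, I_2, I_3\) come first, second and third, and it is then reshaped in NumPy's row-major order. Under that ordering, the vectorization of \(\bigotimes _{j \in I_i} u^{s,j}\) is the Kronecker product of the factors, so the reshaped decomposing vectors are obtained with np.kron.

```python
import numpy as np
from functools import reduce


def reshape3(F, I1, I2, I3):
    """Reshape F into a p1 x p2 x p3 tensor, with p_i the product of n_j over j in I_i."""
    perm = tuple(I1) + tuple(I2) + tuple(I3)
    p = [int(np.prod([F.shape[j] for j in I])) for I in (I1, I2, I3)]
    return np.transpose(F, perm).reshape(p)


def reshaped_factors(factors, parts):
    """For each I_i, the matrix whose s-th column is w^{s,i} (row-major vectorization)."""
    r = factors[0].shape[1]
    return [
        np.column_stack([reduce(np.kron, [factors[j][:, s] for j in I]) for s in range(r)])
        for I in parts
    ]


# Usage: a rank-2 tensor in R^{2 x 3 x 2 x 2} with I_1 = {2}, I_2 = {1}, I_3 = {4, 3}
# (1-based), i.e. parts (1,), (0,), (3, 2) in 0-based labels.
rng = np.random.default_rng(0)
dims, r = (2, 3, 2, 2), 2
U = [rng.standard_normal((n, r)) for n in dims]
F = np.einsum('as,bs,cs,ds->abcd', *U)
parts = [(1,), (0,), (3, 2)]
W1, W2, W3 = reshaped_factors(U, parts)
Fhat = reshape3(F, *parts)
print(Fhat.shape)                                                # (3, 2, 4)
print(np.allclose(Fhat, np.einsum('as,bs,cs->abc', W1, W2, W3)))  # True
```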

Theorem 1

(Kruskal’s criterion, [38]) Let \({\mathcal {F}} = U^{(1)} \circ U^{(2)} \circ U^{(3)}\) be a tensor with each \(U^{(i)} \in \mathbb {C}^{n_i \times r}\). Let \(\kappa _i\) be the Kruskal rank of \(U^{(i)}\), for \(i=1,2,3\). If

$$\begin{aligned} \kappa _1 + \kappa _2 + \kappa _3 \, \geqslant \, 2r + 2, \end{aligned}$$(13)

then \({\mathcal {F}}\) has a unique rank-r tensor decomposition.
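The following sketch computes Kruskal ranks by brute force over column subsets and tests Kruskal's criterion in its standard form \(\kappa _1+\kappa _2+\kappa _3 \geqslant 2r+2\). The enumeration is exponential in r, so it is only meant for small illustrations; the tolerance tol used to decide linear independence and the function names are assumptions.

```python
import itertools
import numpy as np


def kruskal_rank(A, tol=1e-10):
    """Largest k such that every set of k columns of A is linearly independent."""
    r = A.shape[1]
    k = 0
    for size in range(1, r + 1):
        if all(np.linalg.matrix_rank(A[:, list(cols)], tol=tol) == size
               for cols in itertools.combinations(range(r), size)):
            k = size
        else:
            # If some set of `size` columns is dependent, so is some larger set.
            break
    return k


def kruskal_criterion(U1, U2, U3):
    """Sufficient condition for a unique rank-r decomposition of U1 o U2 o U3."""
    r = U1.shape[1]
    return kruskal_rank(U1) + kruskal_rank(U2) + kruskal_rank(U3) >= 2 * r + 2


A = np.array([[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]])
print(kruskal_rank(A))   # 2: any two columns are independent, all three are not
```

For generic factor matrices in \(\mathbb {C}^{n_i \times r}\), the Kruskal rank is \(\min (n_i, r)\), so the criterion is mainly informative about how large r may be relative to the dimensions.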

Kruskal’s criterion can be generalized to a wider range of r, as in [39]. Assume the dimensions satisfy \(n_1 \geqslant n_2 \geqslant n_3 \geqslant 2\) and the rank r is such that

or equivalently, for \(\delta =n_2+n_3-n_1-2\), r is such that

If \(\mathcal {F}\) is a generic tensor of rank r as above in the space \(\mathbb {C}^{n_1 \times n_2 \times n_3}\), then \(\mathcal {F}\) has a unique rank-r decomposition. The following is a uniqueness result for reshaped tensor decompositions.

Theorem 2

(Reshaped Kruskal Criterion, [39, Theorem 4.6]) For the tensor space \(\mathbb {C}^{n_1 \times n_2 \times \cdots \times n_m}\) with \(m \geqslant 3\), let \(I_1 \cup I_2 \cup I_3 =\{1,2,\cdots ,m\}\) be a union of disjoint sets and let

$$\begin{aligned} p_i \, :=\, \prod _{j \in I_i} n_j, \quad i=1,2,3. \end{aligned}$$

Suppose \(p_1 \geqslant p_2 \geqslant p_3\) and let \(\delta =p_2+p_3-p_1-2\). Assume

If \(\mathcal {F}\) is a generic tensor of rank r in \(\mathbb {C}^{n_1 \times n_2 \times \cdots \times n_m}\), then the reshaped tensor \(\widehat{\mathcal {F}}\in \mathbb {C}^{p_1 \times p_2 \times p_3}\) as in (12) has a unique rank-r decomposition.

3 Generating Polynomials

Generating polynomials can be used to compute tensor rank decompositions. We consider tensors whose ranks r are not larger than the largest dimension, say, \(r\leqslant n_1\), where \(n_1\) is the largest of \(n_1, \cdots , n_m\). Denote the indeterminate vector variables

$$\begin{aligned} x_j \, :=\, (x_{j,1}, \cdots , x_{j,n_j}), \quad j=1,\cdots ,m. \end{aligned}$$

A tensor in \(\mathbb {C}^{n_1 \times \cdots \times n_m}\) can be labeled by monomials like \(x_{1,i_1}x_{2,i_2}\cdots x_{m,i_m}\). Let

For a subset \(J \subseteq \{1,2,\cdots ,m \}\), denote that

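A label tuple \((i_1,\cdots ,i_m)\) is uniquely determined by the monomial \(x_{1,i_1} \cdots x_{m,i_m}\). So a tensor \(\mathcal {F}\in \mathbb {C}^{n_1\times \cdots \times n_m}\) can be equivalently labeled by monomials such that

$$\begin{aligned} \mathcal {F}_{x_{1,i_1} x_{2,i_2} \cdots x_{m,i_m}} \, :=\, \mathcal {F}_{i_1, i_2, \cdots , i_m}. \end{aligned}$$(16)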

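With the new labeling by monomials, define the bilinear product

$$\begin{aligned} \Big \langle \sum _{\mu } c_{\mu } \mu , \, \mathcal {F}\Big \rangle \, :=\, \sum _{\mu } c_{\mu } \mathcal {F}_{\mu }. \end{aligned}$$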

In the above, each \(c_{\mu }\) is a scalar and \({\mathcal {F}}\) is labeled by monomials as in (16).

Definition 1

([40, 41]) For a subset \(J \subseteq \{1,2,\cdots ,m\}\) and a tensor \({\mathcal {F}} \in \mathbb {C}^{n_1 \times \cdots \times n_m}\), a polynomial \(p \in \mathcal {M}_J\) is called a generating polynomial for \({\mathcal {F}}\) if

The following is an example of generating polynomials.

Example 1

Consider the cubic order tensor \({\mathcal {F}} \in \mathbb {C}^{3 \times 3 \times 3}\) given as

The following is a generating polynomial for \({\mathcal {F}}\):

Note that \(p \in \mathcal {M}_{\{1,2 \}}\) and for each \(i_3 = 1, 2, 3\),

One can check that for each \(i_3 = 1, 2, 3\),

This is because \(\begin{bmatrix}4&-2&-2&1 \end{bmatrix}\) is orthogonal to

for each \(i_3=1,2,3\).

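Suppose the rank \(r \leqslant n_1\) is given. For convenience of notation, denote the label set

$$\begin{aligned} J \, :=\, \{ (i,j,k) : 1 \leqslant i \leqslant r, \ 2 \leqslant j \leqslant m, \ 2 \leqslant k \leqslant n_j \}. \end{aligned}$$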

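For a matrix \(G \in \mathbb {C}^{[r]\times J}\) and a triple \(\tau =(i,j,k) \in J\), define the bilinear polynomial

$$\begin{aligned} \phi [G,\tau ] \, :=\, \sum _{\ell =1}^r G_{\ell ,\tau } \, x_{1,\ell } \, x_{j,1} - x_{1,i} \, x_{j,k}. \end{aligned}$$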

The rows of G are labeled by \(\ell =1,2,\cdots ,r\), and the columns of G are labeled by \(\tau \in J\). We are interested in G such that \(\phi [G,\tau ]\) is a generating polynomial for a tensor \({\mathcal {F}} \in \mathbb {C}^{n_1 \times n_2 \times \cdots \times n_m}\). This requires that

The above is equivalent to the equation (\({\mathcal {F}}\) labeled as in (16))

Definition 2

([40, 41]) When (21) holds for all \(\tau \in J\), the G is called a generating matrix for \({\mathcal {F}}\).

For given G, \(j \in \{ 2, \cdots , m \}\) and \(k \in \{2,\cdots ,n_j \}\), we denote the matrix

For each (j, k), define the matrix/vector

Equation (21) is then equivalent to

The following is a useful property for the matrices \(M^{j,k}[G]\).

Theorem 3

([40, 41]) Suppose \(\mathcal {F}=\sum _{s=1}^r u^{s,1} \otimes \cdots \otimes u^{s,m}\) for vectors \(u^{s,j} \in \mathbb {C}^{n_j}\). If \(r \leqslant n_1\), \((u^{s,2})_1\cdots (u^{s,m})_1 \ne 0\), and the first r rows of the first decomposing matrix

are linearly independent, then there exists a G satisfying (24) and satisfying (for all \(j \in \{ 2, \cdots , m \}\), \(k \in \{2,\cdots ,n_j \}\) and \(s=1,\cdots ,r\))

4 Low Rank Tensor Decompositions

Without loss of generality, assume the dimensions are ordered as

$$\begin{aligned} n_1 \geqslant n_2 \geqslant \cdots \geqslant n_m. \end{aligned}$$

We discuss how to compute a tensor decomposition for a tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times \cdots \times n_m}\) when the rank r is not larger than the largest dimension, i.e., \(r \leqslant n_1\). By Theorem 3, the decomposing vectors \((u^{s,1})_{1:r}\) are common eigenvectors of the matrices \(M^{j,k}[G]\), with \((u^{s,j})_k\) being the corresponding eigenvalues. This implies that the matrices \(M^{j,k}[G]\) are simultaneously diagonalizable, a property that can be used to compute tensor decompositions.
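The eigenvector property stated in Theorem 3 can be observed numerically. This sketch treats a third-order tensor and assumes a particular layout for \(A[\mathcal {F},j]\) and \(b[\mathcal {F},j,k]\): rows are indexed by the labels of the remaining mode, columns by \(\ell \in [r]\) (respectively \(i \in [r]\)), with the jth label fixed to 1 in A and to k in b, so that the generating matrix solves \(A (M^{j,k})^T = b\). This layout is our own assumption, chosen to be consistent with Theorem 3. Indices in the code are 0-based, so code mode 2 is the paper's third mode and code level k is the paper's k+1; the function name is hypothetical.

```python
import numpy as np


def generating_matrix(F, r, j, k):
    """Least squares solution M of A M^T = b for code mode j >= 2 and level k >= 1.

    Assumed layout: A[mu, l] = F[l, ..., i_j = 0, ...] and b[mu, i] = F[i, ..., i_j = k, ...],
    where mu runs over the labels of the modes other than 0 and j.
    """
    G = np.moveaxis(F[:r], j, 1)        # shape (r, n_j, other modes...)
    A = G[:, 0].reshape(r, -1).T        # N_j x r
    b = G[:, k].reshape(r, -1).T        # N_j x r
    return np.linalg.lstsq(A, b, rcond=None)[0].T


rng = np.random.default_rng(0)
U1, U2, U3 = (rng.standard_normal((n, 3)) for n in (5, 4, 3))
F = np.einsum('is,js,ks->ijk', U1, U2, U3)
r = 3

# Theorem 3: column s of U1[:r] is an eigenvector of M with eigenvalue U3[k, s] / U3[0, s]
# (this ratio is the paper's (u^{s,3})_k once u^{s,3} is scaled to have first entry 1).
Ms = [generating_matrix(F, r, 2, k) for k in (1, 2)]
for k, M in zip((1, 2), Ms):
    print(np.allclose(M @ U1[:r], U1[:r] * (U3[k] / U3[0])))   # True

# A generic combination of the commuting matrices diagonalizes all of them at once.
xi = rng.standard_normal(len(Ms))
_, P = np.linalg.eig(sum(x * M for x, M in zip(xi, Ms)))
Pinv = np.linalg.inv(P)
offdiag = [np.linalg.norm(D - np.diag(np.diag(D))) for D in (Pinv @ M @ P for M in Ms)]
print(max(offdiag) < 1e-8)   # True
```

A generic combination is used because a single \(M^{j,k}[G]\) may have repeated eigenvalues, whereas a random combination has distinct eigenvalues as long as no two rank-1 components share all of their eigenvalues.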

Suppose G is a matrix such that (24) holds and the matrices \(M^{j,k}[G]\) are simultaneously diagonalizable. That is, there is an invertible matrix \(P \in \mathbb {C}^{r \times r}\) such that the products \(P^{-1} M^{j,k}[G] P\) are diagonal for all \(j=2,\cdots , m\) and all \(k = 2, \cdots , n_j\). Suppose \(M^{j,k}[G]\) are diagonalized such that

$$\begin{aligned} P^{-1} M^{j,k}[G] P \, = \, \hbox {diag}(\lambda _{j,k,1}, \cdots , \lambda _{j,k,r}), \end{aligned}$$(26)

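with the eigenvalues \(\lambda _{j,k,s}\). For each \(s =1,\cdots ,r\) and \(j=2,\cdots , m\), denote the vectors

$$\begin{aligned} u^{s,j} \, :=\, (1, \lambda _{j,2,s}, \cdots , \lambda _{j,n_j,s}). \end{aligned}$$(27)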

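When \(\mathcal {F}\) has rank r, there exist vectors \(u^{1,1}, \cdots , u^{r,1} \in \mathbb {C}^{n_1}\) such that

$$\begin{aligned} \mathcal {F}\, = \, \sum _{s=1}^r u^{s,1} \otimes u^{s,2} \otimes \cdots \otimes u^{s,m}. \end{aligned}$$(28)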

The vectors \(u^{s,1}\) can be found by solving linear equations after \(u^{s,j}\) are obtained for \(j=2,\cdots ,m\) and \(s=1,\cdots ,r\). The existence of vectors \(u^{s,1}\) satisfying tensor decomposition (28) is shown in the following theorem.

Theorem 4

Let \({\mathcal {F}} =V^{(1)} \circ \cdots \circ V^{(m)}\) be a rank-r tensor, for matrices \(V^{(i)} \in \mathbb {C}^{n_i \times r}\), such that the first r rows of \(V^{(1)}\) are linearly independent. Suppose G is a matrix satisfying (24) and \(P \in \mathbb {C}^{r \times r}\) is an invertible matrix such that all matrix products \(P^{-1} \cdot M^{j,k}[G] \cdot P\) are simultaneously diagonalized as in (26). For \(j=2,\cdots ,m\) and \(s=1,\cdots , r\), let \(u^{s,j}\) be vectors given as in (27). Then, there must exist vectors \(u^{1,1}, \cdots , u^{r,1} \in \mathbb {C}^{n_1}\) such that tensor decomposition (28) holds.

Proof

Since the matrix \(P = \begin{bmatrix} p_1&\cdots&p_r \end{bmatrix}\) is invertible, there exist scalars \(c_1,\cdots , c_r \in \mathbb {C}\) such that

Consider the new tensor

In the following, we show that \(\mathcal {F}_{1:r,:,\cdots ,:} = \mathcal {H}\) and there exist vectors \(u^{1,1}, \cdots , u^{r,1} \in \mathbb {C}^{n_1}\) satisfying equation (28).

By Theorem 3, one can see that the generating matrix G for \(\mathcal {F}\) is also a generating matrix for \(\mathcal {H}\), so it holds that

Therefore, we have

By (29), one can see that

In (31), for each \(\tau = (i, 2, k) \in J\) and \(p = 1\), we can get

Similarly, for \(\tau = (i, 2, k) \in J\) and \(p=x_{3,j_3}\), we can get

Continuing this, we can eventually get \(\mathcal {H}= \mathcal {F}_{1:r,:,:,\cdots , :}\). Since the matrix \((V^{(1)})_{1:r,:}\) is invertible, there exists a matrix \(W \in \mathbb {C}^{n_1\times r} \) such that \(V^{(1)} = W (V^{(1)})_{1:r,:}.\) Observe that

Let \(u^{s,1}=W \cdot (c_sp_s)\) for \(s=1,\cdots ,r\). Then tensor decomposition (28) holds.

4.1 An Algorithm for Computing Tensor Decompositions

Consider a tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times n_2 \times \cdots \times n_m}\) with a given rank r. Recall that the dimensions are ordered such that \(n_1 \geqslant n_2 \geqslant \cdots \geqslant n_m\). We discuss how to compute a rank-r tensor decomposition for \(\mathcal {F}\). Recall \(A[{\mathcal {F}},j]\), \(b[{\mathcal {F}},j,k]\) as in (23), for \(j > 1\). Note that \(A[{\mathcal {F}},j]\) has dimension \(N_j \times r\), where

$$\begin{aligned} N_j \, :=\, \frac{n_2 n_3 \cdots n_m}{n_j}. \end{aligned}$$(33)

If \(r \leqslant N_j\), then the matrices \(A[{\mathcal {F}},j]\) have full column rank in generic cases. For instance, \(r \leqslant N_3\) if \(m=3\) and \(r \leqslant n_2\). Since \(N_2\) is the smallest, we often use the matrices \(A[{\mathcal {F}},j]\) for \(j \geqslant 3\). For convenience, denote the label set

$$\begin{aligned} \Upsilon \, :=\, \{ (j,k) : 3 \leqslant j \leqslant m, \ 2 \leqslant k \leqslant n_j \}. \end{aligned}$$(34)

In the following, we consider the case that \(r \leqslant N_3\). For each pair \((j,k) \in \Upsilon \), linear system (24) has a unique solution, which we denote as

$$\begin{aligned} Y^{j,k} \, :=\, M^{j,k}[G]. \end{aligned}$$(35)

For \(j=2\), equation (24) may not have a unique solution if \(r > N_2\). In the following, we show how to get the tensor decomposition without using the matrices \(M^{2,k}[G]\). By Theorem 3, the matrices \(Y^{j,k}\) are simultaneously diagonalizable, that is, there is an invertible matrix \(P \in \mathbb {C}^{r \times r}\) such that all products \(P^{-1} Y^{j,k} P\) are diagonal for every \((j,k) \in \Upsilon \). Suppose they are diagonalized as

$$\begin{aligned} P^{-1} Y^{j,k} P \, = \, \hbox {diag}(\lambda _{j,k,1}, \cdots , \lambda _{j,k,r}), \quad (j,k) \in \Upsilon , \end{aligned}$$

with the eigenvalues \(\lambda _{j,k,s}\). Write P in the column form

$$\begin{aligned} P \, = \, \begin{bmatrix} p_1&\cdots&p_r \end{bmatrix}. \end{aligned}$$

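For each \(s =1,\cdots ,r\) and \(j=3,\cdots , m\), let

$$\begin{aligned} v^{s,j} \, :=\, (1, \lambda _{j,2,s}, \cdots , \lambda _{j,n_j,s}). \end{aligned}$$(36)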

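Suppose \({\mathcal {F}}\) has a rank-r decomposition

$$\begin{aligned} \mathcal {F}\, = \, \sum _{s=1}^r u^{s,1} \otimes u^{s,2} \otimes \cdots \otimes u^{s,m}. \end{aligned}$$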

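Under the assumptions of Theorem 3, linear system (24) has a unique solution for each pair \((j,k) \in \Upsilon \). For every \(j \in \{3,\cdots ,m\}\), there exist scalars \(c_{s,j},c_{s,1}\) such that

$$\begin{aligned} u^{s,j} \, = \, c_{s,j} \, v^{s,j}, \quad (u^{s,1})_{1:r} \, = \, c_{s,1} \, p_s, \quad s=1,\cdots ,r. \end{aligned}$$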

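Then, we consider the sub-tensor equation in the vector variables \(y_1, \cdots , y_r \in \mathbb {C}^{n_2}\)

$$\begin{aligned} \mathcal {F}_{1:r,:,\cdots ,:} \, = \, \sum _{s=1}^r p_s \otimes y_s \otimes v^{s,3} \otimes \cdots \otimes v^{s,m}. \end{aligned}$$(37)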

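There are \(r n_2 \cdots n_m\) equations and \(rn_2\) unknowns. This overdetermined linear system has solutions such that

$$\begin{aligned} y_s \, = \, c_{s,1} c_{s,3} \cdots c_{s,m} \, u^{s,2}, \quad s=1,\cdots ,r. \end{aligned}$$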

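After all \(y_s\) are obtained, we solve the linear equation in \(z_1, \cdots , z_r \in \mathbb {C}^{n_1-r}\)

$$\begin{aligned} \mathcal {F}_{r+1:n_1,:,\cdots ,:} \, = \, \sum _{s=1}^r z_s \otimes y_s \otimes v^{s,3} \otimes \cdots \otimes v^{s,m}. \end{aligned}$$(38)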

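After all \(y_s, z_s\) are obtained, we choose the vectors (\(s=1,\cdots ,r\))

$$\begin{aligned} v^{s,1} \, :=\, \begin{bmatrix} p_s \\ z_s \end{bmatrix}, \quad v^{s,2} \, :=\, y_s. \end{aligned}$$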

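Then we get the tensor decomposition

$$\begin{aligned} \mathcal {F}\, = \, \sum _{s=1}^r v^{s,1} \otimes v^{s,2} \otimes \cdots \otimes v^{s,m}. \end{aligned}$$(39)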

Summarizing the above, we get the following algorithm for computing tensor decompositions when \(r\leqslant n_1\) and \(r \leqslant N_3\). Suppose the dimensions are ordered such that \(n_1 \geqslant n_2 \geqslant \cdots \geqslant n_m\).

Algorithm 1

(Rank-r tensor decomposition)

- Input: A tensor \({\mathcal {F}}\in \mathbb {C}^{n_1 \times \cdots \times n_m}\) with rank \(r \leqslant \min (n_1, N_3)\).

- Step 1: For each pair \((j,k) \in \Upsilon \), solve the matrix equation for the solution \(Y^{j,k}\):

$$\begin{aligned} A[\mathcal {F},j] (Y^{j,k})^T \, = \, b[{\mathcal {F}},j,k]. \end{aligned}$$(40)

- Step 2: Choose generic scalars \(\xi _{j,k} \). Then compute the eigenvalue decomposition \(P^{-1} Y P = D\) for the matrix

$$\begin{aligned} Y \, :=\, \frac{1}{\sum \limits _{ (j,k) \in \Upsilon } \xi _{j,k}} \sum \limits _{ (j,k) \in \Upsilon } \xi _{j,k} Y^{j,k}, \end{aligned}$$

and write \(P = \begin{bmatrix} p_1&\cdots&p_r \end{bmatrix}\).

- Step 3: For \(s = 1, \cdots , r\) and \(j \geqslant 3\), let \(v^{s,j}\) be the vectors as in (36), where \(\lambda _{j,k,s}\) are the diagonal entries of \(P^{-1} Y^{j,k} P\).

- Step 4: Solve linear system (37) for vectors \(y_1, \cdots , y_r\).

- Step 5: Solve linear system (38) for vectors \(z_1, \cdots , z_r\).

- Step 6: For each \(s = 1, \cdots , r\), let \(v^{s,1} = \begin{bmatrix} p_s \\ z_s \end{bmatrix}\) and \(v^{s,2} = y_s\).

- Output: A rank-r tensor decomposition as in (39).
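The steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: modes and labels are 0-based, the dimensions are assumed sorted as \(n_1 \geqslant \cdots \geqslant n_m\) with \(m \geqslant 3\), and the layout of \(A[\mathcal {F},j]\) and \(b[\mathcal {F},j,k]\) (rows indexed by the labels of the modes other than 1 and j, columns by \(\ell \in [r]\), with the jth label fixed to 1 in A and to k in b) is our own assumption, chosen so that \(Y^{j,k}\) solves \(A[\mathcal {F},j](Y^{j,k})^T = b[\mathcal {F},j,k]\) and has the eigenvectors described in Theorem 3. The names compose and gp_decompose are hypothetical. Because every linear solve uses np.linalg.lstsq, the same function also serves as a sketch of the least squares variant (Algorithm 3) for tensors that are only close to rank r.

```python
import numpy as np
from functools import reduce


def compose(factors):
    """Sum over s of the outer products of the s-th columns of the factor matrices."""
    return sum(reduce(np.multiply.outer, [U[:, s] for U in factors])
               for s in range(factors[0].shape[1]))


def gp_decompose(F, r, rng):
    """Sketch of Algorithm 1 with 0-based modes; assumes n_1 >= ... >= n_m and m >= 3.

    All linear solves use least squares, so on inexact data it mimics Algorithm 3.
    """
    n, m = F.shape, F.ndim
    # Step 1: Y^{j,k} for (j,k) in Upsilon (code modes j >= 2, levels k >= 1),
    # solving A[F,j] (Y^{j,k})^T = b[F,j,k] under the assumed layout of A and b.
    Ys = {}
    for j in range(2, m):
        G = np.moveaxis(F[:r], j, 1)          # shape (r, n_j, other modes...)
        A = G[:, 0].reshape(r, -1).T          # N_j x r, label j fixed to its first value
        for k in range(1, n[j]):
            b = G[:, k].reshape(r, -1).T      # N_j x r, label j fixed to k
            Ys[j, k] = np.linalg.lstsq(A, b, rcond=None)[0].T
    # Step 2: eigenvectors of a generic combination give P = [p_1 ... p_r].
    xi = rng.standard_normal(len(Ys))
    Y = sum(x * M for x, M in zip(xi, Ys.values())) / xi.sum()
    _, P = np.linalg.eig(Y)
    Pinv = np.linalg.inv(P)
    # Step 3: column s of V[j] is v^{s,j} = (1, lambda_{j,2,s}, ..., lambda_{j,n_j,s}).
    V = {j: np.vstack([np.ones(r)] + [np.diag(Pinv @ Ys[j, k] @ P) for k in range(1, n[j])])
         for j in range(2, m)}
    W = [reduce(np.kron, [V[j][:, s] for j in range(2, m)]) for s in range(r)]
    # Step 4: F[:r] = sum_s p_s (x) y_s (x) v^{s,3} (x) ... (x) v^{s,m} is linear in y_s.
    K = np.column_stack([np.kron(P[:, s], W[s]) for s in range(r)])
    T = np.moveaxis(F[:r], 1, 0).reshape(n[1], -1)
    Ymat = np.linalg.lstsq(K, T.T, rcond=None)[0].T                  # n_2 x r
    # Step 5: F[r:] = sum_s z_s (x) y_s (x) v^{s,3} (x) ... (x) v^{s,m} is linear in z_s.
    L = np.column_stack([np.kron(Ymat[:, s], W[s]) for s in range(r)])
    rhs = F[r:].reshape(n[0] - r, int(np.prod(n[1:]))).T
    Z = np.linalg.lstsq(L, rhs, rcond=None)[0].T                      # (n_1 - r) x r
    # Step 6: v^{s,1} = [p_s; z_s] and v^{s,2} = y_s.
    return [np.vstack([P, Z]), Ymat] + [V[j] for j in range(2, m)]


# Usage: an exact rank-3 tensor with r = 3 <= min(n_1, N_3) = min(5, 4).
rng = np.random.default_rng(0)
U = [rng.standard_normal((n, 3)) for n in (5, 4, 3)]
F = compose(U)
X = compose(gp_decompose(F, 3, rng))
print(np.linalg.norm(X - F))            # at round-off level for this exact rank-3 tensor

E = rng.standard_normal(F.shape)
Fe = F + 1e-8 * E / np.linalg.norm(E)   # a tensor at distance 1e-8 from a rank-3 tensor
Xe = compose(gp_decompose(Fe, 3, rng))
print(np.linalg.norm(Xe - Fe))          # small, in line with the O(epsilon) bound of Theorem 6
```

The generic scalars \(\xi _{j,k}\) of Step 2 are drawn from a standard normal distribution here, which makes coinciding eigenvalues in Step 2 a probability-zero event for generic inputs.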

The correctness of Algorithm 1 is justified as follows.

Theorem 5

Suppose \(n_1 \geqslant n_2 \geqslant \cdots \geqslant n_m\) and \(r \leqslant \min (n_1, N_3)\) as in (33). For a generic tensor \(\mathcal {F}\) of rank r, Algorithm 1 produces a rank-r tensor decomposition for \(\mathcal {F}\).

Proof

This follows from Theorem 4.

4.2 Tensor Decompositions Via Reshaping

A tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times \cdots \times n_m}\) can be reshaped as a cubic order tensor \(\widehat{\mathcal {F}}\) as in (12). One can apply Algorithm 1 to compute tensor decomposition (12) for \(\widehat{\mathcal {F}}\). If the decomposing vectors \(w^{s,1}, w^{s,2}, w^{s,3}\) can be reshaped to rank-1 tensors, then we can convert (12) to a tensor decomposition for \(\mathcal {F}\). This is justified by Theorem 2, under some assumptions. A benefit of doing this is that we may be able to compute tensor decompositions for the case that

with the dimension \(p_2\) as in Theorem 2. This leads to the following algorithm for computing tensor decompositions.

Algorithm 2

(Tensor decompositions via reshaping.) Let \(p_1, p_2, p_3\) be dimensions as in Theorem 2.

- Input: A tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times \cdots \times n_m}\) with rank \(r \leqslant p_2\).

- Step 1: Reshape the tensor \(\mathcal {F}\) to a cubic tensor \(\widehat{\mathcal {F}} \in \mathbb {C}^{p_1 \times p_2 \times p_3}\) as in (12).

- Step 2: Use Algorithm 1 to compute the tensor decomposition

$$\begin{aligned} \widehat{\mathcal {F}} \, = \, \sum _{s=1}^r w^{s,1}\otimes w^{s,2} \otimes w^{s,3} . \end{aligned}$$(41)

- Step 3: If all \(w^{s,1}, w^{s,2}, w^{s,3}\) can be reshaped to rank-1 tensors as in (11), then output the corresponding tensor decomposition as in (3). If one of \(w^{s,1}, w^{s,2}, w^{s,3}\) cannot be expressed as in (11), then the reshaping does not produce a tensor decomposition for \(\mathcal {F}\).

- Output: A tensor decomposition for \(\mathcal {F}\) as in (3).

For Algorithm 2, a conclusion similar to Theorem 5 holds. For brevity, we do not repeat it here.

5 Low Rank Tensor Approximations

When a tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times \cdots \times n_m}\) has rank greater than r, the linear systems in Algorithm 1 may be inconsistent. However, we can still compute their linear least squares solutions. This gives an algorithm for computing low rank tensor approximations. Recall the label set \(\Upsilon \) as in (34). The algorithm is as follows.

Algorithm 3

(Rank-r tensor approximation.)

- Input: A tensor \({\mathcal {F}}\in \mathbb {C}^{n_1 \times n_2 \times \cdots \times n_m} \) and a rank \(r \leqslant \min (n_1, N_3)\).

- Step 1: For each pair \((j,k) \in \Upsilon \), solve the linear least squares problem

$$\begin{aligned} \min \limits _{ Y^{j,k} \in \mathbb {C}^{r \times r} } \quad \bigg \Vert A[{\mathcal {F}},j] (Y^{j,k} )^T - b[{\mathcal {F}},j,k] \bigg \Vert ^2 . \end{aligned}$$(42)

Let \(\hat{Y}^{j,k}\) be an optimizer.

- Step 2: Choose generic scalars \(\xi _{j,k}\) and let

$$\begin{aligned} {\hat{Y}}[\xi ] \, = \, \frac{1}{\sum \limits _{ (j,k) \in \Upsilon } \xi _{j,k}} \sum \limits _{ (j,k) \in \Upsilon } \xi _{j,k} {\hat{Y}}^{j,k} . \end{aligned}$$

Compute the eigenvalue decomposition \({\hat{P}}^{-1} \hat{Y}[\xi ] \hat{P} = \Lambda \) such that \(\hat{P} = \begin{bmatrix} \hat{p}_1&\cdots&\hat{p}_r \end{bmatrix}\) is invertible and \(\Lambda \) is diagonal.

- Step 3: For each pair \((j,k) \in \Upsilon \), select the diagonal entries

$$\begin{aligned} \hbox {diag}[\hat{\lambda }_{j,k,1} \,\, \hat{\lambda }_{j,k,2} \,\, \cdots \,\, \hat{\lambda }_{j,k,r} ] \, = \, \hbox {diag} (\hat{P}^{-1} \hat{Y}^{j,k} \hat{P}). \end{aligned}$$

For each \(s=1,\cdots ,r\) and \(j= 3, \cdots , m\), let

$$\begin{aligned} \hat{v}^{s,j} = (1, \hat{\lambda }_{j,2,s}, \cdots , \hat{\lambda }_{j,n_j,s} ). \end{aligned}$$

- Step 4: Let \((\hat{y}_1, \cdots , \hat{y}_r)\) be an optimizer for the following least squares problem:

$$\begin{aligned} \min \limits _{ (y_1, \cdots , y_r) } \quad \bigg \Vert \mathcal {F}_{1:r, :, \cdots , :} - \sum _{s=1}^r \hat{p}_s \otimes y_s \otimes \hat{v}^{s,3} \otimes \cdots \otimes \hat{v}^{s,m} \bigg \Vert ^2. \end{aligned}$$(43)

- Step 5: Let \((\hat{z}_1, \cdots , \hat{z}_r)\) be an optimizer for the following least squares problem:

$$\begin{aligned} \min \limits _{ (z_1, \cdots , z_r) } \quad \bigg \Vert \mathcal {F}_{r+1:n_1, :, \cdots , :} - \sum _{s=1}^r z_s \otimes \hat{y}_s \otimes \hat{v}^{s,3} \otimes \cdots \otimes \hat{v}^{s,m} \bigg \Vert ^2. \end{aligned}$$(44)

- Step 6: Let \(\hat{v}^{s,1} = \begin{bmatrix} \hat{p}_s \\ \hat{z}_s \end{bmatrix}\) and \(\hat{v}^{s,2} = \hat{y}_s\) for each \(s=1,\cdots , r\).

- Output: A rank-r approximating tensor

$$\begin{aligned} \mathcal {X}^{gp} \, :=\, \sum _{s=1}^r \hat{v}^{s,1} \otimes \hat{v}^{s,2} \otimes \cdots \otimes \hat{v}^{s,m}. \end{aligned}$$(45)

If \({\mathcal {F}}\) is sufficiently close to a rank-r tensor, then \(\mathcal {X}^{gp}\) is expected to be a good rank-r approximation. Mathematically, the tensor \(\mathcal {X}^{gp}\) produced by Algorithm 3 may not be a best rank-r approximation. However, in computational practice, we can use (45) as a starting point to solve the nonlinear least squares optimization

$$\begin{aligned} \min _{v^{s,j} \in \mathbb {C}^{n_j}} \quad \bigg \Vert \mathcal {F}- \sum _{s=1}^r v^{s,1} \otimes \cdots \otimes v^{s,m} \bigg \Vert ^2 \end{aligned}$$(46)

to improve the approximation quality. Let \(\mathcal {X}^{opt}\) be a rank-r approximation tensor

which is an optimizer of (46) obtained by nonlinear optimization methods with \(\mathcal {X}^{gp}\) as the initial point.
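Any local method for (46) can be started from \(\mathcal {X}^{gp}\). As one hedged example, the sketch below runs plain alternating least squares (ALS), one of the classical methods cited in the Introduction, for third-order tensors; it is not necessarily the nonlinear optimization method intended here, and the function names and the fixed number of sweeps are assumptions. Each update solves a linear least squares problem exactly in one factor matrix, so the residual never increases.

```python
import numpy as np


def khatri_rao(B, C):
    """Column-wise Kronecker product: column s equals kron(B[:, s], C[:, s])."""
    return np.einsum('is,js->ijs', B, C).reshape(-1, B.shape[1])


def residual(F, U1, U2, U3):
    """Hilbert-Schmidt distance between F and U1 o U2 o U3."""
    return np.linalg.norm(F - np.einsum('is,js,ks->ijk', U1, U2, U3))


def als_refine(F, factors, sweeps=50):
    """Refine a rank-r approximation of a third-order tensor F by ALS sweeps."""
    U1, U2, U3 = (np.array(U, dtype=complex) for U in factors)
    n1, n2, n3 = F.shape
    F1 = F.reshape(n1, -1)                       # rows i, columns (j, k)
    F2 = np.moveaxis(F, 1, 0).reshape(n2, -1)    # rows j, columns (i, k)
    F3 = np.moveaxis(F, 2, 0).reshape(n3, -1)    # rows k, columns (i, j)
    for _ in range(sweeps):
        # Each line is an exact least squares solve in one factor, others fixed.
        U1 = np.linalg.lstsq(khatri_rao(U2, U3), F1.T, rcond=None)[0].T
        U2 = np.linalg.lstsq(khatri_rao(U1, U3), F2.T, rcond=None)[0].T
        U3 = np.linalg.lstsq(khatri_rao(U1, U2), F3.T, rcond=None)[0].T
    return U1, U2, U3


# Usage: a noisy rank-3 tensor and a perturbed starting point standing in for X^{gp}.
rng = np.random.default_rng(0)
U = [rng.standard_normal((n, 3)) for n in (5, 4, 3)]
F = np.einsum('is,js,ks->ijk', *U) + 1e-3 * rng.standard_normal((5, 4, 3))
start = [u + 1e-2 * rng.standard_normal(u.shape) for u in U]
print(residual(F, *start), residual(F, *als_refine(F, start)))
```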

5.1 Approximation Error Analysis

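Suppose the tensor \(\mathcal {F}\) has a best (or nearly best) rank-r approximation

$$\begin{aligned} \mathcal {X}^{bs} \, :=\, \sum _{s=1}^r x^{s,1} \otimes \cdots \otimes x^{s,m}. \end{aligned}$$(48)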

Let \(\mathcal {E}\) be the tensor such that

$$\begin{aligned} \mathcal {F}\, = \, \mathcal {X}^{bs} + \mathcal {E}. \end{aligned}$$(49)

We analyze the approximation performance of \(\mathcal {X}^{gp}\) when the distance \(\epsilon = \Vert \mathcal {E}\Vert \) is small. For a generating matrix G and a generic \(\xi = (\xi _{j,k})_{(j,k) \in \Upsilon }\), denote

$$\begin{aligned} M[\xi ,G] \, :=\, \sum _{(j,k) \in \Upsilon } \xi _{j,k} \, M^{j,k}[G]. \end{aligned}$$(50)

Recall the \(A[{\mathcal {F}},j]\), \(b[{\mathcal {F}},j,k]\) as in (23). Note that

Suppose \(\left( x^{s, j}\right) _{1} \ne 0\) for \(j=2, \cdots , m \).

Theorem 6

Let \(\mathcal {X}^{gp}\) be produced by Algorithm 3. Let \({\mathcal {F}},\mathcal {X}^{bs},\mathcal {X}^{opt},\mathcal {E},x^{s,j},\xi _{j,k}\) be as above. Assume the following conditions hold:

- (i) The subvectors \((x^{1,1})_{1:r}, \cdots , (x^{r,1})_{1:r}\) are linearly independent.

- (ii) All matrices \(A[ {\mathcal {F}},j ]\) and \(A[ \mathcal {X}^{bs},j ]\) (\(3 \leqslant j \leqslant m\)) have full column rank.

- (iii) The first entries \(\left( x^{s, j}\right) _{1} \ne 0\) for all \(s=1,\cdots ,r\) and \(j=2, \cdots , m \).

- (iv) The following scalars are pairwise distinct:

$$\begin{aligned} \sum _{ (j,k) \in \Upsilon } \xi _{j, k}(x^{1,j})_k, \cdots , \sum _{ (j,k) \in \Upsilon } \xi _{j ,k}(x^{r,j})_k . \end{aligned}$$(52)

If the distance \(\epsilon = \Vert \mathcal {F}- \mathcal {X}^{bs} \Vert \) is sufficiently small, then

where the constants in the above \(O(\cdot )\) only depend on \({\mathcal {F}}\) and \(\xi \).

Proof

By conditions (i) and (iii) and by Theorem 3, there exists a generating matrix \(G^{bs}\) for \(\mathcal {X}^{bs}\) such that

for all \(j \in \{2,\cdots ,m\}\) and \(k \in \{2,\cdots ,n_j\}\). Note that \(\hat{Y}^{j,k}\) is the least squares solution to (42), so for each \((j,k) \in \Upsilon \),

(The superscript \(^\dagger \) denotes the pseudoinverse of a matrix.) By (49), for \(j=2,\cdots ,m\), we have

Hence, by condition (ii), if \(\epsilon >0\) is small enough, we have

for all \((j,k) \in \Upsilon \). This follows from perturbation analysis for linear least squares (see [42, Theorem 3.4]).

By (48) and Theorem 3, for \(s=1, \cdots , r\) and \((j,k) \in \Upsilon \), it holds that

This means that each \(\left( x^{s, 1}\right) _{1: r}\) is an eigenvector of \(M^{j, k}[G^{bs}]\), associated with the eigenvalue \(\left( x^{s, j}\right) _{k}\), for each \(s=1, \cdots , r\). By condition (i), the matrices \(M^{j, k}[G^{bs}]\) are simultaneously diagonalizable, so \(M[\xi ,G^{bs}]\) is also diagonalizable. The eigenvalues of \(M[\xi ,G^{bs}]\) are the sums in (52), which are pairwise distinct by condition (iv). Hence, when \(\epsilon >0\) is small enough, \(\hat{Y}[\xi ]\) also has distinct eigenvalues. Write

$$\begin{aligned} Q \, :=\, \begin{bmatrix} (x^{1,1})_{1:r}&\cdots&(x^{r,1})_{1:r} \end{bmatrix}, \quad D \, :=\, \hbox {diag}\Big ( \sum _{ (j,k) \in \Upsilon } \xi _{j, k}(x^{1,j})_k, \cdots , \sum _{ (j,k) \in \Upsilon } \xi _{j ,k}(x^{r,j})_k \Big ). \end{aligned}$$

Note that \(Q^{-1} M[\xi ,G^{bs}]Q = D\) is an eigenvalue decomposition. Up to a scaling of the columns of \(\hat{P}\) in Algorithm 3, it holds that

We refer to [43] for the perturbation bounds in (57). The constants in the above \(O(\cdot )\) eventually only depend on \(\mathcal {F},\xi \).

Note that \((\hat{y}_1,\cdots , \hat{y}_r)\) is the least squares solution to (43) and

Due to perturbation analysis of linear least squares, we also have

Note that the subvectors \((x^{s,1})_{r+1:n_1}\) satisfy the equation

Recall that \((\hat{z}_1, \cdots , \hat{z}_r)\) is the least squares solution to (44). Due to perturbation analysis of linear least squares, we further have the error bound

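Summarizing the above, we eventually get \(\Vert \mathcal {X}^{g p}-\mathcal {X}^{bs} \Vert =O(\epsilon )\), so

$$\begin{aligned} \Vert \mathcal {F}- \mathcal {X}^{gp} \Vert \, \leqslant \, \Vert \mathcal {F}- \mathcal {X}^{bs} \Vert + \Vert \mathcal {X}^{bs} - \mathcal {X}^{gp} \Vert \, = \, O(\epsilon ). \end{aligned}$$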

The constant for the above \(O(\cdot )\) eventually only depends on \(\mathcal {F}\), \(\xi \).

5.2 Reshaping for Low Rank Approximations

Similar to tensor decompositions, the reshaping trick in Sect. 4.2 can also be used for computing low rank tensor approximations. For \(m>3\), a tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times n_2 \times \cdots \times n_m}\) can be reshaped as a cubic tensor \(\widehat{\mathcal {F}} \in \mathbb {C}^{p_1 \times p_2 \times p_3}\) as in (12), and Algorithm 3 can then be applied to \(\widehat{\mathcal {F}}\). Suppose the computed rank-r approximating tensor for \(\widehat{\mathcal {F}}\) is

$$\begin{aligned} \widehat{\mathcal {X}}^{gp} \, = \, \sum _{s=1}^r \hat{w}^{s,1} \otimes \hat{w}^{s,2} \otimes \hat{w}^{s,3}. \end{aligned}$$(62)

In general, reshaping the decomposing vectors \(\hat{w}^{s,1}, \hat{w}^{s,2},\hat{w}^{s,3}\) does not give rank-1 tensors. Suppose the reshaping is such that \(I_1 \cup I_2 \cup I_3 =\{1,2,\cdots ,m\}\) is a union of disjoint label sets and the reshaped dimensions are

Let \(m_i = |I_i|\) for \(i=1,2,3\). Reversing the reshaping, each vector \(\hat{w}^{s,i}\) can be reshaped back to a tensor \(\hat{W}^{s,i}\) of order \(m_i\).

- If \(m_i = 1\), \(\hat{W}^{s,i}\) is a vector.
- If \(m_i = 2\), the best rank-1 matrix approximation of \(\hat{W}^{s,i}\) is given by its leading singular triple.
- If \(m_i \geqslant 3\), we can apply Algorithm 3 with \(r=1\) to get a rank-1 approximation of \(\hat{W}^{s,i}\).

In applications, we are mostly interested in reshapings for which all \(m_i \leqslant 2\). Combining these rank-1 approximations produces a rank-r approximation for \(\mathcal {F}\).
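For the case \(m_i = 2\), the Eckart-Young theorem gives the best rank-1 approximation in the Hilbert-Schmidt (Frobenius) norm: it is the leading singular triple of the matrix. The remaining error equals the norm of the discarded singular values.

A minimal NumPy sketch follows. The matrix `W` is a hypothetical stand-in for \(\hat{W}^{s,i}\), and the approximation is written as a non-conjugated outer product \(x \otimes y\), matching the tensor notation used here.

```python
import numpy as np

def best_rank1(W):
    """Best rank-1 approximation outer(x, y) of a (complex) matrix W.

    By the Eckart-Young theorem, the leading singular triple of W
    minimizes ||W - x y^T|| in the Frobenius norm.
    """
    U, s, Vh = np.linalg.svd(W)
    x = s[0] * U[:, 0]
    y = Vh[0, :]            # W is approximated by np.outer(x, y), y not conjugated
    return x, y

rng = np.random.default_rng(1)
a = rng.standard_normal(5) + 1j * rng.standard_normal(5)
b = rng.standard_normal(4) + 1j * rng.standard_normal(4)
W = np.outer(a, b) + 1e-3 * rng.standard_normal((5, 4))   # nearly rank-1

x, y = best_rank1(W)
err = np.linalg.norm(W - np.outer(x, y))
sv = np.linalg.svd(W, compute_uv=False)
tail = np.sqrt(np.sum(sv[1:] ** 2))   # norm of the discarded singular values
print(err, tail)                      # the two numbers agree
```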

The following is a low rank tensor approximation algorithm via reshaping tensors.

Algorithm 4

(low rank tensor approximations via reshaping.)

- Input: A tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times n_2 \times \cdots \times n_m}\) and a rank r.

- Step 1: Reshape \(\mathcal {F}\) to a cubic order tensor \(\widehat{\mathcal {F}} \in \mathbb {C}^{p_1 \times p_2 \times p_3}\).

- Step 2: Use Algorithm 3 to compute a rank-r approximating tensor \(\widehat{\mathcal {X}}^{gp}\) for \(\widehat{\mathcal {F}}\), as in (62).

- Step 3: For each \(s=1,\cdots ,r\) and \(i=1,2,3\), reshape the vector \(\hat{w}^{s,i}\) back to a tensor \(\widehat{W}^{s,i}\) of order \(m_i\), as above.

- Step 4: For each \(s=1,\cdots ,r\) and \(i=1,2,3\), compute a rank-1 approximating tensor \(\widehat{X}^{s,i}\) for \(\widehat{W}^{s,i}\), as above.

- Output: Reshape the sum \(\sum \limits _{s=1}^r \widehat{X}^{s,1} \otimes \widehat{X}^{s,2} \otimes \widehat{X}^{s,3}\) to a tensor in \(\mathbb {C}^{n_1 \times n_2 \times \cdots \times n_m}\). This is a rank-r approximation for \(\mathcal {F}\).
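The NumPy sketch below walks through Steps 1, 3 and 4 and the output step of Algorithm 4. It makes three assumptions that are not from the text:

- It uses the hypothetical grouping \(I_1=\{1,2\}\), \(I_2=\{3,4\}\), \(I_3=\{5\}\).
- It uses row-major (C-order) reshaping, which may differ from the ordering in (12).
- Algorithm 3 is not reproduced, so the exact decomposing vectors of the reshaped tensor stand in for the output of Step 2.

For an exactly low rank input, each \(\hat{w}^{s,1}\) is then a Kronecker product of two vectors. Reshaping it back therefore gives an exactly rank-1 matrix, and the output recovers \(\mathcal {F}\).

```python
import numpy as np

rng = np.random.default_rng(2)
dims = (3, 4, 2, 3, 5)   # hypothetical n_1, ..., n_5
r = 2

def crandn(*shape):
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

def best_rank1(W):
    # Leading singular triple: W is approximated by np.outer(x, y).
    U, s, Vh = np.linalg.svd(W)
    return s[0] * U[:, 0], Vh[0, :]

# A rank-r tensor F = sum_s v^{s,1} ⊗ ... ⊗ v^{s,5}.
V = [crandn(n, r) for n in dims]
F = np.einsum('as,bs,cs,ds,es->abcde', *V)

# Step 1: C-order reshape with I1 = {1,2}, I2 = {3,4}, I3 = {5}.
p = (dims[0] * dims[1], dims[2] * dims[3], dims[4])
F_hat = F.reshape(p)

# Stand-in for Step 2 (Algorithm 3 is not reproduced): exact decomposing
# vectors of F_hat. Under C-order reshaping, w^{s,1} = kron(v^{s,1}, v^{s,2}).
W1 = np.stack([np.kron(V[0][:, s], V[1][:, s]) for s in range(r)], axis=1)
W2 = np.stack([np.kron(V[2][:, s], V[3][:, s]) for s in range(r)], axis=1)
W3 = V[4]
F_hat_model = np.einsum('is,js,ks->ijk', W1, W2, W3)

# Steps 3-4 and Output: reshape each vector back, take rank-1 approximations,
# and sum the resulting rank-1 terms in the original shape.
X = np.zeros(dims, dtype=complex)
for s in range(r):
    a, b = best_rank1(W1[:, s].reshape(dims[0], dims[1]))
    c, d = best_rank1(W2[:, s].reshape(dims[2], dims[3]))
    X += np.einsum('a,b,c,d,e->abcde', a, b, c, d, W3[:, s])

rel_err = np.linalg.norm(X - F) / np.linalg.norm(F)
print(np.allclose(F_hat, F_hat_model), rel_err)
```

With noisy data, the Step 2 vectors are no longer exact Kronecker products. Step 4 then genuinely approximates rather than recovers.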

An approximation analysis similar to that of Theorem 6 can be carried out for Algorithm 4; for brevity, we omit it.

6 Numerical Experiments

In this section, we apply Algorithms 1 and 3 to compute tensor decompositions and low rank tensor approximations. We implement these algorithms in MATLAB 2020b. The workstation runs Ubuntu 20.04.2 LTS, with an Intel® Xeon® Gold 6248R CPU @ 3.00 GHz and 1 TB of memory.

For computing low rank tensor approximations, we use the function \(\texttt{cpd\_nls}\) provided in Tensorlab 3.0 [44] to solve the nonlinear least squares problem (46). We denote by \(\mathcal {X}^{gp}\) the approximating tensor returned by Algorithm 3. We denote by \(\mathcal {X}^{opt}\) the approximating tensor obtained by solving (46) with \(\mathcal {X}^{gp}\) as the initial point.

In our numerical experiments, if the rank r is unknown, we estimate it from the most square flattening matrix, as in (4) and Lemma 1.
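When r is unknown, the estimate amounts to the numerical rank of a flattening. The NumPy sketch below is illustrative only, and the exact flattening in (4) and the criterion of Lemma 1 may differ. It rests on these assumptions:

- The "most square" flattening groups the leading modes as rows and the remaining modes as columns, with the split chosen so that the two sizes are closest (only contiguous splits are tried).
- Singular values are counted above a hypothetical relative tolerance `tol`.

```python
import numpy as np

def most_square_flattening(F):
    """Flatten F as (n_1...n_k) x (n_{k+1}...n_m), choosing k so that the
    matrix is as close to square as possible (contiguous splits only)."""
    dims = F.shape
    best_k = min(range(1, len(dims)),
                 key=lambda k: abs(np.prod(dims[:k]) - np.prod(dims[k:])))
    return F.reshape(int(np.prod(dims[:best_k])), -1)

def estimate_rank(F, tol=1e-8):
    """Count singular values above tol times the largest singular value."""
    s = np.linalg.svd(most_square_flattening(F), compute_uv=False)
    return int(np.sum(s > tol * s[0]))

rng = np.random.default_rng(3)
dims, r = (5, 4, 3, 3), 5   # hypothetical sizes and rank
U = [rng.standard_normal((n, r)) + 1j * rng.standard_normal((n, r)) for n in dims]
F = np.einsum('as,bs,cs,ds->abcd', *U)
print(most_square_flattening(F).shape, estimate_rank(F))
```

For noisy tensors, the threshold has to sit between the "signal" and "noise" singular values. Inspecting the leading singular values, as in Examples 4 and 5, is one way to pick it.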

Example 2

Consider the tensor \({\mathcal {F}}\in \mathbb {C}^{4 \times 4 \times 3}\) whose slices \({\mathcal {F}}_{:,:,1}, {\mathcal {F}}_{:,:,2}, {\mathcal {F}}_{:,:,3}\) are, respectively

By Lemma 1, the estimated rank is \(r=4\).

Applying Algorithm 1 with \(r=4\), we get the rank-4 decomposition \(\mathcal {F}= U^{(1)} \circ U^{(2)} \circ U^{(3)}\), with

Example 3

Consider the tensor in \(\mathbb {C}^{5 \times 4 \times 3 \times 3}\)

where the matrices \(V^{(i)}\) are

By Lemma 1, the estimated rank is \(r=5\).

Applying Algorithm 1 with \(r=5\), we get the rank-5 tensor decomposition \(\mathcal {F}= U^{(1)} \circ U^{(2)} \circ U^{(3)} \circ U^{(4)}\), where the computed matrices \(U^{(i)}\) are

Example 4

Consider the tensor \({\mathcal {F}} \in \mathbb {C}^{5\times 5 \times 4}\) such that

for all \(i_1,i_2,i_3\) in the corresponding range. The five largest singular values of the flattening matrix \(\hbox {Flat}({\mathcal {F}})\) are

Applying Algorithm 3 with rank \(r=2,3,4,5\), we get the following approximation errors:

| \(r\) | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| \(\Vert {\mathcal {F}}-\mathcal {X}^{gp} \Vert \) | \(5.1237\times 10^{-1}\) | \(6.8647\times 10^{-2}\) | \(1.0558\times 10^{-2}\) | \(9.9449\times 10^{-3}\) |
| \(\Vert {\mathcal {F}}-\mathcal {X}^{opt} \Vert \) | \(1.5410\times 10^{-1}\) | \(1.3754\times 10^{-2}\) | \(2.6625\times 10^{-3}\) | \(4.9002\times 10^{-4}\) |

For the case \(r=3\), the approximating tensor computed by Algorithm 3 and by solving (46) is \(U^{(1)} \circ U^{(2)} \circ U^{(3)}\), with

Example 5

Consider the tensor \({\mathcal {F}} \in \mathbb {C}^{6\times 6 \times 6 \times 5 \times 4}\) such that

for all \(i_1,i_2,i_3,i_4,i_5\) in the corresponding range. The five largest singular values of the flattening matrix \(\hbox {Flat}({\mathcal {F}})\) are

Applying Algorithm 3 with rank \(r=2,3,4,5\), we get the approximation errors as follows:

| \(r\) | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| \(\Vert {\mathcal {F}}-\mathcal {X}^{gp} \Vert \) | \(9.8148\times 10^{-3}\) | \(3.1987\times 10^{-3}\) | \(5.7945\times 10^{-3}\) | \(1.0121\times 10^{-5}\) |
| \(\Vert {\mathcal {F}}-\mathcal {X}^{opt} \Vert \) | \(5.3111\times 10^{-3}\) | \(2.2623\times 10^{-4}\) | \(3.0889\times 10^{-5}\) | \(1.7523\times 10^{-6}\) |

For the case \(r=3\), the approximating tensor computed by Algorithm 3 and by solving (46) is \(U^{(1)} \circ U^{(2)} \circ U^{(3)} \circ U^{(4)} \circ U^{(5)}\), with

Example 6

In Theorem 6, we have shown the following: if the tensor to be approximated is sufficiently close to a rank-r tensor, then the computed rank-r approximation \(\mathcal {X}^{gp}\) is quasi-optimal. It can be further improved to a better approximation \(\mathcal {X}^{opt}\) by solving the nonlinear optimization problem (46). In this example, we explore the numerical performance of Algorithms 3 and 4 for computing low rank tensor approximations. For the given dimensions \(n_{1}, \cdots , n_{m}\), we generate the tensor

where each \(u^{s, j} \in \mathbb {C}^{n_{j}}\) is a complex vector whose real and imaginary parts are randomly generated from the Gaussian distribution. We perturb \(\mathcal {R}\) by another tensor \(\mathcal {E}\), whose entries are also generated from the Gaussian distribution, and scale \(\mathcal {E}\) to have a desired norm \(\epsilon \). The tensor \(\mathcal {F}\) is then generated as

We choose \(\epsilon \) to be one of \(10^{-2}, 10^{-4}, 10^{-6}\), and use the relative errors

to measure the approximation quality of \(\mathcal {X}^{\text {gp}}\) and \(\mathcal {X}^{\text {opt}}\), respectively. For each case of \((n_1, \cdots , n_m)\), r and \(\epsilon \), we generate 10 random instances of \(\mathcal {R}\), \(\mathcal {E}\) and \({\mathcal {F}}\). For the case \((n_1, \cdots , n_m) = (20,20,20,20,10)\), Algorithm 4 is used to compute \(\mathcal {X}^{\text {gp}}\); all other cases are solved by Algorithm 3.

The computational results are reported in Table 1. For each case of \((n_{1}, \cdots , n_{m})\) and r, we list the medians of the above relative errors and the average CPU times (in seconds). \(\text {t}_\text {gp}\) and \(\text {t}_\text {opt}\) denote the average CPU times for Algorithms 3/4 and for solving (46), respectively.

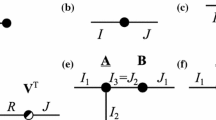
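The test tensors described above can be generated roughly as follows. This NumPy sketch makes these assumptions:

- The Gaussian entries are standard normal.
- The perturbed tensor is formed as \(\mathcal {F}=\mathcal {R}+\mathcal {E}\), with \(\mathcal {E}\) rescaled to Hilbert-Schmidt (Frobenius) norm \(\epsilon \).
- The dimensions, rank and seed are hypothetical.

It does not reproduce the random instances used in the experiments.

```python
import numpy as np

def random_test_tensor(dims, r, eps, rng):
    """Return (R, F): R is a random complex tensor of rank at most r,
    and F = R + E with a Gaussian perturbation E of norm eps."""
    def crandn(*shape):
        # Complex entries with Gaussian real and imaginary parts.
        return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

    # R = sum_s u^{s,1} ⊗ ... ⊗ u^{s,m}, built one rank-1 term at a time.
    R = np.zeros(dims, dtype=complex)
    for _ in range(r):
        term = crandn(dims[0])
        for n in dims[1:]:
            term = np.multiply.outer(term, crandn(n))
        R += term

    # Gaussian perturbation rescaled to Hilbert-Schmidt (Frobenius) norm eps.
    E = crandn(*dims)
    E *= eps / np.linalg.norm(E)
    return R, R + E

rng = np.random.default_rng(5)
dims, r, eps = (10, 10, 10), 3, 1e-4   # hypothetical case
R, F = random_test_tensor(dims, r, eps, rng)
print(np.linalg.norm(F - R))           # approximately eps
```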

In the following, we compare with the generalized eigenvalue decomposition (GEVD) method. It is a classical method for computing tensor decompositions when the rank \(r \leqslant n_2\); we refer to [45, 46] for details.

Consider a cubic order tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times n_2 \times n_3}\) with \(n_1 \geqslant n_2 \geqslant n_3\). Suppose \(\mathcal {F}= U^{(1)} \circ U^{(2)} \circ U^{(3)}\) is a rank-r decomposition with \(r \leqslant n_2\). Assume that the first and second decomposing matrices \(U^{(1)}, U^{(2)}\) have full column rank, and that the third decomposing matrix \(U^{(3)}\) has no collinear columns. Denote the slice matrices

One can show that

This implies that the columns of \((U^{(1)}_{1:r,:})^{-\top }\) are generalized eigenvectors of the matrix pair \((F_1^{{\top }}, F_2^{{\top }})\). Consider the transformed tensor

For each \(s=1,\cdots ,r\), the slice \(\hat{{\mathcal {F}}}_{s,:,:}=U^{(2)}_{:,s} \cdot (U^{(3)}_{:,s})^{\top }\) is a rank-1 matrix. The matrices \(U^{(2)}\), \(U^{(3)}\) can be obtained by computing rank-1 decompositions for the slices \(\hat{{\mathcal {F}}}_{s,:,:}\). After this is done, we can solve the linear system

to get the matrix \(U^{(1)}\). The following is the GEVD method for computing cubic order tensor decompositions when the rank \(r \leqslant n_2\).

Algorithm 5

(The GEVD method.)

- Input: A tensor \(\mathcal {F}\in \mathbb {C}^{n_1 \times n_2 \times n_3}\) with rank \(r \leqslant n_2\).

- Step 1: Formulate the tensor \(\hat{{\mathcal {F}}}\) as in (65).

- Step 2: For \(s=1,\cdots ,r\), compute \(U^{(2)}_{:,s}\) and \(U^{(3)}_{:,s}\) from the rank-1 decomposition of the matrix \(\hat{{\mathcal {F}}}_{s,:,:}\).

- Step 3: Solve the linear system (66) to get \(U^{(1)}\).

- Output: The decomposing matrices \(U^{(1)},U^{(2)},U^{(3)}\).
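A compact NumPy sketch of Algorithm 5 for exact data follows. It is not Tensorlab's \(\texttt{cpd\_gevd}\), and it makes three assumptions that are not from the text:

- The slice matrices are the leading \(r\times r\) blocks \(F_k=\mathcal {F}_{1:r,1:r,k}\), \(k=1,2\). This also requires \(U^{(2)}_{1:r,:}\) to be nonsingular.
- For brevity, the generalized eigenproblem of \((F_1^{\top },F_2^{\top })\) is solved as the standard eigenproblem of \(F_2^{-\top }F_1^{\top }\). A QZ-based solver such as `scipy.linalg.eig(a, b)` is numerically preferable.
- The linear system (66) is solved as a least squares problem on the mode-1 unfolding.

```python
import numpy as np

def gevd_cpd(F, r):
    """Sketch of the GEVD method for F = U1 ∘ U2 ∘ U3 with r <= n2."""
    n1, n2, n3 = F.shape
    F1, F2 = F[:r, :r, 0], F[:r, :r, 1]
    # Eigenvectors of F2^{-T} F1^T: columns of (U1[:r, :])^{-T}, up to scaling.
    _, P = np.linalg.eig(np.linalg.solve(F2.T, F1.T))
    # Transformed tensor: slice s is sum_i P[i, s] F[i, :, :], a rank-1 matrix.
    F_hat = np.einsum('is,ijk->sjk', P, F[:r])
    U2 = np.zeros((n2, r), dtype=complex)
    U3 = np.zeros((n3, r), dtype=complex)
    for s in range(r):
        U, sv, Vh = np.linalg.svd(F_hat[s])
        U2[:, s] = sv[0] * U[:, 0]   # rank-1 factors of slice s
        U3[:, s] = Vh[0, :]
    # Mode-1 unfolding (C-order): F_(1) = U1 @ K.T, where column s of K
    # is kron(U2[:, s], U3[:, s]); the unknown scalings are absorbed into U1.
    K = np.stack([np.kron(U2[:, s], U3[:, s]) for s in range(r)], axis=1)
    U1 = np.linalg.lstsq(K, F.reshape(n1, -1).T, rcond=None)[0].T
    return U1, U2, U3

rng = np.random.default_rng(4)
n, r = (7, 6, 4), 5   # hypothetical sizes with r <= n2
U_true = [rng.standard_normal((k, r)) + 1j * rng.standard_normal((k, r)) for k in n]
F = np.einsum('is,js,ks->ijk', *U_true)

U1, U2, U3 = gevd_cpd(F, r)
X = np.einsum('is,js,ks->ijk', U1, U2, U3)
rel_err = np.linalg.norm(X - F) / np.linalg.norm(F)
print(rel_err)   # small for generic exact data
```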

We compare the performance of Algorithm 1 and Algorithm 5 on randomly generated tensors with rank \(r \leqslant n_2\). We generate \(\mathcal {F}= U^{(1)} \circ U^{(2)} \circ U^{(3)}\) with each \(U^{(i)} \in \mathbb {C}^{n_i \times r}\). The entries of each \(U^{(i)}\) are complex numbers whose real and imaginary parts are randomly generated from the Gaussian distribution. For each case of \((n_1,n_2,n_3)\) and r, we generate 20 random instances of \(\mathcal {F}\).

Algorithm 5 is implemented by the function \(\texttt{cpd\_gevd}\) in Tensorlab [44]. All the tensor decompositions are computed correctly by both methods. We denote the average CPU time (in seconds) of Algorithm 1 by time-gp and that of the GEVD method by time-gevd. The computational results are reported in Table 2. They show that Algorithm 1 is more computationally efficient than Algorithm 5 on these instances.

7 Conclusions

This paper gives methods for computing low rank tensor decompositions and approximations, based on generating polynomials. For a generic tensor of rank \(r\leqslant \min (n_1,N_3)\), its tensor decomposition can be obtained by Algorithm 1. Under some general assumptions, we show that if a tensor is sufficiently close to a low rank one, then the low rank approximating tensor produced by Algorithm 3 is quasi-optimal. Numerical experiments are presented to show the efficiency of the proposed methods.

References

Landsberg, J.: Tensors: Geometry and Applications. Grad. Stud. Math., vol. 128. AMS, Providence, RI (2012)

Lim, L.H.: Tensors and hypermatrices. In: Hogben, L. (ed.) Handbook of Linear Algebra, 2nd edn. CRC Press, Boca Raton (2013)

Breiding, P., Vannieuwenhoven, N.: A Riemannian trust region method for the canonical tensor rank approximation problem. SIAM J. Optim. 28(3), 2435–2465 (2018)

de Lathauwer, L., de Moor, B., Vandewalle, J.: Computation of the canonical decomposition by means of a simultaneous generalized Schur decomposition. SIAM J. Matrix Anal. Appl. 26(2), 295–327 (2004)

de Lathauwer, L.: A link between the canonical decomposition in multilinear algebra and simultaneous matrix diagonalization. SIAM J. Matrix Anal. Appl. 28(3), 642–666 (2006)

Domanov, I., de Lathauwer, L.: Canonical polyadic decomposition of third-order tensors: Reduction to generalized eigenvalue decomposition. SIAM J. Matrix Anal. Appl. 35(2), 636–660 (2014)

Nie, J.: Generating polynomials and symmetric tensor decompositions. Found. Comput. Math. 17(2), 423–465 (2017)

Sorber, L., van Barel, M., de Lathauwer, L.: Optimization-based algorithms for tensor decompositions: canonical polyadic decomposition, decomposition in rank-\((L_r, L_r,1)\) terms, and a new generalization. SIAM J. Optim. 23(2), 695–720 (2013)

Telen, S., Vannieuwenhoven, N.: Normal forms for tensor rank decomposition. (2021) arXiv:2103.07411

Cui, C.F., Dai, Y.H., Nie, J.: All real eigenvalues of symmetric tensors. SIAM J. Matrix Anal. Appl. 35(4), 1582–1601 (2014)

Fan, J., Nie, J., Zhou, A.: Tensor eigenvalue complementarity problems. Math. Progr. 170(2), 507–539 (2018)

Nie, J., Wang, L.: Semidefinite relaxations for best rank-\(1\) tensor approximations. SIAM J. Matrix Anal. Appl. 35(3), 1155–1179 (2014)

Nie, J., Zhang, X.: Real eigenvalues of nonsymmetric tensors. Comput. Optim. Appl. 70(1), 1–32 (2018)

Nie, J., Yang, Z., Zhang, X.: A complete semidefinite algorithm for detecting copositive matrices and tensors. SIAM J. Optim. 28(4), 2902–2921 (2018)

Xiong, L., Chen, X., Huang, T.-K., Schneider, J., Carbonell, J. G.: Temporal collaborative filtering with Bayesian probabilistic tensor factorization. In: Proceedings of the 2010 SIAM International Conference on Data Mining, 211–222, (2010)

Dunlavy, D.M., Kolda, T.G., Acar, E.: Temporal link prediction using matrix and tensor factorizations. ACM Trans. Knowl. Discov. Data 5(2), 1–27 (2011)

Araujo, M., Papadimitriou, S., Gunnemann, S., Faloutsos, C., Basu, P., Swami, A., Papalexakis, E. E., Koutra, D.: Com2: fast automatic discovery of temporal (‘comet’) communities. In: Pacific-Asia Conference on Knowledge Discovery and Data Mining, 271–283, (2014)

Nickel, M., Tresp, V., Kriegel, H.-P.: A three-way model for collective learning on multi-relational data. In: Proceedings of the 28th International Conference on Machine Learning, 809–816, (2011)

Jenatton, R., Roux, N., Bordes, A., Obozinski, G.R.: A latent factor model for highly multi-relational data. Adv. Neural. Inf. Process. Syst. 25, 3167–3175 (2012)

Boden, B., Gunnemann, S., Hoffmann, H., Seidl, T.: Mining coherent subgraphs in multi-layer graphs with edge labels. In: Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining, 1258–1266, (2012)

Kofidis, E., Regalia, P.A.: Tensor approximation and signal processing applications. Contemp. Math. 280, 103–134 (2001)

Pajarola, R., Suter, S. K., Ballester-Ripoll, R., Yang, H.: Tensor approximation for multidimensional and multivariate data. In: Anisotropy Across Fields and Scales, 73–98, (2021)

Guo, B., Nie, J., Yang, Z.: Learning diagonal gaussian mixture models and incomplete tensor decompositions. Vietnam J. Math. (2021). https://doi.org/10.1007/s10013-021-00534-3

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

Nie, J., Yang, Z.: Hermitian tensor decompositions. SIAM J. Matrix Anal. Appl. 41(3), 1115–1144 (2020)

Friedland, S., Wang, L.: Spectral norm of a symmetric tensor and its computation. Math. Comput. 89, 2175–2215 (2020)

Qi, L., Hu, S.: Spectral norm and nuclear norm of a third order tensor (2019). arXiv:1909.01529

de Silva, V., Lim, L.-H.: Tensor rank and the ill-posedness of the best low rank approximation problem. SIAM J. Matrix Anal. Appl. 30(3), 1084–1127 (2008)

Comon, P., Luciani, X., de Almeida, A.L.F.: Tensor decompositions, alternating least squares and other tales. J. Chemom. 23, 393–405 (2009)

Mao, X., Yuan, G., Yang, Y.: A self-adaptive regularized alternating least squares method for tensor decomposition problems. Anal. Appl. 18(01), 129–147 (2020)

Yang, Y.: The epsilon-alternating least squares for orthogonal low-rank tensor approximation and its global convergence. SIAM J. Matrix Anal. Appl. 41(4), 1797–1825 (2020)

de Lathauwer, L., de Moor, B., Vandewalle, J.: On the best rank-\(1\) and rank-\((R_1, R_2, \cdots , R_n)\) approximation of higher-order tensors. SIAM J. Matrix Anal. Appl. 21(4), 1324–1342 (2000)

Guan, Y., Chu, M.T., Chu, D.: Convergence analysis of an SVD-based algorithm for the best rank-\(1\) tensor approximation. Linear Algebra Appl. 555, 53–69 (2018)

Friedland, S., Tammali, V.: Low-rank approximation of tensors. In: Numerical Algebra, Matrix Theory, Differential-Algebraic Equations and Control Theory. 377–411, Springer, (2015)

Nie, J.: Low rank symmetric tensor approximations. SIAM J. Matrix Anal. Appl. 38(4), 1517–1540 (2017)

Cox, D., Little, J., O’Shea, D.: Ideals, Varieties, and Algorithms: An Introduction to Computational Algebraic Geometry and Commutative Algebra. Springer, Berlin (2013)

Shafarevich, I.: Basic Algebraic Geometry I: Varieties in Projective Space. Springer-Verlag, Berlin (1988)

Kruskal, J.B.: Three-way arrays: rank and uniqueness of trilinear decompositions, with application to arithmetic complexity and statistics. Linear Algebra Appl. 18(2), 95–138 (1977)

Chiantini, L., Ottaviani, G., Vannieuwenhoven, N.: Effective criteria for specific identifiability of tensors and forms. SIAM J. Matrix Anal. Appl. 38(2), 656–681 (2017)

Nie, J.: Nearly low rank tensors and their approximations (2014). arXiv:1412.7270

Nie, J., Wang, L., Zheng, Z.: Higher Order Correlation Analysis for Multi-View Learning, Pacific Journal of Optimization, to appear, (2022)

Demmel, J.: Applied Numerical Linear Algebra. SIAM, New Delhi (1997)

Chatelin, F.: Eigenvalues of Matrices. SIAM, New Delhi (2012)

Vervliet, N., Debals, O., de Lathauwer, L.: Tensorlab 3.0 - numerical optimization strategies for large-scale constrained and coupled matrix/tensor factorization, In 2016 50th Asilomar Conference on Signals, Systems and Computers, 1733–1738, (2016)

Leurgans, S.E., Ross, R.T., Abel, R.B.: A decomposition for three-way arrays. SIAM J. Matrix Anal. Appl. 14, 1064–1083 (1993)

Sanchez, E., Kowalski, B.R.: Tensorial resolution: a direct trilinear decomposition. J. Chemom. 4, 29–45 (1990)

Author information


Contributions

The authors carried out the analysis and the computations.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Jiawang Nie is partially supported by the NSF grant DMS-2110780. Li Wang is partially supported by the NSF grant DMS-2009689.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nie, J., Wang, L. & Zheng, Z. Low Rank Tensor Decompositions and Approximations. J. Oper. Res. Soc. China (2023). https://doi.org/10.1007/s40305-023-00455-7


DOI: https://doi.org/10.1007/s40305-023-00455-7