Abstract

Objectives

Published literature has levied criticism against the cost-minimisation analysis (CMA) approach to economic evaluation over the past two decades, with multiple papers declaring its ‘death’. However, since the introduction of requirements for economic evaluations as part of health technology assessment (HTA) decision-making in 1992, CMA has been widely used to inform recommendations about the public subsidy of medicines in Australia. This research aimed to highlight the breadth of use of CMA in Australia and to assess the influence of the preconditions for the approach on subsidy recommendations.

Methods

Relevant information was extracted from Public Summary Documents of Pharmaceutical Benefits Advisory Committee (PBAC) meetings in Australia that considered submissions for the subsidy of medicines that included a CMA and were assessed between July 2005 and December 2022. A generalised linear model was used to explore the relationship between whether medicines were recommended and variables reflecting the primary preconditions for using CMA set out in the published PBAC Methodology Guidelines. Other control variables were selected using the Bolasso method. A subgroup analysis replicated this modelling process.

Results

While the potential for inferior efficacy reduced the likelihood of recommendation (p < 0.01), the effect sizes suggest that meeting the requirements for CMA was not a prerequisite for recommendation.

Conclusion

The Australian practice of CMA does not strictly align with the PBAC Methodology Guidelines and the theoretically appropriate application of CMA. However, within the confines of a deliberative HTA decision-making process that balances values and judgement with available evidence, this may be considered acceptable, particularly if stakeholders consider the current approach delivers sufficient clarity of process and enables patients to access medicines at an affordable cost.


Cost-minimisation analysis (CMA) is widely used in submissions to the Pharmaceutical Benefits Advisory Committee (PBAC) in Australia.

Although the PBAC Methodology Guidelines establish stringent criteria for the use of CMA that align with best practices, this study reveals that the likelihood of recommendation was not substantially reduced when the evidence did not fully meet these requirements (for submissions between 2005 and 2022).

The application of CMA, while not without its flaws, enables a pragmatic approach for balancing the robustness of evidence with the need to provide affordable access to medicines for patients.

1 Introduction

Health technology assessment (HTA) supports the allocation of healthcare resources, which can be described as striking a balance between empirical evidence (and the interpretation of its uncertainty) and the values, principles, standards and case-based judgements within the healthcare, economic and societal context [1]. In Australia, this is stipulated in the Pharmaceutical Benefits Advisory Committee (PBAC) Methodology Guidelines, which posit that decision-making requires judgement and consideration of ‘less-readily quantifiable facts’, including equity [2].

The interpretation of the empirical evidence is facilitated through economic evaluations that estimate the incremental costs and incremental health outcomes compared with an alternative treatment (or no treatment), summarised as an incremental cost-effectiveness ratio. A cost-effectiveness model is often used to structure and synthesise the available evidence and to evaluate uncertainty. Such models, much like prescription medicines, can provide transformative benefits when used properly (e.g. by translating disparate cost and clinical data into actionable insights for policymakers) but can lead to unintended negative outcomes when misused [3]. For example, modelling cost-effectiveness without clinically relevant and statistically significant health gains can be problematic, as it could suggest the allocation of resources (or acceptance of a higher incremental cost-effectiveness ratio) even though failure to show a statistically significant improvement is not the same as establishing equivalence [4].
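The incremental cost-effectiveness ratio described above is simply the incremental cost divided by the incremental health outcome. The sketch below uses hypothetical costs and quality-adjusted life years (QALYs) that are not drawn from any PBAC submission; the function name `icer` is illustrative only.

```python
def icer(cost_new: float, cost_comparator: float,
         effect_new: float, effect_comparator: float) -> float:
    """Incremental cost per unit of incremental health outcome (e.g. per QALY gained)."""
    delta_cost = cost_new - cost_comparator
    delta_effect = effect_new - effect_comparator
    if delta_effect == 0:
        # If outcomes are treated as equivalent, the ratio is undefined and a
        # comparison of costs alone (as in CMA) applies instead.
        raise ValueError("incremental effect is zero; the ICER is undefined")
    return delta_cost / delta_effect


# Hypothetical inputs: costs in dollars per patient, effects in QALYs.
ratio = icer(cost_new=52_000, cost_comparator=40_000, effect_new=2.6, effect_comparator=2.2)
print(round(ratio))  # 30000
```

Because the denominator is a point estimate, a small and statistically nonsignificant health gain still yields a finite ratio, which is why a calculated ICER on its own does not establish a genuine difference in outcomes.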

When alternative treatment options are deemed to be equivalent, cost-minimisation analysis (CMA), a simplified form of cost-effectiveness analysis (CEA), can be applied [5]. CMA assumes that treatment options are equivalent (without uncertainty) and narrows the analysis to the difference in the included costs. The tempered scope of CMA reduces the time and resources required to generate the economic evaluation [6] and avoids the negative aspects of more complex CEA models, such as model-related uncertainty and communication challenges [7,8,9]. However, the assumption of equivalence relies on robust clinical evaluation methods to establish equivalence in a health outcome, such as noninferiority trials powered so that confidence intervals do not include a clinically meaningful difference. In practice, evidence is imperfect. Thus, CMA may still require decision-makers to interpret parameter uncertainty and the potential omission of important outcomes when assessing the analysis.
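A noninferiority conclusion of the kind described above is commonly drawn by checking whether the lower confidence bound for the treatment difference lies above the negative of a prespecified margin. The sketch below assumes a binary response outcome and a Wald (normal-approximation) interval; both the interval method and the 10-percentage-point margin are illustrative assumptions, not the PBAC's procedure.

```python
import math


def noninferiority_check(p_new, n_new, p_comp, n_comp, margin, z=1.96):
    """Wald confidence-interval check of noninferiority for a binary outcome.

    The difference is p_new - p_comp, where a higher response proportion is better.
    Noninferiority is concluded only if the lower confidence bound lies above -margin.
    """
    diff = p_new - p_comp
    se = math.sqrt(p_new * (1 - p_new) / n_new + p_comp * (1 - p_comp) / n_comp)
    lower = diff - z * se
    return lower, lower > -margin


# Hypothetical trial: 70% vs 72% response, 400 patients per arm, 10-point margin.
lower, ok = noninferiority_check(0.70, 400, 0.72, 400, margin=0.10)
print(f"{lower:.3f}", ok)  # -0.083 True

# Identical point estimates but only 50 patients per arm.
lower_small, ok_small = noninferiority_check(0.70, 50, 0.70, 50, margin=0.10)
print(f"{lower_small:.3f}", ok_small)  # -0.180 False
```

The second call shows why the absence of a statistically significant difference is not the same as equivalence: the observed difference is zero, yet the small trial cannot exclude a clinically meaningful loss.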

Briggs and O’Brien [10] pronounced ‘the death’ of CMA in 2001, a sentiment echoed a decade later by Dakin and Wordsworth [6], who argued that the approach ‘should also be buried’. Dakin and Wordsworth [6] further highlighted a decline in the use of CMA in published economic evaluations (Note 1), from 8% in 1999 to 1% in 2009. This period coincided with the negative literature of the early 2000s and the 2005 revision of a popular textbook on economic evaluation that relegated CMA to the status of a ‘partial’ economic evaluation [11]. Subsequent publications reaffirmed opposition to CMA or emphasised the challenges of applying the approach appropriately [12,13,14,15], although some studies discuss its use in real-world settings [16,17,18].

The criticisms of CMA suggest a paucity of circumstances in which equivalence can be conclusively established. Equivalence of outcomes may be uncertain because of limitations in the evidence base, which may contain conflicting evidence or be limited in the number of relevant health outcomes collected and the intervals at which they were measured [10]. Similarly, there can be uncertainty about the range of potential costs, with the choice of costs to include or omit having a critical impact on the results of a CMA [19, 20]. Even identical interventions will generally not appear equivalent across all health outcomes at all time points, owing to statistical uncertainty. Other challenges, such as underpowered trials and the establishment of noninferiority margins, are detailed elsewhere (e.g. [6, 10, 14, 21,22,23,24]). Consequently, noninferiority must be narrowly defined in terms of health outcomes and time points, relying on judgement to determine which health outcomes are most relevant [21]. While these challenges may be problematic for the theoretical application of CMA, the application of values and case-based judgement when interpreting this uncertainty falls within the remit of HTA [1]. Indeed, interviews with individuals involved in the HTA decision-making process found that ‘uncertainty increases the need for deliberation’, including when the ‘comparative clinical benefit…was unclear’ [25].

The utility of CMA is exemplified in Australia, where, between 2010 and 2018, CMA was used in 42% of submissions to the HTA authority, the Pharmaceutical Benefits Advisory Committee (PBAC), and accounted for 52% of recommendations [18]. This use is based on the PBAC Methodology Guidelines, which state that CMA is the preferred approach for sponsors of new medicines to use in submissions for public subsidy in Australia when the clinical claim is one of noninferiority [2]. Under Australian law, a medicine can only be recommended for government subsidy at a price higher than that of alternative treatments if it demonstrates superior health outcomes [26]. This legal framework enables the policy, implemented through CMA, of reimbursing medicines with the same demonstrated effectiveness for a specific indication at the same aggregate cost as an already reimbursed medicine.
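The ‘same aggregate cost’ principle can be illustrated with a simplified calculation of the unit price at which a new medicine's cost per course matches that of its comparator. This is a minimal sketch under stated assumptions: equi-effective doses are taken as already agreed, all figures are hypothetical, and the function name and the `other_cost_difference` parameter are illustrative. It ignores dispensing fees, mark-ups and other elements of actual PBS pricing.

```python
def cost_minimised_unit_price(
    comparator_unit_price: float,
    comparator_units_per_course: float,
    new_units_per_course: float,
    other_cost_difference: float = 0.0,
) -> float:
    """Unit price at which the new medicine's total cost per course equals the comparator's.

    other_cost_difference is (new medicine's other costs per course) minus
    (comparator's other costs per course), e.g. administration or monitoring.
    """
    comparator_course_cost = comparator_unit_price * comparator_units_per_course
    return (comparator_course_cost - other_cost_difference) / new_units_per_course


# Hypothetical: the comparator costs $50 per unit at 12 units per course, and
# the equi-effective course of the new medicine is 8 units.
print(cost_minimised_unit_price(50.0, 12, 8))  # 75.0

# If the new medicine also avoids $80 of infusion costs per course:
print(cost_minimised_unit_price(50.0, 12, 8, other_cost_difference=-80.0))  # 85.0
```

The second call shows how the choice of which costs to include changes the result, echoing the sensitivity of CMA to included and omitted costs.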

Overall, the PBAC Methodology Guidelines for CMA set stringent evidence requirements; a review of global HTA guidelines found them to be the only published HTA document that ‘reported detailed criteria for meeting clinical equivalence’ [16]. Indeed, the requirements for CMA appear to align with best practices [21], consistent with their stated aim [2]. First, the guidelines require equivalence to be established through ‘detailed analysis of clinical data’ that justifies the noninferiority of the health outcome(s) by excluding potential inferiority in efficacy and safety outcomes through a rigorous assessment, such as a noninferiority trial [21] (Note 2). Additionally, the guidelines require that claims of noninferiority establish a noninferiority margin informed by a minimal clinically important difference [6] (Note 3). Finally, the guidelines suggest that CMA should only be used when safety profiles are similar [27] (Note 4). Although this requirement has limited direct theoretical foundations, it is explicitly stated in the guidelines. Medicines may achieve similar outcomes but have differing safety profiles, which should not be mistaken for equivalence.

Despite the widespread use of CMA and well-documented methodology guidelines, there remains a substantial gap in the literature about the use of CMA in Australia. This gap is highlighted in the National Institute for Health and Care Excellence (NICE) Decision Support Unit Report on CMA, which did not highlight the prominence of CMA in Australia despite including a section on the international practice of CMA [13]. This report limited its consideration of the PBAC in Australia to a review of the methodology guidelines and a single paper on the potential use of CMA for biosimilars [2, 13, 28].

This study aims to provide insight into the breadth of use of CMA in Australia and assess the alignment of recommendations by the PBAC with the requirements set out in the PBAC Methodology Guidelines. To support this aim, the analysis dataset is included as an electronic supplement to allow interested parties to further interrogate the data [29].

2 Data and Methods

2.1 Overview

The revealed preferences of HTA authorities, such as the PBAC, have been an area of research for decades. In a systematic review of revealed preferences, Ghijben et al. identified six persistent factors (Note 5) influential in the PBAC’s recommendations [30]. However, these findings were based on analyses that included CMA alongside other economic evaluation approaches, which would have confounded the results, particularly since three of the six influential factors identified (budget impact, the incremental cost-effectiveness ratio and its associated uncertainty) would have minimal applicability to CMA [30].

2.2 Information Sources

As the only public source of information regarding PBAC recommendations, the public summary documents (PSDs) of PBAC meetings were used as the basis for measuring adherence to the Methodology Guidelines. PSDs have been compiled and published following the PBAC's consideration and recommendation of medicines since 2005 [31]. The published information provides the rationale for, and information supporting, each PBAC recommendation, including 'information on the clinical and cost-effectiveness of medicines' relative to their comparators [31]. The structure of PSDs is well defined and aims to provide consistent information across recommendations [32], although the structure and level of detail have changed over time [33].

2.3 Data

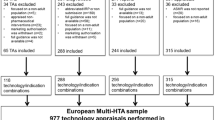

Similar to Harris et al. [34] and others (e.g. [18, 35,36,37,38,39,40]), this study independently collated a dataset of PBAC outcomes. All PSDs published between July 2005 and December 2022, together with the corresponding PBAC outcomes, were downloaded from the PBS website [41]. Each PSD was assigned an identifier and linked to its PBAC outcome [41]. The PSD content was first searched to exclude PSDs without reference to CMA (or related terms). The remaining PSDs were reviewed to confirm that they met the inclusion criteria described in the data dictionary of the supplementary dataset [29] and illustrated in the PRISMA 2020 flow diagram (Fig. 1) [42]. For instance, the PSD had to reflect a major submission (Note 6) that requested the listing (or expansion) of a medicine (e.g. Secretariat reviews were excluded), in which the PBAC assessment considered CMA for a unique product (i.e. excluding PSDs for fixed-dose combination products or where the comparator included the same active ingredients), with a final PBAC recommendation or rejection (e.g. ‘deferred’ submissions were excluded unless an addendum altered this status). The presence of other economic evaluation approaches (e.g. cost-utility analysis alongside CMA) did not result in exclusion.
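The first-pass keyword screen described above could be sketched as follows. The regular expression and the PSD identifiers are hypothetical; the exact search terms used in the study are not listed here. A screen of this kind only excludes documents, and retained PSDs would still be reviewed manually against the inclusion criteria.

```python
import re

# Hypothetical search terms: "cost-minimisation"/"cost minimization" variants or "CMA".
CMA_PATTERN = re.compile(
    r"cost[\s-]*minimi[sz](?:ation|ed|ing)|\bCMA\b",
    flags=re.IGNORECASE,
)


def mentions_cma(psd_text: str) -> bool:
    """True if the PSD text contains a CMA-related term (first-pass screen only)."""
    return CMA_PATTERN.search(psd_text) is not None


def screen(psds: dict[str, str]) -> list[str]:
    """Identifiers of PSDs retained for manual review against the inclusion criteria."""
    return sorted(pid for pid, text in psds.items() if mentions_cma(text))


example = {
    "PSD-2": "The submission presented a cost-minimisation analysis.",
    "PSD-1": "A cost-utility analysis was presented.",
    "PSD-3": "The CMA was based on equi-effective doses.",
}
print(screen(example))  # ['PSD-2', 'PSD-3']
```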

Variables were initially recorded through a holistic review by a single reviewer using a LimeSurvey-based coding template [43], followed by targeted keyword searches for validation. Data validation and descriptive statistics were generated using Python 3.9.7 and various packages [44,45,46,47,48]. A second reviewer independently coded a 5% sample using a Microsoft Excel coding template. The initial inter-rater agreement was 90%. Discrepancies were resolved through discussion, with 70% resolved in favour of the initial coder. An extract of the variables considered in the models is described in Table 1, although variables with over 95% sparsity, or that were also represented with grouped levels, were excluded from the statistical analysis.
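The agreement figure reported above is the share of items coded identically by both reviewers. The sketch below computes it, alongside Cohen's kappa, a common chance-corrected complement that the study does not report; the codes are hypothetical.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of items on which two coders recorded the same value."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same, non-empty set of items")
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement (Cohen's kappa) for two coders."""
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Expected agreement if both coders assigned categories independently.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when both coders use a single category")
    return (observed - expected) / (1 - expected)


# Hypothetical binary codes for ten items from two reviewers.
a = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
b = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
print(percent_agreement(a, b))       # 0.9
print(round(cohens_kappa(a, b), 2))  # 0.8
```

Kappa is lower than raw agreement here because half of the matches would be expected by chance given each coder's category frequencies.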

The variables collected for this study were informed by past papers [35,36,37, 39, 49, 50] but with an additional focus on the requirements for using CMA set out in the methodology guidelines, which state that ‘a cost-minimisation approach is appropriate where there is a therapeutic claim of noninferiority (or superiority), the safety profile is equivalent or superior (in both nature and magnitude), and use of the proposed medicine is anticipated to result in equivalent or lesser costs to the health system’ [2]. Drawing on this statement and the language set out in Table 2, the analysis focused on four theoretically justified variables for each reviewed submission, namely whether (1) the potential for inferior safety of the medicine was suggested, (2) the potential for inferior efficacy of the medicine was suggested, (3) the medicine had a differing safety profile or (4) a nominated noninferiority margin was exceeded. As these four variables were based on stipulations in the PBAC Guidelines, the likelihood of a recommendation should be substantially reduced if any of them were observed.
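One way to represent the four theoretically justified variables for each PSD, and the strict reading of the guidelines under which any raised flag should preclude CMA, is sketched below. The class and field names are hypothetical and do not correspond to the column names in the study's supplementary dataset.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class CmaPreconditionFlags:
    """Hypothetical coding of the four theoretically justified variables for one PSD."""

    potential_inferior_safety: bool
    potential_inferior_efficacy: bool
    differing_safety_profile: bool
    ni_margin_exceeded: bool

    def meets_all_preconditions(self) -> bool:
        """Strict reading of the guidelines: no flag may be raised."""
        return not any(asdict(self).values())

    def as_model_row(self) -> dict[str, int]:
        """0/1 indicators suitable for a regression design matrix."""
        return {name: int(flag) for name, flag in asdict(self).items()}


flagged = CmaPreconditionFlags(False, True, False, False)
print(flagged.meets_all_preconditions())  # False
print(flagged.as_model_row())
```

Under this strict reading a submission with any flag raised would be expected to be rejected; the analysis instead estimates how much each flag actually shifted the probability of recommendation.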

The categorisation of these variables was intended to mirror the evaluative approach observed in the PBAC's consideration of submissions. Namely, the comparative safety and efficacy of a medicine were considered as distinct assessments, and these assessments were conducted differently. Safety appeared to have been assessed in terms of the potential for inferior safety (e.g. more frequent or more severe adverse events) separately from the potential for a differing safety profile (e.g. different types of adverse events, even if not necessarily worse overall), while efficacy was also assessed in relation to a noninferiority margin.

Other variables were collected but not considered for inclusion in the model to ensure the stability of the statistical model and to avoid confounding effects that could distort the statistical analysis, including variables only relevant to rejections (such as the rationale for rejection) due to their inapplicability to positive recommendations. Similarly, variables closely linked to the four theoretically justified variables were not included to avoid collinearity, such as whether the PBAC accepted the medicine sponsor’s clinical claim, which was closely linked with the inclusion of language that suggested the potential for inferiority.

2.4 Statistical Analysis

Statistical analysis was conducted in RStudio [51]. Since a large number of potentially correlated variables can cause the LASSO (least absolute shrinkage and selection operator) to be unstable [52,53,54], the bootstrapped LASSO (Bolasso) method was used for stable variable selection [55], although other approaches could be justified [56,57,58]. This approach generated 100 bootstrap samples and, for each sample, fitted 500 iterations of a binomial LASSO model with ten-fold cross-validation using the R package glmnet [59]. Each LASSO iteration was subject to the constraint that the four theoretically justified variables be included (Sect. 2.3 and Table 2). Variables were selected if they had nonzero coefficients in more than 80% of LASSO iterations.
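The selection-frequency logic of the Bolasso procedure described above can be sketched independently of any particular LASSO implementation. In this sketch the `fit_lasso` callable is a hypothetical stand-in for a cross-validated binomial LASSO fit that returns the names of variables with nonzero coefficients; in R's glmnet, forcing variables into every fit is typically done by setting their `penalty.factor` to 0, but how the study implemented the constraint is not reproduced here.

```python
import random
from collections import Counter
from typing import Callable, Sequence

# Hypothetical interface: fit_lasso(sample, forced, seed) -> names with nonzero coefficients.
LassoFitter = Callable[[list, frozenset, int], set]


def bolasso_select(
    rows: Sequence[dict],
    fit_lasso: LassoFitter,
    forced: frozenset = frozenset(),
    n_boot: int = 100,
    fits_per_sample: int = 500,
    threshold: float = 0.8,
    seed: int = 0,
) -> set:
    """Bootstrap-LASSO selection: keep variables selected in more than `threshold` of all fits."""
    rng = random.Random(seed)
    counts = Counter()
    total_fits = 0
    for _ in range(n_boot):
        # Resample submissions with replacement to form one bootstrap sample.
        sample = [rng.choice(rows) for _ in range(len(rows))]
        for _ in range(fits_per_sample):
            # A fresh seed lets each fit draw different cross-validation folds.
            selected = fit_lasso(sample, forced, rng.randrange(2**31))
            counts.update(selected)
            total_fits += 1
    frequent = {name for name, c in counts.items() if c / total_fits > threshold}
    # Forced variables are kept regardless of their selection frequency.
    return frequent | set(forced)
```

A usage sketch with a toy fitter that always keeps `"x"` and keeps `"y"` only about half the time:

```python
def toy_fit(sample, forced, seed):
    return {"x"} | set(forced) | ({"y"} if seed % 2 == 0 else set())

rows = [{"id": i} for i in range(10)]
print(bolasso_select(rows, toy_fit, forced=frozenset({"f"}), n_boot=2, fits_per_sample=50))
```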

A logit binomial generalised linear model (GLM) was constructed using the selected variables, with a positive recommendation as the dependent variable. The ‘margins’ package [60] was used to calculate the average marginal effect (AME) for each included variable, which quantifies the difference in predicted recommendation rates between a true and a false value of that variable, averaged across submissions with the other variables held at their observed values.
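The discrete-change AME described above can be computed directly from fitted logit coefficients: set the variable to true and to false for every observation, take the difference in predicted probabilities and average. The sketch below implements that definition; it is not the `margins` package's own code, and the coefficients and rows in the example are hypothetical.

```python
import math
from typing import Mapping, Sequence


def predicted_probability(intercept: float, coefs: Mapping[str, float],
                          row: Mapping[str, float]) -> float:
    """Inverse-logit of the linear predictor for one observation."""
    eta = intercept + sum(beta * row[name] for name, beta in coefs.items())
    return 1.0 / (1.0 + math.exp(-eta))


def average_marginal_effect(intercept: float, coefs: Mapping[str, float],
                            rows: Sequence[Mapping[str, float]], var: str) -> float:
    """Mean over observations of P(y=1 | var=1) - P(y=1 | var=0), other values as observed."""
    effects = [
        predicted_probability(intercept, coefs, {**row, var: 1.0})
        - predicted_probability(intercept, coefs, {**row, var: 0.0})
        for row in rows
    ]
    return sum(effects) / len(effects)


# Hypothetical coefficients and submissions (not the study's estimates).
coefs = {"inferior_efficacy": -0.7, "antineoplastic": -0.8}
rows = [
    {"inferior_efficacy": 1.0, "antineoplastic": 0.0},
    {"inferior_efficacy": 0.0, "antineoplastic": 1.0},
    {"inferior_efficacy": 0.0, "antineoplastic": 0.0},
]
print(average_marginal_effect(1.5, coefs, rows, "inferior_efficacy"))
```

Because the logit link is nonlinear, the same coefficient yields different probability changes for different submissions, which is why the effect is averaged rather than read from the coefficient itself.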

2.5 Subgroup Analysis

The statistical analysis procedure was repeated using only PSDs where the proposed comparator used for the CMA was deemed appropriate, based on language in the PSD indicating that a suitable comparator was presented in the submission. While an appropriate comparator is a prerequisite for conducting an economic evaluation as a component of the ‘PICO’ framework (population, intervention, comparator and outcome), the determination of suitability can be complex due to uncertainties relating to the standard of care and the emergence and reimbursement status of new treatment options [12]. Given the relevance of this assessment by the PBAC, we opted to retain this variable for the primary model and explored the impact of this exclusion through this secondary analysis.

Due to a small sample size and the fact that an appropriate comparator is a prerequisite for a recommendation, we did not conduct a subgroup analysis of the PSDs with an inappropriate comparator.

3 Results

3.1 Descriptive Statistics

In total, 447 PSDs were included, of which 407 were included in the subgroup analysis, as 40 submissions had an inappropriate comparator (Table 3). The number of submissions per year that met the inclusion criteria was relatively stable, ranging from 19 to 33 between 2006 and 2022 (mean 26, standard deviation 4.2). Most submissions were first submissions (n = 352, 79%) rather than resubmissions (n = 95, 21%) and were generally for new medicines (62%) rather than extensions of existing listings (38%). Most submissions were relatively straightforward, in that they included CMA as the only economic evaluation (77%) and related to only one population (84%), although 50% of submissions assessed multiple comparators.

Most submissions included evidence from sources other than direct trials (77%) rather than only evidence from direct trials (23%). The nature of the evidence was often mixed: 44% of submissions included both favourable and unfavourable point estimates, 23% only favourable, 16% only unfavourable and 17% no point estimates. The types of medicines varied, with antineoplastics being the most common (20%). Most submissions were not considered to address an unmet need (75%) and related to quality-of-life improvements (75%) rather than survival (25%).

The PBAC recommended 330 (74%) and rejected 117 of the submissions that considered CMA. PSDs that mentioned a potential for inferior efficacy or safety had lower-than-average recommendation rates (61% and 65%, respectively), whereas the recommendation rates for PSDs with a different safety profile (72%) or an exceeded nominated noninferiority margin (72%) were numerically similar to the average. For submissions that presented only unfavourable point estimates, the recommendation rate was 54%.

The most common reason for the rejection of submissions was an apparent absence of sufficient evidence to support the claims of noninferiority or superiority, which was cited as a contributing factor in 90 of 117 rejections (Table 4). Given the relatively low recommendation rate observed for antineoplastic agents (64%) despite the public interest in and awareness of cancer treatments, we explored potential differences in the rationale for rejection between these medicines and other ATC2 categories. Compared with all other categories, rejections of antineoplastic agents were significantly less likely to cite an inappropriate comparator as a reason (odds ratio [OR] 0.17, 95% confidence interval [CI] 0.05–0.59). While not statistically significant, there was a trend toward antineoplastic agents being more likely to be rejected due to insufficient evidence supporting claims of noninferiority or superiority (OR 2.71, 95% CI 0.86–8.55).
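Odds ratios with confidence intervals such as those above can be computed from a 2 × 2 table of rejections. The study does not state which interval method was used; the sketch below assumes the Woolf (log-scale) interval, and the counts in the example are hypothetical rather than the study's data.

```python
import math


def odds_ratio_with_ci(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Odds ratio and Woolf (log-scale) confidence interval for a 2x2 table.

    a: group of interest with the outcome    b: group of interest without it
    c: comparison group with the outcome     d: comparison group without it
    """
    if min(a, b, c, d) == 0:
        raise ValueError("a zero cell needs a continuity correction or an exact method")
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


# Hypothetical counts: among rejections, 2 of 20 antineoplastic and 20 of 50
# other submissions cited an inappropriate comparator.
print(odds_ratio_with_ci(2, 18, 20, 30))
```

With small cells, as is likely for rejection subgroups, exact methods may be preferable to this large-sample approximation.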

3.2 Logit Binomial Generalised Linear Model

The selected variables, coefficients, average marginal effect (AME) and associated p values are presented in Table 5.

In the fitted model, the variable reflecting whether the PSD mentioned the potential for inferior efficacy had a statistically significant effect (p < 0.01), with an AME of −0.12 (95% CI −0.20 to −0.05), indicating an estimated 12-percentage-point reduction in the likelihood of recommendation. The variable reflecting whether the PSD mentioned the potential for inferior safety was not statistically significant (p = 0.07) and had a smaller AME of −0.07 (95% CI −0.14 to 0.01), indicating an estimated 7-percentage-point reduction. Neither the medicine having a different safety profile from the comparator (AME of −0.04, 95% CI −0.13 to 0.05) nor the noninferiority margin being exceeded (AME of 0.01, 95% CI −0.09 to 0.11) was statistically significant, and both had only modest effects on the likelihood of recommendation.

The remaining six variables were identified through the Bolasso method, subject to the constraint that the four abovementioned variables reflecting the ‘requirements’ for CMA be included. The comparator being considered appropriate had the strongest positive effect (AME of 0.56, 95% CI 0.44–0.68), reflecting the near one-to-one correspondence between having an inappropriate comparator and rejection. Other significant variables were whether the medicine was an antineoplastic agent (mapped to ATC2 L01), with an estimated 14-percentage-point reduction in the likelihood of recommendation (AME of −0.14, 95% CI −0.22 to −0.06); whether other economic evaluation types were included (AME of −0.09, 95% CI −0.17 to −0.02); whether the assessment month was between March and July (AME of −0.12, 95% CI −0.20 to −0.05); whether only unfavourable point estimates were presented (AME of −0.12, 95% CI −0.20 to −0.04); and whether the PSD presented a risk ratio or hazard ratio with a wide confidence interval (AME of −0.10, 95% CI −0.17 to −0.03).

Notably, of the four theoretically justified variables, only the potential for inferior efficacy had a magnitude of effect greater than that of at least one of the variables identified through the Bolasso method.

3.3 Subgroup Analysis Excluding Submissions with an Inappropriate Comparator

Excluding the 40 submissions with an inappropriate comparator had limited effects on the selection and modelled impact of variables, which supports the robustness of the Bolasso variable selection (Table 6). Only one variable (other than the excluded variable of having an inappropriate comparator) was no longer selected: ‘the RR/HR confidence intervals presented exceeded 1.5’. No new variables were selected, and the statistical significance (or lack thereof) of the variables was unchanged.

In the subgroup analysis, the potential for inferior efficacy had the greatest magnitude of effect of any variable in the model, with an AME of −0.14.

4 Discussion

4.1 Overview

This study aimed to provide insight into the breadth of use of CMA in Australia and to assess the influence of four variables derived from the requirements for CMA in the PBAC Methodology Guidelines. The analytical approach aimed to model the effect of these four theoretically justified variables while identifying and controlling for confounding variables.

Overall, none of the four variables was perfectly correlated with a negative recommendation, as the methodology guidelines would suggest. Descriptive statistics show that submissions that presented only unfavourable point estimates had a lower recommendation rate (54%) than submissions with a potential for inferior efficacy (61%). Only one of the four theoretically justified variables, the potential for inferior efficacy, was statistically significant in the fitted model. Further, the estimated effects of these four variables ranged from an inconsequential increase of 1 percentage point (the nominated noninferiority margin was exceeded) to a 12-percentage-point reduction (potential for inferior efficacy) in the likelihood of recommendation. In contrast, the methodology guidelines state that the clinical claim of noninferiority ‘needs to be well justified’ to use CMA.

The base model also suggested that having an appropriate comparator was the most important criterion, as the estimated probability of recommendation would otherwise be 56 percentage points lower. However, the subgroup analysis that excluded submissions with an inappropriate comparator revealed that the potential for inferior efficacy had the greatest effect among the remaining variables, albeit a modest 14-percentage-point decrease in the estimated probability of recommendation. Accordingly, this study suggests that PBAC recommendations for submissions that use CMA do not strictly align with the methodology guidelines, as evidenced by the finding that the potential for inferior efficacy only modestly reduced the likelihood of recommendation.

The PBAC recommendations result from a comprehensive evaluation that considers a broad range of evidentiary and contextual factors [2], with conflicts of interest managed [61]. While rejections recount specific issues, positive recommendations are less likely to explicitly cite the decisive elements. This study found that submissions that did not fully satisfy all four variables derived from the PBAC Methodology Guidelines still received positive recommendations. This finding is analogous to cases in which submissions present seemingly unfavourable point estimates yet receive positive recommendations, which may occur because confidence intervals indicate a range of potentially acceptable outcomes or because of broader contextual factors. This parallel illustrates the nuanced nature of recommendations, in which apparent limitations in submissions may be mitigated by other factors.

It is instructive to consider the implications of this study in relation to related bodies of literature. This study found that clinical evidence did not always meet the requirements specified in the PBAC Guidelines, which aligns with past reviews of submissions to the PBAC that found the quality of the supporting clinical evidence to be ‘poor fit for purpose’ [62, 63]. Prior studies have also highlighted that CMA is associated with a higher probability of success for submissions and a reduced time to approval [18]. Within this study, some variables identified by the Bolasso method were consistent with past assessments of revealed preference, namely direct versus indirect clinical evidence and cancer versus other disease areas [36, 64]. However, not all identified variables coincided with those from past reviews. This is to be expected, since this was the only assessment focused entirely on submissions that used CMA, and it took a more nuanced approach to the clinical evidence. For example, past reviews focused on the more holistic acceptance of clinical evidence [34, 37, 50] or on language related to the uncertainty of clinical evidence [39], whereas this study differentiated submissions based on suggestions of the potential for inferiority.

The use of CMA in Australia reflects both the challenges and the benefits of the approach. In practice, without evidence of superiority, the PBAC could not accept a price premium supported by cost-effectiveness analysis [26]. CMA enables patients to access medicines that would otherwise not be available. This gives clinicians more treatment options when attempting to maximise their patients’ health, such as when patients experience an adverse event, fail to respond to a medicine or prefer a medicine due to its mode or frequency of administration. Furthermore, resource allocation decisions must still be made, and an imperfect framework is preferable to none [65].

While this practice supports access to medicines, it diverges from the methodologically robust application of CMA established in the methodology guidelines. A failure to apply robust economic evaluation approaches could degrade health systems [15, 66], for example, by overpaying for inferior medicines at the expense of other medicines. Conversely, it could also lead to underpaying for potentially superior medicines.

This study suggests that it is unclear whether the current process effectively achieves its objectives. Given the significant costs involved in developing and evaluating a submission, relevant stakeholders, including the PBAC, the Government, clinicians and patients, should consider whether the current process delivers sufficient value and clarity of process and outcomes. Indeed, the ongoing Australian review of HTA methods may result in policy options that address some of the limitations of CMA [67, 68]. Evidence has suggested that the PBAC submission process is a bargaining process [25, 30, 34], so adopting a more streamlined process could be appropriate for some medicines and reduce resource requirements. Options that adopt a ‘proportionate appraisal pathway’ [68] would have to weigh the trade-off between using robust economic evaluation approaches and a process that more clearly and accurately communicates the assessment criteria.

4.2 Strengths and Limitations

This paper was the first review focused entirely on cost minimisation analysis submissions, which enabled more nuanced consideration of the recommendations. Additionally, this publication includes a detailed data extract that can support continued research and alternative analytical approaches for a range of research objectives.

There were several limitations to the analysis. First, the information required to undertake a comprehensive and independent analysis of adherence to the methodology guidelines is not publicly available, and PSDs may be subject to incomplete reporting or reporting bias, although the quality and consistency of PSDs, prepared by the Department of Health, appear to have improved over time. Further, the study did not include relevant (local and global) commercial factors that a pharmaceutical company must consider when implementing a global market access strategy. Second, while an effort was made to extract information consistent with the PBAC's interpretation of the evidence, this extraction was extensive and subjective. Its reliability was assessed by a second reviewer independently coding a 5% random sample, stratified by year. As noted in Sect. 2.3, initial concordance was 90%, and most discrepancies were subsequently resolved in favour of the initial coder. This process supported consistency and reliability in data extraction. Despite the subjectivity inherent in interpreting PSDs, the accuracy of the data was considered sufficient for the primary aim of the study. The underlying data are included as electronic supplements to allow for scrutiny and transparency [29]. Additionally, only information contained within the PSD under assessment was documented for each record, so records for the 95 resubmissions did not draw on information contained within prior submission(s) unless it was reproduced in the resubmission. In some instances, this meant that commentary on the potential for inferiority was not reiterated despite the same supporting trials being used. Third, the submissions themselves are subject to numerous considerations, and there may be an element of self-selection by sponsors submitting to the PBAC, where a sponsor may be aware of exceptional circumstances that would influence the PBAC recommendation. The recommendation rate for nonadherent submissions may therefore be artificially high due to exogenous or unobservable factors. For example, earlier interactions with the PBAC (e.g. before a resubmission) may have indicated a willingness to accept a claim of noninferiority despite the potential for inferiority. Finally, the fitted model was constructed to address the primary aim of this study and may not be suitable for other purposes, such as establishing a causal relationship between aspects of submissions and the likelihood of recommendation.

5 Conclusions

The existing literature on CMA argues that HTA may be better off if the approach were ‘dead’ and ‘carted off’, and no strong counterarguments have been published. However, this silence may not imply agreement: CMA continues to be used in Australia, and a pragmatic decision-maker might note that its use there strikes a balance between the robustness of evidence and providing access to medicines, consistent with the goals of HTA.

While the findings from this study highlight that the Australian practice of CMA does not strictly align with the theoretically robust requirements set out in the PBAC Methodology Guidelines, its historical application has provided the PBAC with a pragmatic framework that balances theory with patient access to medicines. Consequently, stakeholders should continue to assess whether this approach effectively delivers on its objectives, including consistency and transparency of process and the appropriateness of outcomes.

Notes

Exclusively included economic evaluations published on the Centre for Reviews and Dissemination (CRD) databases, which was funded by the United Kingdom’s Department of Health and the National Institute for Health Research (NIHR) between 1994 and 2015 and may not have reflected the use of CMA in Australia.

‘The assumption of noninferiority (or superiority), with respect to both effectiveness and safety, needs to be well justified for the cost-minimisation approach to be accepted’.

‘Select a noninferiority margin to assure that the proposed medicine is not inferior to the main comparator by an important difference…It is common to estimate an unimportant difference as less than a minimal clinically important difference… The assumption of noninferiority (or superiority), with respect to both effectiveness and safety, needs to be well justified for the cost minimisation approach to be accepted…Compare the least favourable tail of a 95% [confidence interval] with the noninferiority margin and determine whether the 'worse' result would be regarded as noninferior’.
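To make the quoted guideline rule concrete, the following Python sketch compares the least favourable limit of a 95% confidence interval for a treatment difference with a prespecified noninferiority margin. It is an illustration only, not the PBAC's or the authors' procedure: the function name `is_noninferior`, its parameters, and the normal-approximation confidence interval are assumptions introduced here.

```python
from statistics import NormalDist


def is_noninferior(diff: float, se: float, margin: float,
                   higher_is_better: bool = True, level: float = 0.95) -> bool:
    """Hypothetical helper: check noninferiority against a margin.

    diff   -- estimated difference (proposed medicine minus main comparator)
    se     -- standard error of diff (normal approximation is assumed)
    margin -- noninferiority margin, given as a positive number
    """
    # Two-sided critical value, about 1.96 for a 95% interval.
    z = NormalDist().inv_cdf(0.5 + level / 2)
    if higher_is_better:
        # For a benefit outcome, the least favourable tail is the lower limit.
        worst = diff - z * se
        return worst > -margin
    # For a harm outcome (e.g. adverse events), it is the upper limit.
    worst = diff + z * se
    return worst < margin


# Lower limit is about -0.079, inside the -0.10 margin: noninferior.
print(is_noninferior(diff=-0.02, se=0.03, margin=0.10))
# Lower limit is about -0.128, beyond the margin: inferiority not excluded.
print(is_noninferior(diff=-0.05, se=0.04, margin=0.10))
```

The sign convention is the key design choice: the "worse" result is whichever confidence limit points towards the proposed medicine performing worse, so the relevant bound flips between benefit and harm outcomes.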

‘Irrespective of the therapeutic claim, if the adverse effect profiles of a proposed medicine and its main comparator are significantly different in nature, it is unlikely that the cost-minimisation approach will suffice’.

The six factors identified were budget impact, ICER, ICER uncertainty, trust in the clinical evidence, severity of disease and whether the pharmaceutical had previously been considered.

In 2021, the PBAC submission categories were changed from major and minor to numbered categories (1 through 4). The definitions of categories 1 and 2 align with that of major submissions, so only these categories were assessed for 2021 and 2022 submissions.

References

Charlton V, et al. We need to talk about values: a proposed framework for the articulation of normative reasoning in health technology assessment. Health Econ Policy Law. 2023:1–21.

Australian Government Department of Health. Guidelines for preparing a submission to the Pharmaceutical Benefits Advisory Committee. Canberra; 2016.

Jarrow RA. Risk management models: construction, testing, usage. J Deriv. 2011;18(4):89–98.

O’Brien BJ, Briggs AH. Analysis of uncertainty in health care cost-effectiveness studies: an introduction to statistical issues and methods. Stat Methods Med Res. 2002;11(6):455–68.

Drummond MF. Studies in economic appraisal in health care. 1981.

Dakin H, Wordsworth S. Cost-minimisation analysis versus cost-effectiveness analysis, revisited. Health Econ. 2013;22(1):22–34.

Briggs AH, et al. Model parameter estimation and uncertainty analysis. Med Decis Making. 2012;32(5):722–32.

HajiAliAfzali H, Karnon J. Exploring structural uncertainty in model-based economic evaluations. Pharmacoeconomics. 2015;33(5):435–43.

Otten TM, et al. Comprehensive review of methods to assess uncertainty in health economic evaluations. Pharmacoeconomics. 2023;41(6):619–32.

Briggs AH, O’Brien BJ. The death of cost-minimization analysis? Health Econ. 2001;10(2):179–84.

Drummond MF, et al. Methods for the economic evaluation of health care programmes. Oxford: Oxford University Press; 2005.

Shields GE, et al. Out of date or best before? A commentary on the relevance of economic evaluations over time. Pharmacoeconomics. 2022;40(3):249–56.

NICE Decision Support Unit. The use of cost minimisation analysis for the appraisal of health technologies. 2019 [cited November 2020]. http://nicedsu.org.uk/cost-minimisation/.

Raymakers AJN, et al. ‘What is dead may never die’—cost-minimization analysis in the context of medical devices in Europe. Health Policy Technol. 2018;7(3):227–9.

Eckermann S. Avoiding Frankenstein’s monster and partial analysis problems: robustly synthesising, translating and extrapolating evidence. In: Health economics from theory to practice. Berlin: Springer; 2017. p. 57–89.

Hirst A, et al. PHP244—cost minimisation analysis: when and where? A review of HTA guidance on cost minimisation analysis. Value Health. 2016;19(7):A482.

Marshall J, et al. Trends in the use of cost-minimization analysis in Economic Assessments submitted to the SMC. Value Health. 2015;18(3):A94.

Lybrand S, Wonder M. Analysis of PBAC submissions and outcomes for medicines (2010–2018). Int J Technol Assess Health Care. 2020;36(3):224–31.

Robinson R. Costs and cost-minimisation analysis. BMJ. 1993;307(6906):726–8.

Drummond MF, et al. Methods for the economic evaluation of health care programmes. Oxford: Oxford University Press; 2015.

Haycox A. What is cost-minimisation analysis? In: Arnold RJG, editor. Pharmacoeconomics from theory to practice. 2020.

Wailoo A, Dixon S. The use of cost minimisation analysis for the appraisal of health technologies. Report by the NICE Decision Support Unit. University of Sheffield; 2019.

Span MM, et al. Noninferiority testing in cost-minimization studies: practical issues concerning power analysis. Int J Technol Assess Health Care. 2006;22(2):266.

Newby D, Hill S. Use of pharmacoeconomics in prescribing research. Part 2: cost-minimization analysis—when are two therapies equal? J Clin Pharm Therap. 2003;28(2):145–50.

Sellars M, et al. Making recommendations to subsidize new health technologies in Australia: a qualitative study of decision-makers’ perspectives on committee processes. Health Policy. 2024;139:104963.

Commonwealth of Australia. National Health Act 1953.

Committee for Proprietary Medicinal Products (CPMP). Points to consider on switching between superiority and non-inferiority. Br J Clin Pharmacol. 2001;52(3):223.

Taylor C, Jan S. Economic evaluation of medicines. Aust Prescr. 2017;40(2):76–8.

Tirrell Z, Norman A, Hoyle M, Lybrand S, Parkinson B. The use of the cost minimisation approach in Health Technology Assessment submissions to the Australian Pharmaceutical Benefits Advisory Committee. Macquarie University; 2024. https://doi.org/10.25949/26308576

Ghijben P, et al. Revealed and stated preferences of decision makers for priority setting in health technology assessment: a systematic review. Pharmacoeconomics. 2018;36(3):323–40.

Australian Government Department of Health. Procedure guidance for listing medicines on the Pharmaceutical Benefits Scheme Version 1.9. 2020. Accessed Nov 2020.

Australian Government Department of Health. Public summary documents explained. Pharmaceutical Benefits Scheme (PBS); 2020. https://www.pbs.gov.au/pbs/industry/listing/elements/pbac-meetings/psd/pbac-psd-psd-explained.

Flowers M, Lybrand S, Wonder M. Analysis of sponsor hearings on health technology assessment decision making. Aust Health Rev. 2020;44(2):258–62.

Harris A, Li JJ, Yong K. What can we expect from value-based funding of medicines? A retrospective study. Pharmacoeconomics. 2016;34(4):393–402.

Chim L, et al. Are cancer drugs less likely to be recommended for listing by the pharmaceutical benefits advisory committee in Australia? Pharmacoeconomics. 2010;28(6):463–75.

Mauskopf J, et al. Relationship between financial impact and coverage of drugs in Australia. Int J Technol Assess Health Care. 2013;29(1):92–100.

Karikios DJ, et al. Is it all about price? Why requests for government subsidy of anticancer drugs were rejected in Australia. Intern Med J. 2017;47(4):400–7.

Whitty JA, Scuffham PA, Rundle-Thiele SR. Public and decision maker stated preferences for pharmaceutical subsidy decisions. Appl Health Econ Health Policy. 2011;9.

Haque MM, et al. Factors associated with Pharmaceutical Benefits Advisory Committee decisions for listing medicines for diabetes and its associated complications. Aust Health Rev. 2022;47(2):139–47.

Gao Y, Laka M, Merlin T. Is the quality of evidence in health technology assessment deteriorating over time? A case study on cancer drugs in Australia. Int J Technol Assess Health Care. 2023;39(1):e28.

Pharmaceutical Benefits Scheme (PBS). PBAC outcomes. 2023 [cited 2023]. https://www.pbs.gov.au/info/industry/listing/elements/pbac-meetings/pbac-outcomes.

Page MJ, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Int J Surg. 2021;88:105906.

Schmitz C. LimeSurvey: an open source survey tool. Hamburg, Germany: LimeSurvey Project; 2012. http://www.limesurvey.org.

Python Software Foundation. Python language reference, version 3.9.7. Wilmington, DE: Python Software Foundation; 2023.

Virtanen P, et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat Methods. 2020;17(3):261–72.

Grinberg M. Flask web development: developing web applications with python. O'Reilly Media, Inc. 2018.

Myers J, Copeland R. Essential SQLAlchemy: mapping python to databases. O'Reilly Media, Inc. 2015.

McKinney W. pandas: a foundational Python library for data analysis and statistics. Python High Perform Sci Comput. 2011;14(9):1–9.

Schmitz S, et al. Identifying and revealing the importance of decision-making criteria for health technology assessment: a retrospective analysis of reimbursement recommendations in Ireland. Pharmacoeconomics. 2016;34(9):925–37.

Harris AH, et al. The role of value for money in public insurance coverage decisions for drugs in Australia: a retrospective analysis 1994–2004. Med Decis Making. 2008;28(5):713–22.

RStudio Team. RStudio: integrated development environment for R. 2020.

Huan X, Caramanis C, Mannor S. Sparse algorithms are not stable: a no-free-lunch theorem. IEEE Trans Pattern Anal Mach Intell. 2012;34(1):187–93.

Ravikumar P, Wainwright MJ, Lafferty JD. High-dimensional Ising model selection using ℓ1-regularized logistic regression. Ann Stat. 2010;38(3):1287–319.

Zhao P, Yu B. On model selection consistency of Lasso. J Mach Learn Res. 2006;7:2541–63.

Bach F. Model-consistent sparse estimation through the bootstrap. arXiv preprint. 2009.

Meinshausen N, Bühlmann P. Stability selection. J R Stat Soc Ser B (Stat Methodol). 2010;72(4):417–73.

Zou H, Hastie T. Regularization and variable selection via the elastic net. J R Stat Soc Ser B (Stat Methodol). 2005;67(2):301–20.

Wang S, et al. Random lasso. Ann Appl Stat. 2011;5(1):468–85.

Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. J Stat Softw. 2010;33(1):1.

Leeper TJ, et al. Package ‘margins’: marginal effects for model objects. CRAN; 2021.

Department of Health and Aged Care. Managing conflicts of interest. Procedure guidance for listing medicines on the Pharmaceutical Benefits Scheme; 2022. https://www.pbs.gov.au/info/industry/listing/procedure-guidance/3-confidentiality-transparency/3-2-managing-conflicts-of-interest.

Wonder M, Dunlop S. Assessment of the quality of the clinical evidence in submissions to the Australian pharmaceutical benefits advisory committee: fit for purpose? Value Health. 2015;18(4):467–76.

Hill SR, Mitchell AS, Henry DA. Problems with the interpretation of pharmacoeconomic analyses a review of submissions to the Australian pharmaceutical benefits scheme. JAMA. 2000;283(16):2116–21.

Cerri KH, Knapp M, Fernandez J-L. Untangling the complexity of funding recommendations: a comparative analysis of health technology assessment outcomes in four European countries. Pharm Med. 2015;29:341–59.

Weinstein MC, Stason WB. Foundations of cost-effectiveness analysis for health and medical practices. N Engl J Med. 1977;296(13):716–21.

Brousselle A, Lessard C. Economic evaluation to inform health care decision-making: promise, pitfalls and a proposal for an alternative path. Soc Sci Med. 2011;72(6):832–9.

The New frontier—delivering better health for all Australians. Inquiry into approval processes for new drugs and novel medical technologies in Australia. 2021.

Health Technology Assessment Policy and Methods Review Reference Committee. Health technology assessment policy and methods review: consultation options paper. Australia: Department of Health and Aged Care; 2024.

Acknowledgements

The authors thank Yuanyuan Gu, who provided statistical advice, and Varinder Jeet, who provided advice on a draft of the manuscript.

Ethics declarations

Funding

Open access funding enabled and organized by CAUL and its Member Institutions. Z.T. was supported by an Australian Government Research Training Program Scholarship and a Macquarie University Australian Pharmaceutical Scholarship during his research candidature at Macquarie University. Five pharmaceutical companies contributed to the Macquarie University Australian Pharmaceutical Scholarship: Amgen Australia, Janssen Australia, MSD Australia, Pfizer Australia, and Roche Australia. No sources of financial assistance were received by S.L., A.N., M.H., and B.P. for this study.

Conflicts of interest

A.N., M.H., and B.P. are evaluators of submissions to the Pharmaceutical Benefits Advisory Committee (PBAC) for the Australian Government. S.L. is a full-time employee of Amgen Inc. The views expressed are the authors’ and do not reflect the views of the PBAC, the Australian Government, or Amgen Inc. Z.T. has no (non-funding) conflicts of interest to declare.

Availability of data

The data used in this analysis are included as an electronic supplement [29]. The Department of Health and Aged Care owns the copyright in the Pharmaceutical Benefits Advisory Committee (PBAC) Public Summary Documents (PSDs) and Outcomes. These materials are copyright Commonwealth of Australia, used under license.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Code availability

The code used in this study can be made available upon request.

Author contributions

M.H. and Z.T. provided the initial concept of the paper, Z.T. and B.P. refined the scope of the research, and Z.T. gathered the data and conducted the analysis. A.N. validated a sample of the data. All authors contributed to the draft and final manuscript.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Tirrell, Z., Norman, A., Hoyle, M. et al. Bring Out Your Dead: A Review of the Cost Minimisation Approach in Health Technology Assessment Submissions to the Australian Pharmaceutical Benefits Advisory Committee. PharmacoEconomics (2024). https://doi.org/10.1007/s40273-024-01420-9

DOI: https://doi.org/10.1007/s40273-024-01420-9