Abstract

Introduction

Maculopathy in highly myopic eyes is complex, and its clinical diagnosis is labor-intensive and subjective. To classify pathologic myopia (PM) simply and quickly, a deep learning algorithm was developed and assessed for screening myopic maculopathy lesions on color fundus photographs.

Methods

This study included 10,347 ocular fundus photographs from 7606 participants. Of these photographs, 8210 were used for training and validation, and 2137 for external testing. A deep learning algorithm was trained, validated, and externally tested to screen myopic maculopathy which was classified into four categories: normal or mild tessellated fundus, severe tessellated fundus, early-stage PM, and advanced-stage PM. The area under the precision–recall curve, the area under the receiver operating characteristic curve (AUC), sensitivity, specificity, accuracy, and Cohen’s kappa were calculated and compared with those of retina specialists.

Results

In the validation data set, the model detected normal or mild tessellated fundus, severe tessellated fundus, early-stage PM, and advanced-stage PM with AUCs of 0.98, 0.95, 0.99, and 1.00, respectively; while in the external-testing data set of 2137 photographs, the model had AUCs of 0.99, 0.96, 0.98, and 1.00, respectively.

Conclusions

We developed a deep learning model for detection and classification of myopic maculopathy based on fundus photographs. Our model achieved high sensitivities, specificities, and reliable Cohen’s kappa, compared with those of attending ophthalmologists.


Maculopathy in highly myopic eyes is complex, and its clinical diagnosis is labor-intensive and subjective.

We developed an accurate and reliable deep learning model based on color fundus images to screen myopic maculopathy.

The artificial intelligence system could detect and classify normal or mild tessellated fundus, severe tessellated fundus, early-stage pathologic myopia, and advanced-stage pathologic myopia.

The model achieved high sensitivities, specificities, and reliable Cohen’s kappa compared with those of attending ophthalmologists.

The artificial intelligence system was designed for easy integration into a clinical tool that could be applied in large-scale myopia screening.

Introduction

Pathologic myopia (PM) is a major cause of legal blindness worldwide, and the prevalence of myopia-related complications is expected to continue increasing, presenting a great challenge for ophthalmologists [1,2,3,4]. In East and Southeast Asia, the prevalence of myopia and high myopia in young adults is around 80–90% and 10–20%, respectively [5]. In China, the prevalence of myopia in 1995, 2000, 2005, 2010, and 2014 was 35.9%, 41.5%, 48.7%, 57.3%, and 57.1%, respectively, showing a gradual upward trend [6]. According to the META-PM (meta analyses of pathologic myopia) classification system proposed by Ohno-Matsui et al., PM is defined as “eyes having equal to or more serious than diffuse choroidal atrophy” or “eyes having lacquer cracks, myopic choroidal neovascularization (CNV) or Fuchs spot” [7]. However, manual interpretation of fundus photographs is subject to variability among clinicians because the META-PM classification system lacks clear definitions of several morphological characteristics.

Though tessellation is a common characteristic of myopia, it is occasionally also an early sign of chorioretinal atrophy or staphyloma development [8]. The higher the degree of fundus tessellation, the thinner the subfoveal choroid [9,10,11]. Yan et al. reported that a higher degree of fundus tessellation was significantly associated with longer axial length, more myopic refractive error, and worse best-corrected visual acuity (BCVA) [12]. These reports indicate that severe fundus tessellation might be the first indicator of the transition from myopia to PM. Foo et al. also demonstrated that tessellated fundus had good predictive value for incident myopic macular degeneration [13]. Therefore, screening for severe fundus tessellation, defined as grade 2 or higher in the grading proposed by Yan et al., helps to detect people at high risk of PM [12]. Once signs of PM are present, visual acuity may be gradually impaired. According to recent research, patients with severe PM, defined as patchy chorioretinal atrophy or worse, or foveal detachment and/or active CNV, had significantly worse BCVA than those with common PM [14]. In contrast, diffuse atrophy and lacquer cracks (LCs), which cause mild vision impairment and progress slowly, were considered early-stage PM [15, 16]. Given the complexity of maculopathy in highly myopic eyes, a simplified PM classification model would facilitate early detection of populations at high risk of PM and stratified management of PM. However, screening the large number of patients with myopia is a huge workload for ophthalmologists.

Fortunately, with the rapid development of artificial intelligence (AI) technologies, AI could provide a potential solution for the increasing burden of myopia, owing to its ability to analyze a tremendous amount of data. In ophthalmology, deep learning systems have shown exciting prospects in the detection of papilledema, glaucomatous optic neuropathy, and diabetic retinopathy based on color fundus photographs [17,18,19,20]. Because the classification and definition system of PM is complex, applying deep learning technology to PM lesion screening remains a challenge. As evidenced by Tan et al., Lu et al., and Wu et al., AI models based on fundus images have achieved good performance in diagnosing and classifying high myopia [21,22,23,24]. However, the value of AI for screening severe tessellated fundus in patients with high myopia has not been fully explored. On the basis of our classification system, it is feasible to design an AI algorithm that automatically detects people at high risk of PM and identifies PM.

This study aimed to develop and train the deep learning system to automatically detect normal or mild tessellated fundus, severe tessellated fundus, early-stage PM, and advanced-stage PM using a large data set of color retinal fundus images obtained from the ophthalmic clinics of the hospitals.

Methods

Data Acquisition

In this study, the use of retinal fundus images was approved by the Ethics Committee of Shanghai General Hospital, Shanghai Jiao Tong University School of Medicine, and adhered to the tenets of the Declaration of Helsinki (Approval ID: No. 2015KY156). Written informed consent forms were obtained from all participants.

The 45° color fundus photographs centered on the macula were collected from 6738 participants at Shanghai Eye Disease Prevention and Treatment Center (SEDPTC) in China from 2016 to 2018, using the TOPCON DRI Triton. Images in which the fovea was not fully visible or in which over 50% of the total area was obscured were excluded. Finally, 8210 images with a visible macula from 5778 patients were included for model development. On the basis of the patient’s code number, these images were divided into a training data set (90% of the images) and a validation data set (10% of the images).
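One way to read "divided on the basis of the patient's code number" is a split in which all images of a patient land on the same side. The sketch below illustrates that idea only; the function name, the shuffling with a fixed seed, and the greedy filling of the validation set are assumptions, not the authors' procedure.

```python
import random
from collections import defaultdict


def split_by_patient(image_to_patient, val_fraction=0.1, seed=0):
    """Split image IDs into train/validation lists without sharing patients.

    image_to_patient maps a (hypothetical) image ID to a patient code.
    """
    by_patient = defaultdict(list)
    for image_id, patient_id in image_to_patient.items():
        by_patient[patient_id].append(image_id)

    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)

    target = val_fraction * len(image_to_patient)
    train, val = [], []
    for patient in patients:
        # Fill the validation set first, one whole patient at a time.
        bucket = val if len(val) < target else train
        bucket.extend(by_patient[patient])
    return train, val
```

Keeping both eyes of a patient in the same subset avoids near-duplicate images leaking from training into validation, which would otherwise inflate validation metrics.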

To evaluate model performance, the algorithm was applied to another data set collected from SEDPTC and Shanghai General Hospital (SGH), which consisted of 2137 macula-centered fundus photographs from 1828 participants or patients.

Classification and Labeling of Myopic Maculopathy

All fundus photographs were independently classified and labeled by three retina specialists (YF, WW, and LY). When disagreements occurred, the final diagnosis was confirmed through a group discussion among the retina specialists and another senior expert (XX). Diagnoses made by three attending ophthalmologists (RW, LY, and DS) were recorded to compare with AI performance. The META-PM classification system was slightly modified on the basis of the risk of progression and impact on vision [8, 14, 25, 26]. In accordance with Yan et al., severe tessellated fundus was defined as equal to or more serious than grade 2 in this study [12]. Therefore, the images were classified into four groups: (1) normal or mild tessellated fundus, (2) severe tessellated fundus, (3) early-stage PM, and (4) advanced-stage PM (Fig. 1). Details are illustrated in Table 1.

Image Processing

Original fundus photographs were preprocessed to make lesions more prominent and thereby improve classification accuracy [27, 28] (Fig. 2). Image preprocessing comprised the following modules: region of interest (ROI) interception, data denoising, augmentation, and normalization (Supplementary Fig. S1).

In the ROI interception module, we extracted the effective area by removing excessive black margins, which may interfere with the identification of key features. First, we converted the RGB images to grayscale images, in which background pixels have a value of zero and pixels in the effective area have values greater than zero. Then, we used OpenCV tools to traverse the pixels and locate the effective area in the grayscale images. Finally, the RGB images were cropped to the location of the effective area.
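The cropping step above can be sketched as follows. This is an illustration under stated assumptions: it uses NumPy rather than the OpenCV tools mentioned in the text, approximates the grayscale conversion with a plain channel average, and the function name is hypothetical.

```python
import numpy as np


def crop_to_effective_area(rgb):
    """Crop an H x W x 3 uint8 image to the bounding box of non-black pixels."""
    # Channel average as a stand-in for an RGB-to-grayscale conversion.
    gray = rgb.astype(np.float32).mean(axis=2)
    mask = gray > 0  # background is assumed to be exactly zero
    if not mask.any():
        return rgb  # nothing but background; leave the image unchanged
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return rgb[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
```

In real photographs the margin is often near-black rather than exactly zero, so a small positive threshold may be needed in place of `gray > 0`.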

In the data denoising module, an unsharp masking (USM) filter was applied to the cropped images to reduce noise interference during imaging according to the following formula [29]: \(I_{\text{O}} = a \cdot I_{\text{In}} + b \cdot G(\sigma) * I_{\text{In}} + c\), where \(I_{\text{In}}\) represents the input image, \(I_{\text{O}}\) represents the standardized output image, \(G(\sigma)\) is a Gaussian filter with standard deviation \(\sigma\), and \(*\) represents the convolution operator. Parameters \(a\), \(b\), \(c\), and \(\sigma\) were set to 4, 3.5, 128, and 30, respectively, on the basis of experience. The images were resampled to a resolution of 672 × 672 according to the code on GitHub (source code is at https://github.com/tensorflow/tpu/blob/master/models/official/efficientnet/efficientnet_builder.py).
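The formula above can be sketched in NumPy as a weighted sum of the image and its Gaussian-blurred copy. Two assumptions are made loudly here: the text lists the weight on the blurred term as 3.5, whereas unsharp masking conventionally subtracts the blurred image, so the sketch defaults to b = −3.5; and the blur (kernel truncated at 3σ, edge padding) and the function names are illustrative choices, not the authors' implementation.

```python
import numpy as np


def gaussian_kernel(sigma):
    """1-D Gaussian kernel truncated at 3 sigma and normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(channel, sigma):
    """Separable Gaussian blur of a 2-D array, using edge padding."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(channel, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def unsharp_mask(image, a=4.0, b=-3.5, c=128.0, sigma=30.0):
    """Compute a*I + b*(G(sigma) * I) + c per channel; b < 0 is an assumption."""
    img = image.astype(np.float64)
    blurred = np.stack(
        [gaussian_blur(img[..., ch], sigma) for ch in range(img.shape[-1])], axis=-1
    )
    out = a * img + b * blurred + c
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

With a negative b, smooth regions are pushed toward a mid-gray offset while local detail such as vessels and atrophy borders is amplified.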

In the data augmentation module, to increase the diversity of the data set and reduce the chance of overfitting [30], horizontal and vertical flipping, rotation of up to 60°, brightness shifts within the range of 0.8–1.2, and contrast shifts within the range of 0.9–1.1 were randomly applied to the images in the training data set, which increased its size to five times the original.
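A minimal sketch of how such random parameters might be drawn is shown below. The ranges come from the text; the flip probability of 0.5, the symmetric rotation range, the order in which contrast and brightness are applied, and the reading of "five times" as the original plus four augmented copies are all assumptions.

```python
import random


def sample_augmentation(rng):
    """Draw one random set of augmentation parameters within the stated ranges."""
    return {
        "hflip": rng.random() < 0.5,            # assumed probability
        "vflip": rng.random() < 0.5,            # assumed probability
        "rotation_deg": rng.uniform(-60.0, 60.0),
        "brightness": rng.uniform(0.8, 1.2),
        "contrast": rng.uniform(0.9, 1.1),
    }


def adjust_pixel(value, brightness, contrast, mean=0.5):
    """Apply contrast, then brightness, to a pixel value in [0, 1]."""
    out = (value - mean) * contrast + mean
    out *= brightness
    return min(1.0, max(0.0, out))


def augmented_plan(image_ids, copies=4, seed=0):
    """Pair each image with None (original) plus `copies` random parameter sets."""
    rng = random.Random(seed)
    plan = [(image_id, None) for image_id in image_ids]
    for image_id in image_ids:
        for _ in range(copies):
            plan.append((image_id, sample_augmentation(rng)))
    return plan
```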

In the data normalization module, the pixel values of the augmented images were normalized to the range of 0–1. Then, z-score standardization was applied to each input image before it was passed to the deep learning model.
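The two steps can be illustrated on a flat list of pixel values. The sketch assumes 8-bit input and per-image statistics; the text does not say whether the mean and standard deviation were computed per image or over the whole data set, and the function name is hypothetical.

```python
import statistics


def normalize_and_standardize(pixels):
    """Scale 8-bit pixel values to [0, 1], then apply a z-score transform."""
    scaled = [p / 255.0 for p in pixels]
    mean = statistics.fmean(scaled)
    std = statistics.pstdev(scaled)
    if std == 0:
        return [0.0 for _ in scaled]  # constant image: avoid division by zero
    return [(p - mean) / std for p in scaled]
```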

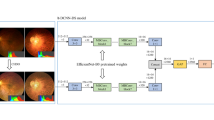

Deep Learning Algorithm Development

Our training platform was implemented in the PyTorch framework with Python 3.6 and CUDA 10.0. The training equipment comprised a 2.60 GHz Intel(R) CPU and a Tesla V100-SXM2 GPU. The EfficientNet-B8 architecture, a convolutional neural network suitable for large input images, was adopted [31]. The EfficientNet-B8 model was initialized by transfer learning from weights pretrained on ImageNet [32]. We then replaced the final classification layers of the network and trained it further on our data set.

Cross entropy was used as the objective function during training. Training was performed with an initial learning rate of 1e−2, a weight decay coefficient for L2 regularization of 1e−5, and a dropout rate of 0.5 for the output layer. The stochastic gradient descent (SGD) optimizer was run for up to 80 epochs on the training data set, and the model was evaluated on the validation set after each epoch to determine the final weights. To reduce overfitting, an early stopping strategy and the sharpness-aware minimization (SAM) optimizer were applied. Training was stopped if the validation loss did not improve over 20 consecutive epochs. The model state with the lowest validation loss was saved as the final state of the model.
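The stopping rule described above (at most 80 epochs, a patience of 20 epochs, keeping the state with the lowest validation loss) can be sketched independently of any framework. The function below takes a precomputed list of validation losses purely for illustration; its name and return values are assumptions.

```python
def run_with_early_stopping(val_losses, max_epochs=80, patience=20):
    """Return (best_epoch, best_loss, last_epoch_run) for a sequence of losses."""
    best_loss = float("inf")
    best_epoch = -1
    stale = 0          # epochs since the last improvement
    last_epoch = -1
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        last_epoch = epoch
        if loss < best_loss:
            # In a real training loop the model checkpoint would be saved here.
            best_loss, best_epoch, stale = loss, epoch, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best_epoch, best_loss, last_epoch
```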

Statistical Analysis

To determine the model performance, receiver operating characteristic (ROC) curves were generated and analyzed with Python software. From the outputs of the classification model, the area under the precision–recall (P–R) curve (the average precision, AP), the area under the ROC curve (AUC), sensitivity, specificity, and overall accuracy were evaluated for the four groups.
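For a multiclass model, per-category sensitivity and specificity are obtained by treating one category as positive and all others as negative. The sketch below shows that calculation, together with overall accuracy and Cohen's kappa, from a confusion matrix whose rows are the reference labels. The function names are hypothetical, and no counts from the study are used.

```python
def one_vs_rest_metrics(confusion, k):
    """Sensitivity and specificity for class k (rows = truth, columns = prediction)."""
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(row[k] for row in confusion) - tp
    tn = total - tp - fn - fp
    return tp / (tp + fn), tn / (tn + fp)


def accuracy(confusion):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in confusion)
    return sum(confusion[i][i] for i in range(len(confusion))) / total


def cohen_kappa(confusion):
    """Agreement between truth and prediction, corrected for chance agreement."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = accuracy(confusion)
    expected = sum(
        sum(confusion[i]) * sum(row[i] for row in confusion) for i in range(n)
    ) / total ** 2
    return (observed - expected) / (1 - expected)
```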

Results

Characteristics of the Data Sets

The deep learning system was trained and validated on 8210 fundus photographs collected from 5778 participants (59.00% with photographs for both eyes; mean age 51.36 years; 60.00% female), including 4920 (59.93%) normal or mild tessellated fundus images, 2110 (25.70%) severe tessellated fundus images, 870 (10.60%) early-stage PM images, and 310 (3.78%) advanced-stage PM images. Of the 8210 fundus photographs, 10% were randomly selected for internal validation. A separate set of 2137 photographs, including 1053 (49.28%) normal or mild tessellated fundus images, 405 (18.95%) severe tessellated fundus images, 406 (19.00%) early-stage PM images, and 273 (12.77%) advanced-stage PM images, was used for externally testing the performance of the deep learning system (Table 2).

The characteristics of the eyes are presented in Table 3. Compared with individuals without PM, patients with PM had a significantly longer axial length (P < 0.001), significantly worse BCVA (P < 0.001), and a significantly higher refractive error (− 10.17 D vs. − 0.70 D, − 9.17 D vs. − 0.93 D, and − 10.23 D vs. − 0.60 D in the training, validation, and testing data sets, respectively; P < 0.001). Consistent with a previous study, patients with severe tessellated fundus had a significantly longer axial length (P < 0.001), worse BCVA (P < 0.001), and a higher refractive error (− 3.54 D vs. − 0.70 D, − 3.30 D vs. − 0.93 D, and − 3.15 D vs. − 0.60 D in the training, validation, and testing data sets, respectively; P < 0.001). Moreover, clinical features showed no significant difference between the training set and the internal validation set, which indicated that the two sets were homogeneous.

Classification Performance in the Validation Data Set

In the validation data set (Table 4), the deep learning system discriminated normal or mild tessellated fundus from all the other types with an AUC of 0.98, a sensitivity of 93.10%, and a specificity of 97.60%. The deep learning system discriminated severe tessellated fundus from all the other types with an AUC of 0.95, a sensitivity of 92.90%, and a specificity of 93.00%. The system showed a sensitivity of 90.80% and a specificity of 98.90%, with an AUC of 0.99, for screening early-stage PM from all the other types. Meanwhile, it differentiated advanced-stage PM from all the other types with a sensitivity of 96.80%, a specificity of 99.90%, and an AUC of 1.00. The overall accuracy of the model was 92.90%.

Classification Performance in the External Testing Data Set

The system was further applied to an external testing data set to assess its generalizability. Similar to the results from the validation data set, the system discriminated normal or mild tessellated fundus, severe tessellated fundus, early-stage PM, and advanced-stage PM with average precisions of 0.99, 0.87, 0.94, and 0.97, sensitivities of 92.00%, 92.60%, 88.20%, and 94.90%, and specificities of 98.60%, 93.10%, 98.20%, and 99.50%, respectively; the areas under the ROC curves were 0.99, 0.96, 0.98, and 1.00, respectively (Fig. 3a). Because the data sets were imbalanced, P–R curves were used to measure the precision–recall trade-off of the model (Fig. 3b). The overall accuracy of the deep learning system was 91.80%.

Fig. 3 Performance of the deep learning model in the external testing data set using receiver operating characteristic (ROC) curves and precision–recall (P–R) curves. The external testing data set included ocular fundus photographs from SEDPTC and SGH. a ROC curves for the testing data set among the four categories. The area under each ROC curve is presented as AUC. b P–R curves for the testing data set among the four categories. Average precision value (AP) was defined as the area under the P–R curve
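As a minimal sketch of how the two areas can be computed for one category against the rest, the code below uses the pairwise-ranking definition of AUC and a step-wise summation for AP. The step-wise AP is a common convention assumed here; the text does not state how the area under the P–R curve was integrated, and tied scores are not given special handling in the AP function.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Mean of the precision values at the rank of each positive sample."""
    ranked = sorted(zip(scores, labels), key=lambda t: -t[0])
    n_pos = sum(labels)
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    return total / n_pos
```

Because AP depends on the proportion of positives while AUC does not, a rare category can show a high AUC together with a noticeably lower AP.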

Meanwhile, the photographs of the external testing data set were also independently graded by three attending ophthalmologists, and the sensitivity and specificity were compared with those from the deep learning system. As illustrated in Table 5, the system showed an equal or even better sensitivity than the attending ophthalmologists, especially for discriminating severe tessellated fundus and early-stage PM, showing a significantly higher sensitivity at a similar specificity. In addition, the mean overall accuracy of the attending ophthalmologists was 90.07% (range 89.40–91.20%), which was lower than the deep learning model.

Visualizing the Prediction Process of Deep Learning System

The prediction process of the deep learning system was visualized with class activation maps (CAMs). As shown in Fig. 4, the highlighted areas were consistent with the regions of tessellated fundus, diffuse atrophy, and patchy atrophy, indicating that the system had learned generalized characteristics of tessellation, early-stage PM, and advanced-stage PM, respectively.

Discussion

A deep learning algorithm that can accurately screen and assess myopic maculopathy could provide significant benefits: it would make screening more accessible and affordable for a large at-risk population, improving access to care and substantially decreasing global costs, particularly in remote and underserved communities. In particular, following the outbreak of COVID-19 in 2019, remote medical systems will become more important [33, 34]. An AI-integrated telemedicine platform will be a new direction for myopia healthcare in the post-COVID-19 period [35]. In this study, we developed an effective deep learning model using EfficientNet-B8 based on 8210 color fundus photographs and demonstrated its potential in screening myopic maculopathy. The AI model showed excellent performance in classifying normal fundus, severe tessellation, early-stage PM, and advanced-stage PM. In particular, its sensitivity in classifying severe tessellation and early-stage PM was higher than that of manual classification by attending ophthalmologists.

Previous studies have shown that AI algorithms using deep learning neural networks can be applied for screening diabetic retinopathy, age-related macular degeneration, glaucoma, and papilledema [17, 19, 36, 37]. The Google team demonstrated that a deep learning system extracting information from fundus photographs could be used to estimate refractive error [38], which suggested that fundus images carry information on refractive power. Our study also showed that patients with PM had a significantly higher refractive error than individuals without PM, as did patients with severe tessellated fundus compared with normal individuals (Table 3). Recently, several automatic systems for detecting PM have also been reported. Devda and Eswari developed a deep learning method with a convolutional neural network for detecting pathologic myopia [39]. Their work showed satisfactory performance in classification and segmentation of atrophy lesions. However, their system was developed on public databases, the training and testing data sets were relatively small, and authoritative criteria for diagnosing PM were lacking. In our work, a large data set of 8210 color fundus photographs was used to develop the algorithm. Compared with public databases, real-world data sets offer more data complexity and original disease information. Du et al. also developed a deep learning algorithm to categorize myopic maculopathy automatically on the basis of the META-PM categorizing system [40]. Compared with their system, our training data set was larger. In addition, Lu et al. designed deep learning systems with excellent performance to detect PM and myopic macular lesions according to the META-PM classification system [22, 23]. Compared with their research, severe tessellated fundus was added to our classification system in order to detect populations at high risk of PM promptly.

Tessellated fundus is generally one of the earliest signs of myopia and does not impair central vision. However, Fang et al. reported that progressive and continuous thinning of the choroid was associated with progression to tessellation and diffuse chorioretinal atrophy [16]. Yan et al. also demonstrated that the higher the degree of fundus tessellation, the thinner the subfoveal choroid [12]. Cheng et al. demonstrated that the grade of fundus tessellation was associated with choroidal thickness and axial length in children and adolescents [41]. Moreover, similarities were found in the distribution pattern of choroidal thinning between tessellated fundus and other lesions of myopic maculopathy [16]. Foo et al. also demonstrated that tessellated fundus had good predictive value for incident myopic macular degeneration [13]. These findings indicate that tessellation might be the first sign that myopia is becoming pathologic. In addition, it has been reported that diffuse atrophy in childhood can develop into advanced myopic chorioretinal atrophy in later life, and that these lesions have usually progressed from severe fundus tessellation [42]. Moreover, Kim et al. showed that tessellated fundus was related to myopic regression after corneal refractive surgery, which indicated that tessellated fundus is associated with a myopic shift [43]. Therefore, discriminating severe fundus tessellation from common myopia is important for individuals with myopia, especially those with high myopia, and the follow-up frequency of patients with severe tessellated fundus can be increased.

Moreover, to improve the screening efficiency for the population at high risk, the classification of myopic maculopathy lesions was simplified in our work according to the degree of vision impairment. Ruiz-Medrano et al. demonstrated that people with patchy chorioretinal atrophy or worse, or with foveal detachment and/or active CNV, had worse visual acuity than those with common PM [14]. In addition, 92.70–100% of eyes with patchy atrophy, myopic CNV, or macular atrophy showed progression and were associated with significant vision impairment in a 10-year follow-up study [16, 25]. Therefore, these lesions were classified as advanced-stage PM in the present study (Table 1). In addition to timely treatment, vision rehabilitation training and community management of individuals with low vision are recommended for those diagnosed with advanced-stage PM. Because they cause only mild impairment of central vision, diffuse atrophy and LCs alone were categorized as early-stage PM in the present study. Li et al. reported that half of the participants with diffuse chorioretinal atrophy showed progression during a 4-year follow-up study, manifested as enlargement of existing atrophy and newly formed diffuse chorioretinal atrophy [44]. Close follow-up is recommended for individuals diagnosed with early-stage PM.

In addition, our study involved the following technical optimizations. To overcome difficulties due to complicated manifestations such as atypical lesions, coexisting comorbidities, and posterior staphyloma, a channel attention module was added to suppress unnecessary channels, and a spatial attention module was added to capture the most informative regions of the feature maps. Moreover, a weighted cross-entropy loss function was used to minimize the deviation of the model decision boundary caused by the imbalanced data sets. The weight coefficient for each category was set to the reciprocal of the number of images in that category. A label smoothing strategy was applied during the training of the mild and severe tessellated fundus recognition model to reduce the impact of incorrect labels on the model and promote its generalization ability. A USM filter was used to denoise the images and extract effective information from them. Finally, to discriminate severe from mild tessellated fundus, SAM was used as the optimizer on top of SGD.
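The reciprocal class weighting and label smoothing described above can be sketched as follows. The class counts are the development-set totals from the Results section (the counts of the training subset alone are not reported here), the smoothing factor of 0.1 is an assumption, and the function names are hypothetical.

```python
import math

# Development-set image counts per category, as listed in the Results section:
# normal/mild tessellation, severe tessellation, early-stage PM, advanced-stage PM.
CLASS_COUNTS = [4920, 2110, 870, 310]


def reciprocal_weights(counts):
    """Weight each class by the reciprocal of its number of images."""
    return [1.0 / c for c in counts]


def smooth_labels(true_class, n_classes, eps=0.1):
    """Soften a one-hot target; eps = 0.1 is an assumed smoothing factor."""
    off = eps / n_classes
    return [1.0 - eps + off if k == true_class else off for k in range(n_classes)]


def weighted_cross_entropy(probs, target, weights):
    """Class-weighted cross entropy between predicted probabilities and a target."""
    return -sum(w * t * math.log(p) for w, t, p in zip(weights, target, probs))
```

With these counts, an advanced-stage PM image is weighted about 16 times more heavily than a normal or mild tessellated fundus image (4920 / 310 ≈ 15.9).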

As illustrated in the class activation map (Fig. 4), the deep learning model could locate lesions and distinguish their features, which may facilitate diagnosis. In addition, as shown in Table 5, the sensitivity of the deep learning algorithm for detecting severe tessellated fundus and early-stage PM was 92.60% and 88.20%, respectively, which was higher than that of the attending ophthalmologists, indicating that the AI system is reliable for screening. With the assistance of the AI system, basic examinations such as fundus photography can be carried out in local community hospitals, which is convenient for both patients and ophthalmologists, especially in remote areas without retina experts.

Limitations of this study also need to be considered. Firstly, although we further confirmed that the model performed better than attending ophthalmologists in detecting atypical lesions (Supplementary Fig. S2), a few photographs were still misdiagnosed, which might be attributed to relatively low image quality or to microlesions. This suggests that the model requires higher image quality than retina experts do. In addition, multimodal imaging of myopic eyes can help to improve diagnostic accuracy. For example, diffuse atrophy appears as an ill-defined yellowish-white lesion in the posterior fundus on ophthalmoscopy and exhibits mild hyperfluorescence in the late phase on fluorescein angiography (FA) [45]. Moreover, the choroid in the area of diffuse atrophy is markedly thinned on optical coherence tomography (OCT) [16, 46]. Additionally, LCs appear as yellowish linear lesions on ophthalmoscopy, as linear hypofluorescence in the late phase on indocyanine green angiography (ICGA), and as linear hyperfluorescence from the early to the late phase on FA [47, 48]. Therefore, more real-world clinical data, such as images from FA, ICGA, or OCT, should be considered together in the clinical labeling of photographs in the future. The number of photographs with early-stage PM could also be increased during training, which may improve the accuracy of this classification. Secondly, fundus color may differ among races because of differences in the degree of fundus pigmentation, which can decrease the diagnostic accuracy for atrophic lesions. Future research is warranted to investigate the efficacy of the model in other ethnic groups. Thirdly, although photographs were collected from two different clinical centers, the performance of the model on photographs taken with other cameras is still unclear. Therefore, photographs collected from multiple fundus cameras are necessary to further improve the generalization and reliability of the AI model.

Conclusions

A deep learning algorithm was applied to identify normal fundus or mild tessellation, severe tessellated fundus, early stage of PM, and advanced stage of PM based on fundus photographs. Our AI model achieved performance comparable to that of experts. Owing to the promising performance of our AI system, it can assist ophthalmologists by reducing workload and saving time during large-scale myopia screening and long-term follow-up.

References

Iwase A, Araie M, Tomidokoro A, et al. Prevalence and causes of low vision and blindness in a Japanese adult population: the Tajimi study. Ophthalmology. 2006;113(8):1354–62. https://doi.org/10.1016/j.ophtha.2006.04.022.

Shimada N, Tanaka Y, Tokoro T, Ohno-Matsui K. Natural course of myopic traction maculopathy and factors associated with progression or resolution. Am J Ophthalmol. 2013;156:948-957.e1.

Holden BA, Fricke TR, Wilson DA, et al. Global prevalence of myopia and high myopia and temporal trends from 2000 through 2050. Ophthalmology. 2016;123(5):1036–42. https://doi.org/10.1016/j.ophtha.2016.01.006.

Tang YT, Wang XF, Wang JC, et al. Prevalence and causes of visual impairment in a Chinese adult population: the Taizhou eye study. Ophthalmology. 2015;122(7):1480–8. https://doi.org/10.1016/j.ophtha.2015.03.022.

Morgan IG, French AN, Ashby RS, et al. The epidemics of myopia: aetiology and prevention. Prog Retin Eye Res. 2018;62:134–49. https://doi.org/10.1016/j.preteyeres.2017.09.004.

Shi XJ, Gao ZR, Leng L, Guo Z. Temporal and spatial characterization of myopia in China. Front Public Health. 2022;10:896926. https://doi.org/10.3389/fpubh.2022.896926.

Ohno-Matsui K, Kawasaki R, Jonas JB, et al. International photographic classification and grading system for myopic maculopathy. Am J Ophthalmol. 2015;159(5):877–83.e7. https://doi.org/10.1016/j.ajo.2015.01.022.

Yamashita T, Terasaki H, Tanaka M, Nakao K, Sakamoto T. Relationship between peripapillary choroidal thickness and degree of tessellation in young healthy eyes. Graefes Arch Clin Exp Ophthalmol. 2020;258(8):1779–85. https://doi.org/10.1007/s00417-020-04644-5.

Zhao X, Ding X, Lyu C, et al. Morphological characteristics and visual acuity of highly myopic eyes with different severities of myopic maculopathy. Retina. 2020;40(3):461–7. https://doi.org/10.1097/IAE.0000000000002418.

Wang NK, Lai CC, Chu HY, et al. Classification of early dry-type myopic maculopathy with macular choroidal thickness. Am J Ophthalmol. 2012;153(4):669–77.e2. https://doi.org/10.1016/j.ajo.2011.08.039.

Zhou YP, Song ML, Zhou MoW, Liu YM, Wang FH, Sun XD. Choroidal and retinal thickness of highly myopic eyes with early stage of myopic chorioretinopathy: tessellation. J Ophthalmol. 2018. https://doi.org/10.1155/2018/2181602.

Acknowledgements

Funding

This work was supported by grants from the National Natural Science Foundation of China (No. 81970846, No. 82171100), the National Key R&D Program of China (2016YFC0904800, 2019YFC0840607, 2019YFC1710204), the National Science and Technology Major Project of China (2017ZX09304010), and the Shanghai Public Health System Three-Year Plan Personnel Construction Subjects (GWV-10.2-YQ40). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. The Rapid Service Fee was funded by the authors.

Author Contributions

Conceptualization: Lin He, Weijun Wang, Xun Xu, and Ying Fan; Formal analysis: Ruonan Wang, Dandan Sun, Luyao Ye, Lili Yin, Jiangnan He, Hao Zhou, Haidong Zou and Jianfeng Zhu; Methodology: Ruonan Wang, Qiuying Chen, Dandan Sun, Luyao Ye, Jiangnan He, Lin He, Lijun Zhao, Difeng Huang, Qichao Tan and Bo Liang; Writing (original draft): Ruonan Wang, Jiangnan He, and Qiuying Chen; Writing (review & editing): Ruonan Wang, Ying Fan, Weijun Wang and Xun Xu.

Disclosures

The affiliation of author Lin He has changed to the following: Bio-X Center, Key Laboratory for the Genetics of Developmental and Neuropsychiatric Disorders (Ministry of Education), Shanghai Jiao Tong University, Shanghai, China. Ruonan Wang, Jiangnan He, Qiuying Chen, Luyao Ye, Dandan Sun, Lili Yin, Hao Zhou, Lijun Zhao, Jianfeng Zhu, Haidong Zou, Qichao Tan, Difeng Huang, Bo Liang, Weijun Wang, Ying Fan, and Xun Xu have nothing to disclose.

Compliance with Ethics Guidelines

Informed consent was obtained from all subjects involved in the study. This study was conducted according to the guidelines of the Declaration of Helsinki and was approved by the Ethics Committee of Shanghai General Hospital (Approval ID: No. 2015KY156).

Data Availability

The data presented in this study are available from the corresponding author upon reasonable request.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Wang, R., He, J., Chen, Q. et al. Efficacy of a Deep Learning System for Screening Myopic Maculopathy Based on Color Fundus Photographs. Ophthalmol Ther 12, 469–484 (2023). https://doi.org/10.1007/s40123-022-00621-9

DOI: https://doi.org/10.1007/s40123-022-00621-9