Abstract

In this paper, we introduce a numerical method for obtaining accurate approximate solutions of integro-differential delay equations with state-dependent bounds. The method is based on the generalized Mott polynomial with the parameter-\(\beta\), Chebyshev–Lobatto collocation points and matrix structures. The resulting matrices are gathered into a single matrix equation, which is then solved algebraically to produce the desired solution. We discuss the behavior of the solutions, controlling their parameterized form via \(\beta\), and thereby monitor the effectiveness of the method. We improve the obtained solutions by employing the Mott-residual error estimation. In addition to comparing the results in tables, we illustrate the solutions in figures drawn in the phase plane and on logarithmic and standard scales. All results indicate that the present method is simple in structure, reliable and straightforward to implement as a program module in any mathematical software.

Introduction

Integro-differential equations (IDEs) and their delay types (IDDEs) govern many physical phenomena arising in mathematics, mechanics, engineering, biology, economics, electrodynamics and oscillating magnetic fields [1,2,3,4,5,6,7,8]. This variety has encouraged researchers all over the world to pay more attention to them than ever before, because sophisticated phenomena can be described readily with the aid of IDDEs. As these phenomena become more involved, determining the physical behavior of IDDEs becomes far more difficult. Examples in the literature include a state-dependent Riccati equation modeling vehicle state estimation [9], state-dependent delay Volterra equations considered in viscoelasticity theory [10] and a system of state-dependent delay differential equations describing forest growth [11]. Interpreting the physical responses of such complex structures analytically is therefore a difficult task, and several numerical methods have recently been developed for IDEs and their various types. To this end, Kürkçü et al. [12, 13] have solved IDEs and IDEs of difference type by means of the Dickson matrix-collocation method. Reutskiy [14] has utilized the backward substitution method for solving neutral Volterra–Fredholm IDEs. Chen and Wang [15] have dealt with the neutral functional–differential equation with proportional delays using the variational iteration method. Bellen and Zennaro [4] have investigated the convergence and numerical solution of state-dependent delay differential equations. Gökmen et al. [16] have proposed a Taylor polynomial method for solving Volterra-type functional integral equations. Gülsu et al. [17] have used Chebyshev polynomials for delay differential equations. Savaşaneril and Sezer [18, 19] have employed Taylor and Taylor–Lucas polynomial methods to seek the solutions of Fredholm IDEs and pantograph-type delay differential equations, respectively. Maleknejad and Mahmoidi [20] have obtained the Taylor and block–pulse numerical solutions of the Fredholm integral equation. Rohaninasab et al. [21] have established a Legendre collocation method to solve Volterra–Fredholm IDEs. Yüzbaşı [22] has approximated the numerical solutions of pantograph-type Volterra IDEs with the aid of Laguerre polynomials. Gümgüm et al. [23] have obtained the Lucas polynomial solutions of functional IDEs involving variable delays.

All of the above studies motivate us to develop a numerical method for highly stiff problems such as the integro-differential delay equations with state-dependent bounds considered in this paper. To our knowledge, there is no study in the literature addressing the numerical solution of such equations. Through this study, we can investigate their physical responses numerically, evaluating the obtained values in tables and figures. This paper is organized as follows: the “Fundamental properties of Mott polynomial” section mentions some properties of the Mott polynomials. The “Constructing method of solution via matrix relations” section establishes new matrix relations and the method of solution. The “Mott-residual error estimation” section gives the Mott-residual error estimation in algorithmic form. The “Numerical examples” section includes stiff numerical examples solved with the aid of the present method. The “Conclusions” section discusses the present method and its efficiency by taking into account the results in the “Numerical examples” section. The functional integro-differential delay equations with state-dependent bounds are of the form

\[\sum \limits _{r = 0}^{m_1} {P_{r}}\left( t \right) y^{(r)}\left( t - \sigma _{r} \right) = g\left( t \right) + \sum \limits _{q = 0}^{{m_2}} \lambda _q \int \limits _{c_{q}y\left( t \right) }^{d_{q}y\left( t \right) } {K_q}\left( {t,s} \right) y\left( s - \tau _{q} \right) \hbox {d}s \tag{1}\]

subject to the initial conditions

\[y^{(k)}\left( a \right) = \psi _k, \quad k = 0,1, \ldots ,{m_1} - 1, \tag{2}\]

where \(y(t)\), \({P_{r}}\left( t \right)\), \(g(t)\) and \(K_q\left( {t,s} \right)\) are analytic functions on \([a, b]\); \(\sigma _{r}\) and \(\tau _{q}\) are real constant delays \(\left( \sigma _{r},\,\tau _{q} \ge 0 \right)\); \(\lambda _q\), \({c_{q}}\), \({d_{q}}\) \(\left( c_{q}<d_{q}\right)\) and \(\psi _k\) are suitable constants.

Our aim in this study is to efficiently obtain an accurate approximate solution of Eq. (1) by developing the Mott matrix-collocation method, which was previously introduced in [24]. In addition, the parameter-\(\beta\) in the generalized Mott polynomial is used as a control parameter in the numerical approximations, so that the obtained solutions can be tuned toward more consistent behavior. The approximate solution is sought in the form (see [24])

\[y\left( t \right) \cong {y_N}\left( t \right) = \sum \limits _{n = 0}^N {y_n}{S_n}\left( {t,\beta } \right) , \tag{3}\]

where \(y_{n}\), \(n = 0,1, \ldots ,N\), are the unknown Mott coefficients to be calculated by the method and \({S_n}\left( {t,\beta } \right)\) is the generalized Mott polynomial [25]. The Chebyshev–Lobatto collocation points used in the matrix systems are defined to be (see [23])

\[{t_i} = \frac{{a + b}}{2} + \frac{{a - b}}{2}\cos \left( {\frac{{\pi i}}{N}} \right) , \tag{4}\]

where \(i = 0,1, \ldots ,N\) and \(a = {t_0}< {t_1}< \cdots < {t_N} = b\).
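The collocation points described above, \(N+1\) values with \(a = t_0< \cdots < t_N = b\), can be generated as in the following minimal sketch. It assumes the usual cosine mapping of the Chebyshev–Lobatto nodes from \([-1,1]\) to \([a,b]\); the function name `chebyshev_lobatto_points` is hypothetical and not taken from the paper.

```python
import math


def chebyshev_lobatto_points(a, b, n):
    """Return the n + 1 Chebyshev-Lobatto points on [a, b] in increasing order."""
    if n < 1:
        raise ValueError("n must be at least 1")
    mid, half = (a + b) / 2.0, (b - a) / 2.0
    # cos(pi * i / n) decreases from 1 to -1, so mid - half * cos(...) runs from a to b.
    points = [mid - half * math.cos(math.pi * i / n) for i in range(n + 1)]
    # Pin the endpoints so rounding in cos() cannot move them off a and b.
    points[0], points[-1] = float(a), float(b)
    return points


if __name__ == "__main__":
    print(chebyshev_lobatto_points(0.0, 1.0, 4))
```

The points cluster near the endpoints of the interval, which is the usual reason for preferring them to equally spaced points in polynomial collocation.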

Fundamental properties of Mott polynomial

In this section, we briefly describe some fundamental properties of the Mott polynomial, which is used as the basis of the matrix-collocation method. In 1932, Mott [26] originally introduced the polynomial while studying the roaming behavior of electrons in a problem in the theory of electrons. Later, Erdélyi et al. [27] established the explicit formula of the polynomial \({S_n}\left( t \right)\) as follows:

\[{S_n}\left( t \right) = {\left( { - \frac{t}{2}} \right) ^n}\,{}_3{F_0}\left( { - n,\,\frac{{1 - n}}{2},\,1 - \frac{n}{2};\,;\, - \frac{4}{{{t^2}}}} \right) ,\]

where \({}_3{F_0}\) is a generalized hypergeometric function.

In 1984, Roman [28] presented both an associated Sheffer sequence and a generating function for the polynomial as follows:

where \({S_0}\left( t \right) = 1\), \({S_1}\left( t \right) = - \frac{t}{2}\), \({S_2}\left( t \right) = \frac{{{t^2}}}{4}\), \({S_3}\left( t \right) = - \frac{{3t}}{4} - \frac{{{t^3}}}{8}\) and \({S_4}\left( t \right) = \frac{{3{t^2}}}{2} + \frac{{{t^4}}}{{16}}\).
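The low-order polynomials listed above can be checked with a short computation. The sketch below assumes the classical Mott generating function \(e^{t\left( \sqrt{1 - {x^2}} - 1 \right) /x} = \sum \nolimits _{n \ge 0} {S_n}\left( t \right) {x^n}/n!\) and expands it in exact rational arithmetic; the function name `mott_polynomials` is hypothetical.

```python
from fractions import Fraction
from math import comb, factorial


def mott_polynomials(n_max):
    """Return coefficient lists of S_0..S_{n_max}; entry [n][j] multiplies t**j."""
    size = n_max + 1
    # Series of f(x) = (sqrt(1 - x^2) - 1)/x = -sum_k C_k (x/2)^(2k+1),
    # where C_k = comb(2k, k)/(k + 1) is the k-th Catalan number.
    f = [Fraction(0)] * size
    for k in range(size):
        power = 2 * k + 1
        if power > n_max:
            break
        f[power] = -Fraction(comb(2 * k, k), (k + 1) * 2 ** power)

    def multiply(p, q):
        # Product of two power series in x, truncated after x**n_max.
        out = [Fraction(0)] * size
        for i, p_i in enumerate(p):
            if p_i:
                for j, q_j in enumerate(q[: size - i]):
                    out[i + j] += p_i * q_j
        return out

    polys = [[Fraction(0)] * (n + 1) for n in range(size)]
    f_power = [Fraction(1)] + [Fraction(0)] * n_max  # f(x)**0
    for j in range(size):
        # exp(t f) = sum_j t^j f^j / j!, so the t^j coefficient of S_n
        # is n!/j! times the x^n coefficient of f^j.
        for n in range(j, size):
            polys[n][j] = f_power[n] * factorial(n) / factorial(j)
        f_power = multiply(f_power, f)
    return polys


if __name__ == "__main__":
    for n, coeffs in enumerate(mott_polynomials(4)):
        print(n, [str(c) for c in coeffs])
```

Each printed list holds the coefficients of \(1, t, {t^2}, \ldots\) for one polynomial, so the entry for \(n = 3\) reads \(- \frac{3}{4}\) for \(t\) and \(- \frac{1}{8}\) for \({t^3}\).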

On the other hand, the triangular array of coefficients of the polynomial can be found in A137378 of OEIS [29]. In 2014, Kruchinin [25] converted the polynomial to a generalized form with a parameter-\(\beta\):

where the Mott polynomial is obtained for \(\beta = 0.5\). For further properties of the polynomial, the reader can refer to [25,26,27,28].

Constructing method of solution via matrix relations

In this section, the fundamental matrix relations are presented to construct the method of solution. Let us first state the solution form (3) in the matrix relation [24]

where

Now, inserting \(t\rightarrow t - \sigma _r\) into the matrix relation (5), we get

where

Similarly, it holds that

By the matrix relation (6), the left-hand side of Eq. (1) takes the matrix form

Next, the matrix relation of the integral part of Eq. (1) is given. First, the kernel function \(K_{q}(t,s)\) can be written in the truncated Taylor series form [16, 18],

where

and

Then, it holds from the matrix relations (7) and (9) that

where

Recalling the matrix relations (8) and (10) and collocation points (4), we thus write the combined matrix relation as

More briefly, we can construct the matrix relation (11) as the fundamental matrix equation

where

Using the matrix relation (5), we similarly state the matrix relation of the initial conditions (2) as follows:

By the matrix equation (12), we are now ready to constitute the method of solution

Then, it follows that

On the other hand, we can construct the matrix relation of Eq. (13) as

where

Replacing the last \(m_1\) row(s) of \({{\varvec{W}}}\) (and of the corresponding right-hand side) by the row(s) of the matrix relation (15), we then obtain the augmented matrix

The system (16) has a unique solution only if rank\(\tilde{{{\varvec{W}}}}\!=\!\text {rank}\left[ {{\tilde{{{\varvec{W}}}}}\,\,;\,\,{\tilde{{{\varvec{G}}}}}} \right] \!=\!N+1\), in which case \({{\varvec{Y}}} \!=\! {\left( {{\tilde{{{\varvec{W}}}}}} \right) ^{ - 1}}{\tilde{{{\varvec{G}}}}}\). The Mott coefficients appearing in the form (3) are thus obtained; substituting them into the form (3), we finally reach the Mott polynomial solution with the parameter-\(\beta\).
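The row replacement and the rank condition described above can be sketched as follows. This is an illustration under stated assumptions rather than the author's routine: `W` and `G` stand for the collocated system and its right-hand side, `U` and `psi` are hypothetical names for the condition rows and their values, and exact rational Gauss-Jordan elimination is used so that a rank deficiency is detected without rounding issues.

```python
from fractions import Fraction


def solve_augmented(W, G, U, psi):
    """Overwrite the last len(U) rows of [W; G] with [U; psi] and solve for Y."""
    n = len(W)
    m1 = len(U)
    # Keep the first n - m1 collocation rows, then append the condition rows.
    rows = [list(map(Fraction, W[i])) + [Fraction(G[i])] for i in range(n - m1)]
    rows += [list(map(Fraction, U[k])) + [Fraction(psi[k])] for k in range(m1)]

    # Gauss-Jordan elimination on the augmented matrix [W~ ; G~].
    for col in range(n):
        pivot = next((r for r in range(col, n) if rows[r][col] != 0), None)
        if pivot is None:
            raise ValueError("rank of W~ is below N + 1; no unique solution")
        rows[col], rows[pivot] = rows[pivot], rows[col]
        pivot_value = rows[col][col]
        rows[col] = [v / pivot_value for v in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [v - factor * p for v, p in zip(rows[r], rows[col])]
    return [row[n] for row in rows]


if __name__ == "__main__":
    # Toy system in a monomial basis: y'' = 2 with y(0) = 1, y'(0) = 0.
    W = [[0, 0, 2], [0, 0, 2], [0, 0, 2]]
    G = [2, 2, 2]
    U = [[1, 0, 0], [0, 1, 0]]
    psi = [1, 0]
    print(solve_augmented(W, G, U, psi))
```

In the toy call, the three identical collocation rows alone are rank deficient; only after two of them are replaced by the condition rows does the system determine the coefficients of \(1 + {t^2}\).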

Mott-residual error estimation

Residual error analysis has been employed successfully in [7, 8, 12, 13, 16, 22, 30]. Motivated by this, we introduce the Mott-residual error estimation technique, which combines the Mott polynomial with a residual function to improve the Mott polynomial solution (3) of Eq. (1). The algorithmic procedure of this technique can be described for the present method as

- Step 1:

\({R_N}(t) \leftarrow \sum \nolimits _{r = 0}^{m_1}{P_{r}}\left( t \right) y_N^{(r)}\left( t - \sigma _{r} \right) -\sum \nolimits _{q = 0}^{{m_2}} \lambda _q \int \limits _{c_{q}y_N\left( t \right) }^{d_{q}y_N\left( t \right) } { {K_q}\left( {t,s} \right) {y_N\left( s - \tau _{q} \right) }\hbox {d}s}-g\left( t \right) ,\)

- Step 2:

\({{e_N}(t)} \leftarrow {y(t)} - {{y_N}(t)} = - {R_N}(t),\)

- Step 3:

\(0 \leftarrow \sum \nolimits _{k = 0}^{{m_1} - 1} { e_N^{(k)}\left( a \right) },\)

- Step 4:

Solve the error problem consisting of Steps 2 and 3,

- Step 5:

\({e_{N,M}}(t) \leftarrow \sum \nolimits _{n = 0}^M {y_n^*{S_n}\left( {t,\beta } \right) } \,,\,\mathrm{{ }}\left( {M > N} \right)\), where \({S_n}\left( {t,\beta } \right)\) is the Mott polynomial and \({e_{N,M}}(t)\) is a Mott-estimated error function,

- Step 6:

\({y_{N,M}}(t) \leftarrow {y_N}(t) + {e_{N,M}}(t)\), where \({y_{N,M}}(t)\) is a corrected Mott polynomial solution.

Thus, we improve the Mott polynomial solution and it is worth specifying that the corrected error function is of the form \({E_{N,M}}(t) = y(t) - {y_{N,M}}(t)\).
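The six steps above can be traced on a deliberately simple stand-in problem. The sketch below is not the paper's implementation: it assumes the toy equation \(y' + y = 0\), \(y(0) = 1\) on \([0,1]\) (no delay and no integral term), uses a monomial basis in place of the Mott polynomials, and all function names are hypothetical.

```python
import math


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def collocate(degree, rhs, y0):
    """Collocate y' + y = rhs(t), y(0) = y0 on [0, 1] with a monomial basis."""
    pts = [0.5 - 0.5 * math.cos(math.pi * i / degree) for i in range(degree + 1)]
    # Row i holds d/dt(t^n) + t^n at t_i, the operator applied to each basis function.
    A = [[(n * t ** (n - 1) if n else 0.0) + t ** n for n in range(degree + 1)] for t in pts]
    b = [rhs(t) for t in pts]
    A[-1] = [1.0] + [0.0] * degree  # replace the last row by the condition y(0) = y0
    b[-1] = y0
    return solve(A, b)


def evaluate(coeffs, t, derivative=False):
    if derivative:
        return sum(n * c * t ** (n - 1) for n, c in enumerate(coeffs) if n)
    return sum(c * t ** n for n, c in enumerate(coeffs))


N, M = 3, 6
y_N = collocate(N, lambda t: 0.0, 1.0)  # approximate solution of degree N


def residual(t):
    # Step 1: residual of the approximate solution, R_N(t) = y_N'(t) + y_N(t) - 0.
    return evaluate(y_N, t, True) + evaluate(y_N, t)


# Steps 2-5: the error problem e' + e = -R_N, e(0) = 0, solved with degree M > N.
e_NM = collocate(M, lambda t: -residual(t), 0.0)
# Step 6: corrected solution y_{N,M} = y_N + e_{N,M}.
y_NM = [c + (y_N[n] if n <= N else 0.0) for n, c in enumerate(e_NM)]

grid = [i / 200 for i in range(201)]
err_N = max(abs(math.exp(-t) - evaluate(y_N, t)) for t in grid)
err_NM = max(abs(math.exp(-t) - evaluate(y_NM, t)) for t in grid)

if __name__ == "__main__":
    print("max error before correction:", err_N)
    print("max error after correction: ", err_NM)
```

Because the toy operator is linear, the corrected solution coincides with a direct degree-\(M\) collocation solution; for Eq. (1) the state-dependent bounds make the error problem nonlinear in general, so this equivalence should not be assumed there.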

Numerical examples

In this section, we apply the present method to solve some stiff problems of the form of Eq. (1). To do this, we develop a computer program routine in Mathematica 11. The obtained solutions and numerical values are presented in figures and tables.

Example 1

Consider the second-order FIDE with state-dependent bounds and multi-delays

subject to the initial conditions \(y\left( 0 \right) = 1\), \(y'\left( 0 \right) = 0\), and \(t,s \in [0,1]\). Here, the constant delays are \(\left\{ \left\{ \sigma _0=0.6,\,\sigma _1=1\right\} ,\,\left\{ \tau _0=0.5,\, \tau _1=0.1\right\} \right\}\) and

By Eq. (14), the fundamental matrix equation for this problem is constructed as

After applying the described procedure to the equation above, we easily get the augmented matrix

Solving this matrix system, we get

and it holds from Eq. (3) that

which is the exact solution.

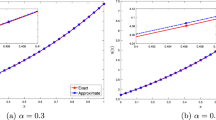

Fig. 1 Comparison of the Mott polynomial solutions with the control parameter \(\beta =1.5\) and the exact solution in terms of N on [0, 1] for Example 2 with \(\sigma _2=\tau _0=0.5\)

Fig. 2 Oscillatory behavior of the Mott polynomial \((\beta =1.5)\) and exact solutions on [0, 10] for Example 2 with \(\sigma _2=\tau _0=0.5\)

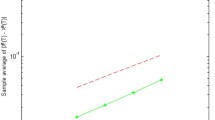

Fig. 3 Logarithmic scaled plot of \(L_\infty\) error with respect to \(\beta\) for Example 2 with \(L=1\) and \(\sigma _2=\tau _0=0.5\)

Example 2

Consider the fourth-order IDDE with state-dependent bounds and variable coefficients

subject to the initial conditions \(y\left( 0 \right) = 1\), \(y'\left( 0 \right) = 0\), \(y''\left( 0 \right) = -1\), \(y'''\left( 0 \right) = 0\), and \(t,s \in [0,L]\). Here, the exact solution is \(y\left( t \right) = \cos \left( t \right)\) and for \(\sigma _2=\tau _0=0\),

Similarly, g(t) can be calculated via the exact solution for various values of \(\{\sigma _2,\,\tau _0\}\).

Taking different truncation limits N and \(L=\{1,\,10\}\), we solve the problem by using both the present method and the Taylor collocation method [16, 18] to compare the obtained results. We then employ the Mott-residual error estimation to improve the solution. We also investigate the effects of \(\beta\) and the delays on the Mott polynomial solutions. The following observations are made:

Table 1 shows the absolute errors for fixed \(\sigma _2=\tau _0=0.01\) and \(\beta =1.5\). There, the present method yields better numerical results than the Taylor collocation method [16, 18].

For \(L=\{1,\,10\}\), the oscillatory behaviors of the solutions coincide closely with the exact solution in Figs. 1 and 2, respectively.

\(L_\infty\) errors obtained with \(N=12\), \(\beta =1.5\) are investigated with respect to different delays \(\sigma _2\) and \(\tau _0\) in Table 2. The smallest error, \(8.92e{-}10\), is attained when \(\sigma _2=\tau _0=1.\)

Similarly, the behavior of \(L_\infty\) errors obtained with the fixed \(N=12\) and \(\sigma _2=\tau _0=0.5\) is demonstrated with respect to \(\beta\) in the logarithmic scaled plot shown in Fig. 3.
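The \(L_\infty\) errors discussed above are maxima of the absolute error over the interval \([0,L]\). A minimal sketch of such a measurement is given below; it assumes a uniform sampling grid, and the approximant is a Maclaurin polynomial of \(\cos (t)\) used purely as a stand-in, not a Mott polynomial solution. The names `l_inf_error` and `cos_taylor` are hypothetical.

```python
import math


def l_inf_error(approx, exact, left, right, samples=1001):
    """Largest sampled value of |exact(t) - approx(t)| on [left, right]."""
    step = (right - left) / (samples - 1)
    # A sampled maximum is a lower bound for the true L-infinity norm.
    return max(abs(exact(left + i * step) - approx(left + i * step)) for i in range(samples))


def cos_taylor(t, degree=12):
    # Stand-in approximation: Maclaurin polynomial of cos(t), NOT a Mott solution.
    return sum((-1) ** k * t ** (2 * k) / math.factorial(2 * k) for k in range(degree // 2 + 1))


if __name__ == "__main__":
    for L in (1.0, 10.0):
        print(L, l_inf_error(cos_taylor, math.cos, 0.0, L))
```

Plotting such values against a parameter on a logarithmic vertical axis gives the kind of diagram referred to in the text.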

Fig. 4 Oscillatory behavior of the Mott polynomial \((\beta =1.5)\) and exact solutions with respect to \(\sigma _1\) on [0, 15] for Example 3 with \(\varepsilon =0.1\), \(F=2\) and \(\omega =2\)

Fig. 5 Oscillatory behavior of the Mott polynomial \((\beta =1.5)\) and exact solutions with respect to \(\sigma _1\) on [0, 15] for Example 3 with \(\varepsilon =0.45\), \(F=2\) and \(\omega =2\)

Fig. 6 Phase plane behavior of the Mott polynomial \((\beta =1.5)\) and exact solutions for Example 3 with \(\varepsilon =0.1\), \(F=2\), \(\omega =2\) and \(L=15\)

Fig. 7 Phase plane behavior of the Mott polynomial \((\beta =1.5)\) and exact solutions for Example 3 with \(\varepsilon =0.45\), \(F=2\), \(\omega =2\) and \(L=15\)

Fig. 8 Logarithmic scaled plot of \(L_\infty\) error with respect to \(\beta\) for Example 3 with \(L=1\), \(\varepsilon =0.1\), \(\sigma _1=0\), \(F=2\) and \(\omega =2\)

Example 3

Consider the second-order externally forced oscillator differential equation subject to a single time delay

subject to the initial conditions \(y\left( 0 \right) = 0\) and \(y'\left( 0 \right) = 1\). Here, \(\varepsilon\) with \(|\varepsilon |<1\) is the under-damped parameter, F is the external force and \(\omega \ne 1\) is the non-resonant excitation [5]. We take \(L=\{1,\, 15\}\). The exact solution of the problem is unknown, but it can be approximated numerically with the aid of Mathematica as

Previously, Kalmar-Nagy and Balachandran [5] studied the linear oscillator differential equation with external forcing for an under-damped system and non-resonant excitation, and determined the steady-state response and magnification factor. In this example, by exposing the linear oscillator equation [5] to the delayed effect \(\sigma _1\), we seek the numerical solutions for different N, M, \(\sigma _1\), \(\varepsilon\), \(\beta\), and the fixed \(F=\omega =2\). Thus,

By increasing N and M, we demonstrate the absolute errors for \(\sigma _1=0\) and \(\beta =1\) in Table 3. This indicates that N and M enable us to enhance the accuracy of the method.

Figures 4 and 5 illustrate the oscillatory response of both the Mott polynomial \(y_{25}(t)\) and Mathematica solutions for \(L=15\), \(\sigma _1=\{0,\, 0.5\}\) and \(\varepsilon =\{0.1, \,0.45\}\). Figures 6 and 7 also illustrate these solutions in the phase plane.

The decrease of the \(L_\infty\) error obtained with \(N=12\) is shown with respect to the control parameter \(\beta\) in Fig. 8.

In addition, we draw attention to the fact that \(\varepsilon\) and \(\sigma _1\) each affect the Mott polynomial solution differently.

Conclusions

An efficient numerical method based on the generalized Mott polynomial, Chebyshev–Lobatto collocation points and matrix structures has been proposed to solve stiff IDDEs with state-dependent bounds, which are introduced for the first time in this paper. Despite the simplicity of the present method, the obtained solutions closely approximate the exact and Mathematica solutions. Choosing a suitable value of the parameter-\(\beta\) is important, as can be seen in Figs. 3 and 8; this parameter therefore plays a specific role in the numerical approximations. The Mott-residual error estimation has effectively improved the obtained solutions, as seen from Tables 1 and 3. The effects of the delays have also been monitored: Table 2 and Figs. 4 and 5 show that the delays change the behavior of the problems in a physical sense. All results indicate that the accuracy of the method increases as N is increased. Thus, we conclude that the present method could be applicable and reliable for solving other well-known problems, such as partial differential and fractional differential equations, after making the required modifications to the proposed method.

References

1. Dehghan, M., Shakeri, F.: The use of the decomposition procedure of Adomian for solving a delay differential equation arising in electrodynamics. Phys. Scr. 78, p. 11 (Article No. 065004) (2008)

2. Dehghan, M., Shakeri, F.: Solution of an integro-differential equation arising in oscillating magnetic field using He’s homotopy perturbation method. Prog. Electromagn. Res. PIER 78, 361–376 (2008)

3. Kot, M.: Elements of Mathematical Ecology. Cambridge University Press, Cambridge (2001)

4. Bellen, A., Zennaro, M.: Numerical Methods for Delay Differential Equations. Numerical Mathematics and Scientific Computation. The Clarendon Press Oxford University Press, New York (2003)

5. Kalmar-Nagy, T., Balachandran, B.: Forced harmonic vibration of a Duffing oscillator with linear viscous damping. In: Kovacic, I., Brennan, M.J. (eds.) The Duffing Equation (2011). https://doi.org/10.1002/9780470977859.ch5

6. Kürkçü, Ö.K., Aslan, E., Sezer, M.: A numerical method for solving some model problems arising in science and convergence analysis based on residual function. Appl. Numer. Math. 121, 134–148 (2017)

7. Yüzbaşı, Ş.: Improved Bessel collocation method for linear Volterra integro-differential equations with piecewise intervals and application of a Volterra population model. Appl. Math. Model. 40, 5349–5363 (2016)

8. Yüzbaşı, Ş., Sezer, M., Kemanc, B.: Numerical solutions of integro-differential equations and application of a population model with an improved Legendre method. Appl. Math. Model. 37, 2086–2101 (2013)

9. Hoek, R., Alirezaei, M., Schmeitz, A., Nijmeijer, H.: Vehicle state estimation using a state dependent Riccati equation. IFAC-PapersOnLine 50, 3388–3393 (2017)

10. Andrade, B., Siracusa, G.: On evolutionary Volterra equations with state-dependent delay. Comput. Math. Appl. 75, 1181–1190 (2018)

11. Magal, P., Zhang, Z.: A system of state-dependent delay differential equation modelling forest growth II: boundedness of solutions. Nonlinear Anal. Real World Appl. 42, 334–352 (2018)

12. Kürkçü, Ö.K., Aslan, E., Sezer, M.: A numerical approach with error estimation to solve general integro-differential-difference equations using Dickson polynomials. Appl. Math. Comput. 276, 324–339 (2016)

13. Kürkçü, Ö.K., Aslan, E., Sezer, M.: A novel collocation method based on residual error analysis for solving integro-differential equations using hybrid Dickson and Taylor polynomials. Sains Malays. 46, 335–347 (2017)

14. Reutskiy, S.Yu.: The backward substitution method for multipoint problems with linear Volterra–Fredholm integro-differential equations of the neutral type. J. Comput. Appl. Math. 296, 724–738 (2016)

15. Chen, X., Wang, L.: The variational iteration method for solving a neutral functional–differential equation with proportional delays. Comput. Math. Appl. 59, 2696–2702 (2010)

16. Gökmen, E., Yuksel, G., Sezer, M.: A numerical approach for solving Volterra type functional integral equations with variable bounds and mixed delays. J. Comput. Appl. Math. 311, 354–363 (2017)

17. Gülsu, M., Öztürk, Y., Sezer, M.: A new Chebyshev polynomial approximation for solving delay differential equations. J. Differ. Equ. Appl. 18, 1043–1065 (2012)

18. Baykuş, N., Sezer, M.: Solution of high-order linear Fredholm integro-differential equations with piecewise intervals. Numer. Methods Partial Differ. Equ. 27, 1327–1339 (2011)

19. Savaşaneril, N.B., Sezer, M.: Hybrid Taylor–Lucas collocation method for numerical solution of high-order pantograph type delay differential equations with variables delays. Appl. Math. Inf. Sci. 11, 1795–1801 (2017)

20. Maleknejad, K., Mahmoidi, Y.: Numerical solution of linear Fredholm integral equation by using hybrid Taylor and block-pulse functions. Appl. Math. Comput. 149, 799–806 (2004)

21. Rohaninasab, N., Maleknejad, K., Ezzati, R.: Numerical solution of high-order Volterra–Fredholm integro-differential equations by using Legendre collocation method. Appl. Math. Comput. 328, 171–188 (2018)

22. Yüzbaşı, Ş.: Laguerre approach for solving pantograph-type Volterra integro-differential equations. Appl. Math. Comput. 232, 1183–1199 (2014)

23. Gümgüm, S., Savaşaneril, N.B., Kürkçü, Ö.K., Sezer, M.: A numerical technique based on Lucas polynomials together with standard and Chebyshev–Lobatto collocation points for solving functional integro-differential equations involving variable delays. Sakarya Univ. J. Sci. 22, 1659–1668 (2018). https://doi.org/10.16984/saufenbilder.384592

24. Kürkçü, Ö.K.: A new numerical method for solving delay integral equations with variable bounds by using generalized Mott polynomials. Eskişehir Technical University. J. Sci. Tech. A Appl. Sci. Eng. 19, 844–857 (2018). https://doi.org/10.18038/aubtda.409056

25. Kruchinin, D.V.: Explicit formula for generalized Mott polynomials. Adv. Stud. Contemp. Math. 24, 327–332 (2014)

26. Mott, N.F.: The polarisation of electrons by double scattering. Proc. R. Soc. Lond. A 135, 429–458 (1932)

27. Erdélyi, A., Magnus, W., Oberhettinger, F., Tricomi, F.G.: Higher Transcendental Functions, vol. III. McGraw-Hill Book Company Inc., New York (1955)

28. Roman, S.: The Umbral Calculus. Pure and Applied Mathematics, vol. 111. Academic Press Inc., London (1984)

29. Sloane, N.J.A.: The on-line encyclopedia of integer sequences. https://oeis.org/A137378

30. Çelik, İ.: Collocation method and residual correction using Chebyshev series. Appl. Math. Comput. 174, 910–920 (2006)

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kürkçü, Ö.K. A numerical method with a control parameter for integro-differential delay equations with state-dependent bounds via generalized Mott polynomial. Math Sci 14, 43–52 (2020). https://doi.org/10.1007/s40096-019-00314-8

DOI: https://doi.org/10.1007/s40096-019-00314-8