Abstract

In this paper, we present a collocation method based on Gaussian radial basis functions (RBFs) for approximating the solution of stochastic fractional differential equations (SFDEs), in which the fractional derivative is taken in the Caputo sense. We also prove the existence and uniqueness of the solution. Numerical examples confirm the efficiency of the method.


Introduction

Fractional calculus has attracted attention because it fills an existing gap in describing many problems in engineering [1, 2] and various phenomena in nature, such as in biology and physics [3, 4]. Mathematicians and physicists have written numerous articles on analytical and numerical methods for fractional differential equations (FDEs), including the Adomian decomposition method [5], the variational iteration method [6, 7], the homotopy perturbation method [8] and the homotopy analysis method [9]. In 2016, H. Rezazadeh et al. generalized the Floquet system to the fractional Floquet system [10]. Stochastic differential equation (SDE) models play an important role in various sciences such as physics, economics, biology, chemistry and finance [11,12,13,14,15]. Reading articles in this field requires at least basic knowledge of independence, expected values, variances and the fundamental definitions of stochastic processes [16]. In 2014, M. Khodabin et al. approximated the solution of stochastic Volterra integral equations [17], and, also in 2014, R. Ezzati et al. worked on a stochastic operational matrix based on block pulse functions [18]. We consider the following SFDE [19]:

for \(0\le \alpha \le 1\) and \(t\in \left[ 0,T\right] \), where \(D^\alpha \) is the Caputo fractional derivative of order \(\alpha \), defined in Sect. 2, \(\sigma \) is the maximum amplitude of the noise, and \(\int ^{t}_{t_{0}} g(t,s)dw(s)\) is the stochastic term, which introduces noise into the solution. Throughout the paper we set \(\sigma =1\), except where other values of \(\sigma \) are reported in Sect. 4. SFDEs play a remarkable role in physical applications [20,21,22].

Using RBFs for solving partial differential equations (PDEs) has become very popular among researchers during the last two decades [23, 24]. RBFs have also been applied in mechanics [25], to the KdV equation [26] and to the Klein-Gordon equation [27], and in 2012 Vanani and Aminataei used RBFs for solving fractional partial differential equations [28]. In 2014, Gonzalez-Gaxiola and Gonzalez-Perez used multiquadric RBFs to approximate the solution of the Black-Scholes equation [29].

The motivation of this paper is to extend the application of RBFs to SFDEs.

The paper is organized as follows. In Sect. 2, some essential definitions of fractional calculus are presented. In Sect. 3, we describe the RBF method for SFDEs and prove the existence and uniqueness of the solution. In Sect. 4, various examples are solved to illustrate the effectiveness of the proposed method. Finally, a conclusion is given in the last section.

Preliminaries and notations

In this section, we give some basic definitions and properties of fractional calculus [4].

Definition 2.1

The Caputo fractional derivative of order \(\nu \) is defined as

where \(D^p\) is the classical differential operator of order p
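For reference, the standard form of this definition [4] is \(D^{\nu}f(t)=\frac{1}{\Gamma(p-\nu)}\int_0^t (t-\zeta)^{p-\nu-1}D^{p}f(\zeta)\,d\zeta\) with \(p-1<\nu\le p\). As a minimal numerical sketch for \(0<\nu<1\) (so \(p=1\)), the widely used L1 scheme below replaces \(f'\) by difference quotients on a uniform grid. The scheme is not the paper's method, and the function name `caputo_l1` and the test function \(t^2\) are illustrative choices; the check uses the known value \(D^{\nu}t^{2}=\frac{\Gamma(3)}{\Gamma(3-\nu)}t^{2-\nu}\).

```python
import math

import numpy as np


def caputo_l1(u_vals, dt, nu):
    """L1 approximation of the Caputo derivative of order 0 < nu < 1.

    u_vals holds samples u(t_0), ..., u(t_N) on the uniform grid t_n = n*dt
    with t_0 = 0. Returns the approximation at every grid point (0 at t_0).
    Standard L1 scheme, used here only as an illustration.
    """
    u_vals = np.asarray(u_vals, dtype=float)
    n_pts = len(u_vals)
    du = np.diff(u_vals)                          # du[j] = u(t_{j+1}) - u(t_j)
    k = np.arange(n_pts - 1)
    b = (k + 1) ** (1.0 - nu) - k ** (1.0 - nu)   # L1 weights b_k
    scale = dt ** (-nu) / math.gamma(2.0 - nu)
    out = np.zeros(n_pts)
    for n in range(1, n_pts):
        # sum_{k=0}^{n-1} b_k * (u(t_{n-k}) - u(t_{n-k-1}))
        out[n] = scale * np.dot(b[:n], du[n - 1::-1])
    return out


# Check against D^nu t^2 = Gamma(3) / Gamma(3 - nu) * t^(2 - nu) with nu = 1/2.
nu = 0.5
t = np.linspace(0.0, 1.0, 1001)
approx = caputo_l1(t ** 2, t[1] - t[0], nu)
exact = math.gamma(3.0) / math.gamma(3.0 - nu) * t ** (2.0 - nu)
print(abs(approx[-1] - exact[-1]))
```

For smooth f the L1 error decays roughly like \(\Delta t^{2-\nu}\), so refining the grid tightens the agreement.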

Remark 2.2

For the Caputo derivative we have

Remark 2.3

\(D^{-\vartheta }\) is defined as \(D^{-\vartheta } f(t)=\frac{1}{\Gamma (\vartheta )}\int _0^t f(\zeta )(t-\zeta )^{\vartheta -1} d\zeta ,\qquad t>0,\quad 0< \vartheta \le 1.\)
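Remark 2.3 is the Riemann-Liouville fractional integral, with the integrand evaluated at \(\zeta\). One way to evaluate it numerically, sketched here as an assumption rather than the paper's procedure, is the substitution \(v=(t-\zeta)^{\vartheta}\), which absorbs the weakly singular kernel and gives \(D^{-\vartheta}f(t)=\frac{1}{\Gamma(\vartheta+1)}\int_0^{t^{\vartheta}} f\big(t-v^{1/\vartheta}\big)\,dv\); the remaining integral is handled by Gauss-Legendre quadrature. The helper name `rl_integral` is hypothetical.

```python
import math

import numpy as np


def rl_integral(f, t, theta, n_quad=40):
    """Riemann-Liouville integral D^{-theta} f(t) for 0 < theta <= 1.

    Uses v = (t - zeta)^theta, so that
    D^{-theta} f(t) = 1/Gamma(theta + 1) * int_0^{t^theta} f(t - v^(1/theta)) dv,
    and evaluates the new integral with Gauss-Legendre quadrature.
    """
    if t == 0.0:
        return 0.0
    x, w = np.polynomial.legendre.leggauss(n_quad)  # nodes/weights on [-1, 1]
    upper = t ** theta
    v = 0.5 * upper * (x + 1.0)                     # map nodes to [0, t^theta]
    zeta = t - v ** (1.0 / theta)
    return 0.5 * upper * np.dot(w, f(zeta)) / math.gamma(theta + 1.0)


# Check: D^{-1/2} t^2 at t = 1 equals Gamma(3) / Gamma(7/2).
value = rl_integral(lambda z: z ** 2, 1.0, 0.5)
reference = math.gamma(3.0) / math.gamma(3.5)
print(value, reference)
```

For \(\vartheta=1/2\) and \(f(\zeta)=\zeta^2\) the transformed integrand is the polynomial \((1-v^2)^2\), so the quadrature is exact up to rounding.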

Definition 2.4

Let \((\Omega , F, \rho )\) be a probability space with a normal filtration \((F_t)_{t\ge 0}\), and let \(w=\{w(t):t\ge 0\}\) be a Brownian motion defined on this filtered probability space. Consider the following SFDE

for \(t\in [0,T]\) and \(\nu \in \Omega \). For simplicity of notation we drop the variable \(\nu \) and obtain the following equation

From Remark 2.3 we see that

Therefore, we have

We also make the following assumptions.

Assumption 2.5

Suppose f and g are \(L^2\) measurable functions satisfying

for some constants \( K_1, K_2, K_3, K_4 \) and for every \(x,y\in \mathbb {R}\) and \(0\le m, n \le t \le T=1.\)

Stochastic integral 2.6

We now explain the approximation of the stochastic term. White noise is formally the derivative of the Brownian motion \(w(s)\) [30], so we approximate the term \(\frac{dw_{s}}{dt}\). Let \(0=t_{0}<t_{1}=\Delta t<\cdots <t_{N}=T=1\), with \(t_{i}=i \Delta t\) for \(i=0,\ldots ,N\), be a partition of \(\left[ 0,1\right] \). Following the method introduced in [31], we approximate \(\frac{dw_{s}}{dt}\) by \(\frac{d\hat{w}_{s}}{dt}\)

where \(\gamma _{i} \sim N(0,1) \) is given by

where
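The construction of [31] is commonly written as a piecewise-constant process: on each subinterval \([t_i,t_{i+1})\), \(\frac{d\hat{w}_s}{dt}=\gamma_i/\sqrt{\Delta t}\) with \(\gamma_i=(w(t_{i+1})-w(t_i))/\sqrt{\Delta t}\sim N(0,1)\). The sketch below assumes this form, which is our reading of [31], and uses a hypothetical helper `white_noise_approx`. Integrating the approximate noise over \([0,t_k]\) recovers the sampled path \(w(t_k)\), which the last lines check.

```python
import numpy as np


def white_noise_approx(t_grid, rng):
    """Piecewise-constant approximation of dw/dt on a uniform partition.

    Assumed form (our reading of [31]): on [t_i, t_{i+1}) the noise equals
    gamma_i / sqrt(dt), with gamma_i = (w(t_{i+1}) - w(t_i)) / sqrt(dt) ~ N(0, 1).
    """
    dt = t_grid[1] - t_grid[0]
    n = len(t_grid) - 1
    dw = rng.normal(0.0, np.sqrt(dt), size=n)    # Brownian increments
    gamma = dw / np.sqrt(dt)                     # standard normal samples
    w = np.concatenate(([0.0], np.cumsum(dw)))   # sampled path with w(0) = 0

    def noise(t):
        # index of the subinterval containing t; t = 1 falls in the last one
        i = np.minimum((np.asarray(t) / dt).astype(int), n - 1)
        return gamma[i] / np.sqrt(dt)

    return noise, w, gamma


rng = np.random.default_rng(0)
t_grid = np.linspace(0.0, 1.0, 101)              # N = 100, dt = 0.01
noise, w, gamma = white_noise_approx(t_grid, rng)
mids = 0.5 * (t_grid[:-1] + t_grid[1:])
# integral of the approximate noise over [0, t_k], k = 1..N
integral = np.cumsum(noise(mids) * (t_grid[1] - t_grid[0]))
print(np.max(np.abs(integral - w[1:])))
```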

Collocation method based on RBFs for solving SFDEs

The radial basis function method is known as a powerful tool for solving ordinary, partial and fractional differential equations, as well as integral equations. In this section, we use this method to solve (2). First, we recall some preliminaries.

Interpolation by RBFs

Let \(\{t_1,\ldots ,t_N\}\) be a given set of distinct points in \(\left[ 0,T\right] \subseteq \mathbb {R}\). Then the approximation of a function u(t) using RBFs \(\varphi (t)=\varphi (\left\| t\right\| )\), can be written in the following form [32, 33]

where \(p_0,\ldots ,p_m\) form a basis for the m-dimensional linear space \(P_m([0,T])\) of polynomials of total degree less than or equal to m on [0, T]. Suppose \(C^{m}_{N}([0,T])=span\{\varphi _0,\ldots ,\varphi _N,p_0,\ldots ,p_m\}\); then \(\pi _{N,m}:C([0,T])\rightarrow C^{m}_{N}([0,T])\) is the collocation projector on the collocation points \(X=\{t_0,\ldots ,t_N\}\subset [0,T]\). Since enforcing the interpolation conditions \((\pi _{N,m}u)(t_i)=u(t_i),\quad i=1,\ldots ,N\), leads to a system of N linear equations in \(N+m\) unknowns, we usually add m additional conditions:
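A minimal sketch of this augmented interpolation follows. It assumes Gaussian RBFs \(\varphi(r)=e^{-(cr)^2}\), a monomial basis \(1,t,\ldots,t^{m-1}\) for the polynomial part (m terms, so \(N+m\) unknowns), and the usual side conditions \(\sum_{j}\lambda_j p_k(t_j)=0\) from [32, 33] as the m additional equations. The function `rbf_interpolant` and its default parameters are hypothetical choices for illustration.

```python
import numpy as np


def gaussian(r, c):
    """Gaussian RBF exp(-(c r)^2); this parameterization is an assumption."""
    return np.exp(-(c * r) ** 2)


def rbf_interpolant(nodes, values, c=5.0, m=2):
    """RBF interpolant with m polynomial terms and side conditions P^T lam = 0."""
    nodes = np.asarray(nodes, dtype=float)
    n = len(nodes)
    A = gaussian(nodes[:, None] - nodes[None, :], c)   # A[i, j] = phi(|t_i - t_j|)
    P = np.vander(nodes, m, increasing=True)           # P[i, k] = t_i^k, k < m
    M = np.block([[A, P], [P.T, np.zeros((m, m))]])    # (N + m) x (N + m) system
    rhs = np.concatenate([np.asarray(values, dtype=float), np.zeros(m)])
    coef = np.linalg.solve(M, rhs)
    lam, beta = coef[:n], coef[n:]

    def u_approx(t):
        t = np.atleast_1d(np.asarray(t, dtype=float))
        return (gaussian(t[:, None] - nodes[None, :], c) @ lam
                + np.vander(t, m, increasing=True) @ beta)

    return u_approx


nodes = np.linspace(0.0, 1.0, 10)
u_sin = rbf_interpolant(nodes, np.sin(np.pi * nodes))  # smooth data
u_lin = rbf_interpolant(nodes, 2.0 + 3.0 * nodes)      # data in the polynomial space
print(u_sin(0.37), u_lin(0.37))
```

Because the side conditions make the scheme reproduce the added polynomials exactly, linear data with m = 2 is returned as the same line everywhere, not only at the nodes.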

Using RBFs for solving SFDEs (2)

Let \(D=[0,T] \subseteq \mathbb {R} \), let \(u\in C([0,T])\), and suppose that \(u_N\) is the approximation of u based on these functions, so that we can write

Here N is the number of nodal points in the domain D and \(c_i\) denotes the shape parameter. Although there are different kinds of RBFs, in this research we use only one of them, the Gaussian RBF, given by:

The collocation method based on RBF basis for solving (2) can be written in the following form:

where
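The following is a sketch of the collocation idea under stated assumptions, not the paper's system. It uses a hypothetical deterministic test problem (\(\sigma=0\)): \(D^{\alpha}u+u=\frac{2t^{2-\alpha}}{\Gamma(3-\alpha)}+t^{2}\), \(u(0)=0\), with exact solution \(u=t^{2}\). For the Caputo derivative with \(0<\alpha\le 1\), applying \(D^{-\alpha}\) as in Remark 2.3 gives \(u+D^{-\alpha}u=t^{2}+\frac{2t^{2+\alpha}}{\Gamma(3+\alpha)}\). Substituting \(u_N(t)=\sum_i\lambda_i\varphi(|t-t_i|)\) with an assumed Gaussian \(\varphi(r)=e^{-(cr)^2}\) and enforcing the equation at the nodes yields a linear system for the \(\lambda_i\). In an SFDE, the approximated stochastic term would add a further known term to the right-hand side. All function names and parameter values are hypothetical.

```python
import math

import numpy as np


def gaussian(r, c):
    """Gaussian RBF exp(-(c r)^2); this parameterization is an assumption."""
    return np.exp(-(c * r) ** 2)


def rl_integral(f, t, alpha, n_quad=40):
    """D^{-alpha} f(t) via v = (t - s)^alpha and Gauss-Legendre quadrature."""
    if t == 0.0:
        return 0.0
    x, w = np.polynomial.legendre.leggauss(n_quad)
    upper = t ** alpha
    v = 0.5 * upper * (x + 1.0)
    s = t - v ** (1.0 / alpha)
    return 0.5 * upper * np.dot(w, f(s)) / math.gamma(alpha + 1.0)


def collocate(alpha, n_nodes=12, c=5.0):
    """Collocate u + D^{-alpha} u = t^2 + 2 t^(2+alpha) / Gamma(3+alpha) on [0, 1].

    Hypothetical test problem (sigma = 0) with exact solution u(t) = t^2.
    """
    nodes = np.linspace(0.0, 1.0, n_nodes)
    # A[j, i] = phi_i(t_j) and IA[j, i] = (D^{-alpha} phi_i)(t_j)
    A = gaussian(nodes[:, None] - nodes[None, :], c)
    IA = np.array([[rl_integral(lambda s, ti=ti: gaussian(s - ti, c), tj, alpha)
                    for ti in nodes] for tj in nodes])
    rhs = nodes ** 2 + 2.0 * nodes ** (2.0 + alpha) / math.gamma(3.0 + alpha)
    lam = np.linalg.solve(A + IA, rhs)

    def u_N(t):
        t = np.atleast_1d(np.asarray(t, dtype=float))
        return gaussian(t[:, None] - nodes[None, :], c) @ lam

    return u_N


u_N = collocate(alpha=0.5)
tt = np.linspace(0.0, 1.0, 101)
max_err = np.max(np.abs(u_N(tt) - tt ** 2))
print(max_err)
```

Changing `n_nodes` or the shape parameter `c` trades approximation accuracy against the conditioning of the Gaussian system.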

Lemma 3.1

(Existence and Uniqueness) Assume that there exists a constant \(K_1>0\) such that

and

for each \( t\in [0,T] \) and all \( x,y\in \mathbb {R}^{n}\). Then Eq. (2) has a unique solution on [0, T].

Proof

First, we transform Eq. (2) into a fixed-point problem. For this purpose, consider the operator

defined by

According to Eq. (2), we have

If we show that P is a contraction, then by the Banach contraction principle P has a unique fixed point, and hence Eq. (2) has a unique solution. Applying Eq. (3), it is easy to see that

On the other hand, by the assumption of the lemma, \(\frac{K_1}{\alpha \Gamma (\alpha )}<1\), so P is a contraction and the proof is complete. \(\square \)
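The estimate behind this last step can be sketched as follows. The sketch assumes that \((Pu)(t)\) consists of \(u(0)\), the term \(\frac{1}{\Gamma(\alpha)}\int_0^t (t-s)^{\alpha-1}f(s,u(s))\,ds\), and a stochastic part that does not depend on \(u\). It also assumes that the lemma's condition includes \(|f(t,x)-f(t,y)|\le K_1|x-y|\) and that distances are measured in the supremum norm on \([0,T]\) with \(T=1\).

```latex
\begin{aligned}
|(Pu)(t)-(Pv)(t)|
 &\le \frac{1}{\Gamma(\alpha)}\int_0^t (t-s)^{\alpha-1}\,\bigl|f(s,u(s))-f(s,v(s))\bigr|\,ds \\
 &\le \frac{K_1\,\|u-v\|_\infty}{\Gamma(\alpha)}\int_0^t (t-s)^{\alpha-1}\,ds
  = \frac{K_1\,t^{\alpha}}{\alpha\,\Gamma(\alpha)}\,\|u-v\|_\infty , \\
\|Pu-Pv\|_\infty &\le \frac{K_1\,T^{\alpha}}{\alpha\,\Gamma(\alpha)}\,\|u-v\|_\infty
 = \frac{K_1}{\alpha\,\Gamma(\alpha)}\,\|u-v\|_\infty \qquad (T=1).
\end{aligned}
```

Under these assumptions, P is a contraction whenever \(K_1/(\alpha\Gamma(\alpha))<1\), which is exactly the condition invoked in the proof.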

Illustrative example

In this section, we solve several SFDEs with the RBF method and compare the results with the Galerkin method of [19]. These equations do not have exact solutions, so the reference solution is a numerical approximation computed on a sufficiently fine partition of t. Using the relation

we measure the error of the approximate solution by the root mean square error (RMSE) [34] as follows

In this paper, we report the RMSE after 50 and 60 runs of the program for different numbers of points and values of \(\sigma \).
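One common definition [34] is \(\mathrm{RMSE}=\sqrt{\frac{1}{K}\sum_{k=1}^{K}(u_k-\hat u_k)^2}\). The sketch below assumes it is applied to all K values pooled over the Monte Carlo runs and nodal points; how the paper aggregates over runs may differ, and the function name `rmse` and the data are hypothetical.

```python
import numpy as np


def rmse(reference, approx):
    """Root mean square error over all entries, e.g. an (n_runs, n_points) array."""
    reference = np.asarray(reference, dtype=float)
    approx = np.asarray(approx, dtype=float)
    return float(np.sqrt(np.mean((reference - approx) ** 2)))


# Hypothetical data: 2 runs x 2 nodal points.
err = rmse([[1.0, 2.0], [3.0, 4.0]], [[1.0, 2.0], [3.0, 6.0]])
print(err)  # 1.0
```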

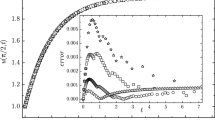

Example 4.1

Consider the following SFDE:

Tables 1, 2 and 3 report the results after 60 runs of the program with \(n=17\).

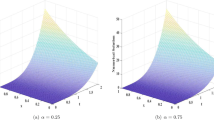

Example 4.2

Consider the following SFDE:

For \(\alpha =\frac{1}{2}\) and various values of \(\sigma \), Table 4 reports the results after 50 runs of the program with \(n=12\) nodal points; for \(\alpha =\frac{3}{2}\) and different values of \(\sigma \), Table 5 reports the results after 50 runs with \(n=11\).

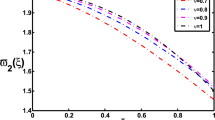

Example 4.3

Consider the following SFDE:

Example 4.4

Consider the following SFDE:

In this work, the approximate results are expected to become more accurate as n increases and \(\sigma \) decreases.

Conclusion

The main goal of this work was to propose an efficient algorithm for stochastic fractional differential equations. Since exact solutions of SFDEs are not available, we used RBFs to approximate the solutions of such equations. In addition, we discussed the existence and uniqueness of the solution. The RMS errors reported in the tables show that the results are highly accurate in comparison with another method based on the Galerkin algorithm.

References

Mainardi, F.: Fractional Calculus and Waves in Linear Viscoelasticity. Imperial College Press, London (2010)

Alvelid, M., Enelund, M.: Modeling of constrained thin rubber layer with emphasis on damping. J. Sound Vib. 300, 662–675 (2007)

Atanackovic, T.M., Stankovic, B.: On a system of differential equations with fractional derivatives arising in rod theory. J. Phys. A 37(4), 1241–1250 (2004)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. North-Holland Mathematics Studies, vol. 204. Elsevier, Amsterdam (2006)

El-Tawil, M.A., Bahnasawi, A.A., Abdel-Naby, A.: Solving Riccati differential equation using Adomian decomposition method. Appl. Math. Comput. 157(2), 503–514 (2004)

He, J.H.: Variational iteration method - a kind of non-linear analytical technique: some examples. Int. J. Non-Linear Mech. 34, 699–708 (1999)

Inc, M.: The approximate and exact solutions of the space- and time-fractional Burgers equations with initial conditions by VIM. J. Math. Anal. Appl. 345, 476–484 (2008)

Abbasbandy, S.: Homotopy perturbation method for quadratic Riccati differential equation and comparison with Adomian decomposition method. Appl. Math. Comput. 172, 485–490 (2006)

Abbasbandy, S.: The application of homotopy analysis method to nonlinear equations arising in heat transfer. Phys. Lett. A 360, 109–113 (2006)

Rezazadeh, H., Aminikhah, H., Refahi Sheikhani, A.H.: Analytical studies for linear periodic systems of fractional order. Math. Sci. 10, 13–21 (2016)

Glasserman, P.: Monte Carlo Methods in Financial Engineering. Applications of Mathematics, vol. 53. Springer, New York (2004)

Kloeden, P., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer, Berlin (1992)

Öttinger, H.C.: Stochastic Processes in Polymeric Fluids: Tools and Examples for Developing Simulation Algorithms. Springer, Berlin (1996)

Rué, P., Villà-Freixa, J., Burrage, K.: Simulation methods with extended stability for stiff biochemical kinetics. BMC Syst. Biol. 4(110), 1–13 (2010)

Dung, N.T.: Fractional stochastic differential equations with applications to finance. J. Math. Anal. Appl. 397, 334–348 (2013)

Higham, D.J.: An algorithmic introduction to numerical simulation of stochastic differential equations. SIAM Rev. 43(3), 525–546 (2001)

Khodabin, M., Maleknejad, K., Damercheli, T.: Approximate solution of the stochastic Volterra integral equations via expansion method. IJIM 6(1), 41–48 (2014)

Ezzati, R., Khodabin, M., Sadati, Z.: Numerical implementation of stochastic operational matrix driven by a fractional Brownian motion for solving a stochastic differential equation. Abstr. Appl. Anal. 2014, Article ID 523163 (2014)

Kamrani, M.: Numerical solution of stochastic fractional differential equations. Numer. Algorithms 68, 81–93 (2015)

Enelund, M., Josefson, B.L.: Time-domain finite element analysis of viscoelastic structures with fractional derivatives constitutive relations. AIAA J. 35(10), 1630–1637 (1997)

Friedrich, C.: Linear viscoelastic behavior of branched polybutadienes: a fractional calculus approach. Acta Polym., 385–390 (1995)

Govindan, T.E., Joshi, M.C.: Stability and optimal control of stochastic functional differential equations with memory. Numer. Funct. Anal. Optim. 13(3–4), 249–265 (1992)

Franke, C., Schaback, R.: Solving partial differential equations by collocation using radial basis functions. Appl. Math. Comput. 93, 73–82 (1998)

Kansa, E.J.: Multiquadrics: a scattered data approximation scheme with applications to computational fluid dynamics. Comput. Math. Appl. 19, 147–161 (1990)

Tran-Cong, T., Mai-Duy, N., Phan-Thien, N.: BEM-RBF approach for viscoelastic flow analysis. Eng. Anal. Bound. Elem. 26, 757–762 (2002)

Shen, Q.: A meshless method of lines for the numerical solution of KdV equation using radial basis functions. Eng. Anal. Bound. Elem. 33, 1171–1180 (2009)

Dehghan, M., Shokri, A.: Numerical solution of the nonlinear Klein-Gordon equation using radial basis functions. J. Comput. Appl. Math. 230, 400–410 (2009)

Vanani, S.K., Aminataei, A.: On the numerical solution of fractional partial differential equations. Math. Comput. Appl. 17(2), 140–151 (2012)

Gonzalez-Gaxiola, O., Gonzalez-Perez, P.P.: Nonlinear Black-Scholes equation through radial basis functions. J. Appl. Math. Bioinform. 4, 75–86 (2014)

Øksendal, B.: Stochastic Differential Equations: An Introduction with Applications, 6th edn. Springer

Allen, E.J., Novosel, S.J., Zhang, Z.: Finite element and difference approximation of some linear stochastic partial differential equations. Stoch. Stoch. Rep. 64(1–2), 117–142 (1998)

Buhmann, M.D.: Radial Basis Functions: Theory and Implementations. Cambridge University Press, Cambridge (2003)

Wendland, H.: Scattered Data Approximation. Cambridge University Press, Cambridge (2005)

Chai, T., Draxler, R.R.: Root mean square error (RMSE) or mean absolute error (MAE)? Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 7, 1247–1250 (2014)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ahmadi, N., Vahidi, A.R. & Allahviranloo, T. An efficient approach based on radial basis functions for solving stochastic fractional differential equations. Math Sci 11, 113–118 (2017). https://doi.org/10.1007/s40096-017-0211-7

DOI: https://doi.org/10.1007/s40096-017-0211-7