Abstract

In this paper, new periodic fractional trigonometric functions with the period \(2\pi _{\alpha }\) are presented. We generalize the Floquet system to the fractional Floquet system. The fractional derivatives are described using the modified Riemann–Liouville derivative. Moreover, the stability analysis of the fractional Floquet system is introduced.

Introduction

The study of systems governed by ordinary differential equations with periodic coefficients is of basic importance in many branches such as mathematics, physics, chemistry, biology, mechanics and finance; such systems are known as Floquet systems [1, 2]. A Floquet system is defined with the n × n matrix function A as \(x'=A(t)x\), where the components of the matrix A are continuous and periodic functions with smallest positive period w, that is, \(A(t+w)=A(t)\) for all t. Although the coefficient matrix in \(x'=A(t)x\) is periodic, its solutions are in general not periodic. The idea of Floquet systems was stated by Gaston Floquet in the early 1880s, and later he established his celebrated theorem on the structure of solutions of periodic differential equations [3]. In this paper, we first focus our attention on the fractional Floquet system, and then we consider the stability analysis for this class of systems.

Fractional order calculus is the branch of mathematics dealing with differentiation and integration of arbitrary order, that is, the order can be any real or even complex number, not only an integer. Although the history of fractional calculus is more than three centuries old, it has received much attention and interest only in the past 20 years; the reader may refer to [4–6] for the theory and applications of fractional calculus. The generalization of dynamical equations using fractional derivatives has proved to be useful and more accurate in mathematical modeling related to many interdisciplinary areas. Applications of fractional order differential equations include electrochemistry [7], porous media [8] and so on [9–11]. It is worth noting that recently much attention has been paid to distributed-order differential equations and their applications in engineering fields, since both integer-order systems and fractional order systems are special cases of distributed-order systems; the reader may refer to [12–14]. Analytic results on the existence and uniqueness of solutions to fractional differential equations have been investigated by many authors [5, 6].

Preliminaries and notations

Basic definitions

We give some basic definitions and properties of the fractional calculus theory used in this work.

Definition 1

Let \(f:{\mathbb R} \rightarrow {\mathbb R}\), \(t\rightarrow f(t)\) denote a continuous (but not necessarily differentiable) function and let \(h>0\) denote a partition step in the interval [0, 1]. Jumarie's derivative is defined through the fractional difference [15]:

where FW \(f(t)=f(t+h)\). Then the fractional derivative is defined as the following limit

This definition is close to the standard definition of the derivative, and as a direct result, the αth derivative of a constant is zero for \(0\,<\, \alpha\, \le\, 1\).

Definition 2

The Riemann–Liouville fractional integral operator of order \(\alpha >0\) is defined as [16]:
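As a numerical illustration of Definition 2, the following minimal sketch approximates a fractional integral. It assumes the standard form of the Riemann–Liouville integral, \(I^{\alpha } f(t)=\frac{1}{\Gamma (\alpha )} \int _{0}^{t} (t-s)^{\alpha -1} f(s)\,\mathrm{d}s\); the function name `rl_integral`, the product-midpoint quadrature and the step count `n` are choices made for this example only and are not taken from [16].

```python
import math

def rl_integral(f, t, alpha, n=2000):
    """Approximate (1/Gamma(alpha)) * int_0^t (t - s)^(alpha - 1) f(s) ds.

    Assumed quadrature: f is frozen at the midpoint of each subinterval and the
    weakly singular kernel is integrated exactly over that subinterval.
    """
    h = t / n
    total = 0.0
    for j in range(n):
        a, b = j * h, (j + 1) * h
        right = max(t - b, 0.0)  # guard against a tiny negative rounding error
        weight = ((t - a) ** alpha - right ** alpha) / alpha  # exact kernel integral
        total += f(0.5 * (a + b)) * weight
    return total / math.gamma(alpha)

# Closed forms used for comparison:
#   I^alpha[1](t) = t^alpha / Gamma(alpha + 1)
#   I^alpha[s](t) = t^(1 + alpha) / Gamma(alpha + 2)
alpha, t = 0.5, 1.0
approx_const = rl_integral(lambda s: 1.0, t, alpha)
exact_const = t ** alpha / math.gamma(alpha + 1.0)
approx_linear = rl_integral(lambda s: s, t, alpha)
exact_linear = t ** (1.0 + alpha) / math.gamma(alpha + 2.0)
print(approx_const, exact_const)
print(approx_linear, exact_linear)
```

Integrating the kernel exactly keeps the singularity at \(s=t\) out of the quadrature error, which is why a plain midpoint rule was not used here.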

Definition 3

The modified Riemann–Liouville derivative is defined as [16]:

and

The proposed modified Riemann–Liouville derivative as shown in Eq. (4) is strictly equivalent to Eq. (2).

Definition 4

Fractional derivative of compounded functions is defined as [16]:

Definition 5

The integral with respect to \((\mathrm{d}t)^{\alpha }\) is defined as the solution of fractional differential equation [17]:

Lemma 1

Let f(t) denote a continuous function. Then the solution of Eq. (6) is given by [17]:

Definition 6

If the function f(t) is αth differentiable, then the following equalities hold:

Mittag-Leffler function

The Mittag-Leffler function, which plays a very important role in fractional differential equations, was introduced by Mittag-Leffler in 1903 [18]. The Mittag-Leffler function \(E_{\alpha }(t)\) is defined by the power series \(E_{\alpha }(t)=\sum _{k=0}^{\infty } \frac{t^{k} }{\Gamma (\alpha k+1)}\), \(\alpha >0\),

which

As further result of the above formula

The matrix extension of the Mittag-Leffler function for \(A\in M_{m}\) is defined by the following representation:

If \(A,B\in {\mathbb R}^{n\times n}\) and \(\alpha >0\), then it is easy to prove the following nice properties of Mittag-Leffler matrix \(E_{\alpha } (At^{\alpha })\):

(i) \(E_{\alpha }^{-1} (At^{\alpha } )\approx E_{\alpha } (-At^{\alpha } ),\)

(ii) If P is a non-singular matrix, then \(E_{\alpha } (P^{-1} AP)=P^{-1} E_{\alpha } (A)P\),

(iii) \(E_{\alpha } ((A+B)t^{\alpha } )\approx E_{\alpha } (At^{\alpha } )E_{\alpha } (Bt^{\alpha } )\) if and only if \(AB=BA\),

(iv) \(E_{\alpha }^{-1} (At^{\alpha } )\approx E_{\alpha } (A(-t)^{\alpha })\).
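The scalar and matrix Mittag-Leffler functions can be evaluated numerically for moderate arguments. The sketch below assumes the standard series \(\sum _{k} z^{k} /\Gamma (\alpha k+1)\) and its matrix analogue with \(A^{k}\); the helper names, the truncation rule and the test matrices are illustrative assumptions. It checks only property (ii), which holds exactly, on a matrix that P diagonalizes.

```python
import math

def mittag_leffler(alpha, z, max_terms=400):
    """Truncated series sum_k z^k / Gamma(alpha*k + 1); meant for moderate |z|."""
    total = 0.0
    for k in range(max_terms):
        arg = alpha * k + 1.0
        if arg > 170.0:  # math.gamma overflows a float just above this argument
            break
        total += z ** k / math.gamma(arg)
    return total

def mat_mul(X, Y):
    """Product of two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mittag_leffler_matrix(alpha, A, max_terms=400):
    """Same truncated series with matrix powers: sum_k A^k / Gamma(alpha*k + 1)."""
    n = len(A)
    power = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # A^0
    total = [[0.0] * n for _ in range(n)]
    for k in range(max_terms):
        arg = alpha * k + 1.0
        if arg > 170.0:
            break
        c = 1.0 / math.gamma(arg)
        total = [[total[i][j] + c * power[i][j] for j in range(n)] for i in range(n)]
        power = mat_mul(power, A)
    return total

alpha = 0.9
A = [[1.0, 2.0], [0.0, 3.0]]
P = [[1.0, 1.0], [0.0, 1.0]]
P_inv = [[1.0, -1.0], [0.0, 1.0]]

# Property (ii): E_alpha(P^{-1} A P) = P^{-1} E_alpha(A) P.
lhs = mittag_leffler_matrix(alpha, mat_mul(mat_mul(P_inv, A), P))
rhs = mat_mul(mat_mul(P_inv, mittag_leffler_matrix(alpha, A)), P)
gap = max(abs(lhs[i][j] - rhs[i][j]) for i in range(2) for j in range(2))
print(gap)
```

Here \(P^{-1} AP\) is the diagonal matrix with entries 1 and 3, so `lhs` reduces to the scalar values \(E_{\alpha }(1)\) and \(E_{\alpha }(3)\) on the diagonal. Properties (i), (iii) and (iv) are stated with \(\approx\) and are deliberately not asserted.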

Corollary 1

[19] If the matrix A is diagonalizable, that is, there exists an invertible matrix T such that

then, we have

Next, suppose the matrix A is similar to a Jordan canonical form, that is there exists an invertible matrix T such that

where \(J_{i}\), \(1\, \le \, i\, \le \, r\), has the following form

and \(\sum _{i=1}^{r}n_{i} =n\). Obviously,

and

where \(\mathcal {C}_{k}^{j}\), \(1\le j\le n_{i} -1,1\le i\le r\) are the binomial coefficients.

Fractional trigonometric functions and Mittag-Leffler logarithm function

The idea of fractional trigonometric functions was stated by Jumarie [20], who asserted that these functions are not periodic. Now, we introduce new fractional trigonometric functions which are periodic with the period \(2\pi _{\alpha } \approx 2\pi\). Analogously to the trigonometric functions, we can write

and

with

These fractional functions have the period \(2\pi _{\alpha } \approx 2\pi\). Figure 1 shows \(\sin _{\alpha } (t^{\alpha } )\) for \(\alpha =1,\;0.95,\;0.9\) which is periodic with the period \(2\pi _{\alpha } \approx 2\pi\).

Some properties of the fractional trigonometric functions are presented as follows:

The fractional functions \(\sin _{\alpha } (\omega ^{\alpha } t^{\alpha } )\) and \(\cos _{\alpha } (\omega ^{\alpha } t^{\alpha } )\) both are periodic functions with the period \((2\pi _{\alpha } /\omega )\).

In addition Eq. (11) provides the equalities

Similar formulas hold for \(\cos _{\alpha } (t-s)^{\alpha }\) and \(\sin _{\alpha } (t-s)^{\alpha }\).

Substituting \(\theta\) for both t and s in the addition formulas gives

Additional formulas come from combining the equations

We add the two equations to get \(\cos _{\alpha } 2\theta ^{\alpha } \approx 2\cos _{\alpha }^{2} \theta ^{\alpha } -1\) and subtract the second from the first to get \(\cos _{\alpha } 2\theta ^{\alpha } \approx 1-2\sin _{\alpha }^{2} \theta ^{\alpha }\).
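The fractional trigonometric functions can be tabulated numerically. The sketch below assumes an Euler-type definition, \(E_{\alpha } (it^{\alpha } )=\cos _{\alpha } (t^{\alpha } )+i\sin _{\alpha } (t^{\alpha } )\), in the spirit of [20]; since the displayed definitions are not reproduced in this text, this choice, the function names and the truncated series are assumptions. Only the classical case \(\alpha =1\) is checked, where the identities above hold exactly.

```python
import math

def mittag_leffler(alpha, z, max_terms=400):
    """Truncated series sum_k z^k / Gamma(alpha*k + 1); z may be complex."""
    total = 0.0
    for k in range(max_terms):
        arg = alpha * k + 1.0
        if arg > 170.0:  # math.gamma overflows a float just above this argument
            break
        total += z ** k / math.gamma(arg)
    return total

def cos_alpha(alpha, t):
    """Assumed definition: real part of E_alpha(i * t^alpha), for t >= 0."""
    return mittag_leffler(alpha, 1j * t ** alpha).real

def sin_alpha(alpha, t):
    """Assumed definition: imaginary part of E_alpha(i * t^alpha), for t >= 0."""
    return mittag_leffler(alpha, 1j * t ** alpha).imag

# For alpha = 1 the functions reduce to cos and sin, so the double-angle
# identity holds up to rounding error.
theta = 0.7
double_angle_gap = abs(cos_alpha(1.0, 2 * theta) - (2 * cos_alpha(1.0, theta) ** 2 - 1))
print(double_angle_gap)

# Values for the orders shown in Figure 1, on a short grid of t values.
for alpha in (1.0, 0.95, 0.9):
    print(alpha, [round(sin_alpha(alpha, 0.5 * j), 4) for j in range(1, 5)])
```

The truncated series loses accuracy for large arguments, so the sketch is only meant for \(t\) of the order of a few periods.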

Definition 7

\(Ln_{\alpha } t\) denotes the inverse function of \(E_{\alpha }(t)\), referred to as the Mittag-Leffler logarithm. Clearly \(E_{\alpha }(Ln_{\alpha }t)=t\), and the Mittag-Leffler logarithm function is defined as [20]:
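The inverse relation \(E_{\alpha }(Ln_{\alpha }t)=t\) can be illustrated numerically. The sketch below does not use the closed-form expression of [20]; it swaps in a plain bisection on the truncated series and is restricted to real \(t\ge 1\), where \(E_{\alpha }\) is increasing on \([0,\infty )\) because all series coefficients are positive. The name `ln_alpha` and the tolerance are assumptions of this example.

```python
import math

def mittag_leffler(alpha, z, max_terms=400):
    """Truncated series sum_k z^k / Gamma(alpha*k + 1); meant for moderate |z|."""
    total = 0.0
    for k in range(max_terms):
        arg = alpha * k + 1.0
        if arg > 170.0:  # math.gamma overflows a float just above this argument
            break
        total += z ** k / math.gamma(arg)
    return total

def ln_alpha(alpha, t, tol=1e-12):
    """Numerically solve E_alpha(x) = t for x >= 0 (sketch valid for t >= 1)."""
    if t < 1.0:
        raise ValueError("this sketch only covers t >= 1")
    lo, hi = 0.0, 1.0
    while mittag_leffler(alpha, hi) < t:  # enlarge the bracket until it contains t
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mittag_leffler(alpha, mid) < t:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

x = ln_alpha(0.9, 5.0)
round_trip = mittag_leffler(0.9, x)  # should reproduce 5.0 up to the tolerance
classical = ln_alpha(1.0, math.e)    # for alpha = 1 this is the natural logarithm
print(x, round_trip, classical)
```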

Fractional linear system and its stability analysis

Here, we will consider the following linear fractional differential system with modified Riemann–Liouville fractional derivative

with initial value \(x(0)=x_{0} =(x_{10} ,x_{20} ,\ldots ,x_{n0} )^{T}\), where \(x=(x_{1} ,x_{2},\ldots ,x_{n} )^{T}\), \(\alpha \in (0,1]\) and \(A\in {\mathbb R}^{n\times n}\). Applying the Laplace transform to the above system and using the initial condition, the general solution can be written as

The stability of the equilibrium of system (18) was first defined and established by Matignon as follows [21].

Definition 8

The linear fractional differential system (18) is said to be

(i) stable if for any initial value \(x_{0}\), there exists an ε > 0 such that for all t ≥ 0,

(ii) asymptotically stable if it is stable and \(\mathop {\lim }\nolimits _{t\rightarrow \infty } \left\| x(t^{\alpha } )\right\| =0\).

Theorem 1

The linear fractional differential system (18) is asymptotically stable if all the eigenvalues of A satisfy

Theorem 1 can be proved analogously to Proposition 3.1 in [21].

Now, we state the following important existence–uniqueness theorem for solutions of the initial value problem (21).
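An eigenvalue test of this kind is easy to automate for small matrices. The displayed condition of Theorem 1 is not reproduced in this text, so the sketch below assumes the Matignon-type sector condition \(|\arg (\lambda )|>\alpha \pi /2\) from [21]; that condition, the function names and the 2 × 2 restriction are assumptions of this example.

```python
import cmath
import math

def eigenvalues_2x2(A):
    """Eigenvalues of a 2 x 2 matrix from its trace and determinant."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4.0 * det)
    return (tr + disc) / 2.0, (tr - disc) / 2.0

def satisfies_sector_condition(A, alpha):
    """True if every eigenvalue has |arg(lambda)| > alpha * pi / 2 (assumed test)."""
    bound = alpha * math.pi / 2.0
    return all(abs(cmath.phase(lam)) > bound for lam in eigenvalues_2x2(A))

rotation = [[0.0, 1.0], [-1.0, 0.0]]  # eigenvalues +i and -i, argument pi/2
saddle = [[1.0, 0.0], [0.0, -1.0]]    # one eigenvalue on the positive real axis
sink = [[-1.0, 0.0], [0.0, -2.0]]     # both eigenvalues on the negative real axis

print(satisfies_sector_condition(rotation, 0.9))
print(satisfies_sector_condition(saddle, 0.9))
print(satisfies_sector_condition(sink, 1.0))
```

Under the assumed condition the admissible sector widens as \(\alpha\) decreases, so purely imaginary eigenvalues pass the test for \(\alpha <1\) although they sit exactly on the boundary for \(\alpha =1\).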

Theorem 2

([22]) Let \(0<\alpha \le 1\), \((0,b)\subset {\mathbb R}\), U be an open connected set in \({\mathbb R}^{n+1}\), \(\Delta =(0,b)\times U\) and \((t_{0} ,x_{0} )\in \Delta\). If

are continuous matrices in \(\left[ 0,b\right]\), then equation

has a unique solution \(x(t^{\alpha } )\), continuous in (0, b], such that \(x(t_{0}^{\alpha } )=x_{0}.\)

Fractional Floquet system

In this section, we consider fractional order linear periodic differential equations involving the modified Riemann–Liouville derivative that can be written in the form

where we assume that \(f_{\alpha } :(a,b)\rightarrow {\mathbb R}\) is a continuous periodic function with smallest positive period \(w_{\alpha }\), that is, \(f_{\alpha } ((t+w_{\alpha } )^{\alpha } )\approx f_{\alpha } (t^{\alpha } )\).

Also, the solution of Eq. (22) in terms of the Mittag-Leffler function is

where C is a constant.

Example 1

Consider the fractional order linear periodic differential equation

Thus, by (23), \(x(t^{\alpha } )\approx C\, E_{\alpha } (\frac{1}{i^{\alpha +1} } \cos _{\alpha } (t^{\alpha } ))\), \(t\in {\mathbb R}\), is a general solution of (24) on \({\mathbb R}\).

Definition 9

We say x is a solution of (22) on an interval \(L\subset (0,b)\) if x is a continuously \(\alpha\)th differentiable function on L and for \(t\in L\), x satisfies (22).

In the rest of this section, we generalize linear periodic systems to fractional periodic systems involving the modified Riemann–Liouville derivative of the form

where \(a_{\alpha _{ij}} (t^{\alpha })\), \((i,\,j=1,2,\ldots ,n)\) are given continuous periodic functions with smallest positive period \(w_{\alpha }\) on an interval L.

This system can be transformed to a vector–matrix form as

where

and

where the components of the matrix \(A_{\alpha }\) are continuous and periodic functions with smallest positive period \(w_{\alpha }\) (that is, \(A_{\alpha } ((t+w_{\alpha } )^{\alpha } )\approx A_{\alpha } (t^{\alpha } )\)).

Consider the matrix fractional differential equation

where

are \(n\times n\) matrix variables and \(A_{\alpha }\) is an n × n continuous matrix function on L.

Theorem 3

(Existence–Uniqueness Theorem) If the entries of the square matrix \(A_{\alpha }\) are continuous on an interval L containing \(t_{0}\), then the initial value problem

has one and only one solution X on the whole interval L.

Proof

The proof is similar to that of Theorem 2.21 in [2]. \(\square\)

Definition 10

An n × n matrix fractional function \(\Phi _{\alpha }\), defined on an interval L, is called a fractional fundamental matrix of the linear system (26) if \(\Phi _{\alpha }\) is a solution of the fractional matrix equation (27) on L and \(\det \Phi _{\alpha } (t^{\alpha } )\ne 0\) on L.

Theorem 4

If \(\Phi _{\alpha }\) is a fractional fundamental matrix for \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\) , then, for an arbitrary n × n non-singular constant matrix C, \(\Psi _{\alpha } =\Phi _{\alpha } C\) is a general fractional fundamental matrix of \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\).

Proof

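Since \(\Phi _{\alpha }\) is a fractional fundamental matrix solution to \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\) and setting \(\Psi _{\alpha } =\Phi _{\alpha } C\), we have \(D_{t}^{\alpha } \, \Psi _{\alpha } =(D_{t}^{\alpha } \, \Phi _{\alpha } )C=A_{\alpha } (t^{\alpha } )\Phi _{\alpha } C=A_{\alpha } (t^{\alpha } )\Psi _{\alpha }\),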

and also \(\Psi _{\alpha }\) is a continuously \(\alpha\)th differentiable function on L. Thus, \(\Psi _{\alpha } =\Phi _{\alpha } C\) is a solution of the matrix fractional equation (27). Since \(\Phi _{\alpha }\) is a fractional fundamental matrix solution of (26), Definition 10 implies that \(\det [\Phi _{\alpha } (t^{\alpha } )] \ne 0\). Moreover, \(\det [C] \ne 0\). Hence, \(\det [\Psi _{\alpha } (t^{\alpha } )]=\det [\Phi _{\alpha } (t^{\alpha } )]\det [C]\ne 0\)

for \(t\in L\), and by Definition 10, \(\Psi _{\alpha } =\Phi _{\alpha } C\) is a fractional fundamental matrix of (27). \(\square\)

Theorem 5

If C is an n × n non-singular matrix, then there is a matrix B such that \(E_{\alpha } (B)=C\).

Proof

To avoid some tedious calculations, we prove this theorem for 2 × 2 matrices. Let \(\mu _{1} ,\; \mu _{2}\ne \;0\) be the eigenvalues of the nonsingular matrix C. We first consider two special cases:

Case I Let

then, in this case we are looking for a diagonal matrix

so that \(E_{\alpha } (B)=C\). For this purpose, according to the definition of the Mittag-Leffler function, we pick \(b_{1}\) and \(b_{2}\) so that

Hence, the matrix B can be taken as

Case II Let

then, we seek a matrix B of the form

so that \(E_{\alpha } (B)=C\). We choose the parameters \(a_{1}\) and \(a_{2}\) so that

Hence, in view of the derivative of the inverse function, the matrix B can be taken as

Case III Let \(C \in \mathbb {R}^{2\times 2}\) be an arbitrary matrix such that \(\det [C]\ne 0\). By Corollary 1, there is a non-singular matrix P such that \(C=PJP^{-1}\), where

Now, by the previous two cases there is a matrix \(B_{1}\) so that \(E_{\alpha } (B_{1} )=J\).

If we set the matrix B as

then, we see that

Similarly, the matrix B can be found for higher orders n. \(\square\)
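The construction in Cases I and III can be traced numerically for a matrix with distinct real eigenvalues. The sketch below assumes the standard Mittag-Leffler series, a bisection-based inverse restricted to real \(\mu \ge 1\), and an illustrative choice of P and J; none of these names or values come from the proof itself.

```python
import math

def mittag_leffler(alpha, z, max_terms=400):
    """Truncated series sum_k z^k / Gamma(alpha*k + 1); meant for moderate |z|."""
    total = 0.0
    for k in range(max_terms):
        arg = alpha * k + 1.0
        if arg > 170.0:  # math.gamma overflows a float just above this argument
            break
        total += z ** k / math.gamma(arg)
    return total

def ln_alpha(alpha, t, tol=1e-12):
    """Bisection solve of E_alpha(x) = t for x >= 0 (sketch valid for t >= 1)."""
    lo, hi = 0.0, 1.0
    while mittag_leffler(alpha, hi) < t:
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mittag_leffler(alpha, mid) < t:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def mat_mul(X, Y):
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mittag_leffler_matrix(alpha, A, max_terms=400):
    """Truncated matrix series sum_k A^k / Gamma(alpha*k + 1)."""
    n = len(A)
    power = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    total = [[0.0] * n for _ in range(n)]
    for k in range(max_terms):
        arg = alpha * k + 1.0
        if arg > 170.0:
            break
        c = 1.0 / math.gamma(arg)
        total = [[total[i][j] + c * power[i][j] for j in range(n)] for i in range(n)]
        power = mat_mul(power, A)
    return total

alpha = 0.9
P, P_inv = [[1.0, 1.0], [0.0, 1.0]], [[1.0, -1.0], [0.0, 1.0]]
J = [[2.0, 0.0], [0.0, 5.0]]                    # Case I: diagonal, mu_1 = 2, mu_2 = 5
C = mat_mul(mat_mul(P, J), P_inv)               # Case III: C = P J P^{-1}
B1 = [[ln_alpha(alpha, 2.0), 0.0], [0.0, ln_alpha(alpha, 5.0)]]
B = mat_mul(mat_mul(P, B1), P_inv)              # candidate with E_alpha(B) = C
C_rebuilt = mittag_leffler_matrix(alpha, B)
max_error = max(abs(C_rebuilt[i][j] - C[i][j]) for i in range(2) for j in range(2))
print(max_error)
```

The check relies on property (ii) of the matrix Mittag-Leffler function, which is why \(B=PB_{1} P^{-1}\) reproduces C up to the bisection tolerance. Complex or negative eigenvalues, and the Jordan-block case, are outside the scope of this sketch.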

Example 2

For example, consider

Here, we know that the solution is in general

for \(t\in {\mathbb R}\), where \(\beta , \mu \in {\mathbb R}\) denote two constants. Using all the above definitions, the fractional fundamental matrix is

Theorem 6

(Fractional Floquet’s Theorem) Every fractional fundamental matrix solution \(\Phi _{\alpha } (t^{\alpha } )\) of (26) has the form \(\Phi _{\alpha } (t^{\alpha } )\approx P_{\alpha } (t^{\alpha } )E_{\alpha } (Bt^{\alpha } )\),

where \(P_{\alpha }(t^{\alpha })\), B are \(n\times n\) matrices, \(P_{\alpha } ((t+w_{\alpha } )^{\alpha } )\approx P_{\alpha } (t^{\alpha } )\) for all t and B is a constant matrix.

Proof

Assume that \(\Phi _{\alpha } (t^{\alpha } )\) is a fractional fundamental matrix solution of (26). Then \(\Phi _{\alpha } ((t+w_{\alpha } )^{\alpha } )\) is also a fractional fundamental matrix solution, since \(A_{\alpha } (t^{\alpha } )\) is periodic of period \(w_{\alpha }\). Therefore, there is a nonsingular matrix C such that

From Theorem 5, there is a matrix B so that \(C=E_{\alpha } (w_{\alpha } B)\). For this matrix B, let \(P_{\alpha } (t^{\alpha } )\approx \Phi _{\alpha } (t^{\alpha } )E_{\alpha } (B(-t)^{\alpha } )\). Then

and the theorem is proved. \(\square\)

Definition 11

The eigenvalues \(\mu _{1} ,\mu _{2} ,\ldots ,\mu _{n}\) of \(C=\Phi _{\alpha }^{-1} (0)\Phi _{\alpha } (w_{\alpha } )\) are called the multipliers of the fractional Floquet system \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\), where \(\Phi _{\alpha } (t^{\alpha } )\) is a fractional fundamental matrix of system \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\).

Example 3

Solving the following equation,

we get that

so that

As a result \(E_{\alpha } \left( \frac{(\pi _{\alpha } )^{\alpha } }{2} \right)\) is the multiplier for this fractional differential equation.

Theorem 7

Let \(\Phi _{\alpha } (t^{\alpha } )\approx P_{\alpha } (t^{\alpha } )E_{\alpha } (Bt^{\alpha } )\) be the fractional fundamental matrix in Theorem 6. Then, x is a solution of the fractional Floquet system \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\) if and only if the vector function y defined by \(y(t^{\alpha } )=P_{\alpha }^{-1} (t^{\alpha } )x(t^{\alpha } )\) is a solution of \(D_{t}^{\alpha } \, y=By\), referred to below as system (30).

Proof

Assume that x is a solution of the fractional Floquet system \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\). Then, for some vector \(x_{0} \in \mathbb {R}^{n\times 1}\) we have \(x(t^{\alpha } )=\Phi _{\alpha } (t^{\alpha } )x_{0}\).

Now, by setting \(y(t^{\alpha } )=P_{\alpha }^{-1} (t^{\alpha } )x(t^{\alpha } )\), we get

which is a solution of (30).

Conversely, assume that y is a solution of system (30) and set \(x(t^{\alpha } )=P_{\alpha } (t^{\alpha } )y(t^{\alpha } )\). Since y is a solution of \(D_{t}^{\alpha } \, y=By\), there is a vector \(y_{0}\in \mathbb {R}^{n\times 1}\) such that \(y(t^{\alpha } )=E_{\alpha } (Bt^{\alpha } )y_{0}\).

It follows that

which is a solution of the fractional Floquet system \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\). \(\square\)

Theorem 8

Two matrices A and B are called similar if there exists a nonsingular matrix T such that \(A=TBT^{-1}\) [23].

Theorem 9

A fractional Floquet system \(D_{t}^{\alpha } \, x =A_{\alpha } (t^{\alpha } )x\) with the multipliers \(\mu _{1} ,\mu _{2} ,\ldots ,\mu _{n}\) is

-

(i)

asymptotically stable on \([0,\infty )\) if all multipliers satisfy \(\left| \mu _{i} \right| <1,\; 1\le i\le n,\)

-

(ii)

unstable on \([0,\infty )\), when there is an \(i_{0} ,\; 1\le i_{0} \le n,\) such that \(\left| \mu _{i_{0} } \right| >1\).

Proof

Without loss of generality, we prove this theorem for \(2\times 2\) matrices \(A_{\alpha }\). Let \(\Phi _{\alpha } (t^{\alpha } )\approx P_{\alpha } (t^{\alpha } )E_{\alpha } (Bt^{\alpha } )\) and C be the same as in Theorem 6. Therefore, the matrix B can be chosen such that \(E_{\alpha } (Bw^{\alpha } )=C\).

Suppose the matrix B is similar to a Jordan canonical form, i.e., there exists an invertible matrix M such that \(B=MJM^{-1}\). Now by letting \(\lambda _{1} ,\, \lambda _{2}\) as the eigenvalues of B, we see that for the matrix C we have

where either

Since the eigenvalues of H are the same as the eigenvalues of C, we take the multipliers \(\mu _{i}\) as \(\mu _{i} =E_{\alpha } (\lambda _{i} w^{\alpha } )\), \(i=1,2\). Since \(\left| \mu _{i} \right| =E_{\alpha } (Re(\lambda _{i} )w^{\alpha } )\), we have that

According to Theorem 7, there is a one-to-one correspondence between solutions of the fractional Floquet system \(D_{t}^{\alpha } \, x=A_{\alpha } (t^{\alpha } )x\) and system (30).

For constant \(Q_{1} >0\), we have

and for constant \(Q_{2} >0\), we get

Finally by Theorem 1 the results can be derived. \(\square\)
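The criterion of Theorem 9 translates directly into a check on the moduli of the multipliers. The sketch below takes a 2 × 2 matrix C as in Definition 11 and classifies the system; the function names and the sample matrices are hypothetical, and the case in which some multiplier has modulus exactly 1 and none exceeds 1 is reported as undecided because Theorem 9 does not cover it.

```python
import cmath

def multipliers_2x2(C):
    """Eigenvalues of a 2 x 2 matrix C, i.e. the multipliers of Definition 11."""
    (a, b), (c, d) = C
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4.0 * det)
    return (tr + disc) / 2.0, (tr - disc) / 2.0

def classify(C):
    """Apply the two cases of Theorem 9 to the moduli of the multipliers."""
    moduli = [abs(mu) for mu in multipliers_2x2(C)]
    if all(m < 1.0 for m in moduli):
        return "asymptotically stable"
    if any(m > 1.0 for m in moduli):
        return "unstable"
    return "not decided by Theorem 9"

contracting = [[0.5, 1.0], [0.0, 0.2]]  # multipliers 0.5 and 0.2
expanding = [[0.5, 0.0], [0.0, 2.0]]    # multipliers 0.5 and 2.0
print(classify(contracting))
print(classify(expanding))
```

Using `abs` on the complex eigenvalues matters here, since the criterion is stated for the modulus \(\left| \mu _{i} \right|\) and not for the real part.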

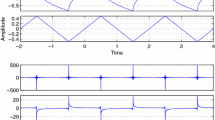

Fig. 2 The numerical approximations of equation (29) when \(\alpha =1,\; 0.98,\; 0.95\)

In Example 3, we saw that the multiplier of the fractional differential equation (29) is \(\mu =E_{\alpha } (\frac{(\pi _{\alpha } )^{\alpha } }{2} )\). For \(0<\alpha \le 1\), the solution of the fractional differential equation (29) is unstable, since we always have \(\left| \mu \right| >1\). Figure 2 indicates that equation (29) with parameters \(\alpha =1,\; 0.98,\; 0.95\) is unstable.

Example 4

We can show \(\Phi _{\alpha } (t^{\alpha } )\) in the form

is a fractional fundamental matrix for the fractional Floquet system

Since

the multipliers are \(\mu _{1}=\mu _{2}=E _{\alpha }(-(2\pi _{\alpha })^{\alpha })\). For \(0<\alpha \le 1\), the solution of system (31) is asymptotically stable, as we always have \(\left| \mu _{i} \right| <1\) for \(i=1,2\). Figure 3 indicates that the solution of the fractional Floquet system (31) with parameters \(\alpha =1,\; 0.98,\; 0.95\) is asymptotically stable.

Fig. 3 The numerical approximations of fractional Floquet system (31) when \(\alpha =1,\; 0.98,\; 0.95\)
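The multipliers quoted in Examples 3 and 4 can be evaluated for the three orders used in the figures. The sketch below assumes the standard Mittag-Leffler series and, since only \(2\pi _{\alpha } \approx 2\pi\) is stated, replaces \(\pi _{\alpha }\) by \(\pi\); both are assumptions of this example, and the variable names are illustrative.

```python
import math

def mittag_leffler(alpha, z, max_terms=400):
    """Truncated series sum_k z^k / Gamma(alpha*k + 1); meant for moderate |z|."""
    total = 0.0
    for k in range(max_terms):
        arg = alpha * k + 1.0
        if arg > 170.0:  # math.gamma overflows a float just above this argument
            break
        total += z ** k / math.gamma(arg)
    return total

alphas = (1.0, 0.98, 0.95)

# Example 3: mu = E_alpha(pi_alpha^alpha / 2), with pi_alpha replaced by pi (assumption).
mu_example3 = [mittag_leffler(a, math.pi ** a / 2.0) for a in alphas]

# Example 4: mu = E_alpha(-(2 pi_alpha)^alpha), same replacement of pi_alpha by pi.
mu_example4 = [mittag_leffler(a, -((2.0 * math.pi) ** a)) for a in alphas]

for a, m3, m4 in zip(alphas, mu_example3, mu_example4):
    print(a, m3, m4)
```

A positive argument gives a series of positive terms starting at 1, so the Example 3 multiplier exceeds 1 for every order, consistent with the instability reported there.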

Conclusion

In the present article, we have recalled some properties of the Mittag-Leffler function and the Mittag-Leffler logarithm function as described in [20]. Then we have presented fractional trigonometric functions and the fractional Floquet system based on the modified Riemann–Liouville derivative. Since the study of stability for the fractional Floquet system is very important, the asymptotic stability of such systems has been investigated. We have shown that the fractional Floquet system is asymptotically stable if all multipliers have modulus less than 1. Finding the stability of nonlinear periodic fractional systems and delay linear periodic fractional systems can be an interesting topic for future research work.

References

1. Hale, J.K.: Ordinary Differential Equations. Pure and Applied Mathematics, vol. XXI. Wiley-Interscience, New York (1969)

2. Kelley, W.G., Peterson, A.C.: The Theory of Differential Equations: Classical and Qualitative. Springer Science & Business Media (2010)

3. Floquet, G.: Sur les équations différentielles linéaires à coefficients périodiques. Ann. Ecole Norm. Sup. 12, 47–49 (1883)

4. Oldham, K.B., Spanier, J.: The Fractional Calculus. Academic Press, New York (1974)

5. Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999)

6. Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, San Diego (2006)

7. Reyes-Melo, E., Martinez-Vega, J., Guerrero-Salazar, C., Ortiz-Mendez, U.: Application of fractional calculus to the modeling of dielectric relaxation phenomena in polymeric materials. J. Appl. Poly. Sci. 98, 923–935 (2005)

8. Schumer, R., Benson, D.: Eulerian derivation of the fractional advection-dispersion equation. J. Contam. Hydrol. 48, 69–88 (2001)

9. Ansari, A., Refahi Sheikhani, A., Saberi Najafi, H.: Solution to system of partial fractional differential equations using the fractional exponential operators. Math. Methods Appl. Sci. 35, 119–123 (2012)

10. Ansari, A., Refahi Sheikhani, A., Kordrostami, S.: On the generating function \(e^{xt+y\varphi (t)}\) and its fractional calculus. Cent. Euro. J. Phy. (2013). doi:10.2478/s11534013-0195-3

11. Ansari, A., Sheikhani, A.R.: New identities for the Wright and the Mittag-Leffler functions using the Laplace transform. Asian-Euro. J. Math. 7, 1450038 (2014)

12. Saberi Najafi, H., Refahi Sheikhani, A., Ansari, A.: Stability analysis of distributed order fractional differential equations. Abstr. Appl. Anal. 2011, Article ID 175323 (2011). doi:10.1155/2011/175323

13. Aminikhah, H., Refahi Sheikhani, A., Rezazadeh, H.: Stability analysis of distributed order fractional Chen system. Sci. World J. 2013, Article ID 645080 (2013). doi:10.1155/2013/645080

14. Aminikhah, H., Refahi Sheikhani, A., Rezazadeh, H.: Stability analysis of linear distributed order fractional system with multiple time delays. U.P.B. Sci. Bull. Ser. A 77, 207–218 (2015)

15. Jumarie, G.: Stochastic differential equations with fractional Brownian motion input. Int. J. Syst. Sci. 24, 1113–1132 (1993)

16. Jumarie, G.: Modified Riemann–Liouville derivative and fractional Taylor series of non-differentiable functions further results. Comp. Math. Appl. 51, 1367–1376 (2006)

17. Jumarie, G.: Oscillation of non-linear systems close to equilibrium position in the presence of coarse-graining in time and space. Non. Anal. Modell. Control 14, 177–197 (2009)

18. Mittag-Leffler, M.G.: Sur la nouvelle fonction \(E_{\alpha }(x)\). Comptes Rendus Acad. Sci. Paris 137, 554–558 (1903)

19. Qian, D., Li, C., Agarwal, R.P., Wong, P.J.Y.: Stability analysis of fractional differential system with Riemann–Liouville derivative. Math. Comp. Model. 52, 862–874 (2010)

20. Jumarie, G.: Laplace’s transform of fractional order via the Mittag-Leffler function and modified Riemann–Liouville derivative. Appl. Math. Lett. 22, 1659–1664 (2009)

21. Matignon, D.: Stability results for fractional differential equations with applications to control processing. Comp. Eng. Syst. Appl. 2, 963–968 (1996)

22. Bonilla, B., Rivero, M., Trujillo, J.J.: On systems of linear fractional differential equations with constant coefficients. Appl. Math. Comp. 187, 68–78 (2007)

23. Datta, B.N.: Numerical Linear Algebra and Applications, 2nd edn. SIAM (2010)

Acknowledgments

We are very grateful to anonymous referees for their careful reading and valuable comments which led to the improvement of this paper.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Rezazadeh, H., Aminikhah, H. & Refahi Sheikhani, A.H. Analytical studies for linear periodic systems of fractional order. Math Sci 10, 13–21 (2016). https://doi.org/10.1007/s40096-015-0172-7

DOI: https://doi.org/10.1007/s40096-015-0172-7