Abstract

In this work, we consider fractional variational problems depending on higher order fractional derivatives. We obtain optimality conditions for such problems and we present and discuss some examples. We conclude with possible research directions.


1 Introduction

Fractional calculus and the calculus of variations are subjects that have attracted many scientists over time. To the best of our knowledge, it was F. Riewe who first linked these two topics in his work [20]: the fractional Euler–Lagrange equation was one of the formulas presented therein.

The theory of fractional variational calculus has been developed in this century, and a substantial body of work on the subject has appeared in recent years; see, e.g., Refs. [1, 3, 4, 6, 8, 9, 11, 14]. Although many works are available in the literature, studies with a Lagrangian depending on derivatives of order greater than one are scarce; we could find only three [1, 2, 17]. However: (1) the necessary conditions presented in Refs. [1, 2] are derived formally, and little care is taken with the boundary conditions used in the various problems considered in those papers; (2) in Ref. [17], the theory is developed using Riemann–Liouville fractional derivatives with restrictions on their order.

In this work, we consider and study fractional variational problems depending on higher order Caputo fractional derivatives. Precisely, we define the function space in which we search for solutions of the problem, derive a first-order optimality condition in integral form, and then obtain the Euler–Lagrange equation. We also mention another possible direction for generalizing problems depending on only one fractional derivative, namely by considering a sequential fractional derivative (cf. Remark 3.11 below); we have not seen such an approach in the literature before. We note that, contrary to the classical case, our working problems cannot, in general, be solved using the results in Ref. [5], namely the Pontryagin maximum principle. Indeed, the Caputo fractional state equation there consists of a vector of fractional derivatives, all of the same order \(\alpha >0\), whereas here we deal with fractional derivatives of different orders (cf., for example, (5) below).

The last section of this work deals with a sufficient condition for the basic problem, i.e., for the fractional variational problem depending on only one fractional derivative. Results stating sufficient conditions for the existence of minimizers of the functional in question are hard to find; the existing ones are mostly related to convexity of the Lagrangian function L [7]. In the classical theory of the calculus of variations, one of the deepest results is the Jacobi sufficient (and, incidentally, necessary) condition [23, Theorem 10.5.1]. An analogue of this result for problems containing fractional derivatives does not exist in the literature, perhaps because the classical proof relies heavily on the chain rule, namely, \((f^2(t))'=2f(t)f'(t)\) (the reader should be aware that the fractional chain rule is not as ‘nice’ as this). We note that even the classical Jacobi condition is not easy to use in practice, since it requires the solution of a certain second-order differential equation, which is often impossible to obtain. To bypass this issue, Garaev derived a sufficient condition for the classical problem based on an inequality [15]. Inspired by this recent work, we obtain a sufficient condition for the fractional (basic) problem. We emphasize, though, that an analogue of the necessary and sufficient classical Jacobi condition for the fractional variational problem is still an open question.
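The failure of the chain rule can be made concrete with a small example. Take \(f(t)=t\) on [0, 1] and \(0<\alpha \le 1\). The Python sketch below compares \({^c}D^\alpha _{0^+}(f^2)\) with \(2f\,{^c}D^\alpha _{0^+}f\). It uses the standard power rule \({^c}D^\alpha _{0^+}t^k=\frac{\Gamma (k+1)}{\Gamma (k+1-\alpha )}t^{k-\alpha }\) for integers \(k\ge 1\). The helper names are hypothetical and serve this illustration only.

```python
import math

def caputo_power(k: int, alpha: float, t: float) -> float:
    """Caputo derivative (a = 0, 0 < alpha <= 1) of t**k, integer k >= 1, at t > 0 (power rule)."""
    return math.gamma(k + 1) / math.gamma(k + 1 - alpha) * t ** (k - alpha)

def chain_rule_gap(alpha: float, t: float) -> float:
    """D^alpha(f^2)(t) - 2 f(t) D^alpha f(t) for f(t) = t; zero iff the classical chain rule holds."""
    lhs = caputo_power(2, alpha, t)          # D^alpha (t^2)
    rhs = 2 * t * caputo_power(1, alpha, t)  # 2 t D^alpha t
    return lhs - rhs

for alpha in (0.25, 0.5, 0.75, 1.0):
    print(alpha, chain_rule_gap(alpha, 0.5))
```

A short computation gives the gap \(2t^{2-\alpha }(\alpha -1)/((2-\alpha )\Gamma (2-\alpha ))\). This is strictly negative for \(\alpha <1\) and vanishes at \(\alpha =1\), where \((f^2)'=2ff'\) is recovered.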

2 Fractional calculus

In this section, we give a (very) brief introduction to the concepts and results we will use in this work. For a thorough study of the subject, we refer the reader to the monographs [10, 18].

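Throughout the text, we always consider two real numbers a and b with \(a<b\). For \(\alpha >0\) and an interval [a, b], the Riemann–Liouville left and right fractional integrals of order \(\alpha \) of a function f are defined by

$$\begin{aligned} I_{a^+}^\alpha f(t)=\frac{1}{\Gamma (\alpha )}\int _a^t(t-s)^{\alpha -1}f(s)\,ds\quad \text{and}\quad I_{b^-}^\alpha f(t)=\frac{1}{\Gamma (\alpha )}\int _t^b(s-t)^{\alpha -1}f(s)\,ds, \end{aligned}$$

respectively. We also put \(I_{a^+}^0f=I_{b^-}^0f=f\).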
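The left Riemann–Liouville integral can be approximated numerically even though its kernel is weakly singular. The Python sketch below shows one possible approach, chosen by us and not taken from the references. The substitution \(w=(t-s)^\alpha \) turns the definition into \(I_{a^+}^\alpha f(t)=\frac{1}{\Gamma (\alpha +1)}\int _0^{(t-a)^\alpha }f(t-w^{1/\alpha })\,dw\), whose integrand is bounded, and the composite Simpson rule is then applied. The function name `rl_left_integral` is hypothetical.

```python
import math

def rl_left_integral(f, alpha: float, a: float, t: float, n: int = 2000) -> float:
    """Approximate the left Riemann-Liouville integral I_{a+}^alpha f(t), alpha > 0.

    The substitution w = (t - s)**alpha removes the weak singularity of the kernel;
    the resulting bounded integral is evaluated with the composite Simpson rule.
    """
    if n % 2:
        raise ValueError("n must be even for Simpson's rule")
    if t <= a:
        return 0.0
    upper = (t - a) ** alpha
    h = upper / n
    # Endpoints: w = 0 corresponds to s = t, and w = upper corresponds to s = a.
    total = f(t) + f(a)
    for j in range(1, n):
        s = max(a, t - (j * h) ** (1 / alpha))  # clamp guards against round-off below a
        total += (4 if j % 2 else 2) * f(s)
    return total * h / (3 * math.gamma(alpha + 1))

# Compare with the closed form I_{0+}^{1/2} t = t^{3/2} / Gamma(5/2) at t = 1.
print(rl_left_integral(lambda s: s, 0.5, 0.0, 1.0), 1 / math.gamma(2.5))
```

The substitution is only one design choice. Product-integration rules or adaptive quadrature with algebraic weights would serve equally well.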

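Now, let \(n-1<\alpha <n\) with \(n\in \mathbb {N}\). The Riemann–Liouville left and right fractional derivatives of order \(\alpha \) of a function f are defined by

$$\begin{aligned} D^\alpha _{a^+}f(t)=\frac{d^n}{dt^n}I_{a^+}^{n-\alpha }f(t)\quad \text{and}\quad D^\alpha _{b^-}f(t)=(-1)^n\frac{d^n}{dt^n}I_{b^-}^{n-\alpha }f(t), \end{aligned}$$
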
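respectively. Finally, the Caputo left and right fractional derivatives of order \(\alpha \) of a function f are defined by

$$\begin{aligned} {^c}D^\alpha _{a^+}f(t)=D^\alpha _{a^+}\Big [f-\sum _{k=0}^{n-1}\frac{f^{(k)}(a)}{k!}(\cdot -a)^k\Big ](t)\quad \text{and}\quad {^c}D^\alpha _{b^-}f(t)=D^\alpha _{b^-}\Big [f-\sum _{k=0}^{n-1}\frac{f^{(k)}(b)}{k!}(\cdot -b)^k\Big ](t), \end{aligned}$$

respectively.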

Remark 2.1

It is well known that, if \(f\in C^n\), then \({^c}D^\alpha _{a^+}f(t)=I_{a^+}^{n-\alpha }f^{(n)}(t)\).
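The difference between the Caputo and Riemann–Liouville derivatives shows up already for the affine function \(f(t)=c_0+c_1t\) on [0, b], with \(0<\alpha <1\). Take the Caputo derivative as the Riemann–Liouville derivative of \(f-f(0)\), as in the proof of Lemma 2.3 below, and use the power rule \(D_{0^+}^\alpha t^{\beta -1}=\frac{\Gamma (\beta )}{\Gamma (\beta -\alpha )}t^{\beta -\alpha -1}\). Then the two derivatives differ by \(c_0t^{-\alpha }/\Gamma (1-\alpha )\). The Caputo value also agrees with \(I_{0^+}^{1-\alpha }f'(t)=c_1t^{1-\alpha }/\Gamma (2-\alpha )\), as Remark 2.1 predicts. The Python sketch below checks this; the function names are hypothetical.

```python
import math

def rl_power_derivative(beta: float, alpha: float, t: float) -> float:
    """D_{0+}^alpha applied to s**(beta-1), evaluated at t > 0 (power rule, 0 < alpha < 1)."""
    return math.gamma(beta) / math.gamma(beta - alpha) * t ** (beta - alpha - 1)

def rl_derivative_affine(c0: float, c1: float, alpha: float, t: float) -> float:
    """Riemann-Liouville derivative of f(s) = c0 + c1*s (constant: beta = 1, linear: beta = 2)."""
    return c0 * rl_power_derivative(1.0, alpha, t) + c1 * rl_power_derivative(2.0, alpha, t)

def caputo_derivative_affine(c0: float, c1: float, alpha: float, t: float) -> float:
    """Caputo derivative: Riemann-Liouville derivative of f - f(0), so the constant c0 drops out."""
    return rl_derivative_affine(0.0, c1, alpha, t)

alpha, t = 0.4, 0.3
print(rl_derivative_affine(5.0, 2.0, alpha, t))      # contains 5 t^(-alpha) / Gamma(1 - alpha)
print(caputo_derivative_affine(5.0, 2.0, alpha, t))  # equals 2 t^(1-alpha) / Gamma(2 - alpha)
```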

The following formulas will be used repeatedly, so we provide them for the benefit of the reader.

Proposition 2.2

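[18, cf. Property 2.1 p.71] Let \(\alpha ,\beta >0\). Then,

$$\begin{aligned} I_{a^+}^\alpha (\cdot -a)^{\beta -1}(t)&=\frac{\Gamma (\beta )}{\Gamma (\beta +\alpha )}(t-a)^{\beta +\alpha -1},\qquad I_{b^-}^\alpha (b-\cdot )^{\beta -1}(t)=\frac{\Gamma (\beta )}{\Gamma (\beta +\alpha )}(b-t)^{\beta +\alpha -1},\\ D_{a^+}^\alpha (\cdot -a)^{\beta -1}(t)&=\frac{\Gamma (\beta )}{\Gamma (\beta -\alpha )}(t-a)^{\beta -\alpha -1},\qquad D_{b^-}^\alpha (b-\cdot )^{\beta -1}(t)=\frac{\Gamma (\beta )}{\Gamma (\beta -\alpha )}(b-t)^{\beta -\alpha -1}, \end{aligned}$$

when they are defined (with the convention \(1/\Gamma (-k)=0\) for \(k\in \{0,1,2,\ldots \}\)).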
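The power rules are easy to encode once \(1/\Gamma \) is extended by zero at the poles \(0,-1,-2,\ldots \). The rules are \(I^\alpha d^{\beta -1}=\frac{\Gamma (\beta )}{\Gamma (\beta +\alpha )}d^{\beta +\alpha -1}\) and \(D^\alpha d^{\beta -1}=\frac{\Gamma (\beta )}{\Gamma (\beta -\alpha )}d^{\beta -\alpha -1}\), with \(d=t-a\) for the left-sided operators and \(d=b-t\) for the right-sided ones. The Python sketch below returns (coefficient, exponent) pairs and checks two consequences on a power function:

* the semigroup property \(I^{0.4}I^{0.3}=I^{0.7}\);
* \(D^{0.3}I^{0.3}=\textrm{id}\).

The helper names are hypothetical.

```python
import math

def rgamma(x: float) -> float:
    """Reciprocal Gamma function, extended by 0 at the poles x = 0, -1, -2, ..."""
    if x <= 0 and x == math.floor(x):
        return 0.0
    return 1.0 / math.gamma(x)

def integral_of_power(alpha: float, beta: float) -> tuple[float, float]:
    """I^alpha maps d^(beta-1) to coeff * d^exponent (d = t - a left-sided, d = b - t right-sided)."""
    return math.gamma(beta) * rgamma(beta + alpha), beta + alpha - 1

def derivative_of_power(alpha: float, beta: float) -> tuple[float, float]:
    """Riemann-Liouville D^alpha maps d^(beta-1) to coeff * d^exponent; coeff may vanish."""
    return math.gamma(beta) * rgamma(beta - alpha), beta - alpha - 1

# Semigroup check: I^0.4 (I^0.3 d^1.5) versus I^0.7 d^1.5.
c1, e1 = integral_of_power(0.3, 2.5)
c2, e2 = integral_of_power(0.4, e1 + 1)
c3, e3 = integral_of_power(0.7, 2.5)
print(c1 * c2, c3, e2, e3)

# D^0.3 undoes I^0.3 on d^1.5: the combined coefficient is 1 and the exponent returns to 1.5.
cd, ed = derivative_of_power(0.3, e1 + 1)
print(c1 * cd, ed)
```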

The following result is [18, Lemma 2.22], but for a different function space. For completeness, we present a proof here.

Lemma 2.3

Suppose \(0<\alpha \le 1\). If \(f,\ {^c}D_{a^+}^\alpha f\in C[a,b]\), then \(I_{a^+}^\alpha {^c}D_{a^+}^\alpha f(t)=f(t)-f(a)\) on [a, b].

Proof

By hypothesis, \(I_{a^+}^\alpha {^c}D_{a^+}^\alpha f{:}{=}g\in C[a,b]\). Applying \(I_{a^+}^{1-\alpha }\) to both sides, we obtain (since \({^c}D_{a^+}^\alpha f\) is continuous) \(I_{a^+}^1DI_{a^+}^{1-\alpha }[f-f(a)](t)=I_{a^+}^{1-\alpha }g(t)\), i.e., \(I_{a^+}^{1-\alpha }[f-f(a)](t)=I_{a^+}^{1-\alpha }g(t)\). Applying \({^c}D_{a^+}^{1-\alpha }\) to both sides and using [18, Lemma 2.21], we get \(g(t)=f(t)-f(a)\), which completes the proof. \(\square \)
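Lemma 2.3 can be sanity-checked numerically. The sketch below takes \(f=\exp \) on [0, 0.8] with \(\alpha =1/2\). It evaluates \({^c}D^\alpha _{0^+}f\) through Remark 2.1 as \(I_{0^+}^{1-\alpha }f'\), applies \(I_{0^+}^{\alpha }\) to the result, and compares with \(f(t)-f(0)\). Both fractional integrals use the substitution \(w=(t-s)^{\beta }\) (\(\beta \) the order of the integral) followed by Simpson's rule; this quadrature is our own choice. The nested evaluation is slow but adequate for a check, and the function names are hypothetical.

```python
import math

def rl_left_integral(f, alpha: float, a: float, t: float, n: int = 400) -> float:
    """I_{a+}^alpha f(t) via the substitution w = (t - s)**alpha and composite Simpson (n even)."""
    if t <= a:
        return 0.0
    upper = (t - a) ** alpha
    h = upper / n
    total = f(t) + f(a)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * f(max(a, t - (j * h) ** (1 / alpha)))
    return total * h / (3 * math.gamma(alpha + 1))

def caputo_left(df, alpha: float, a: float, t: float) -> float:
    """Caputo derivative of order 0 < alpha < 1 via Remark 2.1: I_{a+}^{1-alpha} f'(t)."""
    return rl_left_integral(df, 1 - alpha, a, t)

alpha, a, t = 0.5, 0.0, 0.8
# exp is its own derivative, so f' = math.exp.
lhs = rl_left_integral(lambda s: caputo_left(math.exp, alpha, a, s), alpha, a, t)
print(lhs, math.exp(t) - math.exp(a))  # Lemma 2.3 predicts equality
```

The outer integrand behaves like a square root near one endpoint, so the agreement is only to a few decimal places.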

3 Variational problems

For a natural number n and real numbers \(\alpha _i\), \(i\in \{1,\ldots ,n\}\), we define the condition below:

We define the space of functions \(\mathcal {F}_n=\{f\in C^{n-1}[a,b]: {^c}D^{\alpha _n}_{a^+}f(t)\in C[a,b]\}\). The space of variations is defined by \(\mathcal {V}_n=\{f\in \mathcal {F}_n: f^{(j)}(a)=0=f^{(j)}(b), \text{ for } \text{ all } j\in \{0,\ldots ,n-1\}\}\).
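A convenient family of admissible variations, used here only for illustration, is the polynomial \(\eta (t)=(t-a)^n(b-t)^n\). All derivatives up to order \(n-1\) vanish at both endpoints. Being a polynomial, it has a continuous Caputo derivative by Remark 2.1 (assuming \(n-1<\alpha _n\le n\)), so \(\eta \in \mathcal {V}_n\). The Python sketch below verifies the endpoint conditions with coefficient arithmetic; all helper names are hypothetical.

```python
def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists (lowest degree first)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def poly_pow(p, k):
    """k-th power of a polynomial."""
    out = [1.0]
    for _ in range(k):
        out = poly_mul(out, p)
    return out

def poly_der(p):
    """Derivative of a coefficient list; the derivative of a constant is [0.0]."""
    return [i * c for i, c in enumerate(p)][1:] or [0.0]

def poly_eval(p, x):
    """Horner evaluation of p at x."""
    acc = 0.0
    for c in reversed(p):
        acc = acc * x + c
    return acc

def bump(a, b, n):
    """Coefficients of eta(t) = (t - a)^n (b - t)^n."""
    return poly_mul(poly_pow([-a, 1.0], n), poly_pow([b, -1.0], n))

def endpoint_derivatives(p, x, order):
    """Values p(x), p'(x), ..., p^(order)(x)."""
    values = []
    for _ in range(order + 1):
        values.append(poly_eval(p, x))
        p = poly_der(p)
    return values

a, b, n = 0.0, 1.0, 3
eta = bump(a, b, n)
print(endpoint_derivatives(eta, a, n))  # first n entries vanish; the last equals n! (b - a)^n
print(endpoint_derivatives(eta, b, n))  # first n entries vanish as well
```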

The following preliminary results are essential for deducing the desired necessary optimality condition.

Lemma 3.1

Let \(\alpha _i\), \(i\in \{1,\ldots ,n-1\}\) be real numbers satisfying (2). For \(f\in C[a,b]\), we have

Proof

For \(t<b\) and \(M=\max _{t\in [a,b]}|f(t)|\), we have (recall (2))

Therefore,

showing that the right-hand side of the last inequality is defined at \(t=b\).

We now consider \(a\le c<b\). We want to show that

We have

Now, we assume that \(b>s>c\); the proof of the case \(s<c\) is analogous (of course, when \(c=a\), we have \(s>a\)). We have

Now, \((t-s)^{\alpha _n-\alpha _i-1}-(t-c)^{\alpha _n-\alpha _i-1}\ge 0\ (\le 0)\) according as \(\alpha _n-\alpha _i-1<0\) (\(\alpha _n-\alpha _i-1> 0\)). Suppose first that \(\alpha _n-\alpha _i-1<0\). Then,

which proves (3) for this case. If \(\alpha _n-\alpha _i-1> 0\), then

and the proof of (3) is complete. The result now follows since \((b-t)^{n-\alpha _n}\) is continuous on [a, b). \(\square \)

Lemma 3.2

(Higher order du Bois-Reymond lemma). Let \(n\in \mathbb {N}\) and \(n-1<\alpha \le n\). Suppose that \(f\in C[a,b]\). If

then f is a polynomial of degree at most \(n-1\).

Proof

By Fubini’s theorem and Remark 2.1, we get

Since \(n-1<\alpha \le n\), we know from the proof of Lemma 3.1 that \(I_{b^-}^{n-\alpha }[(b-\cdot )^{\alpha -n}f]\in C[a,b]\). Therefore, by [19, Theorem I], there exist real numbers \(c_0,\ldots ,c_{n-1}\) such that

Applying the operator \(D_{b^-}^{n-\alpha }\) to both sides of the previous equality and using Proposition 2.2, we get

where we now assume that \(\alpha \ne n\) (the case \(\alpha =n\) is the classical one). Finally, we obtain the desired result for \(t\in [a,b)\) by multiplying both sides of the previous equality by \((b-t)^{n-\alpha }\), and at \(t=b\) by the continuity of f. \(\square \)

Lemma 3.3

Let \(n\in \mathbb {N}\backslash \{1\}\) and \(i\in \{1,\ldots ,n-1\}\). Suppose that \(\alpha _i\) satisfy (2). For \(f\in C[a,b]\) and \(\eta \in \mathcal {V}_n\cap C^n[a,b]\), we have

Proof

Using the semigroup property (see, e.g., [18, Lemma 2.3]), we have

Using the fractional integration by parts formula (cf. [18, Lemma 2.7]), we immediately obtain the desired result. \(\square \)
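The fractional integration by parts formula is \(\int _a^b f(t)\,I_{a^+}^\alpha g(t)\,dt=\int _a^b g(t)\,I_{b^-}^\alpha f(t)\,dt\). As a sanity check, the Python sketch below evaluates both sides for \(f(t)=t\), \(g\equiv 1\) and \(\alpha =1/2\) on [0, 1]. Here a direct computation gives the common value \((1+\alpha )/\Gamma (\alpha +3)\). The quadrature (substitution plus Simpson's rule) and the function names are our own choices.

```python
import math

def simpson(func, lo: float, hi: float, n: int) -> float:
    """Composite Simpson rule on [lo, hi] with an even number n of subintervals."""
    h = (hi - lo) / n
    total = func(lo) + func(hi)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * func(lo + j * h)
    return total * h / 3

def rl_left(f, alpha: float, a: float, t: float, n: int = 200) -> float:
    """I_{a+}^alpha f(t); the substitution w = (t - s)**alpha removes the kernel singularity."""
    if t <= a:
        return 0.0
    return simpson(lambda w: f(max(a, t - w ** (1 / alpha))), 0.0, (t - a) ** alpha, n) / math.gamma(alpha + 1)

def rl_right(f, alpha: float, b: float, t: float, n: int = 200) -> float:
    """I_{b-}^alpha f(t); the substitution w = (s - t)**alpha removes the kernel singularity."""
    if t >= b:
        return 0.0
    return simpson(lambda w: f(min(b, t + w ** (1 / alpha))), 0.0, (b - t) ** alpha, n) / math.gamma(alpha + 1)

def f(t: float) -> float:
    return t

def g(t: float) -> float:
    return 1.0

alpha, a, b = 0.5, 0.0, 1.0
lhs = simpson(lambda t: f(t) * rl_left(g, alpha, a, t), a, b, 400)   # int_a^b f I_{a+}^alpha g
rhs = simpson(lambda t: g(t) * rl_right(f, alpha, b, t), a, b, 400)  # int_a^b g I_{b-}^alpha f
print(lhs, rhs, (1 + alpha) / math.gamma(alpha + 3))
```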

Remark 3.4

We note that, for \(\eta \in \mathcal {V}_n\cap C^n[a,b]\), the equality in (4) holds for \(\alpha _i=0\) if one lets \(D_{a^+}^0\eta \) denote \(\eta \). Indeed, in that case,

where we have used [18, Lemma 2.22].

3.1 Necessary conditions

Let \(n\in \mathbb {N}\) and consider real numbers \(\alpha _i\) satisfying (2). Our working problem is the following:

for \(y\in \mathcal {F}_n\). We assume \(L(t,u,v_1,\ldots ,v_n):[a,b]\times \mathbb {R}^{n+1}\rightarrow \mathbb {R}\) together with its partial derivatives \(L_u\) and \(L_{v_i}\), \(i\in \{1,\dots ,n\}\), to be continuous functions.

Definition 3.5

We say that \(y\in \mathcal {F}_n\) is a local minimum of (5)–(6) if y satisfies (6) and there exists a \(\delta >0\) such that \(\mathcal {L}(y)\le \mathcal {L}(z)\) for all \(z\in \mathcal {F}_n\) (satisfying (6)) with \(\Vert y-z\Vert <\delta \), where \(\Vert \cdot \Vert \) is a norm.

Theorem 3.6

If \(y\in \mathcal {F}_n\) is a local minimum of the problem (5)–(6), then there exist real constants \(c_0,c_1,\ldots ,c_{n-1}\) such that y satisfies the equation

where we use the notation \(\bar{t}=(t,y(t),{^c}D^{\alpha _1}_{a^+}y(t),{^c}D^{\alpha _2}_{a^+}y(t),\ldots ,{^c}D^{\alpha _n}_{a^+}y(t))\). We herein assume the usual convention that empty sums equal zero, i.e., \(\sum _{i=1}^0 g(i)=0\) for every sequence g.

Proof

Under our hypotheses on L, \(L_u\) and \(L_{v_i}\), and taking into account the spaces \(\mathcal {F}_n\) and \(\mathcal {V}_n\), we may conclude the following. For a solution \(y\in \mathcal {F}_n\) of (5)–(6) and any (fixed) variation \(\eta \in \mathcal {V}_n\), the quantity \(\frac{d}{d\varepsilon }\mathcal {L}(y+\varepsilon \eta )\) equals zero at \(\varepsilon =0\). Standard calculations then show that

By hypothesis, each of the functions \(L_u(\bar{s})\) and \(L_{v_i}(\bar{s})\) (\(i\in \{1,\ldots ,n\}\)) is continuous on [a, b]. Therefore, by Lemma 3.3 and Remark 3.4, we get

or

where we put \(v_0=u\) and \(\alpha _0=0\). By Lemma 3.1, the function f belongs to C[a, b]; hence, (7) follows from Lemma 3.2.

\(\square \)

Corollary 3.7

(Euler–Lagrange equation). Suppose that \(n-1<\alpha _n<n\). Then, under the conditions of Theorem 3.6, y satisfies the following equation:

Proof

We start by observing that

where we have used the binomial theorem and [13, Lemma 2.1]. Therefore,

where we put \(\bar{c}_{k,n}=\sum _{i=k}^{n-1}(-1)^{i}c_i(-b)^{i-k}\). By performing the change of variables \(k=n-r\), we get

Suppose now that y satisfies (7). Then, we have that

where the real numbers \(\bar{c}_{n-i,n}\) are given above. Note that \((b-\cdot )^{\alpha _n-n}L_{u}(\bar{\cdot }),(b-\cdot )^{\alpha _n-n}L_{v_i}(\bar{\cdot })\in L_1[a,b]\). Moreover, \(D^{\alpha _n}_{b^-}(b-t)^{\alpha _n-i}=0\) for \(i\in \{1,\ldots ,n\}\), by Proposition 2.2, since \(1/\Gamma (1-i)=0\). We therefore obtain the desired result after applying \(D_{b^-}^{\alpha _n}\) to both sides of (9). \(\square \)
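The vanishing of \(D_{b^-}^{\alpha _n}(b-t)^{\alpha _n-i}\) for \(i\in \{1,\ldots ,n\}\) follows from the right-sided power rule \(D_{b^-}^{\alpha }(b-t)^{\beta -1}=\frac{\Gamma (\beta )}{\Gamma (\beta -\alpha )}(b-t)^{\beta -\alpha -1}\). Here \(\beta -\alpha _n=1-i\) is a nonpositive integer, where \(1/\Gamma \) vanishes. The Python sketch below evaluates these coefficients for the hypothetical sample values \(n=3\), \(\alpha _n=2.6\). For contrast, it also evaluates the nonvanishing case \(i=0\).

```python
import math

def rgamma(x: float) -> float:
    """Reciprocal Gamma function, extended by 0 at the poles x = 0, -1, -2, ..."""
    if x <= 0 and x == math.floor(x):
        return 0.0
    return 1.0 / math.gamma(x)

def kernel_coefficient(alpha_n: float, i: int) -> float:
    """Coefficient c in D_{b-}^{alpha_n} (b-t)^(alpha_n - i) = c (b-t)^(-i).

    Here beta = alpha_n - i + 1, and beta - alpha_n = 1 - i is passed to rgamma as an
    exact integer so that floating-point round-off cannot hide the pole.
    """
    return math.gamma(alpha_n - i + 1) * rgamma(1 - i)

n, alpha_n = 3, 2.6  # hypothetical sample with n - 1 < alpha_n < n
for i in range(0, n + 1):
    print(i, kernel_coefficient(alpha_n, i))
```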

Remark 3.8

From the proof of Corollary 3.7, it is easily seen that if \(\alpha _i=i\) for all \(i\in \{1,\ldots ,n\}\), then we may remove the restriction on the Euler–Lagrange equation at \(t=b\) and (8) becomes

where \(\bar{t}=(t,y(t),y'(t),y''(t),\ldots ,y^{(n)}(t))\). The previous equation is known as the higher order Euler–Lagrange equation and may be found, e.g., in [23, Formula (3.9)].

Remark 3.9

Equation (8) generalizes a result of [12], i.e., if we let \(n=1\) in Corollary 3.7, then we get [12, Corollary 3.1].

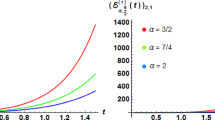

Example 3.10

For \(1<\alpha \le 2\), consider the problem of finding \(y\in \mathcal {F}_2\) such that

By Theorem 3.6, there exist two constants \(c_0\) and \(c_1\) such that

Applying the operator \(I_{0^+}^{\alpha }\) to both sides of the previous equality and using the boundary conditions we obtain, after some calculations,

Observe that \(y''(0)\) is not defined when \(\alpha <2\); hence, the only candidate for a solution of (11) does not belong to \(C^{2}[0,1]\).

Remark 3.11

In general, for two positive orders \(\alpha ,\beta \), \(^cD_{a^+}^\alpha {^cD}_{a^+}^\beta \ne {^cD}_{a^+}^{\alpha +\beta }\) (cf. [10, Remark 3.4]). This fact gives rise to several ways of generalizing the variational problem containing derivatives of higher order. For example, we may consider the problem (for the sake of exposition, we now consider two fractional orders)

where we assume \(0<\alpha _1,\alpha _2\le 1\) and \(1<\alpha _1+\alpha _2\le 2\). Again, \(L(t,u,v_1,v_2):[a,b]\times \mathbb {R}^{3}\rightarrow \mathbb {R}\), together with its partial derivatives \(L_u\) and \(L_{v_i}\), \(i\in \{1,2\}\), are continuous functions. In such a problem, we seek solutions in the space of functions \(\mathbb {F}=\{f\in C[a,b]:{^c}D^{\alpha _1}_{a^+}f,\ {^c}D^{\alpha _2}_{a^+}{^c}D^{\alpha _1}_{a^+}f\in C[a,b]\}\). We may then follow the same steps as in Theorem 3.6, taking smooth variations (so that \(^cD_{a^+}^{\alpha _2} {^cD}_{a^+}^{\alpha _1}\eta ={^cD}_{a^+}^{\alpha _2+\alpha _1}\eta \)). We deduce that if \(y\in \mathbb {F}\) solves (12)–(13), then

where we use the notation \(\bar{t}=(t,y(t),{^c}D^{\alpha _1}_{a^+}y(t),{^c}D^{\alpha _2}_{a^+}{^c}D^{\alpha _1}_{a^+}y(t))\).

For example, if we consider the problem analogous to (11),

then, using (14), we obtain

for some constants \(c_0,c_1\in \mathbb {R}\). Applying Lemma 2.3 twice to (15), we finally deduce that

Observe that, since \((t^{\alpha _1})'\) is not defined at zero for \(\alpha _1<1\), the derivative \({^cD}_{0^+}^{\alpha _1+\alpha _2}t^{\alpha _1}\) does not exist.
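The Caputo power rule makes this contrast explicit. The Python sketch below uses a hypothetical helper with \(a=0\). It returns \({^c}D^\beta _{0^+}t^{g}\) when the derivative is defined, and None when some \(f^{(k)}(0)\), \(k\le n-1\), required by the Caputo definition does not exist. The sample values \(\alpha _1=0.6\) and \(\alpha _2=0.7\) satisfy the constraints above. The sequential derivative of \(t^{\alpha _1}\) is 0, because \({^c}D^{\alpha _1}_{0^+}t^{\alpha _1}=\Gamma (\alpha _1+1)\) is constant. The derivative of order \(\alpha _1+\alpha _2\), by contrast, is undefined.

```python
import math

def caputo_power(g: float, order: float, t: float):
    """Left Caputo derivative (a = 0) of t**g, g >= 0, of non-integer order > 0, at t > 0.

    With n = ceil(order), the Caputo definition needs f(0), ..., f^(n-1)(0):
    - integer g < n: t**g is a polynomial of degree < n, so the derivative is 0;
    - non-integer g < n - 1: f^(n-1)(0) does not exist, so None is returned;
    - otherwise: power rule Gamma(g+1) / Gamma(g+1-order) * t**(g-order).
    """
    n = math.ceil(order)
    if float(g).is_integer() and g < n:
        return 0.0
    if not float(g).is_integer() and g < n - 1:
        return None
    return math.gamma(g + 1) / math.gamma(g + 1 - order) * t ** (g - order)

alpha1, alpha2, t = 0.6, 0.7, 0.5
inner = caputo_power(alpha1, alpha1, t)              # the constant Gamma(alpha1 + 1)
sequential = inner * caputo_power(0, alpha2, t)      # Caputo derivative of a constant is 0
combined = caputo_power(alpha1, alpha1 + alpha2, t)  # order 1.3 needs f'(0), which does not exist
print(inner, sequential, combined)
```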

3.2 A sufficient condition

We now consider \(n=1\) and \(0<\alpha \le 1\). Then, the basic fractional variational problem is the following:

The first- and second-order necessary optimality conditions for the problem (16)–(17) are given as follows. For L(t, u, v), we denote by \(L_u\), \(L_v\), \(L_{uu}\), \(L_{uv}\) and \(L_{vv}\) the first- and second-order partial derivatives, which we assume from now on to exist and to be continuous.

where \( g_y(s)=(b-s)^{\alpha -1} L_u(s,y(s),{^c}D^\alpha _{a^+}y(s))\) and

Remark 3.12

Conditions (18) and (19) are called the fractional Euler–Lagrange equation (in integral form) and the fractional Legendre necessary condition, respectively.

We now state the main result of this section.

Theorem 3.13

Suppose that \(0<\alpha \le 1\) and let \(y\in \mathcal {F}_1\) be a solution of the Euler–Lagrange equation (18) and satisfy (17). Assume that

(i) \(L_{uv}(\overline{t})\ge 0\) and \(L_{vv}(\overline{t})>0\), for all \(t\in [a,b]\);

(ii) the function \((b-\cdot )^{\alpha -1}L_{uv}(\overline{\cdot })\in I_{b^-}^\alpha (L_1[a,b])=\{f:f=I_{b^-}^\alpha \phi ,\ \phi \in L_1[a,b]\}\) and

$$\begin{aligned} L_{uu}(\overline{t})+(b-t)^{1-\alpha }D_{b^-}^\alpha [(b-\cdot )^{\alpha -1}L_{uv}(\overline{\cdot })](t)\ge c>0,\quad t\in [a,b]. \end{aligned}$$

Then, the problem given by (16)–(17) has a local minimum at y.

Proof

Let \(\eta \in \mathcal {V}_1\). We start by noticing that we may proceed as in [16, Section 25] to obtain

where \(\delta \mathcal {L}(y,\eta )\) is the first variation, which is given by (cf. (1) in [6, Proposition 3.1])

and

Here, \(\varepsilon _1,\varepsilon _2,\varepsilon _3\rightarrow 0\) as \(\Vert \eta \Vert _{\alpha }\rightarrow 0\), where

Observe that \(\delta \mathcal {L}(y,\eta )=0\). Indeed, (21) transforms into (cf. the details within the proof of [12, Theorem 3.1])

or (recall (18))

which then equals \(kI_{a^+}^{\alpha }{^cD}_{a^+}^{\alpha }\eta (b)\). Finally, \(I_{a^+}^{\alpha }{^cD}_{a^+}^{\alpha }\eta (b)=\eta (b)-\eta (a)=0\) by Lemma 2.3.

Now, by [22, Theorem 2], we infer that \({^c}D^\alpha _{a^+}\eta ^2\in C[a,b]\) and

Hence,

By [21, Corollary 2 p. 46], we obtain

Therefore,

Let \(d=\min _{t\in [a,b]}L_{vv}(\overline{t})>0\) and define \(m=\min \{c,d\}\). Then, (22) is greater than or equal to

On the other hand, by applying Young’s inequality, we get

where \(|\varepsilon _1(t)|\le \gamma ,\ |\varepsilon _2(t)|\le \gamma ,\ |\varepsilon _3(t)|\le \gamma \). From (20), we conclude that

because \(\gamma \) can be chosen arbitrarily small for all sufficiently small \(\Vert \eta \Vert _{\alpha }\). This completes the proof. \(\square \)
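As we read the proof, the step based on [22, Theorem 2] is an inequality of the form \({^c}D^\alpha _{a^+}\eta ^2(t)\le 2\eta (t)\,{^c}D^\alpha _{a^+}\eta (t)\). This form is an assumption, since the statement of that theorem is not reproduced here. The Python sketch below checks it on a grid for the hypothetical variation \(\eta (t)=t(1-t)\in \mathcal {V}_1\) on [0, 1], using the Caputo power rule for polynomials. At \(\alpha =1\) the two sides coincide by the classical chain rule.

```python
import math

def caputo_poly(coeffs, alpha: float, t: float) -> float:
    """Caputo derivative (a = 0, 0 < alpha <= 1) of sum_k coeffs[k] * t**k at t > 0.

    Constants are annihilated; t**k (k >= 1) maps to Gamma(k+1)/Gamma(k+1-alpha) * t**(k-alpha).
    """
    return sum(c * math.gamma(k + 1) / math.gamma(k + 1 - alpha) * t ** (k - alpha)
               for k, c in enumerate(coeffs) if k >= 1)

def poly_square(coeffs):
    """Coefficients of p(t)**2 (lowest degree first)."""
    out = [0.0] * (2 * len(coeffs) - 1)
    for i, ci in enumerate(coeffs):
        for j, cj in enumerate(coeffs):
            out[i + j] += ci * cj
    return out

def poly_eval(coeffs, t: float) -> float:
    return sum(c * t ** k for k, c in enumerate(coeffs))

ETA = [0.0, 1.0, -1.0]  # hypothetical variation eta(t) = t - t^2, with eta(0) = eta(1) = 0
ETA_SQ = poly_square(ETA)

def gap(alpha: float, t: float) -> float:
    """2 eta(t) D^alpha eta(t) - D^alpha(eta^2)(t); the assumed inequality says gap >= 0."""
    return 2 * poly_eval(ETA, t) * caputo_poly(ETA, alpha, t) - caputo_poly(ETA_SQ, alpha, t)

grid = [j / 100 for j in range(1, 101)]
for alpha in (0.2, 0.5, 0.8, 1.0):
    print(alpha, min(gap(alpha, t) for t in grid))
```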

Example 3.14

Let \(\alpha \in (0,1]\). Consider the Lagrangian given by \(L_{\alpha }(t,u,v)=v^2+(1-t)^\alpha uv\), for \((t,u,v)\in [0,1]\times \mathbb {R}^2\).

We have

Assume that \(y\in \mathcal {F}_1\) satisfies the Euler–Lagrange equation (18), i.e.,

Condition (i) of Theorem 3.13 is trivially satisfied, since \(L_{uv}(\overline{t})=(1-t)^\alpha \ge 0\) and \(L_{vv}(\overline{t})=2>0\). By Proposition 2.2, \((1-\cdot )^{\alpha -1}L_{uv}(\overline{\cdot })\in I_{1^-}^\alpha (L_1[0,1])\) and

whence condition (ii) of Theorem 3.13 is also satisfied.

Therefore, since \(y=0\) satisfies (23), it furnishes a local minimum to

We note that the function L defined above is not (jointly) convex (cf. [23, Theorem 10.7.2]) because \(L_{uu}L_{vv}-L^2_{uv}=-(1-t)^{2\alpha }<0\) for \(t<1\). Hence, one cannot rely on such convexity results to show that the problem has solutions, even when \(\alpha =1\).
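The quantities behind this example can be tabulated directly. For \(L_\alpha \) one has \(L_{uu}=0\), \(L_{uv}=(1-t)^\alpha \) and \(L_{vv}=2\). The right-sided power rule with \(\beta =2\alpha \) gives \(D_{1^-}^\alpha (1-\cdot )^{2\alpha -1}(t)=\frac{\Gamma (2\alpha )}{\Gamma (\alpha )}(1-t)^{\alpha -1}\). Hence the left-hand side of condition (ii) is the constant \(\Gamma (2\alpha )/\Gamma (\alpha )>0\), and one may take \(c=\Gamma (2\alpha )/\Gamma (\alpha )\). This closed form is our own evaluation of the step in the example. The Python sketch below (hypothetical function names) computes it together with \(L_{uu}L_{vv}-L_{uv}^2\).

```python
import math

def condition_ii_lhs(alpha: float) -> float:
    """L_uu + (1-t)^(1-alpha) D_{1-}^alpha[(1-.)^(2 alpha - 1)](t) for L_alpha; independent of t."""
    l_uu = 0.0
    return l_uu + math.gamma(2 * alpha) / math.gamma(alpha)

def hessian_det(alpha: float, t: float) -> float:
    """L_uu * L_vv - L_uv**2 for L_alpha(t, u, v) = v**2 + (1 - t)**alpha * u * v."""
    l_uu, l_uv, l_vv = 0.0, (1 - t) ** alpha, 2.0
    return l_uu * l_vv - l_uv ** 2

for alpha in (0.1, 0.5, 1.0):
    print(alpha, condition_ii_lhs(alpha), hessian_det(alpha, 0.3))
```

Condition (ii) holds for every \(\alpha \in (0,1]\), while the determinant is negative for \(t<1\). This is consistent with the observation that the sufficient condition does not rely on convexity.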

4 Conclusions

In this work, we derived first-order optimality conditions for variational problems depending on higher order fractional derivatives, considering formulations with fixed endpoint conditions. Using standard techniques, one may also obtain transversality conditions, deduce optimality conditions for isoperimetric problems (depending on higher order fractional derivatives), and so on (see, e.g., [3, 6]).

As mentioned in the Introduction, the problem (5)–(6) cannot be solved using the optimal control theory developed in Ref. [5]. It would be interesting to prove a Pontryagin maximum principle that could be applied to derive optimality conditions for (5)–(6), in analogy with the classical case.

We also obtained a sufficient condition for the simplest fractional variational problem to have solutions. The result is relevant because, in the fractional setting, we are not aware of a Jacobi-type result analogous to the classical one. However, solving fractional Euler–Lagrange equations analytically is impossible in most cases. Investigating conditions that ensure the existence of solutions to such equations is therefore a good research direction.

Data availability

This manuscript has no associated data.

References

Agrawal, O.P.: Fractional variational calculus in terms of Riesz fractional derivatives. J. Phys. A 40(24), 6287–6303 (2007)

Agrawal, O.P.: Generalized multiparameters fractional variational calculus. Int. J. Differ. Equ. 2012, 521750 (2012)

Almeida, R.; Ferreira, R.A.C.; Torres, D.F.M.: Isoperimetric problems of the calculus of variations with fractional derivatives. Acta Math. Sci. Ser. B (Engl. Ed.) 32(2), 619–630 (2012)

Atanacković, T.M.; Konjik, S.; Pilipović, S.: Variational problems with fractional derivatives: Euler–Lagrange equations. J. Phys. A 41(9), 095201 (2008)

Bergounioux, M.; Bourdin, L.: Pontryagin maximum principle for general Caputo fractional optimal control problems with Bolza cost and terminal constraints. ESAIM Control Optim. Calc. Var. 26, 38 (2020)

Bourdin, L.; Ferreira, R.A.C.: Legendre’s necessary condition for fractional Bolza functionals with mixed initial/final constraints. J. Optim. Theory Appl. 190(2), 672–708 (2021)

Bourdin, L.; Odzijewicz, T.; Torres, D.F.M.: Existence of minimizers for fractional variational problems containing Caputo derivatives. Adv. Dyn. Syst. Appl. 8(1), 3–12 (2013)

Cresson, J.; Jiménez, F.; Ober-Blöbaum, S.: Continuous and discrete Noether’s fractional conserved quantities for restricted calculus of variations. J. Geom. Mech. 14(1), 57–89 (2022)

Cresson, J.; Szafrańska, A.: About the Noether’s theorem for fractional Lagrangian systems and a generalization of the classical Jost method of proof. Fract. Calc. Appl. Anal. 22(4), 871–898 (2019)

Diethelm, K.: The Analysis of Fractional Differential Equations. Lecture Notes in Mathematics, vol. 2004. Springer-Verlag, Berlin (2010)

Feng, X.; Sutton, M.: On a new class of fractional calculus of variations and related fractional differential equations. Differ. Integral Eqs. 35(5–6), 299–338 (2022)

Ferreira, R.A.C.: Fractional calculus of variations: a novel way to look at it. Fract. Calc. Appl. Anal. 22(4), 1133–1144 (2019)

Ferreira, R.A.C.: Discrete fractional calculus and fractional difference equations. SpringerBriefs in Mathematics. Springer, Cham (2022)

Ferreira, R.A.C.; Malinowska, A.B.: A counterexample to a Frederico-Torres fractional Noether-type theorem. J. Math. Anal. Appl. 429(2), 1370–1373 (2015)

Garaev, K.G.: Remark to the main problem of calculus of variations. Lobachevskii J. Math. 42(12), 2785–2788 (2021)

Gelfand, I.M.; Fomin, S.V.: Calculus of variations, revised English edition translated and edited by Richard A. Silverman. Prentice-Hall Inc, Englewood Cliffs (1963)

Idczak, D.; Majewski, M.: Fractional fundamental lemma of order \(\alpha \in (n-\frac{1}{2}, n)\) with \(n\in N, n\ge 2\). Dynam. Systems Appl. 21(2–3), 251–268 (2012)

Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J.: Theory and applications of fractional differential equations. North-Holland Mathematics Studies, vol. 204. Elsevier Science B.V., Amsterdam (2006)

Martini, R.: A generalization of the lemma of Du Bois-Reymond. Nederl. Akad. Wetensch. Proc. Ser. A 76, 331–334 (1973)

Riewe, F.: Nonconservative Lagrangian and Hamiltonian mechanics. Phys. Rev. E 53(2), 1890–1899 (1996)

Samko, S.G.; Kilbas, A.A.; Marichev, O.I.: Fractional Integrals and Derivatives: Theory and Applications (Transl. from the 1987 Russian original). Gordon and Breach Science Publishers, Yverdon (1993)

Tuan, H.T.; Trinh, H.: Stability of fractional-order nonlinear systems by Lyapunov direct method. IET Control Theory Appl. 12(17), 2417–2422 (2018)

van Brunt, B.: The Calculus of Variations. Universitext. Springer-Verlag, New York (2004)

Acknowledgements

The author gratefully appreciates the comments and observations made by the referee, which notably contributed to the final version of this manuscript.

Funding

Rui A. C. Ferreira was supported by the “Fundação para a Ciência e a Tecnologia (FCT)" through the program “Stimulus of Scientific Employment, Individual Support-2017 Call” with reference CEECIND/00640/2017.

Ethics declarations

Conflict of interest

The author has no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ferreira, R.A.C. Calculus of variations with higher order Caputo fractional derivatives. Arab. J. Math. 13, 91–101 (2024). https://doi.org/10.1007/s40065-023-00447-8
