Abstract

While social vulnerability assessments (SVA) use spatial indicators and indices that have become state of the art, they also receive substantial critique. This article analyzes, by means of a literature review of 63 articles, if and in which aspects such an indicator approach is regarded as useful by scientific studies. The findings indicate a need for more research on the validation and justification of indicators. This article supports the conceptual development of SVA by adding to reflection about advancements and applications, but also shortcomings. The main advancement area discussed is validation and the demand for establishing benchmark criteria for vulnerability. Longitudinal monitoring of vulnerability and validation studies are conceivable on the basis of existing SVA, but these efforts demand more conceptual development.

1 Introduction

Vulnerability assessments (VA) have become acknowledged state-of-the-art methods, for example, in recent strategy publications at the international level (United Nations 2015), but methodological debate and development are ongoing (Ford et al. 2010; Kuhlicke et al. 2011a; Preston et al. 2011; Gallina et al. 2016). Vulnerability assessments have become commonplace with descriptions of procedures, types, methods, and conceptual backgrounds available in textbooks (Wisner et al. 2004; Birkmann 2013; Fuchs and Thaler 2018) or guidelines (Fritzsche et al. 2014). Assessment methods include either qualitative empirical assessments (Anderson and Woodrow 1998) or semi-quantitative, often spatially explicit, place-based approaches (Cutter et al. 2003). While much has been established, it still appears necessary to critically investigate the opportunities as well as the limitations of VA, especially place-based or mapping approaches (de Sherbinin 2014). A large number of original research papers consist of singular case study results that are published once, but often lack critical reflection about shortcomings, fail to stimulate follow-up studies on long-term developments in vulnerability, or overlook later insights into opportunities for methodological improvement. Quite a number of review papers, however, have already provided overviews and comparisons of the state of the art and of specific methodological traits. These advances are particularly notable for (social) vulnerability in specific hazard contexts such as climate change (Ford et al. 2010; Preston et al. 2011), floods (Rufat et al. 2015; Terti et al. 2015), and multi-risks (Gallina et al. 2016), on validation methodology in general (Tate 2012) as well as social capacities (Kuhlicke et al. 2011a).

This article narrows down the analysis of VA to social vulnerability assessments, selects one case study as a benchmark (Fekete 2009), and reflects upon that baseline study’s main findings and identified gaps. Building on this starting point, the scholarly reception, usage, and shortcomings are analyzed by comparing Fekete (2009) with the usages and critiques of this study and of the SVA approach by other authors. My own reflections on identified shortcomings have already been published (Fekete 2012a), but certain aspects of the issue demand further investigation; validation demands and opportunities could also permit further development towards longitudinal monitoring of vulnerability and disaster risk. In order to investigate positive findings as well as constraints that have been identified since 2009, it is necessary to conduct a systematic literature review. The following research questions have guided this article:

- How valid is the approach of the original article, as documented by other publications citing it?
- Which aspects of findings and constraints have been addressed since 2009?
- What other ideas have further developed social vulnerability indicator approaches and which expectations about validation can be derived from existing literature, aided also by own further analysis with recent data?

After completion of the literature review, conceptual considerations on validation criteria, benchmarks, and methodological advancements are used to integrate VA with other concepts, such as criticality assessment or risk management goals. These new potential directions are then briefly outlined.

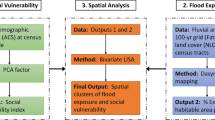

2 Review of the Development of Social Vulnerability Assessments: A Case Study

The author’s original study (Fekete 2009) applied and adjusted an existing vulnerability index approach according to the methodological approach used in the United States (Cutter 1996; Cutter et al. 2003) and in accordance with a theoretical framework of vulnerability (Birkmann 2006). The original research question asked whether social vulnerability could also be identified at a national scale and county-unit level in Germany, where this had not been conducted before. The study combined an inductive approach using factor analysis and principal component analysis (PCA) with the deductive guidance of a conceptual focus on exposure, susceptibility, and capacity components of vulnerability. The result was a set of indicators of potential vulnerability, developed from an ex ante perspective on disaster risk. While this resembled the state of the art, the Fekete (2009) study went one step further and attempted to statistically validate the hypothetical indicators and their variables with a real case event of river flooding in Germany in 2002. Over 1600 household interviews, primarily conducted and analyzed by project partners (Kreibich et al. 2005; Thieken et al. 2007), were reanalyzed using logistic regression. The interviews captured damages and losses but also included socioeconomic profiles and reactions, such as temporary abandonment of housing, financial and social capacities, and satisfaction with damage compensation. The main findings of the study were a methodological procedure to validate vulnerability indicators and correlations between certain socioeconomic and demographic profiles of affected people, such as age, education, and income, and flood-impact reactions such as temporary evacuation, shelter, and satisfaction. One shortcoming identified was a lack of knowledge and supporting literature that could advise which variables would serve to validate or benchmark a hypothetical vulnerability indicator. Also unavailable were other approaches with which to compare the validity of the approach employed in the Fekete study. Obvious constraints were a lack of spatial and temporal resolution, which limited the possibilities of up- or downscaling of the findings (Fekete 2010; Fekete et al. 2010).

In order to address the first two research questions, publications that cited the Fekete article from 2009 to 2016 were analyzed. Google Scholar was used as a search engine because it allows fellow researchers worldwide to test and update this analysis, without accessibility constraints or the publishing company selection focus of other platforms such as Scopus. A constraint of Google Scholar search results is that citation counts are sometimes erroneous due to double counting of publications. An advantage is the provision of links to PDFs hosted on different sites.

On 19 February 2018, 220 citations of the benchmark article (Fekete 2009) were found on Google Scholar and 127 on Scopus. Since the two samples were not congruent (some publications were listed only in Google Scholar, others only in Scopus), only one source was used further for consistency: Google Scholar, as it contains the larger sample. Only those research articles with 10 citations or more (in Google Scholar) were included in the analysis. This resulted in 64 publications, all full research papers, peer-reviewed and published in academic journals, with one exception: a book chapter by Torsten Welle and colleagues (Welle et al. 2014). Because this potential contribution was incorrectly selected by Google Scholar (correct author, but a later publication), it was excluded from further analysis, leaving 63 items. The reason for limiting the literature to 63 items is to avoid overextending the scope of this small literature review within a research paper. The threshold of 10 citations was chosen arbitrarily, on the hypothesis that papers with at least that many citations have already found some acceptance and usage amongst peers. Figure 1 shows the distribution of the number of publications on SVA in relation to the 2009 Fekete baseline article between 2009 and 2016.

Fig. 1 Publications with social vulnerability assessments that refer to Fekete (2009) per year

A number of questions were of interest: in which contexts were other publications dealing with social vulnerability since 2009? Context here means in which countries are social vulnerability assessments (SVA) common? Also pertinent is the hazard context in which social vulnerability is embedded. What is the scale of the research area, and what is the size of research unit for the investigation? Which term or terms might be used locally for vulnerability?

These questions were criteria in the literature review and each was analyzed using spreadsheets with categories. The results (Fig. 1) show a decline in citation of the article (Fekete 2009) in publications beginning in 2012, continuing through 2013, and extending into mid-2014. A modest recovery then occurred to a stable level in 2015 and 2016. It would be interesting to investigate whether there also was an overall trend in publications utilizing SVA in 2012/2013. Most articles (56) were research papers; far fewer were review or purely conceptual papers (8). Review papers were relatively highly cited, however, with 2 out of 8 having more than 100 citations, while 6 out of the 56 research papers had more than 100 citations. The largest number of articles were published in the journal Natural Hazards (17), followed by Natural Hazards and Earth System Sciences (7) and Environmental Science and Policy (4). Sixty articles could be accessed with full text, 3 only with abstracts.

The term “social vulnerability” is used in 31 publications, half of the total publications subset. The term socioeconomic vulnerability has been used 4 times, and, of course, many terms similar to SV have been used as well. But it is interesting that the term “resilience” has been used only 5 times. Given the popularity of the term and the plethora of assessments in similar fields such as community resilience or urban resilience, this might be interpreted to mean that SVA, especially in the spatial and indicator approach followed by the original 2009 article, are distinct from the traditional resilience assessment line. Although this analysis is too brief to be a scientifically satisfying assessment, it is suggestive relative to other findings that show resilience is adopted later in certain countries and is used in different contexts that are also more conceptual. In contrast, (spatial) SVA and similar quantitative approaches favor the term “vulnerability assessment” (Fekete et al. 2014). Another interesting aspect is that around half of the publications have not used the term SV and preferred another term such as resilience, risk, or vulnerability. This might be an indication that there are still lingering uncertainties about the definition and scope of the terms.

As hazard context, the largest group of articles, not surprisingly, focuses on floods (22), since the original 2009 comparison article deals with flood issues, followed by general natural hazards (12) and climate change (8). Five articles did not define the hazard context and in several more articles it was rather difficult to find the hazard context explicitly mentioned. This could point to the conceptual approach of SV, in which the main focus is not on the hazard. But it might also be interesting to consider the debate on hazard-dependent or hazard-independent vulnerability (Schneiderbauer and Ehrlich 2006). It is noteworthy that studies on technological hazards, health risks, and armed conflict have also used similar approaches.

Among the countries for which SVA have been conducted, the United States accounts for the most studies (9), followed by global approaches (6). But it is striking that SVA approaches similar to the original article have been applied in many countries worldwide. By continent, most studies were conducted in Europe (18), followed by North America (11), which is understandable because the context of the original study was Germany, an industrialized country in the Global North. The sample, however, is much too small to allow any interpretations of the state of SV or SVA in general, which applies to all the other criteria analyzed in the literature review.

Analyzing what is commonly termed “scale” of analysis, but what actually should be differentiated into research areas (meaning whole area that is investigated) and research units (meaning measurement units within the research area) (Gibson et al. 2000; Fekete et al. 2010), was quite challenging, since many articles were not explicit about the unit of measurement. Urban level was most common (13), closely followed by municipal level (9), but also national (6) and county (6). Overall, administrative boundaries were most common, with fewer raster or grid approaches (Table 1).

Regarding quantitative or qualitative approaches, almost all publications followed a semi-quantitative approach by establishing indicators or an index based on either statistical socioeconomic and demographic data and spatial data or based on interview data. Geographic Information Systems are used to combine, compute, and visualize the indicators by 46 of the 63 articles analyzed. Eight articles did not conduct SVA, but rather analyzed theoretical or methodological aspects.

The 63 publications referred to the 2009 article by citing it in different contexts. The most common references were made to the importance or type of validation approach (14). This was followed by those who made reference to the selection and justification of variables (9) or indicators/index (7) for the SV indicators. The 2009 benchmark article was also often just mentioned as a general reference to show that the publications were in line with other studies (7). Critical aspects, such as a lack of non-static approaches to SVA or validation barriers, were referred to surprisingly rarely; more would have been expected given the critical self-reassessment (Fekete 2012a). Overall, none of the publications were critical specifically about the 2009 article, which was somewhat expected given experience with peers and stakeholders (Fekete 2012a), but also might be anticipated given difficulties in review processes or feedback at conferences. Probably, the discussion about critique of top-down, quantitative, desktop approaches in SVA happens in other fora (Weichselgartner and Kelman 2014). Not all articles citing the 2009 article have been analyzed; critique might be published in those. Critique is very helpful and necessary, and it must be stressed that not only many aspects of the original study from 2009, but also aspects of my other publications on SVA contain imperfections. Another finding is that, contrary to expectations, no other publication (in this sample) used the 2009 study as a direct data source or comparison study. It is gratifying to see so much advancement in the field since 2009, for example in SVA validation approaches conducted by experts with much better depth and knowledge than could be engaged in the 2009 study (Tate 2012, 2013; Rufat et al. 2015).

As a conclusion, the approach from the 2009 study seems successful enough to continue with it, while certain aspects still have to be improved. The advancements should focus on shortcomings in the previous study (Fekete 2009) by (1) analyzing and interpreting single indicators and not the overall index only; (2) analyzing not only a static snapshot, but three 5-year snapshots in dynamic comparison; (3) interpreting spatial and temporal heterogeneity; (4) differentiating cities and rural counties; and (5) conceptually separating national societal vulnerability from community-scale and individual human vulnerability. This will hopefully add new insights also to those aspects most commonly used from the 2009 study in other research studies, such as validation, selection of variables, and composition of indicators and indices, when incorporated into dynamic and longitudinal assessments. Not all of that process is captured in this article, but more time is needed to conduct research on a range of SVA variables. As a major constraint, this review is limited by sample design to those publications citing the one original 2009 article. That means that extrapolation to SVA in general is not possible. Another constraint is that articles before 2009 and other relevant publications have not been considered in this review. Also, publications that have been cited fewer than 10 times are not selected, which means that some important deviations from the result or important additions may be missing. More recent publications also are less likely to have reached 10 citations already, which is another bias. But this study’s selections were made on purpose in order to have a sample that can be logically justified by its connection to work with a similar conceptual design and methodological content. 
Therefore, the following section will also stick to the original method, design, and data sources and expand the discussion, started in the review section, about aspects of interpreting the same type of indicators with recent data.

3 Further Development of the Approach and Demands on Validation

The author’s own approach in 2009 includes many shortcomings and, thereafter, more detailed descriptions of the approach, selection and justification of variables, factor analysis, PCA, as well as spatial autocorrelation tests have been published (Fekete 2010). Also undertaken was a discussion of scale effects, such as the selection of time versus spatial scales, up-scaling options, and constraints (Fekete et al. 2010). It was important to summarize critique received from other peers and envisioned “end users” in a separate article (Fekete 2012a). Still, many frustrations of peers with such quantifying, aggregating, and “accountant-style” top-down approaches have not been answered yet (Weichselgartner and Kelman 2014). Some of those disappointments are probably triggered by false expectations raised by our publications. For example, usefulness for end users was stated as an aim, and maps per se are easily misunderstood as representing reality. However, since the literature review found validation to be the aspect most often mentioned, the following section will focus on this aspect and on what can and needs to be amended and developed.

3.1 Vulnerability Validation Criteria

Other publications have already addressed further needs and approaches to amend the statistical methodologies and sensitivity analyses (Tate 2012) as well as selection and common usages of variables in SVA (Rufat et al. 2015). But one major question in the 2009 approach has not been addressed: which types of information could serve as criteria or benchmarks to validate vulnerability in the sense of logical testing of whether a hypothetical vulnerability assumption has proven significant during or after some real crisis or disaster event? In 2009, the following three dependent variables were used in a logistic regression as vulnerability validation criteria: (1) people affected by the flood since they had to leave their homes; (2) people seeking emergency shelters; and (3) people satisfied with damage compensation.
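For readers less familiar with the method, the logistic regression used for this kind of validation can be written in its generic textbook form. The notation is an assumption introduced here for illustration and is not taken from the 2009 study: $y_i$ stands for one binary validation criterion for household $i$ (for example, whether the household sought an emergency shelter), and $x_{ik}$ are the $K$ socioeconomic variables behind the hypothetical indicators.

```latex
\[
\Pr(y_i = 1 \mid x_{i1}, \dots, x_{iK})
  = \frac{1}{1 + \exp\!\bigl(-(\beta_0 + \sum_{k=1}^{K} \beta_k x_{ik})\bigr)}
\]
```

Under this reading, a variable lends support to the vulnerability assumption when its coefficient $\beta_k$ differs significantly from zero, with $\exp(\beta_k)$ interpretable as an odds ratio.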

Regarding the range of possible criteria for testing a “revealed” vulnerability, Table 2 includes just a very limited selection. The problem is that vulnerability indications can be read and interpreted quite differently; what one author would regard as susceptibility might be regarded as exposure or lack of capacities by another.

Exposure, damage, and loss are probably the most straightforward validation criteria for testing assumed vulnerability, especially if the assumed weakness has resulted in disproportionately higher impacts. But even this criterion produces potential uncertainty. Were vulnerability aspects really the primary causal agent? Or were other variables the ones that stimulated observed impact depths? Caution is also necessary when time has elapsed between historical and recent damage events, since populations, behaviors, land use patterns, and so on (and therefore vulnerabilities) may have changed. Because exposure is most related to hazard aspects, certain studies (Anderson and Woodrow 1998; Davidson and Shah 1997) and even recent UNISDR (United Nations International Strategy for Disaster Reduction) definitions have separated exposure from vulnerability. To validate the core of vulnerability, susceptibilities must be identified and then validated. Because susceptibilities (also sometimes termed sensitivities) define characteristics that are nested within the object and subject analyzed, damage or loss impacts often are intensified. In Table 2, the criterion of people seeking emergency shelters is such an example. Within the group of people who had to leave their homes, a small subgroup ended up in public emergency shelters. Since the 2002 river-flood event was not a surprise, but rather was an event that developed over days and weeks, certain assumptions can be made. Because other data from the household survey identify alternatives to emergency shelters, such as people going to relatives, friends, or an affordable hotel, we assumed that use of emergency shelters was an indication of those people who had no social networks or other options than to seek refuge in public shelters. This reveals not a physical susceptibility but an absence of social ties and/or the existence of societal conditions that constitute a type of susceptibility. At the same time, the same susceptibility also indicates a society able to provide such capacities as emergency shelters. In summary, this is not a perfect vulnerability validation criterion, but it meets many assumptions about the complicated nature of susceptibility.

The third validation criterion used in our previous study, people satisfied (or unsatisfied) with damage compensation after the flood, is also a criterion that is not easy to grasp. It indicates societal moods and personal reactions, and the validation found correlations with socioeconomic indicators of unemployment and education level. It is an interesting validation criterion not so much because of its precision, but because it captures a soft aspect, one of the intangibles; not a physical susceptibility but more of a sociocultural one. It also indicates societal capacities to compensate flood losses by insurance and governmental aid as well as the challenges associated with getting the right aid to people in time and in satisfying amounts. Since it represents a social reaction (satisfaction) that would not exist without the existence of the capacity (damage compensation service), we have displayed it as an example of “capacities” in the table. But one might rightly argue for it being a susceptibility component as well.

The range of possible and necessary vulnerability validation criteria is much broader. Physical vulnerabilities of human beings could be validated by death tolls, physical wounds, diseases, or health issues. Psychological vulnerabilities could be validated by mental traumata. All of the following vulnerability characteristics are more indirectly related to causing deaths or health impacts. For example, poverty, social exclusion, or lack of capacities do not per se kill people directly during a disaster event. But they create conditions (such as physical exposure or a lack of alternatives) that foster or force vulnerabilities (root causes, dynamics, and so on) to develop into disaster risk pathways.

3.2 Aggregation Aspects and Possible Interpretations of Indicators

If a social vulnerability index (SVI) were composed as a reassessment of the 2009 approach, the individual indicators would deserve more scrutiny regarding their explanatory power. Since the index approach was much criticized for blurring the individual indicators that compose it, we pass over the index and instead investigate how the indicators would influence the overall picture of vulnerability. This is due to our findings on the acceptance of vulnerability indicators by end users and decision makers. There are hindrances to acceptance, such as mayors not appreciating being labelled as vulnerable (Fekete 2012a) or misunderstanding maps (Fekete et al. 2015). Other constraints are excessive expectations on the part of the end users who utilize scientific results when published. In a recent project (Fekete et al. 2017), end users were more enthusiastic about being involved in the science and not just being used as interview sources. This is in line with recently promoted participatory approaches that codesign and codevelop research within a more nuanced understanding of producing knowledge (Weichselgartner and Kasperson 2010; Weichselgartner and Pigeon 2015). This cooperative approach contradicts other preexisting notions of “producing policy-relevant information” first and then distributing it to end users at the end, which can fail (Fekete 2012a). Many vulnerability models are perceived as black boxes because stakeholders are not involved in designing them. Aggregated indices may add to this black-box perception when the composition of indicators becomes too complex or hidden for their meaning to be grasped immediately (Fekete et al. 2015). One underlying question is, do end users have enough confidence in the resulting indicators to actually use them in their decision making? The reviewed publications analyzed in this article do not tackle this issue. 
The following section will not be able to answer this question, but will conceptually analyze the problem by looking at selected examples of the single indicators displayed in Fig. 2 to identify which aspects could be interpreted by users.

Fig. 2 Indicator maps related to social vulnerability in Germany at county and city administrative levels. Data source: Administrative digital boundaries were retrieved from Federal Office of Cartography and Geodesy (2017). Individual demographic statistics were derived from Federal Office of Statistics (2017), extracted, categorized, normalized, and visualized in QGIS by the author

Figure 2 shows five of six indicators validated in 2009 by using a second data set from a real case flood event. These indicators were composed for the year 2015 in the upper row of Fig. 2. The visual comparison already reveals a strong spatial correlation between the first three indicators: unemployment rate, basic education, and elderly population. What does this say about explanatory power? It might be seen as three indicators hardening an assumption that certain spatial areas in Eastern Germany have higher levels of assumed vulnerability. On the other hand, the confounding influence is very high; at least unemployment and low education levels are almost intuitively correlated. It must be considered for future SVI whether or not fewer indicators are a better approach than collecting as many as feasible. And it must be considered whether spatial heterogeneity should not also guide the selection of indicators for an index. For example, population numbers and one-apartment buildings (an indicator of home-ownership) highlight different counties and areas and might be valuable in adding other thematic plus spatial aspects to consider. Both spatial and temporal heterogeneity are important factors to consider in method design in order to assess the validity of an indicator or index. The lower row of maps in Fig. 2 displays in green colors where data changes between 2005 and 2015 result in lower (hypothetical) vulnerability. Brown colors indicate increasing vulnerability. Visual interpretation shows that unemployment, while above average in Eastern Germany, also exhibited an above-average decrease from 2005 to 2015. Basic education and one-apartment buildings in almost all counties and cities have changed in a direction that ameliorates vulnerability, while age went up, a reflection of demographic change in an ageing society. The spatial explanatory power of the latter three indicators might be questioned: when they do not reflect spatially different patterns, are these useful (vulnerability) indicators? Of course, the absolute rate of change must also be analyzed, investigating minimum and maximum values and fluctuations per year. When an indicator fluctuates up and down with only small changes in values, the explanatory power might be regarded as low. An exception is a variable that is a (normatively justified) key indicator; in that case, even small changes can be magnified in weight relative to other, less important indicators incorporated in the index.
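The checks discussed above (normalizing an indicator, testing how strongly two indicators correlate, and labelling the direction of change between two years) can be sketched in a few lines. This is a minimal illustration only: the county values are hypothetical and not taken from the data behind Fig. 2, the function names are invented for this sketch, and min-max scaling is an assumed normalization, since the exact method used for the maps is not stated here.

```python
from math import sqrt

def min_max_normalize(values):
    """Rescale values to the range [0, 1]; a constant series maps to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def change_direction(old, new, tolerance=0.0):
    """Label each unit by the sign of change between two years.

    Assumes a higher indicator value means higher assumed vulnerability.
    """
    labels = []
    for o, n in zip(old, new):
        delta = n - o
        if delta > tolerance:
            labels.append("increase")
        elif delta < -tolerance:
            labels.append("decrease")
        else:
            labels.append("stable")
    return labels

# Hypothetical values for four counties (illustrative, not real data)
unemployment_2005 = [12.0, 18.0, 6.0, 9.0]
unemployment_2015 = [8.0, 10.0, 5.0, 9.0]
low_education_2015 = [9.0, 11.0, 4.0, 7.0]

normalized = min_max_normalize(unemployment_2015)
r = pearson(unemployment_2015, low_education_2015)
trend = change_direction(unemployment_2005, unemployment_2015)
```

A high value of `r` between two indicators would flag the kind of redundancy discussed above, and a non-zero `tolerance` offers one simple way to ignore fluctuations that are too small to carry explanatory power.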

3.3 Benchmarking Vulnerability: Criticality Steps and Service Target Levels

While it is already a challenge to identify validation criteria for vulnerability, it is even more challenging to identify thresholds or benchmarks (Cutter et al. 2010). Vulnerability typically displays ranges of possible degrees; it rarely depicts thresholds that determine when to speak of vulnerability or when vulnerability is inevitably turning into disaster loss. Of course, GIS maps visualize degrees of vulnerability by standard deviations, natural breaks, quantiles, and so on. But such breaks are rarely justified beyond statistical argumentation, for instance by validation against real damage cases. What is the tipping point at which a certain number of people with certain characteristics will inevitably have to leave a place or will get killed?
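How much the choice of class breaks shapes the resulting picture can be shown with a minimal sketch. The indicator values are hypothetical, the function names are invented for this illustration, and the two schemes (quartiles, and classes at one standard deviation around the mean) are simplified stand-ins for the classification options offered by GIS software.

```python
from statistics import mean, pstdev, quantiles

def classify_by_quantiles(values, n_classes=4):
    """Assign each value a class 0..n_classes-1 using quantile cut points."""
    cuts = quantiles(values, n=n_classes)  # returns n_classes - 1 cut points
    return [sum(v > c for c in cuts) for v in values]

def classify_by_std(values):
    """Assign classes by distance from the mean in standard deviations."""
    mu, sigma = mean(values), pstdev(values)
    classes = []
    for v in values:
        z = (v - mu) / sigma if sigma else 0.0
        if z < -1:
            classes.append("low")
        elif z <= 1:
            classes.append("medium")
        else:
            classes.append("high")
    return classes

# Hypothetical indicator values for eight spatial units, with one outlier
indicator = [2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 30.0]

by_quantile = classify_by_quantiles(indicator)
by_std = classify_by_std(indicator)
```

With these values, the unit with value 8.0 lands in the highest quartile class but only in the middle class of the standard deviation scheme. Neither result says anything about whether that unit would actually suffer loss, which is the gap that validated thresholds would need to fill.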

There are other terms and lines of research where such thresholds are investigated, for example, loss and damage research (Wrathall et al. 2015), risk assessments using traditional loss and probability matrices (Federal Office of Civil Protection and Disaster Assistance 2010), or research under the terms criticality or severity. For example, failure mode effects and criticality analyses differentiate degrees of criticality according to stages of loss, from loss of human lives and loss of aircraft, which are damages that cannot be repaired, down to maintenance issues (US DoD 1980). Such categorization could serve as an example by which to differentiate vulnerability.

Finding evidence to back up thresholds is difficult. After the 2009 study, our research in other fields, such as critical infrastructure, civil protection, and risk and crisis management, suggests the need to add other concepts next to criticality that complement existing vulnerability frameworks. In risk and crisis management approaches in civil protection, a demand has been observed for a methodology that justifies the often hidden, underlying reasons for prioritizing one value, such as human lives, over economic or ecological loss. In order to address this limitation, vulnerability and risk assessments have been included in more comprehensive frameworks that incorporate preparation and application phases. These risk management frameworks also include validation and communication structures (IRGC 2012; ISO 2009; Federal Ministry of the Interior 2008). Prioritization of what is “measured” by the risk and vulnerability assessment is termed “protection” or a “risk management goal” (Fekete et al. 2012). Those studies suggest a methodology to order the underlying values that exist in a civil protection agency, for example, to prioritize saving human lives over economic interests. But this methodology is also useful for economic risk assessments or ecological assessments because it makes the value decision explicit; in the civil protection example, the overall value selected is to maximize saving human lives. Time characteristics such as quickness of effect or duration enhance this humanitarian value. Although still underrepresented in VA, time restrictions have been found to be a ubiquitous characteristic that can be applied to almost any indicator or process. For example, population density is a generally good indicator for exposure to flood disaster risk, but in combination with onset speed of the flood or daytime population in a city it becomes much more precise.

Risk management goals are composed of the value to protect or analyze and thresholds of countermeasures (capacities) that should be achieved. Such thresholds can be, for example, zero-death visions in road safety or limiting the climate change-related temperature increase to 2 degrees (Fekete 2012b). These thresholds or “goals” could also be useful for VA, and they can either be decided upon as a strategic goal or be based on real cases or measurements. An example is the service time for fire fighters to reach their destination [varying between 5 and 180 min in Europe (Weber 2013) or the USA (Sa’adah 2004)].
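
The idea of a risk management goal as a protected value combined with a target level can be illustrated with a minimal sketch. It is not a method taken from the cited studies: the class name, the function name, the 8-minute target, and the district service times are all hypothetical and chosen only to show how an absolute threshold, rather than a relative ranking, could be applied to an indicator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskManagementGoal:
    """A value to protect plus the target level a capacity should reach."""
    protected_value: str
    capacity: str
    target_minutes: float  # hypothetical target level, chosen for illustration


def classify_against_goal(goal, observed_minutes):
    """Label each area by whether its observed service time meets the target."""
    return {
        area: "meets target" if minutes <= goal.target_minutes else "exceeds target"
        for area, minutes in observed_minutes.items()
    }


# Hypothetical goal and made-up service times in minutes (not data from the cited studies).
goal = RiskManagementGoal("human lives", "fire service arrival time", 8.0)
observed = {"district_a": 5.0, "district_b": 8.0, "district_c": 12.5}
labels = classify_against_goal(goal, observed)
print(labels)
```

In such a scheme an area is judged against the stated goal and not against other areas, which is the difference between a threshold value and a relative degree estimate. Whether the target is set as a strategic goal or derived from real cases remains a value decision that would need to be made explicit.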

4 Conclusion

First, the usage of an existing SVA approach has been analyzed in a literature review in order to identify whether, and which, aspects of the SVA were also used by other studies or regarded as useful. The findings show that validation is often still lacking, yet is frequently regarded as an important component of an SVA. The findings also show common agreement on certain indicator selections and on the usage of spatial assessments in a great number of countries worldwide. The abundance of urban area assessments is in line with a general focus on cities within disaster risk and resilience research (Fekete and Fiedrich 2018). Guidance on how to conduct validations, on the usage of existing studies for cross-validation, and on variable and indicator selection is still wanting.

In the second part, this article has therefore discussed possible validation criteria and benchmarks. Benchmarks that form a worldwide database of disaster cases with an explicit focus on revealed vulnerability are still missing. Accounts of human and economic losses still address mainly the exposure component and therefore undervalue the core of vulnerability, termed susceptibility. In the absence of validation criteria or benchmarks, this article has suggested insights from methodologies in related fields such as critical infrastructure or risk and crisis management. Concepts such as criticality steps, risk management goals, and target levels, as well as integrative risk and crisis management frameworks, can help to advance vulnerability from a relative degree estimate to a threshold value. While all these approaches have their own limitations and constraints, they may stimulate the further conceptual and applied development of VA.

References

Anderson, M.B., and P.J. Woodrow. 1998. Rising from the ashes: Development strategies in times of disaster. Boulder: Lynne Rienner.

Armaş, I., and A. Gavriş. 2013. Social vulnerability assessment using spatial multi-criteria analysis (Sevi Model) and the social vulnerability index (Sovi Model)—A case study for Bucharest, Romania. Natural Hazards and Earth System Sciences 13(6): 1481–1499.

Asadzadeh, A., T. Kötter, and E. Zebardast. 2015. An augmented approach for measurement of disaster resilience using connective factor analysis and analytic network process (F’ANP) model. International Journal of Disaster Risk Reduction 14: 504–518.

Balica, S.F., N.G. Wright, and F. van der Meulen. 2012. A flood vulnerability index for coastal cities and its use in assessing climate change impacts. Natural Hazards 64(1): 73–105.

Bergstrand, K., B. Mayer, B. Brumback, and Y. Zhang. 2015. Assessing the relationship between social vulnerability and community resilience to hazards. Social Indicators Research 122(2): 391–409.

Birkmann, J. 2006. Measuring vulnerability to promote disaster-resilient societies: Conceptual frameworks and definitions. In Measuring vulnerability to natural hazards: Towards disaster resilient societies. 1st edn., ed. J. Birkmann, 9–54. Tokyo: United Nations University Press.

Birkmann, J. 2013. Measuring vulnerability to promote disaster-resilient societies and to enhance adaptation: Conceptual frameworks and definitions. In Measuring vulnerability to natural hazards: Towards disaster resilient societies. 2nd edn. (completely revised), ed. J. Birkmann, 9–79. Tokyo: United Nations University Press.

Bui, D.T., B. Pradhan, H. Nampak, Q.-T. Bui, Q.-A. Tran, and Q.-P. Nguyen. 2016. Hybrid artificial intelligence approach based on neural fuzzy inference model and metaheuristic optimization for flood susceptibility modeling in a high-frequency tropical cyclone area using GIS. Journal of Hydrology 540: 317–330.

Cai, H., N.S.-N. Lam, L. Zou, Y. Qiang, and K. Li. 2016. Assessing community resilience to coastal hazards in the lower Mississippi River Basin. Water 8: 46.

Castillo, M.E., E.M. Baldwin, R.S. Casarin, G.P. Vanegas, and M.A. Juaréz. 2012. Characterization of risks in coastal zones: A review. Clean—Soil, Air, Water 40(9): 894–905.

Cutter, S.L. 1996. Vulnerability to environmental hazards. Progress in Human Geography 20(4): 529–539.

Cutter, S.L., B.J. Boruff, and W.L. Shirley. 2003. Social vulnerability to environmental hazards. Social Science Quarterly 84(2): 242–261.

Cutter, S.L., C.G. Burton, and C.T. Emrich. 2010. Disaster resilience indicators for benchmarking baseline conditions. Journal of Homeland Security and Emergency Management 7(1): Article 51.

Cutter, S.L., C.T. Emrich, D.P. Morath, and C.M. Dunning. 2013. Integrating social vulnerability into federal flood risk management planning. Journal of Flood Risk Management 6(4): 332–344.

Davidson, R.A., and H.C. Shah. 1997. An urban earthquake disaster risk index. Stanford University, Department of Civil Engineering, John A. Blume Earthquake Engineering Center. Report No. 121. Stanford digital repository. Stanford, CA: John A. Blume Earthquake Engineering Center. https://www.google.com/search?source=hp&ei=HVh1XLXLD5Hj_Aasq6j4CQ&q=Davidson+Urban+Earthquake+disaster+risk+index+1997&btnK=Google+Search&oq=Davidson+Urban+Earthquake+disaster+risk+index+1997&gs_l=psy-ab.3…757.95298..104025…26.0..0.177.6865.63j12….2..0….1..gws-wiz…..0..35i39j0j0i131j0i67j0i131i10j0i10j0i22i30j33i299j33i160j33i22i29i30j33i10.dTzwZGNEfx8. Accessed 26 Feb 2019.

de Sherbinin, A. 2014. Mapping the unmeasurable? Spatial analysis of vulnerability to climate change and climate variability. Ph.D. thesis. Enschede, Netherlands: ITC-University of Twente. http://ciesin.columbia.edu/documents/de_Sherbinin_2014_PhDThesis_UTwente.pdf. Accessed 1 Apr 2018.

Dickin, S.K., C.J. Schuster-Wallace, and S.J. Elliott. 2013. Developing a vulnerability mapping methodology: Applying the water-associated disease index to Dengue in Malaysia. PLOS ONE 8(5): Article e63584.

Federal Ministry of the Interior (Bundesministerium des Innern). 2008. Protecting critical infrastructures—Risk and crisis management: A guide for companies and government authorities. Berlin: Federal Ministry of the Interior. https://www.bbk.bund.de/SharedDocs/Downloads/BBK/DE/Publikationen/PublikationenKritis/Protecting-Critical-Infrastructures.pdf?__blob=publicationFile. Accessed 3 Mar 2019.

Federal Office of Cartography and Geodesy (Bundesamt für Kartographie und Geodäsie). 2017. Verwaltungsgebiete von Deutschland. Frankfurt am Main: Bundesamt für Kartographie und Geodäsie. http://www.geodatenzentrum.de/geodaten/gdz_rahmen.gdz_div?gdz_spr=deu&gdz_akt_zeile=5&gdz_anz_zeile=1&gdz_unt_zeile=13&gdz_user_id=0. Accessed 1 Apr 2018 (in German).

Federal Office of Civil Protection and Disaster Assistance (Bundesamt für Bevölkerungsschutz und Katastrophenhilfe). 2010. Method for risk analysis in civil protection (Methode für Die Risikoanalyse im Bevölkerungsschutz). Wissenschaftsforum Band 8. Bonn: Bundesamt für Bevölkerungsschutz und Katastrophenhilfe. http://www.bbk.bund.de/SharedDocs/Downloads/BBK/DE/Publikationen/Wissenschaftsforum/Bd8_Methode-Risikoanalyse-BS.pdf?__blob=publicationFile. Accessed 1 Apr 2018 (in German).

Federal Office of Statistics (Statistische Ämter des Bundes und der Länder). 2017. Regional data bank of Germany (Regionaldatenbank Deutschland). https://www.destatis.de/DE/ZahlenFakten/LaenderRegionen/Regionales/Regionaldatenbank/Regionaldatenbank.html. Accessed 1 Apr 2018 (in German).

Fekete, A. 2009. Validation of a social vulnerability index in context to river-floods in Germany. Natural Hazards and Earth System Sciences 9(2): 393–403.

Fekete, A. 2010. Assessment of social vulnerability to river-floods in Germany. Ph.D. dissertation. Bonn: Institute for Environment and Human Security, United Nations University.

Fekete, A. 2012a. Spatial disaster vulnerability and risk assessments: Challenges in their quality and acceptance. Natural Hazards 61(3): 1161–1178.

Fekete, A. 2012b. Safety and security target levels: Opportunities and challenges for risk management and risk communication. International Journal of Disaster Risk Reduction 2: 67–76.

Fekete, A., M. Damm, and J. Birkmann. 2010. Scales as a challenge for vulnerability assessment. Natural Hazards 55(3): 729–747.

Fekete, A., and F. Fiedrich, eds. 2018. Urban disaster resilience and security: Addressing risks in societies. Cham, Switzerland: Springer.

Fekete, A., G. Hufschmidt, and S. Kruse. 2014. Benefits and challenges of resilience and vulnerability for disaster risk management. International Journal of Disaster Risk Science 5(1): 3–20.

Fekete, A., P. Lauwe, and W. Geier. 2012. Risk management goals and identification of critical infrastructures. International Journal of Critical Infrastructures 8(4): 336–353.

Fekete, A., K. Tzavella, I. Armas, J. Binner, M. Garschagen, C. Giupponi, V. Mojtahed, M. Pettita, et al. 2015. Critical data source; tool or even infrastructure? Challenges of Geographic Information Systems and Remote Sensing for disaster risk governance. ISPRS International Journal of Geo-Information 4(4): 1848–1869.

Fekete, A., K. Tzavella, and R. Baumhauer. 2017. Spatial exposure aspects contributing to vulnerability and resilience assessments of urban critical infrastructure in a flood and blackout context. Natural Hazards 86(1): 151–176.

Felsenstein, D., and M. Lichter. 2014. Social and economic vulnerability of coastal communities to sea-level rise and extreme flooding. Natural Hazards 71(1): 463–491.

Fernandez, P., S. Mourato, and M. Moreira. 2015. Social vulnerability assessment of flood risk using GIS-based multicriteria decision analysis. A case study of Vila Nova de Gaia (Portugal). Geomatics, Natural Hazards and Risk 7(4): 1367–1389.

Finch, C., C.T. Emrich, and S.L. Cutter. 2010. Disaster disparities and differential recovery in New Orleans. Population and Environment 31(4): 179–202.

Ford, J.D., E.C.H. Keskitalo, T. Smith, T. Pearce, L. Berrang-Ford, F. Duerden, and B. Smit. 2010. Case study and analogue methodologies in climate change vulnerability research. Wiley Interdisciplinary Reviews: Climate Change 1(3): 374–392.

Frigerio, I., and M. De Amicis. 2016. Mapping social vulnerability to natural hazards in Italy: A suitable tool for risk mitigation strategies. Environmental Science & Policy 63: 187–196.

Frigerio, I., S. Ventura, D. Strigaro, M. Mattavelli, M. De Amicis, S. Mugnano, and M. Boffi. 2016. A GIS-based approach to identify the spatial variability of social vulnerability to seismic hazard in Italy. Applied Geography 74: 12–22.

Fritzsche, K., S. Schneiderbauer, P. Bubeck, S. Kienberger, M. Buth, M. Zebisch, and W. Kahlenborn. 2014. Vulnerability sourcebook: Concept and guidelines for assessments. Bonn and Eschborn: Deutsche Gesellschaft für Internationale Zusammenarbeit (GIZ). https://www.adelphi.de/en/system/files/mediathek/bilder/vulnerability_sourcebook_guidelines_for_assessments_adelphi_giz_2014.pdf. Accessed 1 Apr 2018.

Fuchs, S., and T. Thaler, eds. 2018. Vulnerability and resilience to natural hazards. New York: Cambridge University Press.

Gallina, V., S. Torresan, A. Critto, A. Sperotto, T. Glade, and A. Marcomini. 2016. A review of multi-risk methodologies for natural hazards: Consequences and challenges for a climate change impact assessment. Journal of Environmental Management 168: 123–132.

Gibson, C.C., E. Ostrom, and T.-K. Ahn. 2000. The concept of scale and the human dimensions of global change: A survey. Ecological Economics 32(2): 217–239.

Grenier, P., A.-C. Parent, D. Huard, F. Anctil, and D. Chaumont. 2013. An assessment of six dissimilarity metrics for climate analogs. Journal of Applied Meteorology and Climatology 52(4): 733–752.

Hiete, M., M. Merz, T. Comes, and F. Schultmann. 2012. Trapezoidal fuzzy DEMATEL method to analyze and correct for relations between variables in a composite indicator for disaster resilience. OR Spectrum 34(4): 971–995.

Holand, I.S., and P. Lujala. 2013. Replicating and adapting an index of social vulnerability to a new context: A comparison study for Norway. The Professional Geographer 65(2): 312–328.

IRGC (International Risk Governance Council). 2012. An introduction to the IRGC risk governance framework. Geneva: International Risk Governance Council. https://www.preventionweb.net/publications/view/51238. Accessed 3 Mar 2019.

ISO (International Organization for Standardization). 2009. ISO 31000:2009. Risk management—Principles and guidelines. Geneva: ISO. https://www.iso.org/iso-31000-risk-management.html. Accessed 3 Mar 2019.

Karagiorgos, K., T. Thaler, M. Heiser, J. Hübl, and S. Fuchs. 2016a. Integrated flash flood vulnerability assessment: Insights from East Attica, Greece. Journal of Hydrology 541(Part A): 553–562.

Karagiorgos, K., T. Thaler, J. Hübl, F. Maris, and S. Fuchs. 2016b. Multi-vulnerability analysis for flash flood risk management. Natural Hazards 82(1 Supplement): 63–87.

Khan, S. 2012. Vulnerability assessments and their planning implications: A case study of the Hutt Valley, New Zealand. Natural Hazards 64(2): 1587–1607.

Khan, F.A., and A. Salman. 2012. A simple human vulnerability index to climate change hazards for Pakistan. International Journal of Disaster Risk Science 3(3): 163–176.

Khazai, B., T. Kunz-Plapp, C. Büscher, and A. Wegner. 2014. VuWiki: An ontology-based semantic wiki for vulnerability assessments. International Journal of Disaster Risk Science 5(1): 55–73.

Kienberger, S. 2012. Spatial modelling of social and economic vulnerability to floods at the district level in Búzi, Mozambique. Natural Hazards 64(3): 2001–2019.

Kienberger, S., S. Lang, and P. Zeil. 2009. Spatial vulnerability units—Expert-based spatial modelling of socio-economic vulnerability in the Salzach catchment, Austria. Natural Hazards and Earth System Sciences 9: 767–778.

Kok, M., M. Lüdeke, P. Lucas, T. Sterzel, C. Walther, P. Janssen, D. Sietz, and I. de Soysa. 2016. A new method for analysing socio-ecological patterns of vulnerability. Regional Environmental Change 16(1): 229–243.

Koks, E.E., B. Jongman, T.G. Husby, and W.J.W. Botzen. 2015. Combining hazard, exposure and social vulnerability to provide lessons for flood risk management. Environmental Science & Policy 47: 42–52.

Kreibich, H., A.H. Thieken, T. Petrow, M. Müller, and B. Merz. 2005. Flood loss reduction of private households due to building precautionary measures—Lessons learned from the Elbe flood in August 2002. Natural Hazards and Earth System Sciences 5(1): 117–126.

Kubal, C., D. Haase, V. Meyer, and S. Scheuer. 2009. Integrated urban flood risk assessment—Adapting a multicriteria approach to a city. Natural Hazards and Earth System Sciences 9(6): 1881–1895.

Kuhlicke, C., A. Scolobig, S. Tapsell, A. Steinführer, and B. De Marchi. 2011a. Contextualizing social vulnerability: Findings from case studies across Europe. Natural Hazards 58(2): 789–810.

Kuhlicke, C., A. Steinführer, C. Begg, C. Bianchizza, M. Bründl, M. Buchecker, B. De Marchi, M.D.M. Tarditti, et al. 2011b. Perspectives on social capacity building for natural hazards: Outlining an emerging field of research and practice in Europe. Environmental Science & Policy 14(7): 804–814.

Künzler, M., C. Huggel, and J.M. Ramírez. 2012. A risk analysis for floods and lahars: Case study in the Cordillera Central of Colombia. Natural Hazards 64(1): 767–796.

Lee, G., K.S. Jun, and E.S. Chung. 2013. Integrated multi-criteria flood vulnerability approach using fuzzy TOPSIS and Delphi technique. Natural Hazards and Earth System Sciences 13(5): 1293–1312.

Lee, M.-H., I.W. Jung, and D.-H. Bae. 2011. Korean flood vulnerability assessment on climate change. Journal of Korea Water Resources Association 44(8): 653–666.

Mazumdar, J., and S.K. Paul. 2016. Socioeconomic and infrastructural vulnerability indices for cyclones in the eastern coastal states of India. Natural Hazards 82(3): 1621–1643.

Mendes, J.M., A.O. Tavares, L. Cunha, and S. Freiria. 2011. Social vulnerability from natural and technological hazards in Portugal (A vulnerabilidade social aos perigos naturais e tecnológicos em Portugal). Revista Crítica de Ciências Sociais 93: 95–128.

Merz, M., M. Hiete, T. Comes, and F. Schultmann. 2013. A composite indicator model to assess natural disaster risks in industry on a spatial level. Journal of Risk Research 16(9): 1077–1099.

Müller, A., J. Reiter, and U. Weiland. 2011. Assessment of urban vulnerability towards floods using an indicator-based approach—A case study for Santiago De Chile. Natural Hazards and Earth System Sciences 11(8): 2107–2123.

Nelson, K.S., M.D. Abkowitz, and J.V. Camp. 2015. A method for creating high resolution maps of social vulnerability in the context of environmental hazards. Applied Geography 63: 89–100.

Nguyen, K.V., and H. James. 2013. Measuring household resilience to floods: A case study in the Vietnamese Mekong River Delta. Ecology and Society 18(3): Article 13.

Nguyen, T.T.X., J. Bonetti, K. Rogers, and C.D. Woodroffe. 2016. Indicator-based assessment of climate-change impacts on coasts: A review of concepts, methodological approaches and vulnerability indices. Ocean & Coastal Management 123: 18–43.

Oulahen, G., L. Mortsch, K. Tang, and D. Harford. 2015. Unequal vulnerability to flood hazards: “Ground truthing” a social vulnerability index of five municipalities in metro Vancouver, Canada. Annals of the Association of American Geographers 105(3): 473–495.

Park, M., Y. Song, S. Kim, and M. Park. 2012. A study on the assessment method for high-risk urban inundation area using flood vulnerability index. Journal of the Korean Society of Hazard Mitigation 12(2): 245–254.

Preston, B.L., E.J. Yuen, and R.M. Westaway. 2011. Putting vulnerability to climate change on the map: A review of approaches, benefits, and risks. Sustainability Science 6(2): 177–202.

Roy, D.C., and T. Blaschke. 2015. Spatial vulnerability assessment of floods in the coastal regions of Bangladesh. Geomatics, Natural Hazards and Risk 6(1): 21–44.

Rufat, S., E. Tate, C.G. Burton, and A.S. Maroof. 2015. Social vulnerability to floods: Review of case studies and implications for measurement. International Journal of Disaster Risk Reduction 14(4): 470–486.

Sa’adah, R. 2004. The eight-minute ALS response time standard: A review and discussion of its use as a strategic results goal by the District of Columbia. Washington, DC: District of Columbia, Fire and Emergency Medical Services Department.

Scheuer, S., D. Haase, and V. Meyer. 2011. Exploring multicriteria flood vulnerability by integrating economic, social and ecological dimensions of flood risk and coping capacity: From a starting point view towards an end point view of vulnerability. Natural Hazards 58(2): 731–751.

Schneiderbauer, S., and D. Ehrlich. 2006. Social levels and hazard (in)dependence in determining vulnerability. In Measuring vulnerability to natural hazards: Towards disaster resilient societies, ed. J. Birkmann, 78–102. Tokyo: United Nations University Press.

Siagian, T.H., P. Purhadi, S. Suhartono, and H. Ritonga. 2014. Social vulnerability to natural hazards in Indonesia: Driving factors and policy implications. Natural Hazards 70(2): 1603–1617.

Sietz, D., S.E.M. Choque, and M.K.B. Lüdeke. 2012. Typical patterns of smallholder vulnerability to weather extremes with regard to food security in the Peruvian Altiplano. Regional Environmental Change 12(3): 489–505.

Solangaarachchi, D., A.L. Griffin, and M.D. Doherty. 2012. Social vulnerability in the context of bushfire risk at the urban-bush interface in Sydney: A case study of the Blue Mountains and Ku-Ring-Gai local council areas. Natural Hazards 64(2): 1873–1898.

Stângă, I.C., and A. Grozavu. 2012. Quantifying human vulnerability in rural areas: Case study of Tutova Hills (Eastern Romania). Natural Hazards and Earth System Sciences 12(6): 1987–2001.

Sterzel, T., M. Lüdeke, M. Kok, C. Walther, D. Sietz, I. de Soysa, P. Lucas, and P. Janssen. 2014. Armed conflict distribution in global drylands through the lens of a typology of socio-ecological vulnerability. Regional Environmental Change 14(4): 1419–1435.

Tate, E. 2012. Social vulnerability indices: A comparative assessment using uncertainty and sensitivity analysis. Natural Hazards 63(2): 325–347.

Tate, E. 2013. Uncertainty analysis for a social vulnerability index. Annals of the Association of American Geographers 103(3): 526–543.

Terti, G., I. Ruin, S. Anquetin, and J.J. Gourley. 2015. Dynamic vulnerability factors for impact-based flash flood prediction. Natural Hazards 79(3): 1481–1497.

Thieken, A., H. Kreibich, M. Müller, and B. Merz. 2007. Coping with floods: Preparedness, response and recovery of flood-affected residents in Germany in 2002. Hydrological Sciences - Journal des Sciences Hydrologiques 52(5): 1016–1037.

Tripathi, R., S.K. Sengupta, A. Patra, H. Chang, and Il W. Jung. 2014. Climate change, urban development, and community perception of an extreme flood: A case study of Vernonia, Oregon, USA. Applied Geography 46: 137–146.

United Nations. 2015. Sendai framework for disaster risk reduction 2015–2030. Geneva: United Nations UNISDR. https://www.unisdr.org/files/43291_sendaiframeworkfordrren.pdf. Accessed 26 Feb 2019.

US DoD (United States Department of Defense). 1980. MIL-STD-1629A. Military standard. Procedures for performing a failure mode, effects, and criticality analysis. Washington, DC: US DoD.

Weber, M. 2013. Brandschutzbedarfsplanung im Europäischen Vergleich (Fire Protection Planning in European Comparison). BRANDSchutz 67(September): 720–727 (in German).

Weichselgartner, J., and R. Kasperson. 2010. Barriers in the science-policy-practice interface: Toward a knowledge-action-system in global environmental change research. Global Environmental Change 20(2): 266–277.

Weichselgartner, J., and I. Kelman. 2014. Geographies of resilience: Challenges and opportunities of a descriptive concept. Progress in Human Geography 39(3): 249–267.

Weichselgartner, J., and P. Pigeon. 2015. The role of knowledge in disaster risk reduction. International Journal of Disaster Risk Science 6(2): 107–116.

Welle, T., Y. Depietri, M. Angignard, J. Birkmann, F. Renaud, and S. Greiving. 2014. Vulnerability assessment to heat waves, floods, and earthquakes using the MOVE framework: Test case Cologne, Germany. In Assessment of vulnerability to natural hazards, ed. J. Birkmann, S. Kienberger, and D.E. Alexander, 91–124. Bonn: Elsevier.

Werg, J., T. Grothmann, and P. Schmidt. 2013. Assessing social capacity and vulnerability of private households to natural hazards—Integrating psychological and governance factors. Natural Hazards and Earth System Sciences 13(6): 1613–1628.

Wilhelmi, O.V., and R.E. Morss. 2013. Integrated analysis of societal vulnerability in an extreme precipitation event: A Fort Collins case study. Environmental Science & Policy 26: 49–62.

Wisner, B., P. Blaikie, T. Cannon, and I. Davis. 2004. At risk: Natural hazards, people’s vulnerability and disasters. 2nd edn. London: Routledge.

Wrathall, D.J., A. Oliver-Smith, A. Fekete, E. Gencer, M.L. Reyes, and P. Sakdapolrak. 2015. Problematising loss and damage. International Journal of Global Warming 8(2): 274–294.

Yang, S., S. He, J. Du, and X. Sun. 2015. Screening of social vulnerability to natural hazards in China. Natural Hazards 76(1): 1–18.

Yoon, D.K. 2012. Assessment of social vulnerability to natural disasters: A comparative study. Natural Hazards 63(2): 823–843.

Zhang, N., and H. Huang. 2013. Social vulnerability for public safety: A case study of Beijing, China. Chinese Science Bulletin 58(19): 2387–2394.

Zhou, Y., N. Li, W. Wu, and J. Wu. 2014a. Assessment of provincial social vulnerability to natural disasters in China. Natural Hazards 71(3): 2165–2186.

Zhou, Y., N. Li, W. Wu, J. Wu, and P. Shi. 2014b. Local spatial and temporal factors influencing population and societal vulnerability to natural disasters. Risk Analysis 34(4): 614–639.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Cite this article

Fekete, A. Social Vulnerability (Re-)Assessment in Context to Natural Hazards: Review of the Usefulness of the Spatial Indicator Approach and Investigations of Validation Demands. Int J Disaster Risk Sci 10, 220–232 (2019). https://doi.org/10.1007/s13753-019-0213-1

DOI: https://doi.org/10.1007/s13753-019-0213-1