Abstract

The paper provides a comprehensive overview of modeling and pricing cyber insurance and includes clear and easily understandable explanations of the underlying mathematical concepts. We distinguish three main types of cyber risks: idiosyncratic, systematic, and systemic cyber risks. While for idiosyncratic and systematic cyber risks, classical actuarial and financial mathematics appear to be well-suited, systemic cyber risks require more sophisticated approaches that capture both network and strategic interactions. In the context of pricing cyber insurance policies, issues of interdependence arise for both systematic and systemic cyber risks; classical actuarial valuation needs to be extended to include more complex methods, such as concepts of risk-neutral valuation and (set-valued) monetary risk measures.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Cyber risks constitute a major threat to companies worldwide.Footnote 1 In the last years, the estimated costs of cyber crime have continuously been increasing—from approximately USD 600 billion in 2018 to more than USD 1 trillion in 2020, cf. CSIS [25]. Consequently, the market for cyber insurance is experiencing strong growth, providing contracts that mitigate the increasing risk exposure—with significant potential ahead. However, cyber insurance differs from other lines of business in multiple ways that pose significant challenges to insurance companies offering cyber coverage:

-

Data on cyber events and losses is scarce and typically not available in the desired amount or granularity.

-

Cyber threats are evolving dynamically in a highly non-stationary cyber risk landscape.

-

Aggregate cyber risks arise due to common IT architectures or complex interconnections that cannot easily be captured.

-

The term ‘cyber’ risk itself comprises many different types of risk with different root causes and types of impact.

Insurance companies cannot solely rely on standard actuarial approaches when modeling and pricing cyber risks. Their traditional methods need to be complemented by novel and innovative techniques for both underwriting and quantitative risk management. The current paper provides the following main contributions:

-

(i)

We present a comprehensive overview of the state of the art of modeling and pricing cyber insurance. In contrast to other surveys (see, e.g., [39]) that focus on a high-level review of the literature, we explain the underlying mathematical concepts and discuss their advantages and drawbacks.Footnote 2

-

(ii)

The second main contribution of the paper is a classification of cyber risks into three different types: idiosyncratic, systematic, and systemic cyber risks. While the distinction between idiosyncratic and systemic risks is common in the current cyber insurance literature (see, e.g., [116]), a further refinement is necessary. The three risk types can be described as follows:

-

Idiosyncratic risks refer to cyber risks at the level of individual policyholders that are independent from risks of others parties. This might, for example, be caused by internal errors within the company. Prototypical idiosyncratic risks are independent risks in large insurance pools that allow to apply classical actuarial techniques.

-

Systematic risks are cyber risks that result from common vulnerabilities of entities affecting different firms at the same time, e.g., firms belonging to the same industry sector or region, or firms that utilize the same software, server, or computer system. These risks can be modeled via common risk factors. In classical actuarial and financial mathematics, systematic risks include financial market risks as well as stochastic fluctuations and evolutions of mortality rates within a population.

-

Systemic risks are cyber risks caused by local or global contagion effects in interconnected systems or by strategic interaction. Examples are worm-type malware or supplier attacks. These risks are similar to important feedback mechanisms observed in financial crises, e.g., contagion in networks of counterparties or fire sales of stressed market participants in illiquid markets. Models include random processes with feedback, or locally and globally interacting processes. We will also include strategic interactions in this category which are studied in game theory.

Idiosyncratic and systematic cyber risks can be captured by classical approaches of actuarial and financial mathematics; systemic cyber risks require different methodologies such as epidemic network models which focus on the interconnectedness of the entities. We suggest pricing techniques that adequately incorporate interdependence for both systematic and systemic cyber risks by combining the concepts of risk-neutral valuation and risk measures.

-

The paper is structured as follows. Section 2 reviews classical actuarial approaches. We begin with an introduction to the frequency-severity approach in the context of cyber risk and discuss how to model both idiosyncratic and systematic risks in this framework. We explain how dependence is captured in such models. Systemic cyber risks are considered in Sect. 3. Three different modeling approaches for interconnectedness, contagion, and interaction between entities are discussed, with a special focus on their advantages and possible drawbacks. In Sect. 4, we describe pricing methods for cyber insurance contracts that are applicable in the face of idiosyncratic, systematic, and systemic risks. Section 5 discusses open questions for future research.

2 Classical actuarial approaches applied to cyber risks

The pricing of cyber insurance contracts as well as quantitative cyber risk management require sound models for the loss distributions, customized to the application purpose. While classical actuarial premium principles are essentially related to the expected claims amount (plus a safety loading), quantitative risk management particularly refers to extreme losses in the tail of the distribution and their quantification in terms of risk measures such as Value at Risk or Average Value at Risk, see Sect. 4.

In actuarial mathematics, a standard model for insurance losses—used across all lines of business— is the frequency-severity approach, also called collective risk model. For a certain time interval [0, t], \(t>0\) (typically \(t=1\) year), a collective of policyholders causes a random number of claims \({\mathcal {N}}_t\) (frequency) with corresponding random loss sizes \({\mathcal {Y}}_1, {\mathcal {Y}}_2,\ldots \) (severity) generating the total claim amount

Calculations within the frequency-severity approach typically rely on the following mathematical assumptions (see, e.g., [88]):

-

(C1)

Claims occur at arrival times \(0\le T_1\le T_2\le \ldots \). The number of claims in the time interval [0, t], \(t\ge 0\), is defined by

$$\begin{aligned} {\mathcal {N}}_t:=\#\{j\ge 1 \;\vert \; T_j\le t \}, \end{aligned}$$i.e., \({\mathcal {N}}=({\mathcal {N}}_t)_{t\ge 0}\) constitutes a counting process on \([0,\infty )\).

-

(C2)

The jth claim arriving at time \(T_j\) causes the claim size \({\mathcal {Y}}_j\). It is assumed that the sequence \(({\mathcal {Y}}_j)_{j\ge 1}\) of claim sizes consists of independent and identically distributed random variables.

-

(C3)

Claim sizes and claim numbers are assumed to be independent from each other.

In contrast to classical insurance risks, however, cyber risk is more challenging in different ways. In particular, the standard assumptions of the frequency-severity approach as well as classical statistical techniquesFootnote 3 are no longer applicable:

-

Claims data is not available in sufficient quantity or in the required granularity.

-

Technology and cyber threats are evolving rapidly, i.e., the cyber environment is highly non-stationary.

-

Cyber incidentsFootnote 4 may affect different policyholders at the same time, i. e., the typical assumption of independence for insurance risks does not hold any longer. Moreover, there is—in contrast to natural catastrophe risk—no simple geographical delimitation of dependent risks.

Nonetheless, the frequency-severity approach can be customized to account for cyber risk—at least as a first approximation and for certain types of non-systemic cyber risks, which can be subdivided into idiosyncratic and systematic risks (as defined in Sect. 1). In the frequency-severity approaches presented below, we explicitly distinguish between techniques suitable for modeling idiosyncratic or systematic incidents. In the context of cyber insurance, however, a third class of risks can be identified, namely systemic risks, i.e., cyber risks resulting from contagion between interconnected entities. Proper modeling of such risks goes beyond the classical framework of actuarial modeling and requires appropriate models for networks, (cyber) disease spread, and strategic interaction. Hence, we discuss the modeling of systemic cyber risks separately in Sect. 3, while the pricing for all types of cyber risks is discussed in Sect. 4.

To present frequency-severity approaches in the context of cyber risk in a unified and practically applicable way, we use the following notation and definitions. We consider an insurer’s portfolio of n policyholders (firms) exposed to the considered type of cyber risk incidents. Each firm admits an individual risk profile characterized by a vector of covariates, e.g., industry sector, size, IT security level, which are elicitable, for example, via a questionnaire or from public information. Using the covariates, the insurer’s portfolio is decomposed into homogeneous groups, labeled \(\{1,\ldots , K\}\), with covariates vector \(x^k\) for group k. We denote by \(n_k\), \(k=1,\ldots ,K\), the number of firms in group k, i.e., \(n_1+\ldots +n_K=n\). For pricing purposes, these homogeneous groups can be viewed as tariff cells, i.e., the insurance firm should charge all firmsFootnote 5 within group k the same premium \(\pi _k\). In particular, if \(n_k\) is large, then the premium of the idiosyncratic cyber risk can be derived from the law of large numbers as the expected claims amount per firm of group k plus a suitable safety loading to avoid ruin in the long run.

Both idiosyncratic and systematic incidents can be grouped into different cyber risk categories, labeled \(\{1,\ldots , C\}\). Categories may include, for example, data breach, fraud, and business interruption. Two exemplary actuarial classification approaches are sketched and discussed in Appendix A. Cyber risk is modeled per risk category \(c\in \{1,\ldots ,C\}\) and per group \(k\in \{1,\ldots , K\}\). A pair \(m:=(c,k)\) is called a cyber risk module. The total number of modules \(C\cdot K\) is a trade-off between homogeneity and availability of data for statistical estimation.

Within this framework, we model the losses for an insurance company – for each cyber risk module as well as on an aggregate level. For this purpose, we first focus on frequency-severity based approaches to modeling cyber risks in the spirit of the classical collective risk model. Second, we add dependence to our cyber risk model in order to capture accumulation risks. Note that appropriate dependence modeling is particularly important for calculating capital requirements in quantitative risk management, since the underlying risk measures refer to events in the extreme tail of the loss distribution.

2.1 Frequency and severity

A frequency-severity model may be applied on the level of each cyber risk module \(m=(c,k)\). For simplicity, we describe the losses per risk category of individual firms by a collective risk model. This can be justified as follows: Since all firms in any group are (approximately) homogeneous, they will be charged the same premium for any given risk category. From the point of view of the insurance company, only aggregate losses are relevant, i.e., an artificial allocation of losses to individual companies for pricing purposes will produce the correct implications. We thus describe the losses per risk category at the level of any individual firm by a collective risk model with the same severity as the corresponding module, but with a suitably reduced frequency.

For a firm i in group k and a fixed risk category c, i.e., a cyber risk module \(m=(c,k)\), we consider the frequency and severity model \(({\mathcal {N}}_{t}^{m,i},({\mathcal {Y}}^{m,i}_j)_{j\ge 1})\). Then the total claim amount of firm i up to time t can easily be obtained by summing up:

In mathematical terms, all quantities correspond to random variables on a suitable probability space \((\Omega ,{\mathcal {F}},{\mathbb {P}})\), where \({\mathbb {P}}\) plays the role of the statistical measure that models the relative frequency with which events occur.

As outlined in the introduction of this section, one of the most common assumptions in the frequency-severity model is assumption (C3), i. e., claim numbers and sizes are independent of each other. This assumption facilitates and simplifies many calculations regarding the compound total claim amount process. In particular, the expected total claim amount and its variance follow from Wald’s formulas:

However, the independence assumption may not always be reasonable—e.g., if hidden factors influence both frequency and severity: Sun et al. [106] detect a positive nonlinear dependence between frequency and severity in hacking breach risks at the firm level. A firm with a strong cyber self protection is expected to experience both fewer and weaker hacking attacks than companies with weak self protection mechanisms. In mathematical terms, the authors capture this dependence between frequency and severity by the Gumbel copula, see also Sect. 2.2.

2.1.1 Frequency

Let \({\mathcal {N}}_t^{m,i}\) denote the number of incidents in module \(m=(c,k)\) until time t that are allocated to a firm i in group k, and let \(({\mathcal {N}}_t^{m,i})_{t\ge 0}\) denote the corresponding counting process. At the aggregate level,

will count the total number of incidents per module \(m= (c,k)\) and the total number of incidents per cyber risk category c, respectively.

Poisson Process

A simple counting process for incidents—reflecting non-stationarity of cyber risk—is a time-inhomogeneous Poisson process with intensity function \(\lambda ^m\) per firm for cyber risk module m.

Definition 2.1

(Time-inhomogeneous Poisson process) A counting process \(({\mathcal {N}}_t)_{t \ge 0}\) is called a time-inhomogeneous Poisson process on \((\Omega ,{\mathcal {F}},{\mathbb {P}})\) with locally integrable rate (or intensity) function \(\lambda :[0,\infty )\rightarrow [0,\infty )\) if:

-

1.

\({\mathcal {N}}_0= 0\),

-

2.

the process has independent increments,

-

3.

for any time interval (s, t], the number of incidents is Poisson distributed with mean \(\int _s^t\lambda (u)\,du\), i.e.,

$$\begin{aligned} {\mathcal {N}}_t-{\mathcal {N}}_s\sim {\text {Poiss}}\left( \int _s^t\lambda (u)\,du\right) . \end{aligned}$$

Unless the intensity function is constant, the increments of a time-inhomogeneous Poisson process are non-stationary. The cumulative rate function \(\int _0^t\lambda (u)\,du\) corresponds to the expected number of incidents up to time t.

Zeller and Scherer [116] adopt this approach for idiosyncratic incidents. For each policyholder i of group k and module \(m=(c,k)\), the number of idiosyncratic incidents \(({\mathcal {N}}_t^{m,i})_{t\ge 0}\) is assumed to follow a time-inhomogeneous Poisson process with intensity \(\lambda ^m=\lambda ^{(c,k)}\). Clearly, for each cyber risk category c, the intensity at the level of an individual firm i depends on the covariates \(x^k\) of group k (but not on the individual policyholder i), and Zeller and Scherer [116] propose a generalized additive model

to estimate the intensity rates.Footnote 6 In particular, similarities and deviations of the risk profiles of the K groups—expressed in terms of the covariate vectors \(x^k\), \(k=1,\ldots ,K\)—are reflected by the intensity functions \(\lambda ^{(c,k)}\).

Since idiosyncratic incidents are independent across firms, the total number of incidents \({\mathcal {N}}_t^{m, agg}\), \(t\ge 0\), per module \(m= (c,k)\) as well as the total number of incidents \({\mathcal {N}}_t^{(c)}\), \(t\ge 0\), per cyber risk category c, respectively, are again time-inhomogeneous Poisson processes with respective intensities

More delicate, however, is the case of systematic cyber risk incidents. In particular, frequency distributions of different policyholders might be subject to dependencies due to joint underlying cyber risk factors \({\mathcal {R}}^1,\ldots ,{\mathcal {R}}^d\), representing, for example, the random discovery of exploits in commonly used software, improvements in cyber security, or the technological progress of tools for cyber attacks.

Cox Process

Such dependencies between counting processes can be captured in the context of Cox processes, also called doubly stochastic Poisson processes, extending the notion of a time-inhomogeneous Poisson process to a random intensity.

Definition 2.2

(Cox process) A Cox process \(({\mathcal {N}}_t)_{t\ge 0}\) is a counting process described by a random intensity process \((\lambda _t)_{t\ge 0}\) such that conditional on the specific realization \(t\mapsto \lambda _t(\omega )\), \(\omega \in \Omega \), the process \(({\mathcal {N}}_t)_{t\ge 0}\) is a time-inhomogeneous Poisson process with intensity \(t\mapsto \lambda (t)=\lambda _t(\omega )\).

A reasonable assumption could be that the intensity is a function of the current state of random cyber risk factors, i.e., for an \({\mathbb {R}}^d\)-valued stochastic process \({\mathcal {R}}_t=({\mathcal {R}}^1_t,\ldots ,{\mathcal {R}}_t^d)\), \(t\ge 0\), of cyber risk factors and a function \(\lambda : {\mathbb {R}}^d\rightarrow [0,\infty )\), the intensity process is defined as

More generally, the intensity process could be modeled as a function of the whole history of cyber risk factors, i.e.,

In summary, in the case of systematic cyber risk, a reasonable model for the number of incidents \({\mathcal {N}}_t^{m,i}\) up to time t allocated to policyholder i in group k for module \(m=(c,k)\) could be to assume that \(({\mathcal {N}}_t^{m,i})_{t\ge 0}\) follows a Cox process with intensity process \(\lambda _t^m=\lambda ^m({\mathcal {R}}_t)\), \(t\ge 0\), defined in terms of a suitable function \(\lambda ^m:{\mathbb {R}}^d\rightarrow [0, \infty )\), such that conditional on the cyber risk factors \(t\mapsto {\mathcal {R}}_t(\omega )=({\mathcal {R}}^1_t(\omega ),\ldots , {\mathcal {R}}_t^d(\omega ))\) the counting processes \(({\mathcal {N}}_t^{m,i})_{t\ge 0}\), \(m = (c,k)\), \(c =1,\ldots ,C\), \(k=1, \ldots , K\), are independent time-inhomogeneous Poisson processes. In particular, conditional independence implies that—conditional on the specific realization \(t\mapsto \lambda ^m_t(\omega )\)—the total number of incidents \({\mathcal {N}}_t^{m, agg}\), \(t\ge 0\), per module \(m= (c,k)\) and the total number of incidents \({\mathcal {N}}_t^{(c)}\), \(t\ge 0\), per cyber risk category c are again time-inhomogeneous Poisson processes with intensities

in analogy to (1).

In contrast to the time-inhomogeneous Poisson process, the increments of a Cox process \(({\mathcal {N}}_t)_{t\ge 0}\) are in general no longer independent, but subject to autocorrelation. More precisely, for any \(s<t\le u<v\), the tower property of conditional expectation implies

i.e., the autocorrelation depends on the random intensity process. The statistical analysis of Bessy–Roland et al. [7] yields empirical evidence for autocorrelation in the number of attacks, and thus provides an additional rationale for Cox processes when modeling claims frequency. The specification of an intensity process that reproduces the empirically observed autocorrelation appears to be challenging.

2.1.2 Severity

Every claim occurring in the frequency-severity model triggers a loss size that is modeled as a random variable. We let \({\mathcal {Y}}_{j}^{m,i}\) denote the claim size of the jth event allocated to firm i for module \(m=(c,k)\) and assume that \(({\mathcal {Y}}_j^{m,i})_{j\ge 1}\), \(i=1, \dots , n_k\), is a collection of non-negative independentFootnote 7 and identically distributed random variables. One among many different possible approaches is to assume that the key governing parameter for the choice of the claim size distribution is the incident category c; characteristics of group k then determine distributional details, e.g., parameter values.

Due to the limited availability of loss data, empirical research on cyber risk severity distributions has mostly focused on the category of data breaches. For this category, open source data bases, such as the Privacy Rights Clearinghouse Chronology of Data Breaches, are available and regularly updated. Data breach severities are found to follow strongly heavy-tailed distributions such as power-law (see, e.g., [80]), log-normal (see, e.g., [37]) or generalized Pareto distributions (GPD) (see, e.g., [112] or [106]). For cyber risk categories different from data breaches, less data is publicly available. Consequently, fewer papers have appeared that empirically analyze the respective severity distributions.

An exception is Dacorogna et al. [29] who study a non-public database of the French Gendarmerie Nationale on cyber complaints and describe a process for cleaning the data. Their analysis suggests that losses are heavy-tailed. Dacorogna et al. [30] refine the analysis and provide a tool for classifying attacks based on the fatness of the tail. Another promising direction are studies based on data on operational risk such as Biener et al. [9] or Eling and Wirfs [41]. These approaches offer the benefit of being able to analyze all categories of cyber incidents simultaneously. In particular, Eling and Wirfs [41] detect distributional differences between small and large claim sizes for all considered cyber incident categories. The authors propose a composite distribution approach, where excess losses over a threshold are modeled using a GPD and the remaining smaller losses are modeled using a simple parametric distribution such as a gamma or log-normal distribution. In general, composite distribution approaches constitute a flexible modeling tool to take the empirically observed distributional differences between body and tail of severity distributions adequately into account. A composite distribution approach can be formalized as follows.

For each module m, we choose a threshold \(\theta ^m\) distinguishing small from large cyber claims. Small and large claims, i.e., the body and tail of the severity distribution, are then modeled separately: The i.i.d. claim sizes follow a composite distribution with density

where \(f_{\text {small}}^m, f_{\text {large}}^m\) are probability density functions modeling the sizes of small and large claims in module m, respectively, and \(C_1^m\), \(C_2^m\) are normalizing constants that are additionally constrained by continuity conditions at the threshold \(\theta ^m\). Depending on the characteristics of the module m, different choices for \(f_{\text {small}}^m, f_{\text {large}}^m\) may be suitable. Examples include

-

Small Claims: PERT, Normal, Gamma, Log-Normal, GPD, Kernel Distribution

-

Large Claims: GPD

The composite distribution approach is well-suited for modeling non-life insurance severity distributions in general, and cyber risks in particular.Footnote 8 As discussed here, the methodology is independent of time, i.e., it provides only a snapshot of the current cyber environment. In the light of the fast-evolving, non-stationary cyber landscape, the suitability of the model must, however, be regularly validated and updated. For further details and discussions, we refer the interested reader to the excellent summaries provided by Zeller and Scherer [116], Sect. 2.1, or Eling [39], in particular Tables 4 and 6, and to Cooray and Ananda [23] for an application of composite distributions in a non-cyber specific context.

2.1.3 On calibration and application

In general, frequency-severity models are well-understood, easy to implement and to calibrate if a sufficient amount of data is available. They are also straightforward to explain, for example, to an executive board of an insurance company; this is partly due to their prevalence in actuarial modeling. For frequency modeling, intensities can, e.g., be fit to data using generalized additive models (as in Zeller and Scherer [116] and described above), maximum- or marginal likelihood, or Bayesian methods. Cox processes are generally more difficult to estimate – the choice of a calibration method critically depends on the law of the underlying common risk factor processes.Footnote 9

For the statistical analysis of the severity, there exist well-known estimation techniques including maximum-likelihood, see, e.g., Maillart and Sornette [80] or Edwards et al. [37] for applications in a cyber severity context, or the peaks-over-threshold method for fitting a GPD to the tail of a distribution, see, e.g., McNeil et al. [85] and Embrechts et al. [42]. For a general review on methods for the parameter estimation of GPDs, including maximum-likelihood, the method of moments, the probability weighted moments method, and Bayesian approaches, see also de Zea Bermudez and Kotz [32] and de Zea Bermudez and Kotz [33].

The practical application of frequency-severity models to cyber risk is challenging, in particular due to the limited amount of available data and its insufficient quality. Moreover, Poisson and Cox processes do not capture the systemic interaction between different (groups of) policyholders; see also Reinhart [98] for a discussion of the frequency-severity model presented by Zeller and Scherer [116]. An alternative are Hawkes processes that incorporate systemic self-excitation into frequency models, see Sect. 3.1. Like Cox processes, Hawkes processes are able to capture autocorrelation observable in the data.

2.2 Dependence modeling

The distribution of the total claim amount per module and at the portfolio level is affected by the underlying dependence structures. For cyber risk, dependencies may be present at different levels including:

-

dependence between frequency distributions or between severity distributions of different policyholders in the same homogeneous group (e.g., due to the random evolution of common cyber security measures and cyber threats over time),

-

dependence between frequency and severity—in contrast to the classical framework of frequency-severity models (e.g., due to unobservable random factors within a tariff class such as heterogeneous levels of cyber self protection).

One approach to deal with the first type of dependencies are Cox processes as described in Sect. 2.1.1. In this section, we review further approaches to model dependence in the context of cyber risk that have been proposed in the literature.

2.2.1 Common risk factors

Common risk factors capture dependence for systematic risks; the factors are random quantities to which all risks are jointly exposed. Common risk factors appear in static as well as in dynamic models and have been widely used in the cyber risk modeling literature. For example, they are key elements of the cyber risk models proposed by Böhme [10], Böhme and Kataria [11] and Zeller and Scherer [116]. Cox processes, as introduced in Sect. 2.1.1, are an example of dynamic factor models.

Böhme [10] captures dependence using one common risk factor in a static model. The factor represents a common vulnerability in a portfolio of n individual risks. The connection between individual risks and the latent risk factor is studied on the basis of linear correlation.Footnote 10 Common risk factors also appear in the cyber risk model of [116]. The authors use marked point processes with two-dimensional marks: the first component describes the strength of an attack, and the second component represents the subset of companies affected. Dependence among firms occurs due to the restriction of incidents to certain industry sectors which is modeled via a common risk factor. The paper suggests a conceptual framework, but does not yet calibrate the model to real data.

2.2.2 Copulas

In actuarial applications, copulas are a standard tool that fully characterizes the dependence structure of the components of finite-dimensional random vectors. A d-dimensional copula \({\mathcal {C}}:[0,1]^d\rightarrow [0,1]\) is the distribution function of a d-dimensional random vector with uniform one-dimensional marginal distributions.

Theorem 2.1

(Sklar’s Theorem)

-

1.

For any d-dimensional distribution function F with margins \(F_1,\ldots ,F_d\) there exists a copula \({\mathcal {C}}\) with

$$\begin{aligned} F(x_1,\ldots ,x_d)={\mathcal {C}}(F_1(x_1),\ldots ,F_d(x_d))\quad \text{ for } \text{ all } x_1,\ldots ,x_d\in [-\infty ,\infty ]. \end{aligned}$$(2)If all \(F_i\) are continuous, then \({\mathcal {C}}\) is unique.

-

2.

Conversely, for a given copula \({\mathcal {C}}\) and given one-dimensional distribution functions \(F_1,\ldots ,F_d\), the function F in (2) is a d-dimensional distribution function with copula \({\mathcal {C}}\) and marginal distribution functions \(F_1,\ldots ,F_d\).

Property 1 states that a copula extracts the dependence structure of a random vector from its multivariate distribution, while property 2 provides a flexible construction principle of multivariate models by combing marginal distributions and copulas to multivariate distributions. Prominent examples of copulas are:

-

Gaussian copula: Letting \(\Phi ^{-1}\) be the quantile function of the standard normal distribution and \(\Phi _{\Sigma }\) the joint cumulative distribution function of a multivariate normal distribution with covariance matrix \(\Sigma \), the corresponding Gaussian copula is given by

$$\begin{aligned} {\mathcal {C}}_\Sigma ^{\textrm{Ga}}(u_1,\ldots , u_{d}) = \Phi _{\Sigma } (\Phi ^{-1}(u_1),\ldots , \Phi ^{-1}(u_{d})) \quad ({(u_1,\ldots ,u_d)}\in [0,1]). \end{aligned}$$ -

t-copula: Let \(t_{\nu ,\Sigma }\) signify the distribution function of a d-dimensional t-distribution \(t_d(\nu ,0,\Sigma )\) for a given correlation matrix \(\Sigma \) and with \(\nu \) degrees of freedom, and let \(t_\nu \) denote the distribution function of a univariate t-distribution with \(\nu \) degrees of freedom. The corresponding t-copula takes the form

$$\begin{aligned} {\mathcal {C}}^t_{\nu ,\Sigma }(u_1,\ldots ,u_d)=t_{\nu ,\Sigma }(t_\nu ^{-1}((u_1),\ldots ,t_\nu ^{-1}(u_{d}))\quad ({(u_1,\ldots ,u_d)}\in [0,1]). \end{aligned}$$Like the Gaussian copula, the t-copula is an implicit copula that is extracted from a given parametric multi-variate distribution.

-

Archimedean copulas: Explicit copulas are constructed from given functions; the prime example are Archimedean copulas. We consider a suitable continuous function \(\psi :[0,\infty )\rightarrow [0,1]\) with \(\psi (0) = 1\), \(\lim _{x\rightarrow \infty } \psi (x) = 0\), and \(\psi \) strictly decreasing on \([0, \psi ^{-1}(0)]\), where \(\psi ^{-1}\) denotes its generalized inverse. The Archimedean copula with generator \(\psi \) is given by

$$\begin{aligned} {\mathcal {C}}_\psi ^{\textrm{Ar}}(u_1,\ldots , u_{d}) = \psi ^{-1} (\psi (u_1) +\ldots + \psi (u_{d})) \quad ({(u_1,\ldots ,u_{d})}\in [0,1]). \end{aligned}$$A special case is the Gumbel copula for \(\psi _\theta (s) = (-\ln (s))^\theta \), \(\theta \in [1,\infty )\) that is applied in the cyber model of Sun et al. [106].

2.2.3 On calibration and application

Common risk factor models are able to capture dependence from bottom-up and are widely used in economics. From a practical perspective, they are particularly useful when a modeler is confident that random outcomes are influenced by common external factors. In Cox processes, described in Sect. 2.1.1, the common factors enter the model via the intensity. Their estimation depends on the specific choice of the distribution of the underlying risk factors. For the class of linear factor models, a large amount of statistical estimation methods exist. Important techniques are time series regression, cross-sectional regression (at each time point), and principal component analysis, see, e.g., McNeil et al. [85] and the references therein.

Another approach are copulas; these are theoretically able to represent every form of static dependence. They can be viewed as a top-down approach that imposes a dependence structure without modeling the underlying mechanisms, as contrasted with factor models that can be interpreted as a bottom-up approach. Copulas have already been used in the literature on cyber risk. Herath and Herath [62] model the loss distribution at a single firm using a copula that captures the dependence structure between the number of affected computers of the firm and the overall severity of the loss. In Böhme and Kataria [11] dependence between different firms is captured using a t-copula with a given linear correlation coefficient.

Another example is an application of copulas in a modified collective risk model in which the standard independence assumption is relaxed. For the incident category c of hacking data breaches, Sun et al. [106] observe upper tail dependence between frequency and severity. This may be caused by hidden factors such as the degree of cyber self protection. They propose to model this dependence for any firm i in module m up to time t via a Gumbel copula.

Eling and Jung [40] and Liu et al. [79] apply vine copulas in the context of data breaches. Vine copulas are very flexible, and their calibration is quite tractable, since high-dimensional dependence structures are decomposed into components of lower dimension. For detailed information on vine copulas we refer to Czado [26], Czado and Nagler [27], and an online collection of material on vine copulas, see TU Munich, Statistics Research Group [107].

In general, the choice of a suitable copula estimation method depends on the structure of the chosen copula model: parametric, semiparametric or nonparametric. A good survey on various methods is Hofert et al. [65]. In a fully parametric model, both the copula and the marginal distributions are completely characterized by (vector) parameters. The maximum likelihood (ML) method can be applied to the dependence and the marginal part either jointly or sequentially. The sequential approach is often referred to as the method of inference functions for margins (IFM), see, e.g., the surveys in Choroś et al. [22], Sect. 2.1, or McNeil et al. [85], Sect. 7.6. Semiparametric approaches typically still involve a parametric copula model, but a nonparametric model for the marginals. Here, classically, the marginal distributions are estimated via their empirical distribution functions. Estimation of the full model can then be performed using a maximum-pseudo likelihood approach, in which the nonparametric marginal estimators are inserted, see the seminal paper of Genest et al. [53]. This approach is considered to be more robust than the parametric ML and IFM methods in many practical applications, see Kim et al. [70], unless substantial information is available on a parametric class to which the margins belong to. Nonparametric copula models may be estimated on the basis of different variations of nonparametric marginal and joint distribution function estimates, see, e.g., the seminal paper of Deheuvels [34] using empirical distribution functions or Chen and Huang [21] (and the references therein) for kernel-based estimators of the copula (or copula density).

3 Systemic cyber risks

Systemic risk generally refers to the possibility that distortions in a system may spread across many entities and be augmented due to local or global feedback effects. This is in contrast to systematic risk that introduces dependence via exogenous factors. Systemic risk refers to the internal mechanism of a system in which the behavior of the various entities has a sequential impact. It is often associated with a cascading risk propagation such that

“in case of an adverse local shock (infection) to a system of interconnected entities, a substantial part of the system, or even the whole system, finally becomes infected due to contagion effects.”Footnote 11

As a consequence of the 2008 financial crisis, systemic risk was intensively studied in systems of interdependent financial institutions, see, e.g., Staum [105]. This concept is also important in the context of cyber risk, since agents and organizations in cyber systems are interconnected, for example within IT networks or via business contacts.Footnote 12 The relevance of systemic cyber threats has been emphasized by leading regulatory and macroprudential institutions, cf. WEF [110] and ESRB [46]. Examples of contagious threats include the WannaCry and NotPetya cyber attacks where the corresponding malware spread through networks of interconnected IT devices and firms, causing tremendous losses to cyber systems worldwide.Footnote 13

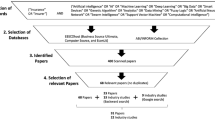

Modeling systemic cyber risks requires models of feedback effects, local and global interaction, as well as strategic interaction. We describe three concrete methodological approaches (see Fig. 1): Firstly, self-excitation of cyber incidents can be captured by Hawkes processes on an aggregate level (Sect. 3.1); in this respect, Hawkes processes can be interpreted as a top-down approach. Secondly, epidemic network models (Sect. 3.2) capture the interconnectedness and cascading propagation of risks; this bottom-up approach may focus on local connections, but can also capture global interaction via aggregate, mean-field quantities. Both approaches can be viewed as mechanistic interaction models in which rational or strategic behavior of agents is typically not mirrored. This is the focus of the third approach, game-theoretic models (Sect. 3.3). These study explicitly the strategic interaction of interconnected entities, usually under strongly simplified connectivity assumptions; notions of equilibria typically characterize the solutions.

3.1 Hawkes processes

Systematic dependence of cyber incidents can be modeled by Cox processes; these permit to capture empirical features such as the autocorrelation of cyber attacks. Cox processes focus on common factors, but they do not model contagion in interconnected systems. An alternative are Hawkes processes, self-exciting processes, that mirror feedback effects, a specific form of systemic cyber risk; they also capture the stylized fact of autocorrelation of the number of events.

Definition 3.1

(Hawkes process) A one-dimensional Hawkes process \(({\mathcal {N}}_t)_{t\ge 0}\) is a point process with jump times \(T_1,T_2,\ldots \) and with random intensity \(t\mapsto \lambda _t\), given by

where \(\mu (\cdot )\) is a baseline intensity of jumps, and where \(\varphi \) is the excitation function or kernel function resp. which expresses the positive influence of past incidents at time \(T_n\) on the current value of the intensity.

From a conceptual point of view, Hawkes processes allow to capture—besides autocorrelation of the number of cyber risk incidents—excitation effects, by coupling the arrival rate of events with the number of past incidents. In particular, this allows modeling systemic incidents that affect a very large number of counterparties at the same time, e.g., the spread of worm-type malware.

Self-excitation of cyber incidents for each policyholder as well as the excitation between policyholders of different groups can be modeled by a multivariate Hawkes model. More precisely, for all cyber risk modules \(m=(c,k)\) and for any policyholder i of group k, the intensity of the counting process \(({\mathcal {N}}_t^{m,i})_{t\ge 0}\) takes the form

where

-

\(t\mapsto \mu ^{(c,k)}(t)\) is the deterministic base intensity function, depending on the cyber risk module \(m=(c,k)\) only,

-

\(t\mapsto \varphi ^{c,k,l}_{i,j}(t)\) are self- and mutually-exciting maps (called kernels), depending on both the cyber risk module \(m=(c,k)\), the other group l and the individual policyholders i, j,

-

and \(T_n^{(c,l,j)}\), \(n\in {\mathbb {N}}\), are the claims arrival times of policyholder j in group l with respect to the cyber risk category c.

In this multivariate Hawkes model, the kernels \(\varphi ^{c,k,k}_{i,i}\) describe the self-excitation for policyholder i of group k, while the \(\varphi ^{c,k,l}_{i,j}\) for different policyholders \(i\not =j\) model contagion between policyholders and across groups.

3.1.1 On calibration and application

Using suitable parametric functions for both the baseline intensity and the kernels of Hawkes processes can in principle be estimated by maximum-likelihood methods—provided that data is available in the desired amount and granularity. Data availability is, of course, still a major challenge in cyber insurance. Model calibration and statistical parameter estimates in a cyber context are, e.g., presented in Bessy-Roland et al. [7] focusing on data breaches. Further, Hawkes processes are also used in an empirical study of cyber risk contagion in Baldwin et al. [4]. In the context of financial data, maximum-likelihood methods and graphical goodness-of-fit are, e.g., discussed in Embrechts et al. [43]. Da Fonseca and Zaatour [28] develop an estimation by the method of moments which is fast compared to likelihood estimation. A general discussion including Bayesian estimation is presented in Daley and Vere-Jones [31], see also Giesecke [54], Errais et al. [45], and Aït-Sahalia et al. [1].

Since Hawkes processes can be easily incorporated with a classical actuarial frequency model for systemic cyber risk, they can be integrated into the standard collective risk model if complemented by an appropriate severity modeling approach. In principle, the severities of systemic events could be chosen as described in Sect. 2.1.2 for idiosyncratic and systematic events. Due to the limited amount of data and uncertainty about the possible impact of future systemic cyber incidents, accurate modeling of systemic severities is extremely challenging in practice.

Hawkes processes take a top-down approach to modeling systemic cyber risk and neglect the specific infection processes that underlie risk contagion in interconnected systems. Important aspects of risk amplification and possible accumulation scenarios may not be adequately captured. This is the main attractive feature of epidemic network models; their disadvantage is their increased complexity.

3.2 Epidemic network models

Interconnectedness constitutes a key characteristic of cyber systems. Systemic cyber risks may spread and amplify in networks of interconnected companies, economic actors, or financial institutions. Cyber network models for contagious risk propagation consist of the following three key components:

-

1.

A network (also called graph) whose nodes represent components or agents. These entities could be individual corporations, subsystems of computers, or single devices. The edges of the network correspond to possible transition channels, e.g., IT connections or exchange of data/computer code, see Sect. 3.2.1;

-

2.

A spread process on the network that models the propagation of a contagious cyber risk, like the spread of a computer virus, a Trojan, or ransomware,Footnote 14 see Sect. 3.2.2;

-

3.

A loss model which determines the severity of cyber events and the monetary impact on different agents in the network, see Sect. 3.2.3.

3.2.1 Networks

Definition 3.2

(Network) A \( {network}\)Footnote 15 (or graph) \(G\) is an ordered pair of sets \( G= ({\mathcal {V}}, {\mathcal {E}} )\), where \({\mathcal {V}} \ne \emptyset \) is a countable set of N elements, called \({nodes}\) (or \({vertices}\)), and \({\mathcal {E}}\) is a set of pairs \( (i, j)\), \(i, j \in {\mathcal {V}}\), of different nodes, called \({edges}\) (or \({links}\)). If all edges in \({\mathcal {E}}\) are unordered, formally, \((i,j) \in {\mathcal {E}} \Rightarrow (j,i) \in {\mathcal {E}}\), then \(G\) is called an \({undirected~network}\). Otherwise, the network \(G\) is called \({directed}\).

The network structure is encoded in its adjacency matrix \(A=(a_{ij})_{i,j\in \{1,\ldots ,N\}}\in \{0,1\}^{N\times N}\), which is defined by its entries

By definition, G is undirected if and only if \( A\) is symmetric. Examples of undirected network topologies with \(N=8\) nodes are depicted in Fig. 2.

In applied network analysis, the exact network structure is often unknown. In this case, random network models enable sampling from a class of networks with given fixed topological characteristics (such as the overall number of nodes).Footnote 16

In the cyber insurance literature, network models are mainly applied to study risk contagion, e.g., modeling the propagation of malware in IT networks of interconnected firms or devices. In addition to an underlying network, an appropriate model of the contagion process that captures epidemic spread is needed.

3.2.2 Epidemic spread processes

Models of infectious disease spread dynamics have been studied extensively in mathematical biology and epidemiology, dating back at least to the seminal work of Kermack and McKendrick [68].Footnote 17 In this paper, we focus on epidemic network models for populations of entities.

At any point in time, each node is in a particular state, which may change over time as it interacts with other nodes. According to their state, individuals are divided into various compartments, e.g., individuals that are susceptible (S) to an infection, infected (I) individuals, or individuals who have recovered (R) from the infection. For a network of N nodes, the spread process at time t can be described by a state vector

where E is the set of compartments. Both Markov and non-Markov processes have been considered in the context of epidemic spread processes.Footnote 18

Markovian Spread Models

In Markovian spread models on networks, the evolution of the state vector X(t) is described by a (in many cases: time-homogeneous) continuous-time Markov chain on the discrete state space \(E^N\). The Markov models SIS (Susceptible-Infected-Susceptible) and SIR (Susceptible-Infected-Recovered) form a class of commonly used models for epidemic propagation in networks. They are distinguished by the presence (SIR) or absence (SIS) of immunity: Reinfection events are only possible in the SIS framework because in the SIR model recovered individuals acquire (permanent) immunity, i.e., the models are based on the two different sets of compartments \(E=\{S,I\}\) and \(E=\{S,I,R\}\).

In both models, a transition of X from one state in \(E^N\) to another is possible only if exactly one node changes its state \(X_i\) in E. State changes may occur through infection or recovery: It is assumed that each node may be infected by its infected neighbors, but can be cured independently of all other nodes in the network. Each node is endowed with an independent exponential clock and changes its state when the exponential clock rings. Letting \(\tau >0\) and \(\gamma > 0\), the rates of these transitions are illustrated in Fig. 3 and given as follows \((i=1,\ldots ,N)\):

where \(Z=S\), for the SIS, and \(Z=R\) for the SIR model, respectively.

The exponential transition times enable an intuitive stochastic simulation algorithm: the well-known Gillespie algorithm, first introduced in Gillespie [55] and Gillespie [56]; see Appendix C for details.

For practical purposes such as the pricing of cyber insurance contracts, we often do not need the full information provided by the Markov chain evolution, but only the dynamics of specific quantities such as moments or (infection) probabilities. Of particular interest are the dynamics of the state probabilities of individual nodes \({\mathbb {P}}(X_i(t)=x_i),\; t\ge 0\). They can be derived from Kolmogorov’s forward equation and written in general form as (\(i=1,\ldots ,N\))

where \(q_{zy}\) denotes the transition rate of the entire process X from \(z\rightarrow y\). In natural sciences, this equation is also known under the term master equation. For the SIS and SIR models, using Bernoulli random variables \(S_i(t):= \mathbbm {1}_{\{X_i(t) = S\}}\), \(I_i(t):= \mathbbm {1}_{\{X_i(t) = I\}}\), and (for SIR) \(R_i(t):= \mathbbm {1}_{\{X_i(t) = R\}}\), the dynamics of state probabilities of individual nodes (4) can conveniently be written via moments:

-

SIS model:Footnote 19 Since \(E =\{ I,S\}\), we have \(S_i(t)=1-I_i(t)\), i.e., the evolution of X is fully described by the evolution of the vector \(I(t)=(I_1(t),\ldots , I_N(t))\), and the single node infection dynamics for \(i=1,\ldots , N\) are given by

$$\begin{aligned} \frac{d {\mathbb {E}}[I_i(t)]}{d t} = -\gamma {\mathbb {E}}[I_i(t)] + \tau \sum _{j=1}^N a_{ij}{\mathbb {E}}[I_j(t)] - \tau \sum _{j=1}^N a_{ij}{\mathbb {E}}[I_i(t)I_j(t)], \end{aligned}$$(5)since \({\mathbb {P}}(X_i(t)=I)={\mathbb {P}}(I_i(t)=1)={\mathbb {E}}[I_i(t))].\) This system of N equations is not closed as second order moments \({\mathbb {E}}[I_i(t)I_j(t)]\), i.e., second order infection probabilities, appear.

-

SIR model: The dynamics of the recovery Bernoulli random variable \(R_i(t)\) result from the dynamics of \(I_i(t)\) and \(S_i(t)\) due to \({\mathbb {E}}[R_i(t)] = 1-{\mathbb {E}}[S_i(t)] - {\mathbb {E}}[I_i(t)]\). Equation (4) corresponds to:

$$\begin{aligned} \begin{aligned} \frac{d{\mathbb {E}}[S_i(t)]}{dt}&= -\tau \sum _{j=1}^N a_{ij} {\mathbb {E}}[S_i(t)I_j(t)] , \\ \frac{d{\mathbb {E}}[I_i(t)]}{dt}&= \tau \sum _{j=1}^N a_{ij} {\mathbb {E}}[S_i(t)I_j(t)] - \gamma {\mathbb {E}}[I_i(t)] ,\\ \end{aligned} \end{aligned}$$(6)for \(i = 1,2,\ldots , N\). Again, the system is not closed due to the presence of second order moments.

The main problem with systems (5) and (6) is the fact that they are not closed: They depend on second order moments, which, in turn, depend on third order moments, etc. For example, the fully closed SIS model yields \(\sum _{i=1}^N \left( {\begin{array}{c}N\\ i\end{array}}\right) = 2^N-1\) moment (i.e., infection probability) equations. Solving these systems exactly becomes intractable for networks of realistic size. To deal with this issue, the following two approximation approaches have been proposed:

-

1.

Monte Carlo simulation: Monte Carlo simulation using the Gillespie algorithm (see Appendix C) constitutes a powerful tool to obtain various quantity estimates related to the evolution of the epidemic spread. In particular, this includes the state probability dynamics of individual nodes (4).Footnote 20

-

2.

Moment closures: If a set of nodes \(J\subset {\mathcal {V}}\) is infected, this increases the probability of other nodes in the network (that are connected to the set J via an existing path) to become infected as well. Node states do not evolve independently and are to some extent correlated. To break the cascade of equations and to make ODE systems tractable, the moment closure approach consists in factorizing moments beyond a certain order k, substituting all higher-order moments. This is done by considering the exact moment equations up to this order k and closing the system by approximating moments of order \(k+1\) in terms of products of lower-order moments using a mean-field function. A detailed description of two different types of moment closures is provided in Appendix D. However, a major problem with moment closures is that only little is known about rigorous error estimates.Footnote 21 This presents an important avenue for future research.

Non-Markovian Spread Models

Non-Markovian models possess conditional distributions that may depend on the past and on further random factors. In contrast to the Markovian setup, where transition times are necessarily exponential, non-Markovian models might allow additional flexibility to freely choose the distributions of infection and recovery times. In addition, dependence among the infection times may be included. This generality may improve the quality of a fit to real-world data. However, the extended generality in comparison to Markov models is typically associated with reduced tractability. For this reason, non-Markovian models are less commonly considered. In addition, a similar scope of flexibility can also be achieved within the class of Markovian models by extending the dimension of the state space; but this comes again at the price of increased complexity and possibly reduced tractability.

A simple example of a non-Markovian model for the spread of cyber risks has been proposed by Xu and Hua [114]. The model does not include immunity, i.e., the underlying compartment set is the same as for the Markovian SIS model. The considered waiting times in the model are:

-

The individual recovery times \(T^{recov}_i\) of infected nodes.

-

For nodes i which are in the susceptible state, two different types of infections are considered, internal infections from within the network and external infections coming from outside:

-

1.

Internal infection times: Let the random variable \(K_i(t) = \sum _{j=1}^N a_{ij} I_j(t)\) denote the number of infected neighbors of node i at time t. Infectious transmissions to node i are given with waiting times \(T_{i_1},\ldots ,T_{i_{K_i}}\). These times share the same marginal distribution \(F_i\). Their underlying dependence structure is captured by a prespecified copula.

-

2.

External infection times: A random variable \(T^{out}_i\) with distribution \(G_i\) models the arrival time of threats from outside the network to node i. \(T^{out}_i\) is assumed to be independent of times \(T_{i_1},\ldots ,T_{i_{K_i}}\).

-

1.

To simulate the process, the waiting times for all nodes are generated according to their current state (i.e., recovery times for all infected nodes, and internal and external infection times for all susceptible nodes). The minimum of these waiting times determines the next event (infection or recovery). After this change, all quantities are recomputed and the process is repeated until a prespecified stopping criterion is met.Footnote 22

Finally, note that a Markovian SIS model with outside infectionsFootnote 23 can be obtained as a special case by choosing exponentially distributed infection and recovery times and assuming independence between all waiting times.

3.2.3 Loss models

Given the underlying network, and the epidemic spread process X on it, the third and final ingredient of a cyber risk network model is given by a suitable loss model \(Y_{i,j}\) for each node \(i=1,\ldots ,N\), where j describes the number of loss events. In the existing literature, loss models are kept rather simple as the focus lies on modeling the cyber-epidemic spread. We give two examples:

-

1.

In Fahrenwaldt et al. [47], cyber attacks are launched in a two-step procedure: First, using a random process, times of attacks on the entire network (loss events) \(t_1, t_2,\ldots \) are generated. Second, for each node i, a possible random loss \(L_{i,j}\) is modeled, where j describes the index of the corresponding attack time. The loss, however, only materializes if node i is infected at the attack time. This is captured by the loss model

$$\begin{aligned} Y_{i,j} = L_{i,j} \cdot \mathbbm {1}_{X_i(t_j)=I}, \quad i=1,\ldots N, \quad j =1,2, \ldots . \end{aligned}$$ -

2.

In Xu and Hua [114], the loss model \(Y_{i,j}\) is given by

$$\begin{aligned} Y_{i,j} = \eta _i (D_{i,j}) + C_i(T^{recov}_{i,j}), \quad i=1,\ldots N, \quad j = 1,2,\ldots \end{aligned}$$with a legal cost function \(\eta _i\), the number \(D_{i,j}\) of data damaged in the infection j, and a cost function \(C_i\) depending on the recovery time \(T^{recov}_{i,j}\) of node i for infection event j. Here, the recovery time \(T^{recov}_{i,j}\) for each event j is obtained from the infection dynamics, while the data loss sizes \(D_{i,j}\) are assumed to follow a beta distribution.

3.2.4 On calibration and application

Epidemic network models in the cyber insurance literature mostly focus on a general assessment of the underlying structure of systemic cyber risks: aspects of risk contagion and propagation are characterized in a qualitative sense. For example, Fahrenwaldt et al. [47] study the effect of homogeneous, star-shaped, and clustered topologies on the resulting overall insurance losses in regular networks, demonstrating the strong impact of the network topology on risk propagation. Further, epidemic network models could also be applied to identify critical initial infection locations or critical network links that may augment cyber losses. The models are thus particularly useful for counterfactual simulations and have not yet been calibrated to real-world data.

More applications of epidemic network models to cyber risk contagion can be found in the engineering literature. However, these works do not study risk emergence on a global level. Instead, they analyze cyber risks which are building from the microstructure of interconnected IT devices in local environments. For example, Powell [97] focuses on local IT authentication procedures, where the corresponding vectors of lateral movements within a network can be interpreted as edges of a directed mathematical graph. Possible attack vectors are evaluated using classical metrics from network theory and epidemic spreading models of SIR type. More technical and IT-related aspects of cyber security issues in smart grids, i.e., networked power systems for energy production, distribution, and consumption, are surveyed and discussed in Wang and Lu [108].

However, for the quantitative assessment of systemic cyber risk from a regulatory or actuarial perspective, contagion among different companies needs to be studied on a global scale. A major challenge for accurate modeling is the estimation of the exact network structure and the epidemic parameters of past and future incidents—particularly due to data limitations and the speed of technological evolution. In Appendix E, we provide a brief overview and classification of existing estimation approaches for epidemic network models that are not necessarily related to cyber; in our view, however, it is conceivable that such approaches could also be implemented and further developed in a cyber context in future cyber risk research.

To overcome the estimation challenge, top-down approaches have been proposed in the literature. In Hillairet and Lopez [63], the impact of massive global-scale cyber-incidents, like the WannaCry scenario, on insurance losses and assistance services is determined. While network contagion is implicitly considered, it is not modeled within an actual network framework; instead, the authors choose the original population-based SIR model of Kermack and McKendrick [68] which describes deterministic dynamics of the total numbers of susceptible, infected, and recovered individuals within the global population of IT devices. The corresponding ODE system is given by

with constant global population size \(N = S(t) + I(t) + R(t)\). Parameters N, \(\tau \), and \(\gamma \) are estimated from data of the WannaCry cyber incident.

Given this global spread, the focus of the paper lies on the stochastic evolution of the insurer’s local portfolio consisting of \(n<<N\) policyholders and their corresponding losses. The influence of the global cyber epidemic on the local portfolio is captured by the hazard rate \(\lambda _{T_i^{infec}}\) of the policyholders’ infection times \(T_i^{infec}\):

i.e., the local hazard rates are assumed to be proportional to the number of infected individuals in the global population.

Most recently, this model has further been extended by replacing the homogeneous global population model with a network scenario of interconnected industry sectors, see Hillairet et al. [64]. The underlying directed and weighted network structure is derived from OECD data that measures the economic flow between different industries, and this data is interpreted as a reasonable estimate of the digital dependence between these sectors. Contagion between sectors is modeled using a deterministic multi-group SIR model for the total numbers of susceptible, infected, and recovered companies in the sectors. Due to the scarcity of data currently available, such top-down approaches present promising avenues for risk management and actuarial modeling.

Additionally, future research should analyze the implementation of more realistic loss models, that, e.g., contain different types of cyber events and capture their characteristic severity distributions (see also the discussion on classical frequency-severity approaches in Sect. 2.1.2). This would further strengthen the applicability of network models in practice.

3.3 Game-theoretic models and strategic interaction effects

In addition to contagion due to the interconnectedness of entities in cyber networks, potentially different objectives of the actors and their strategic interaction constitute a key characteristic of systemic cyber risk. The risk exposure of individuals is often interdependent, since it is influenced by the behavior of other actors. Game theory provides a suitable framework to study this dimension in the cyber ecosystem.

In the first part of this section, we briefly review and provide a short mathematical introduction to game theoretic approaches applied to study cyber risk and cyber insurance (Sect. 3.3.1). For an exhaustive review of the corresponding literature, we refer to the surveys Böhme and Schartz [19], Böhme et al. [12], and Marotta et al. [81]. We will adopt the notation from Marotta et al. [81]. Sect. 3.3.2 evaluates the considered models.

3.3.1 Game theoretic modeling approaches

The majority of game theoretic contributions focuses on self protection of interdependent actors in the presence or the absence of cyber insurance. A key question is whether and under which conditions cyber insurance provides incentives for self protection and improves global IT security. In this section, we presentFootnote 24 the main ideas and characteristics of such models.

Three Different Types of Actors in the Game

We consider three types of strategic players with different objectives: potential buyers of insurance (for simplicity, called agents), insurance companies, and the regulator.

-

1.

Agents are the potential cyber insurance policyholders. To capture interdependence, most models assume that agents form a network. Agent i aims to maximize her expected utility

$$\begin{aligned} \max {\mathbb {E}}[U_i(W_i)], \end{aligned}$$where

-

\(U_i\) denotes the utility function of agent i. Various types of utility functions are considered in the literature; most of them satisfy the classical von-Neumann-Morgenstern axioms. While some papers, such as Naghizadeh and Liu [90], Pal [93], and Pal et al. [94], allow for heterogeneous preferences, the majority of models assumes homogeneous preferences, i.e., \(U_i=U\) across all agents.

-

\(W_i\) is the financial position of agent i at the end of the insurance period. The value \(W_i\) depends on whether the agent has bought an insurance contract or not, on her investment \(C_i\) in cyber security, and on potential losses \(L_i\) in case the agent is affected by a cyber attack.

The agent’s self protection level \(x_i\) is a crucial model component when studying interdependence.Footnote 25 Most of the existing literature falls into either of the following two distinct categories: Some assume that only two security states are possible, secured or not, with the corresponding constant cost C or 0. Others propose a continuous scale of security levels, e.g., \(x_i\in [0,1]\). The value of \(x_i\) affects

-

the cost of self protection \(C_i\):

For a continuous spectrum of security levels, i.e., \(x_i\in [0,1]\), \(C_i=C(x_i)\) is typically assumed to be an increasing convex function of \(x_i\), reflecting that user costs rapidly increase when improving security.

-

agent i’s probability of becoming infected \(p_i := {\mathbb {P}}(I_i=1)\):

Obviously, this probability depends on the individual security level \(x_i\) of the agent i, but—due to interdependence—it may also be influenced by the individual security levels of other network participants.

Within this framework, agent i’s expected utility can be computed

-

(a)

without insurance:

$$\begin{aligned} {\mathbb {E}}[U_i(W_i)] = (1-p_i)\cdot U_i(W_i^0-C_i) + p_i\cdot U_i(W_i^0-L_i-C_i) \end{aligned}$$ -

(b)

with insurance:

$$\begin{aligned} {\mathbb {E}}[U_i(W_i)] = (1-p_i)\cdot U_i(W_i^0-\pi _i-C_i) + p_i\cdot U_i(W_i^0-L_i-C_i-\pi _i+\hat{L}_i) \end{aligned}$$

where

-

\(W_i^0\) denotes the initial wealth of agent i.

-

\(\pi _i\) is the insurance premium of agent i set by the insurer. This premium depends on the type of insurance market; we will discuss different models below.

-

\(L_i\) is the potential loss of agent i that is governed by a binary distribution: only two possible scenarios are considered. Either the agent experiences a cyber attack with a fixed loss size, or she is not attacked which corresponds to no loss. This particular setting excludes the possibility of different types of cyber attacks. Multiple attacks are also not considered.Footnote 26 The majority of game theoretic models relies on the assumption of constant homogeneous losses for all agents, i.e., \(L_i\equiv L\).

-

\(\hat{L}_i\) is the cover in case of loss which is specified in the insurance contract. Most papers assume full coverage, i.e., \(\hat{L}_i=L_i\), but some consider alternatively partial coverage, e.g., in order to mitigate the impact of information asymmetries, cf. Mazzoccoli and Naldi [84], Pal [93], Pal et al. [94].

-

-

2.

Insurance Companies: The insurer sets the cyber insurance premiums and specifies the insurance cover \(\hat{L}_i\). Insurance premiums depend on the market structure:

-

Competitive market: This is the prevailing model in the literature. The profits of the insurers are zero in this case; customers pay fair premiums. Competitive markets are a boundary case that almost surely leads to the insurer’s ruin in the long run.

-

Monopolistic market / Representative insurer: Another extreme is a market with only one insurance company. In these models, the impact of a monopoly can be studied. An alternative consists in studying objective functions that are different from the insurer’s profit. This situation is mostly studied in the context of regulation: The insurer represents a regulatory authority and is not aiming for profit maximization, but focuses on the wealth distribution in order to incentivize a certain standard of IT protection.Footnote 27

-

Immature market/Oligopoly: Instead of a monopoly, imperfect competition is studied with multiple insurers that may earn profits. The increments between the fair price and the insurance premium is determined by the markets structure.Footnote 28

-

-

3.

Regulator: Market inefficiencies and a lack of cyber security may be mitigated by regulatory policies. Regulatory instruments include mandatory insurance, fines and rebates, liability for contagion, etc. The choice of policies and their impact can be studiedFootnote 29 by introducing a third party, the regulator. The objective of the regulator is to maximize a social welfare function. This could, for example, be chosen as the sum of the expected utilities of the agents

$$\begin{aligned} \sum _i {\mathbb {E}}[U_i(W_i)]. \end{aligned}$$

Interdependent Self Protection in IT Networks

The strategic interaction of the three types of players introduced above is modeled as a game. The agents form an interconnected network and optimize their expected utility. Their individual security level and the amount of cyber insurance coverage serve as their controls. The insurance companies are provider of risk management solutions. In some models, a regulator is included as a third party with the aim to improve welfare, e.g., by implementing standards of protection in cyber systems.

The network topologies are, typically, quite stylized to guarantee tractability. For example, two-agent models are considered in Ogut et al. [92]. Most papers investigate complete graphs, e.g., Ogut et al. [92], Schwartz and Sastry [100] and Pal et al. [94]. Bolot and Lelarge [15] and Yang and Lui [115], in contrast, investigate networks with degree heterogeneity, but restrict their analysis to Erdős-Rényi random graphs.

Agents are interdependent in the network, since the infection probability \(p_i\) depends on the local security level \(x_i\) and levels of the other nodes \(y_{i} := (x_1,\ldots , x_{i-1}, x_{i+1}, \ldots , x_N)\) (or at least of i’s neighbors). In some cases, \(p_i\) is assumed to depend on an overall network security level as well.Footnote 30 However, in contrast to the models from Sect. 3.2, attacks do not result from a dynamic contagion process; instead, the infection is assumed to be static and the values \(p_i\) are derived from ad hoc schemes. The most common oneFootnote 31 assumes a continuous spectrum of security levels and computes \(p_i\) as the complementary probability of the case that neither a direct nor an indirect attack occurs:

where

-

\(p_i^{dir} = \psi _i(x_i)\) denotes the probability of direct infection of i through threats from outside the network. It is interpreted as a function of the individual security level \(x_i\).

-

\(p_i^{cont} = 1-\prod _{j\ne i} (1-h_{i,j}\psi _j(x_j))\) is the probability for node i to become infected through contagion. The probability for i to be infected via node j is given by \(h_{i,j}\), i.e., \(h_{i,j}\ne 0\) only if i and j are adjacent. This is where the underlying network topology comes into play.

In the absence of information asymmetries, the game between agents and the insurer(s) involves three perspectives:Footnote 32

-

1.

A legal framework is set by the regulator (if a regulator is present).

-

2.

Agents specify their levels of self protection and insurance protection and select from the available contract types to maximize their expected profits.

-

3.

Insurance companies compute the corresponding contract details, i.e., premiums \(\pi _i\) and indemnities \(\hat{L}_i\). In absence of information asymmetries between agents and the insurer(s), the protection levels of policyholders can be observed by the insurer and are reflected by the contract.

The model may be augmented to incorporate information asymmetries:

-

Moral hazard: A dishonest policyholder may behave in a way that increases the risk, if the insurer cannot properly monitor the policyholder’s behavior. In the game, this is represented by the possibility for agents to modify their self protectionFootnote 33 levels.

-

Adverse selection: Agents with larger risks have a higher demand for insurance than safer ones. The degree of the policyholders’ risk tolerances cannot be observed by the insurer. The self protection levels of policyholders is not precisely known by the insurer when the contract details are computed.

In most papers, cyber insurance is not associated with additional incentives to enhance self protection. In contrast, agents may prefer to buy insurance instead of investments in self protection, i.e., from a welfare perspective, they underinvest in security. These observations may be interpreted as an indication that regulatory interventions are necessary, such as fines and rebates, mandatory cyber insurance, or minimal investment levels.Footnote 34

3.3.2 On calibration and application

Many questions remain to be answered in future research, since the existing game theoretic models of cyber insurance and cyber security are oversimplified—thus, not yet fully applicable to real-world data:

-

Simplified network topologies: In the vast majority of the discussed literature, networks are assumed to be homogeneous. However, agents are typically heterogeneous in reality which substantially alters the cyber ecosystem. Network contagion and cyber loss accumulation are highly sensitive to the topological network arrangement; for example, important determinants are the presence (or the absence) of central hub nodes or clustering effects, see, e.g., Fahrenwaldt et al. [47]. For appropriate risk measurement and management these aspects need to be taken into account explicitly.

-

Static contagion: A key feature of cyber risk in networks is the systemic amplification of disturbances. From the insurer’s perspective, the contagion dynamics will clearly influence tail risks; an example are catastrophic incidents that affect a large fraction of its portfolio. Such events may be critical in terms of the insurer’s solvency. An understanding of cyber losses and an evaluation of countermeasures requires dynamic models of contagion processes.

-

Constant losses: In all considered game-theoretic models, the agent’s losses are assumed to be constant, i.e., modeled as binary random variables. However, in reality we observe that the severity of instances varies substantially due to the heterogeneity of cyber events, ranging from mild losses (e.g. malfunctioning of email accounts) to very large losses (e.g. attacks on production facilities, or breakdowns of systems).

Cyber insurance and instruments to control cyber risk depend on the structures of networks, the dynamics of epidemic spread processes, as well as loss models—and vice versa. These feedback loops need to be properly incorporated in future research. Key ingredients of systemic cyber risks—the interconnectedness captured by epidemic network models, and strategic interaction described in game-theoretic models—must be combined.

4 Pricing cyber insurance

Cyber risk comprises both non-systemic risk, further subdivided into idiosyncratic and systematic cyber risk, cf. Sect. 2, and systemic risk, cf. Sect. 3. Classical actuarial pricing, however, relies on the principle of pooling, and it is thus applicable for idiosyncratic cyber risks only. For systematic and systemic cyber risk, the appropriate pricing of insurance contracts requires more sophisticated concepts and techniques. A discussion of current industry practice for pricing cyber risks can be found in Romanosky et al. [99]. However, the described approaches do not yet cover the full complexity of cyber risk such that further (scientific) efforts are necessary. In this section, we explain and suggest suitable pricing techniquesFootnote 35 tailored to the three different components of cyber risk.

4.1 Pricing of non-systemic cyber risks

In non-life insurance, contracts are usually signed for 1 year. At renewal time, the insurer may adjust premium charges as well as terms and conditions, while the policyholder can decide whether or not to continue the contract. Premium calculation thus typically refers to loss distributions on a one-year time horizon. In this section, we adopt this market convention and consider premiums payable annually in advance.Footnote 36

In this chapter, we are concerned with a general pricing approach, and we do not restrict ourselves to frequency-severity models. We do, however, adopt some of the notations presented earlier. As introduced in Sect. 2, losses and associated premiums are considered in the granularity of cyber risk categories \(c\in \{1,\ldots ,C\}\) and homogeneous groups \(k\in \{1,\ldots , K\}\) of policyholders. Each pair \(m=(c,k)\) is called a cyber risk module. In terms of a modular system, the premium per risk category serves as a component for the overall premium. Homogeneous groups—specified for example in terms of covariates—correspond to tariff cells, i.e., any policyholder in group k should pay the same premium \(\pi ^{m,\text {non-sys}}\) per risk category c. We denote by \(n_k\) the number of policyholders in group k and assume that volumes and distributions of risks within a group are identical. Although adopting the previously introduced notation, we do not necessarily consider a frequency-severity approach, but discuss pricing strategies that may also be applied in a more general framework. The methodology is inspired by Wüthrich et al. [113] and Knispel et al. [74].

To decouple the pricing of idiosyncratic and systematic cyber losses in the absence of systemic risk, one possible approach is to construct a decomposition of the total non-systemic claims amount \({\mathcal {S}}_1^{m,\text {non-sys}}\) on a 1-year time horizon. This decomposition takes the form

where the total systematic claims equal \({\mathcal {S}}_1^{m, \text {systematic}}\) and the term \({\mathcal {S}}_1^{m, \text {idio}}\) denotes the total idiosyncratic fluctuations around the systematic claims. We explain below how a premium can be computed per risk group. Finally, a smoothing algorithm might be helpful in order to avoid structural breaks between the premiums of risk groups with similar covariates. The terms \({\mathcal {S}}_1^{m, \text {idio}}\) and \({\mathcal {S}}_1^{m, \text {systematic}}\) are unique only up to a constant that may be subtracted of one of the terms and added to the other.

In order to obtain a decomposition (7), we consider the \(\sigma \)-algebra \({\mathcal {F}}\) that encodes the systematic information. This is, for example, the information that is generated by observing the underlying exogenous stochastic factors. The full information at the time horizon of one year is jointly generated by the \(\sigma \)-algebra \({\mathcal {F}}\) and idiosyncratic fluctuations, also called technical risks, sometimes explicitly encoded by another \(\sigma \)-algebra \({\mathcal {T}}\). A decomposition (7) can be obtained by setting