Abstract

This study investigates an optimal consumption–investment problem in which the unobserved stock trend is modulated by a hidden Markov chain that represents different economic regimes. In the classic approach, the hidden state is estimated using historical asset prices, but recent technological advances now enable investors to consider alternative data in their decision-making. These data, such as social media commentary, expert opinions, COVID-19 pandemic data and GPS data, come from sources other than standard market data sources but are useful for predicting stock trends. We develop a novel duality theory for this problem and consider a jump-diffusion process for alternative data series. This theory helps investors identify “useful” alternative data for dynamic decision-making by providing conditions for the filter equation that enable the use of a control approach based on the dynamic programming principle. We apply our theory to provide a unique smooth solution for an agent with constant relative risk aversion once the distributions of the signals generated from alternative data satisfy a bounded likelihood ratio condition. In doing so, we obtain an explicit consumption–investment strategy that takes advantage of different types of alternative data that have not been addressed in the literature.

1 Introduction

The optimal consumption–investment problem is a classic problem of modern finance. An investor’s objective is to maximise the expected utility of consumption and terminal wealth over a finite horizon. By formulating the problem in a continuous-time framework, Merton’s pioneering work [35] became the cornerstone for the development of a stochastic optimal control theory to solve this type of problem. Many generalisations of the classic models have since been developed to more accurately model asset price dynamics. For example, Elliott and van der Hoek [20], Chen et al. [12], Sotomayor and Cadenillas [44], Yin and Zhou [50] and Zhou and Yin [52] study a regime-switching model in which the model coefficients are assumed to be modulated by a Markov chain. The different states of the chain are interpreted as different economic states or market modes. Bäuerle and Rieder [5, 6, 39], Honda [25] and Sass et al. [41] argue that the states of the Markov chain are not directly observable so that investors must learn and estimate them from observation, leading to partial information formulations. The literature refers to this model as a hidden Markov model.

In the past, investors have only been able to learn about the hidden state of the economy from easily accessible historical asset prices. However, investors are now actively acquiring alternative data, using modern technology to supplement their decision-making. Social media commentary, internet search results, COVID-19 pandemic data and GPS data are examples of such alternative data, that is, data that come from sources other than standard market data sources but are useful for predicting economic trends. Recent studies such as Frey et al. [24], Callegaro et al. [9], Fouque et al. [23] and Sass et al. [41] support the use of aggregate consumption and macroeconomic indicators and expert opinions as additional sources of observation. The effective use of alternative data can improve estimation accuracy and the performance of risk-sensitive benchmarked asset management; see Davis and Lleo [17, 18].

However, incorporating alternative data into dynamic decision-making creates new technical difficulties because of the additional randomness of these data. The aforementioned studies apply stochastic control techniques to an equivalent primal problem, the so-called separated problem, which is deduced from the original primal problem via filtering. This solution procedure is similar to that of a stochastic control problem with partial information, but the additional randomness complicates the mathematical analysis of the solvability of the problem and the eligibility of the solution procedure. Indeed, studies rarely discuss the conditions under which alternative data and their corresponding filters allow a stochastic control framework, such as the dynamic programming principle (DPP), to be applied to the underlying problem. One exception is the study of Frey et al. [24], which requires the density functions of the signals generated from alternative data to be continuously differentiable with common bounded support and to be uniformly bounded from below by a positive constant. This obviously excludes Gaussian signals and the most commonly used distributions. According to this criterion, [24] prove the DPP and show that there exists a unique value function for power utility. In other words, the relevance of different types of alternative data for dynamic decisions remains unclear. The lack of rigorous results in a general setting limits our understanding of optimal policies and the use of alternative data from various sources.

To fill this theoretical gap, we propose a new methodology based on duality theory that can be applied to general types of alternative data in the context of consumption–investment problems with a more general class of utility functions, in particular power utility functions with a negative exponent. We provide new and concrete results for specific problems that supplement those in the literature. For example, we identify in Condition 2.1 a bounded likelihood ratio (BLR) condition for alternative data signals in a bull–bear economy for an agent with power utility. That condition allows us to check the eligibility of signals from a wide range of distributions, such as Gaussian, exponential family and Gaussian mixture distributions. We provide three examples in Sect. 2.6.

Following the literature, we postulate the price of risky assets as a geometric Brownian motion in which the drift is modulated by a hidden economic state, which also affects alternative data. Inspired by Davis and Lleo [19], the alternative data are sampled from a regime-switching jump-diffusion process with parameters depending on the hidden state. This consideration aims to capture the realistic nature of alternative data sources, such as ecosystems, electricity prices, manufacturing and production forecasts; see for example Sethi and Zhang [42], Xi [46], Xi and Zhu [48], Yin and Zhu [51], Zhu et al. [53], Weron et al. [45] and the references therein. It also covers examples studied in the literature (including Callegaro et al. [9, Eq. (2.2) and Example 3.8] and Frey et al. [24, Sect. 2]). When alternative data are incorporated into hidden economic state estimations for dynamic decision-making, our problem formulation involves both a market and alternative data filtering scheme, so that appropriate regularity is required for the alternative data generation process to ensure the use of the DPP based on the adopted filter. Such use of alternative data is a clear difference from problems formulated using conventional jump-diffusion factor processes.

For the above general setup, our main theorem (Theorem 3.2) establishes an equivalence between the primal partial information problem and the dual problem, the latter simply involving a minimisation over a set of equivalent local martingale measures. To the best of our knowledge, our study is the first to extend the use of the duality approach from a partial information framework using a single observation process (such as Karatzas and Zhao [28], Lakner [33, 34], Pham and Quenez [37], Putschögl and Sass [38] and Sass and Haussmann [40]) to mixed-type observations using alternative data. The aforementioned studies characterise their dual formulation based on a single equivalent martingale measure, whereas we use non-unique equivalent martingale measures because of the additional randomness of alternative data. Once the dual problem is solved, the solution of the primal problem is obtained using convex duality. We find that the dual problem, which is a stochastic control problem but differs greatly from the primal problem, is more tractable. This enables us to use the DPP for the dual stochastic control problem under a general abstract condition for the filter equation. In terms of application, this condition describes the type of alternative data that can be considered “useful” for dynamic decision-making with the DPP. Specifically, the dual problem can be read at the analytical level of the Hamilton–Jacobi–Bellman (HJB) equation, thus providing a dual equation and improving our understanding of the optimal strategy. To demonstrate the entire solution procedure, we apply our general methodology to a concrete case study and explicitly derive a feedback optimal consumption–investment strategy by analysing the dual equation in Sect. 2. We prove a verification theorem (Theorem 2.7) which shows that the dual value function is the unique smooth solution of the dual equation. 
These results are obtained under a mild condition (Condition 2.1) for alternative data signals, including commonly seen examples that have not been addressed previously.

This study provides technical contributions to overcome the mathematical challenges to obtain these new results. In the framework of the aforementioned case study, the filter process is a jump-diffusion process with Lévy-type jumps, that is, the intensity of the jump measure depends on the filter process itself. This subtle feature creates analytical challenges in establishing the verification theorem. One may expect to derive the dual equation via the DPP first heuristically and then, based on the regularity of the solution to the dual equation (i.e., existence, uniqueness and smoothness), to verify the desired dual value function by formally applying Itô’s formula and a martingale argument. However, rigorously proving this regularity is difficult because the dual equation is a degenerate partial integro-differential equation (PIDE) with embedded optimisation. To overcome these difficulties, we first show that the dual value function is a bounded Lipschitz-continuous function and therefore a \(C^{1}\)-function of its arguments in Proposition 4.5. The result is technically innovative, as we introduce an auxiliary process and use Radon–Nikodým derivatives to address the Lévy-type jumps of the filter process. As an immediate consequence, the filter process turns out to be a Feller process (Proposition 2.6), indicating that the DPP is valid and the solution procedure is feasible. We then show that the dual equation has a unique smooth (\(C^{1,2}\)) solution. The method is based on the link between viscosity solutions and classical solutions for PIDEs, following Pham [36], Davis et al. [15] and Davis and Lleo [16]. However, our context differs from theirs in that ours contains an optimisation embedded in the nonlocal integro-differential operator of the PIDE. This distinct feature leads to both nonlinearity and degeneracy in the state space boundaries, so that we need to address both difficulties simultaneously. 
Finally, we obtain explicit formulas for the optimal strategies and wealth processes in terms of functions of the solution to the dual equation in Proposition 2.8.

We believe that an extensive analysis of such a well-known case study is a valuable contribution to the literature. Although some studies in the stochastic control literature examine a controlled jump-diffusion model (such as Barles and Imbert [4], Davis and Lleo [16], Pham [36] and Seydel [43]), most jump mechanisms are exogenous and do not depend on the state process. To the best of our knowledge, the only related result presented in Frey et al. [24, Sect. 4] proposes a distributional transformation and reconstruction of the filter process as an exogenous type of jump, so that the techniques used in the literature discussed above can be applied. Their approach imposes restrictive conditions on alternative data and a predominant constraint on trading strategies to obtain the necessary technical estimates. In contrast, we derive technical estimates to develop empirically testable conditions that are consistent with the abstract general condition of the duality approach, and then we solve the HJB equations for the dual problem in a more general setting.

The remainder of this paper is organised as follows. For simplicity and better illustration, Sect. 2 begins with a concrete optimal consumption–investment problem in a bull–bear stock market, where expert opinions are considered alternative data. We detail the solution procedure for solving such a stochastic optimisation problem and provide an explicit solution to the constant relative risk aversion (CRRA) utility function. This enables us to articulate the main mathematical challenges of the solution procedure and the advantage of the dual formulation. By considering a general regime-switching jump-diffusion model for alternative data series and a general set of utility functions, Sect. 3 develops the duality approach under partial information using alternative data. Specifically, we prove an equivalence between the primal and dual problems and present a condition for the filter process that ensures the validity of the DPP in the dual problem. Section 4 presents the proof of the verification theorem (Theorem 2.7) used in Sect. 2 to show that the dual value function is the unique classical solution of the HJB equation. Section 5 concludes the paper.

2 Expert opinions as alternative data

Before developing our duality theory with alternative data in a general setting, we specifically consider expert opinions and power utilities to present the solution procedure of our duality approach. This specification allows us to make the dual formulation and regularity of the approach transparent without an overwhelming notational burden. We also show that the solution procedure involves a stochastic optimal control problem in the dual problem and produces optimal solutions at the analytical level of the HJB equation in the dual problem. Section 3 then presents our analysis in a general setting.

2.1 A hidden Markov bull–bear financial market

For a fixed date \(T>0\), which represents the fixed terminal time or investment horizon, we consider a filtered probability space \((\Omega ,\mathcal{F},\mathbb{F},\mathbb{P})\), where ℙ denotes the physical measure and \(\mathbb{F}: = (\mathcal{F}_{t})_{t\in [0,T]}\) the full information filtration, satisfying the usual conditions, i.e., \(\mathbb{F}\) is right-continuous and complete. For a generic process \(G\), we denote by \(\mathbb{F}^{G} = (\mathcal{F}_{t}^{G})_{t\in [0,T]}\) the natural filtration generated by \(G\), made right-continuous and augmented with ℙ-nullsets.

We consider a two-regime hidden Markov financial market model in which the transitions of the “true” regime are described by a two-state continuous-time hidden Markov chain \(\alpha =(\alpha _{t})_{t\in [0,T]}\) valued in \(\mathcal{S}:=\{1,2\}\) on \((\Omega ,\mathcal{F},\mathbb{F},\mathbb{P})\). This model considers bull and bear markets, with \(\alpha _{t}=1\) indicating the “bull market” and \(\alpha _{t}=2\) the “bear market” state at time \(t\). The Markov chain is characterised by a generator \(\mathbf{A}\) of the form

For times \(t\in [0,T]\), we describe the financial market model as follows:

(i) The risk-free asset is given by \(S^{0}_{t} = e^{rt}\) with a risk-free interest rate \(r > 0\).

(ii) The risky asset \(S=(S_{t})_{t\in [0,T]}\) satisfies the stochastic differential equation (SDE) given by

\[ dS_{t} = \mu (\alpha _{t}) S_{t}\,dt + \sigma S_{t}\,dW_{t}, \tag{2.1} \]

where \((W_{t})_{t\in [0,T]}\) is a standard \(\mathbb{F}\)-Brownian motion on \((\Omega ,\mathcal{F},\mathbb{P})\) independent of \(\alpha \). The volatility of the risky asset \(\sigma \) is a positive constant, and its drift function \(\mu \) satisfies \(\mu (\alpha _{t}) \in \{\mu _{1},\mu _{2}\}\), where \(\mu _{1}>\mu _{2}\) are constant drifts under bull and bear markets, respectively.

Unlike the Markov-modulated regime-switching model, which treats \(\alpha \) as observable, we assume that the representative agent does not observe \(\alpha \) directly. The agent’s observation process has two components: the asset price process \(S\) and an alternative data process in the form of expert opinions. Specifically, the agent receives noisy signals about the current state of \(\alpha \) at discrete time points \(T_{k}\). The aggregated alternative data process \(\eta \) is a standard marked point process that depends on the Markov chain. It is described by the double sequence \((T_{k}, Z_{k} )_{k\ge 0}\), where \(T_{k}\) is the arrival time of the \(k\)-th signal and the random variable \(Z_{k}\) is its size; it satisfies

\[ \eta _{t} = \sum _{k:\,T_{k}\le t} Z_{k}. \tag{2.2} \]

We assume that the intensity of the signal arrivals is given by a constant \(\lambda \). In other words, the signal arrival time is independent of the hidden state. The signal \(Z_{k}\) takes values in a set \(\mathcal{Z} \subseteq \mathbb{R}\), and given \(\alpha _{T_{k}} = i\in \{1,2\}\), the distribution of \(Z_{k}\) is absolutely continuous with the Lebesgue density \(f_{i}(z)\). Equivalently to (2.2), we have

\[ \eta _{t} = \int _{0}^{t}\int _{\mathcal{Z}} z\,N(ds,dz), \qquad N(dt,dz) := \sum _{k} \delta _{(T_{k}, \Delta \eta _{T_{k}})}(dt,dz), \tag{2.3} \]

where \(\delta _{(T_{k}, \Delta \eta _{T_{k}})}(\,\cdot \,, \,\cdot \,)\) denotes the Dirac measure at the point \((T_{k}, \Delta \eta _{T_{k}}) \in [0,T]\times \mathcal{Z}\); so \(N\) is an integer-valued random measure on \([0,T]\times \mathcal{Z}\), where \((\mathcal{Z}, \mathcal{B}(\mathcal{Z}))\) is a given Borel space. Specifically, the \(\mathbb{F}\)-dual predictable projection (see Definition D.2 in Appendix D) of the random measure \(N\) is given by \(\lambda f_{\alpha _{t-}}(z)\,dz\,dt\).

In other words, the information available to the agent is given by the observation filtration \(\mathbb{H} = (\mathcal{H}_{t})_{t\in [0,T]}\) with \(\mathbb{H}: = \mathbb{F}^{S} \vee \mathbb{F}^{\eta} \subseteq \mathbb{F}\). This is a partial information setting because (2.1)–(2.3) constitute a filtering system in which \(\alpha \) and \((S, \eta )\) play the roles of state and observation, respectively.
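To make the observation structure concrete, the following is a minimal simulation sketch of the bull–bear market with expert opinions on a discrete time grid. It is only an illustration under stated assumptions: the switching rates `leave_rates`, the Gaussian signal densities and all numerical values are hypothetical choices, not taken from the paper, and regime switches and signal arrivals are approximated by Bernoulli draws with probability rate times `dt` per step.

```python
import numpy as np


def simulate_market(T=1.0, n_steps=2000, mu=(0.15, -0.05), sigma=0.2,
                    leave_rates=(1.0, 2.0), lam=10.0,
                    signal_mean=(0.5, -0.5), signal_std=(1.0, 0.9),
                    s0=1.0, seed=0):
    """Simulate the hidden regime, the price and the expert-opinion signals.

    All parameter values are illustrative assumptions.
    leave_rates[i] is the (hypothetical) rate of leaving state i+1.
    """
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    alpha = np.empty(n_steps + 1, dtype=int)   # hidden state in {1, 2}
    S = np.empty(n_steps + 1)                  # risky asset price
    alpha[0], S[0] = 1, s0
    times, marks = [], []                      # signal times T_k and sizes Z_k
    for k in range(n_steps):
        i = alpha[k] - 1
        # Log-normal price step with the drift frozen at the current regime.
        dW = rng.normal(0.0, np.sqrt(dt))
        S[k + 1] = S[k] * np.exp((mu[i] - 0.5 * sigma**2) * dt + sigma * dW)
        # Regime switch with probability rate * dt (first-order approximation).
        switch = rng.random() < leave_rates[i] * dt
        alpha[k + 1] = 3 - alpha[k] if switch else alpha[k]
        # Signal arrival with state-independent intensity lam; the signal
        # size is drawn from the density of the current hidden state.
        if rng.random() < lam * dt:
            j = alpha[k + 1] - 1
            times.append((k + 1) * dt)
            marks.append(rng.normal(signal_mean[j], signal_std[j]))
    eta = np.cumsum(marks)                     # aggregated data process at T_k
    return alpha, S, np.array(times), np.array(marks), eta
```

In this sketch the agent would see only `S`, `times` and `marks`; the array `alpha` plays the role of the unobserved state.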

2.2 The optimal consumption–investment problem

Let \(\vartheta _{t}\) be the net amount of capital allocated to the risky asset and \(c_{t}\) the rate at which capital is consumed at time \(t\). The agent’s wealth process \(V^{v,\vartheta ,c}\) corresponding to the choice \((\vartheta ,c)\) and initial wealth \(v \in \mathbb{R}_{++}:=(0,\infty )\) follows

\[ dV_{t} = \big(rV_{t} + \vartheta _{t}(\mu (\alpha _{t})-r) - c_{t}\big)\,dt + \vartheta _{t}\sigma \,dW_{t}, \qquad V_{0}=v. \tag{2.4} \]

Formally, we define the agent’s choices as follows:

(h1) \(\vartheta =(\vartheta _{t})_{t \in [0, T]}\) is an investment process if it is a real-valued ℍ-predictable process with trajectories that are locally square-integrable on \([0,T)\).

(h2) \(c=(c_{t})_{t \in [0, T]}\) is a consumption process if it is a real-valued nonnegative ℍ-predictable process with trajectories that are locally integrable on \([0,T)\).

As a class of admissible controls, we consider pairs of processes \((\vartheta ,c)\) satisfying (h1) and (h2) and such that the corresponding wealth process \(V\) is nonnegative. The quantity to be maximised in our optimisation problem is

where \(U_{i}:(0,\infty )\rightarrow \mathbb{R}\), \(i=1,2\), is a utility function of the form

\[ U_{i}(c) = \frac{c^{\kappa}}{\kappa}, \qquad \kappa <1,\ \kappa \neq 0. \tag{2.6} \]

Note that the pair \((\vartheta ,c)\) must be predictable for the available information flow ℍ. Therefore, the stochastic control problem is under a partial information framework that contains more available information than that of classic partial information problems due to alternative data. These alternative data improve the estimation of the state \(\alpha \) of the economy if they contain useful information. The estimation procedure is known as filtering, and we need to study the conditions that make expert opinions useful under a filtering scheme.
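As a small illustration of how an expert opinion enters the estimate: because the arrival intensity \(\lambda \) does not depend on the hidden state, a signal \(z\) arriving when the conditional bull-market probability is \(p\) updates it by Bayes' rule to \(p f_{1}(z)/(p f_{1}(z)+(1-p)f_{2}(z))\). The sketch below implements only this jump update; the Gaussian densities and their parameters are illustrative assumptions, and the evolution between signals (driven by asset prices and regime switching) is not modelled here.

```python
import math


def gaussian_pdf(z, mean, std):
    """Density of N(mean, std^2) at z."""
    return math.exp(-0.5 * ((z - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def bayes_update(p, z, f1, f2):
    """Posterior probability of regime 1 after observing a signal z.

    p  : prior probability of regime 1 just before the signal
    f1 : signal density in regime 1 (bull), f2 : density in regime 2 (bear)
    """
    num = p * f1(z)
    den = num + (1.0 - p) * f2(z)
    return num / den


# Hypothetical signal densities: bullish signals centred at 0.5, bearish at -0.5.
f1 = lambda z: gaussian_pdf(z, 0.5, 1.0)
f2 = lambda z: gaussian_pdf(z, -0.5, 0.9)

p = 0.5
for z in (0.8, 1.1, -0.3):       # three illustrative expert opinions
    p = bayes_update(p, z, f1, f2)
```

The jump size can equivalently be written as \(p(1-p)(f_{1}(z)-f_{2}(z))/(p f_{1}(z)+(1-p)f_{2}(z))\), which makes it visible that the update vanishes when the two densities agree at \(z\) or when \(p\) is already 0 or 1.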

2.3 Filtering

Using standard notations in the filtering literature, the optional projection of a generic process \(g=(g_{t})_{t\in [0,T]}\) onto the filtration ℍ is denoted by \(\hat{g}_{t} = \mathbb{E}[g_{t}| \mathcal{H}_{t}]\). The filter of the hidden Markov chain \(\alpha \) is \(\pi =(\pi _{t})_{t\in [0,T]}\) with \(\pi _{t} = \mathbb{P}[\alpha _{t}=1|\mathcal{H}_{t}]\). For a process of the form \(g_{t} = G(\alpha _{t})\), its optional projection is given by

\[ \hat{g}_{t} = G(1)\pi _{t} + G(2)(1-\pi _{t}). \]

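We define the process \(\widetilde{W}=(\widetilde{W}_{t})_{t\in [0,T]}\) such that for any \(t\in [0, T]\),

\[ \widetilde{W}_{t} = W_{t} + \int _{0}^{t} \big(\theta (\alpha _{s}) - \hat{\theta}(\pi _{s})\big)\,ds, \tag{2.7} \]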

where \(\theta \) is the bounded function defined as

\[ \theta (i) = \frac{\mu _{i} - r}{\sigma}, \qquad i\in \{1,2\}. \tag{2.8} \]

Then \(\widetilde{W}\) is a \((\mathbb{P},\mathbb{H})\)-Brownian motion (the so-called innovation process) according to classical results of filtering theory; see Bain and Crisan [3, Proposition 2.30]. We define the predictable random measure \(\gamma ^{\mathbb{H}}\) and the function \(\hat{f}:[0,1]\times \mathcal{Z}\rightarrow \mathbb{R}\) as

\[ \gamma ^{\mathbb{H}}(dt,dz) := \lambda \hat{f}(\pi _{t-},z)\,dz\,dt, \qquad \hat{f}(x,z) := x f_{1}(z) + (1-x) f_{2}(z), \tag{2.9} \]

and \(\gamma ^{\mathbb{H}}\) is known as the ℍ-dual predictable projection of \(N\) according to the standard results of filtering theory; see Ceci and Colaneri [11, Proposition 2.2]. We thus introduce the ℍ-compensated jump measure of \(N\) given by

According to standard arguments from filtering theory (see Callegaro et al. [9, Theorem 3.6]), the filter \(\pi \) is the unique strong solution of the Kushner–Stratonovich equation given by

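with the initial value \(\pi _{0}= x \in [0,1]\) and the function \(\xi :[0,1]\times \mathcal{Z} \rightarrow \mathbb{R} \) defined as

\[ \xi (x,z) := \frac{x(1-x)\big(f_{1}(z)-f_{2}(z)\big)}{x f_{1}(z) + (1-x) f_{2}(z)}. \tag{2.11} \]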

Note that the last term in (2.10) can be expressed as

where the last term in the above equation is equal to 0 because both \(f_{1}\) and \(f_{2}\) are density functions defined on \(z\in \mathcal{Z}\). Therefore, this is equivalent to writing (2.10) as

2.4 Primal and dual control problems

As we want to apply dynamic programming techniques, we start by embedding the optimisation problem into a family of problems indexed by generic time–space points \((t,x,v)\in [0, T]\times [0,1]\times \mathbb{R}_{++}\) which denote the starting time, the initial guess of the filter process and the initial wealth level. We denote the domain of \((t,x)\) by \(\mathcal{U}_{T}:=[0,T)\times (0,1)\) and set \(\overline{\mathcal{U}}_{T}:=[0,T]\times [0,1]\).

For any given and fixed \((t,x)\in \overline{\mathcal{U}}_{T}\), we define the filtration \(\mathbb{H}^{t}:= (\mathcal{H}_{s}^{t})_{s\in [t,T]}\) by

where \(\mathcal{N}_{\mathbb{P}}\) is the family of all sets that are contained within a ℙ-nullset and \(\widetilde{W}\) and \(N\) are defined in (2.7) and (2.3). The solution of (2.12) on \([t,T]\) with the initial guess \(\pi _{t}=x\) is denoted by \(({\pi}_{s})_{s\in [t,T]}\). We introduce the measure \(\mathbb{P}^{t,{x}}\) on \(\mathcal{H}_{T}^{t}\) such that \(\mathbb{P}^{t,{x}}[{\pi}_{t} = {x}]=1\), with \(\mathbb{E}^{t,{x}}\) being the expectation operator under \(\mathbb{P}^{t,{x}}\).

For \(v\in \mathbb{R}_{++}\), consider all pairs \((\vartheta ,c)\) of \(\mathbb{H}^{t}\)-predictable processes that are defined analogously to (h1) and (h2), and let \(V^{t,x,v,\vartheta ,c}\) be the solution of (2.4) starting at time \(t\) from \(v\) under the control \((\vartheta ,c)\). The class \(\mathcal{A}(t,x,v)\) of admissible controls, which depends on the initial value \((t,x,v)\in \overline{\mathcal{U}}_{T}\times \mathbb{R}_{++}\), is defined as the set of pairs \((\vartheta ,c)\) satisfying the above requirements and such that we have the no-bankruptcy constraint

\[ V^{t,x,v,\vartheta ,c}_{s} \ge 0 \quad \text{for all } s\in [t,T],\ \mathbb{P}^{t,x}\text{-a.s.} \]

Clearly, the admissible set is not empty for all \(v\in \mathbb{R}_{++}\) because for each initial value, the null strategy \((\vartheta ,c)\equiv (0,0)\) is always admissible. The agent’s objective function is postulated to be

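We define the primal problem as

\[ J(t,x,v) := \sup _{(\vartheta ,c)\in \mathcal{A}(t,x,v)} \widetilde{J}(t,x,v;\vartheta ,c), \tag{2.14} \]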

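with \(J\) being its value function, which we call the primal value function. To apply the duality approach, we define the convex dual function \(\widetilde{U}_{i}\) of the concave utility function \(U_{i}\) as

\[ \widetilde{U}_{i}(y) := \sup _{c>0}\big(U_{i}(c) - cy\big) = U_{i}(I_{i}(y)) - yI_{i}(y), \qquad y>0, \tag{2.15} \]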

where \(I_{i}(\,\cdot \,)\) is the inverse function of \(\partial _{c}U_{i}(\,\cdot \,)\). For the function \(U_{i}\) in (2.6), we have \(\widetilde{U}_{i}(y)=-y^{\beta}/\beta \) with \(I_{i}(y) = y^{\beta -1}\) and \(\beta :=-\kappa /(1-\kappa )\), \(i=1,2\). We also introduce the process \((Z_{s}^{\nu})_{s\in [t,T]}\) with initial value \(Z_{t}^{\nu}=1\) defined for an \(\mathbb{H}^{t}\)-predictable process \((\nu (s,z))_{s\in [t,T]}\) indexed by \(\mathcal{Z}\) (see Definition D.1 in Appendix D) as

where \(\hat{\theta}(\pi )\) is the optional projection of \(\theta (\alpha )\) defined in (2.8) and \(\hat{f}\) is defined in (2.9). We consider the admissible set of all \((\nu (s,z))_{s\in [t,T]}\) that satisfies the Lépingle–Mémin condition (see Ishikawa [26, Theorem 1.4]) given by

Let \(\Theta ^{t}\) be the set of admissible \((\nu (s,z))_{s\in [t,T]}\). Specifically,

which is not empty as \(\nu \equiv 0 \) is admissible. As \(\hat{\theta}\) is bounded, the local martingale \(Z^{\nu}\) is a martingale for all \(\nu \in \Theta ^{t}\). We thus define a \(\mathbb{P}^{t,x}\)-equivalent probability measure \(\mathbb{Q}^{\nu}\) on \((\Omega ,\mathcal{H}^{t}_{T})\) via \({d\mathbb{Q}^{\nu}}/{d\mathbb{P}^{t,x}}\vert _{\mathcal{H}^{t}_{T}} = Z^{\nu}_{T}\). We observe that

and that \(Z^{\nu}\) satisfies the SDE

where \((\pi _{s})\) is the solution of (2.12) with \(\pi _{t}= x\). In addition, for each \(\nu \in \Theta ^{t}\),

Let \((t,x,v)\in \overline{\mathcal{U}}_{T}\times \mathbb{R}_{++}\), \((\vartheta ,c) \in \mathcal{A}(t,x,v)\), \(\nu \in \Theta ^{t}\) and set \(V=V^{t,x,v,\vartheta ,c}\). Itô’s lemma shows that the process \(( e^{-rs}Z^{\nu}_{s}V_{s} + \int _{t}^{s} e^{-ru}Z^{\nu}_{u}c_{u}\, du)_{s\in [t,T]} \) is a \((\mathbb{P},\mathbb{H})\)-supermartingale (as a nonnegative local martingale), which implies that due to the arbitrariness of \(\nu \in \Theta ^{t}\),

With the definition of \(\widetilde{U}_{i}\) in (2.15), for all \(y\in \mathbb{R}_{++}\), \((\vartheta ,c) \in \mathcal{A}(t,x,v)\) and \(\nu \in \Theta ^{t}\), the agent’s objective function \(\widetilde{J}\) defined in (2.13) satisfies

Taking the supremum of \(\widetilde{J}\) over \((\vartheta ,c)\in \mathcal{A}(t,x,v)\), the primal value function \(J\) defined in (2.14) satisfies for any \(\nu \in \Theta ^{t}\) and \(y\in \mathbb{R}_{++}\) that

Thus the right-hand side of (2.20) is an upper bound of \(J\). Taking the infimum over \(\nu \in \Theta ^{t}\) on the right-hand side of (2.20), we consider for \((t,x,y)\in \overline{\mathcal{U}}_{T}\times \mathbb{R}_{++}\) the dual optimisation problem

\[ \inf _{\nu \in \Theta ^{t}} \widetilde{L}(t,x,y;\nu ), \tag{2.21} \]

where \(\widetilde{L}(t,x,y;\nu ) = \mathbb{E}^{t,x}[\widetilde{U}_{1}(y e^{-r(T-t)}Z_{T}^{ \nu}) + \int _{t}^{T} \widetilde{U}_{2}( y e^{-r(s-t)}Z_{s}^{\nu})\, ds] \). Let \(\hat{L}\) be the value function associated with this problem, called the dual value function, so that

\[ \hat{L}(t,x,y) := \inf _{\nu \in \Theta ^{t}} \widetilde{L}(t,x,y;\nu ). \tag{2.22} \]

It then follows from (2.20) that

\[ J(t,x,v) \le \inf _{y>0}\big(\hat{L}(t,x,y) + vy\big). \tag{2.23} \]

There is no duality gap between the primal problem (2.14) and the dual problem (2.21) when we have equality in (2.23). The current formulation suggests that one can first work on the dual problem and then transform it back into the primal problem by closing the duality gap.
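The reasoning behind this upper bound can be sketched in a few lines. The display below is a reconstruction under stated assumptions rather than a quotation of the paper's equations: it assumes that the objective has the form \(\mathbb{E}^{t,x}[U_{1}(V_{T})+\int _{t}^{T}U_{2}(c_{s})\,ds]\), which mirrors the structure of \(\widetilde{L}\), and that the supermartingale property yields a budget constraint with bound \(v\). The last lines check the closed form of the dual function for a power utility \(U(c)=c^{\kappa}/\kappa \), an assumed form that is consistent with the stated \(I_{i}\) and \(\widetilde{U}_{i}\).

```latex
\begin{align*}
% Fenchel inequality, immediate from the definition of the convex dual:
U_i(c) &\le \widetilde{U}_i(y) + c\,y
  \quad\text{for all } c,y>0, \text{ with equality iff } c = I_i(y). \\
% Apply it pathwise with y e^{-r(s-t)} Z_s^\nu and take expectations:
\mathbb{E}^{t,x}\Big[U_1(V_T) + \int_t^T U_2(c_s)\,ds\Big]
  &\le \widetilde{L}(t,x,y;\nu)
   + y\,\mathbb{E}^{t,x}\Big[e^{-r(T-t)}Z_T^{\nu}V_T
   + \int_t^T e^{-r(s-t)}Z_s^{\nu}c_s\,ds\Big] \\
% Budget constraint (assumed form) from the supermartingale property:
  &\le \widetilde{L}(t,x,y;\nu) + v\,y. \\
% Power utility U(c) = c^\kappa/\kappa (assumed form):
U'(c) = c^{\kappa-1}
  &\;\Longrightarrow\; I(y) = y^{1/(\kappa-1)} = y^{\beta-1},
  \qquad \beta = -\frac{\kappa}{1-\kappa} = \frac{\kappa}{\kappa-1}, \\
\widetilde{U}(y) = U(I(y)) - y\,I(y)
  &= \Big(\frac{1}{\kappa} - 1\Big) y^{\beta}
   = -\frac{y^{\beta}}{\beta}.
\end{align*}
```

Taking the supremum over admissible controls on the left and the infimum over \(\nu \) and \(y\) on the right gives the weak duality inequality; equality requires the pathwise Fenchel inequality and the budget constraint to hold with equality at the optimum.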

2.5 HJB in the dual problem

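The dual problem reduces the original problem with two control variables to only one control process \(\nu \in \Theta ^{t}\). The natural choice for solving this problem is a heuristic use of the DPP: for any \(\mathbb{H}^{t}\)-stopping time \(\tau \) valued in \([t,T]\), we have

\[ \hat{L}(t,x,y) = \inf _{\nu \in \Theta ^{t}} \mathbb{E}^{t,x}\Big[\int _{t}^{\tau} \widetilde{U}_{2}\big(y e^{-r(s-t)}Z_{s}^{\nu}\big)\,ds + \hat{L}\big(\tau ,\pi _{\tau},y e^{-r(\tau -t)}Z_{\tau}^{\nu}\big)\Big]. \tag{2.24} \]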

Therefore, the HJB equation of the dual value function is derived as

where the dynamics of (2.16) for \(Z^{\nu}\) and of (2.10) for \(\pi \) generate the operator

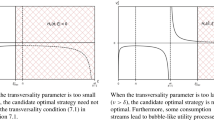

Intuitively, the dual optimiser \(\nu ^{*}\) can be constructed in feedback form via the first-order conditions in the HJB equation (2.25) if the candidate process is admissible, i.e., fulfilling conditions (2.17) and (2.18). The remaining task is to determine regularity conditions under which the alternative data and corresponding filters enable the above prescription.

2.6 Regularity: bounded likelihood ratio

In Sect. 3, we study regularity much more generally, in terms of the choice of utility functions and alternative data processes, such that the above prescription is true. However, regularity turns out to be more abstract. Using the expert opinion setting in this section, we provide concrete technical conditions for the probability density functions of alternative data signals that validate (2.24) and the proposed solution procedure.

Condition 2.1

The probability density functions \(f_{1}\) and \(f_{2}\) of the signals in (2.2) have the same support \(\mathcal{Z}\) and admit finite second moments such that

for \(0\le b_{\min}<1<b_{\max}\). We also want the dissimilarity between the two distributions to be reasonably bounded. Specifically, we use Amari’s alpha-divergence measure \(D_{a}(f_{1} \| f_{2})\) (see Amari [2, Chap. 3.5]) with \(a=3\) to characterise such dissimilarity and require that for some constant \(C\), we have

The interpretation of Condition 2.1 is that it ensures a kind of bounded likelihood ratio: we should not expect the arriving signals to be particularly strong in terms of distinguishing between the two regimes. Otherwise, the situation becomes similar to direct observation of the state \(\alpha \). Note that Condition 2.1 based on the dual problem covers a wider range of signals than those based on the primal problem in the literature. Indeed, it clearly covers examples in Frey et al. [24, Assumption 5.1 and Remark 5.2], i.e., continuously differentiable densities with common bounded support that are uniformly bounded from below by a strictly positive constant. Furthermore, Condition 2.1 covers more examples of discrete and continuous distributions defined in unbounded domains. We list a few examples below.

Example 2.2

Consider two exponential family distributions as

where \(v_{j}\) is a parameter of the distribution and \(g_{j}\) is a fixed feature of the family, such as \((1,x,x^{2})\) in the Gaussian case. Condition 2.1 holds if there exists a constant \(C\) such that \(\sum _{j} (v_{j}^{(2)}-v_{j}^{(1)})g_{j}(z) \le C\) and \(\sum _{j} (3v_{j}^{(1)}-2v_{j}^{(2)})g_{j}(z) \le C\) for all \(z\). We provide an example that clearly satisfies these conditions as

Example 2.3

As a direct extension of Example 2.2, Condition 2.1 holds for a Gaussian density \(f_{2}(z)\) and \(f_{1}(z):=\sum _{j=1}^{n} a_{j} f_{1}^{(j)}(z)\) with \(\sum _{j} a_{j} = 1\), which is a mixture of Gaussian distribution densities, when each pair \((f_{1}^{(j)}, f_{2})\) fulfils the conditions in Example 2.2.

Example 2.4

Consider a mixture distribution and a Gamma distribution defined on \(\mathbb{R}_{++}\) given as

where \(a_{1},a_{2}\in (0,1)\) and \(\mathcal{G}(a):=\int _{\mathbb{R}_{++}} z^{a-1}e^{-z}dz\) is the Gamma function. Condition 2.1 holds when \(b_{\min}=0\), \(b_{\max}=\max \{ 1/a_{2}a_{1},1/(1-a_{2})e\} / \mathcal{G}(a_{1})\) and with \(L_{F}=e^{3}\mathcal{G}(a_{1})^{2}\mathcal{G}(2-2a_{1})+2e^{2} \mathcal{G}(a_{1})^{2}a_{1}^{2}\).
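The two sufficient inequalities stated in Example 2.2 can be inspected numerically for a pair of Gaussian densities. The sketch below is hedged accordingly: it assumes that, with the normalising constants absorbed into the constant feature, the two sums in Example 2.2 equal \(\log (f_{2}/f_{1})\) and \(\log (f_{1}^{3}/f_{2}^{2})\), and it evaluates their maxima on a finite grid, which can suggest but not prove boundedness. The function names and all parameter values are hypothetical.

```python
import numpy as np


def log_gauss(z, mean, std):
    """Log-density of N(mean, std^2) evaluated at z."""
    return -0.5 * ((z - mean) / std) ** 2 - np.log(std * np.sqrt(2.0 * np.pi))


def sup_log_ratios(m1, s1, m2, s2, half_width=20.0, step=1e-3):
    """Grid maxima of log(f2/f1) and log(f1^3/f2^2) on [-half_width, half_width].

    Working with log-densities avoids underflow far out in the tails.
    """
    z = np.arange(-half_width, half_width + step, step)
    l1, l2 = log_gauss(z, m1, s1), log_gauss(z, m2, s2)
    return float(np.max(l2 - l1)), float(np.max(3.0 * l1 - 2.0 * l2))


# Illustrative pair: f1 = N(0.5, 1), f2 = N(-0.5, 0.9^2).
narrow = sup_log_ratios(0.5, 1.0, -0.5, 0.9, half_width=20.0)
wide = sup_log_ratios(0.5, 1.0, -0.5, 0.9, half_width=40.0)

# Counterexample: f2 = N(-0.5, 1.5^2) has heavier tails than f1,
# so log(f2/f1) keeps growing as the grid is widened.
bad_narrow = sup_log_ratios(0.5, 1.0, -0.5, 1.5, half_width=20.0)
bad_wide = sup_log_ratios(0.5, 1.0, -0.5, 1.5, half_width=40.0)
```

For the first pair both maxima are unchanged when the grid is widened, which is what one expects when the quadratic terms in both log-ratios have negative coefficients; for the second pair the first maximum grows with the grid, signalling an unbounded ratio.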

Under Condition 2.1, we derive the following two useful properties of the filter process \(\pi \). The proofs are provided in Appendix C.

Proposition 2.5

Under Condition 2.1, both 0 and 1 are unattainable boundaries for the filter process \(\pi \), the solution of (2.12). In other words, they cannot be reached from inside the state space \((0,1)\).

Proposition 2.6

Under Condition 2.1, the Markov filter process \(\pi ^{x_{0}}:=(\pi ^{x_{0}}_{t})_{t\ge 0}\), defined as the solution of (2.12) starting from time 0 and a given starting point \(x_{0}\in (0,1)\), is a Feller process. For any bounded and continuous function \(f\), it follows that \(x \mapsto P_{t}f(x): = \mathbb{E}[f({\pi}^{x}_{t})]\) is continuous for all \(t\ge 0\) and \(\lim _{t\downarrow 0}P_{t}f(x) = f(x)\).

Proposition 2.5 implies that when characterising the dual value function \(\hat{L}\) using the HJB method, no conditions should be imposed on the boundaries of the filter, neither on the value of the function nor on its partial derivatives (see Bayraktar et al. [7, Definition 2.5 and Remark 2.6]). Proposition 2.6 implies that a similar initial guess of the hidden state will lead to similar developments in filtering and that the filter itself changes in a reasonably continuous manner. The Feller property of the filter process further validates the DPP in (2.24) (see Theorem 3.1 in a general setting in Sect. 3). According to Propositions 2.5 and 2.6, we have the following verification theorem.

Theorem 2.7

Under Condition 2.1, the dual value function \(\hat{L}\) is the unique classical solution in \(C(\overline{\mathcal{U}}_{T}\times \mathbb{R}_{++})\cap C^{1,2,2}({ \mathcal{U}}_{T}\times \mathbb{R}_{++})\) of the HJB equation (2.25), subject to the boundary condition \(\hat{L}(T,x,y) = \widetilde{U}_{2}(y)\) for \(x \in [0,1] \) and \(y \in \mathbb{R}_{++}\). In addition, \(\hat{L}\) satisfies

where \(\hat{\Lambda}\in C(\overline{\mathcal{U}}_{T})\cap C^{1,2}({ \mathcal{U}}_{T})\), \(\beta =-\kappa /(1-\kappa )\) and \(\kappa \) is the risk aversion parameter of the utility function defined in (2.6). For \(y\in \mathbb{R}_{++}\), the dual problem (2.21) admits a dual optimiser \(\nu ^{*}\in{\Theta}^{t}\) given by

Proof

See Sect. 4. □
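The exponent \(\beta =-\kappa /(1-\kappa )\) in Theorem 2.7 is what one obtains from the convex conjugate of a power utility. The sketch below assumes the CRRA form \(U(v)=v^{\kappa}/\kappa \) (the definition in (2.6) is not restated here, so this form is an assumption) and checks numerically that \(\sup _{v>0}\{U(v)-vy\}\) agrees with \(-y^{\beta}/\beta \). The helper names are illustrative.

```python
def beta_from_kappa(kappa):
    """Dual exponent beta = -kappa / (1 - kappa)."""
    return -kappa / (1.0 - kappa)

def power_utility(v, kappa):
    """Assumed CRRA utility U(v) = v^kappa / kappa, kappa < 1, kappa != 0."""
    return v ** kappa / kappa

def dual_closed_form(y, kappa):
    """Candidate conjugate -y^beta / beta."""
    beta = beta_from_kappa(kappa)
    return -(y ** beta) / beta

def dual_first_order(y, kappa):
    """Conjugate evaluated at the first-order point v* with U'(v*) = y."""
    v_star = y ** (1.0 / (kappa - 1.0))
    return power_utility(v_star, kappa) - v_star * y

def dual_grid(y, kappa, step=1e-3, n=20000):
    """Crude grid approximation of sup over v in (0, 20] of U(v) - v*y."""
    return max(power_utility(step * i, kappa) - step * i * y
               for i in range(1, n + 1))

for kappa in (-1.0, 0.5):
    y = 2.0
    print(kappa, dual_closed_form(y, kappa),
          dual_first_order(y, kappa), dual_grid(y, kappa))
```

For both \(\kappa =-1\) (so \(\beta =1/2\)) and \(\kappa =1/2\) (so \(\beta =-1\)), the three values coincide up to the grid resolution.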

Given that there exists a dual optimiser in \(\Theta ^{t}\) for the dual problem (2.21), we now turn to the proof that there is no duality gap. We have the following result (a special case of Theorem 3.2 below) that closes the duality gap and derives the optimal controls for the primal problem. The proof is provided in Appendix C.

Proposition 2.8

Under Condition 2.1, there is no duality gap between the primal and dual problems (2.14) and (2.21). For fixed \((t,x,v)\in \mathcal{U}_{T}\times \mathbb{R}_{++}\), the optimal wealth process is given by

where \(\hat{\Lambda}\) and \({\nu}^{*}\) are given in Theorem 2.7 and \((Z_{s}^{{\nu}^{*}})_{s\in [t,T]}\) satisfies

The optimal controls \(({\vartheta}^{*},{c}^{*})\) of the primal problem take the feedback forms

3 Duality with alternative data: a general dynamic programming approach

In this section, we present a general dynamic programming approach to solve the optimal choice problem based on duality, under a broader class of time-dependent utility functions (Assumption A.1) and more general alternative data situations. We note that our results can be easily extended to cases with more than two economic states, which corresponds to a finite-state hidden Markov chain \(\alpha \).

We start by describing the general alternative data model \(\eta \) that serves as the setting for our (abstract) results. In numerous practical scenarios, systems have discontinuous trajectories and structural changes. The jump-diffusion models commonly used in financial asset pricing (see Cont and Tankov [13, Chap. 1]) may fail to account for structural changes in alternative data from outside the standard financial market. Accordingly, we are interested in a regime-switching jump-diffusion model because it incorporates discontinuous changes with regime-switching jump sizes and intensities. Mathematically, we model \(\eta \) by

where \(B\) is a standard \(\mathbb{F}\)-Brownian motion, \(N_{\eta}(dt,dz)\) is an \(\mathbb{F}\)-adapted integer-valued random measure on \([0,T] \times \mathcal{Z}\), both independent of the Brownian motion \(W\) and the hidden Markov chain \(\alpha \). In particular, the intensity measure of \(N_{\eta}\) is given by \(\gamma (\alpha _{t-},dz)dzdt\), which depends on the hidden state. To avoid unnecessary technical details, we simply assume what we need: (3.1) has a unique strong solution. Some sufficient conditions are summarised in Assumption D.3 in Appendix D.

To proceed, we need to know the structure of \(\mathcal{Q}\) introduced in (2.19), i.e., the set of all ℙ-equivalent probability measures ℚ on \(\mathcal{H}_{T}\) for which the discounted price of the risky asset is a ℚ-martingale. This requires us to define the innovation processes associated with the diffusion and jump parts of (3.1). Recalling the notations introduced at the beginning of Sect. 2.3 and using (2.7), we define a \((\mathbb{P},\mathbb{H})\)-Brownian motion \(\widetilde{B}\) and an ℍ-compensated jump measure \(\overline{m}^{\pi}\) as

where \(m(d t, d q):=\sum _{s: \Delta \eta _{s} \neq 0} \delta _{(s, \Delta \eta _{s})}(d t, d q)\) is the integer-valued random measure associated with the jumps of the process \(\eta \), \(\lambda _{t}(\alpha _{t-})\phi _{t}(\alpha _{t-}, dq)dt\) is the ℙ-dual predictable projection of \(m\) (see Ceci [10, Proposition 3]) that satisfies

and \(\pi _{t} = \mathbb{P}[\alpha _{t}=1|\mathcal{H}_{t}]\) is the unique solution to the Kushner–Stratonovich equation

All \((\mathbb{P},\mathbb{H})\)-local martingales can be constructed via the triplet \((\widetilde{W},\widetilde{B},\overline{m}^{\pi})\) (see Proposition D.4 in Appendix D). Hence for any given \(t\in [0,T]\), ℚ belongs to \(\mathcal{Q}\) if and only if its Radon–Nikodým derivative with respect to \(\mathbb{P}^{t,x}\) on \(\mathcal{H}^{t}_{T}\) is given by the Doléans-Dade exponential \(Z^{\nu}\) (with a slight abuse of notation) defined for \(s\in [t,T]\) as

for a pair \(\nu =(\nu _{D},\nu _{J})\) consisting of an \(\mathbb{H}^{t}\)-predictable process \((\nu _{D}(u))\) and an \(\mathbb{H}^{t}\)-predictable process \((\nu _{J}(u,q))\) indexed by ℝ, satisfying the Lépingle–Mémin condition

Under the current general setting, the dual optimisation problem is formulated as

A notable advantage of solving the dual problem in the context of general alternative data situations is the broad applicability of the DPP approach. We refer to Žitković [54, Theorem 3.17] which shows that the DPP is valid when the filter process is a Feller process. This condition provides important insight into the type of alternative data considered “useful” in terms of problem verification, that is, the solution procedure illustrated in Sect. 2.5. We cite [54, Theorem 3.17] as follows for completeness.

Theorem 3.1

Suppose that the filter process \(({\pi}_{t})_{t\in [0,T]}\), the unique solution to the Kushner–Stratonovich system (3.2), is a Feller process. Then the DPP holds for the dual value function \(\hat{L}\) defined in (3.4); specifically, we have:

i) For any \(\mathbb{H}^{t}\)-stopping time \(\tau \) valued in \([t,T]\) and each \((t,x,y)\in \overline{\mathcal{U}}_{T} \times \mathbb{R}_{++} \),

$$\begin{aligned} \hat{L}(t,x,y) = \inf _{\mathbb{Q}\in \mathcal{Q}} \mathbb{E}^{t,x} \bigg[ \hat{L}(\tau , \pi _{\tau},ye^{r(t-\tau )}Z^{\nu}_{\tau}) + \int _{t}^{\tau} \widetilde{U}_{2}(s, ye^{r(t-s)}Z^{\nu}_{s}) ds \bigg]. \end{aligned}$$

ii) For any \(\epsilon >0\), an \(\epsilon \)-optimal \(\mathbb{Q}^{*} \in \mathcal{Q}\) can be associated with any triple \((t,x,y)\in \overline{\mathcal{U}}_{T} \times \mathbb{R}_{++}\) in a universally measurable way.

We can now present the main result of this section, whose proof is provided in Appendix A. This establishes the equivalence between the primal and dual problems.

Theorem 3.2

For a class of time-dependent utility functions with suitable growth conditions (Assumption A.1), suppose that the dual optimiser \(\mathbb{Q}^{y}\in \mathcal{Q}\) for (3.4) exists for all \(y\in \mathbb{R}_{++}\). Then for any initial wealth level \(v\in \mathbb{R}_{++}\), there exists a real number \(y^{*}= y(v) >0\) such that

where \(J\) is the primal value function and \(\nu ^{y^{*}}\) is the dual optimiser of (3.4) for \(y^{*}\). Specifically, there is no duality gap. There exists a pair \((\vartheta ^{*},c^{*})\in \mathcal{A}(t,x,v)\), with \(c^{*}_{s} = I_{2}(s, e^{-r(s-t)}y^{*}\widetilde{Z}_{s}^{\mathbb{Q}^{y^{*}}})\) and \(V_{T}^{t,x,v, \vartheta ^{*},c^{*}} = I_{1}( T, e^{-r(T-t)}y^{*} \widetilde{Z}_{T}^{\mathbb{Q}^{y^{*}}})\), that is optimal for the primal problem (2.14).

4 Dual value function as a classical solution of the HJB equation

4.1 Proof of Theorem 2.7

The main difficulties arise from the nonlinear integro-differential term and the degeneracy induced by the filter process, which cannot be addressed directly via the PDE theory of classical solutions. We first deduce the form of \(\hat{L}\) given by (2.26). Recalling that \(\widetilde{U}_{i}(y) = - y^{\beta}/\beta \), it is clear by the definition (2.22) that \(\hat{L}\) is written as

For a fixed \(y\in \mathbb{R}_{++}\), the dual optimisation in (2.21) is therefore reduced to the auxiliary dual problem defined as

over \(\nu \in \Theta ^{t}\), where “maximise” or “minimise” depends on the sign of the utility parameter \(\kappa \) in (2.6). With a change of measure, \(\Lambda \) can be written as

where \(\widetilde{\mathbb{E}}^{t,x,\nu}\) denotes the expectation associated with the measure \(\widetilde{\mathbb{P}}^{t,x,\nu}\) which is defined via \({d\widetilde{\mathbb{P}}^{t,x,\nu}}/{d\mathbb{P}^{t,x}}\vert _{ \mathcal{H}^{t}_{T}} = \widetilde{Z}_{T}^{\nu}\) and

recalling that \(\hat{\theta}(x) = \theta (1)x+ \theta (2)(1-x)\) and \(\hat{f}(x,z)= f_{1}(z)x+ f_{2}(z)(1-x)\) for \(z \in \mathcal{Z}\). In addition, under \(\widetilde{\mathbb{P}}^{t,x,\nu}\), the dynamics of the filter process \(\pi \) evolve as

with

By Girsanov’s theorem, \(W^{\beta}: = \widetilde{W} + \beta \int _{t}^{\cdot} \hat{\theta}( \pi _{u}) du \) is a standard \((\widetilde{\mathbb{P}}^{t,x,\nu},\mathbb{H})\)-Brownian motion and \(\widetilde{N}^{\beta}(ds,dz):= N(ds,dz)-e^{\beta \nu (s,z)}\lambda \hat{f}(\pi _{s-},z)dzds\) is the ℍ-compensated Poisson random measure under \(\widetilde{\mathbb{P}}^{t,x,\nu}\). Depending on the sign of the utility parameter, \(\kappa <0\) (resp. \(0<\kappa <1\)), the value function associated with the auxiliary dual problem is defined as

We find that Theorem 2.7 is equivalent to the following result.

Theorem 4.1

Under Condition 2.1, the function \(\hat{\Lambda}(t,x)\in C(\overline{\mathcal{U}}_{T})\cap C^{1,2}({ \mathcal{U}}_{T})\) is the unique classical solution of the HJB PIDE given as

with

with the boundary condition \(\hat{\Lambda}(T,x)=1\) for \(x\in [0,1]\). Furthermore, we have \(\hat{\Lambda}(t,x) = \Lambda (t,x;{\nu}^{*}) \), where \({\nu}^{*}\in \Theta ^{t}\) is the Markov policy given by

The proof is divided into several steps organised into three subsections. A preliminary step consists of showing that the control processes in the auxiliary dual problem (4.3) can be restricted to those \(\nu \) in \(\Theta ^{t}\) taking values in \([-M,M]\) for a sufficiently large fixed positive constant \(M\). We call this set \(\Theta ^{t,M}\) and the corresponding constrained auxiliary dual value function \(\Lambda ^{M}(t,x)\). We first present lower and upper bounds for \(\hat{\Lambda}\). These estimates are used to show that the restriction on \(\nu \) can be removed.

Proposition 4.2

There exist positive constants \(C_{\ell}\) and \(C_{u}\) that only depend on the utility parameter \(\kappa \) such that

Proof

Consider the function \(h(d): = e^{\beta d}-1+\beta (1-e^{d})\). For \(\kappa <0\), note that \(0<\beta <1\) and therefore \(h\) satisfies \(h(d)\le 0\) for \(d \in \mathbb{R}\). Using (4.1), we have

which implies that

For \(0<\kappa <1\), note that \(\beta <0\) and therefore \(h\) satisfies \(h(d)\ge 0\) for \(d \in \mathbb{R}\). Similar arguments show that

As the above lower and upper bounds do not depend on the initial state of the filter process, \(C_{\ell}\) and \(C_{u}\) can be constructed for any given \(\kappa <1\) with \(\kappa \neq 0\). □
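The sign claims for \(h\) used in this proof follow from \(h(0)=0\) and \(h'(d)=\beta (e^{\beta d}-e^{d})\), which changes sign only at \(d=0\). As a quick sanity check, the sketch below evaluates \(h\) on a grid for one value of \(\kappa \) in each regime; the chosen values of \(\kappa \) and the grid are arbitrary.

```python
import math

def beta_from_kappa(kappa):
    """Dual exponent beta = -kappa / (1 - kappa)."""
    return -kappa / (1.0 - kappa)

def h(d, beta):
    """h(d) = exp(beta*d) - 1 + beta*(1 - exp(d)), as in the proof above."""
    return math.exp(beta * d) - 1.0 + beta * (1.0 - math.exp(d))

grid = [-10.0 + 0.01 * i for i in range(2001)]  # d in [-10, 10]

beta_neg_kappa = beta_from_kappa(-1.0)  # kappa < 0 gives beta in (0, 1)
beta_pos_kappa = beta_from_kappa(0.5)   # 0 < kappa < 1 gives beta < 0

max_h_neg_kappa = max(h(d, beta_neg_kappa) for d in grid)
min_h_pos_kappa = min(h(d, beta_pos_kappa) for d in grid)

print(beta_neg_kappa, max_h_neg_kappa)  # maximum is attained at d = 0
print(beta_pos_kappa, min_h_pos_kappa)  # minimum is attained at d = 0
```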

We propose the following auxiliary lemma.

Lemma 4.3

When \(\kappa <0\), assume that the constrained auxiliary dual value function \(\Lambda ^{M}(t,x)\) is the unique classical solution in \(C(\overline{\mathcal{U}}_{T})\cap C^{1,2}({\mathcal{U}}_{T})\) of the HJB equation

with

subject to the boundary condition \(\Lambda ^{M}(T,x) = 1\) for \(x\in [0,1]\). When \(0<\kappa <1\), assume instead the same, but with max in (4.9) replaced by min. Let \(\Lambda ^{M}\) be the constrained auxiliary dual value function with

Then \(\Lambda ^{M}(t,x) = \hat{\Lambda}(t,x)\), where \(\hat{\Lambda}\) is the unconstrained value function in (4.3).

Proof

We provide a proof for the case where \(\kappa <0\), but the case \(0<\kappa <1\) follows the same logic. The maximum selector on the right-hand side of (4.9) induces a Markov policy \(\hat{\nu}^{M}\), defined for \((s,x)\in \overline{\mathcal{U}}_{T}\) and indexed by \(\mathcal{Z}\), as

Using (4.8) (note that the estimates also hold for the constrained auxiliary dual value function \(\Lambda ^{M}\)), it follows that \(\frac{1}{1-\beta}|\ln \frac{\Lambda ^{M}(s,\xi (x,z))}{\Lambda ^{M}(s,x)}| < M\) for \(M\) satisfying (4.11) so that the constraints \(\nu \in [-M,M]\) in (4.9) can be removed. For fixed \((t,x)\in \mathcal{U}_{T}\) and \((\pi _{s})_{s\ge t}\) defined as in (4.2), we have for \(s\in [t,T]\) that

This inequality and the Feynman–Kac formula imply that for \(\nu \in \Theta ^{t}\),

Taking the supremum over \(\nu \in \Theta ^{t}\), we have \(\Lambda ^{M} \ge \hat{\Lambda}\) and therefore \(\Lambda ^{M} = \hat{\Lambda}\) by definition. Given that \({\Lambda}^{M}\) is continuous and bounded, the Markov policy \(\hat{\nu}\) defined in (4.7) is bounded, continuous and locally Lipschitz in \(x\). Thus the Markov control process \(\nu ^{*}\) in (4.7) belongs to \(\Theta ^{t,M}\subseteq \Theta ^{t}\). From the definition of \(\nu ^{*}\), the inequalities in (4.12) and (4.13) become equalities for \(\nu ={\nu}^{*}\). Hence \(\Lambda ^{M}(t,x) = \hat{\Lambda}(t,x) = \Lambda (t,x;{\nu}^{*})\). Finally, substituting the Markov policy \(\nu ^{*}\) into the HJB equation (4.9), we obtain that \(\Lambda ^{M}\) satisfies (4.4). □
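The logarithmic form of the selector can be illustrated pointwise. The sketch below assumes that, for fixed \((s,x,z)\), the part of the Hamiltonian that depends on \(\nu \) is \(\varphi (\nu )=a e^{\beta \nu}-b+\beta b(1-e^{\nu})\) with \(a=\Lambda ^{M}(s,\xi (x,z))\) and \(b=\Lambda ^{M}(s,x)\). This expression is our reconstruction, chosen to be consistent with the function \(h\) in Proposition 4.2 and with the bound on \(\frac{1}{1-\beta}|\ln (a/b)|\) used above; it is not a restatement of (4.9). Under this assumption the first-order condition gives \(\nu =\frac{1}{1-\beta}\ln (a/b)\), a maximiser for \(0<\beta <1\) and a minimiser for \(\beta <0\).

```python
import math

def phi(nu, a, b, beta):
    """Assumed nu-dependent integrand of the Hamiltonian (a reconstruction)."""
    return a * math.exp(beta * nu) - b + beta * b * (1.0 - math.exp(nu))

def closed_form_selector(a, b, beta):
    """Stationary point of phi: log(a / b) / (1 - beta)."""
    return math.log(a / b) / (1.0 - beta)

def grid_selector(a, b, beta, bound=3.0, step=1e-3):
    """Brute-force optimiser of phi over nu in [-bound, bound]."""
    n = int(round(2.0 * bound / step))
    grid = [-bound + step * i for i in range(n + 1)]
    pick = max if beta > 0 else min  # max for kappa < 0, min for 0 < kappa < 1
    return pick(grid, key=lambda nu: phi(nu, a, b, beta))

a, b = 1.3, 1.0  # hypothetical values of Lambda^M after and before the jump
for beta in (0.5, -1.0):
    print(beta, closed_form_selector(a, b, beta), grid_selector(a, b, beta))
```

When the ratio \(a/b\) is bounded away from 0 and infinity, as the estimates (4.8) guarantee, the stationary point lies strictly inside \([-M,M]\) for large \(M\), which is why the constraint becomes inactive.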

In the rest of this section, we prove Theorem 4.1. It is convenient to restrict the control set to \(\Theta ^{t,M}\) with a sufficiently large \(M\) for now and remove this restriction later via Lemma 4.3. To help readers better understand the main idea of the proof, we provide an overview before discussing it in detail.

Step 1: \(\Lambda ^{M}(t,x)\) is Lipschitz in \(t\) and \(x\) on the state space \(\overline{\mathcal{U}}_{T}\). The analytical challenges come from the Lévy-type jumps of the filter process in (4.2), because the compensator of the jump measure \(N(dt,dz)\) depends on the filter itself. To overcome this difficulty, we need to introduce an auxiliary process through the Radon–Nikodým derivatives and give the necessary estimates under Condition 2.1. The results are summarised in Sect. 4.2.

Step 2: \(\Lambda ^{M}\) is a viscosity solution of the HJB PIDE (4.9). We adopt a classical definition (Definition 4.8) of a viscosity solution and show that \({\Lambda}^{M}\) is a viscosity solution of (4.9) in Theorem 4.9 in Sect. 4.3.

Step 3: From PIDE to PDE. Let \(M\) be sufficiently large; we change the notation and rewrite the HJB PIDE (4.9) as a parabolic PDE given by

where the functions \(d_{0}(\,\cdot \,)\) and \(\mathcal{I}_{\beta}(\,\cdot \,)\) are defined in (4.5) and (4.6).

Step 4: \(\Lambda ^{M}\) is a viscosity solution of the PDE (4.14). We consider a viscosity solution \(g\) of the PDE (4.14), which is interpreted as an equation for an “unknown” \(g\), with the last term \(\mathcal{I}_{\beta}({\Lambda}^{M})\) prespecified with \({\Lambda}^{M}\) characterised in Step 2. We demonstrate that \({\Lambda}^{M}\) also solves the PDE (4.14) in the viscosity sense. We need to show the equivalence of two definitions of viscosity solutions to the HJB PIDE (4.9) (i.e., Definitions 4.8 and 4.10; the first is the classical definition while the second has no replacement of the solution with a test function in the nonlocal integro-differential term). The results are presented in Proposition 4.11 and Corollary 4.12.

Step 5: Uniqueness of the viscosity solution to the PDE (4.14). It is clear that \(g = {\Lambda}^{M}\) is a viscosity solution for both the PDE (4.14) and the PIDE (4.9), as the two equations are essentially the same. However, if a function \(g\) solves the PDE (4.14), this does not mean that it also solves the PIDE (4.9), because the term \(\mathcal{I}_{\beta}({\Lambda}^{M})\) in the PDE (4.14) depends on \({\Lambda}^{M}\), regardless of the choice of \(g\). Thus we must show that the PDE (4.14) admits a unique viscosity solution. This requires applying a comparison result (see Amadori [1, Theorem 2]) for viscosity solutions to HJB equations with degenerate coefficients on the boundary.

Step 6: Existence of a classical solution to the PDE (4.14). The PDE (4.14) is parabolic when \(\mathcal{I}_{\beta}({\Lambda}^{M})\) is considered an autonomous term. We refer to the literature on degenerate parabolic PDEs (see e.g. Fleming and Rishel [22, Appendix E] and Bayraktar et al. [7, Theorem 2.8]) to show the existence of a classical solution to the PDE (4.14). The result is presented in Theorem 4.16.

The results of Steps 3–6 are summarised in Sect. 4.4. Finally, we conclude that \({\Lambda}^{M}\) is a classical solution in \(C(\overline{\mathcal{U}}_{T})\cap C^{1,2} (\mathcal{U}_{T})\) of (4.9), and with Lemma 4.3, the proof of Theorems 4.1 and 2.7 is complete.

4.2 Lipschitz-continuity of the auxiliary constrained dual value function \({\Lambda}^{M}\)

We first show the Lipschitz-continuity of \(\Lambda ^{M}(t,x)\) with respect to \(x\), for each fixed \(t\in [0,T]\). We initiate this analysis by establishing the Lipschitz property at \(t=0\) using a specific Lipschitz constant. As this constant is independent of the choice of \(t\), we extend this analysis to achieve uniform Lipschitz-continuity of \(\Lambda ^{M}(t,x)\) with respect to \(x\) for all \(t\in [0,T]\). Unlike Frey et al. [24, Sect. 4] where the authors reformulate the dynamics of the filter process into an exogenous Poisson random measure while maintaining the law of the original filter process, we now establish other necessary estimates of the value function by introducing an auxiliary process via the Radon–Nikodým derivatives. This method effectively enables us to work under general alternative data signals satisfying Condition 2.1.

The path space of \((\pi _{t})_{t\in [0,T]}\) is denoted by \(D_{T}:=D([0,T];[0,1])\), and \(\mathcal{D}_{T}\) is the usual \(\sigma \)-field of \(D_{T}\). Moreover, \(P_{1}\) is the probability distribution on \((D_{T},\mathcal{D}_{T})\) induced by \((\pi _{t})_{t\in [0,T]}\) under \(\widetilde{\mathbb{P}}^{0,x,\nu}\) for a given control process \(\nu \in \Theta ^{0,M}\). Standard arguments show that with \(\mathcal{L}^{\nu}\) defined in (4.10), the process

is a martingale under \(P_{1}\) for each point \(x\in [0,1]\) and each function \(g\in C^{2}([0,1])\), and \(P_{1}\) is the unique probability distribution with this property.

We introduce an auxiliary process \(Y\) under a reference probability measure \(\overline{\mathbb{P}}\) that satisfies

where the functions \(\overline{\mu}\) and \(\overline{\sigma}\) are defined in (4.2), \(W^{\beta}\) is a standard Brownian motion and \(N_{2}\) is a Poisson random measure with intensity measure given by \(\lambda f_{1}(z)dzdt\) under \(\overline{\mathbb{P}}\). Note that \(Y\) is a jump-diffusion process with an exogenous Poisson random measure. The process \(Y\) with initial condition \(x\) is denoted by \(Y^{x}\). To ensure that (4.16) has a unique strong solution, the coefficients \(\overline{\mu}\), \(\overline{\sigma}\) and \(\xi \) must satisfy certain Lipschitz and growth conditions; see Ceci and Colaneri [11, Appendix A]. We verify these conditions in the following result; its proof is presented in Appendix C.

Lemma 4.4

Under Condition 2.1, there exist a positive constant \(C\) and a function \(\rho :\mathcal{Z} \rightarrow \mathbb{R}_{++}\) with \(\int _{\mathcal{Z}}\rho (z)^{2} f_{1}(z)dz <\infty \) such that for all \(x\) and \(y \in [0,1]\),

The probability distribution on \((D_{T},\mathcal{D}_{T})\) induced by \((Y_{t})_{t\in [0,T]}\) under \(\overline{\mathbb{P}}\) is denoted by \(P_{2}\). We show that \(P_{1}\) is absolutely continuous with respect to \(P_{2}\) and that the corresponding Radon–Nikodým derivative reads

where \(\mathbf{E}_{j}\) indicates the expectation over \(z\in \mathcal{Z}\) under the density function \(f_{j}\) with \(j=1,2\), \((z_{i})\) is the sequence of jump sizes, and \((\tau _{i})\) and \(n(T)\) are the sequence of jump times and the total number of jumps up to \(T\), respectively, which are given by

Note that for \(t>0\), when \(T\) in (4.19) is replaced by \(t\), we have

where \(\widetilde{N}_{2}(ds,dz)= N_{2}(ds,dz)-\lambda f_{1}(z)dzdt\) is the compensated random measure under \(\overline{\mathbb{P}}\). The operator \(\widetilde{\mathcal{L}}^{\nu}\) associated with \(Y\) is given by

It follows that the process \(\widetilde{K}_{g}(t): = g(Y_{t}) - g(x) - \int _{0}^{t} \widetilde{\mathcal{L}}^{\nu} g(Y_{s}) ds \), \(t \in [0,T]\), is a martingale under \(P_{2}\) for each point \(x\in [0,1]\) and each function \(g(x)\in C^{2}([0,1])\), and \(P_{2}\) is the unique probability distribution with this property. Replacing \(\pi \) by \(Y\) in \(K_{g}(t)\) defined in (4.15) and applying integration by parts, we have

As both \(\Xi \) and \(\widetilde{K}_{g}\) are martingales under \(P_{2}\), it follows that \(\Xi K_{g}\) is a martingale under \(P_{2}\). Now for any \(A \in \mathcal{D}_{T}\), we set \(\widetilde{P}_{1}[A] = \int _{A} \Xi _{T}(Y) dP_{2}\). We can clearly see that \(K_{g}\) is a martingale under \(\widetilde{P}_{1}\). We conclude that \(\widetilde{P}_{1} = P_{1}\) by the uniqueness of \(P_{1}\).

Having established the preparatory results above, we can now provide the main result of this subsection. For the sake of precision, the solutions to (4.2) and (4.16) starting from \(x\) are denoted by \(\pi ^{x}\) and \(Y^{x}\), respectively.

Proposition 4.5

The value function \({\Lambda}^{M}(t,x)\) is Lipschitz-continuous in \(x\).

Proof

For \(x,y\in [0,1]\), we have

where

We first focus on the term \({A}\). For ease of notation, we write

For \(\nu \in [-M,M]\), as \(\Gamma \) is bounded, we have \(\overline{\mathbb{E}}[ {A}_{T}] \le C \overline{\mathbb{E}}[\vert \Xi _{T}(Y^{x})- \Xi _{T}(Y^{y})\vert ]\) for a positive constant \(C\). Using the inequality

for any two positive sequences \((a_{i})_{i=1, \dots , n}\) and \((b_{i})_{i=1, \dots , n}\), we obtain

with the constant \(C_{1} = 2\max \{\lambda T,1+b_{\max}\}e^{\beta M}\). In the last inequality, we use the fact that \(|\exp (-a)-\exp (-b)|\le |a-b|\) for any bounded \(a\) and \(b\). We find that the term in the last line is finite because \(Y_{t}\) is always in the interval \([0,1]\). From the Cauchy–Schwarz inequality, we further obtain

Recall that \(n(T)\) is the total number of jumps of a Poisson process with constant intensity rate \(\lambda \) before \(T\). It follows that for \(C_{2}= (\lambda Te^{\beta M}(1+b_{\max})+1)\),

It remains to show that there exists a constant \(C\) such that

Note that

Applying Itô’s lemma to the function \(|Y^{x}_{t} - Y^{y}_{t}|^{2}\) and using Kunita [31, Corollary 2.12], we obtain

By the Lipschitz properties of \(\overline{\mu}\), \(\overline{\sigma}\) and \(\xi \) given in Lemma 4.4, we obtain

for a constant \(C\). Thus by Gronwall’s inequality, we obtain (4.20).

We now consider the term \(B\). From the Cauchy–Schwarz inequality, we obtain

for a constant \(C\); in the second inequality, we use \(|\exp (-a)-\exp (-b)|\le |a-b|\), and in the last inequality, we use the fact that \(\Gamma (x,\nu )\) is Lipschitz-continuous in the state variable \(x\) when \(\nu \in [-M,M]\). Recalling (4.20), it remains to show that

The above analysis can be extended to the other two terms \(\int _{0}^{T} {A}_{t} dt\) and \(\int _{0}^{T} {B}_{t} dt\). Due to the arbitrariness of \(\nu \in \Theta ^{0,M}\), the proof is complete. □
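The estimates in this proof rely on \(n(T)\) being Poisson distributed with mean \(\lambda T\), so that products of bounded factors over the jumps have finite expectation. One standard identity behind such bounds is \(\mathbb{E}[c^{n(T)}]=e^{\lambda T(c-1)}\) for \(c>0\). The sketch below compares a truncated series with this closed form; the values of \(\lambda \), \(T\) and \(c\) are hypothetical, and the check is an illustration rather than a step of the proof.

```python
import math

def poisson_pgf_series(c, lam, horizon, terms=200):
    """Truncated series for E[c^n] when n is Poisson with mean lam * horizon."""
    mean = lam * horizon
    term = math.exp(-mean)  # contribution of n = 0
    total = term
    for n in range(1, terms):
        term *= c * mean / n  # P[n] * c^n built recursively
        total += term
    return total

def poisson_pgf_closed(c, lam, horizon):
    """Closed form exp(lam * horizon * (c - 1))."""
    return math.exp(lam * horizon * (c - 1.0))

lam, horizon, c = 2.0, 1.0, 3.0  # hypothetical parameter values
series_value = poisson_pgf_series(c, lam, horizon)
closed_value = poisson_pgf_closed(c, lam, horizon)
print(series_value, closed_value)
```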

Next, we show the continuity of \(\Lambda ^{M}(t,x)\) in the time variable \(t\). The following estimates of the filter process \(\pi \) are used; their proof is reported in Appendix C.

Proposition 4.6

For an arbitrary \(\nu \in \Theta ^{t,M}\), let \((\pi _{s}^{t,x,\nu})_{s\in [t,T]}\) be the solution of (4.2) starting from \((t,x)\in \overline{\mathcal{U}}_{T}\). Then for any \(k\in [0,2]\), there exists a positive constant \(C\) such that for all \(0\le t \le s \le T\),

Proposition 4.7

There exists a positive constant \(C\) such that for all \(t\), \(s\in [0,T]\) and \(x\), \(y\in [0,1]\),

Proof

Let \(0\le t< s \le T\); by applying Theorem 3.1, we obtain

According to the boundedness of \(\Gamma \) when \(\nu \in [-M,M]\), the Lipschitz-continuity of \(\Lambda ^{M}\) in \(x\) due to Proposition 4.5, and (4.22), there exists a constant \(C\) such that

Finally, we obtain \(|\Lambda ^{M}(t,x) - \Lambda ^{M}(s,x)| \le (C+T^{\frac{1}{2}})|s-t|^{ \frac{1}{2}}\), and with Proposition 4.5, the proof is complete. □

4.3 The function \(\Lambda ^{M}\) is a viscosity solution of the HJB PIDE (4.9)

We adapt the notion of viscosity solution introduced by Barles and Imbert [4, Definition 1] to the case of integro-differential equations; it is based on a test function and interprets (4.9) in a weaker sense. We focus on the case where \(\kappa <0\), and we can follow a similar logic for \(0<\kappa <1\).

Definition 4.8

A bounded function \(g \in C(\overline{\mathcal{U}}_{T})\) is a viscosity supersolution (subsolution) of (4.9) if for any bounded test function \(\psi \in C^{1,2}(\overline{\mathcal{U}}_{T})\) such that \((t_{0},x_{0})\in {\mathcal{U}}_{T}\) is a global minimum (maximum) point of \(g-\psi \) with \(g(t_{0},x_{0})=\psi (t_{0},x_{0})\), we have

(resp. \(\leq 1\)), where

A bounded function \(g\) is a viscosity solution of (4.9) if it is both a viscosity subsolution and a viscosity supersolution of (4.9).

We obtain the following result.

Theorem 4.9

The function \({\Lambda}^{M}\) is a bounded Lipschitz-continuous viscosity solution of the HJB PIDE (4.9) in \({\mathcal{U}}_{T}\), subject to the terminal condition \({\Lambda}^{M}(T,x)=1\) for \(x\in [0,1]\).

Proof

Step 1: Viscosity supersolution. Take \((t_{0},x_{0})\in {\mathcal{U}}_{T}\) and \(\psi \in C^{1,2}(\overline{\mathcal{U}}_{T})\) with \(0 = (\Lambda ^{M}-\psi )(t_{0},x_{0}) = \min _{(t,x)\in \mathcal{U}_{T}}( \Lambda ^{M}(t,x)-\psi (t,x))\) and therefore \(\Lambda ^{M} \ge \psi \) on \({\mathcal{U}}_{T}\). Let \((t_{k},x_{k})\) be a sequence in \({\mathcal{U}}_{T}\) with \(\lim _{k\rightarrow \infty} (t_{k},x_{k}) = (t_{0},x_{0})\) and define the sequence \(( \varphi _{k} )\) as \(\varphi _{k}: = \Lambda ^{M}(t_{k},x_{k}) - \psi (t_{k},x_{k})\). By the continuity of \(\Lambda ^{M}\) (Proposition 4.7), we have \(\lim _{k\rightarrow \infty} \Lambda ^{M}(t_{k},x_{k}) = \Lambda ^{M}(t_{0},x_{0})\); so \(\lim _{k\rightarrow \infty} \varphi _{k} =0 \).

Consider a given control \({\nu} \in \Theta ^{t,M}\), denote the filter process (the solution of (4.2)) with the initial state \(\pi _{t_{k}}^{k} = x_{k}\) by \(\pi ^{k}\) and define the stopping time \(\tau _{k}\) as

for a given constant \(\epsilon _{0}\in (0,1/2)\) and  ; then we have \(\lim _{k \rightarrow \infty} \tau _{k} = 0\). Using Theorem 3.1, we obtain

thus by the definition of \(\varphi _{k}\),

where \(\zeta ^{k}(s):= \exp (\int _{t_{k}}^{s}\Gamma (\pi _{u}^{k},\nu _{u})du)\). Applying Itô’s lemma to \(\zeta ^{k}\psi \), we have

By assumption, the last two terms are martingales under \(\widetilde{\mathbb{P}}^{t_{k},x_{k},\nu}\). Thus

Recalling (4.24), we obtain

We now wish to let \(k\rightarrow \infty \), but we cannot directly apply the mean value theorem because \(u \mapsto g(u, \pi ^{k}_{u},\nu _{u})\) is not continuous for the function \(g\) in general. We first show that the last term on the right-hand side of (4.25) satisfies

for \(\epsilon (\beta _{k})\rightarrow 0\) as \(\beta _{k} \rightarrow 0\), where \(\beta _{k}\) is defined in (4.23). By choosing a sufficiently small \(\epsilon _{0}\) and from the local Lipschitz-continuity of the bounded continuous function \(\psi \in C^{1,2}(\overline{\mathcal{U}}_{T})\), we have

for a constant \(C_{\epsilon _{0}}\) depending on \(\epsilon _{0}\). In addition, as \(\Gamma (x,\nu )\) is bounded and Lipschitz-continuous in \(x\) when \(\nu \in \Theta ^{t,M}\), we find

The uniform norm of the function \(\psi \) is denoted by \(\| \psi \|\), and we obtain

Combined with (4.22) in Proposition 4.6, we obtain (4.26). Using the continuity of \(\partial _{t} \psi \), \(\partial _{x}\psi \) and \(\partial _{xx}\psi \) and (4.17) in Lemma 4.4, similar arguments give

Next, using (4.17), we have

As \(\int _{\mathcal{Z}} \rho (z) (f_{1}(z) +f_{2}(z)) dz<\infty \) under Condition 2.1, we find

By substituting these estimates into (4.25), we obtain

Finally, letting \(k\rightarrow \infty \) and using \(t_{k} \rightarrow t_{0}\), \({\varphi _{k}}/{\beta _{k}} \rightarrow 0\), \(\epsilon (\beta _{k}) \rightarrow 0\), the mean value theorem, the bounded convergence theorem and replacing \(\nu \) by a constant strategy, we obtain

As \(\nu \in [-M,M]\) is arbitrary, we obtain the supersolution viscosity inequality.

Step 2: Viscosity subsolution. Take some \((t_{0},x_{0})\in{\mathcal{U}}_{T}\) and \(\psi \in C^{1,2}(\overline{\mathcal{U}}_{T})\) with \(0 = ( \Lambda ^{M}-\psi )(t_{0},x_{0}) = \max _{(t,x)\in{\mathcal{U}}_{T}}( \Lambda ^{M}(t,x)-\psi (t,x) )\) and thus \(\Lambda ^{M} \le \psi \) on \({\mathcal{U}}_{T}\). We aim to establish the subsolution viscosity inequality at \((t_{0},x_{0})\). We argue by contradiction and assume that there exists \(\ell >0\) such that

As \(\mathcal{L}^{\nu} \psi \) is continuous, there exists an open set \(\mathcal{N}_{\epsilon _{0}}\) around \((t_{0},x_{0})\) given by

for \(\epsilon _{0}\in (0,1/2)\) such that for \(x\in \mathcal{N}_{\epsilon _{0}}\),

Take \(\iota >0\) such that

for a constant \(C_{M}=\max _{x\in [0,1], \nu \in [-M,M]} \max \{-\Gamma (x,\nu ),0\}\), which is finite. Let \((t_{k},x_{k})\) be a sequence in \(\mathcal{N}_{\epsilon _{0}}\) with \(\lim _{k\rightarrow \infty} (t_{k},x_{k}) = (t_{0},x_{0})\). We further define the sequence \(( \varphi _{k} )\) as \(\varphi _{k}: = \Lambda ^{M}(t_{k},x_{k}) - \psi (t_{k},x_{k})\). By continuity of \(\Lambda ^{M}\) and \(\psi \), we have \(\lim _{k\rightarrow \infty} \varphi _{k} = 0\). For \(k \ge 1\) and \(\epsilon _{k} >0\) with \(\lim _{k\rightarrow \infty} \epsilon _{k} = 0\), consider an \(\epsilon _{k}\)-optimal control \(\nu ^{*,k}\) so that

We denote the filter process (the solution of (4.2)) with the initial state \(\widetilde{\pi}_{t_{k}}^{k} = x_{k}\) and the control \(\nu = \nu ^{*,k}\) by \(\widetilde{\pi}^{k}\), and we define the stopping time

By definition, we then have \(\Lambda ^{M}(\tau _{k},\widetilde{\pi}^{k}_{ \tau _{k}}) -\psi ( \tau _{k},\widetilde{\pi}^{k}_{ \tau _{k}}) \le -\iota e^{-\epsilon _{0} C_{M}} \). If we now let \(\widetilde{\zeta}^{k}(s):= \exp (\int _{t_{k}}^{s}\Gamma ( \widetilde{\pi}_{u}^{k},\nu ^{*,k}_{u})du)\), we get

From the above calculations, we have

However, from the optimality of \(\Lambda ^{M}\) and (4.27), we have

By selecting \(\epsilon _{k} = \varphi _{k}\), we have \(\Lambda ^{M}(t_{k},x_{k})\le \Lambda ^{M}(t_{k},x_{k}) - \iota \), which is a contradiction, and therefore we have shown the subsolution inequality. □

4.4 The function \(\Lambda ^{M}\) is a classical solution of the HJB equation (4.14)

We introduce an alternative definition of the viscosity solution first suggested by Pham [36, Lemma 2.1] and formalised in various contexts, as in Barles and Imbert [4], Davis et al. [15] and Seydel [43], and we show that this alternative definition is equivalent to Definition 4.8. In this definition, the integro-differential operator is evaluated using the actual solution.

Definition 4.10

A bounded function \(g \in C(\overline{\mathcal{U}}_{T})\) is a viscosity supersolution (subsolution) of (4.9) if for any bounded test function \(\psi \in C^{1,2}(\overline{\mathcal{U}}_{T})\) such that \((t_{0},x_{0})\in {\mathcal{U}}_{T}\) is a global minimum (maximum) point of \(g-\psi \) with \(g(t_{0},x_{0})=\psi (t_{0},x_{0})\), we have

(resp. \(\leq 1\)), where

A bounded function \(g\) is a viscosity solution of (4.9) if it is both a viscosity subsolution and a viscosity supersolution of (4.9).

Proposition 4.11

Definitions 4.8 and 4.10 of viscosity solutions are equivalent.

Proof

See Appendix C. □

With Theorem 4.9, we immediately obtain the following corollary corresponding to Step 4 in the proof of Theorem 4.1 in Sect. 4.1.

Corollary 4.12

The function \({\Lambda}^{M}\) is a viscosity solution of the PDE (4.14).

Given the results above, we formally define the functional \(\mathcal{I}_{\beta}(g)\) as

Under Condition 2.1, \(\mathcal{I}_{\beta}(g)\) is well defined for any bounded function \(g\). We observe that for a sufficiently large \(M\) (given in (4.11) in Lemma 4.3), we have

Thus we can rewrite the HJB PIDE (4.9) as the equivalent parabolic PDE (4.14), as stated in Step 3 of Sect. 4.1. We provide the following result on \(\mathcal{I}_{\beta}\), which is used when we prove the uniqueness and existence of a classical solution of the PDE (4.14). Its proof is provided in Appendix C.

Lemma 4.13

The functional \(\mathcal{I}_{\beta}({\Lambda}^{M})(t,x)\) is bounded and Lipschitz-continuous in \(x\) on \(\overline{\mathcal{U}}_{T}\); therefore, it is Hölder-continuous in \(x\) with an exponent \(0<\iota <1\).
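
The step from Lipschitz to Hölder continuity in Lemma 4.13 relies only on \(x\) ranging over the bounded interval \([0,1]\). As a brief sketch, write \(L\) for a Lipschitz constant of \(\mathcal{I}_{\beta}({\Lambda}^{M})(t,\cdot )\) (the symbol \(L\) is introduced here for illustration only). Then for any \(x,y\in [0,1]\) and any \(\iota \in (0,1)\), since \(|x-y|\le 1\),

$$\begin{aligned} \big|\mathcal{I}_{\beta}({\Lambda}^{M})(t,x) - \mathcal{I}_{\beta}({\Lambda}^{M})(t,y)\big| \le L|x-y| = L|x-y|^{1-\iota}|x-y|^{\iota} \le L|x-y|^{\iota}. \end{aligned}$$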

As described in Step 5, we can use the comparison result in Amadori [1, Theorem 2] for the degenerate parabolic PDE to show the uniqueness of the solution of the PDE (4.14).

Theorem 4.14

Let \(u\) and \(v\) be a bounded continuous subsolution and a bounded lower semicontinuous supersolution, respectively, of (4.14), subject to the terminal condition \(u(T,x) = v(T,x) = 1\) for \(x\in [0,1]\). Then \(u \le v\) on \({\mathcal{U}}_{T}\).

By virtue of Proposition 2.5 (the boundaries of the filter process are unattainable), Proposition 4.2 (boundedness of \(\Lambda ^{M}\)) and Lemma 4.4 (Lipschitz and growth properties for the coefficients of the PDE), it is straightforward to check that [1, Assumptions 1 and 2] are satisfied in our case.

Corollary 4.15

The value function \({\Lambda}^{M}\) is the unique viscosity solution of the parabolic PDE (4.14) subject to the terminal condition \({\Lambda}^{M}(T,x)=1\) for \(x\in [0,1]\).
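
The uniqueness claim follows from the comparison result in Theorem 4.14 applied twice. As a sketch, let \(g_{1}\) and \(g_{2}\) be two bounded continuous viscosity solutions of (4.14) with terminal value 1 (the names \(g_{1}\), \(g_{2}\) are hypothetical and used only here). Each is both a subsolution and a supersolution, and a continuous function satisfies the regularity required of both \(u\) and \(v\) in Theorem 4.14, so that

$$\begin{aligned} g_{1} \le g_{2} \quad \text{and} \quad g_{2} \le g_{1} \quad \text{on } {\mathcal{U}}_{T}, \qquad \text{hence} \quad g_{1} = g_{2} \quad \text{on } {\mathcal{U}}_{T}. \end{aligned}$$

Existence of such a solution is provided by Corollary 4.12.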

Following Step 6, it remains to establish the existence of a classical solution to the PDE (4.14). We propose the following theorem, whose proof is provided in Appendix B.

Theorem 4.16

The PDE (4.14) admits a classical solution \(g\in C^{1,2}(\mathcal{U}_{T})\) subject to the terminal condition \(g(T,x) = 1\) for \(x\in [0,1]\).

5 Conclusion

We have established the first duality approach to an optimal investment–consumption problem with partial information and mixed observations. Interestingly, the inclusion of alternative data places our problem in the family of incomplete markets, and our dual problem is an optimisation problem for a set of equivalent local martingale measures. We comprehensively demonstrate its application in a bull–bear economy by drawing on expert opinions as a complementary source of observation. The analytically tractable results for the power utility function show that the optimal investment and consumption policies are determined from the solution of a PIDE, which takes into account the effect of alternative observations.

References

Amadori, A.L.: Uniqueness and comparison properties of the viscosity solution to some singular HJB equations. Nonlinear Differ. Equ. Appl. 14, 391–409 (2007)

Amari, S.I.: Differential-Geometrical Methods in Statistics. Springer, Berlin (2012)

Bain, A., Crisan, D.: Fundamentals of Stochastic Filtering. Springer, Berlin (2009)

Barles, G., Imbert, C.: Second-order elliptic integro-differential equations: viscosity solutions’ theory revisited. Ann. Inst. Henri Poincaré, Anal. Non Linéaire 25, 567–585 (2008)

Bäuerle, N., Rieder, U.: Portfolio optimization with Markov-modulated stock prices and interest rates. IEEE Trans. Autom. Control 49, 442–447 (2004)

Bäuerle, N., Rieder, U.: Portfolio optimization with jumps and unobservable intensity process. Math. Finance 17, 205–224 (2007)

Bayraktar, E., Kardaras, C., Xing, H.: Valuation equations for stochastic volatility models. SIAM J. Financ. Math. 3, 351–373 (2012)

Brémaud, P.: Point Processes and Queues, Martingale Dynamics. Springer, New York (1980)

Callegaro, G., Ceci, C., Ferrari, G.: Optimal reduction of public debt under partial observation of the economic growth. Finance Stoch. 24, 1083–1132 (2020)

Ceci, C.: Risk minimizing hedging for a partially observed high frequency data model. Stoch. Int. J. Probab. Stoch. Process. 78, 13–31 (2006)

Ceci, C., Colaneri, K.: Nonlinear filtering for jump diffusion observations. Adv. Appl. Probab. 44, 678–701 (2012)

Chen, K., Jeon, J., Wong, H.Y.: Optimal retirement under partial information. Math. Oper. Res. 47, 1802–1832 (2022)

Cont, R., Tankov, P.: Financial Modelling with Jump Processes. Chapman & Hall, London (2003)

Cvitanić, J., Karatzas, I.: Convex duality in constrained portfolio optimization. Ann. Appl. Probab. 2, 767–818 (1992)

Davis, M., Guo, X., Wu, G.: Impulse control of multidimensional jump diffusions. SIAM J. Control Optim. 48, 5276–5293 (2010)

Davis, M., Lleo, S.: Jump-diffusion risk-sensitive asset management II: jump-diffusion factor model. SIAM J. Control Optim. 51, 1441–1480 (2013)

Davis, M., Lleo, S.: Debiased expert forecasts in continuous-time asset allocation. J. Bank. Finance 113, 105759 (2020)

Davis, M., Lleo, S.: Risk-sensitive benchmarked asset management with expert forecasts. Math. Finance 31, 1162–1189 (2021)

Davis, M., Lleo, S.: Jump-diffusion risk-sensitive benchmarked asset management with traditional and alternative data. Ann. Oper. Res. 336, 661–689 (2024)

Elliott, R.J., van der Hoek, J.: An application of hidden Markov models to asset allocation problems. Finance Stoch. 1, 229–238 (1997)

Federico, S., Gassiat, P., Gozzi, F.: Utility maximization with current utility on the wealth: regularity of solutions to the HJB equation. Finance Stoch. 19, 415–448 (2015)

Fleming, W.H., Rishel, R.W.: Deterministic and Stochastic Optimal Control. Springer, Berlin (2012)

Fouque, J.P., Papanicolaou, A., Sircar, R.: Filtering and portfolio optimization with stochastic unobserved drift in asset returns. Commun. Math. Sci. 13, 935–953 (2015)

Frey, R., Gabih, A., Wunderlich, R.: Portfolio optimization under partial information with expert opinions. Int. J. Theor. Appl. Finance 15, 1250009 (2012)

Honda, T.: Optimal portfolio choice for unobservable and regime-switching mean returns. J. Econ. Dyn. Control 28, 45–78 (2003)

Ishikawa, Y.: Stochastic Calculus of Variations for Jump Processes. de Gruyter, Berlin (2023)

Karatzas, I., Lehoczky, J.P., Shreve, S.E., Xu, G.L.: Martingale and duality methods for utility maximization in an incomplete market. SIAM J. Control Optim. 29, 702–730 (1991)

Karatzas, I., Zhao, X.: Bayesian adaptive portfolio optimization. In: Jouini, É., et al. (eds.) Handbooks in Mathematical Finance: Option Pricing, Interest Rates and Risk Management, pp. 632–669. Cambridge University Press, Cambridge (2001)

Komatsu, T.: Markov processes associated with certain integro-differential operators. Osaka J. Math. 10, 271–303 (1973)

Kramkov, D., Schachermayer, W.: The asymptotic elasticity of utility functions and optimal investment in incomplete markets. Ann. Appl. Probab. 9, 904–950 (1999)

Kunita, H.: Stochastic differential equations based on Lévy processes and stochastic flows of diffeomorphisms. In: Rao, M.M. (ed.) Real and Stochastic Analysis: New Perspectives, pp. 305–373. Birkhäuser, Boston (2004)

Ladyženskaja, O.A., Solonnikov, V.A., Ural’tseva, N.N.: Linear and Quasi-Linear Equations of Parabolic Type. Am. Math. Soc., Providence (1988)

Lakner, P.: Utility maximization with partial information. Stoch. Process. Appl. 56, 247–273 (1995)

Lakner, P.: Optimal trading strategy for an investor: the case of partial information. Stoch. Process. Appl. 76, 77–97 (1998)

Merton, R.C.: Optimum consumption and portfolio rules in a continuous-time model. J. Econ. Theory 3, 373–413 (1971)

Pham, H.: Optimal stopping of controlled jump diffusion processes: a viscosity solution approach. J. Math. Syst. Estim. Control 8, 127–130 (1998)

Pham, H., Quenez, M.C.: Optimal portfolio in partially observed stochastic volatility models. Ann. Appl. Probab. 11, 210–238 (2001)

Putschögl, W., Sass, J.: Optimal consumption and investment under partial information. Decis. Econ. Finance 31, 137–170 (2008)

Rieder, U., Bäuerle, N.: Portfolio optimization with unobservable Markov-modulated drift process. J. Appl. Probab. 42, 362–378 (2005)

Sass, J., Haussmann, U.G.: Optimizing the terminal wealth under partial information: the drift process as a continuous time Markov chain. Finance Stoch. 8, 553–577 (2004)

Sass, J., Westphal, D., Wunderlich, R.: Expert opinions and logarithmic utility maximization for multivariate stock returns with Gaussian drift. Int. J. Theor. Appl. Finance 20, 1750022 (2017)

Sethi, S.P., Zhang, Q.: Hierarchical Decision Making in Stochastic Manufacturing Systems. Springer, Berlin (2012)

Seydel, R.C.: Existence and uniqueness of viscosity solutions for QVI associated with impulse control of jump-diffusions. Stoch. Process. Appl. 119, 3719–3748 (2009)

Sotomayor, L.R., Cadenillas, A.: Explicit solutions of consumption-investment problems in financial markets with regime switching. Math. Finance 19, 251–279 (2009)

Weron, R., Bierbrauer, M., Trück, S.: Modeling electricity prices: jump diffusion and regime switching. Phys. A, Stat. Mech. Appl. 336, 39–48 (2004)

Xi, F.: Asymptotic properties of jump-diffusion processes with state-dependent switching. Stoch. Process. Appl. 119, 2198–2221 (2009)

Xi, F., Zhu, C.: On Feller and strong Feller properties and exponential ergodicity of regime-switching jump diffusion processes with countable regimes. SIAM J. Control Optim. 55, 1789–1818 (2017)

Xi, F., Zhu, C.: On the martingale problem and Feller and strong Feller properties for weakly coupled Lévy type operators. Stoch. Process. Appl. 128, 4277–4308 (2018)

Yang, Z., Koo, H.K.: Optimal consumption and portfolio selection with early retirement option. Math. Oper. Res. 43, 1378–1404 (2018)

Yin, G., Zhou, X.Y.: Markowitz’s mean–variance portfolio selection with regime switching: from discrete-time models to their continuous-time limits. IEEE Trans. Autom. Control 49, 349–360 (2004)

Yin, G., Zhu, C.: Hybrid Switching Diffusions: Properties and Applications. Springer, Berlin (2009)

Zhou, X.Y., Yin, G.: Markowitz’s mean-variance portfolio selection with regime switching: a continuous-time model. SIAM J. Control Optim. 42, 1466–1482 (2003)

Zhu, C., Yin, G., Baran, N.A.: Feynman–Kac formulas for regime-switching jump diffusions and their applications. Stoch. Int. J. Probab. Stoch. Process. 87, 1000–1032 (2015)

Žitković, G.: Dynamic programming for controlled Markov families: abstractly and over martingale measures. SIAM J. Control Optim. 52, 1597–1621 (2014)

Acknowledgements

The authors would like to thank the Associate Editor and two anonymous referees for their insightful comments and suggestions, which substantially improved the paper.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

The work described in this paper was substantially supported by grants from the Research Grants Council of the Hong Kong Special Administrative Region, China (Project No. GRF15305422, RMG8601495 and GRF14308422). K. Chen was also financially supported by a research grant from the Hong Kong Polytechnic University (Project No. PolyU P0038553).

Appendices

Appendix A: Proof of Theorem 3.2

Without loss of generality, we prove the result for starting time \(t=0\) and arbitrary initial guess \(x\in [0,1]\). For ease of notation, we remove the subscripts \(t\) and \(x\).

Assumption A.1

The time-dependent utility function \(U_{i}(t,c)\in C^{2}([0,T]\times \mathbb{R}_{++})\) for \(i=1,2\) has the following properties for any given \(t\in [0,T]\):

i) The function \(U_{i}(t,c)\) is strictly concave with respect to \(c\) and

$$\begin{aligned} \lim _{c\rightarrow 0+} \partial _{c} U_{i}(t,c) = \infty ,\qquad \lim _{c\rightarrow \infty} \partial _{c} U_{i}(t,c) = 0. \end{aligned}$$

ii) There exist \(c_{0}>0\), \(\zeta \in (0,1)\) and \(\iota >1\) such that

$$ \zeta \partial _{c}U_{i}(t,c) \ge \partial _{c} U_{i}(t, \iota c) \qquad \text{for }c>c_{0}. $$

iii) There exist positive constants \(K\) and \(k_{0}\) such that

$$\begin{aligned} \limsup _{c\rightarrow \infty} \max _{t \in [0,T]} \partial _{c} U_{i}(t,c) c^{k_{0}} \le K. \end{aligned}$$
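
As an illustration of properties i)–iii), consider a time-independent power utility \(U(t,c) = c^{1-\gamma}/(1-\gamma )\) with \(\gamma >0\), \(\gamma \ne 1\). This parametrisation and the symbol \(\gamma \) are assumptions made for this sketch and need not coincide with the specification used in the main text. Then

$$\begin{aligned} \partial _{c} U(t,c) = c^{-\gamma}, \qquad \partial _{cc} U(t,c) = -\gamma c^{-\gamma -1} < 0, \end{aligned}$$

so that \(U\) is strictly concave in \(c\), with \(\partial _{c} U(t,c)\rightarrow \infty \) as \(c\rightarrow 0+\) and \(\partial _{c} U(t,c)\rightarrow 0\) as \(c\rightarrow \infty \), which gives i). For ii), any \(\iota >1\) and any \(c_{0}>0\) work with \(\zeta = \iota ^{-\gamma} \in (0,1)\), because

$$\begin{aligned} \partial _{c} U(t,\iota c) = \iota ^{-\gamma} c^{-\gamma} = \zeta \partial _{c} U(t,c). \end{aligned}$$

For iii), taking \(k_{0} = \gamma \) gives \(\partial _{c} U(t,c) c^{k_{0}} = 1\) for all \(c>0\), so the bound holds with \(K = 1\).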