Abstract

Gabion weirs are environmentally friendly structures widely used in irrigation and drainage networks. Their hydraulic performance differs fundamentally from that of solid weirs because of their porosity and the resulting through-flow discharge. This paper investigates the reliability and suitability of several machine learning models for estimating the hydraulic performance of gabion weirs. Three boosting ensemble models (Gradient Boosting, XGBoost, and CatBoost) are compared with the well-known Random Forest and a Stacked Regression model with respect to their accuracy in predicting the discharge coefficient and the through-flow discharge ratio of gabion weirs in free-flow conditions. Bayesian optimization is used to tune the model hyper-parameters automatically, and recursive feature elimination is performed to find the optimum combination of features for each model. Results indicate that the CatBoost model outperformed the other models in estimating the through-flow discharge ratio (Qin/Qt) with R2 = 0.982, while the XGBoost and CatBoost models performed similarly in estimating the discharge coefficient (Cd), with R2 = 0.994 for CatBoost and R2 = 0.992 for XGBoost. The weakest results were produced by the Decision Tree regressor, with R2 = 0.821 and 0.865 for estimation of Cd and Qin/Qt, respectively.

Introduction

Gabion weirs are porous structures made of boulders, with minimal construction cost and few negative environmental effects (Yue et al. 2021; Zhang et al. 2019a, b). Generally, these structures benefit from high stability, durability, and proper hydraulic performance (Zhang et al. 2019a, b; Fang et al. 2021; Liu et al. 2022). Besides, physical particles such as sediments, as well as chemical substances, can pass through the porous body of these structures, which reduces sedimentation and increases flow aeration by increasing the downstream flow turbulence (Mohamed 2010; Fathi-moghaddam et al. 2018; Rahmanshahi and Bejestan 2020). The hydraulic performance of porous and solid broad-crested weirs has been studied experimentally (Azimi et al. 2013; Wüthrich and Chanson 2014; Zhang and Chanson 2016; Pirzad et al. 2021; Salmasi et al. 2021) and numerically (Jiang et al. 2018; Safarzadeh and Mohajeri 2018; Nourani et al. 2021; Yin et al. 2022a, b).

Even though solid and gabion weirs have similar geometrical shapes, the presence of the through flow strongly affects their hydraulic performance (Shen et al. 2017; Chen et al. 2022). The relative diameter of the filling particles is a dominant factor controlling their hydraulic performance because it affects the through-flow discharge (Fathi-moghaddam et al. 2018). As a result, some researchers have concentrated on investigating how through flow and overflow interact under various submerged and free-flow conditions, and some (Fathi-moghaddam et al. 2018; Shariq et al. 2020) have offered formulae to calculate the through-flow discharge. The effect of the geometrical shape of broad-crested weirs has also been studied extensively. Sargison and Percy (2009) state that the discharge coefficient of solid weirs decreases as the upstream slope increases. Madadi et al. (2014) experimentally investigated the effect of the upstream slope on the discharge coefficient of broad-crested weirs. They concluded that increasing the slope of the upstream ramp enlarges the separation zone, which reduces the discharge coefficient. According to Fathi-moghaddam et al. (2018), the material size significantly affects the weir behaviour. For larger filling particles, decreasing the slope of the side ramps increases the upstream water head and subsequently lowers the discharge coefficient of gabion weirs, whereas, for smaller filling particles, a downstream slope enhances weir performance.

The use of soft computing techniques in hydraulic engineering has expanded in recent years to cover a number of problems, including estimation of sediment scour in streams (Pandey et al. 2020, 2022; Tao et al. 2021), the hydraulic performance of solid weirs such as piano-key weirs (Olyaie et al. 2019; Zounemat-Kermani and Mahdavi-Meymand 2019), side weirs (Dursun et al. 2012), labyrinth weirs (Norouzi et al. 2019; Wang et al. 2022), cylindrical weirs (Ismael et al. 2021), and solid broad-crested weirs (Hameed et al. 2021), and the hydraulic performance and energy dissipation of gabion weirs (Salmasi and Sattari 2017). Two of the most widely used methods seem to be support vector machines (Azimi et al. 2019) and feedforward neural networks (Khatibi et al. 2014). Using an automated evolutionary hyper-parameter optimization approach, Tao et al. (2021) compared the accuracy of their proposed XGBoost-Ga method with that of machine learning methods such as decision trees, support vector machines and linear regression, and concluded that the proposed XGBoost-Ga method is superior. Azimi et al. (2019) studied the ability of six different support vector machines to predict the discharge coefficient of side weirs. They suggested that the superior model is a function of the Froude number. Norouzi et al. (2019) compared the performance of the multilayer perceptron (MLP), support vector machines (SVM), and radial basis networks for estimation of the discharge coefficient of labyrinth weirs. They concluded that the MLP model outperforms the other models.

Because standalone models often suffer from overfitting, ensemble learning models have become widely used in different applications to reduce overfitting and increase accuracy by combining diverse base learners (Yin et al. 2022a, b; Dai et al. 2022). Osman et al. (2021) compared the performance of artificial neural networks (ANNs), SVMs and the XGBoost algorithm in terms of their accuracy in estimating the groundwater level in Malaysia. Their results indicated that XGBoost outperforms the other models for every combination of inputs considered. Wu et al. (2022) evaluated the performance of ANNs and XGBoost in terms of their accuracy in identifying leakages in water distribution networks. They concluded that XGBoost outperforms the ANN by 5.54% in estimating the leakage zone and by 2.7% in predicting the leakage level. Pham et al. (2021) evaluated the performance of different boosting methods, including Adaptive Boosting (AdaBoost), Boosted Generalized Linear Models (BGLM), Extreme Gradient Boosting (XGB) ensemble models, and the Deep Boosting (DB) model, in terms of their accuracy in estimating flood hazard susceptibility, and suggested that the DB model outperforms the other models by 2%. The more recent CatBoost ensemble model has also been used in some studies (Huang et al. 2019; Zhang et al. 2020; Guo et al. 2022); however, it still seems to be unfamiliar to most of the hydraulic community. Zhang et al. (2020) evaluated the accuracy of CatBoost, Random Forest, and the generalized regression neural network (GRNN) for estimation of daily evapotranspiration in arid regions and reported that CatBoost outperformed the other models. On the other hand, Guo et al. (2022) compared the accuracy of CatBoost, XGBoost, and neural networks with a genetic algorithm-based automated machine learning algorithm (Auto-ML) and reported that the Auto-ML model outperforms the other models in estimating waterlogging depth and location in urban areas.

So far, different machine learning models have been used as surrogate models for estimating the discharge coefficient of various solid weirs, yet only a few studies have focused on gabion weirs, and no study has considered the through flow of porous weirs, because practical difficulties in calculating and measuring it have resulted in a lack of reliable datasets. Besides, the application of ensemble models, and specifically of more recent and advanced models such as XGBoost and CatBoost, is limited to a few studies. Hence, this paper uses base models including SVM and Decision Trees, along with ensemble models: Random Forest as a bagging ensemble, a Stacking ensemble model, and three boosting ensembles, namely Gradient Boosting Decision Trees (GBDT), Extreme Gradient Boosting (XGB), and the more recent CatBoost model. Recursive feature elimination analysis is performed to find the optimal combination of features for each model. All models are then fine-tuned using Bayesian hyper-parameter optimization in order to improve their performance in estimating the discharge coefficient and the through-flow discharge of broad-crested gabion weirs.

Materials and method

Experimental data

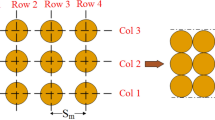

In this study, experimental data on broad-crested gabion weirs in free-flow conditions from Moradi and Fathi-Moghaddam (2014) and Salmasi et al. (2017) are used to provide sufficient data to train and test the models. The specifications of the experimental models are presented in Table 1 and Fig. 1. Experimental conditions include square weirs with 15 × 15 dimensions and different combinations of upstream and downstream slopes, filled with particles with median diameters of 5–31 mm and a porosity of 39–51%. The discharge coefficients for the abovementioned models are presented in Fig. 2.

To calculate the discharge ratio through the porous body of gabion weirs, the multivariate regression equation suggested by Fathi-moghaddam et al. (2018) is utilized as follows:

The through-flow ratio (Qin/Qt) calculated for the abovementioned data using Eq. (1) is presented in Fig. 3.

Dimensional analysis

In free-flow conditions, discharge and discharge coefficient could be related as in Eq. (2). Hence, the effects of other hydraulic factors (i.e., weir geometry and fluid characteristics) must be taken into account in the calculation of \(C_{d}\).

where g is the gravitational acceleration, H is the total upstream head (\(H = h + v^{2} /2g\)), h is the head over the weir, and v is the approach velocity.

For a PE weir, the discharge coefficient, \(C_{d}\), can be expressed by the following functional relationship:

in which f is a functional symbol, P is the weir height, ρ is water density, μ is water viscosity, and \(\sigma\) is surface tension.

Using the Π theorem of dimensional analysis (Barenblatt 1987) for the parameters in Eq. (2), the discharge coefficient for the free-flow condition can be expressed by the following dimensionless groups:

where Re is the Reynolds number; and W represents the Weber number.

Dataset preparation

In this paper, data with H lower than 4 cm over the weir are neglected to eliminate the effect of surface tension and hence of the Weber number. Values of H/Lc are also neglected, as they are equivalent to the H/P values. Consequently, the training dataset includes six input features, namely Re, H/P, dm/P, n, tan(a), and tan(b), all of which are standardized using their mean and standard deviation. The discharge coefficient data extracted from Moradi and Fathi-Moghaddam (2014) include 324 values for trapezoidal weirs, and those from Salmasi et al. (2017) include 44 values for rectangular weirs without upstream and downstream slopes. Hence, the whole dataset used to train and evaluate the models includes 368 data points, of which 70% are used for training and 30% are used to evaluate model performance. Correlation coefficients between the input and output features are presented in Fig. 4. It is clear that the H/P values are highly correlated with the Cd and Qin values. In addition, dm/P, porosity (n), and the upstream slope show a moderate correlation with the Qin values.
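The standardization and the 70/30 split described above can be sketched as follows. This is a minimal illustration using scikit-learn, not the authors' code: the arrays are synthetic placeholders for the 368 experimental points, the random seed is arbitrary, and fitting the scaler on the training portion only is an assumption, since the text does not say how the mean and standard deviation were computed.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic placeholder: 368 rows and 6 columns standing in for
# Re, H/P, dm/P, n, tan(a), tan(b). These are NOT the experimental data.
X = rng.uniform(size=(368, 6))
y = rng.uniform(size=368)  # stand-in for Cd or Qin/Qt

# 70% of the points for training, 30% held out for evaluation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, random_state=0
)

# Standardize each feature to zero mean and unit standard deviation.
# Assumption: statistics are estimated on the training portion only.
scaler = StandardScaler().fit(X_train)
X_train_std = scaler.transform(X_train)
X_test_std = scaler.transform(X_test)
```

Estimating the scaling statistics on the training portion avoids leaking information from the held-out points into the fitted models.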

Single and ensemble learning algorithms

Support vector machines (SVM)

SVMs (Cortes and Vapnik 1995) are supervised machine learning algorithms with applications in both classification and regression, and their training problem guarantees a global optimum solution. The core idea behind the SVM is to map the training samples to points in space so as to maximize the width of the gap between the two categories. New samples are then mapped into the same space and assigned to a category depending on which side of the gap they fall. Real-world problems are often more complicated and call for richer hypotheses than those offered by linear learning machines, which also have certain computational drawbacks. Kernels (Aizerman and Control 1964) such as the Radial Basis Function (RBF) (Azimi et al. 2019) and polynomial kernels are therefore useful for capturing nonlinear relationships in real-world data. Support vector regression (SVR) is a supervised learning technique trained with a loss function that penalizes overestimates and underestimates equally. Vapnik's ε-insensitive approach constructs a flexible tube of small radius symmetrically around the estimated function and ignores errors whose absolute value is smaller than a predefined threshold ε. In this way, points outside the tube are penalized, whereas points inside the tube, whether above or below the function, are not. One of the main advantages of SVR is that its computational cost is independent of the dimensionality of the input space. Additionally, it has a strong generalization ability and good prediction accuracy (Awad and Khanna 2015). This research evaluates RBF and polynomial kernels in terms of their accuracy in predicting the discharge coefficient and the through-flow discharge.
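As a rough illustration of the two kernels compared in this study, the sketch below fits ε-insensitive SVR models with an RBF kernel and a third-degree polynomial kernel using scikit-learn. The data are synthetic, and the values of `C`, `epsilon` and `coef0` are arbitrary assumptions rather than the tuned hyper-parameters of the paper.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

rng = np.random.default_rng(0)

# Synthetic smooth target in two inputs (illustrative only).
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = np.sin(2.0 * X[:, 0]) + 0.5 * X[:, 1]
X_train, y_train = X[:150], y[:150]
X_test, y_test = X[150:], y[150:]

# epsilon sets the half-width of the insensitive tube: residuals smaller
# than epsilon do not contribute to the loss.
models = {
    "SVR (RBF kernel)": make_pipeline(
        StandardScaler(), SVR(kernel="rbf", C=10.0, epsilon=0.01)
    ),
    "SVP (polynomial kernel, degree 3)": make_pipeline(
        StandardScaler(),
        SVR(kernel="poly", degree=3, coef0=1.0, C=10.0, epsilon=0.01),
    ),
}

# R2 on the held-out points for each kernel.
scores = {
    name: model.fit(X_train, y_train).score(X_test, y_test)
    for name, model in models.items()
}
for name, r2 in scores.items():
    print(f"{name}: R2 = {r2:.3f}")
```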

Decision Trees (DT)

Decision trees (Breiman et al. 1983) are a class of supervised learning techniques that can act as universal function approximators, although this universality is difficult to achieve with their basic structure. They can be applied to both classification and regression. A decision tree (DT) is a collection of branches that are linked by decision nodes and end in leaf nodes. Each branch represents one outcome of the test applied at a decision node, and the leaf nodes hold the model output, which may be a label (classification) or a continuous value (regression). Decision nodes make up a large portion of the DT's structure. A fundamental aim when representing the relationship between input and output is to use the smallest tree that does so correctly, in order to minimize overfitting. An ensemble DT model (EDT) is often employed, in which a number of trees are combined into a final model either in parallel (i.e., bootstrap aggregation or bagging) or sequentially (i.e., boosting) (Jain et al. 2020).

Random forest regression (RFR)

Random forest regression (RFR) is a machine learning regression technique built on bagging and the random subspace method, which together make it a stable learner. A number of learner trees are generated and then merged to obtain an overall prediction. To train the trees, bootstrap samples are generated from the original training data. Each bootstrap sample (Db) is created by randomly picking n instances from the original training data (D), which comprises N instances. Instances are drawn with replacement, so a bootstrap sample may contain duplicates and holds roughly two-thirds of the distinct instances in D. Using the vector of input data, x, K distinct regression trees are constructed, one for each bootstrap sample. Individual regression trees are characterized by low bias and high variance. Random forest predictions are generated by averaging the predictions of the K regression trees, hk(x) (Ganesh et al. 2021), as follows:
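Read literally, the averaging described above corresponds to \(\hat{y}(x) = \frac{1}{K}\sum_{k=1}^{K} h_{k}(x)\); this form is inferred from the sentence itself, not copied from the source equation. The short sketch below, on synthetic data with an arbitrary K = 50, checks that a scikit-learn random forest prediction coincides with the mean of its individual tree predictions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)

# Synthetic regression data (illustrative only).
X = rng.uniform(size=(200, 3))
y = X[:, 0] + 2.0 * X[:, 1] ** 2 + 0.05 * rng.normal(size=200)

# K = 50 trees, each trained on a bootstrap sample of the training data.
forest = RandomForestRegressor(
    n_estimators=50, bootstrap=True, random_state=0
).fit(X, y)

# Average the K individual tree predictions h_k(x) by hand.
per_tree = np.stack([tree.predict(X) for tree in forest.estimators_])
manual_average = per_tree.mean(axis=0)

# The forest's own prediction is the same average.
forest_prediction = forest.predict(X)
print(np.allclose(manual_average, forest_prediction))
```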

Stacked regression

Stacked regression, first presented by Breiman (1996), is a technique for combining many predictors linearly to enhance prediction accuracy. The algorithm consists of two steps: (1) specifying a list of base learners and training each on the dataset, and (2) using the predictions of the base learners as input to train a meta-learner, which then predicts new values. The stacked regression structure defined in this study includes support vector machines with polynomial and RBF kernels along with Ridge regression as base (level-1) models and the Decision Tree Regressor as the meta-model, as depicted in Fig. 5.
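The two-step structure above can be sketched with scikit-learn's `StackingRegressor`. Note the swap: the paper reports using Mlxtend for stacking, so this is an analogous construction rather than the authors' implementation. The data are synthetic, and all hyper-parameter values (including `max_depth=4` and `cv=5`) are arbitrary assumptions.

```python
import numpy as np
from sklearn.ensemble import StackingRegressor
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
X = rng.uniform(size=(200, 6))  # synthetic stand-in for the six features
y = 1.2 * X[:, 1] - 0.4 * X[:, 2] + 0.02 * rng.normal(size=200)

# Step 1: base learners (two SVR kernels and Ridge regression).
base_learners = [
    ("svr_rbf", make_pipeline(StandardScaler(), SVR(kernel="rbf"))),
    ("svr_poly", make_pipeline(StandardScaler(), SVR(kernel="poly", degree=3))),
    ("ridge", Ridge(alpha=1.0)),
]

# Step 2: a decision tree meta-model trained on the out-of-fold
# predictions of the base learners.
stack = StackingRegressor(
    estimators=base_learners,
    final_estimator=DecisionTreeRegressor(max_depth=4, random_state=0),
    cv=5,
)
stack.fit(X, y)

# Inputs seen by the meta-model: one column per base learner.
meta_features = stack.transform(X)
predictions = stack.predict(X)
```

Training the meta-model on out-of-fold predictions (the `cv` argument) keeps it from simply memorizing base learners that have already seen the same points.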

Gradient boosting

Gradient Boosted Decision Trees (GBDT), a supervised learning technique introduced by Friedman (2001), start with a set of {xi, yi} values, where xi are the input values and yi are the corresponding target values. The technique iteratively creates a sequence of functions F0, F1, …, Ft, …, Fm, each of which is evaluated with a loss function L(yi, Ft) that measures how well Ft estimates yi. To improve the estimates, a new function Ft+1 = Ft + ht+1(x) is created, where ht+1 is as follows:

where H is the collection of candidate Decision Trees being considered for inclusion in the ensemble. Hence, the expected loss function could be defined as (Hancock and Khoshgoftaar 2020):
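The iterative update Ft+1 = Ft + ht+1(x) described in this subsection can be illustrated with a small from-scratch sketch. It assumes a squared-error loss, for which the negative gradient is simply the current residual, and adds a learning rate (shrinkage) that is not part of the description above. The function names and all parameter values are hypothetical, and this is not the implementation used in the paper.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_gradient_boosting(X, y, n_rounds=50, learning_rate=0.1, max_depth=2):
    """Fit an additive model F_m = F_0 + lr * sum_t h_t under squared loss."""
    f0 = float(np.mean(y))              # F_0: constant initial model
    prediction = np.full(len(y), f0)
    trees, history = [], []
    for _ in range(n_rounds):
        # Negative gradient of 0.5 * (y - F)^2 with respect to F.
        residual = y - prediction
        # h_{t+1}: the candidate tree that best fits the residuals.
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residual)
        # F_{t+1} = F_t + learning_rate * h_{t+1}
        prediction = prediction + learning_rate * tree.predict(X)
        trees.append(tree)
        history.append(float(np.mean((y - prediction) ** 2)))
    return f0, trees, history


def predict_gradient_boosting(X, f0, trees, learning_rate=0.1):
    """Evaluate the fitted additive model on new inputs."""
    prediction = np.full(X.shape[0], f0)
    for tree in trees:
        prediction = prediction + learning_rate * tree.predict(X)
    return prediction


rng = np.random.default_rng(0)
X = rng.uniform(size=(200, 2))
y = np.sin(4.0 * X[:, 0]) + X[:, 1]     # synthetic target

f0, trees, history = fit_gradient_boosting(X, y)
y_hat = predict_gradient_boosting(X, f0, trees)
print(f"training MSE after 1 round: {history[0]:.4f}, after 50: {history[-1]:.4f}")
```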

XGBoost

First introduced by Chen and Guestrin (2016), extreme gradient boosting (XGB) is a variant of gradient boosting decision trees in which each base learner learns from the previous one to reduce its error. In general, the XGBoost technique builds a more robust aggregated model by combining many base learners (decision trees). The weighted outputs of the trees are combined to approximate the final outcome. For a dataset with m features, Eq. (8) is used to predict the outcome:

Incorrectly defining the parameters of the decision trees, such as their depth or the number of iterations, may lead to overfitting. The strength of the XGBoost algorithm lies in its regularisation capabilities: it penalizes model complexity by including L1 and L2 regularisation terms. Optimizing an XGBoost model requires tuning many hyper-parameters. As XGBoost is both an ensemble and a gradient boosting technique, its hyper-parameters may be divided into four categories: ensemble hyper-parameters, tree hyper-parameters, sub-sampling hyper-parameters, and regularisation hyper-parameters (Tao et al. 2021).

CatBoost

CatBoost, proposed by Prokhorenkova et al. (2018), is an improved version of GBDT. First, CatBoost handles high-cardinality categorical data better than Gradient Boosting, while employing one-hot encoding for low-cardinality categories. Second, CatBoost benefits from the Ordered Boosting method. Taking D as the set of all data available for training the GBDT model, and bearing in mind that the decision tree ht+1 is the tree that minimizes the loss function (L), Ordered Boosting can be described as computing ht+1 with the same examples that are used to compute the Ordered Target Statistics. Oblivious Decision Trees (ODTs) are a crucial component of CatBoost's method for constructing Decision Trees: CatBoost builds a collection of ODTs. ODTs are complete binary trees, hence a tree with n levels has \(2^{n}\) leaf nodes. In addition, all non-leaf nodes at the same level of an ODT use the same splitting criterion. CatBoost also extends GBDT so that it can account for feature interactions and pick the most effective feature combinations during training (Prokhorenkova et al. 2018; Hancock and Khoshgoftaar 2020). CatBoost is highly sensitive to its hyper-parameter settings, so proper tuning of the hyper-parameters is essential.
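To make the oblivious-tree idea concrete, the sketch below evaluates a single oblivious decision tree in which every level shares one (feature, threshold) test, so the outcomes of the n tests form an n-bit index into \(2^{n}\) leaf values. The function name, the split values and the leaf values are hypothetical; this is not CatBoost's internal implementation.

```python
import numpy as np


def oblivious_tree_predict(X, features, thresholds, leaf_values):
    """Evaluate one oblivious decision tree.

    Level k applies the same test X[:, features[k]] > thresholds[k] to
    every sample; the sequence of test outcomes is read as a binary
    number that selects one of the 2**depth leaf values.
    """
    X = np.asarray(X, dtype=float)
    depth = len(features)
    if len(thresholds) != depth or len(leaf_values) != 2 ** depth:
        raise ValueError("need one threshold per level and 2**depth leaves")
    leaf_index = np.zeros(X.shape[0], dtype=int)
    for level in range(depth):
        bit = (X[:, features[level]] > thresholds[level]).astype(int)
        leaf_index = (leaf_index << 1) | bit
    return np.asarray(leaf_values, dtype=float)[leaf_index]


# A depth-2 tree: level 0 tests feature 0 > 0.5, level 1 tests feature 1 > 0.3.
X_demo = np.array([[0.2, 0.1], [0.2, 0.9], [0.8, 0.1], [0.8, 0.9]])
out = oblivious_tree_predict(
    X_demo,
    features=[0, 1],
    thresholds=[0.5, 0.3],
    leaf_values=[10.0, 20.0, 30.0, 40.0],
)
print(out)  # [10. 20. 30. 40.]
```

Because the leaf is found by a fixed sequence of comparisons rather than by walking a pointer structure, such trees are cheap to evaluate in bulk.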

Hyperparameter optimization

Hyper-parameter optimization is an important task in automated machine learning (AutoML). AutoML automates complex operations, including model parameter optimization, without the need for human expertise and so plays a crucial role in improving the performance of machine learning models (Tao et al. 2021). Bayesian optimization is a powerful method for finding the extreme values of functions that are computationally expensive to evaluate (Brochu et al. 2016). It is also applicable to functions that are nonconvex or whose derivatives are difficult to calculate or analyze. Bayesian optimization combines a prior distribution over the function f(x) with the sampled data to obtain a posterior, and uses this knowledge to determine where f(x) is minimized with respect to a given criterion. This criterion is the acquisition function u, which is used to choose the next sample point so as to maximize the expected utility. To reduce the number of samples required while improving accuracy, the search must balance regions with promising predicted values (exploitation) against regions of high uncertainty (exploration) (Wu et al. 2019). The prior distribution of f, on which Bayesian optimization mainly depends, is not always determined by objective criteria and may be partially or entirely determined by subjective judgement. The Gaussian process is widely regarded as a suitable prior for Bayesian optimization; it is used to fit the data and update the posterior distribution because it is flexible and easy to use (Wu et al. 2019).
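The loop described above (fit a Gaussian process surrogate, maximize an acquisition function, sample, repeat) can be sketched in one dimension as follows. Several things here are assumptions made for illustration: the quadratic `objective` is a hypothetical stand-in for a validation error as a function of one hyper-parameter, expected improvement is used as the acquisition function u (the text does not name the one used), and the candidate grid, the Matérn kernel and the iteration count are arbitrary.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern


def objective(x):
    # Hypothetical stand-in for "validation error vs. one hyper-parameter".
    return (x - 0.3) ** 2


def expected_improvement(candidates, gp, best_y, xi=0.01):
    # Acquisition function for minimization: large where the surrogate
    # predicts low values (exploitation) or is uncertain (exploration).
    mu, sigma = gp.predict(candidates, return_std=True)
    sigma = np.maximum(sigma, 1e-9)
    improvement = best_y - mu - xi
    z = improvement / sigma
    return improvement * norm.cdf(z) + sigma * norm.pdf(z)


rng = np.random.default_rng(0)
X_obs = rng.uniform(0.0, 1.0, size=(3, 1))   # initial random samples
y_obs = objective(X_obs).ravel()
grid = np.linspace(0.0, 1.0, 201).reshape(-1, 1)

for _ in range(12):
    # Fit the Gaussian process surrogate to all samples seen so far.
    gp = GaussianProcessRegressor(
        kernel=Matern(nu=2.5), alpha=1e-6, normalize_y=True, random_state=0
    ).fit(X_obs, y_obs)
    # Sample next where the expected improvement is largest.
    ei = expected_improvement(grid, gp, y_obs.min())
    x_next = grid[np.argmax(ei)].reshape(1, 1)
    X_obs = np.vstack([X_obs, x_next])
    y_obs = np.append(y_obs, objective(x_next).ravel())

best_x = float(X_obs[np.argmin(y_obs), 0])
print(f"best sampled x = {best_x:.3f}")
```

In a real tuning run the objective would be a cross-validated model error and the search space would have one dimension per hyper-parameter.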

In this study, Bayesian optimization is utilized to automatically tune the hyper-parameters of all models to estimate the discharge coefficient and through flow discharge. Best models are then reconstructed and used to calculate accuracy metrics. Figure 6 visualizes the relationship between hyper-parameters and the obtained accuracy for the XGBoost regression model during the performed optimization process. The hyper-parameters obtained from the optimization process for each model are presented in Tables 2 and 3.

Model development and accuracy assessment

All models are developed and implemented using Scikit-learn (DT, AdaB, RF) (Pedregosa et al. 2011), Mlxtend (stacked regression) (Raschka 2018), the XGBoost library (Chen 2016–2022), and the CatBoost library (LLC 2017–2022). Three measures are used to evaluate the accuracy of the models: the mean absolute error, the mean squared error, and the coefficient of determination, all of which are frequently used in similar research for regression tasks (see, e.g., Azimi et al. 2019; Hameed et al. 2021).

Mean Absolute error (MAE):

Mean-squared error (MSE):

and the coefficient of determination (R2):

Here \(y_{i}\) is the target value from the experimental data, \(\widehat{y}_{i}\) is the model prediction, and \(\overline{y}\) is the mean of the target values. Figure 7 presents the general workflow of the current paper.
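For reference, the three measures can be computed as in the sketch below. It uses the standard definitions, with R2 taken as the coefficient of determination \(1 - \sum_{i}(y_{i} - \widehat{y}_{i})^{2} / \sum_{i}(y_{i} - \overline{y})^{2}\). That choice is an assumption: if the R2 reported in this paper is instead the squared Pearson correlation, the two can differ. The sample values are made up for the worked example.

```python
import numpy as np


def mean_absolute_error(y_true, y_pred):
    return float(np.mean(np.abs(y_true - y_pred)))


def mean_squared_error(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


def r2_score(y_true, y_pred):
    # Coefficient of determination: 1 - SS_res / SS_tot.
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)


# Made-up values for a worked example.
y_true = np.array([1.0, 2.0, 3.0, 4.0])
y_pred = np.array([1.1, 1.9, 3.2, 3.8])

mae = mean_absolute_error(y_true, y_pred)   # (0.1 + 0.1 + 0.2 + 0.2) / 4 = 0.15
mse = mean_squared_error(y_true, y_pred)    # (0.01 + 0.01 + 0.04 + 0.04) / 4 = 0.025
r2 = r2_score(y_true, y_pred)               # 1 - 0.1 / 5.0 = 0.98
```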

Results and discussion

Feature importance and recursive feature elimination

Considering the sensitivity of models to the input data and features, the relative importance of the input features for the different algorithms in estimating the discharge coefficient Cd and the through-flow discharge ratio Qin/Qt is presented in Figs. 8 and 9, respectively. Porosity (n), with a weak correlation of −0.26 with Cd, is more strongly correlated with Cd than the upstream and downstream slopes are, yet its relative importance is the lowest among all features for every regression model. Besides, the relative flow head (H/P) and the relative particle size (dm/P) have the highest relative importance for estimating Cd in all models except CatBoost, in which the importance of the downstream and upstream slopes is higher than that of dm/P. For the models trained to estimate Qin/Qt, the least important feature is the downstream slope tan(b), while the importance of porosity (n) increases, especially for the CatBoost and GBDT models. The most important feature remains the relative flow head (H/P). To investigate the effect of different feature combinations on model accuracy, a recursive feature elimination (RFE) test is performed for all models. In general, the models perform best when all features are included; the only exception is GBDT, which achieved higher accuracy with the four best features. Results of the RFE test for XGB are depicted in Fig. 10. The best models are then recreated and fitted on the training dataset, combining the results of the hyper-parameter optimization and the RFE test.
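A recursive feature elimination run of the kind described above can be sketched with scikit-learn's `RFE`. The data below are synthetic: the target is constructed to depend only on the columns labelled H/P and dm/P, purely so that the outcome of the elimination is easy to check, and this says nothing about the experimental dataset. The random forest estimator and the choice of keeping two features are also arbitrary assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import RFE

feature_names = ["Re", "H/P", "dm/P", "n", "tan(a)", "tan(b)"]

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 6))
# Synthetic target driven only by the columns labelled H/P and dm/P.
y = 3.0 * X[:, 1] + 2.0 * X[:, 2] + 0.1 * rng.normal(size=300)

# Repeatedly fit the model and drop the least important feature
# (step=1) until only two features remain.
selector = RFE(
    estimator=RandomForestRegressor(n_estimators=50, random_state=0),
    n_features_to_select=2,
    step=1,
).fit(X, y)

selected = [name for name, keep in zip(feature_names, selector.support_) if keep]
print(selected)
```

To compare feature subsets by accuracy, as done in the paper, the same loop can be repeated for each subset size and scored on held-out data.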

Estimation of the discharge coefficient (Cd)

Based on the optimization results, the best models were rebuilt and trained on the training dataset.

The accuracy of the single and ensemble models in predicting the discharge coefficient is presented in Table 4 and Fig. 11. The base DT model performs poorly in estimating the discharge coefficient. The standalone support vector machine with an RBF kernel (SVR) and the support vector model with a third-degree polynomial kernel (SVP) performed well, with R2 = 0.96 and 0.931, respectively. Among the ensemble models, the well-known Random Forest (RF) model, as a representative of bagging techniques, improved the accuracy of the base DT model by 9%, reaching R2 = 0.921 with RMSE and MSE equal to 0.0245 and 0.00171, respectively, which is still lower than that of the support vector machines. The stacking regression method, composed of SVMs with the DT as meta-model, shows a higher R2 = 0.964 with MAE = 0.175 and MSE = 0.00061, compared with the optimized GBDT model with R2 = 0.962, MAE = 0.161, and MSE = 0.0061. Consequently, the variance and the standard deviation of the residuals in the stacked model are higher than those of the GBDT model despite its higher R-squared value. XGB and CatBoost offer the highest accuracy among all models, with R2 = 0.974 and 0.982, respectively, with CatBoost outperforming XGB in all accuracy measures, by a margin of 0.8% in R2. Consequently, even though the XGB model outperformed all the other models, CatBoost outperformed XGB considerably, increasing the R2 value by 1.2% and reducing the MSE value by almost 31%.

Estimation of the through-flow discharge ratio (Qin/Qt)

The accuracy of the single and ensemble models in predicting the Qin/Qt ratio is presented in Table 5 and Fig. 12. The poorest results were obtained from the Decision Tree model with R2 = 0.865, followed by Random Forest with R2 = 0.947 and MAE = 0.0219. The SVP and SVR models achieved R2 = 0.968 and R2 = 0.976, respectively, while the Stacking regression ensemble reached R2 = 0.978, a slight improvement over the base SVR model. The GBDT model shows a significant improvement over these models, with R2 = 0.989, MAE = 0.0087 and MSE = 0.00012. The XGBoost and CatBoost algorithms improve model accuracy further, up to R2 = 0.992, with MAE = 0.0079 and 0.0071 and MSE = 0.000093 and 0.000094, respectively; their performance may be deemed almost comparable.

Residuals and feature importance analysis

Residuals are the differences between the observed values of the dependent variable (y) and the predicted values (ŷ). The residuals plot shows the residuals on the vertical axis against the dependent variable on the horizontal axis, which helps identify the regions of the target that are prone to error. The residuals of the different algorithms for estimation of the discharge coefficient Cd and the through-flow discharge ratio Qin/Qt are presented in Figs. 13 and 14, respectively. A comparison shows that the residuals of all models are distributed fairly randomly around the centreline, suggesting that the models have generalized well; the CatBoost model, however, shows almost zero residuals in the training phase, which could indicate overfitting. It is also worth noting that the majority of errors arise for Cd values close to or above 1, which correspond to the experiments with lower discharge and limited training data.

Model sensitivity analysis

Since sensitivity analysis is an important step in any ML project, this paper studies the sensitivity of the top two models to the elimination of individual features. At each step, one feature is eliminated and the models are trained on the remaining features. The resulting R2 scores are presented in Table 6. Results indicate that the features with the greatest effect on the results are H/P, the upstream slope and the downstream slope. It should be noted that eliminating the Reynolds number increased the accuracy of the CatBoost model to R2 = 0.994 for Qin/Qt, while it reduced the accuracy for Cd.
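The leave-one-feature-out procedure described above can be sketched as follows. scikit-learn's `GradientBoostingRegressor` is used here as a stand-in for the XGBoost and CatBoost models so that the example has no extra dependencies. The data are synthetic, with a target dominated by the column labelled H/P by construction, and the helper name `r2_without` is hypothetical.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import train_test_split

feature_names = ["Re", "H/P", "dm/P", "n", "tan(a)", "tan(b)"]

rng = np.random.default_rng(0)
X = rng.uniform(size=(368, 6))
# Synthetic target dominated by the H/P column (illustrative only).
y = 1.5 * X[:, 1] + 0.5 * X[:, 4] + 0.05 * rng.normal(size=368)

X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.30, random_state=0)


def r2_without(feature_index):
    """Train on all features except one and return R2 on the test split."""
    keep = [j for j in range(X.shape[1]) if j != feature_index]
    model = GradientBoostingRegressor(random_state=0).fit(X_tr[:, keep], y_tr)
    return model.score(X_te[:, keep], y_te)


# One retraining per eliminated feature.
scores = {name: r2_without(j) for j, name in enumerate(feature_names)}
for name, r2 in scores.items():
    print(f"without {name}: R2 = {r2:.3f}")

# The feature whose removal hurts most is the most influential one.
most_influential = min(scores, key=scores.get)
```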

Conclusion

The current study makes use of some well-known machine learning techniques, namely Support Vector Machines, Decision Trees (DT) and Random Forest (RF) models, as well as the stacking ensemble technique and three boosting ensemble models, Gradient Boosting Decision Trees (GBDT), Extreme Gradient Boosting (XGB), and the more recent CatBoost model, in order to evaluate the effectiveness of these models in estimating the discharge coefficient (Cd) and the through-flow discharge ratio (Qin/Qt) of broad-crested gabion weirs. A total of 368 data points were extracted from the literature and used to train the models and evaluate their accuracy. The Bayesian optimization framework and recursive feature elimination analysis are combined in order to improve the accuracy of the ML models. Results indicate that the weakest performance, with R2 = 0.82 and 0.865, was obtained using the DT model to estimate the discharge coefficient and the through-flow discharge, respectively. The use of the stacking model improved the accuracy of the base models to R2 = 0.9643 and 0.978 for estimation of Cd and Qin/Qt, respectively. The maximum accuracy for estimating the discharge coefficient was achieved by the CatBoost model with R2 = 0.982, MAE = 0.0126, and MSE = 0.00033. All boosting strategies produced accuracies of at least R2 = 0.962 for both Cd and Qin/Qt estimation. A sensitivity analysis of the top two models, XGBoost and CatBoost, identified a slight increase in model performance for estimation of the Qin/Qt ratio. In estimating the Qin/Qt values, CatBoost slightly outperformed the other models, including XGBoost, with R2 = 0.994 against 0.992 for the XGBoost model. Yet the error residuals obtained from XGBoost are slightly better distributed, indicating slightly better generalisation of that model.

Data availability

The data that support the findings of this study are available from the corresponding authors upon reasonable request.

References

Aizerman MAJA, Control R (1964) Theoretical foundations of the potential function method in pattern recognition learning. Autom Remote Control 25:821–837

Awad M, Khanna R (2015) Support vector regression. In: Efficient learning machines: theories, concepts, and applications for engineers and system designers. Apress, Berkeley, CA, pp 67–80

Azimi AH, Rajaratnam N, Zhu DZ (2013) Discharge characteristics of weirs of finite crest length with upstream and downstream ramps. J Irrig Drain Eng 139(1):75–83. https://doi.org/10.1061/(ASCE)IR.1943-4774.0000519

Azimi H, Bonakdari H, Ebtehaj I (2019) Design of radial basis function-based support vector regression in predicting the discharge coefficient of a side weir in a trapezoidal channel. Appl Water Sci 9(4):78. https://doi.org/10.1007/s13201-019-0961-5

Breiman L (1996) Stacked regressions. Mach Learn 24(1):49–64. https://doi.org/10.1007/BF00117832

Breiman L, Friedman JH, Olshen RA, Stone CJ (1983) Classification and regression trees

Brochu E, Cora V, de Freitas N (2016) A tutorial on bayesian optimization of expensive cost functions, with application to active user modeling and hierarchical reinforcement learning. University of British Columbia, Department of Computer Science

Chen Z, Liu Z, Yin L, Zheng W (2022) Statistical analysis of regional air temperature characteristics before and after dam construction. Urban Clim. https://doi.org/10.1016/j.uclim.2022.101085

Chen T, Guestrin C (2016). XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. San Francisco, California, USA, Association for Computing Machinery: 785–794

Chen T (2016–2022). "https://github.com/dmlc/xgboost."

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20(3):273–297. https://doi.org/10.1007/BF00994018

Dai L, Wang Z, Guo T, Hu L, Chen Y, Chen C, Chen J (2022) Pollution characteristics and source analysis of microplastics in the qiantang river in southeastern China. Chemosphere (oxford) 293:133576. https://doi.org/10.1016/j.chemosphere.2022.133576

Dursun OF, Kaya N, Firat M (2012) Estimating discharge coefficient of semi-elliptical side weir using ANFIS. J Hydrol 426–427:55–62. https://doi.org/10.1016/j.jhydrol.2012.01.010

Fang X, Wang Q, Wang J, Xiang Y, Wu Y, Zhang Y (2021) Employing extreme value theory to establish nutrient criteria in bay waters: a case study of Xiangshan Bay. J Hydrol. https://doi.org/10.1016/j.jhydrol.2021.127146

Fathi-moghaddam M, Sadrabadi MT, Rahmanshahi M (2018) Numerical simulation of the hydraulic performance of triangular and trapezoidal gabion weirs in free flow condition. Flow Meas Instrum 62:93–104. https://doi.org/10.1016/j.flowmeasinst.2018.05.005

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189–1232

Ganesh N, Jain P, Choudhury A, Dutta P, Kalita K, Barsocchi P (2021) Random forest regression-based machine learning model for accurate estimation of fluid flow in curved pipes. Processes 9(11):2095

Guo Y, Quan L, Song L, Liang H (2022) Construction of rapid early warning and comprehensive analysis models for urban waterlogging based on AutoML and comparison of the other three machine learning algorithms. J Hydrol 605:127367. https://doi.org/10.1016/j.jhydrol.2021.127367

Hameed MM, AlOmar MK, Khaleel F, Al-Ansari N (2021) An extra tree regression model for discharge coefficient prediction: novel, practical applications in the hydraulic sector and future research directions. Math Probl Eng 2021:7001710. https://doi.org/10.1155/2021/7001710

Hancock JT, Khoshgoftaar TM (2020) CatBoost for big data: an interdisciplinary review. J Big Data 7(1):94. https://doi.org/10.1186/s40537-020-00369-8

Huang G, Wu L, Ma X, Zhang W, Fan J, Yu X, Zeng W, Zhou H (2019) Evaluation of CatBoost method for prediction of reference evapotranspiration in humid regions. J Hydrol 574:1029–1041. https://doi.org/10.1016/j.jhydrol.2019.04.085

Ibrahem Ahmed Osman A, Najah Ahmed A, Chow MF, Feng Huang Y, El-Shafie A (2021) Extreme gradient boosting (Xgboost) model to predict the groundwater levels in Selangor Malaysia. Ain Shams Eng. J. 12(2):1545–1556. https://doi.org/10.1016/j.asej.2020.11.011

Ismael AA, Suleiman SJ, Al-Nima RRO, Al-Ansari N (2021) Predicting the discharge coefficient of oblique cylindrical weir using neural network techniques. Arab J Geosci 14(16):1670. https://doi.org/10.1007/s12517-021-07911-9

Jain P, Coogan SCP, Subramanian SG, Crowley M, Taylor S, Flannigan MD (2020) A review of machine learning applications in wildfire science and management. Environ Rev 28(4):478–505. https://doi.org/10.1139/er-2020-0019

Jiang L, Diao M, Sun H, Ren Y (2018) Numerical modeling of flow over a rectangular broad-crested weir with a sloped upstream face. Water 10(11):1663

Khatibi R, Salmasi F, Ghorbani MA, Asadi H (2014) Modelling energy dissipation over stepped-gabion weirs by artificial intelligence. Water Resour Manage 28(7):1807–1821. https://doi.org/10.1007/s11269-014-0545-y

Liu E, Chen S, Yan D, Deng Y, Wang H, Jing Z, Pan S (2022) Detrital zircon geochronology and heavy mineral composition constraints on provenance evolution in the western pearl river mouth basin, northern south China sea: a source to sink approach. Mar Pet Geol 145:105884. https://doi.org/10.1016/j.marpetgeo.2022.105884

LLC, Y. (2017–2022). "catboost.ai."

Madadi MR, HosseinzadehDalir A, Farsadizadeh D (2014) Investigation of flow characteristics above trapezoidal broad-crested weirs. Flow Meas Instrum 38:139–148. https://doi.org/10.1016/j.flowmeasinst.2014.05.014

Mohamed HI (2010) Flow over gabion weirs. J Irrig Drain Eng 136(8):573–577. https://doi.org/10.1061/(ASCE)IR.1943-4774.0000215

Moradi, M. and M. Fathi-Moghaddam (2014). Investigation of the effect of upstream and downstream slopes of broad-crested gabion weirs on discharge coefficient. In: 10th International River Engineering Conference Ahwaz,Iran.

Norouzi R, Daneshfaraz R, Ghaderi A (2019) Investigation of discharge coefficient of trapezoidal labyrinth weirs using artificial neural networks and support vector machines. Appl Water Sci 9(7):148. https://doi.org/10.1007/s13201-019-1026-5

Nourani B, Arvanaghi H, Salmasi F (2021) Effects of different configurations of sloping crests and upstream and downstream ramps on the discharge coefficient for broad-crested weirs. J Hydrol 603:126940. https://doi.org/10.1016/j.jhydrol.2021.126940

Olyaie E, Banejad H, Heydari M (2019) Estimating discharge coefficient of PK-weir under subcritical conditions based on high-accuracy machine learning approaches. Iran J Sci Technol Trans Civ Eng 43(1):89–101. https://doi.org/10.1007/s40996-018-0150-z

Pandey M, Zakwan M, Khan MA, Bhave S (2020) Development of scour around a circular pier and its modelling using genetic algorithm. Water Supply 20(8):3358–3367. https://doi.org/10.2166/ws.2020.244%JWaterSupply

Pandey M, Jamei M, Ahmadianfar I, Karbasi M, Lodhi AS, Chu X (2022) Assessment of scouring around spur dike in cohesive sediment mixtures: a comparative study on three rigorous machine learning models. J Hydrol 606:127330. https://doi.org/10.1016/j.jhydrol.2021.127330

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Louppe G, Prettenhofer P, Weiss R, Weiss RJ, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Pham QB, Pal SC, Chakrabortty R, Norouzi A, Golshan M, Ogunrinde AT, Janizadeh S, Khedher KM, Anh DT (2021) Evaluation of various boosting ensemble algorithms for predicting flood hazard susceptibility areas. Geomat Nat Haz Risk 12(1):2607–2628. https://doi.org/10.1080/19475705.2021.1968510

Pirzad M, Pourmohammadi MH, GhorbanizadehKharazi H, SolimaniBabarsad M, Derikvand E (2021) Experimental study on flow over arced-plan porous weirs. Water Supply 22(3):2659–2672. https://doi.org/10.2166/ws.2021.446%JWaterSupply

Prokhorenkova L, Gusev G, Vorobev A, Dorogush AV, Gulin A (2018). CatBoost: unbiased boosting with categorical features. In: Proceedings of the 32nd International Conference on Neural Information Processing Systems. Montréal, Canada, Curran Associates Inc.: 6639–6649

Rahmanshahi M, Bejestan MS (2020) Gene-expression programming approach for development of a mathematical model of energy dissipation on block ramps. J Irrig Drain Eng 146(2):04019033. https://doi.org/10.1061/(ASCE)IR.1943-4774.0001442

Raschka S (2018) MLxtend: providing machine learning and data science utilities and extensions to Python’s scientific computing stack. J Open Source Softw 3:638

Safarzadeh A, Mohajeri SH (2018) Hydrodynamics of rectangular broad-crested porous weirs. J Irrig Drain Eng. https://doi.org/10.1061/(ASCE)IR.1943-4774.0001338

Salmasi F, Sattari MT (2017) Predicting discharge coefficient of rectangular broad-crested gabion weir using M5 tree model. Iran J Sci Technol Trans Civ Eng 41(2):205–212. https://doi.org/10.1007/s40996-017-0052-5

Salmasi F, Sabahi N, Abraham J (2021) Discharge coefficients for rectangular broad-crested gabion weirs: experimental study. J Irrig Drain Eng 147(3):04021001. https://doi.org/10.1061/(ASCE)IR.1943-4774.0001535

Sargison JE, Percy A (2009) Hydraulics of broad-crested weirs with varying side slopes. J Irrig Drain Eng 135(1):115–118. https://doi.org/10.1061/(ASCE)0733-9437

Shariq A, Hussain A, Ahmad Z (2020) Discharge equation for the gabion weir under through flow condition. Flow Meas Instrum 74:101769. https://doi.org/10.1016/j.flowmeasinst.2020.101769

Shen X, Hong Y, Zhang K, Hao Z (2017) Refining a distributed linear reservoir routing method to improve performance of the CREST model. J Hydrol Eng 22(3):4016061. https://doi.org/10.1061/(ASCE)HE.1943-5584.0001442

Tao H, Habib M, Aljarah I, Faris H, Afan HA, Yaseen ZM (2021) An intelligent evolutionary extreme gradient boosting algorithm development for modeling scour depths under submerged weir. Inf Sci 570:172–184. https://doi.org/10.1016/j.ins.2021.04.063

Wang F, Zheng S, Ren Y, Liu W, Wu C (2022) Application of hybrid neural network in discharge coefficient prediction of triangular labyrinth weir. Flow Meas Instrum 83:102108. https://doi.org/10.1016/j.flowmeasinst.2021.102108

Wu J, Chen XY, Zhang H, Xiong LD, Lei H, Deng SH (2019) Hyperparameter optimization for machine learning models based on bayesian optimizationb. J Electron Sci Technol 17(1):26–40. https://doi.org/10.11989/JEST.1674-862X.80904120

Wu J, Ma D, Wang W (2022) Leakage identification in water distribution networks based on XGBoost algorithm. J Water Resour Plan Manage 148(3):04021107. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001523

Wüthrich D, Chanson H (2014) Hydraulics, Air Entrainment, and Energy Dissipation on a Gabion Stepped Weir. J. Hydr. Eng 140(9):04014046. https://doi.org/10.1061/(ASCE)HY.1943-7900.0000919

Yin L, Wang L, Keim BD, Konsoer K, Zheng W (2022a) Wavelet Analysis of Dam Injection and Discharge in Three Gorges Dam and Reservoir with Precipitation and River Discharge. Water 14(4):567. https://doi.org/10.3390/w14040567

Yin L, Wang L, Zheng W, Ge L, Tian J, Liu Y, Liu S (2022b) Evaluation of empirical atmospheric models using swarm-C satellite data. Atmosphere 13(2):294. https://doi.org/10.3390/atmos13020294

Yue Z, Zhou W, Li T (2021) Impact of the indian ocean dipole on evolution of the subsequent ENSO: relative roles of dynamic and thermodynamic processes. J Clim 34(9):3591–3607. https://doi.org/10.1175/JCLI-D-20-0487.1

Zhang G, Chanson H (2016) Gabion stepped spillway: interactions between free-surface, cavity, and seepage flows. J Hydr Eng 142(5):06016002. https://doi.org/10.1061/(ASCE)HY.1943-7900.0001120

Zhang K, Ali A, Antonarakis A, Moghaddam M, Saatchi S, Tabatabaeenejad A, Moorcroft P (2019a) The sensitivity of north american terrestrial carbon fluxes to spatial and temporal variation in soil moisture: an analysis using radar-derived estimates of root-zone soil moisture. J Geophys Res Biogeosci 124(11):3208–3231. https://doi.org/10.1029/2018JG004589

Zhang K, Wang S, Bao H, Zhao X (2019b) Characteristics and influencing factors of rainfall-induced landslide and debris flow hazards in Shaanxi Province, China. Nat Hazard 19(1):93–105. https://doi.org/10.5194/nhess-19-93-2019

Zhang Y, Zhao Z, Zheng J (2020) CatBoost: a new approach for estimating daily reference crop evapotranspiration in arid and semi-arid regions of Northern China. J Hydrol 588:125087. https://doi.org/10.1016/j.jhydrol.2020.125087

Zounemat-Kermani M, Mahdavi-Meymand A (2019) Hybrid meta-heuristics artificial intelligence models in simulating discharge passing the piano key weirs. J Hydrol 569:12–21. https://doi.org/10.1016/j.jhydrol.2018.11.052

Acknowledgements

Thanks are due to all the authors for their contributions. All authors have read and agreed to the submitted version of the manuscript.

Funding

The authors gratefully acknowledge the financial support from the National Natural Science Foundation of China (Grant Nos. 52179060 and 51909024).

Ethics declarations

Conflict of interest

There is no conflict of interest in this manuscript.

Ethical approval

The authors consent to participate in this work in accordance with the Ethical Approval and Compliance with Ethical Standards.

Ethical conduct

The principles of ethical and professional conduct have been followed.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Azma, A., Tavakol Sadrabadi, M., Liu, Y. et al. Boosting ensembles for estimation of discharge coefficient and through flow discharge in broad-crested gabion weirs. Appl Water Sci 13, 45 (2023). https://doi.org/10.1007/s13201-022-01841-x

DOI: https://doi.org/10.1007/s13201-022-01841-x