Abstract

The replication or reproducibility crisis in psychological science has renewed attention to philosophical aspects of its methodology. I provide herein a new, functional account of the role of replication in a scientific discipline: to undercut the underdetermination of scientific hypotheses from data, typically by hypotheses that connect data with phenomena. These include hypotheses that concern sampling error, experimental control, and operationalization. How a scientific hypothesis could be underdetermined in one of these ways depends on a scientific discipline’s epistemic goals, theoretical development, material constraints, institutional context, and their interconnections. I illustrate how these apply to the case of psychological science. I then contrast this “bottom-up” account with “top-down” accounts, which assume that the role of replication in a particular science, such as psychology, must follow from a uniform role that it plays in science generally. Aside from avoiding unaddressed problems with top-down accounts, my bottom-up account also better explains why the importance of the various types of replication varies across scientific disciplines.

Notes

These related events include Daryl Bem’s use of techniques standard in psychology to show evidence for extra-sensory perception (2011), the revelations of high-profile scientific fraud by Diederik Stapel (Callaway 2011) and Marc Hauser (Carpenter 2012), and related replication failures involving prominent effects such as ego depletion (Hagger et al. 2016).

The quotation reads: “the scientifically significant physical effect may be defined as that which can be regularly reproduced by anyone who carries out the appropriate experiment in the way prescribed.” See also Popper (1959, p. 45): “Only when certain events recur in accordance with rules or regularities, as in the case of repeatable experiments, can our observations be tested—in principle—by anyone. … Only by such repetition can we convince ourselves that we are not dealing with a mere isolated ‘coincidence,’ but with events which, on account of their regularity and reproducibility, are in principle inter-subjectively testable.” Zwaan et al. (2018, pp. 1, 2, 4) also quote Dunlap (1926) (published earlier as Dunlap (1925)) for the same point.

Schmidt (2009, pp. 90–2), citing much the same passages of Popper (1959, p. 45) as the others mentioned, also provides a similar explanation of replication’s importance, appealing to general virtues such as objectivity and reliability. (See the first paragraphs of Schmidt (2009, p. 90; 2017, p. 236) for especially clear statements, and Machery (2020) for an account of replication based on its ability to buttress reliability in particular.) But for him, that explanation only motivates why establishing a definition of replication is important in the first place; it plays no role in his definition itself. Thus, by drawing on Schmidt’s account of what replication is, I am not committing to his and others’ stated explanations of why it is important.

For example, it is compatible with modifications or clarifications of how interpretation plays an essential role in determining what data models are or what they represent, either for Suppes’ hierarchy (Leonelli 2019) or Bogen and Woodward’s (Harris 2003). It is also compatible with interactions between the levels of data and phenomena (or experiment) in the course of a scientific investigation (Bailer-Jones 2009, Ch. 7).

That’s not to say there is no interesting relationship between low-level underdetermination and the question of scientific realism, only that it is much more indirect. See Laymon (1982) for a discussion thereof and Brewer and Chinn (1994) for historical examples from psychology as they bear on the motivation for theory change.

The first function, concerning mistakes in data analysis, does not appear in Schmidt (2009, 2017). That said, neither he nor I claim that our lists are exhaustive, but they do seem to enumerate the most common types of low-level underdetermination that arise in the interpretation of the results of psychological studies. One type that occurs more often in the physical sciences concerns the accuracy, precision, and systematic error of an experiment or measurement technique; I hope in future work to address this other function in more detail. It would also be interesting to compare the present perspective to that of Feest (2019), who, focusing on the “epistemic uncertainty” regarding the third and sixth functions, arrives at a more pessimistic and limiting conclusion about the role of replication in psychological science.

This is also analogous to the case of the demarcation problem, on which progress might be possible if one helps oneself to discipline-specific information (Hansson 2013).

Of course, there is a variety of quantitative and qualitative methods in psychological research, and qualitative methods are not always a good target for statistical analysis. But the question of whether the data are representative of the population of interest is important regardless of whether those data are quantitative or qualitative.

Meehl (1967) wanted to distinguish this lack of precise predictions from the situation in physics, but perhaps overstated his case: there are many experimental situations in physics in which theory predicts the existence of an effect determined by an unknown parameter, too. Meehl (1967) was absolutely right, though, that one cannot rest simply with evidence against a non-zero effect size; doing so abdicates responsibility to find just what the aforementioned patterns of human behavior and mental life are.

Online participant services such as Amazon Mechanical Turk and other crowdsourced methods offer a potentially more diverse participant pool at a more modest cost (Uhlmann et al. 2019), but come with their own challenges.

“Big science” is a historiographical cluster concept referring to science with one or more of the following characteristics: large budgets, large staff sizes, large or particularly expensive equipment, and complex and expansive laboratories (Galison and Hevly 1992).

For secondary sources on MSRP, see Musgrave and Pigden (2016, §§2.2, 3.4).

For more on this, see Musgrave and Pigden (2016, §4).

In what follows, I use my own examples rather than Guttinger’s, with the exception of some overlap in discussion of Leonelli (2018).

Leonelli (2018) has argued that this possibility is realized in certain sciences that focus on qualitative data collection, but it is yet unclear whether this is really due to pragmatic limitations on the possibility of replications, rather than a lack of underdetermination, low-level or otherwise.

References

Bailer-Jones, D.M. (2009). Scientific models in philosophy of science. Pittsburgh: University of Pittsburgh Press.

Baker, M. (2016). 1,500 scientists lift the lid on reproducibility. Nature, 533(7604), 452–454.

Begley, C.G., & Ellis, L.M. (2012). Raise standards for preclinical cancer research: drug development. Nature, 483(7391), 531–533.

Bem, D.J. (2011). Feeling the future: experimental evidence for anomalous retroactive influences on cognition and affect. Journal of Personality and Social Psychology, 100(3), 407.

Benjamin, D.J., Berger, J.O., Johannesson, M., Nosek, B.A., Wagenmakers, E.-J., Berk, R., Bollen, K.A., Brembs, B., Brown, L., Camerer, C., et al. (2018). Redefine statistical significance. Nature Human Behaviour, 2(1), 6.

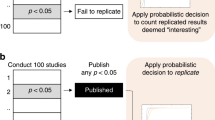

Bird, A. (2018). Understanding the replication crisis as a base rate fallacy. The British Journal for the Philosophy of Science, forthcoming.

Bogen, J., & Woodward, J. (1988). Saving the phenomena. The Philosophical Review, 97(3), 303–352.

Brewer, W.F., & Chinn, C.A. (1994). Scientists’ responses to anomalous data: Evidence from psychology, history, and philosophy of science. In PSA: Proceedings of the biennial meeting of the philosophy of science association, (Vol. 1 pp. 304–313): Philosophy of Science Association.

Button, K.S., Ioannidis, J.P., Mokrysz, C., Nosek, B.A., Flint, J., Robinson, E.S., & Munafò, M.R. (2013). Power failure: why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience, 14(5), 365–376.

Callaway, E. (2011). Report finds massive fraud at Dutch universities. Nature, 479(7371), 15.

Camerer, C.F., Dreber, A., Forsell, E., Ho, T.-H., Huber, J., Johannesson, M., Kirchler, M., Almenberg, J., Altmejd, A., Chan, T., et al. (2016). Evaluating replicability of laboratory experiments in economics. Science, 351(6280), 1433–1436.

Carpenter, S. (2012). Government sanctions Harvard psychologist. Science, 337(6100), 1283–1283.

Cartwright, N. (1991). Replicability, reproducibility, and robustness: comments on Harry Collins. History of Political Economy, 23(1), 143–155.

Chen, X. (1994). The rule of reproducibility and its applications in experiment appraisal. Synthese, 99, 87–109.

Dunlap, K. (1925). The experimental methods of psychology. The Pedagogical Seminary and Journal of Genetic Psychology, 32(3), 502–522.

Dunlap, K. (1926). The experimental methods of psychology. In Murchison, C. (Ed.) Psychologies of 1925: Powell lectures in psychological theory (pp. 331–351). Worcester: Clark University Press.

Feest, U. (2019). Why replication is overrated. Philosophy of Science, 86(5), 895–905.

Feyerabend, P. (1970). Consolation for the specialist. In Lakatos, I., & Musgrave, A. (Eds.) Criticism and the growth of knowledge (pp. 197–230). Cambridge: Cambridge University Press.

Feyerabend, P. (1975). Against method. London: New Left Books.

Fidler, F., & Wilcox, J. (2018). Reproducibility of scientific results. In Zalta, E.N. (Ed.) The Stanford encyclopedia of philosophy. Metaphysics Research Lab, Stanford University, winter 2018 edition.

Franklin, A., & Howson, C. (1984). Why do scientists prefer to vary their experiments? Studies in History and Philosophy of Science Part A, 15(1), 51–62.

Galison, P., & Hevly, B.W. (Eds.). (1992). Big science: the growth of large-scale research. Stanford: Stanford University Press.

Gelman, A. (2018). Don’t characterize replications as successes or failures. Behavioral and Brain Sciences, 41, e128.

Gillies, D.A. (1971). A falsifying rule for probability statements. The British Journal for the Philosophy of Science, 22(3), 231–261.

Gómez, O.S., Juristo, N., & Vegas, S. (2010). Replications types in experimental disciplines. In Proceedings of the 2010 ACM-IEEE international symposium on empirical software engineering and measurement, ESEM ’10. New York: Association for Computing Machinery.

Greenwald, A.G., Pratkanis, A.R., Leippe, M.R., & Baumgardner, M.H. (1986). Under what conditions does theory obstruct research progress? Psychological Review, 93(2), 216–229.

Guttinger, S. (2020). The limits of replicability. European Journal for Philosophy of Science, 10(10), 1–17.

Hagger, M.S., Chatzisarantis, N.L., Alberts, H., Anggono, C.O., Batailler, C., Birt, A.R., Brand, R., Brandt, M.J., Brewer, G., Bruyneel, S., et al. (2016). A multilab preregistered replication of the ego-depletion effect. Perspectives on Psychological Science, 11(4), 546–573.

Hansson, S.O. (2013). Defining pseudoscience and science. In Pigliucci, M., & Boudry, M. (Eds.) Philosophy of pseudoscience: reconsidering the demarcation problem (pp. 61–77). Chicago: University of Chicago Press.

Harris, T. (2003). Data models and the acquisition and manipulation of data. Philosophy of Science, 70(5), 1508–1517.

Lakatos, I. (1970). Falsification and the methodology of scientific research programmes. In Lakatos, I., & Musgrave, A. (Eds.) Criticism and the growth of knowledge (pp. 91–196). Cambridge: Cambridge University Press.

Lakens, D., Adolfi, F.G., Albers, C.J., Anvari, F., Apps, M.A., Argamon, S.E., Baguley, T., Becker, R.B., Benning, S.D., Bradford, D.E., et al. (2018). Justify your alpha. Nature Human Behaviour, 2(3), 168.

Laudan, L. (1983). The demise of the demarcation problem. In Cohen, R., & Laudan, L. (Eds.) Physics, philosophy, and psychoanalysis (pp. 111–127). Dordrecht: Reidel.

Lawrence, M.S., Stojanov, P., Polak, P., Kryukov, G.V., Cibulskis, K., Sivachenko, A., Carter, S.L., Stewart, C., Mermel, C.H., Roberts, S.A., et al. (2013). Mutational heterogeneity in cancer and the search for new cancer-associated genes. Nature, 499(7457), 214–218.

Laymon, R. (1982). Scientific realism and the hierarchical counterfactual path from data to theory. In PSA: Proceedings of the biennial meeting of the philosophy of science association, (Vol. 1 pp. 107–121): Philosophy of Science Association.

LeBel, E.P., Berger, D., Campbell, L., & Loving, T.J. (2017). Falsifiability is not optional. Journal of Personality and Social Psychology, 113(2), 254–261.

Leonelli, S. (2018). Rethinking reproducibility as a criterion for research quality. In Boumans, M., & Chao, H.-K. (Eds.) Including a symposium on Mary Morgan: curiosity, imagination, and surprise, volume 36B of Research in the History of Economic Thought and Methodology (pp. 129–146): Emerald Publishing Ltd.

Leonelli, S. (2019). What distinguishes data from models? European Journal for Philosophy of Science, 9(2), 22.

Machery, E. (2020). What is a replication? Philosophy of Science, forthcoming.

Meehl, P.E. (1967). Theory-testing in psychology and physics: a methodological paradox. Philosophy of Science, 34(2), 103–115.

Meehl, P.E. (1990). Appraising and amending theories: the strategy of Lakatosian defense and two principles that warrant it. Psychological Inquiry, 1(2), 108–141.

Musgrave, A., & Pigden, C. (2016). Imre Lakatos. In Zalta, E.N. (Ed.) The Stanford encyclopedia of philosophy. Metaphysics Research Lab, Stanford University, winter 2016 edition.

Muthukrishna, M., & Henrich, J. (2019). A problem in theory. Nature Human Behaviour, 3(3), 221–229.

Norton, J.D. (2015). Replicability of experiment. THEORIA. Revista de Teoría Historia y Fundamentos de la Ciencia, 30(2), 229–248.

Nosek, B.A., & Errington, T.M. (2017). Reproducibility in cancer biology: making sense of replications. Elife, 6, e23383.

Nosek, B.A., & Errington, T.M. (2020). What is replication? PLoS Biology, 18(3), e3000691.

Nuijten, M.B., Bakker, M., Maassen, E., & Wicherts, J.M. (2018). Verify original results through reanalysis before replicating. Behavioral and Brain Sciences, 41, e143.

Open Science Collaboration (OSC). (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716.

Popper, K.R. (1959). The logic of scientific discovery. Oxford: Routledge.

Radder, H. (1992). Experimental reproducibility and the experimenters’ regress. PSA: Proceedings of the biennial meeting of the philosophy of science association (Vol. 1 pp. 63–73). Philosophy of Science Association.

Rosenthal, R. (1990). Replication in behavioral research. In Neuliep, J.W. (Ed.) Handbook of replication research in the behavioral and social sciences, volume 5 of Journal of Social Behavior and Personality (pp. 1–30). Corte Madera: Select Press.

Schmidt, S. (2009). Shall we really do it again? The powerful concept of replication is neglected in the social sciences. Review of General Psychology, 13(2), 90–100.

Schmidt, S. (2017). Replication. In Makel, M.C., & Plucker, J.A. (Eds.) Toward a more perfect psychology: improving trust, accuracy, and transparency in research (pp. 233–253): American Psychological Association.

Simons, D.J. (2014). The value of direct replication. Perspectives on Psychological Science, 9(1), 76–80.

Simons, D.J., Shoda, Y., & Lindsay, D.S. (2017). Constraints on generality (COG): a proposed addition to all empirical papers. Perspectives on Psychological Science, 12(6), 1123–1128.

Stanford, K. (2017). Underdetermination of scientific theory. In Zalta, E.N. (Ed.) The Stanford encyclopedia of philosophy. Metaphysics Research Lab, Stanford University, winter 2017 edition.

Suppes, P. (1962). Models of data. In Nagel, E., Suppes, P., & Tarski, A. (Eds.) Logic, methodology and philosophy of science: proceedings of the 1960 international congress (pp. 252–261). Stanford: Stanford University Press.

Suppes, P. (2007). Statistical concepts in philosophy of science. Synthese, 154(3), 485–496.

Uhlmann, E.L., Ebersole, C.R., Chartier, C.R., Errington, T.M., Kidwell, M.C., Lai, C.K., McCarthy, R.J., Riegelman, A., Silberzahn, R., & Nosek, B.A. (2019). Scientific Utopia III: crowdsourcing science. Perspectives on Psychological Science, 14(5), 711–733.

Zwaan, R.A., Etz, A., Lucas, R.E., & Donnellan, M.B. (2018). Making replication mainstream. Behavioral and Brain Sciences, 41, e120.

Acknowledgments

Thanks to audiences in London (UK XPhi 2018), Burlington (Social Science Roundtable 2019), and Geneva (EPSA2019) for their comments on an earlier version, and especially to the Pitt Center for Philosophy of Science Reading Group in Spring 2020: Jean Baccelli, Andrew Buskell, Christian Feldbacher-Escamilla, Marie Gueguen, Paola Hernandez-Chavez, Edouard Machery, Adina Roskies, and Sander Verhaegh.

Funding

This research was partially supported by a Single Semester Leave from the University of Minnesota, and a Visiting Fellowship at the Center for Philosophy of Science at the University of Pittsburgh.

Additional information

This article belongs to the Topical Collection: EPSA2019: Selected papers from the biennial conference in Geneva

Guest Editors: Anouk Barberousse, Richard Dawid, Marcel Weber

Cite this article

Fletcher, S.C. The role of replication in psychological science. Euro Jnl Phil Sci 11, 23 (2021). https://doi.org/10.1007/s13194-020-00329-2

DOI: https://doi.org/10.1007/s13194-020-00329-2