Abstract

With the increasing influence of machine learning algorithms in decision-making processes, concerns about fairness have gained significant attention. This area now offers significant literature that is complex and hard to penetrate for newcomers to the domain. Thus, a mapping study of articles exploring fairness issues is a valuable tool to provide a general introduction to this field. Our paper presents a systematic approach for exploring existing literature by aligning their discoveries with predetermined inquiries and a comprehensive overview of diverse bias dimensions, encompassing training data bias, model bias, conflicting fairness concepts, and the absence of prediction transparency, as observed across several influential articles. To establish connections between fairness issues and various issue mitigation approaches, we propose a taxonomy of machine learning fairness issues and map the diverse range of approaches scholars developed to address issues. We briefly explain the responsible critical factors behind these issues in a graphical view with a discussion and also highlight the limitations of each approach analyzed in the reviewed articles. Our study leads to a discussion regarding the potential future direction in ML and AI fairness.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Machine learning-based models have undoubtedly brought remarkable advancements in various fields, demonstrating their ability to make accurate predictions and automate decision-making processes. However, many real-world applications of machine learning (ML) models, such as determining admission to a university [1], screening job applicants [2,3,4], disbursing government subsidies [5, 6], identifying persons at high risk of disease [7], and so on, are prone to bias. The inherent biases and limitations in the training data and algorithms can lead to discriminatory outcomes and perpetuate societal biases. Discriminatory outcomes refer to situations where machine learning models produce predictions or decisions that systematically favor or disadvantage particular groups more than others [8, 9]. Societal biases are the preconceived notions, prejudices, or stereotypes in a society that can lead to unfair advantages or disadvantages for specific individuals or groups. ML and AI researchers have raised many questions about the source and the solution to the fairness issues in AI (FAI) and discussed various types of biases [10].

ML models can exhibit various unfairness issues, encompassing biases and discriminatory outcomes. Discussions often revolve around biases in training datasets, discriminatory behavior exhibited by predictive models, and the challenge of interpreting and explaining the outcomes produced by these models. Biases in the training dataset usually refer to the data representing disparities and discrimination against certain groups based on attributes such as race, gender, or socioeconomic status, which Ml models may inadvertently amplify. The press and literature gradually started to discuss these types of ML model bias in the early twenty-first century [11, 12]. Also, ML models can exhibit bias towards specific groups despite unbiased training data. Other than these issues, the prediction outcome’s unexplainable and uninterpretable nature is another widespread fairness issue. Explainability and interpretability refer to the logical reasoning of outcomes with available alternative profiles. For example, suppose a person is denied credit from a bank. In that case, explanations provide feedback on where exactly his profile could be altered to get the credit, such as increasing monthly income, decreasing loan amount, or changing race. Some of these changes may not be possible, such as changing a person’s race to get credit from a back, which makes the bank’s credit assigning model unfair or biased towards a group of people [13]. In addition, researchers also report some other forms of bias, including inconsistent predictions and inherent biases within the data [14, 15].

As these bias types can compromise the integrity and reliability of decision-making procedures, impeding the advancement the ML model originally intended to enable, achieving fairness in ML predictions is essential [16]. Avoiding fairness concerns across diverse domains, including sign language analysis (e.g., [17, 18]), image object analysis (e.g., [19, 20]), non-linear data analysis (e.g., [21, 22]) and graph data analysis (e.g., [23]), could provoke doubts regarding the credibility and reliability of machine learning methodologies in respective fields. Advancement in ensuring fairness requires continuous research, development, and implementation of approaches to mitigate predictions’ discrimination. Numerous researchers have discussed and proposed various approaches to this ongoing challenge in recent years, leading to rapid and dynamic growth in research within the field [24,25,26,27,28]. As a consequence of this growth, comprehending the existing issues and methodologies within the field can be time-consuming, highlighting the need for dedicated efforts to stay up-to-date with the latest advancements. It even requires much effort for people new to the field as a researcher. Literature review articles aid in this situation and provide comprehensive information so that researchers and practitioners can understand the proposed methodologies and their limitations with minimal effort. Also, it allows for examining different fairness definitions, evaluation metrics, and bias mitigation strategies employed in various domains. Moreover, a literature review helps identify gaps, challenges, and open research questions in the pursuit of fairness, enabling researchers to build upon existing work and propose novel approaches. Additionally, it aids in creating a shared knowledge base and promotes collaboration within the research community, ultimately contributing to developing more robust, transparent, and equitable machine learning models.

Although there are many literature review articles on fairness-ensuring approaches, some limitations persist in these works. Firstly, many studies need more discussion regarding the article exploring and collecting process [29,30,31,32]. Secondly, recent methodologies presented in these articles may need to be updated as researchers continue advancing the field [29]. In this regard, it is common for some approaches to lose relevance and for new approaches to gain significant impact, shaping the direction of research in machine learning and AI. Therefore, staying updated with the latest advancements is essential to ensure continued progress and relevance. Thirdly, although the usual goal of fairness-related articles is to generalize fairness definitions from various perspectives and develop an approach where this defined fairness is ensured, some literature review articles highlight various fairness definitions more than developed fair approaches [29]. However, understanding the procedures to ensure fairness is as crucial as comprehending the various fairness-related terminologies. Lastly, there is a need for a more standardized evaluation and classification of fairness methodologies from the perspective of their addressed fairness issues. Most reviews classify fairness-ensuring methodologies based on when the researchers are incorporating a bias mitigation strategy (Prior to the model implementation, after the model implementation, or during the model implementation). We need to connect these fairness-ensuring methodologies with the specific issue types. Emerging academics often require more direction for understanding a classification of methodologies from the perspective of specific fairness issues they solve. Researchers often adhere to conventional methodologies when addressing specific challenges in their field. For example, scholars usually explore debiasing techniques for removing inherent data bias and generate counterfactual examples to explain model prediction.

Motivation to follow systematic mapping approach visualized in the diagram’s sequential phases (contained within square boxes) from top to bottom. Comprehensive review articles assist us in learning current trends. Over time, the reviews with a systematic mapping approach and reviews without a systematic mapping approach are affected by the emergence of new literature. The article collection process aids in consolidating emerging information with existing reviews, enabling continued comprehension of contemporary trends, even over an extended period

To solve these issues, we offer a comprehensive mapping analysis of some recent fairness concerns and academics’ proposed strategies. A comprehensive mapping study can present a clear idea of how to explore this field of research, which is especially helpful for aspiring scholars. Besides, a mapping study is a valuable tool for identifying and retrieving recent articles, facilitating the collection of related studies in subsequent years, even if the discussed articles become outdated. In this regard, the impact of a mapping study can endure longer than that of conventional review articles. Figure 1 graphically represents our motivation to follow a systematic mapping study. Our paper also generalizes fairness-related terminologies with appropriate examples and the adopted approaches for ensuring them. Finally, we also present a taxonomy of fairness-ensuring methodologies from the perspective of fairness issues they solve. This discussion can be a good source for identifying new trends in proposed solutions, their limitations, and potential future directions in state-of-the-art articles. We summarize our contributions as follows,

-

We offer a systematic mapping study of 94 articles that address fairness concerns, bias mitigation strategies, fairness terminology, and metric definitions across distinct research groups. Our mapping study method presents an adaptable search query for multiple databases that explains the article filtering process and an overview of how the number of articles increased/decreased over time and which countries are mostly involved in these 94 articles. This description of the filtering process facilitates the credibility of the work. Also, new researchers can follow or tune the query to review more updated papers for an extended period.

-

We classify the fairness issues first and then further classify the fairness-ensuring methodologies adopted to solve each type of fairness issue. We also represent the graphical taxonomy of fairness issues in Fig. 6, the taxonomy of methodologies in Fig. 7, and a taxonomy representing specific methodologies targeted to solve specific issues and their limitations in Fig. 10 for researchers to understand this area’s current trends easily.

-

We describe each type of fairness issue, summarize the approaches described in the filtered articles and discuss their limitations. We also provide a detailed definition of the fairness terminologies explored in the filtered articles for applying in the bias mitigation approaches, explain them with related examples, and provide the metric definition of the fairness terminology (if available).

-

We provide ideas for future contributions that researchers have yet to explore.

-

We summarize the publicly available datasets that other researchers have explored in the filtered articles and open-source tools that other researchers have proposed for mitigating bias or identifying bias in a dataset.

We organize the rest of the paper as follows: Sect. 2 presents the background material necessary to follow our discussion. Then, section 3 discusses our methods of this mapping study along with the research questions we are attempting to answer in this article and the developed query. Section 4 represents the findings from our mapping study in the form of answers to current research trends and most engaged countries and individuals, the first two research questions mentioned in section 3. The rest of the research question answers are regarding the analysis of the filtered papers. The research problems discussed in the filtered papers, the adopted methodologies to solve them, and the limitations or challenges of these methodologies are in section 5, 6, 7 accordingly. Next, the following two sections, 8 and 9, represent the answers to the last two questions regarding future direction and publicly available datasets, tools, and source code. We also discuss threats to the validity of our work in section 10. Finally, we conclude in section 11 with a general summary of our contributions.

2 Background

Several notable literature review articles have examined the landscape of fairness-ensuring methodologies. Some articles generalized explanations of bias types and their sources from various perspectives. For example, Reuben Binns discussed two types of unfairness issues from the perspective of discrimination against groups: algorithmic discrimination against protected feature groups and lack of individual fairness in the algorithm as the bias [29]. Algorithmic discrimination against protected feature groups refers to the situation where machine learning algorithms result in unfair treatment or unfavorable outcomes for certain groups of individuals based on their protected attributes, such as race, gender, or age. Lack of individual fairness in algorithms refers to the situation where the algorithm treats similar individuals differently, leading to unfair outcomes. This fairness issue is problematic because it can result in unjust outcomes for individuals who are similar in relevant respects but are treated differently by the algorithm. Both types of unfairness can arise when the algorithm uses inappropriate features or biased training data to make decisions. Unlike Reuben Binns, Mehrabi et al. discussed biases from the perspective of the source of the bias. They explained three types of ML model biases: training data bias, algorithm bias, and user-generated data bias [31]. Here, training data bias refers to biases in the data used to train machine learning models, resulting in models that reflect and reinforce the biases present in the data. Next, Algorithm bias refers to the bias that may be introduced into machine learning models by the algorithms used to train them. This bias can result from the selection of a particular algorithm or from how we implement the algorithm. Finally, User-generated data bias refers to the bias when we train the model with user-generated data that may reflect the biases and preferences of the users who generated it rather than being representative of the population as a whole.

These review articles emphasize discussing the adopted fairness-ensuring methodologies and often classify these methodologies. Generally, they classify these methodologies into pre-processing, in-processing, and post-processing [30, 31]. Simon Caton organized a taxonomy with these classes and subdivided them further to lead a conversation on current methodologies [30]. Firstly, Pre-processing methods involve manipulating the training data before feeding it into the machine learning algorithm. This process generally involves data cleaning, feature selection, feature scaling, or sampling methods to ensure the data is balanced and representative of the population. Examples of pre-processing methods include data augmentation and demographic parity-ensuring methods. Data augmentation indicates data modification to balance underrepresented classes, and demographic parity-ensuring strategies indicate equalizing the proportion of positive outcomes across different protected groups. Secondly, in-processing methods modify the machine learning algorithm during the training process to ensure fairness. These methods involve modifying the objective function or adding constraints to the optimization problem to ensure a fair outcome from the model. Examples of in-processing methods include adversarial training, where a separate model predicts the protected attribute and the original model ensures that it does not use this information to make predictions, and equalized odds, where the algorithm is optimized to ensure that the true-positive rates and false-positive rates are equal across different protected groups. Lastly, the post-processing methods involve modifying the output of the machine learning algorithm to ensure fairness. These methods involve adding a fairness constraint to the output, adjusting the decision threshold, or applying a re-weighting scheme to the predictions to ensure they are fair. Examples of post-processing methods include calibration and reject option classification. Calibration in machine learning refers to adjusting a model’s output to match the true probability of an event occurring better. A reject option allows the model to abstain from predicting uncertain inputs rather than making a potentially inaccurate prediction. Overall, these three categories and taxonomies of methods provide a range of options for researchers and practitioners to address bias and discrimination in machine learning models.

Along with leading a discussion regarding issues and methodologies, these articles represent fairness-related terminologies and metrics. In this regard, Chen et al. categorized fairness definitions into two groups: individual fairness and group fairness [32]. Individual fairness entails treating similar individuals equally, regardless of their group membership. In contrast, group fairness aims to ensure that the model treats different groups of individuals fairly, regardless of their protected attributes such as gender, age, or race. This type of fairness ensures that the algorithm does not discriminate against any specific group. Besides them, Mehrabi et al. proposed a more granular concept of fairness, called subgroup fairness, that focuses on ensuring fairness for relevant subgroups of individuals based on protected attributes and other relevant factors [31]. This fairness involves identifying subgroups of individuals with particular characteristics and ensuring that the algorithm treats them fairly. Another vital aspect of fairness-ensuring methodologies is measuring the degree of fairness a model achieves. To this end, scholars have proposed various fairness metrics that quantify different aspects of fairness. One review article comprehensively discussed these metrics and categorized them into different types [30]. The first category is abstract fairness metrics, based on mathematical properties such as independence, separation, and sufficiency. The second category is group fairness metrics, which measure how well the algorithm performs for different groups of individuals based on their protected attributes. Finally, the fourth category is individual and counterfactual fairness metrics, which consider the hypothetical scenario of how the model would have behaved if specific protected attributes were different. These fairness definitions and metrics are crucial in evaluating the performance of fairness-ensuring methodologies and can guide the development of algorithms that achieve the desired level of fairness.

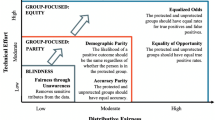

Although the reviews offer valuable insights into different aspects of fairness, their limitations indicate the need for a more systematic and organized mapping study. For example, one significant limitation of these reviews is the need for more discussion on the interrelationships between the classification of fairness issues and the appropriate fairness-ensuring methodologies. While these reviews with methodology-based taxonomies/classifications bring synthesized insights into discussing the current fairness trends [29,30,31,32], it is difficult to link up these methodology classifications with the fairness issues that they solve and with the issues that they generate themselves. For academics, it is crucial to acknowledge that different types of fairness issues may require different types of methodologies for mitigation. Thus, a more detailed exploration of the links between the classification of fairness issues and the corresponding fairness-ensuring methodologies could facilitate the development of more effective and tailored solutions to address fairness issues in machine learning. Understanding the interconnection between issue groups and adopted method groups enables researchers to learn the appropriate method types they need to develop for a specific issue. Figure 2 depicts a graphical representation of different aspects of various review structures that motivate our study. In this context, we propose taxonomy delineating pivotal factors that give rise to diverse classes of fairness concerns. Additionally, we categorize the methodologies employed to address each issue class and outline their respective limitations. By establishing these connections between fairness issue groups, corresponding resolution approaches, and their constraints, our taxonomy provides a comprehensive overview of prevailing trends within this domain.

3 Method of mapping study

We followed mapping techniques from other articles to analyze the major research trends in Ethical Machine Learning over the past two decades [33, 34]. Our mapping techniques involve identifying relevant publications by conducting a comprehensive search of four major databases, including ACM DL, IEEE Xplore, SpringerLink, and Science Direct, focusing on papers on the fairness concept. We selected these databases because they are widely renowned within the research community. To ensure a systematic approach, we followed the search and selection process recommended by B. Kitchenham [33, 34] and structured our research queries on key subject phrases and synonyms of those words for various indexing sites based on the process described by D. Das et al. [35]. Finally, we filtered the studies based on their relevance to our goal. We represent the filtered studies regarding year, countries, and authors. We elaborately describe these steps below.

3.1 Research question development and refinement

This mapping study investigates how ethical AI and ML model researchers developed and utilized approaches to mitigate bias. We directed our efforts toward structuring and refining the research inquiries in alignment with Creswell et al.’s guidelines [36]. Drawing from Creswell et al. and Wayne et al., our approach involved formulating research questions comprising a primary inquiry accompanied by subsidiary inquiries [36, 37]. This methodology mirrors the approach adopted by previous researchers in constructing pivotal central and subsidiary questions pertinent to their objectives [35, 38, 39]. Our overarching objective revolves around delving into state-of-the-art research on fairness concerns, prompting an exploration of the involved researchers and their geographic affiliations within this research domain. This exploration encompasses their resolved challenges, methodologies employed, prospective research avenues, and experimental tools or datasets. Consequently, we have formalized the ensuing research questions (RQs):

-

i.

What is the state-of-the-art research on fairness issues in AI?

-

ii.

Which countries and individuals are the most engaged in this field of study?

-

iii.

What problem have they solved?

-

iv.

What method have they adopted to solve that problem?

-

v.

What are the next challenges of the research?

-

vi.

What is the future direction?

-

vii.

Did they provide the source code and the dataset?

We maintained documentation while reading articles and determining the answers to the questions mentioned above for each article.

3.2 Query design

Our query development process involves breaking down the study subject into a few key phrases. Then we attempted other combinations of synonymous words to those phrases. There were a total of three segments in our search query. First, we included the search phrase “Artificial Intelligence" in the initial segment of our query. We also included similar terms such as “AI", “ML", and “Machine Learning" in that portion. Next, we considered keywords, such as model, prediction, outcome, decision, algorithm, or learning for the second segment, as we wanted to explore the articles focusing on fairness ensuring only for ML models. In the third segment, we used concepts synonymous with ethical fairness or bias, such as fairness, fairness, ethics, ethical, bias, discrimination, and standards, to narrow our search results. Finally, for the last segment, we chose ‘mitigating bias’, ‘bias mitigation’, ‘removing bias’, ‘bias removal’, ‘fairness definition’, ‘explanation’, and ’interpretation’ keywords. Figure 3 depicts the search query.

3.3 Article collection, organization, filtering and mapping

We selected research based on our search query, and our search query yielded a significant number of articles. Nevertheless, only some of these articles were within the scope of our research. During the initial screening, we filtered these papers. Following, we applied targeted screening approaches to filter out publications with insignificant impact on this subject, excessive length, published in languages other than English, and repeated or identical research. We also attempted to see if the full text of the article was available publicly and if the author’s claim was well-referenced. Finally, we analyzed the results from our search query for multiple ranges. For example, we attempted to find our query terms in the paper’s ‘abstract’, ‘introduction’, ‘conclusion’ and ‘title’ or ‘anywhere in the text’, and so on. Then, we looked through the related work section of the remaining review articles, adding significant research that the search query had missed. In case these additional papers belong to any of the four databases (IEEE Xplore, ACM DL, SpringerLink, and Science Direct), we add them as ‘referenced’ for those databases, and if they are from other sources, we add them to the ‘other’ section. Table 1 shows the results of our search queries. We extensively investigated the relevant works once we had reduced them to ninety-four publications to uncover current trends in fairness issues, adopted methodologies to solve these issues, fairness-related must-know terminologies, remaining challenges, popularly utilized datasets, and developed tools in this area. We also discussed the probable scope of improvement in model fairness disclosed in some of those filtered articles and from our understanding.

4 Result of the mapping study

Scholars have devoted considerable attention to exploring the counterfactual concept in machine learning and artificial intelligence to ensure fair prediction. In our study, we searched 420 research articles to identify contributions in this field, ultimately selecting 94 articles that closely aligned with the scope of fairness. In the following subsections, we represented our findings by answering the first two research questions enumerated in Section 3.1.

4.1 State of the art of research in Ethical AI

Although researchers have been studying machine learning models since the early nineteenth century, the unfairness of predictive machine learning models is a relatively recent topic.

Figure 4 displays the number of processed papers per year, revealing a significant increase in the number of papers after year 2016. It indicates the growing interest of academics in the field of fair prediction.

4.2 Engaged countries and individuals

Figure 5 indicates that concerns regarding fairness in ML and AI models have gained widespread attention and are not limited to any specific group of researchers. During our analysis, we did not notice any particular author with significantly more publications. However, we noticed that many articles came from authors from the United States. Out of the 94 papers analyzed, the highest number of publications on this topic came from the United States, followed by the UK with approximately one-fifth of the author’s numbers of the US, and Germany in third place with roughly one-seventh of the author’s numbers of the US.

5 Addressed fairness issues

The filtered articles describe some challenges, and we summarized them into a few common problem groups: biased training data, bias toward feature groups, biased decision models, lack of prediction transparency, and inherent bias. We discuss some of the common key factors that cause these biases, represented in a graphical view in Fig. 6. Bold oval shapes represent the issues, and the other oval shapes, with multiple outward arrows, represent the key factors. The arrows represent the contribution of key factors in generalized fairness issues.

5.1 Biased training data

Bias in the data refers to the presence of systematic errors or inaccuracies that deplete the fairness of a model if we use these biased data to train a model. Bias can potentially exist in all data types as bias can arise from a list of factors [95]. Among these factors, measurement bias is a potential source of data bias in ML that occurs when the measurements or assessments used to collect data systematically overestimate or underestimate the true value of the characteristic being measured [85, 103, 110, 111]. For example, training data in criminal justice systems often includes prior arrests and family/friend arrests as attributes to assess the probability of repeating a crime in the future. As a result, it can lead to racial profiling or disparities in sentencing practices because we cannot confidently guarantee that an individual from a group will behave similarly to others.

Next, representation bias is another crucial factor for a biased training dataset. It refers to the bias in a dataset or model that results from under or over-representing certain groups or characteristics in the data, which can lead to biased or inaccurate predictions for those groups [91, 103, 104]. For example, Yang et al. have highlighted the issue of underrepresented images, particularly for the representation of people and their attributes in the ImageNet dataset, where only a small percentage of images (\(1\%\), \(2.1\%\)) are from China and India, and a comparatively more extensive portion of images are from the United States (\(45\%\)) [91]. Similarly, Shankar et al. demonstrated that classifier performance is notably lower for underrepresented categories trained on ImageNet [104]. Additionally, word embeddings, learned from large corpora of text data, represent words as vectors in a high-dimensional space in various NLP (Natural Language Processing)-based predictive models. However, these word embeddings encode various issues, such as gender biases and lack of diversity, which contribute to the model’s inability to generalize well to new data [86, 87, 123]. Also, when we train a word embedding model on a dataset where the word “doctor" is more associated with the word “man" and “nurse" is more associated with “woman", the model may learn to associate gender with these professions, even when it is not necessary for the given task. In this regard, Zhang et al. argue that while researchers designed many machine learning models to optimize and maximize accuracy, they may also inadvertently learn and propagate existing biases in the training data [92].

Sampling bias slightly differs from the representation bias [103, 131]. Sampling bias occurs when the sample data for training does not represent the population targeted to generalize. In contrast, representation bias is an inadequate representation of the real-world distribution of the data. For example, if a researcher wants to study the height of people in a particular country but only samples people from a single city, the results may only represent part of the country’s population. The sample may be biased toward people from that specific city, resulting in inaccurate conclusions about the height of the country’s population. Subsequently, another aspect that can make the model predictions inaccurate is label bias [92]. It occurs when the labels assigned to data instances are biased in some way. For example, a dataset of movie reviews may have been labeled by individuals with a particular preference for a certain genre, leading to biased labels for movies of other genres.

Besides them, Aggregation bias refers to a type of bias that arises when a model is used to make predictions or decisions for groups of individuals with different characteristics or from different populations [113]. It occurs when a single model is used to generalize across different groups or sub-populations and can lead to sub-optimal performance for some groups. For example, scholars study blood glucose (sugar) levels such as HbA1c (widely used to diagnose and monitor diabetes), which usually differ across ethnicities and genders. Thus, a single model may become biased towards the dominant population and not work equally well for all groups (if combined with representation bias) [61].

Depending on the specific application and context, there may also be other sources of bias in training data that can potentially lead to unfair model outcomes. Thus, processing the training data to remove existing bias is often necessary for many machine learning-based models [92]. Otherwise, If we train the model on a biased dataset, it can lead to unfair outcomes and perpetuate existing societal inequalities. Therefore, it is essential to identify and mitigate bias in the data to ensure that machine learning models are fair and equitable. Scholars in the articles primarily address this step as pre-processing [123].

5.2 Inherent bias

Inherent bias, also known as intrinsic bias, refers to the bias inherent in the studied data or problem rather than the bias introduced during the modeling or analysis process [62]. Along with all the discussed biases, we can observe inherent biases in multiple ways, such as prediction inconsistency and prediction falsification due to partial data. Prediction inconsistency is a different type of bias addressed as leave-one-out unfairness. Although a definite cause is yet to be discovered, scholars often held many of the above biases responsible for prediction inconsistency [84]. Prediction, regardless of its accuracy, is expected to be constant. This bias refers to a situation where including or removing only one instance from the dataset and retraining the model on this modified dataset alters the prediction outcome for another instance entirely irrelevant to that included or deleted instance [84]. In other words, the model’s predictions are not consistently fair for all individuals in the dataset when the model is retrained on the remaining data after removing a single data point. As a result, the predictions for the removed data point may change in an unfair or biased way. The leave-one-out unfairness problem is particularly relevant for datasets where individual data points are sensitive. It makes ML models unreliable and untrustworthy in serious implementations such as predicting recidivism or determining creditworthiness criminal detection.

Another significant inherent bias source is the Historical discrimination. Even if the algorithms used in decision-making processes are unbiased, the data they are trained on may contain historical biases, leading to discriminatory outcomes [62]. Calmon et al. stated that the presence of historical discrimination in linear datasets, such as the widely studied Adult Income dataset used for evaluating fairness in machine learning, can result in biased predictions despite high model performance when using such biased data for training [97, 124]. For example, suppose a training dataset for an employee hiring algorithm only includes data from past hires, and past hiring practices were biased against certain groups. In that case, the algorithm will continue perpetuating that bias in hiring decisions. Historical bias can be challenging to address because it reflects broader societal biases deeply ingrained in our institutions and culture. Even if we design a fair decision-making system according to a particular definition of fairness, the data it uses to learn may still reflect historical biases and lead to unfair decisions [105]. However, it is vital to recognize and address historical bias in machine learning models to prevent perpetuating unfair and discriminatory practices.

5.3 Bias toward feature groups

Bias towards protected feature groups refers to a type of bias where a machine learning algorithm may unintentionally favor or discriminate against certain protected feature groups, such as women, colored, or ethnic minorities when making decisions. This bias is problematic because it perpetuates existing societal inequalities and can result in unfair outcomes for specific individuals or groups, especially in high-stakes domains [98, 125]. For example, a protected feature-dependent model for predicting recidivism rates could result in more false positives for certain groups, leading to lengthy prison sentences or increased monitoring, even when the individual may not pose a significant risk. Many factors can lead to bias toward protected feature groups. For example, training and inherent data bias can also be responsible for discriminating against people with protected feature groups. Also, other factors, such as unbalanced feature data, confounding variables, and predicting attribute’s connection to protected feature can contribute to this bias in addition to those mentioned in section 5.1.

We refer to a dataset with severely skewed or uneven value distribution across various features as having unbalanced feature data. In other words, when a dataset has a significantly larger or smaller number of instances of certain features or categories within features compared to others, it indicates unbalanced feature data. For example, suppose we utilize a model that announces verdicts, and the training data contains gender information as a data feature. If, in the data, females are verdict more times than males for training an RAI, the RAI model may perpetuate these biases and unfairly target females (specific groups) [67].

Confounding variables can be another reason the model is biased toward certain feature groups [114]. If a protected feature correlates with other variables that affect the outcome variable, then the protected feature can become a confounding variable. It can lead to training a model in a biased way that can associate the confounding variables with the outcome variable. For example, consider a study investigating the relationship between caffeine consumption and heart disease risk. If the study does not control for age, then age may act as a confounding variable, as older people tend to have a higher risk of heart disease and may also consume more caffeine. In this case, the study may mistakenly conclude that caffeine consumption is associated with a higher risk of heart disease when in fact, it is the confounding variable of age that is responsible for the observed relationship.

Additionally, dependency on protected features may lead to poorer outcomes [98]. When a machine learning model relies heavily on protected features, it can lead to biased predictions that favor certain protected groups over others. For example, a loan approval model that relies heavily on race as a feature may be biased against certain racial groups. It may happen if the model fails to identify other strongly correlated features that are not sensitive or if the dataset lacks enough features other than the protected feature. As a result, the model may unfairly deny loans to members of certain groups.

Other than these reasons, scholars also mention other aspects, such as Dwork et al. stating that the inability to learn the distribution of sensitive attributes in the training data is a potential reason for bias towards protected features [99]. The authors define sensitive attributes as those protected by anti-discrimination laws (race, gender, and age). Mishler et al. discussed that if we train RAI models on datasets having sensitive features, they may become biased against certain races or gender [67]. As the reason for bias toward feature groups, some articles also claim that false positive outputs are as harmful as false negative outputs in many high-stakes decisions for a dataset with protected attributes [63]. For example, in a criminal justice system, falsely predicting someone is likely to re-offend (a false positive) could lead to unjust incarceration or other forms of harm [64, 115, 117].

5.4 Decision model bias

The above sections 5.1, 5.2, and 5.3 describe how data bias can ruin fair predictions for some ML models. However, a predictive ML model can be unfair even though the training dataset is not biased or contains protected attributes such as race, gender, or age [98, 125, 132]. Algorithmic bias is a potential bias that can introduce discrimination or unfairness in the model. It refers to the bias introduced by the algorithm rather than inherent in the input data [88, 118].

Another reason is the hidden biases. Despite having a balanced distribution in the dataset and being free of sensitive feature correlation, it still can contain hidden biases, which refer to the biases present in the data used to train an ML model that is not immediately apparent or identifiable [45]. These biases can result in discriminatory outcomes for specific groups. For example, using zip codes in the model may inadvertently incorporate racial or economic factors that are not directly related to criminal behavior. Using zip code as an attribute can lead to over-predicting the likelihood of recidivism for specific groups and under-predicting it for others, resulting in unjust outcomes.

Besides them, many Risk Assessment Instruments (RAI) implement ML-based models and may only emphasize prediction accuracy, which can eventually lead to unfairness [132]. Risk assessment instruments (RAIs) are machine learning models used to assess the likelihood of recidivism or future criminal behavior in individuals [110, 111, 119]. Unfairness in these tools can lead to severe consequences that are not adequately justified, such as serving more years in jail for being colored individuals [110, 111].

Additionally, evaluation bias refers to a type of bias that arises while evaluating machine learning models, and thus, it is not related to data bias. It happens when the performance of a model is assessed in a way that is biased toward certain groups or outcomes, leading to misleading or incorrect conclusions [95, 103]. For example, suppose we adopt an evaluation method solely based on its overall accuracy without considering the model’s performance on different subgroups. In that case, the evaluation outcome may hide that the model performs poorly on certain protected groups while delivering high accuracy. It can lead to adopting biased models that appear to perform well overall but are discriminatory towards certain groups. Mitigating these biases can help ensure a fair model, build trust in machine learning systems, and increase their adoption in various domains.

5.5 Lack of prediction transparency

Machine learning models can be complex and challenging to interpret, making it hard to understand how the model makes decisions and identify potential sources of bias [89, 90, 106, 120]. A model can be unfair if a model lacks transparency. Authors identified transparency issues generated while developing the ML algorithm, including non-interpretable predictions [59], unexplainable outcomes [58], lack of contrastive fairness [42], lack of transparency [73] and lack of actionable alternative profiles [70]. These issues can lead to unexpected vulnerabilities, hidden biases, and negative impacts on various stakeholders [58, 68,69,70, 81].

First, Non-interpretable predictions of ML models refer to predictions made by models that humans need help to understand meaningfully. Here, non-interpretable predictions can occur when the model is very complex, such as deep neural networks and adversarial networks, or when the training data is too large or diverse to be easily understood, for example, latent representations of an encoder [59]. Next, unexplainable outcomes in machine learning refers to situations where the model’s predictions need more justification [58]. The machine learning model provides a single outcome without explaining why the model picked this particular choice out of several possibilities in the final forecast. It makes it difficult for humans to understand how and why the model arrived at a particular decision [48, 50, 52, 57].

Moreover, contrastive fairness aims to ensure fairness in decisions by comparing outcomes for similar individuals who differ only in a protected attribute (such as race or gender). Lack of contrastive fairness in models can make the model biased favorably or unfavorably towards a group of stakeholders [42]. For example, if a job screening model is biased toward male candidates over females with similar qualifications, the company must modify the algorithm to consider them equally. Lastly, lack of actionable alternative profiles limits the model’s capability to produce other feature value combinations that would help to generate an expected output. Actionable alternative profile refers to providing a set of alternative actions or decisions that could be taken in response to the outcome of a machine learning model [70]. For example, a machine learning model in medical diagnosis may predict a patient’s high risk of developing a particular disease. However, instead of just providing this information to the healthcare provider, the model could also suggest alternative courses of action or treatment options that could reduce the risk or prevent the disease. Having actionable alternative profiles is crucial for ensuring the reliability of a decision, as more than relying on a single decision may be required.

5.6 Multiple definitions of fairness

Many scholars propose different definitions of model fairness from various perspectives to address these issues. Different definitions of fairness often lead to conflicting objectives, challenging developers and policymakers. For instance, group fairness requires equal treatment of different protected groups, while individual fairness demands that the model treat similar individuals similarly. Ensuring equal outcomes for all protected groups may require setting different thresholds for different groups, which may violate the principle of treating individuals equally regardless of their group membership [93]. Conversely, ensuring equal treatment for all individuals may lead to unequal outcomes, which may be unfair. Meanwhile, predictive parity focuses on equalizing the proportion of true positives across different groups. In contrast, equalized odds aim to balance true positive and false positive rates for a model’s prediction.

In practice, implementing one definition of fairness may cause violations of other definitions, leading to a trade-off between competing objectives. Also, if a model is designed to be fair according to a particular definition of fairness, it may still exhibit unintended biases and unfairness when used in practice. Therefore, it is essential to consider multiple definitions of fairness and the trade-offs between them when designing and evaluating machine learning models to minimize the risk of creating discriminatory outcomes. Therefore, there is often a trade-off between different notions of fairness that the model must carefully consider for decision-making systems. A few articles discuss the challenges of defining and achieving variously defined fairness in machine learning models and propose various solutions to address these challenges [98, 99, 105].

6 Adopted methodologies by authors to solve these issues

Authors from the filtered papers adopted many techniques to solve these biases. While some authors have proposed practical models to remove and detect data bias, protected-feature discrimination, unexplainable prediction, model bias, or prediction inconsistency, some authors have provided fairness definitions and metrics to remove ambiguity and discuss the trade-offs of these concepts. We generalize and classify these methodologies according to the specific problem types they solve. Figure 7 depicts the methodologies scholars have followed to solve generalized issues. The figure’s first column (containing ‘Biased training data,’ ‘inherent bias,’ ‘bias toward protected feature group,’ ‘decision model bias,’ ‘lack of prediction transparency,’ and ‘multiple definitions of fairness’) contains all the generalized issues. We also discuss these classes elaborately in the following sections.

6.1 Methodologies to mitigate data bias

We generalize the techniques adopted by the authors of the filtered articles.

6.1.1 Extending and diversifying

In this technique, people modify the data to diversify the model’s input data and implement it for identifying bias and modifying the model [96, 121, 122, 129, 133]. One method proposes an approach to understanding a model’s bias sources by adding counterfactual instances in the data points. First, they propose to modify the input data to create diversified new data points similar to the original data points but with more critical features changed. Then, the model identifies and quantifies any bias in the model by comparing the model’s predictions on the original data points and the corresponding counterfactual instances [121]. Another method follows this procedure, uses path-specific counterfactuals, and adjusts for bias along specific paths [129]. Similar to these techniques, the ‘Counterfactual Fairness with Regularization (CFR)’ method aims to remove the direct effect of sensitive attributes on the predicted outcome while preserving as much accuracy as possible. The approach involves constructing counterfactual instances and ensuring fairness for each individual under different sensitive attribute values and then using regularization to encourage the model to make similar predictions for similar individuals with different sensitive attribute values [133]. This method ensures individual fairness, and there are other fairness concepts similar to counterfactuals, such as the group fairness assumption and the counterfactual fairness assumption. Some scholars also propose integrating all these counterfactual fairness concepts into the model similarly for unbiased classification, clustering, and regression [122].

6.1.2 Adversarial techniques

Many scholars discuss adversarial techniques for data debiasing by removing or reducing the impact of sensitive features that could lead to biased predictions [98, 116]. In adversarial training, we generally train a model to predict the outcome while being attacked by an adversary trying to infer the sensitive attributes. One adversarial technique involves training a neural network with a different fairness branch to prevent bias based on a protected attribute in the learned representations. They train the network adversarially, where the fairness branch competes against the main classification task to achieve accuracy and fairness [28]. Zhang et al. proposed an adversarial technique with a predicting branch/network and an adversary branch/network [92]. The primary or predictor branch predicts Y given X and learns the weight W with an optimization function like stochastic gradient descent (SGD) aiming to minimize the loss. The output layer passes through the adversary branch aiming to predict Z. The architecture of the adversary network depends on the fairness issue they aim to solve. In aiming for goals like Demographic Parity, the adversary would predict the protected variable Z using only the input’s predicted label \({\hat{Y}}\) (and not the actual label Y), while withholding its own learning process. Similarly, for achieving Equality of Odds, the adversary utilizes both the predicted label \({\hat{Y}}\) and the true label Y. For achieving Equality of Opportunity for a specific class y, the adversary restricts its instances to only those where \(Y=y\) [31]. Demographic Parity, Equality of Odds and Equality of Opportunity is defined in section 6.5. Figure 8 depicts a visualization of the architecture for such adversarial techniques.

The architecture of adversarial network visualized by Zhang et al. [92]

Some scholars explore GAN, where the generator generates indistinguishable synthetic data while the discriminator differentiates between real-world and synthetic data. To ensure fairness, a fairness critic in an additional adversarial objective ensures that the representations learned by the generator are not biased toward any particular group [46]. Besides them, another method divides the training into two deep neural networks: a representation network predicts the protected attribute and another network keeps training on the source dataset and fine-tuning on a target dataset to maintain high accuracy and transferability across datasets [100].

6.1.3 Re-sampling and re-labelling

Imbalance in some dataset features contributes to developing data bias [107]. As a result, some researchers explore pre-processing the dataset to mitigate dataset bias. Re-sampling and re-labeling are two such processes, and many research results validated their effectiveness. Re-sampling addresses data imbalance that causes bias in machine learning models. In a dataset, if the number of instances belonging to one class is significantly higher than the other classes, then the model may be biased towards the majority class. Re-sampling techniques refer to oversampling the minority class or undersampling the majority class to create a balanced dataset. It ensures more representative data, diverse data from various sources and populations, and balanced data across different groups [92, 98]. Besides re-sampling the input data, scholars also propose re-labeling data instances to mitigate bias. Bolukbasi et al. proposed to modify the training data by explicitly identifying gender-neutral words and using them to adjust the gender-specific words in the embedding data [123]. For example, they replace the word “he" with “she" and vice versa in the text data, creating balanced examples of each gender association. They also use gender-neutral word pairs (no association with a particular gender), such as “doctor" and “nurse", to help the model learn a more balanced representation of gender-related concepts [123]. In this regard, Kamiran et al. proposed a ‘massaging’ method that used and extended a Naïve Bayesian classifier to rank and learn the best candidates for re-labeling [26, 63].

6.1.4 Thresholding and causal methods

Researchers also explore post-processing techniques, such as thresholding for training data bias removal, especially for RAIs [25, 27, 134]. In the post-processing technique, scholars adjust the predictions to meet a fairness constraint after training the model on data. Thresholding is one such post-processing technique where a threshold is set on the model’s output to ensure a certain level of fairness. For example, Hardt et al. introduce the concept of “equality of opportunity”, which means that each group’s true positive rate and false positive rate should be equal, regardless of the protected attribute [125]. They propose a method to enforce this constraint using a constrained optimization problem, penalizing models with disparate impacts on different groups while maximizing overall accuracy. Other than thresholding, causal methods can ensure model fairness by analyzing and modeling the causal relationships between input features, the predicted outcome, and the sensitive attribute. The goal is to identify the direct and indirect causal relationships between these variables and to use this information to create fair and unbiased models. In this context, Salimi et al. propose that repairing the training data to remove unfair causal relationships is more efficient than implementing correlation-based fairness metrics to address the root causes of unfairness. They propose a framework with three steps: identifying the causal relationships between the input features and the output label, identifying any causal relationships between the input features and protected attributes, and then implementing causal inference and database repair techniques to remove any unfair causal relationships between the input features and the output label [94]. Like these techniques, Razieh et al. use causal inference methods to estimate counterfactual outcomes for different subgroups and then use these estimates to make fair predictions [135]. Furthermore, Depeng et al. propose the GAN that they train with a novel loss function that penalizes the model for violating causal constraints. The proposed method ensures that the model does not use the protected attribute to make predictions, thereby reducing bias in the data [112].

6.1.5 Balancing instance distribution

Lastly, With the goal of accurate image classification models, Yang et al. introduce a two-step approach to filtering and balancing the distribution of images in the popular Imagenet dataset of people from different subgroups [91]. In the filtering step, they remove inappropriate images that reinforce harmful stereotypes or depict people in degrading ways. In the balancing step, they adjust the distribution of images from different racial and gender subgroups to ensure that the dataset represents each subgroup equally. They supported their proposal by showing fairer classification performance than in classification in the original Imagenet dataset [91]. Along with distribution balancing, some articles also propose data collection processes to determine if the predictions require fairness, inspiring model modification to ensure fairness. For example, Buolamwini et al. propose the Gender Shades Benchmark, a benchmark dataset designed to evaluate gender classification systems regarding intersectional accuracy disparities. This dataset consists of a diverse and representative set of images that includes darker-skinned individuals and women who do not conform to traditional gender norms [95].

6.2 Methodologies to mitigate bias towards protected features

To mitigate bias toward certain groups, scholars propose identifying the source of the bias first and then mitigating the bias along the route. Many of the methods mentioned above maintain this trend, whereas some filtered articles specifically explore protected feature bias mitigation strategies more. In addition to the methods mentioned earlier, scholars also consider additional techniques to reduce bias against protected attributes.

6.2.1 Implementing transformation theory

Transformation theory is a framework for improving model fairness by transforming the input data to mitigate the effect of sensitive attributes on the model’s predictions. The model first predicts the protected attribute and then uses this to generate transformed data that removes the effect of the sensitive attribute. Then, they generate a fair model by training in the transformed data. For example, Meike et al. map the input data into a metric space where distances represent the similarity between individuals and find the minimum-cost way to transport the distribution of protected attribute values from biased to unbiased dataset [65]. Paula et al. followed this approach and proposed two methods, one that uses a generative adversarial network to learn the optimal transport plan and another that directly estimates the transport plan using a convex optimization algorithm. They expect the resulting model to achieve fairness for the protected attribute while maintaining accuracy [101]. Some scholars also have explored convex objective functions to minimize the correlation in previous years [124].

6.2.2 Implementing a fairness critic

To understand if a model is biased toward certain groups, scholars explored and proposed several theoretical and practical fairness concepts to mitigate bias towards protected groups. Implementing a fairness critic network to learn a fair representation of the data by training an adversarial network is another remarkable idea to mitigate bias against protected attributes. Researchers mainly implement this idea for RAI models (classifier models). The fairness critic is a separate neural network that takes the learned representations as input and outputs a score that reflects the level of bias in the representation. The classifier maximizes prediction accuracy, while the fairness critic maximizes fairness. The two objectives compete against each other in a minimax game. By doing so, the model learns a representation that maximizes accuracy while minimizing bias towards protected attributes [108]. Scholars also approach generative approaches combined with a fairness critic network. For example, Depeng et al. introduced an adversarial objective-based fairness loss function in the GAN framework to generate realistic and unbiased, specifically concerning protected attributes [136]. The critic network distinguishes between valid and generated (that violate fairness constraints) samples. The model encompasses a generator \(G_{Dec}\) with a conditional distribution \(P_G(x, y, s)\) that generates the fake data.

In equation (1) x, y = data pair, s = protected or sensitive attribute, \(P_G(s) = P_{data}(s)\), \(P_G(x, y|s = 1) = P_G(x, y|s = 0)\) for ensuring statistical parity constraints and the fairness critic differentiates the two types of generated samples \(P_G(x, y|s = 1)\) and \(P_G(x, y|s = 0)\) indicating if the synthetic samples are from protected or unprotected groups.

Structure of FairGAN as represented in [136]

Figure 9 represents a visualization of their model [136]. The proposed approach is evaluated on several benchmark datasets and shown to produce realistic and fair samples [136].

6.2.3 Disparate impact-based methods

Recently, scholars also explored the disparate impact of recidivism prediction instruments and offered several solutions to ensure protected group fairness in these tools [64, 93, 115, 124]. “disparate impact remover" is one such method. It modifies the data distribution to ensure equal group representation in the training data [124]. The method achieves this by adjusting the weights of each training example based on the protected attribute’s distribution, thus equalizing the acceptance rate across groups. Also, there is a framework for evaluating the fairness of prediction models and demonstrating how to apply it to assess the fairness of recidivism prediction instruments [64]. This framework has three steps: identifying the group protected by anti-discrimination laws or ethical considerations, identifying the outcome variable, and evaluating prediction fairness through the “disparate impact" and “equal opportunity" tests.

6.2.4 Embedding data into lower-dimension

Another renowned protected-feature bias mitigation approach is to learn fair representations by mapping the original data into a lower-dimensional space that preserves the relevant information for the downstream task while removing the discriminatory features [98]. This method aims to learn a representation invariant to protected attributes such as race or gender, thereby ensuring that the downstream classifier will not make decisions based on these attributes.

6.3 Methodologies to mitigate model bias

6.3.1 Visualizing data

Some articles explore visualizing the data effectively to identify the data source and remove model bias, as hidden biases contribute to discrimination in ML-model prediction. For example, Dwork et al. propose an interactive visualization tool called the “What-if tool" to increase awareness of the potential sources of discrimination in machine learning models [99]. It provides an intuitive and user-friendly interface that allows users to explore the impact of various changes on the fairness and accuracy of machine learning models in real-time and visualize its outputs. Users can change input data points, adjust thresholds, and modify other parameters to observe how different decisions affect model performance and fairness through a variety of fairness and performance metrics.

6.3.2 Introducing social cost matrix into misclassification cost matrix

Developers also implement ML models, especially classification models, as risk assessment instruments (RAI). Researchers proposed several approaches to eliminate RAI bias, such as CF-based and adversarial debiasing-based approaches. Firstly, Kamiran et al. proposed a CF-based solution using a decision-theoretic framework in classification [44]. Their proposed framework extends the standard cost-sensitive learning approach by introducing a social cost matrix to capture the societal costs associated with different types of errors. The model also includes a two-step process for building a discrimination-aware classifier. In the first step, the model learns a classifier that minimizes the expected cost of misclassification. In the second step, the model modifies the cost matrix with the social cost matrix to compute the optimal decision boundary using the modified cost matrix [44]. They further demonstrated the implementation of the discrimination-aware classifier using a threshold-based approach. The threshold-based approach uses the expected costs of misclassification and discrimination to compute a threshold value that balances the trade-off between the two costs. The classifier then classifies instances as positive or negative based on whether their predicted probability exceeds the threshold value [44].

6.3.3 Generalizing fairness definition and metrics

Definition development for fairness terminologies and metrics for measuring fairness in the model outcome is necessary before developing fair models and bias reduction techniques. Thus, many researchers have attempted to propose fairness-related terminologies by generalizing definitions of fairness from psychology, statistics, quantum computing, and many more fields. Scholars implement these variously proposed fairness concepts in a model development step. For example, after defining “equalized odds" and “calibration", Kleinberg et al. propose a statistical method called the “direct constrained optimization" method. They formulate the model optimization problem as a constrained optimization problem to maximize accuracy subject to fairness constraints. This optimization process involves solving for a set of model parameters that satisfy the fairness constraints, such as requiring equal false positive or false negative rates across groups or limiting the difference in average risk scores between groups while maximizing the model’s accuracy. They further adjust the risk scores by including a post-processing step [132]. Lum et al. propose another statistical approach similar to the previous one by incorporating “group fairness” for calculating the trade-off and solving the constrained optimization problem using convex optimization [109]. A few articles also propose new metrics such as calibration error to quantify the performance of calibrations of these methods [126]. A calibration error metric measures the discrepancy between predicted probabilities and the observed outcomes in an ML model. It quantifies the calibration performance of the model by evaluating how well the model predicted the probabilities and how they align with the true probabilities of the events or classes. Afterward, “disparate impact-aware Naive Bayes” and “equalized odds-aware Naive Bayes” based strategies are two noteworthy fairness approaches that ensure that the predictions are disparate impact-free and the false positive and negative rates are equal across different protected groups [66]. We further discuss all the proposed fairness-related definitions of the filtered papers in section 6.5.

6.3.4 Incorporating accuracy and fairness metrics

Usually, any ML models follow accuracy matrices for developing the model. However, to decrease prediction bias, some scholars propose statistical methods incorporating fairness and accuracy metrics while developing an ML model and balancing the trade-offs between fairness and accuracy, especially in risk assessment instruments [109, 126, 132].

6.3.5 Protected attribute info discarding

The adversarial debiasing method tries to learn a debiased representation of the data by training a neural network to predict an outcome while at the same time being forced to discard any information about the protected attribute. Madras et al. propose an adversarial training-based method to address issues of fairness and bias in machine learning models [100]. The discriminator predicts the sensitive attribute from the learned representation, while the generator produces a representation that is both predictive of the task and fair. Another approach is the “jointly constrained Naive Bayes”, which restricts the classifier to use only a subset of features that are minimally correlated with the protected attribute [66]. Furthermore, Calmon et al. proposed quantifying the relationship between protected attributes and other features and minimizing the mutual information between protected and remaining features. Finally, the authors stated that the sensitive attribute is not used to make classification decisions and presented convincing experimental results to support that fairness and accuracy are balanced [47].

6.3.6 Statistical framework to alter objective function

The paper proposes a statistical framework for developing fair predictive algorithms that explicitly consider fairness constraints during model training. It introduces a fairness penalty term to the objective function that penalizes the algorithm for its deviation from a desired level of fairness. This framework is designed to balance fairness and accuracy and can be applied to a range of machine learning models [109].

6.4 Methodologies to ensure prediction transparency, explainability, interpretability

Researchers have emphasized providing prediction explanations and interpretations to maintain transparency of the model predictions. Explanation and interpretation of the prediction confirm the outcome’s legitimacy and transparency about fairness [59, 79]. In addition, prediction explanation provides alternative feature combinations to modify the model outcome, and prediction interpretation validates the model’s outcome (probability of other outcome occurrences without dependency on protected attributes) [50, 68, 72]. To explain and interpret a model’s outcome from the fairness perspective, Counterfactual explanations have gained significant popularity among the various approaches [71]. The counterfactual analysis involves asking “what-if” questions to determine how changing one or more features of a particular instance would affect the model’s output. We can use this technique to identify instances where a model’s output may be unfair and to make corrections to improve fairness [58]. Many scholars have proposed counterfactual approaches that received scholars’ attention, such as performance improvement for multi-agent learning, causal inference in machine learning, explanation for system decision of black-box models, the actionable alternative outcome of existing and new AI models, etc. [70, 137,138,139,140,141,142]. Academics are exploring trade-offs to generate counterfactual explanations and methods to utilize the generated CFs to provide explainable and interpretable model outcomes.

6.4.1 Perturbation-based methods

One common approach to generating CF is the perturbation-based method. A perturbation-based method is used in various fields, including machine learning and optimization, to analyze the behavior of a system by modifying input features and keeping the remaining features fixed to observe changes in the prediction. In machine learning, scholars mainly employ perturbation-based methods to assess a model’s robustness, sensitivity, or generalization. Perturbation distance, or feature or input distance, measures the extent of modification or change applied to input features when generating counterfactual explanations. Wachter et al. proposed a method to generate CF explanations for predictions without accessing the model’s internal architecture. This method turns the CF generation problem into an optimization problem with an objective function that considers measuring perturbation distance between instances. Search algorithms such as gradient descent or genetic algorithms are employed to find suitable counterfactual instances. The generated counterfactuals address interpretability challenges [143].

6.4.2 Optimization-based methods

Another approach is the optimization-based method, which slightly differs from the perturbation-based method. After generating CF instances by solving the optimization problem (minimizing original and CF instance differences), the method focuses on satisfying certain constraints, such as individual and group fairness of the generated CFs. By applying this approach, Kusner et al. (2017) introduced a framework for generating counterfactual explanations by minimizing the distance in a latent feature space [127]. Besides them, Samali et al. developed an optimization technique to ensure fairness in methods by creating representations with similar richness for different groups in the dataset [144]. They represented experimental results showing that men’s faces have lower reconstruction errors than women’s in an image dataset. They developed a dimensionality reduction technique utilizing an optimization function mentioned in equation (2).

Here, A and B are two subgroups, \(U_A\) and \(U_B\) denote matrices whose rows correspond to rows of U, U contain members of subgroups A and B given m data points in \(R_n\). Their proposed algorithm is summed up in two steps: firstly, it relaxes the objective to a semidefinite program (SDP) and solves it. Secondly, it solves a linear program that would reduce the rank of the solution [144].

6.4.3 Rule-based methods

Additionally, rule-based methods have been proposed, such as the Anchors algorithm by Ribeiro et al., which generates rule-based explanations by identifying the smallest set of features that must be true for a specific prediction [130].

6.4.4 Combining multiple methods

These different methods offer diverse ways to generate counterfactual explanations, allowing researchers and practitioners to choose the most suitable approach for their needs. Some other scholars emphasize generating diverse CFs to explore the explanation space and identify diverse and coherent explanations. This method combines multiple techniques such as heuristics, optimization algorithms, sampling methods for searching, and pruning techniques. It also captures the trade-off between diversity and coherence. It may penalize redundant or overlapping explanations while rewarding diverse and coherent explanations. Candidate explanations generated from this method are diverse and coherent [76].

6.4.5 Multi-modal alternative profiles

Besides the linear counterfactual generation methods mentioned above, scholars also explore multi-modal CF generation. For example, Abbasnejad et al. propose generating counterfactual instances by modifying both the input image and the generated text. These modifications capture alternative visual and linguistic explanations, resulting in different model predictions. This function typically includes terms encouraging visual fidelity, linguistic coherence, and dissimilarity from the original instance [43].

6.5 Fairness terminologies and metrics definitions

The filtered articles proposed various fairness-related terminologies to mitigate fairness issues by implementing them in bias reduction strategies. We present generalized descriptions of these definitions.

-

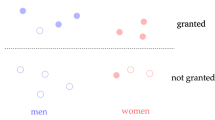

Disparate impact: Feldman et al. describe the disparate impact as a situation in which a decision-making process disproportionately impacts members of a protected group, regardless of intent [99] or in other words, disparate impact is a predictor that makes different errors for different feature groups [93, 125]. Disparate impact can be measured using statistical techniques such as the “disparate impact ratio", which compares the proportion of favorable outcomes (such as job offers) between different groups. If the ratio is significantly different between groups, it suggests that the model is exhibiting disparate impact. For example, from a dataset of three men and five women, this job offering algorithm offers jobs to two men and two women, then the ratio for men \(\frac{2}{3}\) is significantly larger than \(\frac{2}{5}\). It indicates the presence of disparate impact.

-

Causal fairness: A decision rule is causally fair for a protected attribute if changing that protected attribute while holding all other variables constant does not change the probability of receiving a positive outcome [125]. In other words, the protected attribute should not cause any outcome differences. For example, in a dataset of people’s information, such as age, gender, salary, and mortgage rate, if a credit card-allowing algorithm provides the exact prediction (allow credit card for that person) in the presence and absence of gender, then the algorithm has causal fairness.

-

Demographic parity: Dwork et al. state that demographic parity is satisfied if the proportion of positive outcomes is equal across all groups [99]. Keeping the meaning same, Hardt et al. defined the demographic parity when the true positive rate (TPR) is equal across all groups [125]. Emphasizing the positive outcome, Feldman et al. claimed that a classifier has demographic parity if its positive predictive value (PPV) is equal across all groups [102]. For example, in job applications, if the model selects \(10\%\) of male and \(10\%\) of female candidates for interviews, then demographic parity is satisfied. Here, \(10\%\) is the TPR and PPV for both males and females, which is equal. The classifier predicts negative outputs for \(90\%\) males and females, indicating the negative predictive value.

-

Equalized odds and calibration: In the context of fair machine learning, ‘equalized odds’ is a fairness criterion that requires the true positive and false positive rates to be equal across different groups defined by a sensitive attribute, such as race or gender [132]. True positive (TP) and false positive (FP) rates are commonly used performance metrics in classification tasks. Specifically, equalized odds means that the probability of a positive outcome (e.g., being approved for a loan or receiving a medical intervention) should be the same for individuals in different groups who have the same true status (e.g., whether they will pay back the loan or whether they have a particular medical condition).

-