Abstract

Convolutional Neural Networks (CNNs) are efficient tools for pattern recognition. They have found applications in wireless communication systems, for example in modulation classification from constellation diagrams. Unfortunately, noisy channels may deform and scatter the constellation points, which makes classification difficult. This paper presents an efficient modulation classification algorithm based on CNNs. Constellation diagrams are generated for each modulation type and used for training and testing the CNNs. The proposed work applies the Radon Transform (RT) to the constellation diagrams to generate more representative patterns for training and testing. The RT maps each discrete point in the spatial domain to a sinusoidal curve in the transform domain. Several pre-trained networks, including AlexNet, VGG-16, and VGG-19, are used to classify the modulation type from either the spatial-domain constellation diagrams or their RTs. Several simulation experiments are presented to compare different modulation classification scenarios at different Signal-to-Noise Ratios (SNRs) and under fading channel conditions.

1 Introduction

Modulation of the transmitted signal is one of the fundamental steps in the transmission chain of wireless communication systems. In adaptive modulation systems, the modulation type is changed from one transmission to the next according to the SNR in order to improve the Bit Error Rate (BER) performance. Modulation classification is an important step in the receiver chain of several wireless communication applications, e.g., electronic surveillance and electronic warfare. Different traditional methods have been used for modulation classification.

In Al-Makhlasawy et al. (2012) and Keshk et al. (2015), Mel-Frequency Cepstral Coefficients (MFCCs) were used as features, and Neural Networks (NNs) and Support Vector Machines (SVMs) were investigated as modulation classifiers for Orthogonal Frequency Division Multiplexing (OFDM) systems. Higher-order statistics, such as the higher-order moments used in Abu-Romoh et al. (2018), have also been adopted. The main advantage of such statistical features is their robustness to noise; however, estimating them involves high computational complexity. Another study introduced a different type of image, called contour stellar images, for modulation classification (Lin et al. 2017). Signal identification can also be carried out for different system configurations, namely Single-Carrier (SC), OFDM, single-antenna, and Multiple Input Multiple Output (MIMO) systems (Eldemerdash et al. 2016).
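As an illustration of higher-order statistical features, the sketch below computes two fourth-order cumulants, \(C_{40}\) and \(C_{42}\), that are widely used in the modulation classification literature. It is a minimal plain-Python sketch, not the exact feature set of the cited works; the helper names are hypothetical, and averaging over the symbol alphabet assumes equiprobable, unit-energy, noise-free symbols.

```python
import cmath
import math


def psk_alphabet(m, offset=0.0):
    """Unit-energy M-PSK symbols exp(j*(2*pi*k/m + offset)), k = 0..m-1."""
    return [cmath.exp(1j * (2 * math.pi * k / m + offset)) for k in range(m)]


def fourth_order_cumulants(samples):
    """Return (C40, C42) of zero-mean complex samples.

    C20 = E[x^2], C21 = E[|x|^2]
    C40 = E[x^4] - 3*C20^2
    C42 = E[|x|^4] - |C20|^2 - 2*C21^2
    """
    n = len(samples)
    c20 = sum(x * x for x in samples) / n
    c21 = sum(abs(x) ** 2 for x in samples) / n
    m40 = sum(x ** 4 for x in samples) / n
    m42 = sum(abs(x) ** 4 for x in samples) / n
    c40 = m40 - 3 * c20 ** 2
    c42 = m42 - abs(c20) ** 2 - 2 * c21 ** 2
    return c40, c42


for name, m in [("BPSK", 2), ("QPSK", 4), ("8PSK", 8)]:
    c40, c42 = fourth_order_cumulants(psk_alphabet(m))
    print(f"{name}: |C40| = {abs(c40):.2f}, C42 = {c42:.2f}")
```

Under these assumptions, BPSK gives \(C_{42} = -2\), while QPSK and 8PSK both give \(C_{42} = -1\) and are separated by \(|C_{40}|\) (1 versus 0). Since cumulants of independent variables add and the fourth-order cumulants of Gaussian noise are zero, AWGN leaves \(C_{40}\) and \(C_{42}\) unchanged in expectation; estimating them reliably, however, needs many samples.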

Two families of modulation classification methods have been investigated: Feature-Based (FB) and Likelihood-Based (LB) methods. FB methods rely on selected, extracted features; they provide sub-optimal performance, but with reduced complexity and reduced sensitivity to model mismatch (Dobre et al. 2007). Most FB methods require frequency synchronization, and their performance depends on extracting robust features and designing a suitable classifier. LB methods, on the other hand, evaluate the likelihood function of the received modulated signal and perform Bayesian estimation of the modulation type using prior information such as the noise and channel models (Xu et al. 2010). They usually have high computational complexity and are not suitable for highly dynamic environments. Machine learning, especially pattern recognition, has played a major role in modulation classification. Both K-Nearest Neighbor (KNN) and SVM classifiers have been applied to modulation classification (Aslam et al. 2012; Peng et al. 2017) and to Medium Access Control (MAC) protocol classification (Hu et al. 2014).
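The likelihood-based approach can be illustrated with a minimal average-likelihood classifier. This is a generic sketch under stated assumptions (AWGN with known noise variance, perfect synchronization, equiprobable symbols from known candidate constellations); it is not the specific method of Xu et al. (2010), and the function names are hypothetical.

```python
import cmath
import math


def psk(m, offset=0.0):
    """Unit-energy M-PSK alphabet."""
    return [cmath.exp(1j * (2 * math.pi * k / m + offset)) for k in range(m)]


def log_likelihood(samples, alphabet, noise_var):
    """Log-likelihood of the samples under one candidate constellation.

    The -log(pi * noise_var) term is dropped because it is the same for
    every hypothesis and does not change the decision.
    """
    total = 0.0
    for r in samples:
        # exponents of exp(-|r - a|^2 / noise_var) for every candidate symbol a
        exps = [-abs(r - a) ** 2 / noise_var for a in alphabet]
        peak = max(exps)
        # log-sum-exp for numerical stability, averaged over equiprobable symbols
        total += peak + math.log(sum(math.exp(e - peak) for e in exps)) - math.log(len(alphabet))
    return total


def classify_ml(samples, candidates, noise_var):
    """Return the name of the candidate constellation with the largest likelihood."""
    return max(candidates, key=lambda name: log_likelihood(samples, candidates[name], noise_var))


candidates = {"BPSK": psk(2), "QPSK": psk(4, math.pi / 4), "8PSK": psk(8)}
received = [1 + 0.05j, -0.98 + 0j, 1.02 - 0.01j, -1 - 0.03j]
print(classify_ml(received, candidates, noise_var=0.1))  # BPSK
```

The cost grows with the number of samples, the constellation sizes, and the number of hypotheses, and a wrong noise-variance assumption or an unmodelled phase offset degrades the decision, which mirrors the limitations of LB methods noted above.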

Deep Learning (DL) is a branch of machine learning that has gained increasing interest in recent years and has achieved remarkable success in classification tasks, largely owing to the representational power of its many hidden layers. It is used in several application fields such as computer vision (Jean et al. 2016), bioinformatics (Min et al. 2017), economics (Nosratabadi et al. 2020), security (Liu et al. 2020), and natural language processing (Wu et al. 2016). Several studies have applied DL to modulation classification (Mendis et al. 2016; Ali et al. 2017; Wang et al. 2017; O’Shea et al. 2018). Recently, DL models have been proposed for modulation classification without requiring prior information such as the channel model (Lin et al. 2017; Jiang et al. 2020). In Zhang et al. (2020), a CNN was used to classify 11 modulation types in the RadioML2016.10A dataset with an accuracy exceeding 70%. To further improve accuracy, other DL models such as Recurrent Neural Networks (RNNs) (Lin et al. 2017) and fusion methods have been utilized.

In wireless communication applications, DL has several advantages over traditional classifiers. A shortcoming of DL techniques is their need for large training datasets. In wireless communication settings, however, the high transmission rate makes it possible to collect the necessary data. This is particularly true when the set of possible transmission patterns is limited, which is exactly the case in adaptive modulation, where the modulation is confined to a set of well-defined modulation types.

An important challenge in wireless communication classification tasks is manual feature selection, which is avoided by the automatic feature extraction inherent in DL (Al-Makhlasawy et al. 2020). In Eltaieb et al. (2019), contour stellar images with different colors have been used for modulation classification; these images are fed to a CNN to identify the modulation types and their orders. Pre-trained CNNs including AlexNet, ResNetv4, VGG-16, and GoogLeNet-v2 have been used for modulation classification (Eltaieb et al. 2019). Radon Transforms (RTs) of constellation diagrams have been combined with Singular Value Decomposition (SVD) for blind optical modulation classification in Eltaieb et al. (2019). In Al-Makhlasawy et al. (2020), a DL-based scheme was proposed for modulation classification in the presence of Adjacent Channel Interference (ACI). This scheme relies on constellation diagrams generated from the received signals.

In this paper, we use CNNs that operate on constellation diagrams for modulation classification. To improve on the accuracy of the constellation-based classification in Al-Makhlasawy et al. (2020), we use the RTs of the constellation diagrams of the different modulation types as inputs to the CNNs. Seven modulation types are considered: BPSK, QPSK, 8PSK, 16PSK, 8QAM, 16QAM, and 32QAM. AlexNet, VGG-16, and VGG-19 are used as classifiers.

2 Problem formulation

Following a general wireless transmission chain, the analog signal is first converted at the transmitter into a digital baseband signal through the consecutive processes of sampling, quantization, and encoding. This signal is then modulated and transmitted over a certain frequency band to the receiver. The received signal is given by:

\[ r(t) = s(t) + n(t) \]

where \(s(t)\) is the transmitted signal, \(r(t)\) is the received signal, and \(n(t)\) is the Additive White Gaussian Noise (AWGN). The signal \(s(t)\) can be expressed as:

\[ s(t) = A_m \sum_{n} a_n\, g(t - nT_s)\cos\left(2\pi (f_c + f_m)t + \phi_0 + \phi_m\right) \]

where \(A_m\) is the modulation amplitude, \(a_n\) is the symbol sequence, \(T_s\) is the symbol period, \(f_c\) is the carrier frequency, \(f_m\) is the modulation frequency, \(\phi_0\) is the initial phase, \(g(t)\) is the line code function, and \(\phi_m\) is the modulation phase.
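After coherent demodulation and matched filtering, and assuming perfect synchronization, the model above reduces at the symbol rate to \(r_k = a_k + n_k\), where \(a_k\) is a constellation point and \(n_k\) is complex Gaussian noise. The sketch below generates such samples for the seven modulation types considered in this paper. The constellation layouts are assumptions (M-PSK on the unit circle, a rectangular 8QAM, a square 16QAM, and a cross-shaped 32QAM, all normalized to unit average energy), the SNR is taken as \(E_s/N_0\), and the helper names are hypothetical.

```python
import cmath
import math
import random


def psk(m):
    """M-PSK points on the unit circle."""
    return [cmath.exp(2j * math.pi * k / m) for k in range(m)]


def qam_grid(levels_i, levels_q, drop_corners=False):
    """Rectangular QAM grid; drop_corners removes the four outermost corner points."""
    pts = [complex(i, q) for i in levels_i for q in levels_q]
    if drop_corners:
        ei, eq = max(levels_i), max(levels_q)
        pts = [p for p in pts if not (abs(p.real) == ei and abs(p.imag) == eq)]
    return pts


def normalize(points):
    """Scale a constellation to unit average symbol energy (Es = 1)."""
    es = sum(abs(p) ** 2 for p in points) / len(points)
    return [p / math.sqrt(es) for p in points]


LEVELS4 = [-3, -1, 1, 3]
LEVELS6 = [-5, -3, -1, 1, 3, 5]
CONSTELLATIONS = {
    "BPSK": psk(2),
    "QPSK": psk(4),
    "8PSK": psk(8),
    "16PSK": psk(16),
    "8QAM": normalize(qam_grid(LEVELS4, [-1, 1])),                 # assumption: rectangular 4 x 2
    "16QAM": normalize(qam_grid(LEVELS4, LEVELS4)),
    "32QAM": normalize(qam_grid(LEVELS6, LEVELS6, drop_corners=True)),  # 6 x 6 grid minus 4 corners
}


def received_symbols(mod, n, snr_db, rng):
    """n equiprobable symbols of `mod` plus complex AWGN at Es/N0 = snr_db (Es = 1)."""
    alphabet = CONSTELLATIONS[mod]
    n0 = 10 ** (-snr_db / 10)      # noise power N0 = Es / SNR
    sigma = math.sqrt(n0 / 2)      # standard deviation of each of I and Q
    return [rng.choice(alphabet) + complex(rng.gauss(0, sigma), rng.gauss(0, sigma))
            for _ in range(n)]


rx = received_symbols("16QAM", 1000, snr_db=10, rng=random.Random(0))
```

Other 8QAM layouts (for example, star-shaped ones) are also in use; replacing the corresponding entry in `CONSTELLATIONS` is enough to try one.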

In digital wireless communication systems, the amplitude and phase of the received signal are represented efficiently by its constellation diagram. The receiver splits the received signal \(r(t)\) into an in-phase (I) component and a quadrature (Q) component that are orthogonal to each other (Goldsmith 2005). Each symbol can thus be represented on the two orthonormal I/Q basis functions in the form \(a+jb\), which is called a constellation point.
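A constellation diagram image \(f(x,y)\) can be produced by quantizing the I/Q plane into pixels. The sketch below is a minimal illustration assuming a binary occupancy image; the image size, the plotted range `extent`, and the function name are hypothetical choices, not parameters reported in this paper.

```python
import math


def constellation_image(symbols, size=64, extent=2.0):
    """Binary size x size image of complex symbols.

    The I axis maps to columns (left edge = -extent) and the Q axis to rows
    (top edge = +extent); a pixel is set to 1 if at least one symbol falls in it.
    Symbols falling outside the plotted square are ignored.
    """
    img = [[0] * size for _ in range(size)]
    scale = size / (2 * extent)
    for z in symbols:
        # floor (not int) so that slightly negative offsets are dropped, not clamped to 0
        col = math.floor((z.real + extent) * scale)
        row = math.floor((extent - z.imag) * scale)
        if 0 <= row < size and 0 <= col < size:
            img[row][col] = 1
    return img


img = constellation_image([1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j], size=8)
for row in img:
    print("".join("#" if v else "." for v in row))
```

With many noisy received samples, each cluster spreads over neighbouring pixels as the SNR falls, which is the deformation that makes classification from the raw diagram harder.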

3 Radon transform (RT)

The RT is an integral transform that maps a function \(f(x,y)\) defined on the plane to a function \(W(d,\theta )\) defined on the set of lines in that plane, each line being specified by its distance \(d\) from the origin and its angle \(\theta\) (Radon 1986). This transform was first introduced by Radon (1917), who also provided a formulation of the inverse transform.

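The RT is obtained with the following equation (Wikipedia 2005):

\[ W(d,\theta ) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f(x,y)\,\delta (d - x\cos \theta - y\sin \theta )\,dx\,dy \]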

where \(f(x,y)\) is the constellation diagram image, \((x,y)\) are the spatial coordinates, \(\theta\) is the projection angle, \(d\) is the perpendicular distance from the origin to the projection line, \(W(d,\theta )\) is the RT image, and \(\delta (.)\) is the Dirac delta function. Collecting the values of this integral for all \((d,\theta )\) in a single matrix gives the RT. The angle of projection is taken from \(0\) to \(\pi /2\) due to the symmetry property of the RT. Figure 1 shows the constellation diagrams and their RTs for different modulation types at different SNRs.
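To make the equation concrete, the sketch below computes a nearest-bin discrete approximation of \(W(d,\theta)\) for a small binary image: every non-zero pixel at \((x,y)\) contributes to the bin of \(d = x\cos\theta + y\sin\theta\) at each angle, so an isolated constellation point traces a sinusoid across the \(\theta\) axis. This pixel-driven discretization is a simplification chosen for readability; library routines such as `skimage.transform.radon` discretize \(d\) and \(\theta\) differently. The function name and parameters are hypothetical, and angles are sampled in \([0, \theta_{max})\) with \(\theta_{max} = \pi/2\) by default to follow the text.

```python
import math


def radon(img, n_angles=90, theta_max=math.pi / 2):
    """Nearest-bin discrete Radon transform of a square image (list of lists).

    Returns (w, thetas), where w[i][t] sums the pixels whose projection
    d = x*cos(theta_t) + y*sin(theta_t) rounds to bin i (d = i - offset).
    Coordinates are measured from the image centre, x to the right and y up.
    """
    n = len(img)
    c = (n - 1) / 2
    n_bins = math.ceil(n * math.sqrt(2)) + 1    # enough bins for |d| <= c*sqrt(2)
    offset = n_bins // 2
    thetas = [t * theta_max / n_angles for t in range(n_angles)]
    w = [[0.0] * n_angles for _ in range(n_bins)]
    for row in range(n):
        for col in range(n):
            v = img[row][col]
            if v == 0:
                continue
            x, y = col - c, c - row
            for t, th in enumerate(thetas):
                d = x * math.cos(th) + y * math.sin(th)
                w[round(d) + offset][t] += v    # one point -> one sinusoid in (d, theta)
    return w, thetas


img = [[0] * 16 for _ in range(16)]
img[3][12] = 1                                  # a single "constellation point"
w, thetas = radon(img, n_angles=8)
```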

4 Constellation diagram classification with DL

DL is used in several scientific fields. It relies on multiple layers of neurons, where the output of each layer is fed into the next one (Abu-Romoh et al. 2018). Successive layers extract increasingly abstract representations of the input data. Deep neural networks learn a large number of features directly from the data in order to identify patterns (Peng et al. 2018), without the need for manual feature extraction. A network combines simple features through multiple nonlinear transformations to generate more complex features autonomously (Meng et al. 2018). In the literature, there is a group of well-designed pre-trained CNNs that can be reused through transfer learning for new identification and classification tasks. The following sub-sections describe the pre-trained CNNs that we apply to our datasets.
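The layer-by-layer processing described above can be illustrated with one convolutional stage (convolution, ReLU, max-pooling) written in plain Python. This is a teaching sketch with a hand-picked kernel and hypothetical function names; in a trained CNN the kernels are learned, and framework layers also handle multiple channels, biases, strides, and padding.

```python
def conv2d_valid(img, kernel):
    """2-D 'valid' cross-correlation (the operation CNN layers compute) on lists of lists."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [[sum(img[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
             for c in range(w - kw + 1)]
            for r in range(h - kh + 1)]


def relu(fmap):
    """Element-wise max(0, v): the non-saturating nonlinearity."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2(fmap):
    """Non-overlapping 2x2 max-pooling; an odd trailing row/column is dropped."""
    return [[max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
             for c in range(0, len(fmap[0]) - 1, 2)]
            for r in range(0, len(fmap) - 1, 2)]


image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
kernel = [[-1, 0], [0, 1]]          # responds to an increase along the diagonal
features = max_pool2(relu(conv2d_valid(image, kernel)))
print(features)  # [[5]]
```

Stacking several such stages, each feeding the next, is what lets the network build complex features from simple ones.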

4.1 AlexNet

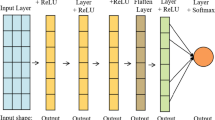

AlexNet is a large, deep architecture that has been used in various applications. It has about 650,000 neurons and 60 million parameters, and it was designed to classify 1.2 million images into 1000 classes. It contains five convolutional layers followed by three fully-connected layers, the last of which feeds a 1000-way softmax. To improve performance and reduce training time, Rectified Linear Units (ReLUs), which have a non-saturating nonlinearity, are used as neurons (Krizhevsky et al. 2012). The ReLU nonlinearity is applied to the output of every convolutional and fully-connected layer. Dropout, a randomization mechanism, is applied in the first two fully-connected layers to reduce overfitting, although it roughly doubles the number of iterations required to converge (Krizhevsky et al. 2012).
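The parameter count quoted above can be checked with simple arithmetic. The sketch below assumes the layer shapes reported by Krizhevsky et al. (2012), in which conv2, conv4, and conv5 are split into two groups across two GPUs; the 7-way output layer at the end is a hypothetical transfer-learning head for the seven modulation types, not a detail reported in this paper.

```python
def conv_params(k, c_in, c_out, groups=1):
    """Weights + biases of a k x k convolution whose channels are split into `groups`."""
    return k * k * (c_in // groups) * c_out + c_out


def fc_params(n_in, n_out):
    """Weights + biases of a fully-connected layer."""
    return n_in * n_out + n_out


alexnet = {
    "conv1": conv_params(11, 3, 96),
    "conv2": conv_params(5, 96, 256, groups=2),
    "conv3": conv_params(3, 256, 384),
    "conv4": conv_params(3, 384, 384, groups=2),
    "conv5": conv_params(3, 384, 256, groups=2),
    "fc6": fc_params(6 * 6 * 256, 4096),
    "fc7": fc_params(4096, 4096),
    "fc8": fc_params(4096, 1000),
}
total = sum(alexnet.values())
fc_share = (alexnet["fc6"] + alexnet["fc7"] + alexnet["fc8"]) / total
print(f"{total:,}")          # 60,965,224
print(f"{fc_share:.1%}")     # 96.2%

seven_way_head = fc_params(4096, 7)   # hypothetical replacement for fc8
```

Most of the parameters (about 96%) are in the three fully-connected layers, while a 7-way output layer would need only 28,679 parameters.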

4.2 Deep VGGNet

Following the success of CNNs in image classification, a simple and effective CNN design, named VGG, was investigated (Simonyan and Zisserman 2014). The VGG-16 and VGG-19 networks have 16 and 19 weight layers, respectively. The VGG network is larger than AlexNet and relies on stacks of 3 × 3 filters. Stacking 3 × 3 filters removes the need for large filters, and using small filters reduces computational complexity by decreasing the number of parameters. The complexity of the network can be further regulated by inserting 1 × 1 convolutions between convolutional layers, as in some VGG configurations; such a layer learns a linear combination of the resulting feature maps.
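The parameter saving from stacking small filters can be quantified. Following the argument of Simonyan and Zisserman (2014), the sketch below compares the weights (biases ignored) of stacked 3 × 3 layers with those of a single larger filter covering the same receptive field, assuming C input and C output channels per layer; the value C = 512 and the function names are only illustrative.

```python
def stacked_3x3_weights(n_layers, channels):
    """Weights of n stacked 3x3 conv layers, each with `channels` inputs and outputs."""
    return n_layers * 3 * 3 * channels * channels


def single_kxk_weights(k, channels):
    """Weights of one k x k conv layer with `channels` inputs and outputs."""
    return k * k * channels * channels


C = 512
for n_layers, k in [(2, 5), (3, 7)]:
    stacked = stacked_3x3_weights(n_layers, C)
    single = single_kxk_weights(k, C)
    print(f"{n_layers} x (3x3): {stacked:,}   one {k}x{k}: {single:,}   ratio {stacked / single:.2f}")
```

Three stacked 3 × 3 layers need \(27C^2\) weights against \(49C^2\) for one 7 × 7 layer, about 45% fewer, while also inserting two extra ReLU nonlinearities.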

Max-pooling is applied after each block of convolutional layers, and padding is used to preserve the spatial resolution within each block. VGG achieves particularly good results in localization and image identification tasks. Its main limitation is high computational cost, even with small filters, which is mainly attributed to its roughly 140 million parameters (Khan et al. 2020).

The VGG-16 network contains thirteen convolutional layers and three fully-connected layers. Two stacked 3 × 3 convolution masks have an effective receptive field of 5 × 5, and using more layers increases the expressiveness of the features. The convolutional layers are followed by ReLU layers and a max-pooling or average-pooling operation. Pooling layers reduce the spatial dimensions of the features, which lowers the computational cost and can enhance classification performance. The final output layers are fully-connected layers.
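The receptive-field and pooling statements above can be checked numerically. The sketch assumes stride-1 3 × 3 convolutions with padding 1 (so they keep the spatial size), five 2 × 2 stride-2 max-pooling stages, and a 224 × 224 input as in the original VGG design; the function names are hypothetical.

```python
def stacked_receptive_field(n_layers, k=3):
    """Receptive-field side of n stacked stride-1 k x k convolutions."""
    return 1 + n_layers * (k - 1)


def spatial_sizes(input_size=224, n_pools=5):
    """Feature-map side after each 2x2, stride-2 pooling stage."""
    sizes = [input_size]
    for _ in range(n_pools):
        sizes.append(sizes[-1] // 2)
    return sizes


print(stacked_receptive_field(2), stacked_receptive_field(3))  # 5 7
sizes = spatial_sizes()
print(sizes)                    # [224, 112, 56, 28, 14, 7]
print(sizes[-1] ** 2 * 512)     # 25088 values enter the first fully-connected layer
```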

The VGG-19 network is characterized by its simplicity: it stacks 3 × 3 convolution masks on top of each other to increase depth and uses max-pooling layers to reduce the volume of data. In the training phase, the convolutional layers extract features, and the max-pooling layers that follow them reduce the feature dimensionality. The first convolutional layers use 64 kernels to extract features from the input images. Fully-connected layers then form the feature vector. In the testing phase, the softmax output is used for classification, and 10-fold cross-validation is applied.
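The layer counts and the roughly 140 million parameters mentioned above can be verified from the layer configurations. The sketch assumes configurations D (VGG-16) and E (VGG-19) of Simonyan and Zisserman (2014), a 224 × 224 RGB input, and a 1000-class output; the helper names are hypothetical.

```python
def conv3x3(c_in, c_out):
    return 9 * c_in * c_out + c_out          # weights + biases


def fully_connected(n_in, n_out):
    return n_in * n_out + n_out


# Output channels of each 3x3 conv layer; "M" marks a 2x2 max-pooling layer (no parameters).
CONFIGS = {
    "VGG-16": [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
               512, 512, 512, "M", 512, 512, 512, "M"],
    "VGG-19": [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
               512, 512, 512, 512, "M", 512, 512, 512, 512, "M"],
}


def vgg_parameters(cfg, n_classes=1000):
    total, c_in = 0, 3                       # RGB input
    for layer in cfg:
        if layer != "M":
            total += conv3x3(c_in, layer)
            c_in = layer
    # five poolings turn a 224 x 224 input into 7 x 7 x 512 features
    total += fully_connected(7 * 7 * 512, 4096)
    total += fully_connected(4096, 4096)
    total += fully_connected(4096, n_classes)
    return total


for name, cfg in CONFIGS.items():
    n_conv = sum(layer != "M" for layer in cfg)
    print(f"{name}: {n_conv} conv + 3 FC layers, {vgg_parameters(cfg):,} parameters")
# VGG-16: 13 conv + 3 FC layers, 138,357,544 parameters
# VGG-19: 16 conv + 3 FC layers, 143,667,240 parameters
```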

5 Simulation experiments

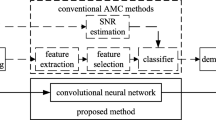

In this paper, we use CNN models for modulation classification in wireless communication systems. The communication system with modulation classification is illustrated in Fig. 2. Seven modulation types are considered: BPSK, QPSK, 8PSK, 16PSK, 8QAM, 16QAM, and 32QAM. A CNN with three convolutional layers is used as a classifier. In addition, we investigate pre-trained networks, namely AlexNet, VGG-16, and VGG-19, as modulation type classifiers. The inputs of these classifiers are either the constellation diagrams or their RTs. We generated more than 15,000 constellation diagram images for the different modulation types at different SNRs. In the training phase, the RTs are used to enhance classification accuracy in the presence of AWGN and fading channel effects.

The CNN with three convolutional layers has been applied to the constellation diagram images and their RTs, and the results are shown in Fig. 3. For an AWGN channel, with the RTs of the constellation diagrams, the classification accuracy reaches 100% for the different modulation types at an SNR of 5 dB. For BPSK, the RT-based classification achieves good accuracy at an SNR of −5 dB. At −5 dB, the classification of 32QAM based on constellation diagrams also achieves good accuracy. For the AlexNet classifier, the accuracy reaches 100% for the different modulation types at an SNR of 10 dB when constellation diagrams are used, as shown in Fig. 4. For VGG-16, 8QAM is classified with high accuracy at low SNRs, as shown in Fig. 5. The accuracy reaches 100% for the different modulation types at 5 dB when RTs are used, but only at 10 dB when the constellation diagrams are used. With VGG-19, the accuracy is 100% for the different modulation types at an SNR of 5 dB when the RTs are used, as shown in Fig. 6.
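Per-modulation accuracy curves such as those discussed above are obtained by grouping test decisions by true modulation type and SNR. The sketch below shows this bookkeeping; the record format and function name are hypothetical, and the toy records in the usage line are not results from this study.

```python
from collections import defaultdict


def accuracy_by_class_and_snr(records):
    """records: iterable of (true_label, predicted_label, snr_db) tuples.

    Returns {(true_label, snr_db): fraction of those test images classified correctly}.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for true_label, predicted, snr_db in records:
        key = (true_label, snr_db)
        total[key] += 1
        correct[key] += true_label == predicted   # bool counts as 0 or 1
    return {key: correct[key] / total[key] for key in total}


toy = [("BPSK", "BPSK", -5), ("BPSK", "QPSK", -5), ("32QAM", "32QAM", 5), ("32QAM", "32QAM", 5)]
print(accuracy_by_class_and_snr(toy))  # {('BPSK', -5): 0.5, ('32QAM', 5): 1.0}
```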

For the fading channel, the CNN classifier achieves higher accuracy with the RTs of the constellation diagrams than with the constellation diagrams alone, as shown in Fig. 7. At low SNRs, the classification accuracy of 16QAM is good with constellation diagrams, while with RTs, 32QAM achieves good classification accuracy at low SNRs. The accuracies are lower than those obtained over AWGN channels. For the AlexNet classifier, the accuracy is 100% at an SNR of 10 dB, and both 8PSK and QPSK are classified with high accuracy at low SNRs, as shown in Fig. 8. As shown in Fig. 9, 16QAM achieves high classification accuracy at low SNRs when the VGG-16 classifier is applied. Figure 10 illustrates the accuracy of the VGG-19 classifier over a fading channel.
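The fading channel model used in these experiments is not detailed here. As an assumption for illustration only, the sketch below applies flat Rayleigh block fading, \(r_k = h\,a_k + n_k\) with one \(h \sim \mathcal{CN}(0,1)\) per block, which rotates and scales the whole constellation within a block; `block_len=1` gives independent per-symbol fading that smears the points into a disc. The function name and parameters are hypothetical.

```python
import cmath
import math
import random


def rayleigh_block_fading(symbols, snr_db, block_len, rng):
    """Apply flat Rayleigh block fading plus AWGN to complex symbols.

    One gain h ~ CN(0, 1) is drawn per block of `block_len` symbols, so the
    average SNR equals Es/N0 = snr_db for unit-energy symbols (E|h|^2 = 1).
    """
    sigma = math.sqrt(10 ** (-snr_db / 10) / 2)     # per-component noise std
    out = []
    h = 0j
    for k, a in enumerate(symbols):
        if k % block_len == 0:                      # start of a new fading block
            h = complex(rng.gauss(0, math.sqrt(0.5)), rng.gauss(0, math.sqrt(0.5)))
        out.append(h * a + complex(rng.gauss(0, sigma), rng.gauss(0, sigma)))
    return out


qpsk = [cmath.exp(2j * math.pi * k / 4) for k in range(4)] * 250
rx = rayleigh_block_fading(qpsk, snr_db=15, block_len=len(qpsk), rng=random.Random(7))
```

Because a deep fade lowers the instantaneous SNR well below its average, diagrams from faded blocks are noisier than AWGN diagrams at the same average SNR, which is consistent with the lower accuracies reported above.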

6 Conclusion and future work

In this paper, we investigated the use of RTs of constellation diagrams for modulation classification with CNNs. For several modulation types, the RTs improve classifier performance and raise the accuracy to acceptable values at low SNRs. The proposed classifier has been evaluated at different SNRs over AWGN and fading channels. In addition, we investigated pre-trained networks, namely AlexNet, VGG-16, and VGG-19, for modulation classification; these classifiers also perform well. The fading channel reduces the classification accuracy. The proposed CNN achieves higher accuracy than the other classifiers at low SNRs. In future work, other feature extraction methods, such as the Gabor transform and Speeded-Up Robust Features (SURF), could be applied to the constellation diagrams to enhance the accuracy of the classifiers. In addition, SVD could be combined with the RT, as this combination has previously succeeded in classifying optical modulation formats.

Availability of data and material

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Abu-Romoh M, Aboutaleb A, Rezki Z (2018) Automatic modulation classification using moments and likelihood maximization. IEEE Commun Lett 22(5):938–941

Ali A, Yangyu F (2017) Automatic modulation classification using deep learning based on sparse autoencoders with nonnegativity constraints. IEEE Signal Process Lett 24(11):1626–1630

Al-Makhlasawy RM, Elnaby MA, El-Khobby HA, El-Rabaie S, El-Samie FE (2012) Automatic modulation recognition in OFDM systems using cepstral analysis and support vector machines. J Telecommun Syst Manag 1(105):2167–0919

Al-Makhlasawy RM, Hefnawy AA, Abd Elnaby MM, Abd El‐Samie FE (2020) Modulation classification in the presence of adjacent channel interference using convolutional neural networks. Int J Commun Syst. https://doi.org/10.1002/dac.4295

Aslam MW, Zhu Z, Nandi AK (2012) Automatic modulation classification using combination of genetic programming and KNN. IEEE Trans Wirel Commun 11(8):2742–2750

Dobre OA, Abdi A, Bar-Ness Y, Su W (2007) Survey of automatic modulation classification techniques: classical approaches and new trends. IET Commun 1(2):137–156

Eldemerdash YA, Dobre OA, Öner M (2016) Signal identification for multiple-antenna wireless systems: achievements and challenges. IEEE Commun Surv Tutor 18(3):1524–1551

Eltaieb RA, Farghal AE, HossamEl-din HA, Saif WS, Ragheb A, Alshebeili SA, Shalaby HM, Abd El-Samie FE (2019) Efficient classification of optical modulation formats based on singular value decomposition and radon transformation. J Lightwave Technol 38(3):619–631

Goldsmith A (2005) Wireless communications. Cambridge University Press

Hu S, Yao YD, Yang Z (2014) MAC protocol identification using support vector machines for cognitive radio networks. IEEE Wirel Commun 21(1):52–60

Jean N, Burke M, Xie M, Davis WM, Lobell DB, Ermon S (2016) Combining satellite imagery and machine learning to predict poverty. Science 353(6301):790–794

Jiang K, Zhang J, Wu H, Wang A, Iwahori Y (2020) A novel digital modulation recognition algorithm based on deep convolutional neural network. Appl Sci. https://doi.org/10.3390/app10031166

Keshk ME, Abd El-Naby M, Al-Makhlasawy RM, El-Khobby HA, Hamouda W, Abd Elnaby MM, Abd El-Samie FE (2015) Automatic modulation recognition in wireless multi-carrier wireless systems with cepstral features. Wirel Pers Commun 81(3):1243–1288

Khan A, Sohail A, Zahoora U, Qureshi AS (2020) A survey of the recent architectures of deep convolutional neural networks. Artif Intell Rev 53(8):5455–5516

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 25:1097–1105

Lin Y, Tu Y, Dou Z, Wu Z (2017) The application of deep learning in communication signal modulation recognition. In: IEEE/CIC international conference on communications in China (ICCC), pp 1–5

Liu X, Xie L, Wang Y, Zou J, Xiong J, Ying Z, Vasilakos A (2020) Privacy and security issues in deep learning: a survey. IEEE Access 9:4566–4593

Mendis GJ, Wei J, Madanayake A (2016) Deep learning-based automated modulation classification for cognitive radio. In: IEEE international conference on communication systems (ICCS), pp 1–6

Meng F, Chen P, Wu L, Wang X (2018) Automatic modulation classification: a deep learning enabled approach. IEEE Trans Veh Technol 67(11):10760–10772

Min S, Lee B, Yoon S (2017) Deep learning in bioinformatics. Brief Bioinform 18(5):851–869

Nosratabadi S, Mosavi A, Duan P, Ghamisi P, Filip F, Band SS, Gandomi AH (2020) Data science in economics: comprehensive review of advanced machine learning and deep learning methods. Mathematics. https://doi.org/10.3390/math8101799

O’Shea TJ, Roy T, Clancy TC (2018) Over-the-air deep learning based radio signal classification. IEEE J Sel Top Signal Process 12(1):168–179

Peng S, Jiang H, Wang H, Alwageed H, Yao YD (2017) Modulation classification using convolutional neural network based deep learning model. In: 26th IEEE wireless and optical communication conference (WOCC), pp 1–5

Peng S, Jiang H, Wang H, Alwageed H, Zhou Y, Sebdani MM, Yao YD (2018) Modulation classification based on signal constellation diagrams and deep learning. IEEE Trans Neural Netw Learn Syst 30(3):718–727

Radon J (1917) Über die Bestimmung von Funktionen durch ihre Integralwerte längs gewisser Mannigfaltigkeiten. Class Pap Mod Diagn Radiol 69:262–277

Radon J (1986) On the determination of functions from their integral values along certain manifolds. IEEE Trans Med Imaging 5(4):170–176

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint. http://arxiv.org/abs/1409.1556

Wang F, Wang Y, Chen X (2017) Graphic constellations and DBN based automatic modulation classification. In: IEEE 85th vehicular technology conference (VTC Spring), pp 1–5

Wikipedia (2005) Radon transform. https://en.wikipedia.org/wiki/Radon_transform. Accessed 5 Jan 2021

Wu Y, Schuster M, Chen Z, Le QV, Norouzi M, Macherey W, Krikun M, Cao Y, Gao Q, Macherey K, Klingner J (2016) Google’s neural machine translation system: bridging the gap between human and machine translation. arXiv preprint. http://arxiv.org/abs/1609.08144

Xu JL, Su W, Zhou M (2010) Likelihood-ratio approaches to automatic modulation classification. IEEE Trans Syst Man Cybern Part C (Appl Rev) 41(4):455–469

Zhang H, Huang M, Yang J, Sun W (2020) A data preprocessing method for automatic modulation classification based on CNN. IEEE Commun Lett 25(4):1206–1210

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB). The authors did not receive support from any organization for the submitted work.

Ethics declarations

Conflict of interest

No conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ghanem, H.S., Al-Makhlasawy, R.M., El-Shafai, W. et al. Wireless modulation classification based on Radon transform and convolutional neural networks. J Ambient Intell Human Comput 14, 6263–6272 (2023). https://doi.org/10.1007/s12652-021-03650-7

DOI: https://doi.org/10.1007/s12652-021-03650-7