Abstract

We consider multicomponent local Poisson structures of the form \({\mathcal {P}}_3 + {\mathcal {P}}_1\), under the assumption that the third-order term \({\mathcal {P}}_3\) is Darboux–Poisson and nondegenerate, and study the Poisson compatibility of two such structures. We give an algebraic interpretation of this problem in terms of Frobenius algebras and reduce it to the classification of Frobenius pencils, i.e. linear families of Frobenius algebras. Then, we completely describe and classify Frobenius pencils under minor genericity conditions. In particular, we show that each Frobenius pencil is a subpencil of a certain maximal pencil. These maximal pencils are uniquely determined by a combinatorial object, a directed rooted in-forest with edges and vertices labelled by numerical marks. They are also naturally related to certain pencils of Nijenhuis operators. We show that common Frobenius coordinate systems admit an elegant invariant description in terms of the corresponding Nijenhuis pencils.

1 Introduction

1.1 Foreword

A Nijenhuis operator is a (1,1)-tensor field \(L= L^i_j \) on an n-dimensional manifold M whose Nijenhuis torsion vanishes. Nijenhuis geometry, as initiated in [6] (where all necessary definitions can also be found) and further developed in [7, 8, 28], studies Nijenhuis operators and their applications. There are many topics in mathematics and mathematical physics in which Nijenhuis operators appear naturally; this paper is devoted to the study of \(\infty \)-dimensional compatible Poisson brackets of type \({\mathcal {P}}_3 + {\mathcal {P}}_1\), where the lower index i indicates the order of the homogeneous bracket \({\mathcal {P}}_i\) (the necessary definitions will be given in Sect. 1.2). Nijenhuis geometry allows us to reformulate the initial problem, which originates in mathematical physics, first in the language of algebra and then in the language of differential geometry, and finally to solve it by combining the machinery of differential geometry with that of algebra. Translating the results back gives a full description of (nondegenerate) compatible Poisson brackets of type \({\mathcal {P}}_3 + {\mathcal {P}}_1\) such that \({\mathcal {P}}_3\) is Darboux–Poisson.

1.2 Mathematical Setup

The construction below is a special case of the general approach suggested in [25]. For \(n=1\), the construction can be found in [24], see also [12, 35, 42].

We work in an open disc \(U\subset {\mathbb {R}}^n\) with coordinates \(u^1,\ldots ,u^n\). Our constructions are invariant with respect to coordinate changes so one may equally think of \((u^1,\ldots ,u^n)\) as a coordinate chart on a smooth manifold M.

Consider the jet bundles (of curves) over U. Recall that for a point \({\textsf{p}}\in {U}\), the \(k^{\text {th}}\) jet space \(J^k_{{\textsf{p}}}U\) at this point is the set of equivalence classes of smooth curves \(c:(-\varepsilon ,\varepsilon ) \rightarrow U\) such that \(c(0)={\textsf{p}}\). The parameter of the curves c will always be denoted by x. The equivalence relation is as follows: two curves are equivalent if they coincide at \(x=0\) up to terms of order \(k+1\), that is, if their Taylor expansions at \(x=0\) agree up to and including order k.

For example, for \(k=0\), the space \(J^0_{{\textsf{p}}}U\) contains only one element and the definition of \(J_{{\textsf{p}}}^1U\) coincides with one of the standard definitions of the tangent space \(T_{{\textsf{p}}}U\).

It is known that \(J^k_{{\textsf{p}}}U\) is naturally equipped with the structure of a vector space of dimension \(n\times k\) with coordinates denoted by

$$\begin{aligned} u^i_{x^j}, \qquad i=1,\ldots ,n, \quad j=1,\ldots ,k. \end{aligned}$$(1)

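Namely, a curve \(c(x)= (u^1(x),\ldots ,u^n(x))\) with \(c(0)={{\textsf{p}}}\) viewed as an element of \(J_{{\textsf{p}}}^kU\) has coordinates

$$\begin{aligned} u^i_{x^j} = \frac{d^j u^i}{dx^j}(0), \qquad i=1,\ldots ,n, \quad j=1,\ldots ,k. \end{aligned}$$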

We denote by \(J^kU\) the union \( \bigcup _{{{\textsf{p}}}\in U} J^k_{{\textsf{p}}}U\). It has a natural structure of a vector bundle of rank \(k\times n\) over U. The coordinates \((u^1,\ldots ,u^n)\) on U and (1) on \(J^k_{{\textsf{p}}}U\) generate a coordinate system

$$\begin{aligned} \bigl (u^i,\ u^i_{x^j}\bigr ), \qquad i=1,\ldots ,n, \quad j=1,\ldots ,k, \end{aligned}$$(2)

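on \(J^kU\) adapted to the bundle structure. Any \(C^\infty \) curve \(c:[a,b] \rightarrow U, \ x\mapsto (u^1(x),\ldots ,u^n(x)) \) naturally lifts to a curve \({{\widehat{c}}}\) on \(J^kU\) by

$$\begin{aligned} {{\widehat{c}}}(x) = \Bigl (u^i(x),\ \frac{d^j u^i}{dx^j}(x)\Bigr ), \qquad i=1,\ldots ,n, \quad j=1,\ldots ,k. \end{aligned}$$(3)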

Next, for every \({{\textsf{p}}}\in U\) denote by \({\Pi }[J^k_{{\textsf{p}}}U]\) the algebra of polynomials in variables (1) on \(J^k_{{\textsf{p}}}U\). It has a natural structure of an infinite-dimensional vector bundle over U. Let \({\mathfrak {A}}_k\) denote the algebra of \(C^\infty \)-smooth sections of the bundle \({\Pi }[J^k_{{\textsf{p}}}U]\). Notice that we have a natural inclusion \({\mathfrak {A}}_k \subset {\mathfrak {A}}_{k+1}\); we set \({\mathfrak {A}}=\bigcup _{k=0}^\infty {\mathfrak {A}}_k\). In simple terms, the elements of \({\mathfrak {A}}\) are finite sums of finite products of coordinates

$$\begin{aligned} u^i_{x^j}, \qquad i=1,\ldots ,n, \quad j=1,2,\ldots , \end{aligned}$$(4)

with coefficients being \(C^\infty \)-functions on U. The summands in this sum, i.e. terms of the form \(a_{i_1 \ldots i_n}^{j_1 \ldots j_n}(u) (u^1_{x^{i_1}})^{j_1} \ldots (u^n_{x^{i_n}})^{j_n} \) with \(a_{i_1 \ldots i_n}^{j_1 \ldots j_n}(u)\not \equiv 0\), will be called differential monomials. The differential degree of such a differential monomial is the number \(i_1j_1+ i_2j_2+ \cdots +i_nj_n\). For example, \(f(u) u^1_{x^2} (u^2_{x})^2\) has differential degree \(2\times 1+ 1\times 2=4\). The differential degree of an element of \({\mathfrak {A}}\) is the maximum of the differential degrees of its differential monomials; it is a nonnegative integer. Elements of \({\mathfrak {A}}\) will be called differential polynomials.
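The definition of the differential degree can be illustrated by a short Python sketch. The encoding of a monomial as a list of triples and the function names are assumptions made for this illustration only; the coefficient function is omitted because it does not contribute to the degree.

```python
# Hypothetical encoding (not from the paper): a differential monomial is a
# list of factors (component, order, exponent), each standing for
# (u^component_{x^order}) ** exponent. The smooth coefficient a(u) is left
# out, since it has differential degree 0.

def monomial_degree(factors):
    """Differential degree: sum of order * exponent over all factors."""
    return sum(order * exponent for _component, order, exponent in factors)


def polynomial_degree(monomials):
    """Differential degree of a sum: the maximum over its monomials."""
    return max((monomial_degree(m) for m in monomials), default=0)


# f(u) * u^1_{x^2} * (u^2_x)^2, the example from the text
example = [(1, 2, 1), (2, 1, 2)]
print(monomial_degree(example))  # 4
```

A sum of monomials is passed to `polynomial_degree` as a list of such factor lists; a function on U alone corresponds to the empty factor list and has degree 0.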

Generators of this algebra are coordinates \(u^i_{x^j}\) and functions on U. Every element of \({\mathfrak {A}}\) can be obtained from finitely many generators using finitely many summation and multiplication operations.

The following two linear mappings will be important for us. The first one, called the total x-derivative and denoted by D (another standard notation used in the literature is \(\tfrac{d}{dx}\)), is defined as follows. One requires that D satisfies the Leibniz rule and then defines it on the generators of \({\mathfrak {A}}\), i.e. on functions f(u) and coordinates (4), by setting

$$\begin{aligned} D(f) = \sum _{i=1}^n \frac{\partial f}{\partial u^i}\, u^i_{x}, \qquad D\bigl (u^i_{x^j}\bigr ) = u^i_{x^{j+1}}. \end{aligned}$$

Clearly, the operation D increases the differential degree by at most one.
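As a minimal illustration (a sketch for \(n=1\), writing \(u=u^1\), and assuming the standard action of the total derivative on generators, \(D(f(u)) = f'(u)\,u_x\) and \(D(u_{x^j}) = u_{x^{j+1}}\)), the Leibniz rule gives

$$\begin{aligned} D\bigl (f(u)\, u_x^2\bigr ) = f'(u)\, u_x^3 + 2 f(u)\, u_x u_{x^2}. \end{aligned}$$

The initial monomial has differential degree 2, and both monomials on the right-hand side have differential degree 3. The degree need not increase: for a constant function f on U, we have \(D(f)=0\).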

Next, denote by \(\tilde{{\mathfrak {A}}}\) the quotient space \({\mathfrak {A}}/{D({\mathfrak {A}})}\). The tautological projection \({\mathfrak {A}} \rightarrow \tilde{{\mathfrak {A}}}\) is traditionally denoted by \({\mathcal {H}} \mapsto \int {\mathcal {H}} dx \in \tilde{{\mathfrak {A}}}\). In simple terms, this means that we regard two differential polynomials \({\mathcal {H}}, \bar{{\mathcal {H}}}\) as equal if their difference is the total derivative of a differential polynomial.

Note that by construction, the operation D has the following remarkable property, which explains its name and also the notation \(\tfrac{d}{dx}\) sometimes used for D in the literature. For any curve \(c:[a,b]\rightarrow U\) whose lift (3) will be denoted by \({{\widehat{c}}}\) and for any element \({\mathcal {H}} \in {{\mathfrak {A}}}\) we have:

$$\begin{aligned} \frac{d}{dx}\Bigl ({\mathcal {H}}\bigl ({{\widehat{c}}}(x)\bigr )\Bigr ) = (D{\mathcal {H}})\bigl ({{\widehat{c}}}(x)\bigr ). \end{aligned}$$

The second mapping is a mapping from \({\mathfrak {A}}\) to n-tuples of elements of \({\mathfrak {A}}\). It will be denoted by \(\delta \) and called the variational derivative. Its \(i^{\text {th}}\) component will be denoted by \(\tfrac{\delta }{\delta u^i}\) and for an element \({\mathcal {H}}\in {\mathfrak {A}}\) it is given by the Euler–Lagrange formula:

$$\begin{aligned} \frac{\delta {\mathcal {H}}}{\delta u^i} = \sum _{k=0}^\infty (-1)^k D^k \left( \frac{\partial {\mathcal {H}}}{\partial u^i_{x^k}}\right) , \qquad u^i_{x^0}:= u^i \end{aligned}$$(5)

(only finitely many terms in the sum are different from zero, so the result is again a differential polynomial). It is known, see, e.g. [24], that for an element \({\mathcal {H}}\in {\mathfrak {A}}\) we have \(\delta {\mathcal {H}} =0\) if and only if \({\mathcal {H}}\) is a total x-derivative. It follows that the variational derivative does not depend on the choice of a differential polynomial representing a given equivalence class in \({\mathfrak {A}}/{D({\mathfrak {A}})}\). Hence, the mapping \(\delta \) induces a well-defined mapping on \(\tilde{{\mathfrak {A}}}\), which will be denoted by the same letter \(\delta \). One can think of \(\delta {\mathcal {H}}\) as a covector with entries from \(\tilde{{\mathfrak {A}}}\), because the transformation rule of its entries under the change of u-coordinates is a natural generalisation of the transformation rule for (0,1)-tensors.
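For instance, take \(n=1\), write \(u=u^1\), and assume the standard Euler–Lagrange expression \(\tfrac{\delta {\mathcal {H}}}{\delta u} = \sum _{k\ge 0} (-1)^k D^k \tfrac{\partial {\mathcal {H}}}{\partial u_{x^k}}\). Then a direct computation gives

$$\begin{aligned} \frac{\delta }{\delta u}\Bigl (\tfrac{1}{2}\, u_x^2\Bigr ) = -D(u_x) = -u_{x^2}, \qquad \frac{\delta }{\delta u}\bigl (u\, u_x\bigr ) = u_x - D(u) = 0. \end{aligned}$$

The second density is the total derivative \(u\,u_x = D\bigl (\tfrac{1}{2} u^2\bigr )\), so its variational derivative vanishes, in agreement with the statement above.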

Following [16, 17], let us define a (homogeneous, nondegenerate) Poisson bracket of order 1. We choose a contravariant flat metric \(g=g^{ij}\) of any signature whose Levi-Civita connection will be denoted by \(\nabla = (\Gamma _{jk}^i)\). Next, consider the following operation \({{\mathcal {A}}}_g: \tilde{{\mathfrak {A}}} \times \tilde{{\mathfrak {A}}} \rightarrow \tilde{{\mathfrak {A}}}\): for two elements \({\mathcal {H}}, {\bar{{\mathcal {H}}}} \in \tilde{{\mathfrak {A}}} \), we set

In the formula above and later in the text, we sum over repeating indices and assume \(\Gamma ^{is}_{j} = \Gamma ^s_{pj} g^{pi}\). The components \(\Gamma ^{is}_{j}\) will be called contravariant Christoffel symbols; when several metrics are involved, the index is always raised by the metric to which the Christoffel symbols belong. A common way to write the operation \({{\mathcal {A}}}_g\), which we will also use in our paper, assumes that it is applied to \(\tfrac{\delta {\mathcal {H}}}{\delta u^\beta }\) and the result is multiplied by \(\tfrac{\delta \bar{{\mathcal {H}}}}{\delta u^\alpha }\) (followed, of course, by summation and projection to \(\tilde{{\mathfrak {A}}}\)):

It is known, see, e.g. [16,17,18], that the operation \({{\mathcal {A}}}_g\) given by (6) defines a Poisson bracket on \(\tilde{{\mathfrak {A}}}\), that is, it is skew-symmetric and satisfies the Jacobi identity. Moreover, one can show that the operation constructed by g and \(\Gamma \) via (6) defines a Poisson bracket if and only if g is flat, that is, its curvature is zero, and \(\Gamma _{jk}^i\) is the Levi-Civita connection of g. It is also known that the construction (6) does not depend on the coordinate system on U.

Next, let us define a (nondegenerate, homogeneous) Darboux–Poisson structure of order 3. We choose a nondegenerate contravariant flat metric \(h= h^{ij}\) of arbitrary signature and define the operation \({{\mathcal {B}}}_h:\tilde{{\mathfrak {A}}} \times \tilde{{\mathfrak {A}}} \rightarrow \tilde{{\mathfrak {A}}}\) by the formula:

In the formula, we have used the same conventions as above, i.e. assume summation over repeating indices. Moreover, similar to formula (7), we did not write \({\mathcal {H}}, {\bar{{\mathcal {H}}}}\) in the formula. They are assumed there as follows: the differential operator (8) is applied to \(\tfrac{\delta {\mathcal {H}}}{\delta u^\alpha }\), the result is multiplied by \(\tfrac{\delta \bar{{\mathcal {H}}}}{\delta u^\beta }\), and then we perform summation with respect to the repeating indices \(\alpha , \beta \).

As in the case of order 1, the operation \({{\mathcal {B}}}_h\) given by (8) defines a Poisson bracket on \(\tilde{{\mathfrak {A}}}\). The construction of this Poisson bracket is independent of the choice of coordinate system on U. However, in contrast to the case of order 1, the form (8) is not the most general form for a local Poisson bracket on \(\tilde{{\mathfrak {A}}}\) of order 3. In fact, the word Darboux indicates that in a certain coordinate system (flat coordinate system for h in our case), the coefficients of the Poisson structure are constants. In this Darboux coordinate system, the Christoffel symbols \(\Gamma ^i_{jk}\) are all zero and formula (8) reduces to

$$\begin{aligned} {{\mathcal {B}}}_h = h^{\alpha \beta } D^3 \end{aligned}$$(9)

with the components \(h^{\alpha \beta }\) of the metric h being constants.

Poisson structures \({\mathcal {P}}_1\) of order 1 are always Darboux–Poisson, but there are examples, see, e.g. [21, 22, 37], of Poisson structures \({\mathcal {P}}_3\) of order 3 which are not Darboux–Poisson.

Similar to the finite-dimensional case, a Poisson structure \({\mathcal {P}}\) and a choice of a ‘Hamiltonian’ \({\mathcal {H}}\in \tilde{{\mathfrak {A}}}\) allow one to define the Hamiltonian flow, which in our setup is a system of n PDEs for n functions \(u^i(t,x)\) of two variables, t and x. It is given by:

For example, in the case of the Poisson structure (6) for a Hamiltonian of degree 0 (i.e. for a function H on U), the Hamiltonian flow is given by

Such systems of PDEs are called Hamiltonian systems of hydrodynamic type.

In our paper, we study the compatibility of non-homogeneous Poisson structures of type \({\mathcal {P}}_3+{\mathcal {P}}_1\) such that the part of order 3 is Darboux–Poisson. That is, we have four nondegenerate Poisson structures: \({{\mathcal {A}}}_g\) and \({{\mathcal {A}}}_{{\bar{g}}}\) constructed by flat metrics g and \({\bar{g}}\) by (6), and \({{\mathcal {B}}}_h\) and \({{\mathcal {B}}}_{{\bar{h}}}\) constructed by flat metrics h and \({\bar{h}}\) by (8). We assume that \({{\mathcal {A}}}_g + {{\mathcal {B}}}_h\) and \({{\mathcal {A}}}_{{\bar{g}}} + {{\mathcal {B}}}_{{\bar{h}}}\) are (non-homogeneous) Poisson structures and ask when these structures are compatible in the sense that any of their linear combinations is a Poisson structure [32]. Since every such linear combination is automatically skew-symmetric, compatibility is equivalent to the Jacobi identity for each linear combination of \({{\mathcal {A}}}_g + {{\mathcal {B}}}_h\) and \({{\mathcal {A}}}_{{\bar{g}}} + {{\mathcal {B}}}_{{\bar{h}}}\).

The meaning of the word ‘nondegenerate’ relative to the Poisson structures under discussion is as follows: the metrics \(g,{\bar{g}}, h, {\bar{h}}\) which we used to construct them are nondegenerate, i.e. they are given by matrices with nonzero determinant. An additional nondegeneracy condition, natural from the viewpoint of mathematical physics, is as follows: the operators \(R_h= {\bar{h}} h^{-1}\) and \(R_g= {\bar{g}} g^{-1}\) have n different eigenvalues. Under these conditions, we solve the problem completely: we find explicitly all pairs of such Poisson structures.

Let us comment on the assumption that \({\mathcal {P}}_3\) is Darboux–Poisson. The compatibility of two geometric Poisson brackets \({{\mathcal {P}}}_3 +{\mathcal {P}}_1\) and \(\bar{{\mathcal {P}}}_3 + \bar{{\mathcal {P}}}_1\) amounts to a highly overdetermined PDE system, which is expected to imply additional conditions on the third-order parts; among these, the Darboux–Poisson property is a natural candidate. Indeed, in the literature, we have not found any example of compatible Poisson brackets \({{\mathcal {P}}}_3 +{\mathcal {P}}_1\) and \(\bar{{\mathcal {P}}}_3 + \bar{{\mathcal {P}}}_1\) such that \({\mathcal {P}}_3\) and \(\bar{{\mathcal {P}}}_3\) are not Darboux–Poisson and nonproportional. Even in the case when \(\bar{{\mathcal {P}}}_3=0\), only a few examples are known, namely the compatible brackets for the WDVV system from [23] (this example is three-dimensional and, moreover, \({\mathcal {P}}_1=0\)) and a family of compatible brackets \({{\mathcal {P}}}_3 +{\mathcal {P}}_1\) and \(\bar{{\mathcal {P}}}_1\) constructed in [31] in dimensions 1 and 2.

Our main motivation came from the theory of integrable systems, in which many famous integrable systems have been constructed and analysed using compatible Poisson brackets. Those of the form \({\mathcal {P}}_3 + {\mathcal {P}}_1\), described and classified in the present paper, generalise compatible Poisson brackets related to KdV, Harry Dym, Camassa-Holm and Dullin-Gottwald-Holm equations. The applications of such new brackets will be developed in a series of separate papers, of which the first one has already appeared [10]. In that paper, we generalised the above equations to an arbitrary number of components and constructed new integrable PDE systems that have no low-component analogues. Note that in [10], we used the simplest pencil of compatible Poisson structures of type \({\mathcal {P}}_3 + {\mathcal {P}}_1\) (the so-called AFF-pencil from Sect. 2.3); more complicated pencils will lead to further new families of integrable multicomponent PDEs.

Let us also comment on a more physics-oriented approach to the construction above, see, e.g. [14]. Physicists often view x as a space coordinate, and \((u^1,\ldots ,u^n)\) as field coordinates. In the simplest situation, the values \(u^1,\ldots ,u^n\) at x may describe some physical values (e.g. pressure, temperature, charge, density, momenta). The total energy of the system is the integral over the x variable of some differential polynomial in \(u^1,\ldots ,u^n\), and the Hamiltonian functions \({\mathcal {H}}\in {\mathfrak {A}}\) then have the physical meaning of the density of the energy, i.e. of the integrand in the formula \(\text {\textbf{Energy}}(c)= \int {\mathcal {H}}({{\widehat{c}}})dx\). Further, it is assumed that the physical system is either periodic in x, or one is interested in fast decaying solutions as \(x\rightarrow \pm \infty \). Integration by parts then implies that the differential polynomial is defined up to the addition of a total derivative in x, which allows one to pass to \(\tilde{{\mathfrak {A}}}= {\mathfrak {A}}/{D({\mathfrak {A}})}\). The natural analogue of the differential of a function in this setup is the variational derivative \(\tfrac{\delta }{\delta u^\alpha }\), and, in fact, equation (10) is the natural analogue of the finite-dimensional equation \(\dot{u}= X_H\) (where \(X_H\) is the Hamiltonian vector field of a function H; it is given by \(X_H^j= P^{ij}\tfrac{\partial H}{\partial u^i}\) where \(P(u)^{ij}\) is the matrix of the Poisson structure; note the similarity with (10)). Generally, it is useful to keep in mind the physical interpretation and the analogy with the finite-dimensional case.

1.3 Brief Description of Main Results, Structure of the Paper and Conventions

In this paper, we address the following problems:

(A) Description of compatible pairs, \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}} + {{\mathcal {A}}}_{{\bar{g}}}\), of non-homogeneous Poisson brackets in arbitrary dimension n. In Theorems 1 and 2, we give an algebraic interpretation of this problem in terms of Frobenius algebras and reduce it to the classification of Frobenius pencils, i.e. linear families of Frobenius algebras. We do it under the following nondegeneracy assumption: the (1,1)-tensor \(R_h= {\bar{h}}h^{-1}\) (connecting h and \({\bar{h}}\)) has n different eigenvalues.

(B) Description and classification of Frobenius pencils. We reduce this purely algebraic problem to a differential geometric one (explicitly formulated in Sect. 6.1) and completely solve it using geometric methods. The nondegeneracy assumption is that the (1,1)-tensor \(R_g= {\bar{g}}g^{-1}\) (connecting g and \({\bar{g}}\)) has n different eigenvalues. More precisely, we show that each Frobenius pencil in question is a subpencil of a certain maximal pencil. We explicitly describe all maximal pencils, see Theorems 3, 4 and 5.

(B1) A maximal pencil that is generic in a certain sense corresponds to the well-known multi-Poisson structure discovered by M. Antonowicz and A. Fordy in [1] and studied by E. Ferapontov and M. Pavlov [20], see also [2, 3, 9]. We refer to it as the Antonowicz-Fordy-Frobenius pencil, or AFF-pencil. In Theorem 3, we show that, under an additional genericity assumption, every two-dimensional Frobenius pencil is contained in the AFF-pencil.

(B2) Our main result, Theorems 4 and 5, gives a complete description in the most general case. Theorem 4 constructs all maximal Frobenius pencils using AFF-pencils as building blocks. Theorem 5 states that each Frobenius pencil is a subpencil of a certain maximal pencil from Theorem 4. These maximal pencils are uniquely determined by some combinatorial data, namely a directed rooted in-forest \({\textsf{F}}\) with edges labelled by numbers \(\lambda _\alpha \) and vertices labelled by natural numbers whose sum is the dimension of the manifold. The AFF-pencil corresponds to the simplest case, when \({\textsf{F}}\) consists of a single vertex. To the best of our knowledge, the other Frobenius pencils and the corresponding bi-Poisson structures are new.

In addition, we show that common Frobenius coordinate systems admit an elegant invariant description in terms of the Nijenhuis pencil \({\mathcal {L}}\), see Theorem 4.

(C) Dispersive perturbations of compatible Poisson brackets of hydrodynamic type. The general question is as follows: given two compatible Poisson structures \({{\mathcal {A}}}_g\) and \({{\mathcal {A}}}_{{\bar{g}}}\) of the first order, can one find flat metrics h and \({\bar{h}}\) such that \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}} + {{\mathcal {A}}}_{{\bar{g}}}\) are compatible Poisson structures? This passage from a Poisson bracket of hydrodynamic type to a non-homogeneous Poisson bracket of higher order is called dispersive perturbation in the literature. We study dispersive perturbations of bi-Hamiltonian structures assuming that the third-order terms \({{\mathcal {B}}}_h\) and \({{\mathcal {B}}}_{{\bar{h}}}\) are Darboux–Poisson.

We describe all such perturbations under the assumption that both \(R_h={\bar{h}} h^{-1}\) and \(R_g={\bar{g}} g^{-1}\) have n different eigenvalues, and in particular, answer a question from [20] on dispersive perturbations of the AFF-pencil (Remark 3.2).

The results of this paper also have the following unexpected application. It turns out that the diagonal coordinates for the operator \(R_g={\bar{g}} g^{-1}\) are orthogonal separating coordinates for the metric g. In [11], we show that every system of orthogonal separating coordinates for a flat metric g of arbitrary signature can be constructed in this way. Namely, we reduce PDEs that define orthogonal separating coordinates to those studied in the present paper. This leads us to an explicit description of all orthogonal separating coordinates for metrics of constant curvature and thus solves a long-standing and actively studied problem in mathematical physics, see [11] for details.

The structure of the paper is as follows. In Sect. 2, we start with basic facts and constructions related to compatibility of homogeneous Poisson structures of order 1 and 3, then give a description of compatible non-homogeneous structures \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}} + {{\mathcal {A}}}_{{\bar{g}}}\) in terms of Frobenius algebras (Theorems 1 and 2), leading us to the classification problem for the so-called Frobenius pencils. We conclude this section with an example, the AFF-pencil. The AFF-pencil later plays the role of a building block in our general construction. Moreover, it provides an answer under a minor nondegeneracy assumption, see Theorem 3 in Sect. 3, where we also discuss a question of Ferapontov and Pavlov. Theorem 3 will be proved in Sect. 6.

In Sect. 4, we formulate the answer to the classification problem in its full generality. Theorem 5 (proved in Sect. 7) gives a description of Frobenius pencils in the ‘diagonal’ coordinates for \(g,{\bar{g}}\), and Theorem 4 (proved in Sect. 8) describes the corresponding Frobenius coordinates. In Sect. 4.2, we discuss the case of two blocks and give explicit formulas, see Theorem 6.

All objects in our paper are assumed to be of class \(C^\infty \); actually, our results show that most of them are necessarily real analytic.

Throughout the paper, we use \({{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_h\) to denote the Poisson structures of order 1 and 3 given by (7) and (8), respectively. Unless otherwise stated, the metrics we deal with (such as g, h, \({\bar{g}}\), \({\bar{h}}\), \(\dots \)) are contravariant.

2 Non-homogeneous Compatible Brackets and Frobenius Algebras

2.1 Basic Facts and Preliminary Discussion

Recall that we study the compatibility of two Poisson structures \({{\mathcal {B}}}_h+ {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}}+ {{\mathcal {A}}}_{{\bar{g}}}\), constructed by flat metrics \(h,{\bar{h}}, g, {\bar{g}}\); our goal is to construct all such pairs. Recall that, by definition, this means that for any constants \(\lambda , {{\bar{\lambda }}}\), the linear combination \(\lambda ({{\mathcal {B}}}_h+ {{\mathcal {A}}}_g) + {{\bar{\lambda }}}({{\mathcal {B}}}_{{\bar{h}}}+ {{\mathcal {A}}}_{{\bar{g}}})\) is a Poisson structure. Using that \({{\mathcal {B}}}\) and \({{\mathcal {A}}}\) have different orders, one obtains (see, e.g. [14])

Fact 1

Let h, \({\bar{h}}\), g and \({\bar{g}}\) be flat metrics. If \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}} + {{\mathcal {A}}}_{{\bar{g}}}\) are compatible Poisson structures, then the following holds:

(i) \({\mathcal {A}}_g\) and \({\mathcal {A}}_{{\bar{g}}}\) are compatible,

(ii) \({\mathcal {B}}_h\) and \({\mathcal {B}}_{{\bar{h}}}\) are compatible,

(iii) \({\mathcal {A}}_g\) and \({\mathcal {B}}_{h}\) are compatible (as well as \({\mathcal {A}}_{{\bar{g}}}\) and \({\mathcal {B}}_{{\bar{h}}}\)).

This Fact naturally leads us to considering pencils ( = linear combinations of metrics) \(\lambda h+ {{\bar{\lambda }}} {\bar{h}}\) and \(\lambda g + {{\bar{\lambda }}} {\bar{g}}\). We need the following definition:

Definition 1

(Dubrovin, [18, Definition 0.5]) Two contravariant flat metrics g and \({\bar{g}}\) are said to be Poisson compatible, if for each (nondegenerate) linear combination \({{\widehat{g}}} = \lambda g + {{\bar{\lambda }}} {\bar{g}}\), \(\lambda , {{\bar{\lambda }}} \in {\mathbb {R}}\), the following two conditions hold:

1. \({{\widehat{g}}}\) is flat;

2. the contravariant Christoffel symbols for g, \({\bar{g}}\) and \({{\widehat{g}}}\) are related as

$$\begin{aligned} {{\widehat{\Gamma }}}^{\alpha \beta }_s = \lambda \Gamma ^{\alpha \beta }_s + {{\bar{\lambda }}} {{\bar{\Gamma }}}^{\alpha \beta }_s. \end{aligned}$$(12)

In this case, the family of metrics \(\{ \lambda g + {{\bar{\lambda }}} {\bar{g}}\}_{\lambda ,{{\bar{\lambda }}}\in {\mathbb {R}}}\) is said to be a flat pencil of metrics.

The next fact explains the relationship between Poisson compatibility of flat metrics and compatibility of the corresponding Poisson structures.

Fact 2

Let h, \({\bar{h}}\), g and \({\bar{g}}\) be flat metrics. Then, the following statements are true:

(i) \({{\mathcal {A}}}_g\) and \({{\mathcal {A}}}_{{\bar{g}}}\) are compatible if and only if g and \({\bar{g}}\) are Poisson compatible.

(ii) If \({{\mathcal {B}}}_h\) and \({{\mathcal {B}}}_{{\bar{h}}}\) are compatible, then h and \({\bar{h}}\) are Poisson compatible.

(iii) If \({{\mathcal {B}}}_h\) and \({{\mathcal {A}}}_g\) are compatible, then h and g are Poisson compatible.

The (i)-part of Fact 2 is in [16], see also [19, 33, 34]. In view of formula (7), the two conditions from Definition 1 are nothing else but a geometric reformulation of the compatibility condition for Poisson structures of order one, which explains the name Poisson compatible. The (ii)-part is an easy corollary of [14, Theorem 3.2], see also the proof of Theorem 3 below. The (iii)-part follows from [29, Theorem 2.2].

Notice that unlike the case of Poisson structures of order 1, not every pair of Poisson-compatible metrics h and \({\bar{h}}\) (resp. h and g) leads to compatible Poisson structures of higher order \({{\mathcal {B}}}_h\) and \({{\mathcal {B}}}_{{\bar{h}}}\) as in (ii) (resp. \({{\mathcal {B}}}_h\) and \({{\mathcal {A}}}_g\) as in (iii)). Some extra conditions are required. These conditions will be explained below in Fact 4 (for h and g leading to compatible \({{\mathcal {B}}}_h\) and \({{\mathcal {A}}}_g\)) and Theorem 3 (for h and \({\bar{h}}\) leading to compatible \({{\mathcal {B}}}_h\) and \({{\mathcal {B}}}_{{\bar{h}}}\)).

Let us also recall the relation of compatible metrics to Nijenhuis geometry:

Fact 3

(see [19, 33, 34]) If g and \({\bar{g}}\) are Poisson compatible, then the (1,1)-tensor \(R= {\bar{g}} g^{-1}\) is a Nijenhuis operator. Moreover, if g is flat, R is a nondegenerate Nijenhuis operator with n different eigenvalues, and \({\bar{g}}:= Rg\) is flat, then \({\bar{g}}\) is Poisson compatible with g.

As already explained, the condition that \({{\mathcal {B}}}_h+ {{\mathcal {A}}}_g\) is a Poisson structure is a nontrivial geometric condition on the flat metrics h and g, stronger than Poisson compatibility in the sense of Definition 1. This condition was studied in the literature (see, e.g. [4]) and it was observed that the compatibility of homogeneous Poisson structures of order 3 and 1 is sometimes related to a certain algebraic structure. In our case, under the assumption that \({{\mathcal {B}}}_h\) is Darboux–Poisson, the algebraic structure which arises naturally is that of a Frobenius algebra.

Definition 2

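Let \(({\mathfrak {a}}, \star )\) be an n-dimensional commutative associative algebra over \({\mathbb {R}}\) endowed with a nondegenerate symmetric bilinear form \(b(\, , \,)\). The pair \(\bigl ( ({\mathfrak {a}}, \star ), b\bigr )\) is called a Frobenius algebra, if

$$\begin{aligned} b(\xi \star \eta , \zeta ) = b(\xi , \eta \star \zeta ) \quad \text {for all } \xi , \eta , \zeta \in {\mathfrak {a}}. \end{aligned}$$(13)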

The form b is then called a Frobenius form.

Notice that we do not assume that \({\mathfrak {a}}\) is unital, which makes our version slightly more general than the one used in the theory of Frobenius manifolds (see, e.g. [18]), or in certain branches of algebra. The bilinear form b may have any signature.

Condition (13) is linear in b, so all Frobenius forms (if we allow some of them to be degenerate) on a given commutative associative algebra form a vector space.

Fix a basis \(e^1, \dots , e^n \) in \({\mathfrak {a}}\). Below we will interpret \({\mathfrak {a}}\) as the dual \(({\mathbb {R}}^n)^*\) and for this reason, we interchange lower and upper indices. Consider the structure constants \(a_k^{ij}\) defined by \(e^i \star e^j = a_k^{ij} e^k\) and coefficients \(b^{ij}:=b(e^i, e^j)\) of the Frobenius form b. The algebra \({\mathfrak {a}}\) is Frobenius if and only if \(a_k^{ij}\) and \(b^{ij}\) satisfy the following conditions:

$$\begin{aligned} a^{ij}_k = a^{ji}_k, \qquad a^{ij}_s a^{sk}_m = a^{jk}_s a^{is}_m, \qquad b^{iq} a^{jk}_q = b^{kq} a^{ji}_q. \end{aligned}$$(14)

The dual \({\mathfrak {a}}^*\) has a natural structure of an affine space \({\mathbb {R}}^n\) with \(u^i\simeq e^i\) being coordinates on \({\mathfrak {a}}^*\simeq {\mathbb {R}}^n\). Thus, on \({\mathfrak {a}}^*\), we can introduce the contravariant metric \(g^{\alpha \beta }(u) = b^{\alpha \beta } + a_s^{\alpha \beta } u^s\) which is known to be flat (e.g. [29, Lemma 4.1]; the result also follows from [4]). What is special here is not the metric g itself, but the coordinate system \(u^1,\dots , u^n\) which establishes a relationship between g and the Frobenius algebra \({\mathfrak {a}}\). This leads us to
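The conditions on the structure constants and the construction of the metric \(g^{\alpha \beta }(u) = b^{\alpha \beta } + a_s^{\alpha \beta } u^s\) can be checked numerically on a small example. The following Python sketch is an illustration only: the chosen algebra (the dual numbers \({\mathbb {R}}[\varepsilon ]/(\varepsilon ^2)\) with basis \(1, \varepsilon \)), the 0-based index layout `a[i][j][k]` for \(a_k^{ij}\) and all function names are assumptions made here and are not taken from the paper. The code tests commutativity, associativity and the condition \(b(\xi \star \eta , \zeta ) = b(\xi , \eta \star \zeta )\) on basis elements; it does not test flatness of g.

```python
from itertools import product

# Assumed layout: a[i][j][k] = a_k^{ij}, i.e. e^i * e^j = sum_k a_k^{ij} e^k,
# with 0-based indices. Example algebra: dual numbers, e^0 = 1, e^1 = eps.
a = [[[0, 0], [0, 0]], [[0, 0], [0, 0]]]
a[0][0][0] = 1               # 1 * 1 = 1
a[0][1][1] = a[1][0][1] = 1  # 1 * eps = eps * 1 = eps; eps * eps = 0


def is_commutative(a):
    n = len(a)
    return all(a[i][j][k] == a[j][i][k]
               for i, j, k in product(range(n), repeat=3))


def is_associative(a):
    # (e^i * e^j) * e^k == e^i * (e^j * e^k), compared componentwise
    n = len(a)
    return all(
        sum(a[i][j][s] * a[s][k][m] for s in range(n))
        == sum(a[j][k][s] * a[i][s][m] for s in range(n))
        for i, j, k, m in product(range(n), repeat=4)
    )


def is_frobenius_form(a, b):
    # b(e^i * e^j, e^k) == b(e^i, e^j * e^k) for all basis elements
    n = len(a)
    return all(
        sum(a[i][j][s] * b[s][k] for s in range(n))
        == sum(b[i][s] * a[j][k][s] for s in range(n))
        for i, j, k in product(range(n), repeat=3)
    )


def metric(a, b, u):
    # g^{ij}(u) = b^{ij} + a_s^{ij} u^s
    n = len(a)
    return [[b[i][j] + sum(a[i][j][s] * u[s] for s in range(n))
             for j in range(n)] for i in range(n)]


b_good = [[0, 1], [1, 0]]  # b(x, y) = eps-coefficient of x * y
b_bad = [[0, 0], [0, 1]]   # symmetric, but violates the Frobenius condition

print(is_commutative(a), is_associative(a))  # True True
print(is_frobenius_form(a, b_good))          # True
print(is_frobenius_form(a, b_bad))           # False
print(metric(a, b_good, [3, 5]))             # [[3, 6], [6, 0]]
```

Integer entries are used so that the equalities are exact; for floating-point structure constants one would compare up to a tolerance instead.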

Definition 3

Let g be a flat metric. We say that \(u^1,\dots , u^n\) is a Frobenius coordinate system for g if

$$\begin{aligned} g^{\alpha \beta }(u) = b^{\alpha \beta } + a_s^{\alpha \beta } u^s, \end{aligned}$$(15)

where \(a_s^{\alpha \beta }\) are structure constants of a certain Frobenius algebra \({\mathfrak {a}}\) and \(b=(b^{\alpha \beta })\) is a (perhaps degenerate) Frobenius form for \({\mathfrak {a}}\).

Frobenius coordinates possess the following important property that can be easily checked.

Fact 4

(see [4] and [29]) Let g be a contravariant metric and \(u^1, \dots , u^n\) a coordinate system. The following two conditions are equivalent:

1. In coordinates \(u^1, \dots , u^n\), the contravariant Christoffel symbols \(\Gamma ^{\alpha \beta }_s\) of g are constant and symmetric in upper indices.

2. \(u^1, \dots , u^n\) are Frobenius coordinates, i.e. g is given by (15).

If either of these conditions holds, then g is flat and \(\Gamma ^{\alpha \beta }_s = -\frac{1}{2} \frac{\partial g^{\alpha \beta }}{\partial u^s}\).
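A one-dimensional check of the last formula may be helpful (a sketch only, with \(n=1\), \(u=u^1\), and constants a, b chosen for illustration). Let \(g^{11}(u) = b + a u\) on a domain where \(b+au\ne 0\), so that the covariant metric is \(g_{11} = (b+au)^{-1}\). With the convention \(\Gamma ^{is}_{j} = \Gamma ^s_{pj} g^{pi}\) for contravariant Christoffel symbols, we get

$$\begin{aligned} \Gamma ^1_{11} = \tfrac{1}{2}\, g^{11}\, \frac{\partial g_{11}}{\partial u} = -\frac{a}{2(b+au)}, \qquad \Gamma ^{11}_1 = \Gamma ^1_{11}\, g^{11} = -\frac{a}{2} = -\frac{1}{2}\frac{\partial g^{11}}{\partial u}. \end{aligned}$$

Thus the contravariant Christoffel symbol is constant, as Fact 4 predicts; in dimension one, flatness and the symmetry in upper indices are automatic.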

The relation of Frobenius coordinate systems to our problem is established by the following remarkable and fundamental statement:

Fact 5

[29, Theorem 2.2] Let g and h be two flat metrics. Then \({{\mathcal {B}}}_h+{{\mathcal {A}}}_g\) is Poisson if and only if there exists a coordinate system \(u^1,\dots , u^n\) such that the following holds:

1. \(g^{\alpha \beta } (u) = b^{\alpha \beta } + a_s^{\alpha \beta } u^s,\) where \(a_s^{\alpha \beta }\) are structure constants of a certain Frobenius algebra \({\mathfrak {a}}\), and b is a Frobenius form for \({\mathfrak {a}}\);

2. the entries \(h^{\alpha \beta }\) of h in this coordinate system are constant;

3. \(h=\bigl (h^{\alpha \beta }\bigr )\) is a Frobenius form for \({\mathfrak {a}}\), that is, \(h^{\alpha q} a^{\beta \gamma }_q = h^{\gamma q} a^{\beta \alpha }_q\).

This fact was independently obtained by P. Lorenzoni and R. Vitolo in their unpublished paper. The ‘if’ part of the statement follows from [41] by I. Strachan and B. Szablikowski, see also [15, Theorem 5.12].

The coordinates \((u^1,\dots ,u^n)\) from Fact 5 will be called Frobenius coordinates for the non-homogeneous Poisson structure \({{\mathcal {B}}}_h+{{\mathcal {A}}}_g\). Of course, Frobenius coordinates are not unique; indeed, they remain Frobenius under any affine coordinate change. This is the only freedom since the components of h are constant in Frobenius coordinates.
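Condition 3 of Fact 5 is a finite system of linear equations on the entries of h, so it can be tested directly on structure constants. The following is a minimal sketch, not part of the original text: the storage convention `a[i][j][s]` for \(a^{ij}_s\), the function name `is_frobenius_form` and the sample algebra \({\mathbb {R}}[x]/\langle x^2\rangle \) are assumptions made for illustration.

```python
from itertools import product


def is_frobenius_form(h, a):
    """Test h^{alpha q} a^{beta gamma}_q == h^{gamma q} a^{beta alpha}_q for all indices.

    h[i][j] stores h^{ij}; a[i][j][s] stores a^{ij}_s (0-based indices).
    """
    n = len(h)
    for al, be, ga in product(range(n), repeat=3):
        lhs = sum(h[al][q] * a[be][ga][q] for q in range(n))
        rhs = sum(h[ga][q] * a[be][al][q] for q in range(n))
        if lhs != rhs:
            return False
    return True


# Illustrative algebra R[x]/<x^2> with basis e^1 = 1, e^2 = x (indices 0 and 1 below).
n = 2
a = [[[0] * n for _ in range(n)] for _ in range(n)]
a[0][0][0] = 1                  # e^1 * e^1 = e^1
a[0][1][1] = a[1][0][1] = 1     # e^1 * e^2 = e^2 * e^1 = e^2

h_good = [[3, 5], [5, 0]]       # nondegenerate and satisfies condition 3
h_bad = [[3, 5], [5, 1]]        # violates condition 3 because h^{22} != 0

print(is_frobenius_form(h_good, a), is_frobenius_form(h_bad, a))
```

For this particular algebra the condition reduces to \(h^{22}=0\), which is why the second form is rejected.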

2.2 Reduction of our Problem to an Algebraic One and Frobenius Pencils

Definition 4

Let \(({\mathfrak {a}}, \star )\) and \((\bar{{\mathfrak {a}}},{{\bar{\star }}})\) be Frobenius algebras defined on the same vector space V and \(h, {\bar{h}}: V\times V \rightarrow {\mathbb {R}}\) the corresponding Frobenius forms. We will say that \(({\mathfrak {a}}, h)\) and \((\bar{{\mathfrak {a}}}, {\bar{h}})\) are compatible if the operation

$$\begin{aligned} \xi \, {{\widehat{\star }}}\, \eta := \xi \star \eta + \xi \, {{\bar{\star }}}\, \eta , \qquad \xi , \eta \in V, \end{aligned}$$(16)

defines the structure of a Frobenius algebra with the Frobenius form \(h + {\bar{h}}\).

Similarly, if \({\mathfrak {a}}\) and \(\bar{{\mathfrak {a}}}\) are Frobenius algebras each of which is endowed with two Frobenius forms b, h and \({\bar{b}}, {\bar{h}}\), respectively, then we say that the triples \(({\mathfrak {a}}, b, h)\) and \((\bar{{\mathfrak {a}}}, {\bar{b}}, {\bar{h}})\) are compatible if (16) defines a Frobenius algebra for which \(b+{\bar{b}}\) and \(h + {\bar{h}}\) are both Frobenius forms.

Formally, the definition requires that \(b + {\bar{b}}\) and \(h + {\bar{h}}\) be nondegenerate. This requirement is not essential. Indeed, if the operations \(\star \) and \({{\bar{\star }}}\) are associative, and the operation \({{\widehat{\star }}}:=\star + {{\bar{\star }}}\) given by (16) is also associative, then any linear combination \( \lambda \star + {{\bar{\lambda }}}{{\bar{\star }}}\) is associative. Moreover, if \({{\widehat{b}}}:= b + {\bar{b}}\), possibly degenerate, satisfies the condition (13) for \({{\widehat{\star }}}\), then the linear combination \(\lambda b + {{\bar{\lambda }}} {\bar{b}}\) also satisfies the condition (13) with respect to \( \lambda \star + {{\bar{\lambda }}}{{\bar{\star }}}\). Thus, passing to a suitable linear combination, we can make \({{\widehat{b}}}\) and also \({{\widehat{h}}}\) nondegenerate.
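The claim about linear combinations rests on the fact that associativity is a quadratic condition on structure constants: if it holds for \(\star \), \({{\bar{\star }}}\) and \(\star + {{\bar{\star }}}\), then the mixed bilinear term vanishes and the condition holds for every combination. A small numerical sketch of this, under assumptions of our own: the two 2-dimensional commutative algebras below and the helper names `multiply`, `is_associative` and `combine` are illustrative and are not taken from the text.

```python
from fractions import Fraction
from itertools import product


def multiply(a, x, y):
    """Product of vectors x and y, where e_i * e_j = sum_s a[i][j][s] e_s."""
    n = len(a)
    return [sum(a[i][j][s] * x[i] * y[j] for i in range(n) for j in range(n))
            for s in range(n)]


def is_associative(a):
    """Check (x*y)*z == x*(y*z) on all triples of basis vectors."""
    n = len(a)
    basis = [[int(i == k) for i in range(n)] for k in range(n)]
    return all(
        multiply(a, multiply(a, x, y), z) == multiply(a, x, multiply(a, y, z))
        for x, y, z in product(basis, repeat=3)
    )


def combine(lam, a, mu, abar):
    """Structure constants of the operation lam * star + mu * bar-star."""
    n = len(a)
    return [[[lam * a[i][j][s] + mu * abar[i][j][s] for s in range(n)]
             for j in range(n)] for i in range(n)]


n = 2
a = [[[0] * n for _ in range(n)] for _ in range(n)]
a[0][0][1] = 1                          # e_1 * e_1 = e_2, all other products vanish
abar = [[[0] * n for _ in range(n)] for _ in range(n)]
abar[0][0][0] = 1                       # e_1 acts as a unit ...
abar[0][1][1] = abar[1][0][1] = 1       # ... and e_2 * e_2 = 0

print(is_associative(combine(1, a, 1, abar)))
print(is_associative(combine(Fraction(2, 3), a, Fraction(-5, 7), abar)))
```

Exact rational coefficients are used so that the equality tests are not affected by rounding.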

In view of Facts 4 and 5, compatible Frobenius triples \(({\mathfrak {a}}, b, h)\) and \((\bar{{\mathfrak {a}}}, {\bar{b}}, {\bar{h}})\) naturally define compatible Poisson structures \({{\mathcal {B}}}_h+{{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}}+{{\mathcal {A}}}_{{\bar{g}}}\). The next theorem shows that the converse is also true under the assumption that \(R_h= {\bar{h}} h^{-1}\) has n different eigenvalues.

Theorem 1

Consider two non-homogeneous Poisson structures \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}} + {{\mathcal {A}}}_{{\bar{g}}}\) and suppose that \(R_h= {\bar{h}} h^{-1}\) has n different eigenvalues.

Then, they are compatible if and only if (g, h) and \(({\bar{g}}, {\bar{h}})\) admit a common Frobenius coordinate system \(u^1,\dots , u^n\) in which

1. \(h^{\alpha \beta }\) and \({\bar{h}}^{\alpha \beta }\) are constant,

2. \(g^{\alpha \beta }(u) = b^{\alpha \beta } + a^{\alpha \beta }_s u^s\) and \({\bar{g}}^{\alpha \beta }(u) = {\bar{b}}^{\alpha \beta } + {\bar{a}}^{\alpha \beta }_s u^s\),

3. \(({\mathfrak {a}}, b, h)\) and \((\bar{{\mathfrak {a}}}, {\bar{b}}, {\bar{h}})\) are compatible Frobenius triples (here \({\mathfrak {a}}\) and \(\bar{{\mathfrak {a}}}\) denote the algebras with structure constants \(a^{\alpha \beta }_s\) and \({\bar{a}}^{\alpha \beta }_s\), respectively).

Corollary 2.1

In more explicit terms, compatibility of \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}} + {{\mathcal {A}}}_{{\bar{g}}}\) such that \(R_h= {\bar{h}} h^{-1}\) has n different eigenvalues, is equivalent to reducibility of these operators, in an appropriate coordinate system \(u^1,\dots , u^n\), to the following simultaneous canonical form

where \(h^{\alpha \beta }, {\bar{h}}^{\alpha \beta }, b^{\alpha \beta }, {\bar{b}}^{\alpha \beta }, a^{\alpha \beta }_s, {\bar{a}}^{\alpha \beta }_s\) are constants symmetric in upper indices and satisfying the conditions:

Notice that the coordinates \(u^1,\dots , u^n\) from Theorem 1 are just flat coordinates for h (or equivalently, for \({\bar{h}}\) as these metrics have common flat coordinates by Theorem 1).

We see that Theorem 1 reduces the problem of description and classification of pairs of compatible Poisson structures \({{\mathcal {B}}}_h+ {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}}+ {{\mathcal {A}}}_{{\bar{g}}}\) such that \(R_h={\bar{h}} h^{-1}\) has n different eigenvalues to a purely algebraic problem. As we announced above, we will reformulate it in differential geometric terms in Sect. 6.1, and solve it under the assumption that \(R_g={\bar{g}} g^{-1}\) has n different eigenvalues.

We have not succeeded in solving the problem by purely algebraic means. Like many other problems in Algebra, it reduces to a system of quadratic and linear equations (see relations (17)). For example, classification of Frobenius algebras is a problem of the same type. This problem is solved under the additional assumption that the Frobenius form is positive definite in [5] and, in our opinion, is out of reach otherwise. Of course, for a fixed dimension, one can find complete or partial answers. In particular, in [36], it is shown that up to dimension 6, there is a finite number of isomorphism classes of commutative associative algebras and for \(n > 6\), the number of classes is infinite. In [27], the classification of nilpotent commutative associative algebras up to dimension 6 is given. See also [31, 41].

In the situation discussed in Theorem 1, consider the pencil of first-order Poisson structures \({{\mathcal {A}}}_{\lambda g + \mu {\bar{g}}}\), which is sometimes referred to as quasiclassical limit [20] of the non-homogeneous pencil \({{\mathcal {B}}}_{\lambda h+\mu {\bar{h}}} + {{\mathcal {A}}}_{\lambda g + \mu {\bar{g}}}\). We can ask the inverse question: Given a flat pencil \(\{ \lambda g + \mu {\bar{g}}\}\), does the corresponding Poisson pencil \(\{{{\mathcal {A}}}_{\lambda g + \mu {\bar{g}}}\}\) admit a perturbation with nondegenerate Darboux–Poisson structures of order three of general position?

Theorem 1 basically shows that the main condition for the related quadruple of metrics \((h, {\bar{h}}, g, {\bar{g}})\) is the existence of a common Frobenius coordinate system for g and \({\bar{g}}\). Indeed, if this condition holds true and this Frobenius coordinate system is given, then the other two metrics h and \({\bar{h}}\) can be ‘reconstructed’ by solving a system of linear equations. More precisely, we have the following

Theorem 2

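Let g and \({\bar{g}}\) be Poisson compatible flat metrics that admit a common Frobenius coordinate system \(u^1,\dots , u^n\), that is

$$\begin{aligned} g^{\alpha \beta }(u) = b^{\alpha \beta } + a^{\alpha \beta }_s u^s \quad \text{and}\quad {\bar{g}}^{\alpha \beta }(u) = {\bar{b}}^{\alpha \beta } + {\bar{a}}^{\alpha \beta }_s u^s, \end{aligned}$$

where \(({\mathfrak {a}}, b)\) and \((\bar{{\mathfrak {a}}}, {\bar{b}})\) are Frobenius pairs (here \({\mathfrak {a}}\) and \(\bar{{\mathfrak {a}}}\) denote the algebras with structure constants \(a^{\alpha \beta }_s\) and \({\bar{a}}^{\alpha \beta }_s\), respectively). Then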

(i) the corresponding Frobenius algebras are compatible,

(ii) there exist nondegenerate metrics h and \({\bar{h}}\) (with \(h^{\alpha \beta }\) and \({\bar{h}}^{\alpha \beta }\) being constant in coordinates \(u^1,\dots ,u^n\)), such that \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}} + {{\mathcal {A}}}_{{\bar{g}}}\) are compatible Poisson structures,

(iii) in Frobenius coordinates \(u^1,\dots , u^n\), the (constant) metrics h and \({\bar{h}}\) can always be chosen in the form

$$\begin{aligned} h^{\alpha \beta } = m^0 \, b^{\alpha \beta } + a^{\alpha \beta }_s m^s \quad \text{and}\quad {\bar{h}}^{\alpha \beta } = m^0 \, {\bar{b}}^{\alpha \beta } + {\bar{a}}^{\alpha \beta }_s m^s, \qquad (m^1,\dots , m^n)\in {\mathbb {R}}^n, \ m^0\in {\mathbb {R}}. \end{aligned}$$(18)
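Formula (18) can be tried out on a toy example. The sketch below is only an illustration under assumptions of our own: the two 2-dimensional algebras, the forms `b` and `bbar`, the parameter values and the helper names `partner` and `is_frobenius_form` are hypothetical. It builds h and \({\bar{h}}\) from the same parameters \((m^0, m^1, m^2)\) and tests the Frobenius-form condition \(h^{\alpha q} a^{\beta \gamma }_q = h^{\gamma q} a^{\beta \alpha }_q\) for each algebra and for their sum.

```python
def is_frobenius_form(h, a):
    """Test h^{al q} a^{be ga}_q == h^{ga q} a^{be al}_q with a[i][j][s] = a^{ij}_s."""
    rng = range(len(h))
    return all(
        sum(h[al][q] * a[be][ga][q] for q in rng)
        == sum(h[ga][q] * a[be][al][q] for q in rng)
        for al in rng for be in rng for ga in rng
    )


def partner(m0, m, b, a):
    """Constant metric h^{ij} = m0 * b^{ij} + a^{ij}_s m^s, as in formula (18)."""
    n = len(b)
    return [[m0 * b[i][j] + sum(a[i][j][s] * m[s] for s in range(n))
             for j in range(n)] for i in range(n)]


n = 2
a = [[[0] * n for _ in range(n)] for _ in range(n)]
a[0][0][1] = 1                          # first algebra: e^1 * e^1 = e^2
abar = [[[0] * n for _ in range(n)] for _ in range(n)]
abar[0][0][0] = 1                       # second algebra: e^1 is a unit, e^2 * e^2 = 0
abar[0][1][1] = abar[1][0][1] = 1

b = [[1, 2], [2, 0]]                    # a Frobenius form for the first algebra
bbar = [[0, 1], [1, 0]]                 # a Frobenius form for the second algebra

m0, m = 1, [1, 3]                       # the same parameters for both metrics
h = partner(m0, m, b, a)
hbar = partner(m0, m, bbar, abar)

# Sum of the two structures, i.e. the operation (16) and the form h + hbar.
a_sum = [[[a[i][j][s] + abar[i][j][s] for s in range(n)] for j in range(n)] for i in range(n)]
h_sum = [[h[i][j] + hbar[i][j] for j in range(n)] for i in range(n)]

print(h, hbar, is_frobenius_form(h_sum, a_sum))
```

In this example h and \({\bar{h}}\) are both nondegenerate and not proportional to each other.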

2.3 AFF-Pencil

Consider a real affine space \(V\simeq {\mathbb {R}}^n\) with coordinates \(u^1, \dots , u^n\) and define the (Nijenhuis) operator L and contravariant metric \(g_0\) on it by:

Next, introduce \(n+1\) contravariant metrics \(g_{\mathrm i} = L^i g_0\) for \(i = 0, \dots , n\). In matrix form, we have

where \(a_{\mathrm {n - i}}\) is a \((n - i) \times (n - i)\) matrix

and \(b_{\mathrm i}\) is \(i \times i\) matrix of the form

In particular, \(g_{\mathrm 0} = a_{\mathrm n}\) and \(g_{\mathrm n} = b_{\mathrm n}\).

The metrics \(g_{\mathrm 0}, g_{\mathrm 1}, \dots , g_{\mathrm n}\) are flat and pairwise compatible, so that they generate an \((n+1)\)-dimensional flat pencil with remarkable properties, see, e.g. [9, 20]. We can write this pencil as

and L and \(g_0\) are given by (19). We will refer to it as an AFF-pencil. This pencil was discovered, in the form (19) and (20), by M. Antonowicz and A. Fordy [1]. As we see, the components of each metric \(g_i\) are affine functions, moreover, the coordinates \((u^1,\dots , u^n)\) are common Frobenius coordinates for all of them.

The corresponding Frobenius algebras are easy to describe. Consider two well-known examples:

- the algebra \({\mathfrak {a}}_n\) of dimension n with basis \(e_1,e_2,\dots , e_n\) and relations

  $$\begin{aligned} e_i \star e_j = {\left\{ \begin{array}{ll} e_{i+j}, \quad & \text{if}\ i+j\le n, \\ 0 \quad & \text{otherwise}. \end{array}\right. } \end{aligned}$$

  Notice that \({\mathfrak {a}}_n\) can be modelled as the matrix algebra \({\text {Span}}(J,J^2,\dots , J^n)\), where J is the nilpotent Jordan block of size \((n+1)\times (n+1)\). It contains no multiplicative unit.

- the algebra \({\mathfrak {b}}_n\) of dimension n with basis \(e_1,e_2,\dots , e_n\) and relations

  $$\begin{aligned} e_i \star e_j = {\left\{ \begin{array}{ll} e_{i+j-1}, \quad & \text{if}\ i+j-1\le n, \\ 0 \quad & \text{otherwise}. \end{array}\right. } \end{aligned}$$

  This algebra can be understood as the unital matrix algebra \({\text {Span}}({\text {Id}}, J, J^2,\dots , J^{n-1})\) where J is the nilpotent Jordan block of size \(n\times n\). The difference from the previous example is that \({\mathfrak {b}}_n\), by definition, contains the identity matrix. Equivalently, we can define \({\mathfrak {b}}_n\) as the algebra of truncated polynomials \({\mathbb {R}}[x]/\langle x^n\rangle \) (similarly \({\mathfrak {a}}_n\simeq \langle x\rangle / \langle x^{n+1}\rangle \)).

It is straightforward to see that the metric \(g_\textrm{n}=b_\textrm{n}\) is related to the Frobenius algebra \({\mathfrak {b}}_n\). Similarly \(g_0 = a_n\) is related to the Frobenius algebra \({\mathfrak {a}}_n\) (this becomes obvious if we reverse the order of basis vectors and multiply each of them by \(-1\)). Hence, formula (20) shows that the Frobenius algebra associated with \(g_{\textrm{i}}\) is isomorphic to the direct sum \({\mathfrak {a}}_{n-i}\oplus {\mathfrak {b}}_{i}\).
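The two model algebras are easy to generate and test by machine. The sketch below is illustrative only; the function names `algebra_a`, `algebra_b`, `is_associative` and `basis_unit` are ours. It builds the structure constants from the relations above, checks associativity on basis triples, and looks for a basis vector acting as a unit.

```python
from itertools import product


def algebra_a(n):
    """Structure constants of a_n: e_i * e_j = e_{i+j} if i + j <= n (labels 1..n)."""
    c = [[[0] * n for _ in range(n)] for _ in range(n)]
    for i, j in product(range(1, n + 1), repeat=2):
        if i + j <= n:
            c[i - 1][j - 1][i + j - 1] = 1
    return c


def algebra_b(n):
    """Structure constants of b_n: e_i * e_j = e_{i+j-1} if i + j - 1 <= n."""
    c = [[[0] * n for _ in range(n)] for _ in range(n)]
    for i, j in product(range(1, n + 1), repeat=2):
        if i + j - 1 <= n:
            c[i - 1][j - 1][i + j - 2] = 1
    return c


def multiply(c, x, y):
    n = len(c)
    return [sum(c[i][j][s] * x[i] * y[j] for i in range(n) for j in range(n))
            for s in range(n)]


def is_associative(c):
    n = len(c)
    basis = [[int(i == k) for i in range(n)] for k in range(n)]
    return all(
        multiply(c, multiply(c, x, y), z) == multiply(c, x, multiply(c, y, z))
        for x, y, z in product(basis, repeat=3)
    )


def basis_unit(c):
    """Return the 0-based index k with e_k * e_j = e_j = e_j * e_k for all j, or None.

    Only basis vectors are tested, which is enough for the two examples here.
    """
    n = len(c)
    basis = [[int(i == k) for i in range(n)] for k in range(n)]
    for k, e in enumerate(basis):
        if all(multiply(c, e, f) == f and multiply(c, f, e) == f for f in basis):
            return k
    return None


print(is_associative(algebra_a(4)), basis_unit(algebra_a(4)))
print(is_associative(algebra_b(4)), basis_unit(algebra_b(4)))
```

Since \({\mathfrak {a}}_n\) is nilpotent, it has no unit at all, in agreement with the remark above; in \({\mathfrak {b}}_n\) the unit is \(e_1\).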

It is interesting that a generic metric \(g=P(L)g_0\) from the AFF-pencil (21), i.e. such that P(L) has n distinct roots, corresponds to the direct sum \({\mathbb {R}}\oplus \dots \oplus {\mathbb {R}}\oplus {\mathbb {C}} \oplus \dots \oplus {\mathbb {C}}\), where each copy of \({\mathbb {R}}\) relates to a real root and each copy of \({\mathbb {C}}\) relates to a pair of complex conjugate roots of \(P(\cdot )\).

It is a remarkable fact that for each \(g_{\textrm{i}}\), we can find a partner \(h_{\textrm{i}}\) such that \({{\mathcal {B}}}_{h_{\textrm{i}}} + {{\mathcal {A}}}_{g_{\textrm{i}}}\) is a Poisson structure and all these structures are pairwise compatible. The (constant) metrics \(h_{\textrm{i}}\) take the form

where \((g_{\textrm{i}})_{{\bar{m}}, m^0}\) is obtained from the matrix \(g_{\textrm{i}}(u)\) by replacing \(u^s\) with \(m^s\) and all 1’s with \(m^0\). In this way, we obtain an \((n+1)\)-dimensional pencil of non-homogeneous Poisson structures generated by \({{\mathcal {B}}}_{h_{\textrm{i}}} + {{\mathcal {A}}}_{g_{\textrm{i}}}\):

Alternatively, the pencil (23) can be described as follows. Fix \({\bar{m}}=(m^1,\dots , m^n)\in {\mathbb {R}}^n\), \(m^0\in {\mathbb {R}}\) and let \(L({\bar{m}})\) denote the operator with constant entries obtained from \(L=L(u)\) by replacing \(u^i\) with constants \(m^i\in {\mathbb {R}}\). Similarly, \(g_0({\bar{m}})\) denotes the metric with constant coefficients obtained from \(g_0\) by replacing \(u^i\) with the same constants \(m^i\in {\mathbb {R}}\).

Then for \(g = P(L) g_0\), we can define its partner h (metric with constant entries) as

It can be easily checked that the correspondence \((m^0,m^1,\dots , m^n) \mapsto h\) defined by this formula is linear so that it makes sense for \(m^0=0\) (the denominators cancel out). Then the pencil (23) can be, equivalently, defined as

Notice that such a pencil is not unique, as the above construction depends on \(n+1\) arbitrary parameters \(m^0,m^1,\dots , m^n\). In other words, in (24), the polynomial \(P(\cdot )\) serves as a parameter of the bracket within the AFF-pencil, whereas \((m^0, {\bar{m}})\) parametrise dispersive perturbations of this pencil.

Remark 2.1

For our purposes below, it will be convenient to rewrite this pencil in another coordinate system by taking the eigenvalues of L as local coordinates \(x^1,\dots , x^n\). In these coordinates, \(g_0\) and L from (19) take the following diagonal form (see, e.g. [20, p. 214] or [6, §6.2]):

so that the AFF-pencil (21) becomes diagonal too:

We also notice that the transition from the diagonal coordinates x to Frobenius coordinates u is quite natural: the coordinates \(u^i\) are the coefficients \(\sigma _i\) of the characteristic polynomial \(\chi _L (t) = \det (t\cdot {\text {Id}}- L)= t^n - \sigma _1 t^{n-1} - \sigma _2 t^{n-2} -\dots - \sigma _n\), so that, up to sign, \(u^i\) are elementary symmetric polynomials in \(x^1,\dots , x^n\).
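The passage from the eigenvalues \(x^i\) to the coefficients \(\sigma _i\) can be made concrete with a few lines of code. This is a minimal sketch with a function name of our own choosing (`char_poly_sigmas`); it expands \(\prod _i (t - x^i)\) and reads off \(\sigma _k\) with the sign convention \(\chi _L(t) = t^n - \sigma _1 t^{n-1} - \dots - \sigma _n\) used above.

```python
def char_poly_sigmas(xs):
    """Return [sigma_1, ..., sigma_n] for L = diag(xs).

    The characteristic polynomial is written as
    t^n - sigma_1 t^{n-1} - sigma_2 t^{n-2} - ... - sigma_n.
    """
    coeffs = [1]  # coefficients of the running product, highest degree first
    for x in xs:
        # multiply the running product by (t - x)
        coeffs = [c - x * p for c, p in zip(coeffs + [0], [0] + coeffs)]
    return [-c for c in coeffs[1:]]


print(char_poly_sigmas([1, 2, 3]))
```

For the eigenvalues 1, 2, 3 the polynomial is \(t^3 - 6t^2 + 11t - 6\), so \(\sigma _1 = 6\), \(\sigma _2 = -11\), \(\sigma _3 = 6\); in general \(\sigma _k\) equals \((-1)^{k+1}\) times the k-th elementary symmetric polynomial.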

The AFF-pencil provides a lot of examples of compatible flat metrics g and \({\bar{g}}\) that admit a common Frobenius coordinate system: one can take any two metrics from the pencil (21) or, equivalently, (26).

3 Compatible Flat Metrics with a Common Frobenius Coordinate System: Generic Case

Theorems 1 and 2 reduce the compatibility problem for two Poisson structures of the form \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) to a classification of all pairs of metrics g and \({\bar{g}}\) admitting a common Frobenius coordinate system. The next theorem solves this problem under the standard assumption that \(R_g= {\bar{g}} g^{-1}\) has n different eigenvalues and one minor additional condition.

Theorem 3

Let g and \({\bar{g}}\) be compatible flat metrics that admit a common Frobenius coordinate system. Assume that the eigenvalues of the operator \(R_g={\bar{g}} g^{-1}\) are all different and in the diagonal coordinates (such that \(R_g\) is diagonal), every diagonal component of g depends on all variables. Then the flat pencil \(\lambda g + \mu {\bar{g}}\) is contained in the AFF-pencil, in other words, there exists a coordinate system \((x^1,\dots , x^n)\) such that

for some polynomials \(P(\cdot )\) and \(Q(\cdot )\) of degree \(\le n\) and \(g_{\textsf {LC} }\) and L defined by (25).

Moreover, if \(n\ge 2\) and \(P(\cdot )\) and \(Q(\cdot )\) are not proportional, then the common Frobenius coordinate system for \(g = P(L) g_{\textsf {LC} }\) and \({\bar{g}} = Q(L) g_{\textsf {LC} }\) is unique up to an affine coordinate change.

Theorem 3 will be proved in Sect. 6. The uniqueness part will be explained in Sect. 7.3, see Remark 7.1.

Remark 3.1

In Theorem 3, we allow some of the eigenvalues of \(R_g\) to be complex. In this case, we regard the corresponding part of the diagonal coordinates \((x^1,\ldots ,x^n)\) as complex-valued as well. For example, the coordinates \(x^1,\ldots ,x^k\) may be real-valued, and the remaining coordinates \(x^{k+1}= z^1, x^{k+2}= {\bar{z}}^1\),..., \(x^{n-1}= z^{\tfrac{n-k}{2} }\), \(x^{n }= {\bar{z}}^{\tfrac{n-k}{2} }\), where ‘\(\ \bar{ \ } \ \)’ means complex conjugation, are complex-valued. In this case, (26) gives us a well-defined (real) metric \(g_{\textsf {LC} }\) and a (real) Nijenhuis operator L.

The genericity condition in Theorem 3 is that every diagonal component of g depends on all variables. In Theorems 4, 5 below, we will solve the problem in full generality, without assuming this or any other genericity condition.

Remark 3.2

In [20, §5] E. Ferapontov and M. Pavlov asked whether dispersive perturbations of the pencil (21) with \(g_0\) and L given by (25) other than those described in Sect. 2.3 are possible. Theorem 3 leads to a negative answer under the additional assumption that the dispersive perturbation is in the class of nondegenerate Darboux–Poisson structures of order 3. Indeed, according to Theorem 1, every dispersive perturbation \(\lambda ({{\mathcal {B}}}_h + {{\mathcal {A}}}_g) + \mu ({{\mathcal {B}}}_{{\bar{h}}} + {{\mathcal {A}}}_{{\bar{g}}})\) of the pencil \(\lambda {{\mathcal {A}}}_g + \mu {{\mathcal {A}}}_{{\bar{g}}}\) can be reduced to a simple normal form in a common Frobenius coordinate system for g and \({\bar{g}}\) (assuming that \(R_h={\bar{h}} h^{-1}\) has different eigenvalues). Moreover, in this coordinate system, h and \({\bar{h}}\) are constant and represent Frobenius forms for the corresponding Frobenius algebras \({\mathfrak {a}}\) and \(\bar{{\mathfrak {a}}}\). Since by Theorem 3, such a coordinate system is unique, it remains to solve a Linear Algebra problem of choosing suitable forms h and \({\bar{h}}\), satisfying three conditions (cf. (17)):

It is straightforward to show that for a generic pair g, \({\bar{g}}\) of metrics from the AFF-pencil, the forms h and \({\bar{h}}\) are defined by \(n+1\) parameters \(m^0, m^1,\dots , m^n\) as in (24). No other solutions exist. In particular, formula (24) describes all possible dispersive perturbations of the AFF-pencil by means of nondegenerate Darboux–Poisson structures of order 3. Moreover, this conclusion holds for any generic two-dimensional subpencil.

4 Compatible Flat Metrics with a Common Frobenius Coordinate System: General Case

4.1 General Multi-block Frobenius Pencils

Let us now discuss the general case without assuming that in diagonal coordinates, every diagonal component of g depends on all variables.

Similar to Theorem 3, the metrics g and \({\bar{g}}\) will belong to a large Frobenius pencil built up from several blocks each of which has a structure of an (extended) AFF-pencil. We start with constructing a series of such pencils.

We first divide our diagonal coordinates into B blocks of positive dimensions \(n_1,\ldots ,n_B\) with \(n_1+ \cdots +n_B=n\):

Next, we consider a collection of \(n_\alpha \)-dimensional Levi-Civita metrics \(g^{\textsf {LC} }_\alpha \) and \(n_\alpha \)-dimensional operators \(L_\alpha \) (as in Theorem 3 but now for each block separately):

Then we introduce a new block-diagonal metric \({{\widehat{g}}}\)

where \(c_{s\alpha } = 0\) or 1. The values of the discrete parameters \(c_{s\alpha }\) and numbers \(\lambda _{s\alpha }\) are determined by some combinatorial data as explained below.

Finally, we consider the pencil of (contravariant) metrics of the form

where \({\mathcal {L}}\) is a family (pencil) of block-diagonal operators of the form

where \(P_\alpha (\cdot )\) are polynomials with \(\deg P_\alpha \le n_\alpha +1\) treated as parameters of this family. The coefficients of the polynomials \(P_\alpha \) are not arbitrary but satisfy a collection of linear relations involving coefficients from different polynomials so that this pencil, in general, is not a direct sum of blocks (although, direct sum is a particular example). Notice that \({\mathcal {L}}\) is a Nijenhuis pencil whose algebraic structure is quite different from that of the pencil \(\{ P(L)\}\) from Theorem 3.

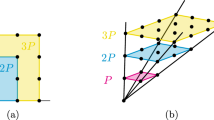

The numbers \(c_{s\alpha }\), \(\lambda _{s\alpha }\) and relations on the coefficients of \(P_\alpha \)’s are determined by a combinatorial object, an oriented graph \({\textsf{F}}\) with special properties, namely, a directed rooted in-forest (see [26] for a definition) whose edges are labelled by numerical marks \(\lambda _\alpha \). This graph may consist of several connected components, each of which is a rooted tree whose edges are oriented from its leaves to the root. An example is shown in Fig. 1.

Each vertex of \({\textsf{F}}\) is associated with a certain block of the above decomposition (28) and labelled by an integer number \(\alpha \in \{1,\ldots ,B\}\). The structure of a directed graph defines a natural strict partial order (denoted by \(\prec \)) on the set \(\{1,\ldots ,B\}\): for two numbers \(\alpha \ne \beta \in \{1,\ldots ,B\}\), we set \(\alpha \prec \beta \), if there exists an oriented path from \(\beta \) to \(\alpha \). For instance, for the graph shown in Fig. 1, we have \(1\prec 3\), \(2\prec 4\), \(5\prec 6\). Without loss of generality, we can and will always assume that the vertices of \({\textsf{F}}\) are labelled in such a way that \(\alpha \prec \beta \) implies \(\alpha <\beta \).

Notice that the vertices of degree one are of two types, roots and leaves: \(\alpha \) is a root if there is no \(\beta \) such that \(\beta \prec \alpha \) and, conversely, \(\alpha \) is a leaf if there is no \(\beta \) such that \(\alpha \prec \beta \). Notice that roots of degree \(\ge 2\) are also allowed, whereas all leaves have degree 1. We say \(\alpha = {\text {next}}(\beta )\), if \(\alpha \prec \beta \) and there is no \(\gamma \) with \(\alpha \prec \gamma \prec \beta \). In the upper tree of Fig. 1, the root is 1, the leaves are 3 and 4 and we have: \(1= \text {next}(2)\) and \(2= \text {next}(3)\), \(2= \text {next}(4)\).

The numbers \(c_{s \alpha }\) in (30) are now defined from \({\textsf{F}}\) as follows:

Recall that in our assumptions, \(s\prec \alpha \) implies \(s<\alpha \) so that the \(B{\times }B\)-matrix \(c_{s\alpha }\) is upper triangular with zeros on the diagonal, see Fig. 1.
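The combinatorial data can be encoded by the map \(\beta \mapsto {\text {next}}(\beta )\). The sketch below is an illustration under stated assumptions: the upper tree follows the description of Fig. 1 in the text, whereas the lower tree is assumed here to consist of the single edge from 6 to 5 (only \(5\prec 6\) is stated in the text); the names `next_of`, `precedes`, `roots`, `leaves` and `c` are ours. It computes the partial order, the roots and leaves, and the matrix with \(c_{s\alpha }=1\) exactly when \(s\prec \alpha \).

```python
# next_of[beta] = alpha means alpha = next(beta); roots have no entry.
# Hypothetical in-forest on vertices 1..6 (lower tree assumed to be the edge 6 -> 5).
next_of = {2: 1, 3: 2, 4: 2, 6: 5}
B = 6
vertices = range(1, B + 1)


def precedes(s, alpha):
    """Return True if s < alpha in the partial order, i.e. an oriented path leads from alpha to s."""
    v = alpha
    while v in next_of:
        v = next_of[v]
        if v == s:
            return True
    return False


roots = [v for v in vertices if v not in next_of]
leaves = [v for v in vertices if v not in next_of.values()]

# c[s-1][alpha-1] = 1 if s precedes alpha, and 0 otherwise.
c = [[1 if precedes(s, al) else 0 for al in vertices] for s in vertices]

print(roots, leaves)
for row in c:
    print(row)
```

Because the labelling satisfies the convention that \(\alpha \prec \beta \) implies \(\alpha <\beta \), the printed matrix is upper triangular with zeros on the diagonal.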

The parameters \(\lambda _{s\alpha }\) are defined as follows. For each vertex \(\alpha \) that is not a root, there is exactly one out-going edge which we will denote by \(e_\alpha \). Notice that the correspondence \(\alpha \mapsto e_\alpha \) is a bijection between the set of edges of \({\textsf{F}}\) and the (sub)set of vertices which are not roots. To each edge \(e_\alpha \), we now assign a number \(\lambda _\alpha \) (these numbers will serve as parameters of our construction) and set

Such \(\beta \) exists and is unique, if \(s \prec \alpha \), i.e. \(c_{s\alpha }=1\). Otherwise, \(c_{s\alpha }=0\) and the value of \(\lambda _{s \alpha }\) plays no role in (30).

Remark 4.1

The above definitions of parameters \(c_{s\alpha }\) and \(\lambda _{s\alpha }\) are convenient to make our formulas shorter, but do not quite clarify the meaning of (30) in terms of the graph \({\textsf{F}}\). Equivalently, formula (30) can be rewritten as follows:

where the function \(f_\alpha \) is defined by the oriented path from the vertex \(\alpha \) to a certain root \(\beta \):

each edge of which is endowed with a number \(\lambda _{\alpha _i}\), \(i=1,\dots ,k\). Namely, we set

which coincides with the factor in front of \(g_\alpha ^{\textsf {LC} }\) in formula (30) written in terms of \(c_{s\alpha }\) and \(\lambda _{s\alpha }\).

Finally, for a vertex \(\alpha \), we denote the coefficients of the corresponding polynomial \(P_\alpha \) by \(P_\alpha (t) = \overset{\alpha }{a}_0 + \overset{\alpha }{a}_1 t + \cdots + \overset{\alpha }{a}_{n_\alpha +1}t^{n_\alpha +1}\). Then the conditions on the coefficients of \(P_\alpha (t)\) are

(i) If \(\alpha \) is a root, then \(\overset{\alpha }{a}_{n_\alpha +1}=0\), i.e. \(\deg P_\alpha \le n_\alpha \).

(ii) If \(\alpha = {\text {next}}(\beta )\), then \(\lambda _\beta \) is a root of \(P_\alpha \) and \(\overset{\beta }{a}_{n_\beta +1}= P_\alpha '(\lambda _\beta )\), where \(P_\alpha '(t)\) denotes the derivative of \(P_\alpha (t)\).

(iii) If \(\alpha = {\text {next}}(\beta )\) and \(\alpha = {\text {next}}(\gamma )\) with \(\lambda _\beta = \lambda _\gamma =\lambda \), \(\beta \ne \gamma \), then \(\lambda \) is a double root of \(P_\alpha \) (in view of (ii) this automatically implies \(\overset{\beta }{a}_{n_\beta +1} = \overset{\gamma }{a}_{n_\gamma +1} =0\)).
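Conditions (i)–(iii) are straightforward to test for given data. The following sketch is illustrative and rests on assumptions of our own: polynomials are stored as coefficient lists \([a_0, \dots , a_{n_\alpha +1}]\), the forest is given by a dictionary `next_of`, and the two-block example with \(\lambda _2 = 0\) and the particular numbers is hypothetical. With that choice, (i) and (ii) reduce to \(\overset{1}{a}_0 = 0\), \(\overset{1}{a}_{n_1+1}=0\) and \(\overset{2}{a}_{n_2+1} = \overset{1}{a}_1\).

```python
def evaluate(p, t):
    """Value at t of the polynomial with ascending coefficients p."""
    return sum(coef * t**k for k, coef in enumerate(p))


def derivative(p):
    """Ascending coefficients of the derivative of p."""
    return [k * coef for k, coef in enumerate(p)][1:]


def satisfies_conditions(next_of, sizes, lam, polys):
    """Check (i)-(iii) for polys[alpha] = [a_0, ..., a_{n_alpha + 1}].

    next_of[beta] = alpha encodes alpha = next(beta); lam[beta] is the mark on
    the edge leaving beta; sizes[alpha] = n_alpha.
    """
    for alpha, p in polys.items():
        if len(p) != sizes[alpha] + 2:
            return False
        if alpha not in next_of and p[-1] != 0:            # (i): alpha is a root
            return False
    for beta, alpha in next_of.items():                      # (ii)
        p = polys[alpha]
        if evaluate(p, lam[beta]) != 0:
            return False
        if polys[beta][-1] != evaluate(derivative(p), lam[beta]):
            return False
    for beta, alpha in next_of.items():                      # (iii): double root
        for gamma, alpha2 in next_of.items():
            if beta < gamma and alpha == alpha2 and lam[beta] == lam[gamma]:
                if evaluate(derivative(polys[alpha]), lam[beta]) != 0:
                    return False
    return True


# Two blocks of size 2, vertex 1 is the root, 1 = next(2), edge mark lambda_2 = 0.
next_of, sizes, lam = {2: 1}, {1: 2, 2: 2}, {2: 0}
good = {1: [0, 3, 5, 0], 2: [7, -1, 2, 3]}     # a_0 = 0 and b_3 = a_1 = 3
bad = {1: [0, 3, 5, 0], 2: [7, -1, 2, 4]}      # b_3 != a_1

print(satisfies_conditions(next_of, sizes, lam, good))
print(satisfies_conditions(next_of, sizes, lam, bad))
```

All three conditions are linear in the coefficients, so instead of testing a candidate one could equally solve them as a linear system; the direct test is kept here for brevity.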

Remark 4.2

Each of the above conditions is linear in the coefficients of \(P_\alpha \)’s. However, (i)–(iii) may imply that \(P_\alpha =0\) for some \(\alpha \). This happens, for instance, if the vertex \(\alpha \) has too many neighbours \(\gamma _i\) such that \(\alpha = {\text {next}}(\gamma _i)\), \(i=1,\dots ,k\). Then all \(\lambda _{\gamma _i}\) must be roots of \(P_\alpha \) due to (ii). However, in view of (i), \(\deg P_\alpha \le n_\alpha + 1\). If \(n_\alpha + 1 < k\) and \(\lambda _{\gamma _i}\) are all different, then \(P_\alpha \) cannot have k different roots unless \(P_\alpha =0\). Strictly speaking, such a situation should be excluded as the corresponding metrics turn out to be degenerate at every point. However, from the algebraic point of view, we still obtain an example of a good Frobenius pencil.

We also notice that the shift \(L_\alpha \mapsto L_\alpha + c_\alpha {\text {Id}}\) in any individual block leads to an isomorphic pencil. In particular, if at a certain vertex \(\alpha \) of \({\textsf{F}}\) we add the same number \(c_\alpha \) simultaneously to all numerical parameters \(\lambda _\beta \) on the incoming edges, we get an isomorphic pencil.

This completes the description of the pencil (31) of (contravariant) metrics and we can state our next result.

Theorem 4

The pencil (31) (with \(c_{s\alpha }\) defined by (32), \(\lambda _{s\alpha }\) defined by (33) and coefficients of \(P_\alpha \) satisfying (i)–(iii)) is Frobenius. In other words, all the metrics

are flat, Poisson compatible and admit a common Frobenius coordinate system

which is defined as follows. Let \(\sigma _\alpha ^1,\dots ,\sigma _\alpha ^{n_\alpha }\) denote the coefficients of the characteristic polynomial of \(L_\alpha \)

Then

Theorem 4 will be proved in Sect. 8.

The advantage of the formulas for Frobenius coordinates in Theorem 4 is that they are invariant in the sense that they do not depend on the choice of coordinates in blocks, but use coefficients of the characteristic polynomials of blocks \(L_\alpha \).

Let us explain how one can use this property to check algorithmically (say, using computer algebra software) that the coordinates in Theorem 4 are indeed Frobenius for the metric g.

In each block (with number \(\alpha \)), we change from diagonal coordinates \(X_\alpha = (x_\alpha ^1,\ldots ,x_\alpha ^{n_\alpha })\) to the coordinates \(Y_\alpha = (y_\alpha ^1,\ldots , y_\alpha ^{n_\alpha })\) given as follows:

Note that in the coordinates \(Y_\alpha \), the metric \(g^{\textsf {LC} }_\alpha \) and the operator \(L_\alpha \) have the form (19) with \(u^1,\ldots ,u^n\) replaced by \(y_\alpha ^1,\ldots , y_\alpha ^{n_\alpha }\). The iterated warped product metric \(g=(g^{ij})\) is given by the following easy algebraic formula

with \(g_\alpha = P_\alpha (L_\alpha ) g^{\textsf {LC} }_\alpha \) and \(g^{\textsf {LC} }_\alpha \) and \(L_\alpha \) explicitly given by (19).

In order to check whether the coordinates u given by (36) are Frobenius, one needs to perform the multiplication

where \(J = \left( \frac{\partial u^i}{\partial y^j}\right) \) is the Jacobi matrix of the coordinate transformation \((y^1,\ldots , y^n) \rightarrow (u^1,\ldots ,u^n)\) and check whether the entries of the resulting matrix \(J g J^\top \) are affine functions in \(u^i\) and conditions (17) are fulfilled. All these operations can be realised by standard computer algebra packages.

The next result gives a description of two-dimensional Frobenius pencils in the general case.

Theorem 5

Let g and \({\bar{g}}\) be compatible flat metrics that admit a common Frobenius coordinate system. If the eigenvalues of the operator \(R_g={\bar{g}} g^{-1}\) are all different at a point \({\textsf{p}}\), then in a neighbourhood of this point, the pencil \(\lambda g +\mu {\bar{g}}\) is isomorphic to a two-dimensional subpencil of the Frobenius pencil (31) with suitable parameters, i.e. in a certain coordinate system these metrics take the form

(with parameters \(c_{\alpha \beta }\) defined by (32), \(\lambda _{s\alpha }\) defined by (33) and coefficients of \(P_\alpha \) and \(Q_\alpha \) satisfying (i)–(iii)).

Theorem 5 will be proved in Sect. 7.

Remark 4.3

In Theorem 5, we allow complex eigenvalues of \(R_g\). The corresponding part of diagonal coordinates is then complex. Moreover, the polynomials \(P_\alpha \) and \(Q_\alpha \) may have complex coefficients, and also the numbers \(\lambda _{s\alpha }\) may be complex. The only condition is that the metrics given by (30) should be well-defined real metrics. It is easy to see that this condition implies in particular that every block \((g^{\textsf {LC} }_\alpha , L_\alpha )\) is either real or pure complex (= all coordinates are complex; the coefficients of the polynomials \(P_\alpha \) and \(Q_\alpha \) may be complex as well), and that a pure complex block comes together with a complex conjugate one. See also [6, §3] for discussion on Nijenhuis operators some of whose eigenvalues are complex.

In certain special cases, a common Frobenius coordinate system for g and \({\bar{g}}\) is not unique (up to affine transformations). This is the case when \(n_\alpha =1\), \(c_{\alpha \beta }=0\) for all \(\beta \) (i.e. this block represents a leaf of the corresponding in-forest) and the diagonal component of \(R_g={\bar{g}} g^{-1}\) corresponding to this block is constant; in other words, the (quadratic) polynomials \(P_\alpha \) and \(Q_\alpha \) are proportional. The restrictions \(g_\alpha \) and \({\bar{g}}_\alpha \) onto these blocks are then as follows

where f is some function of the remaining coordinates, \(c\in {\mathbb {R}}\) and \(L_\alpha = (x^\alpha )\) (diagonal \(1\times 1\) matrix). However, we can perform a coordinate transformation \(x^\alpha \mapsto {{\tilde{x}}}^\alpha = {{\tilde{x}}}^\alpha (x^\alpha )\) that changes the coefficients \(a_1\) and \(a_0\) (the highest coefficient \(a_2\) is fixed by condition (ii)).

Hence, with a new operator \(L_\alpha ^{\textsf{new}} = ({{\tilde{x}}}^\alpha )\) and new polynomials \(P^{\textsf{new}} _\alpha (t) = a_2 t^2 + {{\tilde{a}}}_1 t + {{\tilde{a}}}_0\), \(Q^{\textsf{new}} _\alpha (t) = c(a_2 t^2 + {{\tilde{a}}}_1 t + {{\tilde{a}}}_0)\), we still remain in the framework of our construction and (38) still holds. This transformation will lead to another Frobenius coordinate system. In Sect. 7.3, we explain that only this situation allows ambiguity in the choice of Frobenius coordinates up to affine transformations.

Remark 4.4

In [20, Theorem 2], it was claimed that under some general assumptions for \(n>2\), there is only one equivalence class of \((n+1)\)-Hamiltonian hydrodynamic systems (in the sense of [20]) and \(n+1\) is the best possible. The corresponding multi-Hamiltonian structure comes from the \((n+1)\)-dimensional AFF-pencil. In this view, it is interesting to notice that multi-block pencils from Theorem 5 also provide such a structure, which may have even higher dimension.

4.2 Case of Two Blocks

In the case of two blocks, i.e. \(B=2\), the construction explained in the previous section gives a natural and rather simple answer. We have two cases: \(c_{12}=0\) and \(c_{12}=1\). The first case is trivial being a direct product of two blocks (possibly complex conjugate) each of which is as in Theorem 3; in (34) we set \({{\widehat{g}}}_i=g_i^{\textsf {LC} }\) and take \(P_i\) to be arbitrary polynomials of degrees \(\le n_i\) (\(i=1,2\)).

The theorem below is the special case of Theorem 4 corresponding to the nontrivial case \(c_{12}=1\).

Theorem 6

Suppose \(B=2\), \(c_{12}=1\) and consider the metric g given by the construction from Sect. 4.1:

Following this construction, assume that the polynomials \(P_1\) and \(P_2\) have degrees no greater than \(n_1 \) and \(n_2+1\), respectively: \(P_1=\sum _{s=0}^{n_1} a_st^s\) and \(P_2=\sum _{s=0}^{n_2+1} b_st^s\). Then the coordinates from Theorem 4 are Frobenius for g if and only if \(a_0=0\) and \(a_1 = b_{n_2+1}\).

Example 4.1

In Theorem 6, take \(n_1=n_2=2\). In diagonal coordinates \(x^1, x^2, x^3, x^4\), the metric \(g=(g^{ij})\) is as follows:

where \(P_1(t) = a_1 t + a_2t^2\) and \(P_2(t)= b_0 + b_1 t + b_2 t^2 + b_3 t^3\) with \(b_3 = a_1\). Recall that \(L = L_1\oplus L_2\) with \(L_1={\text {diag}} (x^1, x^2)\), \(L_2={\text {diag}} (x^3, x^4)\), and the relation between the diagonal coordinates \(x^i\) and the Frobenius coordinates \(u^i\) given by Theorem 4 is as follows:

In these Frobenius coordinates, the metric \(g = (g^{ij})\) has the following form:

This formula defines a 5-dimensional pencil of metrics (with parameters \(a_1,a_2, b_0, b_1, b_2\)). For any choice of the parameters such that g is nondegenerate, the coordinates \(u^i\) are Frobenius for it in the sense of Definition 3.
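The parameter count can be checked directly against Theorem 6. The following is only a sketch of that count, under the assumption (made here for illustration) that the coefficients of \(P_1\) and \(P_2\) are the only parameters of the family:

```latex
\[
\underbrace{(n_1+1)}_{\text{coefficients of } P_1}
+\underbrace{(n_2+2)}_{\text{coefficients of } P_2}
-\underbrace{2}_{a_0=0,\ a_1=b_{n_2+1}}
= n_1+n_2+1 ,
\qquad\text{so for } n_1=n_2=2:\quad 3+4-2=5 .
\]
```

The five surviving coefficients are exactly \(a_1, a_2, b_0, b_1, b_2\).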

From the algebraic viewpoint, we may equivalently think of this formula as a 5-parametric family (pencil) of Frobenius algebras \(({\mathfrak {a}}, b)\). The entries of g define the structure constants of \({\mathfrak {a}}\). For instance, \(g^{11}=a_{2}u^{1}+a_{1}\) and \(g^{34} = -b_0 u^2 - b_2 u^4\) imply

for a basis \(e^1, e^2, e^3, e^4\) of \({\mathfrak {a}}\). The matrix \((b^{ij})\) of the corresponding Frobenius form b is obtained from that of g by assigning to \(u^i\) any constant values \(u^i = m^i\in {\mathbb {R}}\) (such that b is nondegenerate for generic choice of \(a_1,a_2, b_0,b_1, b_2\)). To get a Frobenius pencil, the constants \(m^i\) should be the same for all parameters \(a_1,a_2, b_0,b_1, b_2\).

In the coordinates \((u^1,\ldots ,u^4)\) the operators \(L_1\) and \(L_2\) are given by the matrices

The matrices of \(g_1^{\textsf {LC} }\) and \(g_2^{\textsf {LC} }\) are

5 Proof of Theorems 1 and 2

Proof of Theorem 1

We assume that \({\mathcal {B}}_h + {\mathcal {A}}_g\) and \({\mathcal {B}}_{{\bar{h}}} + {\mathcal {A}}_{{\bar{g}}}\) are compatible with the additional condition that the eigenvalues of \(R_h = {\bar{h}} h^{-1}\) are pairwise different. We also assume that \(h + {\bar{h}}\) is nondegenerate.

Recall that Theorem 7.1 from [14] implies that \({\mathcal {B}}_h\) and \({\mathcal {B}}_{{\bar{h}}}\) are compatible Poisson structures (item (i) in Fact 1). Let \(\Gamma ^{\alpha \beta }_s\) and \({{\bar{\Gamma }}}^{\alpha \beta }_s\) denote the contravariant Levi-Civita connections of h and \({\bar{h}}\). From Theorem 3.2 in [14] applied to \({\mathcal {B}}_h + {\mathcal {B}}_{{\bar{h}}}\), it follows that the connection \({{\widehat{\Gamma }}}^{\beta }_{qs}\) defined from

is symmetric and flat.

By direct computation \({{\widehat{\nabla }}} (h + {\bar{h}}) = \nabla h + {{\bar{\nabla }}} {\bar{h}} = 0\), so that \({{\widehat{\Gamma }}}\) is the Levi-Civita connection for \(h + {\bar{h}}\) and, moreover, \(h + {\bar{h}}\) is flat. According to Theorem 6.2 in [14], this implies that \({\mathcal {B}}_h + {\mathcal {B}}_{{\bar{h}}}\) is Darboux–Poisson (i.e. is given by (8)). Hence, in our notation, we obtain the formula

Setting \({{\widehat{\Gamma }}}^{\alpha \beta }_s = (h + {\bar{h}})^{\alpha q} {{\widehat{\Gamma }}}^{\beta }_{qs}\) to be the contravariant Levi-Civita connection of \(h + {\bar{h}}\), we get

and conclude that h and \({\bar{h}}\) are Poisson compatible in the sense of Definition 1 (in particular, this proves the (ii)-part of Fact 2). Hence, \(R_h= {\bar{h}} h^{-1}\) is a Nijenhuis operator (Fact 3).

For a pair of flat metrics h and \({\bar{h}}\), introduce the so-called obstruction tensor

It vanishes if and only if \(h, {\bar{h}}\) can be brought to constant form simultaneously (hence the name). It is obviously symmetric in lower indices. Condition (41) can be written in an equivalent form ([33], Lemma 3.1 and Theorem 3.2) in terms of only \(\Gamma ^{\alpha \beta }_s, {{\bar{\Gamma }}}^{\alpha \beta }_s, h, {\bar{h}}\)

After lowering both indices with h and rearranging the terms, we get

For a given metric h and its Levi-Civita connection, define

The \({\bar{c}}^{\alpha \beta }_{rs}, {{\widehat{c}}}^{\alpha \beta }_{rs}\) for \({\bar{h}}\) and \(h + {\bar{h}}\) are defined in a similar way. This formula is one ‘half’ of the formula for the Riemann curvature tensor, and the flatness of the metrics implies that \(c^{\alpha \beta }_{rs} = c^{\alpha \beta }_{sr}\) (and similarly for the metrics \({\bar{h}}, h + {\bar{h}}\)). Using this symmetry in lower indices, we apply the general formula (8) to the Poisson structures in (40) and collect the coefficients in front of \(D^2\) to get

Collecting all the terms with \(u^r_x u^s_x\) in this differential polynomial, in turn, implies

Using the characteristic property of the Levi-Civita connection

we rewrite \(c^{\alpha \beta }_{rs}\) as

Applying (41), (42) and (44) yields

Now consider the coordinate system in which the Nijenhuis operator \(R_h\) is diagonal. As \(R_h\) by definition is self-adjoint with respect to both h and \({\bar{h}}\), we get that both h and \({\bar{h}}\) are also diagonal. Condition (43) implies that for given \(\beta \) the only nonzero elements of \(S^{\beta }_{pq}\) are the ones that stand on the diagonal. The previous calculation yields

which, for fixed \(\alpha \) and \(\beta \), is just the product of four diagonal matrices, two of which are nondegenerate. Taking \(\alpha = \beta \), we see that the matrix \(S^{\alpha }_{qr}\) must be zero. As \(\alpha \) is arbitrary, this implies that the obstruction tensor vanishes and \(h, {\bar{h}}\) have common Darboux coordinates.
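The diagonality of h and \({\bar{h}}\) used in this argument can be spelled out by a short index computation. The sketch below assumes the convention \((R_h)^i_j = {\bar{h}}^{is} h_{sj}\) and writes \(\lambda _i\) for the eigenvalues of \(R_h\) (this symbol is introduced here only for illustration), which are pairwise different by assumption. Summation is over s only; no summation over i, j is implied.

```latex
\begin{align*}
(R_h)^i_s\, h^{sj} = \bar h^{ij} = \bar h^{ji} = (R_h)^j_s\, h^{si}
&\;\Longrightarrow\; \lambda_i h^{ij} = \lambda_j h^{ij}
\quad\text{when } (R_h)^i_s = \lambda_i \delta^i_s ,\\
(\lambda_i-\lambda_j)\,h^{ij}=0,\ \ \lambda_i\neq\lambda_j \ (i\neq j)
&\;\Longrightarrow\; h^{ij}=0 \ \text{ for } i\neq j,
\qquad \bar h^{ij}=\lambda_i h^{ij}=0 \ \text{ for } i\neq j .
\end{align*}
```

Thus both metrics are diagonal in any coordinate system that diagonalises \(R_h\).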

Fix coordinates in which both h and \({\bar{h}}\) are constant. Applying Fact 5, we see that these coordinates are Frobenius for both g and \({\bar{g}}\). Using (40) and applying Fact 5 to the sum of our Poisson structures, we get that \(a^{\alpha \beta }_s + {\bar{a}}^{\alpha \beta }_s\) define a commutative associative algebra, while \(b^{\alpha \beta } + {\bar{b}}^{\alpha \beta }\) and \(h^{\alpha \beta } + {\bar{h}}^{\alpha \beta }\) are Frobenius forms for this algebra, as required.

The converse statement follows immediately from Facts 4 and 5. \(\square \)

Proof of Theorem 2

Consider a pair of compatible flat metrics \(g, {\bar{g}}\) in common Frobenius coordinates \(u^1,\dots , u^n\)

Fact 4 implies that \(- \frac{1}{2}a^{\alpha \beta }_s\) and \(- \frac{1}{2}{\bar{a}}^{\alpha \beta }_s\) are the contravariant Christoffel symbols for g and \({\bar{g}}\), respectively. Compatibility of g and \({\bar{g}}\) means that the contravariant Levi-Civita symbols for the flat metric \(g + {\bar{g}}\) are the sum of the corresponding symbols for g and \({\bar{g}}\), that is, \(- \frac{1}{2} a^{\alpha \beta }_s-\frac{1}{2} {\bar{a}}^{\alpha \beta }_s\). At the same time, these symbols are constant and symmetric in upper indices. Hence the coordinates \(u^1,\dots , u^n\) are Frobenius for \(g + {\bar{g}}\) (Fact 4).

This, in turn, implies that \(a^{\alpha \beta }_s + {\bar{a}}^{\alpha \beta }_s\) are the structure constants of a commutative associative algebra and \(b^{\alpha \beta } + {\bar{b}}^{\alpha \beta }\) is one of its Frobenius forms. Thus, the corresponding Frobenius algebras are compatible.

Since g and \({\bar{g}}\) are both nondegenerate metrics, for a generic collection of constants \(m^0, m^1, \dots , m^n\), the bilinear forms

are both nondegenerate too. At the same time, each of them is the sum of a Frobenius form (\(m^0 b^{\alpha \beta }\) and \(m^0 {\bar{b}}^{\alpha \beta }\), respectively) and a trivial form (\(a^{\alpha \beta }_s m^s\) and \({\bar{a}}^{\alpha \beta }_s m^s\), respectively), which corresponds to \(m \in {\mathfrak {a}}^*\) with coordinates \(m^1, \dots , m^n\) and, thus, is also Frobenius (see Footnote 5). As a result, h and \({\bar{h}}\) lead us to Frobenius triples \((h, b, {\mathfrak {a}})\) and \(({\bar{h}}, {\bar{b}}, \bar{{\mathfrak {a}}})\).

By construction, \(h + {\bar{h}}\) defines (if nondegenerate) a Frobenius form for the ‘sum’ of the algebras. Thus, we get compatible Frobenius triples, which yield compatible non-homogeneous Poisson structures \({{\mathcal {B}}}_h + {{\mathcal {A}}}_g\) and \({{\mathcal {B}}}_{{\bar{h}}}+{{\mathcal {A}}}_{{\bar{g}}}\). \(\square \)

6 Proof of Theorem 3

6.1 Rewriting the Existence of Frobenius Coordinates in a Differential Geometric Form

We start with the following observation related to Frobenius coordinate systems (Fact 4): \((u^1,\dots , u^n)\) is a Frobenius coordinate system for a metric g if and only if the contravariant Christoffel symbols \(\Gamma ^{ij}_k = \sum _s g^{si} \Gamma ^j_{sk}\) in this coordinate system are constant and symmetric in upper indices.
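As a minimal illustration of this criterion, consider the one-dimensional case with a contravariant metric that is affine in the coordinate u. The constants a and b below are placeholders chosen for this sketch, and we assume \(au+b\ne 0\):

```latex
\begin{align*}
g^{11} &= a u + b, \qquad g_{11} = \frac{1}{a u + b},\\
\Gamma^1_{11} &= \tfrac12\, g^{11}\,\partial_u g_{11}
  = \tfrac12\,(a u + b)\cdot\frac{-a}{(a u + b)^2}
  = -\frac{a}{2(a u + b)},\\
\Gamma^{11}_1 &= g^{11}\,\Gamma^1_{11} = -\frac{a}{2}.
\end{align*}
```

The contravariant symbol is constant (and trivially symmetric), so u is a Frobenius coordinate for this metric. This is consistent with the expression \(-\frac{1}{2}a^{\alpha \beta }_s\) for the contravariant Christoffel symbols used in the proof of Theorem 2.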

We denote by \(\Gamma , {{\bar{\Gamma }}}\) the Levi-Civita connections of g and \({\bar{g}}\). Assuming that a common Frobenius coordinate system \(u^1, \dots , u^n\) exists, we let \({{\widehat{\Gamma }}}\) be the flat connection whose Christoffel symbols identically vanish in this coordinate system. Let \(R^i_{\ jk\ell }\), \({\bar{R}}^i_{\ jk\ell }\), and \({{\widehat{R}}}^i_{\ jk\ell }\) denote the corresponding curvature tensors. We assume \(n\ge 2\); the case \(n=1\) is trivial.

Consider the tensors

In terms of these tensors, the necessary and sufficient conditions that the connection \({{\widehat{\Gamma }}}\) determines Frobenius coordinates are:

Indeed, if \((u^1,\dots , u^n)\) is a common Frobenius coordinate system for g and \({\bar{g}}\), then in these coordinates \({{\widehat{\Gamma }}}^j_{sk}=0\), and \(\Gamma ^{ij}_k= g^{is} \Gamma ^j_{sk}\) and \({{\bar{\Gamma }}}^{ij}_k= {\bar{g}}^{is} {{\bar{\Gamma }}}^j_{sk}\) are both constant and symmetric in upper indices by Fact 4. Hence, (45)–(48) obviously follow.

Conversely, if (45)–(48) hold, then in the flat coordinates for \({{\widehat{\Gamma }}}^j_{sk}\) we see that \(\Gamma ^{ij}_k = S^{ij}_{\ \ k}\) and \({{\bar{\Gamma }}}^{ij}_k ={\bar{S}}^{ij}_{\ \ k}\) are both symmetric in upper indices due to (46) and (47) and are also constant due to (48). Therefore, by Fact 4, \((u^1,\dots , u^n)\) are Frobenius coordinates for both g and \({\bar{g}}\).

6.2 General Form of the Metric in Diagonal Coordinates

We work in the coordinates \((x^1,\ldots ,x^n)\) such that

where \(g_i\) are local functions on our manifold and \(\varepsilon _i\in \{-1,1\}\). Local existence of such coordinates follows from Facts 2 and 3, which imply that \(R_g\) is a Nijenhuis operator and therefore, according to the Haantjes theorem, is diagonalisable with \(\ell _i\) depending on \(x^i\) only (see also the various versions of diagonalisability theorems in [6], which, in particular, allow us to include the case of complex eigenvalues). We assume that all \(\ell _i(x^i)\) are different and never vanish.

Remark 6.1

We allow some of the diagonal variables \(x^i\) to be complex. Note that if a variable \(x^i\) is complex then, by [6, §3], we may assume that the corresponding eigenvalue \(\ell _i\) is a holomorphic function of \(x^i\). On a first reading, we recommend thinking of all the eigenvalues as real and then carefully checking that our proofs are based on algebraic manipulations and differentiations, which are perfectly well defined over complex coordinates, so that generalising the proofs to complex eigenvalues requires no change in the formulas. See also the discussion at the end of [9, §7].

Note that the results we use (e.g. [38, 40]) are also based on algebraic manipulations (essentially, on a careful calculation of the curvature tensor and connection coefficients) and are applicable if some of the eigenvalues are complex.

Note also that when we work over complex numbers, we may think that the numbers \(\varepsilon _i\) are all equal to 1. If all the eigenvalues of \(R_g\) are real, objects we will introduce in the proof will automatically be real as well.

Let us first consider conditions (46) and (47). We view them as a linear (algebraic) system of equations in the unknowns \({{\widehat{\Gamma }}}^i_{jk}\) (satisfying also \({{\widehat{\Gamma }}}^i_{jk}={{\widehat{\Gamma }}}^i_{kj}\)) whose coefficient matrix is constructed from the entries of g and L and whose free terms are constructed from \(g, {\bar{g}} , \Gamma , {{\bar{\Gamma }}}\). Rewritten so that the unknowns are on the left-hand side and the free terms are on the right-hand side, it has the following form:

We see that for fixed \(i=j=k\), the system bears no information. For fixed \(i\ne j\), the coefficient matrix \(\begin{pmatrix} \varepsilon _i e^{-g_i} &{} - \varepsilon _j e^{-g_j} \\ \ell _i \varepsilon _i e^{-g_i} &{} - \ell _j \varepsilon _j e^{-g_j} \end{pmatrix}\) of the linear system (50) is nondegenerate, since the eigenvalues \(\ell _i\) are all different, and therefore the system has a unique solution.
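The nondegeneracy claim can be verified by computing the determinant of this \(2\times 2\) matrix directly:

```latex
\[
\det\begin{pmatrix}
\varepsilon_i e^{-g_i} & -\varepsilon_j e^{-g_j}\\
\ell_i\varepsilon_i e^{-g_i} & -\ell_j\varepsilon_j e^{-g_j}
\end{pmatrix}
= \varepsilon_i\varepsilon_j\, e^{-g_i-g_j}\,(\ell_i-\ell_j).
\]
```

This is nonzero because \(\varepsilon _i\varepsilon _j=\pm 1\), the exponential never vanishes, and \(\ell _i\ne \ell _j\) for \(i\ne j\).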

The entries of the connections \(\Gamma \) and \({{\bar{\Gamma }}}\) of the diagonal metrics \(g_{ij}\) and \({\bar{g}}_{ij}:= gL^{-1}\) have been calculated many times in the literature, see, e.g., [9, Lemma 7.1], and are given by the following formulas:

-

\(\Gamma ^k_{ij} ={{\bar{\Gamma }}}^k_{ij}= 0\) for pairwise different i, j and k,

-

\(\Gamma ^k_{kj} = \frac{1}{2} \frac{\partial g_k}{\partial x^j} \) for arbitrary k, j,

-

\(\Gamma ^k_{jj} = - \frac{\varepsilon _j}{\varepsilon _k} \frac{e^{g_j-g_k}}{2} \frac{\partial g_j}{\partial x^k} \) for arbitrary \(k \ne j\),

-

\({{\bar{\Gamma }}}^k_{kj} = \Gamma ^k_{kj} \) for arbitrary \(k\ne j\),

-