Abstract

In forensic medical investigations, physical injuries are documented with photographs accompanied by written reports. Automatic segmentation and classification of wounds on these photographs could provide forensic pathologists with a tool to improve the assessment of injuries and accelerate the reporting process. In this pilot study, we trained and compared several preexisting deep learning architectures for image segmentation and wound classification on forensically relevant photographs in our database. The best scores were a mean pixel accuracy of 69.4% and a mean intersection over union (IoU) of 48.6% when evaluating the trained models on our test set. The models had difficulty distinguishing the background from wounded areas. As an example, image pixels showing subcutaneous hematomas or skin abrasions were assigned to the background class in 31% of cases. Stab wounds, on the other hand, were reliably classified with a pixel accuracy of 93%. These results can be partially attributed to undefined wound boundaries for some types of injuries, such as subcutaneous hematoma. However, despite the large class imbalance, we demonstrate that the best trained models could reliably distinguish among seven of the most common wounds encountered in forensic medical investigations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

In this pilot study, our aim was to automatically segment and classify skin wounds on photographs taken during forensic medical examinations of injured persons. Performing segmentation prior to the assessment by a forensic pathologist could reduce the time needed for examination and documentation. Our approach of using neural networks for image-based wound assessments in a legal or investigative context is not yet fully covered in the literature.

Anisuzzaman et al. (2020) discuss three main algorithms for image-based wound assessment: rule-based, machine learning, and deep learning—a subset of machine learning based on artificial neural networks [1]. Rule-based algorithms rely on manual wound descriptions [2, 3], while machine learning and deep learning algorithms can be trained to extract features directly from the images and use this information for further assessments. For example, Li et al. (2018) employed a composite model that combines watershed segmentation with dynamic thresholding and deep learning [4].

In recent years, several papers on image-based wound assessment have been published. However, unlike our study, most of the papers focus on chronic wounds and not forensic images [1, 4,5,6,7,8,9,10,11,12]. Wang et al. (2020) built a deep learning framework for the automatic segmentation of chronic wounds [7]. Other related works examined burn marks [13,14,15,16]. For example, Jiao et al. (2019) proposed a deep learning method based on mask region-based convolutional neural networks, which generate regions of interest on the images and then detect and segment burn wounds from each of those regions [13]. In forensics, Oura et al. (2021) utilized artificial neural networks to assess the shooting distance using images of gunshot wounds in piglets [17].

Among the most successful and commonly employed techniques for image segmentation are convolutional neural networks (CNNs) [18,19,20]. Many models currently use an encoder-decoder architecture in their CNN [19]. The encoder downsamples the input image to extract features, while the decoder upsamples the feature maps to gradually recover the full resolution of the input image necessary to establish a segmentation map. Skipped connections—a technique where some of the hidden layers in the neural network are skipped in an alternative path—are often used to combine coarse-grained, high-level information with fine-grained, low-level information [19]. As an example, in Wang et al. (2015), the authors developed a simple encoder-decoder CNN architecture to segment wound regions [5]. Feature pyramid network (FPN) architectures are based on a pyramidal hierarchy of CNNs that consist of a bottom-up pathway and a top-down pathway. The bottom-up feature encoder is connected via lateral connections to the top-down pathway, which were developed for building high-level semantic feature maps at various scales [21]. Another popular architecture for medical image segmentation is U-Net, which also builds on an encoder-decoder architecture [22].

A variety of networks can serve as encoders. For example, ResNet uses skip connections to facilitate the training of deep neural networks [23]. ResNeXt extends this technique by splitting the input of each building block into branches, transforming them, and then merging them again [24]. Squeeze-and-excitation (SE) networks, for example, SE-ResNeXt, add a channelwise attention mechanism to the CNN architecture [25]. ResNeSt also builds on the ResNeXt architecture by applying channelwise split attention on the different network branches [26]. EfficientNet builds on mobile inverted bottleneck blocks combined with squeeze-and-excitation layers, and a compound scaling factor was used to rescale the models to the desired size [27].

We compare different existing encoder-decoder architectures and loss functions for the segmentation and classification of wounds on forensic images.

Methods

For this study, we labeled photographs from our forensic photo database to train preexisting CNNs and compare their suitability for wound segmentation and classification on our test dataset. Information about data augmentation, data preprocessing, and evaluation metrics is provided in Online Resource 1.

Case selection

Wound images were retrospectively extracted from our database. We included all closed cases from January 2017 to September 2020 that had a clinical forensic medical examination performed at the Zurich Institute of Forensic Medicine. A total of 1753 pictures from 817 cases were extracted, showing 4666 separate wounded regions. During the extraction, we ensured that at least one skin injury was visible in each photograph. There were no exclusion criteria. However, if several photographs of a specific wound were available, usually only one photograph was chosen to keep the diversity as high as possible. We also included photographs of untreated wounds taken by the staff from hospitals where the injured person was treated. We deliberately included images containing small or barely visible lesions and some treated wounds, over- or underexposed photographs, images with blurry wounds in the background, and images with tattoos next to the wounds. These photographs should strengthen the models to become more resilient against generating incorrect predictions from poor-quality images (Fig. 1b).

Photographs showing two examples of wounds from the training set. The picture on the left (a) is an example of small potentially nonwounded and unspecified areas that have been classified as subcutaneous hematomas. In this case, it is unclear whether this wound should be classified as one or several wounds. The picture on the right (b) is an example of a subcutaneous hematoma where the exact boundaries are not clearly defined and parts are barely visible (red, subcutaneous hematoma; blue, skin abrasion)

Dataset

Semantic wound segmentation and classification were realized by a medical student with the VGG Image Annotator (VIA) version 2.0.10 using the forensic reports as a reference [28]. Semantic image segmentation describes the task of assigning each pixel of the input image to a certain class [18,19,20]. Instance image segmentation extends this approach by delineating and detecting individual objects as separate objects, even if they belong to the same class [19]. We chose semantic segmentation for our study; for example, skin abrasions and subcutaneous hematomas could sometimes be classified as a single wound or as a collection of wounds within one area (Fig. 1a). Another difficulty when segmenting images is that the wound boundaries are often not well delineated. For example, different experts might place the boundaries of a subcutaneous hematoma differently when looking at the same image (Fig. 1b).

All images were rechecked to avoid classification mistakes. Cases with unclear classification were discussed with a board-certified forensic pathologist. The wounds were labeled using ten different categories: (1) skin abrasion, (2) subcutaneous hematoma (bleeding under the skin), (3) dermatorrhagia (bleeding into the skin), (4) cut, (5) contused-lacerated, (6) stab, (7) thermal, (8) semisharp force, (9) puncture/gunshot wound, and (10) laceration. Figure 2 presents the total number of wounds per category (red bars) and shows the ratio of the wound area to the total image area in percentage and per category (blue bars). We subsequently removed wounds in categories (8) semisharp force, (9) puncture/gunshot wound, and (10) laceration from the results, as we realized that their numbers were too few for a proper model comparison.

As shown in Fig. 2, there was a large wound area-to-image area imbalance. Even the most prominent class of injury, subcutaneous hematoma, has only approximately 1% of all image pixels assigned to it, and all wounds combined comprised only approximately 2%. Additionally, there was a very significant imbalance among the different wound classes for both total wound area and wound counts.

Some wounds, such as bleeding into the skin (mostly petechiae) or abrasions, often consist of dozens of small injuries in one area that are hard to individually segment. In these cases, sometimes, the whole area was classified as one wound, even though there was some healthy skin near the injuries (Fig. 1a). In some rarer cases, it was possible to have two correct wound class labels in the same injured area. These regions were assigned to the visually more dominant class. For example, if an area showed clear superficial skin abrasions with a barely visible subcutaneous hematoma underneath, then the wound was labeled as skin abrasion.

Not all injuries could be reliably classified. To address this label noise, a likelihood attribute for each wounded region was included in the data by a medical student. The likelihood corresponded to “not certain,” “quite certain,” or “very certain.”

Training

To evaluate our models, we randomly selected a test set of 182 images. We applied sevenfold cross-validation from scikit-learn [29] to randomly split the remaining data into seven groups. In each fold, one of these groups served as the validation set with 225 images, and the other 6 groups served as the training set with 1346 images.

During training, we used a batch size of eight images. All training images were randomly shuffled between each epoch for a total of 100 epochs. The model parameters were updated using the Adam optimizer algorithm [30]. The initial learning rate, which determines the step size toward the minimum of the loss function, was set to \(1\times {10}^{-4}\) and then reduced by multiplying it by a factor of 0.98 after each epoch, reducing it to approximately \(1.3\times {10}^{-5}\) after 100 epochs. The training pipeline was implemented using PyTorch [31]. The models with the best mean pixel accuracy on the validation set and the models that had been trained for 100 epochs were saved and later evaluated. All results were averaged sevenfold.

Loss function

Commonly employed loss functions for image segmentation include the pixelwise binary cross-entropy (BCE) loss and Dice loss functions. The Dice loss can be generalized to obtain the Tversky loss function, which enables us to control both the number of false-positives and the number of false-negatives with two parameters \(\alpha\) and \(\beta\). The focal Tversky loss (FTL) function introduced by Abraham and Khan additionally allows us to change the contributions of the different classes with respect to the Tversky loss function by adding a \(\gamma\) factor to the function [32]. We chose \(\alpha\) = 0.7, \(\beta\) = 0.3, and \(\gamma\) = 1.3 as Abraham and Khan reported good results using these values in their paper [32].

We tested different combinations of the focal Tversky and BCE loss functions. However, the weighted BCE loss function as described in Eq. (1) yielded the best results. The weights \({w}_{c}\), which were chosen inversely proportional to the label frequencies (wound areas) \({f}_{c}\), allowed us to counteract the class imbalances. The parameter \({p}_{ic}\) represents the predicted value for a pixel, and \({g}_{ic}\) represents the ground truth. The value \(N\) denotes the total number of pixels in the image. The total number of wound classes is indicated by \(c\). The smoothing factor \(\epsilon\) was set to \(1\times {10}^{-3}\) to avoid extreme gradients. Additional weights \({m}_{ic}\) for each pixel were employed to take into account the certainty of classification during labeling (see the “Dataset” section for more information). The value \({m}_{ic}\) was set to 1; if the wound classification was considered very certain, \({m}_{ic}\) was set to 1.5 for that wounded area, and if it was considered not certain, \({m}_{ic}\) was set to 0.5.

For our models, we initially chose weights \({w}_{c}=1/100{f}_{c}\). We also tried \({w}_{c}=\sqrt{1/100{f}_{c}}\). The constant 1/100 was chosen because subcutaneous hematoma is the most common class and covers, on average, approximately 1% of the image surface area, which leads to \({w}_{\mathrm{hematoma}}\approx 1\) and all other weights being greater than 1. Taking the square root thus leads to lower weights. The weights exceeded one, as only the first term in Eq. (1) is weighted with \({w}_{c}\). Consequently, the first term is weighted higher than the second term, and the incentive for the model to avoid false-negative predictions is higher than that to avoid false-positive predictions.

Model selection

Initial tests were carried out with a combination of FPN and U-Net decoders and four different encoders: EfficientNet-B3, ResNeSt-50, ResNet-50, and SE-ResNeXt-50. They were trained with a weighted (\({w}_{c}=1/100{f}_{c}\)) BCE loss function without using \({m}_{ic}\) weights according to the certainty of classification (see the “Loss function” section for more details). We downloaded the encoders and the FPN and U-Net decoders from Segmentation Models PyTorch—v0.1.2 [33] and used pretrained ImageNet weights for transfer learning. The default numbers of layers and filters were left unchanged. A softmax2d activation function provided the final predictions, after which each pixel was assigned to the class with the highest prediction. SE-ResNeXt-50 U-Net showed the best results in this first round. Further tests focused on different loss functions and weights, which were compared by training the SE-ResNeXt-50 U-Net and SE-ResNeXt-50 FPN models to determine if changes in the loss function equally affect both model architectures.

Results

In this study, we compared different encoder-decoder architectures and loss functions based on the mean IoU and mean pixel accuracy. All results were averaged sevenfold. Online Resource 2 gives an overview of the results for different combinations of encoders and decoders on the test dataset. The best scores were achieved by SE-ResNeXt-50 in combination with a U-Net decoder. The model with the best mean validation accuracy was evaluated on the test dataset. Averaged sevenfold, the model achieved a mean pixel accuracy of 67.5% and a mean IoU of 39.9% on the test set. The other encoders gave superior results in combination with an FPN compared to a U-Net decoder. Consequently, the mean pixel accuracy was 3.4%, and the mean IoU was 3.6% higher on average for the FPN.

As shown in Online Resource 2, the models with the best mean pixel accuracy evaluated on the validation set have a better mean pixel accuracy on the test set than the models trained for the full 100 epochs. For the 8 models in Online Resource 2, the decrease in mean pixel accuracy was approximately 3.1% on average. On the other hand, the mean IoU was on average 6.2% higher after 100 completed epochs of training.

Figure 3 shows the mean pixel accuracy and IoU for SE-ResNeXt-50 U-Net and SE-ResNeXt-50 FPN evaluated on the validation sets for each epoch. We observed that the mean pixel accuracy peaks after 20 to 60 epochs and shows a minimal downward tendency. In comparison, the IoU continues to slightly improve up to epoch 100. Generally, we observed that FPN architectures learned at a faster rate than U-Net architectures.

Time series plot showing the IoU and mean pixel accuracy for each epoch, averaged sevenfold and evaluated on the validation sets. The U-Net architecture appears to learn at a slower rate than the FPN architecture. While the IoU continues to improve up to the last epoch, the mean pixel accuracy peaks between epoch 20 and epoch 60 and then continues to slightly decrease

Online Resource 3 shows an overview of different combinations of the focal Tversky and binary cross-entropy loss functions, as well as the effects of using different weights. All loss functions were tested with SE-ResNeXt-50 FPN and SE-ResNeXt-50 U-Net. Overall, the SE-ResNeXt-50 U-Net and FPN architectures were equally good, with U-Net having a 0.7% higher mean pixel accuracy but a mean IoU that was 0.6% lower than the FPN on average.

The BCE loss function showed results superior to the FTL and combinations of FTL and BCE (Online Resource 3).

In our baseline BCE loss function (Eq. 1), only the first term is weighted. Weighting the second term also led to a 9.9% lower mean pixel accuracy but a 2.7% increase in the mean IoU on average. Using smaller class weights by taking the square root of the weights (\({w}_{c}=\sqrt{1/100{f}_{c}}\)) led to a 1.2% decrease in the mean pixel accuracy but a 4.2% increase on average in the mean IoU for all loss functions compared to the original weights \({w}_{c}= 1/100{f}_{c}\).

Our dataset included information regarding the level of classification certainty during the labeling of the wounds, which was utilized in the loss function by adding additional weights (for more details, see the “Dataset” and “Loss function” sections). With the added weights, we achieved the highest mean pixel accuracy of 69.4% and a mean IoU of 38.1% with SE-ResNeXt-50 FPN. The additional weights increased the mean pixel accuracy by 1.9% on average. The effects on the mean IoU were variable, with an average decrease of 1.9% for U-Net but an increase of 0.9% for FPN. Combining this loss with smaller class weights by taking the square root of the weights \(({w}_{c}=\sqrt{1/100{f}_{c}})\), we achieved the highest observed mean IoU of 48.7% but a lower pixel accuracy of 63.4% again with the SE-ResNeXt-50 FPN.

The confusion matrix in Fig. 4 shows the pixel accuracies evaluated on the test set for the model with the highest mean pixel accuracy, as described above. The model fails to fully distinguish wounds from the background. In particular, pixels classified as subcutaneous hematomas and skin abrasions were incorrectly assigned to the background class in approximately 31% of cases. The model is also unsuccessful in fully differentiating dermatorrhagia and subcutaneous hematoma, with 14% incorrectly classified as the latter. Stab wounds, however, were very reliably classified with a pixel accuracy of approximately 93% in the test dataset, and the network quite successfully distinguished cut and stab wounds, with less than 10% of cut pixels being classified as stab wounds and less than 5% of stab wound pixels being classified as cuts.

Confusion matrix showing the mean pixel accuracy evaluated on the test set averaged sevenfold. The selected model is SE-ResNeXt-50 FPN, trained with a BCE loss function with \({w}_{c}=1/100{f}_{c}\) and weights according to the certainty of classification (Eq. 1). Then, the model with the best mean pixel accuracy on the validation sets was selected. All results are averaged sevenfold

Discussion

The main goal of our study was to train a reliable algorithm to automate forensic wound segmentation and classification. Related studies assessed relatively large wounds with well-defined borders and few classes [4,5,6,7,8,9, 13]. This choice makes it difficult to directly compare their results with our results. More importantly, most studies focus on chronic wounds and not forensic images [1, 4,5,6,7,8,9,10,11,12]. Forensic images usually show more contextual information and different wound classes. Furthermore, the photographs often have a higher resolution and several types of wounds on the same image.

We assume that the high overlap among subcutaneous hematoma, skin abrasion, and background depicted in Fig. 4 can be partially explained by often undefined wound boundaries for these classes. Moreover, we observed a tradeoff between IoU and pixel accuracy. While the mean pixel accuracy peaks early, the IoU continues to improve (Fig. 3). We conclude that the optimal number of epochs depends on whether the aim is to reach the highest possible IoU or the highest pixel accuracy.

Similarly, reducing the weights by taking the square root of the weights increased the mean IoU, but only at the cost of a lower mean pixel accuracy. One explanation for this effect is that smaller loss weights led to a smaller image area assigned to the wound classes, resulting in substantially fewer false-positive predictions and a better IoU. There was also a slight increase in false-negatives and a decrease in true positives, which caused a decrease in the pixel accuracy. We observed substantially better results with the BCE loss function than with the FTL (Online Resource 3). The large difference might be partially attributed to the parameters \(\alpha\), \(\beta\), and γ used in the FTL, which potentially could be further optimized for our models and dataset.

Collecting additional data to improve the certainty of classification during the manual segmentation and classification of the wounds helped us further increase the mean pixel accuracy. Compared to the segmentation of the wounds, only a small additional effort was needed to collect these data. Based on our results, using this information seems worthwhile in the case of datasets with a significant proportion of wounds that are difficult to classify with high certainty just by looking at the photographs.

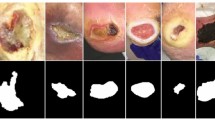

Figure 5 shows a collection of true and false predictions obtained on the test set. For instance, mistakes were made by the network that would be obvious to a human, such as mistaking parts of the background as wounds (Fig. 5 (1)). Increasing the size and diversity of our dataset could avoid this kind of mistake. One of the limitations to this approach is the low number of newly closed cases and the time needed to mask the images. Another type of less obvious mistake is visible in Fig. 5 (2). The network correctly classified parts of the cut. However, the wider center part of the wound was labeled stab. Additionally, dried blood on the hand was classified as a cut. The third type of misclassification is hard to assess even for a human without zooming in on a photograph or having additional background information on the case. An example is Fig. 5 (3), where the model predicts a cut, while the ground truth classification is skin abrasion. At a lower resolution, it is difficult, even for a human eye, to confidently assess the type of wound. Such errors are quite rare and might be fixed by increasing the resolution of the training images. In our study, this size was set to \(512\times 512\) pixels due to limited computing resources. Figure 5 (4) depicts an additional example from our test set. Note that the model correctly classifies the blood on the cloth and arm as background.

Illustration of photographs with their corresponding predictions made by the SE-ResNeXt-50 FPN model on our test dataset. The network was trained with a BCE loss function, \({w}_{c}=1/100{f}_{c}\), and weights according to the certainty of classification (Eq. 1). Then, the model with the best mean pixel accuracy on the validation sets was selected. Columns from left to right: input image (a), ground truth/target label (b), and its predictions (c). The first three rows depict common mistakes by the network: mistaking parts of the background as wounds and other obvious mistakes [1]; center of cut classified as stab wounds and dried blood classified as a cut and other understandable mistakes [2]; misclassification of skin abrasion as a cut wound [3]. Using only the photograph, especially at a low resolution, it is difficult for a human to determine the correct classification. Row four depicts an additional example from our test set [4]. The model correctly classifies the blood on the cloth and arm as background. (blue, thermal wound; green, cut; orange, stab wound; and magenta, skin abrasion)

Preventing the abovementioned misclassifications and thus reducing false-positive and false-negative predictions remain challenging. In praxis, a photograph is not always sufficient to correctly classify a wound. Currently, human supervision is still critical for correcting false predictions.

Future studies could test the networks with other methods employed in medical forensic imaging, such as three-dimensional photogrammetry [34]. Future studies might also expand the network with multilabel classification in the case of overlapping wounds and add a postprocessing step to remove very small wound predictions that would otherwise cost substantial time to revise if incorrect. However, the next step will be to use wound segmentations and classifications to automatically create short wound descriptions and thus facilitate the documentation process.

Key points

-

1.

SE-ResNeXt-50 encoders combined with FPN or U-Net decoder models are suitable for differentiating wounds on photographs.

-

2.

Most common errors are linked to misclassifying backgrounds as wounds and vice versa due to undefined wound boundaries.

-

3.

Stab wounds were reliably classified with a pixel accuracy of 93%.

-

4.

Use of a weighted BCE loss function gave the best results.

Data availability

The datasets analyzed during the current study are not publicly available due to data privacy. The code and the trained models are available on reasonable request.

References

Anisuzzaman DM, Wang C, Rostami B, Gopalakrishnan S, Niezgoda J, Yu Z. Image-based artificial intelligence in wound assessment: a systematic review. Adv Wound Care. 2021. https://doi.org/10.1089/wound.2021.0091.

Yadav MK, Manohar DD, Mukherjee G, Chakraborty C. Segmentation of chronic wound areas by clustering techniques using selected color space. J Med Imaging Health Inform. 2013;3:22–9. https://doi.org/10.1166/jmihi.2013.1124.

Veredas F, Mesa H, Morente L. Binary tissue classification on wound images with neural networks and bayesian classifiers. IEEE Trans Med Imaging. 2010;29:410–27. https://doi.org/10.1109/TMI.2009.2033595.

Li F, Wang C, Liu X, Peng Y, Jin S. A composite model of wound segmentation based on traditional methods and deep neural networks. Comput Intell Neurosci. 2018;2018:4149103. https://doi.org/10.1155/2018/4149103.

Wang C, Yan X, Smith M, Kochhar K, Rubin M, Warren SM, Wrobel J, Lee H. A unified framework for automatic wound segmentation and analysis with deep convolutional neural networks. 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy. 2015;2415–18. https://doi.org/10.1109/EMBC.2015.7318881.

Anisuzzaman DM, Patel Y, Niezgoda JA, Gopalakrishnan S, Yu Z. A Mobile app for wound localization using deep learning. IEEE Access. 2022;10:61398–409. https://doi.org/10.1109/ACCESS.2022.3179137.

Wang C, Anisuzzaman DM, Williamson V, Dhar MK, Rostami B, Niezgoda J, et al. Fully automatic wound segmentation with deep convolutional neural networks. Sci Rep. 2020;10:21897. https://doi.org/10.1038/s41598-020-78799-w.

Wagh A, Jain S, Mukherjee A, Agu E, Pedersen P, Strong D, et al. Semantic segmentation of smartphone wound images: comparative analysis of AHRF and CNN-based approaches. IEEE Access. 2020;8:181590–604. https://doi.org/10.1109/access.2020.3014175.

Zahia S, Garcia-Zapirain B, Elmaghraby A. Integrating 3D model representation for an accurate non-invasive assessment of pressure injuries with deep learning. Sensors (Basel). 2020. https://doi.org/10.3390/s20102933.

Marijanović D, Nyarko EK, Filko D. Wound detection by simple feedforward neural network. Electronics. 2022;11:329. https://doi.org/10.3390/electronics11030329.

Chang CW, Christian M, Chang DH, Lai F, Liu TJ, Chen YS, Chen WJ. Deep learning approach based on superpixel segmentation assisted labeling for automatic pressure ulcer diagnosis. PLoS One. 2022;17:e0264139. https://doi.org/10.1371/journal.pone.0264139.

Scebba G, Zhang J, Catanzaro S, Mihai C, Distler O, Berli M, Karlen W. Detect-and-segment: a deep learning approach to automate wound image segmentation. Inform Med Unlocked. 2022;29:100884. https://doi.org/10.1016/j.imu.2022.100884.

Jiao C, Su K, Xie W, Ye Z. Burn image segmentation based on mask regions with convolutional neural network deep learning framework: more accurate and more convenient. Burns Trauma. 2019;7:6. https://doi.org/10.1186/s41038-018-0137-9.

Liu H, Yue K, Cheng S, Li W, Fu Z. A framework for automatic burn image segmentation and burn depth diagnosis using deep learning. Comput Math Methods Med. 2021;2021:5514224. https://doi.org/10.1155/2021/5514224.

Şevik U, Karakullukçu E, Berber T, Akbaş Y, Türkyılmaz S. Automatic classification of skin burn colour images using texture-based feature extraction. IET Image Proc. 2019;13:2018–28. https://doi.org/10.1049/iet-ipr.2018.5899.

Dai F, Zhang D, Su K, Xin N. Burn images segmentation based on Burn-GAN. J Burn Care Res. 2020. https://doi.org/10.1093/jbcr/iraa208.

Oura P, Junno A, Junno J-A. Deep learning in forensic gunshot wound interpretation-a proof-of-concept study. Int J Legal Med. 2021;135:2101–6. https://doi.org/10.1007/s00414-021-02566-3.

Taghanaki SA, Abhishek K, Cohen JP, Cohen-Adad J, Hamarneh G. Deep semantic segmentation of natural and medical images: a review. Artif Intell Rev 2021;54:137–78. https://doi.org/10.1007/s10462-020-09854-1.

Minaee S, Boykov Y, Porikli F, Plaza A, Kehtarnavaz N, Terzopoulos D. Image segmentation using deep learning: a survey. IEEE Trans Pattern Anal Mach Intell. 2022;44:3523–42. https://doi.org/10.1109/TPAMI.2021.3059968.

Ulku I, Akagündüz E. A survey on deep learning-based architectures for semantic segmentation on 2D images. Appl Artif Intell. 2022. https://doi.org/10.1080/08839514.2022.2032924.

Seferbekov S, Iglovikov V, Buslaev A, Shvets A. Feature pyramid network for multi-class land segmentation. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA. 2018;272–2723. https://doi.org/10.1109/CVPRW.2018.00051.

Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. Med Image Comput Comput-Assist Interv MICCAI. 2015;234–41. https://doi.org/10.1007/978-3-319-24574-4_28.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA. 2016;770–8. https://doi.org/10.1109/CVPR.2016.90.

Xie S, Girshick R, Dollár P, Tu Z, He K. Aggregated residual transformations for deep neural networks. arXiv:1611.05431. 2017. https://doi.org/10.48550/arXiv.1611.05431.

Hu J, Shen L, Sun G. Squeeze-and-excitation networks. IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA. 2018;7132–41. https://doi.org/10.1109/CVPR.2018.00745.

Zhang H, Wu C, Zhang Z, Zhu Y, Lin H, Zhang Z, et al. ResNeSt: Split-attention networks. arXiv:2004.08955. 2020. https://doi.org/10.48550/arXiv.2004.08955.

Tan M, Le VQ. EfficientNet: rethinking model scaling for convolutional neural networks. Int Conf Mach Learn. 2020. https://doi.org/10.48550/arXiv.1905.11946.

Dutta A, Zisserman A. The VIA Annotation Software for Images, Audio and Video. In Proceedings of the 27th ACM International Conference on Multimedia (MM '19). Association for Computing Machinery, New York, NY, USA. 2019;2276–79. https://doi.org/10.1145/3343031.3350535.

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: machine learning in Python. J Mach Learn Res. 2011;12:2825–30. https://dl.acm.org/doi/10.5555/1953048.2078195.

Kingma DP, Ba J. Adam: a method for stochastic optimization. San Diego. 2015.

Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, et al. PyTorch: an imperative style, high-performance deep learning library. Adv Neural Inf Process Syst. 2019;32:8024–35.

Abraham N, Khan NM. A Novel focal tversky loss function with improved attention U-Net for lesion segmentation. IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy. 2019;683–87. https://doi.org/10.1109/ISBI.2019.8759329.

GitHub. qubvel/segmentation_models.pytorch. 2020–11–28T22:00:00.000Z. https://github.com/qubvel/segmentation_models.pytorch. Accessed 28 Nov 2020.379Z.

Michienzi R, Meier S, Ebert LC, Martinez RM, Sieberth T. Comparison of forensic photo-documentation to a photogrammetric solution using the multi-camera system “Botscan.” Forensic Sci Int. 2018;288:46–52. https://doi.org/10.1016/j.forsciint.2018.04.012.

Acknowledgements

The authors thank Lars Ebert and Julian Haug for discussions and improvements to the manuscript and Sabine Franckenberg for checking our assessment of the wound classification.

Funding

Open access funding provided by University of Zurich. This study was funded by the Emma Louis Kessler Foundation.

Author information

Authors and Affiliations

Contributions

N.Z. retrieved the images from the database and annotated the wound images. N.Z. trained and compared the models. N.Z. and A.D. wrote the manuscript. A.D. supervised the study. T.S. designed the study and reviewed the manuscript. All authors read and approved the manuscript.

Corresponding author

Ethics declarations

Ethics approval

This retrospective study was approved by the Cantonal Ethics Board of the Canton of Zurich (document KEK ZH-No. 15–0686), and all methods were performed in accordance with the relevant guidelines and regulations. The Cantonal Ethics Board of the Canton of Zurich has also waived the need of an informed consent.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zimmermann, N., Sieberth, T. & Dobay, A. Automated wound segmentation and classification of seven common injuries in forensic medicine. Forensic Sci Med Pathol (2023). https://doi.org/10.1007/s12024-023-00668-5

Accepted:

Published:

DOI: https://doi.org/10.1007/s12024-023-00668-5