Abstract

We prove a very general fixed point theorem in the space of functions taking values in a random normed space (RN-space). Next, we show several of its consequences and, among others, we present its applications to proving Ulam stability results for the general inhomogeneous linear functional equation in several variables, in the class of functions f mapping a vector space X into an RN-space. Particular cases of this equation include, for instance, the functional equations of Cauchy, Jensen, Jordan–von Neumann, Drygas, Fréchet and Popoviciu, the equations of polynomials and monomials, the equation of p-Wright affine functions, and several others. We also show how to use the theorem to study approximate eigenvalues and eigenvectors of some linear operators.


1 Introduction

In this paper, we prove a fixed point theorem for classes of functions taking values in a random normed space (RN-space) and show some applications of it to several issues connected with Ulam-type stability.

The study of such stability was initiated by a question of Ulam from 1940 (cf., e.g., [48, 96]), asking whether an “approximate” solution of the functional equation of group homomorphisms must be “close” to an exact solution of that equation. The first answer was given by Hyers [48], who considered the question for the Cauchy functional equation in Banach spaces and used a method that was subsequently called the direct method: he defined the exact solution explicitly as the pointwise limit of a sequence of mappings constructed from the given approximate solution.

Later, Hyers’ result was generalized by Aoki [10], Rassias [84], Forti [37], Gajda [39], Gǎvruta [40] and others, using similar methods. We refer to the monographs [25, 49, 53] for more information on the history and recent research directions related to the subject.

Further, in 2003, Radu [82] proposed a new method, based on the fixed point alternative in [34], to recover the main result of Rassias [84]. The same fixed point method, together with the Banach Contraction Principle, has subsequently been used by many other authors to study the stability of a large variety of functional equations (see, for example, [21, 27, 33, 69, 74] and the references therein). A modification of it was proposed in [74, 75], where the author associated a suitable set of functions with the given approximate solution of a functional equation, made that set a complete metric space, and then applied the Banach theorem. Many new fixed point theorems have been proved in the literature for investigating Ulam stability in spaces endowed with various generalized metrics, such as fuzzy metric, quasi-metric, partial metric, G-metric, D-metric, b-metric, 2-metric, ultrametric, modular metric, and dislocated metric; see, for instance, [5, 9, 46, 56, 64].

Some authors have also used a somewhat different approach, proposed for the first time in [18, 19] (see [21] for further references), which applies the fixed point result for function spaces proved in [20]. For instance, Bahyrycz and Olko [13] applied that approach in their study of the stability of the general functional equation

$$\begin{aligned} \sum _{i=1}^{m} A_i f\Big (\sum _{j=1}^{n} a_{ij}x_j\Big ) + A = 0 \end{aligned}\tag{1.1}$$

for functions f mapping a linear space X over a field \({\mathbb {K}}\) into a Banach space Y, where \(A \in Y\) and, for every \(i =1, 2, \ldots , m\), \(j = 1, 2, \ldots , n\), \(A_i \in {\mathbb {K}}^* := {\mathbb {K}} {\setminus } \{0\}\), and \(a_{ij} \in {\mathbb {K}}\). Let us mention that numerous functional equations that are well known in the literature are particular cases of (1.1) (see Sect. 6 for more details).

Bahyrycz and Olko [14] and Zhang [98] (see also [75]) published hyperstability results for Eq. (1.1), obtained by means of the same theorem from [20]. Related results can also be found in [16, 17].

The theory of probabilistic metric (or random normed) spaces was proposed by Menger [66] as a probabilistic extension of metric space theory (see also [87]). This theory was later investigated by Šerstnev [89,90,91] (we also refer to the book [44]). It seems that Alsina [7] was the first to consider Ulam-type stability of functional equations in probabilistic normed spaces. Next, in 2008, Mihet and Radu [68], using the fixed point method, proved stability results for the Cauchy and Jensen functional equations in random normed spaces.

The stability of many other functional equations was also investigated in random spaces. For example, Kim et al. [57] investigated the stability of the general cubic functional equation, Abdou et al. [1] studied the stability of the quintic functional equations, Alshybani et al. [6] used the direct and the fixed point methods to prove the stability results for the additive–quadratic functional equation, and Pinelas et al. [76] used the direct and the fixed point method to show stability of a new type of the n-dimensional cubic functional equation. We also refer to the book of Cho et al. [28] for more details on that type of stability in random normed spaces.

In this paper, we will first show a general fixed point theorem for classes of functions taking values in a random normed space. This is the random normed space version of the fixed point theorems in [20, 22] (see also [24]), which turned out to be very useful in investigations of the stability of various functional equations. Next, we show how to use the theorem to study the Ulam stability of various functional equations in a single variable and to investigate approximate eigenvalues and eigenvectors in spaces of functions taking values in RN-spaces.

Finally, using this fixed point theorem, we prove very general results on the stability of the functional equation

$$\begin{aligned} \sum _{i=1}^{m} A_i f\Big (\sum _{j=1}^{n} a_{ij}x_j\Big ) = D(x_1,\ldots ,x_n) \end{aligned}$$

for functions mapping a linear space X into a random normed space Y, with a given function \(D : X^n \rightarrow Y\). As special cases of this result, we can obtain the stability criteria for numerous functional equations in several variables, in the framework of random normed spaces.

2 Preliminaries

In the sequel, we use the definitions and properties of the random normed space (RN-space) as in [7, 28, 44, 45, 64, 68, 87, 89,90,91]. However, for the convenience of the reader, we recall some of them.

Definition 2.1

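A mapping \(g : {\mathbb {R}} \rightarrow [0, 1] \) is called a distribution function if it is left continuous, non-decreasing and

$$\begin{aligned} \inf _{t\in {\mathbb {R}}} g(t) = 0, \qquad \sup _{t\in {\mathbb {R}}} g(t) = 1. \end{aligned}$$

The class of all distribution functions g with \(g(0) = 0\) is denoted by \({\mathcal {D}}_+\).

For any real number \(a \ge 0\), \(H_a\) is the element of \({\mathcal {D}}_+\) defined by

$$\begin{aligned} H_a(t) = {\left\{ \begin{array}{ll} 0, & t \le a,\\ 1, & t > a. \end{array}\right. } \end{aligned}$$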

Definition 2.2

[28] A mapping \(T : [0, 1] \times [0, 1] \rightarrow [0, 1] \) is a triangular norm (briefly a t-norm) if T satisfies the following conditions:

(a) T is commutative and associative;

(b) \(T(a, 1) = a\) for all \(a \in [0, 1];\)

(c) \(T(a,b) \le T(c,d)\) whenever \(a \le c\) and \(b \le d.\)

Remark 2.3

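In general, a t-norm need not be continuous. Typical examples of continuous t-norms are the following:

$$\begin{aligned} T_p(a,b) = ab, \qquad T_M(a,b) = \min \{a,b\}, \qquad T_L(a,b) = \max \{a+b-1,0\}, \qquad a,b\in [0,1]. \end{aligned}$$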

Moreover, in view of (b) and (c), for each t-norm T and \(x \in [0, 1]\), we have:

Remark 2.4

(Cf. [28]) If T is a t-norm, \(m \in {\mathbb {N}}_0\) and \(a_i \in [0, 1]\) for \(i \in {\mathbb {N}}_0\), then we write

Since T is commutative and associative, it is easy to show by induction that

Note also that, by (b) and (c), the sequence \((T_{i=m}^{m+n} a_i)_{n\in {\mathbb {N}}}\) is non-increasing for every \(m \in {\mathbb {N}}\) and therefore always convergent. So, for each \(m \in {\mathbb {N}}\), we may introduce the following notation:

$$\begin{aligned} T_{i=m}^{\infty } a_i := \lim _{n\rightarrow +\infty } T_{i=m}^{m+n} a_i. \end{aligned}$$

A t-norm T can be extended in a unique way to an n-ary operation taking:

To shorten some long formulas, we will write

It is easy to show by induction on k (using the associativity and commutativity of T) that

for every \(k,n,m \in {\mathbb {N}}_0\), \(k\ge 1\), and \(a_{ij}\in [0,1]\) with \(j=1,\ldots ,k\) and \(i=m,\ldots ,m+n\). We will need this property later.
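These notions are easy to experiment with numerically. The Python sketch below (ours, not from the paper) implements the minimum, product and Łukasiewicz t-norms and spot-checks conditions (a)–(c) of Definition 2.2 on a finite grid, which gives evidence, not a proof. It also folds a t-norm over a finite list to obtain \(T_{i=m}^{m+n} a_i\), assuming the left-to-right convention \(T_{i=m}^{m+n+1} a_i = T(T_{i=m}^{m+n} a_i, a_{m+n+1})\). By associativity and commutativity, the bracketing and order do not affect the value. For the illustrative choice \(a_i = 1-2^{-(i+1)}\), the partial folds are non-increasing in n, and their limits under \(T_M\) and \(T_p\) are 1/2 and approximately 0.2888.

```python
import itertools
from functools import reduce


def t_M(a, b):
    """Minimum t-norm T_M(a, b) = min{a, b}."""
    return min(a, b)


def t_p(a, b):
    """Product t-norm T_p(a, b) = ab."""
    return a * b


def t_L(a, b):
    """Lukasiewicz t-norm T_L(a, b) = max{a + b - 1, 0}."""
    return max(a + b - 1.0, 0.0)


def looks_like_t_norm(T, grid, tol=1e-12):
    """Spot-check conditions (a)-(c) of Definition 2.2 on a finite grid in [0, 1]."""
    for a, b, c in itertools.product(grid, repeat=3):
        if abs(T(a, b) - T(b, a)) > tol:                 # (a) commutativity
            return False
        if abs(T(T(a, b), c) - T(a, T(b, c))) > tol:     # (a) associativity
            return False
    if any(abs(T(a, 1.0) - a) > tol for a in grid):      # (b) T(a, 1) = a
        return False
    for a, b, c, d in itertools.product(grid, repeat=4):
        if a <= c and b <= d and T(a, b) > T(c, d) + tol:  # (c) monotonicity
            return False
    return True


def t_fold(T, values):
    """T_{i=m}^{m+n} a_i for values = [a_m, ..., a_{m+n}], folded from the left."""
    return reduce(T, values)


grid = [i / 10 for i in range(11)]
a = [1.0 - 2.0 ** -(i + 1) for i in range(60)]          # illustrative a_i in (0, 1]
partial = [t_fold(t_p, a[: n + 1]) for n in range(len(a))]

print([looks_like_t_norm(T, grid) for T in (t_M, t_p, t_L)])
print(t_fold(t_M, a), partial[-1])   # approximations of T_{i=0}^{infinity} a_i
```

Floating-point rounding is absorbed by the tolerance `tol`, so the checks compare values up to about \(10^{-12}\).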

Definition 2.5

Let Y be a real vector space, \(F : x \mapsto F_x\) a mapping from Y into \({\mathcal {D}}_+\), and T a continuous t-norm. We say that (Y, F, T) is a random normed space (briefly RN-space) if the following conditions are satisfied:

(1) \( F_x = H_0 \) if and only if \(x = 0\) (the null vector);

(2) \( F_{\alpha x }(t) = F_x\left( \frac{t}{| \alpha |}\right) \) for all \( x \in Y, \ t > 0 \) and \( \alpha \not = 0;\)

(3) \( F_{x+y}(t+s) \ge T(F_x(t), F_y(s)) \) for all \( x, y \in Y \) and \( t, s \ge 0.\)

For more information on the RN-spaces, we refer to [41, 45, 65, 87, 89].
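A common way to obtain an RN-space from a normed space, assumed here for illustration, is \(F_x(t) = t/(t+\Vert x\Vert )\) for \(t>0\) and \(F_x(t) = 0\) for \(t \le 0\). The Python sketch below spot-checks conditions (1)–(3) of Definition 2.5 for this choice on \(Y={\mathbb {R}}\), with the minimum t-norm \(T_M\) and the product t-norm \(T_p\). The t-norms are redefined so that the block is self-contained. Passing a finite sample is only a sanity check, not a proof.

```python
import itertools


def t_M(a, b):
    """Minimum t-norm."""
    return min(a, b)


def t_p(a, b):
    """Product t-norm."""
    return a * b


def H(a, t):
    """H_a(t) = 0 for t <= a and 1 for t > a (an element of D_+ for a >= 0)."""
    return 1.0 if t > a else 0.0


def F(x, t):
    """Assumed RN-norm on Y = R: F_x(t) = t / (t + |x|) for t > 0, and 0 for t <= 0."""
    return t / (t + abs(x)) if t > 0 else 0.0


def check_rn_axioms(T, xs, ts, alphas, tol=1e-12):
    """Spot-check axioms (1)-(3) of Definition 2.5 for (R, F, T) on finite samples."""
    # (1) F_x = H_0 iff x = 0: F_0 agrees with H_0, and F_x(t) < 1 = H_0(t) for x != 0, t > 0.
    if any(F(0.0, t) != H(0.0, t) for t in ts):
        return False
    if any(F(x, t) >= 1.0 for x in xs if x != 0 for t in ts if t > 0):
        return False
    # (2) F_{alpha x}(t) = F_x(t / |alpha|) for t > 0 and alpha != 0.
    for x, t, al in itertools.product(xs, ts, alphas):
        if t > 0 and abs(F(al * x, t) - F(x, t / abs(al))) > tol:
            return False
    # (3) F_{x+y}(t+s) >= T(F_x(t), F_y(s)) for t, s >= 0.
    for x, y, t, s in itertools.product(xs, xs, ts, ts):
        if F(x + y, t + s) < T(F(x, t), F(y, s)) - tol:
            return False
    return True


xs = [-2.0, -0.5, 0.0, 0.3, 1.5]
ts = [0.0, 0.1, 0.5, 1.0, 3.0]
alphas = [-2.0, -0.5, 0.25, 3.0]
print(check_rn_axioms(t_M, xs, ts, alphas), check_rn_axioms(t_p, xs, ts, alphas))
```

Since \(T_p \le T_M\), condition (3) for \(T_M\) implies it for \(T_p\). The sketch checks both anyway.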

Example

Let \((Y, \Vert \ \Vert )\) be a normed space. Then both \((Y, F, T_M)\) and \((Y, F, T_p)\) are random normed spaces, where for every \(x \in Y\)

The same remains true if

Definition 2.6

(Cf., e.g., [41, 65]) Let (Y, F, T) be an RN-space.

(1) A sequence \((x_n)_{n\in {\mathbb {N}}}\) in Y is said to converge (or to be convergent) to \(x \in Y\) (which we denote by \( \lim _{n \rightarrow +\infty } x_n = x\)) if

$$\begin{aligned} \lim _{n \rightarrow +\infty } F_{x_n - x}(t) = 1,\quad t>0, \end{aligned}$$

i.e., for each \(\epsilon > 0\) and each \(t > 0\), there exists an \( N_{\epsilon , t} \in {\mathbb {N}}\) such that \(F_{x_n - x}(t) > 1 - \epsilon \) for all \(n \ge N_{\epsilon , t}\).

(2) A sequence \((x_n)_{n\in {\mathbb {N}}}\) in Y is said to be an M-Cauchy sequence if

$$\begin{aligned} \lim _{n,m \rightarrow +\infty } F_{x_n - x_m}(t)= 1,\quad t > 0, \end{aligned}$$

i.e., for each \( \epsilon > 0\) and each \(t > 0\), there exists \(N_{\epsilon , t} \in {\mathbb {N}}\) such that \(F_{x_n - x_m}(t) > 1 - \epsilon \) for all \(N_{\epsilon ,t} \le n < m\).

(3) A sequence \((x_n)_{n\in {\mathbb {N}}}\) in Y is said to be a G-Cauchy sequence if

$$\begin{aligned} \lim _{n \rightarrow +\infty } F_{x_n - x_{n+k}}(t)= 1,\quad t > 0,\ k\in {\mathbb {N}}, \end{aligned}$$

i.e., for every \(\epsilon > 0\), \(k\in {\mathbb {N}}\) and \(t > 0\), there exists an \(N_{\epsilon , t,k} \in {\mathbb {N}}\) such that \(F_{x_{n} - x_{n+k}}(t) > 1 - \epsilon \) for all \(n \ge N_{\epsilon ,t,k}\).

(4) (Y, F, T) is said to be G-complete (M-complete, respectively) if every G-Cauchy (M-Cauchy, respectively) sequence in Y is convergent to some point in Y.

Remark 2.7

Since every M-Cauchy sequence is also G-Cauchy, it is easily seen that each G-complete RN-space is M-complete.
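The converse of Remark 2.7 can fail. Consider \(Y={\mathbb {R}}\) with the RN-norm \(F_x(t) = t/(t+|x|)\) for \(t>0\), which is assumed here as the usual choice induced by the absolute value, and take \(T=T_M\). Then \(F_{y}(t) > 1-\epsilon \) exactly when \(|y| < t\epsilon /(1-\epsilon )\). So convergent and M-Cauchy sequences in this RN-space coincide with the usual ones, and the space is M-complete.

The partial sums \(x_n = \sum _{i=1}^{n} 1/i\) satisfy \(|x_n - x_{n+k}| \le k/(n+1)\), so they form a G-Cauchy sequence. However, \(x_{2n}-x_n \rightarrow \ln 2\), so they are not M-Cauchy and do not converge. Hence \(({\mathbb {R}}, F, T_M)\) is not G-complete. The Python sketch below evaluates both quantities.

```python
import math


def F(x, t):
    """Assumed RN-norm on Y = R: F_x(t) = t / (t + |x|) for t > 0, and 0 otherwise."""
    return t / (t + abs(x)) if t > 0 else 0.0


def harmonic(n):
    """x_n = 1 + 1/2 + ... + 1/n, the partial sums of the harmonic series."""
    return math.fsum(1.0 / i for i in range(1, n + 1))


def g_gap(n, k, t):
    """F_{x_n - x_{n+k}}(t); since |x_n - x_{n+k}| <= k/(n+1), this tends to 1 as n grows."""
    return F(harmonic(n) - harmonic(n + k), t)


def m_gap(n, t):
    """F_{x_n - x_{2n}}(t); since x_{2n} - x_n -> ln 2, this tends to t/(t + ln 2) < 1."""
    return F(harmonic(n) - harmonic(2 * n), t)


for n in (10, 1_000, 100_000):
    print(n, g_gap(n, 3, 0.01), m_gap(n, 1.0))
```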

3 A general fixed point theorem in RN-spaces

Our first main result is a very general RN-space version of a fixed point theorem in [20]; actually, we follow the approach from [22] (see also [24]). We provide some applications of it in the next sections.

In what follows, X is a non-empty set, (Y, F, T) is an RN-space, \({\mathbb {N}}_0:={\mathbb {N}}\cup \{0\}\) and \({\mathbb {R}}_+ := [0, +\infty )\) (the set of non-negative real numbers). If U and V are nonempty sets, then as usual \(U^V\) denotes the family of all mappings from V to U. If \(F \in U^U\), then \(F^n\) stands for the n-th iterate of F, i.e., \(F^0(x)= x\) and \(F^{n+1}(x)= F(F^n(x))\) for \(x \in U\) and \(n \in {\mathbb {N}}_0\). The space \(Y^X\) is endowed with the coordinatewise operations, so that it is a linear space.

To simplify some expressions, for given \(\phi \in {\mathcal {D}}_+^X\) and \(x\in X\), we write \(\phi _x\) to mean \(\phi (x)\), i.e.,

$$\begin{aligned} \phi _x(t) := \phi (x)(t), \quad t\in {\mathbb {R}}. \end{aligned}$$

For every \(\varphi , \psi \in {\mathcal {D}}_+\) the inequality \(\varphi \le \psi \) means that \(\varphi (t) \le \psi (t)\) for each \(t > 0\). We use this abbreviation to simplify formulas whenever the variable t is not necessary to express them precisely.

Definition 3.1

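Let \(\Lambda : {\mathcal {D}}_+^X \rightarrow {\mathcal {D}}_+^X\) and \(J : Y^X \rightarrow Y^X\) be given. We say that the operator J is \(\Lambda \)-contractive if, for every \(\xi , \eta \in Y^X\) and every \(\phi \in {\mathcal {D}}_+^X\),

$$\begin{aligned} F_{(\xi -\eta )(x)} \ge \phi _x \ \text {for all } x\in X \quad \Longrightarrow \quad F_{(J\xi -J\eta )(x)} \ge (\Lambda \phi )_x \ \text {for all } x\in X. \end{aligned}$$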

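Convergence in \({\mathcal {D}}_+\) will mean pointwise convergence. Accordingly, we say that a sequence \((\psi _n)_{n\in {\mathbb {N}}}\) in \({\mathcal {D}}_+\) converges to some \(\psi \in {\mathcal {D}}_+\) if

$$\begin{aligned} \lim _{n\rightarrow +\infty } \psi _n(t) = \psi (t), \quad t>0. \end{aligned}$$

Hence, the convergence of \((\psi _n)_{n\in {\mathbb {N}}}\) to \(H_0\) means that

$$\begin{aligned} \lim _{n\rightarrow +\infty } \psi _n(t) = 1, \quad t>0. \end{aligned}$$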

We also need the following hypothesis on \(\Lambda : {\mathcal {D}}_+^X \rightarrow {\mathcal {D}}_+^X\).

\(({\mathcal {C}}_0)\) If \((g_n)_{n\in {\mathbb {N}}}\) is a sequence in \(Y^X\) such that the sequence \(\big (F_{g_n(x)}\big )_{n\in {\mathbb {N}}}\) converges to \(H_0\) for every \(x\in X\), then the sequence \(\big ((\Lambda F_{g_n})_x\big )_{n\in {\mathbb {N}}}\) converges to \(H_0\) for every \(x\in X\), where \(F_{g_n} \in {\mathcal {D}}_+^X\) is given by \(F_{g_n}(x) := F_{g_n(x)}\) for \(x \in X\).

Remark 3.2

Let \(\chi _0\in {\mathcal {D}}_+^X\) be given by: \(\chi _0(x)=H_0\) for \(x\in X\). Then \(({\mathcal {C}}_0)\) actually means the continuity of \(\Lambda \) at the point \(\chi _0\) (with respect to the pointwise convergence topologies in \({\mathcal {D}}_+^X\) and \({\mathcal {D}}_+\)) and the property: \(\Lambda \chi _0=\chi _0\).

Let \(\nu \in {\mathbb {N}}\), \(\xi _1,\ldots ,\xi _{\nu }:X\rightarrow X\), and \(L_1,\ldots ,L_{\nu }:X\rightarrow (0,\infty )\) be fixed. A natural example of an operator \(\Lambda \) fulfilling hypothesis \(({\mathcal {C}}_0)\) can be defined by

We refer to Remark 3.10 for further comments on this situation.

In what follows, \(\Omega \) stands for the family of all real sequences \((\omega _n)_{n \in {\mathbb {N}}_0}\) with \(\omega _n \in (0,1)\) for each \(n\in {\mathbb {N}}_0\) and

Let us first state the following lemma, which will be used in the sequel.

Lemma 3.3

Let \(\Lambda : {\mathcal {D}}_+^X \rightarrow {\mathcal {D}}_+^X\) and \(\epsilon : X \rightarrow {\mathcal {D}}_+\) be arbitrary. Then, for every \(x \in X,\) \(k \in {\mathbb {N}}_0,\) \(\omega \in \Omega ,\) and \(t > 0,\) the limits

exist in \({\mathbb {R}}\) and

Proof

Fix \(k \in {\mathbb {N}}_0\), \(x \in X\) and \(t > 0\) and write

Since \((\Lambda ^i \epsilon )_x\in {\mathcal {D}}_+\), it is a non-decreasing function for each \(i \in {\mathbb {N}}\). Hence,

Consequently,

whence the sequence \((\tau _m(x,t,k))_{m \in {\mathbb {N}}}\) is non-increasing and, therefore, for every \(k \in {\mathbb {N}}_0\), \(x \in X\) and \(t > 0\), the following limit exists

Next, fix \(\omega \in \Omega \), \(k \in {\mathbb {N}}_0\), \(x \in X\) and \( t >0\), and write

Then,

This means that the sequence \((\rho _m(x,t,k))_{m \in {\mathbb {N}}}\) is non-increasing. Therefore, the following limit exists:

\(\square \)

Remark 3.4

Fix \(x \in X\) and \(k \in {\mathbb {N}}_0\). If \(T=T_M\) in Lemma 3.3, then

If \(T=T_p\), then (3.4) implies that

Analogous equalities are valid for \(\,^{\omega }\!\sigma _x^k\) with any \(\omega \in \Omega \).

In the sequel, given \(\Lambda : {\mathcal {D}}_+^X \rightarrow {\mathcal {D}}_+^X\) and \(\epsilon : X \rightarrow {\mathcal {D}}_+\), we write

for every \(x \in X\), \(k \in {\mathbb {N}}_0\) and \(t > 0\), where \(\sigma _x^k(t)\) and \(^{\omega }\!\sigma _x^k(t)\) are defined by (3.2) and (3.3).

Theorem 3.5

Let \(\Lambda : {\mathcal {D}}_+^X \rightarrow {\mathcal {D}}_+^X,\) \(\epsilon : X \rightarrow {\mathcal {D}}_+,\) \(J : Y^X \rightarrow Y^X\) and \(f : X \rightarrow Y\) be given. Assume that \(\Lambda \) satisfies hypothesis \(({\mathcal {C}}_0),\) J is \(\Lambda \)-contractive,

$$\begin{aligned} F_{Jf(x)-f(x)} \ge \epsilon _x, \quad x\in X, \end{aligned}\tag{3.8}$$

and one of the following three conditions holds.

(i) (Y, F, T) is M-complete and

$$\begin{aligned} \lim _{k \rightarrow +\infty } \;\inf _{j\in {\mathbb {N}}_0}T_{i=k}^{k+j} (\Lambda ^{i} \epsilon )_x \left( \frac{t}{j+1}\right) =1, \quad x \in X,\ t>0. \end{aligned}\tag{3.9}$$

(ii) (Y, F, T) is M-complete and, for each \(k\in {\mathbb {N}}\), there is a sequence \((\omega ^k_n)_{n\in {\mathbb {N}}_0}\in \Omega \) with

$$\begin{aligned} \lim _{k \rightarrow +\infty } \;\inf _{j\in {\mathbb {N}}_0}T_{i=k}^{k+j} (\Lambda ^{i} \epsilon )_x \big (\omega ^k_{i-k}t\big ) =1, \quad x \in X,\ t>0. \end{aligned}\tag{3.10}$$

(iii) (Y, F, T) is G-complete and \(\lim _{n \rightarrow +\infty }(\Lambda ^{n} \epsilon )_x = H_0\) for \(x\in X,\) i.e.,

$$\begin{aligned} \lim _{n \rightarrow +\infty } \,(\Lambda ^{n} \epsilon )_x (t) =1, \quad x \in X,\ t>0. \end{aligned}\tag{3.11}$$

Then, for every \(x \in X,\) the limit

exists in Y and \(\psi \in Y^X\) thus defined is a fixed point of J with

Moreover, in case (i) or (ii) holds, \(\psi \) is the unique fixed point of J such that there exists \(\alpha \in (0,1)\) with

Proof

First we show by induction that, for every \(n \in {\mathbb {N}}_0\),

The case \(n = 0\) is just (3.8). So, fix \(n \in {\mathbb {N}}_0\) satisfying (3.15). Then, using the \(\Lambda \)-contractivity of J and the inductive assumption, we obtain

Thus, we have proved that (3.15) holds for every \(n \in {\mathbb {N}}_0\). Consequently, for every \(n \in {\mathbb {N}}_0\), \(m \in {\mathbb {N}}\), \(x \in X\) and \(t > 0\) we have

and analogously, as \(\omega _{m-1}t< \sum _{i=m-1}^{\infty }\omega _{i}t\) for every \((\omega _n)_{n\in {\mathbb {N}}_0}\in \Omega \),

Now, we show that the limit (3.12) exists in Y for every \(x\in X\). First consider the case of (i). Then, by (3.16), for all \(k, m\in {\mathbb {N}}\), \(n \in {\mathbb {N}}_0\), \(x\in X\) and \(t>0\),

Consequently, by (c),

Hence, (3.9), (b) and the continuity of T at (1, 1) yield

If (ii) is valid, then (3.17) implies that, for all \(k, m\in {\mathbb {N}}\), \(n \in {\mathbb {N}}_0\), \(x\in X\) and \(t>0\),

and consequently, by (c),

Hence, (3.10), (b) and the continuity of T at (1, 1) yield

Thus we have proved that, for every \(x \in X\), \((J^n f(x))_{n \in {\mathbb {N}}}\) is an M-Cauchy sequence and, as (Y, F, T) is M-complete, the limit (3.12) exists.

In the case of (iii), in view of (3.16),

whence (3.11) and the continuity of T at (1, 1) imply that

Thus, for every \(x \in X\), \((J^n f(x))_{n \in {\mathbb {N}}}\) is a G-Cauchy sequence. As (Y, F, T) is G-complete, the limit (3.12) exists.

Now, we prove (3.13). Note that, in view of Lemma 3.3, \({\widehat{\sigma }}_x^k( t)\) is well defined by (3.7) for every \(k\in {\mathbb {N}}_0\), \(x \in X\) and \(t>0\).

Fix \(t>0\), \(x\in X\), \(\alpha \in (0,1)\) and \(n \in {\mathbb {N}}_0\). First, we show that

To this end, observe that (3.16) implies

for every \(m \in {\mathbb {N}}\). Hence, by (3.12) and the continuity of T at the point \(\big (1,\sigma _x^n(\alpha t)\big )\), by letting \(m\rightarrow +\infty \), we obtain (3.18).

Next, we show that

So, fix \(\omega \in \Omega \) and note that (3.17) implies

for every \(m \in {\mathbb {N}}\). Hence, by (3.12) and the continuity of T at the point \(\big (1,\,^{\omega }\sigma ^n_x(\alpha t)\big )\), by letting \(m\rightarrow +\infty \), we obtain

Clearly, (3.22) implies (3.20), which with (3.18) yields (3.13).

Furthermore, by the \(\Lambda \)-contractivity of J,

Since (3.12) means that

by \(({\mathcal {C}}_0)\) we have

Whence, on account of (3.23),

and consequently

Thus, we have shown that \(\psi \) is a fixed point of J.

It remains to prove the statements on the uniqueness of \(\psi \). So, assume that (i) or (ii) holds and \(\psi _1, \psi _2 \in Y^X\) are two fixed points of J such that

with some \(\alpha _1, \alpha _2\in (0,1)\). Then, for all \(x \in X\), \(t > 0\) and \(k \in {\mathbb {N}}_0\), we get

Note also that, in view of (3.4) and (3.5), each of the conditions (3.9) and (3.10) implies

Hence, by letting \(k\rightarrow \infty \) in (3.24), by the continuity of T at the point (1, 1), we finally obtain that

which means that \(\psi _1 = \psi _2\). \(\square \)
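The proof builds \(\psi \) as the pointwise limit of the iterates \(J^n f\). As an illustration with hypothetical data (not taken from the paper), let \(X=Y={\mathbb {R}}\), \(F_y(t)=t/(t+|y|)\) for \(t>0\), \((J\xi )(x) = \xi (2x)/2\) and \(f(x) = 3x + 0.1\sin x\).

By condition (2) of Definition 2.5, \(F_{(J\xi -J\eta )(x)}(t) = F_{(\xi -\eta )(2x)}(2t)\). Hence J is \(\Lambda \)-contractive with \((\Lambda \phi )_x(t) = \phi _{2x}(2t)\). Moreover, \(|Jf(x)-f(x)|\le 0.15\) gives \(F_{Jf(x)-f(x)} \ge \epsilon _x\) with \(\epsilon _x(t) = t/(t+0.15)\), and then \((\Lambda ^n\epsilon )_x(t) = 2^nt/(2^nt+0.15) \rightarrow 1\).

The iterates \((J^nf)(x) = 3x + 0.1\sin (2^nx)/2^n\) converge to the fixed point \(\psi (x) = 3x\). In this special case, this reproduces the sequence used in Hyers’ direct method.

```python
import math


def f(x):
    """Hypothetical approximate solution: f(x) = 3x + 0.1 sin x."""
    return 3.0 * x + 0.1 * math.sin(x)


def J(xi):
    """Hypothetical operator (J xi)(x) = xi(2x) / 2 acting on functions R -> R."""
    def J_xi(x):
        return xi(2.0 * x) / 2.0
    return J_xi


def iterate_J(xi, n):
    """Return J^n xi as a Python function (n nested applications of J)."""
    for _ in range(n):
        xi = J(xi)
    return xi


def psi(x, n=60):
    """Approximate psi(x) = lim_n (J^n f)(x) by the n-th iterate."""
    return iterate_J(f, n)(x)


# Sup of |Jf(x) - f(x)| on a sample grid; the bound used above is 0.15.
sample = [k / 10 for k in range(-100, 101)]
print(max(abs(J(f)(x) - f(x)) for x in sample))
for n in (0, 5, 20, 60):
    print(n, iterate_J(f, n)(1.7) - 3.0 * 1.7)   # equals 0.1 sin(2^n * 1.7) / 2^n
print(psi(1.7), J(psi)(1.7))                      # fixed point: J psi = psi
```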

Remark 3.6

If, for a given \(k\in {\mathbb {N}}_0\) and \(x\in X\), the function \({\widehat{\sigma }}_x^k\) is left continuous (which is not necessarily the case, because this depends on the forms of \(\epsilon \) and T), then it is easily seen that (3.13) can be replaced by

Otherwise, for every fixed \(x\in X\) and \(k\in {\mathbb {N}}_0\), the inequality in (3.13) can of course be replaced by

with any fixed \(\alpha _{x,k}\in (0,1)\).

Remark 3.7

The assumptions (i) and (ii) in Theorem 3.5 look nearly the same and (i) is a bit simpler than (ii). However, as we will see below, in some situations (3.10) (with some sequence \((\omega ^k_n)_{n\in {\mathbb {N}}_0}\in \Omega \)) and (3.11) are fulfilled, while (3.9) is not.

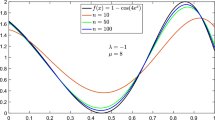

Namely, let \(T=T_M\) and \(\Lambda \) have the following simple form:

with some \(a,b\in (0,\infty )\) (cf. the proof of Corollary 6.4). Then,

Further, assume that X is a normed space, \(p\in [0,\infty )\), \(v\in {\mathbb {R}}_+\) and

Write \(e_0:=ba^{-p}\). Clearly, for every \(n\in {\mathbb {N}}\), \(x\in X\) and \(t>0\),

and therefore

Assume that \(e_0> 1\). Then, by (3.27),

which means that (3.11) holds. Further, for every \(k\in {\mathbb {N}}_0\), (3.28) yields

whence

Consequently, for every \(x \in X\) and \(t > 0\),

and

Hence, (3.9) is not valid and \(\sigma _x^k\) makes no contribution to the estimate (3.13).

On the other hand, for every \(x\in X\), \(t>0\) and \(\omega =(\omega _n)_{n\in {\mathbb {N}}_0}\in \Omega \), we have

So, for \({\widehat{\omega }}=({\widehat{\omega }}_n)_{n\in {\mathbb {N}}_0}\in \Omega \), with

we have

Therefore, for every \(x\in X\) and \(t > 0\),

whence

This means that (3.10) holds with \(\omega ^k={\widehat{\omega }}\) for \(k\in {\mathbb {N}}_0\) and

For the situation where (3.10) is valid with sequences \(\omega ^k\in \Omega \) that are not the same for all \(k\in {\mathbb {N}}\), we refer to Remark 5.3.
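The mechanism in Remark 3.7 can be reproduced numerically. The Python sketch below uses hypothetical data of our own choosing, not the displays of the remark: \(X={\mathbb {R}}\), \(T=T_M\), \((\Lambda \delta )_x(t) = \delta _{ax}(bt)\) and \(\epsilon _x(t) = t/(t+v|x|^p)\). For these choices, \((\Lambda ^n\epsilon )_x(t) = e_0^nt/(e_0^nt+v|x|^p)\) with \(e_0=ba^{-p}\).

With \(e_0>1\), the quantity in (3.9), truncated at a finite j, collapses toward 0. The quantity in (3.10) uses the geometric weights \({\widehat{\omega }}_n = (1-e_0^{-1})e_0^{-n}\), which lie in (0, 1) and sum to 1. It does not depend on j and tends to 1 as k grows.

```python
from functools import reduce


def t_M(a, b):
    """Minimum t-norm T_M."""
    return min(a, b)


# Hypothetical data, chosen only to illustrate Remark 3.7 (not the paper's displays):
#   X = R, (Lambda delta)_x(t) = delta_{a x}(b t), eps_x(t) = t / (t + v |x|**p).
# Induction gives (Lambda^n eps)_x(t) = e0**n t / (e0**n t + v |x|**p), e0 = b a**(-p).
A, B, P, V = 1.0, 2.0, 1.0, 1.0
E0 = B * A ** (-P)  # here e0 = 2 > 1


def lam_eps(n, x, t):
    """(Lambda^n eps)_x(t) for t > 0, written as 1 / (1 + c e0**(-n) / t) to avoid overflow."""
    c = V * abs(x) ** P
    return 1.0 / (1.0 + c * E0 ** (-n) / t)


def omega_hat(n):
    """Geometric weights (1 - 1/e0) e0**(-n): each lies in (0, 1) and they sum to 1."""
    return (1.0 - 1.0 / E0) * E0 ** (-n)


def q39(k, x, t, j_max):
    """Truncation (j <= j_max) of inf_j T_M_{i=k}^{k+j} (Lambda^i eps)_x(t / (j + 1))."""
    return min(
        reduce(t_M, (lam_eps(i, x, t / (j + 1)) for i in range(k, k + j + 1)))
        for j in range(j_max + 1)
    )


def q310(k, x, t, j_max):
    """Truncation of inf_j T_M_{i=k}^{k+j} (Lambda^i eps)_x(omega_hat(i - k) t)."""
    return min(
        reduce(t_M, (lam_eps(i, x, omega_hat(i - k) * t) for i in range(k, k + j + 1)))
        for j in range(j_max + 1)
    )


x, t = 1.0, 1.0
print("(3.11):", [round(lam_eps(n, x, t), 6) for n in (0, 10, 40)])
print("(3.9) :", [round(q39(5, x, t, jm), 4) for jm in (10, 100, 500)])
print("(3.10):", [round(q310(k, x, t, 200), 6) for k in (5, 10, 20)])
```

In this example, every term of the (3.10) fold equals \((\Lambda ^k\epsilon )_x\big ((1-e_0^{-1})t\big )\). This is why the infimum over j is harmless there, while the equal splitting \(t/(j+1)\) in (3.9) is not.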

Remark 3.8

Note that in the proof of Theorem 3.5, we have only used the continuity of T at the points of the form \((1,\xi )\) for \(\xi \in (0,1]\). Actually, even that assumption can be weakened. Namely, it is enough to assume that T is continuous only at the point (1, 1), but then we have to modify the inequality in (3.13), basing it only on (3.19) and (3.21), without taking the limits.

Remark 3.9

Observe that the properties of the t-norm yield

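whence (3.9) implies (3.11). However, since every G-complete RN-space is M-complete (see Remark 2.7), but not necessarily conversely, assumption (iii) is not weaker than (i). It replaces (3.9) by the weaker condition (3.11), but at the price of the stronger requirement that (Y, F, T) be G-complete.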

Remark 3.10

Let \(\nu \in {\mathbb {N}}\), \(\xi _1,\ldots ,\xi _{\nu }:X\rightarrow X\), and \(L_1,\ldots ,L_{\nu }:X\rightarrow (0,\infty )\) be fixed. If the operator J has the form

with a function \(H:X\times Y^{\nu }\rightarrow Y\) satisfying the following Lipschitz-type condition:

for all \(x\in X\) and \(y_1,\ldots ,y_{\nu },z_1,\ldots ,z_{\nu }\in Y\), then such J is \(\Lambda \)-contractive with \(\Lambda \) defined by (3.1) and such \(\Lambda \) fulfills hypothesis \(({\mathcal {C}}_0)\) (see Remark 3.2).

Clearly, (3.31) holds if H has the following simple form:

with a fixed function \(h\in Y^X\). Then, (3.30) becomes

In particular, such J satisfies the following Lipschitz-type condition:

If we want to admit functions \(L_i\) taking values in \({\mathbb {R}}\) (i.e., in particular, taking the value zero), then we can rewrite that condition in the following form:

Note that if, in such a situation, \(L_i(x)\ne 0\) for some \(i\in \{1,\ldots ,\nu \}\) and some \(x\in X\), then

but if \(L_i(x)=0\), then

In view of Remark 3.10, for operators \(J : Y^X \rightarrow Y^X\) fulfilling condition (3.34), we have the following particular case of Theorem 3.5. It contains a stronger statement on the uniqueness of the fixed point, stronger because (3.14) is then required only for \(k=0\).

Theorem 3.11

Let \({\nu } \in {\mathbb {N}},\) \(\epsilon \in {\mathcal {D}}_+^X,\) \(\xi _1,\ldots , \xi _{\nu } \in X^X,\) \(L_1, \ldots , L_{\nu } : X \rightarrow (0, \infty ),\) \(\Lambda : {\mathcal {D}}_+^X \rightarrow {\mathcal {D}}_+^X\) be defined by (3.1), \(J : Y^X \rightarrow Y^X\) satisfy condition (3.34), and \(f : X \rightarrow Y\) fulfil (3.8). Assume that one of the conditions (i)–(iii) of Theorem 3.5 holds. Then, for every \(x \in X,\) the limit (3.12) exists and the function \(\psi \in Y^X,\) defined in this way, is a fixed point of J satisfying (3.14).

Moreover, if (i) or (ii) holds, then \(\psi \) is the unique fixed point of J such that there is \(\alpha \in (0,1)\) with

Proof

First, fix \(\xi , \eta \in Y^X\) and \(\phi \in {\mathcal {D}}_+^X\) with

Then, by (3.34),

Hence, J is \(\Lambda \)-contractive. Moreover, as we have noticed in Remark 3.10, \(\Lambda \) satisfies hypothesis \(({\mathcal {C}}_0)\). Hence, by Theorem 3.5, the limit (3.12) exists for every \(x \in X\) and the function \(\psi \) so defined is a fixed point of J satisfying (3.14).

It remains to show the statement on uniqueness of \(\psi \). So, let \(\tau \in Y^X\) be a fixed point of J such that, for some \(\alpha \in (0, 1)\),

Fix \(x \in X\), \(\omega =(\omega _n)_{n \in {\mathbb {N}}_0} \in \Omega \) and \(t > 0\). We show that, for every \(n \in {\mathbb {N}}_0\), we have

This is the case for \(n = 0\), because by the continuity of T, for every \(x \in X\) and \(t > 0\), we have

Analogously,

Since T is continuous, we finally get

Now assume that (3.38) is valid for some \(n \in {\mathbb {N}}_0\). Then, by (2.2) and the continuity of T, for every \(x \in X\) and \( t > 0\),

Next, assume that (3.39) is valid for some \(n \in {\mathbb {N}}_0\). Then in the same way, by (2.2) and the continuity of T, for every \(x \in X\), \( t > 0\), and \(\omega \in \Omega \),

Thus, we have proved (3.38) and (3.39) for every \(n \in {\mathbb {N}}_0\), \(x\in X\), \(\omega \in \Omega \), and \(t>0\). Whence

Now, if (3.9) holds, then by letting \(n \rightarrow +\infty \) in (3.40), by the continuity of \({\widehat{T}}\), we get \(F_{(\tau - \psi )(x)}(t)=1\) for every \(x\in X\) and \(t>0\), which means that \(\tau = \psi \). Similarly, if (3.10) holds, then we argue analogously by letting \(n \rightarrow +\infty \) in (3.41). \(\square \)

As for the uniqueness of the fixed points of J in Theorem 3.5, we also have the following proposition.

Proposition 3.12

Let \(\Lambda : {\mathcal {D}}_+^X \rightarrow {\mathcal {D}}_+^X,\) \(J : Y^X \rightarrow Y^X\) be \(\Lambda \)-contractive, \(k \in {\mathbb {N}}_0\) and \(\sigma \in \{\sigma ^k\} \cup \{\,^{\omega }\!\sigma ^k : \omega \in \Omega \}\) satisfy

where \(\sigma ^k,\,^{\omega }\!\sigma ^k \in {\mathcal {D}}^X_+\) are given by \(\sigma ^k(x)=\sigma _x^k\) and \(\,^{\omega }\!\sigma ^k(x)=\,^{\omega }\!\sigma _x^k\) for \(x \in X\).

Then, for every \(f : X \rightarrow Y,\) J has at most one fixed point \(\psi _0\) with

Proof

Fix \(f : X \rightarrow Y\) and assume that \(\psi _1, \psi _2 \in Y^X\) are fixed points of J satisfying

Then, by the \(\Lambda \)-contractivity of J,

and consequently,

for every \(m \in {\mathbb {N}}_0\), \(x \in X\) and \(t > 0\). Hence, by letting m tend to \(\infty \), by (3.42) and the continuity of T at the point (1, 1), \(F_{(\psi _1 - \psi _2)(x)} = H_0\) for \(x \in X\). Consequently, \(\psi _1 = \psi _2\). \(\square \)

If X has only one element, then \(Y^X\) can actually be identified with Y and Theorem 3.5 becomes an analog of the classical Banach Contraction Principle (somewhat generalized), given in Corollary 3.14 below. To present it, we need the following hypothesis, concerning mappings \(\lambda : {\mathcal {D}}_+ \rightarrow {\mathcal {D}}_+\), which is a special case of hypothesis \(({\mathcal {C}}_0)\).

(C) The sequence \(\big (\lambda (F_{z_n})\big )_{n \in {\mathbb {N}}}\) converges pointwise to \(H_0\) for each sequence \((z_n)_{n \in {\mathbb {N}}}\) in Y that converges to 0.

To avoid any ambiguity, let us give one more definition, which is a special case of Definition 3.1.

Definition 3.13

Let \(\lambda : {\mathcal {D}}_+ \rightarrow {\mathcal {D}}_+\) be given. We say that a mapping \(h : Y \rightarrow Y\) is \(\lambda \)-contractive provided

$$\begin{aligned} F_{h(z)-h(w)} \ge \lambda (\phi ) \end{aligned}$$

for every \(z,w \in Y\) and \(\phi \in {\mathcal {D}}_+\) with \(F_{z-w} \ge \phi \).

Corollary 3.14

Let \(\lambda : {\mathcal {D}}_+ \rightarrow {\mathcal {D}}_+\) satisfy hypothesis (C) and \(h : Y \rightarrow Y\) be \(\lambda \)-contractive. Let \(\epsilon \in {\mathcal {D}}_+\) be such that

and assume that one of the following three conditions holds.

\((\alpha )\) (Y, F, T) is M-complete and

$$\begin{aligned} \lim _{k \rightarrow +\infty }\; \inf _{j \in {\mathbb {N}}_0}\, T_{i=k}^{k+j} (\lambda ^i \epsilon ) \Bigg (\frac{t}{j+1} \Bigg ) =1,\quad t > 0. \end{aligned}$$

\((\beta )\) (Y, F, T) is M-complete and, for each \(k \in {\mathbb {N}},\) there is a sequence \((\omega ^k_n)_{n \in {\mathbb {N}}_0} \in \Omega \) with

$$\begin{aligned} \lim _{k \rightarrow +\infty }\; \inf _{j \in {\mathbb {N}}_0}\, T_{i=k}^{k+j} (\lambda ^i \epsilon ) \big ( \omega ^k_{i-k} t \big ) =1,\quad t > 0. \end{aligned}$$

\((\gamma )\) (Y, F, T) is G-complete and

$$\begin{aligned} \lim _{n \rightarrow +\infty } \,(\lambda ^n \epsilon ) =H_0. \end{aligned}\tag{3.44}$$

Then, for every \(\omega = (\omega _n)_{n \in {\mathbb {N}}_0} \in \Omega \) and \( t > 0,\) the limits

exist (in Y and \({\mathbb {R}},\) respectively) and \(z_0\) is a fixed point of h such that

where

Moreover, in case \((\alpha )\) or \((\beta )\) holds, \(z_0\) is the unique fixed point of h for which there exists \(\alpha \in (0,1)\) with

Remark 3.15

Let \(g : {\mathbb {R}} \rightarrow {\mathbb {R}}\) and \(G : [0,1] \rightarrow [0,1]\) be non-decreasing, left continuous and such that \(g(0) = G(0) = 0\), \(G(1) = 1\),

Let \(\lambda : {\mathcal {D}}_+ \rightarrow {\mathcal {D}}_+\) have the form

Then,

which means that (3.44) holds for every \(\epsilon \in {\mathcal {D}}_+\).

A very simple example of such \(\lambda \) is obtained when G is the identity map of [0, 1] (i.e., \(G(t) \equiv t\)) and

with a fixed \(a > 1\). Clearly, then \((\lambda \xi )(t) = \xi (at)\) for \(\xi \in {\mathcal {D}}_+\) and \(t > 0\) and, in this case, the \(\lambda \)-contractive mappings are known as B-contractions or Sehgal contractions (see [67, 88]).

If g is the identity map on \({\mathbb {R}}\) and

with some \(\kappa \in (0,1)\), then \(\lambda \)-contractive mappings are fuzzy contractive (see [43, 67, 83]).

If both (3.45) and (3.46) hold, then \(\lambda \)-contractive mappings are called strict B-contractions (see [67, 85]).
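As a concrete instance (our own, for illustration), take \(Y={\mathbb {R}}\) with the assumed RN-norm \(F_x(t) = t/(t+|x|)\) for \(t>0\), and a map h with \(|h(z)-h(w)| = L|z-w|\), \(L<1\). Then \(F_{h(z)-h(w)}(t) = F_{z-w}(t/L)\). So h is \(\lambda \)-contractive with \((\lambda \xi )(t) = \xi (at)\), \(a=1/L>1\), i.e., it is a Sehgal contraction in the above sense.

Since any \(\phi \le F_{z-w}\) satisfies \(\phi (at) \le F_{z-w}(at)\), it suffices to check the case \(\phi = F_{z-w}\). The Python sketch below does this on sample points for the hypothetical map \(h(z) = z/2+1\). It then evaluates \((\lambda ^n\epsilon )(t) = \epsilon (a^nt)\) for \(\epsilon = F_{h(z_0)-z_0}\) and iterates h, whose iterates approach the fixed point 2.

```python
def F(x, t):
    """Assumed RN-norm on Y = R: F_x(t) = t / (t + |x|) for t > 0, and 0 otherwise."""
    return t / (t + abs(x)) if t > 0 else 0.0


L = 0.5        # hypothetical Lipschitz constant of h, L < 1
A = 1.0 / L    # (lambda xi)(t) = xi(A t) with A = 1/L > 1


def h(z):
    """Hypothetical affine contraction of R; its unique fixed point is 2."""
    return L * z + 1.0


def is_lambda_contractive(zs, ts, tol=1e-12):
    """Check F_{h(z)-h(w)}(t) >= F_{z-w}(A t) on samples.

    This is the case phi = F_{z-w} of Definition 3.13; any phi <= F_{z-w}
    satisfies phi(A t) <= F_{z-w}(A t), so this case implies the others.
    """
    return all(
        F(h(z) - h(w), t) >= F(z - w, A * t) - tol
        for z in zs for w in zs for t in ts
    )


def iterate(z, n):
    """Return h^n(z)."""
    for _ in range(n):
        z = h(z)
    return z


z0 = 100.0


def eps(t):
    """epsilon = F_{h(z0) - z0}, so that F_{h(z0) - z0} >= epsilon holds trivially."""
    return F(h(z0) - z0, t)


print(is_lambda_contractive([-3.0, 0.0, 0.7, 5.0], [0.1, 1.0, 10.0]))
print([round(eps(A ** n), 6) for n in (0, 10, 30)])   # (lambda^n eps)(1) = eps(A^n)
print(iterate(z0, 60))
```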

4 Approximate eigenvalues

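In this section, we show an application of Theorem 3.5 to the investigation of approximate eigenvalues and eigenvectors; it corresponds to the results in [36, 47].

It is well known that \(Y^X\) is a real linear space with the operations defined pointwise in the usual way:

$$\begin{aligned} (\xi +\eta )(x) := \xi (x)+\eta (x), \quad (\alpha \xi )(x) := \alpha \xi (x), \quad \xi ,\eta \in Y^X,\ \alpha \in {\mathbb {R}},\ x\in X. \end{aligned}$$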

The next corollary is an example of a result concerning approximate eigenvalues of some linear operators on \(Y^X\). Actually, the assumption of linearity of the operators is not necessary in the proof, but the notion of eigenvalue might be ambiguous without it (see, e.g., [86]), and therefore we confine ourselves to the linear case.

Corollary 4.1

Let \(\gamma \in {\mathbb {R}}{\setminus }\{0\},\) \(\Lambda _0:{\mathcal {D}}_+^{X}\rightarrow {\mathcal {D}}_+^{X}\) satisfy \(({\mathcal {C}}_0),\) and \(J_0 : Y^X \rightarrow Y^X\) be linear and \(\Lambda _0\)-contractive. Assume \(h \in Y^X\) and \(\varepsilon \in {\mathcal {D}}_+^{X}\) satisfy the condition

If one of the conditions (i), (ii) and (iii) of Theorem 3.5 is valid with

then \(\gamma \) is an eigenvalue of \(J_0,\) the limits

exist for every \(x \in X,\) \(\omega =(\omega _n)_{n\in {\mathbb {N}}_0}\in \Omega \) and \(t>0,\) and the function \(\psi _0 \in Y^X,\) given by

is an eigenvector of \(J_0,\) with the eigenvalue \(\gamma ,\) such that

Proof

Let \(\varphi := \gamma h\) and \(J : Y^X \rightarrow Y^X\) be given by:

Then, in view of the \(\Lambda _0\)-contractivity and linearity of \(J_0\), for every \(\mu ,\xi \in Y^X\) and \(\delta \in {\mathcal {D}}_+^X\) with \(F_{(\mu -\xi )(x)}\ge \delta _x\), we have

whence

which means that J is \(\Lambda \)-contractive. Next, we can write (4.1) in the form:

Hence, by Theorem 3.5 and Lemma 3.3, the limits (4.3), (4.4) and (4.5) exist for every \(x\in X\), \(\omega = (\omega _n)_{n \in {\mathbb {N}}_0} \in \Omega \) and \(t > 0\). Moreover, the function \(\psi : X \rightarrow Y\), defined by (3.12), is a fixed point of J with

Write \(\psi _0 := \gamma ^{-1}\psi \). Now, it is easily seen that \(J_0 \psi _0 = J\psi = \psi = \gamma \psi _0\), (4.6) is equivalent to (4.8), and (3.12) yields (4.3). \(\square \)

Clearly, under suitable additional assumptions in Corollary 4.1, we can deduce from Theorem 3.5 some statements on the uniqueness of \(\psi \), and consequently on the uniqueness of \(\psi _0\).

Given \(\varepsilon \in {\mathcal {D}}_+^{X}\), let us introduce the following definition: \(\gamma \in {\mathbb {R}} {\setminus } \{0\}\) is an \(\varepsilon \)-eigenvalue of a linear operator \(J_0 : Y^X \rightarrow Y^X\) provided there exists \(h \in Y^X\) such that \(F_{(J_0 h-\gamma h)(x)} \ge \varepsilon _x\) for \(x\in X\).

It is easily seen that Corollary 4.1 yields the following simple result.

Corollary 4.2

Let \(\Lambda _0:{\mathcal {D}}_+^{X}\rightarrow {\mathcal {D}}_+^{X}\) satisfy \(({\mathcal {C}}_0),\) let \(J_0 : Y^X \rightarrow Y^X\) be linear and \(\Lambda _0\)-contractive, and let \(\varepsilon \in {\mathcal {D}}_+^{X}\). If \(\gamma \in {\mathbb {R}} {\setminus } \{0\}\) is an \(\varepsilon \)-eigenvalue of \(J_0\) and one of the conditions (i)–(iii) of Theorem 3.5 is valid with \(\Lambda \) given by (4.2), then \(\gamma \) is an eigenvalue of \(J_0\).
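The following Python sketch is a purely illustrative, deterministic analogue of Corollaries 4.1 and 4.2; it is not taken from the paper. We assume \(Y={\mathbb {R}}\), viewed as the RN-space induced by the absolute value (\(F_y(t)=t/(t+|y|)\), \(T=T_M\)), the hypothetical linear operator \((J_0f)(x)=f(2x)\) on \({\mathbb {R}}^{\mathbb {R}}\), \(\gamma =2\) and \(h(x)=x+\sin x\). Since \(|(J_0h-\gamma h)(x)|=|\sin 2x-2\sin x|\le 3\), we have \(F_{(J_0h-\gamma h)(x)}(t)\ge t/(t+3)\), so \(\gamma \) is an \(\varepsilon \)-eigenvalue of \(J_0\) with \(\varepsilon _x(t)=t/(t+3)\). Following the proof of Corollary 4.1, we iterate \(J=\gamma ^{-1}J_0\) starting from \(\varphi =\gamma h\) and rescale by \(\gamma ^{-1}\); here this gives \(\psi _0(x)=\lim _n h(2^nx)/2^n=x\), an exact eigenvector for \(\gamma =2\). The sketch only demonstrates the iteration and does not verify conditions (i)–(iii) of Theorem 3.5.

```python
import math

GAMMA = 2.0  # hypothetical approximate eigenvalue gamma


def J0(f):
    """Hypothetical linear operator on real functions: (J0 f)(x) = f(2x)."""
    return lambda x: f(2.0 * x)


def J(f):
    """J := gamma^{-1} J0, so that J(gamma * h) = J0 h, mirroring the proof of Corollary 4.1."""
    return lambda x: J0(f)(x) / GAMMA


def h(x):
    """Approximate eigenvector: |(J0 h)(x) - GAMMA * h(x)| = |sin 2x - 2 sin x| <= 3."""
    return x + math.sin(x)


def phi(x):
    """phi := gamma * h, the starting point of the iteration."""
    return GAMMA * h(x)


def psi0(x, n=40):
    """n-th approximation of psi_0 = gamma^{-1} * lim_k J^k phi, evaluated at x."""
    g = phi
    for _ in range(n):
        g = J(g)  # after k passes, g = J^k phi
    return g(x) / GAMMA


for x in (0.5, 1.0, 3.0):
    # columns: x, psi_0(x), eigen-residual (J0 psi_0 - gamma psi_0)(x), |h(x) - psi_0(x)|
    print(x, psi0(x), J0(psi0)(x) - GAMMA * psi0(x), abs(h(x) - psi0(x)))
```

A telescoping argument gives \(|h(x)-\psi _0(x)|\le 3\sum _{k\ge 0}2^{-k-1}=3\) for this example, while the actual distance is \(|\sin x|\le 1\).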

5 Ulam stability of functional equations in a single variable

In this section, as before, X is a nonempty set and (Y, F, T) is an RN-space.

As we have mentioned in the Introduction, the main issue of Ulam stability can be very briefly expressed in the following way: when must a function satisfying an equation approximately (in some sense) be near an exact solution to the equation?

The next definition (cf. [25, p. 119, Ch. 5, Definition 8]) makes this notion more precise for RN-spaces.

Definition 5.1

Let \({\mathcal {E}}\) and \({\mathcal {C}}\) be nonempty subsets of \({\mathcal {D}}_+^{X}\) with \({\mathcal {E}} \subset {\mathcal {C}}\). Let \({\mathcal {T}}\) be an operator mapping \({\mathcal {C}}\) into \({\mathcal {D}}_+^{X}\), \({\mathcal {G}}\) be an operator mapping a nonempty set \({\mathcal {K}} \subset Y^X\) into \(Y^{X}\), and \(\chi _0 \in Y^X\). We say that the equation

is \(({\mathcal {E}},{\mathcal {T}})\)-stable provided for any \(\varepsilon \in {\mathcal {E}}\) and \(\phi _0\in {\mathcal {K}}\) with

there exists a solution \(\phi \in {\mathcal {K}}\) of (5.1) such that

Roughly speaking, \(({\mathcal {E}},{\mathcal {T}})\)-stability of (5.1) means that every approximate (in the sense of (5.2)) solution \(\phi _0\in {\mathcal {K}}\) of (5.1) is always close (in the sense of (5.3)) to an exact solution \(\phi \in {\mathcal {K}}\) of (5.1).

Now, we present a simple Ulam stability result that can be derived from the previous sections. To this end, we need the following hypothesis.

-

(H1)

\(\nu \in {\mathbb {N}}\), \(H : X \times Y^{\nu } \rightarrow Y\), \(L_1,\ldots ,L_\nu : X \rightarrow (0,\infty )\) and

$$\begin{aligned} F_{H(x,w_1,\ldots ,w_{\nu })-H(x,z_1,\ldots ,z_{\nu })}&\,(t) \ge T_{i=1}^{\nu } F_{w_i-z_i}\Bigg (\frac{t}{\nu L_i(x)}\Bigg ),\nonumber \\ x \in X,&\ (w_1,\ldots ,w_\nu ),\;(z_1,\ldots ,z_\nu ) \in Y^\nu ,\ t > 0. \end{aligned}$$(5.4)
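To see what (5.4) requires in the simplest situation, consider the following Python sketch (an illustration under our own assumptions, not part of the paper): \(Y={\mathbb {R}}\) with the RN-structure \(F_y(t)=t/(t+|y|)\) induced by the absolute value, \(T=T_M=\min \), and a linear \(H(x,w)=\sum _{i=1}^{\nu }{\widetilde{L}}_i(x)w_i\) of the type appearing in the linear equation (5.12). With these choices, (5.4) at a fixed x reduces to \(|\sum _i {\widetilde{L}}_i(x)(w_i-z_i)|\le \nu \max _i L_i(x)|w_i-z_i|\), which holds whenever \(|{\widetilde{L}}_i(x)|\le L_i(x)\). The helper name check_H1 is hypothetical; it tests the inequality on random data.

```python
import random


def F(y, t):
    """Distribution function t/(t + |y|) of the RN-space induced by |.| on R (assumed example)."""
    return t / (t + abs(y))


def check_H1(coeffs, bounds, trials=10_000, seed=0):
    """Randomly test (5.4) at a fixed x for the linear H(x, w) = sum_i coeffs[i] * w[i].

    coeffs[i] plays the role of ~L_i(x), bounds[i] that of L_i(x) > 0; T is T_M = min.
    Returns False as soon as a counterexample is found.
    """
    rng = random.Random(seed)
    nu = len(coeffs)
    for _ in range(trials):
        w = [rng.uniform(-10, 10) for _ in range(nu)]
        z = [rng.uniform(-10, 10) for _ in range(nu)]
        t = rng.uniform(1e-3, 10)
        lhs = F(sum(c * (wi - zi) for c, wi, zi in zip(coeffs, w, z)), t)
        rhs = min(F(wi - zi, t / (nu * L)) for wi, zi, L in zip(w, z, bounds))
        if lhs < rhs - 1e-12:  # small tolerance for floating-point rounding
            return False
    return True


print(check_H1([0.5, -1.5, 2.0], [0.5, 1.5, 2.0]))  # |~L_i| <= L_i: (5.4) holds
print(check_H1([10.0], [1.0]))                       # |~L_1| > L_1: (5.4) fails
```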

The subsequent corollary can be easily deduced from Theorem 3.11.

Corollary 5.2

Let hypothesis (H1) be valid, \(\xi _1,\dots ,\xi _{\nu }\in X^X,\) \(f\in Y^X,\) \(\varepsilon \in {\mathcal {D}}_+^{X}\) and

Assume that one of the conditions (i)–(iii) of Theorem 3.5 is fulfilled with \(\Lambda :{\mathcal {D}}_+^{X}\rightarrow {\mathcal {D}}_+^{X}\) given by

Then, for each \(x \in X,\) \(\omega = (\omega _n)_{n\in {\mathbb {N}}_0}\in \Omega \) and \(t>0,\) the limits

exist (in Y and \({\mathbb {R}},\) respectively), with \(J : Y^X \rightarrow Y^X\) given by

and the mapping \(\psi \in Y^X\), defined by (5.7), fulfills

Moreover, if one of the conditions (i) and (ii) holds, then \(\psi \) is the unique solution of (5.9) such that there is \(\alpha \in (0,1)\) with

Proof

Clearly, inequality (5.5) implies (3.8). Next, hypothesis \(({\mathcal {C}}_0)\) holds (see Remarks 3.2 and 3.10) and (5.4) means that (3.34) is valid. Consequently, by Theorem 3.11 with \({\mathcal {C}}=Y^X\), the function \(\psi \) defined by (5.7) is a fixed point of J (that is, a solution of (5.9)) satisfying (5.10) (take \(k=0\) in (3.14)).

The statement on the uniqueness of \(\psi \) also follows from Theorem 3.11. \(\square \)

The stability of functional equations of the form (5.9) (or related to it) has already been studied by several authors; for further information, we refer to [4, 23, 25]. A very particular case of (5.9), with H given by (3.32), is the linear functional equation of the form:

with fixed functions \(h\in Y^X\) and \({\widetilde{L}}_1, \ldots , {\widetilde{L}}_{\nu } \in {\mathbb {R}}^X\). That equation is called a linear equation of higher order when \(\xi _i = \xi ^i\) for \(i=1,\ldots ,\nu \), with some \(\xi \in X^X\) (where \(\xi ^i\) denotes the i-th iterate of \(\xi \)), i.e., when (5.12) has the form:

Some recent results concerning the stability of less general cases of it can be found in [25, 26, 51, 52, 70, 97].

The simplest case of Eq. (5.13), when \(\nu =1\) and \(0\not \in {\widetilde{L}}_1(X)\), can be rewritten in the form:

which is also called the linear equation. Special cases of (5.14) are the gamma functional equation

for \(X=Y={\mathbb {R}}\), the Schröder functional equation

with fixed \(s\in {\mathbb {R}}{\setminus } \{0\}\), and the Abel functional equation

For more details on Eq. (5.14) and its various particular versions, we refer to [58, 60].

Remark 5.3

Let us consider a situation analogous to that in Remark 3.7, with \(T=T_M\), for the Schröder functional equation (5.15) rewritten as

Clearly, Eq. (5.16) is (5.9) with \(\nu =1\), \(\xi _1=\xi \) and \(H(x,y)=\frac{1}{s}y\) for \(x\in X\) and \(y\in Y\). So we have the case as in Corollary 5.2 with \(\Lambda :{\mathcal {D}}_+^{X}\rightarrow {\mathcal {D}}_+^{X}\) given by

Further, let E be a normed space, \(X:=E{\setminus } \{0\}\), \(p\in {\mathbb {R}}\), \(L\in (0,\infty )\) and

Assume that \(\Vert \xi ^n(x)\Vert ^p\le a_n\Vert x\Vert ^p\) for \(x\in X\) and \(n \in {\mathbb {N}}_0\), with some sequence \((a_n)_{n\in {\mathbb {N}}_0}\) of positive reals such that \(\lim _{n \rightarrow \infty } a_n^{-1} |s|^n = \infty \).

Write \(e_n := a_n^{-1}|s|^n\). Clearly, for every \(n \in {\mathbb {N}}\), \(x \in X\) and \(t > 0\),

whence

which means that (3.11) holds.

Assume, moreover, that

and write

Then,

Now, using (5.17), we get

Consequently, for every \(x\in X\) and \(t>0\),

which means that (3.10) holds with \(\omega ^k:=(\omega _n^k)_{n\in {\mathbb {N}}_0}\in \Omega \). Moreover, for \(\omega :=\omega ^0\) we have

whence

where \(\,^{\omega }\sigma _x^0\) and \({\widehat{\sigma }}_x^0\) have the same meaning as in Corollary 5.2.
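As an illustration of Remark 5.3 (not part of the original argument), the Python sketch below works in the deterministic normed setting with \(E={\mathbb {R}}\), the hypothetical data \(\xi (x)=2x\) and \(s=4\), and the approximate solution \(f(x)=x^2+1\) of the Schröder equation, for which \(f(\xi (x))-sf(x)=-3\) for all x. For this \(\xi \) we have \(\Vert \xi ^n(x)\Vert ^p=2^{np}\Vert x\Vert ^p\), so \(a_n=2^{np}\) and \(e_n=4^n2^{-np}\rightarrow \infty \) whenever \(p<2\). Iterating the operator \((Jg)(x)=g(\xi (x))/s\) associated with (5.16) yields \(\psi (x)=\lim _n s^{-n}f(\xi ^n(x))=x^2\), an exact solution, and a telescoping argument gives \(|f(x)-\psi (x)|\le 3/(|s|-1)=1\).

```python
def schroder_approx(f, xi, s, x, n=30):
    """(J^n f)(x) = s^{-n} f(xi^n(x)) for the operator (J g)(x) = g(xi(x)) / s."""
    y = x
    for _ in range(n):
        y = xi(y)  # after the loop, y = xi^n(x)
    return f(y) / s ** n


def xi(x):
    """Hypothetical self-map xi(x) = 2x."""
    return 2.0 * x


s = 4.0  # hypothetical constant s with |s| > 1


def f(x):
    """Approximate solution: f(xi(x)) - s * f(x) = -3 for every x."""
    return x * x + 1.0


for x in (0.3, 1.5, -2.0):
    psi = schroder_approx(f, xi, s, x)
    # columns: x, psi(x), residual psi(xi(x)) - s psi(x), |f(x) - psi(x)|
    print(x, psi, schroder_approx(f, xi, s, xi(x)) - s * psi, abs(f(x) - psi))
```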

6 Stability of Eq. (1.2)

In this section, we are concerned with the stability of the functional equation (1.2) for \(m > 1\). So we assume that X is a linear space over a field \({\mathbb {K}} \in \{ {\mathbb {R}}, {\mathbb {C}}\}\), \(A_i, a_{ij} \in {\mathbb {K}}\) for \(i = 1, \ldots , m\) and \(j = 1, \ldots , n\), and that \(D : X^n \rightarrow Y\) is a fixed function.

It is easily seen that particular cases of the homogeneous version of (1.2), namely of the equation

are the Cauchy functional equation

the Jensen functional equation

the particular version (with \(c = C = 0\)) of the linear equation in two variables

the Jordan–von Neumann (quadratic) functional equation

the Drygas equation

and the Fréchet functional equation

More information on the Cauchy, Jensen and linear equations can be found in [2, 3, 59]. Equation (6.3) (the parallelogram law) was used by Jordan and von Neumann [50] to characterize inner product spaces, and Eqs. (6.4) and (6.5) were applied for analogous purposes (cf. [8, 38, 55]); we refer to [12, 15, 35, 49, 53, 54, 71, 72, 77–80, 85] for further related information and stability results for those equations.

Let \(\Delta :Y^X \rightarrow Y^X\) denote the Fréchet difference operator given by

Write

and

for functions \(f\in Y^X\). Recursively, we define

It is easily seen that the equations

are particular cases of (6.1). Functions \(f:X\rightarrow Y\) satisfying (6.6) and (6.7) are called polynomial functions of order \(n-1\) and monomial functions of order n, respectively (see, e.g., [42, 49, 59, 61, 93] for information on their solutions and stability).
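Since the displays defining \(\Delta \) and Eqs. (6.6), (6.7) are not reproduced above, the following Python sketch assumes the usual conventions \((\Delta _uf)(x)=f(x+u)-f(x)\) and \(\Delta _{u_1,\ldots ,u_n}=\Delta _{u_1}\circ \cdots \circ \Delta _{u_n}\), reading (6.6) as \(\Delta _{u_1,\ldots ,u_n}f(x)=0\) and (6.7) as \(\Delta _u^nf(x)-n!f(u)=0\). It checks these identities on integer points for a polynomial of degree 2 (order 2, so \(n=3\)) and for the monomial \(x\mapsto x^3\) (order 3).

```python
from math import factorial


def delta(f, u):
    """Assumed convention for the Fréchet difference: (Delta_u f)(x) = f(x + u) - f(x)."""
    return lambda x: f(x + u) - f(x)


def delta_multi(f, us):
    """Delta_{u_1, ..., u_n} f: apply Delta_u once for each u in us (the order is irrelevant)."""
    for u in us:
        f = delta(f, u)
    return f


def poly(x):
    """A polynomial function of order 2 (degree 2)."""
    return 3 * x**2 - x + 7


def cube(x):
    """A monomial function of order 3."""
    return x**3


# (6.6) with n = 3 (assumed form): three differences annihilate a polynomial of order 2.
print(delta_multi(poly, [1, 2, 5])(4))
# (6.7) with n = 3 (assumed form): Delta_y^3 f(x) = 3! f(y).
print(delta_multi(cube, [2, 2, 2])(7), factorial(3) * cube(2))
```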

Let us also mention that (6.5) can be written as

where

and

i.e., \(C^2f\) is the second-order Cauchy difference of f. Recursively,

for \(x_1, \ldots ,x_{n},u,w\in X,\) and \(n\in {\mathbb {N}}\). It is easily seen that the equation

is also of the form (6.1) for every \(n\in {\mathbb {N}}\).
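The Fréchet equation (6.5) is usually written as \(f(x+y+z)+f(x)+f(y)+f(z)=f(x+y)+f(y+z)+f(x+z)\). Assuming the convention \(C^1f(x,y)=f(x+y)-f(x)-f(y)\) and \(C^2f(x,y,z)=C^1f(x+y,z)-C^1f(x,z)-C^1f(y,z)\) (one natural choice; the displays for these differences are not reproduced above), the Python sketch below checks on integer triples that \(C^2f\) coincides with the Fréchet residual, and that it vanishes for the sum of a quadratic and an additive function but not for \(x\mapsto x^3\).

```python
import itertools


def cauchy_diff(f):
    """C^1 f(x, y) = f(x + y) - f(x) - f(y)."""
    return lambda x, y: f(x + y) - f(x) - f(y)


def cauchy_diff2(f):
    """C^2 f(x, y, z) = C^1 f(x + y, z) - C^1 f(x, z) - C^1 f(y, z) (assumed convention)."""
    c1 = cauchy_diff(f)
    return lambda x, y, z: c1(x + y, z) - c1(x, z) - c1(y, z)


def frechet_residual(f, x, y, z):
    """Left side minus right side of the Fréchet equation in its usual form."""
    return f(x + y + z) + f(x) + f(y) + f(z) - f(x + y) - f(y + z) - f(x + z)


def quad_plus_additive(t):
    return 2 * t**2 + 5 * t


def cube(t):
    return t**3


triples = list(itertools.product(range(-3, 4), repeat=3))
print(all(cauchy_diff2(f)(*p) == frechet_residual(f, *p)
          for f in (quad_plus_additive, cube) for p in triples))
print(all(cauchy_diff2(quad_plus_additive)(*p) == 0 for p in triples))
print(cauchy_diff2(cube)(1, 1, 1))
```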

The functional equation

(M, N, m, n being non-zero integers) is another particular case of (6.1). It has been studied in [29,30,31,32]. Equation (6.8) with \(M=m=3\) and \(N=n=2\) was considered for the first time by Popoviciu [81] in connection with some inequalities for convex functions; for results on its solutions and stability, we refer to [92, 94]. Solutions and stability of (6.8) with \(M=m=3\) and \(N=n=2\) have been investigated by Lee [62]. The more general case \(N=n^2\) and \(M=m^2\) of (6.8) has been studied in [63]. For results on a generalization of (6.8), we refer to [95].

Finally, let us recall the equation of p-Wright affine functions (also called the p-Wright functional equation)

where \(p \in {\mathbb {R}}\) is fixed, which is also of the form (6.1). For more information on (6.9) and recent results on its stability, we refer to [11, 18].
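For orientation, (6.9) is commonly written as \(f(px+(1-p)y)+f((1-p)x+py)=f(x)+f(y)\). The Python sketch below, using exact rational arithmetic and the hypothetical choice \(p=3/10\), checks that affine functions satisfy it, while for \(f(t)=t^2\) the residual equals \(-2p(1-p)(x-y)^2\), which is nonzero whenever \(x\ne y\) and \(p\notin \{0,1\}\).

```python
from fractions import Fraction


def p_wright_residual(f, p, x, y):
    """f(p x + (1 - p) y) + f((1 - p) x + p y) - f(x) - f(y)."""
    q = 1 - p
    return f(p * x + q * y) + f(q * x + p * y) - f(x) - f(y)


p = Fraction(3, 10)  # hypothetical choice of p


def affine(t):
    return 3 * t + 1


def square(t):
    return t * t


pts = [Fraction(k, 7) for k in range(-10, 11)]
print(all(p_wright_residual(affine, p, x, y) == 0 for x in pts for y in pts))
# For f(t) = t^2 the residual is -2 p (1 - p) (x - y)^2:
print(p_wright_residual(square, p, Fraction(1), Fraction(0)))
```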

Our main theorem in this section concerns the Ulam-type stability of Eq. (1.2) in RN-spaces. The following two hypotheses are needed to formulate it.

- \(({\mathcal {M}})\):

-

There exist \(\mu \in \{1, \cdots , m-1\}\) and \(c_1, \ldots , c_n\in {\mathbb {K}}\) such that

$$\begin{aligned} A_0 := \Bigg |\sum _{i =\mu +1 }^{m} A_i \Bigg | > 0,\quad \beta _{i} = 1, \qquad i=\mu +1,\ldots ,m, \end{aligned}$$where \(\beta _{i} := \sum _{j=1}^n a_{ij} c_j\) for \(i=1,\ldots ,m\).

- \(({\mathfrak {D}})\):

-

For every \(x_1,\ldots ,x_n\in X,\)

$$\begin{aligned} \sum _{i=1}^mA_i d\Bigg (\sum _{j=1}^n a_{ij} x_j\Bigg ) =\sum _{i=1}^m A_iD\Bigg (\beta _i x_1,\ldots ,\beta _i x_n\Bigg ), \end{aligned}$$(6.10)where \(\beta _i\) is defined as in hypothesis \(({\mathcal {M}})\) and \(d(x)=D(c_1x, \ldots , c_n x)\) for \(x\in X\).

The next two remarks provide some comments on those hypotheses.

Remark 6.1

If \(A_m\ne 0\) and \(\sum _{j=1}^n |a_{mj}| \ne 0\), then there exist \(c_1, \ldots , c_n\in {\mathbb {K}}\) such that \(\sum _{j=1}^n a_{mj} c_j = 1\) (for instance, \(c_k:=1/a_{mk}\) for some k with \(a_{mk}\ne 0\) and \(c_j:=0\) for \(j\ne k\)). Therefore, hypothesis \(({\mathcal {M}})\) is fulfilled with \(\mu = m - 1\). However, because of the form of conditions (i)–(iii) of Theorem 3.5 and of (6.13), for some cases of Eq. (1.2) and some functions \(\theta \) it also makes sense to consider situations with \(\mu < m - 1\).

For instance, for the Cauchy equation (6.2) and its inhomogeneous form

we can consider the following two situations (we refer to Corollary 6.4 and its proof for consequences in both of them).

-

(a1)

If (6.11) is written in the form (1.2) as \(f(x_1+x_2)-f(x_1)-f(x_2)=D(x_1,x_2)\), then \(m = 3\), \(n = 2\), \(A_1 = 1\), \(A_2 = A_3 = -1\), \(a_{11} = a_{12} = 1\), \(a_{21} = 1\), \(a_{22} = 0\), \(a_{31} = 0\), \(a_{32} = 1\). In the matrix form, we can write \(a_{ij}\) as

$$\begin{aligned} (a_{ij})_{1 \le i \le 3,\atop 1 \le j \le 2} = \begin{pmatrix} 1 &{} 1 \\ 1 &{} 0 \\ 0 &{} 1 \\ \end{pmatrix}. \end{aligned}$$Clearly, \(({\mathcal {M}})\) is valid with \(\mu =1\), \(A_0=2\), and \(c_1=c_2=1\).

-

(a2)

If (6.11) is written in the form (1.2) as \(-f(x_1)-f(x_2)+f(x_1+x_2)=D(x_1,x_2)\), then \(m=3\), \(n=2\), \(A_1=A_2=-1\), \(A_3=1\), \(a_{11}=1\), \(a_{12}=0\), \(a_{21}=0\), \(a_{22}=1\), \(a_{31}=a_{32}=1\). In the matrix form, we can write \(a_{ij}\) as

$$\begin{aligned} (a_{ij})_{1 \le i \le 3,\atop 1 \le j \le 2} = \begin{pmatrix} 1 &{} 0 \\ 0 &{} 1 \\ 1 &{} 1 \\ \end{pmatrix}, \end{aligned}$$and \(({\mathcal {M}})\) is valid with \(\mu = 2\), \(A_0 = 1\), and \(c_1 = c_2 = 1/2\).
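Hypothesis \(({\mathcal {M}})\) and the computations in (a1) and (a2) are easy to mechanize. The following Python sketch (the helper names are ours, and exact arithmetic is done with fractions) computes \(\beta _i=\sum _j a_{ij}c_j\) and \(A_0=|\sum _{i>\mu }A_i|\) and tests \(({\mathcal {M}})\). It also implements one admissible choice of c for \(\mu =m-1\) (assuming \(A_m\ne 0\)), namely \(c_k=1/a_{mk}\) for one k with \(a_{mk}\ne 0\) and \(c_j=0\) otherwise.

```python
from fractions import Fraction


def check_M(A, a, mu, c):
    """Return (A_0, betas, holds) for hypothesis (M).

    A = [A_1, ..., A_m], a = rows [a_i1, ..., a_in], mu is 1-based, c = [c_1, ..., c_n].
    """
    m = len(A)
    betas = [sum(aij * cj for aij, cj in zip(row, c)) for row in a]
    A0 = abs(sum(A[mu:]))  # |A_{mu+1} + ... + A_m| (the 0-based slice starts at index mu)
    holds = 1 <= mu <= m - 1 and A0 > 0 and all(b == 1 for b in betas[mu:])
    return A0, betas, holds


def c_for_last_row(a_m):
    """Pick k with a_mk != 0, set c_k = 1/a_mk and c_j = 0 otherwise (so sum_j a_mj c_j = 1)."""
    k = next(j for j, v in enumerate(a_m) if v != 0)
    return [Fraction(1) / a_m[k] if j == k else Fraction(0) for j in range(len(a_m))]


half = Fraction(1, 2)
# Situation (a1): f(x1 + x2) - f(x1) - f(x2)
print(check_M([1, -1, -1], [[1, 1], [1, 0], [0, 1]], mu=1, c=[1, 1]))
# Situation (a2): -f(x1) - f(x2) + f(x1 + x2)
print(check_M([-1, -1, 1], [[1, 0], [0, 1], [1, 1]], mu=2, c=[half, half]))
# The data of (a1) with mu = m - 1 = 2 and c chosen from the last row
print(check_M([1, -1, -1], [[1, 1], [1, 0], [0, 1]], mu=2, c=c_for_last_row([0, 1])))
```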

Remark 6.2

(b0) Clearly, if D is a constant function, then hypothesis \(({\mathfrak {D}})\) is valid (this case includes Eq. (1.1)). Moreover, if functions \(D_1,D_2:X^n\rightarrow Y\) satisfy the hypothesis, then so does the function \(\alpha _1 D_1+ \alpha _2 D_2\) with any fixed scalars \(\alpha _1,\alpha _2\). Below, we provide more examples of nontrivial functions D satisfying the hypothesis.

(b1) Consider the situation (a1) depicted in the previous remark, with \(m = 3\), \(n = 2\), \(A_1 = 1\), \(A_2 = A_3 = -1\), \(a_{11} = a_{12} = 1\), \(a_{21} = 1\), \(a_{22} = 0\), \(a_{31} = 0\), \(a_{32} = 1\), \(\mu =1\), and \(c_1=c_2=1\). Then \(\beta _{1}=2\), \(\beta _{2}=\beta _{3}=1\), \(d(x)=D(x,x)\), and condition (6.10) takes the form

$$\begin{aligned} D(x_1+x_2,x_1+x_2)-D(x_1,x_1)-D(x_2,x_2)=D(2x_1,2x_2)-2D(x_1,x_2),\qquad x_1,x_2\in X. \end{aligned}$$(6.12)

Observe that condition (6.12) holds in each of the following three cases:

-

D is a symmetric biadditive function (i.e., \(D(x_1,x_2)= D(x_2,x_1)\) and \(D(x_1,x_2+x_3)= D(x_1,x_2)+D(x_1,x_3)\) for \(x_1,x_2,x_3\in X\));

-

there exist additive \(h_1,h_2:X\rightarrow Y\) such that \(D(x_1,x_2)= h_1(x_1)+h_2(x_2)\) for \(x_1,x_2\in X\);

-

there exists \(\rho :X\rightarrow Y\) such that \(D(x_1,x_2)= \rho (x_1+x_2)-\rho (x_1)-\rho (x_2)\) for \(x_1,x_2\in X\).
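Writing the condition out as \(D(x_1+x_2,x_1+x_2)-D(x_1,x_1)-D(x_2,x_2)=D(2x_1,2x_2)-2D(x_1,x_2)\) (this is (6.10) with the data of (b1), where \(d(x)=D(x,x)\)), the three cases above, as well as a constant D from (b0), can be spot-checked numerically. The Python sketch below does this for \(X=Y={\mathbb {Z}}\) with hypothetical sample functions; it is a sanity check, not a proof.

```python
import itertools


def satisfies_6_12(D, pts):
    """Check D(x1+x2, x1+x2) - D(x1, x1) - D(x2, x2) == D(2x1, 2x2) - 2 D(x1, x2) on pts x pts."""
    return all(
        D(x1 + x2, x1 + x2) - D(x1, x1) - D(x2, x2) == D(2 * x1, 2 * x2) - 2 * D(x1, x2)
        for x1, x2 in itertools.product(pts, repeat=2)
    )


def rho(t):
    """An arbitrary (hypothetical) function rho: Z -> Z."""
    return t**3 - 4 * t * t + 11


candidates = {
    "constant (b0)": lambda x1, x2: 9,
    "symmetric biadditive": lambda x1, x2: 5 * x1 * x2,
    "h1(x1) + h2(x2), h1, h2 additive": lambda x1, x2: 2 * x1 - 7 * x2,
    "Cauchy difference of rho": lambda x1, x2: rho(x1 + x2) - rho(x1) - rho(x2),
    "x1**2 (none of the above)": lambda x1, x2: x1**2,
}
pts = range(-6, 7)
for name, D in candidates.items():
    print(name, satisfies_6_12(D, pts))
```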

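(b2) In the situation (a2) depicted in the previous remark, with \(m=3\), \(n=2\), \(A_1=A_2=-1\), \(A_3=1\), \(a_{11}=1\), \(a_{12}=0\), \(a_{21}=0\), \(a_{22}=1\), \(a_{31}=a_{32}=1\), \(\mu = 2\), \(c_1 = c_2 = 1/2\), \(\beta _{1}=\beta _{2}=1/2\) and \(\beta _{3}=1\), condition (6.10) takes the form

$$\begin{aligned} D\Big (\frac{x_1+x_2}{2},\frac{x_1+x_2}{2}\Big )-D\Big (\frac{x_1}{2},\frac{x_1}{2}\Big )-D\Big (\frac{x_2}{2},\frac{x_2}{2}\Big )=D(x_1,x_2)-2D\Big (\frac{x_1}{2},\frac{x_2}{2}\Big ),\qquad x_1,x_2\in X, \end{aligned}$$

which is actually (6.12) (it is enough to replace \(x_i\) by \(2x_i\)).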

(b3) More generally, if \(h_1,\ldots ,h_n:X\rightarrow Y\) are solutions to equation (6.1), then the function \(D:X^n\rightarrow Y\), given by

fulfills hypothesis \(({\mathfrak {D}})\). In fact, fix \(x_1, \ldots , x_n\in X\). Then, according to the definition of d,

whence we get (6.10).

Theorem 6.3

Let hypotheses \(({\mathcal {M}})\) and \(({\mathfrak {D}})\) be valid and \(\theta : X^n \rightarrow {\mathcal {D}}_+\) satisfy

where \({\mathcal {T}} : {\mathcal {D}}_+^{X^n} \rightarrow {\mathcal {D}}_+^{X^n}\) is given by

Further, assume that one of the conditions (i)–(iii) of Theorem 3.5 holds with \(\epsilon \in {\mathcal {D}}_+^X\) and \(\Lambda : {\mathcal {D}}_+^X \rightarrow {\mathcal {D}}_+^X\) defined by

If \(f : X \rightarrow Y\) fulfills

then there is a solution \(\psi : X \rightarrow Y\) of Eq. (1.2) such that

with \({\widehat{\sigma }}_x^0\) defined by (3.7) (see also (3.2) and (3.3)).

Moreover, if (i) or (ii) holds, there is exactly one solution \(\psi \in Y^X\) of (1.2) such that there exists \(\alpha \in (0,1)\) with

Proof

Write \(|\alpha |=1/A_0\) and fix \(x \in X\). Putting \(x_j := c_j x\) for \(j \in \{1, \ldots , n\}\) in (6.17), we get

Moreover,

Therefore,

Consequently,

with the operator \(J : Y^X \rightarrow Y^X\) defined by

Note that the assumptions of Theorem 3.11 are satisfied for such J, because, for every \(\xi ,\eta \in Y^X\) and \(x \in X\),

whence

This means that the condition (3.34) is fulfilled with \(\xi _i(x)=\beta _{i} x\) and \(L_i(x)=|A_i|/A_0\). Consequently, by Theorem 3.11, for every \(k\in {\mathbb {N}}_0\), \(x \in X\) and \(t>0\), the limit (3.12) exists and the function \(\psi \in Y^X\) is a fixed point of J fulfilling (6.18).

Moreover, if one of the conditions (i), (ii) holds, then \(\psi \) is the unique fixed point of J such that there is \(\alpha \in (0,1)\) with

Now, we show that \(\psi \) is a solution to (1.2). To this end, observe that

First, we prove by induction that, for each \(k\in {\mathbb {N}}_0\) and \(x_1,\ldots ,x_n \in X\),

The case \(k=0\) is (6.17). So fix \(k\in {\mathbb {N}}_0\) and assume that (6.23) holds. Then, by hypothesis \(({\mathfrak {D}})\), for every \(x_1, \ldots , x_n \in X\),

Hence, by (6.14) and the assumed inequality (6.23),

Thus we have proved that (6.23) holds for each \(k\in {\mathbb {N}}_0\). Now, by letting \(k\rightarrow \infty \) in (6.23), in view of (6.13), for every \(x_1,\ldots ,x_n \in X\), we get

which means that

Consequently, by (6.22),

To complete the proof, observe that every solution of (1.2) is a fixed point of J and therefore the statement on uniqueness follows directly from the uniqueness property of \(\psi \) as a fixed point of J satisfying (6.21). \(\square \)

Using Theorem 6.3, we can obtain various stability results for numerous equations. For instance, for the Cauchy inhomogeneous equation (6.11) we can argue as in the following corollary.

Corollary 6.4

Assume that \(T=T_M,\) Y is M-complete, \(\Vert \ \Vert \) is a norm on X, \(D:X^2\rightarrow Y\) satisfies condition (6.12), \(p,v_1, v_2 \in [0,\infty ),\) \(p\ne 1,\) \(v_1 + v_2 \ne 0,\) and \(f : X \rightarrow Y\) satisfies

Then there exists a unique solution \(\psi : X \rightarrow Y\) to the Cauchy inhomogeneous equation (6.11) such that

where \({\widehat{\sigma }}_x^0(t)\) is defined by (3.7) and

Proof

Equation (6.11) is (1.2) with \(m = 3\) and \(n = 2\). Next, Remark 6.2 shows that condition (6.12) means that D fulfills hypothesis \(({\mathfrak {D}})\). So, we use Theorem 6.3 with

and consider two separate cases: \(p<1\) and \(p>1\).

The first case (\(p<1\)) coincides with the situation (a1) of Remark 6.1, with \(A_1 = - A_2 = - A_3 = 1\) and

when hypothesis \(({\mathcal {M}})\) is valid with \(\mu = 1\) and \(c_1 = c_2 = 1\). Then, \(A_0 = 2\), \(\beta _1 = a_{11} c_1 + a_{12}c_2 = 2\), and consequently (see (6.14)–(6.16))

with \(v:=(v_1 + v_2)/2\).

Arguing as in Remark 3.7 (with \(a = b = 2\) and \(e_0 =ba^{-p}= 2^{1-p} >1\)), we obtain that \(\Lambda \) satisfies condition (3.10) (with some sequence \((\omega ^k_n)_{n\in {\mathbb {N}}_0}\in \Omega \)) and

with

Moreover, by (6.29),

which means that (6.13) is fulfilled. Therefore our statement for \(p<1\) results from Theorem 6.3.

In the case \(p > 1,\) we need situation (a2) of Remark 6.1, with \(-1=A_1 = A_2 = - A_3\) and

when hypothesis \(({\mathcal {M}})\) holds with \(\mu = 2\), \(A_0 = 1\) and \(c_1 = c_2 = 1/2\). The reasoning is analogous to the case \(p<1\), but for the convenience of the reader, we present it in some detail. Namely, now \(\beta _1 =\beta _2 = 1/2\), \(\epsilon _x(t)\) is given by (6.30), but with \(v=2^{-p}(v_1+v_2)\), and for every \(x, x_1, x_2 \in X\), \(t>0\) and \(\delta \in {\mathcal {D}}_+^X\),

According to Remark 3.7 (with \(a = b = 1/2\) and consequently \(e_0 := ba^{-p} = 2^{p-1} > 1\)), condition (3.10) is satisfied and

with

Note yet that, for \(x_1, x_2 \in X\) and \(t > 0\), we have

This means that (6.13) is fulfilled. Therefore, also our statement for \(p>1\) results from Theorem 6.3. \(\square \)
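The operator J from the proof of Theorem 6.3 is not displayed above, but in situation (a1), putting \(x_1=x_2=x\) in (6.11) suggests reading it as \((J\xi )(x)=\big (\xi (2x)-D(x,x)\big )/2\) (our reading, stated as an assumption). The following Python sketch iterates this J with exact rational arithmetic for the hypothetical data \(X=Y={\mathbb {Q}}\), \(D(x_1,x_2)=x_1x_2\) (symmetric and biadditive, so (6.12) holds) and \(f(x)=x^2/2+3x+(x-\lfloor x\rfloor )\), whose residual \(f(x_1+x_2)-f(x_1)-f(x_2)-D(x_1,x_2)\) lies in \(\{0,-1\}\). One checks by induction that \(J^nf(x)=x^2/2+3x+(2^nx-\lfloor 2^nx\rfloor )/2^n\), so the iterates converge to the exact solution \(\psi (x)=x^2/2+3x\). The error here is bounded, so this only illustrates the mechanism behind the case \(p<1\); it does not check the precise hypotheses of Corollary 6.4.

```python
import math
from fractions import Fraction


def D(x1, x2):
    """Hypothetical symmetric biadditive D, so that (6.12) holds."""
    return x1 * x2


def f(x):
    """Approximate solution of (6.11); the term x - floor(x) lies in [0, 1)."""
    return x * x / 2 + 3 * x + (x - math.floor(x))


def J(xi):
    """Assumed operator for situation (a1): (J xi)(x) = (xi(2x) - D(x, x)) / 2."""
    return lambda x: (xi(2 * x) - D(x, x)) / 2


def iterate_J(x, n):
    """Evaluate (J^n f)(x) exactly (Fractions in, Fractions out)."""
    g = f
    for _ in range(n):
        g = J(g)
    return g(x)


def psi(x):
    """The exact solution of (6.11) approached by the iterates (for this D)."""
    return x * x / 2 + 3 * x


for x in (Fraction(7, 3), Fraction(-5, 4), Fraction(1, 10)):
    # the gap (J^30 f)(x) - psi(x) equals frac(2^30 x) / 2^30, so it lies in [0, 2^-30)
    print(x, iterate_J(x, 30) - psi(x))
```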

Remark 6.5

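According to Remark 6.2 ((b0) and (b1)), the function D in Corollary 6.4 can be of the following form:

$$\begin{aligned} D(x_1,x_2)=g(x_1+x_2)-g(x_1)-g(x_2)+u_1(x_1)+u_2(x_2)+u_3(x_1,x_2)+z_0,\qquad x_1,x_2\in X, \end{aligned}$$

with any fixed \(g:X\rightarrow Y\), additive \(u_1,u_2:X\rightarrow Y\), symmetric biadditive \(u_3:X^2\rightarrow Y\), and \(z_0\in Y\).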

References

Abdou, A.A.N., Cho, Y.J., Khan, L., Kim, S.S.: On stability of quintic functional equations in random spaces. J. Comput. Anal. Appl. 23, 624–634 (2017)

Aczél, J.: Lectures on Functional Equations and their Applications. Academic Press, New York (1966)

Aczél, J., Dhombres, J.: Functional Equations in Several Variables. Encyclopedia of Mathematics and Its Applications, vol. 31. Cambridge University Press, Cambridge (1989)

Agarwal, R.P., Xu, B., Zhang, W.: Stability of functional equations in single variable. J. Math. Anal. Appl. 288, 852–869 (2003)

Alqahtani, B., Fulga, A., Karapinar, E.: Fixed point results on \(\Delta \)-symmetric quasi-metric space via simulation function with an application to Ulam stability. Mathematics 6(10), 208 (2018). https://doi.org/10.3390/math6100208

Alshybani, S., Vaezpour, S.M., Saadati, R.: Generalized Hyers-Ulam stability of mixed type additive-quadratic functional equation in random normed spaces. J. Math. Anal. 8, 12–26 (2017)

Alsina, C.: On the stability of a functional equation arising in probabilistic normed spaces. In: General Inequalities 5, pp. 263–271. Birkhäuser, Basel (1987)

Alsina, C., Sikorska, J., Tomás, M.S.: Norm Derivatives and Characterizations of Inner Product Spaces. World Scientific Publishing Co., Singapore (2010)

Alsulami, H., Gülyaz, S., Karapinar, E., Erhan, I.: An Ulam stability result on quasi-b-metric-like spaces. Open Math. (2016). https://doi.org/10.1515/math-2016-0097

Aoki, T.: On the stability of the linear transformation in Banach spaces. J. Math. Soc. Jpn. 2, 64–66 (1950)

Bahyrycz, A., Brzdęk, J., Piszczek, M.: On approximately \(p\)-Wright affine functions in ultrametric spaces. J. Funct. Spaces Appl. (2013). Art. ID 723545

Bahyrycz, A., Brzdęk, J., Piszczek, M., Sikorska, J.: Hyperstability of the Fréchet equation and a characterization of inner product spaces. J. Funct. Spaces Appl. (2013). Art. ID 496361

Bahyrycz, A., Olko, J.: On stability of the general linear equation. Aequ. Math. 89, 1461–1474 (2015)

Bahyrycz, A., Olko, J.: Hyperstability of general linear functional equation. Aequ. Math. 90, 527–540 (2016)

Bahyrycz, A., Piszczek, M.: Hyperstability of the Jensen functional equation. Acta Math. Hung. 142, 353–365 (2014)

Benzarouala, C., Oubbi, L.: Ulam-stability of a generalized linear functional equation, a fixed point approach. Aequ. Math. 94, 989–1000 (2020)

Benzarouala, C., Oubbi, L.: A purely fixed point approach to the Ulam-Hyers stability and hyperstability of a general functional equation. In: Brzdęk, J., et al. (eds.) Ulam Type Stability, pp. 47–56. Springer Nature, Switzerland (2019)

Brzdęk, J.: Stability of the equation of the \(p\)-Wright affine functions. Aequ. Math. 85, 497–503 (2013)

Brzdęk, J.: Hyperstability of the Cauchy equation on restricted domains. Acta Math. Hung. 141, 58–67 (2013)

Brzdęk, J., Chudziak, J., Páles, Z.: A fixed point approach to stability of functional equations. Nonlinear Anal. Theory Methods Appl. 74, 6728–6732 (2011)

Brzdęk, J., Cădariu, L., Ciepliński, K.: Fixed point theory and the Ulam stability. J. Funct. Spaces (2014). Art. ID 829419

Brzdęk, J., Ciepliński, K.: A fixed point approach to the stability of functional equations in non-Archimedean metric spaces. Nonlinear Anal. Theory Methods Appl. 74, 6861–6867 (2011)

Brzdęk, J., Ciepliński, K., Leśniak, Z.: On Ulam’s type stability of the linear equation and related issues. Discret. Dyn. Nat. Soc. (2014). Art. ID 536791

Brzdęk, J., Karapınar, E., Petruşel, A.: A fixed point theorem and the Ulam stability in generalized dq-metric spaces. J. Math. Anal. Appl. 467, 501–520 (2018)

Brzdęk, J., Popa, D., Raşa, I., Xu, B.: Ulam Stability of Operators. Academic Press, Oxford (2018)

Brzdęk, J., Popa, D., Xu, B.: On approximate solutions of the linear functional equation of higher order. J. Math. Anal. Appl. 373, 680–689 (2011)

Cădariu, L., Radu, V.: Fixed points and the stability of Jensen’s functional equation. J. Inequal. Pure Appl. Math. 4(1) (2003). Art. 4

Cho, Y.J., Rassias, T.M., Saadati, R.: Stability of Functional Equations in Random Normed Spaces. Springer Optimization and Its Applications, vol. 86. Springer, Berlin (2013)

Chudziak, M.: On a generalization of the Popoviciu equation on groups. Ann. Univ. Pedagog. Crac. Stud. Math. 9, 49–53 (2010)

Chudziak, M.: Stability of the Popoviciu type functional equations on groups. Opusc. Math. 31, 317–325 (2011)

Chudziak, M.: On solutions and stability of functional equations connected to the Popoviciu inequality. Ph.D. Thesis (in Polish), Pedagogical University of Cracow (Poland), Cracow (2012)

Chudziak, M.: Popoviciu type functional equations on groups. In: Rassias, Th.M., Brzdęk, J. (eds.) Functional Equations in Mathematical Analysis, pp. 417–426. Springer Optimization and Its Applications, vol. 52. Springer, Berlin (2011)

Dales, H.G., Moslehian, M.S.: Stability of mappings on multi-normed spaces. Glasg. Math. J. 49, 321–332 (2007)

Diaz, J., Margolis, B.: A fixed point theorem of the alternative for contractions on a generalized complete metric space. Bull. Am. Math. Soc. 74, 305–309 (1968)

Dragomir, S.S.: Some characterizations of inner product spaces and applications. Stud. Univ. Babes-Bolyai Math. 34, 50–55 (1989)

Drmač, Z., Veselić, K.: Approximate eigenvectors as preconditioner. Linear Algebra Appl. 309, 191–215 (2000)

Forti, G.L.: An existence and stability theorem for a class of functional equations. Stochastica 4, 23–30 (1980)

Fréchet, M.: Sur la définition axiomatique d’une classe d’espaces vectoriels distanciés applicables vectoriellement sur l’espace de Hilbert. Ann. Math. Second Ser. 36, 705–718 (1935)

Gajda, Z.: On stability of additive mappings. Int. J. Math. Math. Sci. 14, 431–434 (1991)

Gǎvruta, P.: A Generalization of the Hyers-Ulam-Rassias stability of approximately additive mappings. J. Math. Anal. Appl. 184, 431–436 (1994)

George, A., Veeramani, P.: On some results in fuzzy metric spaces. Fuzzy Sets Syst. 64, 395–399 (1994)

Gilányi, A.: Hyers-Ulam stability of monomial functional equations on a general domain. Proc. Natl. Acad. Sci. USA 96, 10588–10590 (1999)

Gregori, V., Sapena, A.: On fixed-point theorems in fuzzy metric spaces. Fuzzy Sets Syst. 125, 245–253 (2002)

Guillen, B.L., Harikrishnan, P.: Probabilistic Normed Spaces. Imperial College Press, London (2014)

Hadžić, O., Pap, E.: Fixed Point Theory in Probabilistic Metric Spaces. Kluwer Academic Publishers, Dordrecht (2001)

Hassan, A.M., Karapinar, E., Alsulami, H.H.: Ulam–Hyers stability for MKC mappings via fixed point theory. J. Funct. Spaces (2016). Art. ID 9623597

Hecker, D., Lurie, D.: Using least-squares to find an approximate eigenvector. Electron. J. Linear Algebra 16, 99–110 (2007)

Hyers, D.H.: On the stability of the linear functional equation. Proc. Natl. Acad. Sci. USA 27, 222–224 (1941)

Hyers, D.H., Isac, G., Rassias, T.M.: Stability of Functional Equations in Several Variables. Birkhäuser, Basel (1998)

Jordan, P., von Neumann, J.: On inner products in linear metric spaces. Ann. Math. Second Ser. 36, 719–723 (1935)

Jung, S.M., Rassias, T.M.: A linear functional equation of third order associated to the Fibonacci numbers. Abstr. Appl. Anal. (2014). Art. ID 137468

Jung, S.M., Popa, D., Rassias, T.M.: On the stability of the linear functional equation in a single variable on complete metric groups. J. Glob. Optim. 59, 165–171 (2014)

Jung, S.M.: Hyers–Ulam–Rassias Stability of Functional Equations in Nonlinear Analysis. Springer Optimization and Its Applications, vol. 48. Springer, New York (2011)

Kannappan, P.: Quadratic functional equation and inner product spaces. Results Math. 27, 368–372 (1995)

Kannappan, P.: Functional Equations and Inequalities with Applications. Springer, Berlin (2009)

Karapinar, E., Fulga, A.: An admissible hybrid contraction with an Ulam type stability. Demonstr. Math. 52, 428–436 (2019)

Kim, S.S., Rassias, J.M., Hussain, N., Cho, Y.J.: Generalized Hyers-Ulam stability of general cubic functional equation in random normed spaces. Filomat 30, 89–98 (2016)

Kuczma, M.: Functional Equations in a Single Variable. PWN-Polish Scientific Publishers, Warsaw (1968)

Kuczma, M.: An Introduction to the Theory of Functional Equations and Inequalities. Cauchy’s Equation and Jensen’s Inequality. Birkhäuser, Basel (2009)

Kuczma, M., Choczewski, B., Ger, R.: Iterative Functional Equations. Encyclopedia of Mathematics and Its Applications, vol. 32. Cambridge University Press, Cambridge (1990)

Lee, Y.H.: On the Hyers-Ulam-Rassias stability of the generalized polynomial function of degree 2. J. Chungcheong Math. Soc. 22, 201–209 (2009)

Lee, Y.W.: On the stability on a quadratic Jensen type functional equation. J. Math. Anal. Appl. 270, 590–601 (2002)

Lee, Y.W.: Stability of a generalized quadratic functional equation with Jensen type. Bull. Korean Math. Soc. 42, 57–73 (2005)

Li, C.-Y., Karapinar, E., Chen, C.-M.: A discussion on random Meir-Keeler contractions. Mathematics 8, 245 (2020). https://doi.org/10.3390/math8020245

Mecheraoui, R., Mukheimer, A., Radenović, S.: From G-Completeness to M-Completeness. Symmetry 11 (2019). Art. ID 839

Menger, K.: Statistical metrics. Proc. Natl. Acad. Sci. USA 28, 535–537 (1942)

Miheţ, D.: A Banach contraction theorem in fuzzy metric spaces. Fuzzy Sets Syst. 144, 431–439 (2004)

Miheţ, D., Radu, V.: On the stability of the additive Cauchy functional equation in random normed spaces. J. Math. Anal. Appl. 343, 567–572 (2008)

Mirzavaziri, M., Moslehian, M.S.: A fixed point approach to stability of a quadratic equation. Bull. Braz. Math. Soc. 37(3), 361–376 (2006)

Mortici, C., Rassias, M.T., Jung, S.M.: On the stability of a functional equation associated with the Fibonacci numbers. Abstr. Appl. Anal. (2014). Art. ID 546046

Moslehian, M.S., Rassias, J.M.: A characterization of inner product spaces concerning an Euler-Lagrange identity. Commun. Math. Anal. 8, 16–21 (2010)

Nikodem, K., Páles, Z.: Characterizations of inner product spaces by strongly convex functions. Banach J. Math. Anal. 5, 83–87 (2011)

Oubbi, L.: Ulam-Hyers-Rassias stability problem for several kinds of mappings. Afr. Mat. 24, 525–542 (2013)

Oubbi, L.: Hyers-Ulam stability of a functional equation with several parameters. Afr. Mat. 27, 1199–1212 (2016)

Phochai, T., Saejung, S.: Hyperstability of generalised linear functional equations in several variables. Bull. Austral. Math. Soc. 102, 293–302 (2020)

Pinelas, S., Govindan, V., Tamilvanan, K.: Stability of cubic functional equation in random normed space. J. Adv. Math. 14, 7864–7877 (2018)

Piszczek, M.: Remark on hyperstability of the general linear equation. Aequ. Math. 88, 163–168 (2014)

Piszczek, M.: Hyperstability of the general linear functional equation. Bull. Korean Math. Soc. 52, 1827–1838 (2015)

Piszczek, M., Szczawińska, J.: Hyperstability of the Drygas functional equation. J. Funct. Spaces Appl. (2013). Art. ID 912718

Piszczek, M., Szczawińska, J.: Stability of the Drygas functional equation on restricted domain. Results Math. 68, 11–24 (2015)

Popoviciu, T.: Sur certaines inégalités qui caractérisent les fonctions convexes. An. Ştiinţ. Univ. “Al. I. Cuza” Iaşi Secţ. I a Mat. (N.S.) 11, 155–164 (1965)

Radu, V.: The fixed point alternative and the stability of functional equations. Fixed Point Theory 4, 91–96 (2003)

Radu, V.: Some remarks on the probabilistic contractions on fuzzy Menger spaces. In: The Eighth International Conference on Applied Mathematics and Computer Science, Cluj-Napoca, 2002. Automation, Computers, Applied Mathematics, vol. 11, no. 1, pp. 125–131 (2002)

Rassias, T.M.: On the stability of the linear mapping in Banach spaces. Proc. Am. Math. Soc. 72, 297–300 (1978)

Rassias, T.M.: New characterizations of inner product spaces. Bull. Sci. Math. (2) 108, 95–99 (1984)

Santucci, P., Väth, M.: On the definition of eigenvalues for nonlinear operators. Nonlinear Anal. 40, 565–576 (2000)

Schweizer, B., Sklar, A.: Probabilistic Metric Spaces. North-Holland, Amsterdam (1983)

Sehgal, V.M., Bharucha-Reid, A.T.: Fixed points of contraction mappings on PM-spaces. Math. Syst. Theory 6, 97–100 (1972)

Šerstnev, A.N.: Random normed spaces: problems of completeness. Kazan Gos. Univ. Učhen Zap. 122, 3–20 (1962)

Šerstnev, A.N.: On the notion of a random normed space. Dokl. Akad. Nauk SSSR 149(2), 280–283 (1963) (in Russian)

Šerstnev, A.N.: Best approximation problem in random normed spaces. Dokl. Akad. Nauk SSSR 149(3), 539–542 (1963)

Smajdor, W.: Note on a Jensen type functional equation. Publ. Math. Debr. 63, 703–714 (2003)

Székelyhidi, L.: Convolution Type Functional Equations on Topological Abelian Groups. World Scientific, Singapore (1991)

Trif, T.: Hyers-Ulam-Rassias stability of a Jensen type functional equation. J. Math. Anal. Appl. 250, 579–588 (2000)

Trif, T.: On the stability of a functional equation deriving from an inequality of Popoviciu for convex functions. J. Math. Anal. Appl. 272, 604–616 (2002)

Ulam, S.M.: Problems in Modern Mathematics. Chapter VI. Science Editions. Wiley, New York (1960)

Xu, B., Brzdęk, J., Zhang, W.: Fixed point results and the Hyers-Ulam stability of linear equations of higher orders. Pac. J. Math. 273, 483–498 (2015)

Zhang, D.: On hyperstability of generalised linear functional equations in several variables. Bull. Aust. Math. Soc. 92, 259–267 (2015)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Benzarouala, C., Brzdęk, J. & Oubbi, L. A fixed point theorem and Ulam stability of a general linear functional equation in random normed spaces. J. Fixed Point Theory Appl. 25, 33 (2023). https://doi.org/10.1007/s11784-022-01034-8

DOI: https://doi.org/10.1007/s11784-022-01034-8

Keywords

- Fixed point

- function space

- general linear functional equation

- random normed space

- Ulam stability

- approximate eigenvalue

- approximate eigenvector