Abstract

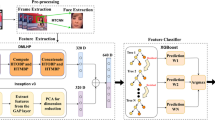

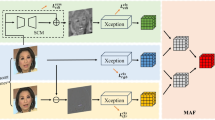

In recent years, the malicious use of video face forgery technology has become a serious threat, not only to personal property security but also to the broader stability of states and societies. Although numerous models and methods have been proposed for video face forgery detection, they fall short in recognizing subtle forgery traces in local regions, and their detection performance often degrades on specific forgery strategies. To address these problems, we propose a multiple feature fusion network (MFF-Net) for video face forgery detection. The model employs Res2Net50 to extract deeper texture features from the video. The extracted texture and frequency features are fed into a temporal feature extraction module, which includes a three-layer LSTM network, so that the detection model combines the diverse features of the video and identifies subtle artifacts more effectively. To further enhance the discriminative ability of the model, we introduce a texture activation module (TAM) in the texture feature extraction stage. It enhances the saliency of subtle forgery traces, thereby improving detection on specific forgery strategies. To verify the effectiveness of the proposed method, we conduct experiments on several public datasets, including FaceForensics++ and DFD. The experimental results show that MFF-Net recognizes subtle forgery traces more effectively, especially for particular forgery strategies, and achieves high detection accuracy.
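The fusion step described in the abstract can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation. The per-frame texture and frequency vectors stand in for the outputs of the Res2Net50/TAM branch and the frequency branch, and the following are all assumptions made for illustration: fusing by concatenation, the hidden size, the random untrained weights, the sigmoid scoring head, and the names `LSTMLayer` and `fuse_and_score`.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class LSTMLayer:
    """One LSTM layer with random, untrained weights (illustration only)."""

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        cols = input_size + hidden_size
        # One weight matrix and bias per gate:
        # i = input, f = forget, g = cell candidate, o = output.
        self.w = {name: [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                         for _ in range(hidden_size)] for name in "ifgo"}
        self.b = {name: [0.0] * hidden_size for name in "ifgo"}

    def _gate(self, name, xh, act):
        return [act(sum(w * v for w, v in zip(row, xh)) + b)
                for row, b in zip(self.w[name], self.b[name])]

    def forward(self, sequence):
        """Return the hidden state produced at every time step."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        outputs = []
        for x in sequence:
            xh = list(x) + h  # current input joined with previous hidden state
            i = self._gate("i", xh, sigmoid)
            f = self._gate("f", xh, sigmoid)
            g = self._gate("g", xh, math.tanh)
            o = self._gate("o", xh, sigmoid)
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
            outputs.append(h)
        return outputs


def fuse_and_score(texture_seq, frequency_seq, hidden_size=8, seed=0):
    """Concatenate per-frame features, run three stacked LSTM layers,
    and map the last hidden state to a score in (0, 1)."""
    if not texture_seq or len(texture_seq) != len(frequency_seq):
        raise ValueError("need one texture and one frequency vector per frame")
    rng = random.Random(seed)
    # Assumed fusion: simple concatenation of the two feature vectors.
    seq = [list(t) + list(f) for t, f in zip(texture_seq, frequency_seq)]
    size = len(seq[0])
    for _ in range(3):  # three-layer LSTM, as stated in the abstract
        seq = LSTMLayer(size, hidden_size, rng).forward(seq)
        size = hidden_size
    # Hypothetical scoring head: linear map plus sigmoid on the final state.
    head = [rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]
    return sigmoid(sum(w * v for w, v in zip(head, seq[-1])))
```

Calling `fuse_and_score(texture_seq, frequency_seq)` with one vector of each kind per frame returns a single value between 0 and 1. Because the weights are random, the value carries no meaning until the layers are trained; the sketch only shows how the two feature streams could pass through a stacked temporal module.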

Data availability

Deepfake-Timit data are available at https://www.idiap.ch/dataset/deepfaketimit; FaceForensics++ data are available at https://github.com/ondyari/FaceForensics; Celeb-DF data are available at https://github.com/yuezunli/celeb-deepfakeforensics; DFD data are available at https://link.zhihu.com/?target=https%3A//github.com/ondyari/FaceForensicsreference.

Funding

This study was funded by the Science and Technology Project in Xi’an (No. 22GXFW0123) and supported by the special fund construction project of key disciplines in ordinary colleges and universities in Shaanxi Province. The authors would like to thank the anonymous reviewers for their helpful comments and suggestions.

Author information

Contributions

Wenyan Hou wrote the main manuscript text. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

We declare that we have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hou, W., Sun, J., Liu, H. et al. Research on video face forgery detection model based on multiple feature fusion network. SIViP (2024). https://doi.org/10.1007/s11760-024-03059-7

DOI: https://doi.org/10.1007/s11760-024-03059-7