Abstract

Compressive sensing (CS) enables reconstructing a sparse signal from fewer samples than those required by the classic Nyquist sampling theorem. In general, CS signal recovery algorithms have high computational complexity. However, several signal processing problems such as signal detection and classification can be tackled directly in the compressive measurement domain, which makes recovering the original signal from its compressive measurements unnecessary in these applications. In this paper, we consider detecting stochastic signals with known probability density function from their compressive measurements. We refer to this as the compressive detection problem to highlight that the detection task can be achieved by directly exploiting the compressive measurements. The Neyman–Pearson (NP) theorem is applied to derive the NP detectors for Gaussian and non-Gaussian signals. Our work is more general than much of the existing literature in two respects: we do not require orthonormality of the measurement matrix, and the compressive detection problem for stochastic signals is generalized from the case of Gaussian signals to the case of non-Gaussian signals. Theoretical performance results of the proposed NP detectors, in terms of their detection probability and false alarm rate averaged over the random measurement matrix, are established and verified via extensive computer simulations.

1 Introduction

Compressive sensing (CS) is an important innovation in the field of signal processing. With CS, if the representation of a signal in a particular linear basis is sparse, we can sample it at a rate significantly smaller than that dictated by the classic Nyquist sampling theorem. To ensure that the obtained compressive measurements preserve sufficient information for signal reconstruction to be feasible, the measurement matrix needs to satisfy the well-known restricted isometry property (RIP). Fortunately, measurement matrices whose elements are independently drawn from a sub-Gaussian distribution meet this requirement with high probability [1–6].

To reconstruct the original signal from its compressive measurements, various algorithms have been proposed in the literature. Roughly speaking, they fall into three categories, namely relaxation-based algorithms, pursuit algorithms, and Bayesian algorithms. Relaxation-based algorithms approximate the non-smooth and non-convex \(l_\mathrm{0}\)-norm employed in the signal reconstruction-oriented optimization problem with functions that are easier to handle. The relaxed problem is then solved via standard numerical techniques. Well-known instances of relaxation-based algorithms include basis pursuit (BP) [7] and FOCUSS (focal underdetermined system solver) [8], which approximate the \(l_\mathrm{0} \)-norm using the \(l_\mathrm{1} \)-norm and the \(l_{p}\)-norm with \(p<\mathrm{1}\), respectively. Pursuit algorithms search for a solution to the sparse signal recovery problem by taking a sequence of greedy decisions on the signal support. Algorithms belonging to this family include, but are not limited to, matching pursuit (MP) [9], orthogonal matching pursuit (OMP) [10], stagewise OMP (StOMP) [11], iterative hard thresholding (IHT) [12], hard thresholding pursuit (HTP) [13], compressive sampling matching pursuit (CoSaMP) [14] and subspace pursuit (SP) [15]. Bayesian algorithms formulate the signal reconstruction problem as a Bayesian inference problem and apply statistical tools to solve it. Typical Bayesian algorithms are Bayesian compressive sensing (BCS) [16] and the Laplace prior-based BCS [17].

Alongside the considerable effort devoted to developing efficient signal reconstruction methods for CS, researchers have also noticed that in many signal processing applications, such as detection, classification and parameter estimation, recovering the original signal is not necessary once its compressive measurements are available. In [18], the technique of compressive signal processing (CSP) was advocated, where the deterministic signal detection problem is addressed in the compressive measurement domain. Duarte et al. [19] proposed another deterministic signal detection algorithm based on OMP. The developed technique can complete the signal detection task using fewer compressive measurements and iterations than those needed by the OMP algorithm to recover the original signal. However, this work did not establish the detection probability and the false alarm rate analytically, and the detection threshold was determined only experimentally.

For stochastic signals, the compressive detection problem (i.e., detecting stochastic signals in the compressive measurement domain) can be formulated as the following binary hypothesis testing problem

where \({\varvec{y}}=\left[ {y_\mathrm{1} ,y_2 ,\ldots ,y_M } \right] ^{T}\) is the compressive measurement vector; \({\varvec{\Phi }} \) is the \(M \times N\) measurement matrix (\(M < N\)) whose elements are assumed to be drawn independently from a Gaussian distribution, and \({\varvec{\Phi }} \) should satisfy the restricted isometry property (RIP) [18]; \({\varvec{\theta }}\) is the original stochastic signal with known probability density function (PDF); and \({{\varvec{n}}}\) represents the additive noise.
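For reference, the test in (1) can be sketched as follows. This form is reconstructed from the definitions above and from the densities used in Sect. 2, where the noise is an \(N\times 1\) vector added to the signal before projection; it is an assumption about the original display rather than a verbatim copy of it.

\[
\begin{aligned}
\hbox {H}_0 &: {\varvec{y}}={\varvec{\Phi }} {{\varvec{n}}}, \\
\hbox {H}_1 &: {\varvec{y}}={\varvec{\Phi }} \left( {{\varvec{\theta }}+{{\varvec{n}}}} \right) .
\end{aligned}
\]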

The above stochastic signal detection problem has been considered in [20], where the authors proposed to design the projection matrices by maximizing the mutual information between projected signals and signal class labels. Sparse event detection in sensor networks under a CS framework was considered in [21]. The problem of detecting spectral targets using noisy incoherent projections was investigated in [22, 23]. By employing a linear subspace model for sparse signals, Wimalajeewa et al. [24] solved the stochastic detection problem with a reduced number of measurements for a given performance. Several detection algorithms, dependent on the availability of information about the subspace model and the sparse signal, have also been developed. Specifically, Wang et al. [25] and Rao et al. [26] considered the compressive detection problem, assuming that the row vectors of the measurement matrix are orthonormal. Wang et al. [25] derived the theoretical performance limits for detecting an arbitrary random signal from compressive measurements. Rao et al. [26] studied the problem of detecting sparse random signals using compressive measurements and also discussed the problem of detecting stochastic signals with known PDF. Recently, in [27], the authors applied a sparse random projection matrix in place of dense projection matrices and developed Neyman–Pearson (NP) detectors for stochastic signals. The constraint on the orthonormality of the measurement matrix was eliminated. However, the case of correlated signal detection was not considered in [27].

The contributions of this paper are as follows. First, we tackle the compressive detection problem with the elements of the measurement matrix drawn independently from a Gaussian PDF using the NP theorem. Therefore, the context of this work is both CS and random projection. As in [27], we do not assume that the row vectors of the measurement matrix are orthonormal to one another (i.e., the constraint \({\varvec{\Phi }} {\varvec{\Phi }} ^{T}=\mathbf{I}_M \) is not required but \(E( {{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} )=\mathbf{I}_M \) is assumed when obtaining the average performance of the proposed NP detectors). In fact, the assumption that the measurement matrix consists of orthonormal rows is not appropriate in some cases. This work considers detecting Gaussian signals with independent samples and with correlated samples. The average performance of the proposed NP detectors under Gaussian random measurement matrices is found. Second, the compressive detection problem for stochastic signals is generalized from the case of Gaussian signals to the case of non-Gaussian signals by invoking the central limit theorem. In this case, the performance of the proposed NP detectors is established by asymptotic analysis. In other words, we consider in this paper a generalized compressive detection problem. In applications, the measurement matrix may not be orthonormal and the distribution of the signal may not be Gaussian. The detectors we develop in this paper apply to these cases. We illustrate the performance of the developed signal detectors using extensive computer experiments.

It is worthwhile to point out that the development of the NP detectors proposed in this paper assumes the availability of the PDF of the stochastic signal of interest, but practical applications of the signal detectors only require the mean and the covariance of the original stochastic signal to be known a priori. As a result, the proposed techniques can be used to detect non-stochastic signals by utilizing their sample mean and sample covariance in place of the true mean and covariance. We will illustrate the detection of non-stochastic signals in the computer experiment section (see Sect. 5).

The rest of the paper is organized as follows. Section 2 presents the NP detector and its performance analysis for Gaussian signals with diagonal covariance (i.e., signals with independent samples). Section 3 extends the results of Sect. 2 to the case where the Gaussian signal has non-diagonal covariance (i.e., signals with correlated samples). Section 4 considers the case of non-Gaussian signals and resorts to asymptotic analysis to establish the detector. Computer experiment results are given in Section 5, and Section 6 is the conclusion.

2 Compressive detection of zero-mean Gaussian signals with diagonal covariance

We consider the binary hypothesis testing problem in (1). Let the noise vector \({{\varvec{n}}}\) be Gaussian distributed with mean \({\varvec{0}}_{N\times 1} \), where \({\varvec{0}}_{N\times 1} \) is an \(N\times 1\) zero vector, and covariance \(\beta ^{-1}\mathbf{I}_N \), where \(\mathbf{I}_N \) is an \(N \times N\) identity matrix. In contrast to existing works such as [25, 26], the measurement matrix \({\varvec{\Phi }} \) in (1) may not satisfy \({\varvec{\Phi }} {\varvec{\Phi }} ^{T}=\mathbf{I}_M \), i.e., its row vectors are not necessarily orthonormal. Assuming that the elements in \({\varvec{\theta }}=\left[ {\theta _\mathrm{1} ,\theta _2 ,\ldots ,\theta _N } \right] ^{T}\) are independent and identically distributed (i.i.d.) zero-mean Gaussian random variables with variance \(\alpha ^{-1}\), we have

\[ {\varvec{\theta }}\sim {N}( {{\varvec{0}}_{N\times 1} ,\alpha ^{-1}}\mathbf{I}_N ). \qquad (2) \]

We assume that the values of \(\alpha \) and \(\beta \) are known. In other words, the PDFs of the signal vector and the noise are completely specified. Let \(P_{F}\) denote the probability of choosing the hypothesis \(\hbox {H}_\mathrm{1} \) when the hypothesis \(\hbox {H}_\mathrm{0} \) is true, i.e., the false alarm rate. Let \(P_{D}\) be the probability of choosing the hypothesis \(\hbox {H}_\mathrm{1} \) when the hypothesis \(\hbox {H}_\mathrm{1} \) is true, i.e., the detection probability. We will approach the detection problem (1) by applying the NP theorem in the compressive measurement domain.

First, we find the PDF of \({\varvec{y}}\) under the hypotheses \(\hbox {H}_\mathrm{0} \) and \(\hbox {H}_\mathrm{1} \). Since \({{\varvec{n}}}\) is Gaussian, we have that under hypothesis \(\hbox {H}_\mathrm{0} \),

where \(|\cdot |\) denotes the matrix determinant. On the other hand, using (2), we can express the distribution of \(\left( {{\varvec{\theta }}+{{\varvec{n}}}} \right) \) as

Then, when the hypothesis \(\hbox {H}_\mathrm{1} \) holds, the PDF of \({\varvec{y}}\) becomes

Let \(r( {\varvec{y}} )\) be the likelihood ratio \(r( {\varvec{y}} )={p_\mathrm{1} ( {\varvec{y}} )}/{p_\mathrm{0} ( {\varvec{y}} )}\). The NP theorem says that we decide the hypothesis \(\hbox {H}_\mathrm{1} \) when \(r( {\varvec{y}} )>\eta \) or we decide the hypothesis \(\hbox {H}_\mathrm{0} \) when \(r( {\varvec{y}} )<\eta \), where \(\eta \) is the detection threshold. \(\eta \) is obtained via solving

where \(\alpha _{F}\) is the user-defined false alarm rate.

Taking the logarithm of \(r( {\varvec{y}} )\), we obtain an equivalent NP test given by

where \(\mathbf{D}=\frac{\beta ^{{2}}}{\alpha +\beta }( {{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} )^{-1}\) and \(\gamma =M\log \frac{\alpha +\beta }{\alpha }+2\log \eta \). We will find in the following development the average performance of the proposed NP detector in (7).
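The step from the likelihood ratio to (7) can be sketched as follows. The sketch assumes, as the definitions of \(\mathbf{D}\) and \(\gamma \) imply, that \({\varvec{y}}\) is zero-mean Gaussian with covariance \(\beta ^{-1}{\varvec{\Phi }} {\varvec{\Phi }} ^{T}\) under \(\hbox {H}_\mathrm{0} \) and \(( {\alpha ^{-1}+\beta ^{-1}} ){\varvec{\Phi }} {\varvec{\Phi }} ^{T}\) under \(\hbox {H}_\mathrm{1} \):

\[
\begin{aligned}
2\log r( {\varvec{y}} ) &= {\varvec{y}}^{T}\left[ {\beta -\frac{\alpha \beta }{\alpha +\beta }} \right] ( {{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} )^{-1}{\varvec{y}}-\log \frac{\left| {( {\alpha ^{-1}+\beta ^{-1}} ){\varvec{\Phi }} {\varvec{\Phi }} ^{T}} \right| }{\left| {\beta ^{-1}{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} \right| } \\
&= {\varvec{y}}^{T}\mathbf{D}{\varvec{y}}-M\log \frac{\alpha +\beta }{\alpha }.
\end{aligned}
\]

Hence \(r( {\varvec{y}} )>\eta \) is equivalent to \({\varvec{y}}^{T}\mathbf{D}{\varvec{y}}>M\log \frac{\alpha +\beta }{\alpha }+2\log \eta =\gamma \), which agrees with the expressions for \(\mathbf{D}\) and \(\gamma \) given above.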

From (7), we can define the following test statistic using the compressive measurements \({\varvec{y}}\) as

\(t\) is a sufficient statistic for detecting independent Gaussian signals. Under \(\hbox {H}_\mathrm{0} \), we have

where \(\mathbf{M}_\mathbf{0} ={\varvec{\Phi }} ^{T}( {{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} )^{-1}{\varvec{\Phi }}\) and it is a projection matrix. It is straightforward to show that (1) \(\mathbf{M}_\mathbf{0} =\mathbf{M}_\mathbf{0}^T \) and (2) \(\mathbf{M}_\mathbf{0}^\mathbf{2} =\mathbf{M}_\mathbf{0} \). As such, \(\mathbf{M}_\mathbf{0} \) is also idempotent and it can be decomposed as

where \(r_{M}\) is the rank of \(\mathbf{M}_\mathbf{0} \); \(\mathbf{I}_{r_M }\) is an \(r_{M} \times r_{M}\) identity matrix; \(\mathbf{0}_{r_M \times (N-r_M )} ,\, \mathbf{0}_{N-r_M } \) and \(\mathbf{0}_{\left( {N-r_M } \right) \times r_M } \) are zero matrices of dimensions \(r_{M} \times (N - r_{M})\), \((N-r_{M}) \times (N - r_{M})\) and \((N-r_{M}) \times r_{M}\), respectively; \(\mathbf{U}_s \) is the matrix composed of eigenvectors corresponding to eigenvalues of one; and \(\mathbf{U}_n \) is a matrix consisting of eigenvectors corresponding to eigenvalues of zero. We can verify using (10) and the definition of \({\varvec{\Phi }} \) that \(r_{M} = M\) and \(\mathbf{M}_\mathbf{0} =\mathbf{U}_s \mathbf{U}_s^T \).

Letting \({\varvec{\omega }}=\mathbf{U}_s^T {{\varvec{n}}}\), we have

the mean of \({\varvec{\omega }}\) is

and the covariance of \({\varvec{\omega }}\) is

Thus, \({\varvec{\omega }}\) has a PDF \({N}( {{\varvec{0}}_{M\times 1} ,\beta ^{-1}}\mathbf{I}_M )\). As a result, \(\beta {{\varvec{n}}}^{T}\mathbf{M}_\mathbf{0} {{\varvec{n}}}=\beta {\varvec{\omega }}^{T}{\varvec{\omega }}\) is the sum of squares of \(M\) i.i.d. standard Gaussian random variables, so that \(\beta {{\varvec{n}}}^{T}\mathbf{M}_\mathbf{0} {{\varvec{n}}}\) has a chi-square distribution with \(M\) degrees of freedom. In other words, under the hypothesis \(\hbox {H}_\mathrm{0} ,\,t(\alpha + \beta )/\beta \) has a chi-square distribution with \(M\) degrees of freedom.

Under the hypothesis \(\hbox {H}_\mathrm{1} \), we have

Following the derivation that leads to the distribution function of \(t \) under the hypothesis \(\hbox {H}_\mathrm{0} \), we can show that \(\frac{\alpha \beta }{\alpha +\beta }\left( {{\varvec{\theta }}+{{\varvec{n}}}} \right) ^{T}\mathbf{M}_\mathbf{0} \left( {{\varvec{\theta }}+{{\varvec{n}}}} \right) \) is also equal to the sum of squares of \(M\) i.i.d. standard Gaussian random variables. As such, \(\frac{\alpha }{\beta }t\) has a chi-square distribution with \(M\) degrees of freedom under \(\hbox {H}_\mathrm{1} \).

In summary, we have

where \(\chi ^{{2}}( M )\) denotes the chi-square distribution with \(M\) degrees of freedom. Combining (7), (8) and (15) yields

where \(\hbox {X}\left( x \right) =\int _x^\infty {\frac{{1}}{{2}^{M/2}\Gamma \left( {M/2} \right) }u^{M/2-{1}}\hbox {exp}\left( {-u/2} \right) \mathrm{d}u} \) is the right-tail probability of the chi-square distribution with \(M\) degrees of freedom (the integrand is its probability density function), and \(\Gamma \left( a \right) =\int _\mathrm{0}^\infty {u^{a-{1}}\hbox {exp}} \left( {-u} \right) \mathrm{d}u\) is the Gamma function.
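Written out, the chi-square scalings of \(t\) stated above (\(t(\alpha +\beta )/\beta \) under \(\hbox {H}_\mathrm{0} \) and \(\alpha t/\beta \) under \(\hbox {H}_\mathrm{1} \)) give the following tail probabilities for the threshold \(\gamma \) in (7). This is a sketch assembled from those statements, not a verbatim copy of the original display:

\[
P_F =\Pr \left( {t>\gamma \mid \hbox {H}_0 } \right) =\hbox {X}\left( {\frac{\alpha +\beta }{\beta }\gamma } \right) ,\qquad P_D =\Pr \left( {t>\gamma \mid \hbox {H}_1 } \right) =\hbox {X}\left( {\frac{\alpha }{\beta }\gamma } \right) .
\]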

When the false alarm rate \(P_{F}\) is set to \(\alpha _{F}\), according to (16), we can obtain the detection threshold using

The detection probability in this case is
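From the chi-square scalings derived above, setting \(P_F =\alpha _F \) gives \(\gamma =\frac{\beta }{\alpha +\beta }\hbox {X}^{-1}( {\alpha _F } )\) and \(P_D =\hbox {X}( {\frac{\alpha }{\alpha +\beta }\hbox {X}^{-1}( {\alpha _F } )} )\). A minimal numerical sketch of this calculation is given below. It is an illustration rather than the authors' code: the function names are hypothetical, it assumes that \(M\) is even so that \(\hbox {X}(x)\) has a finite closed form, and it inverts \(\hbox {X}\) by bisection. In practice a library routine for the chi-square survival function would normally be used instead.

```python
import math


def chi2_sf_even(x: float, dof: int) -> float:
    """Right-tail probability X(x) of a chi-square variable with even dof.

    For dof = 2k, X(x) = exp(-x/2) * sum_{i=0}^{k-1} (x/2)^i / i!.
    """
    if dof <= 0 or dof % 2 != 0:
        raise ValueError("this sketch only handles even, positive dof")
    if x <= 0.0:
        return 1.0
    half = x / 2.0
    term = 1.0   # (x/2)^0 / 0!
    total = 1.0
    for i in range(1, dof // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def chi2_isf_even(p: float, dof: int) -> float:
    """Solve X(x) = p for x by bisection (X is strictly decreasing in x)."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    lo, hi = 0.0, 1.0
    while chi2_sf_even(hi, dof) > p:   # expand until the root is bracketed
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if chi2_sf_even(mid, dof) > p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def np_threshold_and_pd(alpha: float, beta: float, M: int, alpha_f: float):
    """Return (gamma, P_D) for signal precision alpha and noise precision beta."""
    x = chi2_isf_even(alpha_f, M)             # X^{-1}(alpha_F)
    gamma = beta / (alpha + beta) * x         # threshold on t
    p_d = chi2_sf_even(alpha / (alpha + beta) * x, M)
    return gamma, p_d
```

For \(M=2\) the tail probability reduces to \(\hbox {X}(x)=\hbox {exp}(-x/2)\), so at 0 dB SNR (\(\alpha =\beta \)) the detection probability is simply \(\sqrt{\alpha _F }\), which is a convenient sanity check for the sketch.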

3 Compressive detection of zero-mean Gaussian signals with non-diagonal covariance

In this section, we generalize the results in the previous section to the case where the Gaussian signals have a covariance that is no longer diagonal. In other words, the elements in \({\varvec{\theta }}\) may be correlated (compare, e.g., [27]). Assume that \({\varvec{\theta }}\) now has a covariance \(\mathbf{C}_N \), which is positive semidefinite. The noise is still zero-mean Gaussian distributed as in Sect. 2. The PDFs of the compressive measurement vector \({\varvec{y}}\) under hypotheses \(\hbox {H}_\mathrm{0} \) and \(\hbox {H}_\mathrm{1} \) are

According to the NP theorem, we decide the hypothesis \(\hbox {H}_\mathrm{1} \) if \(r( {\varvec{y}} )={p_\mathrm{1} ( {\varvec{y}} )}/{p_\mathrm{0} ( {\varvec{y}} )}>\eta \). The equivalent NP test is

where \(\mathbf{C}=\mathbf{C}_N +\beta ^{-1}\mathbf{I}_N \) is the covariance of \(\left( {{\varvec{\theta }}+{{\varvec{n}}}} \right) \), and \(\gamma =\log \frac{\left| {{\varvec{\Phi }} \mathbf{C}{\varvec{\Phi }} ^{T}} \right| }{\left| {\beta ^{-1}}{\varvec{\Phi }} {\varvec{\Phi }} ^{T} \right| }+2\log \eta \).

Note that although \(\mathbf{C}_N \) is only positive semidefinite and may be singular, \(\mathbf{C}=\mathbf{C}_N +\beta ^{-1}\mathbf{I}_N \, (\beta ^{-1}\ne 0)\) is invertible, as shown in the following. Decompose \(\mathbf{C}_N \) as \(\mathbf{C}_N =\mathbf{U}_C {\varvec{\Lambda }}_C \mathbf{U}_C^T \), where \(\mathbf{U}_C \) is an orthogonal matrix, \({\varvec{\Lambda }} _C =\hbox {diag}\left( \lambda _{C1} , \lambda _{C2} , \ldots , \lambda _{CN} \right) \) and \(\lambda _{C1} , \lambda _{C2} , \ldots , \lambda _{CN} \) are the non-negative eigenvalues of \(\mathbf{C}_N \). Writing the matrix \(\beta ^{-1}\mathbf{I}_N \) as \(\beta ^{-1}\mathbf{I}_N =\mathbf{U}_C {\varvec{\Lambda }} _\beta \mathbf{U}_C^T \), where \({\varvec{\Lambda }} _\beta =\hbox {diag}\left( {\beta ^{-1}}, \beta ^{-1}, \ldots , \beta ^{-1} \right) \), we have \(\mathbf{C}=\mathbf{C}_N +\beta ^{-1}\mathbf{I}_N =\mathbf{U}_C \left( {{\varvec{\Lambda }} _C +{\varvec{\Lambda }} _\beta } \right) \mathbf{U}_C^T \). In particular, \({\varvec{\Lambda }}_C +{\varvec{\Lambda }}_\beta =\hbox {diag}\left( \lambda _{C1} +\beta ^{-1}, \lambda _{C2} +\beta ^{-1}, \ldots , \lambda _{CN} +\beta ^{-1} \right) \). Since \(\beta ^{-1}>0\), every diagonal entry is strictly positive, so the rank of \({\varvec{\Lambda }} _C +{\varvec{\Lambda }} _\beta \) is \(N\). Hence \(\mathbf{C}\) has full rank and, as a result, is positive definite. It therefore has the Cholesky decomposition \(\mathbf{C}=\mathbf{R}\mathbf{R}^{T}\), where \(\mathbf{R}\) is an \(N\times N\) non-singular matrix.
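The argument above can be illustrated numerically with a small sketch. The \(3\times 3\) all-ones matrix used here is a hypothetical rank-one (hence singular) signal covariance chosen only for illustration, and the helper names are not from the paper. A plain Cholesky routine fails on the singular matrix but succeeds once \(\beta ^{-1}\mathbf{I}_N \) is added.

```python
import math


def cholesky_lower(a):
    """Return lower-triangular R with R R^T = a for a symmetric positive definite a."""
    n = len(a)
    r = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(r[i][k] * r[j][k] for k in range(j))
            if i == j:
                pivot = a[i][i] - s
                if pivot <= 0.0:
                    raise ValueError("matrix is not positive definite")
                r[i][j] = math.sqrt(pivot)
            else:
                r[i][j] = (a[i][j] - s) / r[j][j]
    return r


def regularized_covariance(c_n, beta):
    """Form C = C_N + beta^{-1} I_N."""
    n = len(c_n)
    return [
        [c_n[i][j] + (1.0 / beta if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]


# Hypothetical singular signal covariance: rank one, eigenvalues (3, 0, 0).
C_N = [[1.0] * 3 for _ in range(3)]
C = regularized_covariance(C_N, beta=4.0)   # adds 0.25 to each diagonal entry
R = cholesky_lower(C)                       # succeeds because C is positive definite
```

Calling `cholesky_lower(C_N)` directly raises `ValueError`, since the second pivot of the all-ones matrix is zero, whereas the factor `R` of the regularized matrix has strictly positive diagonal entries and is therefore non-singular.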

From (20), we can define \(t={\varvec{y}}^{T}( {\beta ( {{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} )^{-1}}-( {{\varvec{\Phi }} \mathbf{C}{\varvec{\Phi }} ^{T}} )^{-1} ){\varvec{y}}\) as the test statistic. We have, under the hypothesis \(\hbox {H}_{1}\),

where \(\mathbf{p}=\mathbf{R}^{-1} ( {{\varvec{\theta }}+{{\varvec{n}}}} )\) is a Gaussian random vector with zero mean and covariance \(\mathbf{I}_N\), and \(\mathbf{Q}=\mathbf{R}^{T}{\varvec{\Phi }} ^{T} ( {\beta ( {{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} )^{-1}}- ( {{\varvec{\Phi }} \mathbf{C}{\varvec{\Phi }} ^{T}} )^{-1} ){\varvec{\Phi }} \mathbf{R}\) is a real symmetric matrix with rank less than or equal to \(M\). Applying \(\mathbf{Q}=\mathbf{A}\,\hbox {diag}(\lambda _1 ,\ldots ,\lambda _M ,0,\ldots ,0)\mathbf{A}^{T}\), we have

where \(\lambda _\mathrm{1} , \lambda _2 , \ldots , \lambda _M \) are the largest \(M\) eigenvalues of \(\mathbf{Q}\) arranged in descending order, \(\mathbf{A}\) is orthogonal and \(\mathbf{s}=\mathbf{A}^{T}\mathbf{p}=[s_\mathrm{1} ,s_2 ,\ldots ,s_N ]^{T}\). The PDF of \(\mathbf{s}\) is \(\hbox {N}\left( {{\varvec{0}}_{N\times 1} , \mathbf{A}^{T}\mathbf{A}} \right) =\hbox {N}\left( {{\varvec{0}}_{N\times 1} , \mathbf{I}_N } \right) \) and, as a result, its elements are independent of one another. Putting (22) into (21) gives that the test statistic \(t\) under \(\hbox {H}_\mathrm{1} \) is

We next derive the detection probability of the NP detector given in (20), which is

where in this case, \(t\) is defined in (23). We will find \(P_{D}\) by evaluating the cumulative distribution function (CDF) of \(t=\sum _{k={1}}^M {\lambda _k s_k^2}\). For this purpose, the characteristic function of \(t\), which is the Fourier transform of the PDF \(p(t)\), is computed first. Mathematically, we have

Applying the fact that the \(s_{k}\), where \(k=1,2,\ldots , M\), are mutually independent, we have

where \(\phi _{s_k^2} \left( {\lambda _k \omega } \right) =E( {\hbox {exp}( {j\omega \lambda _k s_k^2 } )} )\) is the characteristic function of \(s_{k}^{2}\) evaluated at \(\lambda _{k}\omega \). It is known that the characteristic function of a chi-square distributed variable with 1 degree of freedom is \(\phi _{\chi _\mathrm{1}^{2} } \left( \omega \right) ={ 1}/{\sqrt{{1}-{2}j\omega }}\). Putting this result into (26) yields

Performing the inverse Fourier transform of \(\phi _{t}(\omega )\) in (27) and putting the obtained \(p(t)\) into (24) give

which completes the derivation of \(P_{D}\), the detection probability of the NP detector in (20).
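Collecting the steps described above, the chain from the characteristic function to \(P_{D}\) can be sketched as follows. The sketch assumes the convention \(\phi _t ( \omega )=E( {\hbox {exp}( {j\omega t} )} )\) used in the text, so that the inverse transform carries the factor \(1/(2\pi )\); it is a reconstruction from the surrounding definitions rather than a verbatim copy of the original displays.

\[
\begin{aligned}
\phi _t ( \omega ) &= E\left( {\hbox {exp}\left( {j\omega \sum _{k=1}^M {\lambda _k s_k^2 } } \right) } \right) =\prod _{k=1}^M {\phi _{s_k^2 } ( {\lambda _k \omega } )} =\prod _{k=1}^M {\frac{1}{\sqrt{1-2j\lambda _k \omega }}} , \\
p( t ) &= \frac{1}{2\pi }\int _{-\infty }^\infty {\phi _t ( \omega )\,\hbox {exp}( {-j\omega t} )\,\mathrm{d}\omega } , \\
P_D &= \int _\gamma ^\infty {p( t )\,\mathrm{d}t} .
\end{aligned}
\]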

We proceed to consider the false alarm rate of the detector (20). In this case, following the process that leads to (23), we can show that the test statistic is

where \(\mathbf{s}^{{\prime }}=\left[ {s_{1}^{\prime } , s_{2}^{\prime } , \ldots , s_M^{\prime } } \right] ^{T}=\mathbf{A}^{{\prime }}{{\varvec{n}}},\, \mathbf{A}^{{\prime }}\) is an orthogonal matrix, which can diagonalize \(\beta ^{-1}{\varvec{\Phi }} ^{T}( {\beta ({{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} )^{-1}}-( {{\varvec{\Phi }} \mathbf{C}{\varvec{\Phi }} ^{T}} )^{-1} ){\varvec{\Phi }} \) as \( \mathbf{A}^{{\prime }}\hbox {diag}\left( {\lambda _1^{\prime },\ldots ,\lambda _M^{\prime } ,0,\ldots ,0} \right) \left( {\mathbf{A}^{{\prime }}} \right) ^{T}\), and \(\lambda _\mathrm{1}^{\prime } , \lambda _{2}^{\prime } , \ldots , \lambda _M^{\prime } \) are the \(M\) largest eigenvalues of \(\beta ^{-1}{\varvec{\Phi }} ^{T}\left( {\beta ( {{\varvec{\Phi }} {\varvec{\Phi }} ^{T}} )^{-1}}-\left( {{\varvec{\Phi }} \mathbf{C}{\varvec{\Phi }} ^{T}} \right) ^{-1} \right) {\varvec{\Phi }} \). We can also verify that \(s_{k}^{{\prime }},\, k=1,2,\ldots , M\), are mutually independent and \(\left( {s_k^{\prime } } \right) ^{{2}}\) has a chi-square distribution with one degree of freedom. The false alarm rate can then be calculated using

With (30), the detection threshold \(\gamma \) can be found by first setting the false alarm rate to a given value \(\alpha _{F}\) and then solving for \(\gamma \). Numerical methods are available for solving such Volterra integral equations [28].
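As an alternative to solving the integral equation, the threshold can be approximated by simulation. The sketch below swaps in a plain Monte Carlo quantile estimate and is not the numerical method of [28]. It draws samples of \(\sum _k \lambda _k^{\prime } ( {s_k^{\prime } } )^{2}\) for given eigenvalues and reads off the empirical \((1-\alpha _F )\) quantile. The function names, the sample size and the example eigenvalues are all hypothetical.

```python
import random


def weighted_chi2_samples(eigenvalues, trials, seed=0):
    """Draw samples of t = sum_k lambda_k * s_k^2 with s_k i.i.d. standard Gaussian."""
    rng = random.Random(seed)
    return [
        sum(lam * rng.gauss(0.0, 1.0) ** 2 for lam in eigenvalues)
        for _ in range(trials)
    ]


def mc_threshold(eigenvalues_h0, alpha_f, trials=100_000, seed=0):
    """Estimate gamma such that P(t > gamma | H0) is approximately alpha_f."""
    samples = sorted(weighted_chi2_samples(eigenvalues_h0, trials, seed))
    index = min(trials - 1, int((1.0 - alpha_f) * trials))
    return samples[index]


def mc_tail_probability(eigenvalues, gamma, trials=100_000, seed=1):
    """Estimate P(t > gamma) for the weighted chi-square sum."""
    samples = weighted_chi2_samples(eigenvalues, trials, seed)
    return sum(1 for t in samples if t > gamma) / trials
```

Given the eigenvalues under each hypothesis, `mc_threshold` applied to the \(\hbox {H}_\mathrm{0} \) eigenvalues yields an estimate of \(\gamma \), and `mc_tail_probability` applied to the \(\hbox {H}_\mathrm{1} \) eigenvalues then estimates \(P_{D}\). The accuracy is limited by the number of trials, so this is suited to quick checks rather than to very small false alarm rates.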

4 Compressive detection of zero-mean non-Gaussian signals

This section considers detecting zero-mean stochastic signals that have non-Gaussian distributions. The corresponding hypothesis testing problem is still given by (1), where in this case, the additive noise is also non-Gaussian. The measurement matrix \({\varvec{\Phi }} \) contains i.i.d. random Gaussian entries with zero mean and variance \(1/N\). The signal of interest \({\varvec{\theta }}\) and the noise \({{\varvec{n}}}\) have covariance \(\mathbf{C}_N \) and \(\beta ^{-1}\mathbf{I}_N \), respectively, where \(\mathbf{C}_N \) is positive semidefinite and \(\beta >0\). We further assume that \({\varvec{\theta }}\) and \({{\varvec{n}}}\) are independent of each other. The proposed NP signal detector for this scenario is derived as follows using asymptotic (large-sample) analysis.

Let \(E( {{\varvec{x}}} )\) and \(D( {{\varvec{x}}} )\) be the statistical expectation and the covariance of the random quantity \({\varvec{x}}\). It can be shown that for the non-Gaussian scenario, we still have that under hypothesis \(\hbox {H}_{1}\), \(E( {{\varvec{\theta }}+{{\varvec{n}}}} )={\varvec{0}}_{N\times 1} \) and \(D( {{\varvec{\theta }}+{{\varvec{n}}}} )=\mathbf{C}_N +\beta ^{-1}\mathbf{I}_N =\mathbf{C}\). The zero-mean property of the compressive measurements can also be established using \(y_i =\sum _{j={1}}^N {{\varvec{\Phi }} _{i,j} \left( {\theta _j +n_j } \right) } \) and \(E( {\theta _j +n_j } )=0\), where \(i=1,2,{\ldots },M\) and \(j=1,2,{\ldots },N\). Let \(\Delta _{1}^{2} =D\left( {y_i } \right) =\sum _{j={1}}^N {D( {{\varvec{\Phi }} _{i,j} \left( {\theta _j +n_j } \right) } )} \) and \(\Delta _{2}^3 =\sum _{j={1}}^N {\rho _j } \), where \(\rho _j =E( {| {{\varvec{\Phi }} _{i,j} ( {\theta _j +n_j } )-E( {{\varvec{\Phi }} _{i,j} ( {\theta _j +n_j } )} )} |^{3}} )=| {{\varvec{\Phi }} _{i,j} } |^{{3}}E( {| {\theta _j +n_j } |^{3}} )\). The central limit theorem [29] states that if \({\Delta _{2} }/{\Delta _\mathrm{1} }\rightarrow 0\) as \(N\rightarrow \infty \), then \(y_{i}\) follows a Gaussian distribution asymptotically, \(y_i \rightarrow \mathbb {N}\left( 0,{D( {y_i } )} \right) \). We next evaluate \({\hbox {lim}}_{N\rightarrow \infty } {\Delta _2 }/{\Delta _\mathrm{1}}\) to establish the PDF of the compressive measurements under the hypothesis \(\hbox {H}_{1}\). We have from (1)

where \({\varvec{\Phi }} _{i,{\bullet }} \) denotes the \(i\)th row of the measurement matrix \({\varvec{\Phi }} \), and \(\mathbf{C}_{i,k} \) is the \(\left( {i, k} \right) \)th element of the covariance \(\mathbf{C}\). Using (31), we can obtain the following inequalities

Since the elements of \({\varvec{\Phi }} \) are drawn independently from a Gaussian distribution with zero mean and variance \(1/N\), we have \({\hbox {lim}}_{N\rightarrow \infty } \frac{1}{N}\sum _{j=1}^N {{\varvec{\Phi }} _{i,j}^{2} } \rightarrow E( {{\varvec{\Phi }} _{i,j}^{2} } )=\frac{1}{N}, {\hbox {lim}}_{N\rightarrow \infty } \frac{1}{N}\sum _{j=1}^N {\left| {{\varvec{\Phi }} _{i,j} } \right| ^{3}} \rightarrow E( {| {{\varvec{\Phi }} _{i,j} } |^{3}} )=\left( {\frac{{1}}{\sqrt{N}}} \right) ^{3}\frac{{2}^{3/2}}{\sqrt{\pi }}\) and

Applying the central limit theorem [29] yields that as \(N~\rightarrow ~\infty \),

On the other hand, the covariance between two compressive measurements is

Combining (34) and (35), and using (31), we have that under the hypothesis \(\hbox {H}_\mathrm{1} \), as \(N \rightarrow \infty \), the PDF of the compressive measurements \({\varvec{y}}\) converges asymptotically to

Following a similar procedure, it can be shown that under the hypothesis \(\hbox {H}_\mathrm{0} \), as \(N \rightarrow \infty \), we have

Comparing (36) and (37) with (19) reveals that in the non-Gaussian signal scenario, as \(N \rightarrow \infty \), the NP signal detector is given in (20) and its performance in terms of the detection probability and false alarm rate has been derived in (28) and (30).
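The asymptotic Gaussianity used above can be checked empirically with a short simulation. The sketch below is illustrative only: it fixes one row of \({\varvec{\Phi }} \) with entries drawn from a zero-mean Gaussian with variance \(1/N\), takes \(\theta _j \) uniform on \([-2,2]\) with Gaussian noise (the setting of Sect. 5.2, so that \(\mathbf{C}=( {4/3+\beta ^{-1}} )\mathbf{I}_N \)), and compares the sample variance and excess kurtosis of \(y_i \) with the values expected for a Gaussian limit. The names `simulate_measurement` and `moments`, the choice \(N=100\) and the seeds are assumptions made for this example.

```python
import random


def simulate_measurement(row, beta, trials, seed=0):
    """Draw y_i = sum_j row[j] * (theta_j + n_j), theta_j uniform, n_j Gaussian."""
    rng = random.Random(seed)
    noise_std = beta ** -0.5
    samples = []
    for _ in range(trials):
        y = 0.0
        for phi in row:
            theta = rng.uniform(-2.0, 2.0)   # non-Gaussian, variance 4/3
            y += phi * (theta + rng.gauss(0.0, noise_std))
        samples.append(y)
    return samples


def moments(samples):
    """Return sample mean, variance and excess kurtosis (0 for a Gaussian)."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / n
    fourth = sum((s - mean) ** 4 for s in samples) / n
    return mean, var, fourth / var ** 2 - 3.0


N = 100
beta = 1.0
row_rng = random.Random(42)
row = [row_rng.gauss(0.0, N ** -0.5) for _ in range(N)]   # one row of Phi

# Variance predicted by D(y_i) = Phi_i C Phi_i^T with C = (4/3 + 1/beta) I_N.
predicted_var = (4.0 / 3.0 + 1.0 / beta) * sum(phi * phi for phi in row)
mean, var, excess_kurtosis = moments(simulate_measurement(row, beta, trials=4000))
```

If the Gaussian approximation holds, `var` should be close to `predicted_var` and `excess_kurtosis` close to zero, up to sampling error from the finite number of trials.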

We will consider a special case when the signal \({\varvec{\theta }}\) has a covariance \(\alpha ^{-1}\mathbf{I}_N \) such that \(\mathbf{C}=\left( {\alpha ^{-1}+\beta ^{-1}} \right) \mathbf{I}_N \). In this case, (36) and (37) become

The corresponding NP detector is given in (7), and its performance results are summarized in (16).

5 Computer experiments

In this section, we illustrate the performance of the proposed NP detectors for Gaussian and non-Gaussian signals via computer experiments. The obtained results reveal the relationship between the detection performance and factors such as the false alarm rate, the number of compressive measurements used for detection and the signal-to-noise ratio (SNR). In all the experiments, the elements of the compressive measurement matrix \({\varvec{\Phi }} \) are drawn independently from a Gaussian distribution with zero mean and variance \(1/N\). For clarity, we show only the performance of the NP detectors developed in this paper.

5.1 Compressive detection of Gaussian random signals with independent samples

We consider the NP detector (7) for Gaussian signals with diagonal covariance first. In Fig. 1, we show the receiver-operating characteristic (ROC) curves that illustrate the relationship between the detection probability of the NP detector and the false alarm rate. We include in the figure the theoretical detection probability given by (18) (denoted as ‘predict’), and the one from the Monte Carlo simulation of 5,000 independent runs using the \(M \times N\) measurement matrix whose elements are drawn from a Gaussian distribution (denoted as ‘statistic’). For the purpose of comparison, we also plot in the figure the detection probability of the detector in (7) (denoted as ‘statistic-I’) when only the first \(M\) elements of the original signal are used for detection (in this case, a signal truncation instead of signal compression is performed).

Fig. 1 ROC of the detector in (7). (a) \(\hbox {SNR}=-5\,\hbox {dB}\). (b) \(\hbox {SNR}=0\,\hbox {dB}\)

To generate Fig. 1, the original signal has \(N=500\) samples. We consider two cases, one with SNR = 0 dB and the other with SNR = \(-\)5 dB. The SNR is defined as \(10\log _{10} \frac{\alpha ^{-1}}{\beta ^{-1}}\), where \(\alpha ^{-1}\) and \(\beta ^{-1}\) are the variances of the original signal and the noise, respectively. In each SNR case, the number of compressive measurements \(M\) is varied as \(M=0.05N, 0.1N, 0.2N, 0.4N\). The obtained ROC curves are summarized in Fig. 1a for SNR = \(-\)5 dB and Fig. 1b for SNR = 0 dB.

We can see that the simulation results closely match the theoretical ones. This validates our analytical derivation of the detection probability \(P_{D}\) of the NP detector in (7) (see (18)). Besides, comparing Fig. 1a with Fig. 1b shows that a higher SNR leads to a larger \(P_{D}\), as expected. We can also see that \(P_{D}\) gradually improves as the number of compressive measurements \(M\) increases, which is also expected.

Interestingly, we also find from Fig. 1 that the detection performance obtained with the Gaussian compressive measurement matrix and with signal truncation is the same. In other words, under the i.i.d. Gaussian signal model, the performance of compressive signal detection using \(M \) compressive measurements is identical to the case where only the first \(M\) samples of the original signal are used for the detection task. The underlying reasons are as follows. First, the simulation results can be explained theoretically by examining (18), which reveals that the detection probability of the detector in (7) depends only on the rank of the measurement matrix. In this simulation, the measurement matrices of the compressive signal detector and the signal truncation-based detector both have rank \(M\), which renders their detection performance identical. Second, the results can be attributed to the signal model adopted in the development of the compressive detector (7). In particular, the original signal \({\varvec{\theta }}\) and the noise \({{\varvec{n}}}\) are both assumed to be composed of i.i.d. Gaussian samples and are independent of each other. As a result, roughly speaking, compressing the noise-corrupted original signal with the compressive measurement matrix and truncating it directly result in the same detection performance, as observed in Fig. 1.
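The truncation case can be reproduced with a short Monte Carlo sketch. When \({\varvec{\Phi }} \) simply keeps the first \(M\) samples, \({\varvec{\Phi }} {\varvec{\Phi }} ^{T}=\mathbf{I}_M \) and the statistic in (7) reduces to \(t=\frac{\beta ^{2}}{\alpha +\beta }\sum _{i=1}^M {( {\theta _i +n_i } )^{2}} \) under \(\hbox {H}_\mathrm{1} \) (with \(\theta _i =0\) under \(\hbox {H}_\mathrm{0} \)). The function names, trial count and seed below are hypothetical choices for illustration and are not taken from the experiments reported here.

```python
import random


def truncation_statistic(alpha, beta, M, signal_present, rng):
    """Statistic t = y^T D y when Phi keeps the first M samples (Phi Phi^T = I_M)."""
    scale = beta ** 2 / (alpha + beta)
    signal_std = alpha ** -0.5   # signal variance is alpha^{-1}
    noise_std = beta ** -0.5     # noise variance is beta^{-1}
    energy = 0.0
    for _ in range(M):
        sample = rng.gauss(0.0, noise_std)
        if signal_present:
            sample += rng.gauss(0.0, signal_std)
        energy += sample * sample
    return scale * energy


def empirical_rates(alpha, beta, M, gamma, trials=20_000, seed=0):
    """Return Monte Carlo estimates (P_F, P_D) for the threshold gamma."""
    rng = random.Random(seed)
    false_alarms = sum(
        truncation_statistic(alpha, beta, M, False, rng) > gamma
        for _ in range(trials)
    )
    detections = sum(
        truncation_statistic(alpha, beta, M, True, rng) > gamma
        for _ in range(trials)
    )
    return false_alarms / trials, detections / trials
```

As a sanity check, with \(M=2\), \(\alpha =\beta =1\) (0 dB SNR) and \(\gamma =\log 10\), the chi-square results of Sect. 2 predict \(P_F =0.1\) and \(P_D =\sqrt{0.1}\approx 0.316\), which the estimates returned by `empirical_rates(1.0, 1.0, 2, 2.302585)` should approach as the number of trials grows.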

The compressive signal detection technique may outperform the direct signal truncation-based approach when, e.g., the original signal has correlated samples or some of its samples have significantly larger variance than others. In these cases, due to its averaging nature, the compressive measurement matrix may provide better detection performance, because direct signal truncation may not retain all the signal samples with large power. As the original signal no longer has a diagonal covariance matrix with identical diagonal elements, the compressive detectors proposed in Sects. 3 and 4 need to be invoked to address the signal detection task. In future work, we will further investigate how the properties of the stochastic signals to be detected affect the performance of the proposed compressive detectors.

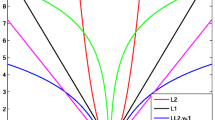

To better illustrate the impact of the number of compressive measurements on the detection performance, we repeat the simulation experiment that produced Fig. 1. This time, we fix the false alarm rate at 0.1 and 0.01 and generate two sets of results, shown in Fig. 2a for \(\alpha _F =0.1\) and in Fig. 2b for \(\alpha _F =0.01\). In each subfigure, we plot the detection probability as a function of \(M/N\). Other settings are the same as in the previous experiment. We observe that increasing the number of compressive measurements does improve the detection probability, but the improvement is not as significant as that brought by increasing the SNR.

Fig. 2 Detection probability of the detector in (7) as a function of \(M/N\). a \(\alpha _F =0.1\). b \(\alpha _F =0.01\)
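The trend described above (detection probability grows with \(M\), but more slowly than with the SNR) can be reproduced numerically with a minimal sketch. It assumes, rather than quotes from (16) or (18), that the test statistic divided by the noise variance is chi-square distributed with \(M\) degrees of freedom when the signal is absent, and divided by the signal-plus-noise variance when it is present, and that the SNR is the ratio \(\alpha ^{-1}/\beta ^{-1}\). The function names are hypothetical, and only even \(M\) is handled so that the chi-square tail has a closed form.

```python
import math


def chi2_sf_even(x, dof):
    """P(X > x) for a chi-square variable with an even number of degrees of freedom."""
    if dof <= 0 or dof % 2:
        raise ValueError("dof must be a positive even integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    term = math.exp(-half)  # j = 0 term of exp(-x/2) * sum_j (x/2)^j / j!
    total = term
    for j in range(1, dof // 2):
        term *= half / j
        total += term
    return min(total, 1.0)


def chi2_isf_even(p, dof):
    """Threshold x such that P(X > x) = p, found by bisection."""
    lo, hi = 0.0, float(dof)
    while chi2_sf_even(hi, dof) > p:  # expand until the tail drops below p
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if chi2_sf_even(mid, dof) > p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def detection_probability(m, snr_db, p_fa):
    """Assumed model: P_D = Q_M(Q_M^{-1}(P_FA) / (1 + SNR)), Q_M the chi-square tail."""
    snr = 10.0 ** (snr_db / 10.0)  # assumption: SNR = signal variance / noise variance
    threshold = chi2_isf_even(p_fa, m)  # normalized by the noise variance
    return chi2_sf_even(threshold / (1.0 + snr), m)


if __name__ == "__main__":
    n = 500
    for snr_db in (-5.0, 0.0):
        for ratio in (0.1, 0.3, 0.5):
            m = 2 * round(ratio * n / 2)  # nearest even M
            p_d = detection_probability(m, snr_db, 0.1)
            print(f"SNR={snr_db:+.0f} dB  M/N={ratio:.1f}  P_D={p_d:.3f}")
```

Sweeping `ratio` at a fixed SNR and then sweeping `snr_db` at a fixed ratio gives a quick way to compare the two effects under the stated assumptions.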

5.2 Compressive detection of non-Gaussian signals

We consider the compressive detection of non-Gaussian signals in this subsection. Two cases are simulated: in one, the signal of interest has a uniform distribution on \(\left[ -2,2 \right] \); in the other, it has a \(t\)-distribution with 8 degrees of freedom. In both cases, the elements of the signal of interest \({\varvec{\theta }}\) are i.i.d. with mean 0 and variance \(4/3\). The noise \({{\varvec{n}}}\) has a Gaussian distribution with covariance \(\beta ^{-1}\mathbf{I}_N \). Since both \({\varvec{\theta }}\) and \({{\varvec{n}}}\) have diagonal covariance, as shown in Sect. 4 via asymptotic analysis [see (38) and (39)], the NP detector for this case is given in (7), and its detection probability and false alarm rate are given in (16).
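The common variance of \(4/3\) follows from the standard variance formulas for the two distributions, shown here as a quick check (the symbols \(a\), \(b\) and \(\nu \) are used only in this illustration):

\[
\mathrm{Var}\left[ \mathcal {U}(a,b)\right] =\frac{(b-a)^{2}}{12}=\frac{\left( 2-(-2)\right) ^{2}}{12}=\frac{4}{3},\qquad \mathrm{Var}\left[ t_{\nu }\right] =\frac{\nu }{\nu -2}=\frac{8}{8-2}=\frac{4}{3}.
\]

Both signals therefore carry the same power per sample, so any difference between their detection results comes from the shape of the distribution rather than from the SNR.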

In the first experiment of this subsection, we set \(N=500\) and fix the false alarm rate at 0.1. We plot the detection probability as a function of \(M/N\) in Fig. 3a. The detection probabilities \(P_{D}\) obtained from 5,000 simulation runs for the uniform and \(t\)-distributed signals are denoted ‘uni’ and ‘t’ in the figure, while ‘predict’ represents the theoretical results obtained by evaluating (18) with the false alarm rate set to 0.1. Fig. 3a shows that, under different SNR levels, the theoretical detection probability from (18) is very close to the simulation results. This observation holds for both uniformly distributed and \(t\)-distributed signals, which supports the validity of the asymptotic analysis used in Sect. 4 to derive the NP detector for non-Gaussian signals and establish its performance. We then repeat the experiment that led to Fig. 3a, but this time we fix the SNR at \(-\)2 dB and vary the false alarm rate to obtain the ROCs of the NP detector for non-Gaussian signals, shown in Fig. 3b. Again, the asymptotic analysis we applied leads to NP detectors for non-Gaussian signals whose performance can be predicted precisely using (16).

Fig. 3 Detection probability of the detector in (7) for uniformly distributed and \(t\)-distributed signals of length 500. a Detection probability as a function of \(M/N\). b Detector ROC

Fig. 4 Detection probability of the detector in (7) for uniformly distributed and \(t\)-distributed signals of length 50. a Detection probability as a function of \(M/N\). b Detector ROC

In the second experiment of this subsection, we reduce the length of the original signal to \(N=50\). The rest of the setup is the same as in the previous experiment. The results are summarized in Fig. 4. Comparing Fig. 4 with Fig. 3 shows a performance degradation in the form of lower detection probability. This is because, for a given ratio \(M/N\), a smaller \(N\) also reduces the number of compressive measurements used for detection, which leads to poorer detector performance. Another important observation is that as \(N\) decreases, the simulated performance of the NP detector no longer matches the theoretical values well. This is expected because, as indicated in Sect. 4, the NP detector for non-Gaussian signals converges to the one given in (7) as \(N\) increases to infinity.

5.3 Compressive detection of Gaussian random signals corrupted by correlated noise

In this subsection, we consider the compressive detection of Gaussian random signals corrupted by correlated noise. The colored noise is generated by a second-order moving average (MA) model driven by a zero-mean white Gaussian process \(e(k)\), namely \(n(k)=e(k)+0.5e(k-1)+0.2e(k-2)\). To detect the presence of the original signal, the detector given in (7) is applied with the noise variance \(\beta ^{-1}\) set to the variance of the correlated noise. Note that in the case of colored noise, the detector (7) is only suboptimal, as it assumes a noise vector with independent samples.
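The MA(2) noise model and the variance that would be plugged into (7) can be sketched as follows. The function names and the unit driving variance are assumptions made for illustration; only the coefficients 1, 0.5 and 0.2 come from the text. For an MA process, the variance is the driving variance times the sum of squared coefficients, and the autocovariance vanishes beyond lag 2.

```python
import random

# MA(2) coefficients from n(k) = e(k) + 0.5 e(k-1) + 0.2 e(k-2)
MA_COEFFS = (1.0, 0.5, 0.2)


def ma_autocovariance(lag, driving_variance=1.0, coeffs=MA_COEFFS):
    """Theoretical autocovariance of the MA noise at the given lag."""
    lag = abs(lag)
    pairs = range(len(coeffs) - lag)  # empty when lag exceeds the MA order
    return driving_variance * sum(coeffs[i] * coeffs[i + lag] for i in pairs)


def ma_noise_variance(driving_variance=1.0, coeffs=MA_COEFFS):
    """Variance of the colored noise, i.e. the value used for beta^{-1}."""
    return ma_autocovariance(0, driving_variance, coeffs)


def generate_ma_noise(n, driving_variance=1.0, coeffs=MA_COEFFS, rng=None):
    """Draw n samples of the colored noise from white Gaussian e(k)."""
    rng = rng or random.Random()
    sigma = driving_variance ** 0.5
    q = len(coeffs) - 1
    # q extra samples so that e(k-1) and e(k-2) exist for the first outputs
    e = [rng.gauss(0.0, sigma) for _ in range(n + q)]
    return [sum(c * e[k + q - i] for i, c in enumerate(coeffs)) for k in range(n)]


if __name__ == "__main__":
    noise = generate_ma_noise(500, rng=random.Random(0))
    print("theoretical variance:", ma_noise_variance())
    print("lag-1 and lag-2 autocovariance:",
          ma_autocovariance(1), ma_autocovariance(2))
    print("first samples:", [round(v, 3) for v in noise[:5]])
```

The nonzero lag-1 and lag-2 autocovariances are what the detector in (7) ignores when it treats the noise samples as independent.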

The simulation setup is very similar to the one used to generate Fig. 3. We set the length of the original signal to \(N=500\). Fig. 5a shows the detection probability as a function of \(M/N\) at different SNR levels. In Fig. 5b, we fix the SNR at 0 dB and plot the detector ROC as a function of the false alarm rate under different settings of \(M/N\). Figure 5 reveals that at high SNR, the performance of the detector in (7) from 5,000 Monte Carlo ensemble runs matches the theoretical value in (18) well, which indicates that in this case, the correlation between the noise samples does not greatly affect the detection performance. When the SNR decreases to \(-\)5 dB, the simulated detection performance deviates from the theoretical value, mainly due to the mismatch between the assumed and the true noise covariance models.

5.4 Compressive detection of a real-world signal

In this subsection, we consider the compressive detection of a real-world frequency modulated (FM) signal transmitted by a UHF wireless intercom. It is sampled at a frequency of 500 MHz, and we record the first \(N=500\) samples for the computer experiment. The sample mean of the recorded FM signal is estimated and subtracted from the obtained samples to make them zero mean.

The detector in (7) is used to detect the non-Gaussian FM signal with its sample mean removed. By applying the detector in (7), we in effect assume that the FM signal has a covariance \(\alpha ^{-1}\mathbf{I}_N \), where \(\alpha ^{-1}\) is equal to the sample variance of the FM signal. As in the previous computer experiments, the elements of the compressive measurement matrix \({\varvec{\Phi }} \) are drawn independently from a Gaussian distribution with zero mean and variance \({1}/N\). In addition, in each Monte Carlo run, an independent zero-mean Gaussian noise vector with covariance \(\beta ^{-1}\mathbf{I}_N \) is added to the FM signal to produce the noise-corrupted signal of interest before we compress it using \({\varvec{\Phi }} \). The detection probability of the detector in (7) is found from a total of 5,000 Monte Carlo runs.
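The data preparation for one Monte Carlo run described above can be sketched as follows. This is an illustration only: the recorded FM samples are not available here, so `synthetic_fm_record` is a hypothetical stand-in, all function names are invented for this sketch, and the SNR is assumed to be the ratio of signal variance to noise variance. The detector statistic itself is omitted because (7) is not reproduced in this section.

```python
import math
import random


def remove_mean(samples):
    """Subtract the sample mean so the record becomes zero mean."""
    mean = sum(samples) / len(samples)
    return [s - mean for s in samples]


def sample_variance(samples):
    """Unbiased sample variance, used as the estimate of alpha^{-1}."""
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / (len(samples) - 1)


def noise_variance_for_snr(signal_variance, snr_db):
    """Assumed SNR definition: signal variance divided by noise variance."""
    return signal_variance / (10.0 ** (snr_db / 10.0))


def gaussian_measurement_matrix(m, n, rng):
    """M x N matrix of i.i.d. zero-mean Gaussian entries with variance 1/N."""
    std = math.sqrt(1.0 / n)
    return [[rng.gauss(0.0, std) for _ in range(n)] for _ in range(m)]


def compress_noisy_signal(phi, signal, noise_variance, rng):
    """Add white Gaussian noise to the signal, then apply the measurement matrix."""
    noise_std = math.sqrt(noise_variance)
    noisy = [s + rng.gauss(0.0, noise_std) for s in signal]
    return [sum(p * x for p, x in zip(row, noisy)) for row in phi]


def synthetic_fm_record(n):
    """Hypothetical stand-in for the recorded FM samples (not the real data)."""
    phase, record = 0.0, []
    for k in range(n):
        # Slowly varying instantaneous frequency plus an arbitrary DC offset
        phase += 2.0 * math.pi * (0.05 + 0.02 * math.sin(2.0 * math.pi * k / n))
        record.append(0.3 + math.cos(phase))
    return record


if __name__ == "__main__":
    rng = random.Random(0)
    n, m = 500, 100
    signal = remove_mean(synthetic_fm_record(n))
    alpha_inv = sample_variance(signal)                # assumed signal variance
    beta_inv = noise_variance_for_snr(alpha_inv, 0.0)  # noise variance at 0 dB
    phi = gaussian_measurement_matrix(m, n, rng)
    y = compress_noisy_signal(phi, signal, beta_inv, rng)
    print(len(y), "compressive measurements generated")
```

Repeating the last three steps with fresh draws of the noise and the measurement matrix gives the independent runs over which the detection probability is averaged.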

The rest of the simulation setup is the same as that used to generate Fig. 5. The obtained simulation results are plotted in Fig. 6. It can be seen that the simulated detection probability closely follows the trend of the theoretical values given in (18), thanks to the use of a relatively large number of data samples (see also Sect. 4). The difference between the simulation and the theoretical results probably comes from our assumption that the recorded FM signal has a diagonal covariance.

Fig. 5 Detection probability of the detector in (7) for Gaussian signal corrupted by correlated noise. a Detection probability as a function of \(M/N\). b Detector ROC

Fig. 6 Detection probability of the detector in (7) for a real-world FM signal. a Detection probability as a function of \(M/N\). b Detector ROC

6 Conclusion

In this paper, we considered the problem of detecting stochastic signals using compressive measurements. Unlike most of the existing literature, we investigated the case where the measurement matrix is no longer orthonormal, which makes this work more general. Under the condition that the PDFs of the signal of interest and the noise are known, NP detectors have been developed for Gaussian and non-Gaussian signals. Explicit expressions for the detection probability and the false alarm rate of the proposed detectors were established and verified via extensive computer experiments.

References

Candès, E.: Compressive sampling. In: Proceedings of the International Congress of Mathematicians. European Mathematical Society Publishing House, Zürich, pp. 1433–1452 (2006)

Candès, E., Romberg, J.: Quantitative robust uncertainty principles and optimally sparse decompositions. Found. Comput. Math. 6, 227–254 (2006)

Baraniuk, R.: A lecture on compressive sensing. IEEE Signal Process. Mag. 24, 118–121 (2007)

Candès, E.: The restricted isometry property and its implications for compressed sensing. Académie des sciences 346, 589–592 (2006)

Candès, E., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52, 489–509 (2006)

Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006)

Chen, S., Donoho, D.L., Saunders, M.A.: Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20, 33–61 (1999)

Gorodnitsky, I., Bhaskar, D.R.: Sparse signal reconstruction from limited data using FOCUSS: a re-weighted minimum norm algorithm. IEEE Trans. Signal Process. 45, 600–616 (1997)

Mallat, S.G., Zhang, Z.: Matching pursuits with time-frequency dictionaries. IEEE Trans. Signal Process. 41, 3397–3415 (1993)

Pati, Y.C., Rezaiifar, R., Krishnaprasad, P.S.: Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition. In: Proceedings of 27th Annual Asilomar Conference on Signals, Systems, and Computers, pp. 40–44 (1993)

Donoho, D.L., Tsaig, Y., Drori, I., Starck, J.L.: Sparse solution of underdetermined systems of linear equations by stagewise orthogonal matching pursuit. IEEE Trans. Inf. Theory 58, 1094–1121 (2012)

Blumensath, T., Davies, M.E.: Iterative thresholding for sparse approximations. J. Fourier Anal. Appl. 14, 629–654 (2008)

Foucart, S.: Hard thresholding pursuit: an algorithm for compressive sensing. SIAM J. Numer. Anal. 49, 2543–2563 (2011)

Needell, D., Tropp, J.A.: CoSaMP: iterative signal recovery from incomplete and inaccurate samples. Appl. Comput. Harmon. Anal. 26, 301–321 (2009)

Dai, W., Milenkovic, O.: Subspace pursuit for compressive sensing signal reconstruction. IEEE Trans. Inf. Theory 55, 2230–2249 (2009)

Ji, S., Xue, Y., Carin, L.: Bayesian compressive sensing. IEEE Trans. Signal Process. 56, 2346–2356 (2008)

Babacan, S., Molina, R., Katsaggelos, A.: Bayesian compressive sensing using Laplace priors. IEEE Trans. Image Process. 19, 53–63 (2010)

Davenport, M.A., Boufounos, P.T., Wakin, M.B.: Signal processing with compressive measurements. IEEE J. Sel. Top. Signal Process. 4, 445–460 (2010)

Duarte, M.F., Davenport, M.A., Wakin, M.B., Baraniuk, R.G.: Sparse signal detection from incoherent projections. In: IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Toulouse, France, pp. 305–308 (2006)

Vila-Forcen, J.E., Artes-Rodriguez, A., Garcia-Frias, J.: Compressive sensing detection of stochastic signals. In: Proceedings of 42nd Annual Conference on Information Sciences and Systems (CISS), pp. 956–960 (2008)

Meng, J., Li, H., Han, Z.: Sparse event detection in wireless sensor networks using compressive sensing. In: Proceedings of 43rd Annual Conference on Information Sciences and Systems (CISS), Baltimore, MD, pp. 181–185 (2009)

Krishnamurthy, K., Raginsky, M., Willett, R.: Hyperspectral target detection from incoherent projections. In: Proceedings of Acoustics, Speech and Signal Processing (ICASSP), Dallas, TX, pp. 3550–3553 (2010)

Krishnamurthy, K., Raginsky, M., Willett, R.: Hyperspectral target detection from incoherent projections: nonequiprobable targets and inhomogeneous SNR. In: Proceedings of IEEE 17th International Conference on Image Processing (ICIP), pp. 1357–136 (2010)

Wimalajeewa, T., Chen, H., Varshney, P.K.: Performance analysis of stochastic signal detection with compressive measurements. In: Signals, Systems and Computers (ASILOMAR), 2010 Conference Record of the Forty Fourth Asilomar Conference, pp. 813–817 (2010)

Wang, Z., Arce, G., Sadler, B.: Subspace compressive detection for sparse signals. In: Proceedings of Acoustics, Speech and Signal Processing (ICASSP), Las Vegas, NV, pp. 3873–3876 (2008)

Rao, B., Chatterjee, S., Ottersten, B.: Detection of sparse random signals using compressive measurements. In: Proceedings of Acoustics, Speech and Signal Processing (ICASSP), Kyoto, pp. 3257–3260 (2012)

Junni, Z., Yifeng, L., Wenrui, D.: Compressive detection with sparse random projections. IEICE Commun. Express 2, 287–293 (2013)

Linz, P.: Numerical methods for Volterra integral equations of the first kind. Comput. J. 12, 393–397 (1969)

Cramer, H.: Mathematical Methods of Statistics. Princeton University Press, Princeton (1946)

Acknowledgments

The authors gratefully thank the anonymous reviewers and the associate editor, who provided insightful and helpful comments. This work was supported in part by the Hunan Provincial Innovation Foundation for Postgraduates under Grant CX2012B019, the Fund of Innovation, Graduate School of National University of Defense Technology under Grant B120404, and the National Natural Science Foundation of China (No. 61304264).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Wang, YG., Liu, Z., Yang, L. et al. Generalized compressive detection of stochastic signals using Neyman–Pearson theorem. SIViP 9 (Suppl 1), 111–120 (2015). https://doi.org/10.1007/s11760-014-0666-z

DOI: https://doi.org/10.1007/s11760-014-0666-z