Abstract

Background

Patient ratings of their healthcare experience as a quality measure have become critically important since the implementation of the Affordable Care Act (ACA). The ACA enabled states to expand Medicaid eligibility to reduce uninsurance nationally. Arkansas gained approval to use Medicaid funds to purchase a qualified health plan (QHP) through the ACA marketplace for newly eligible beneficiaries.

Objective

We compare patient-reported satisfaction between fee-for-service Medicaid and QHP participants.

Design

The Consumer Assessment of Healthcare Providers and Systems (CAHPS) was used to identify differences in Medicaid and QHP enrollee healthcare experiences. Data were analyzed using a regression discontinuity design.

Participants

Newly eligible Medicaid expansion participants enrolled in Medicaid during 2013 completed the CAHPS survey in 2014. Survey data were analyzed for 3156 participants (n = 1759 QHP/1397 Medicaid).

Measures

Measures included rating of personal and specialist provider, rating of all healthcare received, and whether the provider offered to communicate electronically. Demographic and clinical characteristics of the enrollees were controlled for in the analyses.

Methods

Regression-discontinuity analysis was used to evaluate differential program effects on positive ratings as measured by the CAHPS survey while controlling for demographic and health characteristics of participants.

Key Results

Adjusted logistic regression models for overall healthcare (OR = 0.71, 95%CI = 0.56–0.90, p = 0.004) and personal doctor (OR = 0.68, 95%CI = 0.53–0.87, p = 0.002) predicted greater satisfaction among QHP versus Medicaid participants. Results were not significant for specialists or for use of electronic communication with provider.

Conclusions

Using a quasi-experimental statistical approach, we were able to control for observed and unobserved heterogeneity showing that among participants with similar characteristics, including income, QHP participants rated their personal providers and healthcare higher than those enrolled in Medicaid. Access to care, utilization of care, and healthcare and health insurance literacy may be contributing factors to these results.

INTRODUCTION

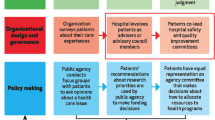

Patient experiences with healthcare have become a critical measure of quality of care since ACA implementation.1 The patient experience evaluates whether what should have happened during a healthcare encounter actually did happen (i.e., timely access to appointments and communication with providers that was effective and patient-centered).2,3

Several studies have examined access to and the quality of care but few have considered the impact of Medicaid expansion on enrollees’ satisfaction. Published research has focused primarily on rating differences between public health plan models (i.e., Medicaid enrollees in managed care or fee for service4,5 or Medicare6). Research is lacking on satisfaction differences in a Medicaid expansion population between enrollees in a commercial insurance plan versus a public plan like Medicaid.

The Arkansas Medicaid expansion program created a unique natural experiment allowing us to address this gap. Medicaid eligibility in the state was expanded to over 225,000 individuals aged 19–64 with incomes at or below 138% of the federal poverty level (FPL) in 2014. The Arkansas legislation authorizing expansion provided a private insurance option for “low risk” adults.7 The state received a Section 1115 demonstration waiver from the Centers for Medicare and Medicaid Services (CMS) to use Medicaid funds to purchase this private insurance through the ACA Marketplace.8 Participants were automatically enrolled in either Medicaid or a qualified Marketplace health plan (QHP) based on a risk assessment9 consistent with legislative requirements.

The automatic assignment to plan type based on risk, while holding demographic factors constant, allowed us to assess any public-private insurance satisfaction gaps. Our objective in this study was to examine patient experience, a core dimension of healthcare quality, to determine if insurance type (Medicaid vs QHP) impacted scores.

METHODS

Study Design

Regression discontinuity (RD) was used to evaluate program differences between QHP vs Medicaid in patient experience scores.10 The expansion population completed a medical needs questionnaire to evaluate health risk (herein referred to as need score).10 The need scores, ranging from 0.02 to 0.61, determined assignment to Medicaid or QHP. Assignment to QHP was made for scores < 0.18 (low medical need) and to Medicaid for scores ≥ 0.18 (medically frail). The RD approach allowed comparisons of individuals at the threshold (0.18) to estimate treatment effects/satisfaction differences. The CAHPS survey was used to assess patient experiences.11
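The assignment rule described above can be sketched in a few lines of Python. This is only an illustration of the sharp threshold rule as stated in the text; the function names `assign_program` and `centered_score` are hypothetical and do not come from the study's code.

```python
CUTOFF = 0.18  # need-score threshold reported in the text


def assign_program(need_score: float, cutoff: float = CUTOFF) -> str:
    """Sharp assignment: scores below the cutoff go to QHP, all others to Medicaid."""
    return "QHP" if need_score < cutoff else "Medicaid"


def centered_score(need_score: float, cutoff: float = CUTOFF) -> float:
    """Distance of a need score from the cutoff (negative = QHP side)."""
    return need_score - cutoff


# Illustrative scores spanning the reported range (0.02 to 0.61)
for score in (0.02, 0.17, 0.18, 0.61):
    print(score, assign_program(score))
```

Centering the score at the cutoff is a common convenience in RD work because the program effect can then be read at a centered score of zero.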

Study Population

As summarized in the flow diagram (Figure A1, Appendix),12 225,168 persons were eligible and enrolled under Medicaid expansion in 2014. Our sampling frame included 181,206 enrollees from which a sample of 29,164 was selected. The sample selection included 5000 enrollees each in Medicaid and QHP with an additional 19,164 participants selected to represent the full range of income and needs scores. A survey response rate of 26.4% yielded 6568 surveys from which exclusions were made for missing needs scores, insurance plan changes due to eligibility changes, and no provider visits in the preceding 6 months. Our final analytic sample was 3156 (1759 QHP/1397 Medicaid).

Data Sources

Data for this study were obtained from four 2014–2015 data sources: (1) Arkansas Medicaid enrollment files from the Arkansas Department of Human Services; (2) administrative claims data; (3) the exceptional health needs questionnaire (Appendix); and (4) the CAHPS survey administered July–September of 2015. All data were linked using a unique, encrypted identifier.

Measures

Outcomes were CAHPS ratings of care and care providers and the availability of electronic communication with provider. Ratings of personal provider, specialist provider, and all health care received ranged from 0 to 10 (0 = the worst possible care to 10 = best care/provider). A personal provider is seen for checkups or health advice while a specialist has expertise in one area (e.g., heart or kidney). Ratings were based on services received in the preceding 6 months. Responses were dichotomized as scores of 9 or 10 (top-box score) versus all other scores, consistent with other research.13 Respondents were also asked whether providers offered electronic communications through email, smartphone, or patient portals (yes/no).
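As a minimal sketch of the top-box coding, the snippet below dichotomizes 0 to 10 ratings into 9 or 10 versus all other scores. The helper names and the sample ratings are hypothetical and are used only to show the rule.

```python
def top_box(rating: int) -> int:
    """Return 1 for a top-box rating (9 or 10) and 0 for any other valid rating."""
    if not 0 <= rating <= 10:
        raise ValueError("CAHPS global ratings range from 0 to 10")
    return 1 if rating >= 9 else 0


def top_box_share(ratings) -> float:
    """Proportion of ratings that fall in the top box."""
    flags = [top_box(r) for r in ratings]
    return sum(flags) / len(flags)


# Hypothetical ratings, not study data
sample_ratings = [10, 9, 8, 7, 10, 5]
print(top_box_share(sample_ratings))
```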

Covariates included age (continuous, 19–64), sex (male or female), race (White non-Hispanic, Black non-Hispanic, Other, and Hispanic), educational attainment (< high school, high school graduate/GED, some college, ≥ college graduate, and education missing), marital status (married/partnered, widowed/divorced/separated, never married and missing), mental health or substance abuse diagnosis, and the Charlson Comorbidity Index (CCI). Urban status was derived from the rural-urban commuting area code assigned from patient zip code and dichotomized as rural vs urban.14 The CCI, an ordinal measure, is based on diagnostic codes from claims data. CCI ranged from 0, no comorbidities, to 4+ indicating at least 4 comorbidities.

Statistical Methods

RD is a quasi-experimental approach whose results compare favorably to results obtained using RCTs, the gold standard, while being more practical and cost-effective.15 RD requires the use of a continuous measure to assign participants to treatment groups (Medicaid vs QHP), which was the needs score in this case. Assignment was based on a discrete cut-point in the needs score (0.18) at which point the probability of assignment jumped from 0 to 1. This is referred to as sharp programmatic assignment as the probability of assignment is not continuous but deterministic (see Figure A2, Appendix).11 We used McCrary’s16 test to determine whether the distribution of needs scores was continuous at the cut-point, which would rule out manipulation in program assignment. Results (see Appendix Figure A2) indicate a continuous distribution with no manipulation.

RD also requires that independent variables have a continuous distribution across needs scores with no breaks at the threshold. We tested this using visual inspection of scatter plots for age and CCI—our continuous variables. No discontinuity was observed (Figures A3 and A4, Appendix). Because of the way assignment to insurance programs was made, enrollees on each side of the threshold are expected to have similar characteristics. We examined Arkansas enrollees on sex, race, marital status, education, and urbanicity finding similar demographic characteristics on either side of the cut-point (Tables A2-A6, Appendix).

RD outcomes were modeled as a function of the needs score and insurance program assignment controlling for covariates. We estimated both parametric and non-parametric models as they take different approaches to bias and precision. Parametric RD uses all available data, which yields high precision but can produce biased estimates if the functional form of the model is misspecified. In contrast, non-parametric RD uses only observations close to the cut-point, which produces less biased estimates with lower precision.11 We minimized bias in our parametric models by testing different functional forms for need scores (e.g., linear, quadratic, and cubic) as well as interactions between needs scores and insurance program through a series of F-tests. We selected the simplest model based on F-test p values and Akaike’s information criterion (AIC) (Table A7, Appendix). We also conducted robustness checks of significant models by sequentially dropping the outermost 1%, 5%, and 10% of data points plus the lowest and highest values of the need scores. Since the lowest 1%, 5%, and 10% of data points were all 0.02 (the 5 lowest values), the lowest 17% (the next lowest value) of data points were also dropped. Results of these tests can be found in Table A8, Appendix. All tests suggest the results of our models were both reliable and robust. Logistic regression models were run using SAS 9.4 (SAS Institute Inc., Cary, NC).
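The family of parametric models described above can be written compactly. The following is a generic sketch of such a specification, not the authors' final model (the selected forms are reported in Table A7 of the Appendix). The notation is introduced here for illustration only: Y_i is the top-box indicator, S_i the needs score, c the cut-point, D_i the Medicaid indicator, X_i the covariate vector, and p the polynomial order being tested (1 for linear, 2 for quadratic, 3 for cubic).

```latex
\[
\operatorname{logit}\,\Pr(Y_i = 1)
  = \beta_0 + \tau D_i
  + \sum_{k=1}^{p} \beta_k (S_i - c)^k
  + \sum_{k=1}^{p} \delta_k D_i (S_i - c)^k
  + X_i^{\top}\gamma,
\qquad D_i = \mathbf{1}[S_i \ge c], \quad c = 0.18
\]
\[
\mathrm{AIC} = 2K - 2\ln\hat{L}
\]
```

Under this notation, exp(τ) is the odds ratio for Medicaid versus QHP at the cut-point, K is the number of estimated parameters, and L̂ is the maximized likelihood. Setting all δ_k to zero gives the model without score-by-program interactions, and lower AIC values favor a candidate form.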

Nonparametric models were estimated as a complement to parametric models with consistent results giving greater confidence in our findings. We used the standard local linear regression approach (i.e., regressing data points near the cut-off) to estimate our nonparametric models. We ensured a sufficient number of observations were included in the analyses to reduce bias while maintaining precision.11 The appropriate bandwidth around the cut-point was determined using the Imbens-Kalyanaraman method15 specifying a triangular kernel function for all the models. The local average treatment effect (LATE) was reported for the program comparisons. The rdd package in R 3.3.3 was used for these analyses.17 Sensitivity analyses were run using local linear regression models with the RDHonest package in R retaining the same kernel function and bandwidths.18 Locally estimated scatterplot smoothing (LOESS) was superimposed to visualize program effects on outcomes.
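The local linear approach can be illustrated with a short, dependency-free Python sketch. It is not the rdd or RDHonest implementation: the bandwidth is fixed by hand rather than chosen by the Imbens-Kalyanaraman method, the function names are hypothetical, and the data are noise-free toy values constructed with a known gap of −0.10 at the cut-point.

```python
def triangular_weight(distance, bandwidth):
    """Triangular kernel: weight 1 at the cut-point, falling linearly to 0 at the bandwidth."""
    return max(0.0, 1.0 - abs(distance) / bandwidth)


def weighted_intercept(xs, ys, ws):
    """Intercept of the weighted least-squares line y = a + b * x."""
    total = sum(ws)
    mean_x = sum(w * x for w, x in zip(ws, xs)) / total
    mean_y = sum(w * y for w, y in zip(ws, ys)) / total
    sxx = sum(w * (x - mean_x) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - mean_x) * (y - mean_y) for w, x, y in zip(ws, xs, ys))
    return mean_y - (sxy / sxx) * mean_x


def local_linear_jump(scores, outcomes, cutoff, bandwidth):
    """Difference in fitted outcome at the cut-point: Medicaid side minus QHP side."""
    def side_intercept(keep):
        # Keep only points on the requested side and strictly inside the bandwidth
        pts = [(s - cutoff, y) for s, y in zip(scores, outcomes)
               if keep(s) and abs(s - cutoff) < bandwidth]
        xs = [x for x, _ in pts]
        ys = [y for _, y in pts]
        ws = [triangular_weight(x, bandwidth) for x in xs]
        return weighted_intercept(xs, ys, ws)

    below = side_intercept(lambda s: s < cutoff)   # QHP side
    above = side_intercept(lambda s: s >= cutoff)  # Medicaid side
    return above - below


# Toy data (hypothetical): linear trends with a built-in gap of -0.10 at 0.18
CUTOFF = 0.18
scores = [round(0.02 + 0.01 * i, 2) for i in range(60)]
outcomes = [(0.70 if s < CUTOFF else 0.60) + 0.5 * (s - CUTOFF) for s in scores]
jump = local_linear_jump(scores, outcomes, CUTOFF, bandwidth=0.10)
print(round(jump, 3))
```

Because each side is fitted separately and evaluated at a centered score of zero, the estimate depends only on observations within the bandwidth, which is why this approach trades precision for lower sensitivity to functional form. Each side needs at least two distinct scores inside the bandwidth, otherwise the slope is undefined.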

RESULTS

Table 1 reports baseline characteristics of the sample population. Apart from differences in medical need, characteristics of the population, including income, were similar. Most of the cohort were female, approximately 45 years of age, non-Hispanic White, high school graduates, married, and lived outside an urban area.

Table 2 provides results of the RD parametric and non-parametric models. Results are reported as odds ratios and mean probability differences for the parametric models. The program effect at the cut-point was significant for top-box scoring of overall healthcare and personal doctor. Medicaid enrollees at the cut-point were less likely than QHP enrollees to give top-box scores to overall healthcare (OR = 0.71, 95% CI = 0.56–0.90) and to their personal doctor (OR = 0.68, 95% CI = 0.53–0.87). Non-parametric local linear regression models showed similar results to the parametric models. The LATE for Medicaid versus QHP was −0.12 (p = 0.04) for overall healthcare and −0.13 (p = 0.04) for personal doctor. A significant program effect was not seen for the rating of specialty provider or for whether a personal doctor offered electronic communication.
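To show how an odds ratio relates to a difference in probabilities, the sketch below applies the reported odds ratio of 0.71 to a baseline top-box probability. The baseline of 0.60 is a hypothetical value chosen for illustration, not an estimate from the study, and the function name is likewise an assumption.

```python
def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Probability implied by multiplying the baseline odds by an odds ratio."""
    if not 0.0 < baseline_prob < 1.0:
        raise ValueError("baseline_prob must be strictly between 0 and 1")
    odds = baseline_prob / (1.0 - baseline_prob)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)


qhp_prob = 0.60  # hypothetical baseline probability for QHP enrollees
medicaid_prob = apply_odds_ratio(qhp_prob, 0.71)  # OR reported for overall healthcare
difference = medicaid_prob - qhp_prob
print(round(medicaid_prob, 3), round(difference, 3))
```

With this assumed baseline, the implied gap is roughly 8 percentage points. The same odds ratio implies a different gap at other baselines, which is one reason to report probability differences alongside odds ratios.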

Fig. 1 plots the adjusted predicted probability from the parametric model for all health care received (y-axis) against need scores (x-axis). A program effect is suggested by the discontinuity of about 10% in the regression curve at the cut-point, with QHP enrollees more likely than Medicaid enrollees to highly rate health care received.

In Figure 2, the y-axis shows the adjusted predicted probability of highly rating personal doctor. This probability among QHP enrollees is stable across need scores as it approaches the cut-point. In contrast, among Medicaid participants, the probability increases with increasing need scores. There is about a 10% difference between Medicaid and QHP participants in the probability of highly rating personal doctor. Significant results suggest a relatively stable and robust program effect.

Figures 3 and 4 are provided for comparison purposes though results are not significant. In these figures, there is no jump at the threshold. The data are, instead, continuous across needs scores. Despite this, the trend was toward QHP participants being more likely to highly rate specialists (67.9% versus 59.1%).

DISCUSSION

Among newly insured Arkansans, QHP participants were more likely to highly rate personal providers and overall health care compared to Medicaid enrollees. Comparisons with other states are not possible because no other state has looked at differences in experience scores for a Medicaid expansion population, particularly for enrollees in a Medicaid versus a commercial plan. In part, this is because the Arkansas program was unique at the time in using Medicaid monies to purchase commercial insurance in the marketplace. However, a Commonwealth Fund study published in 2017 did find that Medicaid enrollees were as likely as commercially insured enrollees to rate the quality of their healthcare as excellent or very good (57% vs 52%).19 As these data do not focus solely on a Medicaid expansion population, the comparison is not exact. Still, it does suggest that a later assessment of experience scores might yield results different from ours.

Healthcare ratings are an important indicator of perceived quality. While we controlled for demographic and clinical characteristics, factors other than these may play a role in our findings—access to care and utilization. Analyses conducted by the Arkansas Center for Health Improvement (ACHI)12 found that 98% of the expansion population met distance to care standards, but Medicaid enrollees had more difficulty finding and engaging with providers. Nationally, the number of providers accepting Medicaid has remained stable over time despite the influx of new Medicaid enrollees. The already overburdened system made realized access more difficult for Medicaid enrollees.20 This Medicaid provider shortage is, in part, due to low reimbursement rates which, in 2018, were 50% of reimbursement rates for the privately insured in Arkansas.9

Research has also shown that connecting with a provider when needed is essential to both long-term engagement and satisfaction with providers.21 Compared with QHP enrollees, Arkansas Medicaid enrollees indicated they had more difficulty getting care when needed.12 Basseyn and colleagues22 found that among Arkansas primary care practices, QHP providers had higher new patient appointment rates than Medicaid providers, consistent with findings from national studies.23 QHP participants also had consistently better access to and utilization of primary, secondary, and tertiary prevention, care, and treatment vs Medicaid participants.12 Only 8.2% of Medicaid enrollees had accessed outpatient care at 30 days compared to 21.2% of QHP enrollees, and by 90 days, there was a wider difference (29.6% vs 41.8%).12 The connection between getting care when needed, utilization, more generally, and Medicaid reimbursement is highlighted by research showing that increasing Medicaid reimbursement also increased appointment availability.24 Additionally, improving healthcare utilization fosters patient trust and engagement with a provider, increases compliance, and improves health status which all lead to higher patient experience ratings.21,25,26

Taken together, patient experience score differences and patterns of reduced access and utilization are consistent with gaps in both health insurance and general health literacy. Health insurance literacy is the ability to understand key insurance terms, purchase and use insurance27,28 while health literacy is “…the capacity to obtain, process, and understand basic health information and services needed to make appropriate health decisions.”29 Individuals with higher health needs, like our Medicaid cohort, experience both greater health insurance and health illiteracy than those who are relatively healthier.30,31 Furthermore, health-related literacy deficits can drive both delayed care and emergent care32,33 impacting the ability to build interpersonal relationships and effective communication with providers34,35—factors associated with patient satisfaction.35

Our results were not significant for specialty care providers, but Medicaid participants generally report less access to specialty providers.36 Regarding results on electronic communication, literature suggests that patient knowledge and use of healthcare portals is limited and varies as a result of the “digital divide” by age, education, race/ethnicity, and income.37,38,39,40,41,42

LIMITATIONS

Our first limitation focuses on patient ratings generally. The problems associated with capturing patient ratings at a single point in time or as a part of a single construct have been documented.43,44,45,46,47,48 However, the CAHPS survey measuring patient experiences has been well validated.49 More specific to the Arkansas deployment of the CAHPS, our response rate was low at 26.4%, which is less than the 40% response rate that the Agency for Healthcare Research and Quality (AHRQ)50 suggests can be attained, thus creating potential nonresponse bias in our results. However, survey response rates in the USA generally51 and CAHPS response rates more specifically have declined over time.52 The 2014–2015 national Medicaid CAHPS had a response rate of 23.6% with data estimates still considered to be valid.4

Second, weighting CAHPS responses might have addressed survey non-response51; however, weights for the Arkansas CAHPS were developed without consideration of sample strata. Given this, we elected to use unweighted data in our analyses which may limit the generalizability of our conclusions. Third, dichotomizing our outcomes, while consistent with established methods, does obscure full variation in the ratings. Fourth, the study took place relatively soon after expansion began so enrollee knowledge might have changed over time. A second CAHPS was fielded in 2016. Future research will examine differences between the 2014 and 2016 surveys.

Fifth, we excluded participants from our final sampling frame primarily because of dual Medicare-Medicaid eligibility, inaccurate address information, or non-continuous enrollment. While these exclusions might have introduced some bias, we do not believe they substantively affected our results. Sixth, these data represent the experience of a single state and there may be difficulty generalizing our findings to other states since Arkansas was the first state to use the premium assistance mechanism.10 Seventh, while our models controlled for numerous patient factors, a key element, utilization, was not included as a predictor. Future research will examine the impact of utilization on experience scores. Finally, as we included in our analyses only those who had indicated they had a visit with either a primary or specialist provider, those who might have had the greatest difficulty finding providers were excluded.

CONCLUSION

Patient experience is an important policy-relevant topic relative to Medicaid expansion. We found that Medicaid enrollees were significantly less likely to highly rate their healthcare and personal provider compared to QHP enrollees. Understanding the factors driving experience scores is critical to improving them. Multiple pathways were suggested for our results including access, utilization, and health-related literacy. First, increasing health insurance and health literacy is crucial for establishing a regular source of care, using healthcare appropriately, increasing trust in providers, and effectively communicating with providers. Ultimately, improvements in these areas will positively impact patient experiences. There are no plans within Arkansas government to implement health insurance or health literacy programs for the Medicaid expansion population. However, individual providers and practices may act on their own to implement practice-based screening for health literacy and multiple instruments are available for that purpose.53,54 Tailoring oral and written materials using language at or below the sixth grade level while incorporating visual aids when possible can improve two-way communication between patients and providers.55 Other non-literacy interventions have been suggested for practices including frequent and respectful communication, decreased appointment wait times, seeing patients at appointment times, and implementing a culture of service.55,56 Finally, programs like Ask Me 3® are available. Their purpose is ensuring patients maximize medical visits by asking three questions: what is my main health problem, what do I need to do, and why is this action important.57 These interventions improve patient engagement and, consequently, patient satisfaction which is critical in an increasingly market-driven world where physician reimbursement is driven in part by patient experiences.

References

U.S. Congress. Patient Protection and Affordable Care Act. In. H.R. 3590, Public Law 111-148. Washington DC: 111th Congress; 2010.

U.S. Department of Health and Human Services, Agency for Healthcare Research and Quality. CAHPS Clinician and Group Survey Measures, Version 3.0. Agency for Healthcare Research and Quality. https://www.ahrq.gov/cahps/surveys-guidance/cg/about/survey-measures.html. Published 2017. Updated August 2017. Accessed October 18, 2017.

Anhang Price R, Elliott MN, Cleary PD, Zaslavsky AM, Hays RD. Should health care providers be accountable for patients’ care experiences? Journal of General Internal Medicine. 2015;30(2):253-256.

Barnett ML, Sommers BD. A national survey of medicaid beneficiaries’ experiences and satisfaction with health care. JAMA internal medicine. 2017;177(9):1378-1381.

Onstad K, Khan A, Hart A, Mierzejewski R, Xia F; National Committee for Quality Assurance. Benchmarks for Medicaid Adult Health Care Quality Measures. Baltimore MD; 2014. Reference Number: 40248.

McWilliams JM, Landon BE, Chernew ME, Zaslavsky AM. Changes in patients’ experiences in Medicare accountable care organizations. The New England journal of medicine. 2014;371(18):1715-1724.

An Act Concerning Health Insurance for Citizens of the State of Arkansas; to Create the Health Care Independence Act of 2013. In. Title 20, Chapter 77. 89th General Assembly ed 2013.

Hinton E, Musumeci MB, Rudowitz R, Antonisse L, Hall C. Section 1115 Medicaid Demonstration Waivers: The Current Landscape of Approved and Pending Waivers. Kaiser Family Foundation; December 2017.

Arkansas Center for Health Improvement. Arkansas Health Care Independence Program ("Private Option") Section 1115 Demonstration Waiver Final Report. Little Rock AR; June 2018.

Goudie A, Martin B, Li C, et al. Higher rates of preventive health care with commercial insurance compared with Medicaid: findings from the Arkansas Health Care Independence "Private Option" Program. Medical care. 2020;58(2):120-127.

Jacob R, Zhu P, Somers M-A, Bloom H. A Practical Guide to Regression Discontinuity. MDRC; July 2012.

Arkansas Center for Health Improvement (ACHI). Arkansas Health Care Independence Program ("Private Option"). Section 1115 Demonstration Waiver Interim Report. Little Rock AR: ACHI; March 30, 2016.

Aligning Forces for Quality RWJF. How to Report Results of the CAHPS Clinician & Group Survey. Agency for Healthcare Research and Quality website 2008.

United States Department of Agriculture, Economic Research Services. Rural-Urban Commuting Area Codes. https://www.ers.usda.gov/data-products/rural-urban-commuting-area-codes.aspx. Published 2016. Accessed 1/12/2016.

Imbens GW, Lemieux T. Regression discontinuity designs: a guide to practice. Journal of Econometrics. 2008;142(2):615-635.

McCrary J. Manipulation of the running variable in the regression discontinuity design: a density test. Journal of Econometrics. 2008;142(2):698-714.

Package 'rdd'. R package version 0.57 [computer program]. 2016.

RDHonest: Honest inference in RD [computer program]. 2017.

Gunja M, Collins SR, Blumenthal D, Doty MM, Beutel S. How Medicaid enrollees fare compared with privately insured and uninsured adults. findings from the Commonwealth Fund Biennial Health Insurance Survey, 2016. The Commonwealth Fund; April 2017.

French MT, Homer J, Gumus G, Hickling L. Key provisions of the patient protection and Affordable Care Act (ACA): a systematic review and presentation of early research findings. Health Serv Res. 2016;51(5):1735-1771.

Martin LT, Luoto JE. From coverage to care: strengthening and facilitating consumer connections to the health system. Rand Health Q. 2015;5(2):1.

Basseyn S, Saloner B, Kenney GM, Wissoker D, Polsky D, Rhodes KV. Primary care appointment availability for Medicaid patients: comparing traditional and premium assistance plans. Medical care. 2016;54(9):878-883.

Holahan J, Karpman M, Zuckerman S. Health Care Access and Affordability among Low- and Moderate-Income Insured and Uninsured Adults under the Affordable Care Act. Washington DC: The Urban Institute, Health Policy Center; April 2016.

Polsky D, Richards M, Basseyn S, et al. Appointment availability after increases in Medicaid payments for primary care. The New England journal of medicine. 2015;372(6):537-545.

Fenton JJ, Jerant AF, Bertakis KD, Franks P. The cost of satisfaction: a national study of patient satisfaction, health care utilization, expenditures, and mortality. Archives of Internal Medicine. 2012;172(5):405-411.

Spooner KK, Salemi JL, Salihu HM, Zoorob RJ. Disparities in perceived patient-provider communication quality in the United States: trends and correlates. Patient education and counseling. 2016;99(5):844-854.

Long SK, Goin D. Large Racial and Ethnic Differences in Health Insurance Literacy Signal Need for Targeted Education and Outreach. The Commonwealth Fund; February 6 2014.

Quincy L. Measuring Health Insurance Literacy: A Call to Action A Report from the Health Insurance Literacy Expert Roundtable. Washington DC: Consumers Union;2012.

Institute of Medicine. Health Literacy: A Prescription to End Confusion. Washington, DC: The National Academies Press; 2004.

Tipirneni R, Politi MC, Kullgren JT, Kieffer EC, Goold SD, Scherer AM. Association Between Health Insurance Literacy and Avoidance of Health Care Services Owing to Cost. JAMA network open. 2018;1(7):e184796.

MacLeod S, Musich S, Gulyas S, et al. The impact of inadequate health literacy on patient satisfaction, healthcare utilization, and expenditures among older adults. Geriatric nursing (New York, NY). 2017;38(4):334-341.

Brown V, Russell M, Ginter A, et al. Smart Choice Health Insurance©: a new, interdisciplinary program to enhance health insurance literacy. Health Promot Pract. 2016;17(2):209-216.

Kim J, Braun B, Williams AD. Understanding health insurance literacy: a literature review. Family and Consumer Sciences Research Journal. 2013;42(1):3-13.

Schillinger D, Bindman A, Wang F, Stewart A, Piette J. Functional health literacy and the quality of physician–patient communication among diabetes patients. Patient education and counseling. 2004;52(3):315-323.

Riklin E, Talaei-Khoei M, Merker VL, et al. First report of factors associated with satisfaction in patients with neurofibromatosis. Am J Med Genet A. 2017;173(3):671-677.

Ndumele CD, Cohen MS, Cleary PD. Association of state access standards with accessibility to specialists for Medicaid managed care enrollees. JAMA internal medicine. 2017;177(10):1445-1451.

Woods SS, Forsberg CW, Schwartz EC, et al. The association of patient factors, digital access, and online behavior on sustained patient portal use: a prospective cohort of enrolled users. Journal of medical Internet research. 2017;19(10):e345.

Kontos E, Blake KD, Chou WY, Prestin A. Predictors of eHealth usage: insights on the digital divide from the Health Information National Trends Survey 2012. Journal of medical Internet research. 2014;16(7):e172.

Goel MS, Brown TL, Williams A, Hasnain-Wynia R, Thompson JA, Baker DW. Disparities in enrollment and use of an electronic patient portal. J Gen Intern Med. 2011;26(10):1112-1116.

Roblin DW, Houston TK, 2nd, Allison JJ, Joski PJ, Becker ER. Disparities in use of a personal health record in a managed care organization. Journal of the American Medical Informatics Association : JAMIA. 2009;16(5):683-689.

Garrido T, Kanter M, Meng D, et al. Race/ethnicity, personal health record access, and quality of care. The American journal of managed care. 2015;21(2):e103-113.

Dalrymple PW, Rogers M, Zach L, Luberti A. Understanding Internet access and use to facilitate patient portal adoption. Health Informatics Journal. 2018;24(4):368-378.

Anhang Price R, Elliott MN, Zaslavsky AM, et al. Examining the role of patient experience surveys in measuring health care quality. Med Care Res Rev. 2014;71(5):522-554.

Kane RL, Maciejewski M, Finch M. The relationship of patient satisfaction with care and clinical outcomes. Medical care. 1997;35(7):714-730.

Manary MP, Boulding W, Staelin R, Glickman SW. The patient experience and health outcomes. The New England journal of medicine. 2013;368(3):201-203.

Sequist TD, Schneider EC, Anastario M, et al. Quality monitoring of physicians: linking patients’ experiences of care to clinical quality and outcomes. J Gen Intern Med. 2008;23(11):1784-1790.

Gill L, White L. A critical review of patient satisfaction. Leadership in Health Services. 2009;22(1):8-19.

Wolf JA. Patient experience: the new heart of healthcare leadership. Frontiers of Health Services Management. 2017;33(3):3-16.

Cleary PD. Evolving concepts of patient-centered care and the assessment of patient care experiences: optimism and opposition. Journal of health politics, policy and law. 2016;41(4):675-696.

Agency for Healthcare Research and Quality, US Department of Health and Human Services. Fielding the CAHPS Clinician & Group Survey. Washington DC: AHRQ;2017.

Groves R, Fowler F, Couper M, Lepkowski J, Singer E, Tourangeau R. Survey Methodology. second ed. Hoboken NJ: John Wiley and Sons, Inc.; 2009.

Tesler R, Sorra J. CAHPS Survey Administration: What We Know and Potential Research Questions. Rockville, MD: Agency for Healthcare Research and Quality; October 2017. AHRQ Publication No. 18-0002-EF.

Hersh L, Salzman B, Snyderman D. Health literacy in primary care practice. Am Fam Physician. 2015;92(2):118-124.

Copeland LA, Zeber JE, Thibodeaux LV, McIntyre RT, Stock EM, Hochhalter AK. Postdischarge correlates of health literacy among Medicaid inpatients. Population health management. 2018;21(6):493-500.

Sonis JD, Rogg J, Yun B, Raja AS, White BA. Improving the patient experience: ten high yield interventions. American Academy of Emergency Medicine News. 2016; March/April.

Agency for Healthcare Research and Quality. Improving Customer Service at Health Share of Oregon. Washington DC 2016. 16-CAHPS003-EF.

Institute for Healthcare Improvement. Ask Me 3®. Institute for Healthcare Improvement. http://www.ihi.org/resources/Pages/Tools/Ask-Me-3-Good-Questions-for-Your-Good-Health.aspx. Published 2019. Accessed November 11, 2020.

Funding

Funding for this work was provided by the Arkansas Department of Human Services and the Centers for Medicare and Medicaid Services.

Ethics declarations

This study was reviewed by the UAMS Institutional Review Board (protocol: 205496) and determined not to be human subjects research as defined in 45 CFR 46.102.

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Cite this article

Bollinger, M., Pyne, J., Goudie, A. et al. Enrollee Experience with Providers in the Arkansas Medicaid Expansion Program. J GEN INTERN MED 36, 1673–1681 (2021). https://doi.org/10.1007/s11606-020-06552-0
