Abstract

We demonstrate that the Michaelis–Menten reaction mechanism can be accurately approximated by a linear system when the initial substrate concentration is low. This leads to pseudo-first-order kinetics, simplifying mathematical calculations and experimental analysis. Our proof utilizes a monotonicity property of the system and Kamke’s comparison theorem. This linear approximation yields a closed-form solution, enabling accurate modeling and estimation of reaction rate constants even without timescale separation. Building on prior work, we establish that the sufficient condition for the validity of this approximation is \(s_0 \ll K\), where \(K=k_2/k_1\) is the Van Slyke–Cullen constant. This condition is independent of the initial enzyme concentration. Further, we investigate timescale separation within the linear system, identifying necessary and sufficient conditions and deriving the corresponding reduced one-dimensional equations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and overview of results

The irreversible Michaelis–Menten reaction mechanism describes the mechanism of action of a fundamental reaction for biochemistry. Its governing equations are:

with positive parameters \(e_0\), \(s_0\), \(k_1\), \(k_{-1}\), \(k_2\), and typical initial conditions \(s(0)=s_0\), \(c(0)=0\). Understanding the behavior of this system continues to be crucial for both theoretical and experimental purposes, such as identifying the rate constants \(k_1\), \(k_{-1}\) and \(k_2\). However, the Michaelis–Menten system (2) cannot be solved via elementary functions, hence approximate simplifications have been proposed since the early 1900’s.

In 1913, Michaelis and Menten (1913) introduced the partial equilibrium assumption for enzyme-substrate complex formation, effectively requiring a small value for the catalytic rate constant \(k_2\). Later, for systems with low initial enzyme concentration (\(e_0\)), Briggs and Haldane (1925) derived the now-standard quasi-steady-state reduction (see Segel and Slemrod (1989) for a systematic analysis). Both these approaches yield an asymptotic reduction to a one-dimensional equation. A mathematical justification is obtained from singular perturbation theory. Indeed, most literature on the Michaelis–Menten system focuses on dimensionality reduction, with extensive discussions on parameter combinations ensuring this outcome.

We consider a different scenario: low initial substrate concentration (\(s_0\)). This presents a distinct case from both the low-enzyme and partial-equilibrium scenarios, which yield one-dimensional reductions in appropriate parameter ranges. Instead, with low substrate, we obtain a different simplification:

This linear Michaelis–Menten system (2) was first studied by Kasserra and Laidler (1970), who proposed the condition of excess initial enzyme concentration (\(e_0 \gg s_0\)) for the validity of the linearization of (1) to (2). This aligns with the concept of pseudo-first-order kinetics in chemistry, where the concentration of one reactant is so abundant that it remains essentially constant (Silicio and Peterson 1961).

Pettersson (1978) further investigated the linearization, adding the assumption that any complex concentration accumulated during the transient period remains too small to significantly impact enzyme or product concentrations. Later, Schnell and Mendoza (2004) refined the validity condition \(s_0 \ll K_M\) (where \(K_M = (k_{-1}+k_2)/k_1\) is the Michaelis constant) to be sufficient for the linearization (alias dictus the application of pseudo-first-order kinetics to the Michaelis–Menten reaction). However, Pedersen and Bersani (2010) found this condition overly conservative. They introduced the total substrate concentration \({\bar{s}}=s+c\) (used in the total quasi-steady state approximation (Borghans et al. 1996)) and proposed the condition \(s_0 \ll K_M + e_0\), reconciling the Schnell–Mendoza and Kasserra–Laidler conditions. Derived under the standard quasi-steady-state assumption, the one-dimensional Michaelis–Menten equation can be linearized, offering a useful method for parameter estimation at \(s_0 \ll K_M\); see Keleti (1982).

In this paper, we demonstrate that the solutions of the Michaelis–Menten system (1) admit a global approximation by solutions of the linear differential equation (2) whenever the intial substrate concentration is small. Our proof utilizes Kamke’s comparison theorem for cooperative differential equations.Footnote 1 This theorem allows us to establish upper and lower estimates for the solution components of (1) via suitable linear systems. Importantly, these bounds are valid whenever \(s_0<K\), where \(K=k_2/k_1\) is the Van Slyke–Cullen constant, and the estimates are tight whenever \(s_0\ll K\). This effectively serves as sufficient condition for the validity of the linear approximation Michaelis–Menten system (2). We further prove that solutions of the original Michaelis–Menten system (1) converge to solutions of the linear system (2) as \(s_0\rightarrow 0\), uniformly for all positive times. Moreover, the asymptotic rate of convergence is of order \(s_0^2\).

Additionally, we explore the problem of timescale separation within the linear Michaelis–Menten system (2). While a linear approximation simplifies the system, it does not guarantee a clear separation of timescales. In fact, there are cases where no universal accurate one-dimensional approximation exists. Since both eigenvalues of the matrix in (2) are real and negative, the slower timescale will dominate the solution’s behavior. True timescale separation occurs if and only if these eigenvalues differ significantly in magnitude. Importantly, we find that this separation is not always guaranteed. There exist parameter combinations where a one-dimensional approximation would lack sufficient accuracy. Building upon results from (Eilertsen et al. 2022), we establish necessary and sufficient conditions for timescale separation and derive the corresponding reduced one-dimensional equations.

2 Low Substrate: Approximation by a Linear System

To set the stage, we recall some facts from the theory of cooperative differential equation systems. For a comprehensive account of the theory, we refer to the H.L. Smith’s monograph (Smith 1995, Chapter 3) on monotone dynamical systems. Consider the standard ordering, here denoted by \(\prec \), on \({\mathbb {R}}^n\), given by

Now let a differential equation

be given, with f continuously differentiable. We denote by F(t, y) the solution of the initial value problem (3) with \(x(0)=y\), and call F the local flow of the system.

Given a convex subset \(D\subseteq U\), we say that (3) is cooperative on D if all non-diagonal elements of the Jacobian are nonnegative; symbolically

(Matrices of this type are also known as Metzler matrices.)

As shown by Hirsch (1982, and subsequent papers) and other researchers (see (Smith 1995, and the references therein)), cooperative systems have special qualitative features. Qualitative properties of certain monotone chemical reaction networks were investigated by De Leenheer et al. (2007). In the present paper, we consider a different feature of such systems. Our interest lies in the following simplified version of Kamke’s comparison theorem (Kamke 1932).Footnote 2

Proposition 1

Let \(D\subseteq U\) be a convex positively invariant set for \(\dot{x}=f(x)\) given in (3), with local flow F, and assume that this system is cooperative on D. Moreover let \(\dot{x}=g(x)\) be defined on U, with continuously differentiable right hand side, and local flow G.

-

If \(f(x)\prec g(x)\) for all \(x\in D\), and \(y\prec z\), then \(F(t,y)\prec G(t,z)\) for all \(t\ge 0\) such that both solutions exist.

-

If \(g(x)\prec f(x)\) for all \(x\in D\), and \(z\prec y\), then \(G(t,z)\prec F(t,y)\) for all \(t\ge 0\) such that both solutions exist.

2.1 Application to the Michaelis–Menten Systems (1) and (2)

For the following discussions, we recall the relevant derived parameters

where \(K_S\) is the complex equilibrium constant, \(K_M\) is the Michaelis constant, and K is the van Slyke-Cullen constant.

The proof of the following facts is obvious.

Lemma 1

-

(a)

The Jacobian of system (1) is equal to

$$\begin{aligned} \left( \begin{array}{cc} *&{} k_1s+k_{-1}\\ k_1(e_0-c) &{}*\end{array}. \right) \end{aligned}$$The off-diagonal entries are \(\ge 0\) in the physically relevant subset

$$\begin{aligned} D=\left\{ (s,c);\, s\ge 0,\, e_0\ge c\ge 0,\, s+c\le s_0\right\} \end{aligned}$$of the phase space, and D is positively invariant and convex. Thus system (1) is cooperative in D.

-

(b)

In D, one has

$$\begin{aligned} \begin{array}{rcccl} f_1(s,c)&{}\ge &{}g_1(s,c)&{}:=&{}-k_1e_0s+k_{-1}c\\ f_2(s,c)&{}\ge &{}g_2(s,c)&{}:=&{}k_1e_0s-(k_1s_0+k_{-1}+k_2)c, \end{array} \end{aligned}$$hence by Kamke’s comparison theorem the solution of the linear system with matrix

$$\begin{aligned} G:=\begin{pmatrix} -k_1e_0 &{} k_{-1}\\ k_1e_0&{} -(k_1s_0+k_{-1}+k_2) \end{pmatrix} = k_1\begin{pmatrix} -e_0 &{} K_S\\ e_0&{} -K_M(1+s_0/K_M) \end{pmatrix} \end{aligned}$$and initial value in D provides component-wise lower estimates for the solution of (1) with the same initial value.

-

(c)

In D, one has

$$\begin{aligned} \begin{array}{rcccl} f_1(s,c)&{}\le &{}h_1(s,c)&{}:=&{}-k_1e_0s+(k_1s_0+k_{-1})c\\ f_2(s,c)&{}\le &{}h_2(s,c)&{}:=&{}k_1e_0s-(k_{-1}+k_2)c, \end{array} \end{aligned}$$hence by Kamke’s comparison theorem the solution of the linear system with matrix

$$\begin{aligned} H:=\begin{pmatrix} -k_1e_0 &{} k_1s_0+k_{-1}\\ k_1e_0&{} -(k_{-1}+k_2) \end{pmatrix}=k_1\begin{pmatrix} -e_0 &{} K_S(1+s_0/K_S)\\ e_0&{} -K_M \end{pmatrix} \end{aligned}$$and initial value in D provides component-wise upper estimates for the solution of (1) with the same initial value.

Remark 1

The upper estimates become useless in the case \(k_1s_0> k_2\), because then one eigenvalue of H becomes positive. For viable upper and lower bounds one requires that \(s_0< K=k_2/k_1\).

The right hand sides of both linear comparison systems from parts (b) and (c) of the Lemma converge to

as \(s_0\rightarrow 0\). We will use this observation to show that a solution of (1) with initial value in D converges to the solution of (2) with the same initial value on the time interval \([0,\,\infty )\), as \(s_0\rightarrow 0\).

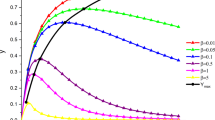

Illustration of Proposition 2. The solution to the Michaelis–Menten system (1) converges to the solution of the linear Michaelis–Menten system (2) as \(s_0\rightarrow 0\). In all panels, the solid black curve is the numerical solution to the Michaelis–Menten system (1). The thick yellow curve is the numerical solution to linear Michaelis–Menten system (2). The dashed/dotted curve is the numerical solution to the linear system defined by matrix G. The dotted curve is the numerical solution to the linear system defined by matrix H. All numerical simulations where carried out with the following parameters (in arbitrary units): \(k_1=k_2=k_{-1}=e_0=1\). In all panels, the solutions have been numerically-integrated over the domain \(t\in [0,T],\) where T is selected to be long enough to ensure that the long-time dynamics are sufficiently captured. For illustrative purposes, the horizontal axis (in all four panels) has been scaled by T so that the scaled time, t/T, assumes values in the unit interval: \(\frac{t}{T}\in [0,1]\). Top Left: The numerically-obtained time course of s with \(s_0=0.5\) and \(c(0)=0.0\). Top Right: The numerically-obtained time course of c with \(s_0=0.5\) and \(c(0)=0.0\). Bottom Left: The numerically-obtained time course of s with \(s_0=0.1\) and \(c(0)=0.0\). Bottom Right: The numerically-obtained time course of c with \(s_0=0.1\) and \(c(0)=0.0\). Observe that the solution components of (2) become increasingly accurate approximations to the solution components of (1) as \(s_0\) decreases (Color figure online)

2.2 Comparison Michaelis–Menten Systems (1) and (2)

Both G and H, as well as Df(0), are of the type

with all entries \(>0\), and \(\gamma >\beta \) (to ensure usable estimates). The trace equals \(-(\alpha +\gamma )\), the determinant equals \(\alpha (\gamma -\beta )\), and the discriminant is

The eigenvalues are

with eigenvectors

The following is now obtained in a straightforward (if slightly tedious) manner. We specialize the initial value to the typical case, with no complex present at \(t=0\).

Lemma 2

The solution of the linear differential equation with matrix (6) and initial value \(\begin{pmatrix} s_0\\ 0 \end{pmatrix}\) is equal to

This enables us to write down explicitly the solution of (2), as well as upper and lower estimates, in terms of familiar constants. We use Lemma 2 with \(\alpha =k_1e_0\), \(\beta =k_1K_S\) and \(\gamma =k_1K_M\), so \(\Delta =k_1^2\left( (K_M-e_0)^2+4K_Se_0\right) \), to obtain

as the solution of (2), after some simplifications.

Proposition 2

To summarize:

-

(a)

The solution \(\begin{pmatrix} s^*\\ c^* \end{pmatrix}\) of the linear differential equation (2) with initial value \(\begin{pmatrix} s_0\\ 0 \end{pmatrix}\) is given by (7).

-

(b)

The solution \(\begin{pmatrix} s_\textrm{low}\\ c_\textrm{low} \end{pmatrix}\) of the linear differential equation with matrix G and initial value \(\begin{pmatrix} s_0\\ 0 \end{pmatrix}\) is obtained from replacing \(K_M\) by \(K_M+s_0\) in (7).

-

(c)

The solution \(\begin{pmatrix} s_\textrm{up}\\ c_\textrm{up} \end{pmatrix}\) of the linear differential equation with matrix H and initial value \(\begin{pmatrix} s_0\\ 0 \end{pmatrix}\) is obtained from replacing \(K_S\) by \(K_S+s_0\) in (7).

-

(d)

Given that \(s_0<K\), for all \(t\ge 0\) one has the inequalities

$$\begin{aligned} \begin{array}{cc} s_\textrm{up} \ge s\ge s_\textrm{low}, &{} \quad s_\textrm{up} \ge s^*\ge s_\textrm{low}, \\ c_\textrm{up} \ge c\ge c_\textrm{low}, &{} \quad c_\textrm{up} \ge c^*\ge c_\textrm{low}; \\ \end{array} \end{aligned}$$(8)where \(\begin{pmatrix} s\\ c \end{pmatrix}\) denotes the solution of (1) with initial value \(\begin{pmatrix} s_0\\ 0 \end{pmatrix}\).

-

(e)

Let \({\mathcal {M}}\) be a compact subset of the open positive orthant \({\mathbb {R}}^4_{>0}\), abbreviate \(\pi :=(e_0, k_1, k_{-1}, k_2)^\textrm{tr}\) and \(K^*:=\min \{k_2/k_1;\, \pi \in \mathcal {M}\}\). Then, there exists a dimensional constant C (with dimension concentration\(^{-1}\)), depending only on \(\mathcal {M}\), such that for all \(\pi \in {{\mathcal {M}}}\) and for all \(s_0\) with \(0<s_0\le K^*/2\) the estimates

$$\begin{aligned} \begin{array}{rcl} \left| \dfrac{s-s^*}{s_0} \right| &{} \le &{} C\cdot s_0\\ \left| \dfrac{c-c^*}{s_0} \right| &{} \le &{} C\cdot s_0\\ \end{array} \end{aligned}$$hold for all \(t\in [0,\infty )\). Thus, informally speaking, the approximation errors of s by \(s^*\), and of c by \(c^*\), are of order \(s_0^2\).

Proof

The first three items follow by straightforward calculations. As for part (d), the first column of (8) is just a restatement of Lemma 1, while the second follows from the observation that (mutatis mutandis) the right-hand side of (2) also obeys the estimates in parts (b) and (c) of this Lemma.

There remains part (e). In view of part (d) it suffices to show that

for some constant and, in turn, it suffices to show such estimates for both \(s_\textrm{up}-s^*\), \(s^*-s_\textrm{low}\), and \(c_\textrm{up}-c^*\), \(c^*-c_\textrm{low}\). We will outline the relevant steps, pars pro toto, for the upper estimates.

By (7) and part (c), one may write

with the \(B_i\) and \(A_i\) continuously differentiable in a neighborhood of \([0,K^*/2]\times \mathcal {M}\times [0,\infty )\). Moreover, one sees

It suffices to show that (with the maximum norm \(\Vert \cdot \Vert \))

in \(\widetilde{{\mathcal {M}}}:=[0,K^*/2]\times \mathcal {M}\times [0,\infty )\), for \(i=1,\,2\).

From compactness of \([0,K^*/2]\times {{\mathcal {M}}}\), continuous differentiability and the explicit form of \(A_i\) and \(B_i\) one obtains estimates, with the parameters and variables in \(\widetilde{{\mathcal {M}}}\):

-

There is \(A_*>0\) so that \(|A_i(s_0,\pi )|\ge A_*\).

-

There is \(B^*>0\) so that \(\Vert B_i(s_0, \pi )\Vert \le B^*\).

-

There are continuous \({\widetilde{B}}_i\) so that \(B_i(s_0,\pi )-B_i(0,\pi )=s_0\cdot {\widetilde{B}}_i(s_0,\pi )\) (Taylor), hence there is \(B^{**}>0\) so that \(\Vert B_i(s_0,\pi )-B_i(0,\pi )\Vert \le s_0\cdot B^{**}\).

-

By the mean value theorem there exists \(\sigma \) between 0 and \(s_0\) so that

$$\begin{aligned} \exp \left( -A_i(s_0, \pi )t\right) -\exp \left( -A_i(0, \pi )t\right) = -t\cdot \dfrac{\partial A_i}{\partial s_0}\,(\sigma ,\pi )\cdot \exp \left( -A_i(\sigma , \pi )t\right) \,s_0. \end{aligned}$$So, with the constant \(A^{**}\) satisfying \(A^{**}\ge |\dfrac{\partial A_i}{\partial s_0}|\) for all arguments in \(\widetilde{\mathcal {M}}\) one gets

$$\begin{aligned} |\exp \left( -A_i(s_0, \pi )t\right) -\exp \left( -A_i(0,\pi )t\right) |{} & {} \le A^{**}\cdot t\exp \left( -A_i(\sigma , \pi )t\right) \, s_0\\{} & {} \le A^{**}\cdot t\exp \left( -A_*t\right) \le \dfrac{A^{**}}{A_*}s_0. \end{aligned}$$

So

and this proves the assertion. \(\square \)

For illustrative purposes, a numerical example is given in Fig. 1.

Remark 2

Our result should not be mistaken for the familiar fact that in the vicinity of the stationary point 0 the Michaelis–Menten system (1) is (smoothly) equivalent to its linearization (2); see, e.g. (Sternberg 1958, Theorem 2). The point of the Proposition is that the estimate, and the convergence statement, hold globally.

Remark 3

Some readers may prefer a dimensionless parameter (and symbols like \(\ll \)) to gauge the accuracy of the approximation. In view of Lemma1 and equation (5) it seems natural to choose \(\varepsilon :=s_0/K_S<s_0/K_M\), which estimates the convergence of G and H to Df(0), and set up the criterion \(s_0/K_S\ll 1\). But still the limit \(s_0\rightarrow 0\) has to be considered.

Remark 4

Since explicit expressions are obtainable from (7) for the \(A_i\) and \(B_i\) in the proof of part (e), one could refine it to obtain quantitative estimates. This will not be pursued further here.

2.3 Timescales for the Linear Michaelis–Menten System (2)

In contrast to familiar reduction scenarios for the Michaelis–Menten system (1), the linearization (2) for the low initial substrate case does not automatically imply a separation of timescales. Timescale separation depends on further conditions that we discuss next.

Recall the constants introduced in (4) and note

In this notation, the eigenvalues of the matrix in the linearized system (2) are

This matrix is the Jacobian at the stationary point 0, thus it is possible to use the results from earlier work (Eilertsen et al. 2022).

Remark 5

Since timescales are inverse absolute eigenvalues, by ( Eilertsen et al. 2022, subsection 3.3), (9) shows:

-

(a)

The timescales are about equal whenever

$$\begin{aligned} 4Ke_0\approx (K_M+e_0)^2, \end{aligned}$$which is the case, notably, when \(K_S\approx 0\) (so \(K_M\approx K\)) and \(K_M\approx e_0\).

-

(b)

On the other hand, one sees from (9) that a significant timescale separation exists whenever (loosely speaking, employing a widely used symbol)

$$\begin{aligned} \dfrac{4Ke_0}{(K_M+e_0)^2}\ll 1. \end{aligned}$$(10)Notably this is the case when \(e_0/K_M\) is small, or when \(K/K_M\) is small.

-

(c)

Moreover, condition (10) is satisfied in a large part of parameter space (in a well-defined sense) as shown in (Eilertsen et al. 2022, subsection 3.3, in sparticular Figure 2).

Proposition 3

We take a closer look at the scenario with significant timescale separation, stating the results in a somewhat loose language.

-

(a)

Given significant timescale separation as in (10), the slow eigenvalue is approximated by

$$\begin{aligned} \lambda _1\approx -k_1\cdot \dfrac{Ke_0}{K_M+e_0}=-\dfrac{k_2e_0}{K_M+e_0}, \end{aligned}$$(11)and the reduced equation is

$$\begin{aligned} \dfrac{d}{dt}\begin{pmatrix} s\\ c \end{pmatrix} =-\dfrac{k_2e_0}{K_M+e_0}\begin{pmatrix} s\\ c \end{pmatrix}. \end{aligned}$$(12) -

(b)

Given significant timescale separation as in (10), the eigenspace for the slow eigenvalue is asymptotic to the subspace spanned by

$$\begin{aligned} {\widehat{v}}_1:=\begin{pmatrix} K_M-\dfrac{Ke_0}{K_M+e_0}\\ e_0 \end{pmatrix}. \end{aligned}$$(13)

Proof

Given (10) one has

by Taylor approximation, from which part (a) follows.

Moreover, according to (7), the direction of the slow eigenspace is given by

By the same token, we have the approximation

which shows part (b). \(\square \)

Remark 6

One should perhaps emphasize that Proposition 3 indeed describes a singular perturbation reduction when \(\lambda _1\rightarrow 0\). This can be verified from the general reduction formula in Goeke and Walcher (2014) (note that the Michaelis–Menten system (2) is not in standard form with separated slow and fast variables), with elementary computations.

Finally we note that Proposition 3 is applicable to the discussion of the linearized total quasi-steady-state approximation. Details are discussed in Eilertsen et al. (2023).

3 Conclusion

Our comprehensive analysis of the Michaelis–Menten system under low initial substrate concentration addresses an important gap in the literature. We obtain the sufficientFootnote 3 condition, \(s_0 \ll K\) for the global approximation of the Michaelis–Menten system (1) by the linear Michaelis–Menten system (2). This simplification, known as the pseudo-first-order approximation, is widely used in transient kinetics experiments to measure enzymatic reaction rates. Moreover, given the biochemical significance of this reaction mechanism and the renewed interest in the application of the total quasi-steady state approximation in pharmacokinetics experiments under low initial substrate concentration (Back et al. 2020; Vu et al. 2023), the linearization analysis holds clear practical relevance. The analytical expression derived from the linearization also provides a direct method to estimate kinetic parameters, circumventing the complexities of numerical optimization. These numerical procedures, which involve solving nonlinear—frequently stiff—ordinary differential equations and fitting the solutions to experimental data, can lead to inaccurate parameter estimates if the algorithm becomes trapped in a local minimum—a risk that persists despite the availability of significant computing power (Ramsay et al. 2007; Liang and Wu 2008). Our work also illustrates the advantage of mathematical theory in the discussion and analysis of parameter-dependent differential equations: The fact that initial enzyme is not relevant for the low initial substrate scenario could not be shown by numerical simulation.

From a mathematical perspective, it is intriguing to observe a simplification that arises independently of timescale separation or invariant manifolds. The natural next step is to explore this approach with other familiar enzyme reaction mechanism (e.g., the reversible Michaelis–Menten reaction, and those involving competitive and uncompetitive inhibition, or cooperativity [see, for example, Keener and Sneyd (2009)]). However, a direct application of Kamke’s comparison theorem is not always feasible. Enzyme catalyzed reaction mechanism with competitive or uncompetitive inhibition do not yield cooperative differential equations, and standard cooperative networks do so only within specific parameter ranges. As to the reversible Michaelis-Menten system, with rate constant \(k_{-2}\) for the reaction of product and enzyme to complex, one has a cooperative system whenever \(k_1\ge k_{-2}\). Investigating these systems under low initial substrate conditions will require the refinement of existing or the development of further mathematical tools.

Data availibility

We do not analyse or generate any datasets, because our work proceeds within a theoretical and mathematical approach.

Notes

This concept is distinct from cooperativity in biochemistry.

Kamke (1932) considered non-autonomous systems with less restrictive properties. In particular the statement also holds on P-convex sets. A subset D of \({\mathbb {R}}^n\) is called P-convex if, for each pair \(y,\,z\in D\) with \(y\prec z\), the line segment connecting y and z is contained in D. Thus, P-convexity is a weaker property than convexity.

Necessity can easily be verified. See also the discussion in Eilertsen et al. (2023) for examples.

References

Back H-M, Yun H-Y, Kim SK, Kim JK (2020) Beyond the Michaelis-Menten: accurate prediction of in vivo hepatic clearance for drugs with low KM. Clin Transl Sci 13:1199–1207

Borghans JAM, de Boer RJ, Segel LA (1996) Extending the quasi-steady state approximation by changing variables. Bull Math Biol 58:43–63

Briggs GE, Haldane JBS (1925) A note on the kinetics of enzyme action. Biochem J 19:338–339

De Leenheer P, Angeli D, Sontag ED (2007) Monotone chemical reaction networks. J Math Chem 41:295–314

Eilertsen J, Schnell S, Walcher S (2022) On the anti-quasi-steady-state conditions of enzyme kinetics. Math Biosci 350:108870

Eilertsen J, Schnell S, Walcher S (2024) The unreasonable effectiveness of the total quasi-steady state approximation, and its limitations. J Theor Biol 583:111770

Goeke A, Walcher S (2014) A constructive approach to quasi-steady state reduction. J Math Chem 52:2596–2626

Hirsch MW (1982) Systems of differential equations which are competitive or cooperative. I. Limit sets. SIAM J Math Anal 13:167–179

Kamke E (1932) Zur Theorie der Systeme gewöhnlicher Differentialgleichungen II. Acta Math 58:57–85

Kasserra HP, Laidler KJ (1970) Transient-phase studies of a trypsin-catalyzed reaction. Can J Chem 48:1793–1802

Keener J, Sneyd J (2009) Mathematical physiology I: cellular physiology, 2nd edn. Springer-Verlag, New York

Keleti T (1982) A novel parameter estimation from the linearized Michaelis-Menten equation at low substrate concentration. Biochem J 203:343–344

Liang H, Wu H (2008) Parameter estimation for differential equation models using a framework of measurement error in regression models. J Am Stat Assoc 103:1570–1583

Michaelis L, Menten ML (1913) Die Kinetik der Invertinwirkung. Biochem Z 49:333–369

Pedersen MG, Bersani AM (2010) Introducing total substrates simplifies theoretical analysis at non-negligible enzyme concentrations: pseudo first-order kinetics and the loss of zero-order ultrasensitivity. J Math Biol 60:267–283

Pettersson G (1978) A generalized theoretical treatment of the transient-state kinetics of enzymic reaction systems far from equilibrium. Acta Chem Scand B 32:437–446

Ramsay JO, Hooker G, Campbell D, Cao J (2007) Parameter estimation for differential equations: a generalized smoothing approach (with discussion). J R Stat Soc Series B Stat Methodol 69:741–796

Schnell S, Mendoza C (2004) The condition for pseudo-first-order kinetics in enzymatic reactions is independent of the initial enzyme concentration. Biophys Chem 107:165–174

Segel LA, Slemrod M (1989) The quasi-steady-state assumption: a case study in perturbation. SIAM Rev 31:446–477

Silicio F, Peterson MD (1961) Ratio errors in pseudo first order reactions. J Chem Educ 38:576–577

Smith HL (1995) Monotone dynamical systems. Am. Math. Soc, Providence

Sternberg S (1958) On the structure of local homeomorphisms of euclidean n-space. II. Amer J Math 80:623–631

Vu NAT, Song YM, Tran Q, Yun H-Y, Kim SK, Chae J-w, Kim JK (2023) Beyond the Michaelis-Menten: accurate prediction of drug interactions through cytochrome P450 3A4 induction. Clin Pharmacol Ther 113:1048–1057

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eilertsen, J., Schnell, S. & Walcher, S. The Michaelis–Menten Reaction at Low Substrate Concentrations: Pseudo-First-Order Kinetics and Conditions for Timescale Separation. Bull Math Biol 86, 68 (2024). https://doi.org/10.1007/s11538-024-01295-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11538-024-01295-z

Keywords

- Comparison principle

- Pseudo-first-order kinetics

- Total quasi-steady state approximation

- Monotone dynamical system