Abstract

Generative AI has the potential to support teachers with writing instruction and feedback. The purpose of this study was to explore and compare feedback and data-based instructional suggestions from teachers and those generated by different AI tools. Essays from students with and without disabilities who struggled with writing and needed a technology-based writing intervention were analyzed. The essays were imported into two versions of ChatGPT using four different prompts, whereby eight sets of responses were generated. Inductive thematic analysis was used to explore the data sets. Findings indicated: (a) differences in responses between ChatGPT versions and prompts, (b) AI feedback on student writing did not reflect provided student characteristics (e.g., grade level or needs; disability; ELL status), and (c) ChatGPT’s responses to the essays aligned with teachers’ identified areas of need and instructional decisions to some degree. Suggestions for increasing educator engagement with AI to enhance the teaching of writing are discussed.

Improving Writing Feedback for Struggling Writers: Generative AI to the Rescue?

The advances in Generative Artificial Intelligence (generative AI) have transformed the field of education, introducing new ways to teach and learn. Its integration is growing fast in all areas of education, including special education (Marino et al., 2023). Generative AI has the potential to increase the inclusion of students with disabilities in general education by providing additional assistive supports (Garg & Sharma, 2020; Zdravkova, 2022). Specifically, large language models like the one used by a popular AI tool, ChatGPT (Chat Generative Pre-trained Transformer), can generate human-like responses to prompts, similar to a conversation. ChatGPT can facilitate learning for students with and without high-incidence disabilities (e.g., learning disabilities, ADHD) who struggle with writing (Barbetta, 2023). While experts continue to investigate the future of writing in the ChatGPT era, it is evident that it will significantly alter writing instruction (Wilson, 2023). ChatGPT can support students in choosing a topic, brainstorming, outlining, drafting, soliciting feedback, revising, and proofreading (Trust et al., 2023). This tool may also be a helpful resource for teachers in providing feedback on students’ writing. Timely and quality feedback by ChatGPT can encourage the use of higher-level thinking skills while improving the writing process, including the planning, writing, and reviewing phases of that process (Golinkoff & Wilson, 2023).

Writing Instruction and Feedback for Struggling Writers

The writing process may be challenging for some students for many reasons. For example, planning is the first step of writing, but many students do not systematically brainstorm. Instead, they move directly into drafting their sentences, which may, in turn, be disjointed and not effectively communicated (Evmenova & Regan, 2019). Students, particularly those with high-incidence disabilities, may not produce text or may compose limited text, struggling with content generation, vocabulary, and the organization of ideas (Chung et al., 2020). While multilingualism is an asset, we have observed similar challenges with writing among English Language Learners in our research (Hutchison et al., 2024). The cognitive demands of drafting a response leave many students with no capacity to then edit or revise their work (Graham et al., 2017). Therefore, teachers should provide scaffolds to break down the complex process of writing so that it is sequential and manageable, progressing from simple to more complex concepts and skills.

Instruction for struggling writers is typically characterized as systematic and explicit (Archer & Hughes, 2011; Hughes et al., 2018). In order to provide explicit instruction, teachers should be guided by ongoing student data. Specifically, special and general education teachers of writing should collaboratively, systematically, and continuously monitor and responsively adjust instruction based on student progress (Graham et al., 2014). Formative assessments of writing inform the feedback that a teacher provides to a learner. McLeskey et al. (2017) describe:

Effective feedback must be strategically delivered, and goal directed; feedback is most effective when the learner has a goal, and the feedback informs the learner regarding areas needing improvement and ways to improve performance… Teachers should provide ongoing feedback until learners reach their established learning goals. (p. 25)

Various formative assessments are available to guide feedback in writing, with rubrics being one frequently used method, which we will explore in the following section.

Supporting Writing by Struggling Writers

School-aged students are required to show progress towards mastery of writing independently in order to be successful at school, in future work, and in their personal lives (Graham, 2019). Thus, educators continuously look for tools to increase and support learner agency and independence, including in writing (Edyburn, 2021). Over the past decade, the authors have developed a digital tool to support learner autonomy, access, and independence during essay composition as part of a federally funded, design-based research project referred to as WEGO: Writing Effectively with Graphic Organizers (Evmenova et al., 2018–2023). This tool is a technology-based graphic organizer (or TBGO) that embeds numerous evidence-based strategies and universally designed supports for students as well as an analytic rubric for teachers to evaluate student products and provide feedback. A detailed description of the tool can be found elsewhere (students’ features: Evmenova et al., 2020a; teachers’ features: Regan et al., 2021).

The TBGO was developed to support upper elementary and middle school students with and without high-incidence disabilities to compose multiple genres of writing including persuasive (Evmenova et al., 2016), argumentative (Boykin et al., 2019), and/or personal narrative writing (Rana, 2018). The TBGO has also been effectively used by English Language Learners (Day et al., 2023; Boykin et al., 2019). In addition, it includes a dashboard that allows a teacher or caregiver to personalize instruction by assigning prompts and support features embedded in the TBGO. After the student has an opportunity to write independently, the teacher can engage in what we refer to as data-driven decision making (or DDDM; Park & Datnow, 2017; Reeves & Chiang, 2018).

Teachers’ DDDM

A common formative assessment of writing used in classrooms is a rubric. In order to facilitate the DDDM process within the TBGO, various data are collected by the tool and provided to teachers, including the final writing product, total time spent actively using the tool, video views and duration, text-to-speech use and duration, audio comments use and duration, transition words use, total number of words, and number of attempts to finish. A teacher first evaluates those data as well as the student’s writing using a 5-point rubric embedded in the teacher dashboard of the TBGO (a specific rubric is available at https://wego.gmu.edu). Based on the rubric, a teacher identifies an area of need organized by phases of the writing process: Planning (select a prompt; select essay goal; select personal writing goal; brainstorm); Writing (identify your opinion, determine reasons, explain why or say more, add transition words, summarize, check your work); and Reviewing: Revise and Edit (word choice, grammar/spelling, punctuation, capitalization, evaluate). Then, a teacher provides specific instructional suggestions when the student’s score does not meet a threshold (e.g., content video models, modeling, specific practice activities). Once teachers select a targeted instructional move that is responsive to the identified area on the writing rubric, they record their instructional decision in the TBGO dashboard. The student’s work, the completed rubric, and the instructional decision are stored within the teacher dashboard. Recent investigations report that teachers positively perceive the ease and usability of the integrated digital rubric in the TBGO (see Regan et al., 2023a; b). Although promising, the teachers in those studies used DDDM with only a few students in their inclusive classes.

Efficient and Effective DDDM

The current version of the TBGO relies on teachers or caregivers to score student writing using an embedded rubric and to subsequently provide the student(s) with instructional feedback. In a classroom of twenty or more students, scoring individual essays and personalizing the next instructional move for each student is time-consuming, and teachers may not regularly assess or interpret students’ writing abilities, especially in the upper grades (Graham et al., 2014; Kiuhara et al., 2009). Generative AI or chatbots are arguably leading candidates to consider when providing students with instructional feedback in a more time-efficient manner (Office of Educational Technology, 2023). For example, automated essay scoring (AES) provides a holistic and analytic writing quality score of students’ writing and a description as to how the student can improve their writing. Recent research on classroom-based implementation of AES suggests its potential, but questions have been raised as to how teachers and students perceive the scores and how they are used in classroom contexts (Li et al., 2015; Wilson et al., 2022). Other investigations remark on the efficiency and reliability among AES systems (Wilson & Andrada, 2016) and the consistency of scores with human raters (Shermis, 2014). More recently, a large language model (specifically, the GPT-3.5 version of ChatGPT) was prompted to rate secondary students’ argumentative essays, and the chatbot’s responses were compared to humans’ across five measures of feedback quality (see Steiss et al., 2023). Although GPT-3.5 included some inaccuracies in the feedback and the authors concluded that humans performed better than ChatGPT, the comparisons were remarkably close.

A greater understanding of what generative AI tools can do to support classroom teachers is needed. First, leveraging technology, with the use of automated systems or logistical tools, can potentially improve working conditions for both general and special education teachers (Billingsley & Bettini, 2017; Johnson et al., 2012). Also, although educators see the benefits of AI and how it can be used to enhance educational services, there is urgent concern about the policies needed around its use and how it is ever evolving. For example, while this manuscript was being written, GPT-4 emerged, but at a cost; this later version may not be widely accessible for educators or students. With the fast adoption of AI, the Office of Educational Technology states that “it is imperative to address AI in education now to realize and mitigate emergent risks and tackle unintended consequences” (U.S. Department of Education, 2023, p. 3). A first step in addressing AI in education is to understand what AI can do, and how its use supports or hinders student learning and teacher instruction. In this case, we focus on teachers’ writing instruction and feedback.

As we learn more about AI tools, it becomes obvious that AI literacy skills will need to be developed as part of digital skills by both teachers and students (Cohen, 2023). How we use chatbots, how we prompt them, and what parameters we use to direct their responses become paramount.

Purpose

Thus, the purpose of this study was to explore feedback and instructional suggestions generated by different AI tools when using prompts of varying specificity (e.g., a generic 0–4 rating vs. analytic rubric provided) to help guide teachers of writing in their use of these tools. The purpose of including two versions of ChatGPT was not to criticize one and promote the other, but rather to understand and leverage their similarities and differences, given the same prompt. The research questions were:

- RQ1: What is the difference between responses generated by GPT-3.5 and GPT-4 given prompts which provide varying specificity about students’ essays?
- RQ2: What is the nature of the instructional suggestions provided by ChatGPT for students with and without disabilities and/or ELLs (aka struggling writers)?
- RQ3: How does the formative feedback provided by GPT-3.5 and GPT-4 compare to the feedback provided by teachers when given the same rubric?

Method

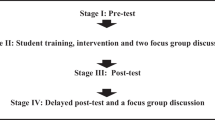

Data for this study were selected from a large intervention research study (led by the same authors) for a secondary data analysis. Specifically, while previous studies focused on the improvements in students’ writing outcomes (e.g., both quantity and quality of written essays) as well as explored how teachers provide feedback on students’ writing, the unique focus of this paper was on the use of AI to provide writing feedback (something we have not done before). The data included 34 persuasive student essays, a teacher’s completed analytic rubric evaluating the essay, and a teacher’s data-driven decisions with instructional feedback in the area of Writing and Reviewing (essays with the teachers’ DDDM in the area of Planning were excluded). We purposefully selected essays completed by students with various abilities and needs in different grade levels who struggled with writing and needed the TBGO intervention.

Participants

The 34 essays used in this study were written by 21 girls and 13 boys. Students ranged in age from 8 to 13 and were in grades 3–7. The majority (59%) were White, 21% were Hispanic, 3% were African American, and 17% were other. Among the students, 41% were identified with high-incidence disabilities (learning disabilities, ADHD); 24% were English language learners (with a variety of primary languages); and 35% were struggling writers as reported by teachers. Teachers identified struggling writers as those who consistently demonstrated writing performance below grade-level expectations (e.g., needing extra support with writing mechanics, cohesive and well-organized ideas).

Study Context

The data used in this study were collected in two separate settings: two inclusive classrooms in a suburban, private day school and an after-school program in a community center serving economically disadvantaged families. The same essay writing procedures were used in both settings. All students were first asked to write a persuasive opinion-based essay in response to one of two prompts validated by previous research (Regan et al., 2023b). Examples of the prompts included:

- Some students go to school on Saturday. Write an essay on whether or not students should go to school on Saturdays.
- Some people believe kids your age should not have cell phones. Using specific details and examples to persuade someone of your opinion, argue whether or not kids your age should have cell phones.

After the pretest, students were introduced to the technology-based graphic organizer (TBGO) with embedded evidence-based strategies and supports. The instruction lasted 5–6 lessons. Then students were asked to use the TBGO to practice independent essay writing without any help from the teachers. As the TBGO is a Chrome-based web application and works on any device with a Chrome browser installed, each student used their own device/laptop and individual login credentials to access the TBGO. After students completed the independent writing, teachers reviewed their products and completed the analytic rubric built into the TBGO’s teacher dashboard. They identified one primary area of need and determined an instructional decision that should take place in order to address the existing area of need. The instructional decisions included whole- and small-group activities (especially in those cases when multiple students demonstrated the same area of need); independent activities (including watching video models embedded within the TBGO); as well as individual teacher-student check-ins to discuss the area of need and future steps. A posttest with the TBGO and a delayed posttest without the TBGO were later administered. The essays used in the current study were from the independent writing phase since those included teachers’ DDDM. On average, essays had 133.44 (SD = 57.21; range 32–224) total words written. The vast majority included important persuasive essay elements such as a topic sentence introducing the opinion, distinct reasons, examples to explain the reasons, a summary sentence, and transition words. While this provides some important context, the quantity and quality of students’ writing products are not the focus of the current study and are reported elsewhere (Boykin et al., 2019; Day et al., 2023; Evmenova et al., 2016, 2020b; Regan et al., 2018, 2023b).

Data Sources

The existing 34 essays were imported into two different versions of the ChatGPT generative AI: GPT-3.5 version of ChatGPT (free version) and GPT-4 (subscription version). Four different prompts were used in both ChatGPT versions (see Table 1). As can be seen in Table 1, the different prompts included (1) using a specific analytic rubric (when a rubric from the TBGO was uploaded to ChatGPT); (2) asking for a generic 0–4 rating (without any additional specifics regarding scoring); (3) no rubric (asking to identify the area of need without any rubric); (4) no information (asking to provide generic feedback without any information about the student in the prompt). Each prompt type constituted its own GPT chat. Thus, eight sets of responses (or eight different chats) were generated by ChatGPT. A prompt tailored to include the student’s essay as well as the specific student characteristics and the essay topic when applicable (according to the prompt samples presented in Table 1) was pasted into the chat. After GPT had a chance to react and provide feedback, the next prompt was pasted into the same chat. Thus, each chat included a total of 34 prompts and 34 GPT outputs. Each chat was then saved and analyzed.
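The resulting design (two ChatGPT versions crossed with four prompt types, with all 34 essays entered into each chat) can be sketched as a simple enumeration. This is only an illustration of the structure described above: the labels come from the text, while the function name and record layout are assumptions made for this sketch and do not reflect any tooling used in the study.

```python
from itertools import product

# Labels taken from the text; the record layout below is an illustrative assumption.
VERSIONS = ["GPT-3.5", "GPT-4"]
PROMPT_TYPES = ["Specific Analytic Rubric", "Generic 0-4 Rating", "No Rubric", "No Info"]
N_ESSAYS = 34


def build_response_sets(versions, prompt_types, n_essays):
    """Return one record per chat (version x prompt type), each covering every essay."""
    return [
        {"version": v, "prompt_type": p, "essay_ids": list(range(1, n_essays + 1))}
        for v, p in product(versions, prompt_types)
    ]


response_sets = build_response_sets(VERSIONS, PROMPT_TYPES, N_ESSAYS)
total_outputs = sum(len(s["essay_ids"]) for s in response_sets)
print(len(response_sets), total_outputs)
```

Under these assumptions, the enumeration yields eight chats and 8 × 34 = 272 individual outputs in total.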

Data Analysis and Credibility

Inductive thematic analysis was used to explore how generative AI can be used to provide writing feedback and guide writing instruction for struggling writers (Guest et al., 2011). First, each set of ChatGPT responses (or each GPT chat) was analyzed individually, and recurring codes across responses were grouped into categories. The four members of the research team were randomly assigned to analyze two GPT sets each. Each member generated a list of codes and categories within a chat that were then shared with the team and discussed. During those discussions, the patterns within categories were compared across different sets to develop overarching themes in response to RQ1 and RQ2. The trustworthiness of findings was established by data triangulation across 34 writing samples and eight sets of feedback. Also, peer debriefing was used throughout the data analysis (Brantlinger et al., 2005).

To answer RQ3, frequencies were used to compare teachers’ and ChatGPT’s scores on the analytic rubric and suggested instructional decisions. First, two researchers independently compared teachers’ and ChatGPT’s scores and suggestions. Since the same language from the rubric was used to identify the area of need, the comparisons were rated as 0 = no match; 1 = match. For instructional suggestions, the scale was 0 = no match; 1 = match in concept, but not in specifics; and 2 = perfect match. Over 50% of comparisons were completed by two independent researchers. Interrater reliability was established using the point-by-point agreement formula (the number of agreements divided by the total number of agreements plus disagreements), yielding 100% agreement.
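The point-by-point agreement calculation can be illustrated with a minimal sketch. The function name and the rating lists below are hypothetical placeholders, not the study’s data; the ratings simply use the 0–2 scale described above.

```python
def point_by_point_agreement(rater_a, rater_b):
    """Percent agreement: agreements / (agreements + disagreements) * 100."""
    if len(rater_a) != len(rater_b):
        raise ValueError("Both raters must rate the same number of comparisons.")
    if not rater_a:
        raise ValueError("At least one comparison is required.")
    agreements = sum(a == b for a, b in zip(rater_a, rater_b))
    disagreements = len(rater_a) - agreements
    return 100.0 * agreements / (agreements + disagreements)


# Hypothetical ratings on the 0-2 instructional-suggestion scale (not study data).
rater_1 = [2, 1, 0, 1, 2]
rater_2 = [2, 1, 0, 1, 2]
print(point_by_point_agreement(rater_1, rater_2))  # 100.0
```

Because every comparison is scored as either an agreement or a disagreement, the denominator equals the number of double-coded comparisons.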

Findings

RQ1: Differences in AI Responses

In an effort to answer RQ1 and explore the differences between responses generated by different ChatGPT versions when given prompts with varying specificity, we analyzed eight sets of responses. While the purpose was not to compare the sets to find which one is better, several patterns were observed that can guide teachers in using ChatGPT as the starting point for generating writing feedback for their struggling writers. The following are the six overarching themes that emerged from this analysis.

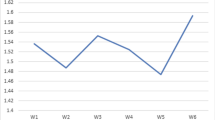

Predictable Pattern of Response

As can be seen in Table 2, all sets generated excessive amounts of feedback (average length: M = 383; SD = 109.7; range 258–581 words) and followed a consistent, formulaic, and predictable pattern of responses across all the writing samples. While the layout and headers used to organize the responses differed across different ChatGPT versions and prompts, the layout and headers were consistent within each set. That said, it was also observed in all ChatGPT sets that the organization and headings found in a response changed slightly towards the end of the run for the 34 writing samples. It is unclear whether this pattern change may happen after a certain number of entries (or writing samples in our case) were entered into the ChatGPT run or if this shift in pattern occurs randomly. Similarly, we also observed that the later responses seemed to be more concise and lacked details which were observed earlier in the same set.
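For readers who want to produce comparable length summaries for their own saved chats, the following is a minimal sketch. It assumes whitespace-delimited word counts and the sample standard deviation; the source does not state which counting rule or SD formula was used, and the short texts below are placeholders rather than actual ChatGPT output.

```python
from statistics import mean, stdev


def word_count(text):
    """Count whitespace-delimited tokens (an assumed counting rule)."""
    return len(text.split())


def summarize_lengths(responses):
    """Return the mean, sample SD, and range of response lengths in words."""
    counts = [word_count(r) for r in responses]
    return {
        "mean": mean(counts),
        "sd": stdev(counts),  # sample SD; an assumption, not confirmed by the source
        "min": min(counts),
        "max": max(counts),
    }


# Placeholder texts for illustration only (not actual ChatGPT responses).
sample_responses = [
    "Good topic sentence.",
    "Add two more reasons and a summary.",
    "Check capitalization.",
]
summary = summarize_lengths(sample_responses)
print(summary)
```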

Specific Analytic Rubric

Both GPT-3.5 and GPT-4 provided responses organized into nine categories matching those included in the uploaded rubric. Each category included 1–2 sentences of feedback along with a numerical rating on a 0–4 scale. An overall holistic score was also calculated at the end along with a summary of the student’s overall strengths and weaknesses.

Generic 0–4 Rating

For each writing sample, GPT-3.5 consistently included an evaluation of student writing using four criteria-based categories: Content, Organization, Language Use (punctuation, spelling, and grammar), and Development of Ideas. Two to three bullet points of feedback were listed under each category along with a numeric rating on a 0–4 scale for each. The scale was not defined or explained. An overall holistic score was totaled at the end along with a summary of feedback presented in a bulleted list.

GPT-4’s response to the first writing sample included a definition of what each point on the scale meant (e.g., 4 = writing is clear, well-organized, well-developed, with effectively chosen details and examples presented logically, and few to no errors in conventions). In all subsequent responses, an introductory paragraph identified an overall bold-faced score (0–4) and an overview of what the student did and did not demonstrate in the writing. The following areas of writing were discussed across essays: Organization, Development, Main Idea, Reasons, Examples, Coherence, and Grammar.

No Rubric

Each response in GPT-3.5 began with “One area of need is…” followed by two sentences including how to address the need. The area of need identified most frequently by ChatGPT was subject-verb agreement as part of sentence structure (topic sentence and supporting details), followed by transition words or phrases, spelling and grammar conventions, spelling and word choice, capitalization, and punctuation. The second part of the response, titled Instructional Suggestion, provided an instructional strategy for a teacher to use, followed by a model of a ‘revised’ essay using ideas from the student’s response.

GPT-4 provided four consistent parts. First, the response opened with a statement about what the student wrote, a positive affirmation, and an instructional area of writing that could be improved upon. Next, under a header of Instructional Suggestion was a brief description as to what the teacher should do. The third part was a bold-faced, numbered list of steps for implementing that suggestion with bulleted cues underneath. The final part of the response was a ‘revised’ paragraph using the student’s initial writing and addressing the area of need.

No Info

GPT-3.5 provided feedback organized in 9 to 11 bolded categories. The sections that were identical for every writing sample included Proofreading; Revising and Editing; Encourage Creativity; and Positive Reinforcement. The sections that were consistent but individualized for each writing sample were Clarity and Organization (including a topic/introductory sentence); Supporting Details; Sentence Structure and Grammar (primarily focused on sentence fragments, punctuation, and capitalization); Conclusion; and Vocabulary and Word Choice. Feedback on spelling and transition words/phrases was offered either as separate categories or subsumed under others.

GPT-4’s response could be organized in 3 overarching groups: Positive Reinforcement (including specific praise, affirmation, and creativity); Areas for Improvement (content feedback including idea development; details; coherence; clarity and focus; concluding sentence; and technical feedback including sentence structure; punctuation; grammar; word choice); as well as Instructional Suggestions. A sample revised paragraph was offered at the end with an explanation as to how it showcased the offered suggestions.

Using Specific Language from the Rubric

Both Specific Analytic Rubric sets (using GPT-3.5 and GPT-4) referred exclusively to the uploaded rubric and provided feedback using specific language from the rubric. This included feedback across the nine categories built into the rubric (e.g., the writer clearly identified an opinion, the writer has determined three reasons that support his/her opinion, etc.). Also, both ChatGPT versions used descriptors from the rubric (0 = Try again; 1 = Keep trying; 2 = Almost there; 3 = Good job; 4 = Got it). However, GPT-3.5 did not use any explicit examples from the student’s writing within the feedback and used broad and general statements. GPT-4 referred to the specific content from the students’ writing samples and was more tailored, or individualized (e.g., There are some grammatical and spelling errors present, e.g., "are" instead of "our").

Identifying General, Broad Areas of Need

Feedback in all GPT-3.5 sets (regardless of the prompt) was characterized as using common phrases representing broad areas of need. These phrases were not specifically targeted or explicit. For example, the Generic Rating GPT-3.5 set included such common phrases as “The essay presents ideas and supports them with reasonable detail, but there's room for more depth and elaboration.” or “The content is well-structured and effectively conveys the main points.” Similarly, the No Rubric GPT-3.5 set identified instructional areas of need that were only broadly relevant to the students’ writing. For example, in several instances, our review questioned the prioritization of the writing area identified and whether ChatGPT was overgeneralizing areas in need of improvement. Specifically, do two instances of using lowercase when it should be uppercase mean that capitalization should be prioritized over other essential features of writing? Finally, the No Info GPT-3.5 set also used common phrases to describe areas for improvement regardless of the writing sample. For example, there was no difference in ChatGPT’s feedback for an essay with eight complete, robust, well-written sentences vs. an incomplete paragraph with just two sentences, indicating the lack of targeted and specific feedback.

The No Rubric GPT-4 set started by identifying a broad area of need (e.g., coherence, grammar, development, organization/development of ideas, attention to detail) followed by a more individualized and specific instructional suggestion (as discussed below). The authors acknowledge that this might be explained by the prompt language to identify one area of need.

Focusing on Individualized, Specific Areas of Need

Like the Specific Analytic Rubric GPT-4 set, the Generic 0–4 Rating GPT-4 set and the No Info GPT-4 set were observed to include more guidance for the student, drawing on specific areas of an essay to provide corrective feedback. For example, Generic Rating GPT-4 feedback noted, “We should also try to provide more specific examples or explanations for each reason. For example, you mentioned that students get tired – maybe you can explain more about how having some recess can help them feel less tired.” In turn, No Info GPT-4 included detailed feedback focused on specific areas of need such as encouraging more details and clarifications, cohesion and flow, capitalization, spelling, homophones, and punctuation (including avoiding run-on sentences and properly using commas). Word choice, contractions, and conjunctions were often mentioned, with specific revisions offered. Varying the length and structure of sentences was sometimes suggested for making the writing more engaging and readable.

Misaligned Feedback

While there were some occasional discrepancies in GPT-4 sets, all GPT-3.5 sets appeared to generate feedback that was more misaligned with writing samples. For example, in the Specific Analytic Rubric GPT-3.5 set, a “Good Job” score of 3 was given for the Summary sentence that read, “Moreover, …” and was not a complete sentence. Also, the Generic Rating GPT-3.5 set did not mention any misuse of capitalization despite numerous cases of such misuse. Subject-verb agreement was erroneously mentioned as an area of need for some writing samples for the No Rubric GPT-3.5 set, and then, not mentioned for those students’ writing in which this feedback would be relevant. In the No Info GPT-3.5 set, the topic or introductory sentence was always noted as a suggested area of improvement and a revised sentence was always provided. This was true for cases when a student:

- was missing an opinion that aligned with the prompt;
- had an opinion but did not start it with words “I believe …” (e.g., “Kids should get more recess time.”); and
- already had a strong introductory sentence (e.g., “I believe that school starts too early and should begin later in the morning.”).

Starting with Specific Praise/Positive Affirmation

While most ChatGPT feedback included some general praise and affirmation, Generic Rating GPT-4, No Rubric GPT-4, and No Info GPT-4 sets always started with specific positive reinforcement. Unique elements in each essay were praised, such as conveying personal experiences, having a clear stance or position, and including a variety of reasons.

RQ2: Instructional Suggestions

Instructional suggestions based on the evaluation of student writing were the focus of RQ2. Although we expected the responses from prompts that included specific student characteristics to differentiate the instructional suggestions in some way, this was not the case. In fact, none of the sets provided explicit instructional suggestions aligned with students’ characteristics (e.g., grade, disability, ELL). First, the suggestions for improving the writing of a 3rd grader’s essay were not distinct from those suggestions provided in response to a 7th grader’s writing (in Generic Rating GPT-3.5 and No Rubric GPT-3.5 sets). Also, there were no remarkable differences in the vocabulary used in the feedback for a 3rd grader vs. a 7th grader (in Generic Rating GPT-4 set). Only one set (Generic Rating GPT-4) offered a personalized message in a student-friendly format (without any additional prompting to do so).

Second, student characteristics were merely acknowledged in some sets. For example, Specific Analytic Rubric GPT-3.5 and GPT-4 only noted those characteristics in the summary section at the end of the feedback (e.g., “This is a well-written persuasive essay by your 7th-grade student with ADHD”). This was also observed in responses from the Generic Rating GPT-4 set. For example, “This feedback emphasizes both the strengths of the student’s writing and the areas where improvement can be made, offering encouragement and guidance that is particularly important for a student with ADHD.” Finally, the No Rubric GPT-4 set also gave a mere nod to the additional context (e.g., Given student characteristics…). Although rare, connecting student characteristics with instruction was observed here: “Students with ADHD often struggle with organizing their thoughts in a coherent manner, and the flow of ideas in this student’s paragraph seems a bit disjointed….” Students’ characteristics were not mentioned in any other sets in which student information was included in the prompt (Generic Rating GPT-3.5 and No Rubric GPT-3.5).

Below is the description of how specific, broad, or no instructional suggestions were included in the ChatGPT sets (see Table 2).

Specific Suggestions

Specific instructional suggestions were mentioned in Generic Rating GPT-4, No Rubric GPT-4, and No Info GPT-4 sets. At the end of responses for the Generic Rating GPT-4 set, ChatGPT encouraged the teacher to use self-regulatory instructional strategies with students, such as goal setting or self-evaluation. For example, “By involving the student in the refinement of their work and setting goals, you empower them to take ownership of their learning and progression.”

No Rubric GPT-4 responses used such headings as modeling, guided practice, feedback, and independent practice with bulleted ideas under each. The specific suggestions included practice, mini-instructional lessons, engaging activities, peer review, explicit instruction, sentence-building activities, peer review sentence starters, technology such as word processing and online games, the five W’s and How strategy (i.e., a writing strategy that helps students remember to include the answers to “who,” “what,” “where,” “when,” “why,” and “how” in their writing to make their writing complete and clear), a mnemonic referred to as PEE (i.e., Point, Explain, Elaborate; this mnemonic helps students ensure their writing is focused, well-supported, and thoroughly developed), a personal dictionary, interactive editing, and a graphic organizer or outline. When the latter was suggested to support the “coherence” or “development of ideas,” ChatGPT’s response sometimes provided a backwards planning model of what the student’s ideas would look like in an outline format.

Responses of the No Info GPT-4 set included specific and varied instructional suggestions organized by categories: Writing Exercises; Focused Practice; and Revision Work. Suggestions included mini lessons on sentence structure, transition workshops, details workshops, personal experience illustrations, developing ideas workshops, worksheets, grammar lessons, spelling activities, sentence expansion or completion, and editing practice.

Broad Instructional Suggestions

Primarily broad instructional suggestions were offered in the Generic Rating GPT-3.5 and No Rubric GPT-3.5 sets. For example, Generic Rating GPT-3.5 responses had a section with a bulleted list of actionable instructional suggestions. Each began with a verb (e.g., Work on…; Encourage the student to…; Practice…). It was not clear whether these suggestions were presented in any order of instructional priority. Also, the items included broad ideas that aligned with the student essays but may or may not have aligned with the lowest rated category of writing. Examples of largely vague and broad instructional suggestions recycled throughout the responses in the No Rubric GPT-3.5 set included: “use different types of sentences,” “teach basic spelling rules,” and “use appropriate punctuation.”

Revised Essay

The following three ChatGPT sets included responses with a revised student essay along with a brief explanation of how it was better (even though a revision was not requested in the prompt): No Rubric GPT-3.5, No Rubric GPT-4, and No Info GPT-4. We considered a model of writing, revised for improvement, to be a broad instructional strategy. This is one of many excellent strategies for teaching writing; however, the revisions were often characterized by sophisticated vocabulary and complex elaborations. For example, a student wrote, “To illustrate, when students are hungry it’s hard for them to listen.” ChatGPT elevated the sentence to, “To illustrate, when students are hungry, it’s hard for them to listen because their minds may be preoccupied with thoughts of food.” Although the latter sentence is a well-crafted model for the student, this revision arguably loses the student’s voice and tone.

No Instructional Suggestions

No explicit instructional suggestions were included in the responses for Specific Analytic Rubric GPT-3.5, No Info GPT-3.5, and Specific Analytic Rubric GPT-4 sets. The reader was only reminded to provide feedback in a constructive and supportive manner and encourage students to ask questions and seek clarifications on any offered suggestions. While this is logical for both Specific Analytic rubric sets (not asking for instructional suggestions in the prompt), it is surprising for the No Info GPT-3.5 set (which asked for feedback and instructional suggestions).

RQ3: Comparisons Between Teachers and ChatGPT

In response to RQ3, we compared real teachers’ data-based decision-making (DDDM), including the score and the instructional decision, to the scores generated in the Specific Analytic Rubric GPT-3.5 and Specific Analytic Rubric GPT-4 sets for students’ essays (N = 34). The first rubric category scored with a 2 or below was considered the area of need for writing instruction.

GPT-3.5 matched the teacher’s recommendation for the area of writing need 17.6% of the time. For example, the teacher identified Word Selection as the area of need (e.g., high use of repeated words and lacking sensory words) and GPT-3.5 noted the same area of need (e.g., there is some repetition and awkward phrasing). When comparing the teacher’s versus ChatGPT’s instructional decisions, there was no perfect match; however, 26.5% were coded as a partial match. For example, both the teacher and GPT-3.5 suggested an instructional activity of modeling how to write a summary sentence.

GPT-4 matched the teacher’s recommendation for the area of writing need 23.5% of the time. Similarly, when comparing the teacher versus ChatGPT’s instructional decisions, 47.1% were coded as a partial match for instruction.
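The scoring rule and the agreement percentages above can be illustrated with a minimal sketch. It assumes rubric scores are stored in rubric order, and the function names and every category name other than Word Selection are hypothetical. The match counts (6, 8, 9, and 16 of 34) are back-calculated from the reported percentages and are an assumption, not figures reported in the study.

```python
from typing import Dict, List, Optional


def area_of_need(scores: Dict[str, int], threshold: int = 2) -> Optional[str]:
    """Return the first rubric category (in rubric order) scored at or below the threshold."""
    for category, score in scores.items():
        if score <= threshold:
            return category
    return None  # no category was scored at or below the threshold


def percent_match(teacher: List[str], model: List[str]) -> float:
    """Percentage of essays for which both sources name the same area of need."""
    if not teacher or len(teacher) != len(model):
        raise ValueError("need two non-empty lists of equal length")
    matches = sum(t == m for t, m in zip(teacher, model))
    return round(100 * matches / len(teacher), 1)


# Hypothetical scores for one essay; only "Word Selection" is named in the text.
example_scores = {"Organization": 3, "Word Selection": 2, "Conventions": 1}
print(area_of_need(example_scores))  # Word Selection

# Assumed counts out of N = 34 that are consistent with the reported percentages.
N = 34
assumed_counts = {
    "GPT-3.5 area of need": 6,
    "GPT-3.5 partial instruction match": 9,
    "GPT-4 area of need": 8,
    "GPT-4 partial instruction match": 16,
}
for label, count in assumed_counts.items():
    print(f"{label}: {round(100 * count / N, 1)}%")
```

Because the rule takes the first low-scoring category in rubric order, a teacher and ChatGPT can agree on most scores and still name different areas of need, which is one reason exact agreement can be low.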

Discussion and Practical Implications

Since ChatGPT debuted at the end of 2022, school leaders and teachers of writing have been grappling with what it means for writing instruction. Its ability to generate essays from a simple request or to correct writing samples is making an impact on the classroom experience for students with and without disabilities, and it is reshaping how teachers assess student writing (Marino et al., 2023; Trust et al., 2023; Wilson, 2023). However, teachers may have limited knowledge of how AI works and poor self-efficacy for using AI in the classroom to support their pedagogical decision making (Chiu et al., 2023). It is imperative to ensure that teachers receive professional development to facilitate the effective and efficient use of AI. There are currently more questions than answers, especially regarding its application by students struggling with academics.

The purpose of this investigation was to explore the application of the ChatGPT chatbot for teachers of writing. Specifically, we used different versions of ChatGPT (GPT-3.5, which is free, and GPT-4, which requires a subscription) and purposefully different types of prompts, providing limited or more information about the student characteristics and the topic of their writing. Essentially, we asked ChatGPT to evaluate an authentic student’s writing, identify the area(s) of need, and provide instructional suggestion(s) for addressing the problematic area(s) in that individual writing sample. We then compared AI-generated feedback to that completed by humans.

- The findings indicate the possibilities and limitations of ChatGPT for evaluating student writing, interpreting a teacher-developed rubric, and providing instructional strategies.

Our finding is that, generally, ChatGPT can follow purposeful prompts, interpret and score using a criterion-based rubric when provided, create its own criteria for evaluating student writing, effectively revise student essay writing, celebrate what students do well in their writing, paraphrase student essay ideas, draft outlines of a student’s completed essay, and provide formative feedback in broad and specific areas along different stages of the writing process. Moreover, the response is immediate. These findings are consistent with previous investigations of ChatGPT and the assessment of student writing (Steiss et al., 2023). However, teachers need to consider the following points before relying on ChatGPT to provide feedback to their struggling writers.

- In the ChatGPT sets that included no contextual information, the responses included more feedback.

- All sets generated excessive amounts of feedback about student writing with no delineation of the next clear instructional move a teacher should attend to. So, ChatGPT may work as a great starting point, but teachers will need to go through the response to prioritize and design their instruction. Sifting through information for relevance can be time consuming and may even warrant a teacher verifying the content further.

- Additionally, if students relied directly on ChatGPT, without any vetting of the content by a teacher, they too may be overwhelmed by the amount of feedback given to modify their writing, or they may even be provided with erroneous feedback.

- All GPT-3.5 sets identified broad areas of writing that needed improvement and frequently used common phrases such as grammar, organization/development of ideas, and attention to detail. In addition, this feedback was more often misaligned with students’ writing. This observation is worrisome since the GPT-3.5 version of ChatGPT is free and highly accessible, making it likely the preferred AI tool for classroom educators.

- Most GPT-4 sets (all except one) generated more specific and individualized feedback about student writing. The specific feedback in the generated outputs was much lengthier and would take a teacher much more time to review than GPT-3.5 responses.

- All sets identified multiple areas of need and, when instructional suggestions were included in the responses, offered several of them. Even the No Rubric sets, which explicitly prompted ChatGPT to focus on just one area of instructional need and one suggestion, included much more in the responses. This finding reiterates that we are still learning about AI literacy and the language we need to use to communicate effectively.

- Both GPT-3.5 and GPT-4 allowed the upload of a researcher-developed analytic rubric and, moreover, interpreted its performance criteria, rating scale, and indicators. ChatGPT also used the rubric’s specific language when providing its evaluation of the student writing.

- Feedback and suggestions were not tailored or contextualized when prompts included varying ages, grade levels, or student abilities and needs. Further research is needed to determine the types of AI literacy prompts or the contextual information that ChatGPT needs to address the particular needs of an individual child. Specially designed instruction, the heart of special education, should be tailored to a particular student (Sayeski et al., 2023).

- The low agreement between the rubric scores and instructional suggestions made by teachers and those generated by ChatGPT does not necessarily mean that ChatGPT’s feedback is incorrect. One explanation for the difference may be that teachers provide targeted and individualized instruction using multiple forms of data and critical information to make instructional decisions. This includes their own professional judgement and knowledge about how each student’s background, culture, and language may influence performance (McLeskey et al., 2017).

Limitations

This study is an initial exploration. There are several limitations that need to be taken into consideration. First and foremost, the four prompts were designed to present the chatbots with varying levels of detail and student information to consider when providing feedback about a student’s writing sample. For example, the Specific Analytic Rubric prompt asked the chatbot to assess students’ writing using an uploaded rubric, while the No Rubric prompt asked it to identify one area of need for the student’s writing and offer one instructional suggestion to address it. In addition to providing the chatbots with varying information, we also used varying language throughout the prompts when seeking feedback and suggestions (e.g., “Identify areas of need for this student’s writing”; “Identify one area of need … and offer one instructional suggestion”; “what feedback and instructional suggestions…”). Chatbots are clearly sensitive to the word choices made; thus, consistent language across prompts should be considered for any future investigations that aim at prompt comparison. The purpose of this work was not to compare the four prompts in an effort to find the best possible one. We also were not looking specifically for feedback that could be shared with students as is (even though some versions generated such feedback without additional prompting). Instead, we were trying to explore how the output might differ depending on prompts with differing levels of detail. So, some of the reported differences are logical. We also did not prompt ChatGPT any further, which would most likely have resulted in refined feedback and/or suggestions. There is an infinite number of prompts that we could have used in this analysis. In fact, a new field of prompt engineering is emerging right in front of our eyes as we learn to design inputs for generative AI tools that would produce optimal outputs. Further investigations of various prompts to feed ChatGPT are needed. Our hope is that this paper will inspire teachers to spend some time exploring different tools and prompts in an effort to find the most appropriate output depending on their context and their students’ needs.
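One way to keep the request wording constant while varying only the supplied context is sketched below. This is an illustration rather than the procedure used in this study: the `REQUEST` wording, the `build_prompt` helper, and the sample inputs are all hypothetical, and the sketch only assembles prompt text without calling any AI tool.

```python
from typing import Optional

# Hypothetical fixed request wording; it is not one of the four prompts used in the study.
REQUEST = (
    "Identify one area of need in this student's writing and "
    "offer one instructional suggestion to address it."
)


def build_prompt(
    essay: str,
    student_context: Optional[str] = None,
    rubric: Optional[str] = None,
) -> str:
    """Assemble a prompt whose request wording never changes; only the context varies."""
    parts = []
    if student_context:
        parts.append(f"Student context: {student_context}")
    if rubric:
        parts.append(f"Rubric:\n{rubric}")
    parts.append(REQUEST)
    parts.append(f"Essay:\n{essay}")
    return "\n\n".join(parts)


essay_text = "Students should have snack time. To illustrate, when students are hungry it's hard for them to listen."

# Two conditions that differ only in the contextual information provided.
no_info_prompt = build_prompt(essay_text)
context_prompt = build_prompt(essay_text, student_context="7th-grade student with ADHD")

print(no_info_prompt)
print("-----")
print(context_prompt)
```

Holding the request constant in this way means that differences between outputs can be attributed more readily to the added context than to incidental changes in wording.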

Also, there was a limited number of essays from each specific group of learners (e.g., certain age/grade, specific disability categories, and other characteristics). While we reported meaningful findings for this initial exploratory analysis, future research should include writing products from more homogeneous groups. Finally, teachers’ DDDM was accomplished by evaluating a completed graphic organizer, while ChatGPT feedback was provided based on the final student essay copied and pasted from the TBGO. Future research should consider new features of generative AI tools (e.g., ChatGPT’s new image analysis feature) where an image of a completed graphic organizer can be uploaded and analyzed.

Conclusion

This study offers examples of how to potentially incorporate AI effectively and efficiently into writing instruction. High-quality special education teachers are reflective about their practice, use a variety of assistive and instructional technologies to promote student learning, and regularly monitor student progress with individualized assessment strategies. It seems very likely that teachers will adopt the capabilities of generative AI tools. With ongoing development and enhancements, AI technology is certain to become an integral component of classroom instruction. However, given the limitations of ChatGPT identified in this study, teacher-led instruction and decision making are still needed to personalize and individualize specialized instruction. Engaging with the technology more and building familiarity with what it can do to improve student learning and teacher practice is warranted.

References

Archer, A. L., & Hughes, C. A. (2011). Explicit instruction: Effective and efficient teaching. Guilford Press.

Barbetta, P. M. (2023). Remedial and compensatory writing technologies for middle school students with learning disabilities and their classmates in inclusive classrooms. Preventing School Failure: Alternative Education for Children and Youth. https://doi.org/10.1080/1045988X.2023.2259837

Boykin, A., Evmenova, A. S., Regan, K., & Mastropieri, M. (2019). The impact of a computer-based graphic organizer with embedded self-regulated learning strategies on the argumentative writing of students in inclusive cross-curricula settings. Computers & Education, 137, 78–90. https://doi.org/10.1016/j.compedu.2019.03.008

Billingsley, B., & Bettini, E. (2017). Improving special education teacher quality and effectiveness. In J. M. Kauffman, D. P. Hallahan, & P. C. Pullen (Eds.), Handbook of special education (2nd ed., pp. 501-520). Boston: Taylor & Francis.

Brantlinger, E., Jimenez, R., Klinger, J., Pugach, M., & Richardson, V. (2005). Qualitative studies in special education. Exceptional Children, 71(2), 195–207. https://doi.org/10.1177/001440290507100205

Garg, S., & Sharma, S. (2020). Impact of artificial intelligence in special need education to promote inclusive pedagogy. International Journal of Information and Education Technology, 10(7), 523–527. https://doi.org/10.18178/ijiet.2020.10.7.1418

Chiu, T. K. F., Xia, Q., Zhou, X., Chai, C. S., & Cheng, M. (2023). Systematic literature review on opportunities, challenges, and future research recommendations. Computers and Education: Artificial Intelligence, 4, 1–15. https://doi.org/10.1016/j.caeai.2022.100118

Chung, P. J., Patel, D. R., & Nizami, I. (2020). Disorder of written expression and dysgraphia: Definition, diagnosis, and management. Translational Pediatrics, 9(1), 46–54. https://doi.org/10.21037/tp.2019.11.01

Cohen, Z. (2023). Moving beyond Google: Why ChatGPT is the search engine of the future [Blog Post]. Retrieved from https://thecorecollaborative.com/moving-beyond-google-why-chatgpt-is-the-search-engine-of-the-future/. Accessed 1 Nov 2023

Day, J., Regan, K., Evmenova, A. S., Verbiest, C., Hutchison, A., & Gafurov, B. (2023). The resilience of students and teachers using a virtual writing intervention during COVID-19. Reading & Writing Quarterly, 39(5), 390–412. https://doi.org/10.1080/10573569.2022.2124562

Edyburn, D. (2021). Universal usability and Universal Design for Learning. Intervention in School and Clinic, 56(5), 310–315. https://doi.org/10.1177/1053451220963082

Evmenova, A. S., & Regan, K. (2019). Supporting the writing process with technology for students with disabilities. Intervention in School and Clinic, 55(2), 78–87. https://doi.org/10.1177/1053451219837636

Evmenova, A. S., Regan, K., Boykin, A., Good, K., Hughes, M. D., MacVittie, N. P., Sacco, D., Ahn, S. Y., & Chirinos, D. S. (2016). Emphasizing planning for essay writing with a computer-based graphic organizer. Exceptional Children, 82(2), 170–191. https://doi.org/10.1177/0014402915591697

Evmenova, A. S., Regan, K., & Hutchison, A. (2018–2023). WEGO RIITE: Writing efficiently with graphic organizers – responsive instruction while implementing technology effectively (Project No. H327S180004) [Grant]. Technology and media services for individuals with disabilities: Stepping-up technology implementation grant, Office of Special Education.

Evmenova, A. S., Regan, K., & Hutchison, A. (2020a). AT for writing: Technology-based graphic organizers with embedded supports. TEACHING Exceptional Children, 52(4), 266–269. https://doi.org/10.1177/0040059920907571

Evmenova, A. S., Regan, K., Ahn, S. Y., & Good, K. (2020b). Teacher implementation of a technology-based intervention for writing. Learning Disabilities: A Contemporary Journal, 18(1), 27–47. https://www.ldw-ldcj.org/

Golinkoff, R. M., & Wilson, J. (2023). ChatGPT is a wake-up call to revamp how we teach writing. [Opinion]. Retrieved from https://www.inquirer.com/opinion/commentary/chatgpt-ban-ai-education-writing-critical-thinking-20230202.html. Accessed 1 Nov 2023

Graham, S. (2019). Changing how writing is taught. Review of Research in Education, 43(1), 277–303. https://doi.org/10.3102/0091732X18821125

Graham, S., Capizzi, A., Harris, K. R., Hebert, M., & Morphy, P. (2014). Teaching writing to middle school students: A national survey. Reading and Writing, 27, 1015–1042. https://doi.org/10.1007/s11145-013-9495-7

Graham, S., Collins, A. A., & Rigby-Wills, H. (2017). Writing characteristics of students with learning disabilities and typically achieving peers: A meta-analysis. Exceptional Children, 83(2), 199–218. https://doi.org/10.1177/001440291666407

Guest, G., MacQueen, K. M., & Namey, E. E. (2011). Applied thematic analysis. SAGE Publications.

Hughes, C. A., Riccomini, P. J., & Morris, J. R. (2018). Use explicit instruction. In High leverage practices for inclusive classrooms (pp. 215–236). Routledge. https://doi.org/10.4324/9781315176093

Hutchison, A., Evmenova, A. S., Regan, K., & Gafurov, B. (2024). Click, see, do: Using digital scaffolding to support persuasive writing instruction for emerging bilingual learners. Reading Teacher. https://doi.org/10.1002/trtr.2310

Johnson, S. M., Kraft, M. A., & Papay, J. P. (2012). How context matters in high-need schools: The effects of teachers’ working conditions on their professional satisfaction and their students’ achievement. Teachers College Record, 114, 1–39.

Kiuhara, S. A., Graham, S., & Hawken, L. S. (2009). Teaching writing to high school students. Journal of Educational Psychology, 101(1), 136–160. https://doi.org/10.1037/a0013097

Li, J., Link, S., & Hegelheimer, V. (2015). Rethinking the role of automated writing evaluation (AWE) feedback in ESL writing instruction. Journal of Second Language Writing, 27, 1–18. https://doi.org/10.1016/j.jslw.2014.10.004

Marino, M. T., Vasquez, E., Dieker, L., Basham, J., & Blackorby, J. (2023). The future of artificial intelligence in special education technology. Journal of Special Education Technology, 38(3), 404–416. https://doi.org/10.1177/01626434231165977

McLeskey, J., Barringer, M.-D., Billingsley, B., Brownell, M., Jackson, D., Kennedy, M., Lewis, T., Maheady, L., Rodriguez, J., Scheeler, M. C., Winn, J., & Ziegler, D. (2017). High-leverage practices in special education. Council for Exceptional Children & CEEDAR Center.

Office of Educational Technology (2023). Artificial intelligence and the future of teaching and learning: Insights and recommendations. Retrieved from https://tech.ed.gov/files/2023/05/ai-future-of-teaching-and-learning-report.pdf. Accessed 1 Nov 2023

Park, V., & Datnow, A. (2017). Ability grouping and differentiated instruction in an era of data-driven decision making. American Journal of Education, 123(2), 281–306.

Rana, S. (2018). The impact of a computer-based graphic organizer with embedded technology features on the personal narrative writing of upper elementary students with high-incidence disabilities (Publication No. 13420322) [Doctoral dissertation, George Mason University]. ProQuest Dissertation Publishing.

Reeves, T. D., & Chiang, J.-L. (2018). Online interventions to promote teacher data-driven decision making: Optimizing design to maximize impact. Studies in Educational Evaluation, 59, 256–269. https://doi.org/10.1016/j.stueduc.2018.09.006

Regan, K., Evmenova, A. S., Good, K., Leggit, A., Ahn, S., Gafurov, B., & Mastropieri, M. (2018). Persuasive writing with mobile-based graphic organizers in inclusive classrooms across the curriculum. Journal of Special Education Technology, 33(1), 3–14. https://doi.org/10.1177/0162643417727292

Regan, K., Evmenova, A. S., Hutchison, A., Day, J., Stephens, M., Verbiest, C., & Gafurov, B. (2021). Steps for success: Making instructional decisions for students’ essay writing. TEACHING Exceptional Children, 54(3), 202–212. https://doi.org/10.1177/00400599211001085

Regan, K., Evmenova, A. S., & Hutchison, A. (2023a). Specially designed assessment of writing to individualize instruction for students. In K. L. Wright & T. S. Hodges (Eds.), Assessing disciplinary writing in both research and practice (pp. 29–56). IGI Global. https://doi.org/10.4018/978-1-6684-8262-9

Regan, K., Evmenova, A. S., Mergen, R., Verbiest, C., Hutchison, A., Murnan, R., Field, S., & Gafurov, B. (2023b). Exploring the feasibility of virtual professional development to support teachers in making data-based decisions for improving student writing. Learning Disabilities Research & Practice, 38(1), 40–56. https://doi.org/10.1111/ldrp.12301

Sayeski, K. L., Reno, E. A., & Thoele, J. M. (2023). Specially designed instruction: Operationalizing the delivery of special education services. Exceptionality, 31(3), 198–210. https://doi.org/10.1080/09362835.2022.2158087

Shermis, M. D. (2014). State-of-the-art automated essay scoring: Competition, results, and future directions from a United States demonstration. Assessing Writing, 20, 53–76. https://doi.org/10.1016/j.asw.2013.04.001

Steiss, J., Tate, T., Graham, S., Cruz, J., Hebert, M., Wang, J., Moon, Y., Tseng, W., & Warschauer, M. (2023). Comparing the quality of human and ChatGPT feedback on students’ writing. Retrieved from https://osf.io/preprints/edarxiv/ty3em/. Accessed 1 Nov 2023

Trust, T., Whalen, J., & Mouza, C. (2023). Editorial: ChatGPT: Challenges, opportunities, and implications for teacher education. Contemporary Issues in Technology and Teacher Education, 23(1), 1–23.

U.S. Department of Education. (2023). Office of Educational Technology, Artificial Intelligence and Future of Teaching and Learning: Insights and Recommendations, Washington, DC.

Wilson, J., & Andrada, G. N. (2016). Using automated feedback to improve writing quality: Opportunities and challenges. In Y. Rosen, S. Ferrara, & M. Mosharraf (Eds.), Handbook of research on technology tools for real-world skill development (pp. 678–703). IGI Global.

Wilson, J., Myers, M. C., & Potter, A. (2022). Investigating the promise of automated writing evaluation for supporting formative writing assessment at scale. Assessment in Education: Principles, Policy, & Practice, 29(1), 1–17. https://doi.org/10.1080/0969594X.2022.2025762

Wilson, J. (2023). Writing without thinking? There’s a place for ChatGPT – if used properly [Guest Commentary]. Retrieved from https://www.baltimoresun.com/opinion/op-ed/bs-ed-op-0206-chatgpt-tool-20230203-mydxfitujjegndnjwwen4s4x7m-story.html. Accessed 1 Nov 2023

Zdravkova, K. (2022). The potential of artificial intelligence for assistive technology in education. In M. Ivanović, A. Klašnja-Milićević, & L. C. Jain (Eds.), Handbook on intelligent techniques in the educational process. Learning and analytics in intelligent systems (Vol. 29). Springer. https://doi.org/10.1007/978-3-031-04662-9_4

Funding

This research was supported by the U.S. Department of Education, Office of Special Education Programs [award number: H327S180004]. The views expressed herein do not necessarily represent the positions or policies of the Department of Education. No official endorsement by the U.S. Department of Education of any product, commodity, service, or enterprise mentioned in this publication is intended or should be inferred.

Ethics declarations

Research Involving Human Participants

All the procedures in this study were evaluated and approved by the Institutional Research Board. All authors have complied with the ethical standards in the treatment of our participants.

Informed Consent

Informed parental consent and student assent were obtained for all individual participants in the study.

Conflict of Interest

There is no known conflict of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Evmenova, A.S., Regan, K., Mergen, R. et al. Improving Writing Feedback for Struggling Writers: Generative AI to the Rescue? TechTrends (2024). https://doi.org/10.1007/s11528-024-00965-y

DOI: https://doi.org/10.1007/s11528-024-00965-y