Abstract

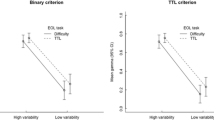

This experimental study examined whether the effect of uninformative anchors (which should be ignored) on judgments of learning (JOLs) is eliminated through learning experience. In the experiments, before learning, participants were asked to predict whether their performance on an upcoming test would be higher or lower than an anchor value (80% in the high anchor condition or 20% in the low anchor condition). Experiments 1a and 1b obtained consistent results, regardless of item difficulty. Specifically, both pre- and post-study JOLs were higher in the high anchor condition than in the low anchor condition. Further, participants in the high (vs. low) anchor condition made higher item-by-item JOLs during the learning process. This anchoring effect was maintained throughout the learning process. In contrast, recall performance did not differ significantly between the two conditions. Experiment 3 demonstrated that the uninformative anchoring effect was not eliminated by gaining test experience through a practice task before the anchoring information was presented. These findings suggest that uninformative anchoring biases JOLs and that its effects are not eliminated by learning experience.

Data availability

All data associated with this study are publicly available on OSF and can be accessed at https://osf.io/6zrpg/.

Notes

Exact means, standard deviations, and 95% CIs of the variables shown in the figures are reported in the Appendix.

Previous research has shown that analyses of aggregated data, such as aggregated item-by-item JOLs, can mask variability attributable to individuals and underestimate the true effect (Rouder & Lu, 2005). This study therefore conducted an additional analysis of item-by-item JOLs using a linear mixed-effects model with participants and word pairs as random effects (for details, see Supplementary material); this analysis also demonstrated the anchoring effect on JOLs.
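As a rough illustration of what such a model can look like, the following is one common way to write a linear mixed-effects model with crossed random effects. It assumes random intercepts only for participants (indexed by i) and word pairs (indexed by j); the symbols and the exact structure are assumptions made here for illustration, and the specification actually used is the one given in the Supplementary material.

```latex
\mathrm{JOL}_{ij} = \beta_0 + \beta_1\,\mathrm{Anchor}_i + u_i + w_j + \varepsilon_{ij},
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2),\quad
w_j \sim \mathcal{N}(0, \sigma_w^2),\quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

Under this sketch, the coefficient on the anchor condition is estimated from item-level JOLs while participant-level and word-pair-level variability are modeled separately rather than averaged away.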

An additional analysis of item-by-item JOLs using a linear mixed-effects model with participants and word pairs as random effects also showed a linear trend for the serial position effect (for details, see Supplementary material).

Although the results for global JOLs (i.e., pre- and post-study JOLs) and item-by-item JOLs were inconsistent, a disconnection between global post-study JOLs and item-by-item JOLs is commonly observed (e.g., Hertzog et al., 2009).

In this additional analysis, the anchor condition was coded as –0.5 for the low anchor condition and 0.5 for the high anchor condition, and recall performance in the practice task was centered. The effects of the anchor condition and of performance in the practice task were both significant, β = 10.33, 95% CI [2.08, 18.57], p = .02 and β = 0.46, 95% CI [0.32, 0.59], p < .001, respectively.
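The coding scheme described in this note can be sketched in a few lines of Python. This is a minimal illustration, not the analysis reported here: it assumes an ordinary least-squares regression of JOLs on the effect-coded anchor condition and mean-centered practice performance, and the function names and toy data are hypothetical. The toy JOLs are built without noise from an intercept of 50, an anchor coefficient of 10, and a practice coefficient of 0.5, so they bear no relation to the study's data.

```python
from statistics import mean


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_anchor_regression(conditions, practice_scores, jols):
    """OLS fit of JOL ~ anchor (effect-coded) + practice (mean-centered)."""
    # Effect coding: low anchor = -0.5, high anchor = 0.5.
    anchor = [0.5 if c == "high" else -0.5 for c in conditions]
    # Centering is assumed here to mean subtracting the sample mean.
    practice_mean = mean(practice_scores)
    practice_c = [p - practice_mean for p in practice_scores]
    rows = [[1.0, a, p] for a, p in zip(anchor, practice_c)]
    # Normal equations: (X'X) beta = X'y.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, jols)) for i in range(3)]
    intercept, b_anchor, b_practice = solve_linear_system(xtx, xty)
    return {"intercept": intercept, "anchor": b_anchor, "practice": b_practice}


# Hypothetical toy data (percent scale), generated without noise.
conditions = ["low", "low", "low", "high", "high", "high"]
practice_scores = [20.0, 40.0, 60.0, 30.0, 50.0, 70.0]
jols = [32.5, 42.5, 52.5, 47.5, 57.5, 67.5]

result = fit_anchor_regression(conditions, practice_scores, jols)
print(result)
```

With the ±0.5 coding, the anchor coefficient is the difference in predicted JOLs between the high and low anchor conditions at average practice performance, and the intercept is the predicted JOL midway between the two conditions.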

References

Ariel, R., & Dunlosky, J. (2011). The sensitivity of judgment-of-learning resolution to past test performance, new learning, and forgetting. Memory & Cognition, 39(1), 171–184. https://doi.org/10.3758/s13421-010-0002-y

Bakeman, R. (2005). Recommended effect size statistics for repeated measures designs. Behavior Research Methods, 37(3), 379–384. https://doi.org/10.3758/BF03192707

Blake, A. B., & Castel, A. D. (2018). On belief and fluency in the construction of judgments of learning: Assessing and altering the direct effects of belief. Acta Psychologica, 186, 27–38. https://doi.org/10.1016/j.actpsy.2018.04.004

Brooks, B. M. (1999). Primacy and recency in primed free association and associative cued recall. Psychonomic Bulletin & Review, 6(3), 479–485. https://doi.org/10.3758/BF03210838

Castel, A. D. (2008). Metacognition and learning about primacy and recency effects in free recall: The utilization of intrinsic and extrinsic cues when making judgments of learning. Memory & Cognition, 36(2), 429–437. https://doi.org/10.3758/MC.36.2.429

Castel, A. D., McCabe, D. P., & Roediger, H. L., III. (2007). Illusions of competence and overestimation of associative memory for identical items: Evidence from judgments of learning. Psychonomic Bulletin & Review, 14(1), 107–111. https://doi.org/10.3758/BF03194036

Connor, L. T., Dunlosky, J., & Hertzog, C. (1997). Age-related differences in absolute but not relative metamemory accuracy. Psychology and Aging, 12(1), 50–71. https://doi.org/10.1037/0882-7974.12.1.50

Daniels, K. A., Toth, J. P., & Hertzog, C. (2009). Aging and recollection in the accuracy of judgments of learning. Psychology and Aging, 24(2), 494–500. https://doi.org/10.1037/a0015269

Dunlosky, J., & Matvey, G. (2001). Empirical analysis of the intrinsic-extrinsic distinction of judgments of learning (JOLs): Effects of relatedness and serial position on JOLs. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27(5), 1180–1191. https://doi.org/10.1037/0278-7393.27.5.1180

Dunlosky, J., & Thiede, K. W. (2004). Causes and constraints of the shift-to-easier-materials effect in the control of study. Memory & Cognition, 32(5), 779–788. https://doi.org/10.3758/BF03195868

Dunlosky, J., Mueller, M. L., & Tauber, S. K. (2015). The contribution of processing fluency (and beliefs) to people’s judgments of learning. In D. S. Lindsay, A. P. Yonelinas, & H. I. Roediger (Eds.), Remembering: Attributions, processes, and control in human memory: Essays in honor of Larry Jacoby (pp. 46–64). Psychology Press.

England, B. D., & Serra, M. J. (2012). The contributions of anchoring and past-test performance to the underconfidence-with-practice effect. Psychonomic Bulletin & Review, 19(4), 715–722. https://doi.org/10.3758/s13423-012-0237-7

Faul, F., Erdfelder, E., Buchner, A., & Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160. https://doi.org/10.3758/BRM.41.4.1149

Finn, B., & Metcalfe, J. (2007). The role of memory for past test in the underconfidence with practice effect. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33(1), 238–244. https://doi.org/10.1037/0278-7393.33.1.238

Finn, B., & Metcalfe, J. (2008). Judgments of learning are influenced by memory for past test. Journal of Memory and Language, 58(1), 19–34. https://doi.org/10.1016/j.jml.2007.03.006

Frank, D. J., & Kuhlmann, B. G. (2017). More than just beliefs: Experience and beliefs jointly contribute to volume effects on metacognitive judgments. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(5), 680–693. https://doi.org/10.1037/xlm0000332

Furnham, A., & Boo, H. C. (2011). A literature review of the anchoring effect. The Journal of Socio-Economics, 40(1), 35–42. https://doi.org/10.1016/j.socec.2010.10.008

Hanczakowski, M., Zawadzka, K., Pasek, T., & Higham, P. A. (2013). Calibration of metacognitive judgments: Insights from the underconfidence-with-practice effect. Journal of Memory and Language, 69(3), 429–444. https://doi.org/10.1016/j.jml.2013.05.003

Hertzog, C., Price, J., Burpee, A., Frentzel, W. J., Feldstein, S., & Dunlosky, J. (2009). Why do people show minimal knowledge updating with task experience: Inferential deficit or experimental artifact? The Quarterly Journal of Experimental Psychology, 62(1), 155–173. https://doi.org/10.1080/17470210701855520

Hertzog, C., Curley, T., & Dunlosky, J. (2021). Are age differences in recognition-based retrieval monitoring an epiphenomenon of age differences in memory? Psychology and Aging, 36(2), 186–199. https://doi.org/10.1037/pag0000595

Higham, P. A., & Higham, D. P. (2019). New improved gamma: Enhancing the accuracy of Goodman–Kruskal’s gamma using ROC curves. Behavior Research Methods, 51(1), 108–125. https://doi.org/10.3758/s13428-018-1125-5

Higham, P. A., Zawadzka, K., & Hanczakowski, M. (2016). Internal mapping and its impact on measures of absolute and relative metacognitive accuracy. In J. Dunlosky & S. K. Tauber (Eds.), The Oxford handbook of metamemory (pp. 39–61). Oxford University Press.

Ikeda, K. (2022). How beliefs explain the effect of achievement goals on judgments of learning. Metacognition and Learning, 17(2), 499–530. https://doi.org/10.1007/s11409-022-09294-y

Jacowitz, K. E., & Kahneman, D. (1995). Measures of anchoring in estimation tasks. Personality and Social Psychology Bulletin, 21(11), 1161–1166. https://doi.org/10.1177/01461672952111004

JASP Team. (2022). JASP (Version 0.16.3) [Computer software].

Jeffreys, H. (1961). Theory of probability (3rd ed.). Oxford University Press.

Jia, X., Li, P., Li, X., Zhang, Y., Cao, W., Cao, L., & Li, W. (2016). The effect of word frequency on judgments of learning: Contributions of beliefs and processing fluency. Frontiers in Psychology, 6, 1995. https://doi.org/10.3389/fpsyg.2015.01995

Koriat, A. (1997). Monitoring one’s knowledge during study: A cue utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126(4), 349–370. https://doi.org/10.1037/0096-3445.126.4.349

Linderholm, T., Zhao, Q., Therriault, D. J., & Cordell-McNulty, K. (2008). Metacomprehension effects situated within an anchoring and adjustment framework. Metacognition and Learning, 3(3), 175–188. https://doi.org/10.1007/s11409-008-9025-1

Metcalfe, J., & Kornell, N. (2003). The dynamics of learning and allocation of study time to a region of proximal learning. Journal of Experimental Psychology: General, 132(4), 530–542. https://doi.org/10.1037/0096-3445.132.4.530

Mueller, M. L., Tauber, S. K., & Dunlosky, J. (2013). Contributions of beliefs and processing fluency to the effect of relatedness on judgments of learning. Psychonomic Bulletin & Review, 20(2), 378–384. https://doi.org/10.3758/s13423-012-0343-6

Mueller, M. L., Dunlosky, J., Tauber, S. K., & Rhodes, M. G. (2014). The font-size effect on judgments of learning: Does it exemplify fluency effects or reflect people’s beliefs about memory? Journal of Memory and Language, 70, 1–12. https://doi.org/10.1016/j.jml.2013.09.007

Mueller, M. L., Dunlosky, J., & Tauber, S. K. (2016). The effect of identical word pairs on people’s metamemory judgments: What are the contributions of processing fluency and beliefs about memory? The Quarterly Journal of Experimental Psychology, 69(4), 781–799. https://doi.org/10.1080/17470218.2015.1058404

Nelson, D. L., McEvoy, C. L., & Schreiber, T. A. (2004). The University of South Florida free association, rhyme, and word fragment norms. Behavior Research Methods, Instruments, & Computers, 36(3), 402–407. https://doi.org/10.3758/BF03195588

Olejnik, S., & Algina, J. (2003). Generalized Eta and omega squared statistics: Measures of effect size for some common research designs. Psychological Methods, 8(4), 434–447. https://doi.org/10.1037/1082-989X.8.4.434

Palmer, E. C., David, A. S., & Fleming, S. M. (2014). Effects of age on metacognitive efficiency. Consciousness and Cognition, 28, 151–160. https://doi.org/10.1016/j.concog.2014.06.007

Price, J., & Harrison, A. (2017). Examining what prestudy and immediate judgments of learning reveal about the bases of metamemory judgments. Journal of Memory and Language, 94, 177–194. https://doi.org/10.1016/j.jml.2016.12.003

Putnam, A. L., Deng, W., & DeSoto, K. A. (2022). Confidence ratings are better predictors of future performance than delayed judgments of learning. Memory, 30(5), 537–553. https://doi.org/10.1080/09658211.2022.2026973

Rouder, J. N., & Lu, J. (2005). An introduction to Bayesian hierarchical models with an application in the theory of signal detection. Psychonomic Bulletin & Review, 12(4), 573–604. https://doi.org/10.3758/BF03196750

Scheck, P., & Nelson, T. O. (2005). Lack of pervasiveness of the underconfidence-with-practice effect: Boundary conditions and an explanation via anchoring. Journal of Experimental Psychology: General, 134(1), 124–128. https://doi.org/10.1037/0096-3445.134.1.124

Scheck, P., Meeter, M., & Nelson, T. O. (2004). Anchoring effects in the absolute accuracy of immediate versus delayed judgments of learning. Journal of Memory and Language, 51, 71–79. https://doi.org/10.1016/j.jml.2004.03.004

Senko, C., Perry, A. H., & Greiser, M. (2022). Does triggering learners’ interest make them overconfident? Journal of Educational Psychology, 114(3), 482–497. https://doi.org/10.1037/edu0000649

Thiede, K. W., Anderson, M. C. M., & Therriault, D. (2003). Accuracy of metacognitive monitoring affects learning of texts. Journal of Educational Psychology, 95(1), 66–73. https://doi.org/10.1037/0022-0663.95.1.66

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185(4157), 1124–1131. https://doi.org/10.1017/CBO9780511809477.002

Wagenmakers, E. J., Love, J., Marsman, M., Jamil, T., Ly, A., Verhagen, J., Selker, R., Gronau, Q. F., Dropmann, D., Boutin, B., Meerhoff, F., Knight, P., Raj, A., van Kesteren, E. J., van Doorn, J., Šmíra, M., Epskamp, S., Etz, A., Matzke, D., …, Morey, R. D. (2018). Bayesian inference for psychology. Part 2: Example applications with JASP. Psychonomic Bulletin & Review, 25(1), 58–76. https://doi.org/10.3758/s13423-017-1323-7

Yang, C., Sun, B., & Shanks, D. R. (2018). The anchoring effect in metamemory monitoring. Memory & Cognition, 46(3), 384–397. https://doi.org/10.3758/s13421-017-0772-6

Zawadzka, K., & Higham, P. A. (2015). Judgments of learning index relative confidence, not subjective probability. Memory & Cognition, 43(8), 1168–1179. https://doi.org/10.3758/s13421-015-0532-4

Zhao, Q. (2012). Effects of accuracy motivation and anchoring on metacomprehension judgment and accuracy. Journal of General Psychology, 139(3), 155–174. https://doi.org/10.1080/00221309.2012.680523

Zhao, Q., & Linderholm, T. (2011). Anchoring effects on prospective and retrospective metacomprehension judgments as a function of peer performance information. Metacognition and Learning, 6(1), 25–43. https://doi.org/10.1007/s11409-010-9065-1

Acknowledgements

This work was supported by JSPS KAKENHI, Grant Number 22K03089 (to Kenji Ikeda).

Ethics declarations

Ethics approval

APA ethical standards were followed in the conduct of this study, and the study was approved by the ethics committee of Tokai Gakuin University.

Consent to participate

Before participation, all participants provided informed consent to participate in the experiment.

Conflicts of interest

The author has no conflicts of interest.

About this article

Cite this article

Ikeda, K. Uninformative anchoring effect in judgments of learning. Metacognition Learning 18, 527–548 (2023). https://doi.org/10.1007/s11409-023-09339-w

DOI: https://doi.org/10.1007/s11409-023-09339-w