Abstract

In this paper, we provide a wide-ranging survey of the state of the art in the area of communication and asset price dynamics. We start out by documenting empirical evidence that social communication influences investment decisions and asset prices, before turning to the main modelling approaches in the literature (both past and present). We discuss models of belief-updating based on observed performance; models of herd behaviour; and models with social interactions that arise from preferences for conformity or contrarianism. Our main contribution is to introduce readers to a social network approach which has been widely used in the opinion dynamics literature, but only recently applied to asset pricing. In the final part, we show how recent contributions to both modelling and empirical work are using the social network approach to improve our understanding of financial markets and asset price dynamics. We conclude with some thoughts on fruitful avenues for future research.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Investing in speculative assets is a social activity. Investors spend a substantial part of their leisure time discussing investments, reading about investments, or gossiping about others’ successes or failures in investing. It is thus plausible that investors’ behaviour (and hence prices of speculative assets) would be influenced by social movements. (Shiller 1984)

[T]he time has come to move beyond behavioural finance to ‘social finance’, which studies the structure of social interactions, how financial ideas spread and evolve, and how social processes affect financial outcomes. (Hirshleifer 2015)

1 Introduction

Asset prices defy easy explanation but affect the fortunes of individuals and entire economies. When asset prices increase, investors make capital gains and firms can raise more financial capital by issuing shares. Conversely, if asset prices fall sharply, aggregate wealth takes a hit and the resulting losses are widespread, from professional investors, to workers trying to supplement their income, to the old whose pensions depend on the value of the stock market. The ripple effect to the wider economy is a ‘financial accelerator’ in reverse. Therefore, understanding the determinants of asset prices is a worthwhile endeavour.

Following the seminal paper of Shiller (1984), attention turned to communication as a potential determinant of asset prices. In short, the idea is that investors are influenced not only by ‘fundamentals’ or personal judgement about an asset’s value, but also by the opinions of others they come into contact with, such as friends and relatives, well-known successful investors or industry experts. There is a large empirical literature which has found that social factors influence investment decisions, and alongside this literature has developed a model-based literature that studies the impact of communication on asset prices.

In this paper, we survey the literature that models communication and asset price dynamics. We start out by documenting empirical evidence that social communication influences investment decisions and asset prices, before turning to the main modelling approaches in the literature. As a benchmark, we first study an asset pricing model with rational expectations and no communication. We then set out an alternative framework based on heterogeneous expectations and communication between investors, and highlight some important implications. We emphasize heterogeneous expectations because, in most models, communication between investors happens at the level of individual beliefs or asset demands. We also highlight what the main approaches in the literature have in common, such as the assumed use of rule-of-thumb behaviours, or heuristics, by agents in financial markets.

We consider in detail how communication has been modelled in the literature. In particular, we discuss models with updating of belief types (chartist or fundamentalist) based on the observed performance of other investors; models of herd behaviour; and models with ‘social interactions’ due to preferences for conformity or contrarianism at the individual level. Our main departure from previous literature is to highlight a social network approach, which has been widely used in the opinion dynamics literature, but only recently applied to asset pricing. We introduce some key concepts and notation in relation to networks, thus providing readers with the tools needed to understand this growing area of the literature.

In the final part, we show how the most recent contributions to modelling and empirical work are using the social network approach to good effect. We include here works that extend social interactions models via the inclusion of local social networks; models that build directly on the opinion dynamics approach by adding a financial market and performance-based updating of beliefs; and diffusion-based models in which beliefs spread, like a virus, in the population of investors. We highlight some advantages of these modern approaches, and we also show how social network models of asset prices can be—and are being—taken to the data by leading researchers with cutting-edge methods in hand. We conclude by discussing some promising avenues for future research.

2 Motivation

Interest in communication and asset prices was sparked by the work of Shiller (1984). Shiller argued that since investing in speculative assets is a social activity, investor behaviour (and hence asset prices) will be influenced by collective social movements, such as ‘fads’ and fashions. Shiller also pointed to ‘local’ social influences that could affect investor decisions and asset prices, such as group pressure to conform with the opinions of one’s peers or the exchange of information through word-of-mouth communication.

Many empirical studies have considered this social influence hypothesis. In an early paper, Shiller and Pound (1989) surveyed 131 investors and found that many stock purchases were influenced by interactions with personal contacts, such as friends and relatives. In a German study, Arnswald (2001) surveyed 275 fund managers and found that information exchange with industry experts was cited as the most important source of information for their work, followed by conversations with colleagues and media reports.

Focusing on investment portfolios, Hong et al. (2005) show that mutual fund managers in the US have similar asset holdings to those of other fund managers in the same city, while Ivković and Weisbenner (2007) show that US households are more likely to purchase stocks from a particular industry if their neighbours did so. Shive (2010) investigated the impact of social influence using trading data for the 20 most active stocks in Finland; socially influenced trades were found to predict stock returns. In a similar vein, Ozsoylev et al. (2014) found that investors in the Istanbul Stock Exchange are connected in an empirical investor network and that more central investors earn higher returns with respect to information events.

More recently, there has been attention on social media as a medium of communication that affects investment decisions and asset prices. For example, Jiao et al. (2020) study the impact of traditional news media and social media on turnover and stock volatility and find that traditional news coverage predicts decreases in subsequent turnover and volatility, whereas social media coverage predicts increases in subsequent turnover and volatility. They also show that these patterns are consistent with a model of ‘echo chambers’ in which social networks repeat news, but some investors interpret repeated news as new information. By comparison, Semenova and Winkler (2021) study text data from online discussions on the investor forum WallStreetBets and find that discussions about particular stocks can be self-perpetuating and that peer influence on retail investors is primarily through consensus formation and belief contagion by shifting investor attention to particular stocks.

Finally, there are also several ‘lab experiments’ that study the impact of communication on asset prices. For example, Oechssler et al. (2011) study the implications of inside information and communication among traders in an experimental asset market; they find that having traders with an information advantage can create bubbles, but communication is counterproductive for bubble formation. Schoenberg and Haruvy (2012) studied traders in an experimental asset market who received communication about their relative performance; this information had a significant impact on market prices and boom duration. Communication and price bubbles is revisited by Steiger and Pelster (2020), who find that face-to-face communication leads to significantly larger asset price bubbles than standard laboratory markets and increases size of bubbles more than communication via social media ‘likes’.

In short, there is compelling evidence that communication affects investment decisions and asset prices. This observation raises important questions for researchers interested in financial markets. How should social interactions between investors be modelled? Which social interactions, specifically, are most useful in explaining price volatility and stylized facts of empirical stock returns? In the next sections, we first set out a benchmark asset pricing model in which communication plays no role. We then add heterogeneous expectations in the model and use this framework to introduce the reader to the various approaches to modelling social communication in the literature, including the most recent developments.

3 Asset pricing without communication

Let us start by considering a benchmark model of asset prices with no social influence. We assume that investors have common rational expectations, consistent with the efficient market hypothesis (see e.g. Fama 1970). We refer to this as the ‘conventional approach’.

The conventional approach stresses economic fundamentals as the key determinant of asset prices. These fundamentals include, among others, interest rates on bonds and dividends paid out to shareholders. Note that dividends should be considered stochastic since future profitability of firms is uncertain ex ante and firms are not required to pay dividends to shareholders even if they earn a profit. As a result, investors must form expectations of future fundamentals, such as dividends, which are random variables.

To make this concrete, suppose there are N investors and two assets, a share and a bond. The first asset (shares, x) pays a stochastic dividend \(d_t\) in each period \(t \in {\mathbb {N}}\); we assume \(d_t\) is exogenous and has a fixed conditional variance. Shares can be purchased at a known price \(p_t\) and their (unknown) resale price one period ahead is \(p_{t+1}\). The second asset (bonds) is riskless: it pays a fixed return \(r>0\) and has a price normalized to 1. Shares are in zero net supply, whereas bonds have a flexible supply. As a result, investors are not liquidity constrained and may take arbitrarily large positive or negative asset positions.

Investors want a portfolio of shares and bonds that maximizes their utility. Following Markowitz (1952), we give each investor \(i\in {\mathcal {N}}\) a mean-variance utility function with risk aversion parameter \(a>0\), where \({\mathcal {N}}\) denotes the set of all investors. We also give all investors common rational expectations \(E_t[.]=E[.|I_t]\), where \(E_t[.]\) is the conditional expectations operator and \(I_t = \{p_t,p_{t-1},\ldots ;d_t, d_{t-1},\ldots \}\) is the information set of investors at time t.

The optimal portfolio choice of investor \(i\in {\mathcal {N}}\) solves the problem:

where \(w_{t+1}^i = (p_{t+1} + d_{t+1} ) x_t^i + (1+r)(w_t^i - p_t x_t^i)\) is the future wealth, \(w_t^i - p_t x_t^i\) is holdings of the riskless asset (bonds), and \(V_t\) denotes conditional variance. Let \(R_{t}= p_{t}+d_{t} - (1+r)p_{t-1}\) denote the excess return on shares.

The first-order condition for problem (1) is:

where we set \(V_t[R_{t+1}]=\sigma ^2>0\) because under standard assumptions about dividends, there is a fundamental rational expectations solution with constant conditional variance.Footnote 1

Equation (2) says that the optimal demand for shares equals the ratio of the expected excess return to the (scaled) conditional return variance; intuitively, the latter is the product of the aversion to risk a and the quantity of risk \(\sigma ^2\). Because all these terms are common knowledge to investors with rational expectations, the demand for shares is homogeneous.

Market-clearing requires that the aggregate demand for shares equal the zero net supply, or \(\sum _i x_t^i = 0\), which implies that \(p_t = \frac{E_t[p_{t+1}] + E_t[d_{t+1}]}{1+r}\). Assuming that rational bubbles are ruled out, the asset price is given byFootnote 2

i.e. price equals the expected present-discounted-value of the future dividend stream.

Hence, the rational expectations asset pricing model set out above implies that:

-

Investors have common expectations, such that all investors value the asset equally and take the same asset position (given the absence of liquidity constraints);

-

The price can be determined using the expectations of a ‘representative agent’ and these expectations reflect all available (useful) information about asset payoffs;

-

Price depends on expected future fundamentals (dividends and interest rates) in all future periods and is equal to the risky asset’s intrinsic value.

According to this textbook model of asset pricing, social factors play no role for the simple reason that no investor can learn anything useful from interactions with others: all investors share the same information and beliefs, and thus take the same investment decisions.

The conventional approach clearly makes strong assumptions and has strong implications. For instance, because investors value the asset equally and take identical asset positions, there is no trade between investors. From an empirical standpoint, both Shiller (1981) and LeRoy and Porter (1981) pointed to problems with the simple present-discounted-value model of asset prices in (3): historical US stock prices have been far too volatile to be explained by observed changes in dividends, so there is an excess volatility puzzle.

A further implication of the present-value model in (3) is that changes in stock prices should be due solely to unanticipated news about future fundamentals (e.g. dividends). However, large declines in the stock market have been observed even when there has been little or no extra news about fundamentals, and stock prices often seem to be at odds with events in the real economy, to which the profitability of firms is presumably linked.

If fundamentals are not volatile enough to explain the observed variation in share prices, it may be that expectations of future payoffs are excessively volatile. For example, in a well-known passage of the General Theory, Keynes compares the stock market to a newspaper ‘beauty contest’ in which the participants attempt to guess the average opinion, knowing that other readers are participating in the same guessing game. If investors in the stock market are indeed anticipating the expectations of others when forming their own expectations, then expectations need not coordinate on rational expectations, and thus behavioural and psychological influences on investors become relevant considerations. Keynes’ analogy also suggests that investors may prefer to be informed about the expectations of others before making their own investment decisions; this could be achieved, for example, if investors communicate and share information about their beliefs as we discuss below.

These three ingredients—heterogeneous expectations, behavioural influences, and social communication—provide the foundations of an alternative approach to asset pricing that has received considerable interest in recent decades. In the remainder of this paper, we set out this approach and then take the reader to the forefront of this literature.

4 Asset pricing with communication

In this section, we introduce a benchmark asset pricing model in which the investors have heterogeneous expectations about asset payoffs. We then add social communication in the model and use this framework to discuss the main modelling approaches in the literature.

4.1 Heterogeneous expectations

Early work in the literature emphasized heterogeneous expectations across different groups of investors. However, there are many ways in which expectations could differ across individuals, and each could have different asset pricing implications. Research has therefore been informed by psychological evidence that real-world decisions are based on rules-of-thumb, or heuristics, rather than full optimization by unboundedly rational agents (see e.g. Gigerenzer and Todd 1999). This behavioural approach to expectations formation emphasizes simple forecasting rules that may differ across individual investors.

Suppose, therefore, that our model is unchanged, except that investors differ in expectations. We denote the subjective expectation of investor i as \(\tilde{E}_t^i[.]\), where the ‘tilde’ indicates that the expectation is boundedly-rational, in the sense of Simon (1957), to reflect cognitive limitations of investors.Footnote 3 Similarly, \({\tilde{V}}_t^i[.]\) is the subjective variance of investor i.

The optimal portfolio choice of investor \(i \in {\mathcal {N}}\) now solves the problemFootnote 4

The demand of investor i is therefore amended from (2) to

where \({\tilde{\sigma }}_{i,t}^2\) is the subjective return variance of investor i at date t.

Equation (5) makes clear that if investors differ in expectations, then they will generally take different investment decisions. For example, optimistic investors will have higher (risk-adjusted) valuations than pessimistic investors and thus find it optimal to invest more in the risky asset.Footnote 5 Aggregating asset demands across investors and equating aggregate demand with the zero (net) supply of shares, we find that the market-clearing asset price is

Some papers consider heterogeneous, time-varying conditional variances as in (5) and (6). In this case, both an investor’s expectations about payoffs \({\tilde{E}}_t^i[p_{t+1}+d_{t+1}]\) and their subjective return variance \({\tilde{\sigma }}_{i,t}^2\) need to be specified (see De Grauwe and Grimaldi 2006; Ap Gwilym 2010). However, most papers have preferred to focus on heterogeneous expectations of payoffs by assuming subjective variances are homogeneous across agents. In this case, (6) simplifies to

Equation (7) simply says that the asset price equals the average expected payoff (across all investors), discounted by gross return on the riskless asset. There are some notable differences relative to the fundamental price under rational expectations in Eq. (3).

First, the rational expectations price (3) equals the expected discounted present value of future dividends—i.e. the intrinsic value of the risky asset. The price under heterogeneous expectations, (7), will coincide with the latter only if subjective expectations are on average equal to the rational expectation. Second, to the extent that average opinion determines asset prices, the asset price in (7) is in line with Keynes’ beauty-contest view of the stock market: speculative asset prices depend on average opinion, which consists of the subjective assessments of individual investors, rather than strictly rational valuations. Finally, note that each expectation has an equal weight of 1/N, so investors whose subjective expectations are strongly optimistic or pessimistic may ‘bias’ the price in one direction or another.

A common approach in the literature has been to focus on heterogeneous price expectations \( E_t^i[p_{t+1}]\) by holding expected dividends \({\tilde{E}}_t^i[d_{t+1}]\) equal across investors.Footnote 6 Price expectations \({\tilde{E}}_t^i[p_{t+1}]\) are often assumed to be of two types: chartist and fundamentalist. The basic idea is that at any given point in time, investors may make either a chartist (or trend-following) forecast of asset prices or a ‘fundamentalist’ forecast which conditions on fundamental indicators such as dividends and interest rates. The key difference between the two forecasting approaches is that the chartist approach is backward-looking (relying on past prices), whereas the fundamental approach is forward-looking: investors use information on current and projected future fundamentals such as dividends and interest rates.

A chartist forecasting rule c has the general form:

where the function \(f_c: {\mathbb {R}}^L \rightarrow {\mathbb {R}}\) describes how the chartist forecast relates to past prices.

The parameter \(L \ge 1\) in (8) is the longest price lag that is taken into account by chartists. The function \(f_c\) may be linear or nonlinear, but linearity is often assumed in the literature for the sake of analytical tractability. Note that the above specification nests both extrapolative rules (that consider the size and direction past price changes) and level rules (that consider the absolute level of prices). Hence, (8) allows a range of behaviours associated with the trend-following and technical analysis popularized by Charles Dow.

A fundamentalist forecasting rule f has the form:

where \(E_t[p_{t+1}^*]\) is the expected future fundamental price.

Note that the forecast \(E_t[p_{t+1}^*]\) would equal the (actual) expected future price if all investors were fundamentalists with rational expectations; it is thus equal to the one-period-ahead conditional expectation of (3). Equivalently, \(E_t[p_{t+1}^*]\) is the price that is expected to clear fundamental demand at date \(t+1\),Footnote 7 Note that (9) implies that fundamentalists base price forecasts on fundamental information only, even if the market is populated by some chartists; hence such fundamentalist forecasts are behavioural in the sense that they ignore (or are ignorant of) the presence of chartists in the market. This ‘fundamentals-only’ approach appears to be a good (rough) description of some prominent investors.Footnote 8

Early works that modelled both chartist and fundamental investors, include Zeeman (1974), Beja and Goldman (1980) and Chiarella (1992). These papers showed that the presence of chartist investors in the market provides an explanation for the unstable short run behaviour of stock prices. For example, in the model of Zeeman (1974), there is endogenous switching of asset prices between bull and bear markets that can be traced to the behaviour of fundamentalists and chartists, whereas Beja and Goldman (1980) show that with sufficiently strong trend-following in their model, the dynamic system is unstable with exploding price oscillations, such that speculative trading of chartists destabilizes asset prices. In Chiarella (1992), the Beja-Goldman model is generalized such that the demand of chartists is nonlinear, increasing and S-shaped; as a result, a unique stable limit cycle exists along which the asset price and chartists’ assessment of the price trend fluctuate over time.Footnote 9

The focus on chartists and fundamentalists is consistent with evidence on the forecasting strategies of real-world investors. For example, Frankel and Froot (1990) provide survey evidence from foreign exchange forecasting firms: some firms described themselves as focusing on economic fundamentals, whereas others said they relied on chartist analysis or a combination of the two approaches. Interestingly, the relative proportion of firms using chartist forecasting approaches increased substantially over the decade from 1978 to 1988—a period of substantial Dollar appreciation which is difficult to explain using economic fundamentals.

Further evidence is provided by Taylor and Allen (1992) using questionnaire surveys of foreign exchange dealers in London: both chartist (technical) and fundamentalist forecasting approaches are cited, but there is a skew towards chartist, as opposed to fundamentalist, analysis at shorter horizons, which is steadily reversed as the forecast horizon is increased. In a review, Menkhoff and Taylor (2007) find the overall shares of chartist and fundamentalist approaches in foreign exchange forecasting are quite similar, whereas Menkhoff (2010) finds that the pattern of greater reliance on technical analysis at short horizons applies also to fund managers, with the pattern again reversed at longer horizons such as months and years.

The survey evidence above indicates that the popularity of chartist versus fundamentalist forecasting strategies is not fixed over time and seems to be linked to the relative performance of these forecasting approaches (see Frankel and Froot 1990). In the early models with chartists and fundamentalists discussed above, the population shares of the two groups were taken as fixed, and hence updating of forecasting strategies was neglected. Note that specifying such updating requires us to take a stand on communication between investors, since adoption of a different forecasting rule based on performance implies that investors know both the forecasting rules of others and their relative performance.

These are some key themes that have been taken up in the subsequent literature.

4.2 Social communication models

We now introduce asset pricing models with heterogeneous expectations and communication, including type updating based on performance, herding models, and social interactions.

4.2.1 The Brock–Hommes model

The Brock and Hommes (1998) model brings together some key ingredients discussed so far. In the simplest version of the model, a large population of investors may choose between a chartist forecasting rule and a fundamentalist forecasting rule. It is assumed that each investor can observe the performance (i.e. profitability) of all other investors and the forecasting rule they follow. The key dynamic in the model is the updating of forecasting strategies: in any period, the better-performing rule will have a higher rate of adoption in the population of investors (as we show below).Footnote 10 The Brock–Hommes model can be interpreted as a simple model of communication: social comparisons matter for belief updating.

Investors are boundedly-rational. The price forecast of investor i is denoted by \(\tilde{E}_t^i[p_{t+1}]\) (as in Sect. 5.1 above), and all investors are assumed to have a common subjective return variance which is fixed at \({\tilde{\sigma }}^2>0\). Dividends follow an exogenous process of the form \(d_t = {\bar{d}} + \varepsilon _t\), where \({\bar{d}}>0\) and \(\varepsilon _t\) is IID and mean zero. It assumed that all investors know the dividend process, such that \(\tilde{E}_t^i[d_{t+1}]={\bar{d}}\) for all \(i\in {\mathcal {N}}\). In any period \(t \in {\mathbb {N}}\), an investor must adopt either a chartist forecasting rule or a fundamental forecasting rule for the price.

Given the above assumptions, the demand of investor i at date t (see (5)) is

where \({\tilde{E}}_t^i[p_{t+1}]\) is the expectation of investor i given their forecasting rule at date t.

The fundamental forecasting rule can be derived by finding the price \(p_t^*\) that would clear the market if all investors were fundamentalists with common rational expectations, such that \({\tilde{E}}_t^i[p_{t+1}] = E_t[p_{t+1}^*]\) for all \(i\in {\mathcal {N}}\). Using this expression in (10) and noting that market-clearing requires \(x_t^i (p_t^*) = 0\) \(\forall i\in {\mathcal {N}}\) gives the fundamental price asFootnote 11

such that the fundamental forecasting rule (9) simplifies to

Here, \({\overline{p}}\) is the fundamental price in (3) when expected dividends equal \({\bar{d}}\) in every period. It says that in the absence of rational bubbles, an asset that is expected to pay a fixed dividend \({\overline{d}}\) in perpetuity has a price (= intrinsic value) given by the presented discounted value of the dividend stream. The fundamental forecast (12) is based upon this intrinsic value.

The chartist forecasting rule is given by a special case of (8) when there are \(L=1\) lags and the function \(f_c\) is linear:

where \(g>0\) is the trend-following parameter.

Note that the forecasting rule (13) has the interpretation that chartists expect the future deviation of price from the fundamental price to be linked to its past value \((p_{t-1} - {\bar{p}})\) via the trend-following parameter g; hence, chartists extrapolate from the past to the future. Note that the one-lag chartists are myopic in contrast to the fundamentalists who are farsighted.

The key mechanism in the model is the adoption of types via evolutionary competition. In particular, at a given date t, each investor must be either a chartist or a fundamentalist, and the probabilities of being each type are depend on the relative performance of the two forecasting rules. In a large population \(N \rightarrow \infty \), only the population shares of investors of each type need to be tracked. Brock and Hommes therefore assume the population shares are determined by a discrete choice logistic updating equation:

where \(\beta \ge 0\) is the intensity of choice and \(U_{t}^h \in {\mathbb {R}}\) is the fitness of predictor h at date t.

Equation (14) says that the share of the population using the chartist predictor c at date \(t+1\) depends on its relative performance against the fundamentalist predictor f, as judged by the past observed levels of fitness \(U_{t}^c\) and \(U_{t}^f\). The intensity of choice parameter \(\beta \) determines how fast agents switch to better-performing predictors. In the special case \(\beta =0\), the population shares are fixed and equal to 1/2; in this case, performance is ignored by investors and they are split equally between the two predictors. At the other extreme \(\beta \rightarrow \infty \), all investors will adopt in period \(t+1\) the best-performing predictor in period t.Footnote 12

Note that for any \(\beta \in (0, \infty )\), the better-performing predictor will be adopted by a larger share of the population, which is apparent if we write (14) as \(n_{t+1}^c = \frac{ 1 }{ 1 + e^{\beta (U_{t}^f - U_{t}^c)} }\).

The model is closed with the fitness measures \(U_{t,c}\) and \(U_{t,f}\). Brock and Hommes (1998) use the realized profits under a given predictor \(h \in \{c,f\}\) net of a predictor costFootnote 13

where \({\tilde{R}}_t:=p_t + d_t - (1+r)p_{t-1}\) is the realized excess return on shares at date t and \(C^h \ge 0\) is the cost of obtaining predictor h.

Brock and Hommes assume that only the fundamental predictor is costly: \(C^c = 0\), \(C^f = C \ge 0\). The basic idea here is that fundamental information may be more costly to obtain because it relies on more than past prices (which are readily observable). The asset price \(p_t\) is determined by market-clearing, given an assumption of zero outside supply, such that \(\sum _{h \in \{c,f\}} n_t^h x_t^h=0\). The market-clearing asset price is

where \(\tilde{p}_t: = p_t - {\bar{p}}\) is the deviation of price from the fundamental price.

Brock and Hommes (1998) establish several properties of the dynamical system (10)–(16):

-

For \(0<g<1+r\), the model has a unique, globally stable steady state. At this (fundamental) steady state, the asset price p is equal to the fundamental price \({\bar{p}}\).

-

For \(g>2(1+r)\), there are three steady states: two non-fundamental steady states with (resp.) positive and negative price deviation, and a fundamental steady state where \(p={\bar{p}}\), such that \(\tilde{p}=0\). The fundamental steady state is unstable.

-

For \(1+r<g<2(1+r)\), there are two possibilities: either (i) there are three steady states (as above) and the fundamental steady state is unstable, or (ii) the fundamental steady state is the unique, globally stable steady state.Footnote 14

-

For \(1+r<g<2(1+r)\), there is a pitchfork bifurcation at some \(\beta = \beta ^*>0\), and as the intensity of choice increases further there is a Hopf bifurcation at some \(\beta =\beta ^{**}\). The non-fundamental steady states are unstable for \(\beta > \beta ^{**}\).

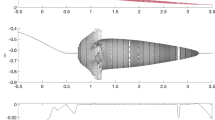

Bifurcation diagram for price deviation \(\tilde{p}_t\) in the two-type model (chartists and fundamentalists). Parameters are \(g=1.2\), \(r=0.1\), \(C=1\), \(a {\tilde{\sigma }}^2=1\) and we simulate the deterministic skeleton, i.e. \(d_t = {\overline{d}}\) for all t. For each \(\beta \) we plot 350 points following a transitory of 4000 periods from initial values \(\tilde{p}_{-1} \in (0,2)\)

In Fig. 1, we show a numerical bifurcation diagram for the case \(1+r<g<2(1+r)\). For low enough intensity of choice \(\beta \), the fundamental steady state \(\tilde{p}=0\) is stable. As the intensity of choice increases, the fundamental steady state becomes unstable due to a pitchfork bifurcation (\(\beta ^* \approx 2.37\)) in which two extra (non-fundamental) steady states \(\tilde{p}^*<0<\tilde{p}^*\) are created (since we assume \(\tilde{p}_{-1}>0\), only the upper ‘fork’ appears in the diagram). As the intensity of choice increases further, the non-fundamental steady states become unstable due to a Hopf bifurcation (\(\beta ^{**} \approx 3.33\)), giving more complicated dynamics, including stable limit cycles and quasi-periodic attractors. For intensity of choice above (approx.) 3.6, there is a positive Lyapunov exponent, indicating chaotic price dynamics.

The social aspect of the model (switching based on observable differences in performance) is essential for these dynamics. If the population shares of chartists were instead exogenous and fixed at some \({\bar{n}}^c \in [0,1]\), then for any initial condition \(p_{-1} \ne {\bar{p}}\) the price would converge monotonically to the fundamental price if \(0 \le {\bar{n}}^c g < 1+r\); remain fixed and equal to \(p_{-1}\) if \({\bar{n}}^c g = 1+r\); and diverge monotonically to \(+\infty \) or \(-\infty \) if \({\bar{n}}^c g > 1+r\).

Several papers have provided empirical support for the two-type Brock–Hommes model. For example, Boswijk et al. (2007) estimate an empirical version of the model on annual US stock price data from 1871 to 2003. The estimation supports the existence of heterogeneous expectations that differ in the extent of fundamentalist or trend-following behaviour, and the model offers an explanation for the run-up in stock prices after 1990 in terms of increased trend-following among investors. The estimated two-type model of fundamentalists and chartists in Chiarella et al. (2014) also supports the assumption of heterogeneous expectations, and the model can explain endogenously the rise and collapse of asset prices in a bubble-like fashion, as seen in the dot-com bubble.Footnote 15 The importance of heterogeneous expectations for asset price fluctuations has also been highlighted by ‘learning to forecast’ experiments in laboratory asset markets: evolutionary selection between behavioural expectation rules improves out-of-sample predictive performance and endogenous bubbles are related to trend-chasing behaviour (Hommes et al. 2008; Anufriev and Hommes 2012).

4.2.2 Herding models

We now turn to models of herd behaviour among investors. We confine our attention to behavioural herding models, which have been widely used in the asset pricing literature.Footnote 16

An early contribution was Kirman’s stochastic recruitment model (see Kirman 1993). The model was motivated by a puzzle in biology concerning the behaviour of ants: when faced with two identical food sources, ants concentrate more on one of the two food sources, but after a period they would switch their attention to the other food source. Thus, ants facing a symmetric situation behave collectively in an asymmetric way. Similar asymmetry has been observed in humans choosing between restaurants of similar price and quality situated on either side of a street: a large majority choose the same restaurant. Here, we consider a financial markets version of the Kirman (1993) model, as in the survey by Hommes (2006).

There are N investors who form an expectation about the future asset price \(p_{t+1}\), which may be either optimistic or pessimistic. An investor’s expectation depends on the outcome of random meetings with other investors. Let \(k_t \in \{0,1,\ldots ,N\}\) denote the number of investors that hold the optimistic view at date t; the initial number of optimistic investors \(k_0\) is given. Beliefs in periods \(t \ge 1\) are formed as follows. When two investors meet, the first investor is converted to the other investor’s belief with probability \((1-\delta )\), where \(\delta \in [0,1]\). There is also a small probability \(\epsilon \) that the first investor will change their view independently.

Given the above assumptions, the number of optimistic investors \(k_t\) is updated as follows:

where \(P_t^+\) and \(P_t^-\) are the state-contingent probabilities of a change in optimism, which depend on the prevailing number of optimists \(k_t\).

In Fig. 2, we simulate the fraction of optimistic investors \(k_t/N\) over 60,000 periods (left panel) and the ‘optimism distribution’ over a long time horizon (right panel). The fraction of optimistic investors starts out close to zero and remains below 10% for the first third of simulated periods (left panel), i.e. there is strong and persistent herding on the pessimistic belief. In the middle of the simulation, the fraction of optimistic investors rises due to a run of ‘optimism shocks’ and at one point exceeds 50% before becoming very volatile and then strongly pessimistic again. In the final third of the simulation, however, we see a sudden switch from herding on the pessimistic belief to persistent herding on the optimistic belief.

Fraction k/N in the Kirman (1993) model with parameters \(\epsilon = 0.002\), \(\delta = 0.01\), \(k_0=0\), \(N=100\). Left panel: \(T=60\),000 periods. Right panel: \(T=5\times 10^7\) periods

The herd-like behaviour of beliefs is also clear from the simulated distribution of the fraction of optimists (right panel). The distribution is bimodal with peaks at the extremes of 0 and 1, for which all investors are either pessimistic or optimistic. Although the average fraction of optimistic investors is one-half, the share of optimistic investors spends least time at this value and most time near the extremes of 0 and 1. There is a U-shaped distribution for the population share of optimists, as in Fig. 2, provided that \(\epsilon \) (the probability of an independent change in an investor’s beliefs) is small enough; in particular, we require \(\epsilon < (1-\delta )/(N-1)\) for a U-shaped distribution (see Kirman 1993, p. 144).

Kirman (1991) adds this simple herding mechanism in an asset pricing model, such that the population share of chartists \(n_t^c\) is determined by stochastic recruitment as in (17). Hence, unlike the Brock–Hommes model, population shares are determined by pure social dynamics, without any reference to profitability. The forecasting rules in the model are similar to the fundamentalist and chartist rules (12)–(13).Footnote 17 Price volatility is high when chartist beliefs dominate the market (\(n_t^c\) close to 1) and low when fundamental beliefs dominate (\(n_t^c\) close to 0). As the population share of chartists switches from low to high values as in Fig. 2, price volatility switches from a low volatility regime to a high volatility regime. The model thus provides a qualitative explanation for volatility clustering in returns.

Cont and Bouchaud (2000) also construct a herding model and assess its implications for stock market returns. In their model, investors interact through a random communication structure to determine the asset price (see below). In each period the investors \(i \in \{1,\ldots ,N\}\) receive a random signal \(\phi _i(t) \in \{-1,0,1\}\). If \(\phi _i(t)=+1\), investor i buys the asset in period t; if \(\phi _i(t)=-1\), investor i sells the asset; and if \(\phi _i(t)=0\), then investor i does not trade in period t. Aggregate excess demand for the asset at date t is thus:

The evolution of \(\phi _i(t)\) is described by

A value of \(b <1/2\) allows a finite fraction of traders not to trade, with positive probability, in a given period. Given the assumption of a symmetric marginal distribution in (19), expected excess demand is zero. Thus, in any given period t, excess demand will vary around zero due to random variations in the aggregate sentiment \(\sum _{i=1}^N \phi _i(t)\).

The asset price \(p_t\) is given by a market-maker equation in which the change in price is a linear function of the past excess demand:

where parameter \(\lambda >0\) is referred to as the market depth.

Cont and Bouchaud (2000) first consider the case where individual demands \([\phi _i(t)]_{1 \le i \le N}\) are IID random variables with finite variance. Since this assumption implies that individual demands are statistically independent, they call this the ‘independent agents’ hypothesis. In the case, the joint distribution of the demands is the product of the individual distributions, and the change in price \(\Delta p = p_t-p_{t-1}\) is a sum of N IID random variables with finite variance (and hence \(p_t\) is a random walk; see 20). For large N, the distribution of \(\Delta p\) is well-approximated by a normal distribution via the central limit theorem.

Is this a good model of stock market returns? The basic answer is no. Empirical distributions of asset returns and price changes are strongly non-normal, exhibiting fat tails and excess kurtosis; moreover, the tails of the empirical distributions are heavy with finite variance (see Pagan 1996; Mandelbrot and Hudson 2010). Accordingly, Cont and Bouchaud conclude that the ‘independent agents’ hypothesis is at odds with the data and turn to the hypothesis that individual demands are determined by communication between investors.

Cont and Bouchaud (2000) suppose that investors organize into groups which are given by forming independent binary links with probability c/N, where \(0<c<1\) is a connectivity parameter. The components of the so-formed Erdös and Rényi random network (see Sect. 6.1.2) then determine the trading groups. Investors in a particular group (or cluster) coordinate their actions in a herd-like manner, such that all members of a group act in unison to buy or sell (or to not trade).

For the case of \(n_g\) groups, the price equation is adjusted from (20) to

where \(D_{\alpha ,t} = N_\alpha \phi _\alpha (t)\) is the demand of group \(\alpha \) at date t, \(N_\alpha \) is the number of investors in group \(\alpha \), and \(\phi _\alpha (t)\) is the common individual demand of each member of the group.

Cont and Bouchaud assume that the demands of each cluster \(\phi _\alpha (t)\) are independently random variables with a symmetric distribution analogous to (19), i.e.

The parameter b is taken as proportional to order flow in a given time period and inversely proportional to the number of investors N; this implies that only a finite number of investors trade at the same time when the number of investors increases without bound.

Under the above assumptions, Cont and Bouchaud (2000) show that the distribution of price changes as \(N \rightarrow \infty \) has the following properties:

-

The density of price changes \(\Delta p = p_t - p_{t-1}\) has a heavy, non-Gaussian tail

-

The heaviness of the tails, as measured by the kurtosis of the price change, is inversely related to the order flow (i.e. liquidity of the market)

In summary, Cont and Bouchaud show that the ‘independent agents’ version of their model is rejected by the data, but adding communication between investors through a simple herding mechanism allows the model to generate a distribution of price changes that shares some key features of the empirical distribution of stock market returns.

4.2.3 Herding plus performance-based updating

In a series of papers, Lux and co-authors combine herding mechanisms with endogenous updating of investor types based on the evolution of asset prices. In particular, these works combine herd-like behaviour as in the Kirman and Cont and Bouchaud models with performance-based updating as in the Brock and Hommes model, such that there is a coupled dynamics of prices, trader sentiment and investor types.

The herding mechanism, known as mimetic contagion, is set out in Lux (1995).Footnote 18 Time is continuous and there is a fixed number of chartist investors 2N. Investors may be either optimistic or pessimistic, such that \(n_+ + n_- = 2N\), where \(n_+\) (\(n_-\)) is the prevailing number of optimistic (pessimistic) chartist investors. Chartists are assumed to react to the prevailing sentiment \(m = n/N\), where \(n = (n_+ - n_-)/2\), such that \(m \in [-1,1]\). Lux assumes the probability of switching from pessimism to optimism \(P_{+-}\) is higher the larger the prevailing share of optimistic chartists, and vice versa for a switch in the opposite direction:

where \(v>0\) and the parameter \(b > 0\) measures the strength of herd behaviour.Footnote 19

Given the above assumptions, the dynamics of the sentiment index s is given by

For \(b \le 1\), there is a unique stable steady state at \(m=0\), whereas for \(b>1\) , the steady state at \(m=0\) is unstable and two extra, stable, steady states \(m_+>0\), \(m_{-}=-m_+<0\) exist.

Thus, if the herd effect is relatively weak (\(0<b\le 1\)), then the dynamics return to a balanced sentiment \(m=0\) after a disturbance. On the other hand, for \(b>1\) small disturbances will make a majority of speculative investors bullish or bearish through mutual contagion, such that the steady state \(m=0\) is unstable and the dynamics lead to an unbalanced steady state (\(m_+\) or \(m_{-}\)) in which the majority has either an optimistic or a pessimistic opinion, with the majority being bigger for a larger herding parameter b.

Stock market dynamics are introduced through demand and supply for shares. At any instant, a chartist investor may either buy or sell a fixed amount of stock \(t_N>0\); the optimistic chartists are the buyers and the pessimistic chartists are the sellers. Under these assumptions, the net demand of chartist investors is

where \(T_N= 2N t_N\) is the trading volume of chartists.

Only if \(m=0\) would all trades of speculators be carried out within the group. Therefore, to close the model, Lux (1995) also introduces fundamentalists. Fundamental demand depends on the difference between the prevailing price p and a fundamental price \({\overline{p}}\):

where \(T_F>0\) represents the trading volume of fundamentalists.

A market-maker sets the price in response to excess demand, such that

with \(\mu >0\) being a speed of adjustment coefficient.

To allow feedback from the market price to the disposition of chartists, Lux (1995) amends the switching probabilities in (22) as follows:

where \(b_1,b_2 > 0\), \({\dot{p}}\) denotes the time derivative of the price (i.e. the price trend), and the parameter \(b_1\) is a weight that describes how the probability of switching disposition is affected by the price trend (as opposed to the prevailing sentiment, m, among chartists).

With this amendment, the system of contagion and price dynamics is given by

System (28) allows a rich variety of dynamic behaviours (see Lux 1995, Proposition 2):

-

(i)

For \(b_2 \le 1\), there exists a unique (fundamental) steady state \(E_f = (0,{\overline{p}})\)

-

(ii)

For \(b_2 > 1\), two additional steady states exist: \(E_+ = (m_+,p_+)\), \(E_{-} = (m_{-},p_{-})\), where \(m_+>0\), \(m_{-}=-m_+\) and \(p_+-{\overline{p}}=-(p_{-}-{\overline{p}})>0\). If \(E_{\pm }\) exist, \(E_f\) is always unstable.

-

(iii)

When \(E_f\) is a unique steady state, it may either be stable or unstable.

-

(iv)

If \(E_f\) is unique and unstable, at least one stable limit cycle exists and all trajectories of the system converge to a periodic orbit.

Note that stability of the zero-contagion steady state (\(E_f\)) is no longer guaranteed when the herding parameter \(b_2 \le 1\). Stability is favoured by low ‘responsiveness to trend’ \(b_1\) and by high (low) values of the trading volume of fundamentalists \(T_F\) (chartists \(T_N)\).

As noted in part (iv), a cyclic motion prevails when \(E_f\) is a unique steady state (i.e. \(b_2 \le 1\)) and the local stability condition is violated. In this case, the price switches between undervaluation and overvaluation as the disposition of chartists switches between pessimism and optimism. The price trend and sentiment reinforce each other in the upward and downward phases of the cycle; however, stationary majorities are avoided because contagion reaches a climax and declines thereafter, as illustrated in Fig. 3.Footnote 20

Finally, Lux (1995) shows that cycles are not ruled out when herding is strong (\(b_2>1\)) if the switching probabilities are amended with an ‘mood term’ that depends on average returns relative to average expected returns.Footnote 21 This switching between bull and bear markets is related to opinion reversals of the speculative traders (i.e. chartists). In particular, as stock prices increase, the ‘mood’ is initially positive due to positive realized returns; however, once the overwhelming majority of chartists are bullish, the mood switches because the pool of potential buyers is exhausted, such that price increases start to diminish. Ultimately, this change in sentiment causes the bubble to collapse, and chartists then become more pessimistic and prices fall until the next bull market is triggered by diminishing deflation.

In Lux (1998) and Lux and Marchesi (1999), switching based on profit is introduced. The mechanism is similar to that in Brock and Hommes (1998), except that there are two types of chartists—optimistic and pessimistic—and the switching probabilities of these types are allowed to differ. In particular, the probabilities to switch from fundamentalist to optimistic chartist, from optimistic chartist to fundamentalist, from fundamentalist to pessimistic chartist, and from pessimistic chartist to fundamentalist are:

where \(v>0\) and \(\beta \ge 0\) is the sensitivity of traders to the fitness measures \(U_1\) and \(U_2\).

The fitness measures \(U_1\) and \(U_2\) are based on the difference in profits between chartists and fundamentalists—in particular, the realized excess profit per share of chartists and the expected (discounted) profit of fundamentalists. There are two fitness measures \(U_1\) and \(U_2\) because optimistic chartists buy shares, while pessimistic chartists sell shares. Note that \(n_+/2N\) (\(n_{-}/2N\)) is the probability of a fundamentalist meeting an optimistic chartist (pessimistic chartist), and \(n_f/2N\) is the probability of a chartist meeting a fundamentalist.

Lux (1998) shows that the model with herding and performance-based updating has chaotic attractors for a wide range of parameter values. Consistent with empirical evidence, the distribution of price returns implied by the chaotic trajectories has higher peaks around the mean than the Normal distribution and fat tails. In a similar vein, Lux and Marchesi (1999) show that a version of the model with IID-Normal news arrival in the fundamental price generates volatility clustering in returns, a plausible frequency of extreme events, and other features of the empirical distribution of stock returns, such as a slower than exponential fall-off in the density of large price fluctuations that ‘dies out’ under time aggregation.

In short, a simple model that combines herding and performance-based type updating does well at replicating some key empirical regularities of stock market returns. The social aspects of the model—herding related to aggregate sentiment and updating of types based on meeting other investors—play a central role in the empirical performance of the model.

We now consider some alternatives to the models discussed above, which are based on social interactions. We then turn to network approaches to social communication.

4.2.4 Social interactions

Social interaction refers to a situation where the utility or payoff of an individual depends directly upon the choices of other individuals in their ‘reference group’. Note that such interactions differ from the (indirect) dependencies between individuals that occur through, say, market prices or congestion effects when all agents have ‘selfish’ utility. Social interactions include, for example, the behaviours of conformity and contrarianism. We first introduce a simple discrete choice model of social interactions (Brock and Durlauf 2001), and we then discuss how this approach has been used in the context of asset pricing.

Each individual in a population of N agents makes a binary choice \(\omega _i \in \{-1,+1\}\). In an asset pricing context this could be the choice to buy or sell a stock, or the choice between two different price predictors. Let \(\omega _{-i} = \{\omega _1,\ldots ,\omega _{i-1}, \omega _{i+1},\ldots ,\omega _N\}\) denote the choices of all agents other than i. The utility that agent i derives from choice \(\omega _i\) has three components:

where \(u(\omega _i)\) is private utility, \(S(\omega _i,\mu _i^e(\omega _{-i}))\) is social utility that depends on i’s choice \(\omega _i\) and on a generic conditional probability measure \(\mu _i^e(\omega _{-i})\) that agent i places on the choices of other agents, and \(\epsilon (\omega _i)\) is a random utility term that is assumed to be IID across agents.

A common specification for \(\mu _i^e(\omega _{-i})\) is the mean expected choice of all other agentsFootnote 22:

where \(\omega _{i,j}^e\) is the expected choice of agent j as forecast by agent i.

Brock and Durlauf (2001) consider a simple specification for the social utility term:

Since \(J>0\), the specification in (31) implies a positive externality from conforming to the average. In fact, maximizing (31) by choosing \(\omega _i\) is equivalent to maximizing a conformity-based specification \(S(\omega _i,\mu _i^e(\omega _{-i}))=-\frac{J}{2}(\omega _i-{\bar{m}}_i^e)^2\), since \(\omega _i^2 = 1\) is independent of \(\omega _i\).

The random utility terms \(\epsilon (-1)\) and \(\epsilon (1)\) are assumed to be independent with an extreme-value distribution, such that the differences in the errors follow a logistic distribution:

Under these assumptions, the probability of individual choices follows a logistic model:

where \(\beta \) can be interpreted as the intensity of choice.

Note that the exponents in (32) may be nonlinear in \(\omega _i\) due to the private utility \(u(\omega _i)\). However, since \(\omega _i \in \{-1,1\}\), the private utility can be replaced with a linear utility function \({\tilde{u}}(\omega _i) = k + h \omega _i\), where h and k are parameters that satisfy \(h+k = u(1)\) and \(k-h=u(-1)\), such that \(h=(u(1)-u(-1))/2\). Note that the function \({\tilde{u}}(\omega _i)\) recovers \(u(\omega _i)\) exactly, such that the probabilities in (32) can be simplified by replacing \(u(\omega _i)\) with \(k + h \omega _i\).Footnote 23

Using (30), the expected value of \(\omega _i\) is given by

Under common rational expectations, \(\omega _{i,j}^e= E[\omega _i]\) for all i, j, so \(E[\omega _i]=E[\omega _j]:= m^*\) for all i, j. In this case, (33) simplifies to

Brock and Durlauf (2001) show that a rational expectations equilibrium \(m^*\) always exists and, depending on parameters, there may be multiple solutions. For \(\beta J<1\), (34) has a unique solution, whereas for \(\beta J > 1\) , (34) has three solutions if either \(h=0\) or \(h \ne 0\) and \(|\beta h|<H\) (where threshold H depends on \(\beta J\)) and a unique solution if \(|\beta h|>H\).

With a dynamic model in discrete time \(t \in {\mathbb {N}}\) and myopic expectations based on past mean choices, we have \(\omega _{i,j,t}^e= m_{t-1}\) for all i, j and \(\omega _{i,t}^e = m_{t}\) for all \(i\in {\mathcal {N}}\), so by (33), we have:

The steady states of (35) correspond to the rational expectations solutions \(m^*\) discussed above. When there is a unique steady state (e.g. \(\beta J<1\) or \(|\beta h|>H\)), this steady state is globally stable. On the other hand, if (35) has three steady states, then the middle one is locally unstable, whereas the other steady states are locally stable (see Hommes 2006). Thus, when there are multiple steady states, the system will settle at one of the extremes in which there is herd behaviour: all agents choose either \(\omega _i=-1\) or \(\omega _i=1\). In such cases, small differences in individual utility may lead to large changes in aggregate choices.

The social interactions approach is applied to asset pricing in Chang (2007, 2014). In these papers, the Brock–Hommes model is extended with myopic global interactions (see (35)), such that parameter h is the difference in fitness of chartists and fundamentalists. Interestingly, Chang (2007) shows that the strength of social interactions depends not just on the parameter J, but also on an ‘endogenous coefficient’ that reflects the characteristics of the predictors, such that the steady state version of (35) reads as:

where \(J_g,J_b\) are coefficients that depend on \(m^*\) and \(J_b\) generally differs from zero.Footnote 24

For the two-type model with chartists and fundamentalists Chang (2007) shows that additional steady states may exist if the strength of social interactions J is strong enough (i.e. if \(\beta J > 1\)), while weak social interactions (\(\beta J < 1\)) can give rise to attractors where the population share and asset price have a cyclical behaviour. Further, local stability of steady states can be quite sensitive to the strength of social interactions. In Chang (2014), herd behaviour and price bubbles are examined. The main finding is that for strong enough social interactions, a small deviation from the fundamental price may lead to herding that results in a price ‘bubble’ and causes the price-type dynamics to settle at a new steady state where herding is permanent and the asset is mispriced relative to fundamentals.Footnote 25

5 Networks and opinion dynamics

In the papers introduced so far, communication or social interactions are global. Empirical evidence, however, suggests that the interactions determining financial investment decisions are usually local; see Sect. 3. To model local interaction, some recent papers on communication and asset price dynamics employ results from the literature on opinion dynamics and the spread of diseases on networks.

This social network approach to communication has been widely used in the opinion dynamics literature and has some important advantages. First, networks provide a precise description of social connections that is general enough to nest many different communication structures observed in practice. Second, given increased availability of computing power and big data, there is now an empirical literature that estimates social networks directly. Third, networks are convenient both analytically and computationally because they can be analysed using the tools of linear algebra.

Despite these attractions, social networks have not been widely used in asset pricing models until recently. We therefore introduce useful network concepts in this section, before turning to recent literature that embeds social networks in asset pricing models.

5.1 Networks and notation

A network is fully described by the set of vertices and edges, which satisfies the definition of a graph in mathematics. In the context of economics and financial markets, the vertices represent the interacting agents or investors and are usually assumed to stay fixed over time. Hence, we use the same notation for the set of vertices that we used for the set of investors, i.e. \({\mathcal {N}}=\{1,\ldots ,N\}\). In this paper, we will not use the set-theoretic notation of the set of edges, but rather describe a network by its \(N\times N\) adjacency matrix \({\textbf{A}}\). Figure 4 gives some example networks and their adjacency matrices where

In many applications, networks are binary such that the modeller only cares about whether two agents i and j influence each other or not. In this case, each entry \(a_{ij}\) is of binary nature such that the restriction \(a_{ij}\in \{0,1\}\) is imposed and an entry \(a_{ij}=1\) denotes a link from agent i to agents j. Examples where the direction of the link matters include information flow, citations, or following behaviour in social media. Such a network can be represented by its directed edges as in Fig. 4b). A further restriction may require the network to be undirected which best describe bilateral relations like friendship, cooperations, or co-authorship. In this case, the adjacency matrix is restricted to be symmetric such that \(a_{ij}=a_{ji}\) for all \(i,j\in {\mathcal {N}}\) and an entry \(a_{ij}=a_{ji}=1\) is referred to as a link between agents i and j. An example of an undirected network is given in Fig. 4a. Non-binary or weighted networks play a role for opinion dynamics to determine relative influences. In this case, a weighted network is required to be row stochastic to account for relative influences such that \(\sum _{j\in {\mathcal {N}}}a_{ij}=1\) for all \(i\in {\mathcal {N}}\) is imposed. While binary networks often impose the assumption that there are no self-links, i.e. \(a_{ii}=0\) \(\forall i\in {\mathcal {N}}\), this is often not the case for row-stochastic influence matrices, as these loops represent self-trust. An example of a weighted, row-stochastic network is drawn in Fig. 4c.

In the application of financial markets, information flow plays a crucial role. If the network represents information flow or influence between agents, then agents can only influence each other directly if they are connected by a link in the network \({\textbf{A}}\). To account for the direction of information flow, define \(N^i({\textbf{A}}):=\{j\in {\mathcal {N}}:a_{ij}>0\}\) as the set of agents that i observes and let \(M^i({\textbf{A}}):=\{j\in {\mathcal {N}}:a_{ji}>0\}\) be the set of agents that observe i. For example, in Fig. 4b, \(N_1=\{2\}\) while \(M_1=\{2,3,4\}\). Clearly for undirected networks, \(N^i=M^i\) for all \(i\in N\). In this case, the number of neighbours is called the degree and denoted by \(\eta _i:=|N^i|\) for all \(i\in {\mathcal {N}}\).

Agents can also influence each other indirectly via multiple connections. To capture this, define a walk from node i to node j of length \(k\in {\mathbb {N}}\) by a sequence of connected nodes \((i^1,\ldots i^k)\) such that \(a_{i^l,i^{l+1}}=1\) for all \(1\le l\le k-1\) and \(i^1=i\) and \(i^k=j\). Note that a walk of length k from i to j exists, if and only if we have \(({\textbf{A}}^k)_{ij}>0\) where \({\textbf{A}}^k\) denotes the k-th power of the matrix \({\textbf{A}}\). The set of nodes that lie on a walk that starts in node i are defined as \({\mathcal {W}}^i:=\{j\in N|\exists k\in {\mathbb {N}}: ({\textbf{A}}^k)_{ij}>0\}.\) Clearly, \({\mathcal {W}}^j\subseteq {\mathcal {W}}^i\) for all \(j\in {\mathcal {W}}^i\). For instance, \({\mathcal {W}}^4=\{1,2,3,4\}\) in \({\textbf{A}}_b\) in Fig. 4c and \({\mathcal {W}}^j=\{1,2,3\}\) for \(j=1,2,3\).

A subset of agents \({\mathcal {C}}\subset {\mathcal {N}}\) is said to be strongly connected if there is a walk from any \(i\in {\mathcal {C}}\) to any \(j\in {\mathcal {C}}\), i.e. \(j\in {\mathcal {W}}^i\) for all \(i,j\in {\mathcal {C}}\). Thus, information can flow between any two agents of a strongly connected subset. For instance, the sets \(\{1,2\}\) and \(\{1,2,3\}\) are the only strongly connected (non-singleton) sets in Fig. 4a–c. A subset of agents \({\mathcal {C}}\subset {\mathcal {N}}\) is said to be closed if there exists no walk from any \(i\in {\mathcal {C}}\) to any outsider \(j\in {\mathcal {N}}\setminus {\mathcal {C}}\), i.e. \({\mathcal {W}}^i\subseteq {\mathcal {C}}\) for all \(i\in {\mathcal {C}}\). Note, that the sets \(\{1,2,3\}\) and \(\{1,2,3,4\}\) are the only closed sets in Fig. 4a–c. Thus, the notion of walks induces a partition of the set of agents into communication classes \(\Pi ({\mathcal {N}},{\textbf{A}})=\{{\mathcal {C}}_1,{\mathcal {C}}_2,\ldots ,{\mathcal {C}}_K,{\mathcal {R}} \}\) such that the sets \({\mathcal {C}}_k\) are strongly connected and closed and \({\mathcal {R}}\) denotes the (possibly empty) rest of the world of agents not belonging to a strongly connected and closed set. Note that there always exists at least one non-empty strongly connected and closed set \({\mathcal {C}}\) for each network. A network is called strongly connected if \({\mathcal {N}}\) is strongly connected. In the examples of Fig. 4a–c, the set \(\{1,2,3\}\) is the only closed and strongly connected communication class and the singleton set \(\{4\}\) is the rest of the world.

The distance between two nodes i and j in network \({\textbf{A}}\) is defined as the minimal walk length denoted by \(d(i,j):=\min \{k\in {\mathbb {N}}:({\textbf{A}}^k)_{ij}>0\}\). A path between two nodes i and j is a shortest walk, i.e. a walk with distance d(i, j). If two nodes are not connected by a walk, we set \(d(i,j)=\infty \). Clearly a network is strongly connected if \(d(i,j)<\infty \) for all \(i,j\in N\). The diameter of the network is given by \(D({\textbf{A}})=\max _{i,j\in N}d(i,j)\).

5.1.1 Network centrality

In many applications, the centrality of nodes (e.g. investors) in a network plays an important role. This is also true if we think about opinion dynamics, information flow, and influence in networks as discussed later. Before economists paid attention to networks, a large body of literature in sociology introduced measures to assess how central an agent is in a network.

The most straightforward way to define a node’s centrality is to count the neighbours, which is also called the degree centrality of an agent. Clearly, such a centrality measure ignores large parts of the network structure and may be too simple for many applications. More elaborated centrality measures take into account more structural properties of the network which may be intuitively thought of as assessing network ‘flows’ (Borgatti 2005).

Taking into account only the shortest possible network flows, i.e. paths between nodes in the network, Freeman (1979) defines the seminal measures of Closeness and Betweenness centrality. Closeness centrality simply discounts the distance d(i, j) between any two nodes such that agents with many short paths to others receive a high closeness centrality. Betweenness centrality, on the other hand, considers all paths between any two nodes and counts for each node \(i\in {\mathcal {N}}\) the respective share of paths which pass through i.Footnote 26

Reducing network flows to only the shortest possible ones may be relevant in some applications, but often this assumption is a bit restrictive. For instance, Betweenness centrality only takes into account the paths between any two nodes. Potential outside options via walks of greater distance play no role. Similarly, information flows usually take place not only along walks of minimum distance, but also along all possible walks in a network.

Seminal notions of centrality taking into account all network flows, were developed by Katz (1953) and Bonacich (1987). In a similar spirit as Freeman closeness centrality, Bonacich centrality discounts the length of all possibly walks between any two nodes by a parameter \(0<\delta <\lambda _1({\textbf{A}})^{-1}\) where \(\lambda _1({\textbf{A}})\) is the eigenvalue of \({\textbf{A}}\) having largest modulus. The idea is that agents with many short walks to others receive a high Bonacich centrality. This centrality measure can be alternatively expressed by a self-referential notion such that the centrality index proposed by Bonacich (1987), \(b_i({\textbf{A}})\), is given by, \( b_i({\textbf{A}},\delta )=1+\delta \sum _{j\in N^i({\textbf{A}})}b_{j}({\textbf{A}},\delta )\) for all nodes \(i\in {\mathcal {N}}\). This self-referential notion expresses the idea that an agent is central, if the neighbours are central (see e.g. Hellmann 2021, for more details). Similar ideas trace back to Katz (1953) who defined status of a node to be high if the status of observing neighbours \(M^i\) is high. The most straightforward way to define this is to directly impose the condition that centrality is proportional to the sum of centralities of neighbours, i.e. \( c_i({\textbf{A}})=\frac{1}{\lambda }\sum _{j\in M^i}c_j({\textbf{A}})\), with \(\lambda \) being some constant. This system of equations can be rewritten in matrix notation such that

For this system of equations to have a solution, \(\lambda \) must be an eigenvalue and \({\textbf{c}}({\textbf{A}})\) must be a (left) eigenvector of \({\textbf{A}}\). Usually, \(\lambda \) is assumed to be the largest eigenvalue such that all entries of the eigenvector are guaranteed to be real (by Perron Frobenius). For instance, the principal eigenvalue of the weighted network in Fig. 4c is \(\lambda =1\) (since \({\textbf{A}}_c\) is row stochastic) and the eigenvector normalized such that entries sum to unity is \({\textbf{c}}({\textbf{A}}_c)=(\frac{18}{38},\frac{15}{38},\frac{5}{38},0)'\). Note that nodes from the rest of the world always receive eigenvector centrality of 0. PageRank developed by Google founder Larry Page uses similar ideas (but introduces additional scaling factors) and helped Google dominate over other search engines.

One advantage of the eigenvector based centrality measures defined in Eq. (37) is that it not only applies to binary networks, but can also be applied for weighted and directed networks. We show in Sects. 6.3.3 and 7.2.2 that these centrality measures appear in applications of opinion formation and belief formation in financial markets.

5.1.2 Random networks

The networks discussed so far have deterministic links. When the modeler does not know the entire network or wants to use degree distributions rather than the precise structure of the network, a simplifying assumption is that network formation is random. The most straightforward way to model random networks is to assume that all links in an (undirected) network form with some probability p which is identical and independent across all links. Erdös and Rényi advanced the findings for such models in such a way that these types of random networks are often referred to as Erdös and Rényi networks; see e.g. Erdös and Rényi (1959, 1960). Due to their simplicity, Erdös and Rényi random networks provide a good benchmark to compare with empirical facts in order to find out how real-world networks differ from purely random networks (see also Sect. 6.2).

For Erdös and Rényi networks, it is quite easy to calculate the degree distribution as this is binomial. The probability that a given node has exactly \(\eta \) neighbours is given by

For large N and small p, this degree distribution is well approximated by a Poisson distribution such that the fraction of nodes that have \(\eta \) links is given by \(\frac{\exp (-(N-1)p)\left( (N-1)p\right) ^\eta }{\eta !}\).

Erdös and Rényi networks already appear in Cont and Bouchaud (2000) where trading groups are determined by the random network (see Sect. 5.2.2). More recently, Granha et al. (2022) apply these types of random graphs in an agent-based model to analyse opinion dynamics of noise traders and fundamentalists.

Other approaches to random networks aiming to capture some real-world phenomenon directly impose degree distributions. One example is the class of scale-free networks where the degree distribution follows a power law, i.e. which can be written as

where k is a constant ensuring that the probabilities sum to unity and \(\kappa \in {\mathbb {R}}_+\). In a log-log plot mapping degrees to probabilities (or relative frequencies of observations), such a distribution is given by a straight line. Scale-free networks seem to better capture some stylized facts about real world networks which we present subsequently and which play an important role in diffusion models; see Sect. 6.3.

5.2 Stylized facts of real-world networks

Many real-world networks across different contexts share common properties. In this section, we only sketch some stylized facts about real-world networks and refer the reader to textbooks such as Watts (1999) or Jackson (2008) and references therein for more details.

-

Connectivity

Social networks with many participants are often quite sparse, i.e. each node is only connected to a very small subset of nodes. As a case in point, Ugander et al. (2011) study Facebook data from 2011 and find that out of 721 million users, the median friend count is 99 with most users having less than 200 friends (out of a possible 721 million connections).

-

High clustering

Clustering refers to the likelihood that three connected nodes in an undirected network form a ‘clique’ which means that they are completely connected. To put it simply, it is a measure determining the likelihood that two neighbours of a node are neighbours themselves. In Erdös and Rényi networks where links form independently, two friends of a given node are not more likely to be friends themselves than any two random nodes. Instead, in real-world networks, this is clearly not the case and clustering is quite high. For instance while the global Facebook network is quite sparse, the clustering coefficient is independent of the number of friends and is estimated at 0.14, which is quite high compared to the relative frequency of links (see above).

-

Small worlds

The small world property refers to the fact that many real-world networks have small diameters relative to the number of nodes and small average distances although networks are sparse and highly clustered. This was observed by Milgram (1967) in the famous letter experiments where participants had to send a letter to unknown persons within the USA by only sending and forwarding letters to acquaintances. The experiments seemed to confirm the phrase ‘six degrees of separation’ since the median number of steps for a letter to reach a target was 5, although this phrase was not used by the authors themselves. Modern studies (Backstrom et al. 2012) using global Facebook data (with more than 1.59 billion facebook users) estimate the average distance to be 4.57, corresponding to 3.57 intermediaries or ‘degrees of separation’.

-

Degree distribution

The distribution of (the number of) neighbours in a network usually does not follow a Poisson distribution as would be expected for sparse but large networks if links form with IID probability, as in Erdös and Rényi networks. Instead, the distribution of degrees in real-world networks often exhibits fat tails: nodes with very high degrees and with very low degrees occur a lot more frequently than expected from a Poisson distribution. Some studies find that the degree distributions of real-world networks are well-approximated by scale-free power law distributions, see (38). For instance, Liljeros et al. (2001) study the network of human sexual contacts in Sweden and conclude that the degree distribution is well approximated by a power law. Multiple studies also confirm that the world wide web has a scale-free degree distribution (Albert et al. 1999, 2000; Caldarelli et al. 2000; Medina et al. 2000). In particular, scale-free networks satisfy the fat tails property, though not all studies on the degree distribution of real-world networks confirm a strict power law.Footnote 27

-

Assortativity

Many studies have found real-world networks to exhibit positive assortativity with respect to degrees. In other words, nodes with many neighbours will be connected to other nodes with many neighbours with higher probability than under pure random network formation. For instance, Newman (2003) finds a high correlation of neighbouring nodes’ degrees in scientific co-authorship networks. One network structure with positive assortativity is the core-periphery structure composed of a well-connected core and a sparse periphery of nodes (see Borgatti and Everett 2000,and references therein); this structure is observed quite frequently in social and financial contexts—e.g. the institutional funds market has this sort of hierarchical structure (Alfarano et al. 2013). An example core-periphery structure is presented in Fig. 5.

5.3 Models of diffusion and opinion formation in networks

Particularly relevant for communication and asset prices is the theory of how information (respectively diseases) or opinions and beliefs spread through a network. We present here two models in epidemiology, the SIR model and the SIS model, and a model of opinion formation, the so-called DeGroot model, that have recently been used to model the spread of information or types within models of communication on networks and asset prices.

The SIR and the SIS model are diffusion models originating in epidemiology and use networks to model local interaction. In this case, networks are usually assumed to be random to allow aggregation over transmission probabilities or use of mean-field approaches. Typical questions include infection rates and diffusion thresholds at which a phase transition occurs from only a small fraction of the population being infected to the large parts of the population catching the disease. Such approaches have been applied to find relative frequencies of chartist and fundamentalist types and diffusion thresholds in asset pricing models.

The DeGroot model instead assumes a fixed network that is allowed to be directed and weighted, thus modeling relative influences. Research focuses on long-run opinions, consensus, time to convergence, and wisdom of the crowds. This approach has been recently applied model the dynamics of investor types and asset prices.

5.3.1 The SIR model