Abstract

Markov chain Monte Carlo (MCMC) is a key algorithm in computational statistics, and as datasets grow larger and models grow more complex, many popular MCMC algorithms become too computationally expensive to be practical. Recent progress has been made on this problem through the development of MCMC algorithms based on Piecewise Deterministic Markov Processes (PDMPs), irreversible processes which can be engineered to converge at a rate which is independent of the size of the dataset. While there has understandably been a surge of theoretical studies following these results, PDMPs have so far only been implemented for models where certain gradients can be bounded in closed form, which is not possible in many relevant statistical problems. Furthermore, there has been substantially less focus on practical implementation, or on the efficiency of PDMP dynamics in exploring challenging densities. Focusing on the Zig-Zag process, we present the Numerical Zig-Zag (NuZZ) algorithm, which is applicable to general statistical models without the need for bounds on the gradient of the log posterior. This allows us to perform numerical experiments on: (i) how the Zig-Zag dynamics behave on some test problems with common challenging features; and (ii) how the error between the target and sampled distributions evolves as a function of computational effort for different MCMC algorithms including NuZZ. Moreover, due to the specifics of the NuZZ algorithm, we are able to give an explicit bound on the Wasserstein distance between the exact posterior and its numerically perturbed counterpart in terms of the user-specified numerical tolerances of NuZZ.

1 Introduction

Markov Chain Monte Carlo (MCMC) methods are a mainstay of modern statistics (Brooks et al. 2011), and are employed in a wide variety of applications, such as astrophysics (e.g. The Dark Energy Survey Collaboration et al. (2017)), epidemiology (e.g. O’Neill and Roberts (1999), House et al. (2016)), chemistry (e.g. Cotter et al. (2019, 2020)) and finance (e.g. Kim et al. (1998)). There is a diverse range of challenges which can hinder MCMC algorithms in practice, including multimodality of the posterior, complex non-linear dependencies between parameters, a large number of parameters (e.g. Cotter et al. (2013)), or a large number of observations (e.g. Bardenet et al. (2017)). Common MCMC methods such as the Random Walk Metropolis (RWM) algorithm (Metropolis et al. 1953) offer simplicity and flexibility, but also have several limitations.

Throughout the history of MCMC there have been many attempts to improve algorithmic efficiency (Robert and Casella 2011), including the work of Hastings (1970) in generalising the RWM approach to non-symmetric proposals, and the use of gradient information to explore the state space according to a discretised Langevin diffusion in the Metropolis-Adjusted Langevin Algorithm (MALA) (Roberts and Tweedie 1996). Both of these methods rely on reversible dynamics which satisfy a detailed balance condition, used primarily to ensure convergence to the appropriate limiting distribution.

Lifting the simulated process to a larger state space can aid exploration of the original state space; one of the most successful such approaches is the Hamiltonian Monte Carlo (HMC) algorithm in Euclidean space (Duane et al. 1987), and on Riemannian manifolds (RMHMC) (Girolami et al. 2011). HMC relies on augmenting the state space with momentum variables, while maintaining detailed balance and reversibility by use of a Metropolis acceptance step.

Recently, there has been growing interest in irreversible algorithms, which have been shown to converge faster and have lower asymptotic variance than their reversible counterparts (Chen and Hwang 2013; Ottobre 2016). This was initially investigated by Diaconis et al. (2000) and Turitsyn et al. (2011), where the authors achieve irreversibility via “lifting” and injecting vortices in the state space. In Ma et al. (2016) the authors provide a framework for developing irreversible algorithms.

Not all irreversible algorithms are built from reversible ones. In the physics literature, Rapaport (2009) and Peters and de With (2012) laid the foundations for the Bouncy Particle Sampler (BPS), which was then thoroughly studied by Bouchard-Côté et al. (2018). The BPS abandons diffusion in favour of completely irreversible, piecewise deterministic dynamics based on the theory developed in Davis (1984, 1993). The close relationship between the BPS and HMC is explored in Deligiannidis et al. (2021).

The Zig-Zag (ZZ) process (Bierkens 2016; Bierkens and Roberts 2017; Bierkens and Duncan 2017), a variant of the Telegraph process (Kolesnik and Ratanov 2013), is another Piecewise Deterministic Markov Process (PDMP) closely related to the BPS, even coinciding with it in one dimension. Its properties were first explored in work by Bierkens and Duncan (2017) and Bierkens et al. (2019a). The ZZ sampler is particularly appealing because, when properly modified with upper bounds for the switching rate, it has a computational cost per iteration that need not grow with the size of the dataset. This feature makes it particularly well suited for “tall, thin” data, i.e. multivariate datasets with a large number of observations, n, and a relatively small number of parameters, d.

The BPS sampler and the ZZ sampler are both PDMPs that change direction at random times according to the interarrival times of a Poisson process whose rate depends on the gradient of the target density. The main difference between these samplers is the way in which the new direction is chosen. Conditions for ergodicity have been established for both algorithms (Bierkens et al. 2019b; Durmus et al. 2020), and the Generalised Bouncy Particle Sampler (Wu and Robert 2017) partially bridges the gap between the two.

Given their desirable properties, there has been a wealth of theoretical analysis of PDMPs in MCMC developed in recent years (Bierkens et al. 2018; Zhao and Bouchard-Côté 2019; Andrieu et al. 2021c; Bierkens and Verduyn Lunel 2019; Chevallier et al. 2020; Andrieu et al. 2021b; Chevallier et al. 2021). In Vanetti et al. (2018), the authors provide insights into a general framework for construction of continuous and discrete time algorithms based on PDMPs, and discuss some improvements that ameliorate some of the current challenges that PDMP samplers face. There remains, however, a lack of guidance about which classes of statistical problems are well-suited to the use of PDMP algorithms, and how those algorithms are best implemented.

In this work we concentrate our attention on the Zig-Zag dynamics, and develop a numerical approximation to calculate the time to the next switch for general distributions, without requiring analytical solutions or bounds for the switching rate, which enables the methods to be used for a much wider family of targets. We then use our algorithm to study how well the Zig-Zag dynamics are able to explore distributions with specific pathologies which are often found in practical Bayesian statistics (e.g. non-linear dependence structures, fat tails, and co-linearity). Our algorithm can be applied to general targets, but we here restrict our attention to targets for which we have access to the marginal cumulative distribution functions, so that we can easily assess convergence by comparison with the ground truth.

Moreover, we obtain results in controlling the numerical error induced by our algorithm. A first result in this sense was achieved by Riedler (2013), where the author shows that under general assumptions, sample paths resulting from suitable numerical approximations of PDMPs converge almost surely to the paths followed by the exact process as the numerical tolerances approach zero. Another recent result is the work of Bertazzi et al. (2021), in which the authors study a general class of Euler-type integration routines which yield a Markov process at each point of the mesh defined by the integrator. Their framework is sufficiently general to accommodate PDMPs with trajectories which are analytically intractable and thus need to be approximated numerically. One key difference is that their process is defined on a mesh of discrete points equally spaced in time. By contrast, our process is defined directly in continuous time, and the mesh is not homogeneous, but rather depends adaptively on the skeleton chain of the switching points.

Both this work and Bertazzi et al. (2021) involve constructing numerical approximations to the dynamics in order to extend the applicability of PDMPs to models where switching times cannot be sampled directly and analytical bounds are not available. An alternative approach is to construct bounds numerically in order to sample the switching times. This approach was followed in Sutton and Fearnhead (2021), and Corbella et al. (2022). In Sutton and Fearnhead (2021), the authors construct a bound for the event rates by exploiting a convex-concave decomposition, and using polynomials to bound each of the two components separately. In Corbella et al. (2022), the authors construct an approximate piecewise-constant bound for the event rates by dividing the trajectory into time windows, and finding the maximum rate within each time window numerically. All four methods follow a different path to making PDMP-based MCMC more applicable to general models, each combining numerical integration and optimisation techniques in a different way.

The paper is organised as follows. In Sect. 2 we review the technical details of the Zig-Zag process and fix the notation for the rest of this work. In Sect. 3 we present an alternative algorithm, the Numerical Zig-Zag (NuZZ), which uses numerical methodology to compute switching times of the Zig-Zag process. In Sect. 4 we study how the numerical tolerances affect the quality of the NuZZ MCMC sample. In Sect. 5 we present some numerical experiments which test the performance of a range of MCMC algorithms, including NuZZ, for a range of test problems with features often found in real-world models. Finally in Sect. 6 we conclude with some discussions of the results. Code is available at https://github.com/FilippoPagani/NuZZ.

2 The Zig-Zag sampler

The Zig-Zag sampler is an MCMC algorithm designed for drawing samples from a probability measure \(\pi (\textbf{x})\) with a smooth density on \({\mathcal {X}} = {\mathbb {R}}^d\). In the same vein as methods like HMC, the Zig-Zag sampler operates on an extended state space where the position variable \(\textbf{x}\) is augmented by a velocity variable \(\textbf{v}\).

The Zig-Zag sampler is driven by the Zig-Zag process, a continuous-time PDMP which describes the motion of a particle \(\textbf{x}\), with a piecewise constant velocity which is subject to intermittent jumps. The dynamics and jumps are constructed in such a way that the process converges in law to a unique invariant measure which admits \(\pi \) as its marginal.

Let the Zig-Zag process be defined as \(\textbf{Z}_t = (\textbf{X}_t, \textbf{V}_t)\) on the extended space \(E = {\mathcal {X}} \times {\mathcal {V}}\), with \({\mathcal {X}}={\mathbb {R}}^d\) and \({\mathcal {V}} = \{-1,1\}^d\). From an initial state \((\textbf{x}, \textbf{v})\), the process evolves in time following the deterministic linear dynamics

\[ \dot{\textbf{x}} = \textbf{v}, \qquad \dot{\textbf{v}} = \textbf{0}, \tag{1} \]

where the dots denote differentiation with respect to time. The probability of changing direction (i.e. the sign of one component of the vector \(\textbf{v}\)) increases as the process heads into the tails of \(\pi (\textbf{x})\). The event of changing the sign of \(v_i\) is modelled as an inhomogeneous Poisson process with component-wise rate
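
\[ \lambda_i(\textbf{x},\textbf{v}) = \max\left\{ 0,\, -v_i\, \partial_i \log \pi(\textbf{x}) \right\}, \tag{2} \]
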
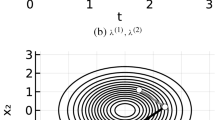

where \(\partial _i\) is the partial derivative w.r.t. the \(i^\text {th}\) component. Hence, the probability of having an event for coordinate i in \([t,t+ \textrm{d} t]\) is \(\lambda _i(\textbf{z}_t) \textrm{d} t + o( \textrm{d} t)\). This causes the ZZ process to spend more time in regions of high density of \(\pi (\textbf{x})\). When a velocity switch is triggered after a switching time \(\tau \), the process has moved forward from \(\textbf{x}\) to \(\textbf{x}' = \textbf{x}+\tau \textbf{v}\), at which point the transition kernel \(Q(\textbf{v}' | \textbf{x}',\textbf{v})\) selects the velocity component to switch and flips its sign. From \((\textbf{x}',\textbf{v}')\) the dynamics proceed following Eq. 1 until another velocity switch occurs. The trajectories of the process produce ‘zig-zag’ patterns such as those seen in Fig. 1, motivating its name. The linear dynamics in Eq. 1, the jump rates \(\lambda _i(\textbf{x},\textbf{v})\), and the transition kernel \(Q(\textbf{v}' | \textbf{x}',\textbf{v})\) uniquely define the Zig-Zag process. Its infinitesimal generator is known, and can be given in terms of these three quantities as
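
\[ \mathcal{L} f(\textbf{x},\textbf{v}) = \sum_{i=1}^d v_i\, \partial_{x_i} f(\textbf{x},\textbf{v}) + \sum_{i=1}^d \lambda_i(\textbf{x},\textbf{v}) \left[ f(\textbf{x}, F_i(\textbf{v})) - f(\textbf{x},\textbf{v}) \right], \tag{3} \]
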
where \(F_i(\textbf{v})\) represents the operation of flipping the sign of the ith component.

The process described above is known as the Zig-Zag process with canonical rates, and has been proven to be ergodic for a large class of targets (Bierkens et al. 2019b). Taking instead the rate
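
\[ \lambda_i(\textbf{x},\textbf{v}) = \max\left\{ 0,\, -v_i\, \partial_i \log \pi(\textbf{x}) \right\} + \gamma_i(\textbf{x},\textbf{v}) \tag{4} \]
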
(compare with Eq. (2)), with \(\gamma _i \geqslant 0\) satisfying

\[ \gamma_i(\textbf{x},\textbf{v}) = \gamma_i(\textbf{x}, F_i(\textbf{v})), \tag{5} \]

also yields a process that targets the desired distribution, and the process is ergodic on a larger class of models. However, increasing \(\gamma _i\) induces diffusive behaviour, in that the process tends to change direction more often than it otherwise would, and to cover less distance in the same amount of time. Hence, if the functions \(\gamma _i\) are taken to be strictly positive, they are generally set to be as small as possible.

In this work we take \(\gamma _i(\textbf{x}, \textbf{v}) = \gamma \), which gives an overall baseline switching rate of \(\Gamma = \sum _i \gamma _i = d \gamma \). For \(\textbf{z} = (\textbf{x}, \textbf{v})\), we will often refer to the switching rate as a function of time as \(\lambda _i^\textbf{z} (t):= \lambda _i ( \textbf{x} + t \cdot \textbf{v}, \textbf{v})\).
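The rates of Eqs. (2) and (4), the path-wise notation \(\lambda_i^\textbf{z}(t)\) and the flip operator \(F_i\) can be written down directly. The following is a minimal Python sketch, assuming the gradient of \(\log \pi \) is available as a function; the helper names are hypothetical and are not taken from the NuZZ code base.

```python
# Illustrative sketch (hypothetical helper names): Zig-Zag switching rates with a
# constant refreshment rate gamma, assuming grad log pi is supplied by the user.


def zigzag_rates(grad_log_pi, x, v, gamma=0.0):
    """lambda_i(x, v) = max(0, -v_i * d_i log pi(x)) + gamma, as in Eq. (4)."""
    grad = grad_log_pi(x)
    return [max(0.0, -vi * gi) + gamma for vi, gi in zip(v, grad)]


def rates_along_path(grad_log_pi, x, v, t, gamma=0.0):
    """lambda_i^z(t) = lambda_i(x + t v, v): the rates after moving for time t."""
    x_t = [xi + t * vi for xi, vi in zip(x, v)]
    return zigzag_rates(grad_log_pi, x_t, v, gamma)


def flip(v, i):
    """F_i(v): a copy of v with the sign of component i reversed."""
    w = list(v)
    w[i] = -w[i]
    return w


def grad_log_gauss(x):
    """Gradient of log pi for a standard Gaussian target, i.e. -x."""
    return [-xi for xi in x]


print(zigzag_rates(grad_log_gauss, [1.0, -2.0], [1, 1]))  # [1.0, 0.0]
```

Setting `gamma > 0` bounds every rate below by \(\gamma \), which is the source of the extra, diffusive switches discussed above.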

2.1 Sampling the switching time

In this section we outline the approach followed in Bierkens et al. (2019a). Switches in \(\textbf{v}\) occur as the interarrival times of an inhomogeneous Poisson process with rate \(\lambda _i(\textbf{x},\textbf{v})\). Starting the process at \(t=0\), each \(\tau _i\), the time to the next switch in the ith component conditional on no other switches occurring, has cumulative distribution function (cdf)
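
\[ G_{T_i}(\tau_i) = 1 - \exp\left( -\int_0^{\tau_i} \lambda_i(\textbf{x} + s\textbf{v}, \textbf{v})\, \textrm{d}s \right). \tag{6} \]
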
A switching time \(\tau _i\) is sampled separately for each component with law given by (6), and the final switching time \(\tau \) is selected as

\[ \tau = \min_{i=1,\ldots,d} \tau_i, \qquad i^* = \arg\min_{i=1,\ldots,d} \tau_i . \]

Once \(\tau \) has been calculated, the system follows the deterministic dynamics from \(\textbf{x}(s) = \textbf{x}(0) + s \textbf{v}\) to \(\textbf{x}(\tau ^-) = \textbf{x}(0) + \tau ^- \textbf{v}\), where a velocity flip occurs, and the process starts following the new dynamics \(\textbf{x}(s) = \textbf{x}(\tau ) +\textbf{F}_{i^*}(\textbf{v}) (s-\tau )\). The larger \( \{ \tau _i \}_{i \ne i^*}\) are typically then discarded, as they relate to a state of the system which may no longer be relevant. The same procedure is repeated at each iteration of the algorithm.
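For a standard Gaussian target the procedure above can be carried out exactly, because \(\lambda_i(\textbf{x}+s\textbf{v},\textbf{v}) = \max\{0, v_i x_i + s\}\) and the integral in (6) can be inverted in closed form: with \(a = v_i x_i\) and \(E \sim \textrm{Exp}(1)\), \(\tau_i = -a + \sqrt{(\max\{a,0\})^2 + 2E}\). The sketch below assumes this particular target and canonical rates (\(\gamma = 0\)); the function names are illustrative.

```python
import math
import random

# Illustrative sketch, assuming a standard Gaussian target and canonical rates.


def gaussian_switch_time(a, E):
    """Solve integral_0^tau max(0, a + s) ds = E for tau, where a = v_i * x_i."""
    return -a + math.sqrt(max(a, 0.0) ** 2 + 2.0 * E)


def zigzag_gaussian(x, v, n_events, rng):
    """Exact Zig-Zag for a standard Gaussian; returns the skeleton [(T_k, x_k, v_k)]."""
    t = 0.0
    skeleton = [(t, list(x), list(v))]
    for _ in range(n_events):
        # One candidate time per component; E ~ Exp(1) inverts the cdf (6).
        taus = [gaussian_switch_time(vi * xi, rng.expovariate(1.0))
                for xi, vi in zip(x, v)]
        i_star = min(range(len(taus)), key=taus.__getitem__)
        tau = taus[i_star]
        x = [xi + tau * vi for xi, vi in zip(x, v)]  # deterministic linear motion
        v = list(v)
        v[i_star] = -v[i_star]                       # flip component i*
        t += tau
        skeleton.append((t, list(x), list(v)))
    return skeleton


def time_averages(skeleton, i):
    """Exact time averages of x_i and x_i**2 along the piecewise-linear path."""
    s1 = s2 = 0.0
    for (t0, x0, v0), (t1, _, _) in zip(skeleton, skeleton[1:]):
        tau, a, b = t1 - t0, x0[i], v0[i]
        s1 += a * tau + b * tau ** 2 / 2.0
        s2 += a * a * tau + a * b * tau ** 2 + tau ** 3 / 3.0  # uses b**2 == 1
    T = skeleton[-1][0] - skeleton[0][0]
    return s1 / T, s2 / T


skeleton = zigzag_gaussian([0.0, 0.0], [1, 1], 10_000, random.Random(0))
print(time_averages(skeleton, 0))
```

Time averages are integrated exactly along each linear segment; for a long run they should approach the target moments 0 and 1.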

This method for sampling the switching times requires realisations of d random variables with densities given by (6), where d is the number of components of the Zig-Zag process, i.e. the number of parameters to be estimated via MCMC.

2.2 Poisson sampling via thinning

Sampling \(\tau _i\) from Eq. (6) requires solving the integral in the exponential. This is often a difficult task, as the rates \(\lambda _i\) depend on the posterior in a complex way as shown in Eq. (4). Analytic solutions are available only for simple targets and are problem-dependent.

In Bierkens et al. (2019a) the authors propose the method of thinning to sample the waiting times to the next switch, \(\tau _i\). If the Jacobian or Hessian of the target \(\pi (\textbf{x})\) is bounded, then there exists a bound \(\bar{\lambda }_i(\textbf{x},\textbf{v}) \ge \lambda _i(\textbf{x},\textbf{v})\), \(\forall \textbf{x},\textbf{v}, i\). The bound \(\bar{\lambda }_i\) can be used instead of \(\lambda _i\) in Eq. (6), so that \(G_{T_i}(\tau _i)\) can be replaced with a simpler function. Once the \(\tau _i\) are sampled and the time \(\tau \) to the next switch is found, the proposed velocity switch occurs with probability \(\lambda _{i*} / \bar{\lambda }_{i*}\), to correct for the fact that \(\tau \) was not sampled from the correct distribution. If the switch is rejected, there is no change in \(\textbf{v}\), the process moves linearly to \(\textbf{x}+\tau \textbf{v}\), and new switching times are sampled.
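The thinning step can be sketched for a single rate function of time, assuming for simplicity a constant bound \(\bar{\lambda}\) valid on the whole time axis (in the Zig-Zag setting the bound typically depends on the current state and on elapsed time). Proposals come from a homogeneous Poisson process with rate \(\bar{\lambda}\) and are accepted with probability \(\lambda(t)/\bar{\lambda}\); on rejection the clock keeps running, which mirrors moving to \(\textbf{x}+\tau \textbf{v}\) and resampling. The function name is hypothetical.

```python
import math
import random

# Illustrative sketch: thinning with a constant dominating rate (an assumption).


def first_event_by_thinning(rate, rate_bound, rng):
    """First event time of a Poisson process with intensity rate(t) <= rate_bound.

    Assumes the integrated rate diverges, so that an event eventually occurs.
    """
    t = 0.0
    while True:
        t += rng.expovariate(rate_bound)          # next point of the dominating process
        if rng.random() < rate(t) / rate_bound:   # keep it with probability rate/bound
            return t


rng = random.Random(0)
print([first_event_by_thinning(lambda t: 1.0 + math.cos(t), 2.0, rng) for _ in range(5)])
```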

Sampling the switching times via thinning extends the applicability of the Zig-Zag sampler to a wider class of models than those where \(\tau \) can be sampled by inverting the cdf, and forms the basis for the ZZCV algorithm of Bierkens et al. (2019a). Additionally, in the recent work of Sutton and Fearnhead (2021), the authors propose a method for systematically identifying numerical bounds which are valid on a given time horizon. Their method significantly extends the applicability of PDMPs, but again retains the cost of needing to construct a numerical bound at each iteration, and some a priori knowledge of the target measure and its regularity. In Corbella et al. (2022) the authors propose an alternative method to construct bounds numerically. Using numerical optimisation routines, the authors construct a piecewise constant bound on a finite time interval long enough that it is likely to contain a switching event. If the geometry of the target is sufficiently regular that the bound is valid, then this method is exact and will admit the correct invariant measure. However, if the geometry of the target is more complicated, the ‘bound’ constructed numerically may in fact fail to upper-bound the true event rate. This then introduces numerical error into the posterior approximation, which needs to be quantified, thus setting this algorithm apart from the other thinning-based methods described in this section.

As such, despite these developments, the Zig-Zag sampler remains understudied from a practical point of view, which has so far hampered the real-world applications of PDMP-based MCMC.

3 Numerical Zig-Zag

The Zig-Zag sampler described in Sect. 2 relies on the availability of an easy way to sample from Eq. (6), or on having knowledge of a bound for \(\lambda _i\). In this section we extend the Zig-Zag algorithm to sample from arbitrary targets via numerical approximation of the switching times.

3.1 The Sellke Zig-Zag process

In order to ameliorate the issues described in Sects. 1 and 2, it is possible to reformulate the problem of finding the time to the next velocity switch in a more convenient way. This approach is based on the Sellke construction (Sellke 1983), and we note that picking \(\tau _i\) with law (6) is equivalent to finding \(\tau _i >0\) such that
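
\[ g(\tau_i) := \int_0^{\tau_i} \lambda_i(\textbf{x} + s\textbf{v}, \textbf{v})\, \textrm{d}s - R_i = 0, \tag{7} \]
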
where \(R_i \sim \textrm{Exp}(1)\). Ergo, finding \(\tau _i\) is equivalent to finding the root of the function g.
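The equivalence between drawing \(\tau_i\) from the cdf (6) and solving \(g(\tau_i)=0\) with \(R_i \sim \textrm{Exp}(1)\) is easy to check empirically. As an illustrative assumption, take the rate \(\lambda(s) = 2s\), whose integral \(s^2\) can be inverted in closed form; both routes below should then give \(\mathbb{P}(\tau > 1) = e^{-1}\). The function names are hypothetical.

```python
import math
import random

# Illustrative sketch: the integrated rate and its inverse are assumed known here.


def sellke_time(inverse_integrated_rate, rng):
    """Solve integral_0^tau lambda(s) ds = R with R ~ Exp(1) (Sellke form)."""
    return inverse_integrated_rate(rng.expovariate(1.0))


def cdf_inversion_time(inverse_integrated_rate, rng):
    """Invert G(tau) = 1 - exp(-integral_0^tau lambda(s) ds) at U ~ Unif[0, 1)."""
    u = rng.random()
    return inverse_integrated_rate(-math.log(1.0 - u))


rng = random.Random(0)
n = 50_000
for sampler in (sellke_time, cdf_inversion_time):
    # lambda(s) = 2 s has integrated rate s**2, whose inverse is sqrt
    frac = sum(sampler(math.sqrt, rng) > 1.0 for _ in range(n)) / n
    print(sampler.__name__, frac, math.exp(-1.0))
```

The two routes differ only in how the Exp(1) threshold is produced, which is why the root of g has the law (6).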

We demonstrate this equivalence in the supplementary material in Section S.1, in which we describe SeZZ, the Zig-Zag process where the switching times are given by roots of (7), and where we show that the SeZZ generator is stationary with respect to the target measure. The Numerical Zig-Zag, or NuZZ, arises from numerical approximations of the roots of (7).

3.2 Numerical integration

Unlike other Zig-Zag processes, which rely on bounds that may be unknown or may not exist, numerical approximations of the roots of (7) can be computed for a very wide class of models, and allow us to study the associated numerical error. There are, however, a number of problems to overcome. The function g is in the set \(C^1({\mathbb {R}})\backslash C^2({\mathbb {R}})\), and in practice it often increases very steeply when moving into the tails of the distribution. The numerical method used to solve the integral in (7) up to some \(\widetilde{\tau }\) should therefore be chosen for its efficiency and accuracy on such problems. Since g is only once differentiable, numerical integrators like Runge–Kutta may perform unreliably. In particular, the first derivative of \(\lambda _i\) is only piecewise continuous, which may also create problems for common root finding methods like Newton–Raphson.

Towards ameliorating this, one could consider modifications of the event rates which have improved smoothness properties, at the cost of increasing the average number of events; see e.g. Andrieu and Livingstone (2021a, Corollary 5). This could be worthwhile, though necessitates analysis of some extra practical trade-offs, which we elect not to explore in this work.

The method which we use to find the time to the next switch combines the QAGS (Quadrature Adaptive Gauss-Kronrod Singularities) integration routine from the GSL library (Galassi 2017), with Brent’s method (Press et al. 2007) for finding the root.
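The structure of this computation can be sketched in pure Python, with two clearly labelled substitutions: a recursive adaptive Simpson rule stands in for the QAGS routine of GSL, and plain bisection stands in for Brent's method. Since \(g(0) = -R < 0\) in (7), the root is first bracketed by doubling an upper end point. The function names are hypothetical.

```python
import math

# Illustrative sketch: adaptive Simpson replaces QAGS and bisection replaces Brent.


def adaptive_simpson(f, a, b, eps=1e-10, max_depth=60):
    """Recursive adaptive Simpson quadrature of f on [a, b]."""
    def simpson(lo, hi, f_lo, f_mid, f_hi):
        return (hi - lo) / 6.0 * (f_lo + 4.0 * f_mid + f_hi)

    def recurse(lo, hi, f_lo, f_mid, f_hi, whole, tol, depth):
        mid = 0.5 * (lo + hi)
        f_lm, f_rm = f(0.5 * (lo + mid)), f(0.5 * (mid + hi))
        left = simpson(lo, mid, f_lo, f_lm, f_mid)
        right = simpson(mid, hi, f_mid, f_rm, f_hi)
        if depth <= 0 or abs(left + right - whole) <= 15.0 * tol:
            # accept, with the usual Richardson correction
            return left + right + (left + right - whole) / 15.0
        return (recurse(lo, mid, f_lo, f_lm, f_mid, left, tol / 2.0, depth - 1)
                + recurse(mid, hi, f_mid, f_rm, f_hi, right, tol / 2.0, depth - 1))

    f_a, f_m, f_b = f(a), f(0.5 * (a + b)), f(b)
    return recurse(a, b, f_a, f_m, f_b, simpson(a, b, f_a, f_m, f_b), eps, max_depth)


def numerical_switch_time(rate, R, tol=1e-10):
    """Approximate root of g(tau) = integral_0^tau rate(s) ds - R, cf. Eq. (7).

    Assumes the integrated rate eventually exceeds R.
    """
    def g(t):
        return adaptive_simpson(rate, 0.0, t) - R

    lo, hi = 0.0, 1.0
    while g(hi) < 0.0:          # g(0) = -R < 0: double hi until the root is bracketed
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol:        # bisection (Brent's method would converge faster)
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Standard Gaussian in 1-D at x = 1.3 moving left (v = -1): lambda(s) = max(0, s - 1.3)
tau = numerical_switch_time(lambda s: max(0.0, s - 1.3), R=0.7)
print(tau, 1.3 + math.sqrt(1.4))   # numerical root vs closed-form root
```

The kink of the rate at \(s = 1.3\) is exactly the kind of non-smoothness discussed above; the adaptive rule simply refines around it.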

3.3 Brent’s algorithm

Common root-finding algorithms such as Newton–Raphson and the secant method have good theoretical properties, such as superlinear convergence to the root. However, if the target function is not extremely well behaved, these algorithms may converge very slowly, or not converge at all. Iteratively bisecting intervals is more reliable, as it converges linearly even when the function is poorly behaved; however, linear convergence is slower than the superlinear rates above.

Brent’s method (Press et al. 2007) was originally developed by Van Wijngaarden and Dekker in the 1960s, and was subsequently improved by Brent in 1973. Dekker’s root-finding method combined the secant method with bisection, to converge superlinearly when the function is well-behaved, while maintaining linear convergence if the target is particularly difficult. Unfortunately, Dekker’s algorithm may apply the secant method even when the resulting change to the current guess of the root is arbitrarily small, leading to very slow convergence.

Brent’s method introduces an additional check on the change that the secant method would apply to the current root guess. If the update is too small, the algorithm then performs a bisection step instead, which guarantees linear convergence in the worst case scenario.

Moreover, Brent’s method is superior to previous methods in that when it determines the new root guess via the secant method, it uses inverse quadratic interpolation instead of the usual linear interpolation.

Brent’s method also guards against the numerical instabilities caused by small denominators in the secant update by bracketing the root: as the iterations proceed, it stores and updates an interval in which the root is known to lie by the intermediate value theorem.
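The checks described above can be made concrete in a compact version of Brent's method. This is an illustrative sketch of a common textbook formulation (inverse quadratic interpolation or secant steps, a bisection fallback, and a maintained bracket), which may differ in details from the implementation used for NuZZ; the function name is hypothetical.

```python
import math

# Illustrative sketch of Brent's method (common textbook formulation).


def brent(f, a, b, tol=1e-12, max_iter=500):
    """Root of f in [a, b]; f(a) and f(b) must have opposite signs."""
    fa, fb = f(a), f(b)
    if fa * fb > 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    if abs(fa) < abs(fb):              # b is always the best guess so far
        a, b, fa, fb = b, a, fb, fa
    c, fc = a, fa                      # previous value of b
    d = c                              # value of b two steps ago
    bisected = True                    # was the last step a bisection?
    for _ in range(max_iter):
        if fb == 0.0 or abs(b - a) < tol:
            return b
        if fa != fc and fb != fc:
            # inverse quadratic interpolation through (a, fa), (b, fb), (c, fc)
            s = (a * fb * fc / ((fa - fb) * (fa - fc))
                 + b * fa * fc / ((fb - fa) * (fb - fc))
                 + c * fa * fb / ((fc - fa) * (fc - fb)))
        else:
            s = b - fb * (b - a) / (fb - fa)          # secant step
        q = (3.0 * a + b) / 4.0
        if (not (min(q, b) < s < max(q, b))
                or (bisected and abs(s - b) >= abs(b - c) / 2.0)
                or (not bisected and abs(s - b) >= abs(c - d) / 2.0)
                or (bisected and abs(b - c) < tol)
                or (not bisected and abs(c - d) < tol)):
            s = 0.5 * (a + b)                         # step rejected: bisect instead
            bisected = True
        else:
            bisected = False
        fs = f(s)
        d, c, fc = c, b, fb
        if fa * fs < 0:                               # keep the sign change in [a, b]
            b, fb = s, fs
        else:
            a, fa = s, fs
        if abs(fa) < abs(fb):
            a, b, fa, fb = b, a, fb, fa
    return b


print(brent(math.cos, 1.0, 2.0))   # approximately pi / 2
```

The size checks on the interpolated step are the additional safeguard that distinguishes Brent's method from Dekker's, and the final swap keeps the better end point as `b`.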

In this work, for a Zig-Zag process as defined in Sect. 2.1, the root returned by Brent’s method for each of the components, \(\widetilde{\tau }_i\), satisfies the following inequality

where \(\varepsilon _{\textrm{Bre}}\) is the user-specified numerical tolerance, and \(\widetilde{\lambda }_i\) is the piecewise linear approximation to \(\lambda _i\) induced by the numerical integration routine.

In Sect. 4 we discuss how we model the numerical tolerances for the NuZZ process, and what effect they have on the resulting MCMC sample. In the next sub-section, we discuss our actual implementation, where we reduce the number of integrals to be computed from d to 1.

3.4 Efficient implementation

The Zig-Zag Sampler discussed in Sect. 2.1 relies on sampling a switching time \(\tau _i\) for each component, then taking the minimum \(\tau = \min _{i=1,\ldots ,d} \tau _i\). This approach requires solving d integrals for each iteration. However, it is possible to reduce the cost to only one integral per iteration, by using the following procedure. First, sample \(\tau \) such that
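
\[ \int_0^{\tau} \Lambda(\textbf{x}(s),\textbf{v})\, \textrm{d}s = R, \qquad R \sim \textrm{Exp}(1), \]
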
where \(\Lambda (\textbf{x}(s),\textbf{v}):= \sum _{i=1}^d \lambda _i(\textbf{x}(s),\textbf{v})\) is the total rate of switching in any component. Then sample the index of the first component to switch, \(i^*\), from a multinomial (categorical) distribution with ith cell probability \(\lambda _i(\textbf{x}(\tau ),\textbf{v}) / \Lambda (\textbf{x}(\tau ),\textbf{v})\). The last step can be achieved efficiently by taking \(i^*\) such that
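
\[ \sum_{i=1}^{i^*-1} \lambda_i(\textbf{x}(\tau),\textbf{v}) \leqslant U\, \Lambda(\textbf{x}(\tau),\textbf{v}) < \sum_{i=1}^{i^*} \lambda_i(\textbf{x}(\tau),\textbf{v}), \qquad U \sim \textrm{Unif}[0,1), \]
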
and finding \(i^*\) by bisection search. This sampling method is used in the Gillespie algorithm, for Monte Carlo simulation of chemical reaction networks (Gillespie 1977), and is a standard result for Poisson processes.
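The selection of \(i^*\) by bisection on cumulative rates can be sketched with Python's standard `bisect` module. The function name is hypothetical, indices are 0-based, and the rates are assumed to be nonnegative with a positive sum.

```python
import bisect
import itertools
import random

# Illustrative sketch: choose the component to flip with probability lambda_i / Lambda.


def sample_switch_index(rates, rng):
    """Draw i* with probability rates[i] / sum(rates) (0-based index)."""
    cumulative = list(itertools.accumulate(rates))   # partial sums of the lambda_i
    target = rng.random() * cumulative[-1]           # U * Lambda with U ~ Unif[0, 1)
    # First index whose partial sum strictly exceeds U * Lambda, so components with
    # zero rate are never selected; min() guards against round-off at the top end.
    return min(bisect.bisect_right(cumulative, target), len(rates) - 1)


rng = random.Random(0)
print([sample_switch_index([0.0, 1.0, 3.0], rng) for _ in range(10)])
```

The bisection costs \(O(\log d)\) per event once the partial sums are formed.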

Proposition 1

A Zig-Zag process where switches happen at rate \(\Lambda (\textbf{x}(s),\textbf{v}) = \sum _{i=1}^d \lambda _i(\textbf{x}(s),\textbf{v})\), and the index of the velocity component to switch is distributed as
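
\[ \mathbb{P}(i^* = i) = \frac{\lambda_i(\textbf{x}(\tau),\textbf{v})}{\Lambda(\textbf{x}(\tau),\textbf{v})}, \qquad i = 1,\ldots,d, \]
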
targets the same invariant distribution \(\pi (\textbf{x})\) as the Zig-Zag process with generator given in (3).

Proof

This follows from standard results on Poisson processes. For a proof tailored to this case see Appendix A \(\square \)

Importantly, sampling \(\tau \) in this way reduces the number of integrals to be computed numerically from d to 1, at the price of a slight increase in the complexity of the integrand. Moreover, there may be more efficient implementations than bisection to find \(i^*\), which may reduce the computational complexity further.

It is worth pointing out that the benefits of this implementation of the Zig-Zag Sampler, i.e. working with the sum of rates, are not only applicable to the NuZZ algorithm. The approach can be applied to other Zig-Zag-based algorithms and PDMPs, and in fact it synergises particularly well with the Zig-Zag algorithm with control variates, as shown in Appendix B.

Even though the efficient implementation has the same theoretical cost as the original (without taking numerical approximations into consideration), our experiments suggest that it can be significantly faster in practice.

3.5 Reusing information

As integration over a similar range is repeated at every root finding iteration, we have modified the integration routine so that information can be shared through subsequent iterations of Brent’s method.

The QAGS routine performs a 10–21 Gauss–Kronrod numerical integral on the interval \([0,\widetilde{\tau }]\) at each root finding iteration, where \(\widetilde{\tau }\) is the current proposed root. If the estimated integration error is greater than the threshold \(\varepsilon _{\textrm{int}}\), the interval \([0,\widetilde{\tau }]\) is split into subintervals, on which new 10–21 numerical integrals are computed, and so on recursively.

Note that the value of the numerically approximated integral of the true rate \(\Lambda \) on \([0,\widetilde{\tau }]\) is equal to the value of the exact integral of \(\widetilde{\Lambda }\), the piecewise-linear approximation of the rate \(\Lambda \) obtained joining the nodes of the numerical integral.

If at a given iteration of the root finding algorithm the following inequality holds,

\[ \int_0^{\widetilde{\tau}} \widetilde{\Lambda}(s)\, \textrm{d}s < R, \]

then the quantities computed between 0 and \(\widetilde{\tau }\) can be saved and reused during the next iteration of the root finder. At the next iteration, the new proposed root would be \(\widetilde{\tau }'\), and the objective function can be decomposed as
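
\[ g(\widetilde{\tau}') = \int_0^{\widetilde{\tau}} \widetilde{\Lambda}(s)\, \textrm{d}s + \int_{\widetilde{\tau}}^{\widetilde{\tau}'} \widetilde{\Lambda}(s)\, \textrm{d}s - R, \]
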
where now we only have to compute the second integral, which typically requires fewer resources to achieve the desired level of precision. Because of this, the Gauss-Kronrod number of points was decreased from the original 10–21 to 7–15 points.
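A sketch of this caching logic, assuming a nonnegative rate (so that g is nondecreasing) and any quadrature routine `integrate(f, a, b)`: whenever \(g(t) < 0\), the root lies to the right of t, so the integral up to t is stored and only the increment is computed afterwards. A composite Simpson rule and plain bisection are used here as simple stand-ins for the adapted QAGS routine and Brent's method; the class and function names, and the `work` counter (total length integrated, a rough cost proxy), are hypothetical.

```python
import math

# Illustrative sketch: Simpson and bisection stand in for QAGS and Brent's method.


def simpson(f, a, b, n=64):
    """Composite Simpson rule with an even number n of panels (exact for cubics)."""
    h = (b - a) / n
    total = f(a) + f(b)
    total += 4.0 * sum(f(a + k * h) for k in range(1, n, 2))
    total += 2.0 * sum(f(a + k * h) for k in range(2, n, 2))
    return total * h / 3.0


class ReusableSwitchFunction:
    """g(t) = integral_0^t rate(s) ds - R, reusing the integral up to a cached point."""

    def __init__(self, rate, R, integrate=simpson):
        self.rate, self.R, self.integrate = rate, R, integrate
        self.t_saved, self.I_saved = 0.0, 0.0   # cached end point and its integral
        self.work = 0.0                          # total length integrated

    def __call__(self, t):
        if t >= self.t_saved:                    # only the increment is new work
            start, base = self.t_saved, self.I_saved
        else:                                    # otherwise integrate from scratch
            start, base = 0.0, 0.0
        integral = base + self.integrate(self.rate, start, t)
        self.work += t - start
        if integral < self.R and t > self.t_saved:
            self.t_saved, self.I_saved = t, integral   # root lies to the right: cache
        return integral - self.R


def bisect_root(g, lo, hi, tol=1e-10):
    """Plain bisection on a bracket with g(lo) < 0 <= g(hi)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


g = ReusableSwitchFunction(lambda s: s, R=1.5)   # integral s**2 / 2 reaches 1.5 at sqrt(3)
print(bisect_root(g, 0.0, 4.0), math.sqrt(3.0), g.work)
```

With a bracketing root finder every new guess lies above the cached point, so the total length integrated stays below the initial bracket width instead of growing with the number of iterations.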

Reusing information to compute \(\tau \) as described above involves the computation of multiple integrals. A naive implementation of the above policy will therefore only control the error of each sub-integral, which can lead to errors accumulating and potentially exceeding the desired user-specified tolerance. When the error is a substantial concern, one should be more conservative and control the error estimate for the entire integral at the nominal level. In practice, however, the integrator used in this work is often more precise than our problem requires, and the actual precision tends to be far higher than the numerical tolerance suggests.

4 Error and convergence analysis

In this section we study how the error induced by numerical procedures impacts the quality of the samples which are produced by NuZZ. In order to do this, we will consider NuZZ as a stochastic process that is a perturbation of the original process, the Zig-Zag sampler.

Our aim is to show that the ergodic averages which we calculate via NuZZ are close to the true equilibrium expectations of interest, with an error that can be quantified, and whose dependence on the numerical tolerances can be made transparent.

However, the continuous-time NuZZ process is not Markov, as numerical integration induces a mesh on the deterministic part of the trajectory that depends on both end-points. It is possible to enlarge the state space to make the NuZZ process Markov, but calculations quickly become unwieldy. Therefore we will follow an indirect route using the skeleton chain.

Recall that while the full Zig-Zag process \(\textbf{Z}_t = (\textbf{X}_t, \textbf{V}_t)\) evolves in continuous time, the differential equations (1) describing its behaviour in-between velocity switches are trivially solvable, and so the full process can be reconstructed from the skeleton chain of points at which velocities change, \(\textbf{Y}_k =(\textbf{X}_{T_k}, \textbf{V}_{T_k})\). Explicitly,
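
\[ \textbf{X}_t = \textbf{X}_{T_k} + (t - T_k)\, \textbf{V}_{T_k}, \qquad \textbf{V}_t = \textbf{V}_{T_k}, \qquad t \in [T_k, T_{k+1}). \tag{12} \]
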
Our strategy for bounding the impact of numerical errors on ergodic averages therefore involves bounding the discrepancy between the skeleton chain from the exact process, and the skeleton chain from the NuZZ process. One can then reconstruct ergodic averages via interpolation using (12).
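Reconstructing the continuous path from the skeleton, and computing time averages from it, takes only a few lines. In this sketch (hypothetical helper names) a skeleton is a list of tuples \((T_k, \textbf{x}_k, \textbf{v}_k)\), with \(\textbf{v}_k\) the velocity after the switch at \(T_k\), and each segment is integrated with one Simpson panel. This is exact for polynomial test functions of degree at most three, because the path is linear between switches, and an approximation otherwise.

```python
import bisect

# Illustrative sketch: skeleton = [(T_k, x_k, v_k), ...] with v_k the post-switch velocity.


def position_at(t, skeleton):
    """X_t from the skeleton by linear interpolation (assumes T_0 <= t)."""
    times = [T for T, _, _ in skeleton]
    k = bisect.bisect_right(times, t) - 1      # last switching time T_k <= t
    T_k, x_k, v_k = skeleton[k]
    return [xi + (t - T_k) * vi for xi, vi in zip(x_k, v_k)]


def ergodic_average(f, skeleton):
    """(1/T) * integral of f(X_t) over [T_0, T_last], one Simpson panel per segment."""
    total = 0.0
    for (t0, x0, v0), (t1, _, _) in zip(skeleton, skeleton[1:]):
        tau = t1 - t0
        x_mid = [xi + 0.5 * tau * vi for xi, vi in zip(x0, v0)]
        x_end = [xi + tau * vi for xi, vi in zip(x0, v0)]
        total += tau / 6.0 * (f(x0) + 4.0 * f(x_mid) + f(x_end))
    return total / (skeleton[-1][0] - skeleton[0][0])


# A toy 1-D skeleton: switches at T = 0, 1, 3, with the path stopped at T = 4.
skeleton = [(0.0, [0.0], [1]), (1.0, [1.0], [-1]), (3.0, [-1.0], [1]), (4.0, [0.0], [1])]
print(position_at(2.0, skeleton), ergodic_average(lambda x: x[0] ** 2, skeleton))
```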

4.1 Results

Our final result, contained in Theorem 1, can be summarised with the following corollary. Let \(\varepsilon _{\textrm{int}}, \varepsilon _{\textrm{Bre}}\) be the user-defined error tolerances for the numerical (QAGS) integration routine and for Brent’s method respectively, and let \(\eta _{\textrm{int}}\) be the integration error.

Let us assume now (we will motivate these assumptions later on in this section) that

1. \(\eta _{\textrm{int}} \leqslant \varepsilon _{\textrm{int}}\), i.e. the true numerical integration error never exceeds the prescribed tolerance.
2. The total refreshment rate \(\Gamma \) is strictly positive.
3. Every element of the Hessian of \(\log \pi (\textbf{x})\) is globally bounded from above by the constant M.
4. The skeleton chain \(\textbf{Y}_k\) of the Zig-Zag process without numerical errors, \(\textbf{Z}_t\), is Wasserstein geometrically ergodic.
5. The skeleton chain \(\widetilde{\textbf{Y}}_k\) of the Zig-Zag process with numerical errors (the NuZZ process), \(\widetilde{\textbf{Z}}_t\), is Wasserstein geometrically ergodic.
6. The average interarrival time for the process \(\textbf{Z}_t\) exists and is equal to H.
7. The average interarrival time for the process \(\widetilde{\textbf{Z}}_t\) exists and is equal to \(\widetilde{H}\).

Then we will be able to obtain the following result.

Corollary 1

Let \(\textbf{Z}_t\) be the exact Zig-Zag process on the state space E with stationary measure \(\mu \), and let \(\widetilde{\textbf{Z}}_t\) be the corresponding NuZZ process with tolerances \(\left( \varepsilon _{\textrm{Bre}}, \varepsilon _{\textrm{int}} \right) \). Under Assumptions 1–7, there exists a probability measure \(\widetilde{\mu } = \widetilde{\mu }_{\varepsilon _{\textrm{Bre}}, \varepsilon _{\textrm{int}}}\) on E such that for all bounded, Lipschitz functions f with compact support, the limit

\[ \lim _{T \rightarrow \infty } \frac{1}{T} \int _0^T f\left( \widetilde{\textbf{Z}}_t \right) \textrm{d}t \]

exists almost surely and takes the value

\[ \widetilde{\mu }(f). \]

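Moreover, defining the Kantorovich-Rubinstein distance as

\[ d_{\textrm{KR}}\left( \mu , \widetilde{\mu }\right) = \sup _{f:\, | f |_{\textrm{Lip}} \leqslant 1} \left| \mu (f) - \widetilde{\mu }(f) \right| , \]

where \(| \cdot |_{\textrm{Lip}}\) is the Lipschitz seminorm, it holds that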

where \(\varepsilon _{\textrm{Tot}} = \varepsilon _{\textrm{int}} +\varepsilon _{\textrm{Bre}}\), for a constant c which depends only on the convergence properties of the exact process \(\textbf{Z}_t\).
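For intuition on the Kantorovich-Rubinstein distance, the following sketch works in one dimension, where by Kantorovich-Rubinstein duality it coincides with the 1-Wasserstein distance, computed here with SciPy's `scipy.stats.wasserstein_distance`. It checks that no bounded, 1-Lipschitz test function with compact support (here, hypothetical "tent" functions) separates two samples by more than this distance. The two Gaussian samples are placeholders for draws from \(\mu \) and \(\widetilde{\mu }\), not NuZZ output, and the helper names are illustrative.

```python
import numpy as np
from scipy.stats import wasserstein_distance

def tent(centre):
    """Bounded, 1-Lipschitz test function with compact support [centre - 1, centre + 1]."""
    return lambda z: np.maximum(0.0, 1.0 - np.abs(z - centre))

def largest_gap(x, y, test_functions):
    """max_f |mu_x(f) - mu_y(f)| over a finite family of test functions."""
    return max(abs(np.mean(f(x)) - np.mean(f(y))) for f in test_functions)

rng = np.random.default_rng(0)
exact = rng.standard_normal(5_000)                    # placeholder for draws from mu
perturbed = 0.05 + 1.1 * rng.standard_normal(5_000)   # placeholder for draws from mu-tilde

tests = [tent(c) for c in np.linspace(-2.0, 2.0, 9)]
w1 = wasserstein_distance(exact, perturbed)   # equals the KR distance in one dimension
gap = largest_gap(exact, perturbed, tests)    # any finite family only gives a lower bound
print(f"KR (= W1) distance: {w1:.4f}; largest tent-function gap: {gap:.4f}")
```

Restricting the supremum to a finite family of test functions can only underestimate the distance, which is why the gap is always below `w1`.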

This bound motivates the following considerations.

Remark 1

We note that the dependence of this bound on \(\Gamma \) may not be tight, and so it may be possible to use other techniques to obtain similar stability results in the degenerate case in which \(\Gamma =0\). It would also be natural to devise a specialised root-finding routine which is adapted to this setting. We leave such specialisations for future work.

Remark 2

Consider the bound in Eq. (16), and a sequence of model problems in dimension d, with Hessian bounds growing as \(M \sim d^a\), numerical tolerances tightening as \(\varepsilon _{\textrm{Tot}} \sim d^{-b}\), and aggregate refreshment rates shrinking as \(\Gamma \sim d^{-c}\) with \(a, b, c \geqslant 0\). We choose to consider bounded \(\Gamma \) as it represents a minimal perturbation to the non-reversible character of the original process.

In order to have the error bound on the ergodic averages decaying to 0 as the dimension grows, we must have

\[ b > a + c + 1. \]

Since the cost per unit time of simulating the process grows with b, an efficient algorithm should keep b as small as possible. Given the constraint \(b > a + c + 1\), it suffices to take \(c = 0\) and \(b > a + 1\), i.e. to let \(\Gamma \) be of constant order and take \(\varepsilon _{\textrm{Tot}} = o \left( 1 / (Md) \right) \).

One outcome of this argument is to confirm the intuition that for more irregular targets (i.e. with larger M), a tighter numerical tolerance \(\varepsilon _{\textrm{Tot}}\) is required in order to faithfully resolve the event times and jump decisions of the process numerically, and hence to accurately reproduce equilibrium expectation quantities.
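The exponent bookkeeping of Remark 2 can be checked mechanically. The sketch below assumes only what the condition \(b > a + c + 1\) implies, namely that the right-hand side of Eq. (16) decays exactly when the proxy \(M d \varepsilon _{\textrm{Tot}} / \Gamma \sim d^{a + c + 1 - b}\) does; constants are ignored, and the helper names and the `margin` parameter are hypothetical.

```python
def proxy_exponent(a, b, c):
    """Exponent e with M * d * eps_tot / Gamma ~ d**e when
    M ~ d**a, eps_tot ~ d**(-b) and Gamma ~ d**(-c) (the scalings of Remark 2)."""
    return a + c + 1 - b

def tolerance_for_dimension(d, a, c=0.0, margin=0.5):
    """Hypothetical schedule eps_tot = d**-(a + c + 1 + margin), i.e. b = a + c + 1 + margin."""
    return d ** (-(a + c + 1 + margin))

a, c = 1.0, 0.0        # Hessian bound growing linearly in d; Gamma of constant order
gamma_total = 1.0
proxies = []
for d in [10, 100, 1000, 10000]:
    M = d ** a
    eps_tot = tolerance_for_dimension(d, a, c)
    proxies.append(M * d * eps_tot / gamma_total)
    print(f"d={d:>6}: eps_tot={eps_tot:.1e}, M*d*eps_tot/Gamma={proxies[-1]:.3e}")

# A negative exponent means the proxy (and hence the assumed bound) decays with d.
print("exponent:", proxy_exponent(a, a + c + 1 + 0.5, c))
```

With \(a = 1\) the schedule gives \(\varepsilon _{\textrm{Tot}} \sim d^{-2.5}\), so the proxy decays like \(d^{-1/2}\).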

In order to produce these results, we start by recalling some facts about Markov chains and Wasserstein distances that will be of use later.

4.2 Wasserstein distance

In Rudolf and Schweizer (2018) the authors derive perturbation results for Markov chains which are formulated in terms of the 1-Wasserstein distance between transition kernels. For our calculations, it is more convenient to work instead with the 2-Wasserstein distance. Hence, in this section, we report some basic results on Markov chains and Wasserstein distances, and prove that the relevant results in Rudolf and Schweizer (2018) do indeed extend to the 2-Wasserstein case. For brevity, many of the results of this section have been moved to Appendix C, as they are technical and are not used directly in the main body of this work. Let us now define the following.

Let \(E \subseteq {\mathbb {R}}^d\), and equip it with its Borel \(\sigma \)-algebra \({\mathcal {B}}(E)\). Let \({\mathcal {P}}\) be the set of Borel probability measures on E, and write \({\mathcal {P}}_2 \subset {\mathcal {P}}\) for the subset of probability measures with finite second moment. The 2-Wasserstein distance between \(\mu _1,\mu _2 \in {\mathcal {P}}_2\) is then given by

\[ d_W\left( \mu _1, \mu _2\right) = \left( \inf _{\xi \in \Xi } \int _{E \times E} \Vert \textbf{x}_1 - \textbf{x}_2 \Vert _2^2 \, \xi \left( \textrm{d}\textbf{x}_1, \textrm{d}\textbf{x}_2\right) \right) ^{1/2}, \]

where \(\Xi \) is the set of all couplings of \(\mu _1\) and \(\mu _2\), i.e. probability measures on \(E \times E\) with marginals \(\mu _1\) and \(\mu _2\), and \(\Vert \cdot \Vert _2\) is the 2-norm. We will use the following notation for the expectation of a function f with respect to the measure \(\mu _1\):

\[ \mu _1(f) = \int _E f(\textbf{x}) \, \mu _1(\textrm{d}\textbf{x}). \]

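Let \(P:{\mathcal {P}}_2 \rightarrow {\mathcal {P}}_2\) denote a transition kernel on \((E, {\mathcal {B}}(E))\) which sends probability measures with finite second moment to probability measures with finite second moment. Then, for \(\mu \in {\mathcal {P}}_2\) and \(A \in {\mathcal {B}}(E)\),

\[ \mu P(A) = \int _E P(\textbf{x}, A) \, \mu (\textrm{d}\textbf{x}). \]

This implies that \(\delta _{\textbf{x}_1} P(A) = P(\textbf{x}_1,A)\), and for a measurable function \(f: E \rightarrow {\mathbb {R}}\),

\[ \mu P(f) = \mu (Pf), \]

where

\[ Pf(\textbf{x}) = \int _E f(\textbf{y}) \, P(\textbf{x}, \textrm{d}\textbf{y}). \]
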
Definition 1

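(Wasserstein Geometric Ergodicity of Markov kernels) We say that the transition kernel P is Wasserstein geometrically ergodic with constants \((C, \rho ) \in [0, \infty ) \times [0, 1)\) if, for all \(n \in {\mathbb {N}}\) and all \(\textbf{x}, \textbf{y} \in E\), it holds that

\[ d_W\left( \delta _{\textbf{x}} P^n, \delta _{\textbf{y}} P^n\right) \leqslant C \rho ^n \Vert \textbf{x} - \textbf{y} \Vert . \]
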
Proposition 2

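Let P and \(\widetilde{P}\) be Markov kernels such that P is Wasserstein geometrically ergodic with constants \((C, \rho )\), and \(d_{W}\left( \delta _\textbf{x} P,\delta _\textbf{x} \widetilde{P}\right) \leqslant \epsilon \) for all \(\textbf{x}\). Let \(\pi _n\) and \(\widetilde{\pi }_n\) be the measures defined by \(\pi _n = \pi _0 P^n\) and \(\widetilde{\pi }_n = \widetilde{\pi }_0 \widetilde{P}^n\). It then holds that

\[ d_W\left( \pi _n, \widetilde{\pi }_n\right) \leqslant C \rho ^n d_W\left( \pi _0, \widetilde{\pi }_0\right) + \frac{C \epsilon \left( 1 - \rho ^n\right) }{1 - \rho }. \]
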
Proof

See Appendix C. \(\square \)
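A one-dimensional Gaussian autoregression makes this kind of perturbation bound concrete. In this toy model (not the Zig-Zag skeleton chain) the exact kernel is \(P(x, \cdot ) = N(\rho x, \sigma ^2)\) and a hypothetical perturbed kernel adds a constant bias \(\delta \), so that \(d_W(\delta _x P^n, \delta _y P^n) = \rho ^n |x - y|\) (geometric ergodicity with \(C = 1\)) and \(d_W(\delta _x P, \delta _x \widetilde{P}) = \delta = \epsilon \). Assuming the bound of Proposition 2 has the usual form \(C \rho ^n d_W(\pi _0, \widetilde{\pi }_0) + C\epsilon (1-\rho ^n)/(1-\rho )\), the sketch shows that it is attained with equality here, using the closed-form 2-Wasserstein distance between Gaussians.

```python
import math

def gaussian_w2(m1, s1, m2, s2):
    """Closed-form 2-Wasserstein distance between N(m1, s1**2) and N(m2, s2**2)."""
    return math.hypot(m1 - m2, s1 - s2)

def ar1_marginal(x0, n, rho, sigma, bias=0.0):
    """Mean and std of X_n for X_{k+1} = rho * X_k + bias + sigma * xi_k, X_0 = x0."""
    mean = rho**n * x0 + bias * (1.0 - rho**n) / (1.0 - rho)
    var = sigma**2 * (1.0 - rho**(2 * n)) / (1.0 - rho**2)
    return mean, math.sqrt(var)

rho, sigma, delta, x0 = 0.9, 1.0, 0.01, 2.0   # hypothetical parameters
C, eps = 1.0, delta                            # constants for this toy pair of kernels

gaps, bounds = [], []
for n in range(1, 51):
    m, s = ar1_marginal(x0, n, rho, sigma)            # pi_n = delta_{x0} P^n
    mt, st = ar1_marginal(x0, n, rho, sigma, delta)   # tilde pi_n = delta_{x0} tilde P^n
    gaps.append(gaussian_w2(m, s, mt, st))
    # Both chains start from delta_{x0}, so the C rho^n d_W(pi_0, tilde pi_0) term vanishes.
    bounds.append(C * eps * (1.0 - rho**n) / (1.0 - rho))

print(f"n=50: W2 gap {gaps[-1]:.6f}, bound {bounds[-1]:.6f}, "
      f"limit C*eps/(1-rho) = {C * eps / (1 - rho):.6f}")
```

As \(n \rightarrow \infty \) both sides tend to \(C\epsilon /(1-\rho )\), which in this example is exactly the distance between the two stationary laws \(N(0, \sigma ^2/(1-\rho ^2))\) and \(N(\delta /(1-\rho ), \sigma ^2/(1-\rho ^2))\).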

Proposition 3

If a Markov kernel P is Wasserstein geometrically ergodic, then it has an ergodic invariant measure \(\pi \) with finite second moment, which is therefore unique. Furthermore, for any measure \(\nu \) with finite second moment, it holds that \(\nu P^n \rightarrow \pi \) in the Wasserstein distance.

Proof

See Appendix C. \(\square \)

We are now ready to discuss results specific to the Zig-Zag process.

4.3 Zig-Zag notation

Recall that \(\textbf{Z}_t=(\textbf{X}_t, \textbf{V}_t)\) is the exact (Zig-Zag) continuous-time Markov process defined on the state space \(E = {\mathcal {X}} \times {\mathcal {V}}\), with stationary measure \(\mu (\textrm{d} \textbf{x}, \textrm{d} \textbf{v}) = \pi (\textrm{d} \textbf{x}) \otimes \psi (\textrm{d} \textbf{v})\). Let \(T_k\) be the kth switching time of the Zig-Zag process, and let \(\textbf{Y}_k = (\textbf{X}_{T_k}, \textbf{V}_{T_k})\) for \(k=1,...,n\) be the skeleton Markov chain, with stationary measure \(\nu (\textrm{d} \textbf{x}, \textrm{d} \textbf{v})\).

Let \(P:E\rightarrow E\) denote the Markov transition kernel of the skeleton chain \(\textbf{Y}_k\). Intuitively, applying P to a state \((\textbf{x},\textbf{v})\) of the process involves sampling a new \(\tau \), pushing the dynamics forward from \((\textbf{x}, \textbf{v})\) to \((\textbf{x}+\tau \textbf{v}, \textbf{v})\), and sampling a new velocity from the distribution associated with the transition kernel \(Q(\textbf{v}'|\textbf{x}+\tau \textbf{v},\textbf{v})\).
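Specialising this description of P to a d-dimensional standard Gaussian target, where event times can be obtained by inversion (as for icdfZZ), gives the following sketch of one skeleton-chain step. It assumes equal refreshment rates \(\gamma _i = \gamma \) and velocities in \(\{-1, 1\}^d\), and uses the standard superposition construction (one exponential clock per coordinate; the earliest clock fires), which is equivalent in law to drawing \(\tau \) from the total rate and then choosing the component to flip with probability proportional to its rate. The function names are hypothetical.

```python
import numpy as np

def event_time(a, gamma, r):
    """Solve int_0^tau (max(0, a + s) + gamma) ds = r for tau >= 0 by inversion.

    For a standard Gaussian target, coordinate i has rate
    max(0, v_i * (x_i + s * v_i)) + gamma = max(0, a + s) + gamma, with a = v_i * x_i, v_i = +-1."""
    if a >= 0.0:
        b = a + gamma
        return -b + np.sqrt(b * b + 2.0 * r)
    if gamma * (-a) >= r:                  # threshold reached while only refreshment acts
        return r / gamma
    u = -gamma + np.sqrt(gamma**2 + 2.0 * (r + gamma * a))   # u = tau + a > 0
    return u - a

def skeleton_step(x, v, gamma, rng):
    """One transition of the skeleton chain Y_k: draw tau, move, flip one velocity entry."""
    r = rng.exponential(1.0, size=x.size)   # one Exp(1) threshold per coordinate clock
    taus = np.array([event_time(v[i] * x[i], gamma, r[i]) for i in range(x.size)])
    i = int(np.argmin(taus))                # the earliest clock determines tau and the flip
    tau = taus[i]
    x_new = x + tau * v                     # deterministic linear motion
    v_new = v.copy()
    v_new[i] = -v_new[i]                    # velocity update: flip component i
    return x_new, v_new, tau

rng = np.random.default_rng(42)
x, v = np.zeros(3), np.array([1.0, -1.0, 1.0])
for _ in range(5):
    x, v, tau = skeleton_step(x, v, gamma=0.001, rng=rng)
    print(np.round(x, 3), v, round(tau, 3))
```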

Let us also define \(\textbf{U}_k = ( \textbf{Y}_{k-1}, \textbf{Y}_k)\) on \(E \times E\) (or equivalently, \(E^2\)) as the ‘extended’ skeleton chain, which includes two consecutive states of the \(\textbf{Y}_k\) chain, with invariant measure \(\zeta \). As mentioned above, our proof strategy is based on the interpolation of the skeleton chain, and the process \(\textbf{U}_k\) will be a key component.

Lastly, we place tildes above all of the quantities above to denote their perturbed versions under numerical approximation. In this spirit, if \(\textbf{Z}_t\) is the exact Zig-Zag process, \(\widetilde{\textbf{Z}}_t\) is the NuZZ process. If \(\textbf{Y}_k\) is the skeleton chain of the Zig-Zag process, then \(\widetilde{\textbf{Y}}_k\) is the skeleton chain of NuZZ, and if P is the Markov transition kernel that moves the skeleton chain of the exact process one step forward, then \(\widetilde{P}\) is its numerical equivalent. The same convention applies to the other relevant objects. We assume that all of these quantities exist, and we will address their properties below. In particular, in the next section we will describe the analytical form that numerical errors take for the NuZZ algorithm, which will be useful in bounding discrepancies between the exact and numerical approximation of the objects described above.

For notational simplicity, we use the unlabelled norm \(\Vert \cdot \Vert \) to represent a 2-norm, and norms defined on elements of the extended state space E and \(E \times E\) (denoted as \(E^2\)) are defined as

4.4 Perturbation bound for the skeleton chain

Under appropriate conditions that we detail below, a sufficient condition for the processes \(\textbf{Z}_t\) and \(\widetilde{\textbf{Z}}_t\) to produce comparable ergodic behaviour is that \(\textbf{Z}_t\) contracts towards equilibrium sufficiently rapidly, and that the transition kernels P and \(\widetilde{P}\) should be close to each other in a uniform sense. We emphasise that \(\widetilde{P}\) is indeed a Markov kernel, which stands in contrast to the non-Markovian nature of the continuous-time process \(\widetilde{\textbf{Z}}_t\); this property is what enables our proof strategy. By construction, the closeness of P and \(\widetilde{P}\) holds for NuZZ if, on average, (i) the new locations \(\textbf{x}', \widetilde{\textbf{x}}'\) are close to one another, and (ii) the new velocities \(\textbf{v}', \widetilde{\textbf{v}}'\) are close to one another. By analysing the numerical error, we will show in Proposition 4 that this holds.

Recall that in Sect. 3.2 we described how NuZZ relies on two different numerical methods to solve Eq. (7): an adaptive integration routine and Brent’s method, each requiring a user-set tolerance \(\varepsilon _{\textrm{int}}\) and \(\varepsilon _{\textrm{Bre}}\), respectively.
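A simplified version of this two-level scheme can be written with SciPy, whose `scipy.integrate.quad` wraps the QUADPACK routines (QAGS for finite intervals) and whose `scipy.optimize.brentq` implements Brent's method. This is a sketch, not the NuZZ implementation: it recomputes the integral from scratch at every Brent iterate instead of reusing a piecewise polynomial approximation, it brackets the root by a hypothetical doubling rule, and `brentq`'s `xtol` controls the error in \(\tau \) rather than the residual on which \(\varepsilon _{\textrm{Bre}}\) acts in the text. The test rate is one Zig-Zag coordinate on a standard Gaussian, for which the exact event time is known.

```python
import numpy as np
from scipy.integrate import quad
from scipy.optimize import brentq

def numerical_event_time(rate, r, eps_int=1e-10, xtol=1e-10, t_init=1.0):
    """Solve int_0^tau rate(s) ds = r for tau (sketch of a NuZZ-style event time).

    rate: callable s -> Lambda(x + s v, v), assumed >= Lambda_min > 0
    r:    exponential threshold, e.g. -log(U) with U ~ Uniform(0, 1)
    """
    def residual(tau):
        integral, _abserr = quad(rate, 0.0, tau, epsabs=eps_int, epsrel=0.0, limit=200)
        return integral - r

    hi = t_init
    while residual(hi) < 0.0:     # double the bracket until it contains the root
        hi *= 2.0
    return brentq(residual, 0.0, hi, xtol=xtol)

gamma = 0.1

def rate(s):
    # One coordinate of the Zig-Zag process on N(0, 1) with v * x = -1 and refreshment gamma.
    return max(0.0, -1.0 + s) + gamma

tau_num = numerical_event_time(rate, r=1.0)
tau_exact = 1.0 - gamma + np.sqrt(gamma**2 + 2.0 * (1.0 - gamma))  # analytic inversion
print(tau_num, tau_exact)
```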

In our specific setting, fixing R at the beginning of each MCMC iteration, the error at each iteration of Brent’s method can be written as

where \(\tilde{\Lambda }\) is the piecewise polynomial approximation to \(\Lambda \) induced by the integration routine, and \(\widetilde{\tau }\) \((\ne \tau )\) is the root currently returned by the algorithm. Using Eq. (9) and adding and subtracting terms in Eq. (26), we obtain the following error decomposition

The term \(\eta _{\textrm{int}}\) represents the component of the total Brent error arising from the numerical integration routine, while the term \(\eta _{\textrm{root}}\) represents the component arising from the fact that, even if the exact rate were integrated, the integral would be calculated up to \(\widetilde{\tau }\) instead of up to \(\tau \).

Let us now make an assumption on the smoothness of the event rates of the process.

Assumption 1

The function \(\Lambda \) is continuous, uniformly bounded below by a constant \(\Lambda _{\textrm{min}} > 0\), and is sufficiently well-behaved that the numerical error \(\eta _{\textrm{int}}\) is always bounded by the given tolerance \(\varepsilon _{\textrm{int}}\).

Remark 3

The quadrature rules used by NuZZ make a polynomial approximation to the integrand, and so we expect that the true numerical error will be controlled at the nominal tolerance level when event rates are locally well-approximated by polynomials, i.e. have good local smoothness properties. We do not find this to be a substantial practical restriction.

In our specific case, the integration routine is adaptive, and it stops refining the result when the estimated integration error \(\eta _{\textrm{int}}\), obtained by comparing the Gauss rule with its Kronrod extension on each subinterval, is below the tolerance threshold \(\varepsilon _{\textrm{int}}\). An irregular \(\Lambda \) with very tall and thin spikes can in principle fool the integrator, but the QAGS routine places more nodes around regions where the integrand varies rapidly, to minimise the impact of the irregularities on the final value of the integral. Therefore, only a very pathological \(\Lambda \), e.g. one with very large or infinite values of certain derivatives, is likely to cause Assumption 1 to fail. Hence we expect this assumption to hold for most densities arising in statistical applications, including all targets studied in this work. Let us now proceed with the discussion.
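The adaptive behaviour described above can be observed through SciPy's `quad`, which wraps QUADPACK's QAGS for finite intervals and, with `full_output=1`, reports the number of subintervals it created (`infodict["last"]`). The sketch compares a smooth integrand with a tall, thin Lorentzian spike standing in for an irregular \(\Lambda \); both integrands and the tolerances are illustrative choices, not those of NuZZ.

```python
import numpy as np
from scipy.integrate import quad

def smooth_rate(s):
    return np.exp(-s)

def spiky_rate(s, centre=0.3, width=0.01):
    """Tall, thin (but smooth) Lorentzian spike with peak height 1 / width**2."""
    return 1.0 / (width**2 + (s - centre) ** 2)

def integrate_with_diagnostics(f, lo=0.0, hi=1.0):
    """Return (value, estimated absolute error, number of subintervals) from QAGS."""
    out = quad(f, lo, hi, epsabs=1e-8, epsrel=1e-10, limit=200, full_output=1)
    value, abserr, info = out[:3]   # a warning message is appended if QAGS struggles
    return value, abserr, info["last"]

for name, f in [("smooth", smooth_rate), ("spiky", spiky_rate)]:
    value, abserr, n_sub = integrate_with_diagnostics(f)
    print(f"{name:>6}: value={value:.10f}, est. error={abserr:.1e}, subintervals={n_sub}")
```

The spike forces repeated bisection around its centre, while the smooth integrand is resolved on far fewer subintervals; both integrals nonetheless meet the requested tolerance.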

Brent’s method will continue iterating until \(|\eta _{\textrm{Bre}}| \le \varepsilon _{\textrm{Bre}}\). If Assumption 1 holds, the root error \(\eta _{\textrm{root}}\) at the last iteration of Brent’s method can be bounded as

Consequently, \(\eta _{\textrm{root}} \rightarrow 0\) as \(\varepsilon _{\textrm{Bre}}, \varepsilon _{\textrm{int}} \rightarrow 0\). This does not by itself show that \(\widetilde{\tau } \rightarrow \tau \), which is the subject of Lemma 1.

Lemma 1

Let \(\tau \) be the exact switching time found by solving Eq. (7), and \(\widetilde{\tau }\) be the approximated switching time obtained by solving Eq. (26). Let Assumption 1 and Eq. (28) hold. The difference between the exact and numerically approximate switching time can be bounded (deterministically) as

Proof

See Appendix D. \(\square \)

Note that when implemented without a refreshment procedure, Eq. (29) can diverge as \(\Lambda _{\textrm{min}}\) may degenerate to 0. The degradation of this bound corresponds to the possibility of serious discrepancies between the inter-jump times for the exact and numerical chain, which is a concrete possibility for PDMPs which have vanishing event rates over nontrivial parts of the state space. This problem can be circumvented by taking a baseline position-independent refreshment rate of \(\gamma _i(\textbf{z}) \equiv \gamma _i>0\), with \(\Lambda _{\textrm{min}} = \Gamma = \sum \gamma _i = d \gamma \). This parameter will also appear in a similar position in Theorem 1. Let us formally make the assumption.

Assumption 2

Let the baseline switching rate \(\Gamma = \sum \gamma _i = d \gamma \) be strictly greater than zero.

One practical interpretation of the bound in Eq. (29) is that if the numerical tolerances are high, then a large value of \(\Gamma \) is necessary in order to avoid large discrepancies in computation of the inter-jump times. Likewise, as the numerical tolerances become smaller, the baseline refreshment rate \(\Gamma \) can also be taken smaller.
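The mechanism behind Lemma 1, and the role of \(\Gamma \), can be seen in a one-dimensional toy computation. Because \(\Lambda \geqslant \Lambda _{\textrm{min}}\), the integrated rate increases with slope at least \(\Lambda _{\textrm{min}}\), so an error \(\eta \) in the integral moves the root by at most \(|\eta |/\Lambda _{\textrm{min}}\). When the root falls where only refreshment is active, this is attained with equality, and the shift grows like \(1/\Gamma \) as \(\Gamma \) shrinks. The state, threshold and error size below are hypothetical values chosen to land in that regime.

```python
from scipy.optimize import brentq

def integrated_rate(tau, a, gamma):
    """int_0^tau (max(0, a + s) + gamma) ds: one Zig-Zag coordinate on a standard
    Gaussian target, with a = v * x and refreshment rate gamma = Lambda_min."""
    return gamma * tau + 0.5 * (max(0.0, tau + a) ** 2 - max(0.0, a) ** 2)

def event_time(r, a, gamma):
    """Root of integrated_rate(tau) = r, bracketed by doubling and found with brentq."""
    hi = 1.0
    while integrated_rate(hi, a, gamma) < r:
        hi *= 2.0
    return brentq(lambda t: integrated_rate(t, a, gamma) - r, 0.0, hi, xtol=1e-14)

a, r, eta = -5.0, 0.004, 1e-4   # hypothetical state and threshold; eta mimics an integration error
shifts = {}
for gamma in [1e-1, 1e-2, 1e-3]:
    shifts[gamma] = abs(event_time(r + eta, a, gamma) - event_time(r, a, gamma))
    print(f"gamma={gamma:.0e}: |tau_tilde - tau| = {shifts[gamma]:.2e}, eta/gamma = {eta / gamma:.2e}")
```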

Let us now prove the second component needed for Proposition 4. Intuitively, if \(\tau \) and \(\widetilde{\tau }\) are close to each other, then the Zig-Zag process and NuZZ both starting from \((\textbf{x}, \textbf{v})\) will remain close at \((\textbf{x} + \tau \textbf{v}, \textbf{v})\) and \((\textbf{x} + \widetilde{\tau } \textbf{v}, \textbf{v})\) respectively. With the following lemma, we ensure that when the two processes reach their respective new switching points, the new velocities which are sampled according to the transition kernel \(Q(\textbf{v}'|\textbf{x},\textbf{v})\) are likely to remain the same.

Let us now make the following assumption.

Assumption 3

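Let the entries of the Hessian of \(\log \pi (\textbf{x})\) be uniformly bounded in absolute value by a constant M, i.e.

\[ \left| \frac{\partial ^2 \log \pi (\textbf{x})}{\partial x_i \, \partial x_j} \right| \leqslant M \quad \textrm{for all } \textbf{x} \in {\mathcal {X}} \textrm{ and } i, j = 1, \ldots , d. \]
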
Remark 4

Note that Assumption 3 is made for the purposes of analysis. To run the algorithm in practice, explicit knowledge of the constant M is not required. Indeed, the adaptive root-finding solvers used in NuZZ are expected to be quite robust to the precise value of M. Nevertheless, if this value is large, then our error bounds will deteriorate.

Lemma 2

Let Assumptions 2 and 3 hold. Let us also assume that the process is implemented with coordinate-wise refreshment rates of \(\gamma _i > 0\), with sum \(\Gamma = \sum _i \gamma _i = d\gamma \). Then, for all \(\left( \textbf{x}, \textbf{v}, \textbf{x}', \textbf{v}'\right) \), it holds that

Proof

See Appendix D. \(\square \)

We can now use Lemmas 1 and 2 to show that the transition kernels P and \(\widetilde{P}\) are close to each other.

Proposition 4

(Wasserstein closeness of P and \(\widetilde{P}\)) Let Assumptions 1, 2 and 3 hold. Let \(\widetilde{P}\) be the Markov transition kernel for NuZZ, parametrised by the numerical tolerances \(\varepsilon _{\textrm{Bre}}\) and \(\varepsilon _{\textrm{int}}\). Let \(\Gamma > 0\) be the baseline switching rate of both ZZS and NuZZ. For any point \(\textbf{z} = (\textbf{x},\textbf{v}) \in E\), it holds that

where \(d_W\) is the 2-Wasserstein distance as defined in Equation (18), and

Proof

See Appendix D. \(\square \)

4.5 Invariant measures and convergence

It is challenging to make statements on the asymptotic law \(\widetilde{\mu }\) of \(\widetilde{\textbf{Z}}_t\), as NuZZ is not a Markov process. While there are ways of augmenting the state space such that \(\widetilde{\textbf{Z}}_t\) is a sub-component of a Markov process, we have found that in our case these approaches are somewhat opaque and algebraically complicated. Moreover, establishing the a priori existence of a stationary distribution for the numerical process is in fact not crucial for Monte Carlo purposes. With this in mind, we chose to pursue results directly in terms of ergodic averages along the trajectories of our processes. A byproduct of our approach is that we will be able to describe these ergodic averages as spatial averages against a probability measure \(\widetilde{\mu }\), which encapsulates the long-run behaviour of the process \(\widetilde{\textbf{Z}}_t\), without requiring the strict property of being an invariant measure for the process.

In this section we will use Proposition 4 to derive results about the stationary measures of \(\textbf{Y}_k, \widetilde{\textbf{Y}}_k, \textbf{U}_k\) and \(\widetilde{\textbf{U}}_k\). We will then define the interpolation operator \({\mathcal {J}}f\) and use it, together with the results on the stationary measures, to produce Theorem 1, the main result of this section.

Recall that P is defined as the Markov kernel corresponding to the skeleton chain of the exact Zig-Zag process. If the Zig-Zag process \(\textbf{Z}_t\) converges geometrically to its stationary distribution, then one generally expects the skeleton chain \(\textbf{Y}_k\) to converge geometrically as well. However, we do not know of existing results which guarantee this implication, so we state it as an assumption.

Assumption 4

(Wasserstein geometric ergodicity for \(\textbf{Y}_k\)) The transition kernel P is Wasserstein geometrically ergodic with constants \(\rho \in [0,1)\) and \(C\in (0,\infty )\).

Moreover, let us make the following additional assumption.

Assumption 5

(Wasserstein geometric ergodicity for \(\widetilde{\textbf{Y}}_k\)) The transition kernel \(\widetilde{P}\) is Wasserstein geometrically ergodic.

As \(\widetilde{P}\) relates to the skeleton chain rather than the continuous-time process, this assumption is not particularly strict. Note that, by Proposition 3, Assumptions 4 and 5 imply that \(\nu \) and \(\widetilde{\nu }\) are unique.

Remark 5

Wasserstein geometric ergodicity is in some ways a weaker condition than geometric ergodicity in total variation, as e.g. the Wasserstein distance does not require the existence of the densities of the random variables involved. With this in mind, we view Assumptions 4 and 5 to be reasonable for e.g. light-tailed targets, while acknowledging the difficulty of verifying these assumptions in practice. Indeed, the convergence behaviour of PDMPs and their skeleton chains is known to be a difficult topic, particularly in the presence of non-contractive dynamics, such as those of the Zig-Zag process. Rigorously establishing these assumptions is beyond the scope of this work.

Under these assumptions, the following bound on the invariant measures of the skeleton chains holds.

Proposition 5

(Wasserstein distance between skeleton chains) Let Assumptions 1–5 hold. Let \(\nu \) and \(\widetilde{\nu }\) be the unique invariant distributions of \(\textbf{Y}_k\) and \(\widetilde{\textbf{Y}}_k\). Then

\[ d_W\left( \nu , \widetilde{\nu }\right) \leqslant \frac{C \epsilon }{1 - \rho }, \]

where C and \(\rho \) are the constants defined in Assumption 4, and \(\epsilon \) is defined in Proposition 4.

Proof

See Appendix D. \(\square \)

Proposition 5 above already provides a bound on the Wasserstein distance, but only between the stationary distributions of the skeleton chains. In what follows we extend these results from the skeleton chains \(\textbf{Y}_k\) and \(\widetilde{\textbf{Y}}_k\) to the continuous-time processes \(\textbf{Z}_t\) and \(\widetilde{\textbf{Z}}_t\) by making use of the extended skeleton chains \(\textbf{U}_k\) and \(\widetilde{\textbf{U}}_k\) and the interpolation operator \({\mathcal {J}}\). We first state two technical results on the extended skeleton chains \(\textbf{U}_k\) and \(\widetilde{\textbf{U}}_k\).

Lemma 3

Let Assumptions 1–5 hold. The Markov chains \(\textbf{U}_k\) and \(\widetilde{\textbf{U}}_k\) each have a unique invariant measure.

Proof

See Appendix E. \(\square \)

We can now bound the distance between \(\textbf{U}_k\) and \(\widetilde{\textbf{U}}_k\) in terms of the chains \(\textbf{Y}_k\) and \(\widetilde{\textbf{Y}}_k\) as follows.

Proposition 6

(Wasserstein distance between \(\textbf{U}_k\) and \(\widetilde{\textbf{U}}_k\)) Let Assumptions 1–5 hold. Let \(\zeta \) and \(\widetilde{\zeta }\) be the invariant distributions of the extended chains \(\textbf{U}_k\) and \(\widetilde{\textbf{U}}_k\). Then:

Proof

See Appendix E. \(\square \)

The remainder of this section shows that if the numerical error is small, the ergodic averages obtained through the measure \(\widetilde{\mu }\) will be close to those obtained through the exact stationary measure \(\mu \). Moreover, the discrepancy will be bounded from above by a monotone function of the numerical tolerance.

We now define the interpolation operator \({\mathcal {J}}\), which plays a central role in our derivation, and prove two technical lemmas that will be used in Theorem 1.

Definition 2

(Interpolation operator) Let \((\textbf{x},\textbf{v})\) and \(\left( \textbf{x}',\textbf{v}'\right) \) be two consecutive points in the skeleton chain \(\textbf{Y}_k\). Define the interpolation operator \({\mathcal {J}}: C ( E ) \rightarrow C \left( E \times E \right) \) by its action on functions as

The significance of this operator is that given a pair of consecutive points in the skeleton chain and a function f, the interpolated function \({\mathcal {J}}f\) returns the integral of the function f over the period of time during which the continuous-time process moves from the first point to the second point. As such, it is a natural tool for expressing ergodic averages of the continuous-time processes in terms of their skeleton chains.
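The following sketch assumes that \({\mathcal {J}}\) takes the form suggested by the description above, \({\mathcal {J}}f\left( (\textbf{x},\textbf{v}), (\textbf{x}',\textbf{v}')\right) = \int _0^{\tau } f(\textbf{x} + s\textbf{v}, \textbf{v})\, \textrm{d}s\), where \(\tau \) is the time taken to travel from \(\textbf{x}\) to \(\textbf{x}'\) with velocity \(\textbf{v}\). It then forms the time average of f along a path as the ratio of the sums of \({\mathcal {J}}f\) and \({\mathcal {J}}\textbf{1}\) over consecutive skeleton points, which is how interpolation turns skeleton-chain sums into continuous-time ergodic averages. The function names are hypothetical.

```python
import numpy as np
from scipy.integrate import quad

def interpolate(f, y, y_next):
    """Assumed form of (J f)(y, y'): integral of f along the linear segment from y to y'.

    y = (x, v) and y_next = (x', v') are consecutive skeleton points, so x' = x + tau * v;
    tau is recovered from the first coordinate (v[0] != 0 is assumed)."""
    x, v = y
    x_next, _ = y_next
    tau = abs(x_next[0] - x[0]) / abs(v[0])
    value, _abserr = quad(lambda s: f(x + s * v, v), 0.0, tau)
    return value

def ergodic_average(f, skeleton):
    """Time average of f along the path: sum_k J f(Y_{k-1}, Y_k) / sum_k J 1(Y_{k-1}, Y_k)."""
    pairs = list(zip(skeleton[:-1], skeleton[1:]))
    numerator = sum(interpolate(f, y, y_next) for y, y_next in pairs)
    total_time = sum(interpolate(lambda x, v: 1.0, y, y_next) for y, y_next in pairs)
    return numerator / total_time

# 1-d path: 0 -> 1 with v = +1, then 1 -> 0 with v = -1.
skeleton = [(np.array([0.0]), np.array([1.0])),
            (np.array([1.0]), np.array([-1.0])),
            (np.array([0.0]), np.array([1.0]))]
print(ergodic_average(lambda x, v: x[0], skeleton))        # time average of x
print(ergodic_average(lambda x, v: x[0] ** 2, skeleton))   # time average of x^2
```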

To prove the subsequent results, we require two additional assumptions on the frequency at which events occur for both the exact and numerical processes.

Assumption 6

(Bounded event frequency for \(\textbf{Z}_t\)) The limiting empirical event frequency for the exact Zig-Zag process exists almost surely, and takes the value \(H \in \left( 0, \infty \right) \), i.e.

Assumption 7

(Bounded event frequency for \(\widetilde{\textbf{Z}}_t\)) The limiting empirical event frequency for the numerical Zig-Zag process exists almost surely, and takes the value \(\tilde{H} \in \left( 0, \infty \right) \), i.e.

Remark 6

Observing that the empirical event frequencies are identical to the empirical averages of the function \({\mathcal {J}} \textbf{1}\) along the skeleton chain, one sees that if \({\mathcal {J}} \textbf{1} \in L^1 \left( \zeta \right) \cap L^1 ( \widetilde{\zeta } )\), then a modification of the proof of Lemma 4 ensures that Assumptions 6 and 7 hold. For the exact process, this condition relates to integrability of the jump rates with respect to the invariant measure; for the approximate process, there is a similar but less rigorous interpretation with the same character. With this in mind, we instead choose to make explicit, interpretable assumptions on the empirical event frequencies, which we expect to hold in a majority of practical cases.

Under Assumptions 6 and 7, we can show the following:

Lemma 4

Let Assumptions 1–7 hold. Let f be such that \({\mathcal {J}}f \in L^1 \left( \zeta \right) \cap L^1 ( \widetilde{\zeta } )\). Then, the ergodic averages of f along the continuous processes \(\textbf{Z}_t\) and \(\widetilde{\textbf{Z}}_t\) both converge almost surely to deterministic limits. Moreover, these limits can be expressed in terms of the chains \(\textbf{U}_k\) and \(\widetilde{\textbf{U}}_k\) and their stationary distributions as

Proof

See Appendix E. \(\square \)

Remark 7

Note now that the right-hand side of Equation (36) can be rewritten as

where

where \(K \left( \cdot | \textbf{y}, \textbf{y}'\right) \) is the Markov kernel which selects a point uniformly at random from the line segment joining \(\textbf{y}\) and \(\textbf{y}'\), and \(\mu \) is obtained by integrating \(\textbf{y}, \textbf{y}'\) out of \(\varphi \).

In particular, it follows that \(\mu \) is the occupation (and stationary, in this case) measure of the exact process \(\textbf{Z}_t\) on the original state-space E. Likewise, we can define

with

in order to characterise the occupation measure of the approximating process.

We can now prove the main result of this section, which provides an error bound on the ergodic averages which are obtained from the paths of the continuous-time approximate process.

Theorem 1

(Bound on discrepancy of ergodic averages) Let Assumptions 1–7 hold. Let \(f: E \rightarrow {\mathbb {R}}\) be a bounded and Lipschitz function such that \({\mathcal {J}}f \in L^1 (\zeta ) \cap L^1 (\widetilde{\zeta })\). Then, the limit

exists almost surely, and takes a deterministic value. Additionally, there exists a probability measure \(\widetilde{\mu } = \widetilde{\mu }_{\varepsilon _{\textrm{Bre}}, \varepsilon _{\textrm{int}}}\) such that this limit is equal to \(\widetilde{\mu } (f)\), and the approximation error relative to the exact process satisfies

where \(\mu \) is the invariant distribution of the exact Zig-Zag Process.

Proof

See Appendix E. \(\square \)

Intuitively, Theorem 1 shows that despite \(\widetilde{\textbf{Z}}_t\) being non-Markovian, there exists a probability measure that describes its sojourn in the state-space, and that integration against this measure will reproduce the ergodic averages of the process.

The second, and perhaps most important, consequence of Theorem 1 is a bound on the difference between ergodic averages of the exact process \(\textbf{Z}_t\) and those of the approximate process \(\widetilde{\textbf{Z}}_t\); this bound depends on how close the skeleton chains \(\left( \textbf{Y}_k, \widetilde{\textbf{Y}}_k\right) \) are, via the distance between the invariant measures of the extended skeleton chains \(\left( \textbf{U}_k, \widetilde{\textbf{U}}_k\right) \). Hence ergodic averages computed with the occupation measure \(\widetilde{\mu }\) of the numerical process \(\widetilde{\textbf{Z}}_t\) will be close to those computed with the stationary measure \(\mu \) of the exact process, and as the numerical tolerances \(\varepsilon _{\textrm{Bre}}\) and \(\varepsilon _{\textrm{int}}\) go to zero, the two ergodic averages coincide.

The following corollary is a somewhat restrictive but more explicit summary of the results presented in this section.

Corollary 2

Let \(f: E \rightarrow {\mathbb {R}}\) be a bounded and Lipschitz function with compact support. Let Assumptions 1–7 hold. Then by Theorem 1 and Propositions 4, 5 and 6, the discrepancy between ergodic averages from the Zig-Zag process, \(\textbf{Z}_t\), and the ergodic averages from NuZZ, \(\widetilde{\textbf{Z}}_t\), can be bounded as

with

5 Numerical experiments

We now study how the Zig-Zag process compares to other popular MCMC algorithms on test problems with features that are often found in practice. These features include high linear correlation, different length scales, high dimension, fat tails, and position-dependent correlation structure. Our results are shown in Fig. 2.

In the following sections, we compare NuZZ against three popular algorithms. In the figures that follow, the Random Walk Metropolis method is represented by the black \(\bigcirc \) line labelled RWM, Hamiltonian Monte Carlo by the green \(+\) line labelled HMC, and the simplified Manifold MALA (Girolami et al. 2011) with SoftAbs metric (Betancourt 2013) by the red \(\bigtriangleup \) line labelled sMMALA. We also include a Direct Monte Carlo sample from the target (blue \(\triangledown \) line) as a reference. Every example we examine has been chosen so that a direct Monte Carlo sample is easy to obtain and the marginal distributions are easily accessible.

For each example, we test two versions of NuZZ. The yellow \(\Diamond \) line labelled NuZZroot represents the performance of NuZZ as if only one gradient evaluation were necessary to obtain each \(\tau \), which would be the performance of the algorithm if an analytical solution to Eq. (9) were available. The yellow line therefore represents a lower bound on the computational cost, i.e. the best achievable performance, of NuZZ, and indeed of any numerical approximation of the Zig-Zag process. The blue \(\times \) line labelled NuZZepoch represents the performance of the NuZZ algorithm when every gradient evaluation necessary to obtain \(\tau \) is counted. In this context, the current implementation of NuZZ is expensive, since for each event time \(\tau \), \(\Lambda (\textbf{x}(s),\textbf{v})\) has to be evaluated at every node of the numerical integral, in every root-finding iteration.

Lastly, the icdfZZ algorithm mentioned in Sect. 5.3 is the exact process where \(\tau \) is obtained via cdf inversion, while the NuZZtol algorithm from Sect. 5.7 is just the NuZZepoch run with increased tolerances, as explained below.

5.1 Tuning

The RWM, MALA and HMC algorithms used in this work are tuned to achieve the optimal acceptance rates of roughly 25%, 50% (Roberts and Rosenthal 2001) and 65% (Beskos et al. 2013), respectively, with exceptions described in each relevant section.

We run all the NuZZ-based algorithms with absolute tolerance of \(\varepsilon _{\textrm{int}} = 10^{-10}\) for the integration routine, and \(\varepsilon _{\textrm{Bre}} = 10^{-10}\) for Brent’s method.

The functions \(\gamma _i(\textbf{x},\textbf{v})\) can be set to zero if the target distribution satisfies certain properties (Bierkens et al. 2019b), or otherwise they can be made small enough that they have a negligible effect on the dynamics. Small values of \(\gamma _i\) reduce diffusivity and guarantee better results in terms of asymptotic variance (Andrieu and Livingstone 2021a). As the effect of setting \(\Gamma = 0\) on a numerical approximation to the Zig-Zag process is unknown, we set \(\Gamma = 0.001\) in all of our examples, an empirical value small enough (in our case) that its impact on the switching rate is negligible.

The mixing of Zig-Zag-based algorithms is affected by the velocity vector \(\textbf{v}\) in the same way the mixing of Metropolis-based MCMC algorithms is influenced by the covariance of the transition kernel (or the momentum variable). Tuning \(\textbf{v}_{\textrm{init}}\) is discussed in the relevant examples in Sect. 5.4 and 5.5. Wherever possible, we used the values of the theoretical covariance matrix to adapt the proposal covariance, mass matrix, and velocity vectors of all the algorithms. We leave the study of how fast the methods adapt to each example using partial MCMC samples for future work.

5.2 Performance comparison

We chose as a measure of algorithm performance the largest Kolmogorov-Smirnov (KS) distance between the MCMC sample and true distribution amongst all the marginal distributions. The KS distance is particularly well suited to our situation as the marginal distributions of our test problems are known or easily obtainable. We chose not to utilise the Effective Sample Size (ESS) or other ESS-based metrics, as NuZZ is not an exact algorithm and a high ESS value does not necessarily reflect the quality of the sample (NuZZ with very high tolerances can have excellent ESS but be arbitrarily far from the target distribution). Moreover, the KS distance is still well defined even if the moments of the target are infinite as in Sect. 5.7, unlike the ESS. Choosing the KS distance also reflects the requirements of practical Bayesian statistics, where each parameter will typically have a point estimate and credible region reported, meaning that a supremum distance such as the KS is appropriate in assessing the worst-case accuracy of such figures.

Formally, the Kolmogorov-Smirnov distance for the ith parameter is defined as

\[ D_i = \sup _{x \in {\mathbb {R}}} \left| F_i(x) - \widetilde{F}_i(x) \right| , \]

i.e. the supremum of the absolute difference between the exact cdf, \(F_i\), and the empirical cdf constructed from the MCMC sample, \(\widetilde{F}_i\). The KS distance also has the advantage of being relatively easy to calculate, and it is an integral probability metric with good theoretical properties (Sriperumbudur et al. 2009). The largest KS distance on the marginal distributions, which we refer to as the “D-statistic”, is simply

\[ D = \max _{i = 1, \ldots , d} D_i. \]

Since the empirical cdf is calculated via Monte Carlo simulation, we ran each algorithm 40 times and reported the mean and two-sided \(95\%\) region of the KS distance in plots such as Fig. 2.
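The D-statistic is straightforward to compute. Below is a minimal Python sketch, assuming the MCMC output is stored as an \((n, d)\) NumPy array and that each exact marginal cdf is available as a vectorised function; the names `ks_distance` and `d_statistic` are our own illustrative choices, not part of any NuZZ implementation. Because \(\widetilde{F}_i\) is a step function and \(F_i\) is continuous, the supremum is attained immediately before or after one of the jumps of \(\widetilde{F}_i\), so only \(2n\) comparisons are needed per marginal.

```python
import math

import numpy as np


def ks_distance(sample, cdf):
    """KS distance sup_x |F(x) - F_n(x)| between a continuous cdf F and the empirical cdf F_n."""
    x = np.sort(np.asarray(sample, dtype=float))
    n = x.size
    f = cdf(x)
    # F_n jumps from (k - 1)/n to k/n at the k-th order statistic, so the
    # supremum is attained just before or just after one of these jumps.
    above = np.arange(1, n + 1) / n - f
    below = f - np.arange(n) / n
    return float(max(above.max(), below.max()))


def d_statistic(samples, cdfs):
    """Largest marginal KS distance over the d columns of an (n, d) sample."""
    samples = np.asarray(samples, dtype=float)
    return max(ks_distance(samples[:, i], cdfs[i]) for i in range(samples.shape[1]))


# Exact cdf of a standard normal marginal, vectorised over NumPy arrays.
std_normal_cdf = np.vectorize(lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0))))

rng = np.random.default_rng(0)
draws = rng.standard_normal((10_000, 10))  # i.i.d. stand-in for an MCMC sample
print(d_statistic(draws, [std_normal_cdf] * 10))

# 40 independent repeats: report the mean and a two-sided 95% band.
repeats = [d_statistic(rng.standard_normal((1_000, 10)), [std_normal_cdf] * 10) for _ in range(40)]
print(np.mean(repeats), np.percentile(repeats, [2.5, 97.5]))
```

Here i.i.d. draws merely stand in for MCMC runs; the last two lines mimic how repeated runs are summarised by a mean and a two-sided 95% band.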

In order to compare the algorithms studied in this work meaningfully, we gave each of them a fixed computational budget. We defined one epoch as “a complete passage of the algorithm through the whole dataset”. For each model, the budget was \(6\times 10^6\) epochs, and we counted one epoch every time the full gradient or one likelihood evaluation was completed. For sMMALA, we ignored the cost of computing the Hessian matrix, since this cost is highly problem-dependent.

As each algorithm that we studied has a different computational cost, each of them returns a sample of a different size within the same budget. In order to compare the D-statistic meaningfully across samples of different sizes, we divided the sample from each algorithm into 40 equal batches, which is large enough to give a detailed account of convergence but not too large for our computational resources. We then produced the lines in Fig. 2 by calculating the D-statistic on subsamples formed by an increasing number of batches, with the first value of each line calculated on one batch only, and the last value of each line calculated on the whole sample. The Zig-Zag-based algorithms that we study in this work return a trajectory which is represented by a series of switching points. These trajectories then have to be uniformly subsampled to obtain a sample from the distribution of interest \(\pi (\textbf{x})\). In our experiments we extracted \(6 \times 10^6\) samples from each trajectory.
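The subsampling and batching steps can be sketched as follows in Python, assuming (hypothetically) that a trajectory is stored as arrays of switching times, switching points and post-switch velocities, and that uniform subsampling means evaluating the piecewise-linear path at equally spaced times. The helper names are illustrative only. The toy trajectory bounces between \(-1\) and \(1\) at unit speed, so its time-average is the uniform distribution on \([-1, 1]\).

```python
import numpy as np


def subsample_trajectory(times, positions, velocities, n):
    """Evaluate a piecewise-linear trajectory at n equally spaced times in [0, T).

    times[k] and positions[k] are the k-th switching time and point, and
    velocities[k] is the velocity on the segment [times[k], times[k+1]).
    """
    grid = np.linspace(0.0, times[-1], n, endpoint=False)
    k = np.searchsorted(times, grid, side="right") - 1
    return positions[k] + velocities[k] * (grid - times[k])[:, None]


def ks_distance(sample, cdf):
    """KS distance between the empirical cdf of `sample` and a continuous cdf."""
    x = np.sort(sample)
    f = cdf(x)
    n = x.size
    return max((np.arange(1, n + 1) / n - f).max(), (f - np.arange(n) / n).max())


def d_curve(samples, cdfs, n_batches=40):
    """D-statistic computed on the first 1, 2, ..., n_batches equal batches."""
    size = samples.shape[0] // n_batches
    return np.array([
        max(ks_distance(samples[: m * size, i], cdfs[i]) for i in range(samples.shape[1]))
        for m in range(1, n_batches + 1)
    ])


# Toy trajectory: unit speed, bouncing between -1 and 1, so the target is U(-1, 1).
n_segments = 100
idx = np.arange(n_segments + 1)
times = 2.0 * idx
positions = np.where(idx % 2 == 0, -1.0, 1.0)[:, None]
velocities = np.where(idx % 2 == 0, 1.0, -1.0)[:, None]

samples = subsample_trajectory(times, positions, velocities, n=4_000)
curve = d_curve(samples, [lambda x: (x + 1.0) / 2.0])
print(curve[0], curve[-1])
```

The first value of `curve` uses one batch and the last uses the whole subsample, mirroring how each line in the convergence plots is built.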

5.3 Standard normal

The first model we study is a 10-dimensional standard normal distribution, which has none of the challenging features discussed later, and as such provides a baseline for algorithm performance. For this model the switching times of the Zig-Zag Sampler can be obtained analytically, which allows us to compare the performance of the Zig-Zag Sampler with cdf inversion (pink \(\boxtimes \) line labelled icdfZZ in Fig. 2a) with that of the NuZZroot algorithm. As one would expect, direct sampling from the target density shows the fastest convergence.

The three popular algorithms RWM, sMMALA and HMC are all quite close to each other in terms of performance. HMC, tuned with an identity mass matrix, 3 leapfrog steps and step size 0.6 to achieve alternate autocorrelation, performs slightly worse than sMMALA. Note that for normal distributions the Hessian is constant, so there is no difference between sMMALA and standard MALA. The RWM converges quite well, but falls behind sMMALA and HMC.

The Zig-Zag-based algorithms are represented by the blue \(\times \), yellow \(\Diamond \), and pink \(\boxtimes \) lines. As expected, NuZZepoch has the slowest convergence, as root finding is expensive in terms of epochs. This leaves the algorithm with a budget of around \(8\times 10^3\) switching points, which is not sufficient to explore the density as well as the other algorithms do. If this algorithm represents a lower bound on the performance of numerically approximated Zig-Zag Samplers, the yellow \(\Diamond \) line of NuZZroot represents the upper bound; it significantly outperforms every other MCMC algorithm tested.

Of particular interest is the convergence of the analytical version of the Zig-Zag Sampler (pink \(\boxtimes \) line labelled icdfZZ) on this model. The algorithm’s performance is practically indistinguishable from NuZZroot, providing further evidence supporting the discussion on numerical errors in this work.
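For the standard normal, the potential is \(U(\textbf{x}) = \Vert \textbf{x}\Vert _2^2/2\), so along a segment \(\textbf{x} + \textbf{v}t\) the \(i\)th switching rate is \(\max (0, a_i + b_i t)\) with \(a_i = v_i x_i\) and \(b_i = v_i^2\). Setting its integral equal to \(E \sim \textrm{Exp}(1)\) and inverting gives \(\tau _i = \big (-a_i + \sqrt{\max (a_i,0)^2 + 2 b_i E}\big )/b_i\), which is what makes a cdf-inversion sampler such as icdfZZ possible here. The following Python sketch of such a sampler assumes unit velocities, a start at the origin and equally spaced subsampling; the function names are hypothetical and this is not the code used to produce Fig. 2a.

```python
import numpy as np


def icdf_zigzag_std_normal(d, n_events, rng):
    """Zig-Zag sampler for N(0, I_d) with switching times drawn by cdf inversion."""
    x = np.zeros(d)
    v = np.ones(d)  # unit velocities, so ||v||_2 = sqrt(d)
    t = 0.0
    times, positions, velocities = [t], [x.copy()], [v.copy()]
    for _ in range(n_events):
        a = v * x  # the i-th rate along x + v s is max(0, a_i + b_i s)
        b = v * v
        e = rng.exponential(size=d)
        # Solve int_0^tau max(0, a + b s) ds = e for tau (valid for either sign of a).
        tau = (-a + np.sqrt(np.maximum(a, 0.0) ** 2 + 2.0 * b * e)) / b
        i = int(np.argmin(tau))  # the first clock to ring decides which component flips
        t += tau[i]
        x = x + v * tau[i]
        v[i] = -v[i]
        times.append(t)
        positions.append(x.copy())
        velocities.append(v.copy())
    return np.array(times), np.array(positions), np.array(velocities)


def subsample_trajectory(times, positions, velocities, n):
    """Evaluate the piecewise-linear path at n equally spaced times in [0, T)."""
    grid = np.linspace(0.0, times[-1], n, endpoint=False)
    k = np.searchsorted(times, grid, side="right") - 1
    return positions[k] + velocities[k] * (grid - times[k])[:, None]


rng = np.random.default_rng(1)
trajectory = icdf_zigzag_std_normal(d=10, n_events=50_000, rng=rng)
samples = subsample_trajectory(*trajectory, n=50_000)
print(samples.mean(axis=0).round(2), samples.var(axis=0).round(2))
```

Redrawing all ten exponential clocks after every event is valid because each clock is a Poisson process whose rate is known along the current segment.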

5.4 Different length scales

Having measured the performance of the algorithms on a standard normal, we now proceed to introduce the features of interest which commonly appear in Bayesian inverse problems, starting with variables on different length scales. Some algorithms struggle on such targets because the length of their trajectories is tuned to one particular scale. The trajectory will then be too long for variables with smaller scales, doubling back and wasting computational resources, and too short for variables with larger scales, giving a more correlated sample.

A common test problem with this feature is Neal’s Normal (Neal 2010), an uncorrelated normal distribution (we take the dimension to be 10, for consistency with the other test problems) with variances \(\left[ 1^2,2^2, \ldots ,10^2\right] \). The results are shown in Fig. 2b.

Tuning the components of the velocity vector \(\textbf{v}\) of Zig-Zag-based processes helps mixing for this problem. The simplest choice for the initial velocities is \(v_i=1, i\in \{1,\ldots ,d\}\); however, it makes sense to assign different velocities to components with different scales. When we tune the velocities of the NuZZ algorithm, we use the theoretical standard deviation \(\sigma _i\) of each variable and normalise the result to have fixed speed \(\Vert \textbf{v} \Vert _2 = \sqrt{d}\), as in the original process. Thus the individual values of the \(v_i\) change, but the overall speed of the process remains the same. Explicitly, given standard deviations \(\sigma _i\), the velocity update we use is

\[ v_i = \sqrt{d}\, \frac{\sigma _i}{\sqrt{\sum _{j=1}^{d} \sigma _j^2}}, \qquad i \in \{1,\ldots ,d\}. \]

The velocities can also be tuned adaptively (Roberts and Rosenthal 2007) using the history of the process to calculate standard deviations \(\sigma _i\) for each variable on the basis of observed sample properties during the adaptation phase. Our experiments suggest that the adaptively estimated velocities converge quickly to their final value, as they only need information about the relative scale of the components.
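A minimal Python sketch of the velocity tuning described above (velocities proportional to the \(\sigma _i\), rescaled to \(\Vert \textbf{v}\Vert _2 = \sqrt{d}\)), assuming NumPy; `tuned_velocities` is a hypothetical helper, and in the adaptive variant i.i.d. draws merely stand in for the output of an adaptation phase. Because of the rescaling, only the ratios of the \(\sigma _i\) matter.

```python
import numpy as np


def tuned_velocities(sigma):
    """Velocities proportional to the marginal scales, rescaled so that ||v||_2 = sqrt(d)."""
    sigma = np.asarray(sigma, dtype=float)
    return np.sqrt(sigma.size) * sigma / np.linalg.norm(sigma)


# Fixed tuning from the theoretical standard deviations of Neal's Normal.
sigma_true = np.arange(1.0, 11.0)
v_fixed = tuned_velocities(sigma_true)

# Adaptive tuning: estimate the scales from an (n, d) history of the process.
# Here i.i.d. draws stand in for the output of an adaptation phase.
rng = np.random.default_rng(2)
history = rng.standard_normal((5_000, 10)) * sigma_true
v_adapted = tuned_velocities(history.std(axis=0))

print(np.round(v_fixed, 3))
print(np.round(v_adapted, 3))
```

With equal scales the helper returns \(v_i = 1\) for every component, recovering the untuned process.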

As before, direct sampling is the most efficient method, while NuZZepoch is too expensive to provide a good sample. NuZZroot, on the other hand, performs well, surpassing the Metropolis-based algorithms. RWM and sMMALA again perform at a similar level, as they apply the same kind of global conditioning to their proposals. HMC, run with step size 0.6, 3 leapfrog steps and mass matrix \(M=\Sigma ^{-1}\), outperforms both of them.

5.5 Linear correlation

Another common issue that arises in practice is posteriors whose variables have strong linear correlation. In this section we compare the performance of the algorithms on a 10-dimensional multivariate normal with covariance matrix

where we pick \(\alpha = 0.9\). The results can be seen in Fig. 2c.

Unsurprisingly, the performance of RWM and sMMALA is quite similar, as they both use rotation matrices to improve their proposals. HMC was tuned with mass matrix \(M=\Sigma ^{-1}\), step size 0.6 and 3 leapfrog steps, giving a trajectory long enough to achieve alternate autocorrelation. HMC outperforms both RWM and sMMALA, although the difference is not substantial. This is because MALA is theoretically equivalent to HMC with one leapfrog step, so an HMC trajectory consisting of only three leapfrog steps can be expected to yield similar results.
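The equivalence between MALA and single-step HMC invoked above can be checked numerically. This Python sketch assumes an identity mass matrix and a Gaussian target with an arbitrary, hypothetical positive-definite precision matrix (not the covariance used in this section); it shows that one leapfrog step of size \(\varepsilon \) from \((\textbf{x}, \textbf{p})\) lands exactly on the MALA proposal \(\textbf{x} + (h/2)\nabla \log \pi (\textbf{x}) + \sqrt{h}\,\textbf{p}\) with \(h = \varepsilon ^2\). The Metropolis-Hastings acceptance probabilities of the two schemes also agree, but that part is not checked here.

```python
import numpy as np


def grad_log_pi(x, precision):
    """Gradient of the log density of N(0, Sigma), with precision = Sigma^{-1}."""
    return -precision @ x


def leapfrog(x, p, eps, n_steps, precision):
    """Leapfrog integrator with identity mass matrix."""
    x, p = x.copy(), p.copy()
    p = p + 0.5 * eps * grad_log_pi(x, precision)  # initial half step in momentum
    for step in range(n_steps):
        x = x + eps * p
        g = grad_log_pi(x, precision)
        # Full momentum steps in between, a half step after the last position update.
        p = p + (eps if step < n_steps - 1 else 0.5 * eps) * g
    return x, p


def mala_proposal(x, noise, h, precision):
    """MALA proposal x + (h / 2) grad log pi(x) + sqrt(h) * noise."""
    return x + 0.5 * h * grad_log_pi(x, precision) + np.sqrt(h) * noise


rng = np.random.default_rng(3)
d, eps = 10, 0.6
a = rng.standard_normal((d, d))
precision = a @ a.T / d + np.eye(d)  # arbitrary SPD precision, for illustration only
x0 = rng.standard_normal(d)
p0 = rng.standard_normal(d)  # the same Gaussian draw serves as HMC momentum and MALA noise

x_hmc, _ = leapfrog(x0, p0, eps, n_steps=1, precision=precision)
x_mala = mala_proposal(x0, p0, h=eps**2, precision=precision)
print(np.max(np.abs(x_hmc - x_mala)))
```

With more than one leapfrog step the two schemes diverge, which is why three steps already give HMC a modest edge.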

The Zig-Zag algorithms do not perform well in this setting: NuZZroot performs worse than a well-tuned RWM. While the RWM can take advantage of the whole matrix \(\Sigma \), the strategy for tuning \(\textbf{v}\) applied in the previous section only uses the marginal standard deviations, and hence ignores information on the correlation between components. The poor performance is largely due to the fact that the process can only travel in certain directions, and if these directions are not aligned with a ridge in the target density, the process does not mix well. This highlights the importance of the work in Bertazzi and Bierkens (2020), where the authors obtain results on using the whole covariance matrix of the target to tune the dynamics of the process and achieve better mixing. The theoretical linearly-correlated target considered here is the closest example to the real-data example in the Supplementary Material, Section S.2, in terms of posterior properties and hence algorithm performance.

5.6 High dimension

The next example we study is a standard normal in 100 dimensions. Some algorithms, such as RWM, are known to scale particularly poorly with dimension, while HMC is better adapted to this scenario. For this particular experiment, we reduced the computational budget to \(1 \times 10^6\) epochs, to be able to run the repeats in reasonable time. As a consequence, the KS distance is generally one order of magnitude larger than for the other models. The results of our tests are shown in Fig. 2d.

The performance of HMC and sMMALA is quite similar, suggesting that the use of gradient information has a positive effect on their performance on this problem. Conversely, the RWM performs significantly worse. No preconditioning was used for RWM, MALA or HMC, and HMC was tuned to have step size 0.73 and 3 leapfrog steps (\(\approx d^{1/4}\) for \(d = 100\), following Beskos et al. (2013)). The performance of HMC and sMMALA remains close because HMC needs only 3 leapfrog steps to reach alternate autocorrelation. Moreover, it should be pointed out that even though MALA is theoretically equivalent to HMC with one leapfrog step, in practice, due to the leapfrog scheme, one HMC iteration with one leapfrog step is more expensive than one MALA iteration.

Interestingly, NuZZroot (yellow \(\Diamond \) line) converges more slowly than sMMALA and HMC, suggesting that the Zig-Zag dynamics does not scale as well in high dimension, although it is still superior to RWM.

5.7 Fat tails

In this example we look at how the algorithms perform when the target has fat tails. This feature is particularly common in particle physics, where posteriors are often Cauchy-like, with power-law decay in their tails. The computational budget is again set to \(6\times 10^6\) epochs, and the target distribution is a 10-dimensional Student-t with one degree of freedom, so that none of its moments of order one or higher is finite.

In Fig. 2e, the curves are tightly packed with overlapping confidence intervals. RWM, HMC and sMMALA display similar convergence speeds, with sMMALA being the fastest. This may be because the Hessian is not constant in this example and can therefore provide helpful local information to the dynamics. HMC was tuned to take 20 leapfrog steps with a step size of 3. The mass matrix was taken to be the identity matrix, as there is no correlation in this example and the marginals are all equal. As the moments of the distribution are infinite, we used a fixed identity matrix for the proposal.

The Zig-Zag-based algorithms perform quite well. The blue \(\times \) line representing NuZZepoch is close to the others, and the yellow \(\Diamond \) line corresponding to NuZZroot is the second lowest on the chart, outperformed only by direct sampling of the density. The good performance of the Zig-Zag-based algorithms can be attributed to the process making long excursions into the tails more frequently than the other methods, because the gradient of the potential is small away from the mode.
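The effect of the small gradient in the tails can be made concrete in one dimension. For a standard Cauchy target, \(U(x) = \log (1+x^2)\) and the integrated switching rate has a closed form: a process leaving the mode switches at distance \(Y = \sqrt{e^{E}-1}\), \(E \sim \textrm{Exp}(1)\), so that \(P(Y > y) = 1/(1+y^2)\), and since the rate is zero while moving towards the mode, every later excursion has the same law. Single straight excursions far into the tails are therefore common. The Python sketch below is a simplified 1-d stand-in for the 10-dimensional Student-t of Fig. 2e, with function names of our own choosing; it implements this exact sampler and compares its time-averaged tail mass with the exact value.

```python
import math

import numpy as np


def zigzag_cauchy_1d(n_events, rng, x0=0.0, v0=1.0):
    """Exact 1-d Zig-Zag sampler for a standard Cauchy target, U(x) = log(1 + x^2)."""
    x, v, t = x0, v0, 0.0
    times, positions, velocities = [t], [x], [v]
    for e in rng.exponential(size=n_events):
        s = v * x  # position measured along the direction of travel
        # The rate max(0, 2u / (1 + u^2)) integrates to log(1 + y^2) - log(1 + max(s, 0)^2)
        # between s and y; equating this to e gives the next switching point y.
        y = math.sqrt((1.0 + max(s, 0.0) ** 2) * math.exp(e) - 1.0)
        t += y - s  # unit speed, so elapsed time equals distance travelled
        x, v = v * y, -v
        times.append(t)
        positions.append(x)
        velocities.append(v)
    return np.array(times), np.array(positions), np.array(velocities)


def subsample_1d(times, positions, velocities, n):
    """Evaluate the piecewise-linear path at n equally spaced times in [0, T)."""
    grid = np.linspace(0.0, times[-1], n, endpoint=False)
    k = np.searchsorted(times, grid, side="right") - 1
    return positions[k] + velocities[k] * (grid - times[k])


rng = np.random.default_rng(4)
traj = zigzag_cauchy_1d(100_000, rng)
xs = subsample_1d(*traj, n=100_000)

# KS distance to the exact Cauchy cdf and time-averaged mass beyond |x| = 10.
f = 0.5 + np.arctan(np.sort(xs)) / np.pi
n = xs.size
ks = max((np.arange(1, n + 1) / n - f).max(), (f - np.arange(n) / n).max())
tail = np.mean(np.abs(xs) > 10.0)
print(ks, tail, 1.0 - 2.0 * np.arctan(10.0) / np.pi)
```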

In the Supplementary Material, Section S.3, we report numerical experiments to find the largest numerical tolerances for which the difference in the posterior sample is not detectable in this example. While one iteration of the adaptive integration routine is usually enough to fall within the tolerance \(\varepsilon _{\textrm{int}}\), which prevents us from detecting its effect, we found that in this example the numerical error overtakes the Monte Carlo error at about \(\varepsilon _{\textrm{Bre}} = 10^{-2}\). The NuZZtol process simply corresponds to the NuZZepoch process run with an increased tolerance of \(\varepsilon _{\textrm{Bre}} = 10^{-2}\).

5.8 Non-linear correlation

We move on to study how the algorithms behave when the correlation structure is curved (non-constant, non-linear). A distribution belonging to this class cannot be adequately described by a global covariance matrix, as global conditioning does not capture the local characteristics of the target. Such distributions commonly look like curved ridges, or ‘bananas’, in the state space. These shapes are common in many fields of science, often in hierarchical models or in models affected by some sort of degeneracy in their parameter space (e.g. House et al. (2016), The Dark Energy Survey Collaboration et al. (2017)).

In this section we will use the Hybrid Rosenbrock distribution from Pagani et al. (2021), and more specifically the distribution

where \(\textbf{x} \in {\mathbb {R}}^d\), \(a=2.5\), \(b=50\). The parameters a, b have been chosen so that the shape of the distribution does not pose an extreme challenge to the algorithms we are studying. The contours of this distribution generally look like those shown in Fig. 1. The results of our experiments are shown in Fig. 2f.

It should be noted that the marginals of the Hybrid Rosenbrock are not known analytically, but it is possible to sample from them directly. To produce convergence plots based on the D-statistic, we built empirical cumulative distribution functions for each marginal of our target from \(2\times 10^{10}\) such samples. The Monte Carlo error introduced into our analysis by this step is therefore negligible.
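When the exact marginal cdfs are unavailable, the D-statistic can be computed against empirical cdfs built from a large reference sample, as described above. A minimal Python sketch, assuming reference draws are already available as an \((m, d)\) array; standard normal draws stand in for direct samples from the Hybrid Rosenbrock, and `ks_two_sample` and `d_statistic_reference` are hypothetical helpers. Both cdfs are right-continuous step functions, so evaluating them at every jump point of either sample finds the supremum exactly.

```python
import numpy as np


def ks_two_sample(sample, reference):
    """sup_x |F_sample(x) - F_ref(x)|, where both cdfs are empirical."""
    sample = np.sort(np.asarray(sample, dtype=float))
    reference = np.sort(np.asarray(reference, dtype=float))
    # Both step functions are constant between consecutive jump points,
    # so checking every jump point of either sample is sufficient.
    pts = np.concatenate([sample, reference])
    f_sample = np.searchsorted(sample, pts, side="right") / sample.size
    f_ref = np.searchsorted(reference, pts, side="right") / reference.size
    return float(np.max(np.abs(f_sample - f_ref)))


def d_statistic_reference(samples, reference):
    """Largest marginal two-sample KS distance between (n, d) and (m, d) arrays."""
    return max(ks_two_sample(samples[:, i], reference[:, i]) for i in range(samples.shape[1]))


rng = np.random.default_rng(5)
reference = rng.standard_normal((200_000, 10))  # stand-in for direct samples of the target
mcmc_like = rng.standard_normal((5_000, 10))    # stand-in for an MCMC sample
print(d_statistic_reference(mcmc_like, reference))
```

For a reference sample as large as \(2\times 10^{10}\) one would sort it once and reuse it, rather than re-sorting on every call as this sketch does.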

Even though the curvature is not extreme, the RWM performs significantly worse than the other standard MCMC algorithms. The sMMALA algorithm performs well, as it is designed to deal with this class of densities, and its advantage over the other algorithms would be larger if the target were narrower and had longer tails. HMC works quite well, even though it is difficult to tune for this target. HMC was run with step size 0.03, 20 leapfrog steps and identity mass, aiming for an acceptance rate of 80% (Betancourt et al. 2015). This tuning was obtained by repeating the analysis with several different combinations of step size and number of leapfrog steps; it corresponds to the best ESS we were able to obtain, with a speed of convergence close to that of sMMALA.