Abstract

We construct a new class of efficient Monte Carlo methods based on continuous-time piecewise deterministic Markov processes (PDMPs) suitable for inference in high dimensional sparse models, i.e. models for which there is prior knowledge that many coordinates are likely to be exactly 0. This is achieved with the fairly simple idea of endowing existing PDMP samplers with “sticky” coordinate axes, coordinate planes etc. Upon hitting those subspaces, an event is triggered during which the process sticks to the subspace, this way spending some time in a sub-model. This results in non-reversible jumps between different (sub-)models. While we show that PDMP samplers in general can be made sticky, we mainly focus on the Zig-Zag sampler. Compared to the Gibbs sampler for variable selection, we heuristically derive favourable dependence of the Sticky Zig-Zag sampler on dimension and data size. The computational efficiency of the Sticky Zig-Zag sampler is further established through numerical experiments where both the sample size and the dimension of the parameter space are large.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Overview

Consider the problem of simulating from a measure \(\mu \) on \(\mathbb {R}^d\) that is a mixture of atomic and continuous components. A key application is Bayesian inference for sparse problems and variable selection under a spike-and-slab prior \(\mu _0\) of the form

Here, \(w_i \in [0,1]\), \(\pi _{1}, \pi _{2},\ldots ,\pi _d\) are densities with respect to the Lebesgue measure referred to as slabs and \(\delta _0\) denotes the Dirac measure at zero. For sampling from \(\mu \), it is common to construct and simulate a Markov process with \(\mu \) as invariant measure. Routinely used samplers such as the Hamiltonian Monte Carlo sampler (Duane et al. 1987) cannot be applied directly due to the degenerate nature of \(\mu \). We show that “ordinary” samplers based on piecewise deterministic Markov processes (PDMPs) can be adapted to sample from \(\mu \) by introducing stickiness.

In piecewise deterministic Markov processes, the state space is augmented by adding to each coordinate \(x_i\) a velocity component \(v_i\), doubling the dimension of the state space. They are characterized by piecewise deterministic dynamics between event times, where event times correspond to changes of velocities. PDMPs have received recent attention because they have good mixing properties (they are non-reversible and have ‘momentum’, see e.g. Andrieu and Livingstone 2019), they take gradient information into account and they are attractive in Bayesian inference scenarios with a large number of observations because they allow for subsampling of the observations without creating bias (Bierkens et al. 2019a, 2020).

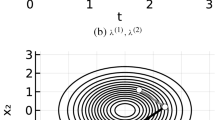

We introduce “sticking event times”, which occur every time a coordinate of the process state hits 0. At such a time that particular component of the state freezes for an independent exponentially distributed time with a specifically chosen rate equal to \(|v_i|\kappa _i\), for some \(\kappa _i>0\) which depends on \(\mu \). This corresponds to temporarily setting the marginal velocity to 0: the process “sticks to (or freezes at) 0” in that coordinate, while the other coordinates keep moving, as long as they are not stuck themselves. After the exponentially distributed time the coordinate moves again with its original velocity, see Fig. 1 for an illustration of the sticky version of the Zig-Zag sampler (Bierkens et al. 2019a). By this we mean that the dynamics of a ordinary PDMP are adjusted such that the process can spend a positive amount of time at the origin, at the coordinate axes and at the coordinate (hyper-)planes by sticking to 0 in each coordinate for a random time span whenever the process hits 0 in that particular coordinate. By restoring the original velocity of each coordinate after sticking at 0, we effectively generate non-reversible jumps between states with different sets of non-zero coordinates. In the Bayesian context this corresponds to having non-reversible jumps between models of varying dimensionality.

This allows us to construct a piecewise deterministic process that has a pre-specified measure \(\mu \) as invariant measure, which we assume to be of the form

for some differentiable function \(\Psi \), normalising constant \(\, C_\mu > 0\) and positive parameters \(\kappa _1,\kappa _2,\ldots ,\kappa _d\). Here the Dirac masses are located at 0, but generalizations are straightforward. The resulting samplers and processes are referred to as sticky samplers and sticky piecewise deterministic Markov processes respectively. The proportionality constant \(C_\mu \) is assumed to be unknown while \((\kappa _i)_{i=1,\ldots ,d}\) are known. This is a natural assumption; suppose a statistical model with parameter x and log-likelihood \(\ell (x)\) (notationally, we drop the dependence of \(\ell \) on the data). Under the spike-and-slab prior defined in Eq. (1.1), the posterior measure is of the form of Eq. (1.2) with

where C, independent of x, can be chosen freely for convenience. A popular choice for \(\pi _i\) is a Gaussian density centered at 0 with standard deviation \(\sigma _i\). In this case, as \(w/(1-w) \approx w\) for \(w\approx 0\), \(\kappa _i\) depends linearly on \(w_i/\sigma _i\) in the sparse setting.

Two-dimensional Sticky Zig-Zag sampler with initial position \((-0.75, -0.4)\) and initial velocity \((+1, -1)\). On the left panel, a trajectory on the (x, y)-plane of the Sticky Zig-Zag sampler. The sticky event times relative to the x (respectively y) coordinate and the trajectories with the x (respectively y) stuck at 0 are marked with a blue (respectively red) cross and line. On the right panel, the trajectories of each coordinate against the time using the same (color-) scheme. The trajectory of y is dashed

Relevant quantities useful for model selection, such as the posterior probability of a model excluding the first variable

cannot be directly computed if \(C_\mu \) is unknown. However, given a trajectory \(\left( x(t)\right) _{0\le t\le T}\) of a PDMP with invariant measure \(\mu \), the quantity \(\mu (\{0\}\times \mathbb {R}^{d-1})\) can be approximated by the ratio \(T_0/T\) where \(T_{0} = \text{ Leb }\{0\le t \le T:x_1(t) = 0\}\). This simple, yet general idea requires the user only to specify \(\{\kappa _i\}_{i=1}^d\) and \(\Psi \) as in Eq. (1.2). Moreover, the posterior probability that a collection of variables are all jointly equal to zero can be estimated in a similar way by computing the fraction of time that all corresponding coordinates of the process are simultaneously zero and, more generally, expectations of functionals with respect to the posterior can be estimated from the simulated trajectory.

1.2 Related literature

The main purpose of this paper is to show how “ordinary” PDMPs can be adjusted to sample from the measure \(\mu \) as defined in (1.2). The numerical examples illustrate its applicability in a wide range of applications. One specific application that has received much attention in the statistical literature is variable selection using a spike-and-slab prior. For the linear model, early contributions include Mitchell and Beauchamp (1988) and George and McCulloch (1993). Some later contributions for hierarchical models derived from the linear model are Ishwaran and Rao (2005), Guan and Stephens (2011), Zanella and Roberts (2019) and Liang et al. (2021). These works have in common that samples from the posterior are obtained from Gibbs sampling and can be implemented in practise only in specific cases (when the Bayes factors between (sub-)models can be explicitly computed). A general and common framework for MCMC methods for variable selection was introduced in Green (1995) and Green and Hastie (2009) and referred to as reversible jump MCMC.

Methods that scale better (compared to Gibbs sampling) with either the sample size or dimension of the parameter can be obtained in different ways. Firstly, rather than sampling from the posterior one can approximate the posterior within a specified class, for example using variational inference. As an example, Ray et al. (2020) adopt this approach in a logistic regression problem with spike-and-slab prior. Secondly, one can try to obtain sparsity using a prior which is not of spike-and-slab type. For example, Griffin and Brown (2021) consider Gibbs sampling algorithms for the linear model with priors that are designed to promote sparseness, such as the Laplace or horseshoe prior (on the parameter vector). While such methods scale well with dimension of data and parameter, these target a different problem: the posterior is not of the form (1.2). That is, the posterior itself is not sparse (though derived point estimates may be sparse and the posterior itself may have good properties when viewed from a frequentist perspective). Moreover, part of the computational efficiency is related to the specific model considered (linear or logistic regression model) and, arguably, a generic gradient-based MCMC method would perform poorly on such measures since the gradient of the (log-)density near 0 in each coordinate explodes to account for the change of mass in the neighborhood of 0 induced by the continuous spike component of the prior.

A recent related work by Chevallier et al. (2020) addresses variable selection problems using PDMP samplers. The different approach taken in that paper is based on the framework of reversible jump (RJ) MCMC as proposed in Green (1995). A comparison between Chevallier et al. (2020) and our work may be found in Appendix C.

1.3 Contributions

-

We show how to construct sticky PDMP samplers from ordinary PDMP samplers for sampling from the measure in Eq. (1.2). This extension allows for informed exploration of sparse models and does not require any additional tuning parameter. We rigorously characterise the stationary measure of the sticky Zig-Zag sampler.

-

We analyse the computational efficiency of the sticky Zig-Zag sampler by studying its complexity and mixing time.

-

We demonstrate the performance of the sticky Zig-Zag sampler on a variety of high dimensional statistical examples (e.g. the example in Sect. 4.2 has dimensionality \(10^6\)).

The Julia package ZigZagBoomerang.jl (Schauer and Grazzi 2021) implements efficiently the sticky PDMP samplers from this article for general use.

1.4 Outline

Section 2 formally introduces sticky PDMP samplers and gives the main theoretical results for the sticky Zig-Zag sampler. In Sect. 2.4 we explain how the sticky Zig-Zag sampler may be applied to subsampled data, allowing the algorithm to access only a fraction of data at each iteration, hence reducing the computational cost from \(\mathcal {O}(N)\) to \(\mathcal {O}(1)\), where N is the sample size. In Sect. 3 we extend the Gibbs sampler for variable selection for target measures of the form of Eq. (1.2). We analyse and compare the computational complexity and the mixing times of both the sticky Zig-Zag sampler and the Gibbs sampler. Section 4 presents four statistical examples with simulated data and analyses the outputs after applying the algorithms considered in this article. In Sect. 5 both limitations and promising research directions are discussed.

There are five appendices. The derivation of our theoretical results is given in Appendix A. Appendix B extends some of the theoretical results for two other sticky samplers: the sticky version of the Bouncy particle sampler (Bouchard-Côtè et al. 2018) and the Boomerang sampler (Bierkens et al. 2020), the latter having Hamiltonian deterministic dynamics invariant to a prescribed Gaussian measure. Appendix C contains a self-contained discussion with heuristic arguments and simulations which highlight the differences between the sticky PDMPs and the method of Chevallier et al. (2020). Appendix D complements Sect. 3 with the details of the derivations of the main results and by presenting local implementations of the sticky Zig-Zag sampler that benefit of a sparse dependence structure between the coordinates of the target measure. Appendix E contains some of the details of the numerical examples of Sect. 4.

1.5 Notation

The ith element of the vector \(x \in \mathbb {R}^d\) is denoted by \(x_i\). We denote \(x_{-i} := (x_1,x_2,\ldots ,x_{i-1}, x_{i+1},\ldots ,x_d) \in \mathbb {R}^{d-1}\). Write

and \([x]_A := (x_i)_{i \in A} \in \mathbb {R}^{|A|}\) for a set of indices \(A\subset \{1,2,\ldots ,d\}\) with cardinality |A|. We denote by \(\sqcup \) the disjoint union between sets and the positive and negative part of a real-valued function f by \(f^+ := \max (0, f)\) and \(f^- := \max (0, -f)\) respectively so that \(f = f^+ - f^-\). For a topological space E, let \(\mathcal {B}(E)\) denote the Borel \(\sigma \)-algebra on E. Denote by \(\mathcal {M}(E)\) the class of Borel measurable functions \(f:E \rightarrow \mathbb {R}\) and let \(C(E) = \{f \in \mathcal {M}(E):f \text { is continuous }\}\). For a measure \(\mu ( \textrm{d}x, \textrm{d}y)\) on a product space \(\mathcal {X},\mathcal {Y}\), we write the marginal measure on \(\mathcal {X}\) by \(\mu ( \textrm{d}x) = \int _{\mathcal {Y}} \mu ( \textrm{d}x, \textrm{d}y)\).

2 Sticky PDMP samplers

In what follows, we formally describe the sticky PDMP samplers (Sect. 2.1) and give the main theoretical results obtained for the sticky Zig-Zag sampler (Sect. 2.3). Section 2.4 extends the sticky Zig-Zag sampler with subsampling methods.

2.1 Construction of sticky PDMP samplers

The state space of the the sticky PDMPs contains two copies of zero for each coordinate position. This construction allows a coordinate process arriving at zero from below (or above) to spend an exponentially distributed time at zero before jumping to the “other” zero and continuing the dynamics. Formally, let \({\overline{\mathbb {R}}}\) be the disjoint union \( {\overline{\mathbb {R}}} = (-\infty ,0^-] \sqcup [0^+,\infty )\) with the natural topologyFootnote 1\(\tau \), where we use the notation \(0^-\), \(0^+\) to distinguish the zero element in \((-\infty ,0]\) from the zero element in \([0,\infty )\). The process has càdlàgFootnote 2 trajectories in the locally compact state space \(E = {\overline{\mathbb {R}}}^d \times \mathcal {V}\), where \(\mathcal {V}\subset \mathbb {R}^d\). Pairs of position and velocity will typically be denoted by \( (x, v) \in {\overline{\mathbb {R}}}^d \times \mathcal {V}\). A trajectory reaching zero in a coordinate from below (with positive velocity) or from above (with negative velocity) spends time at the closed end of the half open interval \((-\infty , 0^-]\) or \([0^+, \infty )\), respectively. For \(i = 1,\ldots , d\) we define the associated ‘frozen boundary’ \({\mathfrak {F}}_i \subset E\) for the ith coordinate as

Thus the ith coordinate of the particle is sticking to zero (or frozen), if the state of the particle belongs to the ith frozen boundary \({\mathfrak {F}}_i\).

Sometimes, we abuse notation by writing \((x_i,v_i) \in \mathfrak F_i\) when \((x,v) \in {\mathfrak {F}}_i\) as the set \({\mathfrak {F}}_i\) has restrictions only on \(x_i, v_i\). The closed endpoints of the half-open intervals are somewhat reminiscent of sticky boundaries in the sense of Liggett (2010, Example 5.59). Denote by \(\alpha \equiv \alpha (x,v)\) the set of indices of active coordinates corresponding to state (x, v), defined by

and its complement \(\alpha ^c = \{1,2,\ldots ,d\}{\setminus } \alpha \). Furthermore define a jump or transfer mapping \(T_i :{\mathfrak {F}}_i \rightarrow E\) by

The sticky PDMPs on the space E are determined by their infinitesimal characteristics: their dynamics are determined by random state changes happening at random jump times of a time inhomogeneous Poisson process with intensity depending on the state of the process, and a deterministic flow governed by a differential equation in between. The state changes are characterised by a Markov kernel \(\mathcal {Q}:E\times \mathcal {B}(E) \rightarrow [0,1]\), at random times sampled with state dependent intensity \(\lambda :E \rightarrow [0,\infty )\). The deterministic dynamics are determined coordinate-wise by the integral equation

with \(\xi _i\) being state dependent with form

for functions \({\bar{\xi }}_i:{\overline{\mathbb {R}}} \times \mathbb {R}\rightarrow {\overline{\mathbb {R}}} \times \mathbb {R}\) which depend on the specific PDMP chosen and corresponds to the coordinate-wise dynamics of the ordinary PDMP while the second case in Eq. (2.3) captures the behaviour of the ith coordinate when it sticks at 0.

For PDMP samplers, we typically have \({\bar{\xi }}_i = {\bar{\xi }}_j\) for all \(i,j \in 1, \ldots , d\) and we have different types of state changes given by Markov kernels \(\mathcal {Q}_1\), \(\mathcal {Q}_2\), ..., for example refreshments of the velocity, reflections of the velocity, unfreezing of a coordinate etc. If each transition is triggered by its individual independent Poisson clock with intensity \(\lambda _1, \lambda _2, \ldots \), then \(\lambda = \sum _i \lambda _i\), and \(\mathcal {Q}\) itself can be written as the mixture

With that, the dynamics of the sticky PDMP sampler \(t\mapsto (X(t), V(t))\) are as follows: starting from \((x, v) \in E\),

-

1.

its flow in each coordinate is deterministic and continuous until an event happens. The deterministic dynamics are given by (2.2). Upon hitting \({\mathfrak {F}}_i\), the ith coordinate process freezes, captured by the state dependence of (2.3).

-

2.

A frozen coordinate “unfreezes” or “thaws” at rate equal to \(\kappa _i|v_i|\) by jumping according to the transfer mapping \(T_i\) to the location \((0^+, v_i)\) (or \((0^-, v_i)\)) outside \(\mathfrak {F}_i\) and continuing with the same velocity as before. That is, on hitting \({\mathfrak {F}}_i\), the ith coordinate process freezes for an independent exponentially distributed time with rate \(\kappa _i|v_i|\). This constitutes a non-reversible move between models of different dimension. The corresponding transition \(\mathcal {Q}_{i,{\text {thaw}}}\) is the Dirac measure at \( \delta _{T_i(x,v)}\) and the intensity component \(\lambda _{i,{\text {thaw}}}\) equals \(\kappa _i|v_i| {\textbf {1}} _{\mathfrak {F}_i}\).

-

3.

An inhomogeneous Poisson process \(\lambda _{\textrm{refl}}\) with rate depending on \(\Psi \) triggers the reflection events. At a reflection event time, the process changes its velocities according to its reflection rule \(\mathcal {Q}_{\textrm{refl}}\) in such a way that the process is invariant to the measure \(\mu \).

-

4.

Refreshment events can be added, where, at exponentially distributed inter-arrival times, the velocity changes according to a refreshment rule leaving the measure \(\mu \) invariant. Refreshments are sometimes necessary for the process to be ergodic.

The resulting stochastic process \((X_t, V_t)\) is a sticky PDMP with dynamics \(\mathcal {Q}\), \(\lambda \), \(\varphi \), initialised in \((X(\tau _0),V(\tau _0))\). Let \(s \rightarrow \varphi (s, x, v)\) be the deterministic solution of (2.2) starting in (x, v). Set \(\tau _0=0\) and the initial state \((X(\tau _0),V(\tau _0)) \in E\). A sample of a sticky PDMP is given by the recursive construction in Algorithm 1.

In what follows, we focus our attention on the Sticky Zig-Zag sampler and defer to Appendix B the details of the Bouncy Particle sampler and the Boomerang samplers.

2.2 Sticky Zig-Zag sampler

A trajectory of the Sticky Zig-Zag sampler has piecewise constant velocity which is an element of the set \(\mathcal {V} = \{v :|v_i| = a_i, \forall i \in \{1,2,\ldots , d\}\}\) for a fixed vector a. For each index i, the deterministic dynamics of Eq. (2.3) are determined by the function \({\bar{\xi }}_i(x_i, v_i) = (v_i, 0)\). The reflection rate \(\lambda _{\textrm{refl}}\) is factorised coordinate-wise and the reflection event for the ith coordinate is determined by the inhomogeneous rate

At reflection time of the ith coordinate, the transition kernel \(\mathcal {Q}_{i, \textrm{refl}}\) acts deterministically by flipping the sign of the ith velocity component of the state: \((x_i, v_i) \rightarrow (x_i, -v_i)\). As shown in Bierkens et al. (2019b), the Zig-Zag sampler does not require refreshment events in general to be ergodic.

2.3 Theoretical aspects of the Sticky Zig-Zag sampler

A theoretical analysis of the sticky Zig-Zag sampler is given in “Appendix A.1”. In this section we review key concepts and state the main results.

The stationary measure of a PDMP is studied by looking at the extended generator of the process which is an operator characterising the process in terms of local martingales—see Davis (1993, Section 14) for details. The extended generator is - as the name suggests—an extension of the infinitesimal generator of the process (defined for example in (Liggett 2010, Theorem 3.16) in the sense that it acts on a larger class of functions than the infinitesimal generator and it coincides with the infinitesimal generator when applied to functions in the domain of the infinitesimal generator.

A general representation of the extended generator of PDMPs is given in Davis (1993, Section 26), while the infinitesimal generator of the ordinary Zig-Zag sampler is given in the supplementary material of Bierkens et al. (2019a). Here, we highlight the main results we have derived for the sticky Zig-Zag sampler.

Recall \(t \rightarrow \varphi (t, x, v)\) denotes the deterministic solution of (2.2) starting in (x, v) and \(\tau \) is the natural topology on E. Define the operator \(\mathcal {A}\) with domain

by \(\mathcal {A}f(x, v) = \sum _{i=1}^d \mathcal {A}_i f(x, v)\) with

Proposition 2.1

The extended generator of the d-dimensional Sticky Zig-Zag process is given by \({\mathcal {A}}\) with domain \({\mathcal {D}}({\mathcal {A}})\).

Proof

See Appendix 1. \(\square \)

Notice that, the operator \(\mathcal {A}\) restricted on \(D = \{f \in C^1_c( E), \mathcal {A}f \in C_b( E)\}\) coincides with the infinitesiaml generator of the ordinary Zig-Zag process restricted on D, see Proposition A.6, Appendix 1 for details.

Theorem 2.2

The d-dimensional Sticky Zig-Zag sampler is a Feller process and a strong Markov process in the topological space \((E, \tau )\) with stationary measure

for some normalization constant \(C>0\).

Proof

The construction of the process and the characterization of the extended generator and its domain of the d-dimensional Sticky Zig-Zag process can be found in Appendix 1. We then prove that the process is Feller and strong Markov (“Appendix A.2” and “Appendix A.3”). By Liggett (2010, Theorem 3.37), \(\mu \) is a stationary measure if, for all \(f \in D\), \(\int \mathcal {L}f \textrm{d}\mu = 0\). This last equality is derived in Appendix A.5. \(\square \)

Theorem 2.3

Suppose \(\Psi \) satisfies Assumption A.8. Then the sticky Zig-Zag process is ergodic and \(\mu \) is its unique stationary measure.

Proof

See Appendix 1. \(\square \)

The following remark establishes a formula for the recurrence time of the Sticky Zig-Zag to the null model, and may serve as guidance in design of the probabilistic model or the choice of the parameter \(\kappa _i\), here assumed for simplicity to be all equal.

Remark 2.4

(Recurrence time of the Sticky Zig-Zag to zero) The expected time to leave the position \(\varvec{0} = (0,0,\ldots ,0)\) for a d-dimensional Sticky Zig-Zag with unit velocity components is \(\frac{1}{\kappa d}\) (since each coordinate leaves 0 according to an exponential random variable with parameter \(\kappa \)). A simple argument given in “Appendix A.7” shows that the expected time of the process to return to the null model is

2.4 Extension: sticky Zig-Zag sampler with subsampling method

Here we address the problem of sampling a d-dimensional target measure when the log-likelihood is a sum of N terms, when d and N are large. Consider for example a regression problem where both the number of covariates and the number of experimental units in the dataset are large. In this situation full evaluation of the log-likelihood and its gradient is prohibitive. However, PDMP samplers can still be used with the exact subsampling technique (e.g. Bierkens et al. 2019a) as this allows for substituting the gradient of the log-likelihood (which is required for deriving the reflection times) by an estimate of it which is cheaper to evaluate, without introducing any bias on the output of the sampler.

The subsampling technique for Sticky Zig-Zag samplers requires to find an unbiased estimate of the gradient of \(\Psi \) in (1.2). To that end, assume the following decomposition:

for some scalar valued function S. This assumption on \(\Psi \) is satisfied for example for the setting with a spike-and-slab prior and a likelihood that is a product of factors, such as for likelihoods of (conditionally) independent observations.

For fixed (x, v) and \(x^* \in \mathbb {R}^d\), for each \(i \in \alpha (x,v)\) the random variable

is an unbiased estimator for \(\partial _{x_i} \Psi (x)\). Define the Poisson rates

and, for each \(i \in \alpha \), define the bounding rate

which is specified by the user and such that Poisson times with inhomogeneous rate \(\tau \sim \text {Poiss}(s\rightarrow \overline{\lambda }_i(s,x,v))\) can be simulated (see “Appendix D.2” for details on the simulation of Poisson times).

The Sticky Zig-Zag with subsampling has the following dynamics:

-

the deterministic dynamics and the sticky events are identical to the ones of the Sticky Zig-Zag sampler presented in Sect. 2.3;

-

a proposed reflection time equals \(\min _{i\in \alpha (x,v)} \tau _i\), with \(\{\tau _i\}_{i\in \alpha (x,v)}\) being independent inhomogeneous Poisson times with rates \(s \rightarrow {\overline{\lambda }}_i(s,x,v)\);

-

at the proposed reflection time \(\tau \) triggered by the ith Poisson clock, the process reflects its velocity according to the rule \((x,v) \rightarrow (x,v[i, -v_i])\) with probability \({\widetilde{\lambda }}_{i, J}(\varphi (\tau , x, v))/{\overline{\lambda }}_i(\tau , x, v)\) where \(J \sim \text {Unif}(\{1,2,\ldots , N_i\})\).

Proposition 2.5

The Sticky Zig-Zag with subsampling has a unique stationary measure given by Eq. (2.6).

The proof of Proposition 2.5 follows with a similar argument made in the proof of Bierkens et al. (2019a, Theorem 4.1). The number of computations required by the Sticky Zig-Zag with subsampling to compute the next event time with respect to the quantity N is \(\mathcal {O}(1)\) (since \(\partial _{x_i} \Psi (x^*)\) can be pre-computed). This advantage comes at the cost of introducing ‘shadow event times’, which are event times where the velocity component does not reflect. In case the posterior density satisfies a Bernstein–von-Mises theorem, the advantage of using subsampling over the standard samplers has been empirically shown and informally argued for in Bierkens et al. (2019a, Section 5) and Bierkens et al. (2020, Section 3) for large N and when choosing \(x^*\) to be the mode of the posterior density.

3 Performance comparisons for Gaussian models

In this section we discuss the performance of the Sticky Zig-Zag sampler in comparison with a Gibbs sampler. The sticky Zig-Zag sampler includes new coordinates randomly but uses gradient information to find which coordinates are zero. By comparing to a Gibbs sampler that just proposes models at random, we show that it is an efficient scheme of exploration. As the Gibbs sampler requires closed form expression of Bayes factors between different (sub-)models (Eq. (2.1) below), we consider Gaussian models. The comparison is motivated by considering two samplers that do not require model specific proposals or other tuning parameters. In specific cases such as the target models considered below, the Gibbs sampler could be improved by carefully choosing a problem-specific proposal kernel in between (sub-)models, see for example Zanella and Roberts (2019) and Liang et al. (2021)—something we don’t consider here.

The comparison is primarily in relation to the dimension d, average number of active particles and sample size N of the problem. It is well known that the performance of a Markov chain Monte Carlo method is given by both the computational cost of simulating the algorithm and the convergence properties of the underlying process. In Sect. 3.2 we consider both these aspects and compare the results obtained for the sticky Zig-Zag sampler with those relative to the Gibbs sampler. The results are summarised in Tables 1 and 2. The technical details of this section are given in “Appendix D”.

3.1 Gibbs sampler

We can use a set of active indices \(\alpha \) to define a model, as the corresponding set of non-zero values in \(\mathbb {R}^d\):

For every set of indices \(\alpha \subset \{1,2,\ldots ,d\} \) and for every j, the Bayes factors relative to two neighbouring (sub-)models (those differing by only one coefficient) for a measure as in Eq. (1.2) are given by

where \(y = \{x \in \mathbb {R}^d :x_i = 0,\, i \notin (\alpha \cup \{j\}) \}\), \(z = \{x \in \mathbb {R}^d :x_i = 0, \, i \notin (\alpha {\setminus } \{j\})]\). The Gibbs sampler starting in \((x, \alpha )\), with \(x_i \ne 0\) only if \(i \in \alpha \) for some set of indices \(\alpha \subset \{1,2,\ldots ,d\} \), iterates the following two steps:

-

1.

Update \(\alpha \) by choosing randomly \(j \sim \text {Unif}(\{1,2,\ldots ,d\})\) and set \(\alpha \leftarrow \alpha \cup \{j\}\) with probability \(p_j\) where \(p_j\) satisfies \(p_j/(1-p_j) = B_j(\alpha )\), otherwise set \(\alpha \leftarrow \alpha {\setminus } \{j\}\).

-

2.

Update the free coefficients \(x_\alpha \) according to the marginal probability of \(x_{\alpha }\) conditioned on \(x_i = 0\) for all \(i\in \alpha ^c\).

In Appendix 1, we give an analytical expressions for the right hand-side of Eq. (2.1) and the conditional probability in step 2 when \(\Psi \) is a quadratic function of x. For logistic regression models, neither step 1 nor step 2 can be directly derived and the Gibbs samplers makes use of a further auxiliary Pólya-Gamma random variable \(\omega \) which has to be simulated at every iteration and makes the computations of step 1 and step 2 tractable, conditionally on \(\omega \) (see Polson et al. 2013 for details).

3.2 Runtime analysis and mixing times

The ordinary Zig-Zag sampler can greatly profit in the case of models with a sparse conditional dependence structure between coordinates by employing local versions of the standard algorithm as presented in Bierkens et al. (2021). In “Appendix D.2” we discuss how to simulate sticky PDMPs and derive similar local algorithms relative to the sticky Zig-Zag. Also the Gibbs sampler algorithm, as described in Sect. 3.1, benefits when the conditional dependence structure of the target is sparse. In “Appendix D.3” we analyse the computational complexity of both algorithms. In the analysis, we drop the dependence on (x, v) and we assume that the size of \(\alpha (t) := \{i :x_i(t) \ne 0\}\) fluctuates around a typical value p in stationarity. Thus p represents the number of non-zero components in a typical model, and can be much smaller than d in sparse models.

Table 1 summarises the results obtained of both algorithms in terms of the sample size N and p when the conditional dependence structure between the coordinates of the target is full and the sub-sampling method presented in Sect. 2.4 cannot be employed (left-column) and when there is sparse dependence structure and subsampling can be employed (right-column). Our findings are validated by numerical experiments in Sect. 4 (Figs. 5 and 8).

We now turn our focus on the mixing time of both the underlying processes. Given the different nature of dependencies of the two algorithms, a rigorous and theoretical comparison of their mixing times is difficult and outside the scope of this work. We therefore provide an heuristic argument for two specific scenarios where we let both algorithms be initialized at \(x \sim \mathcal {N}_d(0, I) \in \mathbb {R}^d\), hence in the full model, and assume that the target \(\mu \) assigns most of its probability mass to the null model \(\mathcal {M}_{\emptyset }\). Then we derive the expected hitting time to \(\mathcal {M}_{\emptyset }\) for both processes. The two scenarios differ as in the former case the target \(\mu \) is supported in every sub-model so that the process can reach the point \((0,0,\ldots ,0)\) by visiting any sequence of sub-models while in the latter case the measure \(\mu \) is supported in a single nested sequence of sub-models. Details of the two scenarios are given in “Appendix D.4”. Table 2 summarizes the scaling results (in terms of dimensions d) derived in the two cases considered.

4 Examples

In this section we apply the Sticky Zig-Zag sampler and, when possible, compare its performance with the Gibbs sampler in four different problems of varying nature and difficulty:

-

4.1

(Learning networks of stochastic differential equations) A system of interacting agents where the dynamics of each agent are given by a stochastic differential equation. We aim to infer the interactions among agents. This is an example where the likelihood does not factorise and the number of parameters increases quadratically with the number of agents. We demonstrate the Sticky Zig-Zag sampler under a spike-and-slab prior on the parameters that govern the interaction and compare this with the Gibbs sampler.

-

4.2

(Spatially structured sparsity) An image denoising problem where the prior incorporates that a large part of the image is black (corresponding to sparsity), but also promotes positive correlation among neighbouring pixels. Specifically, this examples illustrates that the Sticky Zig-Zag sampler can be employed in high dimensional regimes (the showcase is in dimension one million) and for sparsity promoting priors other than factorised priors such as spike-and-slab priors.

-

4.3

(Logistic regression) The logistic regression model where both the number of covariates and the sample size are large, while assuming the coefficient vector to be sparse. This is an non-Gaussian optimal scenario where the Sticky Zig-Zag sampler can be employed with subsampling technique achieving \(\mathcal {O}(1)\) scaling with respect to the sample size.

-

4.4

(Estimating a sparse precision matrix) The setting where N realisations of independent Gaussian vectors with precision matrix of the form \( X X'\) are observed. Sparsity is assumed on the off-diagonal elements of the lower-triangular matrix X. What makes this example particularly interesting is that the gradient of the log-likelihood explodes in some hyper-planes, complicating the application of gradient-based Markov chain Monte Carlo methods.

In all cases we simulate data from the model and assume the parameter to be sparse (i.e. most of its elements are assumed to be zero) and high dimensional. In case a spike-and-slab prior is used, the slabs are always chosen to be zero-mean Gaussian with (large) variance \(\sigma _0^2\). The sample sizes, parameter dimensions and additional difficulties such as correlated parameters or non-linearities which are considered in this section illustrate the computational efficiency of our method (and implementation) in a wide range of settings. In all examples we used either the local or the fully local algorithm of the Sticky Zig-Zag as detailed in “Appendix D.2” with velocities in the set \(\mathcal {V}= \{-1,+1\}^d\). Comparisons with the Gibbs sampler are possible for Gaussian models and the logistic regression model. Our implementation of the Gibbs sampler is taking advantage of model sparsity. Because of its computational overhead, when such comparisons are included, the dimensionality of the problems considered has been reduced. The performance of the two algorithms is compared by running the two algorithms for approximately the same computing time. As performance measure we consider the squared error as a function of the computing time:

where c denotes computing time (we use c rather than t as the latter is used as time index for the Zig-Zag sampler). In the displayed expression, we first compute \(\overline{p}_i\), which is an approximation to the posterior probability of the ith coordinate being nonzero. This quantity can either be obtained by running the Sticky Zig-Zag sampler or the Gibbs sampler (if applicable) for a very long time. As we show the Sticky Zig-Zag sampler to converge faster, especially in high dimensional problems, we use this sampler in approximating this value. We stress that the same result could be obtained by running the Gibbs sampler for a very long time. More precisely, we compute for each coordinate of the Sticky Zig-Zag sampler the fraction of time it is nonzero. In \(\mathcal {E}_{\text {s}}(c)\), the value of \(\overline{p}_i\) is compared to \(p^{\text {s}}_i(c)\) which is the fraction of time (or fraction of samples in case of the Gibbs sampler) where \(x_i\) is nonzero using computational budget c and sampler ‘s’. All the experiments were carried out with a conventional laptop with Intel core i5-10310 processor and 16 GB DDR4 RAM. Pre-processing time and memory allocation of both algorithms are comparable.

4.1 Learning networks of stochastic differential equations

In this example we consider a stochastic model for p autonomously moving agents (“boids”) in the plane. The dynamics of the location of the ith agent is assumed to satisfy the stochastic differential equation

where, for each i, \((W_i(s))_{0\le s\le T}\) is an independent 2-dimensional Wiener process. We assume the trajectory of each agent is observed continuously over a fixed interval [0, T]. This implies \(\sigma >0\) can be considered known, as it can be recovered without error from the quadratic variation of the observed path. For simplicity we will also assume the mean-reversion parameter \(\lambda >0\) to be known. Let \(x = \{x_{i,j} :i \ne j\} \in \mathbb {R}^{p^2 - p}\) denote the unknown parameter. If \(x_{i,j} > 0\), agent i has the tendency to follow agent j, on the other hand, if \(x_{i,j} < 0\), agent i tends to avoid agent j. Hence, estimation of x aims at inferring which agent follows/avoids other agents. We will study this problem from a Bayesian point of view assuming sparsity of x, incorporated via the prior using a spike and slab prior. This problem has been studied previously in Bento et al. (2010) using \(\ell _1\)-regularised least squares estimation.

Motivation for studying this problem can be found in Reynolds (1987) and the presentation at JuliaCon (2020). An animation of the trajectories of the agents in time can be found at Grazzi and Schauer (2021).

Suppose \(U_i(s)=(U_{i,1}(s), U_{i,2}(s))\) and let \(Y(s) = (U_{1,1}(s),\ldots , U_{p,1}(s), U_{1,2}(s),\ldots , U_{p,2}(s))\) denote the vector obtained upon concatenation of all x-coordinates and y-coordinates of all agents. Then, it follows from Eq. (2.2) that \( \textrm{d}Y(s) = C(x) Y(s) \textrm{d}s + \sigma \textrm{d}W(s)\), where W(s) is a Wiener process in \(\mathbb {R}^{2p}\). Here, \(C(x)=\text{ diag }(A(x), A(x))\) where

with \(\overline{x}_i = \sum _{j \ne i} x_{i,j}\). If \(\mathbb {P}_x\) denotes the measure on path space of \(Y_T:=(Y(s),\, s\in [0,T])\) and \(\mathbb {P}_0\) denotes the Wiener-measure on \(\mathbb {R}^{2p}\), then it follows from Girsanov’s theorem that

As we will numerically only be able to store the observed sample path on a fine grid, we approximate the integrals appearing in the log-likelihood \(\ell (x)\) using a standard Riemann-sum approximation of Itô integrals (see e.g. Rogers and Williams 2000, Ch. IV, Sect. 47) and time integrals. We assume x to be sparse which is incorporated by choosing a spike-and-slab prior for x as in Eq. (1.1). The posterior measure is of the form of (1.2) with \(\kappa \) and \(\Psi (x)\) as in (1.3). As \(x\mapsto \Psi (x)\) is quadratic, the reflection times of the Sticky Zig-Zag sampler can be computed in closed form.

Numerical experiments: In our numerical experiments we fix \(p = 50\) (number of agents), \(T = 200\) (length of time-interval), \(\sigma = 0.1\) (noise-level) and \(\lambda = 0.2\) (mean-reversion coefficient). We set the parameter x such that each agent has one agent that tends to follow and one agent that tends to avoid. Hence, for every i, we set \(x_{i,j}\) to be zero for all \(j \ne i\), except for 2 distinct indices \(j_1,j_2 \sim \text {Unif}(\{1,2,\ldots ,d\}{\setminus } i)\) with \(x_{i,j_1}x_{i,j_2}<0\). The parameter x is very sparse and it is highly nontrivial to recover its value. We then simulate \(Y_T\) using Euler forward discretization scheme, with step-size equal to 0.1 and initial configuration \(Y(0)\sim \mathcal {N}_{2p} (0,I)\).

The prior weights \(w_1 = w_2 = \cdots = w_d\) (\(w_i\) being the prior probability of the ith coordinate to be nonzero) are conveniently chosen to equal the proportion of non-zero elements in the true (data-generating) parameter vector x. The variance of each slab was taken to be \(\sigma ^2_0 = 50\). We ran the Sticky Zig-Zag sampler with final clock 500, where the algorithm was initialized in the full-model with no coordinate frozen at 0 at the posterior mean of the Gaussian density proportional to \(\Psi \).

Figure 2 shows the discrepancy between the parameters used during simulation (ground truth) and the estimated posterior median. In this figure, from the (sticky) Zig-Zag trajectory of each element \(x_{i,j}\) (\(i\ne j\)) we collected their values at time \(t_i =i 0.1 \) and subsequently computed the median of the those values. We conclude that all parameters which are strictly positive (coloured in pink) are recovered well. At the bottom of the figure (black points and crosses), 25 are incorrectly identified as either being zero or negative. In this experiment, the Sticky Zig-Zag sampler outperforms the Gibbs sampler considerably.

In Fig. 3 we compare the performance of the Sticky Zig-Zag sampler with the Gibbs sampler. Here, all the parameters (including initialisation) are as above, except now the number of agents is taken as \(p = 20\). Both \(c \mapsto \mathcal {E}_{\text {Zig-Zag}}(c)\) and \(c \mapsto \mathcal {E}_{\text {Gibbs}}(c)\), with c denoting the computational budget, are computed for \(c \in [0,10]\). For this, the final clock of the Zig-Zag was set to \(10^4\) and the number of iterations for the Gibbs sampler was set to \(1.2\times 10^4\). For obtaining \(\bar{p}_i\) the Sticky Zig-Zag sampler was run with final clock \(5\times 10^4\) (taking approximately 50 s computing time).

Posterior median estimate of \(x_k\) (where k can be identified with (i, j)) versus k computed using the Sticky Zig-Zag sampler. Thin vertical lines indicate distance to the truth. True zeros are plotted with the symbol \(\times \), others are plotted as points. With \(p = 50\) agents, the dimension of the problem is \(d = 2450\)

Squared error of the marginal inclusion probabilities (Eq. 2.1) \(c \rightarrow \mathcal {E}_{\text {zig-zag}}(c)\) (red) and \(c \rightarrow \mathcal {E}_{\text {gibbs}}(c)\)(green) where c represent the computing time in seconds. With \(p=20\) agents the dimension of the problem is \(p(p-1)/2 = 380\)

4.2 Spatially structured sparsity

We consider the problem of denoising a spatially correlated, sparse signal. The signal is assumed to be an \(n\times n\)-image. Denote the observed pixel value at location (i, j) by \(Y_{i,j}\) and assume

The “true signal” is given by \(x=\{x_{i,j}\}_{i,j}\) and this is the parameter we aim to infer, while assuming \(\sigma ^2\) to be known. We view x as a vector in \(\mathbb {R}^d\), with \(d=n^2\) but use both linear indexing \(x_k\) and Cartesian indexing \(x_{i,j}\) to refer to the component at index \(k = n(i-1) + j\). The log-likelihood of the parameter x is given by \(\ell (x) = C + \sigma ^{-2} \sum _{i=1}^n \sum _{j=1}^n |x_{i,j}-Y_{i,j}|^2\), with C a constant not depending on x.

We consider the following prior measure

The Dirac masses in the prior encapsulate sparseness in the underlying signal and an appropriate choice of \(\Gamma \) can promote smoothness. Overall, the prior encourages smoothness, sparsity and local clustering of zero entries and non-zero entries. As a concrete example, consider \(\Gamma = c_1\Lambda + c_2 I\) where \(\Lambda \) is the graph Laplacian of the pixel neighbourhood graph: the pixel indices i, j are identified with the vertices \(V=\{(i, j) :\) \((i,j)\in \{1, \ldots , n\}^2\}\) of the \(n \times n\) -lattice with edges \(E=\{\{v, v^{\prime }\}: (v, v^\prime ) = ((i, j), (i^{\prime }, j^{\prime })) \in V^2\), \(|i-i^{\prime }|+|j-j^{\prime }|= 1\}\) (using the set notation for edges). Thus, edges connect a pixel to its vertical and horizontal neighbours. Then

and \( \Lambda = (\Lambda _{k,l})_{k,l \in \{ 1, \ldots , n^2\}}\) with \(\Lambda _{(i-1)n + j, (k-1)n + l} = \lambda _{(i,j), (k,l)}\), for \(\quad i,j,k,l \in \{1, \ldots , n\}\).

This is a prior which is applicable in similar situations as the fused Lasso in Tibshirani et al. (2005).

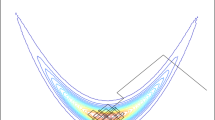

Numerical experiments: We assume that pixel (i, j) corresponds to a physical location of size \(\Delta _1 \times \Delta _2\) centered at \(u(i,j) = u_0 + (i \Delta _1, j \Delta _2) \in \mathbb {R}^2\). To numerically illustrate our approach, we use a heart shaped region given by \( x_{i,j} = 5\max (1 - h(u(i,j)), 0) \) where \(h:\mathbb {R}^2 \rightarrow [0, \infty )\) is defined by \( h(u_1, u_2) = u_1^2+\left( \frac{5u_2}{4}-\sqrt{|u_1|}\right) ^2 \), \(u_0 = (-4.5, -4.1)\), \(n = 10^3\) and \(\Delta _1 = \Delta _2 = 9/n\). In the example, about 97% of the pixels of the truth are black. The dimension of the parameter equals \(10^6\). Figure 4, top-left, shows the observation Y with \(\sigma ^2 = 0.5\) and the ground truth.

As the ordinary Sticky Zig-Zag sampler would require storing and ordering 1 million elements in the priority queue we ran the Sticky Zig-Zag sampler with sparse implementation as detailed in Remark D.1. For this example, we have \(\Psi (x) = \ell (x) + 0.5x'\Gamma x\). We took \(c_1 = 2, c_2 = 0.1\) in the definition of \(\Gamma \) and chose the parameters \(\kappa _1 = \kappa _2 = \cdots = \kappa _d = 0.15\) for the smoothing prior. The reflection times are computed by means of a thinning scheme, see “Appendix E.2” for details. We set the final clock of the Sticky Zig-Zag sampler to 500. Results from running the sampler are summarized in Fig. 4.

In Fig. 5, the runtimes of the Sticky Zig-Zag sampler and Gibbs sampler are shown (in a log–log scale) for different values of \(n^2\) (dimensionality of the problem), the final clock was fixed to \(T = 500\) (\(10^3\) iteration for the Gibbs sampler). All the other parameters are kept fixed as described above. The results agree well with the scaling results of Table 1, rightmost column.

In Fig. 6 we show \(t \rightarrow \mathcal {E}_{\text {Zig-Zag}}(t)\) and \(t \rightarrow \mathcal {E}_{\text {Gibbs}}(t)\) for t ranging from 0 to 5, in case \(n = 20\). Both samplers were initialized at the posterior mean of the Gaussian density proportional to \(\Psi \) (hence, in the full-model with no coordinates set to 0). In this experiment, the Sticky Zig-Zag sampler outperforms the Gibbs sampler considerably.

Top-left: observed \(1000 \times 1000\) image of a heart corrupted with white noise, with part of the ground truth inset. Top-right, left half: posterior mean estimated from the trace of the Sticky Zig-Zag sampler (detail). Top-right, right half: mirror image showing the absolute error between the posterior mean and the ground truth in the same scale (color gradient between blue (0) and yellow (maximum error)). Bottom: trace plot of 3 coordinates; on the left the full trajectory is shown whereas on the right only the final 60 time units are displayed. The traces marked with blue and orange lines belong to neighbouring coordinates (highly correlated) from the center, the trace marked with green belongs to a coordinate outside the region of interest

4.3 Logistic regression

Suppose \(\{0,1\} \ni Y_i \mid x \sim \text{ Ber }(\psi (x^T a_i))\) with \(\psi (u) =(1 + e^{- u})^{-1}\). \(a_i \in \mathbb {R}^d\) denotes a vector of covariates and \(x \in \mathbb {R}^d\) a parameter vector. Assume \(Y_1,\ldots , Y_N\) are independent, conditionally on x. The log-likelihood is equal to

We assume a spike-and-slab prior of (1.1) with zeromean Gaussian slabs and (large) variance \(\sigma _0^2\). Then the posterior can be written as in Eq. (1.2), with \(\Psi \) and \(\kappa \) as in Equation (1.3).

Numerical experiments: We consider two categorical features with 30 levels each and 5 continuous features. For each observation, an independent random level of each discrete feature and a random value of the continuous features, \(\mathcal {N}(0,0.1^2)\) is drawn. Let the design matrix \(A\in \mathbb {R}^{N\times d}\) be the matrix where the i-th row is the vector \(a_i\). A includes the levels of the discrete features in dummy encoding and the interaction terms between them also in dummy encoding scaled by 0.3 (960 columns), and the continuous features in the final 5 columns. This implies that the dimension of the parameter equals \(d = 965\). We then generate \(N =50d= 48250\) observations using as ground truth sparse coefficients obtained by setting \(x_i = z_i \xi _i\) where \(z_i {\mathop {\sim }\limits ^{{\text {i.i.d.}}}} \text {Bern}(0.1)\) and \(\xi _i{\mathop {\sim }\limits ^{{\text {i.i.d.}}}} \mathcal {N}(0, 5^2)\), where \(\{z_i\}\) and \(\{\xi _i\}\) are independent.

We run the sticky ZigZag with subsampling and bounding rates derived in Appendix E.1. We chose \(w_1 = w_2 = \cdots = w_d = 0.1\) and \(\sigma _0^2 = 10^2\) and ran the Sticky Zig-Zag sampler for 100 time-units. The implementation makes use of a sparse matrix representation of A, speeding up the computation of inner products \(\langle a_{j}, x\rangle \). Figure 7 reveals that while perfect recovery is not obtained (as was to be expected), most nonzero/zero features are recovered correctly.

Runtime comparison of the Sticky Zig-Zag sampler (green) and the Gibbs sampler (red) for the example in Subsection 4.2. The horizontal axis displays the dimension of the problem, which is \(n^2\). The vertical axis shows runtime in seconds. The runtime is evaluated at \(n^2 = 50^2, 100^2, \ldots , 600^2\) for the sticky Zig-Zag sampler and at \(n^2 = 40^2,45^2, \ldots , 70^2\) for the Gibbs sampler. Both plots are on a log–log scale. The dashed curves shows the theoretical scaling (including a log-factor for the priority queue insertion): \(x \mapsto c_1 x\log (x)\) (green) and \(x \mapsto c_2 x^{3/2}\) (orange), with \(c_1\) and \(c_2\) chosen conveniently

Squared error of the marginal inclusion probabilities (Eq. 2.1) \(c \rightarrow \mathcal {E}_{\text {zig-zag}}(c)\) (red) and \(t \rightarrow \mathcal {E}_{\text {gibbs}}(c)\) (green) where c represent the computational time in seconds; right-panel: zoom-in near 0. Here the dimension of the problem is \(n^2 = 400\)

In a second numerical experiment we compare the computing time of the Sticky Zig-Zag sampler and Gibbs sampler (as proposed in Polson et al. 2013) as we vary the number of observations (N). In this case, we reduce the dimension of the parameter by restricting to 2 categorical variables, including their pairwise interactions, augmented by 3 “continuous” predictors (leading to the parameter vector \(x \in \mathbb {R}^9\)). For each sample size N we ran the Gibbs sampler for 1000 iterations and the Sticky Zig-Zag sampler for 1000 time units. Our interest here is not to compare the computing time of the samplers for a fixed value of N, but rather the scaling of each algorithm with N. Figure 8 shows that the computing time for the Sticky Zig-Zag sampler is roughly constant when varying N. On the contrary, the computing time increases linearly with N for the Gibbs sampler. This is consistent with the theoretical scaling results presented in Table 1 (rightmost column). We remark that qualitatively similar results would be obtained if we would have fixed the number of iterations of the Gibbs sampler and endtime of the Zig-Zag sampler to different values.

Results for the logistic regression coefficients derived with the Sticky Zig-Zag sampler with subsampling. Description as in caption of Figure 2. The dimension of this problem is \(d = 965\)

Logistic regression example: computing time in seconds versus number of observations. Solid red line: Gibbs samplers with \( 10^3\) iterations. Solid blue line: Sticky Zig-Zag samplers with subsampling ran for \(10^3\) time units. The dashed lines correspond to the scaling results displayed in Table 1. Here, the dimension of the problem is fixed to \(d=9\)

4.4 Estimating a sparse precision matrix

Consider

for some unknown lower triangular sparse matrix \(X \in \mathbb {R}^{p\times p}\). We aim to infer the lower-triangular elements of X which we concatenate to obtain the parameter vector \(x :=\{X_{i,j} :1\le j \le i \le p\} \in \mathbb {R}^{p(p+1)/2}\). This class of problems is important as the precision matrix \(X X'\) unveils the conditional independence structure of Y, see for example Shi et al. (2021), and reference therein, for details.

We impose a prior measure on x of the product form \(\mu _0( \textrm{d}x) = \bigotimes _{i=1}^p \bigotimes _{j=1}^i \mu _{i,j}( \textrm{d}x_{i,j})\) where

and \(\pi _{i,j}\) is the univariate Gaussian density with mean \(c_{i,j} \in \mathbb {R}\) and variance \(\sigma _{0}^2>0\).

Left: error between the true precision matrix and the precision matrix obtained with the estimated posterior mean of the lower-triangular matrix (colour gradient between white (no error) and black (maximum error)). Right: traces of two non-zero coefficients (\(x_{1,1}\) in red and \(x_{2,1}\) in pink) of the lower triangular matrix. Dashed green lines are the ground truth. Here, the dimension of each vector \(Y_i\) is \(p = 200\) and the dimension of the problem is \(p(p+1)/2 = 20\,100\)

This prior induces sparsity on the lower-triangular off-diagonal elements of X while preserving strict positive definiteness of \(X X'\) (as the elements on the diagonal are restricted to be positive).

The posterior in this example is of the form

with

and \(\kappa _{i,j}= \pi _{i,j}(0) w/(1-w)\). In particular, the posterior density is not of the form as given in Eq. (1.2), as the diagonal elements cannot be zero and have a marginal density relative to the Lebesgue measure, while the off-diagonal elements are marginally mixtures of a Dirac and a continuous component. Notice that, for any \(i = 1,2,\ldots , p\), as \(x_{i,i} \downarrow 0\), \(\exp (-\Psi (x))\) vanishes and \(\nabla \Psi (x)\rightarrow \infty \). This makes the sampling problem challenging for gradient-based algorithms.

Numerical experiments: We apply the Sticky Zig-Zag sampler where the reflection times are computed by using a thinning and superposition scheme for inhomogeneous Poisson processes, see “Appendix E.3” for the details.

We simulate realisations \(y_1, \ldots , y_N\) with precision matrix \(X X'\) a tri-diagonal matrix with diagonal \((0.5, 1, 1,\ldots , 1, 1, 0.5) \in \mathbb {R}^p\) and off-diagonal \((-0.3,-0.3,\ldots ,-0.3)\in \mathbb {R}^{p-1}\). In the prior we chose \(\sigma _{0}^2 = 10\) and \(c_{i,j} = {\textbf {1}} _{(i=j)}\) and for \(1 \le j \le i \le p\) and \(w=0.2\).

We fixed \(N = 10^3\) and \(p = 200\) and ran the Sticky Zig-Zag sampler for 600 time-units. We initialized the algorithm at \(x(0) \sim \mathcal {N}_{p(p+1)/2}(0,I)\) and set a burn-in of 10 unit-time. The left panel of Fig. 9 shows the error between \(X X'\) (the ground truth) and \({\overline{X}} \, {\overline{X}}'\) where \({\overline{X}}\) is posterior mean of the lower triangular matrix estimated with the sampler. The error is concentrated on the non-zero elements of the matrix while the zero elements are estimated with essentially no error. The right panel of Fig. 9 shows the trajectories of two representative non-zero elements of X. The traces show qualitatively that the process converges quickly to its stationary measure.

In this case, comparisons with the Gibbs sampler are not possible as there is no closed form expression for the Bayes factors of Eq. (2.1).

5 Discussion

The sticky Zig-Zag sampler inherits some limitations from the ordinary Zig-Zag sampler:

Firstly, if it is not possible to simulate the reflection times according to the Poisson rates in Eq. (2.5), the user needs to find and specify upper bounds of the Poisson rates from which it is possible to simulate the first event time (see “Appendix D.2” for details). This procedure is referred to as thinning and remains the main challenge when simulating the Zig-Zag sampler. Furthermore, the efficiency of the algorithm deteriorates if the upper bounds are not tight.

Secondly, the Sticky Zig-Zag sampler, due to its continuous dynamics, can experience difficulty traversing regions of low density, in particular it will have difficulty reaching 0 in a coordinate if that requires passing through such a region.

Finally, the process can set to 0 (and not 0) only one coordinate at a time, hence failing to be ergodic for measures not supported on neighbouring sub-models. For example, consider the space \(\mathbb {R}^2\) and assumes that the process can visit either the origin (0, 0) or the full space \(\mathbb {R}^2\) but not the coordinate axes \(\{0\}\times \mathbb {R}\cup \mathbb {R}\times \{0\}\). Then the process started in \(\mathbb {R}^2\) hits the origin with probability 0, hence failing to explore the subspace (0, 0).

In what follows, we outline promising research directions deferred to future work.

5.1 Sticky Hamiltonian Monte Carlo

The ordinary Hamiltonian Monte Carlo (HMC) process as presented by Neal et al. (2011) can be seen as a piecewise deterministic Markov processes with deterministic dynamics equal to

where \(\nabla \Psi \) is the gradient of the negated log-density relative to the Lebesgue measure. At random exponential times with constant rate, the velocity component is refreshed as \(v \sim \mathcal {N}(0,I)\) (similarly to the refreshment events in the bouncy particle sampler). By applying the same principles outlined in Sect. 2, such process can be made sticky with Eq. (1.2) as its stationary measure.

Unfortunately, in most cases, the dynamics in (2.1) cannot be integrated analytically so that a sophisticated numerical integrator is usually employed and a Metropolis–Hasting steps compensates for the bias of the numerical integrator (see Neal et al. 2011 for details). These two last steps makes the process effectively a discrete-time process and its generalization with sticky dynamics is not anymore trivial.

5.2 Extensions

The setting considered in this work does not incorporate some relevant classes of measures:

-

Posteriors given by prior measures which freely choose prior weights for each (sub-)model. This limitation is mainly imputed to the parameter \(\kappa =(\kappa _1,\kappa _2,\ldots ,\kappa _d)\) which here does not depend on the location component x of the state space. While the theoretical framework built can be easily adapted for letting \(\kappa \) depend on x, it is currently unclear to us the exact relationship between \(\kappa \) and the posterior measure in this more general setting.

-

Measures which are not supported on neighbouring sub-models are also not covered here.

To solve this problem, different dynamics for the process should be developed which allow the process to jump in space and set multiple coordinates to 0 (and not 0) at a time.

Notes

A function \(f:\overline{\mathbb {R}} \rightarrow \mathbb {R}\) is continuous if both restrictions to \((\infty , 0^-]\) and \([0^+, \infty )\) are continuous. If \(f(0^-) = f(0^+)\), we write f(0).

I.e., trajectories that are continuous from the right, with existing limits from the left.

References

Andrieu, C., Livingstone, S.:. Peskun–Tierney ordering for Markov chain and process Monte Carlo: beyond the reversible scenario (2019). arXiv: 1906.06197

Bento, J., Ibrahimi, M., Montanari, A.: Learning networks of stochastic differential equations (2010). arXiv: 1011.0415

Bierkens, J., Fearnhead, P., Roberts, G.: The Zig-Zag process and super-efficient sampling for Bayesian analysis of big data. Ann. Stat. 47(3), 1288–1320 (2019)

Bierkens, J., Grazzi, S., Kamatani, K., Roberts, G.: The boomerang sampler. In: International Conference on Machine Learning, PMLR, pp. 908–918 (2020)

Bierkens, J., Grazzi, S., van der Meulen, F., Schauer, M.: A piecewise deterministic Monte Carlo method for diffusion bridges. Stat. Comput. 31(3), 1–21 (2021)

Bierkens, J., Roberts, G.O., Zitt, P.-A.: Ergodicity of the zigzag process. Ann. Appl. Probab. 29(4), 2266–2301 (2019)

Bouchard-Côtè, A., Vollmer, S.J., Doucet, A.: The bouncy particle sampler: a nonreversible rejection-free Markov chain Monte Carlo method. J. Am. Stat. Assoc. 113(522), 855–867 (2018)

Chevallier, A., Fearnhead, P., Sutton, M.: Reversible jump PDMP samplers for variable selection (2020). arXiv: 2010.11771

Cotter, S.L., Roberts, G.O., Stuart, A.M., White, D.: MCMC methods for functions: modifying old algorithms to make them faster. Stat. Sci. 28, 424–446 (2013)

Davis, M.H.A.: Markov models and optimization. In: Monographs on Statistics and Applied Probability, vol. 49. Chapman & Hall, London (1993)

Duane, S., Kennedy, A.D., Pendleton, B.J., Roweth, D.: Hybrid Monte Carlo. Phys. Lett. B 195(2), 216–222 (1987)

George, E.I., McCulloch, R.E.: Variable selection via Gibbs sampling. J. Am. Stat. Assoc. 88(423), 881–889 (1993)

Grazzi, S., Schauer, M.: Boid animation. https://youtu.be/O1VoURPwVLI (2021)

Green, P.J.: Reversible jump Markov chain Monte Carlo computation and Bayesian model determination. Biometrika 82, 711–732 (1995)

Green, P.J., Hastie, D.I.: Reversible jump MCMC. Genetics 155(3), 1391–1403 (2009)

Griffin, J.E., Brown, P.J.: Bayesian global-local shrinkage methods for regularisation in the high dimension linear model. Chemom. Intell. Lab. Syst. 210, 104255 (2021)

Guan, Y., Stephens, M.: Bayesian variable selection regression for genome-wide association studies and other large-scale problems. Ann. Appl. Stat. 5(3), 1780–1815 (2011)

Ishwaran, H., Rao, J.S.: Spike and slab variable selection: frequentist and Bayesian strategies. Ann. Stat. 33(2), 730–773 (2005)

JuliaCon: 2020 by Jesse Bettencourt. JuliaCon 2020—Boids: Dancing with friends and enemies. https://www.youtube.com/watch?v=8gS6wejsGsY (2020)

Liang, X., Livingstone, S., Griffin, J.: Adaptive random neighbourhood informed Markov chain Monte Carlo for high-dimensional Bayesian variable Selection. arXiv:2110.11747 (2021)

Liggett, T.M.: Continuous time Markov processes. In: Graduate Studies in Mathematics, vol. 113. American Mathematical Society, Providence, RI (2010)

Meyn, S.P., Tweedie, R.L.: Stability of Markovian processes II: continuous-time processes and sampled chains. Adv. Appl. Probab. 25(3), 487–517 (1993)

Mitchell, T.J., Beauchamp, J.J.: Bayesian variable selection in linear regression. J. Am. Stat. Assoc. 83(404), 1023–1032 (1988)

Neal, R.M., et al.: MCMC using Hamiltonian dynamics. Handb. Markov Chain Monte Carlo 2(11), 2 (2011)

Polson, N.G., Scott, J.G., Windle, J.: Bayesian inference for logistic models using Pòlya- Gamma latent variables. J. Am. Stat. Assoc. 108(504), 1339–1349 (2013)

Ray, K., Szabo, B., Clara, G.:Spike and slab variational Bayes for high dimensional logistic regression (2020). arXiv: 2010.11665

Reynolds, C. W.: Flocks, herds and schools: a distributed behavioral model. In: Association for Computing Machinery (1987)

Rogers, L.C.G., Williams, D.: Diffusions, Markov Processes and Martingales: Volume 2, Itô calculus. vol. 2. Cambridge University Press (2000)

Rogers, L., Williams, D.: Diffusions, Markov processes, and martingales: foundations. In: Cambridge Mathematical Library, vol. 1. Cambridge University Press (2000)

Schauer, M., Grazzi, S.: mschauer/ZigZagBoomerang.jl: v0.6.0. Version v0.6.0. https://doi.org/10.5281/zenodo.4601534 (2021)

Shi, W., Ghosal, S., Martin, R.: Bayesian estimation of sparse precision matrices in the presence of Gaussian measurement error. Electron. J. Stat. 15(2), 4545–4579 (2021)

Sutton, M., Fearnhead, P.: Concave-convex PDMP-based sampling. arXiv:2112.12897 (2021)

Tibshirani, R., et al.: Sparsity and smoothness via the fused lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 67(1), 91–108 (2005)

Zanella, G., Roberts, G.: Scalable importance tempering and Bayesian variable selection. J. R. Stat. Soc. Ser. B Stat. Methodol. 81(3), 489–517 (2019)

Acknowledgements

this work is part of the research programme Bayesian inference for high dimensional processes with project number 613.009.034c, which is (partly) financed by the Dutch Research Council (NWO) under the Stochastics—Theoretical and Applied Research (STAR) grant. J. Bierkens acknowledges support by the NWO for the research project Zig-zagging through computational barriers with project number 016.Vidi.189.043.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A. Details of the Sticky Zig-Zag sampler

1.1 A.1 Construction

In this section we discuss how the Sticky Zig-Zag can be constructed as a standard PDMP in the sense of Davis (1993). The construction is a bit tedious, but the underlying idea is simple: the Sticky Zig-Zag process has the dynamics of a ordinary Zig-Zag process until it reaches a freezing boundary \({\mathfrak {F}}_i =\{(x,v) \in E:x_i = 0^-, \, v_i >0 \, \text { or } \, x_i = 0^+, \, v_i < 0\}\) of \(E = {\overline{\mathbb {R}}}^d \times \mathcal {V}\), with \({\overline{\mathbb {R}}} = (-\infty ,0^-] \sqcup [0^+,\infty )\) which has two copies of 0. Then it immediately changes dynamics and evolves as a lower dimensional ordinary Zig-Zag process on the boundary, at least until an unfreezing event happens or upon reaching yet another freezing boundary in the domain of the restricted process.

Davis’ construction allows a standard PDMP to make instantaneous jumps at boundaries of open sets, but puts restrictions on further behaviour at that boundary. We circumvent these restrictions by first splitting up the space \(\mathbb {R}^d \times \mathcal {V}\) into disconnected components in a way somewhat different than the construction of E as presented in Sect. 2. Only at a later stage we recover the definition of E.

Define the set

and

(note that |K| does not denote the cardinality of the set K). Define the functions \(k :\mathbb {R}\times \mathbb {R}\rightarrow K\) and \(|k| :\mathbb {R}\times \mathbb {R}\rightarrow |K|\) by

(x, v) | k(x, v) | at (x, v) the process is... | |k|(x, v) |

\(x>0, v>0\) |

| ...moving away from 0 with positive velocity |

|

\(x<0, v<0\) |

| ...moving away from 0 with negative velocity |

|

\(x>0, v<0\) |

| ...moving toward 0 with negative velocity |

|

\(x<0, v>0\) |

| ...moving toward 0 with positive velocity |

|

\(x = 0, v>0\) |

| ...at 0 with positive velocity | \(\circ \) |

\(x = 0, v<0\) |

| ...at 0 with negative velocity | \(\circ \) |

If \((x,v) \in \mathbb {R}^d \times \mathcal {V}\), then extend \(k :{\overline{\mathbb {R}}}^d \times \mathcal {V} \rightarrow K^d\) and \(|k| :{\overline{\mathbb {R}}}^d \times \mathcal {V}\rightarrow |K|^d\) by applying the map k and |k| coordinatewise.

For each \(\ell \in K^d\) define

Note that for \(\ell \ne \ell '\) the sets \(\widetilde{E}_\ell ^\circ \) and \(\widetilde{E}_{\ell '}^\circ \) are disjoint. The set \(\widetilde{E}_\ell ^\circ \) is open under the metric introduced in Davis (1993, p. 58), which sets the distance between two points \((\ell , x,v)\) and \((\ell ',x',v')\) to 1 if \(\ell \ne \ell '\). We denote the induced topology on \({\widetilde{E}}\) by \({\widetilde{\tau }}\). \({\widetilde{E}}^\circ _\ell \) is a subset of \(\mathbb {R}^{2d}\) of dimension \(d_\ell = \sum _{i =1}^d \mathbbm {1}_{|\ell _i| \ne \circ }\), since the velocities are constant in \(E^\circ _\ell \) and the position of the components i where \(\ell _i = \circ \) are constant as well in \({\widetilde{E}}^\circ _\ell \) (\({\widetilde{E}}^\circ _\ell \) is isomorphic to an open subset of \(\mathbb {R}^{d_\ell }\)).

The sets which contain a singleton, i.e. \(|{\widetilde{E}}^\circ _\ell | = 1\), are those sets \({\widetilde{E}}^\circ _\ell \) such that \(|\ell _i(x,v)| = \circ \) for all \(i = 1,2,\ldots , d\) and are open as they contain one isolated point, but will have to be treated a bit differently. Then \({\widetilde{E}}^\circ = \bigcup _{\ell \in K^{d}} {\widetilde{E}}^\circ _\ell \) is the tagged space of open subsets of \(\mathbb {R}^{d_\ell }\) used in Davis (1993, Section 24).

\({\widetilde{E}}^\circ \) separates the space into isolated components of varying dimension. In each component, the Sticky Zig-Zag process behaves differently and essentially as a lower dimensional Zig-Zag process.

Let \(\partial {\widetilde{E}}_\ell ^\circ \) denote the boundary of \(\widetilde{E}_\ell ^\circ \) in the embedding space \(\mathbb {R}^{d_\ell }\) (where the velocity components are constant in \({\widetilde{E}}_\ell ^\circ \)), with elements written \((\ell , x, v)\). Some points in \(\partial \widetilde{E}_\ell ^\circ \) will also belong to the state space \({\widetilde{E}}\) of the Sticky Zig-Zag process, but only the entrance-non-exit boundary points:

(This corresponds to the definition of the state space in Davis (1993, Section 24), only that we use knowledge of the flow).

The remaining part of the boundary is

with \({\widetilde{E}} \cap \Gamma = \varnothing \) so that \(\Gamma \) is not part of the state space \({\widetilde{E}}\). Any trajectory approaching \(\Gamma \), jumps back into \({\widetilde{E}}\) just before hitting \(\Gamma \). If \({\widetilde{E}}^\circ _\ell \) is a singleton \((|{\widetilde{E}}^\circ _\ell | = 1)\), then \( \Gamma _\ell = \emptyset \) and \({\widetilde{E}}_\ell = \widetilde{E}^\circ _\ell \) (atoms).

Lemma A.1

A bijection \(\iota :{\widetilde{E}} \rightarrow E\) is given by

where

Proof

Recall that \(\alpha (x,v) := \{i\in \{1,2,\ldots , d\} :(x, v) \notin {\mathfrak {F}}_i \}\) and \(\alpha ^c\) denotes its complement. First of all, notice that \(\iota ({\widetilde{E}}) \subset E\). Now let \((x,v)\in E\) be given. We construct \(e \in {\widetilde{E}}\) such that \((x,v) = \iota (e)\). If there is at least one \(x_j = 0^\pm \) with \(j \notin \alpha (x,v)\), then take \(e = (\ell , {\widetilde{x}},v) \in \widetilde{E}{\setminus } {\widetilde{E}}^\circ \) as follows (entrance-non-exit boundary): for \(i \in \alpha ^C\) we have \(|\ell _i| = \circ , \, {\widetilde{x}}_i = 0\), while for all \(i \in \alpha \) with \(x_i = 0^\pm \), we have  . Then \(\iota (e) = (x, v)\). Otherwise, \(e =(k({\widetilde{x}}, v), {\widetilde{x}},v)) \in \widetilde{E}^\circ \) (interior of an open set) and \(\iota (e) = (x, v)\) where \({\widetilde{x}}_i = 0\) for all \(i \in \alpha (x, v)\) and \({\widetilde{x}}_i = x_i\) otherwise. \(\square \)

. Then \(\iota (e) = (x, v)\). Otherwise, \(e =(k({\widetilde{x}}, v), {\widetilde{x}},v)) \in \widetilde{E}^\circ \) (interior of an open set) and \(\iota (e) = (x, v)\) where \({\widetilde{x}}_i = 0\) for all \(i \in \alpha (x, v)\) and \({\widetilde{x}}_i = x_i\) otherwise. \(\square \)

Having constructed the state space, we proceed with the process dynamics. Firstly, the deterministic flow (locally Lipschitz for every \(\ell \in K\)) is determined by the functions \(\widetilde{\phi }_\ell :[0,\infty )\times {\widetilde{E}}^\circ _\ell \rightarrow \widetilde{E}^\circ _\ell \) which for the sticky ZigZag process are given by

with \(x_i + v_i t (\mathbbm {1}_{|\ell _i| \ne \circ }), i= 1,2,\ldots ,d\) and determines the vector fields

Sometimes we write \({\widetilde{\phi }}_k(t, x, v) = {\widetilde{\phi }}(t, k, x, v)\) for convenience. Next, further state changes of the process are instantaneous, deterministic jumps from the boundary \(\Gamma \) into \({\widetilde{E}}\)

and random jumps at random times corresponding to unfreezing events

with  if

if  and

and  if

if  , and random reflections

, and random reflections

with

and

Then \(\lambda :{\widetilde{E}} \rightarrow \mathbb {R}^+\)

and a Markov kernel \(\mathcal {Q}:({\widetilde{E}} \cup \Gamma , \mathcal {B}(\widetilde{E} \cup \Gamma )) \rightarrow [0,1]\) by

Proposition A.2

\({\mathfrak {X}}, \lambda , \mathcal {Q}\) satisfy the standard conditions given in Davis (1993, Section 24.8), namely

-

For each \(\ell \in K,\, {\mathfrak {X}}_\ell \) is a locally Lipschitz continuous vector field and determines the deterministic flow \({\widetilde{\phi }}_\ell :{\widetilde{E}}_\ell \rightarrow {\widetilde{E}}_\ell \) of the PDMP.

-

\(\lambda :{\widetilde{E}} \rightarrow \mathbb {R}^+\) is measurable and such that \(t \rightarrow \lambda ({\widetilde{\phi }}_\ell (t, x, v))\) is integrable on \([ 0, \varepsilon (\ell ,x,v))\), for some \(\varepsilon >0\), for each \(\ell ,x,v\).

-

\(\mathcal {Q}\) is measurable and such that \(\mathcal {Q}((\ell , x, v), \{(\ell , x, v)\}) = 0\)

-

The expected number of events up to time t, starting at \((\ell ,x,v)\) is finite for each \(t>0, \forall (\ell ,x,v) \in {\widetilde{E}}\)

To see the latter, remember that for any initial point \((\ell , x, v) \in {\widetilde{E}}\), the deterministic flow (without any random event) hits \(\Gamma \) at most d times before reaching the singleton \((0,0,\ldots ,0)\) and being constant there.

1.2 A.2 Strong Markov property

Proposition A.3

(Part of Theorem 2.2) Let \(({\widetilde{Z}}_t)\) be a Zig-Zag process on \({\widetilde{E}}\) with characteristics \({\mathfrak {X}}, \lambda , \mathcal {Q}\). Then \(Z_t = \iota ({\widetilde{Z}}_t)\) is a strong Markov process.

Proof

By Davis (1993), Theorem 26.14, the domain of the extended generator of the process \(({\widetilde{Z}}_t)\) with characteristics \({\mathfrak {X}}, \lambda , \mathcal {Q}\) is

with

and

The strong Markov property of \(({\widetilde{Z}}_t)\) follows by Davis (1993), Theorem 25.5. Denote by \(({\widetilde{P}}_t)_{t\ge 0}\) the Markov transition semigroup of \(({\widetilde{Z}}_t)\) and let \((P_t)_{t\ge 0}\) be a family of probability kernels on E and such that for any bounded measurable function \(f :E \rightarrow \mathbb {R}\) and any \(t\ge 0\),

Then \((P_t)_{t\ge 0}\) is the Markov transition semigroup of the process \(Z_t = (\iota ({\widetilde{Z}}_t))\). By Rogers and Williams (2000), Lemma 14.1, and since any stopping time for the filtration of \(({\widetilde{Z}}_t)\) is a stopping time for the filtration of \((Z_t)\), \(Z_t\) is a strong Markov process. \(\square \)

1.3 A.3 Feller property

Given an initial point \(\ell , x,v \in {\widetilde{E}}\), let

and define the extended deterministic flow \(\widetilde{\varphi }:{\widetilde{E}} \rightarrow {\widetilde{E}}\) by setting \(\varphi (0, \ell , x, v) = (\ell , x, v)\) and recursively by

with \((\ell ', x',v') = \lim _{t \rightarrow t_{\Gamma _1}}\widetilde{\varphi }_\ell (t, x, v) \in \Gamma \).

Observe that \(t \rightarrow \iota ({\widetilde{\varphi }}(t, \ell ,x,v))\) is continuous on \((E, \tau )\). Define also

Notice that, while \((\ell , x,v) \rightarrow \lambda (\ell ,x,v)\) has discontinuities at the boundaries \(\Gamma \), \((\ell , x,v) \rightarrow \Lambda (\ell ,x,v)\) is continuous. Denote by \(T_1\) the first random event (so excluding the deterministic jumps). Then for functions \(f \in B({\widetilde{E}})\) and \(\psi \in B(\mathbb {R}^+ \times {\widetilde{E}})\), set \(z(t) = (\ell (t), x(t), v(t))\) and define

We have that

with

The Feller property holds if, for each fixed t and for \(f \in C_b( E)\), we have that \((x,v) \rightarrow P_t f(x,v)\) is continuous (and bounded follows easily). This is what we are going to prove below, by making a detour in the space \({\widetilde{E}}\), using the bijection \(\iota \) and adapting some results found in Davis (1993, Section 27), for the process \({\widetilde{Z}}_t\).

Theorem A.4

(Part of Theorem 2.2) \(Z_t\) is a Feller process.

Proof

Take \(f \in C_b({\widetilde{E}})\) such that \(f\circ \iota \in C_b(E)\). Call those functions on \({\widetilde{E}}\) \(\tau \)-continuous. We want to show that \({\widetilde{P}}\) preserves \(\tau \)-continuity. Notice that \(\tau \)-continuous functions on \({\widetilde{E}}\) are such that

For \(\tau \)-continuous functions f and for a fixed t, the first term on the right hand side of (A.1) \((\ell ,x,v) \rightarrow f({\widetilde{\varphi }}(t,\ell ,x,v))\) is clearly continuous. Also the second term is continuous since is of the form of an integral of a piecewise continuous function. Therefore, for any \(t\ge 0, \, \psi (t, \cdot ) \in B({\widetilde{E}})\) and \(\tau \)-continuous function f, we have that \((\ell ,x,v)\rightarrow {\widetilde{G}} \psi (t,\ell ,x,v)\) is continuous. Clearly, the (similar) operator

with \(T_n\) denoting the nth random time, is continuous as well for any fixed \(n,\, t,\, \psi (t, \cdot ) \in B({\widetilde{E}})\) and \(\tau \)-continuous function f. By applying Lemma 27.3 in Davis (1993) we have that for any \(\psi (t, \cdot ) \in B({\widetilde{E}})\)

Finally, if \(\lambda \) is bounded, then we can bound \(P(t\ge T_n)\) by something which does not depend on \((\ell , x,v)\) and goes to 0 as \(n \rightarrow \infty \) so that \({\widetilde{G}}_n \psi \rightarrow {\widetilde{P}}_t f\) uniformly on \(\ell ,x,v \in {\widetilde{E}}\) under the supremum norm. This shows that, for any t, \({\widetilde{P}}_t\) (and therefore \(P_t\)) preserves \(\tau \)-continuity. \(\square \)

Remark A.5

The proof of the Feller and Markov property follow similarly for the Bouncy Particle and the Boomerang sampler.

1.4 A.5 The extended generator of \(Z_t\)