Abstract

Feedback is a key factor in helping individuals to self-regulate their learning behavior. Informative feedback, as a very basic form of feedback informing learners about the correctness of their answers, can be framed in different ways emphasizing either what was correct or what must be improved. The regulatory focus theory describes different strategic orientations of individuals towards goals, which may be associated with different effects of different informative feedback types. A promotion orientation describes the preference for approaching positive outcomes, while a prevention orientation describes the preference for avoiding negative ones. Applied to the context of informative feedback in self-regulated e-learning environments, we predict that regulatory fit, defined as the congruence of individuals’ regulatory orientations and framed feedback, positively affects learning persistence and performance. In two experiments, we assessed individuals’ regulatory orientations and experimentally varied framed feedback in samples of university students preparing for exams with an e-learning tool (N = 182, experiment 1; N = 118, experiment 2) and observed actual learning behaviors. Using different operationalizations of regulatory-framed feedback, we found statistically significant regulatory fit effects on persistence and performance in both experiments, although some remain insignificant. In experiment 2, we additionally tested ease of processing as a mechanism for regulatory fit effects. This way, we expand the literature on regulatory fit effects and feedback on actual learning behavior and provide evidence for the benefits of adaptive learning environments. We discuss limitations, especially regarding the stability of regulatory fit, as well as future directions of research on regulatory-framed feedback.

1 Introduction

Is the pursuit of success the same as the avoidance of failure? It depends. At first glance, these two perspectives seem to be two sides of the same coin. In particular, if looking at one specific outcome it seems evident that this is only a matter of perspective. A student might answer three out of six exercises correctly, which is the same as answering three out of six incorrectly. While there is no objective difference in the outcome of both perspectives, research in social psychology has investigated the psychological processes underlying the views from the two different perspectives. Specifically, the regulatory focus theory (Higgins, 1997, 1998) describes two motivational systems: a promotion focus represents the presence or absence of positive outcomes while a prevention focus emphasizes the presence or absence of negative outcomes. The term regulatory orientation describes individuals’ (more or less stable) preferences for one of these two different views. Regulatory orientations are humans’ perspectives on how to get along in this world; they can drive individuals’ goal striving behavior, especially in situations where differences in regulatory foci are salient. Higgins (2000) further developed this framework towards the regulatory fit theory stating that situational cues framed with regulatory foci that match personal regulatory orientations foster goal pursuit. Moreover, the construct has raised attention among educational researchers in the last years (e.g. Hodis, 2018, 2020; Rosenzweig & Miele, 2016; Shin et al., 2017; Shu & Lam, 2016).

Based on the knowledge of the power of feedback on learning achievements (Hattie, 2009; Hattie & Timperley, 2007) we want to investigate the effects of regulatory fit on learning persistence and learning performance of students preparing for final exams. We will use an online learning environment that provides practice exercises for final exams to university students. We want to test the power of those regulatory fit effects in actual achievement-related high-stakes settings. By gathering data of learners practicing personally meaningful content (or at least content to fulfill their goals of passing the exams) for a longer period and not using artificial content and settings, we provide high ecological validity. Furthermore, with our second experiment, we want to further investigate the underlying processes of regulatory fit effects and their connection with fluency (Alter & Oppenheimer, 2009). By using a different manipulation of regulatory focus in the second experiment, we also test the robustness of the assumed regulatory fit effects in an e-learning environment in terms of generalized replicability (Schwarz & Strack, 2014; Stroebe & Strack, 2014).

By expanding the literature on regulatory fit in e-learning environments and testing the effects on actual learning outcomes we can make claims on the potential of adaptive e-learning methods (Shute & Towle, 2003).

2 Theory

2.1 A differential perspective on the power of feedback

Following meta-analyses from the educational field, feedback has a strong impact on learning outcomes (Hattie, 2009). However, whether this effect is always positive or might also be negative has been debated (Hattie & Timperley, 2007). Despite a positive main effect of d = 0.41, Kluger and DeNisi (1996) revealed in their meta-analysis that one-third of the 607 analyzed effect sizes were negative. These findings call for a closer look at factors potentially influencing feedback effects on learners’ persistence and performance. While the traditional literature has focused on the overall effectiveness of different feedback types, we emphasize a person-environment approach to explain differences in feedback effects. Our underlying rationale stems from psychological research from different domains based on the idea of beneficial effects depending on the fit between person and situation (Cable & Edwards, 2004; Kristof-Brown et al., 2005).

When investigating classroom settings, researchers as well as practitioners often observe that certain subgroups benefit more or less from certain interventions than others. Cronbach and Snow (1969) called this phenomenon aptitude-treatment-interactions. For example, more structured and teacher-centered learning conditions are more beneficial for learners with lower abilities than for learners with higher abilities (Snow, 1989). However, Corno and Snow (1986) concluded that aptitude-treatment-interaction research suffers from two major setbacks. First, aptitude-treatment-interactions are hard to detect because interaction effects require more statistical power than main effects to reach statistical significance (Cohen et al., 2003). Second, and in our view even more important, in classroom settings with multiple learners each having individual needs, adapting to every single learner is often not possible. As a result, aptitude-treatment-interactions may exist in theory but be hard to establish within real learning settings. Nevertheless, ongoing digitalization and the rise of e-learning tools provide emerging technical opportunities for more individualized learning environments (Shute & Towle, 2003).

Based on general findings on feedback effects (Hattie, 2009; Hattie & Timperley, 2007) and interindividual differences in the effectiveness of educational treatments (Corno & Snow, 1986; Cronbach & Snow, 1969) we aim to further investigate differential effects of informative feedback.

Informative feedback is one of the most basic types of feedback; that is, it merely states whether a task has been completed successfully or not. It can be used as information about the current competence level and whether further learning activities are needed. But it is interesting to note that the association between task success and motivation to continue is rather complex. Failing at all exercises of a test because they are too difficult might be as demotivating as solving exercises that are too easy. Receiving informative feedback implying that the task difficulty matches individual skills is optimal for motivation (cf. Broadhurst, 1959; Endler et al., 2012). Furthermore, informative feedback is the foundation of corrective feedback about the task and its correct solutions. Even though they are rather basic, such corrective feedback types have a meaningful impact on learning outcomes (Hattie & Timperley, 2007; Kluger & DeNisi, 1996). A more recent meta-analysis also revealed small to medium-sized effects of reinforcement or punishment (providing solely information on the task; d = 0.24) and corrective feedback types (d = 0.46), but also their large heterogeneity (Wisniewski et al., 2020). This general heterogeneity in the effectiveness of feedback interventions has been addressed by Kluger and DeNisi (1996, 1998). Within their feedback intervention theory, they propose that the effectiveness of feedback depends on learners’ uptake of the presented information. Therefore, feedback needs to be meaningful for learners to guide behavior, and subsequent research has sought to increase the beneficial power of feedback on learning outcomes by suggesting more elaborated feedback types. The meaningfulness of feedback is dependent on the characteristics of the learner and the situation.
For example, Brunot and colleagues (1999) reported that high-achievers were more self-focused after receiving feedback about failures compared to success feedback, while low-achievers were more self-focused after feedback about success compared to feedback on failures. However, little attention has been paid to the question of whether moderators explain differences in the effects of the feedback sign of informative feedback (success/failure) on individual motivation (Van-Dijk & Kluger, 2004). Therefore, we aim to investigate whether the effectiveness of informative feedback, as one of the easiest feedback types to implement in digital learning environments, can be improved.

2.2 Regulatory-framed feedback

As outlined, we take a differential perspective on feedback intervention and will focus on a theory describing strategic orientations guiding human behavior, which might be suitable for predicting differential reactions to different forms of informative feedback. As such, the regulatory focus theory (Higgins, 1997, 1998) proposes two different motivational systems. A promotion focus describes tendencies to approach positive outcomes and focuses on the pursuit of aspirations, hopes, and wishes (strong ideals). A prevention focus describes tendencies to avoid negative outcomes and emphasizes the fulfillment of obligations, duties, and responsibilities (strong oughts). These definitions provide distinctions based on two different dimensions. First, individuals with different regulatory foci emphasize different means by either focusing on ideals or oughts. Besides this self-guide definition, the reference-point perspective distinguishes promotion and prevention by approach and avoidance. The latter definition applies to the framing of feedback. In terms of regulatory focus, one can describe end states by a gain-nongain perspective (promotion) or a loss-nonloss perspective (prevention). In particular, when preparing for an exam, a promotion focus leads individuals to solve as many tasks correctly as possible (matching desired end-states) while a prevention focus emphasizes making no mistakes and not answering items incorrectly (mismatching undesired end-states).

Individuals develop regulatory orientations as (rather stable) manifestations of regulatory foci. Higgins (1997) took a developmental perspective by explaining those manifestations as a result of child-caretaker interactions in early childhood: Children who receive parental styles of emphasizing danger avoidance and are told to be careful are more likely to develop stronger prevention orientations. Children who are encouraged to overcome obstacles and pursue their dreams are more likely to develop stronger promotion orientations.

Both regulatory orientations are stated to be independent systems. Empirical evidence from different measurements of regulatory orientations supports this notion. Studies using different measurements of regulatory orientations like the regulatory focus questionnaire (RFQ, Higgins et al., 2001) and the general regulatory focus measure (GRFM, Lockwood et al., 2002) have in common that promotion and prevention subscales are not strongly negatively correlated (as a unidimensional approach of promotion and prevention as endpoints of one continuum would indicate) and may, in fact, be either unrelated or even positively associated (Haws et al., 2010). Therefore, one should be careful with collapsing a promotion and prevention focus. However, some researchers decided to do so, to investigate the effects of predominant relative regulatory orientations (e.g. Avnet & Higgins, 2006; Förster et al., 1998; Keller & Bless, 2006; Louro et al., 2005). We also want to highlight that regulatory orientations are described as domain-general in psychological literature. However, these are stated to be especially relevant in achievement and performance domains (Lanaj et al., 2012).

Research on regulatory orientations demonstrated that they influence human perception and behavior in various fields. Higher discrepancies between actual and ideal selves (promotion) relate to dejection-related emotions like disappointment, dissatisfaction, and sadness while higher discrepancies between actual and ought selves (prevention) relate to agitation-related emotions like fear, threat, and restlessness (Higgins, 1987; Higgins et al., 1986). Brockner and colleagues (2002) demonstrated that higher promotion success (congruence of actual and ideal self) relates to higher accuracy of disjunctive probability estimates and higher prevention success (congruence of actual and ought self) relates to higher accuracy of conjunctive ones. Additionally, according to a meta-analysis from the field of organizational psychology by Gorman and colleagues (2012), a promotion focus predicts task performance as well as affective, continuance, and normative commitment and is positively associated with organizational citizenship behavior, while a prevention focus positively relates to normative and continuance commitment. Moreover, Förster and colleagues (1998) revealed that both chronic regulatory orientation as well as manipulated situational regulatory states affect individuals’ approach and avoidance tendencies. In particular, they measured arm flexion and extension as an indicator of approach-avoidance. A chronic or activated promotion focus is related to steeper approach gradients, while a chronic or activated prevention focus is related to steeper avoidance gradients. In a more recent study from educational psychology, Rosenzweig and Miele (2016) revealed higher performance of individuals with a higher prevention orientation on laboratory tasks as well as college midterms and final exams and concluded that holding a prevention orientation is adaptive for performance in low- and high-stakes situations. 
On the other hand, holding a promotion orientation is associated with higher expectancies about success than holding a prevention focus (Hodis, 2018, 2020).

Besides those effects of chronic regulatory orientations, other studies relied on the manipulation of regulatory foci or individuals’ response towards stimuli framed with a promotion or prevention focus, especially in terms of approach-avoidance (Keller & Bless, 2006; Shah et al., 1998; Shin et al., 2017). A common manipulation of regulatory focus is the framing of instruction of tasks. For example, Keller and Bless (2006) varied the instruction of math tests in terms of gains (for correct answers) and losses (for incorrect answers) and Shin et al. (2017) informed participants of a stroop task either about successes (promotion condition) or failures (prevention condition).

2.3 Regulatory fit—when feedback fits orientations

To answer the question of whether it is better to tell learners that they have already answered three out of six tasks correctly or that they only failed on three out of six, it is necessary to consider the development of regulatory focus literature. Shah and colleagues (1998) revealed that performance in anagram tasks is higher when the regulatory framing of an incentive (earning an extra dollar if 90% of the words are identified as a promotion frame and not losing an extra dollar by not missing more than 10% of the words) meets individuals’ chronic focus, i.e., their regulatory orientation. The regulatory fit literature emphasizes those beneficial effects of framing situational cues towards individuals’ preferences. In particular, Higgins (2000) claimed that individuals gain “value from fit” in three central manners: under regulatory fit (a) they are more inclined towards goals, (b) show stronger goal pursuit, and (c) their respective feelings about choices made are more positive. Aaker and Lee (2006, p. 15) condensed this under the term: “it-just-feels-right”.

In the last two decades, many studies underlined the beneficial effects of regulatory fit. A meta-analysis showed overall medium-sized effects on evaluation, behavioral intention, and behavior itself (Motyka et al., 2014). Regulatory fit supports individuals’ self-regulation in many fields, such as in health-related settings. For example, under regulatory fit, individuals show higher physical endurance on a handgrip exercise, choose an apple as a healthy snack and resist the temptation of a chocolate bar, and are more willing to seek medical examination (Hong & Lee, 2008). Also, in educational settings, regulatory fit has a positive impact on learners’ self-regulation. In one of the classic studies, Spiegel and colleagues (2004) provided indirect evidence for bolstered motivation under regulatory fit. They asked participants to write optional reports in their leisure time and assessed how many participants handed in those reports. Participants in the promotion condition were asked to imagine conditions (where/when/how) that would be favorable for task completion, while participants in the prevention condition were asked to imagine conditions unfavorable for task completion. Under regulatory fit, if the strategic means of the instruction matched individuals’ regulatory orientations, participants were 50% more likely to hand in their reports. However, not only goal striving and persistence were bolstered, but also performance. Keller and Bless (2006) stated that regulatory fit influences cognitive test performance. They provided high school students with tests and framed the instruction. Regulatory fit improved students’ performance as predicted. Students with a higher relative promotion focus performed better with a promotion instruction than a prevention instruction. Also, research from educational psychology already distinguished induced regulatory fit effects on persistence and performance. 
Shu and Lam (2016) induced regulatory fit (or misfit) by giving framed feedback about success and failure after a promotion or prevention priming and revealed both higher task persistence as well as higher performance under regulatory fit.

With our present research, we aim to investigate the beneficial effects of regulatory fit on persistence and performance in e-learning environments, establishing high ecological validity of regulatory fit effects in the field and underlining the emerging opportunities of adaptive e-learning environments, where treatments and instructions fit individuals’ abilities and needs (Corno & Snow, 1986; Cronbach & Snow, 1969; Shute & Towle, 2003). We want to highlight that we consider this approach of fostering self-regulation via regulatory fit as especially promising in situations where learning activities are mainly dependent on self-regulation and not external drivers. This assumption is based on the distinction between weak and strong situations and their consequences for interindividual differences in behavior (Mischel, 1968). While the situational strength of strong situations allows for little individual variance in behavior, the opposite holds true for weak situations. For learning activities, this implies that compared to highly structured situations (like sitting in class or having little time left to prepare for exams), in weakly structured situations where the perceived pressure to execute learning activities is low, fostering self-regulation with regulatory fit might be especially meaningful. This is also in line with Steel’s temporal motivation theory (2007), stating that interindividual differences predict the execution of goal striving behavior if time to deadlines is long.

2.4 Persistence in e-learning under regulatory fit

Initiating and maintaining learning activities is a self-regulatory challenge (Klingsieck, 2013; Steel, 2007). A large body of literature on regulatory fit effects from different domains claimed regulatory fit strengthens goal pursuit (Hong & Lee, 2008; Spiegel et al., 2004). Prior research also investigated the mechanisms of this motivational effect. Freitas and Higgins (2002) state that under regulatory fit, individuals perceive more enjoyment during goal-directed actions. In two experiments with different manipulations of regulatory foci, participants under regulatory fit reported higher anticipated enjoyment. In a third experiment, participants under regulatory fit evaluated a task as more interesting and enjoyable than those under misfit, and even the willingness to repeat the task was higher.

In the present paper, we aim to transfer the findings of regulatory fit fostering self-regulation via instructions and framings to the feedback context of e-learning and extend the literature on differential effects of feedback (Hattie & Timperley, 2007; Kluger & DeNisi, 1998) with evidence on actual learning behavior compared to controlled laboratory settings. Hence, we predict higher learning persistence in e-learning environments if additionally provided informative feedback about success and failure is regulatory-framed towards own chronic orientations (Freitas & Higgins, 2002; Higgins, 2000; Spiegel et al., 2004). Individuals with a high chronic promotion orientation in terms of a high strategic orientation towards approaching positive outcomes should benefit more from feedback highlighting success and opportunities for success improvement than feedback emphasizing avoided or actual failures. The opposite holds for individuals with a high prevention orientation. As we respect the independence of regulatory orientations, we propose separate hypotheses for both regulatory orientations:

H1a

Promotion (prevention) framed feedback will enhance (decrease) learning persistence for learners with a higher chronic promotion orientation.

H1b

Prevention (promotion) framed feedback will enhance (decrease) learning persistence for learners with a higher chronic prevention orientation.

2.5 Performance in e-learning under regulatory fit

We consider regulatory fit to not only bolster persistence but also performance in e-learning environments. This focus on rather cognitive outcomes is also a topic of the regulatory fit literature (Keller & Bless, 2006; Shah et al., 1998; Shu & Lam, 2016). For example, individuals’ performance on anagram tasks was higher when task incentives were framed towards their own regulatory orientations (Shah et al., 1998). Keller and Bless (2006) conducted two experiments testing the cognitive effects of regulatory fit on high school students. In their first study, they manipulated the instruction of a math test by either telling the students that the test was designed to indicate exceptionally strong math abilities (promotion frame) or that the test would indicate exceptionally weak math abilities (prevention frame). Students receiving instructions fitting with their predominant regulatory orientation performed better on the math test. In the second experiment, they conceptually replicated their results using a gain–loss framing as manipulation. In the promotion condition, they told students that they would receive one point for each correct answer. In contrast, in the prevention condition, they were also told that there would be one point per correct answer, but for every wrong or missing answer, one point would be detracted. Again, relative regulatory fit predicted performance in this study with higher performance under regulatory fit than under misfit. However, little is known about the underlying processes explaining those regulatory fit effects on performance. Keller and Bless (2006) discussed two plausible explanations. The higher performance under regulatory fit might be a distal outcome of the fostered persistence and self-regulation. However, there is an alternative—more cognitive—explanation of fit effects on performance: Instructions in line with own preferences might be easier to process and therefore more capacities for task completion might be available. 
There is distal evidence for such a general underlying process of easier processing of information in line with own preferences (Macrae et al., 1993; Stangor & McMillan, 1992) and this explanation might be linked to the more recent cognitive load theory (Sweller, 2011). The cognitive load theory distinguishes intrinsic cognitive load (load inherent to the task), extraneous cognitive load (load due to poorly designed information presentation), and germane cognitive load (load of a deeper processing of instructional messages). We consider this a possible explanation because mismatching information is an additional source of extraneous cognitive load, which, according to the cognitive load theory, reduces performance due to higher attention split (Ayres & Sweller, 2005).

Taken together, the work by Keller and Bless (2006) impressively demonstrated the power of regulatory fit effects on performance in classroom settings but has some shortcomings. First, they collapsed both regulatory orientations, which disregards the independence of the two (Haws et al., 2010; Summerville & Roese, 2007). Second, the manipulation in the second experiment did not only frame regulatory focus. The manipulation included a conceptual difference between getting points in the promotion condition and getting points but also losing points in the prevention condition. We consider this approach to confound a promotion focus with a maximizing strategy and a prevention focus with an optimizing strategy. Third, though they established high ecological validity by studying students in classroom settings, the students were aware of the experimental situation as an experimenter entered the classroom for the studies, and this was not a high-stakes situation of actual exams.

We aim to address these shortcomings by transferring the results of Keller and Bless (2006) to the e-learning context of actual exam preparation with practice exercises. We consider the cognitive explanation of enhanced cognitive resources under regulatory fit and, even more plausibly, the straining of resources due to misfit as a possible underlying mechanism (Sweller, 2011). However, based on the rationale of reduced cognitive resources due to interference of misfitting information, we assume this effect only for items presented together with the feedback. Therefore, we predict fit effects—again separate ones for both regulatory orientations—for those exercises introduced with feedback simultaneously:

H2a

Promotion (prevention) framed feedback will enhance (decrease) learning performance for learners with a higher chronic promotion orientation.

H2b

Prevention (promotion) framed feedback will enhance (decrease) learning performance for learners with a higher chronic prevention orientation.

3 Experiment 1

3.1 Methods

With our first experiment, we aim to demonstrate regulatory fit of feedback effects on learning persistence and performance in an e-learning environment. We implemented feedback with regulatory framing towards approach or avoidance in an existing online-learning environment, which has already been used for investigating fit effects of feedback with different comparison standards (Janson et al., 2022). This e-learning tool provides practice exercises for university students to prepare for final exams. It is used as an addition to existing teaching formats like lectures and tutoring and relies on the general testing principle, which proposes practice testing as one of the most effective learning methods (Dunlosky et al., 2013; Roediger & Karpicke, 2006).

3.1.1 Sample

One-hundred-and-eighty-two users of the e-learning software participated in the first experiment (24 were excluded due to a failed attention check). Participants’ mean age was 21.69 years (SD = 4.21) and 150 were female (30 male, 2 other). Participants were psychology undergraduate students using the learning software either for preparing for a statistics exam or an exam covering topics of diagnostics and differential psychology.

3.1.2 Design

We investigated fit effects on learning persistence and learning performance within the e-learning software. To evaluate the proposed fit effects, we assessed the chronic regulatory orientations (promotion and prevention) of our participants as one independent variable and implemented a manipulation of the feedback as another. We manipulated the regulatory-framed feedback within person for two reasons. First, we wanted to obtain maximum power for our design as we expected noisy data due to field settings. Second, we dismissed a between-subject design due to ethical concerns while investigating actual learning activities of students preparing for final exams.

3.1.3 Materials

3.1.3.1 Learning software

We used an existing online e-learning environment for our experiment (Siebert & Janson, 2018) providing students with practice exercises for self-regulated exam preparations. The e-learning software combines practice exercises with numerous parallel item versions with an adaptive algorithm that selects exercises learners are struggling with, based on the individual learning history, to maximize the power of the testing effect and distributed learning (Dunlosky et al., 2013). The software provides several types of formative feedback. First, corrective feedback on the item is given after each exercise (e.g., an adequate approach for arithmetic problems). Second, the software provides a total score of learned content based on the learning history. Third, after accomplishing certain milestones for each chapter of a learning package, a medal is given to the learner (ranging in five steps from bronze to diamond).

3.1.3.2 Regulatory orientations assessment

We measured the regulatory orientations of participants via a web-based German translation of the GRFM (Lockwood et al., 2002), which was also used by Keller and Bless (2006). It measures promotion and prevention with nine items per subscale. We decided to use the GRFM as it has several advantages for our purposes compared to other measurements (Haws et al., 2010). First, it emphasizes the approach-avoidance definition of regulatory focus, which we are concentrating on. Second, the items are tailored for usage in the academic context. The scales consist of items such as “I typically focus on the success I hope to achieve in the future” and “my major goal in university right now is to achieve my academic ambitions” to measure a promotion orientation and “in general, I am focused on preventing negative events in my life” and “my major goal in school right now is to avoid becoming an academic failure” to assess a prevention orientation. We collected answers on a five-point Likert scale using (1) “strongly disagree” and (5) “completely agree” as endpoints.

3.1.3.3 Regulatory-framed feedback

We implemented pop-up feedback during the learning sessions. The additional feedback provided information on the current position between the last achieved medal and the upcoming medal for the respective chapter. As illustrated in Fig. 1, a bar indicated the position of the learner. In the promotion condition, the space towards the next medal was highlighted. The corresponding text told the learner, for example, “you already completed 75% of the chapter [chapter name]. Keep trying to solve the tasks correctly to reach the platinum medal”. In the prevention condition, the bar highlighted the space back to the already achieved medal. The corresponding text told the learner, for example, “you already completed 75% of the chapter [chapter name]. Keep trying not to answer tasks wrong to not fall back to silver”. This feedback was presented as a pop-up on the learning screen after each fifth item, provided that the learner had covered more than 50% of the way to the next medal (see Footnote 1). We pretested the feedback with an additional sample of 20 pretesters. They rated the regulatory framing of the feedback types on a unidimensional scale (“this type of feedback predominantly emphasizes…”) with the endpoints “avoiding negative outcomes” (1) and “approaching positive outcomes” (7). We observed a statistically significant difference in the ratings of the promotion frame (M = 6.5; SD = 1.19) and the prevention frame (M = 1.5; SD = 0.89), t(19) = 13.516, p < .001, d = 4.78.

Regulatory-framed feedback in experiment 1. Illustrations of the regulatory-framed feedback pop-ups in the learning software. In the promotion condition (left) the part of the progress bar towards the next medal (light cyan [online]; light grey [print]) flashed in light cyan and white. In the prevention condition (right) the part of the progress bar towards the last medal (light cyan [online]; light grey [print]) flashed in dark and light cyan

3.1.3.4 Learning outcomes

We used logfiles of the e-learning software to observe the effects of regulatory fit on learning outcomes. To measure learning persistence, we checked whether learners aborted the learning activities after feedback was presented. To this end, we coded whether the feedback presented was the last feedback seen in the respective learning session. A learning session was defined as ongoing learning activities until a user actively quit the session or was inactive for more than 10 min. To measure the direct impact of the feedback on learning performance, we collected data from the items answered directly after the presented feedback. For each exercise, the software automatically checked whether the item was solved correctly or not.
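The coding of session abortion described above can be sketched as follows. This is a minimal illustration and not the actual logfile processing used in the study: the event structure (a `timestamp` in seconds and a `kind` field), the `quit` event, and both function names are hypothetical. Only the 10-minute inactivity rule and the "last feedback seen in the session" criterion are taken from the text.

```python
from typing import Dict, List

INACTIVITY_LIMIT_S = 10 * 60  # a session ends after more than 10 min of inactivity


def split_sessions(events: List[Dict]) -> List[List[Dict]]:
    """Split one learner's time-ordered events into learning sessions.

    Each event is a dict with the hypothetical keys 'timestamp' (seconds)
    and 'kind' ('item', 'feedback', or 'quit').
    """
    sessions: List[List[Dict]] = []
    current: List[Dict] = []
    for event in events:
        gap_too_long = (
            bool(current)
            and event["timestamp"] - current[-1]["timestamp"] > INACTIVITY_LIMIT_S
        )
        if gap_too_long:
            sessions.append(current)
            current = []
        current.append(event)
        if event["kind"] == "quit":  # an active quit also closes the session
            sessions.append(current)
            current = []
    if current:
        sessions.append(current)
    return sessions


def code_abortion(session: List[Dict]) -> List[int]:
    """Return one code per feedback instance: 1 if it was the last feedback
    seen in the session, 0 otherwise."""
    feedback_idx = [i for i, e in enumerate(session) if e["kind"] == "feedback"]
    return [1 if i == feedback_idx[-1] else 0 for i in feedback_idx]
```

Each resulting 0/1 code corresponds to one feedback instance, which matches the unit of observation used in the analyses on learning persistence.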

3.1.4 Procedure

We invited all users of the learning software to participate in the evaluation of the new feedback system introduced to the software at their first login. After giving informed consent to participate in exchange for course credits, participants answered our online questionnaire including the assessment of regulatory orientations. Afterward, participants used the software for individual exam preparation over a period of up to eight weeks. Every time the software presented the feedback it randomly selected either the promotion or the prevention framed version.

3.2 Results

3.2.1 Preliminary analyses and descriptives

First, we checked the internal consistency of the regulatory orientation assessment. Both the prevention (α = .85) and the promotion (α = .75) items yielded good reliability. The promotion and prevention scales were slightly negatively correlated, r = -.15, p = .04. Second, we inspected the data of the learning software. Learning activities were extremely skewed, with most activities taking place in the week before the exam. We decided to include all data until two weeks before the exam. This rationale is based on the principle of fewer interindividual differences in strong situations (Mischel, 1968) and, more specifically, on temporal motivation theory, which states that procrastination tendencies decrease as the time until the exam becomes shorter (Steel, 2007). We therefore did not rely on data for which the situational strength of the upcoming exams likely drove learning activities more than potential self-regulatory systems like regulatory fit. Still, the dataset contains learning activities of on average 12.23 h (SD = 10.74) per learner, and each learner performed on average 623.89 practice exercises (SD = 610.87).

3.2.2 Main analyses

3.2.2.1 Analytical procedure

We conducted stepwise multi-level binomial regressions using the lme4 package (Bates et al., 2014) for R. Each instance of feedback was a unit of observation on L1 clustered in participants on L2. We analyzed the effects of feedback type (L1) on persistence (L1) and performance (L1) dependent on participants' regulatory orientation (L2). For all analyses, we selected an alpha-level of 5% (one-tailed). We entered type of feedback as an effect-coded variable (prevention: −1; promotion: 1) and centered all continuous variables. We also included several covariates to reduce the noisiness of the field data. We included time till the exam as a control variable to further control for intensified learning activities approaching the end of the semester. Also, as the motivation to maintain learning activities depends on task difficulty and performance on items is best predicted by former performance, we included the proportion of correct items during the session (p) as a covariate as well as the ID of the respective item as an additional clustering variable. According to the existing literature (Broadhurst, 1959; Endler et al., 2012), medium task difficulty is optimal for motivation. Thus, for the analyses on learning persistence, we transformed this proportion as p*(1 − p), which yields the highest values for medium solution probabilities and lower values for both high and low solution probabilities. Furthermore, we included the session length for the analyses on persistence (as abortion is more likely the longer a session continues) and the amount of time spent on the item (item processing time) for the analyses on performance. For both regressions, we also considered the already achieved medal presented in the feedback (level) and the progress until the next medal (progress) as possible covariates to further reduce error variance. The results of our regression analyses are displayed in Table 1.Footnote 2
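The predictor preparation described above can be sketched in a few lines. This is only an illustration of the coding steps: the variable names and example values are hypothetical, grand-mean centering is an assumption (the text only states that continuous variables were centered), and the multi-level models themselves, which were fitted with lme4 in R, are not reproduced here.

```python
from statistics import mean
from typing import List

# Effect coding as stated in the text
EFFECT_CODES = {"prevention": -1, "promotion": 1}


def center(values: List[float]) -> List[float]:
    """Center a continuous predictor at its grand mean (assumed variant)."""
    m = mean(values)
    return [v - m for v in values]


def difficulty_term(p_correct: float) -> float:
    """p * (1 - p): maximal at p = 0.5 and smaller towards 0 and 1."""
    return p_correct * (1.0 - p_correct)


# Hypothetical values for three feedback instances
feedback_types = ["promotion", "prevention", "promotion"]
promotion_scores = [3.0, 4.0, 5.0]
session_p_correct = [0.5, 0.9, 0.1]

coded_feedback = [EFFECT_CODES[t] for t in feedback_types]
centered_promotion = center(promotion_scores)
difficulty = [difficulty_term(p) for p in session_p_correct]
```

The transformed difficulty term treats very easy sessions (p = 0.9) and very hard sessions (p = 0.1) alike, which reflects the assumption that medium difficulty is optimal for motivation.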

3.2.2.2 Learning persistence

Across the N = 5632 instances of feedback, we found no statistically significant main effects of feedback type or the scaled regulatory orientations on session abortion. Out of our proposed fit effects, we found a statistically significant interaction of promotion orientation and feedback type using one-tailed testing, bpromotion × feedback = -0.124, p = .057.Footnote 3 This interaction indicated that session abortion was less likely for persons with a higher promotion orientation if promotion feedback was presented, which supported H1a. However, we observed no statistically significant interaction of prevention orientation and feedback type, bprevention × feedback = -0.076, p = .246, resulting in no support for H1b.

3.2.2.3 Learning performance

Because some learners aborted sessions when the feedback was presented, task performance after feedback was missing in these cases, and the sample size of our analyses on the exercises after feedback was slightly reduced (N = 5379). Again, only the interaction of promotion orientation and feedback type was statistically significant and in the stated direction, bpromotion × feedback = 0.135, p = .066. With a higher promotion orientation, the probability of solving an exercise was higher if promotion feedback was presented, supporting H2a. The interaction of prevention orientation and feedback type remained statistically insignificant, bprevention × feedback = 0.066, p = .135, not supporting H2b.

3.3 Discussion

With our first experiment, we provided first evidence for regulatory fit effects of feedback in e-learning environments. The fit effects were observable for both outcomes, persistence and performance. However, we were only able to reveal fit effects for a promotion orientation and not for a prevention orientation. As illustrated in Fig. 2, learning aborts were more likely for persons with a high promotion orientation when prevention feedback was presented, but the interaction also indicated that for persons with a low promotion orientation, abort tendencies were higher under promotion feedback. An inverted pattern was observable for learners’ success probabilities after presented feedback. Namely, more exercises were solved correctly after promotion feedback compared to prevention feedback for individuals with high promotion orientations, while the opposite held true for learners with low promotion orientations. Our results underline the necessity to investigate promotion and prevention separately. We observed a disordinal interaction of promotion orientation and feedback type. By collapsing both, for example, using a relative regulatory focus computed as the difference of promotion and prevention orientations (as used by Avnet & Higgins, 2006; Förster et al., 1998; Keller & Bless, 2006; Louro et al., 2005), we would not have been able to disentangle whether a fit effect of relative regulatory focus is driven by both regulatory foci or solely by one. Despite these first findings of regulatory fit effects on learning persistence and performance, the first experiment had shortcomings. The framed feedback was very abstract, giving feedback on the overall learning progress on chapters. This abstract feedback might have been too uninformative for learners or, in particular, too unrelated to current learning activities. The lack of empirical support for prevention fit could also be caused by the decision to show the feedback only if the chapter progress was higher than 50%. Consequently, approaching a positive outcome was more accessible than the avoidance of a negative outcome. Moreover, the first experiment solely focused on the outcomes of fit effects but not on underlying processes.

Therefore, we continued our research with two goals. First, we aimed to conceptually replicate our findings with a different operationalization of regulatory framing to be able to generalize the findings across feedback types. We wanted the feedback to give information on a more concrete level as referring to single items might be more valuable for learners even if it is more retrospective. In contrast to the first experiment, in which the feedback was only presented if the progress was higher than 50%, the feedback should be presented in a constant sequence independent of the current progress. This way we could check whether the observed dominance of promotion fit effects in the first experiment was due to design or conceptual differences between the two regulatory foci.

Second, we aimed to investigate the underlying processes of regulatory fit effects in our context. This way, we could expand the body of literature on regulatory fit. Freitas and Higgins (2002) already connected regulatory fit effects to higher task enjoyment and Aaker and Lee (2006) describe regulatory fit as “it-just-feels-right”. This is closely related to the construct of perceived easiness of information processing (Schwarz et al., 1991). Alter and Oppenheimer (2009) provided an overview of different processes which influence the experienced ease of processing. Altering perceptual fluency, for example, with different levels of contrast, is a common method of manipulating fluency (Reber et al., 1998). In our second experiment, we investigated whether processing ease is an underlying mechanism of regulatory fit effects. If regulatory fit enhances persistence via task enjoyment and willingness to repeat the task (Freitas & Higgins, 2002) and is also based on a meta-cognitive experience of feeling right (Aaker & Lee, 2006) this effect should rely on fluency or a fluency-like mechanism.

Instead of measuring processing ease, we decided to directly manipulate perceptual fluency as a manifest factor related to the perceived ease of processing (Alter & Oppenheimer, 2009). Moreover, this moderation-of-process design has more causal strength (Spencer et al., 2005). The general rationale is based on the following principle: If X affects Y via M, the traditional approach to test the mediation is to follow Baron and Kenny (1986) and to test whether X affects Y as well as M and if M affects Y (and to statistically check whether there is full mediation, i.e., no residual association of X and Y not explained by M). However, this approach is limited as it is correlational and the measurement per se can be seen as an additional manipulation. Therefore, Spencer et al. (2005) suggest a completely new moderation-of-process approach. The logic behind this approach is as follows: To test whether M is the psychological process in the causal chain between X and Y, a manipulation of M is recommended. If X affects Y via M, the effect of X on Y should be dependent on manipulations of M. In particular, if higher X leads to higher Y via higher M, an experimental reduction of M should diminish the effects of X on Y. Therefore, for the design of our second experiment, we decided to experimentally manipulate perceptual fluency in order to test the (assumed mediating) role of ease of processing in the process between regulatory fit and learning outcomes.
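The logic of the moderation-of-process approach can be illustrated with a deliberately simple, deterministic toy model. It is not part of the reported analyses: the linear form, the path weights `a` and `b`, and the `damping` parameter are assumptions chosen purely for illustration. X stands for regulatory fit, M for the experienced ease of processing, and Y for a learning outcome.

```python
def mediator(x: float, disfluent: bool, a: float = 0.8, damping: float = 0.75) -> float:
    """Hypothetical process M produced by X.

    Under the disfluency manipulation, the share `damping` of the
    X -> M path is removed (an assumption of this toy model).
    """
    path = a * (1.0 - damping) if disfluent else a
    return path * x


def outcome(x: float, disfluent: bool, b: float = 0.5) -> float:
    """Hypothetical outcome Y that depends on X only through M."""
    return b * mediator(x, disfluent)


def effect_of_x(disfluent: bool) -> float:
    """Change in Y when X increases by one unit."""
    return outcome(1.0, disfluent) - outcome(0.0, disfluent)


effect_fluent = effect_of_x(disfluent=False)    # a * b
effect_disfluent = effect_of_x(disfluent=True)  # a * (1 - damping) * b
interaction = effect_disfluent - effect_fluent  # negative if M carries the effect
```

In this toy model, the effect of X on Y shrinks under the manipulation only because Y depends on X through M. If Y did not depend on M, manipulating M would leave the effect of X unchanged and the interaction would be zero.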

Thus, we propose that an additional manipulation of perceptual fluency should alter the strength of regulatory fit effects. One might speculate about the direction of the effect. First of all, it is necessary to consider that individuals are sensitive to changes in fluency (Hansen et al., 2008; Wänke & Hansen, 2015). As we assume that learners experience fluency under regulatory fit, any additional cues leading to lower experienced ease of processing should diminish the effects of regulatory fit. By adding such disfluent cues after the perceived fit, we test whether experienced ease of processing is the underlying process of regulatory fit effects on learning persistence and performance. Hence, we propose:

H3a

Fit effects of promotion orientation and regulatory-framed feedback on learning persistence are stronger under fluency than under disfluency.

H3b

Fit effects of prevention orientation and regulatory-framed feedback on learning persistence are stronger under fluency than under disfluency.

We also believe that fluency influences regulatory fit effects on learning performance. We have already argued that fitting feedback enhances learning performance as it is easier to process and binds fewer cognitive resources. Ease of processing might be influenced by regulatory fit as well as perceptual fluency. According to cognitive load theory (Sweller, 2011), disfluency should induce extraneous cognitive load. On the other hand, recent studies have investigated the effect of disfluent presentation of learning material on learning performance (Eitel et al., 2014). The authors revealed that, in fact, disfluent versions of learning materials led to better performance. However, this is not a contradiction. Manipulations focusing learners’ attention on the learning material do not simply add extraneous cognitive load. In contrast, additional perceptually disfluent material provided beside the learning material (e.g., general information not necessary for the current task) should reduce the ease of processing and consequently the positive effects of regulatory fit.

H4a

Fit effects of promotion orientation and regulatory-framed feedback on learning performance are stronger under fluency than disfluency.

H4b

Fit effects of prevention orientation and regulatory-framed feedback on learning performance are stronger under fluency than disfluency.

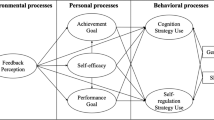

The complete conceptual model is displayed in Fig. 3.

4 Experiment 2

4.1 Methods

Our second experiment was a conceptual replication of the first one. Again, we used an online learning environment to investigate regulatory fit effects on learning persistence and performance. However, we changed the operationalization of the regulatory framing and the within design slightly. Instead of randomly selecting the framing for each feedback, the feedback type was constant for learning sessions but randomly assigned across all learning sessions. At the beginning of each learning session, we randomized whether promotion or prevention framed feedback was presented. This way, we wanted to observe the effects over longer periods. Additionally, we included a manipulation of fluency to test the underlying mechanism of fit effects.

4.1.1 Sample

After the exclusion of 30 users failing an attention check item included in the regulatory orientations assessment, the final sample consisted of 118 users of the e-learning software (108 female, 10 male) with a mean age of 20.87 years (SD = 2.96). Again, participants used the learning software either for preparing for a statistics exam or an exam covering topics of diagnostics and differential psychology over a period of up to 12 weeks.

4.1.2 Design

We conducted an experiment using the same online e-learning software as in experiment 1. We used a 2 × 2 × 2 mixed design to investigate the effects of chronic regulatory foci (between-subject), regulatory-framed feedback (within-subject: promotion vs. prevention), and fluency (between-subject: fluent vs. disfluent) on learning persistence and performance as dependent variables. While the regulatory frame for the feedback was randomized at every single presentation in the first experiment, we modified the design keeping the feedback frames constant for every learning session with randomization at the beginning of the session.

4.1.3 Materials

4.1.3.1 Learning software

We used the same learning software as in our first experiment (Siebert & Janson, 2018).

4.1.3.2 Regulatory orientations assessment

Again we used the German translation of the GRFM (Keller & Bless, 2006; Lockwood et al., 2002) to measure promotion and prevention orientations. We also included one item asking participants to select one specific answer option to check whether participants carefully processed the questionnaire.

4.1.3.3 Regulatory-framed feedback

We implemented another type of regulatory-framed feedback in the software (see Fig. 4). In contrast to experiment 1, this feedback focused more concretely on the performance of users on the last seven items. Three components of the feedback were manipulated: framing of results, framing of instruction, and visualization. First, we used an approach-avoidance framing by highlighting successes or failures (Shin et al., 2017). In the promotion condition, the feedback provided the information: “You answered X questions correctly”. In the prevention condition, the feedback provided the following information: “You answered X questions wrong.” Furthermore, participants were asked to “try to achieve maximum performance on the next exercises” in the promotion condition and “try to avoid any mistakes on the next exercises” in the prevention condition (Keller & Bless, 2006).

We also visualized the regulatory-framed feedback. We presented symbols for each of the seven exercises referred to in the feedback. In the promotion condition, correctly solved items were highlighted with a checkmark on a green background and incorrectly solved items remained grey. In contrast, in the prevention condition, incorrectly solved items were visualized with a cross on a red background and correctly solved items remained grey. The priming of regulatory foci with green and red has been used by other researchers as well (Förster et al., 1998; Shah et al., 1998). We also asked our 20 pretesters to rate this feedback (“this type of feedback predominantly emphasizes…”) on a unidimensional rating scale with the endpoints “avoiding negative outcomes” (1) and “approaching positive outcomes” (7) and observed a statistically significant difference between the promotion frame (M = 5.40; SD = 1.90) and the prevention frame (M = 1.40; SD = 0.75), t(19) = 7.72, p < .001, d = 2.90.

4.1.3.4 Fluency manipulation

We included an additional perceptual fluency manipulation in the experiment. After each instance of feedback, learning advice was presented on the pop-up screen right after the regulatory-framed feedback. The learning advice was randomly selected from a pool of 22 different forms of advice which contain general learning advice like “testing is one of the most effective learning strategies. It is more effective than rereading or highlighting learning materials” or “if you like to hear music during learning, use instrumental music only. Due to the irrelevant-speech-effect vocal sounds impair the learning process.” We presented the learning advice with normal font type and color in the fluent condition. In the disfluent condition, the learning advice was harder to read due to a blurred font type. We also asked our 20 pretesters whether they experienced the readability of the font type as “very bad” (1) or “very good” (7). For this manipulation, we also found statistically significant differences between the fluent (M = 6.70; SD = 0.57) and the disfluent condition (M = 2.90; SD = 1.37), t(19) = 11.01, p < .001, d = 3.67.

4.1.3.5 Learning outcomes

Again, we used the logfiles of the e-learning software. As a measurement of learning persistence, we used the learning time spent in the sessions. As feedback-type was constant for each learning session, we checked how long participants learned under the influence of either promotion or prevention framed feedback. Congruent with the first experiment we only used performance on items after feedback to measure the direct impact of the feedback. However, as in this experiment we did not randomize the feedback within sessions, we aggregated the learning performance for each session to also have a dependent variable on session level as we have for persistence.

4.1.4 Procedure

The procedure was the same as in the first experiment, with two exceptions. First, at the first login, one of the two fluency conditions was randomly selected for each user. To keep participants blind to the hypotheses, we decided to keep the fluency condition constant for each person, as we considered psychology students able to recognize a change in font type not as a feature of the software but as a psychological treatment. Second, as explained above, we shifted from randomly varying each instance of feedback within a session to randomizing only whether promotion or prevention feedback was shown for the whole learning session. A learning session began when learners selected the chapters they wanted to work on within the software. After every seventh item, the framed feedback was presented in a pop-up on the screen. This feedback was followed by the learning advice. Learners were only able to continue with the exercises after clicking away the pop-up. The endings of learning sessions were logged when participants actively quit or were inactive for more than 10 min (with the 10 min subtracted from learning time automatically).

4.2 Results

4.2.1 Preliminary analyses and descriptives

Again, the internal consistencies of promotion and prevention provided support for good reliability of the promotion (α = .81) and the prevention (α = .82) scales. We found no statistically significant correlation of promotion and prevention, r = .02, p = .79. Congruent with the first experiment, we included all learning sessions until two weeks before the exams. In total, participants performed 3702 sessions and each learner on average spent 17.08 h (SD = 16.84) within the software.

4.2.2 Main analyses

4.2.2.1 Analytical procedure

In this experiment, learning sessions were the units of observation on L1, clustered in participants on L2. We conducted stepwise multi-level regressions with feedback-type (L1), regulatory orientations (L2), and fluency (L2) as predictors for learning persistence (L1) and learning performance (L1). Learning persistence was measured as the learning time per session in minutes, logarithmized to approximate a normal distribution of the skewed learning times, and the proportion of correct items after feedback served as the dependent outcome for learning performance. Again, we entered type of feedback as an effect-coded variable (prevention: −1; promotion: 1), and an additional dummy variable indicated the fluency of the learning advice (fluent: 0; disfluent: 1). All continuous variables were centered. Congruent with the first experiment, we included the transformed performance per session, p*(1 − p), as a control variable for learning persistence and the logarithmized learning time as a control variable for learning performance. Also congruent with experiment 1, we added the time till exam as a covariate for both outcomes. For all analyses, we selected an alpha-level of 5%. The results of the regressions are printed in Table 2.

4.2.2.2 Learning persistence

Among the main effects of the variables of interest, the only statistically significant one was that of promotion orientation: learning time was lower for participants with a higher promotion orientation, b = -.153, p = .010. Regarding the proposed two-way interactions, we found a statistically significant fit effect of promotion orientation and feedback type, bpromotion × feedback = 0.075, p = .007. Individuals with a higher promotion orientation learned for longer if promotion-framed feedback was presented compared to prevention-framed feedback, replicating support for H1a. We observed no fit effect for prevention when the three-way-interaction was not included, bprevention × feedback = -0.022, p = .416. Furthermore, to test whether fit effects are affected by fluency, we inspected the three-way-interactions of fit effects with fluency. We were not able to reveal the proposed three-way-interaction of promotion with fluency, bpromotion × feedback × fluency = -.002, p = .967, but the two-way interaction remained statistically significant, bpromotion × feedback = 0.098, p = .008, indicating stable promotion fit effects across both fluency conditions and no support for H3a. For prevention, we observed a statistically significant three-way-interaction, bprevention × feedback × fluency = 0.134, p = .014, indicating that prevention fit effects depended on the fluency of the additional learning advice. Indeed, when the three-way-interaction was taken into account, we observed a statistically significant fit effect of prevention orientation and feedback type, bprevention × feedback = -.080, p = .032. Based on the dummy coding of fluency, this indicates that learning time was higher for individuals with a higher prevention orientation if prevention-framed feedback was presented compared to promotion-framed feedback in the fluent condition, but this effect diminished with disfluent learning advice, supporting H1b and H3b.Footnote 4
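Because fluency was dummy coded (fluent: 0; disfluent: 1), the two-way coefficient in the model with the three-way-interaction is the fit effect in the fluent condition, and adding the three-way coefficient gives the fit effect in the disfluent condition. The following sketch only performs this arithmetic on the coefficients reported above. The function name is hypothetical, and because standard errors are not considered, it says nothing about the statistical significance of the conditional effects.

```python
def conditional_fit_effect(b_two_way: float, b_three_way: float, disfluent: int) -> float:
    """Orientation x feedback coefficient at a given value of the fluency dummy."""
    return b_two_way + b_three_way * disfluent


# Coefficients reported in the text for learning persistence
prevention_fluent = conditional_fit_effect(-0.080, 0.134, disfluent=0)
prevention_disfluent = conditional_fit_effect(-0.080, 0.134, disfluent=1)
promotion_fluent = conditional_fit_effect(0.098, -0.002, disfluent=0)
promotion_disfluent = conditional_fit_effect(0.098, -0.002, disfluent=1)

print(round(prevention_fluent, 3), round(prevention_disfluent, 3))  # -0.08 0.054
print(round(promotion_fluent, 3), round(promotion_disfluent, 3))    # 0.098 0.096
```

With feedback effect coded as prevention: −1 and promotion: 1, a negative prevention × feedback coefficient corresponds to prevention fit. The prevention coefficient of -0.080 in the fluent condition thus changes to 0.054 in the disfluent condition, whereas the promotion coefficient is nearly identical in both conditions (0.098 vs. 0.096).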

4.2.2.3 Learning performance

We also found statistically significant main effects of our predictors on learning performance. The learning performance was reduced after the disfluent learning advice, b = -.069, p = .013. Regarding our hypotheses, we were not able to identify any of the stated fit effects, bpromotion × feedback = -.002, p = .767, bprevention × feedback = -.003, p = .594, or statistically significant three-way-interactions, bpromotion × feedback × fluency = -.019, p = .143, bprevention × feedback × fluency = -.002, p = .866, resulting in no support for H2a, H2b, H4a and H4b.

4.3 Discussion

The second experiment replicated the promotion fit effects on learning persistence observed in the first experiment. Learning time was longer for individuals with a higher promotion orientation if promotion-framed feedback was presented. Additionally, we found a statistically significant prevention fit effect on learning time. Thus, for both regulatory orientations, we observed statistically significant fit effects in the stated direction. Learners receiving concrete feedback about their recent performance were motivated to maintain learning activities if the feedback was framed in line with their strategic orientations. Moreover, with this experiment, we also tested the underlying process of regulatory fit. The additional prevention fit was only observable if the fluency manipulation was considered. The positive impact of the fit effect was diminished if the subsequent disfluent cue was presented, as illustrated in Fig. 5. On the downside, we observed no fit effects on learning performance overall. While the experiment worked very well for learning persistence, we were not able to replicate the promotion fit effect on performance observed in the first study. This might be explained by the fact that we focused on the aggregated learning performance per session for the items after feedback. The mean percentage of correct exercises might be highly dependent on the item difficulties of the respective exercises. Furthermore, regarding the fluency manipulation, one must consider that the perceptual disfluency manipulation might not have been very strong. We selected learning advice that we believe to be meaningful for learners. However, we cannot say for sure whether learners paid attention to it. Although we observed a significant three-way-interaction, for future studies we would suggest using a manipulation of fluency that is inherent to the task (cf. Janson et al., 2022). However, taken together, the second experiment provided further evidence of regulatory fit effects on learning persistence and insight into the underlying processes.

5 General discussion

The present work aimed to extend the literature on feedback and regulatory fit by investigating fit effects of regulatory-framed feedback in e-learning environments. We conducted our research on the actual exam preparation of university students. Even though the learning situation might not be as stressful as the exam situation, preparation for high-stakes exams is highly relevant for students. We found partial support for our proposed fit effects on learning persistence and performance, underlining the opportunities of individualized feedback framings. This individualized approach to the perception of informative feedback might also explain inconsistencies in the effect sizes of feedback interventions (Hattie & Timperley, 2007; Kluger & DeNisi, 1996; Wisniewski et al., 2020). Although the feedback literature has claimed that more elaborative feedback has stronger effects on learning outcomes, e.g., by helping learners to overcome misconceptions by giving concrete solution strategies if tasks were completed incorrectly, fitting feedback to individuals' strategic orientations can improve the beneficial effects of easy-to-implement feedback types like informative feedback. This is in line with recent research demonstrating fit effects of feedback framed with different comparison standards (Janson et al., 2022). However, it turns out that fit effects are not as stable as proposed and are hard to detect. Nevertheless, our research provides insights into the underlying processes of such fit effects. In the following, we discuss our results in the light of theory and research practice and also illustrate potential practical implications.

5.1 Outcomes and processes of regulatory fit

Overall, our results suggest beneficial effects of regulatory fit on both learning persistence and performance, even though these effects are rather weak. Given these effects, our present work expands the regulatory focus literature on motivation and cognition in achievement-related settings. However, we cannot conclude confidently that the effects of regulatory fit on learning performance are genuinely cognitive ones. Our design could not disentangle performance outcomes from persistence outcomes, and a possible mediation, i.e., higher performance through higher persistence, might also explain the results. In this regard, it should be noted that we only observed a promotion fit effect on performance when a promotion fit effect on persistence was also present. Finding a fit effect on performance independent of fit effects on persistence would have ruled out the explanation that fit effects on performance are due to a potential mediation via persistence.

With fluency, we tested an underlying process and hypothesized both motivational and cognitive outcomes. However, we only observed a moderation for persistence outcomes. This is in line with our theoretical assumption that regulatory fit effects facilitate learners’ motivation by the same means as fluency (Freitas & Higgins, 2002; Reber et al., 1998). However, we cannot draw any conclusions on the mechanisms of regulatory fit on performance as no moderation by fluency was observable. This does not rule out that perceived processing ease is also related to cognitive outcomes, but leaves room for further investigations. Future studies should also focus on more cognitive mechanisms of regulatory fit, such as the proposed effect of reduced cognitive load (Sweller, 2011) under regulatory fit. If additional cognitive load induced via a dual-task paradigm altered the strength of regulatory fit effects, this would support the hypothesis of cognitive regulatory fit effects. With our present work, we decided to take an experimental approach to the underlying processes of fit effects. By not measuring the proposed mediation of fit effects via ease of processing, but instead manipulating it via perceptual fluency, we can make stronger claims regarding causality (Spencer et al., 2005). Instead of solely establishing correlational support for an underlying psychological process, we directly altered the proposed mechanism between regulatory fit and learning outcomes. The reported results can be seen as first evidence, but should be considered with caution as we only observed one of the four proposed three-way-interactions.

Regarding the generalizability of our results, it should be noted that we used a very context-specific assessment of regulatory orientations. The scale by Lockwood and colleagues (2002) that we used is a common way to assess regulatory orientations, but it would be interesting to investigate whether students have domain-specific regulatory orientations or whether the regulatory fit effects observed in these studies are based on a fit with domain-general regulatory orientations. Unfortunately, the current research cannot disentangle these possibilities.

5.2 The distinction between promotion and prevention fit

Previous research has already provided strong support for the benefits of regulatory fit on self-regulation (Motyka et al., 2014). Based on this literature we expected to detect those effects in the applied setting of digital learning environments. However, we were only able to partly support regulatory fit effects on persistence and performance with our two experiments. It is important to consider that we only found promotion fit effects in the first experiment. In total, three out of four predicted fit effects of promotion orientation and regulatory-framed feedback were statistically significant. Individuals with a higher promotion orientation showed higher learning time, fewer learning aborts, and higher success probability. In contrast, we found only one predicted fit effect of prevention orientation and regulatory-framed feedback on learning persistence in experiment 2. Differences in promotion fit and prevention fit have already been discussed in the existing literature. In their meta-analysis, Motyka and colleagues (2014) report higher promotion fit effects on evaluation and higher prevention fit effects on behavior. They discuss that promotion fit focuses more on the value of choices and evaluations, while prevention fit emphasizes non-losses in outcomes. From this perspective a larger likelihood to find promotion fit effects on persistence (as a result of better evaluation of the task) and higher prevention fit effects on performance might be expected, but our patterns do not exactly match this proposition. However, this underlines the importance of investigating regulatory fit effects separately for both regulatory orientations (Haws et al., 2010). The low intercorrelations of promotion and prevention in our studies, which are in line with former studies stating the independence of promotion and prevention orientations (Haws et al., 2010; Summerville & Roese, 2007), empirically argue against a unidimensional approach. Furthermore, collapsing both orientations may conceal whether only one orientation drives the fit effect or may lead to statistically insignificant results. Currently, differential effects of the different motivational systems of promotion and prevention are well discussed (for a review see Scholer et al., 2019). Therefore, future research should move on to also investigate differential aspects of promotion and prevention fit. Even though it is too early to conclude theoretical directions from our results for this research question, our evidence provides a call for a closer look at those differences.

This research gap affects not only the differential outcomes of promotion and prevention fit, but also the underlying processes. A clear analytical distinction between a more or less strong prevention and a more or less strong promotion orientation leads us to the question of whether regulatory fit effects observed within previous studies (Avnet & Higgins, 2006; Förster et al., 1998; Keller & Bless, 2006; Louro et al., 2005) were driven more by promotion fit than prevention fit, and if so, whether different mechanisms behind those regulatory fit effects exist. Regarding the latter, it is interesting that in our experiment the additional prevention fit on learning persistence depended on fluency, while the promotion fit was not affected by the altered fluency. We took a general perspective on the influence of perceptual fluency on fit effects, proposing fit effects in general to affect learning outcomes via ease of processing. However, one might speculate that promotion fit and prevention fit differ in this process. One could argue that prevention narrows the focus on the task (Förster & Higgins, 2005) and as a consequence a prevention fit should be bolstered under disfluency. In contrast to this reasoning, we found a higher prevention fit effect under perceptually fluent conditions, which is in line with our general perspective on regulatory fit. Overall, it is too early to conclude differential processes of the two regulatory systems promotion and prevention from our results, but the differences in our results also call for a closer look at the differences between promotion and prevention fit regarding the processes.

5.3 Limitations

Choosing an experimental field design provides opportunities to investigate psychological constructs under real-world conditions. Finding effects under these circumstances makes a strong claim for the research question and impact. Due to the less controlled field setting, one cannot expect to replicate patterns of laboratory experiments (Bless & Burger, 2016). With the decision to investigate fit effects on actual learning parameters of an exam preparation software, our present work has some crucial limitations.

First, we had limiting factors regarding the design. For ethical reasons, we chose a within-subject design for our feedback manipulations to test our proposed fit effects. Therefore, we cannot disentangle if the presence of fitting feedback or the absence of non-fitting feedback drives our fit effects. By switching the design from within-session variation to between-session variation, we aimed to address this problem of interfering short-term relative fit effects. However, to investigate the absolute effects of fitting feedback a between-subject design would be necessary. Also, the usage of the software limited the feedback possibilities due to technical restrictions. We implemented informative feedback on exercise success or failure. However, the framings could also be used for more elaborative feedback like information about misconceptions or opportunities to improve. Besides the fact that elaborative feedback, in general, is more effective (Kluger & DeNisi, 1996, 1998), it is worth noting that we already found regulatory fit effects for feedback giving solely information about performance. Regarding the fluency manipulation, we chose a between-subject design. This approach has more causal strength than within-subject designs, but literature on fluency states that fluency effects are often only observable if the fluency changes (Hansen et al., 2008; Wänke & Hansen, 2015). Combined with the fact that the between-subject design limited the power enormously, this might explain statistically non-significant results as well. However, as outlined, to maintain the credibility of the study, we decided to keep the font type constant for participants.

Second, the chosen field approach had an impact on the noisiness of the data. For example, we did not assume participants’ learning time in the second experiment to be solely dependent on the type of presented feedback. Learners sitting down in the library to repeat the most recent content learned in the last two weeks will (hopefully) persist longer than learners starting a quick session while pizza is in the oven. Therefore, even our extensive dataset with thousands of hours of actual learning time might be underpowered regarding our research question (Bless & Burger, 2016). It is plausible that fit effects and even harder identifiable three-way-interactions might exist but are concealed by the unexplained variance of the study. Consequently, we tried to reduce this variance by introducing certain covariates and including only data from earlier learning phases, where differences in self-regulation explain more variance in learning behavior (Steel, 2007). Taken together, our decisions on covariates and data control were theoretically justified (Broadhurst, 1959; Endler et al., 2012; Mischel, 1968; Steel, 2007) and necessary to provide fit effects with a space to develop their potential on the learning data. In addition, the procedure was analogous to a recent study conducted with the same paradigm (Janson et al., 2022).

The limitations outlined here can be overcome in laboratory studies. However, those laboratory designs are biased as well (Bless & Burger, 2016). Therefore, we decided to stick as close as we could to real learning settings for our present work.

5.4 Conclusion

We transferred the literature on regulatory focus theory (Higgins, 1998, 2000) to the e-learning context. Besides the successfully established promotion fit effects in the first experiment, and the fit effects of both orientations on persistence in the second experiment, further proposed fit effects remained statistically insignificant. While the regulatory fit is stated as an established and well-replicated construct (Motyka et al., 2014), the fit effects were hard to detect in this applied setting. Our large database of students learning for actual exams provides a high-powered and ecologically valid setting, but also comes at the cost of much error variance overshadowing the regulatory fit effects. Under these circumstances, the effects were not as stable as supposed and it must be questioned how important regulatory fit effects are for learning persistence and performance in real-world settings. Future research should replicate our findings and focus more on the underlying processes. We did so by additionally manipulating perceptual fluency (Alter & Oppenheimer, 2009; Reber et al., 1998) within the software to investigate whether differences in processing ease alter the strength of fit effects. Our present work does provide first limited evidence for such a process. We suggest investigating these processes in more controlled environments due to the limitations of the field approach before drawing further conclusions. Finally, we consider the present work as promising evidence for emerging opportunities of e-learning to facilitate learners’ persistence and performance by adapting to their interindividual needs (Shute & Towle, 2003).

Change history

03 March 2023

A Correction to this paper has been published: https://doi.org/10.1007/s11218-023-09774-2

Notes

We were cautious about the effects of telling people that they had only achieved 5% on the way to the next medal. We were not sure if learners might abort learning activities based on this feedback as they just achieved a medal and had a long way to go. As this was constant for the whole experiment, it should not have any impact on the research question per se.

For both experiments, we included analyses without the covariates and for excluded learning data in the electronic supplement. Proposed fit effects were not observable in those analyses.

Significance of regression coefficients in multilevel models can be evaluated using one-tailed testing for directional hypotheses (Arend & Schäfer, 2019). Still, we report two-tailed p-values to provide full information for replication or meta-analyses. P-values of effects in the stated direction can be divided by two.
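The conversion described in this note can be sketched in a few lines of Python. This is a minimal illustration, assuming a symmetric test statistic; the function name `one_tailed_p` and the example values are hypothetical and are not taken from the reported analyses.

```python
def one_tailed_p(p_two_tailed: float, estimate: float, direction: int) -> float:
    """Convert a two-tailed p-value into a one-tailed one.

    Assumes a symmetric test statistic (e.g., t or z).
    direction is +1 if the hypothesis predicts a positive coefficient,
    -1 if it predicts a negative one.
    """
    if not 0.0 <= p_two_tailed <= 1.0:
        raise ValueError("p_two_tailed must lie in [0, 1]")
    if direction not in (1, -1):
        raise ValueError("direction must be +1 or -1")
    if estimate * direction > 0:
        # Estimate points in the hypothesized direction: halve the p-value.
        return p_two_tailed / 2
    # Estimate points the other way (or is zero): the one-tailed p-value
    # is the complement of the halved two-tailed value.
    return 1 - p_two_tailed / 2


# Illustrative values only (not results from the experiments).
print(one_tailed_p(0.08, estimate=0.20, direction=1))   # effect in the predicted direction
print(one_tailed_p(0.08, estimate=-0.20, direction=1))  # effect against the prediction
```

Halving applies only to effects in the stated direction; for an effect in the opposite direction, the one-tailed p-value becomes large rather than small.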

We also repeated the analyses with the fluent condition as baseline (disfluent: 0; fluent: 1). While the direction of the three-way interaction was inverted, $b_{\text{prevention} \times \text{feedback} \times \text{fluency}} = -0.134$, $p = .014$, the fit effect was not significant, $b_{\text{prevention} \times \text{feedback}} = 0.054$, $p = .174$. These results indicate that the effect is not only stronger under the fluent condition, but also not statistically significant under the disfluent condition.
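Why recoding the dummy variable inverts the sign of the three-way interaction can be sketched algebraically. The symbols below are illustrative assumptions, not the notation of the reported models: $P$ denotes prevention orientation, $F$ the feedback framing, $D \in \{0, 1\}$ the fluency dummy, and $D' = 1 - D$ the recoded dummy. Only the two terms of interest in the linear predictor $\eta$ are written out; the remaining terms are abbreviated by dots.

```latex
\begin{align*}
\eta &= \dots + \beta_{PF}\, P F + \beta_{PFD}\, P F D \\
     &= \dots + \beta_{PF}\, P F + \beta_{PFD}\, P F \,(1 - D') \\
     &= \dots + (\beta_{PF} + \beta_{PFD})\, P F - \beta_{PFD}\, P F D'
\end{align*}
```

Under the recoded dummy, the three-way coefficient keeps its magnitude but changes sign, and the two-way coefficient becomes the simple fit effect in whichever condition is now coded 0.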

References

Aaker, J. L., & Lee, A. Y. (2006). Understanding regulatory fit. Journal of Marketing Research, 43(1), 15–19. https://doi.org/10.1509/jmkr.43.1.15

Alter, A. L., & Oppenheimer, D. M. (2009). Uniting the tribes of fluency to form a metacognitive nation. Personality and Social Psychology Review, 13(3), 219–235. https://doi.org/10.1177/1088868309341564

Arend, M. G., & Schäfer, T. (2019). Statistical power in two-level models: A tutorial based on Monte Carlo simulation. Psychological Methods, 24(1), 1–19. https://doi.org/10.1037/met0000195

Avnet, T., & Higgins, E. T. (2006). How regulatory fit affects value in consumer choices and opinions. Journal of Marketing Research, 43(1), 1–10. https://doi.org/10.1509/jmkr.43.1.1

Ayres, P., & Sweller, J. (2005). The split-attention principle in multimedia learning. In R. Mayer (Ed.), The Cambridge handbook of multimedia learning (pp. 135–146). Cambridge University Press.

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2014). Fitting linear mixed-effects models using lme4. ArXiv:1406.5823 [Stat]. http://arxiv.org/abs/1406.5823

Bless, H., & Burger, A. M. (2016). A closer look at social psychologists’ silver bullet: Inevitable and evitable side effects of the experimental approach. Perspectives on Psychological Science, 11(2), 296–308. https://doi.org/10.1177/1745691615621278

Broadhurst, P. L. (1959). The interaction of task difficulty and motivation: The Yerkes-Dodson law revived. Acta Psychologica, 16, 321–338. https://doi.org/10.1016/0001-6918(59)90105-2

Brockner, J., Paruchuri, S., Idson, L. C., & Higgins, E. T. (2002). Regulatory focus and the probability estimates of conjunctive and disjunctive events. Organizational Behavior and Human Decision Processes, 87(1), 5–24. https://doi.org/10.1006/obhd.2000.2938