Abstract

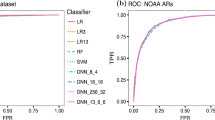

Coronal mass ejections (CMEs) that cause geomagnetic disturbances on the Earth can occur in conjunction with flares or filament eruptions, or independently. Although flares and CMEs are both understood to be triggered by the common physical process of magnetic reconnection, the degree of association between them is challenging to predict. From the vector magnetic field data captured by the Helioseismic and Magnetic Imager (HMI) onboard the Solar Dynamics Observatory (SDO), active regions are identified and tracked in data products known as Space Weather HMI Active Region Patches (SHARPs). Eighteen magnetic field features are derived from the SHARP data and fed as input to machine-learning models that classify whether a flare will be accompanied by a CME (positive class) or not (negative class). Because flares accompanied by CMEs occur less frequently than flares alone, we address the class imbalance by exploring undersampling of the majority class, oversampling of the minority class, and the synthetic minority oversampling technique (SMOTE) on the training data. We compare the performance of eight machine-learning models, among which the Support Vector Machine (SVM) and Linear Discriminant Analysis (LDA) models perform best, with True Skill Scores (TSS) of around 0.78 ± 0.09 and 0.8 ± 0.05, respectively. To improve the predictions, we incorporate temporal information as an additional input parameter, which raises the TSS of LDA to 0.92 ± 0.04. We use the wrapper technique and permutation-based model interpretation methods to identify the SHARP parameters most responsible for the predictions made by the SVM and LDA models. This study will help develop real-time prediction of CME events and improve understanding of the physical processes underlying their occurrence.

References

Abed, A.K., Qahwaji, R., Abed, A.: 2021, The automated prediction of solar flares from sdo images using deep learning. Adv. Space Res. 67(8), 2544.

Ahmed, O.W., Qahwaji, R., Colak, T., Higgins, P.A., Gallagher, P.T., Bloomfield, D.S.: 2013, Solar flare prediction using advanced feature extraction, machine learning, and feature selection. Solar Phys. 283(1), 157. DOI.

Aktukmak, M., Sun, Z., Bobra, M., Gombosi, T., Manchester, W.B. IV, Chen, Y., Hero, A.: 2022, Incorporating polar field data for improved solar flare prediction. Front. Astron. Space Sci. 9. DOI.

Altschuler, M.D., Trotter, D.E., Orrall, F.Q.: 1972, Coronal holes. Solar Phys. 26, 354.

Aminalragia-Giamini, S., Raptis, S., Anastasiadis, A., Tsigkanos, A., Sandberg, I., Papaioannou, A., Papadimitriou, C., Jiggens, P., Aran, A., Daglis, I.A.: 2021, Solar energetic particle event occurrence prediction using solar flare soft x-ray measurements and machine learning. J. Space Weather Space Clim. 11, 59. DOI.

Andrews, M.D.: 2003, A search for cmes associated with big flares. Solar Phys. 218(1), 261. DOI.

Barnes, G., Leka, K.D., Schumer, E.A., Della-Rose, D.J.: 2007, Probabilistic forecasting of solar flares from vector magnetogram data. Space Weather 5(9). DOI.

Bobra, M.G., Ilonidis, S.: 2016, Predicting coronal mass ejections using machine learning methods. Astrophys. J. 821(2), 127. DOI.

Bobra, M.G., Sun, X., Hoeksema, J.T., Turmon, M., Liu, Y., Hayashi, K., Barnes, G., Leka, K.D.: 2014, The helioseismic and magnetic imager (hmi) vector magnetic field pipeline: sharps – space-weather hmi active region patches. Solar Phys. 289(9), 3549. DOI.

Bobra, M.G., Wright, P.J., Sun, X., Turmon, M.J.: 2021, Smarps and sharps: two solar cycles of active region data. Astrophys. J. Suppl. 256(2), 26. DOI.

Breiman, L.: 2001, Random forests. Mach. Learn. 45, 5.

Chawla, N.V., Bowyer, K.W., Hall, L.O., Kegelmeyer, W.P.: 2002, Smote: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321.

Chen, T., Guestrin, C.: 2016, Xgboost: a scalable tree boosting system. In: Proc. 22nd Acm Sigkdd International Conference on Knowledge Discovery and Data Mining, 785.

Chen, Y., Manchester, W.B., Hero, A.O., Toth, G., DuFumier, B., Zhou, T., Wang, X., Zhu, H., Sun, Z., Gombosi, T.I.: 2019, Identifying solar flare precursors using time series of sdo/hmi images and sharp parameters. Space Weather 17(10), 1404.

Cortes, C., Vapnik, V.: 1995, Support-vector networks. Mach. Learn. 20(3), 273. DOI.

Cranmer, S.R.: 2009, Coronal holes. Living Rev. Solar Phys. 6, 1.

Florios, K., Kontogiannis, I., Park, S.-H., Guerra, J.A., Benvenuto, F., Bloomfield, D.S., Georgoulis, M.K.: 2018, Forecasting solar flares using magnetogram-based predictors and machine learning. Solar Phys. 293(2), 28.

Freund, Y.: 1995, Boosting a weak learning algorithm by majority. Inf. Comput. 121(2), 256.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: 2014, Generative adversarial nets. Adv. Neur. In. 27.

Gosling, J.T.: 1993, The solar flare myth. J. Geophys. Res. 98(A11), 18937. DOI.

Gosling, J., McComas, D., Phillips, J., Bame, S.: 1991, Geomagnetic activity associated with Earth passage of interplanetary shock disturbances and coronal mass ejections. J. Geophys. Res. 96(A5), 7831.

Harrison, R.A.: 1995, The nature of solar flares associated with coronal mass ejection. Astron. Astrophys. 304, 585. ADS.

Hunter, J.D.: 2007, Matplotlib: a 2d graphics environment. Comput. Sci. Eng. 9(3), 90.

Inceoglu, F., Jeppesen, J.H., Kongstad, P., Marcano, N.J.H., Jacobsen, R.H., Karoff, C.: 2018, Using machine learning methods to forecast if solar flares will be associated with cmes and seps. Astrophys. J. 861(2), 128.

Kasapis, S., Zhao, L., Chen, Y., Wang, X., Bobra, M., Gombosi, T.: 2022, Interpretable machine learning to forecast sep events for solar cycle 23. Space Weather 20(2), e2021SW002842. DOI.

Leka, K.D., Barnes, G.: 2007, Photospheric magnetic field properties of flaring versus flare-quiet active regions. IV. A statistically significant sample. Astrophys. J. 656(2), 1173. DOI.

Lin, J., Forbes, T.G.: 2000, Effects of reconnection on the coronal mass ejection process. J. Geophys. Res. 105(A2), 2375. DOI.

Liu, C., Deng, N., Wang, J.T., Wang, H.: 2017, Predicting solar flares using sdo/hmi vector magnetic data products and the random forest algorithm. Astrophys. J. 843(2), 104.

Liu, H., Liu, C., Wang, J.T., Wang, H.: 2019, Predicting solar flares using a long short-term memory network. Astrophys. J. 877(2), 121.

Park, E., Moon, Y.-J., Shin, S., Yi, K., Lim, D., Lee, H., Shin, G.: 2018, Application of the deep convolutional neural network to the forecast of solar flare occurrence using full-disk solar magnetograms. Astrophys. J. 869(2), 91.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., Duchesnay, E.: 2011, Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825.

Pesnell, W.D., Thompson, B.J., Chamberlin, P.C.: 2012, The solar dynamics observatory (SDO). Solar Phys. 275(1–2), 3. DOI. ADS.

Priest, E., Forbes, T.: 2002, The magnetic nature of solar flares. Astron. Astrophys. Rev. 10(4), 313.

Qahwaji, R., Colak, T., Al-Omari, M., Ipson, S.: 2008, Automated prediction of cmes using machine learning of cme – flare associations. Solar Phys. 248(2), 471. DOI.

Schou, J., Scherrer, P.H., Bush, R.I., Wachter, R., Couvidat, S., Rabello-Soares, M.C., Bogart, R.S., Hoeksema, J.T., Liu, Y., Duvall, T.L., Akin, D.J., Allard, B.A., Miles, J.W., Rairden, R., Shine, R.A., Tarbell, T.D., Title, A.M., Wolfson, C.J., Elmore, D.F., Norton, A.A., Tomczyk, S.: 2012, Design and ground calibration of the helioseismic and magnetic imager (HMI) instrument on the solar dynamics observatory (SDO). Solar Phys. 275(1–2), 229. DOI. ADS.

Schrijver, C.J.: 2009, Driving major solar flares and eruptions: a review. Adv. Space Res. 43(5), 739. DOI. https://www.sciencedirect.com/science/article/pii/S0273117708005942.

Shibata, K., Magara, T.: 2011, Solar flares: magnetohydrodynamic processes. Living Rev. Solar Phys. 8, 1.

Sun, P., Dai, W., Ding, W., Feng, S., Cui, Y., Liang, B., Dong, Z., Yang, Y.: 2022, Solar flare forecast using 3d convolutional neural networks. Astrophys. J. 941(1), 1. DOI.

Tharwat, A., Gaber, T., Ibrahim, A., Hassanien, A.E.: 2017, Linear discriminant analysis: a detailed tutorial. AI Commun. 30(2), 169. DOI.

Torres, J., Zhao, L., Chan, P.K., Zhang, M.: 2022, A machine learning approach to predicting sep events using properties of coronal mass ejections. Space Weather 20(7), e2021SW002797. DOI.

Wang, J., Liu, S., Ao, X., Zhang, Y., Wang, T., Liu, Y.: 2019, Parameters derived from the sdo/hmi vector magnetic field data: potential to improve machine-learning-based solar flare prediction models. Astrophys. J. 884(2), 175.

Wang, X., Chen, Y., Toth, G., Manchester, W.B., Gombosi, T.I., Hero, A.O., Jiao, Z., Sun, H., Jin, M., Liu, Y.: 2020, Predicting solar flares with machine learning: investigating solar cycle dependence. Astrophys. J. 895(1), 3. DOI. ADS.

Webb, D.F., Howard, T.A.: 2012, Coronal mass ejections: observations. Living Rev. Solar Phys. 9(1), 3. DOI.

Woodcock, F.: 1976, The evaluation of yes/no forecasts for scientific and administrative purposes. Mon. Weather Rev. 104(10), 1209.

Yan, X.-L., Qu, Z.-Q., Kong, D.-F.: 2011, Relationship between eruptions of active-region filaments and associated flares and coronal mass ejections. Mon. Not. Roy. Astron. Soc. 414(4), 2803. DOI.

Yashiro, S., Gopalswamy, N.: 2009, Statistical relationship between solar flares and coronal mass ejections. In: Gopalswamy, N., Webb, D.F. (eds.) Universal Heliophysical Processes 257, 233. DOI. ADS.

Yi, K., Moon, Y.-J., Lim, D., Park, E., Lee, H.: 2021, Visual explanation of a deep learning solar flare forecast model and its relationship to physical parameters. Astrophys. J. 910(1), 8.

Zhang, H., Li, Q., Yang, Y., Jing, J., Wang, J.T., Wang, H., Shang, Z.: 2022a, Solar flare index prediction using sdo/hmi vector magnetic data products with statistical and machine-learning methods. Astrophys. J. 263(2), 28.

Zhang, H., Li, Q., Yang, Y., Jing, J., Wang, J.T.L., Wang, H., Shang, Z.: 2022b, Solar flare index prediction using sdo/hmi vector magnetic data products with statistical and machine-learning methods. Astrophys. J. 263(2), 28. DOI.

Zheng, Y., Li, X., Si, Y., Qin, W., Tian, H.: 2021, Hybrid deep convolutional neural network with one-versus-one approach for solar flare prediction. Mon. Not. Roy. Astron. Soc. 507(3), 3519.

Zirker, J.B.: 1977, Coronal holes and high-speed wind streams. Rev. Geophys. 15(3), 257.

Acknowledgments

The authors gratefully acknowledge the SDO/AIA and HMI science teams for providing the SHARP data. This research uses the Python packages scikit-learn (Pedregosa et al., 2011) and matplotlib (Hunter, 2007). We thank Dr. Monica G. Bobra for providing us with the updated code. We thank Ms. Srijani Mukherjee for helping us with the selection of the model.

Author information

Contributions

Hemapriya Raju developed the model and tested different approaches. Hemapriya Raju wrote the draft manuscript. Saurabh Das supervised the manuscript. Both Hemapriya Raju and Saurabh Das contributed to the final version of the manuscript. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Machine Learning Models

- Linear models

  - Support Vector Machine (SVM) (Cortes and Vapnik, 1995) can be used effectively for both classification and regression. For binary classification, SVM places a separating hyperplane in a high-dimensional space. A kernel maps data that are not linearly separable into a space in which they are, and the hyperplane is chosen to maximize the margin, i.e., the distance to the nearest training data points of each class. In an ideal scenario, the larger the margin between the two classes, the more effectively SVM classifies. In real cases, however, a few points always fall on the wrong side and cannot be separated, so support vectors may lie on either side of the class boundary. Balancing the number of support vectors against the width of the margin yields a good model with a reduced misclassification rate. SVM can handle both linear and nonlinear data using kernels. In this article, we use a radial basis function (RBF) kernel, similar to Bobra and Ilonidis (2016).

  - Logistic regression is a statistical method that estimates the probability of a data point belonging to either class. It uses a sigmoid function: above a particular threshold, the data point is assigned to one class, and below the threshold, to the other.

- Discriminant model

  - Linear discriminant analysis (LDA) (Tharwat et al., 2017) assumes that the input data are Gaussian. For each class, LDA estimates the mean and variance from the given data. During prediction, LDA uses Bayes' theorem to compute the probability that a new input belongs to the distribution of each class, and assigns the input to the class with the highest probability. It also acts as a dimensionality reduction technique by projecting features from a higher-dimensional to a lower-dimensional space.

- Decision based models

  - A decision tree classifies data by testing the attribute associated with the root node and then moving down to a leaf node through a sequence of further decisions. Decision trees are naturally immune to correlation between variables and resistant to outliers. However, they are prone to overfitting and can produce trees biased towards the majority class.

- Ensembling models

  - As mentioned above, decision trees are somewhat unstable and generalize poorly. Ensemble techniques such as bootstrap aggregation (bagging), which train several different trees on resampled versions of the dataset, help in such cases. Combining the predictions of the trees by majority voting or averaging gives a more stable model, called Random Forest (RF) (Breiman, 2001). RF is more stable and accurate, and is applicable to both classification and regression. It improves generalization and reduces overfitting by varying the training samples and candidate features seen by each tree. RF may perform poorly if many variables are correlated.

  - Boosting (Freund, 1995) is a sequential process in which, instead of averaging the outcomes, each successive model is weighted and adjusted according to the errors of the previous model. AdaBoost is an ensemble of weak learners in which each learner concentrates on the errors of its predecessors, so that the combined classifier achieves good accuracy.

  - Extreme Gradient Boosting (XGBoost) (Chen and Guestrin, 2016) is an ensemble algorithm that combines groups of individual learners and uses gradient descent to minimize the loss function. It additionally includes a regularization parameter and built-in cross validation, which reduce overfitting and generalization error. Tuning and visualizing XGBoost is somewhat more complex than for AdaBoost and Random Forest.
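The model families described in this appendix can be sketched with scikit-learn (Pedregosa et al., 2011). The following is a minimal illustration rather than the configuration used in this study: the synthetic data from `make_classification`, the 85/15 class ratio, the default hyperparameters, the five-fold cross validation, and the helper `true_skill_score` are all assumptions made for the example. XGBoost is omitted because it is distributed in a separate package.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix, make_scorer
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def true_skill_score(y_true, y_pred):
    """TSS = TP/(TP+FN) - FP/(FP+TN), with label 1 as the positive class."""
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    return tp / (tp + fn) - fp / (fp + tn)


# Synthetic stand-in for the 18 SHARP features (NOT real data):
# roughly 85% negative (flare only) and 15% positive (flare with CME).
X, y = make_classification(
    n_samples=600, n_features=18, n_informative=6,
    weights=[0.85, 0.15], random_state=0,
)

# Default hyperparameters are placeholders, not the tuned values of the study.
models = {
    "SVM (RBF kernel)": SVC(kernel="rbf"),
    "Logistic regression": LogisticRegression(max_iter=1000),
    "LDA": LinearDiscriminantAnalysis(),
    "Decision tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "AdaBoost": AdaBoostClassifier(random_state=0),
}

scorer = make_scorer(true_skill_score)
results = {}
for name, model in models.items():
    # Standardize features inside the pipeline so that scaling is
    # fitted on the training folds only.
    pipeline = make_pipeline(StandardScaler(), model)
    scores = cross_val_score(pipeline, X, y, cv=5, scoring=scorer)
    results[name] = (scores.mean(), scores.std())
    print(f"{name:22s} TSS = {scores.mean():.2f} +/- {scores.std():.2f}")
```

The scores printed for synthetic data say nothing about the relative ranking of the models on SHARP data; the sketch only shows how the classifiers can be compared under a common metric.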

Appendix B: Wrapper Features

In Tables 5 and 6, the first column gives the number of features, followed by the performance metrics and the features that contributed to the performance. Table 5 lists the features that contribute to the performance of SVM, and Table 6 those that contribute to the performance of LDA.

Appendix C: Metrics

A True Positive (TP) is a case in which both the actual and predicted classes are eruptive flares. A False Positive (FP) is a case in which the actual class is a confined flare but the predicted class is an eruptive flare, leading to a false alarm. A False Negative (FN) is a case in which the actual event is an eruptive flare but is wrongly classified as a confined flare. A True Negative (TN) is a case in which both the actual and predicted classes are confined flares. A good model should therefore have few False Positives and few False Negatives in its confusion matrix. Under class imbalance, performance metrics must be chosen carefully before assessing a model. Accuracy, for example, can appear high simply because the majority class is classified better than the minority class. Since our dataset contains more confined flare events, using accuracy as a metric would bias the model evaluation. Hence, we evaluate other performance metrics based on the TP, FP, TN, and FN counts obtained from the confusion matrix. The True Skill Score (TSS), Probability of Detection (PoD), False Alarm Rate (FAR), and False Discovery Rate (FDR) are described in detail below.
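The bias of accuracy under class imbalance can be illustrated with a small sketch. The sample sizes below (10 eruptive and 90 confined flares) are made up for the example, and the names `confusion_counts` and `accuracy` are hypothetical helpers, not part of the study's code.

```python
from collections import Counter

ERUPTIVE, CONFINED = 1, 0  # positive class: flare with CME; negative: flare only


def confusion_counts(actual, predicted):
    """Return (TP, FP, FN, TN) with eruptive flares as the positive class."""
    pairs = Counter(zip(actual, predicted))
    tp = pairs[(ERUPTIVE, ERUPTIVE)]
    fp = pairs[(CONFINED, ERUPTIVE)]
    fn = pairs[(ERUPTIVE, CONFINED)]
    tn = pairs[(CONFINED, CONFINED)]
    return tp, fp, fn, tn


def accuracy(tp, fp, fn, tn):
    """Fraction of all events that are classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)


# Made-up imbalanced sample: 10 eruptive and 90 confined flares.
actual = [ERUPTIVE] * 10 + [CONFINED] * 90
# A trivial "model" that always predicts the majority class.
always_confined = [CONFINED] * 100

counts = confusion_counts(actual, always_confined)
print(counts)             # (0, 0, 10, 90)
print(accuracy(*counts))  # 0.9
```

This trivial classifier reaches 90% accuracy while missing every eruptive flare, which is why accuracy alone is not used to rank the models.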

- Probability of Detection (PoD): The PoD is the probability that our model detects the occurrence of an eruptive flare event. It is calculated as the ratio of true positive events to the total number of actual eruptive flares, and is also known as the True Positive Rate (TPR). It is given by Equation 1:

  $$ PoD=\frac{TP}{TP+FN}. \tag{1} $$

- False Alarm Rate (FAR): A model performs well when it raises few false alarms. We therefore seek to minimize the ratio of confined flare events wrongly classified as eruptive to the total number of actual confined flare events. This ratio is the False Alarm Rate (FAR), otherwise known as the False Positive Rate (FPR), and is given by Equation 2:

  $$ FAR=\frac{FP}{FP+TN}. \tag{2} $$

  A good model maximizes PoD, but doing so trades off against minimizing FAR; thus, an optimum balance should be found between the two metrics.

- False Discovery Rate (FDR): FDR can be defined as the rate at which false alarms occur among all predicted eruptive flare events. FDR is given by Equation 3:

  $$ FDR=\frac{FP}{FP+TP}. \tag{3} $$

- True Skill Score (TSS): To avoid bias towards the majority class, we evaluate the model using TSS, which is the difference between PoD and FAR. A model with a high TSS therefore has a high PoD for eruptive flare events while keeping the FAR low. However, optimizing TSS alone may lead to more False Positives. TSS ranges from −1 to +1, with +1 being the best value for our desired class. TSS is not affected by class imbalance; hence, we use it as our base metric to evaluate the performance of the model. TSS is evaluated as in Equation 4:

  $$ TSS=\frac{TP}{TP+FN}-\frac{FP}{FP+TN}. \tag{4} $$
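Equations 1 to 4 can be evaluated directly from the confusion-matrix counts. The sketch below uses made-up counts and a hypothetical helper `skill_metrics`; it assumes that each denominator is nonzero, i.e., that both classes occur and that at least one event is predicted as eruptive. The second call multiplies the number of confined flares by ten while keeping the per-class behaviour fixed, to illustrate that TSS is unchanged under class imbalance whereas FDR is not.

```python
def skill_metrics(tp, fp, fn, tn):
    """Evaluate Equations 1-4 from confusion-matrix counts.

    Assumes tp + fn > 0, fp + tn > 0, and fp + tp > 0.
    """
    pod = tp / (tp + fn)  # Equation 1: Probability of Detection
    far = fp / (fp + tn)  # Equation 2: False Alarm Rate
    fdr = fp / (fp + tp)  # Equation 3: False Discovery Rate
    tss = pod - far       # Equation 4: True Skill Score
    return {"PoD": pod, "FAR": far, "FDR": fdr, "TSS": tss}


# Made-up counts: 100 eruptive and 100 confined flares.
balanced = skill_metrics(tp=80, fp=10, fn=20, tn=90)

# Same per-class rates, but ten times as many confined flares.
imbalanced = skill_metrics(tp=80, fp=100, fn=20, tn=900)

for label, metrics in (("balanced", balanced), ("imbalanced", imbalanced)):
    summary = ", ".join(f"{name} = {value:.3f}" for name, value in metrics.items())
    print(f"{label:10s} {summary}")
```

In this example PoD, FAR, and TSS are identical for the two samples, while FDR rises from about 0.11 to about 0.56 because false alarms accumulate with the larger number of confined flares.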

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Raju, H., Das, S. Interpretable ML-Based Forecasting of CMEs Associated with Flares. Sol Phys 298, 96 (2023). https://doi.org/10.1007/s11207-023-02187-6

DOI: https://doi.org/10.1007/s11207-023-02187-6