Abstract

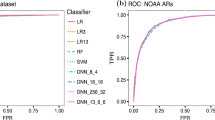

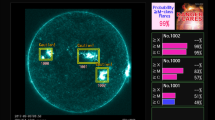

We propose a forecasting approach for solar flares based on data from Solar Cycle 24, taken by the Helioseismic and Magnetic Imager (HMI) on board the Solar Dynamics Observatory (SDO) mission. In particular, we use the Space-weather HMI Active Region Patches (SHARP) product, which provides cut-out magnetograms of solar active regions (ARs) in near-real time (NRT), over a five-year interval (2012 – 2016). Our approach uses a set of thirteen predictors, not included in the SHARP metadata, that are extracted from line-of-sight and vector photospheric magnetograms. We apply several machine learning (ML) and conventional statistics techniques to predict flares of peak magnitude \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\) within a 24 h forecast window. The ML methods used are multi-layer perceptrons (MLP), support vector machines (SVM), and random forests (RF). We conclude that random forests could be the prediction technique of choice for our sample, with the second-best method being multi-layer perceptrons subject to an entropy objective function. A Monte Carlo simulation showed that the best-performing method gives accuracy \(\mathrm{ACC}=0.93(0.00)\), true skill statistic \(\mathrm{TSS}=0.74(0.02)\), and Heidke skill score \(\mathrm{HSS}=0.49(0.01)\) for \({>}\,\mbox{M1}\) flare prediction with probability threshold 15%, and \(\mathrm{ACC}=0.84(0.00)\), \(\mathrm{TSS}=0.60(0.01)\), and \(\mathrm{HSS}=0.59(0.01)\) for \({>}\,\mbox{C1}\) flare prediction with probability threshold 35%.


Notes

Liu et al. (2017) also used the random forest algorithm for solar flare prediction based on SDO/HMI data. However, their sampling strategy and feature extraction differ considerably from ours. For example, Liu et al. (2017) considered only flaring ARs (at the \({>}\,\mbox{B1}\) level), with a sample size of \(N=845\), whereas we consider both flaring and non-flaring ARs, with \(N=23{,}134\).

This is because we considered only the \(B_{r}\) version for predictor \(\mathrm{WL}_{\mathrm{{SG}}}\).

References

Ahmed, O., Qahwaji, R., Colak, T., Dudok De Wit, T., Ipson, S.: 2010, A new technique for the calculation and 3D visualisation of magnetic complexities on solar satellite images. Vis. Comput. 26, 385.

Ahmed, O.W., Qahwaji, R., Colak, T., Higgins, P.A., Gallagher, P.T., Bloomfield, D.S.: 2013, Solar flare prediction using advanced feature extraction, machine learning, and feature selection. Solar Phys. 283(1), 157.

Al-Ghraibah, A., Boucheron, L.E., McAteer, R.T.J.: 2015, An automated classification approach to ranking photospheric proxies of magnetic energy build-up. Astron. Astrophys. 579, A64.

Alissandrakis, C.E.: 1981, On the computation of constant alpha force-free magnetic field. Astron. Astrophys. 100, 197.

Barnes, G., Longcope, D.W., Leka, K.D.: 2005, Implementing a magnetic charge topology model for solar active regions. Astrophys. J. 629, 561.

Barnes, G., Schanche, N., Leka, K., Aggarwal, A., Reeves, K.: 2016, A comparison of classifiers for solar energetic events. Proc. Int. Astron. Union 12(S325), 201.

Bloomfield, D.S., Higgins, P.A., McAteer, R.T.J., Gallagher, P.T.: 2012, Toward reliable benchmarking of solar flare forecasting methods. Astrophys. J. Lett. 747(2).

Bobra, M.G., Couvidat, S.: 2015, Solar flare prediction using SDO/HMI vector magnetic field data with a machine-learning algorithm. Astrophys. J. 798(2), 135.

Bobra, M.G., Sun, X., Hoeksema, J.T., Turmon, M., Liu, Y., Hayashi, K., Barnes, G., Leka, K.D.: 2014, The Helioseismic and Magnetic Imager (HMI) vector magnetic field pipeline: SHARPs – Space-weather HMI active region patches. Solar Phys. 289(9), 3549.

Boucheron, L.E., Al-Ghraibah, A., McAteer, R.T.J.: 2015, Prediction of solar flare size and time-to-flare using support vector machine regression. Astrophys. J. 812(1), 51.

Breiman, L.: 2001, Random forests. Mach. Learn. 45(1), 5.

Breiman, L., Friedman, J., Stone, C.J., Olshen, R.A.: 1984, Classification and Regression Trees.

Brier, G.W.: 1950, Verification of forecasts expressed in terms of probability. Mon. Weather Rev. 78, 1.

Chang, Y.-W., Lin, C.-J.: 2008, Feature ranking using linear SVM. In: Guyon, I., Aliferis, C., Cooper, G., Elisseeff, A., Pellet, J.-P., Spirtes, P., Statnikov, A. (eds.) Proceedings of the Workshop on the Causation and Prediction Challenge at WCCI 2008, Proceedings of Machine Learning Research 3, 53.

Chang, C.-C., Lin, C.-J.: 2011, LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 27:1. Software available at http://www.csie.ntu.edu.tw/~cjlin/libsvm.

Chen, Y.-W., Lin, C.-J.: 2006, Combining SVMs with various feature selection strategies. In: Feature Extraction, Springer, Berlin, 315.

Colak, T., Qahwaji, R.: 2009, Automated solar activity prediction: A hybrid computer platform using machine learning and solar imaging for automated prediction of solar flares. Space Weather 7(6), S06001.

de Souza, R.S., Cameron, E., Killedar, M., Hilbe, J., Vilalta, R., Maio, U., Biffi, V., Ciardi, B., Riggs, J.D.: 2015, The overlooked potential of generalized linear models in astronomy, I: binomial regression. Astron. Comput. 12, 21.

Falconer, D.A., Moore, R.L., Gary, G.A.: 2008, Magnetogram measures of total nonpotentiality for prediction of solar coronal mass ejections from active regions of any degree of magnetic complexity. Astrophys. J. 689, 1433.

Falconer, D., Barghouty, A.F., Khazanov, I., Moore, R.: 2011, A tool for empirical forecasting of major flares, coronal mass ejections, and solar particle events from a proxy of active-region free magnetic energy. Space Weather 9(4), S04003.

Falconer, D.A., Moore, R.L., Barghouty, A.F., Khazanov, I.: 2012, Prior flaring as a complement to free magnetic energy for forecasting solar eruptions. Astrophys. J. 757, 32.

Falconer, D.A., Moore, R.L., Barghouty, A.F., Khazanov, I.: 2014, MAG4 versus alternative techniques for forecasting active region flare productivity. Space Weather 12(5), 306.

Fang, F., Manchester, W. IV, Abbett, W.P., van der Holst, B.: 2012, Buildup of magnetic shear and free energy during flux emergence and cancellation. Astrophys. J. 754, 15.

Fernández-Delgado, M., Cernadas, E., Barro, S., Amorim, D.: 2014, Do we need hundreds of classifiers to solve real world classification problems? J. Mach. Learn. Res. 15, 3133.

Fletcher, L., Dennis, B.R., Hudson, H.S., Krucker, S., Phillips, K., Veronig, A., Battaglia, M., Bone, L., Caspi, A., Chen, Q., Gallagher, P., Grigis, P.T., Ji, H., Liu, W., Milligan, R.O., Temmer, M.: 2011, An observational overview of solar flares. Space Sci. Rev. 159, 19.

Georgoulis, M.K., Rust, D.M.: 2007, Quantitative forecasting of major solar flares. Astrophys. J. Lett. 661, L109.

Granett, B.R.: 2017, Probing the sparse tails of redshift distributions with Voronoi tessellations. Astron. Comput. 18, 18.

Greco, S., Figueira, J., Ehrgott, M.: 2016, Multiple Criteria Decision Analysis, 2nd edn.

Greene, W.H.: 2002, Econometric Analysis, 5th edn.

Guerra, J.A., Pulkkinen, A., Uritsky, V.M., Yashiro, S.: 2015, Spatio-temporal scaling of turbulent photospheric line-of-sight magnetic field in active region NOAA 11158. Solar Phys. 290, 335.

Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann, P., Witten, I.H.: 2009, The WEKA data mining software: An update. ACM SIGKDD Explor. Newsl. 11(1), 10.

Hanssen, A., Kuipers, W.: 1965, On the Relationship Between the Frequency of Rain and Various Meteorological Parameters (with Reference to the Problem of Objective Forecasting).

Hastie, T., Tibshirani, R., Friedman, J.: 2009, The Elements of Statistical Learning: Data Mining, Inference and Prediction, 2nd edn.

Heidke, P.: 1926, Berechnung des Erfolges und der Güte der Windstärkevorhersagen im Sturmwarnungsdienst. Geogr. Ann. 8, 301.

Hornik, K., Stinchcombe, M., White, H.: 1989, Multilayer feedforward networks are universal approximators. Neural Netw. 2(5), 359.

Kliem, B., Török, T.: 2006, Torus instability. Phys. Rev. Lett. 96(25), 255002.

NCAR Research Applications Laboratory: 2015, verification: Weather forecast verification utilities. R package version 1.42.

Li, R., Cui, Y., He, H., Wang, H.: 2008, Application of support vector machine combined with k-nearest neighbors in solar flare and solar proton events forecasting. Adv. Space Res. 42(9), 1469.

Liaw, A., Wiener, M.: 2002, Classification and regression by randomForest. R News 2(3), 18.

Liu, C., Deng, N., Wang, J.T.L., Wang, H.: 2017, Predicting solar flares using SDO/HMI vector magnetic data products and the random forest algorithm. Astrophys. J. 843(2), 104.

MacKay, D.J.C.: 2003, Information Theory, Inference, and Learning Algorithms.

Marzban, C.: 2004, The ROC curve and the area under it as performance measures. Weather Forecast. 19(6), 1106.

Meyer, D., Leisch, F., Hornik, K.: 2003, The support vector machine under test. Neurocomputing 55(1), 169.

Meyer, D., Dimitriadou, E., Hornik, K., Weingessel, A., Leisch, F.: 2015, e1071: Misc functions of the department of statistics, probability theory group (formerly: E1071), TU Wien. R package version 1.6-7.

Pesnell, W.D., Thompson, B.J., Chamberlin, P.C.: 2012, The Solar Dynamics Observatory (SDO). Solar Phys. 275, 3.

Prieto, A., Prieto, B., Ortigosa, E.M., Ros, E., Pelayo, F., Ortega, J., Rojas, I.: 2016, Neural networks: An overview of early research, current frameworks and new challenges. Neurocomputing 214, 242.

R Core Team: 2016, R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna.

Scherrer, P.H., Bogart, R.S., Bush, R.I., Hoeksema, J.T., Kosovichev, A.G., Schou, J., et al.: 1995, The solar oscillations investigation – Michelson Doppler imager. Solar Phys. 162, 129.

Scherrer, P.H., Schou, J., Bush, R.I., Kosovichev, A.G., Bogart, R.S., Hoeksema, J.T., Liu, Y., Duvall, T.L., Zhao, J., Title, A.M., Schrijver, C.J., Tarbell, T.D., Tomczyk, S.: 2012, The Helioseismic and Magnetic Imager (HMI) investigation for the Solar Dynamics Observatory (SDO). Solar Phys. 275, 207.

Schrijver, C.J.: 2007, A characteristic magnetic field pattern associated with all major solar flares and its use in flare forecasting. Astrophys. J. Lett. 655, L117.

Schuh, M.A., Angryk, R.A., Martens, P.C.: 2015, Solar image parameter data from the SDO: Long-term curation and data mining. Astron. Comput. 13, 86.

Sing, T., Sander, O., Beerenwinkel, N., Lengauer, T.: 2005, ROCR: Visualizing classifier performance in R. Bioinformatics 21(20), 7881.

Song, H., Tan, C., Jing, J., Wang, H., Yurchyshyn, V., Abramenko, V.: 2009, Statistical assessment of photospheric magnetic features in imminent solar flare predictions. Solar Phys. 254(1), 101.

Vapnik, V.: 1998, Statistical Learning Theory.

Venables, W.N., Ripley, B.D.: 2002, Modern Applied Statistics with S, 4th edn. Springer, New York.

Vilalta, R., Gupta, K.D., Macri, L.: 2013, A machine learning approach to Cepheid variable star classification using data alignment and maximum likelihood. Astron. Comput. 2, 46.

Wang, H.N., Cui, Y.M., Li, R., Zhang, L.Y., Han, H.: 2008, Solar flare forecasting model supported with artificial neural network techniques. Adv. Space Res. 42(9), 1464.

Wilks, D.S.: 2011, Statistical Methods in the Atmospheric Sciences, International Geophysics 100.

Winkelmann, R., Boes, S.: 2006, Analysis of Microdata, Springer, Berlin.

Witten, I.H., Frank, E., Hall, M.A., Pal, C.J.: 2016, Data Mining: Practical Machine Learning Tools and Techniques.

Yu, D., Huang, X., Wang, H., Cui, Y.: 2009, Short-term solar flare prediction using a sequential supervised learning method. Solar Phys. 255(1), 91.

Yuan, Y., Shih, F.Y., Jing, J., Wang, H.-M.: 2010, Automated flare forecasting using a statistical learning technique. Res. Astron. Astrophys. 10(8), 785.

Zuccarello, F.P., Aulanier, G., Gilchrist, S.A.: 2015, Critical decay index at the onset of solar eruptions. Astrophys. J. 814, 126.

Acknowledgements

We would like to thank the anonymous referee for very helpful comments that greatly improved the initial manuscript. This research has been supported by the EU Horizon 2020 Research and Innovation Action under grant agreement No. 640216 for the “Flare Likelihood And Region Eruption foreCASTing” (FLARECAST) project. Data were provided by the MEDOC data and operations centre (CNES/CNRS/Univ. Paris-Sud), http://medoc.ias.u-psud.fr/, and by the GOES team.


Ethics declarations

Disclosure of Potential Conflicts of Interest

The authors declare that they have no conflicts of interest.

Appendices

Appendix A: Importance of Predictors for Flare Prediction

We computed the Fisher score (Bobra and Couvidat, 2015; Chang and Lin, 2008; Chen and Lin, 2006) and the Gini importance (Breiman, 2001) for every predictor in the case of \({>}\,\mbox{M1}\) and \({>}\,\mbox{C1}\) flares. The obtained values for the importance of several predictors are presented in Figures 7 and 8 for \({>}\,\mbox{C1}\) and \({>}\,\mbox{M1}\) flare prediction, respectively. The Fisher score, \(F\), is defined for the \(j\)th predictor as

\[
F(j) = \frac{\left(\bar{x}^{(+)}_{j} - \bar{x}_{j}\right)^{2} + \left(\bar{x}^{(-)}_{j} - \bar{x}_{j}\right)^{2}}{\frac{1}{n^{+}-1}\sum_{k=1}^{n^{+}}\left(x^{(+)}_{k,j} - \bar{x}^{(+)}_{j}\right)^{2} + \frac{1}{n^{-}-1}\sum_{k=1}^{n^{-}}\left(x^{(-)}_{k,j} - \bar{x}^{(-)}_{j}\right)^{2}}. \qquad \text{(A.1)}
\]

In Equation A.1, \(\bar{x}_{j}\), \(\bar{x}^{(+)}_{j}\), and \(\bar{x}^{(-)}_{j}\) are the mean values of the \(j\)th predictor over the entire sample, the positive class, and the negative class, respectively. Furthermore, \(n^{+}\) (\(n^{-}\)) is the number of positive (negative) class observations, and \(x^{(+)}_{k,j}\) (\(x^{(-)}_{k,j}\)) is the value of the \(j\)th predictor for the \(k\)th observation belonging to the positive (negative) class. The higher the value of \(F(j)\), the more important the \(j\)th predictor.
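
A minimal pure-Python sketch of Equation A.1 for a single predictor, assuming binary labels coded as 1 (positive, flaring) and 0 (negative). The function names `fisher_score` and `rank_by_fisher` are hypothetical and are not taken from the authors' code; the within-class variances use the \(n^{\pm}-1\) divisors defined above.

```python
from statistics import mean, variance


def fisher_score(values, labels):
    """Fisher score F(j) of one predictor (Equation A.1).

    values: observations x_{k,j} of predictor j; labels: 1 = positive, 0 = negative.
    """
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    if len(pos) < 2 or len(neg) < 2:
        raise ValueError("need at least two observations in each class")
    x_all, x_pos, x_neg = mean(values), mean(pos), mean(neg)
    # Numerator: squared distances of the class means from the overall mean.
    numerator = (x_pos - x_all) ** 2 + (x_neg - x_all) ** 2
    # Denominator: within-class sample variances (statistics.variance divides by n - 1).
    return numerator / (variance(pos) + variance(neg))


def rank_by_fisher(columns, labels):
    """Return predictor names sorted from most to least important, plus the scores."""
    scores = {name: fisher_score(col, labels) for name, col in columns.items()}
    return sorted(scores, key=scores.get, reverse=True), scores


# Toy data (illustration only): one predictor separates the classes, one does not.
labels = [0, 0, 0, 1, 1, 1]
columns = {
    "separating": [1, 2, 3, 5, 6, 7],     # class means 2 and 6
    "uninformative": [4, 1, 7, 4, 1, 7],  # identical class means
}
order, scores = rank_by_fisher(columns, labels)
```

Here `order` lists `"separating"` first with a score of 4.0, while the uninformative predictor scores 0. Ranking all thirteen predictors the same way gives the ordering used for the backward elimination in Appendix B.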

The Gini importance is returned by the randomForest function of the randomForest package in R. The higher the Gini importance of the \(j\)th predictor, the more important this predictor is.

We note that the correlation between the two quantities (Fisher score and Gini importance) is \(r = 0.7441 \) for \({>}\,\mbox{C1}\) flares and \(r = 0.7535 \) for \({>}\,\mbox{M1}\) flares. This shows that the two methods qualitatively agree on which predictors are the most important for flare prediction, for both flare classes. Figure 7 also shows that for \({>}\,\mbox{C1}\) flares, the top three ranked predictors for both the Fisher score and the Gini importance are the two versions of Schrijver’s \(R\) and \(\mathrm{WL}_{\mathrm{SG}}\). For \({>}\,\mbox{M1}\) flares, Figure 8 shows that the top four ranked predictors for either the Fisher score or the Gini importance are the two versions of Schrijver’s \(R\), \(\mathrm{WL}_{\mathrm{SG}}\), and \(\mathrm{TLMPIL}_{\mathrm{Br}}\). The terminology for every predictor is explained in Table 7.
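
The agreement check between the two importance measures can be sketched as follows, assuming that \(r\) is Pearson's correlation coefficient computed across predictors. The predictor names `p1` to `p4` and their importance values are invented for illustration and are not the values plotted in Figures 7 and 8.

```python
from math import sqrt


def pearson_r(a, b):
    """Pearson correlation between two equally long score vectors."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two vectors of equal length >= 2")
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    spread = sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return cov / spread


def top_k(scores, k):
    """Names of the k highest-scoring predictors."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical importances for four predictors (illustration only).
fisher = {"p1": 0.9, "p2": 0.7, "p3": 0.2, "p4": 0.1}
gini = {"p1": 120.0, "p2": 95.0, "p3": 40.0, "p4": 35.0}
names = sorted(fisher)  # align both vectors on the same predictor order
r = pearson_r([fisher[n] for n in names], [gini[n] for n in names])
shared_top2 = set(top_k(fisher, 2)) & set(top_k(gini, 2))
```

Because the two measures live on very different scales, a scale-free statistic such as \(r\), combined with a comparison of the top-ranked sets, is a natural way to express their agreement.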

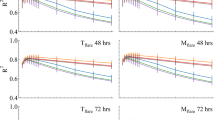

Appendix B: Prediction Models Resulting from Ranking the Predictors

We employed a backward elimination procedure, gradually eliminating predictors according to their Fisher score rank, starting from the model with all \(K=13\) predictors included. In every step, we eliminated the least important predictor from the set of currently included predictors. In this way, we obtained prediction results for models with 2, 3, …, 11, 12 predictors included for the ML methods RF, SVM, and MLP and for the conventional statistics methods LM, PR, and LG. The results of this iterative procedure for \({>}\,\mbox{C1}\) and \({>}\,\mbox{M1}\) flares are presented in Figures 9 and 10, respectively.
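
The elimination loop can be sketched as below, assuming the predictors have already been ranked once by Fisher score (most important first) and that `evaluate` is a hypothetical callable that trains a model on the given predictors and returns an out-of-sample skill score such as the TSS. The stopping size of two predictors mirrors the text; the toy evaluator only records which subsets were tried.

```python
def backward_elimination(ranked, evaluate, k_min=2):
    """Evaluate nested models, dropping the lowest-ranked predictor at each step.

    ranked: predictor names, most important first (fixed Fisher-score order).
    evaluate: hypothetical callable mapping a list of names to a skill score.
    Returns {number_of_predictors: score}.
    """
    results = {}
    current = list(ranked)
    while len(current) >= k_min:
        results[len(current)] = evaluate(current)
        current = current[:-1]  # eliminate the least important remaining predictor
    return results


seen = []


def toy_evaluate(names):
    """Placeholder for a real train/test run: score = number of predictors used."""
    seen.append(tuple(names))
    return len(names)


curve = backward_elimination(["a", "b", "c", "d"], toy_evaluate)
```

With \(K=13\) ranked predictors, the same loop evaluates twelve nested models (13 down to 2 predictors), which is the kind of performance-versus-dimension curve plotted in Figures 9 and 10.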

Figure 10. Same as Figure 9, but for \({>}\,\mbox{M1}\) flares.

Figure 9 shows a cut-off at the six most important parameters (according to the Fisher score in Equation A.1): when more parameters are included in the model, the RF is advantageous over the other two ML algorithms. For low-dimensional prediction models (i.e. fewer than six included parameters) there is no particular advantage in using RF, and MLP or SVM appear to be the better choice. This suggests that, from a highly correlated set of predictors, the MLP and SVM perform well using only a handful of them (fewer than six), whereas the RF continues to improve its performance in higher-dimensional settings, up to the model that includes the 12 most important predictors. It would be interesting to investigate the performance of RF when the number of (correlated) predictors is two or three times that of the present study (24 – 36 predictors): would the upward trend in Figure 9a continue to hold? We note that RF is the only ML algorithm in the present study that belongs to the category of “ensemble” methods. Figure 9 also presents the performance of the three conventional statistics methods LM, PR, and LG. The LM clearly shows the worst forecasting ability, while the other two methods, PR and LG, generally score similar TSS and HSS values. It is also noteworthy that the profiles of PR and LG are very flat as a function of the number of included predictors, even flatter than those of SVM and MLP.

Likewise, Figure 10 shows that in low-dimensional settings, RF is worse than MLP. The cut-off again appears to be six included parameters; above this value, the RF provides better out-of-sample TSS and HSS than MLP. For the SVM, there appears to be a problematic region between three and six included parameters, where adding more parameters degrades its performance; with more than six parameters, the SVM performance improves again. As in the \({>}\,\mbox{C1}\) flare case, Figure 10 shows rather flat TSS and HSS profiles for the conventional statistics methods, with PR and LG behaving better than LM.

One general conclusion is that for very few predictors (\(K<6\)), all methods perform similarly, so for parsimony the conventional statistics methods could be preferred. This also holds for very small samples (\(N<2{,}000\); results available upon request). Conversely, when \(K > 6\) and \(N \geq 10{,}000\), the ML methods, and especially the RF, perform better.

We note that in this appendix, the MLP always has four hidden nodes, and the SVM uses the same \(\gamma\) and cost parameters as the corresponding full \(K=13\) SVM model in each of the \({>}\,\mbox{C1}\) and \({>}\,\mbox{M1}\) flare cases.

Appendix C: Validation Results when Predictions Are Issued Only Once a Day (at Midnight)

We present here forecasting results in the following scenario:

i) The training is performed as in the main scenario.

ii) The testing is performed only on those observations in the testing set of the main scenario that correspond to 00:00 UT. To achieve this, we filter the previous testing set for observations with midnightStatus = TRUE.

We call this training-testing approach the “hybrid method”: training is done with a cadence of 3 h and a forecast window of 24 h, while testing is done with a cadence of 24 h and a forecast window of 24 h. The hybrid method is preferable to training with a cadence of 24 h, which would result in undertrained models because the training sample would be eight times smaller.
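
A minimal sketch of the midnight-only test subset, assuming each test observation is a dictionary with a timezone-aware UTC timestamp under a hypothetical key `time` and the boolean flag `midnightStatus` named in the text. Training is untouched; only the testing set is filtered, so at a 3 h cadence one of every eight test rows is kept.

```python
from datetime import datetime, timedelta, timezone


def is_midnight(ts):
    """True for an observation taken at 00:00 UT (ts is a UTC datetime)."""
    return ts.hour == 0 and ts.minute == 0


def midnight_only(test_rows):
    """Keep only test observations whose midnightStatus flag is True."""
    return [row for row in test_rows if row["midnightStatus"]]


# Toy testing set: two days at the 3 h cadence of the main scenario.
start = datetime(2015, 1, 1, tzinfo=timezone.utc)
test_rows = []
for i in range(16):
    t = start + timedelta(hours=3 * i)
    test_rows.append({"time": t, "midnightStatus": is_midnight(t)})

daily = midnight_only(test_rows)
```

The eightfold reduction of the testing set illustrated here is what inflates the uncertainties of the midnight-only scores.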

Tables 8 and 9 are analogous to Tables 5 and 6 of the main scenario, but for midnight-only (i.e. once-a-day) predictions. For completeness, we recall that Table 8 is for \({>}\,\mbox{M1}\) flare prediction and Table 9 is for \({>}\,\mbox{C1}\) flare prediction.

Comparing Table 8 to Table 5, we see that BS and AUC do not change much on average when we move from the baseline scenario to the midnight prediction scenario. However, the associated uncertainty increases for midnight-only predictions, since the testing set is smaller (one rather than eight predictions per day). More significant differences are observed for the BSS, since the associated climatology is also different. Nevertheless, the finding that RF is the best overall method continues to hold.
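
Why the BSS reacts to the change of scenario while the BS barely does follows from the standard definitions: BS is the mean squared difference between forecast probabilities and binary outcomes, and BSS compares it with the BS of a constant climatological forecast. The sketch below assumes the climatology is the event frequency of the testing set itself (the paper may define the reference differently), so shrinking the testing set to midnight-only rows changes the reference forecast as well.

```python
def brier_score(probs, outcomes):
    """Mean squared error of probability forecasts against 0/1 outcomes."""
    if len(probs) != len(outcomes) or not probs:
        raise ValueError("need equally long, non-empty inputs")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def brier_skill_score(probs, outcomes, climatology=None):
    """BSS = 1 - BS / BS_clim, with BS_clim from a constant climatological forecast."""
    if climatology is None:
        # Assumption: climatology = event frequency of the given testing set.
        climatology = sum(outcomes) / len(outcomes)
    bs_clim = brier_score([climatology] * len(outcomes), outcomes)
    if bs_clim == 0:
        raise ValueError("BSS undefined when all outcomes are identical")
    return 1.0 - brier_score(probs, outcomes) / bs_clim


probs = [0.9, 0.1, 0.8, 0.2]
outcomes = [1, 0, 1, 0]
bs = brier_score(probs, outcomes)          # approximately 0.025
bss = brier_skill_score(probs, outcomes)   # approximately 1 - 0.025 / 0.25 = 0.9
```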

Similar conclusions can be drawn for the \({>}\,\mbox{C1}\) flare prediction case from Tables 9 and 6. Notably, here not even the BSS changes significantly, since the underlying climatology appears similar in both cases. This is because, in contrast to \({>}\,\mbox{M1}\) class flares with a mean frequency of \({\approx}\,5\%\), \({>}\,\mbox{C1}\) class flares show a mean frequency of \({\approx}\,25\%\), so their climatological frequency is less sensitive to the smaller size of the midnight-only testing set.

Finally, Tables 10 and 11 present the skill scores ACC, TSS, and HSS for the midnight prediction scenario, analogously to Tables 2 and 3 for the baseline scenario. For completeness, we note that Table 10 pertains to \({>}\,\mbox{M1}\) flare prediction and Table 11 to \({>}\,\mbox{C1}\) flare prediction. On average, issuing midnight-only predictions does not change ACC, TSS, and HSS much at a given probability threshold. For example, for midnight-only \({>}\,\mbox{M1}\) flare predictions, the RF provides \(\mbox{ACC}=0.93\pm0.01\), \(\mbox{TSS}=0.73\pm0.04\), and \(\mbox{HSS}=0.47\pm 0.03\) for a probability threshold of 15%. For midnight-only \({>}\,\mbox{C1}\) flare predictions, the RF yields \(\mbox{ACC}=0.85\pm0.01\), \(\mbox{TSS}=0.63\pm0.02\), and \(\mbox{HSS}=0.61\pm0.02\) for a probability threshold of 35%.
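
The skill scores follow from the 2×2 contingency table obtained by turning probabilities into yes/no forecasts at a probability threshold. The sketch below uses the standard definitions of ACC, TSS, and HSS and assumes a flare is forecast when the probability is at least the threshold (≥ rather than >); the function names and data are illustrative only.

```python
def contingency(probs, outcomes, threshold):
    """Return (TP, FN, FP, TN), forecasting a flare when p >= threshold."""
    tp = fn = fp = tn = 0
    for p, o in zip(probs, outcomes):
        forecast = p >= threshold
        if o and forecast:
            tp += 1
        elif o:
            fn += 1
        elif forecast:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def skill_scores(tp, fn, fp, tn):
    """Accuracy, true skill statistic, and Heidke skill score."""
    n = tp + fn + fp + tn
    acc = (tp + tn) / n
    # Probability of detection minus probability of false detection.
    tss = tp / (tp + fn) - fp / (fp + tn)
    hss = 2 * (tp * tn - fn * fp) / ((tp + fn) * (fn + tn) + (tp + fp) * (fp + tn))
    return acc, tss, hss


probs = [0.9, 0.2, 0.6, 0.1, 0.4, 0.05]
outcomes = [1, 1, 0, 0, 1, 0]
table = contingency(probs, outcomes, threshold=0.35)
acc, tss, hss = skill_scores(*table)
```

Sweeping `threshold` over a grid and recomputing the table is how threshold-dependent scores, such as those at 15% and 35%, are obtained from a single set of probabilistic forecasts.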

Appendix D: Concluding Remarks on ML Versus Statistical Methods for Flare Forecasting

In order to assess the overall forecasting ability of ML versus statistical approaches in our dataset and problem definition, we employed the weighted-sum (WS) multicriteria ranking approach (Greco, Figueira, and Ehrgott, 2016), using a composite index (CI) defined in Equation D.1:

The CI value was computed for \(6 \times 21 = 126\) probabilistic classifiers, obtained from the set of methods {MLP, LM, PR, LG, RF, SVM} and a probability threshold grid with a 5% step (21 thresholds). The 126 probabilistic classifiers were then ranked in non-increasing order of CI. ACC, TSS, and HSS were normalized so that each metric takes values in the range \([0,1]\) over the set of alternatives; this normalization is useful because the range of ACC values differs from that of TSS and HSS. Here, \(\mathrm{ACC}_{\min}\) and \(\mathrm{ACC}_{\max}\) are the minimum and maximum ACC over all 126 alternative models, and \(\mathrm{TSS}_{\min}\), \(\mathrm{TSS}_{\max}\), \(\mathrm{HSS}_{\min}\), and \(\mathrm{HSS}_{\max}\) are defined similarly. Table 12 presents the detailed results of the multicriteria ranking for all methods and probability thresholds for the \({>}\,\mbox{C1}\) flare forecasting case, and Table 13 gives the corresponding ranking for \({>}\,\mbox{M1}\) flare prediction.
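
The ranking can be sketched as follows under a clearly labeled assumption: the CI of Equation D.1 is taken to be an equally weighted sum of the min-max normalized ACC, TSS, and HSS. The equal weights are our assumption, not necessarily the authors' choice, and the labels `A`, `B`, `C` and their scores are toy values. The grid constants only spell out the 6 × 21 = 126 alternatives.

```python
METHODS = ["MLP", "LM", "PR", "LG", "RF", "SVM"]
THRESHOLDS = [5 * i for i in range(21)]  # 0%, 5%, ..., 100%


def min_max(values):
    """Rescale values to [0, 1] over the set of alternatives."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # degenerate case: the metric does not discriminate
    return [(v - lo) / (hi - lo) for v in values]


def rank_by_ci(alternatives, weights=(1 / 3, 1 / 3, 1 / 3)):
    """alternatives: list of (label, ACC, TSS, HSS). Returns [(label, CI)], best first.

    Equal weights are an assumption standing in for Equation D.1.
    """
    labels = [a[0] for a in alternatives]
    acc = min_max([a[1] for a in alternatives])
    tss = min_max([a[2] for a in alternatives])
    hss = min_max([a[3] for a in alternatives])
    w_acc, w_tss, w_hss = weights
    ci = [w_acc * x + w_tss * y + w_hss * z for x, y, z in zip(acc, tss, hss)]
    return sorted(zip(labels, ci), key=lambda pair: pair[1], reverse=True)


toy = [("A", 0.9, 0.7, 0.5), ("B", 0.8, 0.6, 0.6), ("C", 0.7, 0.5, 0.4)]
ranking = rank_by_ci(toy)
```

Normalizing before summing keeps ACC, whose values cluster near 1, from dominating TSS and HSS purely because of scale.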

Figure 11 summarizes the results of Tables 12 and 13, highlighting the differences between ML and statistical methods (Figures 11b and 11d); Figures 11a and 11c compare the merit of all individual methods developed in this paper. The top \(100\tau\%\) methods are those ranked in the corresponding top positions of Tables 12 and 13. For example, the top \(16.6\%\) (\(\tau = 1/6\)) methods are those ranked in positions 1 – 21, since \(126/6 = 21\). Low values of \(\tau\) thus select the best methods. Figure 11 shows that for both \({>}\,\mbox{C1}\) and \({>}\,\mbox{M1}\) flares, RF is the most frequent method in the top 16.6% of methods, with a frequency of 33.3%; that is, RF appears seven times in positions 1 – 21 of each of Tables 12 and 13. Figure 11b also shows that for \({>}\,\mbox{C1}\) flares, 71% of the top 16.6% methods are ML methods (versus 29% statistical methods). Similarly, in Figure 11d, for \({>}\,\mbox{M1}\) flares, ML dominates the top 16.6% methods with a frequency of 62% (versus 38% for statistical methods).
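
The percentages quoted for Figure 11 are counts over the top positions of a ranking. The sketch below reproduces that arithmetic, assuming the ranking is a list of method names ordered by CI. The toy ranking is constructed only to match the 7/21 (RF) and 15/21 (ML) counts quoted for \({>}\,\mbox{C1}\) flares, with each method appearing 21 times overall; it is not the content of Table 12.

```python
from collections import Counter

ML_METHODS = {"MLP", "SVM", "RF"}


def top_share(ranking, tau):
    """Frequency of each method among the top round(tau * len(ranking)) positions."""
    n_top = round(tau * len(ranking))
    counts = Counter(ranking[:n_top])
    return {method: c / n_top for method, c in counts.items()}, n_top


def ml_share(ranking, tau):
    """Fraction of ML methods among the top positions (the rest are statistical)."""
    shares, _ = top_share(ranking, tau)
    return sum(f for method, f in shares.items() if method in ML_METHODS)


# Toy ranking of 126 alternatives (illustration only).
top21 = ["RF"] * 7 + ["MLP"] * 5 + ["SVM"] * 3 + ["LG"] * 6
rest = ["RF"] * 14 + ["MLP"] * 16 + ["SVM"] * 18 + ["LG"] * 15 + ["PR"] * 21 + ["LM"] * 21
ranking = top21 + rest

shares, n_top = top_share(ranking, tau=1 / 6)
ml = ml_share(ranking, tau=1 / 6)
```

With \(\tau = 1/6\) the cut falls at position 21, RF accounts for 7/21 ≈ 33% of the top positions, and ML methods together account for 15/21 ≈ 71%.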

Figure 11. Descriptive statistics on the frequency with which each forecasting method, at any probability threshold, appears in the top \(100\tau\%\) of the CI distribution. Panels (a) and (c) show frequencies for all methods, and panels (b) and (d) group the results by category of methods (ML vs. statistical methods). For example, for \({>}\,\mbox{C1}\) flares in panel (a), the top 16.6% methods are dominated by RF with a frequency of \(7/21=33\%\). Likewise, for \({>}\,\mbox{M1}\) flares in panel (c), the top 16.6% methods are again dominated by RF with a frequency of \(7/21=33\%\).


Cite this article

Florios, K., Kontogiannis, I., Park, SH. et al. Forecasting Solar Flares Using Magnetogram-based Predictors and Machine Learning. Sol Phys 293, 28 (2018). https://doi.org/10.1007/s11207-018-1250-4


DOI: https://doi.org/10.1007/s11207-018-1250-4