Abstract

Observations of the solar photosphere from the ground encounter significant problems caused by Earth’s turbulent atmosphere. Before image reconstruction techniques can be applied, the frames obtained in the most favorable atmospheric conditions (the so-called lucky frames) have to be carefully selected. However, estimating the quality of images containing complex photospheric structures is not a trivial task, and the standard routines applied in nighttime lucky imaging observations are not applicable. In this paper we evaluate 36 methods dedicated to the assessment of image quality, which were presented in the literature over the past 40 years. We compare their effectiveness on simulated solar observations of both active regions and granulation patches, using reference data obtained by the Solar Optical Telescope on the Hinode satellite. To create images that are affected by a known degree of atmospheric degradation, we employed the random wave vector method, which faithfully models all the seeing characteristics. The results provide useful information about the method performances, depending on the average seeing conditions expressed by the ratio of the telescope’s aperture to the Fried parameter, \(D/r_{0}\). The comparison identifies three methods for consideration by observers: Helmli and Scherer’s mean, the median filter gradient similarity, and the discrete cosine transform energy ratio. While the first method requires less computational effort and can be used effectively in virtually any atmospheric conditions, the second method shows its superiority at good seeing (\(D/r_{0}<4\)). The third method should mainly be considered for the post-processing of strongly blurred images.

Similar content being viewed by others

1 Introduction

Ground-based telescopes suffer from the degradation of image quality caused by the turbulent nature of Earth’s atmosphere. This phenomenon, frequently termed “seeing”, prevents large-aperture telescopes from achieving their theoretical angular resolution. Even the best observing sites do not allow for observations in the visible with a resolution higher than the diffraction limit of a 20 cm telescope. This means that long exposures of both very small and extremely large telescopes show the same angular resolution.

The Fried parameter, \(r_{0}\), is a quantity that describes the average atmospheric conditions. The Fried parameter is the distance across which the expected change of the wavefront phase is exactly \(1/2\pi\). It may also be understood as the size of the telescope’s aperture over which the theoretical diffraction limit may be easily achieved. Since at the best observational sites \(r_{0}\) rarely reaches 20 cm in the visible (\(\lambda=0.5~\upmu \mbox{m}\); Socas-Navarro et al., 2005), the long exposures obtained from any telescope do not expose a resolution higher than is reached by a 20 cm telescope. Importantly, the \(D/r_{0}\) ratio is frequently used to determine how far away the resolution of images obtained by a telescope of \(D\) diameter is from its theoretical diffraction limit.

So far, numerous techniques, both hardware and software based, have been developed to enhance the resolution of astronomical observations. Probably the most prominent hardware-based approach is adaptive optics (AO; Hardy, 1998), in which the compensation of wavefront distortions is performed directly by a deformable mirror. The AO for solar imaging differs from the method used in nighttime observations (Rimmele and Marino, 2011; Rimmele, 2004). There is no point-like object for wavefront sensing, which is usually performed with a Shack–Hartmann sensor. Instead, the cross correlation between images observed in individual sub-apertures has to be calculated to estimate the shape of the wavefront (Löfdahl, 2010). Significantly poorer daytime atmospheric conditions place much higher demands on the updating frequency of a deformable mirror. Fortunately, there is also more light available for the sensors, which enables these observations.

A popular example of a software-based approach to improve the quality of images is the method called lucky imaging (LI; Scharmer, 1989; Law, Mackay, and Baldwin, 2006). Owing to the availability of very fast and low-noise cameras, this method has recently become a very popular high-resolution acquisition technique in the visible. However, it is dedicated to smaller telescopes (\({<}\,2~\mbox{m}\)), since it relies on the fact that there is only a small chance to obtain a diffraction-limited image in a series of very short exposures (i.e. shorter than the coherence time of the atmosphere – usually up to several milliseconds). This chance, estimated by Fried (1978), is relatively high for smaller apertures (e.g. \(D/r_{0}<2\)), while it quickly becomes negligible for greater mirrors.

There are many combinations of software and hardware techniques of high-resolution imaging. As an example, Colodro-Conde et al. (2017) or Mackay et al. (2012) presented the fusion of LI and adaptive optics (AOLI). In Schödel and Girard (2012), the deconvolution of a series of short exposures was shown as another possible way to enhance the quality of astronomical images. A wide range of speckle-interferometry methods is also available (Saha, 2007; Tokovinin and Cantarutti, 2008). They involve approaches such as aperture masking (Monnier et al., 2004; Ireland, 2013) or speckle bispectral reconstructions (Lohmann, Weigelt, and Wirnitzer, 1983; Hofmann and Weigelt, 1993). Some of them have been successfully used for a long time in solar imaging (von der Lühe, 1993).

Whether with or without AO, the observer acquires a series of images and then applies several post-processing steps (e.g., Denker et al., 2005). One step is always the selection of best exposures, i.e. those with the highest image quality. Such LI has been very popular for a long time in ground-based solar observatories because of its apparent simplicity and very low hardware requirements (only a fast camera is necessary; Beckers, 1989). However, the most advanced imagers that use lucky exposures are far from being simple (Ferayorni et al., 2016). The rejection of less useful frames is possible as a result of the high intensity of the observed object, which allows for acquiring thousands of exposures per second at relatively low resolution or tens of frames if large-format cameras are used. The selection is also essential to reach the high quality of final outcomes, regardless of the complexity of the succeeding image reconstruction (e.g. simple stacking, deconvolution, or bispectral analysis).

The assessment of the temporal quality of the registered solar images becomes challenging because the character of the observed scene is complex. It is impossible to use a basic quality metric that is frequently used in LI – the intensity of the brightest pixel in a speckle pattern – as it requires a well-isolated point-like object (star). Instead, a widely employed method for the quality assessment of solar images is the root-mean-square contrast (rms contrast), which assumes the uniform isotropic properties of granulation (Danilovic et al., 2008; Denker et al., 2005; Scharmer et al., 2010). Unfortunately, the method has several drawbacks, such as a significant dependency of its effectiveness on the wavelength (Albregtsen and Hansen, 1977) and the sensitivity to the structural contents of the image (Deng et al., 2015). This implies that the tip-tilt effects, which move the observed patch and thus slightly change its contents, will also introduce additional noise into the quality estimation because image features such as sunspots or pores will move in and out of the analyzed region.

Motivated by a recent work by Deng et al. (2015), who introduced an objective image quality metric to solar observations, we decided to explore which of the numerous quality metrics (QMs) available in the literature can be employed to select solar frames. This was carried out by investigating the correlation between the outcomes of QMs and the known strength of the simulated turbulence. Our review includes 36 QMs with many varying implementations. Implementations refer to different gradient operators, kernel sizes, thresholding techniques, etc., without implying conceptual changes. We use reference images from the Solar Optical Telescope (SOT: Tsuneta et al., 2008) on board the Hinode satellite and use an advanced method for modeling atmospheric turbulences, the random wave vector (RWV, Voitsekhovich et al., 1999) method. The scintillation noise is also included to faithfully reflect all the seeing characteristics. Moreover, we check the computational efficiency of the QMs to indicate which methods are more suitable for application in high-speed real-time image processing.

2 Experiment

2.1 Turbulence Simulation

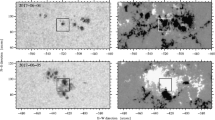

Since observations from space are not disturbed by the atmosphere, we used images obtained with the SOT as reference data for our experiments. From a wide range of registered images in the Hinode database,Footnote 1 we selected a range that contained several regions of enhanced magnetic field (sunspots). The image was originally obtained at the green continuum wavelength, on 29 November 2015 at 21:19:44 UT. In Figure 1 we show the selected reference image and indicate the positions of six extracted \(100\times100\)-pixel patches, (roughly \(5'' \times5''\) each, image scale of \(0.054''~\mbox{pixel}^{-1}\)). Patches W1, W2, and W3 include sunspots, while W4, W5, and W6 contain granulation.

Part of the solar photosphere imaged by the Hinode satellite. The six patches (W1 – W6, \(100\times100\) pixels) extracted for the experiment are indicated. Three of them (W1, W2, W3) contain parts of active regions, while the remaining ones (W4, W5, W6) include only granulation. The image was obtained in the green continuum on 29 November 2015 at 21:19:44 UT.

To investigate the response of various QMs for a given turbulence strength, we modeled the transfer function of the atmosphere using the RVW method. The method allows for reliable modeling of amplitude and phase fluctuations of the incoming optical wavefront. Since a patch of the solar photosphere that is used for quality assessment can be arbitrarily small, we assumed that it is within the isoplanatic angle. Thus, anisoplanatic effects, which are more complicated to simulate, were neglected in this study. Using the RVW, we generated 1000 blurring kernels (speckles patterns) for ten distinctive seeing condition scenarios: \(D/r_{0} = 1, 2, \ldots, 10\). We assumed that this range is representative for observations at the best observing sites. Each generated kernel consisted of \(1024\times1024\) pixels, and the resolution was twice higher than required by the Nyquist limit, i.e. a single pixel corresponded to an angular size of \(\lambda/ 4D\) (\(\lambda\) – wavelength, \(D\) – telescope diameter). We opted for this oversampling to be able to combine simulated speckle kernels with reference Hinode images. Each blurring kernel was normalized so that the summed intensity over all pixels was unity. Exemplary kernels with the corresponding long-exposure seeing disks are presented in Figure 2. Evidently, the poorer the seeing conditions (higher \(D/r_{0}\)), the more complex the kernel shape. The simulated tip-tilt effect is also visible as a displacement of the kernel centroid.

Exemplary blurring kernels (negatives) employed in the experiment. The rows correspond to various \(D/r_{0}\) relative atmospheric conditions, while the last column exposes the simulated long-exposure seeing disk (i.e. an ensemble average over all the kernels for a given \(D/r_{0}\)). Each kernel is presented in a box with a side length of \(40\lambda/D\). The auxiliary gray lines indicate the box center, highlighting the simulated tip-tilt effect.

Atmospheric scintillation results in a varying attenuation of the flux that is collected by a telescope. This type of noise was recently investigated by Osborn et al. (2015). The authors presented the following formula for estimating the relative variance of the total intensity of the observed object:

where \(D\) is telescope diameter, \(\gamma\) is the zenith distance, \(h_{\mathrm{obs}}\) is the altitude of the observatory, \(H\) is the scale height of the atmospheric turbulence, generally assumed to be \({\sim}\,8000~\mbox{m}\), and \(C_{Y}\) is the scaling coefficient, which can be determined from turbulence profilers (SCIDARs) and was estimated to lie between 1.30 – 1.67 for the best observing sites (see Table 1 in Osborn et al., 2015).

To include these fluctuations in our simulated images, we multiplied each kernel by a random variable with an expected value of unity and a standard deviation equal to \(\sigma_{s}\). We assumed that 1) a telescope size \(D=0.5~\mbox{m}\) according to the size of Hinode/SOT instrument, 2) observations made at a zenith distance of \(\gamma=60^{\circ}\), and 3) a low scintillation index, \(C_{y}=1.5\), which is expected for 4) a high-altitude observatory, \(h_{\mathrm{obs}}= 3000~\mbox{m}\). For these conditions, the relative scintillation noise is \(\sigma_{s} = 0.032\).

To properly convolve a blurring kernel with a reference patch, the scale of a kernel has to be the same as the image scale. For the assumptions given above, i.e. a \(D = 0.5~\mbox{m}\) telescope observing at \(\lambda= 550~\mbox{nm}\), we obtain an image scale of \(0.055''~\mbox{pixel}^{-1}\). This means that the sampling of the blurring kernels (\(D/4 \lambda\)) and the assumed telescope size allow for convolving kernels with the Hinode images without any prescaling. Several examples of solar images degraded by simulated blurring kernels are presented in Figure 3.

The time-dependent quality, when observing stellar objects, can be determined from the relative intensity of the brightest pixel in the normalized kernel. This is a widely accepted approach to select the sharpest frames in nighttime LI (Law, Mackay, and Baldwin, 2006). However, in our case, the object is not point-like and shows complex structures. Thus, we decided to use the quality measure based on the amount of energy preserved from the original frequency spectrum. Since the original image is convolved with a blurring kernel and the original amplitudes in the frequency spectrum are multiplied by the amplitudes of a kernel, the value of the proposed quality measure can be calculated by summing squared amplitudes in a 2D Fourier transform of a kernel. According to Parseval’s theorem, this is also equal to the sum of squared intensities of a kernel directly in the image plane.

Since turbulence is a random process, it is also possible that the temporal seeing conditions will become much better or much worse than indicated by the average \(D/r_{0}\) (Fried, 1978). This is in fact what we also observed in our data. For all ten sets of simulated kernels (\(D/r_{0}=1,2,\ldots,10\)), the spread of temporal quality is shown in the histograms in Figure 4. In the right part of Figure 4, we plot the amount of preserved energy of the original image over all 1000 kernels for four values of \(D/r_{0}\). Clearly, the quality for the average \(D/r_{0}=3\) can sometimes outperform the conditions registered at \(D/r_{0}=1\). Therefore, \(D/r_{0}\) expresses only the average blurring strength, while it cannot be taken as a reliable estimator of the quality of individual frames.

Dependencies of temporal turbulence strength expressed by the maximum intensity in a normalized blurring kernel on the average seeing conditions \(D/r_{0}\). Left: amount of preserved image energy for four 1000-kernel sets \(D/r_{0}=1,3,5\), and 7. Right: histograms of the preserved image energy over all the simulated kernels.

2.2 Methods

A set of 35 state-of-the-art QMs was provided in the review of Pertuz, Puig, and Garcia (2013). The authors considered the most popular methods dedicated to the assessment of the focus in complex scenes by means of contrast measurement. The methods can be categorized into six families of operators, based on gradients (GRA), Laplacians (LAP), wavelets (WAV), intensity statistics (STA), discrete-cosine transform (DCT), and the miscellaneous methods (MIS), i.e. those that cannot be categorized into any other group. An overview of the methods with their abbreviations and references is given in Table 1.

Within the set of techniques, we can find the most popular method used in solar image processing, i.e. the rms-contrast measure, which was called normalized gray-level variance and is abbreviated STA5 (we follow this nomenclature). The most recent QM proposed by Deng et al. (2015), the median filter gradient similarity (GRA8), was included in our comparison as well.

To improve the effectiveness of QMs for solar observations, we had to adjust the parameters of many techniques. This was carried out experimentally by tuning the parameter, considering the properties of the observed solar scene and analyzing the algorithm details. Moreover, for some of the methods, we proposed major, but still simple, modifications, which allowed enhancing their effectiveness. This resulted in the creation of various implementations of most of the methods (labeled versions A, B, etc.). The set of investigated parameter values and/or the details of the applied modifications are given in Table 2. The distance parameters, such as radius or size of the local filtering window, are expressed in pixels (in our case, \(1~\mbox{pixel} = 0.055''\)). The thresholds are given in relative values, which means that before applying thresholding, the intensities in a patch were normalized such that the intensities cover the range from zero to unity. Several methods have two adjustable parameters, therefore we evaluated various combinations of their values. Including all the modifications, we obtained a total number of 105 implementations of 36 techniques, which are included in our comparison.

2.3 Data Analysis

To assess the performance of all methods, we investigated the correlation between the results of QMs and the actual expected quality \(Q\). As stated before, the quality was estimated by the total preserved energy with respect to the reference undisturbed image. We observed that the relation between these two quantities is almost always nonlinear. Fortunately, it is sufficient that the dependency is monotonic as it allows us to distinguish between better and poorer atmospheric conditions. To estimate the effectiveness of the methods, instead of Pearson’s correlation coefficient, we therefore used the Spearman rank-order correlation \(C_{s}\). This coefficient is insensitive to any nonlinearities in the observed dependencies.

As an example, we show in the upper panel of Figure 6 the significantly different dependencies between the expected quality \(Q\) and the outcomes of two QMs (LAP1 and MISB, on the left and right side, respectively) calculated for image patch W1. Each set of \(D/r_{0}\) is presented with a different marker/color. We decided to calculate the Spearman correlation coefficient in two ways: 1) within a set of observations for each \(D/r_{0}\), and 2) across all the simulated conditions \(D/r_{0}=1\,\mbox{--}\,10\). We present the corresponding results of \(C_{s}\) in each \(D/r_{0}\) subset in the bar plots of Figure 6. The correlation depends on \(D/r_{0}\) so that it becomes apparent which range of atmospheric conditions is most suitable for a given method. For example, the LAP1 method allows for an effective estimation of the image quality in \(D/r_{0}=1\), while MIS1B is completely useless in this range. The proposed approach to calculate the correlation coefficient permitted estimating the performance of each method for a range of typical atmospheric conditions.

In the example presented in Figure 6, the superiority of LAP1 over MIS1B is also visible for \(C_{s}\) calculated over the whole set of \(D/r_{0}\). However, the difference is significantly smaller than in particular groups as both methods achieve \(C_{s} > 0.9\) (0.98 and 0.90 for LAP1 and MIS1B, respectively). This is due to the wide range of considered \(Q\) values, which makes the correlation between the quantities more evident and leads to the higher value of the \(C_{s}\) coefficient. Although the differences are not so significant, the correlation for such a wide range of \(Q\) values still allows for a comparison of the overall effectiveness of QMs, but within a much narrower range of \(C_{s}\) values.

2.4 Results and Discussion

The results of the comparison are presented in Figure 5 and Figure 7. While in Figure 5 we present only the best four methods for each \(D/r_{0}\), the average performance obtained for whole QM families is summarized in Figure 7. We divided the results into two categories, according to the contents of the observed image: W1, W2, and W3 show active regions, and W4, W5, and W6 have granulation.

Ranking of the best-quality measures for various \(D/r_{0}\) seeing conditions and six analyzed patches: W1, W2, and W3 show active regions, and W4, W5, and W6 have granulation areas. The lower bar plots show the rankings for wide-range correlation across all atmospheric conditions \(D/r_{0}=1\,\mbox{--}\,10\).

Examples of the correlation between the measured and expected image quality for two distinct methods: LAP1 on the left side, and MIS1B on the right side. The analysis was performed for image patch W1. The calculated correlation coefficients in each \(D/r_{0}\) set and over the whole data set (\(D/r_{0}=1\,\mbox{--}\,10\)) are presented in the lower bar plots.

According to the results presented in Figure 5, there is no single winner that would cover all possible atmospheric conditions and scene characteristics. However, three techniques performed distinctively better, and they are therefore discussed below.

One of the methods with a very impressive performance is Helmli and Scherer’s mean method (MIS5), proposed by Helmli and Scherer (2001). This technique provided very good results for the whole range of \(D/r_{0}\) conditions and both types of observed solar regions. For all atmospheric conditions it was always among the four best methods. As indicated by the distinctively higher correlation coefficients calculated over \(D/r_{0}=1\,\mbox{--}\,10\), its usefulness should be considered when the seeing varies strongly or is unknown. In summary, this method should be the first choice of all techniques we tested.

The second noteworthy method is the median filter gradient similarity (GRA8) method, which was recently proposed by Deng et al. (2015). It shows the best performance for very good atmospheric conditions (\(D/r_{0}<4\)). However, to achieve high effectiveness, we had to slightly modify the method by 1) combining both horizontal and vertical gradients, and 2) changing the window size. The superiority of the method is evident especially for active regions (W1 – W3), while it is slightly less efficient when assessing only granulation patches (W4 – W6), especially for \(D/r_{0}>2\). Interestingly, this technique was not included in the best 12 methods indicated by wide-range correlations, \(D/r_{0}=1\,\mbox{--}\,10\), which indicates that it should not be applied for observations with poor or unknown atmospheric conditions. The results of the GRA8 method recommend it to be used in AO-assisted observations since in this case the image quality is significantly higher and the effective \(D/r_{0}\) is small.

The last method that provides good results is the DCT energy ratio proposed by Shen and Chen (2006). Its performance is the highest for moderate and poor atmospheric conditions when observing granulation regions. This method is the second best method when the whole range of \(D/r_{0}\) is taken into account for patches W4 – W6. The parameter of this method, that is, the size of the sub-block, should be selected according to the blurring strength, i.e., the larger \(D/r_{0}\), the larger the sub-block.

The method that is frequently used in solar observations, rms contrast or normalized gray-level variance (STA5), showed a surprisingly poor performance. Since the rms-contrast measure was originally developed for granulation regions, with isotropic and uniform characteristics, we investigated how the method performed for this scenario. The results presented in Figure 8 indicate that the technique indeed allows achieving significantly higher correlation values for granulation. Still, the outcomes based on rms contrast were much less correlated with the expected image quality than the results achieved by the best techniques. Therefore, it did not appear in any ranking presented in Figure 5. Our conclusions agree with the observations made by Deng et al. (2015), who also indicate a relatively low efficiency of the common rms-contrast measure.

Some interesting conclusions can be drawn from the average performance of each QM family presented in Figure 7. The Laplacian-based methods (LAP) show very good average correlation with \(D/r_{0}\) for both types of observed scenarios. While the LAP-based methods are indicated only several times in the rankings presented in Figure 5, they should still be considered as a base for new and better techniques. The greatest difference between the efficiency when changing from granulation to active regions can be observed in statistical methods (STA). This agrees well with the results shown in Figure 8 and appears to be due to the assumption made in STA methods that regions expose uniform properties across the observed field. The strange shape of the GRA dependency visible in the right plot of Figure 7 arises as a result of the characteristics of GRA8. As we stated before, the performance of this method increases significantly as \(D/r_{0}\) decrease. This behavior biases the average efficiency of the GRA family.

The possibility of using a method in real-time image selection can be especially important, as it allows for recording only the most useful data. For completeness of our comparison, we therefore measured the time required for processing a single image patch by each method. The evaluations were performed on a single core of IntelCore i7-3930K operating at 3.2 Ghz. The procedures were repeated \(10^{4}\) times to obtain the average execution time. The results are presented in Table 3, which lists the methods and their average computation time (single-image analysis) in seconds, alternately.

The results show that real-time frame selection for the assumed size of an image patch is possible for frame rates of more than 1000 fps for most of the analyzed methods. This can be not true, however, when large full-resolution frames need to be processed and/or when several image calibration steps have to be applied. For this case, the usage of graphics processing units (GPU) and accompanying optimization of a code should be considered. However, in our comparison we decided to perform measurements on a single CPU core, so that the further reduction of the execution time with a multi-core machine can be estimated. For most of the included methods, the analysis was performed independently in local sub-regions of the whole image, which makes them easily parallelized.

We observed that GRA8 requires visibly more computation time (\({>}\,0.01~\mbox{s}\)) than MIS5 (\({<}\,0.002~\mbox{s}\)). This is mostly due to the median filtering, which requires sorting pixels in a sliding window. This indicates that GRA8, compared to MIS5, would require more computing resources and/or better code optimization to operate at the high frame rates that are frequently used in solar observations. Unfortunately, the discrete cosine transform techniques (DCT1 and DCT2) have significantly higher execution times, which makes them useless for real-time computation. These methods should be considered only in post-processing. The fastest method was the one most frequently used in solar observations, STA5. Unfortunately, its mediocre performance is not compensated by the distinctively higher computational efficiency.

3 Conclusions

Image quality assessment is an integral part of observations of the solar photosphere and chromosphere. It is essential to precisely select only highest fidelity images before performing consecutive image reconstruction. Unfortunately, the complexity and variety of the observed structures make estimating the image quality a challenging task. There is no single point-like object available, therefore the selection techniques used in nighttime LI are not applicable.

In this study we decided to employ 36 techniques with various implementations and evaluated their precision in selecting the best exposures. The methods were based on various principles (gradients, intensity statistics, wavelets, Laplacians, and discrete cosine transforms) and were published over the past 40 years. Additionally, we enhanced their performance by applying simple modifications and by adjusting their tunable parameters.

In the comparison we employed reference images that contained both active regions and granulation areas, obtained by the Hinode satellite. The selected patches were degraded by convolving them with blurring kernels generated by the RWV method, which faithfully reflects the seeing characteristics. We assumed a wide range of relative atmospheric conditions, starting from nearly undisturbed observations at \(D/r_{0}=1\) to mediocre seeing at \(D/r_{0}=10\). The reference quality of each simulated image was objectively estimated by measuring the amount of energy preserved in the Fourier spectrum of the original image. To assess the efficiency of the compared techniques, we finally calculated Spearman’s correlation coefficient between the outcomes of each method and the expected image quality.

The results of our comparison showed that the efficiency depends on the strength of the atmospheric turbulence. For good seeing, \(D/r_{0}<4\), the best method is the median filter gradient similarity (Deng et al., 2015), (GRA8), the most recent technique proposed for solar observations. It is noteworthy that, the original idea had to be slightly modified to enhance the method performance. On the other hand, when the seeing conditions cover a wide range, the most efficient method is Helmli and Scherer’s mean (Helmli and Scherer, 2001), (MIS5). This method should be considered when observing without AO or when the seeing conditions are unknown. The third outstanding method was the DCT energy ratio (Shen and Chen, 2006), which showed high performance in poor atmospheric conditions when observing granulation regions. The measurements of the execution time indicated that of the three mentioned techniques, Helmli and Scherer’s mean has a significantly higher computational efficiency, which recommends it to be used with extremely high frame rates and/or larger image patches.

Notes

Hinode database query form is available at http://darts.isas.jaxa.jp/solar/hinode/query.php .

References

Ahmad, M.B., Choi, T.S.: 2007, Application of three dimensional shape from image focus in LCD/TFT displays manufacturing. IEEE Trans. Consum. Electron. 53(1), 1. DOI .

Albregtsen, F., Hansen, T.L.: 1977, The wavelength dependence of granulation (0.38–2.4 μm). Solar Phys. 54, 31. DOI . ADS .

Beckers, J.M.: 1989, Improving solar image quality by image selection. In: Rutten, R.J., Severino, G. (eds.) NATO Advanced Science Institutes (ASI) Series C, NATO Advanced Science Institutes (ASI) Series C 263, 55. ADS .

Brenner, J.F., Dew, B.S., Horton, J.B., King, T., Neurath, P.W., Selles, W.D.: 1976, An automated microscope for cytologic research a preliminary evaluation. J. Histochem. Cytochem. 24(1), 100. PMID: 1254907. DOI .

Chern, N.N.K., Neow, P.A., Ang, M.H.: 2001, Practical issues in pixel-based autofocusing for machine vision. In: Proceedings of IEEE International Conference on Robotics and Automation 3, 2791. DOI .

Colodro-Conde, C., Velasco, S., Fernández-Valdivia, J.J., López, R., Oscoz, A., Rebolo, R., Femenía, B., King, D.L., Labadie, L., Mackay, C., Muthusubramanian, B., Pérez Garrido, A., Puga, M., Rodríguez-Coira, G., Rodríguez-Ramos, L.F., Rodríguez-Ramos, J.M., Toledo-Moreo, R., Villó-Pérez, I.: 2017, Laboratory and telescope demonstration of the TP3-WFS for the adaptive optics segment of AOLI. Mon. Not. Roy. Astron. Soc. 467, 2855. DOI . ADS .

Danilovic, S., Gandorfer, A., Lagg, A., Schüssler, M., Solanki, S.K., Vögler, A., Katsukawa, Y., Tsuneta, S.: 2008, The intensity contrast of solar granulation: Comparing Hinode SP results with MHD simulations. Astron. Astrophys. 484, L17. DOI . ADS .

Deng, H., Zhang, D., Wang, T., Ji, K., Wang, F., Liu, Z., Xiang, Y., Jin, Z., Cao, W.: 2015, Objective image-quality assessment for high-resolution photospheric images by median filter-gradient similarity. Solar Phys. 290, 1479. DOI . ADS .

Denker, C., Mascarinas, D., Xu, Y., Cao, W., Yang, G., Wang, H., Goode, P.R., Rimmele, T.: 2005, High-spatial-resolution imaging combining high-order adaptive optics, frame selection, and speckle masking reconstruction. Solar Phys. 227(2), 217. DOI .

Ferayorni, A., Beard, A., Cole, W., Gregory, S., Wöeger, F.: 2016, Bottom-up laboratory testing of the DKIST Visible Broadband Imager (VBI). In: Modeling, Systems Engineering, and Project Management for Astronomy VI, Proceedings of SPIE 9911. DOI . ADS .

Firestone, L., Cook, K., Culp, K., Talsania, N., Preston, K.: 1991, Comparison of autofocus methods for automated microscopy. Cytometry 12(3), 195. DOI .

Fried, D.L.: 1978, Probability of getting a lucky short-exposure image through turbulence. J. Opt. Soc. Am. 68, 1651. ADS .

Geusebroek, J.-M., Cornelissen, F., Smeulders, A.W.M., Geerts, H.: 2000, Robust autofocusing in microscopy. Cytometry 39(1), 1. DOI .

Groen, F.C.A., Young, I.T., Ligthart, G.: 1985, A comparison of different focus functions for use in autofocus algorithms. Cytometry 6(2), 81. DOI .

Hardy, J.W.: 1998, Adaptive Optics for Astronomical Telescopes, 448. ADS .

Helmli, F.S., Scherer, S.: 2001, Adaptive shape from focus with an error estimation in light microscopy. In: Proceedings of the 2nd International Symposium on Image and Signal Processing and Analysis. In Conjunction with 23rd International Conference on Information Technology Interfaces, 188. DOI .

Hofmann, K.-H., Weigelt, G.: 1993, Iterative image reconstruction from the bispectrum. Astron. Astrophys. 278, 328. ADS .

Ireland, M.J.: 2013, Phase errors in diffraction-limited imaging: Contrast limits for sparse aperture masking. Mon. Not. Roy. Astron. Soc. 433, 1718. DOI . ADS .

Law, N.M., Mackay, C.D., Baldwin, J.E.: 2006, Lucky imaging: High angular resolution imaging in the visible from the ground. Astron. Astrophys. 446, 739. DOI .

Lee, S.-Y., Kumar, Y., Cho, J.-M., Lee, S.-W., Kim, S.-W.: 2008, Enhanced autofocus algorithm using robust focus measure and fuzzy reasoning. IEEE Trans. Circuits Syst. Video Technol. 18(9), 1237. DOI .

Lee, S.Y., Yoo, J.T., Kumar, Y., Kim, S.W.: 2009, Reduced energy-ratio measure for robust autofocusing in digital camera. IEEE Signal Process. Lett. 16(2), 133. DOI .

Löfdahl, M.G.: 2010, Evaluation of image-shift measurement algorithms for solar Shack–Hartmann wavefront sensors. Astron. Astrophys. 524, A90. DOI . ADS .

Lohmann, A.W., Weigelt, G., Wirnitzer, B.: 1983, Speckle masking in astronomy – Triple correlation theory and applications. Appl. Opt. 22, 4028. DOI . ADS .

Lorenzo, J., Castrillon, M., Mendez, J., Deniz, O.: 2008, Exploring the use of local binary patterns as focus measure. In: Proceedings of International Conference on Computational Intelligence for Modelling Control Automation, 855. DOI .

Mackay, C., Rebolo-López, R., Castellá, B.F., Crass, J., King, D.L., Labadie, L., Aisher, P., Garrido, A.P., Balcells, M., Díaz-Sánchez, A., Fuensalida, J.J., Lopez, R.L., Oscoz, A., Pérez Prieto, J.A., Rodriguez-Ramos, L.F., Villó, I.: 2012, AOLI – Adaptive Optics Lucky Imager: Diffraction limited imaging in the visible on large ground-based telescopes. In: Proceedings of SPIE 8446. DOI .

Malik, A.S., Choi, T.-S.: 2008, A novel algorithm for estimation of depth map using image focus for 3D shape recovery in the presence of noise. Pattern Recognit. 41(7), 2200. DOI .

Minhas, R., Mohammed, A.A., Wu, Q.M.J., Sid-Ahmed, M.A.: 2009, 3D shape from focus and depth map computation using steerable filters, Springer, Berlin, 573. DOI .

Monnier, J.D., Millan-Gabet, R., Tuthill, P.G., Traub, W.A., Carleton, N.P., Coudé du Foresto, V., Danchi, W.C., Lacasse, M.G., Morel, S., Perrin, G., Porro, I.L., Schloerb, F.P., Townes, C.H.: 2004, High-resolution imaging of dust shells by using Keck aperture masking and the IOTA interferometer. Astrophys. J. 605, 436. DOI . ADS .

Nanda, H., Cutler, R.: 2001, Practical calibrations for a real-time digital omnidirectional camera. Technical report, In Technical Sketches, Computer Vision and Pattern Recognition.

Nayar, S.K., Nakagawa, Y.: 1994, Shape from focus. IEEE Trans. Pattern Anal. Mach. Intell. 16(8), 824.

Osborn, J., Föhring, D., Dhillon, V.S., Wilson, R.W.: 2015, Atmospheric scintillation in astronomical photometry. Mon. Not. Roy. Astron. Soc. 452, 1707. DOI . ADS .

Pech-Pacheco, J.L., Cristobal, G., Chamorro-Martinez, J., Fernandez-Valdivia, J.: 2000, Diatom autofocusing in brightfield microscopy: A comparative study. In: Proceedings of 15th International Conference on Pattern Recognition 3, 314. DOI .

Pertuz, S., Puig, D., Garcia, M.A.: 2013, Analysis of focus measure operators for shape-from-focus. Pattern Recognit. 46(5), 1415. DOI .

Rimmele, T.R.: 2004, Recent advances in solar adaptive optics. In: Proceedings of SPIE 5490, 34. DOI .

Rimmele, T.R., Marino, J.: 2011, Solar adaptive optics. Living Rev. Solar Phys. 8, 2. DOI . ADS .

Saha, S.K.: 2007, Diffraction-Limited Imaging with Large and Moderate Telescopes. DOI . ADS .

Santos, A.: 1997, Evaluation of autofocus functions in molecular cytogenetic analysis. J. Microsc. 188(3), 264. DOI .

Scharmer, G.B.: 1989, High resolution granulation observations from La Palma: Techniques and first results. In: Rutten, R.J., Severino, G. (eds.) Solar and Stellar Granulation, NATO Adv. Sci. Inst. (ASI) Ser. C 263, 161.

Scharmer, G.B., Löfdahl, M.G., van Werkhoven, T.I.M., de la Cruz Rodríguez, J.: 2010, High-order aberration compensation with multi-frame blind deconvolution and phase diversity image restoration techniques. Astron. Astrophys. 521, A68. DOI . ADS .

Schlag, J.F., Sanderson, A.C., Neuman, C.P., Wimberly, F.C.: 1983, Implementation of automatic focusing algorithms for a computer vision system with camera control. Technical Report CMU-RI-TR-83-14, Robotics Institute, Pittsburgh, PA.

Schödel, R., Girard, J.H.: 2012, Holographic imaging: A versatile tool for high angular resolution imaging. Messenger 150, 26. ADS .

Shen, C.-H., Chen, H.H.: 2006, Robust focus measure for low-contrast images. In: 2006 Digest of Technical Papers International Conference on Consumer Electronics, 69. DOI .

Shirvaikar, M.V.: 2004, An optimal measure for camera focus and exposure. In: Proceedings of the Thirty-Sixth Southeastern Symposium on System Theory, 472. DOI .

Socas-Navarro, H., Beckers, J., Brandt, P., Briggs, J., Brown, T., Brown, W., Collados, M., Denker, C., Fletcher, S., Hegwer, S., Hill, F., Horst, T., Komsa, M., Kuhn, J., Lecinski, A., Lin, H., Oncley, S., Penn, M., Rimmele, T., Streander, K.: 2005, Solar site survey for the Advanced Technology Solar Telescope. I. Analysis of the seeing data. Publ. Astron. Soc. Pac. 117, 1296. DOI . ADS .

Subbarao, M., Choi, T.-S., Nikzad, A.: 1993, Focusing techniques. Opt. Eng. 32(11), 2824. DOI .

Tenenbaum, J.M.: 1971, Accommodation in computer vision. PhD thesis, Stanford, CA, USA.

Thelen, A., Frey, S., Hirsch, S., Hering, P.: 2009, Improvements in shape-from-focus for holographic reconstructions with regard to focus operators, neighborhood-size, and height value interpolation. IEEE Trans. Image Process. 18(1), 151. DOI .

Tokovinin, A., Cantarutti, R.: 2008, First speckle interferometry at SOAR telescope with electron-multiplication CCD. Publ. Astron. Soc. Pac. 120, 170. DOI . ADS .

Tsuneta, S., Ichimoto, K., Katsukawa, Y., Nagata, S., Otsubo, M., Shimizu, T., Suematsu, Y., Nakagiri, M., Noguchi, M., Tarbell, T., Title, A., Shine, R., Rosenberg, W., Hoffmann, C., Jurcevich, B., Kushner, G., Levay, M., Lites, B., Elmore, D., Matsushita, T., Kawaguchi, N., Saito, H., Mikami, I., Hill, L.D., Owens, J.K.: 2008, The Solar Optical Telescope for the Hinode mission: An overview. Solar Phys. 249, 167. DOI .

Voitsekhovich, V.V., Kouznetsov, D., Orlov, V.G., Cuevas, S.: 1999, Method of random wave vectors in simulation of anisoplanatic effects. Appl. Opt. 38(19), 3985. DOI .

Vollath, D.: 1987, Automatic focusing by correlative methods. J. Microsc. 147(3), 279. DOI .

von der Lühe, O.: 1993, Speckle imaging of solar small scale structure. I – Methods. Astron. Astrophys. 268, 374. ADS .

Wee, C.-Y., Paramesran, R.: 2007, Measure of image sharpness using eigenvalues. Inf. Sci. 177(12), 2533. DOI .

Xie, H., Rong, W., Sun, L.: 2006, Wavelet-based focus measure and 3-D surface reconstruction method for microscopy images. In: 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, 229. DOI .

Yang, G., Nelson, B.J.: 2003, Wavelet-based autofocusing and unsupervised segmentation of microscopic images. In: Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453) 3, 2143. DOI .

Yap, P.T., Raveendran, P.: 2004, Image focus measure based on Chebyshev moments. IEE Proc., Vis. Image Signal Process. 151(2), 128. DOI .

Acknowledgements

We would like to thank for the numerous important comments, a thorough review, and the great help in polishing the English provided by the anonymous referee. This research was supported by the Polish National Science Centre grants 2016/21/D/ST9/00656 and (A. Popowicz) and 2015/17/N/ST7/03720 (K. Bernacki). We would also like to acknowledge the support from the Polish Ministry of Science and Higher Education funding for statutory activities.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Disclosure of Potential Conflicts of Interest

The authors declare that they have no conflicts of interest.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Popowicz, A., Radlak, K., Bernacki, K. et al. Review of Image Quality Measures for Solar Imaging. Sol Phys 292, 187 (2017). https://doi.org/10.1007/s11207-017-1211-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11207-017-1211-3