Abstract

We consider a problem of parameter estimation for the state space model described by linear stochastic differential equations. We assume that an unobservable Ornstein–Uhlenbeck process drives another observable process by the linear stochastic differential equation, and these two processes depend on some unknown parameters. We construct the quasi-maximum likelihood estimator of the unknown parameters and show asymptotic properties of the estimator.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

On the probability space \((\Omega ,{\mathcal {F}},P)\) with a complete and right-continuous filtration \(\{{\mathcal {F}}_t\}\), we consider a \((d_1+d_2)\)-dimensional Gaussian process \((X_t,Y_t)\) satisfying the following stochastic differential equations:

where \(W^1\) and \(W^2\) are independent \(d_1\) and \(d_2\)-dimensional \(\{{\mathcal {F}}_t\}\)-Wiener processes, \((X_0,Y_0)\) is a gaussian random variable independent of \(W^1\) and \(W^2, \theta _1 \in \Theta _1 \subset {\mathbb {R}}^{m_1}\) and \(\theta _2 \in \Theta _2 \subset {\mathbb {R}}^{m_2}\) are unknown parameters, and \(a,b:\Theta _2 \rightarrow M_{d_1}({\mathbb {R}}),c:\Theta _2 \rightarrow M_{d_2,d_1}({\mathbb {R}})\) and \(\sigma :\Theta _1 \rightarrow M_{d_2}({\mathbb {R}})\) are known functions. Here \(M_{m,n}({\mathbb {R}})\) is the set of \(m \times n\) matrices over \({\mathbb {R}}\) and \(M_{n}({\mathbb {R}})=M_{n,n}({\mathbb {R}})\), \(\Theta _1\) and \(\Theta _2\) are known parameter spaces. The solution of (1.1) is an Ornstein–Uhlenbeck process and it has an ergodic property.

We assume that the process X is unobservable, and the purpose of this article is to construct estimators of \(\theta _1\) and \(\theta _2\) based on discrete observations of Y; we assume discrete observations \(Y_{t_0}, Y_{t_1}, Y_{t_2}, \cdots , Y_{t_n}\) where \(t_i=ih_n\) for some \(h_n>0\), instead of considering the continuous observation \(\{Y_{t}\}_{0\le t\le T}\). The discrete observation case is much more complicated and interesting because of the construction of the estimator for \(\theta _2\), which is the main object of this paper and described in detail in Sect. 3, and also because there is no need to estimate \(\theta _1\) (Kutoyants 2004, 2019a).

Note that we can not identify \(b(\theta _2)\) and \(c(\theta _2)\) simultaneously from observation of \(\{Y_t\}\). In fact, the system

generates the same \(\{Y_t\}\) as (1.1) and (1.2). Therefore, we need to impose some restrictions on \(a,b,c,\sigma \) and the dimensions of the parameter spaces.

When \(\theta _1\) and \(\theta _2\) are known, one can estimate the unobservable state \(\{X_t\}\) from observations of \(\{Y_t\}\) by the following well-known Kalman–Bucy filter.

Theorem 1.1

(Theorem 10.2, Liptser and Shiriaev 2001a) In (1.1) and (1.2), let \(\sigma (\theta )\sigma (\theta )'\) be positive definite, where the prime means the transpose. Then \(m_t=E[X_t|\{Y_t\}_{0\le s\le t}]\) and \(\gamma _t=E[(X_t-m_t)(X_t-m_t)']\) are the solutions of the equations

Equation (1.4) is the matrix Riccati equation, which has been examined in the theory of linear quadratic control (Sontag 2013). It is known that (1.4) has the unique positive-semidefinite solution (Liptser and Shiriaev 2001a). Moreover, under proper conditions, one can show that the corresponding algebraic Riccati equation

has the maximal and minimal solutions (Coppel 1974; Zhou et al. 1996), and the solution of (1.4) converges to the maximal solution of (1.5) at an exponential rate (Leipnik 1985). Further details on this topic will be discussed in Sect. 3.

There are already several studies on parameter estimation in the system (1.1) and (1.2) with the Kalman–Bucy filter. For example, Kutoyants (2004) discusses the ergodic case, Kutoyants (1994) and Kutoyants (2019b) small noise cases, and Kutoyants (2019a) the one-step estimator. However, all of them assume \(d_1=d_2=1\) and need continuous observation of Y. The continuous observation case is simpler, because we do not have to estimate \(\theta _1\). In fact, we have

by Itô’s formula and (1.1), and therefore we can get the exact value of \(\sigma (\theta _1)\).

On the other hand, parametric inference for discretely observed stochastic differential equations without an unobservable process has been studied for decades (for example Sørensen 2002; Shimizu and Yoshida 2006; Yoshida 1992). Especially, Yoshida (2011) developed Ibragimov–Khasminskii theory (Ibragimov and Has’ Minskii 1981) into the quasi-likelihood analysis, and investigated the behavior of the quasi-likelihood estimator and the adaptive Bayes estimator in the ergodic diffusion process. Quasi-likelihood analysis is helpful to discretely observed cases, and many works have been derived from it: see Uchida and Yoshida (2012) for the non-ergodic case, Ogihara and Yoshida (2011) for the jump case, Masuda (2019) for the Lévy driven case, Gloter and Yoshida (2021) for the degenerate case, Kamatani and Uchida (2015) for the multi-step estimator, and Nakakita et al. (2021) for the case with observation noises.

This paper also makes use of quasi-likelihood analysis to investigate the behaviors of our estimators. In Sect. 2, we describe the more precise setup and present asymptotic properties of our estimators, which are main results of this paper. Then we go on to proofs of these results in Sects. 3 and 5. We first discuss the estimation of \(\theta _2\) in Sect. 3 because it is the main part of this article, whereas estimation of \(\theta _1\) is quite parallel to the usual case without an unobservable variable. We also examine the Riccati differential equation (1.4) and algebraic Riccati equation (1.5) in Sect. 3. In Sect. 4, we discuss the special case where \(d_1=d_2=1\). In the one-dimensional case, we can reduce our assumptions to simpler ones. In Sect. 5, we discuss estimation of \(\theta _1\). Finally, we show in Sect. 6 the result of computational simulation by YUIMA (Brouste et al. 2014), an package on R, and suggest a way to improve our estimators when the wrong initial value is given.

2 Notations, assumptions and main results

Let \(\theta _1^* \in {\mathbb {R}}^{m_1}\) and \(\theta _2^*\in {\mathbb {R}}^{m_2}\) be the true values of \(\theta _1\) and \(\theta _2\), respectively, and define the \((d_1+d_2)\)-dimensional Gaussian process \((X_t,Y_t)\) by

where \(W_1, W_2, X_0, Y_0, a, b, c\) and \(\sigma \) are the same as Sect. 1; \(a,b:\Theta _2 \rightarrow M_{d_1}({\mathbb {R}}),c:\Theta _2 \rightarrow M_{d_2,d_1}({\mathbb {R}})\) and \(\sigma :\Theta _1 \rightarrow M_{d_2}({\mathbb {R}})\). In this article, we have access to the discrete observations \(Y_{ih_n}~(i=0,1,\cdots ,n)\), where \(h_n\) is some positive constant, and we construct the estimators of \(\theta _1\) and \(\theta _2\) based on the observations.

We assume that \(\Theta _1\subset {\mathbb {R}}^{m_1}\) and \(\Theta _2\subset {\mathbb {R}}^{m_2}\) are open bounded subsets and that the Sobolev embedding inequality holds on \(\Theta =\Theta _1\times \Theta _2\); for any \(p>m_1+m_2\) and \(f\in C^1(\Theta )\), there exists some constant C depending only on \(\Theta \) such that

For example, if each \(\Theta _i\) (\(i=1,2\)) has a Lipchitz boundary, this inequality is valid (Leoni 2017).

Let \(Z(\theta )~(\theta \in \Theta =\Theta _1\times \Theta _2)\) be a class of random variables, where \(Z(\theta )\) is continuously differentiable with respect to \(\theta \). Then by (2.3) and Fubini’s theorem, we get for any \(p>m_1+m_2\)

where \(C_p\) is some constant depending on p and \(\Theta \). This result will be frequently referred to in the following sections.

In what follows, we use the following notations:

-

\({\mathbb {R}}_+=[0,\infty ),{\mathbb {N}}=\{1,2,\cdots \}\).

-

\(\Theta =\Theta _1\times \Theta _2,\theta _1=(\theta _1^1,\cdots ,\theta _1^{m_1}),\theta _2=(\theta _2^1,\cdots ,\theta _2^{m_2}),\theta ^*=(\theta _1^*,\theta _2^*).\)

-

For any subset \(\Xi \subset {\mathbb {R}}^m, {\overline{\Xi }}\) is the closure of \(\Xi \).

-

For every set of matrices A, B and \(C, A'\) is the transpose of \(A, A^{\otimes 2}=AA', A[B,C]=B'AC\) and \(A[B^{\otimes 2}]=B'AB\).

-

For every matrix A, |A| is the Frobenius norm of A. Namely, if \(A=(a_{ij})_{1\le i\le n,1\le j\le m}, |A|\) is defined by

$$\begin{aligned} |A|=\sqrt{\sum _{i=1}^n\sum _{j=1}^m a_{ij}^2}. \end{aligned}$$ -

For every matrix \(A, \lambda _{\min }(A)\) denotes the smallest real part of eigenvalues of matrix A.

-

For every symmetric matrix A and \(B \in M_{d}({\mathbb {R}}), A> B\) (resp. \(A\ge B\)) means that \(A-B\) is positive (resp. semi-positive) definite.

-

For any open subset \(\Xi \subset {\mathbb {R}}^m\) and \(A:\Xi \rightarrow M_{d}({\mathbb {R}})\) of class \(C^k, \partial _{\xi }^kA(\xi )\) denotes the k-dimensional tensor on \(M_{d}({\mathbb {R}})\) whose \((j_1,j_2,\cdots ,j_k)\) entry is \(\displaystyle \frac{\partial }{\partial \xi _{j_1}}\cdots \frac{\partial }{\partial \xi _{j_k}}A(\theta _i)\), where \(1\le j_1,\cdots ,j_k\le m\) and \(\xi =(\xi _1,\cdots ,\xi _m)\).

-

For every k-dimensional tensor A with \((i_1,i_2,\cdots ,i_k)\) entry \(A_{i_1\cdots i_k} \in M_{d}({\mathbb {R}})\) and every matrix \(B \in M_d({\mathbb {R}}), AB\) denotes the tensor whose \((i_1,i_2,\cdots ,i_k)\) entry is \(A_{i_1\cdots i_k}B\). BA is also defined in the same way.

-

For any partially differentiable function \(f:\Theta _2 \rightarrow {\mathbb {R}}^{d_2}\) and \(S \in M_{d_2}({\mathbb {R}}), S[\partial _{\theta _2}^{\otimes 2}]f(\theta )\) is the matrix whose (i, j)-entry is \(\displaystyle \frac{\partial }{\partial {\theta _2^i}}f(\theta _2)S_{ij}\frac{\partial }{\partial {\theta _2^j}}f(\theta _2)\).

-

If both A and B are matrices with \(M_d({\mathbb {R}})\) entries, AB is the normal product of matrices.

-

For every matrix A on \(M_d({\mathbb {R}})\) with (i, j) entry \(A_{ij} \in M_{d}({\mathbb {R}}), \textrm{Tr}A\) is a matrix on \({\mathbb {R}}\) with (i, j) entry \(\textrm{Tr}A_{ij}\).

-

For every stochastic process \(Z, \Delta _i Z=Z_{t_{i}}-Z_{t_{i-1}}\).

-

We write \(a^*,b^*,c^*,\sigma ^*\) and \(\Sigma ^*\) for \(a(\theta _2^*),b(\theta _2^*),c(\theta _2^*),\sigma (\theta _1^*)\) and \(\Sigma (\theta _1^*)\).

-

We omit the subscript n in \(h_n\) and just write h when there is no ambiguity.

-

We designate \(\sigma (\theta _1)\sigma (\theta _1)'\) as \(\Sigma (\theta _1)\).

-

C denotes a generic positive constant. When C depends on some parameter p, we might use \(C_p\) instead of C.

Moreover, we need the following assumptions:

- [A1]:

-

\(nh_n \rightarrow \infty ,~n{h_n}^2 \rightarrow 0\) as \(n \rightarrow \infty \). Moreover, we assume \(h_n \le 1\) for every \(n \in {\mathbb {N}}\).

- [A2]:

-

a, b, c and \(\sigma \) are of class \(C^4\).

Then we can extend a, b, c and \(\sigma \) to continuous functions on \({\overline{\Theta }}_1\) and \({\overline{\Theta }}_2\).

- [A3]:

-

$$\begin{aligned}&\inf _{\theta _2 \in {\overline{\Theta }}_2}\lambda _{\min }(a(\theta _2))>0\\&\inf _{\theta _2 \in {\overline{\Theta }}_2}\lambda _{\min }(b(\theta _2)^{\otimes 2})>0\\&\inf _{\theta _1 \in {\overline{\Theta }}_1}\lambda _{\min }(\Sigma (\theta _1))>0. \end{aligned}$$

- [A4]:

-

For any \(\theta _1 \in {\overline{\Theta }}_1\) and \(\theta _2 \in {\overline{\Theta }}_2\), the pair of matrix \((a(\theta _2)', \Sigma (\theta _1)[c(\theta _2)^{\otimes 2}])\) is controllable; i.e. the matrix

$$\begin{aligned} \begin{pmatrix} \Sigma (\theta _1)[c(\theta _2)^{\otimes 2}]&a(\theta _2)'\Sigma (\theta _1)[c(\theta _2)^{\otimes 2}]&\cdots&{a(\theta _2)'}^{d_1}\Sigma (\theta _1)[c(\theta _2)^{\otimes 2}] \end{pmatrix} \end{aligned}$$has full row rank. Moreover, the eigenvalues of the matrix

$$\begin{aligned} H(\theta _1,\theta _2)=\begin{pmatrix} a(\theta _2)'&{}\Sigma (\theta _1)^{-1}[c(\theta _2)^{\otimes 2}]\\ b(\theta _2)^{\otimes 2}&{}-a(\theta _2) \end{pmatrix} \end{aligned}$$(2.4)are uniformly bounded away from the imaginary axis; i.e. there are some constant \(C>0\) such that for any \(\theta _1 \in {\overline{\Theta }}_1\) and \(\theta _2 \in {\overline{\Theta }}_2\) and eigenvalue \(\lambda \) of \(H(\theta _1,\theta _2)\), it holds

$$\begin{aligned} |\textrm{Re}(\lambda )|>C. \end{aligned}$$

By Assumption [A4] and the corollary of Theorem 6 in Coppel (1974), for every \(\theta _1 \in {\overline{\Theta }}_1\) and \(\theta _2 \in {\overline{\Theta }}_2\), Eq. (1.5) has the maximal solution \(\gamma =\gamma _+(\theta _1,\theta _2)\) and minimal solution \(\gamma =\gamma _-(\theta _1,\theta _2)\), where \(\gamma _+(\theta _1,\theta _2)>\gamma _-(\theta _1,\theta _2)\). The meaning of the maximal and minimal solutions is that for any symmetric solution \(\gamma \) of (1.5), it holds \(\gamma _-\le \gamma \le \gamma _+\).

Now we define \({\mathbb {Y}}_1\) and \({\mathbb {Y}}_2\) by

and

respectively, where

and assume the following condition.

- [A5]:

-

There is some positive constant \(C>0\) satisfying

$$\begin{aligned} {\mathbb {Y}}_1(\theta _1)\le -C|\theta _1-\theta _1^*|^2 \end{aligned}$$(2.8)and

$$\begin{aligned} {\mathbb {Y}}_2(\theta _2)\le -C|\theta _2-\theta _2^*|^2. \end{aligned}$$(2.9)

Remark

By (2.7), it holds

and therefore \({\mathbb {Y}}_2(\theta _2)\) has the following expression:

In particular, we have \({\mathbb {Y}}_2(\theta _2^*)=0\).

Under these assumptions above, we set

and

and we define our estimator of \(\theta _1\) as the maximizer of \({\mathbb {H}}_n^1(\theta _1)\). Note that \(\textrm{Tr}\{{\Sigma ^*}^{-1}\partial _{\theta _1}\Sigma (\theta _1^*)\}\) is a vector whose jth entry is \(\displaystyle \textrm{Tr}\left\{ {\Sigma ^*}^{-1}\frac{\partial }{\partial {\theta _1^j}}\Sigma (\theta _1^*)\right\} \). Then the following theorem holds:

Theorem 2.1

We assume [A1]–[A5], and for each \(n \in {\mathbb {N}}\), let \({\hat{\theta }}^n_1\) be a random variable satisfying

Then for every \(p>0\) and any continuous function \(f:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) such that

it holds that

where \(Z \sim N(0,(\Gamma ^1)^{-1})\).

In particular, it holds that

Next we construct the estimator of \(\theta _2\), which is the main object of this article. Recall that under Assumption [A4], (1.5) has the maximal solution \(\gamma _+(\theta _1,\theta _2)\). Now we replace \(\gamma _t\) with \(\gamma _+(\theta _1,\theta _2)\) in (1.3), and define \(m_t(\theta _1,\theta _2;m_0)\) by

where \(m_0 \in {\mathbb {R}}^{d_1}\) is an arbitrary initial estimated value of \(X_0\).

Due to Itô’s formula, the solution of (2.11) can be written as

where

The eigenvalues of \(\alpha (\theta _1,\theta _2)\) coincides with those of \(H(\theta _1,\theta _2)\) in (2.4) with positive real part (see Zhou et al. 1996), so there exists some constant \(C>0\) such that for any \(\theta _1 \in \Theta _1\) and \(\theta _2 \in \Theta _2\),

where \(\sigma (\alpha (\theta _1,\theta _2))\) is the set of all eigenvalues of \(\alpha (\theta _1,\theta _2)\).

According to (2.12), we set for \(i,n \in {\mathbb {N}}\),

and

where \({\hat{\theta }}_1^n\) is the estimator of \(\theta _1\) defined in Theorem 2.1. Then the following theorem holds:

Theorem 2.2

We assume [A1]–[A5], and let \(m_0 \in {\mathbb {R}}^{d_1}\) be an arbitrary initial value and \({\hat{\theta }}^n_2={\hat{\theta }}^n_2(m_0)\) be a random variable satisfying

for each \(n \in {\mathbb {N}}\). Moreover, let \(\Gamma ^2\) be positive definite. Then for any \(p>0\) and continuous function \(f:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) such that

it holds that

where \(Z \sim N(0,(\Gamma ^2)^{-1})\).

In particular, it holds that

Remark

-

(1)

In order to calculate \({\hat{m}}_i^n\), one can use the autoregressive formula

$$\begin{aligned} {\hat{m}}_{i+1}^n(\theta _2;m_0)&=\exp \left( -\alpha ({\hat{\theta }}_1^n,\theta _2)h\right) {\hat{m}}_{i}^n(\theta _2;m_0)\\&\quad +\exp \left( -\alpha ({\hat{\theta }}_1^n,\theta _2)h\right) \gamma _+({\hat{\theta }}_1^n,\theta _2)c(\theta _2)'\Sigma ({\hat{\theta }}_1^n)^{-1}\Delta _{i+1}Y. \end{aligned}$$ -

(2)

One can obtain \(\gamma (\theta _1,\theta _2)\) in the following way (see Zhou et al. 1996 for details). Let \(v_1,v_2,\cdots , v_{d_1}\) be generalized eigenvectors of \(H(\theta _1,\theta _2)\) in (2.4) with positive real part eigenvalues. Note that \(H(\theta _1,\theta _2)\) has \(d_1\) eigenvalues (with multiplicity) in the right half-plane and \(d_1\) in the left half-plane. We define the matrices \(X_1(\theta _1,\theta _2)\) and \(X_2(\theta _1,\theta _2)\) by

$$\begin{aligned} \begin{pmatrix} v_1&v_2&\cdots&v_{d_1} \end{pmatrix}=\begin{pmatrix} X_1(\theta _1,\theta _2)\\ X_2(\theta _1,\theta _2) \end{pmatrix}. \end{aligned}$$Then \(X_1(\theta _1,\theta _2)\) is invertible and it holds \(\gamma _+(\theta _1,\theta _2)=X_2(\theta _1,\theta _2)X_1(\theta _1,\theta _2)^{-1}\).

-

(3)

\({\mathbb {H}}^2(\theta _2)\) can be interpreted as a approximated log-likelihood function with \(\theta _1\) given. In fact, if \(X_t=X_t(\theta )\) and \(Y_t=Y_t(\theta )\) are generated by (1.1) and (1.2), and we set \(m_0=E[X_0|Y_0]\) and \(\gamma _0=E[(m_0-X_0)^{\otimes 2}]\), then it follows \(m_t(\theta )=E[X_t(\theta )|\{Y_s(\theta )\}_{0\le s\le t}]\) by Theorem 1.1. Thus by the innovation theorem (Kallianpur 1980), we can replace \(X_t(\theta )\) with \(m_t(\theta )\) in Eq. (1.2), and consider the equation

$$\begin{aligned} dY_t(\theta )=c(\theta _2)m_t(\theta )dt+\sigma (\theta _1)d{\overline{W}}_t \end{aligned}$$where \({\overline{W}}\) is a \(d_2\)-dimensional Wiener process. We can approximate this equation as

$$\begin{aligned} \Delta _i Y(\theta )\approx c(\theta _2)m_{t_{i-1}}(\theta )h+\sigma (\theta _1)\Delta _i {\overline{W}}, \end{aligned}$$when \(h \approx 0\). Then we obtain the approximated likelihood function

$$\begin{aligned} p(\theta )&\approx \prod _{i=1}^n\frac{1}{(2\pi h)^{\frac{d}{2}}\{\textrm{det}\Sigma (\theta _1)\}^{\frac{1}{2}}}\\&\quad \times \exp \left( -\frac{1}{2h}\Sigma (\theta _1)^{-1}\left[ (\Delta _iY-c(\theta _2)m_{t_{i-1}}(\theta )h)^{\otimes 2} \right] \right) . \end{aligned}$$ -

(4)

The condition the \(W^1\) and \(W^2\) are independent is not essential; according to Section 12 of Liptser and Shiriaev (2001b), Kalman–Bucy filter can be extended to the equation of the form

$$\begin{aligned} dX_t&=\left\{ a_0(\theta _2,Y_t)+a_1(\theta _2,Y_t)X_t \right\} dt+b_1(\theta _2,Y_t)dW_t^1+b_2(\theta _2,Y_t)dW_t^2\\ dY_t&=\left\{ c_0(\theta _2,Y_t)+c_1(\theta _2,Y_t)X_t \right\} dt+\sigma (\theta _1,Y_t)dW_t^2. \end{aligned}$$However, this case is more complicated, and thus is left for future research.

3 Proof of Theorem 2.2

In this section, we write \(m_t(\theta _2)\), \({\hat{m}}_i^n(\theta _2), {\mathbb {H}}_n^2(\theta _2)\), \(\gamma _+(\theta _2)\) and \(\alpha (\theta _2)\) instead of \(m_t(\theta _1^*,\theta _2;m_0), {\hat{m}}_i^n(\theta _2;m_0)\), \({\mathbb {H}}_n^2(\theta _2;m_0), \gamma _+(\theta _1^*,\theta _2)\) and \(\alpha (\theta _1^*,\theta _2)\), respectively, for simplicity.

Moreover, let \(m_t^*=E[X_t|\{Y_t\}_{0\le s\le t}]\) and \(\gamma _t^*=E[(X_t-m_t)(X_t-m_t)']\). Then by Theorem 1.1, they are the solutions of

We start with preliminary lemmas, which is frequently referred to in proving inequalities.

Lemma 3.1

Let \(\{W_t\}\) be a d-dimensional \(\{{\mathcal {F}}_t\}\)-Wiener process.

-

(1)

Let \(f:{\mathbb {R}}_+ \rightarrow {\mathbb {R}}^m\) be a measurable function. Then for any \(p\ge 1\) and \(0\le s\le t\), it holds

$$\begin{aligned} \left( \int _s^t|f(u)|du \right) ^p\le (t-s)^{p-1}\int _s^t|f(u)|^pdu \end{aligned}$$ -

(2)

Let \(\{A_t\}\) be a \(M_{k,d}({\mathbb {R}})\)-valued progressively measurable process and \(\{W_t\}\) be a d-dimensional Wiener process. Then for every \(0\le s\le t\le T\) and \(p \ge 2\), it holds

$$\begin{aligned} E\left[ \sup _{s\le t \le T}\left| \int _s^t A_udW_u\right| ^p \right]&\le C_{p,d,k}E\left[ \left( \int _s^T|A_u|^2du\right) ^{\frac{p}{2}} \right] \\&\le C_{p,d,k}(T-s)^{\frac{p}{2}-1}\int _s^tE[|A_u|^p]du. \end{aligned}$$

Proof

-

(1)

By Hölder’s inequality, we obtain

$$\begin{aligned} \int _s^t|f(u)|du&\le \left( \int _s^t|f(u)|^{p}du\right) ^{\frac{1}{p}} \left( \int _s^tdu\right) ^{1-\frac{1}{p}}\\&=(t-s)^{1-\frac{1}{p}}\left( \int _s^t|f(u)|^{p}du\right) ^{\frac{1}{p}}, \end{aligned}$$and it shows the desired inequality.

-

(2)

Let \(A_t^{(ij)}\) be the (i, j) entry of \(A_t\), and \(W_t^{(j)}\) be the jth element of \(W_t\). Then the Burkholder-Davis-Gundy inequality gives

$$\begin{aligned} E\left[ \sup _{s\le t\le T}\left| \int _s^t A_udW_u\right| ^p\right]&=E\left[ \sup _{s\le t\le T}\left\{ \sum _{i=1}^k\left( \sum _{j=1}^d\int _{s}^tA_u^{(ij)}dW_u^{(j)} \right) ^2 \right\} ^\frac{p}{2}\right] \\&\le C_{p,d,k}\sum _{i=1}^k \sum _{j=1}^dE\left[ \sup _{s\le t\le T}\left| \int _{s}^tA_u^{(ij)}dW_u^{(j)} \right| ^p\right] \\&\le C_{p,d,k}\sum _{i=1}^k \sum _{j=1}^dE\left[ \left| \int _{s}^T(A_u^{(ij)})^2du \right| ^{\frac{p}{2}}\right] \\&\le C_{p,d,k}E\left[ \left| \int _{s}^T\sum _{i=1}^k \sum _{j=1}^d(A_u^{(ij)})^2du \right| ^{\frac{p}{2}}\right] \\&=C_{p,d,k}E\left[ \left| \int _{s}^T|A_u|^2du \right| ^{\frac{p}{2}}\right] . \end{aligned}$$Hence we have proved the first inequality, and together with (1) we obtain the second one.

\(\square \)

Lemma 3.2

Let A be a \(d\times d\) matrix having eigenvalues \(\lambda _1,\cdots ,\lambda _k\). Then for all \(\epsilon >0\), there exists some constant \(C_{\epsilon ,d}\) depending on \(\epsilon \) and d such that

where

Proof

Let

be a Schur decomposition of A, where \(\lambda _1,\lambda _2,\cdots ,\lambda _{d}\) are the eigenvalues of A, U is an unitary matrix, and N is a strictly upper triangular matrix. Then we have

noting that U is unitary, \(N^{d}=O\), and \(|A|=|D+N|\ge N\). \(\square \)

Lemma 3.3

For any \(s,t\ge 0\) such that \(0\le t-s\le 1\) and \(p \ge 1\), it holds

and

Proof

By Itô’s formula, the solution of (2.1) can be expressed as

where \(\exp \) is the matrix exponential. Hence by Lemmas 3.1 and 3.2, we have

for some constant \(\eta >0\). Therefore for \(s\le t\) we obtain

We can show (3.5) in the same way. \(\square \)

We next discuss important properties of \(\gamma _+(\theta _1,\theta _2)\) and \(\gamma _t^*\).

Proposition 3.4

The maximal solution of (1.5) \(\gamma _+(\theta _1,\theta _2)\) is of class \(C^4\).

Proof

Let \(\theta ^0=(\theta _1^0,\theta _2^0) \in \Theta _1\times \Theta _2\), and we consider the mapping \(f:M_{d_1}({\mathbb {R}}) \rightarrow M_{d_1}({\mathbb {R}})\) such that

Since for every \(T \in M_{d_1}({\mathbb {R}})\), we have

and

the differential of f at \(X=\gamma _+(\theta ^0)\) is given by

where \(\alpha \) is defined by (2.13).

If \((df)_{\gamma _+(\theta ^0)}\) is not injective, \(\alpha (\theta _0)\) has eigenvalues \(\mu \) and \(\lambda \) such that \(\mu +{\overline{\lambda }}=0\) (see lemma 2.7 in Zhou et al. 1996). However, noting that \(\gamma _+(\theta _1,\theta _2)\) is the unique symmetric solution of \(f(X)=O\) such that \(-\alpha (\theta _0)\) is stable (Coppel 1974; Zhou et al. 1996), there are no such eigenvalues. Therefore \((df)_{\gamma _+(\theta ^0)}\) is injective, and by the implicit function theorem, there exists a neighborhood \(U \subset \Theta _1 \times \Theta _2\) containing \(\theta ^0\) and a mapping \(\phi :U \rightarrow M_{d_1}({\mathbb {R}})\) of class \(C^4\) such that

Since \(-a(\theta _2)-\phi (\theta )\Sigma (\theta _1)^{-1}[c(\theta _2)^{\otimes 2}]\) is stable at \(\theta =(\theta _1,\theta _2)=\theta ^0\), it is also stable on a neighborhood of \(\theta ^0\). Thus by the uniqueness of \(\gamma _+\), we obtain \(\gamma _+(\theta )=\phi (\theta )\) on that neighborhood and therefore the desired result. \(\square \)

By this proposition, Theorem 2.1 and the mean value theorem, we get the following corollary.

Corollary 3.5

For any \(p\ge 1\), it holds

and

Proposition 3.6

For every \(\theta _1 \in {\overline{\Theta }}_1\) and \(\theta _2 \in {\overline{\Theta }}_2\),

and

Proof

Noting that for A and \(\gamma \in M_{d_1}({\mathbb {R}})\),

and the Eq. (1.5) is equivalent to

we obtain

by assumption [A3], (2.13) and the stability of \(-\alpha (\theta _1,\theta _2)\). In the same way, we can show \(\gamma _-(\theta _1,\theta _2)<0.\) \(\square \)

Combining this result with assumption [A3], (2.13) and Lemma 3.2, we obtain the following corollary.

Corollary 3.7

There exists some constant \(C_1>0\) and \(C_2>0\) such that

Now we go on to the convergence of \(\gamma _t^*\). Concerning the convergence rate of Riccati equations, Leipnik (1985) presents the following result.

Theorem 3.8

(Section 5, Leipnik 1985) Let \(A,B,C \in M_d({\mathbb {R}})\) and consider the equation

Moreover, assume C is symmetric, \(C \le 0, (B,C)\) is controllable and the matrix

has no pure imaginary eigenvalues.

Then if \(P_0-P^+\) is non-singular, then it holds for any \(\epsilon >0\) that

and if \(P_0-P^-\) is non-singular, then it holds for any \(\epsilon >0\) that

where \(P^+\) and \(P^-\) are the maximal and minimal solutions of the algebraic Riccati equation

respectively, \(r<0\) is the maximum real part of the eigenvalues of \(B+CP^+\).

Proposition 3.9

For any \(\epsilon >0\), there exists some constant \(C>0\) such that

In particular, \(|\gamma _t^*|\) is bounded.

Proof

According to (3.2) and Theorem 3.8, it is enough show that \(\gamma _0^*-\gamma _-(\theta ^*)\) is non-singular, where \(\gamma _-(\theta _1,\theta _2)\) is the minimal solution of (1.5). If we assume \(\gamma _0^*-\gamma _-(\theta ^*)\) is singular, there exists \(x \in {\mathbb {R}}^{d_1}\backslash \{0\}\) such that \(\{\gamma _0^*-\gamma _-(\theta ^*)\}x=0\), and we get \(x\gamma _0^*x=x\gamma _-(\theta ^*)x\). However, since \(\gamma _0^*\ge 0\) and we have \(\gamma _-(\theta ^*)<0\) by Proposition 3.6, that is a contradiction. \(\square \)

Next we consider the innovation process

Note that the right-hand side is well-defined since \(\{m_t^*\}\) has a progressively measurable modification, and that \({\overline{W}}_t\) is also a Wiener process (Kallianpur 1980). Since \(Y_t\) is the solution of

we obtain together with (3.1)

Therefore Itô’s formula gives

Moreover, using Proposition 3.9, we can show for any \(p\ge 1\),

and

in the same way as Lemma 3.3.

Lemma 3.10

For \(j=0,1,2,\cdots \) and \(\theta \in \Theta \), let \(Z_j(\theta )\) be a \(M_{k,l}({\mathbb {R}})\)-valued and \({\mathcal {F}}_{t_j}\)-measurable random variable, and \(U(\theta )\) be an \(M_{l,d}({\mathbb {R}})\)-valued random variable. Moreover, we assume \(Z_j(\theta )\) is continuously differentiable with respect to \(\theta \). Then for any \(n \in {\mathbb {N}}\) and \(p>m_1+m_2\), it holds

Proof

Let \(Z_j^{(ij)}, U^{(ij)}\) and \((Z_jU)^{(ij)}\) be the (i, j) entries of \(Z_j, U\) and \(Z_jU\), respectively, and \(W^{(j)}\) be the jth element of \(W^{(j)}\). Then we have

Moreover, the Sobolev inequality and the Burkholder-Davis-Gundy inequality gives

By (3.13) and (3.14), we obtain the desired result. \(\square \)

Lemma 3.11

For every \(\theta \in \Theta \), let \(\{Z_t(\theta )\}\) be a \({\mathbb {M}}_{d,d_1}({\mathbb {R}})\)-valued progressively measurable process. Moreover, we assume \(Z_t(\theta )\) is differentiable with respect to \(\theta \), and for any \(T>0, p>0\) and \(\theta ,\theta ' \in \Theta \)

Then \(\{\xi _{\cdot }(\theta )\}_{\theta \in \Theta }\) with \(\displaystyle \xi _t(\theta )=\int _0^tZ_t(\theta )d{\overline{W}}_s\) has a modification \(\{{\tilde{\xi }}_{\cdot }(\theta )\}_{\theta \in \Theta }\) which is continuously differentiable with respect to \(\theta \). Moreover, it holds almost surely for any \(t\ge 0\) and \(\theta \in \Theta \)

Proof

For any matrix valued function \(\phi \) on \({\mathbb {R}}^{m_1+m_2}\) and \(\epsilon >0\), let

where \(e_1,\cdots ,e_{m_1+m_2}\) is the standard basis of \({\mathbb {R}}^{m_1+m_2}\). Then for \(\theta ,\theta '\in \Theta ,\epsilon ,\epsilon '>0\) and \(p\ge 1\), we have

where \(\theta =(\theta ^1,\cdots ,\theta ^{m_1+m_2})\).

Hence by Lemma 3.1, it follows for any \(\theta ,\theta ' \in \Theta ,\epsilon ,\epsilon '>0\) and \(N \in {\mathbb {N}}\)

Now for this \(C_{p,N}\), we take a sequence \(\alpha _N>0~(N \in {\mathbb {N}})\) so that

and define the norm on \(C({\mathbb {R}}_+;M_{d,d_1}({\mathbb {R}}))\) by

Then the topology induced by this norm is equivalent to the topology of uniform convergence, and we have

Therefore, by the the Kolmogorov continuity theorem, \(\{\Delta ^j \xi .(\theta ;\epsilon )\}_{\theta \in \Theta ,0<|\epsilon |\le 1}\) has a uniformly continuous modification \(\{\zeta .(\theta ;\epsilon )\}_{\theta \in \Theta ,0<|\epsilon |\le 1}\). Because of the uniform continuity, \(\zeta .(\theta ;\epsilon )\) can be extended to a continuous process on \(\theta \in \Theta ,|\epsilon |\le 1\).

On the other hand, we can show in the same way that \(\{\xi .(\theta ;\epsilon )\}_{\theta \in \Theta }\) has a continuous modification \(\{{\tilde{\xi }}.(\theta ;\epsilon )\}_{\theta \in \Theta }\). Then \(\Delta ^j{\tilde{\xi }}.(\theta ;\epsilon )\) and \(\zeta .(\theta ;\epsilon )\) are both continuous modifications of \(\Delta ^j \xi .(\theta ;\epsilon )\), and thus they are indistinguishable. Therefore almost surely for any \(t \ge 0\) and \(\theta \in \Theta \),

exists. The continuity of \(\displaystyle \frac{\partial \xi _t}{\partial \theta _j}(\theta )\) follows from the continuity of \(\zeta .(\theta ,\epsilon )\).

Moreover, by the assumption and Lemma 3.1 (2), we have for \(p\ge 2\),

where \(0\le \eta _s\le 1\). This means

in \(L^p\), and hence there exists a subsequence \(\{\epsilon _n\}_{n \in {\mathbb {N}}}\) such that \(\epsilon _n \rightarrow 0\) and

Therefore we obtain almost surely

\(\square \)

Lemma 3.12

-

(1)

For \(j \in {\mathbb {N}}\), let \(f_j:[t_{j-1},t_j]\times \Theta \rightarrow M_{k,d_2}({\mathbb {R}})\) be of class \(C^1\). Then for any \(p>m_1+m_2\), it holds

$$\begin{aligned} \begin{aligned}&E\left[ \sup _{\theta \in \Theta }\left| \sum _{j=1}^i\int _{t_{j-1}}^{t_j}f_{j-1}(s,\theta )dY_s\right| ^p \right] ^{\frac{1}{p}}\\&\quad \le C_p\sup _{\theta \in \Theta }\left\{ \sum _{j=1}^i\int _{t_{j-1}}^{t_j}|f_j(s,\theta )|ds+\sum _{j=1}^i\int _{t_{j-1}}^{t_j}|\partial _{\theta }f_j(s,\theta )|ds\right. \\&\qquad \left. +\left( \sum _{j=1}^i\int _{t_{j-1}}^{t_j}|f_{j-1}(s,\theta )|^2ds\right) ^{\frac{1}{2}} +\left( \sum _{j=1}^i\int _{t_{j-1}}^{t_j}|\partial _{\theta }f_{j-1}(s,\theta )|^2ds\right) ^{\frac{1}{2}}\right\} , \end{aligned} \end{aligned}$$(3.16)where \(C_p\) is a constant which depends only on p.

-

(2)

For \(j=0,1,2,\cdots \) and \(\theta \in \Theta \), let \(Z_j(\theta )\) be a \(M_{k,l}({\mathbb {R}})\)-valued and \({\mathcal {F}}_{t_j}\)-measurable random variable, and \(U(\theta )\) be an \(M_{l,d}({\mathbb {R}})\)-valued random variable. Moreover, we assume \(Z_j(\theta )\) is continuously differentiable with respect to \(\theta \). Then for any \(p>m_1+m_2\), it holds

$$\begin{aligned} \begin{aligned}&E\left[ \sup _{\theta \in \Theta }\left| \sum _{j=1}^iZ_{j-1}(\theta )U(\theta )\Delta _j Y\right| ^p \right] ^{\frac{1}{p}}\\&\quad \le C_pE\left[ \sup _{\theta \in \Theta }|U(\theta )|^{4p}\right] ^\frac{1}{4p}\\&\qquad \times \sup _{\theta \in \Theta }\left\{ \sum _{j=1}^iE\left[ |Z_{j-1}(\theta )|^{2p}\right] ^\frac{1}{2p}h+\sum _{j=1}^iE\left[ |\partial _{\theta }Z_{j-1}(\theta )|^{2p}\right] ^\frac{1}{2p}h\right\} \\&\qquad +C_{p}E\left[ \sup _{\theta \in \Theta }\left| U(\theta )\right| ^{2p}\right] ^\frac{1}{2p}\\&\qquad \times \sup _{\theta \in \Theta }\left\{ \sum _{j=1}^nE[|Z_{j-1}(\theta )|^{2p}]^\frac{1}{p}h+\sum _{j=1}^nE[\left| \partial _{\theta }Z_{j-1}(\theta )\right| ^{2p}]^\frac{1}{p}h \right\} ^\frac{1}{2}. \end{aligned} \end{aligned}$$(3.17)

Proof

(1) By Lemma 3.11, we can assume for every j

is continuously differentiable, and

Therefore by the Sobolev inequality and (3.9),

In order to bound the first term of (3.18), we set

Then we have

In the same way, it holds for the third term

Next by Lemma 3.1 (2), we obtain for the second term

and in the same way it holds for the fourth term

We complete the proof by the above inequalities.

(2) By the Sobolev inequality and (3.9),

For the first term of the right-hand side, it follows from Lemma 3.1 (1), (3.11) and the Sobolev inequality

As for the second term, we have by Lemma 3.10

Thus we completed the proof. \(\square \)

Proposition 3.13

For any \(p>m_1+m_2\), it holds

and

Proof

We only prove the first one; the rest can be shown in the same way. By (2.14) and the stability of \(-\alpha (\theta _1,\theta _2)\), it is enough to show

To accomplish this, it is enough to show

according to (3.17).

These can be shown by using Corollary 3.7 and noting that it holds by Haber (2018)

\(\square \)

Proposition 3.14

For any \(n,i \in {\mathbb {N}}\) and \(p>m_1+m_2\),

Proof

The first term of the right-hand side can be bounded by the mean value theorem and Theorem 2.1:

Next we evaluate the second term using (3.16). Noting that by the mean value theorem and Lemma 3.2, we have

and

where \(t_{j-1}\le u\le s\le t_j\), it follows from (3.16)

As for the third term, in the same way as Proposition 3.13, we have

since it holds

by the mean value theorem and Theorem 2.1.

Finally, we consider the forth term of (3.24). Noting that it follows from Lemma 3.2 and the stability of \(-\alpha (\theta _1,\theta _2)\),

we have

In the same way, we obtain the boundedness of

and

Thus by (3.17) we obtain

Therefore it follows

Now we completed the proof by (3.24)–(3.28). \(\square \)

Next, we replace \(m_0^*\) and \(\gamma _s^*\) with \(m_0\) and \(\gamma _+(\theta ^*)\) in (3.10), and introduce

Furthermore, we consider for every \(n,i \in {\mathbb {N}}\),

and

Then in the same way as Proposition 3.13, it holds for any \(p>m_1+m_2\)

Proposition 3.15

For any \(p>0\) and \(t\ge 0\), it holds

Proof

Use (3.10), (3.29), Lemmas 3.1 and 3.2, Proposition 3.9 and the stability of \(\alpha \). \(\square \)

Proposition 3.16

Let \(A:\Theta \rightarrow M_{d_1,d_2}({\mathbb {R}})\) be a continuous mapping. Then for any \(i,n \in {\mathbb {N}}, p>0\) and \(k=0,1,2,\cdots \), it holds

Proof

It can be shown that by Lemma 3.1 and Proposition 3.15, since

\(\square \)

By (2.14) and (3.31), we obtain the following corollaries.

Corollary 3.17

For any \(i,n \in {\mathbb {N}}, p>0\) and \(k=0,1,2,3,4\), it holds

Corollary 3.18

For any \(i,n \in {\mathbb {N}}\) and \(p>m_1+m_2\), it holds

Proof

This result directly follows from By (3.9), (3.32), (3.12), Lemma 3.1, Propositions 3.14 and 3.15 and Corollary 3.17. \(\square \)

Proposition 3.19

Let \(A:\Theta \rightarrow M_{d_1,d_2}({\mathbb {R}})\) be a continuous mapping. Then for any \(n \in {\mathbb {N}}, p>m_1+m_2\) and \(k=0,1,2,3\)

Proof

Hence for every \(k=0,1,2,3\), \(\partial _{\theta _2}^k\{{\hat{m}}_{i-1}^n(\theta _2)-{\tilde{m}}_{i-1}^n(\theta _2)\}\) is a sum of the form

where \(A_1\) is a \(M_{d_1,d_2}({\mathbb {R}})\)-valued k-dimensional tensor of class \(C^1\). Thus if we set

it is enough to show

By Haber (2018), we have

and

Thus it holds by (3.17) and Proposition 3.16

Hence we obtain (3.34). \(\square \)

Proposition 3.20

Let Z be a \(M_{d_2}({\mathbb {R}})\)-valued random variable. Then for any \(n \in {\mathbb {N}}, k=0,1,2,3\) and \(p>m_1+m_2\) it holds

Proof

By (2.14), \(\partial _{\theta _2}^k\{{\hat{m}}_{i}^n(\theta _2)'c(\theta _2)'\}\) is a sum of the form

where \(A_i\) and \(B_i\) are k-dimensional tensor valued continuously differentiable mappings on \(\Theta \). Thus if we set

it is enough to show

In the same way as Proposition 3.13, we first obtain

and

Therefore noting that \(\Psi _i(\theta )\) is \({\mathcal {F}}_{t_{i-1}}\)-measurable, we obtain (3.35) by (3.17). \(\square \)

Next, we define \(\tilde{{\mathbb {H}}}_n^2, {\tilde{\Delta }}_n^2\), \({\tilde{\Gamma }}_n^2\) and \(\tilde{{\mathbb {Y}}}_n^2\) by

and

respectively.

Proposition 3.21

For any \(n \in {\mathbb {N}}, p>m_1+m_2\) and \(k=0,1,2,3\), it holds

Proof

We only consider the case of \(k=0\). The rest is the same. By (2.15) and (3.36),

For the first three terms of the right-hand side, we have by Theorem 2.1 and Proposition 3.13

and by Proposition 3.20

In the same way, the third term can be bounded by \(C_p(n^{-\frac{1}{2}}h+h^\frac{1}{2})\).

Furthermore, making use of Proposition 3.13, (3.33) and Corollary 3.17, we can bound the fourth term by \(\displaystyle C_p\sum _{i=1}^nhe^{-Ct_i}\le C_ph\), noting that

Finally, the last two terms can be bounded by \(\displaystyle C_p+C_p\sum _{i=1}^n(h^{\frac{3}{2}}+n^{-\frac{1}{2}}h+e^{-Ct_i}h)\le C_p(1+nh^{\frac{3}{2}}+n^\frac{1}{2}h+h)\) due to the Corollary 3.18, Proposition 3.19 and the identity

Putting it all together, we obtain

\(\square \)

Proposition 3.22

For any \(p\ge 2\), it holds

Proof

If we set \({\tilde{M}}_j^n(\theta _2)=c(\theta _2){\tilde{m}}_j^n(\theta )\), we have

by (3.32), (3.38) and (3.51). Thus by Lemma 3.1 and (3.33),

\(\square \)

Next, we define the process \(\{\mu _t\}\) by replacing Y with \({\tilde{Y}}\) (therefore \(m_t^*\) with \(m_t(\theta ^*)\) and \(\gamma _t^*\) with \(\gamma _+(\theta ^*)\)) in (2.12);

Then as \(m_t\) is the solution of (2.11), so \(\mu _t\) is the solution of

Moreover, it holds \(\mu _t(\theta _2^*)={\tilde{m}}_t^*\) since by (3.31) \({\tilde{m}}_t^*\) is the solution of

which is equivalent to

Moreover, just as Proposition 3.14, the following proposition holds:

Proposition 3.23

For any \(n,i \in {\mathbb {N}}\) and \(p>m_1+m_2\), we have

Together with (3.33), we obtain the following corollary.

Corollary 3.24

For any \(i \in {\mathbb {N}}\) and \(p>m_1+m_2\), we have

Proposition 3.25

where \(O(e^{-t})\) is some continuous function \(r:\Theta \rightarrow {\mathbb {R}}\) such that

Proof

Therefore

Now we have

and thus by (2.6)

\(\square \)

Proposition 3.26

For any \(n \in {\mathbb {N}}\) and \(p>m_1+m_2\), it holds

Proof

Thus we have

For the first term of this, making use of Proposition 3.23, Corollary 3.24 and (3.33), we obtain

just as we evaluated the fourth term of (3.40).

Now we consider the second term. Due to the proof of Proposition 3.25, \(c(\theta _2)\mu _{t_i}^n(\theta _2)-c^*\mu _{t_i}^n(\theta _2^*)\) has the form

where

Then if we set \(\displaystyle \nu ^i_t(\theta _2)=p_i(\theta _2)+\int _0^{t}q_i(s;\theta _2)d{\overline{W}}_s\), Itô’s formula gives

Therefore

Now by Lemma 3.11 and the continuos differentiability of \(p_i\) and \(q_i\), we can assume \(\displaystyle \nu ^i_t(\theta _2)\) is continuously differentiable with respect to \(\theta _2\) and almost surely

Thus by Lemma 3.1 (2) we obtain for any \(T>0,p\ge 2\) and \(\theta _2,\theta _2' \in \Theta _2\)

and

Then again by Lemma 3.11, \(\displaystyle \int _{0}^{t_i}{\nu ^i_{s}(\theta _2)}'{\Sigma ^*}^{-1}q_i(s;\theta _2)d{\overline{W}}_s\) is continuously differentiable and we have almost surely

Therefore the Sobolev inequality gives for any \(p>m_1+m_2\)

Now we have \(|p_t(\theta _2)|\le Ce^{-Ct_i},|q_i(s;\theta _2)|\le Ce^{-C(t_i-s)}\) and hence

Thus we obtain

and therefore by Lemma 3.1

In the same way, we obtain

Hence by (3.48), it follows

and therefore by (3.47)

Finally, as for the third and fourth terms in (3.45), by the Sobolev inequality, Lemma 3.1 and (3.33) it holds

We obtain the desired result by putting (3.45), (3.46), (3.49) and (3.50) together. \(\square \)

Now we set

Then by (3.32) and (3.39), we have

Moreover, by (2.16) and (3.44), we obtain the following results in the same way as Propositions 3.25 and 3.26:

Proposition 3.27

It holds

Proof

Since \({\tilde{\Delta }}_n^2\) is given by the formula (3.41), we set

Then \((\xi _i^n)^{\otimes 2}\) is the matrix whose (i, j) entry is

Hence it follows from (3.53)

Moreover, we have for \(\epsilon >0\)

and hence

Therefore we obtain the desired result by the martingale central limit theorem. \(\square \)

Proposition 3.28

For any \(p>m_1+m_2\), it holds

Proof

Use (2.15) to divide the left-hand side into three parts, and then apply (3.13) and Proposition 3.20. \(\square \)

Proof of Theorem 2.2

We set \(\Delta _n^2, \Gamma _n^2\) and \({\mathbb {Y}}_n^2\) by

Then by Proposition 3.21 for any \(n \in {\mathbb {N}}\) and \(p>m_1+m_2\), it holds

and

Together with Proposition 3.22, (3.54) and Proposition 3.26, we have for any \(p>m_1+m_2\) (therefore for any \(p>0\))

and

Moreover, by Proposition 3.27 and (3.58) we obtain

Then we have proved the theorem by the assumption [A5], Proposition 3.28, (3.60)–(3.63) and Theorem 5 in Yoshida (2011). \(\square \)

4 One-dimensional case

In this section, we consider the special case where \(d_1=d_2=1\). In this case, \(a(\theta _2),b(\theta _2),c(\theta _2)\) and \(\sigma (\theta _1)\) are scalar valued, so we set \(m_1=1\) and \(\sigma (\theta _1)=\theta _1\). Moreover, we assume \(\Theta _1 \subset (\epsilon ,\infty )\) for some \(\epsilon >0\). In the one-dimensional case, (1.5) is reduced to

and the larger solution of this is

Thus we have

by (2.13). Furthermore, the eigenvalues of \(H(\theta _1,\theta _2)\) in Assumption [A4] is \(\pm \alpha (\theta _1,\theta _2)\) and hence one can remove Assumption [A4].

As for the estimation of \(\theta _1\), one can obtain the explicit expression of \({\hat{\theta }}_1^n\). In fact, we have

and hence

Thus we obtain the formula

Moreover, \({\mathbb {Y}}_1(\theta _1)\) and \(\Gamma ^1\) can be written as

and

Therefore noting that \(\displaystyle x^2-1-2\log x\ge (x-1)^2~(x\ge 0)\) we have

and hence (2.8) holds.

As for the estimation of \(\theta _2\), since we have

by (2.13), we obtain for \(\alpha (\theta _2)\ne a^*\)

making use of (2.6) and the identity

where \(\alpha ,\beta >0\) and \(p,q \in {\mathbb {R}}\). Even if \(\alpha (\theta _2)=a^*\), we obtain the same formula by letting \(a^* \rightarrow \alpha (\theta _2)\) in (4.3).

Now we obtain a sufficient condition for (2.9) by the following proposition.

Proposition 4.1

Assume [A3], \(\displaystyle \inf _{\theta _2 \in \Theta _2}|c(\theta _2)|>C\) and

Then it holds

Proof

Let us assume there is no constant C satisfying (4.5). Then there exists some sequence \(\theta _2^{(n)} \in \Theta _2~(n \in {\mathbb {N}})\) such that

and

Thus if we set

and

it follows that

noting that it holds \(B(\theta _2)-1>C\) by the assumptions and (4.1). In the same way, we have

but these contradict (4.4). \(\square \)

We similarly obtain the explicit expression of \(\Gamma ^2\) by (4.2):

Hence \(\Gamma ^2\) is positive definite if and only if \(\{\partial _{\theta _2}a(\theta ^*)\}^{\otimes 2}\) or \(\{\partial _{\theta _2}\alpha (\theta ^*)\}^{\otimes 2}\) is positive definite. This does not happen if \(m_2\ge 3\); in fact, one can take \(x \in {\mathbb {R}}^{m_2}\) so that \(x'\partial _{\theta _2}a(\theta ^*)=x'\partial _{\theta _2}\alpha (\theta ^*)\) if \(m_2\ge 3\). Thus we need to assume \(m_2 \le 2\) in the one-dimensional case.

Putting it all together, we obtain the following result.

Theorem 4.2

Let \(m_1=1, m_2 \le 2, \sigma (\theta _1)=\theta _2\) and \(\Theta _1 \subset (\epsilon ,\infty )\) for some \(\epsilon >0\). Moreover, we assume [A1], [A2] and the following conditions:

- [B1]:

-

$$\begin{aligned}&\inf _{\theta _2 \in \Theta _2}a(\theta _2)>0\\&\inf _{\theta _2 \in \Theta _2}|b(\theta _2)|>0\\&\inf _{\theta _2 \in \Theta _2}|c(\theta _2)|>0 \end{aligned}$$

- [B2]:

-

For any \(\theta _1 \in \Theta _1\) and \(\theta _2,\theta _2^* \in \Theta _2\),

$$\begin{aligned} |a(\theta _2,\theta _1)-a(\theta _2^*,\theta _1)|+|\alpha (\theta _2,\theta _1)-\alpha (\theta _2^*,\theta _1)|\ge C_{\theta _1}|\theta _2-\theta _2^*|. \end{aligned}$$ - [B3]:

-

For any \(\theta \in \Theta , \{\partial _{\theta _2}a(\theta )\}^{\otimes 2}\) or \(\{\partial _{\theta _2}\alpha (\theta )\}^{\otimes 2}\) is positive definite.

(1) If we set

then for every \(p>0\) and any continuous function \(f:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) such that

it holds that

where \(\displaystyle Z \sim N\left( 0,\frac{{\theta _1^*}^2}{2}\right) \).

In particular, it holds that

(2) Let us define \(\gamma _+(\theta _1,\theta _2)\) and \(\alpha (\theta _1,\theta _2)\) by

and

respectively, and set

and

where \(m_0 \in {\mathbb {R}}^{d_1}\) is an arbitrary initial value.

Then, if \({\hat{\theta }}^n_2={\hat{\theta }}^n_2(m_0)\) is a random variable satisfying

for each \(n \in {\mathbb {N}}\), then for any \(p>0\) and continuous function \(f:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) such that

it holds that

where \(Z \sim N(0,(\Gamma ^2)^{-1})\).

In particular, it holds that

5 Proof of Theorem 2.1

In this section, we prove Theorem 2.1, which can be proved in the same way as the diffusion case in Yoshida (2011).

Lemma 5.1

For every \(p\ge 2\) and \(A \in M_{d_1}({\mathbb {R}})\), it holds

Proof

First we get

For the first term of the rightest-hand side, we obtain by Lemmas 3.1 and 3.3

For the second and third terms, it holds

Therefore we get the desired result. \(\square \)

Lemma 5.2

Let \(A_k \in M_{d_1}({\mathbb {R}})~(k=1,2,\cdots ,d)\), \(A=(A_1,\cdots ,A_d)\) and

Then it holds that

and

Proof

On account of

\(\{A[(\Delta _jW^2)^{\otimes 2}]/h-\textrm{Tr}A\}_j\) is a martingale difference sequence with respect to \(\{{\mathcal {F}}_{t_j}\}\). Hence the Burkholder inequality gives

and we obtain (5.1).

Moreover, due to the fact that \(\{A[(\Delta _jW^2)^{\otimes 2}]/h-\textrm{Tr}A\}_j\) is independent and identically distributed, we have

Thus we obtain (5.2). \(\square \)

By Lemmas 5.1 and 5.2, we get the following lemma.

Lemma 5.3

Let \(A_k \in M_{d_1}({\mathbb {R}})~(k=1,2,\cdots ,d)\), \(A=(A_1,\cdots ,A_d)\) and

Then it holds that

and

Lemma 5.4

For every \(p>0\), it holds

Proof

It is enough to prove the inequality for sufficiently large p. By Lemmas 5.2 and 5.1 and Assumptions [A2] and [A4] we get

and similarly

Thus we get the desired result for \(p>d_1\) by the Sobolev inequality. \(\square \)

Proof of Theorem 2.1

Let

and

Then

and hence by Lemma 5.3, we obtain

and

By the same lemma, it follows that

noting that

is equal to \(-\partial _{\theta _1}^2\log \det \Sigma (\theta _1^*)+\Gamma ^1\).

Moreover, we can show

and in the same way

Thus by the Sobolev inequality, it holds for \(p>d_1\)

Then we have proved the theorem by the assumption [A5], Lemma 5.4, (5.5), (5.6), (5.7), (5.8) and Theorem 5 in Yoshida (2011). \(\square \)

6 Simulations

In this section, we will verify the results of the previous sections by computational simulations. We set \(d_1=d_2=1\) and consider the equations

with \(X_0=Y_0=0\), where we want to estimate \(\theta _1=\sigma \) and \(\theta _2=(a,b)\) from observations of \(Y_t\).

We generated sample data \(Y_{t_i}~(i=0,1,\cdots ,n)\) with \(n=10^6\), \(h=0.0001\) and true value of \((a^*,b^*,\sigma )=(1.5,0.3,0.02)\), and performed 10000 Monte Carlo replications. Recall that for the estimation of \(\theta _2\), we first calculate \({\hat{m}}\) by (2.14), and we have to choose its initial value \(m_0\). Although we proved that Theorem 2.2 holds for an arbitrary choice of \(m_0\), this value is a substitute for \(E[X_0|Y_0]\), and thus in practice the choice of \(m_0\) is very important as will be shown in the following. Also, the choice of the number of terms to drop, which is explained below, is relevant.

Taking these facts into account, we calculated the estimator of \(\theta _2\) in the following ways for each simulated data.

- Estimation (i):

-

\(m_0=0\).

- Estimation (ii):

-

\(m_0=1\).

- Estimation (iii):

-

\(m_0=1\) and removed first 100 terms of \({\hat{m}}_i^n\); i.e. we replaced \({\mathbb {H}}_n^2(\theta _2;m_0)\) with

$$\begin{aligned} \frac{1}{2}\sum _{i=101}^n\left\{ -h(c(\theta _2){\hat{m}}_{j-1}^n(\theta _2))^{2}+2{\hat{m}}_{j-1}^n(\theta _2)c(\theta _2)\Delta _jY\right\} . \end{aligned}$$ - Estimation (iv):

-

\(m_0=1\) and removed first 1000 terms of \({\hat{m}}_i^n\); i.e. we replaced \({\mathbb {H}}_n^2(\theta _2;m_0)\) with

$$\begin{aligned} \frac{1}{2}\sum _{i=1001}^n\left\{ -h(c(\theta _2){\hat{m}}_{j-1}^n(\theta _2))^{2}+2{\hat{m}}_{j-1}^n(\theta _2)c(\theta _2)\Delta _jY\right\} . \end{aligned}$$

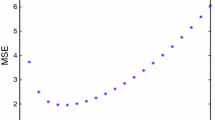

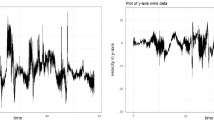

Table 1 shows the means and standard deviations of each estimators, and one can observe asymptotic normalities of them in Fig. 1. Note that the difference of four estimations are not relevant to the estimation of \(\theta _1\).

We can see from the results of first estimation and the corresponding histograms that the estimators behaved in accordance with the theory. At the same time, the following results shows that the wrong value of \(m_0\) can significantly impact the accuracy of our estimator, but it can be improved by leaving out first several terms of \({\hat{m}}_i^n\). One can figure out the reason why this modification of removing first terms works by looking at Fig. 2; it shows that \({\hat{m}}_i^n(\theta ^*)\) with \(m_0=1\) well approximate \(X_{t_i}\) except at the beginning. However, according to the result of Estimations (iii) and (iv), removing first 100 terms is not enough to improve estimators, whereas significant improvement is made by removing 1,000 terms. Thus, it is important choose \(m_0\) which is closer to \(E[X_0|Y_0]\) when some information of \(X_0\) is available. Note that if \(X_0\) and \(Y_0\) are independent, then the best choice of \(m_0\) is \(E[X_0|Y_0]=E[X_0]\).

On the other hand, when you have no information of \(X_0\), it will be interesting to consider the way to decide how many terms of \({\hat{m}}_i^n(\theta )\) should be removed. One possible way is to increase the removing number and calculate estimators until they converge. However, this method is computationally intensive, and more theoretical way will be needed.

The data and script that supports the findings of this study are available in the supplementary material of this article.

References

Brouste A, Fukasawa M, Hino H, Iacus SM, Kamatani K, Koike Y, Masuda H, Nomura R, Ogihara T, Shimuzu Y, Uchida M, Yoshida N (2014) The Yuima project: a computational framework for simulation and inference of stochastic differential equations. J Stat Softw 57(4):1–51. https://doi.org/10.18637/jss.v057.i04

Coppel W (1974) Matrix quadratic equations. Bull Aust Math Soc 10(3):377–401

Gloter A, Yoshida N (2021) Adaptive estimation for degenerate diffusion processes. Electron J Stat 15(1):1424–1472

Haber HE (2018) Notes on the matrix exponential and logarithm

Ibragimov IA, Has’ Minskii RZ (1981) Statistical estimation: asymptotic theory. Springer, Berlin

Kallianpur G (1980) Stochastic filtering theory. Springer, New York

Kamatani K, Uchida M (2015) Hybrid multi-step estimators for stochastic differential equations based on sampled data. Stat Inference Stoch Process 18(2):177–204

Kutoyants YA (1994) Identification of dynamical systems with small noise. Springer, Berlin

Kutoyants YA (2004) Statistical inference for ergodic diffusion processes. Springer, Berlin

Kutoyants YA (2019) On parameter estimation of hidden ergodic Ornstein–Uhlenbeck process. Electron J Stat 13(2):4508–4526. https://doi.org/10.1214/19-EJS1631

Kutoyants YA (2019) On parameter estimation of the hidden Ornstein–Uhlenbeck process. J Multivar Anal 169:248–263. https://doi.org/10.1016/j.jmva.2018.09.008

Leipnik R (1985) A canonical form and solution for the matrix Riccati differential equation. ANZIAM J 26(3):355–361

Leoni G (2017) A first course in Sobolev spaces. American Mathematical Society, Philadelphia

Liptser RS, Shiriaev AN (2001) Statistics of random processes—I. General theory. Springer, Berlin

Liptser RS, Shiriaev AN (2001) Statistics of random processes—II. Applications. Springer, Berlin

Masuda H (2019) Non-Gaussian quasi-likelihood estimation of sde driven by locally stable Lévy process. Stoch Process Appl 129(3):1013–1059

Nakakita SH, Kaino Y, Uchida M (2021) Quasi-likelihood analysis and bayes-type estimators of an ergodic diffusion plus noise. Ann Inst Stat Math 73(1):177–225

Ogihara T, Yoshida N (2011) Quasi-likelihood analysis for the stochastic differential equation with jumps. Stat Inference Stoch Process 14(3):189–229

Shimizu Y, Yoshida N (2006) Estimation of parameters for diffusion processes with jumps from discrete observations. Stat Inference Stoch Process 9(3):227–277

Sontag ED (2013) Mathematical control theory: deterministic finite dimensional systems, vol 6. Springer, Berlin

Sørensen H (2002) Estimation of diffusion parameters for discretely observed diffusion processes. Bernoulli 66:491–508

Uchida M, Yoshida N (2012) Adaptive estimation of an ergodic diffusion process based on sampled data. Stoch Process Appl 122(8):2885–2924

Yoshida N (1992) Estimation for diffusion processes from discrete observation. J Multivar Anal 41(2):220–242. https://doi.org/10.1016/0047-259X(92)90068-Q

Yoshida N (2011) Polynomial type large deviation inequalities and quasi-likelihood analysis for stochastic differential equations. Ann Inst Stat Math 63(3):431–479

Zhou K, Doyle J, Glover K (1996) Robust and optimal control. Feher/Prentice Hall Digital and Prentice Hall, Englewood Cliffs

Acknowledgements

The author is grateful to N. Yoshida for important advice and useful discussions. I also thank Y. Koike for his help with accomplishing the computational simulations.

Funding

Open access funding provided by The University of Tokyo.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kurisaki, M. Parameter estimation for ergodic linear SDEs from partial and discrete observations. Stat Inference Stoch Process 26, 279–330 (2023). https://doi.org/10.1007/s11203-023-09288-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11203-023-09288-w