Abstract

This study is intended to facilitate fair research evaluations in economics. Field- and time-normalisation of citation impact is the standard method in bibliometrics. Since citation rates for journal papers differ substantially across publication years and Journal of Economic Literature classification codes, citation rates should be normalised for the comparison of papers across different time periods and economic subfields. Without normalisation, both factors that are independent of research quality might lead to misleading results of citation analyses. We apply two normalised indicators in economics, which are the most important indicators in bibliometrics: (1) the mean normalised citation score (MNCS) compares the citation impact of a focal paper with the mean impact of similar papers published in the same economic subfield and publication year. (2) PPtop 10 % is the share of papers that belong to the 10% most cited papers in a certain subfield and time period. Since the MNCS is based on arithmetic averages despite skewed citation distributions, we recommend using PPtop 10 % for fair comparisons of entities in economics. In this study, we apply the normalisation methods to 294 journals (including normalised scores for 192,524 papers). We used the PPtop 10 % results for assigning the journals to four citation impact classes. Seventeen journals have been identified as outstandingly cited. Two journals, Quarterly Journal of Economics and Journal of Economic Literature, perform statistically significantly better than all other journals. Thus, only two journals can be clearly separated from the rest in economics.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Research evaluation is the backbone of economic research; common standards in research and high-quality work cannot be achieved without such evaluations (Bornmann 2011; Moed and Halevi 2015). It is a sign of the current science system—with its focus on accountability—that quantitative methods of research evaluation complement qualitative assessments of research (i.e. peer review). Today, the most important quantitative method is bibliometrics with its measurements of research output and citation impact (Bornmann in press). Whereas in the early 1960s, only a small group of specialists was interested in bibliometrics (e.g. Eugene Garfield, the inventor of Clarivate Analytics’ Journal Impact Factor, JIF), research activities in this area have substantially increased over the past two decades (Wouters et al. 2015). Today various bibliometric studies are being conducted based on data from individual researchers, scientific journals, universities, research organizations, and countries (Gevers 2014).

Citation impact is seen as a proxy of research quality, which measures one part of quality, namely usefulness (other parts are accuracy and importance, see Martin and Irvine 1983). Since impact measurements are increasingly used as a basis for funding or tenure decisions in science, citation impact indicators are the focus of bibliometric studies. In these studies it is often necessary to analyze citation impact across papers published in different fields and years. However, comparing counts of citations across fields and publication years leads to misleading results (see Council of Canadian Academies 2012). Since the average citation rates for papers published in different fields (e.g. mathematics and biology) and years differ significantly (independently of the quality of the papers) (Kreiman and Maunsell 2011; Opthof 2011), it is standard in bibliometrics to normalise citations. According to Abramo et al. (2011) and Waltman and Eck (2013b), field-specific differences in citation patterns arise for the following reasons: (1) different numbers of journals indexed for the fields in bibliometric databases (Marx and Bornmann 2015); (2) different citation and authorship practices, as well as cultures among fields; (3) different production functions across fields (McAllister et al. 1983); and (4) different numbers of researchers among fields (Kostoff 2002). The law of the constant ratios (Podlubny 2005) claims that the ratio of the numbers of citations in any two fields remains close to constant.

It is the aim of normalised bibliometric indicators “to correct as much as possible for the effect of variables that one does not want to influence the outcomes of a citation analysis” (Waltman 2016a, p. 375). In principle, normalised indicators compare the citation impact of a focal paper with a citation impact baseline defined by papers published in the same field and publication year. The recommendation to use normalised bibliometric indicators instead of bare citation counts is one of the ten guiding principles for research metrics listed in the Leiden manifesto (Hicks et al. 2015; Wilsdon et al. 2015).

This study is intended to introduce the approach of citation normalising in economics, which corresponds to the current state of the art in bibliometrics. “Standard approaches in bibliometrics to normalise citation impact” section presents two normalised citation indicators (see also “Appendix 2”): the mean normalised citation score (MNCS), which was the standard approach in bibliometrics over many years, and the current preferred alternative PPtop 10%. The MNCS normalises the citation count of a paper with respect to a certain economic subfield. PPtop 10% further corrects for skewness in subfields’ citation rates; the metric is based on percentiles. It determines whether a paper belongs to the 10% most frequently cited papers in a subfield. The subfield definition used in this study relies on the Journal of Economic Literature (JEL) classification system. It is well-established in economics and most of the papers published in economics journals have JEL codes attached.

In “Methods” section we describe our dataset and provide several descriptive statistics. We extracted all of the papers from the Web of Science (WoS, Clarivate Analytics) economics subject category published between 1991 and 2013. We matched these papers with the corresponding JEL codes listed in EconLit. Using citation data from WoS, we realized that the citation rates substantially differ across economic subfields. As in many other disciplines, citation impact analyses can significantly inspire or hamper the career paths of researchers in economics, their salaries and reputation (Ellison 2013; Gibson et al. 2014, 2017). In a literature overview Hamermesh (2018) demonstrates that citations are related to the salaries earned by economists. Fair research evaluations in economics should therefore consider subfield-specific differences in citation rates, because the differences are not related to research quality.

In “Results” section we introduce a new economics journal ranking based on normalised citation scores. We calculated these scores for 192,524 papers published in 294 journals (see also “Appendix 1”). Although several top journals are similarly positioned to other established journal rankings in economics, we found large differences for many journals. In “Discussion” section, we discuss our results and give some direction for future research. The subfield-normalisation approach can be applied to other entities than journals, such as researchers, research groups, institutions and countries.

Methods

A key issue in the calculation of normalised citation scores is the definition of fields and subfields, which are used to compile the reference sets (Wilsdon et al. 2015; Wouters et al. 2015). The most common approach in bibliometrics is to use subject categories that are defined by Clarivate Analytics for WoS or Elsevier for Scopus. These subject categories are sets of journals publishing papers in similar research areas, such as biochemistry, condensed matter physics and economics. They shape a multidisciplinary classification system covering a broad range of research areas (Wang and Waltman 2016). However, this approach has been criticized in recent years because it is stretched to its limits with multi-disciplinary journals, e.g. Nature and Science, and field-specific journals with a broad scope, e.g. Physical Review Letters and The Lancet. “These journals do not fit neatly into a field classification system” (Waltman and van Eck 2013a, p. 700), because they cannot be assigned to a single field or publish research from a broad set of subfields (Haddow and Noyons 2013).

It is not only specific for fields, but also for subfields that they have different patterns of productivity and thus different numbers of citations (Crespo et al. 2014; National Research Council 2010). Thus, it is an obvious alternative for field-specific bibliometrics to use a mono-disciplinary classification system (Waltman 2016a). It is an advantage of these systems that they are specially designed to represent the subfield patterns in a single field (Boyack 2004) and are assigned to papers on the paper-level (and not journal-level). The assignment of subfields at the paper level protects the systems from problems with multi-disciplinary journals. In recent years, various bibliometric studies have used mono-disciplinary systems. Chemical Abstracts (CA) sections are used in chemistry and related areas (Bornmann and Daniel 2008; Bornmann et al. 2011), MeSH (Medical Subject Headings) terms in biomedicine (Bornmann et al. 2008; Leydesdorff and Opthof 2013; Strotmann and Zhao 2010), PACS (Physics and Astronomy Classification Scheme) codes in physics and related areas (Radicchi and Castellano 2011), and the MathSciNet’s MSC (Mathematics subject classification) system in mathematics (Smolinsky and Lercher 2012).

The Journal of Econometric Literature (JEL) codes

In economics, the assignment of publications to subfields has a long history. Early classification attempts by the American Economic Association go back to the beginning of the 20th century when ten major categories were defined in the American Economic Review. These categories which have been developed to arrange publications to their subject matter have been subsequently revised several times and transferred to the EconLit system, including JEL codes. The majority of economics journals ask authors to provide JEL codes for their papers. A detailed overview of the history and meaning of JEL codes is provided by Cherrier (2017). In its current form (since 1991) all JEL codes—the main categories—are designed as “Exx”, i.e. a letter plus two stages of subcategories indicated by numbers (see https://www.aeaweb.org/jel/guide/jel.php). There are 20 categories at the main level, which are listed in Table 1. The main levels form the basis for the computation of the normalised scores in this study. The 133 categories at the first sub-level (e.g. E1) are used for robustness checks (see “Robustness” section; further disaggregated levels are not considered here).

According to Cherrier (2017) JEL codes are an important field-classification system in economics: “They provide a map with which to navigate the discipline on the American Economics Association (AEA) website. They are used to publish and search job offers, to skim job offers, to assign grant applications and submitted papers to referees, and to search for book reviewers. Bibliometric studies of the characteristics of economists’ publications, including size, age structure, co-authorship, subject-matter, methodology and citations patterns overwhelmingly rely on JEL codes to categorize papers” (p. 546). Kosnik (2018) used a dataset of articles which were published in the American Economic Review over twenty years to investigate whether the articles have been validly assigned to JEL codes. The results show that “JEL category codes do appear to represent papers that study topics and themes one would expect to be assigned to those codes” (p. 261). Thus, JEL codes seem to reflect research areas in economics validly.

Publication and citation data

WoS is the most important bibliographic database in bibliometrics. Most of the studies in this area are based on its publication and citation data. We downloaded all meta-data of the papers and the corresponding citations from the subject category “Economics”, which were published between 1991 and 2013. The data are from an in-house version of the WoS database. We used 1991 as the first year, since JEL codes were established in its current form in 1991. We obtained data for 224,867 papers with the document type “article” or “review”, which were published in 386 journals. With the exclusion of other document types (e.g. editorial material, notes, and comments), we focus in this study on substantial items.

We have made four adjustments to this dataset:

-

1.

We excluded publications of the Papers and Proceedings issues from the American Economic Review and the European Economic Review. These papers are usually very short due to space considerations from the journal (usually five to six pages). They often represent an extension only that has been left out in full-length papers published elsewhere.

-

2.

We only kept those papers published in journals that were listed in 2013 for at least four years. Thus, we excluded papers from journals that have stopped being listed (or reclassified) in WoS or deceased.

-

3.

The journals in which the papers have appeared had to be listed in EconLit, since the JEL codes were obtained from the Econlit database. If we were not able to match a paper via EconLit (because the publishing journal was not listed), we used JEL codes data from RePEc (see Zimmermann 2013). For these papers we applied a similar matching procedure as described by Angrist, Azoulay, Ellison, Hill, and Lu (2017a).

-

4.

Papers without JEL codes, or with JEL codes “Y” and “Z” were excluded from the study. The codes “Y” and “Z” are not related to a specific content.

The four adjustments ended up with 192,524 papers, which appeared in 294 journals. The citations of these papers refer to the time period between publication and the end of 2016. Thus, the citation counts of the papers are based on different citation windows (ranging between 4 and 26 years). The longer the citation window, the more the “true” impact of a paper can be determined (Research Evaluation and Policy Project 2005; Wang 2013). Glänzel (2008) and Glänzel et al. (2009) recommend using a citation window of at least three years. Johnston et al. (2013) show for papers published in the American Economic Review that the mean citation rate peaks in the fourth year after publication. Since the citations in our in-house database are counted until the end of 2016 (at the time when we conducted the study), papers that appeared after 2013 were not included in the study.

Descriptive statistics and differences in citation rates

Table 2 reports descriptive statistics for all papers in the dataset and for the papers from selected years in a 5 year time interval. The development over time shows that the number of economics journals increased. Correspondingly, the number of papers and assigned JEL codes also increased. Due to the diminishing citation window from 26 to 4 years, citation counts decrease and shares of non-cited papers increase over time. In Table 9 (see “Appendix 1”), we further report the number of papers, the time period covered in WoS, and descriptive citation statistics for each journal in our dataset. For 108 of all 294 journals in the set (37%), papers appeared across the complete time period from 1991 to 2013. For the other journals, the WoS coverage started later than 1991 (such as for the four American Economic journals). The results in Table 9 demonstrate that almost all journals published papers with zero citations. With an average of 145 citations, the highest citation rate was reached by the Quarterly Journal of Economics by way of comparison. Arellano and Bond (1991) is the most frequently cited paper in our set (with 4627 citations).

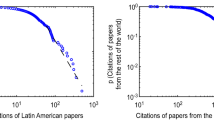

Table 3 shows average citation rates for papers assigned to different JEL codes. The results are presented for selected years in a five year time interval. It is clearly visible over all publication years that the average values differ substantially between the economics subfields. For example, papers published in 1991 in “General Economics and Teaching” (A) received on average 15.2 citations; with 49.5 citations this figure is more than three times larger in “Mathematical and Quantitative Methods” (C). Similar results for differences in citation rates of economic subfields have been published by van Leeuwen and Calero Medina (2012), Ellison (2013), Hamermesh (2018), and Perry and Reny (2016). The results in Table 3 also reveal that the average citation rates decline over time in most cases, as the citation window gets smaller.

The dependency of the average citations in economics on time and subfield, which is independent of research quality, necessitates the consideration of subfield and publication year in bibliometric studies. Without consideration of these differences, research evaluations are expected to be misleading and disadvantage economists newly publishing in the field or working in subfields with systematically low average citations (e.g. in subfield B “History of Economic Thought, Methodology, and Heterodox Approaches”).

Standard approaches in bibliometrics to normalise citation impact

Economics was already part of a few bibliometric studies, which considered field-specific differences (e.g. Ruiz-Castillo 2012). Palacios-Huerta and Volij (2004) and Angrist et al. (2017b) generalized an idea for citation normalisation that goes back to Liebowitz and Palmer (1984), where citations are weighted with respect to the citing journal. Angrist et al. (2017a) constructed their own classification scheme featuring ten subfields in the spirit of Ellison (2002). The classification builds upon JEL codes, keywords, and abstracts. Using about 135,000 papers published in 80 journals, the authors construct time varying importance weights for journals that account for the subfield where a paper was published.

Combes and Linnemer (2010) calculated normalised journal rankings for all EconLit journals. Although they considered JEL codes for the normalisation procedure, they calculated the normalisation at the journal, and not at the paper level. Linnemer and Visser (2016) document the most cited papers from the so called top-5 economics journals (Card and DellaVigna 2013), where they also account for time and JEL codes. With the focus on the top 5 journals, however, they considered only a small sample of journals and did not calculate bibliometric indicators.

In this study, we build upon the different normalization approaches published hitherto in economics by using, e.g. JEL codes as field-classification scheme for impact normalization and combine these approaches with recommendations from relevant metrics guidelines (e.g. Hicks et al. 2015).

Mean normalised citation score (MNCS)

The definition and use of normalised indicators in bibliometrics (based on mean citations) started in the mid-1980s with the papers by Schubert and Braun (1986) and Vinkler (1986). Here, normalised citation scores (NCSs) result from the division of the citation count of focal papers by the average citations of comparable papers in the same field or subfield. The denominator is the expected number of citations and constitutes the reference set of the focal papers (Mingers and Leydesdorff 2015; Waltman 2016a). Resulting impact scores larger than 1 indicate papers cited above-average in the field or subfield and scores below 1 denote papers with below-average impact.

Several variants of this basic approach have been introduced since the mid-1980s (Vinkler 2010) and different names have been used for the metrics, e.g. relative citation rate, relative subfield citedness, and field-weighted citation score. In the most recent past, the metric has been mostly used in bibliometrics under the label “MNCS”. Here the NCS for each paper in a publication set (of a researcher, institution, or country) are added up and divided by the number of papers in the set, which results in the mean NCS (MNCS). Since citation counts depend on the length of time between the publication year of the cited papers and the time point of the impact analysis (see Table 3), the normalisation is performed separately for each publication year.

Sandström (2014) published the following rules of thumb for interpreting normalised impact scores (of research groups):

“A. NCSf [field-normalised citation score] ≤ 0.6 significantly far below international average (insufficient)

B. 0.60 < NCSf ≤ 1.20 at international average (good)

C. 1.20 < NCSf ≤ 1.60 significantly above international average (very good)

D. 1.60 < NCSf ≤ 2.20 from an international perspective very strong (excellent)

E. NCSf > 2.20 global leading excellence (outstanding)” (p. 66).

Thus, excellent research has been published by an entity (e.g. journal or researcher), if the MNCS exceeds 1.6.

The MNCS has an important property, which is required by established normalised indicators (Moed 2015; Waltman et al. 2011): The MNCS value of 1 has a specific statistical meaning: it represents average performance and below-average and above-average performance can be easily identified.

A detailed explanation of how the MNCS is calculated in this study can be found in “Appendix 2”.

PPtop 10% —a percentile based indicator as the better alternative to the MNCS

Although the MNSC has been frequently used as indicator in bibliometrics, it has an important disadvantage: it uses the arithmetic average as a measure of central tendency, although distributions of citation counts are skewed (Seglen 1992). As a rule, field-specific paper sets contain many lowly or non-cited papers and only a few highly-cited papers (Bornmann and Leydesdorff 2017). Therefore, percentile-based indicators have become popular in bibliometrics, which are robust against outliers. According to Hicks et al. (2015) in the Leiden Manifesto, “the most robust normalisation method is based on percentiles: each paper is weighted on the basis of the percentile to which it belongs in the citation distribution of its field (the top 1, 10 or 20%, for example)” (p. 430). The recommendation to use percentile-based indicators can also be found in the Metric Tide (Wilsdon et al. 2015).

Against the backdrop of these developments in bibliometrics, and resulting recommendations in the Leiden Manifesto and the Metric Tide, we use the PPtop 10% indicator in this study as the better alternative to the MNCS. Since we are especially interested in the excellent papers (or journals) and the 1% is too restrictive (resulting in too few papers in the group of highly cited papers), we focus in this study on the 10% most highly cited papers. Basically, the PPtop 10% indicator is calculated on the basis of the citation distribution in a specific subfield whereby the papers are sorted in decreasing order of citations. Papers belonging to the 10% most frequently cited papers are assigned the score 1 and the others the score 0 in a binary variable. The binary variables for all subfields can then be used to calculate the Ptop 10% or PPtop 10% indicators. Ptop 10% is the absolute number of papers published by an entity (e.g. journal or institution) belonging to the 10% most frequently cited papers and PPtop 10% the relative number. Here, Ptop 10% is divided by the total number of papers in the set. Thus, it is the percentage of papers by an entity that are cited above-average in the corresponding subfields.

The detailed explanation of how the PPtop 10% indicator is calculated in this study can be found in “Appendix 2”.

Results

Comparison of citation counts, normalised citation scores (NCSs) and Ptop 10%

The normalisation of citations only makes sense in economics if the normalisation leads to meaningful differences between normalised scores and citations. However, one cannot expect complete independence, because both metrics measure impact based on the same data source.

Table 4 shows the papers with the largest NCSs in each subfield of economics. The listed papers include survey papers and methodological papers that are frequently used within and across subfields. We also find landmark papers in the table that have been continuously cited in the respective subfields. Linnemer and Visser (2016) published a similar list of most frequently cited papers in each subfield. For the JEL codes C, F, H, and R the same papers have been identified in agreement; differences are visible for the codes E, G, I, J, L, and O. Since Linnemer and Visser (2016) based their analyses on a different set of journals which is significantly smaller than our set, the differences are expectable.

The impact scores in Table 4 reveal that the papers are most frequently cited in the subfields with very different citation counts—between 344 citations in “General Economics and Teaching” (A) and 4627 citations in “Mathematical and Quantitative Methods” (C). Correspondingly, similar NCSs in the subfields reflect different citation counts. The list of papers also demonstrate that papers are assigned to more than one economic subfield. The paper by Acemoglu et al. (2001) is the most cited paper in four subfields. Since many other papers in the dataset are also assigned to more than one subfield, we considered a fractional counting approach of citation impact. The detailed explanation of how the fractional counting has been implemented in the normalisation can be found in “Appendix 2”.

Table 4 provides initial indications that normalisation is necessary in economics. However, the analysis could not include Ptop 10%, because this indicator is primarily a binary variable. To reveal the extent of agreement and disagreement between all metrics (citation counts, NCS, and Ptop 10%), we group the papers according to the Characteristics Scores and Scales (CSS) method, which is proposed by Glänzel and Schubert (1988). For each metric (citation counts and NCS), CSS scores are obtained by truncating the publication set at their metric mean and recalculating the mean of the truncated part of the set until the procedure is stopped or no new scores are generated. We defined four classes which we labeled with “poorly cited”, “fairly cited”, “remarkably cited”, and “outstandingly cited” (Bornmann and Glänzel 2017). Whereas poorly cited papers fall below the average impact of all papers in the set, the other classes are above this average and further differentiate the high impact area.

Table 5 (left panel) shows how the papers in our set are classified according to CSS with respect to citations and NCS. 84% of the papers are positioned on the diagonal (printed in bold), i.e. the papers are equally classified. The Kappa coefficient is a more robust measure of agreement than the share of agreement, since the possibility of agreement occurring by chance is taken into account (Gwet 2014). The coefficient in Table 5 highlights that the agreement is not perfect (which is the case with Kappa = 1). According to the guidelines by Landis and Koch (1977), the agreement between citations and NCS is only moderate.Footnote 1

The results in Table 5 show that 16% of the papers in the set have different classifications based on citations and NCS. For example, 13,843 papers are cited below average according to citations (classified as poorly cited), but above average cited according to NCS (classified as fairly cited). Two papers clearly stand out by being classified as poorly cited with respect to citations, but outstandingly cited with respect to the NCS. These are Lawson (2013) with 15 citations and an NCS of 7.8, and Wilson and Gowdy (2013) with 13 citations and an NCS of 6.8. There are also numerous papers in the set that are downgraded in impact measurement by normalised citations: 7226 papers are cited above average (fairly cited) according to citations, but score below average according to NCR (poorly cited). 546 papers are outstandingly cited if citations are used; but they are remarkably cited on the base of the NCR, i.e. if the subfield is considered in impact measurement.

Table 5 (right panel) also includes the comparison of citations and Ptop 10%. Several papers in this study are fractionally assigned to the 10% most-frequently cited papers in the corresponding subfields and publication years (see the explanation in “Appendix 2”). Since Ptop 10% is not completely a binary variable (with the values 0 or 1), we categorized the papers in our set into two groups: Ptop 10% ≤ 0.9 (being lowly cited) and Ptop 10% > 0.9 (being highly cited) for the statistical analysis. Nearly all of the papers classified as poorly cited on the basis of citations are also lowly cited on the basis of Ptop 10%. Thus, both indicators are more or less in agreement in this area. The results also show that some papers (n = 9,496) that are highly cited by Ptop 10% are classified differently by citations (remarkably or outstandingly cited). On the other hand, 898 papers are classified as poorly cited on the basis of citations, but are highly cited on the basis of Ptop 10%.

Taken together, the results in Table 5 demonstrate that normalisation leads to similar results as citations for many papers; however, there is also a moderate level of disagreement, which may lead to misleading results of impact analyses in economics based on citations.

New field- and time-normalised journal ranking

The first economics journal ranking was published by Coats (1971) who used readings from members of the American Economic Association as ranking criterion. With the emerging dominance of bibliometrics in research evaluation in recent decades, citations have become the most important source for ranking journals—in economics and beyond. The most popular current rankings in economics—besides conducting surveys among economists—are the relative rankings that are based on the approach of Liebowitz and Palmer (1984). Bornmann et al. (2018) provide a comprehensive overview of existing journal rankings in economics.

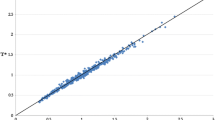

Since funding decisions and the offer of professorships in economics are mainly based on publications in reputable journals, journal rankings should not be influenced by different citation rates in economics subfields. Based on the NCS and the Ptop 10% for each paper in our set, we therefore calculated journal rankings by aggregating the normalised paper impact across years. Figure 1 visualizes the MNCSs and confidence intervals (CIs) of the 294 journals in our publication set, which are rank-ordered by the MNCS. The CIs are generated by adding and subtracting \(1.96*\frac{\sigma }{\sqrt N }\) from the MNCS, where \(\sigma\) denotes the corresponding population standard deviation (Cumming and Calin-Jageman 2016). Thus, we are sampling from the population distribution of MNCSs. If the CIs of two journals do not overlap, they differ “statistically significantly” (α = 1%) in their mean citation impact (Bornmann et al. 2014; Cumming 2012). The results should be interpreted against the backdrop of α = 1% (and not α = 5%), because the publication numbers are generally high in this study. The chance of receiving statistically significant results grows with increasing sample sizes.

We use CIs to receive indications of the “true” level of citation impact (differences), although there is a considerable disagreement among bibliometricians about the correctness of the use of confidence intervals and statistical significance when working with bibliometric indicators (Waltman 2016b; Williams and Bornmann 2016).Footnote 2 We follow the general argument by Claveau (2016) “that these observations [citations] are realizations of an underlying data generating process constitutive of the research unit [here: journals]. The goal is to learn properties of the data generating process. The set of observations to which we have access, although they are all the actual realizations of the process, do not constitute the set of all possible realizations. In consequence, we face the standard situation of having to infer from an accessible set of observations—what is normally called the sample—to a larger, inaccessible one—the population. Inferential statistics are thus pertinent” (p. 1233).

There are two groups including two journals each in Fig. 1, which are clearly separated from the other journals: Journal of Economic Literature and Quarterly Journal of Economics in the first group—confirming the result by Stern (2013)—and Journal of Political Economy and American Economic Review in the second group. The very high impact of the journals in the first group is especially triggered by a few very frequently cited papers appearing in these journals: 26 papers in these journals are among the 100 papers with the highest NCSs. Excluding this small group of papers, the CIs of the journals would overlap with many other journals. All other economic journals in the figure are characterized by overlaps of CIs (more or less clearly pronounced). Most of the journals in Fig. 1 do not differ statistically significantly from similarly ranked journals.

The alternative PPtop 10% journal ranking is based on the premise that the impact results for scientific entities (here: journals) should not be influenced by a few outliers, i.e. the few very highly-cited papers. Figure 2 shows the rank distribution of the journals on the basis of PPtop 10% and the corresponding CIs. The shape of the distribution exhibits a similar convexity as the distribution in Fig. 1. For the calculation of the CIs in Fig. 2 we defined three quantities: \(A = 2r + z^{2} ,\;B = z\sqrt {z^{2} + 4rq} \quad {\text{and}}\quad C = 2 (n + z^{2} )\), where r is the number of Ptop 10%, \(q - 1 - {\raise0.7ex\hbox{$1$} \!\mathord{\left/ {\vphantom {1 N}}\right.\kern-0pt} \!\lower0.7ex\hbox{$N$}}\) and z the corresponding value from the standard normal distribution. The CI for the population proportion is given by (A − B)/C to (A + B)/C (Altman et al. 2013).

In agreement with the MNCS results, we find the same two journals (Quarterly Journal of Economics and Journal of Economic Literature) at the top that are clearly separated from the others. The results confirm thus the previous results which are based on the MNCS. It seems that only two journals in economics (and not five journals as always supposed) can be clearly separated from the rest (in terms of field-normalised citations).

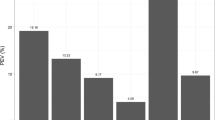

The overlaps of the CIs for the rest of the journals in Fig. 2 make it impossible to unambiguously identify specific performance groups of economics journals in terms of citation impact. We therefore used another (robust) method to classify the journals into certain impact groups and separate an outstandingly cited group (which include Quarterly Journal of Economics and Journal of Economic Literature). In “Comparison of citation counts, normalised citation scores (NCSs) and Ptop 10%” section we applied the CSS method to assign the papers in our set to four impact classes. Since the method can also be used with aggregated scores (Bornmann and Glänzel 2017), we assigned the journals in our set to four impact classes based on PPtop 10%. Table 9 in “Appendix 1” shows all journals (n = 294) with their assignments to the four groups: 205 journals are poorly cited, 62 journals are fairly cited, 14 journals are remarkably cited, and 13 journals are outstandingly cited.

Table 6 shows the 13 economics journals in the outstandingly cited group. Four additional journals are considered in the table. Their CIs include the threshold that separates the outstandingly cited journal group from the remarkably cited journal group. Thus, one cannot exclude the possibility that these journals also belong to the outstandingly cited group.

The three top journals in Table 6 are Quarterly Journal of Economics, Journal of Economic Literature, and Journal of Political Economy. With PPtop 10% of 70.48, 63.71, and 52.16, respectively, (significantly) more than half of the papers published in these journals are Ptop 10%. All journals in the table are able to publish significantly more papers in the corresponding subject categories and publication years than can be expected—the expected value is 10%. PPtop 10% of each journal is greater than 30%; thus, the journals published at least three times more Ptop 10% than can be expected.

In order to investigate the stability of journals in the outstandingly cited group, we annually assigned each economics journal in our set to the four citation impact classes (following the CSS approach). No journal falls in every year into the outstandingly cited group. Quarterly Journal of Economics, Journal of Political Economy, and Journal of Economic Literature missed the outstandingly cited category in only one year. American Economic Review is classified as outstandingly cited from 1992 to 2010 but not in the other four years. Review of Economics Studies and Econometrica are listed at 8 and 15, respectively, out of 23 years in the outstandingly cited category. The other journals in Table 6 are either classified as outstandingly or remarkably cited over the years; some journals are only fairly cited in certain years.

Comparisons with other journal rankings

How is the PPtop 10% journal ranking related to the results of other rankings in economics? The most simple form of ranking the journals is by their mean citation rate. The JIF is one of the most popular journal metric, which is based on the mean citation rate of papers within one year received by papers in the two previous years (Garfield 2006). In the comparison with PPtop 10% we use the mean citation rate for each journal. Since the citation window is not restricted to certain years in the calculation of PPtop 10%, we consider all citations from publication year until the end of 2016 in the calculation of the mean citation rate.

The RePEc website (see www.repec.org) has become an essential source for various rankings in economics. Based on a large and still expanding bibliometric database, RePEc publishes numerous rankings for journals, authors, economics departments and institutions. RePEc covers more journals and additional working papers, chapters and books compared to WoS (further details can be found in Zimmermann 2013). For the comparison with the PPtop 10% journal ranking, we consider two popular journal metrics from RePEc: the simple and the recursive Impact Factor (IF). The simple IF is the ratio of all citations to a specific journal and the number of listed papers in RePEc. The recursive IF also takes the prestige of the citing journal into account (Liebowitz and Palmer 1984). Whereas the simple and recursive IFs are based on citations from the RePEc database, the citations for calculating the mean citation rates (see above) are from WoS.

The results of the comparisons are reported in Table 7. Twenty three journals in our sample are not listed in RePEc, thus, we excluded these journals from all comparisons. We used the CSS method to classify all journals on the basis of the mean citation rate, PPtop 10%, as well as simple and recursive IFs, as outstandingly, remarkably, fairly, and poorly cited. In “Comparison of citation counts, normalised citation scores (NCSs) and Ptop 10%” section we applied the CSS method to assign the papers in our set to four impact classes. Since the method can also be used with aggregated scores (Bornmann and Glänzel 2017), we assigned the journals in our set to four impact classes based on the different indicators.

The Kappa coefficients in the table highlight a moderate agreement between the RePEC simple/recursive IF and PPtop 10% and a substantial agreement between PPtop 10% and mean citation rate (Landis and Koch 1977). Thus, the results reveal that there is considerable agreement, but also disagreement between the rankings. This finding can be expected if subfield-normalized citation metrics and citation metrics are compared. Both metrics groups are based on citation impact, why a considerable agreement is expectable. Since subfield-normalization correct citation impact in many cases moderately, but in a few cases substantially, the Kappa coefficients tend to be closer to almost perfect agreement than to no agreement.

Robustness

JEL codes are available on different levels. We used the main level with 18 categories in this study to normalise the data (see “The journal of econometric literature (JEL) codes” section). The first sub-level includes 122 categories. In a first robustness check of our new journal ranking in “New field- and time-normalised journal ranking” section we calculated PPtop 10% for all journals by using the 122 sub-levels, instead of the 18 main levels for normalisation. Again, we used the CSS method to classify the journals as outstandingly, remarkably, fairly, and poorly cited on the basis of PPtop 10% (see “Comparison of citation counts, normalised citation scores (NCSs) and Ptop 10%” section). Table 8 (see the part with the first robustness check) shows the comparison of two different PPtop 10% journal rankings, whereby one ranking was calculated on the basis of the JEL codes main level and the other on the basis of the JEL codes first subfield level. The Kappa coefficient and the percent agreement highlight a very high level of agreement between the rankings based on the two different subfield definitions. Thus, the journal results are robust to the use of the JEL code level for normalisation.

In two further robustness checks, we tested the results against the influence of extreme values: are the journals similarly classified as outstandingly, remarkably, fairly, and poorly cited, if the most-cited and lowly-cited papers in the journals are removed? The most-cited papers refer in the check to the most-cited papers of each journal in each year, which reduce the publication numbers by 4863 papers. The lowly-cited papers are defined as papers with zero citations or one citation (this reduced the publication numbers by almost one-fourth). The results of the further robustness checks are presented in Table 8 (see the parts with the second and third robustness checks). If the top-cited papers are excluded, the agreement is 95% and Kappa equals 0.91. Almost the same figures are obtained when we exclude lowly-cited papers, whereas the change in the classification scheme is slightly different. According to the guidelines of Landis and Koch (1977) the agreement in both cases is almost perfect, i.e. our results are robust.

In a final robustness check, we compared the PPtop 10% to the corresponding PPtop 50% journal ranking (see the results in Table 8). The PPtop 50% indicator is the percentage of papers (published by a journal) which are among the 50% most frequently cited papers in the corresponding economic subfields and publication years. As the PPtop 10% ranking is more selective than the PPtop 50% ranking, more journals in the PPtop 50% ranking are grouped in better categories than in the PPtop 10% ranking. As a consequence, the percent agreement between both rankings and the corresponding Kappa coefficient are only on a moderate level. All journals listed in Table 6 are also outstandingly cited based on the PPtop 50% ranking.

Discussion

Field- and time-normalisation of citation impact is the standard method in bibliometrics (Hicks et al. 2015), which should be applied in citation impact analyses across different time periods and subfields in economics. The most important reason is that there are different publication and citation cultures, which lead to subfield- and time-specific citation rates: for example, the mean citation rate in “General Economics and Teaching” decreases from 12 citations in 2000 to 5 citations in 2009. There is a low rate of only 7 citations in “History of Economic Thought, Methodology, and Heterodox Approaches”, but a high rate of 31 citations in “Financial Economics” (for papers published in 2001). Anauati et al. (2016) and other studies have confirmed the evidence that citation rates in subfields of economics differs. Without consideration of time- and subfield-specific differences in citation impact analysis, fair comparisons between scientific entities (e.g. journals, single researchers, research groups, and institutions) are impossible and entities with publication sets from recent time periods and in specific subfields are at a disadvantage.

In this study, we applied two normalised indicators in economics, which are the most important indicators in bibliometrics. The MNCS compares the citation impact of a focal paper with the mean impact of similar papers published in the same subfield and publication year. Thomson Reuters (2015) published a list of recommendations, which should be considered in the use of this indicator: for example, “use larger sets of publications when possible, for example, by extending the time period or expanding the number of subjects to be covered” (p. 15). We strongly encourage the consideration of the listed points in bibliometric studies in economic using the MNCS. However, Thomson Reuters (2015) and many bibliometricians view the influence of very highly cited papers on the mean as a measure of central tendency as a serious problem of the MNCS: “In our view, the sensitivity of the MNCS indicator to a single very highly cited publication is an undesirable property” (Waltman et al. 2012, p. 2425).

In recent years, percentiles have become popular as a better alternative to mean-based normalised indicators. The share of papers belonging to the x % most cited papers is regarded as the most important citation impact indicator in the Leiden Ranking (Waltman et al. 2012). According to Li and Ruiz-Castillo (2014), the percentile rank indicator is robust to extreme observations. In this study, we used the PPtop 10% indicator to identify highly cited papers in a certain subfield and time period. Besides focusing on the 10% most frequently cited papers, it is also possible to focus on the 1% or 20% most frequently cited papers (PPtop 1% or PPtop 20%). As the results of Waltman et al. (2012) show, however, the focus on another percentile rank is expected to lead to similar results. Besides percentiles, the use of log-transformed citations instead of citations in the MNCS formula has also been proposed as an alternative (Thelwall 2017). However, this alternative has not reached the status of a standard in bibliometrics yet.

In this study, we calculated normalised scores for each paper. The normalisation leads to similar impact assignments for many papers; however, there is also a high level of disagreement. There are several cases in the data that demonstrate unreasonable advantages or disadvantages for the papers if the impact is measured by citation counts without consideration of subfield- and time-specific baselines. For example, we can expect that papers published in “History of Economic Thought, Methodology, and Heterodox Approaches” and papers published recently are systematically disadvantaged in research evaluations across different subfields and time (because of their low mean citation rates). By contrast, papers from “Financial Economics” and papers published several years ago are systematically advantaged, since more citations can be expected. Thus, we attach importance to the consideration of normalisation in economic impact studies, which is strongly recommended by experts in bibliometrics (Hicks et al. 2015).

In this study, we introduce a new journal ranking, which is based on subfield-normalized citation scores. The results of the study reveal that only two journals can be meaningfully separated from the rest of economics journals (in terms of both indicators MNCS and PPtop 10%): Quarterly Journal of Economics and Journal of Economic Literature. This selection is based on field- and time-normalised impact indicators which are the best available indicators in bibliometrics for the quality assessment of journals. According to Bornmann and Marx (2014b), the benefit of citation analysis is based on what Galton (1907) called the “wisdom of crowds”. In the next few years, future studies should investigate with field-normalised indicators whether both journals can hold this position or will be replaced by other journals.

The ideal way of assessing entities in science, such as journals, is to combine quantitative (metrics) and qualitative (peer review) assessments to overcome the disadvantages of both approaches each. For example, the most-reputable journals that are used for calculating the Nature Index (NI, see https://www.natureindex.com) are identified by two expert panels (Bornmann and Haunschild 2017; Haunschild and Bornmann 2015). The NI counts the publications in these most-reputable journals; the index is used by the Nature Publishing Group (NPG) to rank institutions and countries. To apply the ideal method of research evaluation in economics, peer review and metrics should be combined to produce a list of top-journals in economics: a panel of economists uses the recommendations from our study with the two separated (based on CIs) and further 15 outstandingly cited journals and compare them with the rest of the journals according to their importance in economics. Ferrara and Bonaccorsi (2016) offer advice on how a journal ranking can be produced by using expert panels.

In this study we used a dataset with normalised scores on the paper level to identify the most frequently cited papers and journals. The dataset can be further used for various other entities in economics. The most frequently cited researchers, research groups, institutions, and countries can be determined subfield- and time-normalised. On the level of single researchers, we recommend that the normalised scores should be used instead of the popular h index proposed by Hirsch (2005). Like citation counts, the h index is not time- and subfield normalised. It is also dependent on the academic age of the researcher. Thus, Bornmann and Marx (2014a) recommended calculating the sum of Ptop 10% for a researcher and dividing it by the number of his or her academic years. This results in a subfield-, time-, and age-normalised impact score. In future studies, we will apply citation impact normalisation on different entities in economics. It would be helpful for these studies if normalised impact scores were to be regularly included in RePec, although it is a sophisticated task to produce these scores.

Change history

10 October 2019

Unfortunately, the original version of this article was published with the incorrect corresponding author���s name. The correct corresponding author of the article is Lutz Bornmann instead of Klaus Wohlrabe. This has been corrected with this correction.

Notes

Their guidelines for categorizing Kappa values are as follows: < 0 = no agreement, 0–0.20 = slight, 0.21–0.40 = fair, 0.41–0.60 = moderate, 0.61–0.80 = substantial, and 0.81–1 = almost perfect agreement.

References

Abramo, G., Cicero, T., & D’Angelo, C. A. (2011). Assessing the varying level of impact measurement accuracy as a function of the citation window length. Journal of Informetrics, 5(4), 659–667. https://doi.org/10.1016/j.joi.2011.06.004.

Acemoglu, D., Johnson, S., & Robinson, J. A. (2001). The colonial origins of comparative development: An empirical investigation. American Economic Review, 91(5), 1369–1401. https://doi.org/10.1257/aer.91.5.1369.

Altman, D., Machin, D., Bryant, T., & Gardner, M. (2013). Statistics with confidence: Confidence intervals and statistical guidelines. London: BMJ Books.

Anauati, V., Galliani, S., & Galvez, R. H. (2016). Quantifying the life cycle of scholarly articles across field of economic research. Economic Inquiry, 54(2), 1339–1355.

Angrist, J., Azoulay, P., Ellison, G., Hill, R., & Lu, S. F. (2017a). Economic research evolves: Fields and styles. American Economic Review, 107(5), 293–297. https://doi.org/10.1257/aer.p20171117.

Angrist, J., Azoulay, P., Ellison, G., Hill, R., & Lu, S. F. (2017b). Inside job or deep impact? Using extramural citations to assess economic scholarship. NBER working paper no. 23698. Cambridge, MA: National Bureau of Economic Research.

Arellano, M., & Bond, S. (1991). Some tests of specification for panel data—monte-carlo evidence and an application to employment equations. Review of Economic Studies, 58(2), 277–297. https://doi.org/10.2307/2297968.

Bornmann, L. (2011). Scientific peer review. Annual Review of Information Science and Technology, 45, 199–245.

Bornmann, L. (in press). Bibliometric indicators—Methods for measuring science. In R. Williams (Ed.), Encyclopedia of research methods. Thousand Oaks, CA: Sage.

Bornmann, L., Butz, A., & Wohlrabe, K. (2018). What are the top five journals in economics? A new meta-ranking. Applied Economics, 50(6), 659–675.

Bornmann, L., & Daniel, H.-D. (2008). Selecting manuscripts for a high impact journal through peer review: A citation analysis of communications that were accepted by Angewandte Chemie-International Edition, or rejected but published elsewhere. Journal of the American Society for Information Science and Technology, 59(11), 1841–1852. https://doi.org/10.1002/asi.20901.

Bornmann, L., & Glänzel, W. (2017). Applying the css method to bibliometric indicators used in (university) rankings. Scientometrics, 110(2), 1077–1079. https://doi.org/10.1007/s11192-016-2198-5.

Bornmann, L., & Haunschild, R. (2017). An empirical look at the nature index. Journal of the Association of Information Science and Technology, 68(3), 653–659. https://doi.org/10.1002/asi.23682.

Bornmann, L., & Leydesdorff, L. (2017). Skewness of citation impact data and covariates of citation distributions: A large-scale empirical analysis based on web of science data. Journal of Informetrics, 11(1), 164–175.

Bornmann, L., & Marx, W. (2014a). How to evaluate individual researchers working in the natural and life sciences meaningfully? A proposal of methods based on percentiles of citations. Scientometrics, 98(1), 487–509. https://doi.org/10.1007/s11192-013-1161-y.

Bornmann, L., & Marx, W. (2014b). The wisdom of citing scientists. Journal of the American Society of Information Science and Technology, 65(6), 1288–1292.

Bornmann, L., Mutz, R., Neuhaus, C., & Daniel, H.-D. (2008). Use of citation counts for research evaluation: Standards of good practice for analyzing bibliometric data and presenting and interpreting results. Ethics in Science and Environmental Politics, 8, 93–102. https://doi.org/10.3354/esep00084.

Bornmann, L., Schier, H., Marx, W., & Daniel, H.-D. (2011). Is interactive open access publishing able to identify high-impact submissions? A study on the predictive validity of Atmospheric Chemistry and Physics by using percentile rank classes. Journal of the American Society for Information Science and Technology, 62(1), 61–71.

Bornmann, L., Stefaner, M., de Moya Anegón, F., & Mutz, R. (2014). What is the effect of country-specific characteristics on the research performance of scientific institutions? Using multi-level statistical models to rank and map universities and research-focused institutions worldwide. Journal of Informetrics, 8(3), 581–593. https://doi.org/10.1016/j.joi.2014.04.008.

Boyack, K. W. (2004). Mapping knowledge domains: Characterizing PNAS. Proceedings of the National Academy of Sciences of the United States of America, 101, 5192–5199.

Card, D., & DellaVigna, S. (2013). Nine facts about top journals in economics. Journal of Economic Literature, 51(1), 144–161. https://doi.org/10.1257/jel.51.1.144.

Cherrier, B. (2017). Classifying economics: A history of JEL codes. Journal of Economic Literature, 55(2), 545–579.

Claveau, F. (2016). There should not be any mystery: A comment on sampling issues in bibliometrics. Journal of Informetrics, 10(4), 1233–1240. https://doi.org/10.1016/j.joi.2016.09.009.

Coats, A. (1971). The role of scholarly journals in the history of economics: An essay. Journal of Economic Literature, 9(1), 29–44.

Combes, P.-P., & Linnemer, L. (2010). Inferring missing citations: A quantitative multi-criteria ranking of all journals in economics. Working papers halshs-00520325, hal.

Council of Canadian Academies. (2012). Informing research choices: Indicators and judgment. The expert panel on science performance and research funding. Ottawa: Council of Canadian Academies.

Crespo, J. A., Herranz, N., Li, Y., & Ruiz-Castillo, J. (2014). The effect on citation inequality of differences in citation practices at the web of science subject category level. Journal of the Association for Information Science and Technology, 65(6), 1244–1256. https://doi.org/10.1002/asi.23006.

Cumming, G. (2012). Understanding the new statistics: Effect sizes, confidence intervals, and meta-analysis. London: Routledge.

Cumming, G., & Calin-Jageman, R. (2016). Introduction to the new statistics: Estimation, open science, and beyond. London: Taylor & Francis.

Ellison, G. (2002). The slowdown of the economics publishing process. Journal of Political Economy, 110(5), 947–993. https://doi.org/10.1086/341868.

Ellison, G. (2013). How does the market use citation data? The hirsch index in economics. American Economic Journal-Applied Economics, 5(3), 63–90. https://doi.org/10.1257/app.5.3.63.

Ferrara, A., & Bonaccorsi, A. (2016). How robust is journal rating in humanities and social sciences? Evidence from a large-scale, multi-method exercise. Research Evaluation, 25(3), 279–291. https://doi.org/10.1093/reseval/rvv048.

Galton, F. (1907). Vox populi. Nature, 75, 450–451. https://doi.org/10.1038/075450a0.

Garfield, E. (2006). The history and meaning of the journal impact factor. Journal of the American Medical Association, 295(1), 90–93.

Gevers, M. (2014). Scientific performance indicators: A critical appraisal and a country-by-country analysis. In W. Blockmans, L. Engwall, & D. Weaire (Eds.), Bibliometrics: Use and abuse in the review of research performance (pp. 43–53). London: Portland Press.

Gibson, J., Anderson, D. L., & Tressler, J. (2014). Which journal rankings best explain academic salaries? Evidence from the University of California. Economic Inquiry, 52(4), 1322–1340. https://doi.org/10.1111/ecin.12107.

Gibson, J., Anderson, D. L., & Tressler, J. (2017). Citations or journal quality: Which is rewarded more in the academic labor market? Economic Inquiry, 55(4), 1945–1965. https://doi.org/10.1111/ecin.12455.

Glänzel, W. (2008). Seven myths in bibliometrics. About facts and fiction in quantitative science studies. Paper presented at the fourth international conference on webometrics, informetrics and scientometrics (WIS 2008) & ninth COLLNET meeting, Berlin, Germany.

Glänzel, W., & Schubert, A. (1988). Characteristic scores and scales in assessing citation impact. Journal of Information Science, 14(2), 123–127.

Glänzel, W., Thijs, B., Schubert, A., & Debackere, K. (2009). Subfield-specific normalized relative indicators and a new generation of relational charts: Methodological foundations illustrated on the assessment of institutional research performance. Scientometrics, 78, 165–188.

Gwet, K. L. (2014). Handbook of inter-rater reliability, 4th edition: The definitive guide to measuring the extent of agreement among raters. Washington DC: Advanced Analytics LLC.

Haddow, G., & Noyons, E. (2013). Misfits? Research classification in research evaluation: Visualizing journal content within fields of research codes. Paper presented at the 14th International Conference of the International Society for Scientometrics and Informetrics Vienna (ISSI 2013), Austria, July 15-19, 2013 .

Hamermesh, D. S. (2018). Citations in economics: measurement, uses, and impacts. Journal of Economic Literature, 56(1), 115–156.

Haunschild, R., & Bornmann, L. (2015). Criteria for nature index questioned. Nature, 517(7532), 21.

Hicks, D., Wouters, P., Waltman, L., de Rijcke, S., & Rafols, I. (2015). Bibliometrics: The Leiden manifesto for research metrics. Nature, 520(7548), 429–431.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences of the United States of America, 102(46), 16569–16572. https://doi.org/10.1073/pnas.0507655102.

Johnston, D. W., Piatti, M., & Torgler, B. (2013). Citation success over time: Theory or empirics? Scientometrics, 95(3), 1023–1029. https://doi.org/10.1007/s11192-012-0910-7.

Kosnik, L.-R. (2018). A survey of JEL codes: What do they mean and are they used consistently? Journal of Economic Surveys, 32(1), 249–272. https://doi.org/10.1111/joes.12189.

Kostoff, R. N. (2002). Citation analysis of research performer quality. Scientometrics, 53(1), 49–71.

Kreiman, G., & Maunsell, J. H. R. (2011). Nine criteria for a measure of scientific output. Frontiers in Computational Neuroscience, 5, 48. https://doi.org/10.3389/fncom.2011.00048.

Landis, J. R., & Koch, G. G. (1977). Measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174.

Lawson, T. (2013). What is this ‘school’ called neoclassical economics? Cambridge Journal of Economics, 37(5), 947–983. https://doi.org/10.1093/cje/bet027.

Leydesdorff, L., & Opthof, T. (2013). Citation analysis with medical subject headings (MeSH) using the web of knowledge: A new routine. Journal of the American Society for Information Science and Technology, 64(5), 1076–1080. https://doi.org/10.1002/asi.22770.

Li, Y., & Ruiz-Castillo, J. (2014). The impact of extreme observations in citation distributions. Research Evaluation, 23(2), 174–182. https://doi.org/10.1093/reseval/rvu006.

Liebowitz, S. J., & Palmer, J. P. (1984). Assessing the relative impact of economics journals. Journal of Economic Literature, 22(1), 77–88.

Linnemer, L., & Visser, M. (2016). The most cited articles from the top-5 journals (1991–2015). In CESifo working paper series nr. 5999. Munich: CESifo Group.

Lundberg, J. (2007). Lifting the crown—Citation z-score. Journal of Informetrics, 1(2), 145–154.

Martin, B. R., & Irvine, J. (1983). Assessing basic research—Some partial indicators of scientific progress in radio astronomy. Research Policy, 12(2), 61–90.

Marx, W., & Bornmann, L. (2015). On the causes of subject-specific citation rates in web of science. Scientometrics, 102(2), 1823–1827. https://doi.org/10.1007/s11192-014-1499-9.

McAllister, P. R., Narin, F., & Corrigan, J. G. (1983). Programmatic evaluation and comparison based on standardized citation scores. IEEE Transactions on Engineering Management, 30(4), 205–211.

Mingers, J., & Leydesdorff, L. (2015). A review of theory and practice in scientometrics. European Journal of Operational Research, 246(1), 1–19. https://doi.org/10.1016/j.ejor.2015.04.002.

Moed, H. (2015). Comprehensive indicator comparisons intelligible to non-experts: The case of two SNIP versions. Scientometrics, 106, 1–15. https://doi.org/10.1007/s11192-015-1781-5.

Moed, H. F., & Halevi, G. (2015). Multidimensional assessment of scholarly research impact. Journal of the Association for Information Science and Technology, 66(10), 1988–2002. https://doi.org/10.1002/asi.23314.

Mutz, R. (2016). Some further aspects of sampling: Comment on Williams and Bornmann. Journal of Informetrics, 10(4), 1241–1242. https://doi.org/10.1016/j.joi.2016.09.007.

National Research Council. (2010). A revised guide to the methodology of the data-based assessment of research-doctorate programs in the united states. Washington, DC: The National Academies Press.

Opthof, T. (2011). Differences in citation frequency of clinical and basic science papers in cardiovascular research. Medical & Biological Engineering & Computing, 49(6), 613–621. https://doi.org/10.1007/s11517-011-0783-6.

Palacios-Huerta, I., & Volij, O. (2004). The measurement of intellectual influence. Econometrica, 72(3), 963–977. https://doi.org/10.1111/j.1468-0262.2004.00519.x.

Perry, M., & Reny, P. J. (2016). How to count citations if you must. American Economic Review, 106(9), 2722–2741.

Podlubny, I. (2005). Comparison of scientific impact expressed by the number of citations in different fields of science. Scientometrics, 64(1), 95–99.

Radicchi, F., & Castellano, C. (2011). Rescaling citations of publications in physics. Physical Review E, 83(4), 046116. https://doi.org/10.1103/physreve.83.046116.

Rehn, C., Kronman, U., & Wadskog, D. (2007). Bibliometric indicators—Definitions and usage at karolinska institutet. Stickholm: Karolinska Institutet University Library.

Research Evaluation and Policy Project. (2005). Quantitative indicators for research assessment—A literature review (REPP discussion paper 05/1). Canberra, Australia: Research Evaluation and Policy Project, Research School of Social Sciences, The Australian National University.

Ruiz-Castillo, J. (2012). The evaluation of citation distributions. SERIEs: Journal of the Spanish Economic Association, 3(1), 291–310. https://doi.org/10.1007/s13209-011-0074-3.

Sandström, U. (2014). Bibliometric evaluation of sepa-funded large research programs 2003–2013. Stockholm: Swedish Environmental Protection Agency.

Schneider, J. W. (2016). The imaginarium of statistical inference when data are the population: Comments to Williams and Bornmann. Journal of Informetrics, 10(4), 1243–1248. https://doi.org/10.1016/j.joi.2016.09.011.

Schubert, A., & Braun, T. (1986). Relative indicators and relational charts for comparative assessment of publication output and citation impact. Scientometrics, 9(5–6), 281–291.

Seglen, P. O. (1992). The skewness of science. Journal of the American Society for Information Science, 43(9), 628–638.

Smolinsky, L., & Lercher, A. (2012). Citation rates in mathematics: A study of variation by subdiscipline. Scientometrics, 91(3), 911–924. https://doi.org/10.1007/s11192-012-0647-3.

Stern, D. I. (2013). Uncertainty measures for economics journal impact factors. Journal of Economic Literature, 51(1), 173–189. https://doi.org/10.1257/jel.51.1.173.

Strotmann, A., & Zhao, D. (2010). Combining commercial citation indexes and open-access bibliographic databases to delimit highly interdisciplinary research fields for citation analysis. Journal of Informetrics, 4(2), 194–200. https://doi.org/10.1016/j.joi.2009.12.001.

Thelwall, M. (2017). Three practical field normalised alternative indicator formulae for research evaluation. Journal of Informetrics, 11(1), 128–151. https://doi.org/10.1016/j.joi.2016.12.002.

Thomson Reuters. (2015). InCites indicators handbook. Philadelphia, PA: Thomson Reuters.

van Leeuwen, T. N., & Calero Medina, C. (2012). Redefining the field of economics: Improving field normalization for the application of bibliometric techniques in the field of economics. Research Evaluation, 21(1), 61–70. https://doi.org/10.1093/reseval/rvr006.

Vinkler, P. (1986). Evaluation of some methods for the relative assessment of scientific publications. Scientometrics, 10(3–4), 157–177.

Vinkler, P. (2010). The evaluation of research by scientometric indicators. Oxford: Chandos Publishing.

Waltman, L. (2016a). A review of the literature on citation impact indicators. Journal of Informetrics, 10(2), 365–391.

Waltman, L. (2016b). Special section on statistical inference in citation analysis. Journal of Informetrics, 10(4), 1224. https://doi.org/10.1016/j.joi.2016.09.008.

Waltman, L., Calero-Medina, C., Kosten, J., Noyons, E. C. M., Tijssen, R. J. W., van Eck, N. J., et al. (2012). The Leiden ranking 2011/2012: Data collection, indicators, and interpretation. Journal of the American Society for Information Science and Technology, 63(12), 2419–2432.

Waltman, L., & Schreiber, M. (2013). On the calculation of percentile-based bibliometric indicators. Journal of the American Society for Information Science and Technology, 64(2), 372–379.

Waltman, L., & van Eck, N. J. (2013a). Source normalized indicators of citation impact: An overview of different approaches and an empirical comparison. Scientometrics, 96(3), 699–716. https://doi.org/10.1007/s11192-012-0913-4.

Waltman, L., & van Eck, N. J. (2013b). A systematic empirical comparison of different approaches for normalizing citation impact indicators. Journal of Informetrics, 7(4), 833–849.

Waltman, L., van Eck, N. J., van Leeuwen, T. N., Visser, M. S., & van Raan, A. F. J. (2011). Towards a new crown indicator: Some theoretical considerations. Journal of Informetrics, 5(1), 37–47. https://doi.org/10.1016/j.joi.2010.08.001.

Wang, J. (2013). Citation time window choice for research impact evaluation. Scientometrics, 94(3), 851–872. https://doi.org/10.1007/s11192-012-0775-9.

Wang, Q., & Waltman, L. (2016). Large-scale analysis of the accuracy of the journal classification systems of web of science and scopus. Journal of Informetrics, 10(2), 347–364.

Williams, R., & Bornmann, L. (2016). Sampling issues in bibliometric analysis. Journal of Informetrics, 10(4), 1253–1257.

Wilsdon, J., Allen, L., Belfiore, E., Campbell, P., Curry, S., Hill, S., et al. (2015). The metric tide: Report of the independent review of the role of metrics in research assessment and management. Bristol: Higher Education Funding Council for England (HEFCE).

Wilson, D. S., & Gowdy, J. M. (2013). Evolution as a general theoretical framework for economics and public policy. Journal of Economic Behavior & Organization, 90, S3–S10. https://doi.org/10.1016/j.jebo.2012.12.008.

Wouters, P., Thelwall, M., Kousha, K., Waltman, L., de Rijcke, S., Rushforth, A., et al. (2015). The metric tide: Literature review (supplementary report i to the independent review of the role of metrics in research assessment and management). London: Higher Education Funding Council for England (HEFCE).

Zimmermann, C. (2013). Academic rankings with repec. Econometrics, 1(3), 249–280.

Acknowledgements

Open access funding provided by Max Planck Society. The bibliometric data used in this paper are from an in-house database developed and maintained by the Max Planck Digital Library (MPDL, Munich) and derived from the Science Citation Index Expanded (SCI-E), Social Sciences Citation Index (SSCI), Arts and Humanities Citation Index (AHCI) prepared by Clarivate Analytics (Philadelphia, Pennsylvania, USA). We would like to thank Ludo Waltman at the Centre for Science and Technology Studies (CWTS) of the Leiden University for his support in calculating the field-normalised indicators. We would also like to thank David Card, Daniel Hamermesh, Stefano DellaVigna and participants at the workshop “Economics of Science and Innovation” at the Summer Forum in Barcelona 2017 for their valuable feedback on previous versions of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1

Appendix 2

Calculation of the Mean Normalised Citation Score (MNCS)

For the calculation of the MNCS, each paper’s citations in a paper set (of a journal, researcher, institution, or country) are divided by the mean citation impact in a corresponding reference set; the received NCSs are averaged to the MNCS. Table 10 shows how the MNCs are calculated for two fictitious journals. For example, the NCS for paper number 2 is 3/10.67 = 0.28; the MNCS for journal B is (0.28 + 1.00)/2 = 0.64. The MNCS is formally defined as (Waltman et al. 2011)

where ci is the citation count of a focal paper and ei is the corresponding expected number of citations in the economic subfield (JEL code). The MNCS is defined similar to the item-oriented field-normalised citation score average indicator (Lundberg 2007; Rehn et al. 2007). Since citation counts depend on the length of time between the publication year of the cited papers and the time point of the impact analysis (see Table 3), the normalisation is performed separately for each publication year.

It is a nice property of the MNCS that it leads to an average value of 1. However, this is only valid in a paper set (with papers from one year) if each paper is assigned to one field. However, many of the field classification systems (e.g. JEL codes) assign papers to more than one field. Table 10 shows a simple example that illustrates the problem with the multi-assignment of papers. Paper number 5 is assigned to two fields. The obvious solution for the calculation of the NCS would be to calculate an average of two ratios for this paper: ((9/10.67) + (9/8.5))/2 = 0.95. However, this solution leads to an average value of greater than 1 (1.01) across the five papers in Table 10.

In order to solve this problem, Waltman et al. (2011) propose the following two calculations, which ensure a mean value of 1 (see Table 11): (1) The expected number of citations for field X is calculated as follows: (20 + 3+(9*0.5))/(1 + 1+0.5) = 11. Thus, the citations of paper 5 are fractionally counted; the calculation for field Y is correspondingly: (8 + (9*0.5))/(1 + 0.5). (2) The NCS for paper 5 also considers its fractional assignment to two fields and is calculated as follows: (9/11*0.5) + (9/8.33*0.5). Both calculations lead to the desired property of the indicator that it results in a mean value of 1 across all papers in a field—although the papers might be assigned to more than one field.

Calculation of the percentile based indicator: PPtop 10% (and PPtop 50%)

Table 12 uses an example dataset to demonstrate how the PPtop 10% indicator is calculated. Basically, the indicator is generated on the basis of the citation distribution in a field (here: field A) whereby the papers are sorted in decreasing order of citations. Papers belonging to the 10% most frequently cited papers are assigned the score 1 and the others the score 0 in a binary variable. The binary variable can then be used to calculate the Ptop 10% or PPtop 10% indicators. Ptop 10% is the absolute number of papers published in field A belonging to the 10% most frequently cited papers (here: 1) and PPtop 10% the relative number whereas Ptop 10% is divided by the total number of papers (1/10*100 = 10). If a journal (here: journal X) had published 6 papers from field A (and no further papers in other fields), Ptop 10% = 1 and PPtop 10% = 16.7% (1/6*100).

The PPtop 10% indicator is concerned by two problems, whereby the solution for the first problem is outlined in Table 13. Citation distributions are characterized by ties, i.e. papers having the same number of citations. The ties lead to problems in identifying the 10% most frequently cited papers, if the ties concern papers around the threshold of 10% in a citation distribution. We explain the problem and the solution based on the PPtop 50% indicator, because the use of this indicator needs to include fewer papers in an example than the PPtop 10%. However, the procedure is the same with PPtop 10%.

In Table 13, the 7 papers with 20 citations can be clearly assigned to the 50% most frequently cited papers and the 5 papers with 0 citations to the rest. However, this is not possible for the 6 papers with 10 citations; they cannot be clearly assigned to one of both groups. Waltman and Schreiber (2013) propose a solution for this problem, which leads to exactly 50% most frequently cited papers in a field despite the existence of papers with the same number of citations (around the threshold). We explain their solution using the example data in Table 13.

Each of the 18 papers in field B represents 1/18 = 5.56% of the field-specific citation distribution. Hence, together the 7 papers with 20 citations represent 7*5.56% = 38.92% of the citation distribution, the 6 papers with 10 citations represent 6*5.56% = 33.36% of the citation distribution, and the 5 papers with 0 citations represent 5*5.56% = 27.8%. We would like to identify the 50% most frequently cited papers, whereby the 6 papers with 10 citations are still unclear. Waltman and Schreiber (2013) fractionally assign these papers to the 50% most frequently cited papers, so that we end up with 50% 50% most frequently cited papers.

The 7 papers with 20 citations cover 38.92% of the 50% most frequently cited papers. The rest (50%-38.92% = 11.08%) needs to be covered by the 6 papers with 10 citations. In order to reach this goal, the segment of the citation distribution covered by the papers with 10 citations must be split into two parts, one part covering 11.08% of the distribution, the other part covering the remaining 33.36–11.08% = 22.28%. This other part (22.28%) belongs to the bottom 50% of the citation distribution. Splitting the segment of the distribution covered by papers with 10 citations is done by assigning each of the 6 papers to the 50% most frequently cited papers with a fraction of 11.08%/33.36% = 0.33. The value 11.08% represents the share of the papers with 10 citations, which belong to the 50% most frequently cited papers; 33.36% is the percentage of papers in the field with 10 citations.

In this way, we obtain 50% 50% most frequently cited papers, since ((0.33*6) + 7)/18 equals 50%. There are 6 papers in the field with 10 citations, which are fractionally assigned to the 50% most frequently cited papers, and 7 papers with 20 citations that clearly belong to the 50% most frequently cited papers.

Table 14 shows an example that reveals the second problem with the PPtop 50% indicator: papers are assigned not only to one, but to two or more fields. The example in Table 14 consists of 16 papers whereby 1 paper belongs to two fields. In these cases, the papers in multiple fields are fractionally counted for the calculation of PPtop 50% following the approach of Waltman et al. (2011).

We explain the approach using the example in Table 14. Since 1 paper in the table belongs to two fields (B and C), it is weighted by 0.5 instead of 1 (the other papers in the sets which belong to one field each are weighted with 1). This leads to 15.5 papers in field B and 10.5 papers in field C.

In field B, the papers with 20 citations represent 29.03% of the citation distribution (4.5/15.5), the papers with 10 citations 38.71% (6/15.5), and the papers with 0 citations 32.26% (5/15.5). Thus, the papers with 20 citations cover 29.03% of the 50% most frequently cited papers. The rest with 20.97% (50%-29.03%) should be covered by the 6 papers with 10 citations. Splitting the segment of the distribution covered by papers with 10 citations is done by assigning each of the 6 papers to the 50% most frequently cited papers with a fraction of 20.97%/38.71% = 0.54. Thus, we obtain 50% 50% most frequently cited papers since ((0.54*6) + 4.5)/15.5 equals 50%.