Abstract

This paper studies the evaluation of research units that publish their output in several scientific fields. A possible solution relies on the prior normalization of the raw citations received by publications in all fields. In a second step, a citation indicator is applied to the units’ field-normalized citation distributions. In this paper, we also study an alternative solution that begins by applying a size- and scale-independent citation impact indicator to the units’ raw citation distributions in all fields. In a second step, the citation impact of any research unit is calculated as the average (weighted by the publication output) of the citation impact that the unit achieves in each field. The two alternatives are compared using the 500 universities in the 2013 edition of the CWTS Leiden Ranking, whose research output is evaluated according to two citation impact indicators with very different properties. We use a large Web of Science dataset consisting of 3.6 million articles published in the 2005–2008 period, and a classification system distinguishing between 5119 clusters. The two main findings are as follows. Firstly, differences in production and citation practices between the 3332 clusters with more than 250 publications account for 22.5 % of the overall citation inequality. After the standard field-normalization procedure, in which cluster mean citations are used as normalization factors, this quantity is reduced to 4.3 %. Secondly, the differences between the university rankings produced by the two solutions to the all-sciences aggregation problem are small for both citation impact indicators.


Notes

SCImago is a research group from the Consejo Superior de Investigaciones Científicas and the Universities of Granada, Extremadura, Carlos III (Madrid) and Alcalá de Henares in Spain. The SCImago Institutions Rankings (SIR; www.scimagoir.com) is a bibliometric ranking of research institutions based on Elsevier’s Scopus database.

A similar definition is applied in the SCImago ranking (Bornmann et al. 2012), as well as in the InCites software in the Web of Science (see ‘percentile in subject area’ in http://incites.isiknowledge.com/common/help/h_glossary.html).

Naturally, everything that we say for the all-sciences case can be equally applied at other aggregation levels, as in the case of aggregating articles in Organic Chemistry, Inorganic Chemistry, Chemical Engineering, and other related sub-fields into the discipline of Chemistry.

Target (or cited-side) normalization procedures depend on a given classification system that includes a number of different fields. To recognize this feature, it is useful to refer to these procedures as field-normalization procedures. This is the practice we follow in this paper.

More generally, in this section we assume that the assignment of articles in D to the I research units is such that the set of distributions \( \varvec{c}_{ij} \) forms a partition of \( \varvec{c}_{j} \).

For a justification of including distributional considerations in citation analysis, see Albarrán et al. (2011a).

Given θ, an indicator F is said to be subgroup consistent if the overall citation impact of distribution c, F(c; θ), increases whenever the citation impact of one of the subgroups, say F(\( \varvec{c}_{g} \); θ), increases while the citation impact of every other subgroup, F(\( \varvec{c}_{{g^{\prime}}} \); θ) for all \( g^{\prime} \ne g \), remains constant. Additively decomposable indicators are clearly subgroup consistent. For the converse direction, see Foster and Shorrocks (1991) or the summary in Perianes-Rodriguez and Ruiz-Castillo (2015b).
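To make the property concrete, the following Python sketch checks additive decomposability and subgroup consistency for one simple indicator. It assumes that F(c; θ) is the share of articles with more than θ citations for a fixed, given θ. The indicator, the threshold θ = 4, the function names, and the citation counts are hypothetical choices made for illustration only.

```python
from fractions import Fraction


def share_above(dist, theta):
    """F(c; theta): share of articles with more than theta citations.

    Hypothetical illustrative indicator; theta is held fixed (given).
    """
    return Fraction(sum(1 for c in dist if c > theta), len(dist))


def weighted_sum(subgroups, theta):
    """Sum over g of (N_g / N) * F(c_g; theta)."""
    n = sum(len(g) for g in subgroups)
    return sum(Fraction(len(g), n) * share_above(g, theta) for g in subgroups)


def pooled(subgroups):
    """Concatenate subgroup distributions into the overall distribution c."""
    return [c for g in subgroups for c in g]


theta = 4
groups = [[0, 1, 3, 8], [2, 2, 5], [10, 0]]  # made-up citation counts

# Additive decomposability: F(c; theta) equals the size-weighted subgroup sum.
overall = share_above(pooled(groups), theta)
assert overall == weighted_sum(groups, theta)

# Subgroup consistency: improve subgroup 1 only (same size, others unchanged).
improved = [groups[0], [2, 6, 5], groups[2]]
assert share_above(improved[1], theta) > share_above(groups[1], theta)
assert share_above(pooled(improved), theta) > overall
print(overall, share_above(pooled(improved), theta))  # 1/3 4/9
```

Exact fractions are used so that the decomposition holds with equality rather than up to floating-point rounding.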

The aggregation of V heterogeneous sub-fields examined in situation C in “The additive decomposability property” section admits a similar solution: \( F(\varvec{c}_{j}^{*}; \theta^{*}) = \sum_{v} (N_{vj}/N_{j})\, F(\varvec{c}_{vj}^{*}; \theta^{*}) \).
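A minimal sketch of this field-normalized route follows. It assumes the standard procedure, in which each article's citations are divided by the mean citations of its sub-field. As a hypothetical indicator, it takes the share of articles whose normalized citations exceed a common threshold θ* = 2. The sub-field data and function names are invented; the point is only that the value for the pooled normalized distribution equals the size-weighted sum of the sub-field values.

```python
from fractions import Fraction


def normalize(subfield):
    """Divide raw citations by the sub-field mean (standard target normalization)."""
    mu = Fraction(sum(subfield), len(subfield))
    return [Fraction(c) / mu for c in subfield]


def share_above(dist, theta):
    """Hypothetical indicator F(c*; theta*): share of articles above theta*."""
    return Fraction(sum(1 for c in dist if c > theta), len(dist))


theta_star = 2  # common characteristic of the normalized distribution (assumed)
raw = {"A": [0, 1, 2, 5, 12], "B": [0, 0, 1, 3]}  # made-up raw citations per sub-field
normalized = {v: normalize(c) for v, c in raw.items()}

n_j = sum(len(d) for d in normalized.values())
pooled = [c for d in normalized.values() for c in d]
weighted = sum(Fraction(len(d), n_j) * share_above(d, theta_star)
               for d in normalized.values())

# F(c_j*; theta*) == sum_v (N_vj / N_j) * F(c_vj*; theta*)
assert share_above(pooled, theta_star) == weighted
print(share_above(pooled, theta_star))  # 2/9
```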

The aggregation of V sub-fields examined in situation C in “The additive decomposability property” section admits a similar solution: \( F(\varvec{c}_{j}; \theta_{j}) = \sum_{v} (N_{vj}/N_{j})\, F(\varvec{c}_{vj}; \theta_{vj}) \), where \( \theta_{vj} \) is the characteristic of citation distribution \( \varvec{c}_{vj} \) at the sub-field level, for every j = 1, …, J.
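The alternative route can be sketched in the same way for a single research unit. As a hypothetical convention, θ_vj is taken to be the citation count of the article ranked k-th from the top in sub-field v, with k = ⌈0.2 N_v⌉. The indicator is the share of the unit's articles with at least θ_vj citations. Percentile thresholds can be defined in several ways (see Waltman and Schreiber 2013). This particular rule, the function names, and the data are illustrative assumptions, not the paper's procedure.

```python
import math
from fractions import Fraction


def threshold(subfield, top_pct=20):
    """theta_vj: citations of the article ranked ceil(top_pct% * N_v) from the top.

    Hypothetical percentile convention chosen for simplicity.
    """
    k = math.ceil(len(subfield) * top_pct / 100)
    return sorted(subfield, reverse=True)[k - 1]


def share_at_least(dist, theta):
    """F(c; theta): share of articles with at least theta citations."""
    return Fraction(sum(1 for c in dist if c >= theta), len(dist))


# Made-up world distributions per sub-field and one unit's articles in each.
world = {"A": [0, 1, 2, 3, 4, 5, 6, 8, 10, 20], "B": [0, 0, 0, 1, 1, 2, 3, 4]}
unit = {"A": [10, 3, 0], "B": [4, 1]}

thetas = {v: threshold(c) for v, c in world.items()}  # {'A': 10, 'B': 3}
n_unit = sum(len(c) for c in unit.values())

# Step 1: indicator on raw citations within each sub-field, using its own theta.
per_subfield = {v: share_at_least(unit[v], thetas[v]) for v in unit}
# Step 2: average weighted by the unit's publication output in each sub-field.
overall = sum(Fraction(len(unit[v]), n_unit) * per_subfield[v] for v in unit)
print(overall)  # 2/5
```

Because each sub-field uses its own threshold θ_vj, no prior normalization of raw citations is needed. The aggregation across sub-fields is carried entirely by the output-weighted average in the last step.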

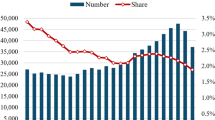

Using an additively decomposable citation inequality index, Ruiz-Castillo and Waltman (2015) decompose the overall citation inequality into within-group and between-group citation inequality terms. These authors then present evidence that the percentage represented by the between-group term increases along a sequence of twelve independent classification systems. In each of these systems, the same set of publications is assigned to an increasing number of clusters according to a publication-level algorithmic methodology.
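The decomposition can be illustrated with the Theil index, a standard additively decomposable inequality measure (Cowell 2000). This note does not state whether it is the exact index used by Ruiz-Castillo and Waltman (2015), so the choice of index, the function names, and the toy data below should all be treated as assumptions.

```python
import math


def theil(values):
    """Theil index T = (1/N) * sum (x/mu) * ln(x/mu); zero terms contribute 0."""
    n = len(values)
    mu = sum(values) / n
    return sum((x / mu) * math.log(x / mu) for x in values if x > 0) / n


def decompose(groups):
    """Split T of the pooled distribution into within- and between-group terms.

    Assumes every group has a positive mean number of citations.
    """
    pooled = [x for g in groups for x in g]
    n, mu = len(pooled), sum(pooled) / len(pooled)
    within = 0.0
    between = 0.0
    for g in groups:
        n_g, mu_g = len(g), sum(g) / len(g)
        share = (n_g * mu_g) / (n * mu)  # group's share of all citations
        within += share * theil(g)
        between += (n_g / n) * (mu_g / mu) * math.log(mu_g / mu)
    return theil(pooled), within, between


groups = [[0, 1, 2, 3], [5, 10, 15, 30]]  # made-up citation counts in two clusters
total, within, between = decompose(groups)
assert math.isclose(total, within + between)
print(f"between-group share: {100 * between / total:.1f} %")
```

The between-group term is the inequality that would remain if every article received its cluster's mean citations. Its share of the total is the kind of percentage the note refers to.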

The significant effect of field-normalization is illustrated in Figure A in the SMS, which shows how I(π) changes with π both before and after the standard field-normalization.

The situation is illustrated in Figure B in the SMS, showing the histogram of the distribution of these ratios for the 5119 clusters in three cases: (1) the overall citation distribution \( \varvec{C} \), where articles from all clusters are ordered according to their raw citations prior to the application of any field-normalization procedure; (2) the normalized distribution \( \varvec{C}^{*} \); and (3) the restriction of \( \varvec{C}^{*} \) to the 3332 clusters with more than 250 publications.

As pointed out by Waltman et al. (2012b), since university value distributions are somewhat skewed, an increase in the rank of a university by, say, 10 positions is much more significant at the top of the ranking than further down the list. Therefore, a statement such as “University u is performing 20 % better than university v according to the top 10 % indicator” is more informative than a statement such as “University u is ranked 20 positions higher than university v according to the top 10 % indicator.”
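The arithmetic behind this point can be sketched with a hypothetical, moderately skewed set of indicator values, assuming that the university at rank r has value r^(-1/2). Under this assumption, the same 10-position gap corresponds to a very large relative difference near the top and a small one further down. The value model and the function names are illustrative assumptions, not Leiden Ranking data.

```python
def values_by_rank(n, alpha=0.5):
    """Hypothetical skewed indicator values: value at rank r is r ** -alpha."""
    return [r ** -alpha for r in range(1, n + 1)]


def relative_gap(values, rank, step=10):
    """Percentage by which the university at `rank` outperforms the one `step` places lower."""
    better = values[rank - 1]
    worse = values[rank - 1 + step]
    return 100 * (better / worse - 1)


values = values_by_rank(500)
top_gap = relative_gap(values, 1)      # rank 1 vs rank 11
lower_gap = relative_gap(values, 400)  # rank 400 vs rank 410
print(f"{top_gap:.1f} % vs {lower_gap:.1f} %")  # 231.7 % vs 1.2 %
```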

References

Albarrán, P., Crespo, J., Ortuño, I., & Ruiz-Castillo, J. (2011a). The skewness of science in 219 sub-fields and a number of aggregates. Scientometrics, 88, 385–397.

Albarrán, P., Ortuño, I., & Ruiz-Castillo, J. (2011b). The measurement of low- and high-impact in citation distributions: Technical results. Journal of Informetrics, 5, 48–63.

Bornmann, L., De Moya Anegón, F., & Leydesdorff, L. (2012). The new excellence indicator in the world report of the SCImago Institutions Rankings 2011. Journal of Informetrics, 6, 333–335.

Brzezinski, M. (2015). Power laws in citation distributions: Evidence from Scopus. Scientometrics, 103, 213–228.

Cowell, F. (2000). Measurement of inequality. In A. B. Atkinson & F. Bourguignon (Eds.), Handbook of income distribution (Vol. 1, pp. 87–166). Amsterdam: Elsevier.

Crespo, J. A., Herranz, N., Li, Y., & Ruiz-Castillo, J. (2014). The effect on citation inequality of differences in citation practices at the Web of Science subject category level. Journal of the American Society for Information Science and Technology, 65, 1244–1256.

Crespo, J. A., Li, Y., & Ruiz-Castillo, J. (2013). The measurement of the effect on citation inequality of differences in citation practices across scientific fields. PLoS ONE, 8, e58727.

Foster, J. E., Greer, J., & Thorbecke, E. (1984). A class of decomposable poverty measures. Econometrica, 52, 761–766.

Foster, J. E., & Shorrocks, A. (1991). Subgroup consistent poverty indices. Econometrica, 59, 687–709.

Groeneveld, R. A., & Meeden, G. (1984). Measuring skewness and kurtosis. The Statistician, 33, 391–399.

Li, Y., Castellano, C., Radicchi, F., & Ruiz-Castillo, J. (2013). Quantitative evaluation of alternative field normalization procedures. Journal of Informetrics, 7, 746–755.

Perianes-Rodriguez, A., & Ruiz-Castillo, J. (2015a). An alternative to field-normalization in the aggregation of heterogeneous scientific fields. In A. Ali Salah, Y. Tonta, A. A. Akdag Salah, C. Sugimoto & U. Al (Eds.), Proceedings of ISSI 2015 Istanbul: 15th International Society of Scientometrics and Informetrics conference (pp. 294–304), Istanbul, Turkey, 29 June–3 July, 2015. Istanbul: Bogaziçi University.

Perianes-Rodriguez, A., & Ruiz-Castillo, J. (2015b). Multiplicative versus fractional counting methods for co-authored publications. The case of the 500 universities in the Leiden Ranking. Journal of Informetrics, 9, 917–989.

Perianes-Rodriguez, A., & Ruiz-Castillo, J. (2015c). University citation distributions. Journal of the American Society for Information Science and Technology. doi:10.1002/asi.23619.

Radicchi, F., & Castellano, C. (2012). A reverse engineering approach to the suppression of citation biases reveals universal properties of citation distributions. PLoS ONE, 7, e33833.

Radicchi, F., Fortunato, S., & Castellano, C. (2008). Universality of citation distributions: Toward an objective measure of scientific impact. PNAS, 105, 17268–17272.

Ruiz-Castillo, J. (2014). The comparison of classification-system-based normalization procedures with source normalization alternatives in Waltman and Van Eck. Journal of Informetrics, 8, 25–28.

Ruiz-Castillo, J., & Waltman, L. (2015). Field-normalized citation impact indicators using algorithmically constructed classification systems of science. Journal of Informetrics, 9, 102–117.

Schubert, A., Glänzel, W., & Braun, T. (1987). Subject field characteristic citation scores and scales for assessing research performance. Scientometrics, 12, 267–292.

Thelwall, M., & Wilson, P. (2014). Distributions for cited articles from individual subjects and years. Journal of Informetrics, 8, 824–839.

Waltman, L., Calero-Medina, C., Kosten, J., Noyons, E. C. M., Tijssen, R. J. W., Van Eck, N. J., et al. (2012a). The Leiden Ranking 2011/2012: Data collection, indicators, and interpretation. Journal of the American Society for Information Science and Technology, 63, 2419–2432.

Waltman, L., & Schreiber, M. (2013). On the calculation of percentile-based bibliometric indicators. Journal of the American Society for Information Science and Technology, 64, 372–379.

Waltman, L., & Van Eck, N. J. (2012). A new methodology for constructing a publication-level classification system of science. Journal of the American Society for Information Science and Technology, 63, 2378–2392.

Waltman, L., & Van Eck, N. J. (2013). A systematic empirical comparison of different approaches for normalizing citation impact indicators. Journal of Informetrics, 7, 833–849.

Waltman, L., Van Eck, N. J., & Van Raan, A. F. J. (2012b). Universality of citation distributions revisited. Journal of the American Society for Information Science and Technology, 63, 72–77.

Acknowledgments

This research project builds on earlier work started by Javier Ruiz-Castillo during a research visit to the Centre for Science and Technology Studies (CWTS) of Leiden University. The authors gratefully acknowledge CWTS for the use of its data. Ruiz-Castillo acknowledges financial support from the Spanish MEC through Grant ECO2011-29762. Conversations with Antonio Villar, Pedro Albarrán, and Ludo Waltman are deeply appreciated. Two referee reports greatly contributed to an improvement of the original version of the paper. All remaining shortcomings are the authors’ sole responsibility.


Cite this article

Perianes-Rodriguez, A., Ruiz-Castillo, J. A comparison of two ways of evaluating research units working in different scientific fields. Scientometrics 106, 539–561 (2016). https://doi.org/10.1007/s11192-015-1801-5


DOI: https://doi.org/10.1007/s11192-015-1801-5