Abstract

Does exposure to cognitive load affect key properties of economic behavior? In this experiment, subjects face a series of simple binary decision tasks between prospects, testing for monotonicity in monetary payments, consistency with (first-order) stochastic dominance, reduction of compound lotteries, risk attitudes, and ambiguity attitudes. Cognitive load is manipulated via simultaneous memory tasks. Our data show treatment differences resulting from cognitive load for decision tasks with risky outcomes. However, cognitive load has no impact on monotonicity and ambiguity attitudes. Under a dual-process view of human decision-making, our findings suggest that ambiguity attitudes and preferences for “more certain money” are intuitive, not reasoned.

1 Introduction

This paper presents evidence from an experiment featuring choice situations under uncertainty, including risk (known probabilities) and ambiguity (unknown probabilities), with and without exposure to cognitive load. The experimental data show that cognitive load has different effects on behavior depending on whether subjects face a decision task that involves the comparison of prospects returning certain, risky, or ambiguous monetary payments.

Our design implements simple binary decision tasks that elicit subjects’ ambiguity attitudes, risk aversion, as well as their adherence to monotonicity in certain monetary payments, first-order stochastic dominance, and reduction of compound lotteries.

Subjects make decisions in an environment without additional cognitive load (treatment Zero), with low cognitive load (treatment Low), and with high cognitive load (treatment High). Cognitive load is induced via a memory task that runs simultaneously with each decision task.

The main findings are that monotonicity in monetary payments and ambiguity attitudes are not affected by cognitive load. Both the key idea of “more money is better”, which underlies the standard assumption of an increasing utility function in economic jargon, and the preference for prospects with known probabilities of outcomes, known as ambiguity aversion (Ellsberg, 1961), seem to be governed by intuitive processes in the human mind, not deliberative ones. The latter processes heavily draw on working memory as a cognitive resource available for decision-making (Evans & Stanovich, 2013).

Cognitive load does not interfere with subjects’ behavior when outcomes are certain or ambiguous. However, this is different for tasks involving risky payments. For these, we find differences in risk aversion, adherence to stochastic dominance, and reduction of compound lotteries between the treatments that occupied subjects’ working memory by manipulating their cognitive load and the treatment that did not. In particular, the negative association we observe between cognitive load on the one hand and consistency with stochastic dominance and reduction of compound lotteries on the other hand is especially pronounced for subjects who display high cognitive abilities, suggesting the use of deliberation by such individuals when deciding under risk.Footnote 1

To explain our main findings, we employ a dual-process model. By adapting the assumption in Evans and Stanovich (2013) that both memorization and deliberative processes make use of a scarce cognitive resource, working memory, while intuitive processes do not, we can classify the decision tasks of our experiment by their “complexity”. Subjects would always like to invoke deliberation to solve complex tasks, but can only do so if sufficient working memory is available. This is less likely in treatments Low and especially High. If the task is simple enough to be solved without deliberation or if cognitive load is present, intuitive processes will be used to make a choice. Thus, a dual-process model would envision the use of deliberative processes only in complex tasks without cognitive load. This distinction identifies the tasks for which we find treatment differences and those for which we do not.

The next section provides an overview of the related literature. Section 3 lays out the behavioral properties we are interested in. Section 4 describes the experimental design and implementation. Section 5 presents our experimental results. Section 6 uses a dual-process theory to derive a behavioral explanation of the main findings, and Section 7 concludes.

2 Related experimental studies

In this section, we provide an overview of the related literature on behavior in relation to cognitive load. Cognitive load refers to the taxation of cognitive resources available for decision-making and is distinct from cognitive abilities, which refer to a decision maker’s general intelligence. Our main focus is on studies that directly manipulate cognitive load via an additional task to be solved. There is a growing body of literature that explores in what domains of economic decision-making, and how, exposure to cognitive load affects behavior.

Economists and psychologists have shown that cognitive load affects, among others, math skills, logical reasoning, generosity, food choice, impatience, fairness, and strategic behavior.Footnote 2 Deck and Jahedi (2015) provide a comprehensive survey of this literature. However, only a few studies have explored how cognitive load affects behavior under uncertainty. The existing experiments focus primarily on choice under risk and, in particular, on risk-taking behavior.

With regard to risk preferences under cognitive load, the results are rather inconclusive. While some studies have shown that cognitive load increases risk aversion in some tasks (Benjamin et al., 2013; Deck & Jahedi, 2015; Deck et al., 2021; Gerhardt et al., 2016), others have reported that risk aversion decreases (Blaywais & Rosenboim, 2019), or is not affected at all (Drichoutis & Nayga, 2020; Olschewski et al., 2019).Footnote 3 These studies indicate that the statistical significance of the reported effects depends on two factors. First, the domain of payoffs plays an important role; i.e., whether decision tasks contain lotteries that return gains (positive payoffs) or losses (negative payoffs). Second, the lotteries being compared seem to matter for statistical significance too; i.e., whether decision tasks involve comparison of a safe option (paying a certain amount of money for sure) and a risky one, or two risky options. We call the former tasks safe-risky tasks and the latter ones risky-risky tasks. Below we summarize the existing studies on risk preferences under cognitive load in more detail.

The first experiment that links cognitive load and risk-taking behavior goes back to Benjamin et al. (2013). In this experimental study, cognitive load was induced via an unpaid memory task (a sequence of 7 digits). Risk preferences were elicited for two types of binary decision tasks, safe-risky tasks and risky-risky tasks. The authors found that cognitive load increased risk aversion in both types of task. However, this effect is statistically significant for their safe-risky tasks but insignificant for their risky-risky tasks.

Deck and Jahedi (2015) studied risk preferences in different environments. Each subject faced two types of safe-risky tasks. One task contained lotteries framed in the domain of gains and the other one compared lotteries framed in losses.Footnote 4 Cognitive load was manipulated via a memory task (1 digit and 8 digit numbers). Overall, the authors found more risk-averse choices in their high-load treatment, regardless of whether subjects faced gains or losses. However, this effect is significant for the tasks with gains but not for the ones with losses.

Gerhardt et al. (2016) implemented a different method to induce cognitive load than the studies mentioned above. In their load treatment, subjects were asked to memorize an arrangement of dots displayed in a matrix. Subjects faced a series of safe-risky and risky-risky tasks, all framed in gains. The authors found more risk-averse choices in both tasks under cognitive load. However, this effect is significant only for their safe-risky tasks.Footnote 5

Using different tasks, Blaywais and Rosenboim (2019) investigated risk attitudes under cognitive load when opportunity costs are present. Their tasks included pricing of lotteries, with and without an alternative payment, and choosing between these lotteries. Subjects faced a memory task (an 8 digit number). Contrary to the previous studies, the authors found significantly less risk aversion under cognitive load. Furthermore, subjects displayed a tendency to ignore opportunity costs, which was significantly stronger under cognitive load.

In a methodological study, Deck et al. (2021) asked whether the four commonly used techniques for manipulating cognitive load in experimental research (i.e., a number memorization task, a visual pattern task, an auditory recall (n-back) task, and time pressure) have similar effects on risk preferences, among others.Footnote 6 In all load-technique treatments, subjects faced a risk task, which was to select a safe option or a fifty-fifty lottery over two positive payments. The authors found that risk aversion significantly increased, and this effect remained unchanged for all techniques used to manipulate cognitive load.

Other studies in the literature found no effects of cognitive load on risk-taking behavior. Guillemette et al. (2014) studied loss aversion in relation to cognitive load. Each safe-risky task compared lotteries returning either only positive payoffs, only negative payoffs, or both. Cognitive load was induced via unpaid memory tasks (a 2 digit or 7 digit number). The authors could not find any significant changes in loss aversion.

Drichoutis and Nayga (2020) studied effects of cognitive load on “rational” preferences, i.e., consistency of choices with utility maximization. Subjects performed a series of budget allocation tasks, each one bracketed by a memory task (a 1 digit or 8 digit number). The authors did not find any evidence that cognitive load impacts violations of the key postulate of revealed choice theory: the Generalized Axiom of Revealed Preferences. Moreover, by applying auxiliary techniques, the authors measured risk attitudes and adherence to stochastic dominance. Yet neither the former nor the latter property was affected by cognitive load.

In another methodological study, Olschewski et al. (2019) asked whether cognitive load leads to systematic changes in preferences and whether it is driven by random choice. Subjects faced a series of safe-risky tasks framed in gains. A sub-sample of subjects performed an auditory (3-back) task in parallel, which induced cognitive load. First, the authors did not find any significant effect on risk attitudes. Second, by estimating the standard information criterion (WAIC) for various parametric specifications of (non)expected utilities, the authors concluded that changes in risk preferences were not driven by systematic changes in subjects’ preferences, but instead by randomness.

To our knowledge, cognitive load in relation to behavior under ambiguity has not been explored yet. The aim of the current experiment is to fill this gap.

3 Behavioral assumptions

The goal of our experiment is to explore how cognitive load affects key properties of economic behavior. We focus on behavioral assumptions that play a central role for economists. Economic theories identify key behavioral properties, which in conjunction with other structural assumptions are sufficient, and sometimes even necessary, to account for an economic phenomenon in question. To make such theories applicable to real-life decision problems, it is important to assess how the key properties are related to different levels of cognitive load to which a decision maker (henceforth, DM), e.g., an investor, politician, policy adviser, physician, or entrepreneur, may be exposed when making decisions under uncertainty.

As usual, we describe a DM’s behavior by preferences over prospects, which may be safe, risky, or ambiguous. When outcomes are monetary payments, a safe prospect pays a monetary amount for sure. A risky prospect (lottery) returns payments according to known probabilities. In contrast, an ambiguous prospect does not specify probabilities of payments.

The first behavioral property we aim to test is Monotonicity. A DM displays a monotonic preference in monetary payments if she prefers a safe prospect paying a larger amount of money to another safe prospect paying strictly less. Monotonicity is a key behavioral assumption in almost all areas of economics that involve monetary outcomes (e.g., financial economics, auctions, and principal-agent models) or monetary incentive schemes (e.g., mechanism design and incentivized experiments).

The second property is called Stochastic Dominance. A DM is said to adhere to stochastic dominance if she prefers a lottery that first-order stochastically dominates the alternative lottery. That is, the DM prefers a lottery whose cumulative distribution is to the right of that of the alternative lottery. Many theories of choice satisfy this property, including expected utility and many generalizations thereof, such as rank-dependent utility (Quiggin, 1982) and cumulative prospect theory (Tversky & Kahneman, 1992). Since it is a risk attitude-free criterion, Stochastic Dominance is often used in finance for investments, portfolio choice, and options evaluation.

The third property is Risk Aversion. A DM is risk averse if she prefers the safe prospect paying a lottery’s expected value to the lottery itself. Risk aversion is ubiquitous in economics and finance. It is a key behavioral property used to explain a plethora of economic phenomena in areas of insurance, auctions, investments, portfolio choice, principal-agent relationships, and many more.

The fourth property is called Reduction. A DM adheres to reduction of compound lotteries if she is indifferent between a two-stage (compound) lottery and its corresponding reduced form. Reduction is a central assumption in theories of choice under risk and uncertainty (Samuelson, 1952; Anscombe & Aumann, 1963), including models of inter-temporal choice.Footnote 7 In the latter models, reduction of compound lotteries implies time neutrality.

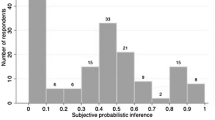

The last property that we are interested in is Ambiguity Attitude, which may be ambiguity aversion, love, or neutrality. A DM is ambiguity neutral if she is probabilistically sophisticated. Such a DM treats ambiguity as if it were risk by formulating a subjective probability distribution that is used to evaluate (ambiguous) prospects as lotteries (Machina & Schmeidler, 1992). Probabilistic sophistication entails subjective expected utility (Savage, 1954), but also allows for non-linear preferences in probabilities. Its relevance for economics is unequivocal: “It [...] has allowed for the application of a tremendous number of results from probability theory, and it is hard to imagine where the theory of games, the theory of search, or the theory of auctions would be without it” (Machina & Schmeidler, 1992, p.746).

Since Ellsberg (1961), economists have acknowledged that some individuals violate probabilistic sophistication by being either ambiguity averse or ambiguity loving. A DM is ambiguity averse if she prefers any (strict) convex mixture of two prospects between which she is indifferent to either of them (Gilboa & Schmeidler, 1989). Ambiguity love is the opposite. There is no single probability distribution that can rationalize such preferences.

Ambiguity has been successfully applied to explain economic phenomena in various areas, e.g., diversification (Dow & Werlang, 1992), incomplete contracts (Mukerji, 1998), public goods provision (Eichberger & Kelsey, 2002), or behavioral game theory (Eichberger & Kelsey, 2011). Some economic phenomena, such as speculative trade, are precluded in economies consisting of probabilistically sophisticated agents, and are feasible if and only if ambiguity (and non-neutral attitudes towards it) are present (Dominiak & Lefort, 2015).

4 Experimental design

In this section, we describe the experimental design, including decision tasks and treatments.

The experiment consists of three between-subject treatments with varying levels of cognitive load, labeled Zero, Low, and High. In each treatment, subjects carry out six decision tasks: Ambiguity 1, Ambiguity 2, Reduction, Risk, Dominance, and Monotonicity. These decision tasks implement choice problems in different environments. Ambiguity 1 and 2 capture ambiguity. Reduction, Risk, and Dominance represent risk. Monotonicity represents a situation without uncertainty. The tasks are described in the next sub-section.

4.1 Decision tasks

All decision tasks consist of a choice between exactly two prospects: Option A and Option B. We implement simple binary tasks to avoid creating extra cognitive load from additional prospects. In all tasks except Monotonicity, prospects are binary (two payments), and phrased in terms of draws from opaque bags.Footnote 8 Each bag contains 100 marbles, where each marble is either blue or green. Bags with unknown composition of marbles represent ambiguity, while bags with known distributions capture risk. One decision task or cognitive load task (see Section 4.2) is randomly selected for payment for each subject.

Ambiguity 1

In this task, subjects have to decide between the following two options: A) A draw from a bag with 100 marbles, of which 50 are blue and 50 are green. Subjects are paid 10 EURO if the drawn marble is blue, and otherwise 0 EURO. B) A draw from a bag with 100 blue and green marbles in unknown proportion. Subjects are paid 10 EURO if the drawn marble is blue, and otherwise 0 EURO.Footnote 9

Ambiguity 2

Subjects have to decide between the following options: A) A draw from a bag with 100 marbles, of which 50 are blue and 50 are green. Subjects are paid 10 EURO if the drawn marble is green, and otherwise 0 EURO. B) A draw from a bag with 100 blue and green marbles in unknown proportion. Subjects are paid 10 EURO if the drawn marble is green, and otherwise 0 EURO. The same ambiguous bag is used for draws in both ambiguity tasks.

Together, the two ambiguity tasks implement the 2-color experiment of Ellsberg (1961), and are from now on called Ambiguity. Subjects who choose the risky option, A, in both ambiguity tasks, reveal Ambiguity Aversion, while subjects who twice choose the ambiguous option, B, display Ambiguity Love. Subjects who prefer A in one and B in the other task reveal Ambiguity Neutrality, thus behaving consistently with probabilistic sophistication.
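
The classification rule above can be written out as a short sketch. The function name and the string labels are illustrative choices, not part of the experimental software.

```python
def classify_ambiguity_attitude(choice_task1: str, choice_task2: str) -> str:
    """Map the choices in Ambiguity 1 and Ambiguity 2 to an attitude.

    'A' denotes the risky 50/50 bag, 'B' the bag with unknown composition.
    The labels 'averse', 'loving', and 'neutral' are illustrative names.
    """
    choices = (choice_task1, choice_task2)
    if any(c not in ("A", "B") for c in choices):
        raise ValueError("each choice must be 'A' or 'B'")
    if choices == ("A", "A"):
        return "averse"   # risky bag chosen in both tasks
    if choices == ("B", "B"):
        return "loving"   # ambiguous bag chosen in both tasks
    return "neutral"      # one choice of each: consistent with a single prior


print(classify_ambiguity_attitude("A", "B"))
```

A subject who bets on blue from the ambiguous bag and on green from the risky bag (or vice versa) can be rationalized by a single subjective probability for blue, which is why mixed choices are read as neutrality.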

Reduction

In this task, subjects have to decide between the following two options: A) A draw from a bag with 100 marbles, 25 of which are blue and 75 of which are green. Subjects are paid 20 EURO if the drawn marble is blue, and otherwise 0 EURO. B) Two draws (with replacement) from a bag with 100 marbles, 50 of which are blue and 50 of which are green. Subjects are paid 20 EURO if two blue marbles are drawn, and otherwise 0 EURO. To break indifference, subjects are paid an additional 0.10 EURO for choice B regardless of the draws.

Option A is a simple lottery, whereas Option B represents a compound (two-stage) lottery. The compound lottery returns 20 EURO with a \(25\%\) chance and nothing with a \(75\%\) chance. The simple lottery, A, is the reduced form of B. Subjects who adhere to Reduction choose B because of the additional payment it offers as compared to A; otherwise, Reduction fails.
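
The equivalence of the two options can be verified with a few lines of exact arithmetic. This is a minimal sketch; the helper name is hypothetical.

```python
from fractions import Fraction


def prob_all_blue(blue: int, total: int, draws: int) -> Fraction:
    """Probability that every one of `draws` draws with replacement is blue."""
    return Fraction(blue, total) ** draws


# Option A: one draw from a bag with 25 blue marbles out of 100.
p_simple = prob_all_blue(25, 100, draws=1)
# Option B: two draws with replacement from a bag with 50 blue out of 100.
p_compound = prob_all_blue(50, 100, draws=2)

print(p_simple, p_compound)
```

Both probabilities equal 1/4, so the two options differ only by the additional 0.10 EURO attached to B.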

Risk

Subjects have to decide between the two prospects: A) A draw from a bag with 100 marbles, 50 of which are blue and 50 of which are green. Subjects are paid 10 EURO if the drawn marble is blue, and otherwise 0 EURO. B) A draw from a bag with 100 blue marbles. Subjects are paid 5 EURO if the drawn marble is blue, and otherwise 0 EURO. To break indifference, subjects are paid an additional 0.10 EURO for A regardless of the draw.Footnote 10

The safe option, B, pays the expected value of the risky option, A. Hence, subjects who choose B reveal Risk Aversion, otherwise subjects are either risk neutral or risk loving.Footnote 11
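
As a small check of the statement that B pays the expected value of A, the following sketch computes both values exactly. The list-of-pairs representation of a lottery is an illustrative choice.

```python
from fractions import Fraction


def expected_value(lottery):
    """Expected payment of a lottery given as (probability, payment) pairs."""
    return sum(p * x for p, x in lottery)


risky_a = [(Fraction(1, 2), Fraction(10)), (Fraction(1, 2), Fraction(0))]
safe_b = [(Fraction(1), Fraction(5))]
bonus_a = Fraction(10, 100)  # additional 0.10 EURO paid for choosing A

ev_a = expected_value(risky_a)
ev_b = expected_value(safe_b)
ev_a_with_bonus = ev_a + bonus_a

print(ev_a, ev_b, ev_a_with_bonus)
```

Without the bonus, both options have an expected payment of 5 EURO. With it, A pays 5.10 EURO in expectation, so a risk-neutral subject would pick A and only a sufficiently risk-averse subject picks B.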

Dominance

In this task, subjects are asked to choose between the following two prospects: A) A draw from a bag with 100 marbles, 50 of which are blue and 50 of which are green. Subjects are paid 5 EURO if the drawn marble is blue and 10 EURO if the drawn marble is green. B) A draw from a bag with 100 marbles, 25 of which are blue and 75 of which are green. Subjects are paid 5 EURO if the drawn marble is blue and 10 EURO if the drawn marble is green. In addition, 0.10 EURO are paid in choice B regardless of the draw.

Both lotteries return 5 EURO or 10 EURO, but with different probabilities. Option A offers equally likely payments. Option B pays 10 EURO with a 75% chance and thus (first-order) stochastically dominates A. For this reason, subjects who choose the dominant lottery, B, satisfy Stochastic Dominance; otherwise, they violate Stochastic Dominance.
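
The dominance relation can be checked directly from the cumulative distributions. The sketch below uses the textbook definition of first-order stochastic dominance and ignores the additional 0.10 EURO paid for B, which would only strengthen the relation. Function names are illustrative.

```python
from fractions import Fraction


def cdf(lottery, x):
    """P(payment <= x) for a lottery given as {payment: probability}."""
    return sum(p for payment, p in lottery.items() if payment <= x)


def first_order_dominates(f, g):
    """True if f dominates g: F(x) <= G(x) at every x, strictly at some x."""
    support = sorted(set(f) | set(g))
    gaps = [cdf(g, x) - cdf(f, x) for x in support]
    return all(gap >= 0 for gap in gaps) and any(gap > 0 for gap in gaps)


option_a = {5: Fraction(1, 2), 10: Fraction(1, 2)}
option_b = {5: Fraction(1, 4), 10: Fraction(3, 4)}

print(first_order_dominates(option_b, option_a))
print(first_order_dominates(option_a, option_b))
```

At a payment of 5 EURO the cumulative probability is 1/4 for B and 1/2 for A, and both reach 1 at 10 EURO, so B dominates A and not the other way round.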

Monotonicity

In this task, subjects have to decide between Option A, which pays 5 EURO plus an additional 0.10 EURO, and Option B, which pays 5 EURO. Both payments are certain. Since A pays more than B, subjects who choose A satisfy Monotonicity; otherwise, they violate it.

4.2 Cognitive load treatments

At sign-up, subjects were randomly allocated to one of three cognitive-load treatments, Zero, Low, or High. In treatment Zero, subjects complete the six decision tasks previously described. In treatments Low and High, subjects are additionally exposed to cognitive load.

Memory

In treatments Low and High, each decision task is accompanied by a simultaneous memory task. Each memory task consists of keeping in memory a 2 digit (in Low) or 6 digit (in High) number.Footnote 12 The randomly drawn number is presented in the initial OUT (output/memorization) stage and has to be entered in the IN (input/reproduction) stage. That is, each decision task is preceded by the OUT stage and followed by the IN stage of the corresponding memory task. Subjects had a time limit of 10 s in the OUT stage. However, there was no time limit in the IN stage, nor for any of the six decision tasks. A correctly entered number earns 5 EURO if that memory task was selected for final payment. The variable Memory encodes the number of correctly recalled numbers.
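
The memory task and the Memory variable can be sketched as follows. This is not the oTree implementation used in the experiment. In particular, drawing numbers uniformly and excluding leading zeros are assumptions made here for illustration, and all names are hypothetical.

```python
import random

# Number of digits to memorize in each load treatment (from the design).
DIGITS = {"Low": 2, "High": 6}


def draw_number(treatment: str, rng: random.Random) -> int:
    """Draw the number shown in the OUT stage.

    Assumption: uniform draw with no leading zero, so the number always
    has exactly DIGITS[treatment] digits.
    """
    n = DIGITS[treatment]
    return rng.randrange(10 ** (n - 1), 10 ** n)


def memory_score(shown, entered) -> int:
    """Memory variable: count of numbers reproduced correctly in the IN stage."""
    return sum(s == e for s, e in zip(shown, entered))


rng = random.Random(2019)  # arbitrary seed for reproducibility
shown = [draw_number("High", rng) for _ in range(6)]
entered = list(shown)
entered[0] += 1  # simulate one recall error
print(memory_score(shown, entered))
```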

In treatments Low and High, subjects are paid for one task that is randomly determined among the six decision tasks and the six memory tasks. In Zero, subjects are paid for one randomly determined decision task. For these tasks, a subject volunteer conducted random draws from physical bags filled with marbles at the end of each treatment.
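
The payment rule can be illustrated with a short sketch. The text states only that the paid task is randomly determined; the uniform draw below is an assumption, and the names are hypothetical.

```python
import random

DECISION_TASKS = [
    "Ambiguity 1", "Ambiguity 2", "Reduction",
    "Risk", "Dominance", "Monotonicity",
]


def payment_pool(treatment: str):
    """Tasks eligible for payment in a given treatment."""
    if treatment not in ("Zero", "Low", "High"):
        raise ValueError(f"unknown treatment: {treatment}")
    pool = [("decision", task) for task in DECISION_TASKS]
    if treatment in ("Low", "High"):
        # One memory task accompanies each decision task.
        pool += [("memory", task) for task in DECISION_TASKS]
    return pool


def draw_paid_task(treatment: str, rng: random.Random):
    """Select the single task that is paid (assumed uniform over the pool)."""
    return rng.choice(payment_pool(treatment))


rng = random.Random(7)  # arbitrary seed
print(draw_paid_task("Zero", rng))
print(draw_paid_task("High", rng))
```

Under the uniform assumption, each decision task is paid with probability 1/6 in Zero and 1/12 in Low and High.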

To check for order effects, we conducted each treatment by implementing the decision tasks in the order presented in Table 1, and in the reverse order (i.e., Monotonicity, Dominance, Risk, Reduction, Ambiguity 2, Ambiguity 1). Dewitte et al. (2005) find that the effects of cognitive load persist even after the load task has ended. For this reason, we use a between-subject design with a low number of overall decision tasks. If the effect of cognitive load is cumulative across tasks, it should affect the last tasks of the design more than the first tasks. However, we do not find any evidence for order effects (see Section 5.1).

4.3 Cognitive ability tasks

The cognitive load stage (i.e., decision tasks, plus memory tasks in treatments Low and High) is followed by an unpaid demographics questionnaire. The questionnaire asks for age, gender, field of study, whether the subjects have taken part in a statistics course, whether the subjects consider themselves good at multitasking, and whether they have previously taken part in an experiment with random draws. Afterwards, the subjects complete cognitive ability tasks. These consist of three paid tasks without a memory task. First, they have five minutes to solve a math task (MATH), which was to answer five calculus and statistics questions. Then, subjects have five minutes to answer the three questions of the Cognitive Reflection Test (CRT) by Frederick (2005) and the Wason Selection Task (WST) by Wason (1968). In total, subjects have to answer nine questions. For each correct answer, 0.20 EURO are added to the payoff from the cognitive load stage. In addition to these payments, each subject receives a show-up fee of 5 EURO.

4.4 Implementation

In an ex-ante power calculation, we arrived at a necessary treatment size of 85 subjects.Footnote 13 In total 259 subjects took part: 89 in treatment Zero, 85 in treatment Low, and 85 in treatment High. All 19 sessions took place in AWILab at University of Heidelberg between July and December 2019. Recruitment was handled with hroot (Bock et al., 2014) and the experiment was programmed in oTree (Chen et al., 2016).

Use of mobile phones was not allowed during the entire experiment. Neither was the use of pens allowed during the first part. The tasks of the cognitive load stage together with their instructions were computerized. After this part, the experimenters asked for a volunteer to conduct all necessary physical draws (using marbles in cotton bags that represented the urns depicted in Table 1, with replacement after draws) for the first part. The volunteer conducted draws for all options of all decision tasks. A random draw then selected, for each subject, one out of the twelve total decision and cognitive load tasks that would be paid.Footnote 14 Afterwards, subjects were handed printouts for the calculus and statistics questions, CRT, and WST. Subjects were not allowed to go back to any previous task.

At the end of the experiment, subjects were paid in cash and in private. The total payment consisted of the show-up fee, the payment for one randomly drawn decision task or cognitive load task, and the payments for correctly answered calculus and statistics questions and for correctly solved questions in CRT and WST. The average payout was 12.25 EURO.

5 Experimental results

5.1 Preliminaries

As a first step, we check whether our randomization of subjects worked, whether our cognitive load intervention worked, and whether there are order effects.

Randomization

All subjects were asked for their gender, whether they had taken part in a university statistics course, whether they were generally good at multitasking, and whether they had previously taken part in an experiment using random draws. Figure 1 shows the subjects’ answers by treatment. Using Fisher Exact Tests (FET), our subjects differed significantly across treatments only in the rate of having participated in a statistics course (FET, \(p=0.003\), 259 obs.). Fewer subjects in the Zero treatment reported this than in the Low and High treatments. Subjects also had to answer our cognitive ability tasks, MATH, CRT, and WST (see Table 2). These tasks were incentivized, but outside of our treatment intervention (Section 4). Using FETs, there is no significant difference across treatments for any of the cognitive ability tasks.

Order effects

To check for order effects, we split all treatments into sessions Normal and Reverse. Normal uses the order in which decision tasks are described in Section 4 and summarized in Table 1. In Reverse, the order was reversed; i.e., Monotonicity, Dominance, Risk, Reduction, Ambiguity 2, and Ambiguity 1. In total, 131 subjects took part in Normal and 128 subjects in Reverse. FETs comparing both orders show that there is no significant difference in the outcomes of the decision tasks Dominance, Risk, Reduction, Ambiguity 1, and Ambiguity 2. Nor do the FETs show significant differences between Normal and Reverse for the number of correct answers to our memory tasks in the cognitive load stage (this comparison covers only Low and High, since there is no intervention in Zero). The only difference we found that is significant, at the \(10\%\) level, is for the decision task Monotonicity (FET, two-sided, \(p=0.099\), 259 obs.), with more violations in the reverse order.

Intervention

Did the harder memory task lead to more mistakes by subjects in treatment High compared to treatment Low? It is possible that someone who memorizes in High has more capacity and is not limited by the memory task. We compare the number of correct memory answers, that is, the number of correctly remembered numbers, in both treatments (see Table 2). We find that subjects in Low are significantly better at remembering their numbers (Wilcoxon rank-sum exact test, two-sided, \(p=0.007\), 170 obs.).

5.2 Main results

Out of the measures taken under cognitive load, the 259 subjects in the full sample most often respect Monotonicity; 92.7% do, while only 7.3% violate this property. 79.2% adhere to Stochastic Dominance and 62.2% to Reduction (of compound lotteries). Furthermore, 69.9% of subjects in the full sample reveal Risk Aversion, and 62.9% display Ambiguity Aversion (while 20.8% reveal Ambiguity Neutrality, and 16.2% Ambiguity Love). Table 2 shows the results split by treatment.

The main research question is whether there are treatment differences in subjects’ behavior under cognitive load for the decision tasks presented in Table 1. To answer this question, we test all treatment pairs (Zero-Low, Zero-High, and Low-High) via FETs. There are never any significant differences in any treatment pair for subjects’ adherence to Monotonicity and their Ambiguity Attitudes, i.e., whether they are ambiguity averse, ambiguity loving, or probabilistically sophisticated.

Result 1

Monotonicity and Ambiguity Attitudes are not affected by cognitive load.

For the remaining three decision tasks in the presence of risk, treatment differences exist. Subjects violate Stochastic Dominance significantly more often in treatment High than in treatment Zero (FET, two-sided, \(p=0.005\), 174 obs.). The impact of cognitive load on Reduction is weakly significant. In particular, failures to reduce compound lotteries occur more often in treatment High than in Low (FET, two-sided, \(p=0.056\), 170 obs.). In our Risk task, subjects tend to be less risk averse in Low than in Zero (FET, two-sided, \(p=0.031\), 174 obs.) and in High compared to Zero (FET, two-sided, \(p=0.091\), 174 obs.).

Result 2

Treatment differences exist for adherence to Stochastic Dominance and Reduction, and for Risk Aversion.
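
For readers unfamiliar with the test used for these pairwise comparisons, the following is a minimal sketch of a two-sided Fisher exact test for a 2x2 table (for example, treatment by adherence). It uses the common convention of summing the probabilities of all tables that are at most as likely as the observed one; whether the reported p-values follow exactly this convention is an assumption. The counts in the example are purely illustrative and are not data from the experiment. Comparisons of the three ambiguity attitudes would require a larger table, which this sketch does not cover.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell,
        # holding all margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lo, hi = max(0, col1 - row2), min(row1, col1)
    p_obs = prob(a)
    # Sum over all tables that are no more likely than the observed one;
    # the small tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lo, hi + 1)
               if prob(x) <= p_obs * (1 + 1e-7))


# Illustrative counts only (not taken from the experiment).
p_value = fisher_exact_two_sided(3, 1, 1, 3)
print(round(p_value, 4))
```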

Next, we conduct two robustness checks. First, we exclude subjects who violate Monotonicity. Second, we exclude subjects who fail at least one memory task. That is, we exclude all subjects for whom we cannot guarantee that working memory was actually devoted to the memory task (subjects may have endogenously decided to focus only on the binary decision task and to not do the memory task).Footnote 15 The above results for adherence to Stochastic Dominance and Reduction are confirmed. These results remain significant at the same p-level or even become stronger. However, the results on Risk Aversion seem to be driven by subjects who violate Monotonicity or fail one of the memory tasks. When we exclude either of these groups of subjects from the sample, the above association between risk-taking behavior and cognitive load becomes weaker or even insignificant.

Result 3

The effects of cognitive load on Risk Aversion are driven by subjects who violate Monotonicity in monetary payments or fail one of the memory tasks.

It is informative to revisit the above results conditional on subjects’ performance in our cognitive ability tasks. To this end, we divide the full sample of subjects into high-ability and low-ability subjects. As it turns out, significant treatment differences depend mainly on the behavior of high-ability subjects. We define the variable (cognitive) Ability as the sum of correct answers in our three incentivized tasks, i.e., MATH, CRT, and WST (see Table 2). All of these measures were taken after the cognitive load stage (see Section 4.3).

High-ability subjects are those who answered five or more of the nine questions correctly. Low-ability subjects are those who answered four or fewer questions correctly. For our Dominance task, both the Zero-High (FET, two-sided, \(p=0.013\), 117 obs.) and the Zero-Low (FET, two-sided, \(p=0.066\), 118 obs.) treatments are significantly different for high-ability subjects, yet neither treatment pair is significantly different at the \(10\%\) level for low-ability subjects. An even stronger picture emerges for the Reduction task. While we only find a weakly significant result for Low-High when testing the full subject pool, both Zero-High (FET, two-sided, \(p=0.037\), 117 obs.) and Low-High (FET, two-sided, \(p=0.007\), 113 obs.) are significant for high-ability subjects. For low-ability subjects, the Low-High difference is not significant and the Zero-High difference only weakly so (FET, two-sided, \(p=0.065\), 57 obs.). For the Risk task, no treatment pair is significant at the \(5\%\) level for either sub-sample. However, the Zero-High difference stays weakly significant for high-ability subjects (FET, two-sided, \(p=0.067\), 117 obs.). The next result summarizes the above findings.

Result 4

The effects of cognitive load on adherence to Stochastic Dominance and Reduction are stronger for high-ability subjects.

Deck et al. (2021) make a similar observation. They find that performance in their CRT strongly predicts which individuals are affected by cognitive load. Those who scored above the median in the CRT are affected more strongly under cognitive load.Footnote 16

Next, we check for the interaction of the above results with the measures without cognitive load taken in the experiment and the demographics data in Probit-regressions. Table 3 reports on the results of Probit-regressions on the following measurements of interest: Ambiguity Aversion, Ambiguity Neutrality, Monotonicity, Reduction, Dominance, and Risk Aversion. We added the following explanatory variables in addition to treatment dummies: Age, Multitask (an affirmative answer to the question about being able to multitask), Experiment (participation in a previous experiment that involved an urn-task and draws), and our Ability variable.
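For concreteness, one way to write such a Probit specification is shown below. This is a sketch under the assumptions that treatment Zero is the omitted baseline category and that all regressors enter linearly. The coefficient symbols \(\beta_k\) are notation introduced here, and Table 3 remains the authoritative source for the exact specification.

\[
\Pr(Y_i = 1 \mid x_i) = \Phi\bigl(\beta_0 + \beta_1\,\text{Low}_i + \beta_2\,\text{High}_i + \beta_3\,\text{Age}_i + \beta_4\,\text{Multitask}_i + \beta_5\,\text{Experiment}_i + \beta_6\,\text{Ability}_i\bigr)
\]

Here \(Y_i\) is the binary measurement of interest for subject \(i\) (e.g., adherence to Dominance) and \(\Phi\) denotes the standard normal distribution function.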

We find that subjects’ cognitive ability significantly correlates with preference for higher monetary payments and with fewer violations of reduction of compound lotteries and stochastic dominance. However, cognitive ability does not predict whether subjects are averse or neutral towards ambiguity, or whether they are risk averse. The Probit-regressions in specifications (1)-(6) omit the variables Male and Statistics. These two variables are highly correlated with subjects’ cognitive ability (see specification (7) in Table 3).

Result 5

High ability correlates with fewer violations of Monotonicity, Reduction, and Stochastic Dominance, but not with Ambiguity Aversion, Ambiguity Neutrality, or Risk Aversion.

Whether subjects successfully remembered the sequence of numbers (2 or 6 digits, depending on treatment) they faced during the cognitive-load tasks is coded in the variable Memory. It measures the total number of numbers correctly recalled by a subject. Table 4 presents the relationship between Memory and the results of our six decision tasks, which are summarized in the variables Ambiguity Aversion, Ambiguity Neutrality, Monotonicity, Reduction, Dominance, and Risk Aversion. None of the tasks is significant at the 5% level, showing no association between the subjects’ behavior in our decision tasks and their performance in the memory task. There is thus no evidence that subjects endogenously decided to avoid cognitive load by not engaging in both tasks (see Footnote 12).

Finally, and unsurprisingly, subjects facing the harder memory task in treatment High recall their number significantly less often. Subjects who score well in our ability questions, conducted after the decision tasks, recall their number more often than subjects who fare badly. None of our demographic control variables is significant.Footnote 17

6 Behavioral interpretation

Our experimental results show that only some properties of preferences are affected by cognitive load, while others are not. Which properties are affected depends on the nature of the decision task. Monotonicity and Ambiguity Attitudes remain intact as cognitive load increases. In contrast, cognitive load (restricted working memory) interferes with behavior in risky environments, i.e., with adherence to Stochastic Dominance, Reduction, and Risk Aversion. In this section, we provide a possible explanation for these findings.

6.1 Dual-process paradigm

The impact of cognitive load on economic decision-making is often interpreted through the lens of dual-process theories (Rustichini, 2008; Alós-Ferrer & Strack, 2014).

The dual-process paradigm assumes that decision-making (and cognition more generally) involves the interplay of two fundamentally distinct types of cognitive processes in the human mind (Stanovich, 1999, 2011; Evans, 2010; Kahneman, 2011; Evans & Stanovich, 2013).Footnote 18 Type 1 processes do not require any working memory. In contrast, Type 2 processes do require working memory.Footnote 19 The reliance/non-reliance on working memory is the crucial distinction for our purposes.

Since working memory is limited, it must be selectively allocated among the tasks at hand. This is the rationale for our cognitive load intervention (memorizing a number). Type 2 processes tackling a decision task and a memory task compete for the same scarce resource: working memory. For instance, if a DM keeps in mind the displayed number, it occupies her working memory and less thereof is available to determine a choice. This may lead to a Type 1 process making the choice.

In terms of their attributes, Type 1 processes are intuitive, effortless, and often based on experience or heuristics. Such processes encompass evolutionarily adaptive responses, the automatic firing of overlearned associations, and general cognitive processes related to implicit learning and conditioning (Evans & Stanovich, 2013). In contrast, Type 2 processes are deliberate, cognitively taxing and strongly correlate with a person’s cognitive abilities (general intelligence). Specifically, decoupling processes must be continually in force during any mental simulation, making them heavily taxing on working memory (Stanovich & Toplak, 2012). This is, in a nutshell, the essence of the dual-process paradigm of human decision-making.Footnote 20

Another key feature of dual-process theories is the interplay of the two types of processes. Under the common view of “default interventionism”, Type 1 and Type 2 processes operate sequentially (Kahneman & Frederick, 2002). That is, Type 1 processes instantly generate an intuitive, default response. Type 2 processes may then intervene and override this response by deliberate reasoning, provided that a sufficient amount of working memory is available. According to Evans and Stanovich (2013, p. 237): “Default interventionism allows that most of our behavior is controlled by Type 1 processes running in the background. Thus, most behavior will accord with defaults, and intervention will occur only when difficulty, novelty, and motivation combine to command the resources of working memory.” Building on this view of human (or cognitive) behavior, in the next section, we suggest a behavioral framework that explains our main experimental findings.

6.2 Task complexity

To account for the treatment differences reported in Section 5.2, we analyze the six decision tasks through the lens of dual-process theories. More precisely, our goal is to establish a relationship between a decision task and the two types of mental processes (Type 1 and Type 2). That is, what type is most likely to generate a choice in each of our treatments? Since the deliberate Type 2 processes heavily depend on working memory resources but the intuitive Type 1 processes do not, it is useful to look at each task from the point of view of how “cognitively demanding” or “complex” it is for a DM to tackle a decision task. Each such task involves a comparison of two binary prospects. To decide which one is favored, subjects need to somehow evaluate both options and then compare the valuations.

Prospects may be evaluated in different ways, depending on the underlying behavior. For instance, a DM may multiply utilities for outcomes with the respective probabilities, which are known or subjective, and aggregate them. For a single prior, such a calculation results in a unique expected utility. However, a DM may consider many priors, necessitating calculations of multiple expected utilities for each prospect (Gilboa & Schmeidler, 1989).
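In symbols, and as a sketch using notation introduced here for illustration only: let a binary prospect \(f\) pay \(x_1\) with probability \(p\) and \(x_2\) otherwise, let \(u\) be a utility function, and let \(C\) be a set of priors. The single-prior evaluation and the maxmin evaluation of Gilboa and Schmeidler (1989) then read

\[
EU_p(f) = p\,u(x_1) + (1-p)\,u(x_2), \qquad V(f) = \min_{p \in C} EU_p(f).
\]

If \(C\) contains a single prior, the two evaluations coincide. With several priors, the DM in principle has to consider one expected utility per prior before taking the minimum, which is what makes this mode of evaluation more demanding.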

In Table 5, we classify the decision tasks according to their complexity. While such a classification is ad hoc, it can be thought of as a measure of the basic mathematical operation involved in the evaluations of the binary decision task a DM faces.Footnote 21 Our Monotonicity task, which does not involve uncertainty, has a low complexity. However, our risky tasks (i.e., Reduction, Risk, and Dominance) feature a moderate complexity.

The last column of Table 5 roughly summarizes the experimental results. We find treatment differences for those decision tasks whose complexity would indicate that a Type 2 process is used when cognitive load is absent, but a Type 1 process when cognitive load is present. Meanwhile, we do not find treatment differences for those tasks which would be solved via a Type 1 process even in the absence of cognitive load.

Tasks of low complexity are so trivial that subjects always make a choice based on a Type 1 process. Since these tasks demand no calculation, or were solved enough times in the past, Type 2 processes do not intervene to override the default response. Therefore, the low complexity tasks are solved by an intuitive Type 1 process regardless of whether the DM is exposed to cognitive load or not. For decision tasks of moderate complexity, however, a Type 2 process would intervene. Yet, when cognitive load is present, working memory is already taxed. As a consequence, the intervention by a Type 2 process can fail due to a lack of cognitive capacity. In other words, for moderately complex tasks, the type of process used to evaluate such tasks can differ between treatments Zero, Low, and High.

How complex our Ambiguity task is depends on how subjects evaluate ambiguous prospects. Subjects may refrain from any calculations and heuristically prefer risky prospects over ambiguous ones, revealing ambiguity aversion. Since this requires no analytic reasoning, the evaluation of each ambiguity task would involve an intuitive Type 1 process. On the other hand, a Type 2 process might intervene to evaluate ambiguity via multiple expected utilities (Gilboa & Schmeidler, 1989) or some more sophisticated models (Klibanoff et al., 2005). However, these models are likely too demanding for our subjects to handle. For this reason, a deliberate Type 2 process attempting to come up with an evaluation would fail to achieve a solution, leading subjects to fall back on their intuitive Type 1 decisions.
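The logic of this subsection can be illustrated with a toy model. The Python sketch below is a deliberately stylized reading of default interventionism, not an estimated or calibrated model. The function names, the numeric "working memory units", and the example demands are all hypothetical and chosen only to reproduce the qualitative pattern described above.

```python
def deciding_process(task_demand, load, capacity=10, trivial_demand=1):
    """Return which process type generates the choice in this toy model.

    All quantities are hypothetical units of working memory.
    """
    if task_demand <= trivial_demand:
        # Low complexity: the default Type 1 response is never overridden.
        return "Type 1"
    if task_demand <= capacity - load:
        # Enough free working memory: a Type 2 process intervenes.
        return "Type 2"
    # The intervention fails, so the DM falls back on the Type 1 response.
    return "Type 1"


def predicts_treatment_difference(task_demand, loads=(0, 3, 8)):
    """True if the deciding process differs across the given load levels."""
    return len({deciding_process(task_demand, load) for load in loads}) > 1


# Hypothetical demands: Monotonicity (low), a risky task (moderate), and
# Ambiguity evaluated via multiple priors (too demanding even without load).
for name, demand in [("Monotonicity", 1), ("Risky task", 5), ("Ambiguity", 20)]:
    print(name, predicts_treatment_difference(demand))
```

Under these assumed numbers, only the moderately demanding task switches from a Type 2 to a Type 1 process as load increases, which corresponds to the pattern of treatment differences summarized in Table 5.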

6.3 Remarks

Some important remarks are in order. First, our approach does not make any assumption about the exact nature of the intuitive Type 1 process involved in any of the tasks. As Evans and Stanovich (2013) emphasize, Type 1 cognition is best understood as a collection of mental processes, which share the feature of not requiring working memory, but may otherwise be very different from each other. The identification of treatment differences in Table 5 only requires that, if a Type 1 process makes a choice under exposure to cognitive load, the same choice is arrived at without load. Then, treatment differences can be attributed to a difference between the decisions suggested by the Type 2 and Type 1 processes.

Second, our complexity notion allows us to establish a relationship that specifies, conditional on the amount of cognitive load, the type of cognitive processes (Type 1 or Type 2) that may generate a choice. It is worth noting that our complexity notion differs from an alternative measure suggested in the experimental literature, which explores how “relative complexity” between two lotteries of a binary decision task affects choice under risk (but without introducing cognitive load). In Huck and Weizsäcker (1999), Sonsino et al. (2002), and Zilker et al. (2020), the complexity of a lottery (and thus, a decision task) is defined as the number of outcomes the lottery returns, i.e., the more outcomes a lottery has, the more complex it is. However, for each decision task except the Monotonicity task, we described and presented both prospects to the subjects as two-outcome options. This includes the safe option in the Risk task. Therefore, the relative complexity notion ranks five of our decision tasks (Ambiguity 1, Ambiguity 2, Reduction, Risk, and Dominance) as equally complex. Only our Monotonicity task is less complex than each of the other tasks. The different results for Ambiguity, on the one hand, and Reduction, Risk, and Dominance, on the other hand, cannot be explained via relative complexity of the outcomes.

Third, the above-mentioned experimental studies report that, when the safe option of a safe-risky task is explicitly presented as a one-outcome option, choices are skewed towards the safe option in safe-risky tasks as compared to the choice distributions in risky-risky tasks. These preferences for a safe option have been attributed to subjects’ “complexity aversion”. Zilker et al. (2020) show that this is due to presenting the safe option as less complex and that the effect disappears if the safe option is modeled with two outcomes. We present the safe option as a lottery with two outcomes (with a degenerate distribution). This is similar to the risky option, which presents two outcomes as a lottery with equal probabilities. Since we use an equal number of outcomes for the safe and the risky option, this complexity aversion should not govern our subjects’ choices.Footnote 22

As our last remark, note that the predictions of Table 5 differ from those of a random choice model, which would predict a move towards a fifty-fifty distribution under cognitive load in the binary decision tasks. Since all choice frequencies in Table 2 are far away from a fifty-fifty distribution, a model assuming that cognitive load increases randomness could explain our Results 2 and 3, but not Result 1.
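To see why, consider a simple formalization, which is an illustrative assumption introduced here and not a model estimated in this paper. Suppose that under cognitive load a subject chooses at random with probability \(\varepsilon\) and otherwise chooses as without load. If a share \(q\) of subjects picks a given option without load, the share under load is

\[
q' = (1-\varepsilon)\,q + \tfrac{\varepsilon}{2}, \qquad \text{so that} \qquad \bigl|q' - \tfrac{1}{2}\bigr| = (1-\varepsilon)\,\bigl|q - \tfrac{1}{2}\bigr|.
\]

Any \(\varepsilon > 0\) therefore moves choice frequencies that start away from one half closer to one half in every task, including the tasks for which no treatment difference is observed.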

7 Conclusion

The reported experimental study has identified properties of economic behavior that are affected by cognitive load and those that are not. Adherence to (first-order) stochastic dominance, reduction of compound lotteries, and risk aversion can be conditional on the amount of cognitive load. However, monotonicity and ambiguity attitudes are not affected. This suggests that two of the key properties of behavior that are broadly studied in the economic literature, “more is better” and ambiguity attitudes, are intuitive, not deliberate.

Why do these results matter? When creating predictive models, economists should prefer behavioral assumptions that are inherent to the agent of the model and invariant. It is important to recognize which behavioral properties are invariant, and which are not. Put differently, a predictive model is not reliable if the underlying behavior is unstable and changes with environmental conditions not included in the model itself. Specifically, agents should reveal inherent preferences in the same way with and without cognitive load. Yet some of the key properties we tested are sensitive to changes in cognitive load. This suggests that these behavioral properties are either unstable, e.g., because agents reveal them through deliberation, or the elicitation method used is not appropriate, e.g., because its complexity forces agents to deliberate about choices. In either case, economists should be careful. If subjects have to deliberate about the choices they like best, are they actually revealing their ranking of the top choices or their capacity to determine them? On the other hand, we find that both monotonicity and ambiguity aversion are not affected by cognitive load. Thus, these properties are likely to be “hard-wired” components of behavior that will thus make suitable behavioral assumptions for predictive models.

In light of our results, researchers should pay increasing attention to possible inferences produced by experimental elicitation mechanisms. For many very commonly elicited preferences, e.g., risk aversion, a plethora of elicitation methods exists. These methods differ widely in their complexity, from multiple lottery lists (Holt & Laury, 2002) to simple verbal questions (Dohmen et al., 2011). Additionally, the way incentives are set (e.g., pay one randomly vs. pay all) and the general structure of the experiment may or may not add complexity. Elicited results may depend on said complexity and the interplay between the elicitation method and the incentivization. It may prove helpful to experimentally test the cognitive load induced by elicitation methods in the framework of a dual-process model in future research.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Notes

Interactive behavior has been intensively studied, showing that cognitive load increases generosity in dictator games (Schulz et al., 2014); reduces trust in a trust game (Samson & Kostyszyn, 2015); impairs consistency with backward induction strategies (Carpenter et al., 2013); impairs strategic sophistication in a beauty contest game (Allred et al., 2016; Zhao, 2020). However, cognitive load has shown no significant effects on strategic behavior in an ultimatum game (Cappelletti et al., 2011) and in repeated prisoner’s dilemma games (Duffy & Smith, 2014), but decreases cooperation and punishment in a prisoner’s dilemma game with a punishment option (Mieth et al., 2021).

There is another strand of literature that investigates risk preferences in relation to cognitive abilities. A number of studies have shown that cognitive ability tends to be negatively related to risk aversion in the domain of gains (Burks et al., 2009; Dohmen et al., 2010; Benjamin et al., 2013) and positively related in the domain of losses (Frederick, 2005; Burks et al., 2009). Other studies have reported no significant association (Tymula et al., 2012) or that cognitive ability induces random choice (Andersson et al., 2016). A detailed synopsis of this literature can be found in Dohmen et al. (2018). More recently, Choi et al. (2022) studied the relation between probability weighting (i.e., non-expected utility under risk) and cognitive ability. The authors found that subjects with a lower cognitive score responded “less discriminately to intermediate probabilities and more over-sensitively to extreme probabilities.”

More precisely, their safe-risky task, framed as gains, compared a safe option paying g and a fifty-fifty lottery paying \((2g+g)\) or nothing, where g was an integer drawn from a uniform distribution on \(\{8,\ldots , 15 \}\).

It should be remarked that their subjects had to make choices within a time frame of 6.5 seconds. Thus, there may have been overlapping cognitive load effects. It is known that time pressure is another channel to limit cognitive resources and furthermore, there is empirical evidence that time pressure does indeed significantly increase risk aversion (Kirchler et al., 2017).

Besides affecting risk preferences, all of the four cognitive load manipulation techniques led to poorer performance in math problems, logical problems, and allocation choices.

Reduction of compound lotteries was used in early formulations of expected utility as a justification for the Independence Axiom. However, Segal (1990) clarified that both assumptions are independent.

For instructions, see Appendix A.

Note that we do not add 0.10 EURO to one option in the two Ambiguity tasks to break indifference, like we do for the other tasks. The reason is that we always break indifference in favor of the most “conservative” behavior; i.e., adhering to Stochastic Dominance, Reduction, and being risk neutral. Since Ambiguity Attitudes are constructed from two tasks and there are two different choice patterns that lead to the conservative classification of Ambiguity Attitudes (ambiguity neutral subjects can either choose AB or BA), breaking indifference in favor of ambiguity neutrality is not possible here.

Our results are conservative with respect to subjects who might have been attracted by the 10 cents: selecting A could be consistent with very mild risk aversion. A decision rule of “choose the option which pays an extra 10 cents” works against finding violations of Reduction, Risk Aversion, and Stochastic Dominance.

As mentioned in Section 2, there are experimental findings showing that exposure to cognitive load may enhance risk aversion. However, how strong the effects are depends on the type of risky task. In our setup, we elicit risk attitudes via a binary choice between the risky lottery (Option A) and the safe lottery (Option B). We deliberately implemented a binary choice instead of a more involved multiple lottery method (Holt & Laury, 2002). While the multiple lottery method allows for a finer specification of subjects’ risk aversion, it is more complicated. Since our overall aim was to keep the cognitive load from the tasks’ presentation comparable across tasks, we opted for a simpler binary choice to test risk aversion.

Deck et al. (2021) test four different methods of inducing cognitive load and find that using a memory task is very similar to an auditory 3-back task. They use 8 digits and find that only 47.9% of subjects correctly recall, suggesting that subjects determine their effort in the memory task endogenously. Other experiments in the literature with 8 digits also report high failure rates to recall. We wanted to ensure that a larger share (ideally all) of subjects actively engage in memorization and thus are under cognitive load. Therefore, we lower the number of digits to 6, leading to a correct recall rate of 89.8%. For the same reason, we pay for the memory task.

Power calculation for one-sided Fisher Exact Test with \(\alpha =0.05\), power of 0.8, \(p_1=0.4\), and \(p_2=0.6\).

In Zero this randomization only ran over the six decision tasks.

We also test for the specific memory task which was combined with the decision task. Excluding subjects who fail this memory task leads to qualitatively similar results.

As a robustness check, we run the above subgroup analysis again, using high and low CRT score instead of high and low Ability. We find the same pattern for Reduction (the treatment differences are driven by high CRT score subjects), but not for Stochastic Dominance. In our experiment, the CRT score also predicts how well subjects do in the memory task. Testing OLS-regression (9), specified in Table 4, separately for CRT, WST, and MATH, instead of the Ability sum, reveals that the result is mainly driven by the CRT score.

When testing the individual correlations between single tasks and whether subjects correctly recall the number during this task, only being risk averse in the reverse order treatment is significantly correlated at the 5% level with not remembering the number, while no other combination is significant.

Such processes involve hypothetical thinking, mental simulations and cognitive decoupling. Hypothetical thinking and mental simulations crucially rely on the mechanism of cognitive decoupling: “the ability to distinguish supposition from belief and to aid rational choices by running thought experiments” (Evans & Stanovich, 2013, p.236). Decoupling processes enable “abstract” thinking by creating mental copies of the real world, which are used in mental simulations without being confused with the real world.

Many variants of dual-process theories have been suggested in the economics and psychology literature. In economics, the most popular are “dual-self” models, with the prototypical “planner-doer” model introduced by Thaler and Shefrin (1981). The idea is to divide a DM into a patient self (“planner”) who seeks to maximize lifetime utility and a myopic self (“doer”) who is interested in immediate gratification, generating a conflict between the DM’s “selves”. Fudenberg and Levine (2006, 2011) developed a rigorous model that solves this conflict as a sequential game. In the first stage, the patient self chooses a costly self-control action that affects the utility of the myopic self. In the second stage, the myopic self observes the action, and makes a choice. The dual-self model can explain a variety of behavioral phenomena, e.g., preference reversals for delayed rewards, risk aversion in the large and small, and even some effects of cognitive load on behavior.

In economics, the term “complexity” has been used in various contexts. To our knowledge, Payne (1976) and Bettman et al. (1990) are the first who related the “cognitive effort” required to execute a decision strategy to complexity of a choice problem, measured by the number of alternatives and attributes. Wilcox (1993), on the other hand, views complexity of a task as a “decision cost” that can affect economic behavior.

The study by Benjamin et al. (2013), which finds an effect of cognitive load on risk aversion different from our results, uses a one-outcome option for the safe option in the safe-risky tasks. As such, subjects could be driven to the safe option (be risk averse) due to complexity aversion. In contrast, in their risky-risky task, both options consist of two outcomes. Because of this symmetry, subjects should not be complexity averse and correspondingly, the authors find that the effect of cognitive load on risk aversion becomes insignificant.

References

Allred, S., Duffy, S., & Smith, J. (2016). Cognitive load and strategic sophistication. Journal of Economic Behavior & Organization, 125, 162–178.

Alós-Ferrer, C., & Strack, F. (2014). From dual processes to multiple selves: Implications for economic behavior. Journal of Economic Psychology, 41, 1–11.

Andersson, O., Holm, H. J., Tyran, J.-R., & Wengström, E. (2016). Risk aversion relates to cognitive ability: preferences or noise? Journal of the European Economic Association, 14, 1129–1154.

Anscombe, F., & Aumann, R. (1963). A definition of subjective probability. Annals of Mathematical Statistics, 34, 199–205.

Benjamin, D. J., Brown, S. A., & Shapiro, J. M. (2013). Who is ‘behavioral?’ cognitive ability and anomalous preferences. Journal of the European Economic Association, 11, 1231–1255.

Bettman, J., Johnson, E., & Payne, J. (1990). A componential analysis of cognitive effort in choice. Organizational Behavior and Human Decision Processes, 45, 111–139.

Blaywais, R., & Rosenboim, M. (2019). The effect of cognitive load on economic decisions. Managerial and Decision Economics, 40, 993–999.

Bock, O., Baetge, I., & Nicklisch, A. (2014). hroot: Hamburg registration and organization online tool. European Economic Review, 71, 117–120.

Burks, S. V., Carpenter, J. P., Goette, L., & Rustichini, A. (2009). Cognitive skills affect economic preferences, strategic behavior, and job attachment. Proceedings of the National Academy of Sciences, 106, 7745–7750.

Cappelletti, D., Güth, W., & Ploner, M. (2011). Being of two minds: Ultimatum offers under cognitive constraints. Journal of Economic Psychology, 32, 940–950.

Carpenter, J., Graham, M., & Wolf, J. (2013). Cognitive ability and strategic sophistication. Games and Economic Behavior, 80, 115–130.

Chen, D. L., Schonger, M., & Wickens, C. (2016). oTree—An open-source platform for laboratory, online, and field experiments. Journal of Behavioral and Experimental Finance, 9, 88–97.

Choi, S., Kim, J., Lee, E., & Lee, J. (2022). Probability weighting and cognitive ability. Management Science, 68, 5201–5215.

Deck, C., & Jahedi, S. (2015). The effect of cognitive load on economic decision making: A survey and new experiments. European Economic Review, 78, 97–119.

Deck, C., Jahedi, S., & Sheremeta, R. (2021). On the consistency of cognitive load. European Economic Review, 134, 103695.

Dewitte, S., Pandelaere, M., Briers, B., & Warlop, L. (2005). Cognitive load has negative after effects on consumer decision making. Available at SSRN 813684.

Dohmen, T., Falk, A., Huffman, D., & Sunde, U. (2010). Are risk aversion and impatience related to cognitive ability? American Economic Review, 100, 1238–1260.

Dohmen, T., Falk, A., Huffman, D., & Sunde, U. (2018). On the relationship between cognitive ability and risk preference. Journal of Economic Perspectives, 32, 115–134.

Dohmen, T., Falk, A., Huffman, D., Sunde, U., Schupp, J., & Wagner, G. G. (2011). Individual risk attitudes: Measurement, determinants, and behavioral consequences. Journal of the European Economic Association, 9, 522–550.

Dominiak, A., & Lefort, J.-P. (2015). Agreeing to disagree type results under ambiguity. Journal of Mathematical Economics, 61, 119–129.

Dow, J., & Werlang, S. R. C. (1992). Uncertainty aversion, risk aversion, and the optimal choice of portfolio. Econometrica, 60, 197–204.

Drichoutis, A. C., & Nayga, R. M. (2020). Economic rationality under cognitive load. The Economic Journal, 130, 2382–2409.

Duffy, S., & Smith, J. (2014). Cognitive load in the multi-player prisoner’s dilemma game: Are there brains in games? Journal of Behavioral and Experimental Economics, 51, 47–56.

Eichberger, J., & Kelsey, D. (2002). Strategic complements, substitutes, and ambiguity: The implications for public goods. Journal of Economic Theory, 106, 436–466.

Eichberger, J., & Kelsey, D. (2011). Are the treasures of game theory ambiguous? Economic Theory, 48, 313–339.

Ellsberg, D. (1961). Risk, ambiguity, and the savage axioms. Quarterly Journal of Economics, 75, 643–669.

Evans, J. S. B. T. (2010). Thinking twice: Two minds in one brain. New York: Oxford University Press.

Evans, J. S. B. T., & Stanovich, K. E. (2013). Dual-process theories of higher cognition: Advancing the debate. Perspectives on Psychological Science, 8, 223–241.

Frederick, S. (2005). Cognitive reflection and decision making. Journal of Economic Perspectives, 19, 24–42.

Fudenberg, D., & Levine, D. K. (2006). A dual-self model of impulse control. The American Economic Review, 96, 1449–1476.

Fudenberg, D., & Levine, D. K. (2011). Risk, delay, and convex self-control costs. American Economic Journal: Microeconomics, 3, 34–68.

Gerhardt, H., Biele, G., Heekeren, H., & Uhlig, H. (2016). Cognitive load increases risk aversion. SFB 649 Discussion Paper No. 2016-011.

Gilboa, I., & Schmeidler, D. (1989). Maxmin expected utility with non-unique prior. Journal of Mathematical Economics, 18, 141–153.

Guillemette, M., James, R. N., & Larsen, J. T. (2014). Loss aversion under cognitive load. Journal of Personal Finance, 13, 72–81.

Holt, C., & Laury, S. (2002). Risk aversion and incentive effects. American Economic Review, 92, 1644–1655.

Huck, S., & Weizsäcker, G. (1999). Risk, complexity, and deviations from expected-value maximization: Results of a lottery choice experiment. Journal of Economic Psychology, 20, 699–715.

Kahneman, D. (2011). Thinking, fast and slow. New York: Farrar, Straus and Giroux.

Kahneman, D., & Frederick, S. (2002). Representativeness revisited: Attribute substitution in intuitive judgment. In T. Gilovich, D. Griffin, & D. Kahneman (eds.), Heuristics and biases: The psychology of intuitive judgment. Cambridge: Cambridge University Press.

Kirchler, M., Andersson, D., Bonn, C., Johannesson, M., Sørensen, E., Stefan, M., Tinghög, G., & Västfjäll, D. (2017). The effect of fast and slow decisions on risk taking. Journal of Risk and Uncertainty, 54, 37–59.

Klibanoff, P., Marinacci, M., & Mukerji, S. (2005). A smooth model of decision making under ambiguity. Econometrica, 73, 1849–1892.

Machina, M., & Schmeidler, D. (1992). A more robust definition of subjective probability. Econometrica, 60, 745–780.

Mieth, L., Buchner, A., & Bell, R. (2021). Cognitive load decreases cooperation and moral punishment in a Prisoner’s Dilemma game with punishment option. Scientific Reports, 11, 1–12.

Mukerji, S. (1998). Ambiguity aversion and incompleteness of contractual form. American Economic Review, 88, 1207–1231.

Olschewski, S., Rieskamp, J., & Scheibehenne, B. (2019). Taxing cognitive capacities reduces choice consistency rather than preference: A model-based test. Journal of Experimental Psychology: General, 147, 462–484.

Payne, J. W. (1976). Task complexity and contingent processing in decision making: An information search and protocol analysis. Organizational Behavior and Human Performance, 16, 366–387.

Quiggin, J. (1982). A theory of anticipated utility. Journal of Economic Behavior & Organization, 3, 323–343.

Rustichini, A. (2008). Dual or unitary system? Two alternative models of decision making. Cognitive, Affective & Behavioral Neuroscience, 8, 355–362.

Samson, K., & Kostyszyn, P. (2015). Effects of cognitive load on trusting behavior—an experiment using the trust game. PLoS One, 10, 115–130.

Samuelson, P. A. (1952). Probability, utility, and the independence axiom. Econometrica, 20, 670–678.

Savage, L. J. (1954). The foundations of statistics. New York: Wiley.

Schulz, J. F., Fischbacher, U., Thöni, C., & Utikal, V. (2014). Affect and fairness: Dictator games under cognitive load. Journal of Economic Psychology, 41, 77–87.

Segal, U. (1990). Two-stage lotteries without the reduction axiom. Econometrica, 58, 349–377.

Sonsino, D., Benzion, U., & Mador, G. (2002). The complexity effects on choice with uncertainty—experimental evidence. The Economic Journal, 112, 936–965.

Stanovich, K. (2011). Rationality and the reflective mind. New York: Oxford University Press.

Stanovich, K., & Toplak, M. (2012). Defining features versus incidental correlates of Type 1 and Type 2 processing. Mind & Society, 11, 3–13.

Stanovich, K. E. (1999). Who is rational?: Studies of individual differences in reasoning. Mahwah, N.J.: Lawrence Erlbaum Associates.

Thaler, R. H., & Shefrin, H. M. (1981). An economic theory of self-control. Journal of Political Economy, 89, 392–406.

Tversky, A., & Kahneman, D. (1992). Advances in prospect theory: Cumulative representation of uncertainty. Journal of Risk and Uncertainty, 5, 297–323.

Tymula, A., Rosenberg Belmaker, L. A., Roy, A. K., Ruderman, L., Manson, K., Glimcher, P. W., & Levy, I. (2012). Adolescents’ risk-taking behavior is driven by tolerance to ambiguity. Proceedings of the National Academy of Sciences, 109, 17135–17140.

Wason, P. C. (1968). Reasoning about a rule. Quarterly Journal of Experimental Psychology, 20, 273–281.

Wilcox, N. T. (1993). Lottery choice: Incentives, complexity and decision time. The Economic Journal, 103, 1397–1417.

Zhao, W. (2020). Cost of reasoning and strategic sophistication. Games, 11, 40.

Zilker, V., Hertwig, R., & Pachur, T. (2020). Age differences in risk attitude are shaped by option complexity. Journal of Experimental Psychology: General, 149, 1644–1683.

Acknowledgements

We are very grateful to Andrzej Baranski, Cary Deck, Urs Fischbacher, Edi Karni, Marco Lambrecht, Nathaniel Neligh, Jörg Oechssler, Agnieszka Tymula, and Marie-Louise Vierø for their valuable comments and helpful discussions, and to an anonymous referee for helpful suggestions. We also wish to thank the participants of the 2022 World Economic Science Association Conference, the 2022 European Economic Science Association Conference, and the 2022 HeiKaMaX Conference. Financial support from the University of Mannheim and the University of Konstanz is gratefully acknowledged.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Appendix A. Instructions

This appendix includes translations of the original German instructions. Each subsection was presented on a different screen.

A.1 General instructions

Welcome to our experiment! Please turn off and put away your mobile phone. Read these instructions carefully. If you have any questions, raise your hand. An experimenter will then come to you.

Experiment

On the following screens, you will see different tasks. On some screens, you will have to make a decision between two alternatives, which can correspond to random draws from bags filled with marbles. On other screens, you will have to memorize a line of numbers. There are twelve tasks in total. At the end of the experiment, one of these tasks will be randomly selected for your payment. Random draws from bags will be conducted at the end of the experiment by one volunteer amongst the participants.

Payment

Your payment consists of three parts. First, the payment for the randomly selected task. Second, the payment for correctly answered questions; these questions will be distributed at the end of the experiment. Third, every participant receives 5 EURO for participating in the experiment. Your final payment is thus the sum of: payment for randomly selected task + payment for correct answers + 5 EURO participation fee.

A.2 Ambiguity 1

You have the choice between the following alternatives:

Option A:

There is a bag which is filled with 100 marbles. 50 marbles are blue and 50 are green.

You receive 10 EURO if the drawn marble is blue and 0 EURO if the drawn marble is green.

There will be a random draw (with replacement).

Option B:

There is a bag which is filled with 100 blue and green marbles. The share of blue and green marbles is unknown, however.

You receive 10 EURO if the drawn marble is blue and 0 EURO if the drawn marble is green.

There will be a random draw (with replacement).

A.3 Ambiguity 2

You have the choice between the following alternatives:

Option A:

There is a bag which is filled with 100 marbles. 50 marbles are blue and 50 are green.

You receive 0 EURO if the drawn marble is blue and 10 EURO if the drawn marble is green.

There will be a random draw (with replacement).

Option B:

There is a bag which is filled with 100 blue and green marbles. The share of blue and green marbles is unknown, however.

You receive 0 EURO if the drawn marble is blue and 10 EURO if the drawn marble is green.

There will be a random draw (with replacement). The marble will be drawn from the same bag that will also be used for decision 1.
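The logic behind pairing the two Ambiguity tasks can be illustrated with a minimal sketch, which is not part of the original instructions. It assumes, purely for illustration, a decision maker who holds a single subjective probability p that a marble drawn from the unknown bag is blue and who strictly prefers the option with the higher chance of winning 10 EURO. The function name `consistent_beliefs` and the grid of candidate beliefs are hypothetical.

```python
from fractions import Fraction


def consistent_beliefs(choice_1, choice_2, grid=101):
    """Return the beliefs p = P(blue | unknown bag) on a grid under which
    both strict choices ("A" or "B") maximize the chance of winning."""
    half = Fraction(1, 2)
    admissible = []
    for k in range(grid):
        p = Fraction(k, grid - 1)
        # Ambiguity 1: both options pay on blue (A wins w.p. 1/2, B w.p. p).
        ok_1 = half > p if choice_1 == "A" else p > half
        # Ambiguity 2: both options pay on green (A wins w.p. 1/2, B w.p. 1 - p).
        ok_2 = half > 1 - p if choice_2 == "A" else 1 - p > half
        if ok_1 and ok_2:
            admissible.append(p)
    return admissible


print(len(consistent_beliefs("A", "A")))  # no belief rationalizes A twice
print(len(consistent_beliefs("A", "B")))  # some beliefs rationalize a mixed pattern
```

Under these assumptions, strictly choosing Option A in both tasks (or Option B in both) leaves no admissible belief, which is the Ellsberg-type pattern. Indifference at p = 1/2 is excluded by the strictness assumption.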

A.4 Reduction

You have the choice between the following alternatives:

Option A:

There is a bag which is filled with 100 marbles. 25 marbles are blue and 75 green.

You receive 20 EURO if the drawn marble is blue and 0 EURO if the drawn marble is green.

There will be a random draw (with replacement).

Option B:

There is a bag which is filled with 100 marbles. 50 marbles are blue and 50 green.

You receive 20 EURO if a blue marble is drawn twice in a row and 0 EURO if a green marble is drawn at least once.

In addition, you receive 0.10 EURO regardless of the color of the drawn marble.

There will be two random draws (with replacement).
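As an illustration of what reduction of compound lotteries implies for this task, the following sketch (not part of the original instructions) enumerates the two draws in Option B and collapses them into a single-stage lottery. The function name `reduce_option_b` is hypothetical; the marble counts and payments are taken from the task description above.

```python
from fractions import Fraction
from itertools import product


def reduce_option_b():
    """Collapse the two draws (with replacement) of Option B into one lottery."""
    colors = {"blue": Fraction(50, 100), "green": Fraction(50, 100)}
    bonus = Fraction(1, 10)  # 0.10 EURO paid regardless of the draws
    lottery = {}
    for (c1, p1), (c2, p2) in product(colors.items(), repeat=2):
        # 20 EURO only if both draws are blue, otherwise 0 EURO.
        prize = Fraction(20) if (c1 == "blue" and c2 == "blue") else Fraction(0)
        payoff = prize + bonus
        lottery[payoff] = lottery.get(payoff, Fraction(0)) + p1 * p2
    return lottery


option_a = {Fraction(20): Fraction(25, 100), Fraction(0): Fraction(75, 100)}
option_b = reduce_option_b()
for payoff, prob in sorted(option_b.items()):
    print(float(payoff), prob)
```

The reduced Option B pays the 20 EURO prize with probability 1/4, the same as Option A, plus the additional 0.10 EURO. A subject who reduces the compound lottery and prefers more money would therefore be expected to choose Option B.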

A.5 Risk

You have the choice between the following alternatives:

Option A:

There is a bag which is filled with 100 marbles. 50 marbles are blue and 50 green.

You receive 20 EURO if the drawn marble is blue and 0 EURO if the drawn marble is green.

In addition, you receive 0.10 EURO regardless of the color of the drawn marble.

There will be a random draw (with replacement).

Option B:

There is a bag which is filled with 100 marbles. 100 marbles are blue and 0 green.

You receive 5 EURO if the drawn marble is blue and 0 EURO if the drawn marble is green.

There will be a random draw (with replacement).
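For orientation, the expected payments of the two options in the Risk task can be computed directly. The sketch below is an illustration and not part of the original instructions; the helper `expected_value` is a hypothetical name, and reading the choice through expected values alone is a simplifying assumption.

```python
from fractions import Fraction


def expected_value(lottery):
    """Expected payment of a lottery given as {payoff: probability}."""
    return sum(payoff * prob for payoff, prob in lottery.items())


bonus = Fraction(1, 10)  # 0.10 EURO paid regardless of the draw
# Option A: 20 EURO on blue (50 of 100 marbles), 0 EURO on green, plus bonus.
option_a = {Fraction(20) + bonus: Fraction(1, 2), Fraction(0) + bonus: Fraction(1, 2)}
# Option B: all 100 marbles are blue, so 5 EURO is paid for sure.
option_b = {Fraction(5): Fraction(1)}

ev_a = expected_value(option_a)
ev_b = expected_value(option_b)
print(float(ev_a), float(ev_b))
```

Option A has an expected payment of 10.10 EURO, while Option B pays 5 EURO with certainty, so choosing Option B gives up more than half of the expected payment in exchange for certainty.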

A.6 Dominance

You have the choice between the following alternatives:

Option A:

There is a bag which is filled with 100 marbles. 50 marbles are blue and 50 green.

You receive 5 EURO if the drawn marble is blue and 10 EURO if the drawn marble is green.

There will be a random draw (with replacement).

Option B:

There is a bag which is filled with 100 marbles. 25 marbles are blue and 75 green.

You receive 5 EURO if the drawn marble is blue and 10 EURO plus 0.10 EURO if the drawn marble is green.

There will be a random draw (with replacement).
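That Option B first-order stochastically dominates Option A in this task can be checked by comparing cumulative distribution functions. The following is a minimal sketch and not part of the original instructions; the function names `cdf` and `first_order_dominates` are hypothetical, and the lotteries are transcribed from the task description above.

```python
from fractions import Fraction


def cdf(lottery, x):
    """Probability that the lottery pays at most x."""
    return sum(prob for payoff, prob in lottery.items() if payoff <= x)


def first_order_dominates(f, g):
    """True if lottery f first-order stochastically dominates lottery g."""
    points = sorted(set(f) | set(g))
    weakly = all(cdf(f, x) <= cdf(g, x) for x in points)
    strictly = any(cdf(f, x) < cdf(g, x) for x in points)
    return weakly and strictly


# Option A: 5 EURO on blue (50 marbles), 10 EURO on green (50 marbles).
option_a = {Fraction(5): Fraction(1, 2), Fraction(10): Fraction(1, 2)}
# Option B: 5 EURO on blue (25 marbles), 10.10 EURO on green (75 marbles).
option_b = {Fraction(5): Fraction(1, 4), Fraction(101, 10): Fraction(3, 4)}

print(first_order_dominates(option_b, option_a))
print(first_order_dominates(option_a, option_b))
```

Option B assigns less probability to the low payment and pays more in the good outcome, so its cumulative distribution lies weakly below that of Option A at every payment level.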

A.7 Monotonicity

You have the choice between the following alternatives:

Option A:

You receive 5 EURO. In addition, you receive 0.10 EURO.

Option B:

You receive 5 EURO.

A.8 Cognitive load IN stage

The next screen will show you a line of numbers. Memorize those numbers. We will ask you later to enter these numbers back into the computer. The line of numbers will fade after 10 s.

A.9 Cognitive load OUT stage

Please enter the line of numbers which you memorized in memory task 2.

If you enter the correct line of numbers, you will receive a payment of 5 EURO.

You only have one try to enter the numbers.

A.10 Cognitive ability tasks

Questionnaire

ID:

Seat:

Page 1: Answer all questions. For each correctly answered question, you receive 0.20 EURO. You have a maximum of 5 min to solve this page.

Please assume for all questions that dice are six-sided and fair.

Question 1: What is the probability that the number in a throw of a die is smaller than or equal to 2?

Question 2: What is the probability that in two throws, the number is equal to 4 both times?

Question 3: Look at a single throw. Assume that the result is an even number. What is the probability that the number is equal to 2?

Question 4: Assume that the number 3 was thrown 5 times in a row. What is the probability that the next throw will result in a 3?

Question 5: Assume 4 dice are thrown and the numbers added. What is the total number on average?
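The instructions do not state the answers to the five dice questions. As an illustration only, the following sketch computes the quantities implied by fair six-sided dice through exhaustive enumeration. The helper name `prob` and the variables `q1` to `q5` are hypothetical and not part of the original questionnaire.

```python
from fractions import Fraction
from itertools import product

FACES = range(1, 7)  # fair six-sided die


def prob(event, n_dice=1):
    """Probability of an event over all equally likely outcomes of n_dice throws."""
    outcomes = list(product(FACES, repeat=n_dice))
    favorable = sum(1 for outcome in outcomes if event(outcome))
    return Fraction(favorable, len(outcomes))


q1 = prob(lambda o: o[0] <= 2)                     # one throw shows at most 2
q2 = prob(lambda o: o == (4, 4), n_dice=2)         # two throws both show 4
# Conditional probability: P(2 | even) = P(2) / P(even), since 2 is even.
q3 = prob(lambda o: o[0] == 2) / prob(lambda o: o[0] % 2 == 0)
q4 = prob(lambda o: o[0] == 3)                     # throws are independent of the past
# Average total of four dice over all 6**4 outcomes.
q5 = Fraction(sum(sum(o) for o in product(FACES, repeat=4)), 6 ** 4)

print(q1, q2, q3, q4, q5)
```

Question 4 relies on the independence of throws: a run of five 3s does not change the probability of the next throw.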

Page 2: Answer all questions. For each correctly answered question, you receive 0.20 EURO. You have a maximum of 5 min to solve this page.

Question 6: A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost?

Question 7: If it takes 5 machines 5 minutes to make 5 widgets, how long would it take 100 machines to make 100 widgets?

Question 8: In a lake, there is a patch of lily pads. Every day, the patch doubles in size. If it takes 48 days for the patch to cover the entire lake, how long would it take for the patch to cover half of the lake?

Question 9: Assume you see 4 double-sided cards in front of you. Each card has a number on one side and a letter on the other side. Which card or cards do you have to turn around to test whether the following assertion is true: “If there is a vowel (A, E, I, O, U) on one side, there is an even number on the other side.”

[Four cards were displayed here, labeled Card 11, Card 12, Card 13, and Card 14; the card faces are not reproduced.]

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dominiak, A., Duersch, P. Choice under uncertainty and cognitive load. J Risk Uncertain 68, 133–161 (2024). https://doi.org/10.1007/s11166-024-09426-6

DOI: https://doi.org/10.1007/s11166-024-09426-6