Abstract

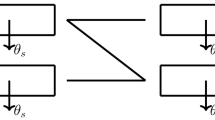

We consider multi-component matching systems in heavy traffic consisting of \(K\ge 2\) distinct perishable components, which arrive randomly over time at high speed at the assemble-to-order station and wait in their respective queues, according to their categories, until they are matched or their “patience” runs out. An instantaneous match occurs if all categories are available, and the matched components leave immediately thereafter. For a sequence of such systems parameterized by n, we establish an explicit definition for the matching completion process, and when all the arrival rates tend to infinity in concert as \(n\rightarrow \infty \), we obtain a heavy traffic limit of the appropriately scaled queue lengths under mild assumptions, which is characterized by a coupled stochastic integral equation with a scalar-valued nonlinear term. We demonstrate some crucial properties of certain coupled equations and present numerical case studies. Moreover, we establish an asymptotic Little’s law, which reveals the asymptotic relationship between the queue length and its virtual waiting time. Motivated by the cost structure of blood bank drives, we formulate an infinite-horizon discounted cost functional and show that the expected value of this cost functional for the nth system converges to that of the heavy traffic limiting process as n tends to infinity.

References

Kashyap, B.R.: The double-ended queue with bulk service and limited waiting space. Oper. Res. 14(5), 822–834 (1966)

Kaspi, H., Perry, D.: Inventory systems of perishable commodities. Adv. Appl. Probab. 15(3), 674–685 (1983)

Perry, D., Stadje, W.: Perishable inventory systems with impatient demands. Math. Methods Oper. Res. 50(1), 77–90 (1999)

Xie, B., Gao, Y.: On the long-run average cost minimization problem of the stochastic production-inventory model (2023). (preprint)

Lee, C., Liu, X., Liu, Y., Zhang, L.: Optimal control of a time-varying double-ended production queueing model. Stoch. Syst. 11, 140–173 (2021)

Bar-Lev, S.K., Boxma, O., Mathijsen, B., Perry, D.: A blood bank model with perishable blood and demand impatience. Stoch. Syst. 7(2), 237–263 (2017)

Boxma, O.J., David, I., Perry, D., Stadje, W.: A new look at organ transplantation models and double matching queues. Probab. Eng. Inf. Sci. 25(2), 135–155 (2011)

Khademi, A., Liu, X.: Asymptotically optimal allocation policies for transplant queueing systems. SIAM J. Appl. Math. 81(3), 1116–1140 (2021)

Özkan, E., Ward, A.R.: Dynamic matching for real-time ride sharing. Stoch. Syst. 10(1), 29–70 (2020)

Reed, J., Ward, A.R.: Approximating the GI/GI/1+GI queue with a nonlinear drift diffusion: hazard rate scaling in heavy traffic. Math. Oper. Res. 33(3), 606–644 (2008)

Koçağa, Y.L., Ward, A.R.: Admission control for a multi-server queue with abandonment. Queueing Syst. 65, 275–323 (2010)

Weerasinghe, A.: Diffusion approximations for G/M/n+GI queues with state-dependent service rates. Math. Oper. Res. 39(1), 207–228 (2014)

Liu, X.: Diffusion approximations for double-ended queues with reneging in heavy traffic. Queueing Syst. 91(1), 49–87 (2019)

Liu, X., Weerasinghe, A.: Admission control for double-ended queues. arXiv:2101.06893 (2021)

Mairesse, J., Moyal, P.: Editorial Introduction to the Special Issue on Stochastic Matching Models, Matching Queues and Applications. Springer, Berlin (2020)

Conolly, B., Parthasarathy, P., Selvaraju, N.: Double-ended queues with impatience. Comput. Oper. Res. 29(14), 2053–2072 (2002)

Liu, X., Gong, Q., Kulkarni, V.G.: Diffusion models for double-ended queues with renewal arrival processes. Stoch. Syst. 5(1), 1–61 (2015)

Castro, F., Nazerzadeh, H., Yan, C.: Matching queues with reneging: a product form solution. Queueing Syst. 96(3–4), 359–385 (2020)

Weiss, G.: Directed FCFS infinite bipartite matching. Queueing Syst. 96(3–4), 387–418 (2020)

Kohlenberg, A., Gurvich, I.: The cost of impatience in dynamic matching: Scaling laws and operating regimes. Available at SSRN 4453900 (2023)

Xie, B., Wu, R.: Controlling of multi-component matching queues with buffers (2023). (Working paper)

Harrison, J.M.: Assembly-like queues. J. Appl. Probab. 10(2), 354–367 (1973)

Plambeck, E.L., Ward, A.R.: Optimal control of a high-volume assemble-to-order system. Math. Oper. Res. 31(3), 453–477 (2006)

Gurvich, I., Ward, A.: On the dynamic control of matching queues. Stoch. Syst. 4(2), 479–523 (2015)

Rahme, Y., Moyal, P.: A stochastic matching model on hypergraphs. Adv. Appl. Probab. 53(4), 951–980 (2021)

Büke, B., Chen, H.: Stabilizing policies for probabilistic matching systems. Queueing Syst. 80, 35–69 (2015)

Mairesse, J., Moyal, P.: Stability of the stochastic matching model. J. Appl. Probab. 53(4), 1064–1077 (2016)

Nazari, M., Stolyar, A.L.: Reward maximization in general dynamic matching systems. Queueing Syst. 91, 143–170 (2019)

Jonckheere, M., Moyal, P., Ramírez, C., Soprano-Loto, N.: Generalized max-weight policies in stochastic matching. Stoch. Syst. 13(1), 40–58 (2023)

Green, L.: A queueing system with general-use and limited-use servers. Oper. Res. 33(1), 168–182 (1985)

Adan, I., Foley, R.D., McDonald, D.R.: Exact asymptotics for the stationary distribution of a Markov chain: a production model. Queueing Syst. 62(4), 311–344 (2009)

Adan, I., Bušić, A., Mairesse, J., Weiss, G.: Reversibility and further properties of FCFS infinite bipartite matching. Math. Oper. Res. 43(2), 598–621 (2018)

Fazel-Zarandi, M.M., Kaplan, E.H.: Approximating the first-come, first-served stochastic matching model with Ohm’s law. Oper. Res. 66(5), 1423–1432 (2018)

Brémaud, P.: Point Processes and Queues: Martingale Dynamics. Springer Series in Statistics, vol. 50. Springer, New York (1981)

Ethier, S.N., Kurtz, T.G.: Markov Processes: Characterization and Convergence. Wiley, New York (2009)

Pang, G., Talreja, R., Whitt, W.: Martingale proofs of many-server heavy-traffic limits for Markovian queues. Probab. Surv. 4, 193–267 (2007)

Mandelbaum, A., Momčilović, P.: Queues with many servers and impatient customers. Math. Oper. Res. 37(1), 41–65 (2012)

Atar, R., Mandelbaum, A., Reiman, M.I., et al.: Scheduling a multi class queue with many exponential servers: asymptotic optimality in heavy traffic. Ann. Appl. Probab. 14(3), 1084–1134 (2004)

Krichagina, E.V., Taksar, M.I.: Diffusion approximation for GI/G/1 controlled queues. Queueing Syst. 12(3), 333–367 (1992)

Xie, B.: Topics of queueing theory in heavy traffic. Ph.D. Thesis, Iowa State University (2022)

Protter, P.E.: General stochastic integration and local times. In: Stochastic Integration and Differential Equations, pp. 153–236. Springer, New York (2005)

Whitt, W.: Stochastic-Process Limits: An Introduction to Stochastic-Process Limits and their Application to Queues. Springer, New York (2002)

Gans, N., Koole, G., Mandelbaum, A.: Telephone call centers: tutorial, review, and research prospects. Manuf. Serv. Oper. Manag. 5(2), 79–141 (2003)

Chung, K.L., Williams, R.J., Williams, R.: Introduction to Stochastic Integration, vol. 2. Springer, New York (1990)

Dai, J., He, S.: Customer abandonment in many-server queues. Math. Oper. Res. 35(2), 347–362 (2010)

Acknowledgements

The author would like to acknowledge his advisor, Ananda Weerasinghe, for his guidance, patience, enthusiasm, and inspiration throughout the research and the writing of the paper. The author also would like to acknowledge Xin Liu and Ruoyu Wu for their suggestions on the content and structure of this paper.

Appendix A: Proofs

1.1 Appendix A.1: Proof of Lemma 3

Proof of Lemma 3

Observe that \(I_i^n(t)\) is a stochastic process with continuous, non-decreasing, non-negative sample paths. We also know that \(\{I_i^n(t) < x\}\in \bar{{\mathcal {F}}}_x^n\) for all \(x\ge 0\) and \(t\ge 0\): to know \(I_i^n(t)\), we need all the information of \(Q_i^n(s)\) for \(0\le s\le t\), which depends on \(I_i^n(s)\) for all \(0\le s\le t\) by (10). Thus, to evaluate \(I_i^n(t)<x\), it suffices to consider \(\{N_i(u): 0\le u\le x\}\). This shows that \(I_i^n(t)\) is an \(\bar{{\mathcal {F}}}_x^n\)-stopping time for each \(t\ge 0\). By (10) and the non-negativity of \(G_i^n\) in (4) and \(R^n\) in (9), we have the crude inequality \(Q_i^n(t) = Q_i^n(0) + A_i^n(t) - G_i^n(t) - R^n(t) \le Q_i^n(0) + A_i^n(t)\). Using this inequality and the fact that \(Q_i^n(0)\) is deterministic, we further have

and

Since all the conditions of Lemma 3.2 in [36] are fulfilled, we conclude that

is a square-integrable martingale with respect to \((\bar{{\mathcal {F}}}_{I_i^n}^n)\). Consequently, \({\hat{M}}_i^n\) is a square-integrable \({\mathcal {F}}_t^n\)-martingale with quadratic variation process given in (25), since the increments of the arrival process, \(A_i^n(t+s) - A_i^n(t)\) for \(s\ge 0\), are independent of \(Q_i^n(s)\) for \(0\le s\le t\). \(\square \)

1.2 Appendix A.2: Proof of Proposition 4

Proof of Proposition 4

Since \({\hat{M}}_i^n\) is a martingale, by Burkholder’s inequality (see Theorem 45 in Protter [41]) and (25), we have

where \(\tilde{C}\) is some positive constant. Since \(A_i^n\) is a Poisson arrival process and \(\lambda _i^n/n\rightarrow \lambda _0\) by (7), we further have

where \(C_1\) and \(l\ge 2\) are constants independent of T and n. This proves (26). Consequently, by Chebyshev’s inequality, we have

This completes the proof. \(\square \)

1.3 Appendix A.3: Proof of Proposition 5

Proof of Proposition 5

Considering the martingale representation (21), we assume

By (7), (15), and (26), we can represent \(B_T^n:= B_T^n(\omega )\) as a square-integrable random variable with the second moment bound \(E\left[ (B_T^n)^2\right] \le C_2(1+T^b)\), where \(C_2\) and \(b\ge 2\) are constants independent of T and n. Next, we intend to find a moment bound for \(\varvec{{\hat{Q}}^n}\) as follows:

where we assume \(\sup _{1\le k\le K} (\delta _k^n)\le c_0\) for some constant \(c_0>0\) and all \(n>0\), which is possible since \(\lim _{n\rightarrow \infty }\delta _i^n = \delta _i\).

Now it suffices to consider the last term on the right-hand side. Notice that (22) suggests that for any \(k\in \{1, \ldots , K\}\),

The first inequality holds for all \(k\in \{1, \ldots , K\}\) since the scalar-valued process \({\hat{R}}^n\) is defined to be the minimum value as described in (22). Moreover, (22) also suggests an identical upper bound.

These imply an upper bound for \(\vert {{\hat{R}}^n(t)} \vert \), namely

Combining this with the previous inequalities for \(\sum _{k=1}^K \vert {{\hat{Q}}_k^n(t)} \vert \), we further have

We apply Gronwall’s inequality to the function \(t\mapsto \sum _{k=1}^K \vert {{\hat{Q}}_k^n(t)} \vert \) to obtain

which further yields

Consequently, we have the moment-bound result:

which further implies (27). The stochastic boundedness follows by employing Chebyshev’s inequality. This completes the proof. \(\square \)

1.4 Appendix A.4: Proof of Theorem 6

Proof of Theorem 6

For brevity, we intend to consider the case of \(h(\varvec{x}(t)) = (\delta _1 x_1(t), \delta _2 x_2(t), \ldots , \delta _K x_K(t))^\intercal \) for \(t\ge 0\), which is a special case of the integral term in the heavy traffic limit obtained in (16). For fixed \(\varvec{y}(t)\in D^K[0, T]\), we define a functional \(M: D^K[0, T]\rightarrow D^K[0, T]\) by

where \(R(\cdot )\) is defined in (29). To demonstrate the existence of a unique solution, it suffices to show that M is a contraction mapping on \( D^K[0, T]\) endowed with the uniform topology. Suppose there are two solutions to the integral representation (28), namely \(\varvec{x}^{(1)}(t)\) and \(\varvec{x}^{(2)}(t)\) for \(t\ge 0\). Accordingly, we have

for \(k = 1, 2\). The functional M defined in (56) suggests

The complication comes from the second term \(\vert {R^{(1)}(t) - R^{(2)}(t)} \vert \). To find an upper bound, let \(l\in \{1, \ldots , K\}\) be an index, depending on t, that achieves the minimum in \(R^{(2)}(t)\). By (57), we have for \(t\in [0, T]\),

Similarly, we can obtain an identical upper bound for \(R^{(2)}(t) - R^{(1)}(t)\). Thus,

Therefore, we have

where \(\epsilon (T) = (1+K)\sqrt{K}\left( \sup _{1\le k \le K} (\delta _k)\right) T\). This yields

One may pick \(T_1>0\) such that \(\epsilon (T_1)<1\); then the functional M is a contraction mapping on \( D^K[0, T_1]\) with the uniform topology, which leads to the existence of a unique solution to (28) on \([0, T_1]\) by the Banach fixed-point theorem. If we partition the time interval [0, T] into subintervals of length \(T_1\), we can apply the above argument on each of those subintervals to obtain a unique solution for all \(t\in [0, T]\). This guarantees a unique solution \(\varvec{x}\in D^K[0, T]\) to the fixed point problem \(M(\varvec{x}) = \varvec{x}\).

The continuity of f can be deduced by considering \(\Vert f(\varvec{y}(t_n)) - f(\varvec{y}(t))\Vert \). Analogous to previous discussions, we end up with the following inequality:

If we impose boundedness on \(\varvec{x}(\cdot )\), then \(\varvec{x}\) is continuous whenever \(\varvec{y}\) is continuous. \(\square \)
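The coupled equation treated in this proof can also be explored numerically. The following is a minimal sketch, not the author's method: it assumes, in line with the bounds used in the proofs of Proposition 5 and Corollary 7, that the scalar term has the form \(R(t) = \min _{1\le k\le K}\{y_k(t) - \delta _k\int _0^t x_k(s)\textrm{d}s\}\), and it replaces the integral by a left-endpoint (explicit Euler) sum. The function name `solve_coupled`, the grid, and the sample input paths are illustrative assumptions.

```python
import math

def solve_coupled(y, delta, dt):
    """Explicit Euler sketch for
        x_k(t) = y_k(t) - delta_k * int_0^t x_k(s) ds - R(t),
    with R(t) = min_k { y_k(t) - delta_k * int_0^t x_k(s) ds } (assumed form).

    y     : list of K lists, y[k][m] is the input path y_k at time m*dt
    delta : list of K non-negative rates
    dt    : grid step
    Returns (x, R) on the same grid.
    """
    K, steps = len(y), len(y[0])
    integral = [0.0] * K                  # running value of int_0^t x_k(s) ds
    x = [[0.0] * steps for _ in range(K)]
    R = [0.0] * steps
    for m in range(steps):
        if m > 0:
            # left-endpoint rule: uses x at the previous grid point
            for k in range(K):
                integral[k] += x[k][m - 1] * dt
        free = [y[k][m] - delta[k] * integral[k] for k in range(K)]
        R[m] = min(free)                  # scalar-valued coupling term
        for k in range(K):
            x[k][m] = free[k] - R[m]
    return x, R

# Illustrative input: three deterministic, continuous paths on [0, 1].
dt = 0.001
grid = [m * dt for m in range(1001)]
y = [
    [math.sin(3.0 * t) for t in grid],
    [0.5 * t for t in grid],
    [math.cos(2.0 * t) - 1.0 for t in grid],
]
x, R = solve_coupled(y, delta=[1.0, 0.5, 2.0], dt=dt)
```

In this sketch every component stays non-negative and, at each grid point, at least one component equals zero, because the same minimum is subtracted from all K coordinates.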

1.5 Appendix A.5: Proof of Corollary 7

Proof of Corollary 7

The proof of this extension relies on verifying the uniform integrability of a proper integrand. By (6), (7), and (14), we have \(\varvec{\xi ^n}\Rightarrow \varvec{\xi }\) in \( D^K[0, T]\) as \(n\rightarrow \infty \). By Skorokhod’s representation theorem, we can simply assume that \(\varvec{\xi ^n}\) converges to \(\varvec{\xi }\) a.s. in some special probability space. Given \(\varvec{\xi ^n}\) and \(\varvec{\xi }\), Theorem 6 yields \(\varvec{{\hat{Q}}^n}\) and \(\varvec{X}\), associated with the input processes \(\varvec{\xi ^n}\) and \(\varvec{\xi }\), respectively, each solving (28). Therefore, we have

As before, the difficulty in finding an upper bound comes from the last term \(\vert {{\hat{R}}^n(t) - R(t)} \vert \). To this end, it suffices to find an upper bound for \(\vert {{\hat{R}}^n(\cdot ) - {\hat{R}}(\cdot )} \vert \). Consider the two differences without absolute value separately. We assume that there exist indices \(l_1\) and \(l_2\), depending on t, such that the minimum entry in \({\hat{R}}^n(t)\) is attained at \(l_1\) and the minimum entry in R(t) is attained at \(l_2\). Hence,

Notice that the first inequality holds since \({\hat{R}}^n(t) \le \xi _k^n(t) - \int _0^t \delta _k^n{\hat{Q}}_k^n(s)\textrm{d}s\) for any \(k\in \{1, \ldots , K\}\) and \(t\ge 0\). Similarly, we can obtain an upper bound for \(R(t) - {\hat{R}}^n(t)\). Consequently, we have the following upper bound:

This fact and (59) suggest that

where we assume \(\left( \sup _{1\le k\le K} \delta _k^n\right) \vee \left( \sup _{1\le k\le K} \delta _k\right) \le C_0\) for some positive constant \(C_0\). Using Gronwall’s inequality, we obtain

Now, if the right-hand side of (61) converges, it is straightforward to show the convergence of the left-hand side. Notice that we have assumed almost sure convergence of \(\varvec{\xi ^n}\), which further yields \(\Vert \varvec{\xi ^n} - \varvec{\xi }\Vert _T \rightarrow 0\) in probability as \(n\rightarrow \infty \). We intend to show that the convergence also holds in \(L^p[0, T]\) for \(1\le p<2l\), where \(l\ge 1\) is any constant; that is, the convergence holds for any \(p\ge 1\). Since we need higher moment bounds for appropriate processes in the proof, we keep the constant l for generality. By Vitali’s convergence theorem, if \(\Vert \varvec{\xi ^n} - \varvec{\xi }\Vert _T^p\) is uniformly integrable, then convergence in probability implies convergence in \(L^p[0, T]\).

We are left to show the uniform integrability. It is trivial that

where \(c>0\) is a generic constant, and we intend to find a moment bound for those two terms separately. Since the moment bound of the second term can be derived from the moment bound of the first term with the help of Fatou’s lemma, it suffices to consider \(E\left[ \Vert \varvec{\xi ^n}\Vert _T^{2l}\right] \). Equations (7) and (15) suggest

where \(c>0\) is a generic constant depending on K. Let \(e=\{e(t):= t, t\ge 0\}\) be the identity map. Since the centered and scaled arrival processes \(\{{\hat{A}}_j^n\}_{1\le j\le K}\) are independent Poisson processes as assumed in Assumption 2, and \(A_j^n - \lambda _j^n e\) is a \(({\mathcal {F}}_t^n)_{t\ge 0}\)-adapted martingale for each \(j\in \{1, \ldots , K\}\), Burkholder’s inequality (see [36]) renders

The quadratic variation of a compensated Poisson process satisfies \([A_j^n - \lambda _j^n e, A_j^n - \lambda _j^n e](T) = A_j^n(T)\), and \(E\left[ (A_j^n(T))^l\right] \le c(\lambda _j^n T)^l\). As a consequence,

where \(c>0\) is a generic constant independent of T and n. Similarly, since \({\hat{M}}_j^n\) is also a \(({\mathcal {F}}_t^n)_{t\ge 0}\)-martingale for each \(j\in \{1, \ldots , K\}\), and analogously to the proof of Proposition 4, Burkholder’s inequality yields

where \(c>0\) is a generic constant. Hence, since \(Q_j^n(0)\) is deterministic, (25) and the crude inequality \(Q_j^n(s)\le Q_j^n(0) + A_j^n(s)\) imply

where \(c>0\) is a generic constant. Therefore, we obtain the (2l)th moment bound condition

where the generic constant \(c>0\) and \(d\ge 2l\ge 2\) are independent of T and n. Hence, using (62), we have

where the generic constant \(c>0\) and \(d\ge 2l \ge 2\) are independent of T and n. This implies the uniform integrability of \(\Vert \varvec{\xi ^n} - \varvec{\xi }\Vert _T^p\) for \(1\le p < 2l\). As a consequence, \(E\left[ \Vert \varvec{\xi ^n} - \varvec{\xi }\Vert _T^p\right] \rightarrow 0\) as \(n\rightarrow \infty \) on a special probability space. Using (61), we further obtain \(E\left[ \Vert \varvec{{\hat{Q}}^n} - \varvec{X}\Vert _T^p\right] \rightarrow 0\). This completes the proof. \(\square \)
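The two Poisson facts used in this proof can be checked numerically for \(l=2\). The sketch below sums the Poisson probability mass function directly (no simulation) to confirm that \(E[(A(T)-\lambda T)^2] = \lambda T\), which is the expectation of the quadratic variation \(A(T)\) of the compensated process, and that \(E[A(T)^2] = \lambda T + (\lambda T)^2 \le 2(\lambda T)^2\) once \(\lambda T\ge 1\). The helper name `poisson_moments`, the truncation level, and the value \(\lambda T = 5\) are assumptions made purely for illustration.

```python
import math

def poisson_moments(mean, n_max=400):
    """Return (E[N], E[(N - mean)^2], E[N^2]) for N ~ Poisson(mean),
    computed by summing the pmf up to n_max. The truncation is an
    assumption; it is negligible when n_max is far above the mean."""
    p = math.exp(-mean)          # P(N = 0)
    first = central2 = raw2 = 0.0
    for n in range(n_max + 1):
        first += n * p
        central2 += (n - mean) ** 2 * p
        raw2 += n * n * p
        p *= mean / (n + 1)      # pmf recursion: P(N = n+1) = P(N = n) * mean / (n+1)
    return first, central2, raw2

lam_T = 5.0                      # plays the role of lambda_j^n * T
first, central2, raw2 = poisson_moments(lam_T)
```

Here `central2` matches \(\lambda T\) and `raw2` matches \(\lambda T + (\lambda T)^2\) up to floating-point error, so the constant \(c = 2\) works for \(l = 2\) whenever \(\lambda T\ge 1\).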

1.6 Appendix A.6: Proof of Proposition 8

Proof of Proposition 8

To avoid redundant algebraic manipulations and for brevity, we treat only the component indexed by \(i=1\) in the case \(K=4\); the other components can be handled in the same fashion.

To show the coupled process is a semimartingale, it suffices to prove that each component admits a semimartingale decomposition. The limiting processes in (18) can be rewritten as

for \(i=1, 2, 3, 4\) and \(t\ge 0\), where

for each i and \(t\ge 0\). Consider the case of \(i=1\). (66) further suggests that for \(t\ge 0\),

where \(\eta _3(t):= \max \{-\xi _3(t), -\xi _4(t)\}\). Observe that \(\eta _3(t)\) can be further rewritten as

By utilizing Tanaka’s formula (see Sect. 7.3 in [44]), we apply Itô’s lemma to the function \(f(x) = x^+\) for \(x\in {\mathbb {R}}\) and obtain

where

and \(L_t^{(1)}\) is the local time process for \(Y_3(t)\) at the origin, which increases only at times t when \(\xi _3(t) = \xi _4(t)\). Here, \(B_{34}(\cdot )\) is a Brownian motion that depends on the two independent standard Brownian motions \(W_3\) and \(W_4\) obtained in Proposition 2. Similarly, let \(\eta _2(t):= \max \{-\xi _2(t), \eta _3(t)\}\) in (68); applying Tanaka’s formula again yields a similar expression with a new local time process. In the same fashion, one can move on to the last layer \(\max \{-\xi _1, \eta _2\}\) and thus iteratively obtain a semimartingale decomposition. The decomposition for general \(K \ge 2\) can be done similarly. \(\square \)
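The layering in this proof rests on the elementary identity \(\max \{a, b\} = b + (a-b)^+\), which reduces a K-fold maximum to a chain of positive parts, each of which can then be handled by Tanaka’s formula. The sketch below checks only this algebra at a single fixed time point; it says nothing about the local-time terms. The function names and the sample values of \(\xi _1, \ldots , \xi _4\) are hypothetical.

```python
def pos(v):
    """Positive part v^+ = max(v, 0)."""
    return v if v > 0 else 0.0

def layered_max(values):
    """Maximum of a list, built one layer at a time via
    max{a, b} = b + (a - b)^+, starting from the last entry."""
    eta = values[-1]
    for a in reversed(values[:-1]):
        eta = eta + pos(a - eta)   # next layer: max{a, eta}
    return eta

xi = [0.3, -1.2, 0.7, -0.4]            # illustrative values of xi_1..xi_4 at a fixed time
eta_1 = layered_max([-v for v in xi])  # max{-xi_1, max{-xi_2, max{-xi_3, -xi_4}}}
```

The innermost layer corresponds to \(\eta _3\), the next to \(\eta _2\), and the outermost (labelled `eta_1` here for convenience) to \(\max \{-\xi _1, \eta _2\}\).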

1.7 Appendix A.7: Proof of Theorem 9

First, we prove some results of interest, which will play an important role in the proof of Little’s law. Then, we present the proof of Theorem 9.

Corollary 12

Let \(T>0\). For each \(i\in \{1, \ldots , K\}\), \({\hat{G}}_i^n\) is stochastically bounded and \({\hat{G}}_i^n(\cdot )\) converges weakly to \(\delta _i \int _0^{\cdot } X_i(s)\textrm{d}s\) in D[0, T] as \(n\rightarrow \infty \).

Proof

We prove the result for the ith queue; the other queues can be handled similarly. By (20), we have

for \(t\ge 0\). Using Propositions 4 and 5, we derive the second moment bound result:

where C, \(C_1\), \(C_2\), l, and b are constants independent of T and n as described in (26) and (55). Therefore, using Chebyshev’s inequality, we have \(\lim _{a\rightarrow \infty }\limsup _{n\rightarrow \infty } P\left[ \Vert {\hat{G}}_i^n\Vert _T>a\right] = 0\).

Next, we show the weak convergence. Since \({\hat{M}}_i^n\) is an \({\mathcal {F}}^n\)-martingale by Lemma 3, as in the proof of Proposition 4, Burkholder’s inequality gives

Using the moment bound for \({\hat{Q}}_i^n\) in Proposition 5 and the assumption that \(\lim _{n\rightarrow \infty }\delta _i^n = \delta _i>0\), we obtain that \(E\left[ \Vert {\hat{M}}_i^n\Vert _T^2\right] \) converges to zero as \(n\rightarrow \infty \). By Chebyshev’s inequality, \(\Vert {\hat{M}}_i^n\Vert _T\) therefore converges to zero in probability as \(n\rightarrow \infty \). Since Theorem 1 implies the weak convergence of \({\hat{Q}}_i^n\) in D[0, T], the continuity of integral mappings further suggests that \(\delta _i^n\int _0^{\cdot } {\hat{Q}}_i^n(s)\textrm{d}s\) converges weakly to \(\delta _i\int _0^{\cdot } X_i(s) \textrm{d}s\) in D[0, T]. As a consequence, \({\hat{G}}_i^n(\cdot )\) converges weakly to \(\delta _i\int _0^{\cdot } X_i(s) \textrm{d}s\) in D[0, T] as \(n\rightarrow \infty \). \(\square \)

Now, with the facts obtained above, we are ready to see some crucial properties for the virtual waiting time processes introduced in (39).

Proposition 13

Under the assumptions of Theorem 1, for each \(i\in \{1, \ldots , K\}\), \({\hat{V}}_i^n\) is stochastically bounded and, consequently, \(\Vert V_i^n\Vert _T\rightarrow 0\) in probability as \(n\rightarrow \infty \).

Proof

This argument is similar to the proof of Proposition 4.4 in [45]. Let \(M>0\) be arbitrary. If \(0<M<{\hat{V}}_i^n(t)\) for some \(t\in [0, T]\), then we have \(V_i^n(t)>\frac{M}{\sqrt{n}}\), which suggests that the queue of category i at time \(t+\frac{M}{\sqrt{n}}\) is not empty and

where \(\mathring{G}_i^n\left( t, t+\frac{M}{\sqrt{n}}\right) \) represents the number of components abandoning the ith queue among those that arrived during \([t, t+\frac{M}{\sqrt{n}})\); that is, it counts the items that arrived after time t and abandoned before time \(t+\frac{M}{\sqrt{n}}\). We further observe that the number of abandoned components among those arrivals is at most the number of abandoned components by time \(t+\frac{M}{\sqrt{n}}\), namely \(0\le \mathring{G}_i^n\left( t, t+\frac{M}{\sqrt{n}}\right) \le G_i^n\left( t+\frac{M}{\sqrt{n}}\right) \), since components that arrived before time t may also abandon the system during the time interval \([t, t+\frac{M}{\sqrt{n}})\). Therefore, after a simple computation, we have a diffusion-scaled inequality:

Let \(0<\delta <1\). Since we assumed \(\lambda _i^n/n\rightarrow \lambda _0\) as \(n\rightarrow \infty \) by (7), we can find an \(\alpha >0\) and \(N\ge 1\) so that for any \(n\ge N\), both \(0<\frac{M}{\sqrt{n}}<\delta \) and \(\frac{\lambda _i^n}{n}>3\alpha > 0\) hold. Hence, for any \(n\ge N\), we have the following inclusion:

Therefore,

By the weak convergence of \({\hat{A}}_i^n\) in (14), we further obtain the tightness of \({\hat{A}}_i^n\), which also satisfies \(\lim _{\delta \rightarrow 0}\limsup _{n\rightarrow \infty } P\left[ \omega ({\hat{A}}_i^n, \delta , T) > \epsilon \right] =0\). Using this fact together with Proposition 5 and Corollary 12, we obtain the stochastic boundedness of \({\hat{V}}_i^n\). Consequently, \(\lim _{n\rightarrow \infty } \Vert V_i^n\Vert _T = 0\) in probability. \(\square \)

Now, we are ready to prove Theorem 9.

Proof of Theorem 9

We prove the result for category i; the other categories are handled identically. Consider the state of the ith queue at time \(t+V_i^n(t)\) for any \(t\in [0, T]\). We observe that the queue length at time \(t+V_i^n(t)\) equals the number of arrivals during \([t, t+V_i^n(t))\) minus the number of abandoned items among those arrivals, and this relation can be characterized by the following equality:

where \(\mathring{G}_i^n(t, t+V_i^n(t))\) represents the number of abandoned components that arrived after time t and abandoned before \(t+V_i^n(t)\). Scaling both sides of (74) by \(1/\sqrt{n}\) and performing a simple algebraic manipulation, we obtain

where the diffusion-scaled \({\hat{Q}}_i^n\) and \({\hat{A}}_i^n\) are as defined in (11), and

Considering the last term \(\hat{\mathring{G}}_i^n\), we observe that

since components that arrived before time t may abandon after time t but still before time \(t+V_i^n(t)\), and those abandoned items are not counted in \(\mathring{G}_i^n(t, t+V_i^n(t))\). With this observation, we have

Since \({\hat{A}}_i^n\) satisfies (14) and using Corollary 12, we have the tightness of \({\hat{A}}_i^n\) and \({\hat{G}}_i^n\), and they satisfy for any \(\epsilon >0\),

Moreover, we assumed that \(\lim _{n\rightarrow \infty }\vert {\lambda _i^n/n - \lambda _0} \vert = 0\) by (7). Since \({\hat{V}}_i^n\) is stochastically bounded and, as a consequence, \(\Vert V_i^n\Vert _T\rightarrow 0\) in probability as proved in Proposition 13, the above facts imply that \(\Vert {\hat{Q}}_i^n(t+V_i^n(t)) - \lambda _0{\hat{V}}_i^n(t)\Vert _T \rightarrow 0\) in probability as \(n\rightarrow \infty \).

Now, we are left to show \(\Vert {\hat{Q}}_i^n(t+V_i^n(t)) - {\hat{Q}}_i^n(t)\Vert _T \rightarrow 0\) in probability. By Theorem 1, we have the tightness of \({\hat{Q}}_i^n\), which also satisfies \(\lim _{\delta \rightarrow 0}\limsup _{n\rightarrow \infty } P\left[ \omega ({\hat{Q}}_i^n, \delta , T)>\epsilon \right] = 0\) for any \(\epsilon >0\). Combined with the fact that \(\Vert V_i^n\Vert _T \rightarrow 0\) in probability, as proved in Proposition 13, this yields the above relation. This completes the proof. \(\square \)

1.8 Appendix A.8: Proof of Proposition 10

Proof of Proposition 10

We prove the case for the ith queue; the other queues are handled identically. Here, we first show the stochastic boundedness directly, and then we come back to prove the moment bound condition (41) for each \(t\in [0, T]\) by utilizing the order-preserving property.

First, we intend to show the stochastic boundedness. Fix i and let \(M>0\) be arbitrary. If \(0<M<{\hat{V}}_i^n(t)\) holds for some \(t\in [0, T]\), we know that the queue at time \(t+\frac{M}{\sqrt{n}}\) is not empty, namely \(Q_i^n\left( t+\frac{M}{\sqrt{n}}\right) >0\), and satisfies

With a simple algebraic manipulation by centering and scaling, we obtain a diffusion-scaled inequality

Let \(0<\delta <1\). Since we assumed \(\lambda _i^n/n\rightarrow \lambda _0\) as \(n\rightarrow \infty \), we can find an \(\alpha >0\) and \(N\ge 1\) such that for any \(n\ge N\), both \(0<\frac{M}{\sqrt{n}}<\delta \) and \(\frac{\lambda _i^n}{n}> 2\alpha > 0\) hold. Therefore, for any \(n\ge N\), we have

The weak convergence of \({\hat{A}}_i^n\) suggests the tightness of \({\hat{A}}_i^n\) and the convergence of the modulus of continuity operator of \({\hat{A}}_i^n\), i.e., \(\lim _{\delta \rightarrow 0}\limsup _{n\rightarrow \infty } P\left[ \omega ({\hat{A}}_i^n, \delta , T) > \epsilon \right] =0\). Using these facts and the second moment bound condition of \({\hat{Q}}_i^n\), we have the stochastic boundedness for \({\hat{V}}_i^n\) and consequently, \(\lim _{n\rightarrow \infty } \Vert V_i^n\Vert _T = 0\) in probability.

Now, we are left to show the second moment bound result (41) for each \(t\in [0, T]\). For each fixed \(t\in [0, T]\) and given the condition \(A_i^n(t) = k\), we have \(t_{i, k}^n< t < t_{i, k+1}^n\) and \(V_i^n(t) = (\max _{j\ne i} \{ t_{j, k+1}^n \} - t)^+\), where \(t_{i, k}^n\) represents the arrival time of the kth component of category i in the nth system. It can be defined as \(t_{i, k}^n = \sum _{j=1}^k \tau _{i, j}^n\), where the \(\tau _{i, j}^n\)’s are inter-arrival times. Notice that a hypothetical component of category i arriving at time t with \(t>t_{j, k+1}^n\) for all \(j\ne i\) need not wait: there is a match immediately, since every other queue has a component of index \(k+1\) waiting to be matched. However, if \(t < t_{j, k+1}^n\) for some \(j\ne i\), then its waiting time is the maximum difference \((\max _{j\ne i} \{t_{j, k+1}^n\} - t)\). With these facts, we can compute the following conditional moments:

Since we assume renewal arrivals, we let \(E[\tau _{j, k}^n] = 1/\lambda _j^n\) and \(\text {Var}(\tau _{j, k}^n) = c_j/(\lambda _j^n)^2\) for some \(c_j>0\), where the \(\{\tau _{j, k}^n\}_{k\ge 1}\) are mutually independent, which further yields

Therefore, by adding and subtracting the term \((k+1)/\lambda _j^n\), we have

Hence, combining (81) with the above inequality, we obtain

Consequently, we have

which further yields (41). This completes the proof. \(\square \)
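The waiting-time formula used in this proof, \(V_i^n(t) = (\max _{j\ne i}\{t_{j, k+1}^n\} - t)^+\) given \(A_i^n(t)=k\), is easy to evaluate on a list of arrival epochs. The sketch below is a minimal illustration under two assumptions that are not taken from the paper: all queues start empty, and there is no abandonment. The function name `virtual_waiting_time` and the hand-made arrival times are hypothetical.

```python
from bisect import bisect_right

def virtual_waiting_time(arrivals, i, t):
    """Virtual waiting time of a hypothetical category-i component arriving
    at time t (assumes no abandonment and empty initial queues).

    arrivals : list of K sorted lists of arrival times, one per category
    Implements V_i(t) = (max_{j != i} t_{j,k+1} - t)^+ with k = A_i(t).
    Returns float('inf') if some other category has not recorded its
    (k+1)th arrival.
    """
    k = bisect_right(arrivals[i], t)      # A_i(t): category-i arrivals in [0, t]
    latest = t
    for j, times in enumerate(arrivals):
        if j == i:
            continue
        if len(times) <= k:               # (k+1)th arrival of category j not recorded
            return float("inf")
        latest = max(latest, times[k])    # times[k] is t_{j,k+1} (0-based indexing)
    return latest - t

# Hand-made arrival times for K = 3 categories (illustrative only).
arrivals = [
    [0.5, 1.0, 2.5],
    [0.2, 1.8, 2.0],
    [0.9, 1.1, 3.0],
]
v_a = virtual_waiting_time(arrivals, i=0, t=0.7)   # k = 1, waits until max(1.8, 1.1)
v_b = virtual_waiting_time(arrivals, i=1, t=0.3)   # k = 1, waits until max(1.0, 1.1)
v_c = virtual_waiting_time(arrivals, i=1, t=1.5)   # k = 1, partners already present
```

In the third call the second components of the other two categories arrived before time 1.5, so the hypothetical component is matched at once and its waiting time is zero.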

1.9 Appendix A.9: Proof of Theorem 11

Proof of Theorem 11

Here, we mainly verify the uniform integrability of appropriate integrands by considering the expectations separately under some restrictions for the cost function (45).

First, we show that

Since \({\hat{Q}}_j^n\) converges weakly to \(X_j\) in D[0, T], using the Skorokhod representation theorem, we can simply assume that \({\hat{Q}}_j^n\) converges to \(X_j\) a.s. in some special probability space. By the continuous mapping theorem, we obtain \(\lim _{n\rightarrow \infty } C_j({\hat{Q}}_j^n(t)) = C_j(X_j(t))\) a.s.

Next, we verify the uniform integrability of the integrand \(e^{-\alpha t}C_j({\hat{Q}}_j^n(t))\), which guarantees the interchange of integral and limit. Since the cost function \(C_j(\cdot )\) admits polynomial growth as assumed in (45), we have \(C_j({\hat{Q}}_j^n(t))\le c_j(1+\vert {{\hat{Q}}_j^n(t)} \vert ^p)\), where \(c_j>0\) and \(1\le p < 2l\) are constants independent of T and n as in (45). Since \(1\le p<2l\) for \(l\ge 1\) as assumed, let \(\delta >0\) be such that \(1+\delta = 2l/p\). We will explain the reason for involving l at the end of this section. Similar to Proposition 5, we can derive a higher-order moment bound for the random variable \(B_T^n\) introduced in (51), namely \(E\left[ (B_T^n)^{2l}\right] \le c(1+T^{d})\). Following the same proof, we can strengthen the moment-bound condition of the queue lengths to

where \(d \ge 1\) and \(l\ge 1\) are constants independent of T and n, and \(c>0\) is a generic constant. Notice that if we pick \(l=1\), we recover the special case proved in (27). This result further renders

Hence, since \(\gamma > 2lc_0(1+K)\) as assumed, we obtain

where \(c>0\) is a generic constant, and K, \(c_0\), and \(d\ge 1\) are constants independent of n. This verifies the uniform integrability. Therefore, (83) follows.

Second, we show that

Using Fubini–Tonelli’s theorem, we derive that \(\gamma \int _{t=0}^{\infty } \int _t^{\infty } e^{-\gamma s}\textrm{d}s\, \textrm{d}{\hat{G}}_j^n(t) = \gamma \int _0^{\infty } \int _{t=0}^s e^{-\gamma s}\textrm{d}{\hat{G}}_j^n(t)\textrm{d}s\), which further implies

a.s. Notice that this can also be verified using integration by parts and the moment bound of \({\hat{G}}_k^n\) obtained in Corollary 12. Now, it suffices to show that

As in the proof of Corollary 12, since \(\Vert {\hat{M}}_j^n\Vert _T\) converges to zero in probability and \(\delta _j^n\int _0^{\cdot }{\hat{Q}}_j^n(s)\textrm{d}s\) converges weakly to \(\delta _j\int _0^{\cdot } X_j(s)\textrm{d}s\) in D[0, T], we conclude that \({\hat{G}}_j^n(\cdot )\) converges weakly to \(\delta _j\int _0^{\cdot } X_j(s)\textrm{d}s\) in D[0, T]. Given that \({\hat{G}}_j^n(\cdot ) \ge 0\) is non-decreasing, we are left to verify the uniform integrability of \({\hat{G}}_j^n(T)\) as follows:

where \(C_1\), \(C_2\), \(l\ge 1\) and \(b\ge 1\) are constants independent of T and n (see (26) and (27)). Here the first inequality is obtained from the definition of \({\hat{M}}_j^n(\cdot )\) introduced in (20). Consequently, \(\lim _{n\rightarrow \infty }E\left[ {\hat{G}}_j^n(T)\right] = \delta _j E\left[ \int _0^TX_j(s)\textrm{d}s\right] \). By this limit, the above moment bound condition, and the assumption \(\gamma >2c_0(1+K)\), we obtain

by verifying the uniform integrability of the integrand, namely

since \(\gamma >2c_0(1+K)\), as assumed above. Using Fubini’s theorem, we can rewrite the above conclusion as

Hence, (87) follows as well as (85). As a consequence, (48) immediately follows from (83) and (85). \(\square \)
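For a non-decreasing G with \(G(0)=0\) and sufficiently slow growth, the Fubini–Tonelli step in this proof amounts to the identity \(\int _0^{\infty } e^{-\gamma t}\textrm{d}G(t) = \gamma \int _0^{\infty } e^{-\gamma s}G(s)\textrm{d}s\). The sketch below checks this identity for a step function with finitely many jumps, integrating each constant piece in closed form. It is only an illustration: the function names, jump epochs, jump sizes, and discount rate are hypothetical, and the diffusion scaling of \({\hat{G}}_j^n\) is not modelled.

```python
import math

def discounted_stieltjes(jump_times, jump_sizes, gamma):
    """int_0^inf e^{-gamma t} dG(t) for a pure-jump, non-decreasing G with G(0) = 0."""
    return sum(g * math.exp(-gamma * t) for t, g in zip(jump_times, jump_sizes))

def discounted_level(jump_times, jump_sizes, gamma):
    """gamma * int_0^inf e^{-gamma s} G(s) ds, integrated exactly on each
    interval where the step function G is constant."""
    total, level = 0.0, 0.0
    for idx, (t, g) in enumerate(zip(jump_times, jump_sizes)):
        level += g                                   # value of G on [t, next jump)
        if idx + 1 < len(jump_times):
            upper = math.exp(-gamma * jump_times[idx + 1])
        else:
            upper = 0.0                              # last piece runs to infinity
        # gamma * int_t^next e^{-gamma s} ds = e^{-gamma t} - e^{-gamma next}
        total += level * (math.exp(-gamma * t) - upper)
    return total

times = [0.4, 1.3, 2.0, 5.5]      # illustrative jump epochs (sorted)
sizes = [1.0, 1.0, 2.0, 1.0]      # illustrative jump sizes
gamma = 0.8
lhs = discounted_stieltjes(times, sizes, gamma)
rhs = discounted_level(times, sizes, gamma)
```

The two quantities agree up to floating-point error, which is the summation-by-parts form of the interchange of integrals.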

About this article

Cite this article

Xie, B. Multi-component matching queues in heavy traffic. Queueing Syst (2024). https://doi.org/10.1007/s11134-024-09907-0

DOI: https://doi.org/10.1007/s11134-024-09907-0

Keywords

- Matching queues

- Assemble-to-order systems

- Heavy-traffic approximations

- Scalar-valued processes

- Waiting time processes

- Coupled stochastic integral equations