Abstract

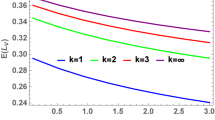

We consider optimal pricing for a two-station tandem queueing system with finite buffers, communication blocking, and price-sensitive customers whose arrivals form a homogeneous Poisson process. The service provider quotes prices to incoming customers using either a static or dynamic pricing scheme. There may also be a holding cost for each customer in the system. The objective is to maximize either the discounted profit over an infinite planning horizon or the long-run average profit of the provider. We show that there exists an optimal dynamic policy that exhibits a monotone structure, in which the quoted price is non-decreasing in the queue length at either station and is non-increasing if a customer moves from station 1 to 2, for both the discounted and long-run average problems under certain conditions on the holding costs. We then focus on the long-run average problem and show that the optimal static policy performs as well as the optimal dynamic policy when the buffer size at station 1 becomes large, there are no holding costs, and the arrival rate is either small or large. We learn from numerical results that for systems with small arrival rates and no holding cost, the optimal static policy produces a gain quite close to the optimal gain even when the buffer at station 1 is small. On the other hand, for systems with arrival rates that are not small, there are cases where the optimal dynamic policy performs much better than the optimal static policy.

References

Afèche, P., Ata, B.: Bayesian dynamic pricing in queueing systems with unknown delay cost characteristics. Manuf. Serv. Oper. Manag. 15(2), 292–304 (2013)

Aktaran-Kalayci, T., Ayhan, H.: Sensitivity of optimal prices to system parameters in a steady-state service facility. Eur. J. Oper. Res. 193(1), 120–128 (2009)

Andradóttir, S., Ayhan, H., Down, D.G.: Server assignment policies for maximizing the steady-state throughput of finite queueing systems. Manag. Sci. 47(10), 1421–1439 (2001)

Banerjee, A., Gupta, U.C.: Reducing congestion in bulk-service finite-buffer queueing system using batch-size-dependent service. Perform. Eval. 69(1), 53–70 (2012)

Çil, E.B., Karaesmen, F., Örmeci, E.L.: Dynamic pricing and scheduling in a multi-class single-server queueing system. Queueing Syst. Theory Appl. 67(4), 305–331 (2011)

Çil, E.B., Örmeci, E.L., Karaesmen, F.: Effects of system parameters on the optimal policy structure in a class of queueing control problems. Queueing Syst. Theory Appl. 61(4), 273–304 (2009)

Cheng, D.W., Yao, D.D.: Tandem queues with general blocking: a unified model and comparison results. Discrete Event Dyn. Syst. Theory Appl. 2(3–4), 207–234 (1993)

Ching, W., Choi, S., Li, T., Leung, I.K.C.: A tandem queueing system with applications to pricing strategy. J. Ind. Manag. Optim. 5(1), 103–114 (2009)

Ghoneim, H.A., Stidham, S.: Control of arrivals to two queues in series. Eur. J. Oper. Res. 21(3), 399–409 (1985)

Grassmann, W.K., Drekic, S.: An analytical solution for a tandem queue with blocking. Queueing Syst. 36(1–3), 221–235 (2000)

Hassin, R., Koshman, A.: Profit maximization in the M/M/1 queue. Oper. Res. Lett. 45(5), 436–441 (2017)

Haviv, M., Randhawa, R.S.: Pricing in queues without demand information. Manuf. Serv. Oper. Manag. 16(3), 401–411 (2014)

Low, D.W.: Optimal dynamic pricing policies for an \(M/M/s\) queue. Oper. Res. 22(3), 545–561 (1974)

Low, D.W.: Optimal pricing for an unbounded queue. IBM J. Res. Dev. 18(4), 290–302 (1974)

Maglaras, C.: Revenue management for a multiclass single-server queue via a fluid model analysis. Oper. Res. 54(5), 914–932 (2006)

Maoui, I., Ayhan, H., Foley, R.D.: Congestion-dependent pricing in a stochastic service system. Adv. Appl. Prob. 39(4), 898–921 (2007)

Maoui, I., Ayhan, H., Foley, R.D.: Optimal static pricing for a service facility with holding cost. Eur. J. Oper. Res. 197(3), 912–923 (2009)

Millhiser, W.P., Burnetas, A.N.: Optimal admission control in series production systems with blocking. IIE Trans. 45(10), 1035–1047 (2013)

Mitrani, I., Chakka, R.: Spectral expansion solution for a class of Markov models: application and comparison with the matrix-geometric method. Perform. Eval. 23(3), 241–260 (1995)

Naor, P.: The regulation of queue size by levying tolls. Econometrica 37(1), 15–24 (1969)

Neuts, M.F.: Matrix-Geometric Solutions in Stochastic Models: An Algorithmic Approach. Johns Hopkins Series in the Mathematical Sciences. Johns Hopkins University Press, Baltimore (1981)

Paschalidis, I.C., Tsitsiklis, J.N.: Congestion-dependent pricing of network services. IEEE/ACM Trans. Netw. 8(2), 171–184 (2000)

Puterman, M.L.: Markov Decision Processes. Wiley, New York (1994)

Sennott, L.I.: Stochastic Dynamic Programming and the Control of Queueing Systems. Wiley, New York (1999)

Sentosa Singapore: Promotions. http://www.rwsentosa.com/language/en-US/Homepage/Promotions/category/Attractions (2017). Accessed 9 Mar 2017

Shaked, M., Shanthikumar, J.G.: Stochastic Orders. Springer, New York (2007)

White, D.J.: Markov decision processes: discounted expected reward or average expected reward? J. Math. Anal. Appl. 172, 375–384 (1993)

Xia, L., Chen, S.: Dynamic pricing control for open queueing networks. IEEE Trans. Autom. Control 63(10), 3290–3300 (2018)

Yoon, S., Lewis, M.E.: Optimal pricing and admission control in a queueing system with periodically varying parameters. Queueing Syst. Theory Appl. 47(3), 177–199 (2004)

Ziya, S., Ayhan, H., Foley, R.D.: Optimal prices for finite capacity queueing systems. Oper. Res. Lett. 34(2), 214–218 (2006)

Ziya, S., Ayhan, H., Foley, R.D.: A note on optimal pricing for finite capacity queueing systems with multiple customer classes. Naval Res. Logist. 55(5), 412–418 (2008)

Appendices

Proofs for Sect. 5

In this section, we provide proofs for results related to optimal dynamic pricing policies in Sect. 5.

1.1 Proofs for Sects. 5.1 and 5.2

This section consists of detailed proofs for the results in Sects. 5.1 and 5.2.

1.1.1 Proof of Lemma 1

(1)

Let \(v_1, v_2\in V\) and \(\alpha _1,\alpha _2\ge 0\). Since \({\mathcal {V}}\) is a vector space, \(\alpha _1v_1+\alpha _2v_2\in {\mathcal {V}}\). It follows that \(\alpha _{j}v_{j}\) satisfies (9), (10), (11), and (12), and the inequalities \({\mathcal {M}}\)(1), (2), and (3) for \(j=1,2\). Summing these inequalities over \(j=1,2\) gives that \(\alpha _1v_1+\alpha _2v_2\in V\), which implies V is a convex cone. Let \(\{v^n\}_{n=1}^{\infty } \subset V\) be a sequence of functions. To show that V is closed, it suffices to show that if there exists some v such that \(\Vert v^n-v\Vert \rightarrow 0\) as \(n\rightarrow \infty \), then \(v\in V\). Since \(v^n\in V\) for each n, we have that \(v^n\) satisfies (9), (10), (11), and (12) and the inequalities \({\mathcal {M}}\)(1), (2), and (3) for all n. Since \(v^n\) converges to v uniformly as \(n\rightarrow \infty \), \(v^n(s)\) converges to v(s) as \(n\rightarrow \infty \) for each \(s\in S\). Taking the limit on both sides of the above inequalities as \(n\rightarrow \infty \) gives that v satisfies each of (9), (10), (11), and (12) and the inequalities \({\mathcal {M}}\)(1), (2) and (3). This implies that V is closed.

(2)

Consider any \(v\in {\mathcal {V}}\). Each of (9), (10), (11), and (12) holds with equality. It also follows that

$$\begin{aligned} v(s_1,s_2)-v(s_1+1,s_2)= & {} c_1 \ge 0,\\ v(s_1,s_2)-v(s_1,s_2+1)= & {} c_2 \ge 0,\\ v(s_1+1,s_2) - v(s_1,s_2+1)= & {} c_2-c_1 \le 0, \end{aligned}$$where the last inequality holds since \(c_1\ge c_2\ge 0\). Thus, \(v\in V\).

(3)

If \(v\in {\mathcal {V}}\) is a constant function, all the inequalities for (9), (10), (11), and (12) and the inequalities \({\mathcal {M}}\)(1), (2), and (3) hold as equalities. Thus, \(v\in V\). \(\square \)
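The membership conditions for V can be checked numerically on a small grid. The following is a minimal sketch, not part of the original analysis. The helper name `in_V`, the index ranges, and the tolerance are illustrative assumptions. The inequalities (9)–(12) and \({\mathcal {M}}\)(1)–(3) are written in the forms in which they are used in the proofs of this appendix.

```python
def in_V(v, B1, B2, tol=1e-12):
    """Return True if v satisfies (9)-(12) and M(1)-(3) on the grid
    0 <= s1 <= B1+1, 0 <= s2 <= B2+1 (index ranges are assumptions)."""

    def d1(s1, s2):  # drop in v when station 1 gains one customer
        return v(s1, s2) - v(s1 + 1, s2)

    def d2(s1, s2):  # drop in v when station 2 gains one customer
        return v(s1, s2) - v(s1, s2 + 1)

    for s1 in range(B1 + 2):
        for s2 in range(B2 + 2):
            if s1 <= B1 and s2 <= B2:
                if d1(s1, s2) > d1(s1, s2 + 1) + tol:       # (9) submodularity
                    return False
                if v(s1 + 1, s2) > v(s1, s2 + 1) + tol:     # M(3)
                    return False
            if s1 <= B1 - 1 and s2 <= B2:
                if d1(s1, s2 + 1) > d1(s1 + 1, s2) + tol:   # (10) subconcavity
                    return False
            if s1 <= B1 - 1 and d1(s1, s2) > d1(s1 + 1, s2) + tol:  # (11)
                return False
            if s2 <= B2 - 1 and d2(s1, s2) > d2(s1, s2 + 1) + tol:  # (12)
                return False
            if s1 <= B1 and d1(s1, s2) < -tol:              # M(1)
                return False
            if s2 <= B2 and d2(s1, s2) < -tol:              # M(2)
                return False
    return True
```

For example, with hypothetical coefficients \(c_1=2\) and \(c_2=1\), the call `in_V(lambda s1, s2: -(2.0 * s1 + 1.0 * s2), 3, 2)` returns `True`, as does any constant function. Swapping the coefficients so that \(c_1<c_2\) violates \({\mathcal {M}}\)(3), and the check returns `False`.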

1.1.2 Proof of Lemma 2

Consider any \(v\in V\). By Lemma 1(1) and (2), it suffices to show that \(f_0\) and \(\mu _{1}f_1+\mu _{2}f_2\in V\).

(i)

We first show the submodularity of Tv. Choose any \(s=(s_1,s_2)\in S\) such that \(0\le s_i\le B_i\) for \(i=1,2\). Let \(a_1, a_2, a_3, a_4\in A\) be optimal actions that solve (13) for states \((s_1,s_2)\), \((s_1,s_2+1)\), \((s_1+1,s_2)\), and \((s_1+1,s_2+1)\), respectively. For \(s_1\le B_1-1\), we have that

$$\begin{aligned} f_0(s_1,s_2)-f_0(s_1+1,s_2)= & {} a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2) \\&+\, \beta \lambda (1-p(a_1))v(s)- \,[a_3\lambda (a_3)\\&+\,\beta \lambda p(a_3)v(s_1+2,s_2)\\&+\,\beta \lambda (1-p(a_3))v(s_1+1,s_2)]\\\le & {} \, a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2)\\&+\, \beta \lambda (1-p(a_1))v(s)-\, [a_4\lambda (a_4)\\&+\,\beta \lambda p(a_4)v(s_1+2,s_2)\\&+\,\beta \lambda (1-p(a_4))v(s_1+1,s_2)]\\= & {} a_1\lambda (a_1)-\beta \lambda (1-p(a_1))v(s_1+1,s_2)\\&+\,\beta \lambda (1-p(a_1))v(s)-\,[a_4\lambda (a_4)\\&+\,\beta \lambda p(a_4)v(s_1+2,s_2)-\,\beta \lambda p(a_4)v(s_1+1,s_2)]\\= & {} a_1\lambda (a_1)-a_4\lambda (a_4)+\beta \lambda (1-p(a_1))[v(s)\\&-\,v(s_1+1,s_2)] +\,\beta \lambda p(a_4)[v(s_1+1,s_2)\\&-\,v(s_1+2,s_2)]. \end{aligned}$$We also have

$$\begin{aligned}&f_0(s_1,s_2+1)-f_0(s_1+1,s_2+1) \\&\quad =a_2\lambda (a_2)+\beta \lambda p(a_2)v(s_1+1,s_2+1)+\beta \lambda (1-p(a_2))v(s_1,s_2+1)\\&\qquad -\,[a_4\lambda (a_4)+\beta \lambda p(a_4)v(s_1+2,s_2+1)+\beta \lambda (1-p(a_4))v(s_1+1,s_2+1)]\\&\quad \ge \,a_1\lambda (a_1)+\beta \lambda p(a_1)v(s_1+1,s_2+1)+\beta \lambda (1-p(a_1))v(s_1,s_2+1)\\&\qquad -\,[a_4\lambda (a_4)+\beta \lambda p(a_4)v(s_1+2,s_2+1)+\beta \lambda (1-p(a_4))v(s_1+1,s_2+1)]\\&\quad =a_1\lambda (a_1)-\beta \lambda (1- p(a_1))v(s_1+1,s_2+1)+\beta \lambda (1-p(a_1))v(s_1,s_2+1)\\&\qquad -\,[a_4\lambda (a_4)+\beta \lambda p(a_4)v(s_1+2,s_2+1)-\beta \lambda p(a_4)v(s_1+1,s_2+1)]\\&\quad =a_1\lambda (a_1)-a_4\lambda (a_4)+\beta \lambda (1-p(a_1))[v(s_1,s_2+1)-v(s_1+1,s_2+1)]\\&\qquad +\,\beta \lambda p(a_4)[v(s_1+1,s_2+1)-v(s_1+2,s_2+1)]. \end{aligned}$$For \(s_1=B_1\), we have that

$$\begin{aligned} f_0(s_1,s_2)-f_0(s_1+1,s_2)= & {} a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2) \\&+\, \beta \lambda (1-p(a_1))v(s)-\,\beta \lambda v(s_1+1,s_2)\\= & {} a_1\lambda (a_1)-\beta \lambda (1-p(a_1)) v(s_1+1,s_2)\\&+\, \beta \lambda (1-p(a_1))v(s)\\= & {} a_1\lambda (a_1) +\beta \lambda (1-p(a_1))(v(s)\\&-\,v(s_1+1,s_2)). \end{aligned}$$Furthermore, for \(s_1=B_1\), it also holds that

$$\begin{aligned}&f_0(s_1,s_2+1)-f_0(s_1+1,s_2+1) \\&\quad = a_2\lambda (a_2)+\beta \lambda p(a_2)v(s_1+1,s_2+1)+\beta \lambda (1-p(a_2))v(s_1,s_2+1)\\&\qquad -\,\beta \lambda v(s_1+1,s_2+1)\\&\quad = a_2\lambda (a_2)-\beta \lambda (1- p(a_2))v(s_1+1,s_2+1)+\beta \lambda (1-p(a_2))v(s_1,s_2+1)\\&\quad \ge \,a_1\lambda (a_1)-\beta \lambda (1- p(a_1))v(s_1+1,s_2+1)+\beta \lambda (1-p(a_1))v(s_1,s_2+1)\\&\quad = a_1\lambda (a_1)+\beta \lambda (1- p(a_1))(v(s_1,s_2+1)-v(s_1+1,s_2+1)). \end{aligned}$$Since v is submodular, we have \(f_0\) is submodular. We then show that \(f_1\) is submodular.

(a)

If \(s_{1}=0\) and \(s_2=B_2\), we have that

$$\begin{aligned} f_{1}(s) - f_{1}(s_1+1,s_2)= & {} v(s_1,s_2) - v(s_1,s_2+1)\\\le & {} v(s_1+1,s_2) - v(s_1+1,s_2+1)\\\le & {} v(s_1,s_2+1) - v(s_1+1,s_2+1)\\= & {} f_{1}(s_1,s_2+1) - f_{1}(s_1+1,s_2+1), \end{aligned}$$where the first inequality follows from (9) and the second inequality follows from the fact that v has property \({\mathcal {M}}\)(3).

(b)

If \(s_{1}=0\) and \(s_2\le B_2-1\), we have that

$$\begin{aligned} f_{1}(s) - f_{1}(s_1+1,s_2)= & {} v(s_1,s_2) - v(s_1,s_2+1) \\\le & {} v(s_1,s_2+1) - v(s_1,s_2+2)\\= & {} f_{1}(s_1,s_2+1) - f_{1}(s_1+1,s_2+1), \end{aligned}$$where the inequality follows from (12).

(c)

If \(s_{1}\ge 1\) and \(s_2\le B_2-1\), we have that

$$\begin{aligned} f_{1}(s) - f_{1}(s_1+1,s_2)= & {} v(s_1-1,s_2+1) - v(s_1,s_2+1) \\\le & {} v(s_1-1,s_2+2) - v(s_1,s_2+2)\\= & {} f_{1}(s_1,s_2+1) - f_{1}(s_1+1,s_2+1), \end{aligned}$$where the inequality follows from (9).

(d)

If \(s_{1}\ge 1\) and \(s_2= B_2\), we have that

$$\begin{aligned} f_{1}(s) - f_{1}(s_1+1,s_2)= & {} v(s_1-1,s_2+1) - v(s_1,s_2+1) \\\le & {} v(s_1,s_2+1) - v(s_1+1,s_2+1)\\= & {} f_{1}(s_1,s_2+1) - f_{1}(s_1+1,s_2+1), \end{aligned}$$where the inequality follows from (11).

We next show that \(f_2\) is submodular.

(a)

If \(s_{2}=0\), we have that

$$\begin{aligned} f_{2}(s) - f_{2}(s_1+1,s_2)= & {} v(s_1,s_2) - v(s_1+1,s_2) \\= & {} f_{2}(s_1,s_2+1) - f_{2}(s_1+1,s_2+1). \end{aligned}$$

(b)

If \(s_{2}\ge 1\), we have that

$$\begin{aligned} f_{2}(s) - f_{2}(s_1+1,s_2)= & {} v(s_1,s_2-1) - v(s_1+1,s_2-1) \\\le & {} v(s_1,s_2) - v(s_1+1,s_2)\\= & {} f_{2}(s_1,s_2+1) - f_{2}(s_1+1,s_2+1), \end{aligned}$$where the inequality follows from (9).

By Lemma 1(1) and (2), Tv is submodular.

(ii)

We now show that T preserves subconcavity. Choose any \(s=(s_1,s_2)\in S\) such that \(0\le s_1\le B_1-1\) and \(0\le s_2\le B_2\). Let \(a_1, a_2, a_3, a_4\in A\) be optimal actions that solve (13) for states \((s_1,s_2+1)\), \((s_1+1,s_2)\), \((s_1+1,s_2+1)\), and \((s_1+2,s_2)\), respectively. By a similar argument as in (i), we have that \(f_0\) satisfies (10) for \(s_1\le B_1-2\) by replacing states \((s_1,s_2)\), \((s_1+1,s_2)\), \((s_1,s_2+1)\), and \((s_1+1,s_2+1)\) by \((s_1,s_2+1)\), \((s_1+1,s_2+1)\), \((s_1+1,s_2)\), and \((s_1+2,s_2)\), respectively. For \(s_1=B_1-1\), it follows that

$$\begin{aligned}&f_0(s_1,s_2+1)- f_0(s_1+1,s_2+1) \\&\quad = a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2+1) + \beta \lambda (1-p(a_1))v(s_1,s_2+1)\\&\qquad - \,[a_3\lambda (a_3)+\beta \lambda p(a_3) v(s_1+2,s_2+1) + \beta \lambda (1-p(a_3))v(s_1+1,s_2+1)]\\&\quad \le \, a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2+1) + \beta \lambda (1-p(a_1))v(s_1,s_2+1)\\&\qquad -\, [a_K\lambda (a_K)+\beta \lambda p(a_K) v(s_1+2,s_2+1)\\&\qquad +\, \beta \lambda (1-p(a_K))v(s_1+1,s_2+1)]\\&\quad = a_1\lambda (a_1)+\beta \lambda (1-p(a_1))[v(s_1,s_2+1)-v(s_1+1,s_2+1)], \end{aligned}$$where the last equality follows from \(p(a_K)=0\), and

$$\begin{aligned}&f_0(s_1+1,s_2)-f_0(s_1+2,s_2) \\&\quad = a_2\lambda (a_2)+\beta \lambda p(a_2)v(s_1+2,s_2)\\&\qquad +\,\beta \lambda (1-p(a_2))v(s_1+1,s_2)-\beta \lambda v(s_1+2,s_2)\\&\quad \ge a_1\lambda (a_1)-\beta \lambda (1- p(a_1))v(s_1+2,s_2)+\beta \lambda (1-p(a_1))v(s_1+1,s_2)\\&\quad = a_1\lambda (a_1)+\beta \lambda (1- p(a_1))[v(s_1+1,s_2)-v(s_1+2,s_2)]. \end{aligned}$$Therefore, the subconcavity of v implies that \(f_0\) is subconcave. We then show \(f_1\) is subconcave.

(a)

If \(0\le s_1\le B_1-1\) and \(s_2=B_2\), it follows that

$$\begin{aligned} f_1(s_1,s_2+1)- f_1(s_1+1,s_2+1)= & {} v(s_1,s_2+1) - v(s_1+1,s_2+1) \\= & {} f_1(s_1+1,s_2)-f_1(s_1+2,s_2). \end{aligned}$$

(b)

If \(s_1=0\) and \(0\le s_2 \le B_2-1\), it follows that

$$\begin{aligned} f_1(s_1,s_2+1)- f_1(s_1+1,s_2+1)\le & {} f_1(s_1+1,s_2)-f_1(s_1+2,s_2)\\ \Leftrightarrow v(s_1,s_2+1) - v(s_1,s_2+2)\le & {} v(s_1,s_2+1) - v(s_1+1,s_2+1)\\ \Leftrightarrow v(s_1,s_2+2)\ge & {} v(s_1+1,s_2+1), \end{aligned}$$where the last inequality holds due to property \({\mathcal {M}}\)(3).

(c)

If \(1\le s_1\le B_1-1\) and \(0\le s_2 \le B_2-1\), it follows that

$$\begin{aligned} f_1(s_1,s_2+1)- f_1(s_1+1,s_2+1)\le & {} f_1(s_1+1,s_2)-f_1(s_1+2,s_2)\\ \Leftrightarrow v(s_1-1,s_2+2) - v(s_1,s_2+2)\le & {} v(s_1,s_2+1) - v(s_1+1,s_2+1), \end{aligned}$$where the last inequality holds since v is subconcave.

Thus, \(f_1\) is subconcave. We next show that \(f_2\) is subconcave.

(a)

If \(s_{2}=0\), it follows that

$$\begin{aligned} f_2(s_1,s_2+1)- f_2(s_1+1,s_2+1)= & {} v(s_1,s_2) - v(s_1+1,s_2) \\\le & {} v(s_1+1,s_2) - v(s_1+2,s_2)\\= & {} f_2(s_1+1,s_2)-f_2(s_1+2,s_2), \end{aligned}$$where the inequality holds since v satisfies (11).

(b)

If \(s_{2}\ge 1\), it follows that

$$\begin{aligned} f_2(s_1,s_2+1) - f_2(s_1+1,s_2+1)\le & {} f_2(s_1+1,s_2)-f_2(s_1+2,s_2)\\ \Leftrightarrow v(s_1,s_2) - v(s_1+1,s_2)\le & {} v(s_1+1,s_2-1) - v(s_1+2,s_2-1), \end{aligned}$$where the last inequality holds since v is subconcave.

Thus, parts (1) and (2) of Lemma 1 imply that Tv is subconcave.

(iii)

We now show that T preserves concavity in the first argument. For \(0\le s_1\le B_1-1\) and \(0\le s_2\le B_2\), we have from (i) and (ii) that Tv satisfies (9) and (10), i.e.,

$$\begin{aligned} Tv(s_1,s_2)-Tv(s_1+1,s_2)\le & {} Tv(s_1,s_2+1)-Tv(s_1+1,s_2+1),\\ Tv(s_1,s_2+1)- Tv(s_1+1,s_2+1)\le & {} Tv(s_1+1,s_2) - Tv(s_1+2,s_2), \end{aligned}$$which yield

$$\begin{aligned} Tv(s_1,s_2)-Tv(s_1+1,s_2) \le Tv(s_1+1,s_2) - Tv(s_1+2,s_2). \end{aligned}$$Therefore, it suffices to consider \(0 \le s_{1} \le B_{1}-1\) and \(s_{2}=B_{2}+1\). By a similar argument as in (ii), \(f_0\) satisfies (11) at \(s_2= B_2+1\), too. We then show that \(f_1\) and \(f_{2}\) are concave in the first argument at \(s_2=B_2+1\). It follows that, for \(0\le s_1\le B_1-1\) and \(s_2=B_2+1\),

$$\begin{aligned} f_1(s_1,s_2)-f_1(s_1+1,s_2)= & {} v(s_1,s_2)-v(s_1+1,s_2)\\\le & {} v(s_1+1,s_2) - v(s_1+2,s_2)\\= & {} f_1(s_1+1,s_2)-f_1(s_1+2,s_2), \end{aligned}$$where the inequality holds since v is concave in the first argument. It also follows that

$$\begin{aligned} f_2(s_1,s_2)-f_2(s_1+1,s_2)= & {} v(s_1,s_2-1)-v(s_1+1,s_2-1)\\\le & {} v(s_1+1,s_2-1) - v(s_1+2,s_2-1)\\= & {} f_2(s_1+1,s_2)-f_2(s_1+2,s_2), \end{aligned}$$where the inequality follows from (11). Thus, it follows from Lemma 1(1) and (2) that Tv is concave in the first argument.

(iv)

We now show the concavity of Tv in the second argument. Choose \(s\in S\) such that \(0\le s_1\le B_1+1\) and \(0\le s_{2}\le B_{2}-1\). We first show that \(f_0\) satisfies (12).

(a)

For \(s_1=B_1+1\), it follows that

$$\begin{aligned} f_0(s_1,s_2)- f_0(s_1,s_2+1)&= \beta \lambda v(s_1,s_2) - \beta \lambda v(s_1,s_2+1) \\&\le \beta \lambda v(s_1,s_2+1) - \beta \lambda v(s_1,s_2+2)\\&= f_0(s_1,s_2+1)- f_0(s_1,s_2+2), \end{aligned}$$where the inequality holds since v satisfies (12).

(b)

For \(s_1\le B_1\), \(f_0\) satisfies (12) by a similar argument as in (i) since v is concave in the second argument.

We then show that \(f_1\) satisfies (12).

(a)

If \(s_1=0\), it follows that

$$\begin{aligned} f_1(s_1,s_2)- f_1(s_1,s_2+1)= & {} v(s_1,s_2) - v(s_1,s_2+1)\\\le & {} v(s_1,s_2+1) - v(s_1,s_2+2) \\= & {} f_1(s_1,s_2+1)- f_1(s_1,s_2+2), \end{aligned}$$where the inequality follows since v satisfies (12).

(b)

If \(1\le s_1 \le B_1\) and \(s_2=B_2-1\), it follows that

$$\begin{aligned} f_1(s_1,s_2)- f_1(s_1,s_2+1)= & {} v(s_1-1,s_2+1) - v(s_1-1,s_2+2)\\\le & {} v(s_1,s_2+1) - v(s_1,s_2+2)\\\le & {} v(s_1-1,s_2+2) - v(s_1,s_2+2) \\= & {} f_1(s_1,s_2+1)- f_1(s_1,s_2+2), \end{aligned}$$where the first inequality holds since v satisfies (9) and the second inequality follows from property \({\mathcal {M}}\)(3).

(c)

If \(1\le s_1 \le B_1\) and \(s_2\le B_2-2\),

$$\begin{aligned} f_1(s_1,s_2)- f_1(s_1,s_2+1)= & {} v(s_1-1,s_2+1) - v(s_1-1,s_2+2)\\\le & {} v(s_1-1,s_2+2) - v(s_1-1,s_2+3) \\= & {} f_1(s_1,s_2+1)- f_1(s_1,s_2+2), \end{aligned}$$where the inequality follows since v satisfies (12).

We next show that \(f_2\) is concave in the second argument.

(a)

If \(s_2=0\), it follows that

$$\begin{aligned} f_2(s_1,s_2)- f_2(s_1,s_2+1)= & {} v(s_1,s_2) - v(s_1,s_2)\\\le & {} v(s_1,s_2) - v(s_1,s_2+1) \\= & {} f_2(s_1,s_2+1)- f_2(s_1,s_2+2), \end{aligned}$$where the inequality follows from property \({\mathcal {M}}\)(2).

(b)

If \(s_2\ge 1\), it follows that

$$\begin{aligned} f_2(s_1,s_2)- f_2(s_1,s_2+1)= & {} v(s_1,s_2-1) - v(s_1,s_2)\\\le & {} v(s_1,s_2) - v(s_1,s_2+1) \\= & {} f_2(s_1,s_2+1)- f_2(s_1,s_2+2), \end{aligned}$$where the inequality holds since v satisfies (12). It now follows from Lemma 1(1) and (2) that Tv is concave in the second argument.

(v)

Next, we show that Tv has property \({\mathcal {M}}\)(1). Choose any \(s\in S\) such that \(0\le s_1\le B_1\) and \(0\le s_2\le B_2+1\). Let \(a_1,a_2\in A\) be the optimal solutions that solve (13) for states \((s_1,s_2)\) and \((s_1+1,s_2)\), respectively. For \(s_1=B_1\), we have that

$$\begin{aligned} f_0(s_1,s_2) - f_0(s_1+1,s_2)= & {} a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2) \\&+\, \beta \lambda (1-p(a_1))v(s_1,s_2)-\, \beta \lambda v(s_1+1,s_2)\\= & {} a_1\lambda (a_1) + \beta \lambda (1-p(a_1))(v(s_1,s_2)-v(s_1+1,s_2))\\\ge & {} 0, \end{aligned}$$where the last inequality holds since v satisfies \({\mathcal {M}}\)(1). For \(s_1\le B_1-1\), it follows that

$$\begin{aligned} f_0(s_1,s_2) - f_0(s_1+1,s_2)= & {} a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2)\\&+\, \beta \lambda (1-p(a_1))v(s_1,s_2)\\&-\, [a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+2,s_2)\\&+\, \beta \lambda (1-p(a_2))v(s_1+1,s_2)]\\\ge & {} a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+1,s_2)\\&+\, \beta \lambda (1-p(a_2))v(s_1,s_2)\\&-\, [a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+2,s_2)\\&+\, \beta \lambda (1-p(a_2))v(s_1+1,s_2)]\\\ge & {} 0, \end{aligned}$$where the last inequality follows from the fact that v satisfies \({\mathcal {M}}\)(1). Thus, \(f_0\) satisfies \({\mathcal {M}}\)(1). We now show that \(\mu _1f_1+\mu _2f_2\) satisfies \({\mathcal {M}}\)(1).

(a)

If \(s_1=0\) and \(s_2=0\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1,s_2) + \mu _2v(s_1,s_2) \\\ge & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1+1,s_2)\\= & {} \mu _1 f_1(s_1+1,s_2) + \mu _2 f_2(s_1+1,s_2), \end{aligned}$$since v satisfies properties \({\mathcal {M}}\)(1) and \({\mathcal {M}}\)(2).

(b)

If \(s_1=0\) and \(1\le s_2\le B_2+1\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1,s_2) + \mu _2 v(s_1,s_2-1) \\\ge & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1+1,s_2-1)\\= & {} \mu _1 f_1(s_1+1,s_2) + \mu _2 f_2(s_1+1,s_2), \end{aligned}$$where the inequality holds since v has properties \({\mathcal {M}}\)(1) and \({\mathcal {M}}\)(2).

(c)

If \(1\le s_1\le B_1\) and \(s_2=0\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1-1,s_2+1) + \mu _2 v(s_1,s_2) \\\ge & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1+1,s_2)\\= & {} \mu _1 f_1(s_1+1,s_2) + \mu _2 f_2(s_1+1,s_2), \end{aligned}$$where the inequality holds since v has property \({\mathcal {M}}\)(1).

(d)

If \(1\le s_1\le B_1\) and \(1\le s_2\le B_2\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1-1,s_2+1) + \mu _2 v(s_1,s_2-1) \\\ge & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1+1,s_2-1)\\= & {} \mu _1 f_1(s_1+1,s_2) + \mu _2 f_2(s_1+1,s_2), \end{aligned}$$where the inequality holds since v has property \({\mathcal {M}}\)(1).

(e)

If \(s_2 = B_2+1\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1,s_2) + \mu _2 v(s_1,s_2-1) \\\ge & {} \mu _1 v(s_1+1,s_2) + \mu _2 v(s_1+1,s_2-1)\\= & {} \mu _1 f_1(s_1+1,s_2) + \mu _2 f_2(s_1+1,s_2), \end{aligned}$$where the inequality holds since v has property \({\mathcal {M}}\)(1).

Thus, Tv has property \({\mathcal {M}}\)(1) by Lemma 1(1) and (2).

(vi)

We next show that Tv has property \({\mathcal {M}}\)(2). Choose any \(s\in S\) such that \(0\le s_1\le B_1+1\) and \(0\le s_2\le B_2\). Let \(a_1,a_2\in A\) be the optimal solutions that solve (13) for states \((s_1,s_2)\) and \((s_1,s_2+1)\). For \(s_1=B_1+1\), we have that

$$\begin{aligned} f_0(s_1,s_2) - f_0(s_1,s_2+1)= & {} \beta \lambda v(s_1,s_2) - \beta \lambda v(s_1,s_2+1) \ge 0, \end{aligned}$$where the inequality holds since v satisfies \({\mathcal {M}}\)(2). For \(s_1\le B_1\), it follows that

$$\begin{aligned} f_0(s_1,s_2) - f_0(s_1,s_2+1)= & {} a_1\lambda (a_1)\\&+\,\beta \lambda p(a_1) v(s_1+1,s_2) + \beta \lambda (1-p(a_1))v(s_1,s_2)\\&-\, [a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+1,s_2+1) \\&+\, \beta \lambda (1-p(a_2))v(s_1,s_2+1)]\\\ge & {} a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+1,s_2)\\&+\, \beta \lambda (1-p(a_2))v(s_1,s_2)\\&-\, [a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+1,s_2+1) \\&+\, \beta \lambda (1-p(a_2))v(s_1,s_2+1)]\\\ge & {} 0, \end{aligned}$$where the first inequality follows from the fact that \(a_1\) is optimal for \((s_1,s_2)\) and the second inequality holds because v satisfies \({\mathcal {M}}\)(2). Thus, \(f_0\) satisfies \({\mathcal {M}}\)(2). We now show that \(\mu _1f_1+\mu _2f_2\) satisfies \({\mathcal {M}}\)(2).

(a)

If \(s_1=0\) and \(s_2=0\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1,s_2) + \mu _2v(s_1,s_2) \\\ge & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v satisfies property \({\mathcal {M}}\)(2).

(b)

If \(s_1=0\) and \(1\le s_2\le B_2\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1,s_2) + \mu _2 v(s_1,s_2-1) \\\ge & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v has property \({\mathcal {M}}\)(2).

(c)

If \(1\le s_1\le B_1+1\) and \(s_2=0\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1-1,s_2+1) + \mu _2 v(s_1,s_2) \\\ge & {} \mu _1 v(s_1-1,s_2+2) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v has property \({\mathcal {M}}\)(2).

(d)

If \(1\le s_1\le B_1+1\) and \(s_2= B_2 \ne 0\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1-1,s_2+1) + \mu _2 v(s_1,s_2-1) \\\ge & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$where the inequality holds since v has properties \({\mathcal {M}}\)(1) and \({\mathcal {M}}\)(2).

(e)

If \(1\le s_1\le B_1+1\) and \(1\le s_2 \le B_2-1\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1-1,s_2+1) + \mu _2 v(s_1,s_2-1) \\\ge & {} \mu _1 v(s_1-1,s_2+2) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v has property \({\mathcal {M}}\)(2).

(f)

If \(1\le s_1 \le B_1\) and \(s_2 = 0 = B_{2}\), it follows that

$$\begin{aligned} \mu _1f_1(s_1,s_2) + \mu _2f_2(s_1,s_2)= & {} \mu _1 v(s_1-1,s_2+1) +\mu _2v(s_{1},s_{2}) \\\ge & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v satisfies property \({\mathcal {M}}\)(1).

Thus, Tv has property \({\mathcal {M}}\)(2); see Lemma 1.

(vii)

Next, we show that Tv has property \({\mathcal {M}}\)(3). Choose any \(s\in S\) such that \(0\le s_1\le B_1\) and \(0\le s_2\le B_2\). Let \(a_1,a_2\in A\) be the optimal solutions that solve (13) for states \((s_1+1,s_2)\) and \((s_1,s_2+1)\). For \(s_1=B_1\), it follows that

$$\begin{aligned} f_0(s_1+1,s_2) - f_0(s_1,s_2+1)= & {} \beta \lambda v(s_1+1,s_2)\\&-\, [a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+1,s_2+1) \\&+\, \beta \lambda (1-p(a_2))v(s_1,s_2+1)]\\\le & {} \beta \lambda v(s_1+1,s_2) \\&-\, [a_K\lambda (a_K)+\beta \lambda p(a_K) v(s_1+1,s_2+1)\\&+\, \beta \lambda (1-p(a_K))v(s_1,s_2+1)]\\= & {} \beta \lambda [v(s_1+1,s_2)-v(s_1,s_2+1)] \\\le & {} 0, \end{aligned}$$where the last equality holds because \(p(a_{K})=0\) and the last inequality holds since v satisfies \({\mathcal {M}}\)(3). For \(s_1\le B_1-1\), it follows that

$$\begin{aligned}&f_0(s_1+1,s_2) - f_0(s_1,s_2+1) \\&\quad = a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+2,s_2) + \beta \lambda (1-p(a_1))v(s_1+1,s_2)\\&\qquad - \, [a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+1,s_2+1) + \beta \lambda (1-p(a_2))v(s_1,s_2+1)]\\&\quad \le a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+2,s_2) + \beta \lambda (1-p(a_1))v(s_1+1,s_2)\\&\qquad -\, [a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2+1) + \beta \lambda (1-p(a_1))v(s_1,s_2+1)]\\&\quad \le 0, \end{aligned}$$where the first inequality follows from the fact that \(a_2\) is optimal for \((s_1,s_2+1)\) and the second inequality holds because v satisfies \({\mathcal {M}}\)(3). Thus, \(f_0\) has \({\mathcal {M}}\)(3). We now show that \(\mu _1f_1+\mu _2f_2\) has \({\mathcal {M}}\)(3).

(a)

If \(s_1=0\) and \(s_2 = 0\), it follows that

$$\begin{aligned} \mu _1f_1(s_1+1,s_2) + \mu _2f_2(s_1+1,s_2)= & {} \mu _1 v(s_1,s_2+1) + \mu _2v(s_1+1,s_2) \\\le & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v satisfies property \({\mathcal {M}}\)(1).

(b)

If \(s_1=0\) and \(1\le s_2\le B_2\), it follows that

$$\begin{aligned} \mu _1f_1(s_1+1,s_2) + \mu _2f_2(s_1+1,s_2)= & {} \mu _1 v(s_1,s_2+1) + \mu _2v(s_1+1,s_2-1) \\\le & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v satisfies property \({\mathcal {M}}\)(3).

(c)

If \(1\le s_1 \le B_1\) and \(s_2 = 0 \ne B_{2}\), it follows that

$$\begin{aligned} \mu _1f_1(s_1+1,s_2) + \mu _2f_2(s_1+1,s_2)= & {} \mu _1 v(s_1,s_2+1) + \mu _2v(s_1+1,s_2) \\\le & {} \mu _1 v(s_1-1,s_2+2) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v satisfies properties \({\mathcal {M}}\)(1) and \({\mathcal {M}}\)(3).

(d)

If \(1\le s_1\le B_1\) and \(1\le s_2 \le B_2-1\), it follows that

$$\begin{aligned} \mu _1f_1(s_1+1,s_2) + \mu _2f_2(s_1+1,s_2)= & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1+1,s_2-1) \\\le & {} \mu _1 v(s_1-1,s_2+2) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v satisfies property \({\mathcal {M}}\)(3).

(e)

If \(1\le s_1\le B_1\) and \( s_2 = B_2 \ne 0\), it follows that

$$\begin{aligned} \mu _1f_1(s_1+1,s_2) + \mu _2f_2(s_1+1,s_2)= & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1+1,s_2-1) \\\le & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v satisfies property \({\mathcal {M}}\)(3).

(f)

If \(1\le s_1 \le B_1\) and \(s_2 = 0 = B_{2}\), it follows that

$$\begin{aligned} \mu _1f_1(s_1+1,s_2) + \mu _2f_2(s_1+1,s_2)= & {} \mu _1 v(s_1,s_2+1) + \mu _2v(s_1+1,s_2) \\\le & {} \mu _1 v(s_1,s_2+1) + \mu _2 v(s_1,s_2)\\= & {} \mu _1 f_1(s_1,s_2+1) + \mu _2 f_2(s_1,s_2+1), \end{aligned}$$since v satisfies property \({\mathcal {M}}\)(1).

Thus, Tv has property \({\mathcal {M}}\)(3) by Lemma 1(1) and (2), and this completes the proof. \(\square \)

1.1.3 Proof of Lemma 3

Let \(v\in {\mathcal {V}}\) be the unique value function that satisfies the optimality equation (7). We use value iteration to show the result. Let \(v^0\in {\mathcal {V}}\) be such that \(v^0(s)=0\) for all \(s\in S\). By Lemma 1(3), \(v^0\in V\). Assume that \(v^n\in V\) for some \(n \ge 0\). We define

$$\begin{aligned} v^{n+1} = Tv^{n}. \end{aligned}$$

By Lemma 2, \(v^{n+1}\in V\). Thus, by induction, \(v^n\in V\) for all \(n\ge 0\). Since \({\mathcal {V}}\) is a Banach space and \(T:{\mathcal {V}}\mapsto {\mathcal {V}}\) is a contraction mapping with modulus \(\beta \in (0,1)\) by Proposition 6.2.4 in [23], it follows from the Banach fixed-point theorem [23, Theorem 6.2.3] that \(\Vert v^n-v\Vert \rightarrow 0\) as \(n\rightarrow +\infty \), where v is the unique solution to (7). Since V is closed by Lemma 1(1) and \(\{v^n\}_{n=0}^{\infty }\subset V\) converges to v in (uniform) norm, it follows that \(v\in V\). \(\square \)
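The value iteration argument can be illustrated numerically. The sketch below is an illustration under stated assumptions rather than the authors' implementation. It assumes a uniformized chain with \(\lambda +\mu _1+\mu _2=1\), no holding costs, and an operator of the form \(Tv=f_0+\beta (\mu _1f_1+\mu _2f_2)\), with \(f_0\), \(f_1\), and \(f_2\) read off from the expressions used in the proof of Lemma 2. The function name `value_iteration`, the finite price set, and the acceptance probability passed to it are hypothetical.

```python
import itertools


def value_iteration(B1, B2, lam, mu1, mu2, beta, prices, accept_prob, n_iter=300):
    """Iterate v^{n+1} = T v^n from v^0 = 0 on 0..B1+1 x 0..B2+1.

    Assumed operator: T v = f0 + beta * (mu1 * f1 + mu2 * f2),
    with lam + mu1 + mu2 = 1 (uniformization) and no holding costs.
    """
    states = list(itertools.product(range(B1 + 2), range(B2 + 2)))
    v = {s: 0.0 for s in states}  # v^0 = 0, a constant function
    for _ in range(n_iter):
        new_v = {}
        for (s1, s2) in states:
            if s1 <= B1:
                # Arrival event: choose the price maximizing the one-step value.
                f0 = max(
                    a * lam * accept_prob(a)
                    + beta * lam * accept_prob(a) * v[(s1 + 1, s2)]
                    + beta * lam * (1.0 - accept_prob(a)) * v[(s1, s2)]
                    for a in prices
                )
            else:
                # Station 1 is full: arrivals cannot be admitted.
                f0 = beta * lam * v[(s1, s2)]
            # Station-1 completion; blocked when station 2 is full (s2 = B2+1).
            f1 = v[(s1 - 1, s2 + 1)] if (s1 >= 1 and s2 <= B2) else v[(s1, s2)]
            # Station-2 completion; a dummy transition when station 2 is empty.
            f2 = v[(s1, s2 - 1)] if s2 >= 1 else v[(s1, s2)]
            new_v[(s1, s2)] = f0 + beta * (mu1 * f1 + mu2 * f2)
        v = new_v
    return v
```

A call such as `value_iteration(3, 2, 0.4, 0.3, 0.3, 0.9, [0.0, 1.0, 2.0, 3.0, 4.0], lambda a: max(0.0, 1.0 - a / 4.0))` returns an approximation of the fixed point as a dictionary keyed by state. Under the assumptions above, the iterates should inherit \({\mathcal {M}}\)(1)–(3) from \(v^0\), which can be checked directly on the returned values up to a small floating-point tolerance.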

1.1.4 Proof of Theorem 1

Consider any discounted MDP problem with transition matrix (2), reward function (3), and \(\beta \in (0,1)\). It follows from Remark 1 that there exists \(\pi =d^{\infty }\in \varPi \) that is optimal and satisfies (8). Let \(v\in {\mathcal {V}}\) be the total discounted reward under policy \(d^{\infty }\). Then,

By Theorem 6.2.6 in [23], v satisfies the optimality equation (7). It follows that

Since v satisfies (7), it follows from Lemma 3 that \(v\in V\).

(1) Choose any \(s\in S\) such that \(0\le s_1\le B_1-1\). Let \(a_1=d(s)\) be the optimal price quoted for state s and \(a_2=d(s_1+1,s_2)\) be the optimal price quoted for state \((s_1+1,s_2)\). It follows from (A.1) that

$$\begin{aligned}&r(s,a_1) + \beta \sum _{s^{\prime }\in S}p(s^{\prime }|s,a_1)v(s^{\prime }) \ \ \ge \ \ r(s,a_2)+ \beta \sum _{s^{\prime }\in S}p(s^{\prime }|s,a_2)v(s^{\prime })\\&\quad \Leftrightarrow a_1\lambda (a_1)+\beta \lambda p(a_1) v(s_1+1,s_2) + \beta \lambda (1-p(a_1))v(s)\\&\quad \ge a_2\lambda (a_2)+\beta \lambda p(a_2) v(s_1+1,s_2) + \beta \lambda (1-p(a_2))v(s), \end{aligned}$$

which yields

$$\begin{aligned} \lambda (a_1)(a_1+\beta v(s_1+1,s_2)-\beta v(s)) \ \ \ge \ \ \lambda (a_2)(a_2+\beta v(s_1+1,s_2)-\beta v(s)). \end{aligned} \tag{A.2}$$

For state \((s_1+1,s_2)\), we have from (A.1) that

$$\begin{aligned}&\lambda (a_2)(a_2+\beta v(s_1+2,s_2)-\beta v(s_1+1,s_2)) \\&\quad \ge \lambda (a_1)(a_1+\beta v(s_1+2,s_2)-\beta v(s_1+1,s_2)). \end{aligned} \tag{A.3}$$

Since \(v\in V\), it follows that (11) holds. If (11) holds as an equality, i.e., \( v(s_1+1,s_2) - v(s) = v(s_1+2,s_2)-v(s_1+1,s_2)\), it follows that (A.2) and (A.3) hold as equalities. By (8), it holds from (A.2) that \(a_{1}\le a_{2}\) and from (A.3) that \(a_{2}\le a_{1}\), which implies that \(a_{1} = a_{2}\). If (11) holds as a strict inequality, i.e., \( v(s_1,s_2) - v(s_1+1,s_2) < v(s_1+1,s_2) - v(s_1+2,s_2)\), summing (A.2) and (A.3) gives

$$\begin{aligned}&\lambda (a_1)(v(s_1+1,s_2) - v(s)-[v(s_1+2,s_2)-v(s_1+1,s_2)])\\&\quad \ge \ \ \lambda (a_2)(v(s_1+1,s_2) - v(s)-[v(s_1+2,s_2)-v(s_1+1,s_2)]), \end{aligned}$$

which implies \(\lambda (a_1)\ge \lambda (a_2)\) and \(a_1\le a_2\).

(2) Choose any \(s\in S\) such that \(0\le s_i\le B_i\) for \(i=1,2\). Let \(a_1=d(s)\) be the optimal price quoted for state s and \(a_2=d(s_1,s_2+1)\) be the optimal price quoted for state \((s_1,s_2+1)\). According to (A.1), it follows that (A.2) holds. For state \((s_1,s_2+1)\), we have from (A.1) that

$$\begin{aligned}&\lambda (a_2)(a_2+\beta v(s_1+1,s_2+1)-\beta v(s_1,s_2+1)) \\&\quad \ge \lambda (a_1)(a_1+\beta v(s_1+1,s_2+1)-\beta v(s_1,s_2+1)). \end{aligned} \tag{A.4}$$

Since \(v\in V\), it follows that (9) holds. If (9) holds as an equality, i.e., \(v(s) - v(s_1+1,s_2) = v(s_1,s_2+1) - v(s_1+1,s_2+1)\), it follows that (A.2) and (A.4) hold as equalities. By (8), it holds from (A.2) that \(a_{1}\le a_{2}\) and from (A.4) that \(a_{2}\le a_{1}\), which implies that \(a_{1} = a_{2}\). If (9) holds as a strict inequality, i.e., \(v(s) - v(s_1+1,s_2) < v(s_1,s_2+1) - v(s_1+1,s_2+1)\), summing (A.2) and (A.4) gives

$$\begin{aligned}&\lambda (a_1)(v(s_1+1,s_2) - v(s)-[v(s_1+1,s_2+1)-v(s_1,s_2+1)])\\&\quad \ge \lambda (a_2)(v(s_1+1,s_2) - v(s)-[v(s_1+1,s_2+1)-v(s_1,s_2+1)]), \end{aligned}$$

which implies \(\lambda (a_1)\ge \lambda (a_2)\) and \(a_1\le a_2\).

(3) Consider any \(s\in S\) such that \(0\le s_1\le B_1 - 1\) and \(0\le s_{2} \le B_{2}\). Let \(d(s_1+1,s_2)=a_1\) and \(d(s_1,s_2+1)=a_2\). For state \((s_1+1,s_2)\), we have from (A.1) that

$$\begin{aligned}&\lambda (a_1)(a_1+\beta v(s_1+2,s_2)-\beta v(s_1+1,s_2))\\&\quad \ge \lambda (a_2)(a_2+\beta v(s_1+2,s_2)-\beta v(s_1+1,s_2)), \end{aligned} \tag{A.5}$$

and for state \((s_1,s_2+1)\) we have that

$$\begin{aligned}&\lambda (a_2)(a_2+\beta v(s_1+1,s_2+1)-\beta v(s_1,s_2+1))\\&\quad \ge \lambda (a_1)(a_1+\beta v(s_1+1,s_2+1)-\beta v(s_1,s_2+1)). \end{aligned} \tag{A.6}$$

Since \(v\in V\), it follows that (10) holds. If (10) holds as an equality, i.e., \(v(s_1,s_2+1) - v(s_1+1,s_2+1) = v(s_1+1,s_2) - v(s_1+2,s_2)\), it follows that (A.5) and (A.6) hold as equalities. By (8), it holds from (A.5) that \(a_{1}\le a_{2}\) and from (A.6) that \(a_{2}\le a_{1}\), which implies that \(a_{1} = a_{2}\). If (10) holds as a strict inequality, i.e., \(v(s_1,s_2+1) - v(s_1+1,s_2+1) < v(s_1+1,s_2) - v(s_1+2,s_2)\), summing (A.5) and (A.6) gives

$$\begin{aligned}&\lambda (a_1)(v(s_1+2,s_2)-v(s_1+1,s_2)-[v(s_1+1,s_2+1)- v(s_1,s_2+1)])\\&\quad \ge \lambda (a_2)(v(s_1+2,s_2)-v(s_1+1,s_2)-[v(s_1+1,s_2+1)- v(s_1,s_2+1)]), \end{aligned}$$

which implies \(\lambda (a_1)\le \lambda (a_2)\) and \(a_1\ge a_2\).

(4) For any \(s\in S\) with \(s_1=B_1+1\), since \(r(s,a)= - \sum _{k=1}^2c_ks_k\) and \(p(s^{\prime }|s,a)\) are independent of a for all \(s^{\prime }\in S\), the optimality equation (7) is independent of a for s. Thus, it follows from (8) that \(d(s)=\min A = a_{0}\).

This completes the proof. \(\square \)

1.2 Proofs for Sect. 5.3

In this section, we provide proofs for some of the results in Sect. 5.3.

1.2.1 Proof of Proposition 1

Since the action space A and state space S are both finite, we have that \(\varPi \) is finite. Let \(\{\beta _n\}_{n=1}^{\infty }\) be an infinite sequence of discount factors, where \(\beta _n\in (0,1)\) for each n and \(\beta _n\rightarrow 1\) as \(n\rightarrow \infty \). It follows from Remark 1 that there exists \(\pi _n\in \varPi \) that is optimal and satisfies (8) for the discounted problem with \(\beta _n\) for each n. Since \(\{\pi _n\}_{n=1}^{\infty }\subset \varPi \) is infinite but \(\varPi \) is finite, there exists some policy \(\pi ^*\in \{\pi _n\}_{n=1}^{\infty }\) which is optimal for a subsequence, \(\{\beta _{n(k)}\}_{k=1}^{\infty }\), of the original sequence. The optimality of \(\pi ^*\) implies that

This completes the proof. \(\square \)

1.2.2 Proof of Proposition 3

Define \(p_d(s):=(p(s^{\prime }|s,d(s)), s^{\prime }\in S)\) as the column vector transposed from the row vector of \(P_d\) corresponding to state \(s\in S\) under \(d^{\infty }\in \varPi \).

(1) For any \(s\in S\) with \(s_1=B_1+1\), it follows from equations (2) and (3) that \(r(s,a) + \sum _{s^{\prime }\in S}p(s^{\prime }|s,a)h(s^{\prime })\) is independent of action \(a\in A\), which indicates that any policy is h-improving at s with \(s_1=B_1+1\). Thus, d is h-improving for all \(s\in S\). It follows from Theorem 8.4.4 in [23] that \(d^{\infty }\) is optimal for the long-run average MDP problem.

(2) Since (g, h) satisfies (5) and d is h-improving for all \(s\in S\) by (1), we have that

$$\begin{aligned} r_d - ge+ (P_d-I) h = \max _{\delta : \delta ^{\infty }\in \varPi }\left\{ r_{\delta }-ge+(P_{\delta }-I)h\right\} =0. \end{aligned} \tag{A.7}$$

Since d is strictly h-improving for \(s\in S\) such that \(0\le s_1\le B_1\), we have, for all \(s\in S\) such that \(0\le s_1\le B_1\),

$$\begin{aligned} 0&= r(s,d(s)) - g + (p_d(s))^{\intercal }h - h(s) \\&> r(s,a) - g + \sum _{s^{\prime }\in S}p(s^{\prime }|s,a)h(s^{\prime }) - h(s), \forall a\in A, a\ne d(s). \end{aligned}$$It follows from Proposition 8.5.10 in [23] (i.e., the action elimination rule) that any policy that does not use d(s) at s cannot be optimal. Thus, d(s) is the unique optimal action for \(s\in S\) with \(0\le s_1\le B_1\).

(3) Recall that \(h^{d^{\infty }}\) and \(g^{d^{\infty }}\) are the bias and gain under policy \(d^{\infty }\), respectively. It follows from (A.7) and Corollary 8.2.7 in [23] that \(h=h^{d^{\infty }}+ke\) for some k and \(g=g^{d^{\infty }}\) since the MDP is unichain. Define

$$\begin{aligned} E_d: = \left\{ (s,\delta ^{\infty })\in S\times \varPi : 0\le s_1\le B_1, \delta (s) \ne d(s)\right\} . \end{aligned}$$Since d is strictly h-improving at \(s\in S\) with \(0\le s_1\le B_1\), we have that

$$\begin{aligned} r(s,d(s)) + (p_d(s))^{\intercal }h > r(s,\delta (s)) + (p_{\delta }(s))^{\intercal }h, \ \ \forall (s,\delta ^{\infty })\in E_d. \end{aligned}$$Substituting \(h=h^{d^{\infty }}+ke\) with \(k :=-h^{d^{\infty }}(s_0)\) into the above inequality gives that

$$\begin{aligned}&r(s,d(s)) + (p_d(s))^{\intercal }(h^{d^{\infty }}-h^{d^{\infty }}(s_0)e) \\&\quad > r(s,\delta (s)) + (p_{\delta }(s))^{\intercal }(h^{d^{\infty }}-h^{d^{\infty }}(s_0)e), \end{aligned} \tag{A.8}$$

where \(s_0\in S\) is some arbitrary state. Define

$$\begin{aligned} \epsilon : = \min _{(s,\delta ^{\infty })\in E_d} \left\{ r(s,d(s)) - r(s,\delta (s))+ (p_d(s)-p_{\delta }(s))^{\intercal }(h^{d^{\infty }}-h^{d^{\infty }}(s_0)e)\right\} . \end{aligned}$$We have that \(\epsilon >0\) is well defined since S and \(\varPi \) are both finite. For any \(\beta \in (0,1)\), since the considered MDP is unichain, it follows from Corollary 8.2.4 in [23] that

$$\begin{aligned} h^{d^{\infty }} = v_{\beta }^{d^{\infty }} - (1-\beta )^{-1}ge -\varphi (\beta ), \end{aligned}$$where \( v_{\beta }^{d^{\infty }} :=( v_{\beta }^{d^{\infty }}(s), s \in S)\) and \(\varphi (\beta )\) are both vectors, and \(\Vert \varphi (\beta )\Vert \rightarrow 0\) as \(\beta \rightarrow 1\). This further implies

$$\begin{aligned} \Vert v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e - (h^{d^{\infty }}-h^{d^{\infty }}(s_0)e)\Vert = \Vert \varphi (\beta )-\varphi (\beta )(s_0)e\Vert \rightarrow 0 \text { as } \beta \rightarrow 1. \end{aligned}$$It follows from Corollary 8.2.5 [23] and the fact that the considered MDP is unichain that \( \Vert (1-\beta )v_{\beta }^{d^{\infty }} - ge \Vert \rightarrow 0\) and \(\Vert (1-\beta )v_{\beta }^{d^{\infty }}(s_0)e - ge\Vert \rightarrow 0\) as \(\beta \rightarrow 1\), which yields

$$\begin{aligned}&\Vert \beta [v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e] - [h^{d^{\infty }}-h^{d^{\infty }}(s_0)e]\Vert \\&\quad = \Vert \beta [v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e] +ge-ge -[ v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e] + [v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e] \\&\qquad - \,[h^{d^{\infty }}-h^{d^{\infty }}(s_0)e]\Vert \\&\quad \le \Vert (1-\beta )v_{\beta }^{d^{\infty }} - ge \Vert + \Vert (1-\beta )v_{\beta }^{d^{\infty }}(s_0)e - ge\Vert + \Vert [v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e] \\&\qquad -\, [h^{d^{\infty }}-h^{d^{\infty }}(s_0)e]\Vert \\&\qquad \rightarrow \ \ 0 \ \text {as} \ \beta \rightarrow 1. \end{aligned}$$Thus, there exists \(\beta ^*\in (0,1)\) such that \(\Vert \beta (v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e)-(h^{d^{\infty }}-h^{d^{\infty }}(s_0)e)\Vert \le {\epsilon }/{4}\) for all \( \beta \in [\beta ^*,1)\), which implies

$$\begin{aligned} -\frac{\epsilon }{4} \le \beta (v_{\beta }^{d^{\infty }}(s) - v_{\beta }^{d^{\infty }}(s_0)) - (h^{d^{\infty }}(s)-h^{d^{\infty }}(s_0)) \le \frac{\epsilon }{4},\ \ \forall \beta \in [\beta ^*,1), s\in S, \end{aligned}$$which with (A.8) implies that, for all \(\beta \in [\beta ^*,1)\) and \((s,\delta ^{\infty })\in E_d\),

$$\begin{aligned}&r(s,d(s)) + \beta (p_d(s))^{\intercal }(v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e) \\&\quad \ge r(s,d(s)) + (p_d(s))^{\intercal }(h^{d^{\infty }} - h^{d^{\infty }}(s_0)e)-{\epsilon }/{4} \\&\quad > r(s,\delta (s)) + (p_{\delta }(s))^{\intercal }(h^{d^{\infty }} - h^{d^{\infty }}(s_0)e)+{\epsilon }/{4} \\&\quad \ge r(s,\delta (s)) + \beta (p_{\delta }(s))^{\intercal }(v_{\beta }^{d^{\infty }} - v_{\beta }^{d^{\infty }}(s_0)e). \end{aligned}$$It follows that, for all \(\beta \in [\beta ^*,1)\),

$$\begin{aligned} v_{\beta }^{d^{\infty }}(s)&= r(s,d(s)) + \beta (p_d(s))^{\intercal }v_{\beta }^{d^{\infty }}\\&> r(s,\delta (s)) + \beta (p_{\delta }(s))^{\intercal }v_{\beta }^{d^{\infty }}, \ \ \forall (s,\delta ^{\infty })\in E_d. \end{aligned} \tag{A.9}$$

When \(s_1=B_1+1\), (A.9) holds as an equality, since the left-hand side and right-hand side of the inequality are independent of actions. Any \(d(s) \in A\) will be optimal for \(s \in S\) such that \(s_{1} = B_{1}+1\) and \(0 \le s_{2} \le B_{2}+1\). Thus, \(d^{\infty }\) is optimal for the discounted MDP problem for all discount factors \(\beta \in [\beta ^*,1)\) by Theorem 6.2.6 in [23]. Also, note that \(d(s) = a_{0} = \min A\) for all \(s \in S\) with \(s_{1} = B_{1}+1\) and \(0 \le s_{2} \le B_{2}+1 \), which ensures with (A.9) that \(d^{\infty }\) satisfies the tie-breaking rule (8). Thus, \(d^{\infty }\in \varPi ^*\).

This completes the proof. \(\square \)

1.3 Proof for Section 5.4

In this section, we provide a proof for Proposition 5 in Sect. 5.4.

1.3.1 Proof of Proposition 5

Let \(d^{\infty }\in \varPi \) be h-improving. For any \(s\in S\) with \(0\le s_1\le B_1\), define \(\varphi _s(a): = a p(a) + p(a)(h(s_1+1,s_2)-h(s))\). The supposition that \(d^{\infty }\) is h-improving implies that

Since \(\lambda >0\) is a constant, it follows that

Under Assumption 2, we have \(p(a) = 1-F(a)\). Thus, the first-order derivative of the above objective function is

where

and it follows from Assumption 2 that \(u_{s}(a)\) is continuous on \([{{{\underline{a}}}}, {\bar{a}}]\).

Under Assumption 3, \(u_s(a)\) is strictly decreasing in \([{{{\underline{a}}}}, {\bar{a}}]\), and thus \(u_s(a)\) is strictly decreasing in \([a_{0},a_{K}] \subset [{{{\underline{a}}}}, {\bar{a}}]\). Thus, it follows that \(u_s(a_{0}) > u_{s}({a}_{K})\). Recall that we have assumed \(a_{K}=\bar{a}\) in Sect. 5.4. We have the following three cases:

Case 1. \(u_s(a_{0})\le 0\). Since \(u_s(a)\) is strictly decreasing in \([a_{0},a_{K}]\), it follows that \(u_s(a) < u_s(a_{0})\le 0\) for all \(a\in (a_{0},a_{K}]\). Since \(f(a)>0\) by Assumption 2, we have that \(\varphi _s^{\prime }(a) = f(a)u_{s}(a) < 0\) in \( (a_{0},a_{K}]\) and \(\varphi _s^{\prime }(a_{0})\le 0\). Thus, \(\varphi _s(a)\) is strictly decreasing in \([a_{0},a_{K}]\), which implies \(d(s) = a_0\) is the unique solution to (A.10).

Case 2. \(u_s(a_{K})\ge 0\). Since \(u_s(a)\) is strictly decreasing in \([a_{0},a_{K}]\), it follows that \(u_s(a) > u_s(a_{K})\ge 0\) for all \(a\in [a_{0},a_{K})\). Since \(f(a)>0\), it follows that \(\varphi _s^{\prime }(a) = f(a)u_{s}(a) > 0\) in \( [a_{0},a_{K})\) and \(\varphi _s^{\prime }(a_{K})\ge 0\). Thus, \(\varphi _s(a)\) is strictly increasing on \([a_{0},a_{K}]\), which implies that \(d(s) = a_K\) is the unique solution to (A.10).

Case 3. \(u_s(a_{0})> 0 > u_s(a_{K})\). Then, there exists a unique \(a_s^*\in (a_{0},a_{K})\) such that \(u_s(a^*_s) = 0\) by the continuity and strict monotonicity of \(u_s(a)\). We then have the following two cases:

(i) If \(a_s^*\in A\), we have \(u_s(a) > u_s(a_s^*) =0\) for all \(a\in (a_0, a_s^*)\) and \(u_s(a) < u_s(a_s^*) =0\) for all \(a\in (a_s^*, a_K)\), which implies \(\varphi _s^{\prime }(a)>0\) for all \(a\in (a_0, a_s^*)\) and \(\varphi _s^{\prime }(a)<0\) for all \(a\in (a_s^*,a_K)\). Thus, \(\varphi _s(a)\) is strictly increasing in \((a_0, a_s^*)\) and strictly decreasing in \((a_s^*,a_K)\), which implies \(\varphi _s(a)<\varphi _s(a_s^*)\) for all \(a\in [{{{\underline{a}}}}, \bar{a}]\) with \(a\ne a_s^*\). Then, \(d(s) = a_s^*\) is the unique solution to (A.10).

(ii) If \(a^*_s\in (a_k,a_{k+1})\), where \(k=0,1,\ldots ,K-1\), by an argument similar to the one in (i), \(\varphi _s(a)<\varphi _s(a_k)\) for all \(a\in [{{{\underline{a}}}}, a_k)\) and \(\varphi _s(a)<\varphi _s(a_{k+1})\) for all \(a\in (a_{k+1}, {\bar{a}}]\). Thus, \(d(s)\in \{a_k,a_{k+1}\}\) can take at most two values.

This completes the proof. \(\square \)
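The three cases above can be checked on a small example. The following is a minimal sketch under stated assumptions: willingness to pay is taken to be uniform on \([0,1]\), so that \(p(a)=1-a\) and \(f(a)=1\); the variable `delta` stands for \(h(s_1+1,s_2)-h(s)\); and the price grid is hypothetical. Under these assumptions \(\varphi _s^{\prime }(a)=1-2a-\delta \), so the continuous maximizer is \(a_s^*=(1-\delta )/2\) whenever it lies inside the grid range.

```python
def phi(a, delta):
    """phi_s(a) = a*p(a) + p(a)*delta with the assumed p(a) = 1 - a."""
    return (a + delta) * (1.0 - a)


def best_grid_prices(grid, delta, tol=1e-12):
    """All prices in the finite grid that maximize phi_s (ties are kept)."""
    best = max(phi(a, delta) for a in grid)
    return [a for a in grid if abs(phi(a, delta) - best) < tol]


# Hypothetical price grid a_0 < a_1 < ... < a_K with a_K at the upper end.
GRID = [0.0, 0.25, 0.5, 0.75, 1.0]

case1 = best_grid_prices(GRID, delta=1.0)    # u_s(a_0) <= 0: lowest price
case2 = best_grid_prices(GRID, delta=-1.5)   # u_s(a_K) >= 0: highest price
case3i = best_grid_prices(GRID, delta=-0.5)  # a_s^* = 0.75 lies on the grid
case3ii = best_grid_prices(GRID, delta=-0.3) # a_s^* = 0.65 in (0.5, 0.75)
```

In the last case the maximizer over the grid is one of the two grid prices that bracket \(a_s^*\), which is the content of Case 3(ii).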

Proofs and supporting results for Sect. 6

This section provides proofs for some of the results in Sect. 6, as well as supporting materials.

1.1 Lemma B.1

Consider any static policy \(\pi = d^{\infty } \in \varPi \) such that \(d(s) = a\in A\) for all \(s\in S\). For any \(s:=(s_{1}, s_{2}) \in S\), let \({\hat{\gamma }}_{d}:=\left( {\hat{\gamma }}_{d}(s), s\in S\right) \) denote an invariant measure of \(\{X_{\pi }(t):t\ge 0\}\), where \( {\hat{\gamma }}_{d}(0,0) =1\) and \({\hat{\gamma }}_{d}^{\intercal } Q_{d^{\infty }} = 0\). Define \(\gamma _{d}(s_1): =({\hat{\gamma }}_{d}(s_1,s_2), s_2\in S_2) \in \mathbb {R}^{|S_{2}|}\) as a column vector for each \(s_1\in S_1\), where \(|S_{2}|=2\). Thus, it holds that \({\hat{\gamma }}_{d} = (\gamma _{d}(s_{1}), s_{1}\in S_{1})\).

In Lemma B.1, we derive a closed-form expression of \(\gamma _{d}(s_{1})\) for all \(s_{1} \in S_{1}\), which will be used to prove Proposition 6. Meanwhile, Lemma B.1 is interesting in its own right, as it provides an analytical expression of an invariant measure that we can use to derive the closed-form steady-state distribution for a tandem line with a general \(B_{1}\in \mathbb {Z}_{+}\) and \(B_{2}=0\).

Lemma B.1

Assume that \(B_{2}=0\). Consider any static policy \(\pi = d^{\infty } \in \varPi \) such that \(d(s) = a\in A\) and \(a\ne a_{K}\) for all \(s\in S\). Then,

where \(x_{j} = \lambda (a)\left[ \lambda (a)+\mu _1+\mu _2+(-1)^j\varrho \right] /(2\mu _1\mu _2)\), \(\theta _{j}=(\lambda (a) +\mu _1-{\lambda (a) }/{x_{j}})/\mu _2\), and \(\psi _{j} =(\varrho +(-1)^j(\lambda (a) -\mu _1+\mu _2))/(2 \varrho )\), for \(j \in \{1,2\}\), with

Proof

By ordering the states in S as \((0,0)^{\intercal }, (0,1)^{\intercal }, \ldots , (s_{1},0)^{\intercal }, (s_{1},1)^{\intercal },\ldots , (B_{1}+1,0)^{\intercal },(B_{1}+1,1)^{\intercal }\) and using (1), the infinitesimal rate matrix \(Q_{d^{\infty }}\) is of the following diagonal-block form:

where

Since \({\hat{\gamma }}_d^{\intercal }Q_{d^{\infty }} = 0\), we have the following system of second-order homogeneous difference equations:

where the first and third equations represent the boundary conditions.

Define the polynomial matrix \(Q(x) := Q_1 + Q_0x + Q_{-1}x^2\), and let n be the degree of \(\text {det}(Q(x))\) in x (\(n=3\) for our case). Let \(x_j\in \mathbb {R}\) and \(y_j :=(y_{j,1},y_{j,2})^{\intercal }\in \mathbb {R}^{|S_2|}\), where \(j \in \{1,2,\ldots , n\}\), be such that

Since \({\hat{\gamma }}_{d}(0,0)=1\), it follows from boundary condition (B.2) that

The solution of (B.3) can be written as

where we will need to find nonzero \(x_{j}\) and \(y_{j}\) that satisfy (B.5) for \(j \in \{1,2,\ldots ,n\}\), and \(\psi _{1},\ldots , \psi _{n}\) are the coefficients determined to satisfy \({\hat{\gamma }}_{d}(0,0)=1\) and (B.6); see [19].

Let \(x\in \mathbb {R}\) and \(y\in \mathbb {R}^{|S_2|}\) be such that

Since we need nonzero \(x_{j}\) and \(y_{j}\), where \(j \in \{1,2,\ldots ,n\}\), which satisfy (B.5), consider \(x\ne 0\) and \(y \ne 0\). Thus, \(\text {det}(Q(x)) = 0\). It follows from \(\text {det}(Q(x)) = 0\) that \(y_{j}=(1, \theta _{j})^{\intercal }\) for \(j \in \{1,2,3\}\), \(\theta _{3}=\mu _{1}/\mu _{2}\), \(x_{3}=1\), and \(x_{1}\), \(x_{2}\), \(\theta _{1}\), \(\theta _{2}\) are as stated in the lemma.

It follows from \({\hat{\gamma }}_{d}(0,0) =1\), (B.6), and (B.7) that \(\psi _{1}\), \(\psi _{2}\), \(\psi _{3}\) must satisfy

yielding \(\psi _{3}=0\) and \(\psi _{1}\), \(\psi _{2}\) as stated in the lemma.

It now remains to compute \({\hat{\gamma }}_{d}(B_{1}+1,1)\). With all the parameters obtained above, we have from (B.7) that

It follows from boundary condition (B.4) that

Since \(a \ne a_{K}\), we have \(\lambda (a) \ne \lambda (a_{K})=0\). Thus, we have \(x_{j}\ne 0\) for \(j \in \{1,2\}\). It thus follows that \(\gamma _{d}(s_{1})\) for \(s_{1} \in S_{1}\) is given by (B.1). This completes the proof. \(\square \)
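The parameters in Lemma B.1 can be sanity-checked numerically. The sketch below rests on two assumptions that are not spelled out in this appendix: (i) for \(B_2=0\), station 1 serves at rate \(\mu _1\) only when station 2 is empty (communication blocking), and (ii) \(\varrho \) is recovered from the identity \(\varrho ^2-(\lambda (a)-\mu _1+\mu _2)^2=4\lambda (a)\mu _1\) used in the proof of Lemma B.2(2). It verifies that \(\gamma (s_1)=x_j^{s_1}(1,\theta _j)^{\intercal }\) satisfies the interior balance equations of such a chain.

```python
import math


def lemma_b1_parameters(lam, mu1, mu2):
    """Return [(x_j, theta_j, psi_j) for j = 1, 2] from the stated formulas."""
    # Assumption: varrho reconstructed from varrho^2 = (lam - mu1 + mu2)^2 + 4*lam*mu1.
    rho = math.sqrt((lam - mu1 + mu2) ** 2 + 4.0 * lam * mu1)
    out = []
    for j in (1, 2):
        sign = (-1) ** j
        x = lam * (lam + mu1 + mu2 + sign * rho) / (2.0 * mu1 * mu2)
        theta = (lam + mu1 - lam / x) / mu2
        psi = (rho + sign * (lam - mu1 + mu2)) / (2.0 * rho)
        out.append((x, theta, psi))
    return out


def balance_residuals(lam, mu1, mu2, x, theta):
    """Residuals of the interior balance equations for gamma(s1) = x**s1 * (1, theta).

    Assumed rates: arrivals lam, station-1 service mu1 only if station 2 is
    empty, station-2 service mu2. Both equations are divided by x**s1.
    """
    r_empty = (lam + mu1) - (lam / x + mu2 * theta)                 # state (s1, 0)
    r_busy = theta * (lam + mu2) - (lam * theta / x + mu1 * x)      # state (s1, 1)
    return r_empty, r_busy


params = lemma_b1_parameters(1.0, 3.0, 4.0)
residuals = [balance_residuals(1.0, 3.0, 4.0, x, th) for x, th, _ in params]
```

For \(\lambda (a)=1\), \(\mu _1=3\), \(\mu _2=4\), this gives \(\varrho =4\), \(x_1=1/6\), and \(x_2=1/2\), and both residual pairs vanish up to rounding.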

1.2 Lemma B.2

Lemma B.2 characterizes some properties of the parameters in (B.1).

Lemma B.2

For any \(a\in A\) with \(a\ne a_{K}\), the following hold:

(1) \(x_{2}> x_{1}> 0\).

(2) \(\psi _{j}> 0\) for \(j=1,2\).

(3) \(1+\theta _{j} > 0\) for \(j=1,2\).

(4) \( x_{2}<1\) if and only if \(\lambda (a) < \frac{\mu _1\mu _2}{\mu _1+\mu _2}\) (i.e., \(\rho (a)=\frac{\lambda (a)}{\mu _1} + \frac{\lambda (a)}{\mu _2}< 1\)).

(5) If \(\lambda (a) < \frac{\mu _1\mu _2}{\mu _1+\mu _2}\), then \(\theta _{j}\frac{\lambda (a)}{\mu _2}+x_{j} > 0\) for \(j=1,2\).

Proof

Since \(a\ne a_{K}\), we have \(\lambda (a)\in (0,\lambda ]\).

(1) It trivially holds that \(0< x_{1}< x_{2}\).

(2) Since \(\varrho ^2 - (\lambda (a)-\mu _1+\mu _2)^2 = 4\lambda (a)\mu _1> 0\) and \(\varrho > 0\), it follows that

$$\begin{aligned} \psi _{j} = \frac{\varrho +(-1)^j(\lambda (a) -\mu _1+\mu _2)}{2 \varrho } \ \ > \ \ 0, \ \ \forall j=1,2. \end{aligned}$$

(3) It also follows that

$$\begin{aligned} 1+ \theta _{1}&= \frac{\lambda (a)+\mu _1+\mu _{2}-\varrho }{2\mu _{2}}> 0,\\ 1 + \theta _{2}&= \frac{\lambda (a)+\mu _1+\mu _{2}+\varrho }{2\mu _{2}} > 0. \end{aligned}$$

(4) Note that \(\varrho > 0\). We first show the “if” direction. Since \(\lambda (a)< \frac{\mu _1\mu _2}{\mu _1+\mu _2}\), we have that \(\lambda (a)< \mu _1\), \(\lambda (a)< \mu _2\), and

$$\begin{aligned}&\lambda (a)^2+\lambda (a)(\mu _1+\mu _2)-2\mu _1\mu _2< \lambda (a)^2+\mu _1\mu _2-2\mu _1\mu _2\\&\quad = \lambda (a)^2-\mu _1\mu _2 < 0. \end{aligned}$$Since \( (\lambda (a)\varrho )^2-(\lambda (a)^2+\lambda (a)(\mu _1+\mu _2)-2\mu _1\mu _2)^2 =\) \(4\mu _1 \mu _2 (\lambda (a)\mu _1 +\lambda (a)\mu _2 -\mu _1\mu _2)< 0\), it follows that \(\lambda (a)^2+\lambda (a)(\mu _1+\mu _2)-2\mu _1\mu _2+\lambda (a) \varrho < 0\). Thus,

$$\begin{aligned} x_{2} - 1&= \frac{\lambda (a)\left( \lambda (a)+\mu _1+\mu _2+\varrho \right) }{2\mu _1\mu _2} -1\\&= \frac{\lambda (a)^2+\lambda (a)(\mu _1+\mu _2)-2\mu _1\mu _2+\lambda (a) \varrho }{2\mu _1\mu _2}< \ \ 0\\&\ \ \Rightarrow \ \ x_{2} < \ \ 1. \end{aligned}$$We now show the “only if” part.

$$\begin{aligned} x_{2}<1&\Rightarrow \ \ \varrho ^{2}< \left( \frac{2\mu _1\mu _2}{\lambda (a)} - (\lambda (a)+\mu _1+\mu _2)\right) ^2 \\&\Rightarrow \ \ \frac{4\mu _{1}\mu _{2}[\lambda (a)(\mu _{1}+\mu _{2})-\mu _{1}\mu _{2}]}{\lambda ^2(a)}<0 \\&\Rightarrow \ \ \lambda (a)<\frac{\mu _{1}\mu _{2}}{\mu _{1}+\mu _{2}}. \end{aligned}$$

(5) Since \(\lambda (a) < \frac{\mu _1\mu _2}{\mu _1+\mu _2}\), it holds that

$$\begin{aligned}&\left[ \mu _1^2+\mu _2^2+\lambda (a)(\mu _1+\mu _2)\right] ^2-[(\mu _1+\mu _2)\varrho ]^2\\&\quad = 4\mu _1\mu _2(\mu _1\mu _2-\lambda (a)\mu _1-\lambda (a)\mu _2)\ \ >\ \ 0, \end{aligned}$$which yields

$$\begin{aligned} \theta _{j}\frac{\lambda (a)}{\mu _2}+x_{j}&= \lambda (a) \frac{\mu _1^2+\mu _2^2+\lambda (a)(\mu _1+\mu _2)+(-1)^{j}(\mu _1+\mu _2)\varrho }{2\mu _1\mu _2^2}\\&> 0, \ \ \forall j=1,2. \end{aligned}$$

This proves the desired result. \(\square \)
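The five properties in Lemma B.2 can also be spot-checked over a small parameter grid. This is a sketch only: the grid values are arbitrary, and \(\varrho \) is again reconstructed from the identity \(\varrho ^2=(\lambda (a)-\mu _1+\mu _2)^2+4\lambda (a)\mu _1\) in part (2) of the proof, which is an assumption about the lost definition.

```python
import math


def params(lam, mu1, mu2, j):
    """(x_j, theta_j, psi_j) as stated in Lemma B.1, with varrho reconstructed."""
    rho = math.sqrt((lam - mu1 + mu2) ** 2 + 4.0 * lam * mu1)
    sign = (-1) ** j
    x = lam * (lam + mu1 + mu2 + sign * rho) / (2.0 * mu1 * mu2)
    theta = (lam + mu1 - lam / x) / mu2
    psi = (rho + sign * (lam - mu1 + mu2)) / (2.0 * rho)
    return x, theta, psi


def check_lemma_b2(lam, mu1, mu2):
    """Return True if properties (1)-(5) hold for this parameter triple."""
    (x1, th1, ps1), (x2, th2, ps2) = params(lam, mu1, mu2, 1), params(lam, mu1, mu2, 2)
    stable = lam < mu1 * mu2 / (mu1 + mu2)          # rho(a) < 1
    ok = x2 > x1 > 0                                 # (1)
    ok = ok and ps1 > 0 and ps2 > 0                  # (2)
    ok = ok and 1 + th1 > 0 and 1 + th2 > 0          # (3)
    ok = ok and ((x2 < 1) == stable)                 # (4)
    if stable:                                       # (5)
        ok = ok and th1 * lam / mu2 + x1 > 0 and th2 * lam / mu2 + x2 > 0
    return ok


# Arbitrary grid, chosen so that no triple sits exactly on the boundary rho(a) = 1.
LAMS = [0.2, 0.5, 1.0, 2.0, 4.0]
MUS = [0.5, 1.5, 3.5]
all_ok = all(check_lemma_b2(l, m1, m2) for l in LAMS for m1 in MUS for m2 in MUS)
```

A numerical check of this kind does not replace the proof, but it is a quick way to catch a transcription error in the closed-form expressions.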

1.3 Lemma B.3

Under any \(a \ne a_{K}\) and \(B_{1}, B_{2} \in \mathbb {Z}_{+}\), the Markov process under static policy \(d^{\infty }\), where \(d(s)=a\) for all \(s \in S\), is positive recurrent. Thus, we have a closed-form expression of the steady-state distribution as follows:

where \(\gamma _{d}(s_{1})\) is given by (B.1). Lemma B.3 shows that the blocking probability converges to zero as \(B_{1}\) goes to infinity when \(\rho (a)<1\) and Assumption 4 holds.

Lemma B.3

Consider \(B_{2}=0\) and any static policy \(d^{\infty } \in \varPi \) with \(d(s) = a\in A\) for all \(s \in S\). Under Assumption 4, if \(\rho (a) < 1\), then \(\Vert \eta _d(B_{1}+1)\Vert _{1}\) is non-increasing in \(B_{1}\) and \(\lim _{B_{1}\rightarrow \infty } \Vert \eta _d (B_{1}+1)\Vert _{1}=0\).

Proof

If \(a=a_{K}\), no customers enter the system. Then, \(\Vert \eta _d (B_{1}+1)\Vert _{1}=0\) and the result holds trivially. We now consider \(a\ne a_{K}\). Define \( d_{1}: = \psi _{1}(1+\theta _{1})(1-x_{2})\) and \(d_{2}: = \psi _{2}(1+\theta _{2})(1-x_{1})\). We have that \(d_{1} + d_{2} = 1\). Since \(\lambda (a) < \frac{\mu _1\mu _2}{\mu _1+\mu _2}\), it follows from Lemma B.2(1)–(4) that \(d_{j}>0\) for \(j \in \{1,2\}\).

It follows from (B.8), Lemma B.1, and Lemma B.2(1) and (4) that

which implies by Lemma B.2(5) that \(\Vert \eta _d(B_{1}+1)\Vert _{1}\) is monotone non-increasing in \(B_{1}\) and

This completes the proof. \(\square \)
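The monotone decay of the blocking probability in Lemma B.3 can be observed by solving the balance equations directly for small \(B_1\). The sketch below assumes the same \(B_2=0\) transition structure as a standard communication-blocking line (arrivals admitted while \(s_1\le B_1\), station 1 serving only when station 2 is empty); these rates are an assumption standing in for (1), and the helper names are hypothetical.

```python
def generator(lam, mu1, mu2, b1):
    """Rate matrix for B2 = 0; state (s1, s2) is stored at index 2*s1 + s2."""
    n = 2 * (b1 + 2)
    q = [[0.0] * n for _ in range(n)]
    for s1 in range(b1 + 2):
        for s2 in (0, 1):
            i = 2 * s1 + s2
            if s1 <= b1:                      # arrival is admitted
                q[i][2 * (s1 + 1) + s2] += lam
            if s1 >= 1 and s2 == 0:           # station 1 not blocked
                q[i][2 * (s1 - 1) + 1] += mu1
            if s2 == 1:                       # departure from station 2
                q[i][2 * s1] += mu2
            q[i][i] = -sum(q[i])              # diagonal was still zero
    return q


def solve(a, b):
    """Gauss-Jordan elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def blocking_probability(lam, mu1, mu2, b1):
    """Steady-state probability that s1 = B1 + 1, i.e. arrivals are turned away."""
    q = generator(lam, mu1, mu2, b1)
    n = len(q)
    a = [[q[j][i] for j in range(n)] for i in range(n)]  # transpose: Q^T pi = 0
    a[-1] = [1.0] * n                                    # normalization row
    pi = solve(a, [0.0] * (n - 1) + [1.0])
    return pi[-2] + pi[-1]


probs = [blocking_probability(1.0, 3.0, 4.0, b1) for b1 in range(11)]
```

With \(\lambda (a)=1\), \(\mu _1=3\), \(\mu _2=4\) (so \(\rho (a)=7/12<1\)), the sequence `probs` decreases with \(B_1\) and becomes small, in line with the lemma.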

1.4 Proof of Proposition 7

Since \(\lambda (a_0)<{\mu _1\mu _2}/({\mu _1+\mu _2})\), it follows from \(\lambda (a) \le \lambda (a_{0})\) that \(\lambda (a)<{\mu _1\mu _2}/({\mu _1+\mu _2})\) for all \(a\in A\). It follows from (14) and Lemma B.3 that \(g(a, B_{1}, 0)\) is non-decreasing in \(B_{1}\) and

for each \(a\in A\).

For any static policy \(d^{\infty }\) with \(d(s)=a\in A\) for all \(s\in S\), it follows that

and

Since \((d^{\text {st}})^{\infty }\) is optimal, it follows that \(\Delta g(a, B_{1}, 0)\ge 0\). \(\square \)

1.5 Lemma B.4

Definition B.1 will be useful.

Definition B.1

A random variable X is said to be smaller than random variable Y in the usual stochastic order, denoted by \(X \preceq Y\), if \(\mathbb {P}(X>x) \le \mathbb {P}(Y>x)\) for all \(x\in \mathbb {R}\).
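For finite discrete distributions, Definition B.1 can be checked mechanically. The following minimal sketch uses hypothetical probability mass functions; it relies on the fact that the tail function \(x\mapsto \mathbb {P}(X>x)\) of a discrete law changes only at support points, so comparing tails at the union of the two supports is enough.

```python
def survival(pmf, x):
    """P(X > x) for a finite discrete distribution given as {value: probability}."""
    return sum(p for value, p in pmf.items() if value > x)


def is_st_smaller(pmf_x, pmf_y, tol=1e-12):
    """True if X is smaller than Y in the usual stochastic order."""
    points = sorted(set(pmf_x) | set(pmf_y))
    return all(survival(pmf_x, x) <= survival(pmf_y, x) + tol for x in points)


# Hypothetical distributions on {0, 1, 2}.
X = {0: 0.5, 1: 0.3, 2: 0.2}
Y = {0: 0.2, 1: 0.3, 2: 0.5}
Z = {1: 1.0}

x_below_y = is_st_smaller(X, Y)   # Y shifts mass upward, so X is smaller
y_below_x = is_st_smaller(Y, X)
x_vs_z = (is_st_smaller(X, Z), is_st_smaller(Z, X))  # neither dominates
```

The pair X, Z shows that the usual stochastic order is only a partial order: two distributions need not be comparable.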

Consider a tandem queueing system with buffer sizes \(B_{1},B_{2} \in \mathbb {Z}_{+}\) under a pricing policy \(\pi = d^{\infty } \in \varPi \). For any time \(t\ge 0\), define \(D_{\pi }(t,B_{1},B_{2})\) as the number of customers departing from the system by time t under \(\pi \), and let \(\Psi _{\pi }(B_{1},B_{2})\) denote the throughput of the system, namely

where the limit exists since the MDP model is unichain with a finite state space. If \(\pi \) is a static pricing policy with \(\pi (s)=a\) for all \(s \in S\), we use \(D_{a}(t,B_{1},B_{2})\) and \(\Psi _{a}(B_{1},B_{2})\) to represent the number of customers departing from the system by time t under \(\pi \) and the throughput of the system, respectively. Under Assumption 4, it follows from the definition of the gain in Sect. 4.2 that, for any \(B_{1},B_{2} \in \mathbb {Z}_{+}\),

Lemma B.4 follows from Proposition 4.1 of [7] and Theorem 1.A.1 of [26] and will be used to show the convergence of the long-run average profit in a tandem queueing system with general buffer sizes.

Lemma B.4

For any buffers \(B_{1}, {\tilde{B}}_{1}, B_{2}, {\tilde{B}}_{2}\) such that \(B_{i} \le {\tilde{B}}_{i}\) for \(i \in \{1,2\}\) and \(a \in A\), \(D_{a}(t,B_{1},B_{2}) \preceq D_{a}(t,{\tilde{B}}_{1},{\tilde{B}}_{2})\) for any \(t \ge 0\).

1.6 Proof of Proposition 8

If \(a=a_{K}\), then \(\lambda (a_{K})=0\) and \(g(a_{K}, B_{1}, B_{2})=\lambda (a_{K})a_{K} = 0\) for all \(B_{1},B_{2} \in \mathbb {Z}_{+}\) and the result trivially holds. Thus, we consider \(a \ne a_{K}\) and \(\lambda (a)>0\) hereafter.

It follows from Lemma B.4 and Equation (1.A.7) of [26] that \(\mathbb {E}[D_{a}(t,B_{1}, 0)] \le \mathbb {E}[{D}_{a}(t, B_{1},{B}_{2})]\) for any \(t\ge 0\), which with (14) and (B.10) implies that

Since \(\rho (a)<1\), taking limits in the above inequalities as \(B_{1} \rightarrow \infty \), we have from Proposition 6 that

yielding that \(\lim _{B_{1}\rightarrow \infty } g(a,B_{1},B_{2}) = a\lambda (a)\). \(\square \)

Proofs and supporting results for Sect. 7

This section provides supporting results and proofs for Sect. 7.

1.1 Proof of Proposition 9

Under Assumptions 2 and 3, \(f(a)>0\) is continuous and e(a) is strictly increasing and continuous in \([a_{0}, a_{K}]\). Define \(\phi (a) :=a \lambda (a)\). Taking the first-order derivative of \(a\lambda (a)\) gives that

where \(u(a) :=-a +1/e(a)\). Thus, u(a) is continuous and strictly decreasing in \([a_{0}, a_{K}]\).

(1) We have that \(u(a_{K}) = -a_{K} + 1/e(a_{K}) = - a_{K}< 0\).

(i) If \(u(a_{0}) \le 0\), since u(a) is strictly decreasing in \([a_{0}, a_{K}]\), it follows that \(u(a) < u(a_{0}) \le 0\) for all \(a \in (a_{0}, a_{K}]\). Note that \(f(a) >0\). Thus, \(\phi '(a) = \lambda f(a)u(a) < 0\) for all \(a \in (a_{0}, a_{K}]\) and \(\phi '(a_{0}) \le 0\), which implies that \(\phi (a)\) is strictly decreasing in \([a_{0}, a_{K}]\). Thus, \(a^{*} = a_{0}\) is unique.

(ii) If \(u(a_{0}) > 0\), since \(u(a_{K}) < 0\) and u(a) is continuous and strictly decreasing in \([a_{0}, a_{K}]\), it follows that there exists a unique \(a^{**} \in (a_{0}, a_{K})\) such that \(u(a^{**}) = 0\). Again, since u(a) is strictly decreasing, it follows that

$$\begin{aligned} \phi '(a) = \lambda f(a) u(a) \left\{ \begin{array}{ll} >0 &{} \quad \text {for } a \in [a_{0}, a^{**}),\\ =0 &{} \quad \text {for } a = a^{**}, \\ <0 &{} \quad \text {for } a \in (a^{**}, a_{K}]. \end{array} \right. \end{aligned} \tag{C.1}$$

Thus, \(\phi (a) \) is strictly increasing in \([a_{0}, a^{**})\) and strictly decreasing in \((a^{**}, a_{K}]\), implying that \(a^{*} = a^{**}\) is unique.

(2) Since \(a^{*} \in \arg \max _{a \in [a_{0}, a_{K}]} a\lambda (a)\) is unique by Proposition 9(1), if \(a^{*} \in A\), it follows that \(a^{*} \in \arg \max _{a \in A}a\lambda (a)\) is the unique maximizer in A. Thus, \(A^{\text {ub}} = \{a^{*}\}\).

(3) If there exists some \(k \in \{0,1,\ldots , K-1\}\) such that \(a^{*} \in (a_{k},a_{k+1})\), we have \(a^{*} = a^{**}\) from the proof of Proposition 9(1). It follows from (C.1) that

$$\begin{aligned} \phi '(a) = \lambda f(a) u(a) \left\{ \begin{array}{ll} >0 &{}\quad \text {for } a \in [a_{0}, a_{k}],\\ <0 &{} \quad \text {for } a \in [a_{k+1}, a_{K}]. \end{array} \right. \end{aligned}$$Thus, \(\phi (a) \) is strictly increasing in \([a_{0}, a_{k}]\) and strictly decreasing in \([a_{k+1}, a_{K}]\), implying that \(A^{\text {ub}} \subset \{a_{k}, a_{k+1}\}\). \(\square \)
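As a worked illustration of Proposition 9 (an assumed example, not taken from the paper), suppose that willingness to pay is uniform on \([0,1]\), so that \(F(a)=a\), \(f(a)=1\), and \(\lambda (a)=\lambda (1-a)\), and read \(e(a)\) as the hazard rate \(f(a)/(1-F(a))=1/(1-a)\), which is the reading consistent with \(\phi '(a)=\lambda f(a)u(a)\). Then

$$\begin{aligned} u(a) = -a + (1-a) = 1-2a, \qquad a^{**} = \tfrac{1}{2}, \qquad \phi (a^{**}) = \tfrac{\lambda }{4}. \end{aligned}$$

For the hypothetical price set \(A=\{0.2, 0.45, 0.6, 1\}\), we have \(u(a_{0})=0.6>0\) and \(a^{**}\in (0.45,0.6)\), so part (3) applies with \(a_{k}=0.45\) and \(a_{k+1}=0.6\). Comparing the two candidates gives

$$\begin{aligned} \phi (0.45) = 0.2475\,\lambda \ \ > \ \ 0.24\,\lambda = \phi (0.6), \end{aligned}$$

so \(A^{\text {ub}}=\{0.45\}\) in this example.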

1.2 Proof of Theorem 3

(1) Fix \(B_{1},B_{2}\in \mathbb {Z}_{+}\). Since \((d^{\text {st}})^{\infty }\in \varPi \), it holds that \(g^{\text {st}}(B_{1}, B_{2})\le g^{*}(B_{1}, B_{2})\). Let \(\pi ^{*}= (d^{*})^{\infty }\) denote an optimal dynamic pricing policy such that \(d^{*}(s) = a_{s}^{*}\in A\) for each \(s\in S\). Let \({\hat{\eta }}_{\pi ^{*}}\in \mathbb {R}^{|S|}\) denote the steady-state distribution of \(X_{\pi ^{*}}\) with \({\hat{\eta }}_{\pi ^{*}}(s) \ge 0\) for all \(s\in S\) and \(\Vert {\hat{\eta }}_{\pi ^{*}}\Vert _{1}=1\). Since A is finite, \(g^{\text {ub}}\) and \(a^{\text {ub}}\) are well defined and we have that

$$\begin{aligned} g^{*}(B_{1}, B_{2})&= \sum _{s\in S, s_{1}\ne B_{1}+1} a_{s}^{*}\lambda (a_{s}^{*}) {\hat{\eta }}_{\pi ^{*}}(s) \le \sum _{s\in S, s_{1}\ne B_{1}+1}\lambda (a^{\text {ub}})a^{\text {ub}}{\hat{\eta }}_{\pi ^{*}}(s) \\&\le \lambda (a^{\text {ub}})a^{\text {ub}} = g^{\text {ub}}. \end{aligned}$$

(2) Fix \(B_{2}\in \mathbb {Z}_{+}\). Let \((d^{\text {ub}})^{\infty }\in \varPi \) denote a static policy such that \(d^{\text {ub}}(s) = a^{\text {ub}}\in A^{\text {ub}}\) for all \(s\in S\). It follows from the optimality of \((d^{\text {st}})^{\infty }\) among static policies that \(g(a^{\text {ub}}, B_{1}, B_{2}) \le g^{\text {st}}(B_{1}, B_{2})\) for any \(B_{1} \in \mathbb {Z}_{+}\). Since \(\rho (a^{\text {ub}}) <1\), it follows from Proposition 8 and Theorem 3(1) that

$$\begin{aligned} g^{\text {ub}}= & {} a^{\text {ub}}\lambda (a^{\text {ub}}) = \lim _{B_{1}\rightarrow \infty }g(a^{\text {ub}}, B_{1}, B_{2}) \le \lim _{B_{1}\rightarrow \infty } g^{\text {st}}(B_{1}, B_{2})\\\le & {} \lim _{B_{1}\rightarrow \infty }g^{*}(B_{1}, B_{2}) \le g^{\text {ub}}. \end{aligned}$$

It follows that \(\lim _{B_{1}\rightarrow \infty } g^{\text {st}}(B_{1}, B_{2}) = \lim _{B_{1}\rightarrow \infty } g^{*}(B_{1}, B_{2}) = g^{\text {ub}}\). \(\square \)

1.3 Proof of Theorem 4 and supporting lemmas

Consider the tandem queueing system with buffer sizes \(B_{1},B_{2} \in \mathbb {Z}_{+}\) under a static pricing policy \(\pi =d^{\infty }\), where \(d(s)=a\) for all \(s \in S\), except that there is no blocking and customers departing from station 1 are lost if the buffer space before station 2 is full. Let \({\tilde{\Psi }}_{a}(B_{1},B_{2})\) denote the throughput of this loss system. Moreover, for any stochastic process \(\{X(t)\}\) and time t, let \(X(t-)=\lim _{s\nearrow t}X(s)\) denote the state of the stochastic process just prior to time t.

1.3.1 Lemma C.1

Lemma C.1

It holds that \({\Psi }_{a}(B_{1},B_{2}) \ge {\tilde{\Psi }}_{a}(B_{1},B_{2})\).

Proof

Call the tandem queueing system with communication blocking System 1 and that with customer loss System 2. Define the following notation for time \(t \ge 0\) and \(i \in \{1,2\}\):

- \(X_{i}(t)\) \(({\tilde{X}}_{i}(t))\): the number of customers waiting in the buffer before station i or being served at station i in System 1 (System 2) at time t,

- \({\tilde{D}}_{a}(t,B_{1},B_{2})\): the number of customers departing from System 2 by time t under policy \(\pi \).

Consider three Poisson processes with parameters \(\lambda (a)\), \(\mu _{1}\), and \(\mu _{2}\). Let \(\{t_{k}\}_{k=1}^{\infty }\) denote the sequence of time points for the occurrences in the Poisson process that aggregates the three Poisson processes and has rate parameter \(\lambda (a)+\mu _{1}+\mu _{2}\). Thus, each \(t_{k}\) is the time for a potential arrival to both systems (i.e., Systems 1 and 2) or service completion at station 1 or 2 in both systems. Considering any sample path of the Poisson process with parameter \(\lambda (a)+\mu _{1}+\mu _{2}\), we will show that

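$$X_{i}(t) \ge {\tilde{X}}_{i}(t) \quad \text {for all } t \ge 0 \text { and } i \in \{1,2\}. \qquad \text {(C.2)}$$

Since we can start both systems in the same state, (C.2) holds for \(t \in [0, t_{1})\). Assume that (C.2) holds for all \( t \in [0,t_{k})\). We have the following cases at time \(t_{k}\):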

(1) \(t_{k}\) is a customer’s arrival time. Since \(X_{i}(t_{k}-) \ge {\tilde{X}}_{i}(t_{k}-)\) for \(i \in \{1,2\}\) by the induction hypothesis, we have the following cases:

(a) \({\tilde{X}}_{1}(t_{k}-) \le X_{1}(t_{k}-) <B_{1}+1\). Thus, the arriving customer enters both systems. It follows that \(X_{1}(t_{k})=X_{1}(t_{k}-)+1 \ge {\tilde{X}}_{1}(t_{k}-)+1 = {\tilde{X}}_{1}(t_{k})\).

(b) \({\tilde{X}}_{1}(t_{k}-)=X_{1}(t_{k}-)=B_{1}+1\). The customer arriving at \(t_{k}\) is lost in both systems. Thus, \(X_{1}(t_{k})={\tilde{X}}_{1}(t_{k}) = B_{1}+1\).

(c) \({\tilde{X}}_{1}(t_{k}-)<B_{1}+1\) and \(X_{1}(t_{k}-)=B_{1}+1\). The customer arriving at \(t_{k}\) enters System 2 but is lost in System 1. Thus, \({\tilde{X}}_{1}(t_{k}) = {\tilde{X}}_{1}(t_{k}-)+1 \le X_{1}(t_{k})=X_{1}(t_{k}-)=B_{1}+1\).

In each of these cases, there is no departure from either station 1 or 2 at time \(t_{k}\). We thus have \(X_{2}(t_{k})=X_{2}(t_{k}-) \ge {\tilde{X}}_{2}(t_{k}-)={\tilde{X}}_{2}(t_{k})\).

(2) \(t_{k}\) is a potential service completion time at station 1. Since \(X_{i}(t_{k}-) \ge {\tilde{X}}_{i}(t_{k}-)\) for \(i \in \{1,2\}\) by the induction hypothesis, we have the following cases:

(a) \({\tilde{X}}_{2}(t_{k}-) \le X_{2}(t_{k}-) <B_{2}+1\). Thus, a customer departing from station 1 at \(t_{k}\) can enter the buffer space before station 2 in both systems. It follows that \(X_{1}(t_{k})=(X_{1}(t_{k}-)-1)^{+}\ge ({\tilde{X}}_{1}(t_{k}-)-1)^{+} = {\tilde{X}}_{1}(t_{k})\) and \(X_{2}(t_{k})=X_{2}(t_{k}-)+{\mathbf {1}}_{[X_{1}(t_{k}-)>0]} \ge {\tilde{X}}_{2}(t_{k}-)+{\mathbf {1}}_{[{\tilde{X}}_{1}(t_{k}-)>0]} = {\tilde{X}}_{2}(t_{k})\).

(b) \({\tilde{X}}_{2}(t_{k}-)=X_{2}(t_{k}-)=B_{2}+1\). A customer departing from station 1 of System 1 would be blocked from entering station 2, and one departing from station 1 of System 2 would be lost. Thus, \(X_{1}(t_{k}) = X_{1}(t_{k}-) \ge {\tilde{X}}_{1}(t_{k}-) \ge ({\tilde{X}}_{1}(t_{k}-) - 1)^{+} = {\tilde{X}}_{1}(t_{k})\) and \(X_{2}(t_{k}) = X_{2}(t_{k}-) \ge {\tilde{X}}_{2}(t_{k}-) = {\tilde{X}}_{2}(t_{k})\).

(c) \({\tilde{X}}_{2}(t_{k}-)<B_{2}+1\) and \(X_{2}(t_{k}-)=B_{2}+1\). A customer departing from station 1 is blocked from entering station 2 in System 1, whereas a customer departing from station 1 enters station 2 in System 2. Thus, \(X_{1}(t_{k}) = X_{1}(t_{k}-) \ge {\tilde{X}}_{1}(t_{k}-) \ge ({\tilde{X}}_{1}(t_{k}-) - 1)^{+} = {\tilde{X}}_{1}(t_{k})\) and \(X_{2}(t_{k}) = X_{2}(t_{k}-) \ge {\tilde{X}}_{2}(t_{k}-) + {\mathbf {1}}_{[{\tilde{X}}_{1}(t_{k}-)>0]} = {\tilde{X}}_{2}(t_{k})\).

(3) \(t_{k}\) is a potential service completion time at station 2. Since \(X_{i}(t_{k}-) \ge {\tilde{X}}_{i}(t_{k}-)\) for \(i \in \{1,2\}\) by the induction hypothesis and there is no arrival or service completion at station 1 at \(t_{k}\), we have \(X_{1}(t_{k}) = X_{1}(t_{k}-) \ge {\tilde{X}}_{1}(t_{k}-) = {\tilde{X}}_{1}(t_{k})\) and \(X_{2}(t_{k})=(X_{2}(t_{k}-) - 1)^{+} \ge ({\tilde{X}}_{2}(t_{k}-) - 1)^{+}={\tilde{X}}_{2}(t_{k})\).

Thus, (C.2) holds for \(t=t_{k}\). Since there is no event occurrence during \(t \in (t_{k}, t_{k+1})\), (C.2) holds for \(t \in [0, t_{k+1})\), implying that (C.2) holds for all \(t \ge 0\).

Since \(X_{2}(t) \ge {\tilde{X}}_{2}(t)\) for all \(t \ge 0\), it follows that

which with (B.9) yields \(\Psi _{a}(B_{1},B_{2}) \ge {\tilde{\Psi }}_{a}(B_{1},B_{2})\). \(\square \)
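The coupling used in this proof can also be checked numerically. The following is a minimal sketch, not part of the original argument: it drives the blocking system and the loss system with one common stream of uniformized events and records whether the station-wise ordering of queue lengths is ever violated. The function name `coupled_paths`, its parameters, and the choice to start both systems empty are illustrative assumptions; `lam` stands for \(\lambda (a)\) under the fixed static price.

```python
import random


def coupled_paths(lam, mu1, mu2, B1, B2, n_events, seed=0):
    """Drive the blocking system (x) and the loss system (y) with one event stream.

    Returns (dominated, dep_block, dep_loss), where `dominated` is True if
    x1 >= y1 and x2 >= y2 held after every event, and dep_* count the
    departures from station 2 in each system.
    """
    rng = random.Random(seed)
    total = lam + mu1 + mu2
    x1 = x2 = 0  # blocking system (System 1), assumed to start empty
    y1 = y2 = 0  # loss system (System 2), assumed to start empty
    dep_block = dep_loss = 0
    dominated = True
    for _ in range(n_events):
        u = rng.random() * total
        if u < lam:
            # Potential arrival: admitted only if station 1 has room.
            if x1 < B1 + 1:
                x1 += 1
            if y1 < B1 + 1:
                y1 += 1
        elif u < lam + mu1:
            # Potential service completion at station 1.
            # Blocking system: the customer moves only if station 2 has room.
            if x1 > 0 and x2 < B2 + 1:
                x1 -= 1
                x2 += 1
            # Loss system: the customer always leaves station 1 and is
            # lost if station 2 is full.
            if y1 > 0:
                y1 -= 1
                if y2 < B2 + 1:
                    y2 += 1
        else:
            # Potential service completion at station 2.
            if x2 > 0:
                x2 -= 1
                dep_block += 1
            if y2 > 0:
                y2 -= 1
                dep_loss += 1
        dominated = dominated and x1 >= y1 and x2 >= y2
    return dominated, dep_block, dep_loss


if __name__ == "__main__":
    print(coupled_paths(lam=1.0, mu1=1.2, mu2=0.8, B1=3, B2=2, n_events=20000))
```

Because both systems see the same events, the ordering is a pathwise property rather than a statistical one, so the first returned value should be `True` for any seed and any parameter choice, and the blocking system should never record fewer departures than the loss system.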

1.3.2 Lemma C.2

Lemma C.2 provides an upper bound on the optimal dynamic gain and throughput of the system under any dynamic pricing policy.

Lemma C.2

Suppose that Assumption 4 holds. Then, for any \(B_{1},B_{2} \in \mathbb {Z}_{+}\) and policy \(\pi \in \varPi \), it holds that \(\Psi _{\pi }(B_{1},B_{2}) \le \Psi \) and \(g^{*}(B_{1},B_{2}) \le a_{K-1}\Psi \).

Proof

Consider any dynamic pricing policy \(\pi \in \varPi \). Call the tandem queueing system with finite buffers \(B_{1},B_{2}\) under \(\pi \) System 1 and the \(M/M/1/(B_{2}+1)\) queue with arrival rate \(\mu _{1}\) and service rate \(\mu _{2}\) System 2. For any time \(t \ge 0\) and \(i \in \{1,2\}\), define

- \(X_{i}(t)\): the number of customers waiting in the buffer before station i or being served at station i in System 1 at time t,

- \({\tilde{X}}_{2}(t)\): the number of customers waiting in the buffer or being served in System 2 at time t,

- \({\tilde{D}}(t)\): the number of customers departing from System 2 by time t.

We will prove the result by a sample path argument. Similarly to the proof of Lemma C.1, consider three Poisson processes with parameters \(\lambda \), \(\mu _{1}\), and \(\mu _{2}\), and let \(\{t_{k}\}_{k=1}^{\infty }\) denote the sequence of time points for the occurrences in the corresponding aggregate Poisson process that has rate parameter \(\lambda +\mu _{1}+\mu _{2}\).

For any sample path of the Poisson process with parameter \(\lambda +\mu _{1}+\mu _{2}\), we will show that

$$X_{2}(t) \le {\tilde{X}}_{2}(t) \quad \text {for all } t \ge 0. \qquad \text {(C.3)}$$

Since we can start both systems with \(X_{2}(0)={\tilde{X}}_{2}(0)\), (C.3) holds for \(t \in [0, t_{1})\). Assume that (C.3) holds for all \( t \in [0,t_{k})\). If \(t_{k}\) is a customer arrival time in System 1, there is no departure from any station in either system at \(t_{k}\) and (C.3) holds for \(t=t_{k}\). Now, we consider the following cases for time \(t_{k}\):

(1) \(t_{k}\) is a potential service completion time at station 1 in System 1 or arrival time in System 2. Since \(X_{2}(t_{k}-) \le {\tilde{X}}_{2}(t_{k}-)\) by the induction hypothesis, we have the following cases:

(a) \({X}_{2}(t_{k}-) \le {{\tilde{X}}}_{2}(t_{k}-) <B_{2}+1\). Thus, a customer arriving to System 2 enters the system, and one departing from station 1 of System 1 at \(t_{k}\) can enter the buffer space before station 2 of System 1. It follows that \({{\tilde{X}}}_{2}(t_{k})= {{\tilde{X}}}_{2}(t_{k}-)+1 \ge {X}_{2}(t_{k}-)+{\mathbf {1}}_{[{X}_{1}(t_{k}-)>0]} = {X}_{2}(t_{k})\).

(b) \({X}_{2}(t_{k}-)= {{\tilde{X}}}_{2}(t_{k}-)=B_{2}+1\). A customer arriving to System 2 would be lost, and one departing from station 1 of System 1 would be blocked from entering station 2. Thus, \({{\tilde{X}}}_{2}(t_{k}) = {{\tilde{X}}}_{2}(t_{k}-) \ge {X}_{2}(t_{k}-) = {X}_{2}(t_{k})\).

(c) \({X}_{2}(t_{k}-)<B_{2}+1\) and \({{\tilde{X}}}_{2}(t_{k}-)=B_{2}+1\). A customer arriving to System 2 would be lost, and one departing from station 1 enters station 2 in System 1. Thus, \({{\tilde{X}}}_{2}(t_{k}) = {{\tilde{X}}}_{2}(t_{k}-) \ge {X}_{2}(t_{k}-) + {\mathbf {1}}_{[{X}_{1}(t_{k}-)>0]} = {X}_{2}(t_{k})\).

(2) \(t_{k}\) is a potential service completion time at station 2 in System 1 or in System 2. Since \({{\tilde{X}}}_{2}(t_{k}-) \ge {X}_{2}(t_{k}-)\) by the induction hypothesis, we have \({{\tilde{X}}}_{2}(t_{k})=({{\tilde{X}}}_{2}(t_{k}-) - 1)^{+} \ge ({X}_{2}(t_{k}-) - 1)^{+}={X}_{2}(t_{k})\).

Thus, (C.3) holds for \(t=t_{k}\). Since there is no event occurrence during \(t \in (t_{k}, t_{k+1})\), (C.3) holds for \(t \in [0, t_{k+1})\), implying that (C.3) holds for all \(t \ge 0\).

Note that the throughput of System 2 is \(\Psi \), i.e., \(\lim _{t \rightarrow \infty }\frac{\mathbb {E}[{{\tilde{D}}}(t)]}{t} = \Psi \). Since \({\tilde{X}}_{2}(t) \ge {X}_{2}(t)\) for all \(t \ge 0\), it follows that

which with (B.9) yields \(\Psi _{\pi }(B_{1},B_{2}) \le \Psi \).

Let \(a(i) \in A\) denote the price paid by the i-th departing customer in System 1 under \(\pi \). Note that \(a(i) \ne a_{K-1}\) (the customer would have been blocked from entering the system otherwise). Since Assumption 4 holds, it follows from the definition of the gain in Sect. 4.2 and (C.4) that

Since \(\pi \) is arbitrary, we have \(g^{*}(B_{1},B_{2}) \le a_{K-1}\Psi \). \(\square \)
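For intuition about the bound in Lemma C.2, the following sketch evaluates the steady-state throughput of an \(M/M/1/(B_{2}+1)\) queue with arrival rate \(\mu _{1}\) and service rate \(\mu _{2}\) using the standard birth-death formula, and replays the coupling from the proof on a simulated event stream. It is an illustration under stated assumptions, not the authors' computation: the expression the paper itself uses for \(\Psi \) is defined elsewhere, the names `mm1k_throughput` and `coupled_station2` are hypothetical, and the dynamic policy is represented by a hypothetical function `accept_prob(x1, x2)` that gives the probability that a potential arrival joins in state \((x_{1},x_{2})\), which corresponds to thinning the rate-\(\lambda \) stream.

```python
import random


def mm1k_throughput(mu1, mu2, B2):
    """Steady-state throughput of an M/M/1/(B2+1) queue (arrival mu1, service mu2)."""
    K = B2 + 1                       # at most B2 waiting plus one in service
    r = mu1 / mu2
    weights = [r ** j for j in range(K + 1)]
    pi0 = 1.0 / sum(weights)         # stationary probability of an empty queue
    return mu2 * (1.0 - pi0)         # the server is busy with probability 1 - pi0


def coupled_station2(lam, mu1, mu2, B1, B2, accept_prob, n_events, seed=0):
    """Couple station 2 of the tandem system (x2) with the single queue (q)."""
    rng = random.Random(seed)
    total = lam + mu1 + mu2
    x1 = x2 = 0   # tandem system with blocking (System 1), assumed to start empty
    q = 0         # M/M/1/(B2+1) queue (System 2), assumed to start empty
    dep_tandem = dep_queue = 0
    dominated = True
    for _ in range(n_events):
        u = rng.random() * total
        if u < lam:
            # Potential arrival to System 1 only, thinned by the policy.
            if x1 < B1 + 1 and rng.random() < accept_prob(x1, x2):
                x1 += 1
        elif u < lam + mu1:
            # Service completion at station 1 of System 1 (blocked if
            # station 2 is full) and arrival to System 2 (lost if full).
            if x1 > 0 and x2 < B2 + 1:
                x1 -= 1
                x2 += 1
            if q < B2 + 1:
                q += 1
        else:
            # Potential service completion at station 2 / in System 2.
            if x2 > 0:
                x2 -= 1
                dep_tandem += 1
            if q > 0:
                q -= 1
                dep_queue += 1
        dominated = dominated and q >= x2
    return dominated, dep_tandem, dep_queue


if __name__ == "__main__":
    # Hypothetical policy: admit less often as station 1 fills up.
    policy = lambda x1, x2: 1.0 / (1.0 + x1)
    print(mm1k_throughput(1.2, 0.8, 2))
    print(coupled_station2(1.0, 1.2, 0.8, 3, 2, policy, 20000))
```

The single queue receives a potential arrival at every station 1 event, whether or not station 1 of the tandem system actually holds a customer, which is why its content dominates \(X_{2}(t)\) on every sample path regardless of the admission rule.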

1.3.3 Lemma C.3

For any \(a \in A\) and \(B_{1} \in \mathbb {Z}_{+}\), define

Lemma C.3 provides a lower bound on the throughput of the loss system.

Lemma C.3

For any \(B_{1},B_{2} \in \mathbb {Z}_{+}\) and \(a \in A\), it holds that \({\tilde{\Psi }}_{a}(B_{1},B_{2}) \ge p_{b}(B_{1})\Psi \).

Proof

Consider the loss system under a static pricing policy \( \pi =d^{\infty }\in \varPi \), where \(d(s)=a\) for all \(s \in S\), except that server 2 idles whenever server 1 idles. Let \({\hat{\Psi }}_{a}(B_{1},B_{2})\) denote the throughput of the system. Call the loss system System 1 and that with the idling rule System 2. Define the following notation for \(a \in A\), time \(t \ge 0\), and \(i \in \{1,2\}\):

- \(\tilde{X}_{i}(t)\) \(({\hat{X}}_{i}(t))\): the number of customers waiting in the buffer before station i or being served at station i in System 1 (2) at time t,

- \(\tilde{W}(t)\) \(({\hat{W}}(t))\): the number of customers entering station 2 of System 1 (2) by time t.

Let \(\{t_{k}\}_{k=1}^{\infty }\) denote the event times in a Poisson process with rate parameter \(\lambda (a)+\mu _{1}+\mu _{2}\). We will show that

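$$\tilde{X}_{1}(t) = {\hat{X}}_{1}(t) \quad \text {and} \quad \tilde{X}_{2}(t) \le {\hat{X}}_{2}(t) \quad \text {for all } t \ge 0. \qquad \text {(C.5)}$$

Since we can start both systems in the same state, (C.5) holds for \(t \in [0, t_{1})\). Assume that (C.5) holds for all \( t \in [0,t_{k})\). We have the following cases for time \(t_{k}\):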

(1) \(t_{k}\) is a customer’s arrival time. Since \({\tilde{X}}_{1}(t_{k}-) = {\hat{X}}_{1}(t_{k}-)\) and \({\tilde{X}}_{2}(t_{k}-) \le {\hat{X}}_{2}(t_{k}-)\) by the induction hypothesis, we have the following cases:

(a) \(\tilde{X}_{1}(t_{k}-) = {\hat{X}}_{1}(t_{k}-) < B_{1}+1\). Thus, the arriving customer enters both systems. It follows that \(\tilde{X}_{1}(t_{k})=\tilde{X}_{1}(t_{k}-)+1 = {\hat{X}}_{1}(t_{k}-)+1 = {\hat{X}}_{1}(t_{k})\).

(b) \(\tilde{X}_{1}(t_{k}-)={\hat{X}}_{1}(t_{k}-)=B_{1}+1\). The customer arriving at \(t_{k}\) is lost in both systems. Thus, \(\tilde{X}_{1}(t_{k})={\hat{X}}_{1}(t_{k}) = B_{1}+1\).

In both cases, there is no departure from either station 1 or 2 at time \(t_{k}\). We thus have \(\tilde{X}_{2}(t_{k})=\tilde{X}_{2}(t_{k}-) \le {\hat{X}}_{2}(t_{k}-)={\hat{X}}_{2}(t_{k})\).

(2) \(t_{k}\) is a potential service completion time at station 1. Since \({\tilde{X}}_{1}(t_{k}-) = {\hat{X}}_{1}(t_{k}-)\) and \({\tilde{X}}_{2}(t_{k}-) \le {\hat{X}}_{2}(t_{k}-)\) by the induction hypothesis, we have the following cases:

(a) \(\tilde{X}_{2}(t_{k}-) \le {\hat{X}}_{2}(t_{k}-) <B_{2}+1\). Thus, a customer departing from station 1 at \(t_{k}\) can enter the buffer space before station 2 in both systems. It follows that \(\tilde{X}_{1}(t_{k})=(\tilde{X}_{1}(t_{k}-)-1)^{+} = ({\hat{X}}_{1}(t_{k}-)-1)^{+} = {\hat{X}}_{1}(t_{k})\) and \(\tilde{X}_{2}(t_{k})=\tilde{X}_{2}(t_{k}-)+{\mathbf {1}}_{[\tilde{X}_{1}(t_{k}-)>0]} \le {\hat{X}}_{2}(t_{k}-)+{\mathbf {1}}_{[{\hat{X}}_{1}(t_{k}-)>0]} = {\hat{X}}_{2}(t_{k})\).

(b) \(\tilde{X}_{2}(t_{k}-)={\hat{X}}_{2}(t_{k}-)=B_{2}+1\). A customer departing from station 1 would be lost in both systems. Thus, \(\tilde{X}_{1}(t_{k}) = (\tilde{X}_{1}(t_{k}-)-1)^{+} = ({\hat{X}}_{1}(t_{k}-) - 1)^{+} = {\hat{X}}_{1}(t_{k})\) and \(\tilde{X}_{2}(t_{k}) = \tilde{X}_{2}(t_{k}-) \le {\hat{X}}_{2}(t_{k}-) = {\hat{X}}_{2}(t_{k})\).

(c) \(\tilde{X}_{2}(t_{k}-)<B_{2}+1\) and \({\hat{X}}_{2}(t_{k}-)=B_{2}+1\). A customer departing from station 1 enters station 2 in System 1, whereas a customer departing from station 1 is lost in System 2. Thus, \(\tilde{X}_{1}(t_{k}) = (\tilde{X}_{1}(t_{k}-)-1)^{+} = ({\hat{X}}_{1}(t_{k}-)-1)^{+} = {\hat{X}}_{1}(t_{k})\) and \(\tilde{X}_{2}(t_{k}) = \tilde{X}_{2}(t_{k}-) + {\mathbf {1}}_{[\tilde{X}_{1}(t_{k}-)>0]} \le {\hat{X}}_{2}(t_{k}-) = {\hat{X}}_{2}(t_{k})\).

(3) \(t_{k}\) is a potential service completion time at station 2. Since \({\tilde{X}}_{1}(t_{k}-) = {\hat{X}}_{1}(t_{k}-)\) and \({\tilde{X}}_{2}(t_{k}-) \le {\hat{X}}_{2}(t_{k}-)\) by the induction hypothesis and there is no arrival or service completion at station 1, we have \( \tilde{X}_{1}(t_{k}-) = \tilde{X}_{1}(t_{k}) = {\hat{X}}_{1}(t_{k}) = {\hat{X}}_{1}(t_{k}-)\) and the following cases:

-

(a)