Abstract

In this work, we study an application of fractional-order Hopfield neural networks to the solution of optimization problems. The proposed network was simulated using a semi-analytical method based on Adomian decomposition, and it was applied to the on-line estimation of time-varying parameters of nonlinear dynamical systems. Through simulations, it was demonstrated how fractional-order neurons influence the convergence of the Hopfield network, improving the performance of the parameter identification process compared with integer-order implementations. Two definitions of the fractional derivative, the Caputo and the Caputo–Fabrizio definitions, were considered and compared as a function of the fractional order. Simulation results on benchmarks commonly adopted in the literature are reported to demonstrate the suitability of the proposed architecture for on-line parameter estimation.

1 Introduction

The problem of system identification is ubiquitous in different research fields, ranging from biology to engineering applications. Access to mathematical models of the considered phenomena, often in the form of systems of nonlinear differential equations, can help the identification process by exploiting the available a priori knowledge. However, the parameters of any model present inaccuracies that need to be corrected on the basis of the available experimental data (i.e. grey-box modelling) [27]. The parameter identification problem can be solved using techniques based on least squares and maximum likelihood methods, although other techniques based on genetic algorithms, neural networks, and neuro-fuzzy systems are also adopted [26, 40, 41].

It is well established that Hopfield neural networks (HNNs) [17, 18] can be used to solve optimization problems, including parameter estimation in the context of system identification [9]. Interesting examples are reported in [6, 20, 21, 39] where Hopfield neural networks were applied for on-line identification of grey-box models, to continuously obtain an estimation of the system parameters.

The application of Hopfield models to optimization is a consequence of studying their stability with an energy function method: the network seeks a minimum of its Lyapunov function, which is built from the target function. In parameter identification problems, the Hopfield network is designed so that its Lyapunov function coincides with the prediction error, so that the network evolution approaches a minimum of the error. In [8] and [7], the authors presented a stability analysis of HNNs for on-line parameter identification, which differ from conventional HNNs because their weights and biases are time-variant and depend on the state variables of the modelled system. A comprehensive study of the problem of using HNNs for on-line parameter estimation has been provided in [4]. While these studies are based on integer-order real-valued neuron models, in our work we examine a generalization of HNNs whose dynamics can be described by fractional-order differential equations [10] and, for the first time, we apply them to parameter estimation problems. Fractional-order systems were recently applied to improve the accuracy of epidemic and electrical models [1, 11] and also to realize more precise and robust control systems [31, 35]. In [12] a fractional control protocol has been applied to multi-agent systems to enhance the convergence speed and robustness of the system under constant disturbances. A new variable fractional-order derivative, applied to the coronavirus epidemic, has been proposed in [38] where, by using fixed point theory, the existence and uniqueness of the solution have been demonstrated. In [36] a novel fractional-order PID sliding mode controller with a neural network observer is proposed and applied to hypersonic vehicles. Another application of fractional-order PID controllers has been presented in [16], where a particle swarm optimization algorithm is used to search for the optimal parameters of the controllers.
The interest of the research community in fractional-order HNNs is further demonstrated by recent theoretical analyses. The global stability problem for fractional-order HNNs has been investigated in [42], adopting an intermittent control. Moreover, a new three-dimensional fractional-order HNN with a delay has been investigated, proposing a synchronization method based on a state observer [19]. The role of activation functions has also been examined in [33], where the stability and synchronization of fractional-order HNNs are analysed using Lyapunov functions. In [14] classical and non-integer model order reduction methodologies have been presented, demonstrating the suitability of fractional calculus for compressing information while modelling systems and for describing long-term memory effects.

In on-line applications the convergence time is particularly relevant; therefore, its relation with the value of the fractional order has been investigated in simulations carried out using the Adomian algorithm, a semi-analytical method for simulating fractional-order differential equations [3]. Different fractional derivative definitions are available in the literature [5, 33, 34]. The Caputo and the Caputo–Fabrizio definitions were applied to develop the proposed fractional-order HNN and compared on two case studies commonly adopted in the literature. The former concerns the estimation of the parameters of the well-known Lorenz system, which exhibits chaotic behaviour [28]. Despite the complexity of the system dynamics, the approach can be easily applied because the system is linear in the parameters. It has also been used as a testbed by Lazzús and coauthors in [26] to evaluate the performance of parameter estimation methods based on swarm intelligence. The latter case study is a mechanical two-cart system, adopted in [4] to evaluate the identification performance of an integer-order HNN. The role of the fractional order of the derivatives was investigated to demonstrate the improvements introduced by the proposed architecture when compared with traditional integer-order solutions.

The main contributions of this work are summarized as follows:

1. An on-line identification method for grey-box models, based on an HNN and mathematically formulated in the context of fractional-order systems, is proposed.

2. The Caputo and Caputo–Fabrizio definitions of the fractional-order derivative are considered and compared in the field of parameter identification.

3. The Adomian decomposition method is applied to guarantee an accurate approximation of the fractional derivatives and a fast convergence of the optimization procedure.

4. The performance of the proposed solutions, both in terms of convergence time and prediction accuracy, is reported and compared, using well-established benchmarks, with other techniques adopted in the literature.

5. The relation between the obtained performance and the fractional order of the derivatives is investigated in depth to better understand the advantages and bottlenecks of the proposed approach.

The remainder of this paper is organized as follows: Sect. 2 describes the proposed HNN; Sect. 3 presents the Adomian decomposition method which has been used in simulations; Sect. 4 deals with the application of fractional-order Hopfield networks to time-varying parameter identification; and Sect. 5 describes the application of our method and main findings. Finally, conclusions are drawn in Sect. 6.

2 Fractional Hopfield neural network

A generalization of the HNN model is obtained by introducing a fractional-order neuron. Fractional calculus has been suggested as an appropriate mathematical tool to describe a wide variety of physical, chemical and biological processes and, in particular, those following a so-called power law. Further, fractional calculus is characterized by long-term memory and non-locality: the fractional derivative of a function depends not only on local conditions at the evaluated time but also on the whole history of the function [37]. A theoretical study of the behaviour and stability of fractional-order Hopfield networks (FOHNN) was presented in [25], while an implementation in the form of an analog circuit was presented in [32].

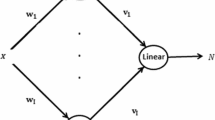

For our work, we consider an FOHNN based on real-valued neurons. The network has a recurrent structure with all-to-all interconnections and is composed of N real-valued neurons. The state of the \(n^{th}\) neuron is denoted by a real variable \(s_{n}(t)\) for \(n=1,\ldots ,N\). Each neuron has a bias input \(\varvec{I}=\{I_j\}\) and is connected to every other neuron through weights \(\varvec{W} = \{w_{jk}\}\), where \(w_{jk}\in \mathbb {R}\) is the weight connecting the \(j^{th}\) and \(k^{th}\) neurons. Each neuron receives inputs from all other neurons, performs a weighted sum of the inputs \(\varvec{\xi }\) and passes the sum through the following activation function:

where \(\chi \) determines the slope of the activation function.

The dynamical model of the network can be described in vector form by the following fractional-order differential equation:

where \(_{_{0}}D_{t}^{(\alpha )}\) is the fractional-order derivative of order \(\alpha \),

are the input potentials to neurons, F is the activation function defined in Eq. (1) and \(\varvec{I}\) is the bias vector. The state vector of the network is expressed as \(\varvec{s}=F(\varvec{\xi }(t))\).

Equation (2) has the same structure as the Abe formulation of the Hopfield neuron, which is widely used in optimization problems [2]. Several definitions of the fractional-order time derivative are available in the literature. In this work, the Caputo and the Caputo–Fabrizio definitions have been taken into account [15].

A sufficient condition for the stability of the dynamics is that the matrix of synaptic weights \(\varvec{W}\) is symmetric with non-negative diagonal entries, that is, \(\varvec{W}=\varvec{W}^T\), \(w_{ii}\ge 0\) [23].

The Lyapunov or energy function of the state \(\varvec{s}\) is:

The existence and the specific characteristics of this Lyapunov function guarantee that the network evolves spontaneously in the descending direction of such a function until approaching the minima of the energy function.

3 Adomian decomposition method

Finding numerical solutions to fractional differential equations can be computationally intensive due to the effect of non-local derivatives in which all previous time points contribute to the current iteration [30].

However, a highly accurate approximation of fractional derivatives, with fast convergence to the solution, can be obtained with the Adomian decomposition method, developed by George Adomian [3]. The algorithm is based on a decomposition of the nonlinear operator as a series in which each term is a generalized polynomial called an Adomian polynomial.

Following the notation introduced in [13], we consider the equation

with

and

where \(\varvec{F}\) represents a nonlinear ordinary differential operator involving both linear and nonlinear terms, and \(\mathbf {g}(t)\) is an inhomogeneous term.

The Adomian decomposition method requires that \(\varvec{F}\) is separated into three terms \(\varvec{F}=\varvec{L}+\varvec{R}+\varvec{N}\), where the differential operator \(\varvec{L}\) may be considered as the highest order derivative in the equation, \(\varvec{R}\) is the remainder of the differential operator and \(\varvec{N}\) expresses the nonlinear terms.

Consequently, the system in Eq. (5) becomes

Here, \(\varvec{L}\) is chosen to be easily invertible and applying the inverse operator \(\varvec{L^{-1}}\) to both sides of Eq. (8) gives

where \(\varvec{\varPsi }_0\) is the kernel of the operator \(\varvec{L^{-1}}\).

The Adomian decomposition method admits the decomposition of \(\varvec{x}\) into an infinite series of components

and the nonlinear term \(N(\varvec{x})\) into an infinite series of polynomials

where the components \(A_j^{(i)}\) are called the Adomian polynomials which can be calculated by using the following expression

with \(i=0,\dots ,M-1\) and \(j=1,\dots ,n\).

Substituting (10) and (11) into Eq. (9) gives

The components \(\varvec{x}^{(i)}\) of the solution (10) can be easily calculated by using the recursive relation

Having determined the first \(M\) components \(\varvec{x}^{(i)}\) of the solution, the \(M\)-term approximate solution in the interval \([t_0,t]\) can be defined as

In order to calculate an approximate analytical solution to a system of differential equations with Caputo’s derivative, we can consider \(\mathbf{L} ^{-1}=\mathbf{J} ^{\alpha }_{t_0}\), where \(\mathbf{J} ^{\alpha }_{t_0}\) is the Riemann–Liouville fractional integral of order \(\alpha \), defined as

As Caputo’s fractional derivative is defined as

where \(m-1<\alpha \le m\) and \(m \in \mathbb {N}\), by combining Eqs. (16) and (17) we obtain
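For reference, the standard textbook forms of the operators involved are recalled below for \(m-1<\alpha <m\). That they coincide with Eqs. (16) to (18) is an assumption, not a transcription of those equations; the symbol \(x\) denotes a generic sufficiently smooth function.

```latex
\begin{aligned}
\mathbf{J}^{\alpha}_{t_0} x(t)
  &= \frac{1}{\Gamma(\alpha)} \int_{t_0}^{t} (t-\tau)^{\alpha-1}\, x(\tau)\, d\tau, \\
{}_{t_0}D^{\alpha}_{t}\, x(t)
  &= \frac{1}{\Gamma(m-\alpha)} \int_{t_0}^{t} (t-\tau)^{m-\alpha-1}\, x^{(m)}(\tau)\, d\tau
   = \mathbf{J}^{m-\alpha}_{t_0}\, \frac{d^{m} x}{dt^{m}}(t), \\
\mathbf{J}^{\alpha}_{t_0}\, {}_{t_0}D^{\alpha}_{t}\, x(t)
  &= x(t) - \sum_{k=0}^{m-1} x^{(k)}(t_0)\, \frac{(t-t_0)^{k}}{k!}.
\end{aligned}
```

The last identity is what makes \(\mathbf{J}^{\alpha}_{t_0}\) a convenient inverse operator: applying it to a Caputo derivative returns the function up to a polynomial fixed by the initial conditions.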

In the same way, we can consider the Caputo–Fabrizio fractional-order derivative [29, 34]:

given \(t>0\) and \(M(\alpha )\) a normalization constant depending on \(\alpha \). In this case, the associated fractional-order integral is:

In our experiments, we imposed:

Numerical methods generally rely on discretizing the nonlinearities in the equations, yield an approximate solution only at specific time instants, and require computer-intensive calculations. By contrast, an analytical method such as Adomian’s gives a continuous approximation of the unknown solution in terms of a truncated series (see Eq. 15), in which the original nonlinearity is transformed into other nonlinear terms (i.e. Adomian polynomials) [24].
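As a minimal illustration of the recursion described in this section, the sketch below applies the decomposition to a scalar test problem that is not taken from the paper: the Caputo equation \(D^{\alpha }x=-x^2\), \(x(0)=x_0\), with \(0<\alpha \le 1\). The function names and the test problem are hypothetical. For \(N(x)=x^2\) the Adomian polynomials are \(A_n=\sum _{k=0}^{n}x^{(k)}x^{(n-k)}\), and each component is a monomial \(c_i t^{i\alpha }\), so only the coefficients need to be propagated.

```python
import math


def adm_coefficients(alpha, x0, n_terms):
    """Coefficients c_i of the Adomian series x(t) = sum_i c_i * t**(i*alpha)
    for the Caputo problem D^alpha x = -x**2, x(0) = x0 (0 < alpha <= 1)."""
    c = [x0]  # the zeroth component is the initial condition
    for n in range(n_terms - 1):
        # Adomian polynomial of N(x) = x**2: A_n = sum_k x^(k) * x^(n-k)
        a_n = sum(c[k] * c[n - k] for k in range(n + 1))
        # Riemann-Liouville integral of a power of t:
        # J^alpha t**(n*alpha) = Gamma(n*alpha+1)/Gamma((n+1)*alpha+1) * t**((n+1)*alpha)
        ratio = math.gamma(n * alpha + 1) / math.gamma((n + 1) * alpha + 1)
        c.append(-a_n * ratio)
    return c


def adm_solution(t, alpha, x0=1.0, n_terms=8):
    """M-term Adomian approximation evaluated at time t >= 0."""
    c = adm_coefficients(alpha, x0, n_terms)
    return sum(ci * t ** (i * alpha) for i, ci in enumerate(c))


# For alpha = 1 the problem reduces to x' = -x**2, whose exact solution is 1/(1+t).
approx = adm_solution(0.3, alpha=1.0)
exact = 1.0 / 1.3
```

With \(\alpha =1\) the coefficients alternate between 1 and -1, so the truncated series is the partial sum of the geometric expansion of \(1/(1+t)\); this also shows that the truncated series is only reliable for sufficiently small \(t\), which is consistent with restarting it over short sub-steps.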

4 Parameter estimation using Hopfield networks

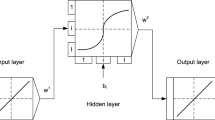

The parameter estimation problem is the identification of the numeric value of uncertain, unknown or time-varying parameters when the ordinary differential equations (ODEs) of the model are known. This kind of optimization problem can be addressed by using Hopfield networks, as described in [8] and [22]. A scheme of the proposed identification process is reported in Fig. 1.

In particular, the dynamical system is required to be linear in parameters (LIP); therefore, it can be expressed as follows:

where \(\varvec{y}\) is called the output vector (which does not necessarily correspond to the physical output of the system), \(\varvec{x}\) is the state vector, \(\varvec{u}\) the input vector, \(\varvec{\theta }\) the parameter vector, and \(\varvec{A}(\varvec{x},\varvec{u})\) is a matrix whose components are nonlinear functions of the state variables and inputs. It is also required that both \(\varvec{y}\) and \(\varvec{A}\) are measurable or known.

Once the system has been described in the LIP form, the problem of parameter estimation at each time step is equivalent to finding the parameter values \(\varvec{\theta }\) that minimize the prediction error \(\varvec{e}\) of the system, which is given by the difference between \(\varvec{y}\) (the actual, measured value of the output) and \(\varvec{\hat{y}}\), the output value calculated by substituting the estimated parameters \(\varvec{\hat{\theta }}\) into the model:

The target function is the squared norm of the prediction error:

and, by using equation (23), it can be expressed as:

The last term of Eq. (25) can be neglected as it does not depend on the estimated parameters. Finally, we obtain the following energy function:

On the basis of the considerations reported in Sect. 2, and following the Lyapunov function for the HNN given in Eq. (4), we can extract from Eq. (26) the following weights and biases:

As a consequence, the network defined by weights and biases in Eq. (27) has one neuron for each parameter to be estimated and \(\varvec{\hat{\theta }}\) is the state that minimizes the energy function of the network.

Furthermore, as the activation function of the network (see Eq. (1)) has a limited range of variability, it is necessary to have previous knowledge of the maximum range of variability of parameters.
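The mapping from the prediction error to weights and biases can be sketched as follows. The sketch assumes the common energy form \(E=-\frac{1}{2}\varvec{s}^T\varvec{W}\varvec{s}-\varvec{I}^T\varvec{s}\), under which matching the squared error \(\frac{1}{2}\Vert \varvec{y}-\varvec{A}\varvec{\hat{\theta }}\Vert ^2\) gives \(\varvec{W}=-\varvec{A}^T\varvec{A}\) and \(\varvec{I}=\varvec{A}^T\varvec{y}\); the sign convention, the static regressor matrix, the identity activation and the integer-order explicit Euler integration are all simplifying assumptions made for illustration.

```python
import numpy as np


def hopfield_weights(A, y):
    """Weights and biases obtained by matching 0.5*||y - A theta||^2 (constant
    term dropped) with the assumed energy E = -0.5 theta^T W theta - I^T theta."""
    W = -A.T @ A
    I = A.T @ y
    return W, I


def energy(theta, W, I):
    return -0.5 * theta @ W @ theta - I @ theta


# Hypothetical static regressor and true parameters, for illustration only
A = np.array([[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]])
theta_true = np.array([1.0, 0.1])
y = A @ theta_true

W, I = hopfield_weights(A, y)

# Integer-order (alpha = 1) dynamics with identity activation:
# d theta / dt = W theta + I, i.e. a descent along the energy gradient.
theta = np.array([4.0, 3.0])  # arbitrary initial estimate
h = 0.05                      # Euler step, small enough for stability here
energies = [energy(theta, W, I)]
for _ in range(400):
    theta = theta + h * (W @ theta + I)
    energies.append(energy(theta, W, I))
```

In this setting the network has one state per parameter, the weight matrix is symmetric by construction, and the energy decreases along the trajectory until the estimate reaches the least-squares solution.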

5 Numerical simulations

In order to test the reliability of our model, a simulation tool based on the Adomian decomposition method was developed in Mathematica and used to address some parameter estimation problems. All simulations were carried out with \(M=8\) terms of Adomian polynomials as a good compromise between computational effort and numerical accuracy. Two case studies, commonly adopted as testbeds for on-line parameter estimation techniques, are considered: the chaotic Lorenz system and a mechanical two-cart system. We have analysed, in both cases, the effect of the fractional parameter \(\alpha \) over a wide range, \(\alpha \in [0.05,\; 1.5]\), evaluating the performance of the Caputo and Caputo–Fabrizio fractional derivative formulations.
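To give a feeling for what an \(M=8\) term Adomian update looks like for a Hopfield-type system, the following Python sketch (not the Mathematica tool used for the simulations) evaluates the truncated series for the Caputo system \(D^{\alpha }\varvec{\theta }=\varvec{W}\varvec{\theta }+\varvec{I}\) with \(0<\alpha \le 1\). It assumes an identity activation so that the right-hand side is linear, and it uses hypothetical values for \(\varvec{W}\) and \(\varvec{I}\); the function name `adm_linear_step` is likewise an illustrative choice.

```python
import math
import numpy as np


def adm_linear_step(theta0, W, I, alpha, t, n_terms=8):
    """M-term Adomian series for the Caputo system D^alpha theta = W theta + I,
    theta(0) = theta0, evaluated at time t (identity activation assumed)."""
    coeff = np.asarray(theta0, dtype=float)  # zeroth component
    total = coeff.copy()
    for i in range(n_terms - 1):
        # The constant bias only enters the first component of the recursion.
        rhs = W @ coeff + (I if i == 0 else 0.0)
        # J^alpha t**(i*alpha) = Gamma(i*alpha+1)/Gamma((i+1)*alpha+1) * t**((i+1)*alpha)
        ratio = math.gamma(i * alpha + 1) / math.gamma((i + 1) * alpha + 1)
        coeff = rhs * ratio  # coefficient of t**((i+1)*alpha)
        total = total + coeff * t ** ((i + 1) * alpha)
    return total


# Hypothetical symmetric weights and biases (W = -A^T A, I = A^T y for some A, y)
W = -np.array([[2.0, 1.0], [1.0, 5.0]])
I = np.array([2.1, 1.5])
theta0 = np.array([4.0, 3.0])

# Integer-order check: for alpha = 1 the exact solution is
# theta(t) = theta* + exp(W t) (theta0 - theta*), with theta* = -W^{-1} I.
t = 0.1
theta_adm = adm_linear_step(theta0, W, I, alpha=1.0, t=t)
lam, V = np.linalg.eigh(W)
theta_star = np.linalg.solve(-W, I)
theta_ref = theta_star + V @ (np.exp(lam * t) * (V.T @ (theta0 - theta_star)))

# The same routine evaluates a fractional-order step, e.g. alpha = 0.5
theta_frac = adm_linear_step(theta0, W, I, alpha=0.5, t=t)
```

For \(\alpha =1\) the truncated series agrees with the matrix-exponential solution over a short step, which is the regime in which a fixed number of terms is a sensible trade-off.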

5.1 Parameter estimation of a Lorenz system

Parameter estimation in chaotic systems is an important topic in signal processing and control system theory [26]. A representative case study is reported here, applying the proposed identification strategy to the Lorenz oscillator. It is a three-dimensional dynamical system that exhibits chaotic flow and was named after Edward N. Lorenz, who derived it from the simplified equations of convection rolls in the atmosphere [28].

He was the first to use the term “butterfly effect” to indicate the sensitive dependence on initial conditions: small variations of the initial condition in a chaotic system may produce large variations in the long-term behaviour.

Lorenz’s system can be described as:

where \(\sigma \) is called the Prandtl number and \(\rho \) is called the Rayleigh number.

All parameters satisfy \(\sigma , \rho ,\beta > 0\); usually \(\sigma =10\) and \(\beta =8/3\), and the system exhibits chaotic behaviour for \(\rho = 28\).

Equations (28) are linear in the parameters and can be written in the form \(\varvec{y}=\varvec{A}(\varvec{x},\varvec{u})\varvec{\theta }\), where

The system described in Eq. (28) has been simulated for 20 s with \(\beta =8/3\), \(\rho =28\) and an integration time step \(\tau =0.1\), while \(\sigma \) is randomly changed every 50 s. At each time step \(\tau \), the equations of the HNN were integrated with the Adomian algorithm using a sub time step \(\delta =\frac{\tau }{200}\). These hyperparameters have been chosen as a trade-off between precision and computational effort. Furthermore, different values of \(\alpha \) were investigated. We found that another parameter that influences the performance of the network is the slope \(\chi \) in Eq. (1). In particular, we selected \(\chi =0.02\) for the Caputo–Fabrizio definition and \(\chi =4.0\) for the Caputo definition of the fractional derivative.
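The two-level time stepping described above (an outer step \(\tau \) for the plant and sub-steps \(\delta \) for the network) can be sketched for the estimation of \(\sigma \) alone. The sketch is a simplified integer-order baseline, not the fractional-order implementation: it assumes \(\alpha =1\), an identity activation, explicit Euler sub-steps in place of the Adomian series, a constant \(\sigma \), the standard Lorenz equations with regressor \(A=y-x\) and output \(\dot{x}\) taken directly from the model, and \(\tau =0.01\). All function names are hypothetical.

```python
import numpy as np

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0


def lorenz(state):
    x, y, z = state
    return np.array([SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z])


def rk4_step(state, tau):
    k1 = lorenz(state)
    k2 = lorenz(state + 0.5 * tau * k1)
    k3 = lorenz(state + 0.5 * tau * k2)
    k4 = lorenz(state + tau * k3)
    return state + tau / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


def estimate_sigma(n_steps=1000, tau=0.01, n_sub=200, sigma_hat=1.0):
    """On-line estimate of sigma with a one-neuron integer-order network."""
    state = np.array([1.0, 1.0, 1.0])
    delta = tau / n_sub
    history = []
    for _ in range(n_steps):
        a = float(state[1] - state[0])   # regressor: dx/dt = a * sigma
        out = float(lorenz(state)[0])    # "measured" output dx/dt
        w, bias = -a * a, a * out        # scalar W = -A^T A and I = A^T y
        for _ in range(n_sub):           # network sub-steps within one tau
            sigma_hat += delta * (w * sigma_hat + bias)
        history.append(sigma_hat)
        state = rk4_step(state, tau)     # advance the plant by one step tau
    return np.array(history)


history = estimate_sigma()
```

Because the weight and bias are recomputed from the current state at every outer step, the network is time-varying, which is the feature that distinguishes on-line identification from the conventional fixed-weight HNN.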

In order to compare the performance of the algorithm under different conditions (with \(\alpha \) from 0.05 to 1.5), the mean squared error (MSE) was calculated, that is, the average squared difference between the estimated values and the actual value:

where \(N=2000\) denotes the length of data used for parameter estimation, \(\hat{\sigma }_k\) and \(\sigma _k\) the estimated and actual parameter respectively.
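Written out from the verbal description above, and assuming the usual normalization by the number of samples, the index takes the form:

```latex
\mathrm{MSE} = \frac{1}{N} \sum_{k=1}^{N} \left( \hat{\sigma}_k - \sigma_k \right)^{2}
```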

With regard to fractional-order derivative, both Caputo–Fabrizio and Caputo equations were taken into account.

Figure 2a shows the results obtained for the Caputo–Fabrizio FOHNN case, while the Caputo FOHNN case is reported in Fig. 2b. It can be noticed that, in both cases, fractional-order HNNs exhibit a better estimation capability than the integer-order neuron structure when \(\alpha <1\). Moreover, lower values of \(\alpha \) provide a better estimation both in terms of precision and convergence time.

Table 1 and Fig. 3 report the estimation performance in terms of MSE for each experiment.

The application of fractional-order systems reduces the parameter estimation error when \(\alpha <1\). Moreover, the reduction of the fractional parameter \(\alpha \) is highly correlated with the improvement of the estimation performance. However, it can be noticed that for low values of \(\alpha \) (i.e. \(\alpha <0.4\) for the Caputo–Fabrizio case), the MSE reaches a plateau where further reductions of the fractional parameter have a minimal impact on the estimation performance. In general, when \(\alpha \) is very low (e.g. \(\alpha <0.05\)) numerical issues can arise during the simulations, requiring a further optimization of the adopted hyperparameters and an increase of the computational effort. The reported simulation results show better performance when the Caputo–Fabrizio derivative is adopted, although the estimation error converges to similar values when \(\alpha \) is near the bottom of the considered range.

Our FOHNN architecture was also compared with the hybrid swarm intelligence algorithm based on particle swarm optimization proposed in [26] for the estimation of \(\sigma \), \(\rho \) and \(\beta \). In our experiments, \(\sigma \), \(\rho \) and \(\beta \) were estimated by using the fractional-order HNN, starting from a random initial estimate chosen in the following range:

A total of nine experiments were conducted by varying \(\alpha \) from 0.05 to 1.5. The Lorenz system was always integrated with \(\tau =0.01\) for \(N=100\) steps, while the FOHNN has been simulated with \(\delta =\frac{\tau }{1000}\) at each time step.

We evaluated the accuracy of the identification process adopting the following index:

Figure 4 shows the MSE as a function of \(\alpha \) and the estimated parameters. Our algorithm outperforms the solution proposed in [26] in terms of MSE, as demonstrated in Table 2, when a fractional order \(\alpha \le 0.4\) is considered. In this simulation, the Caputo–Fabrizio method slightly outperforms the Caputo one for low values of \(\alpha \) (i.e. \(\alpha \le 0.4\)).

Further details are reported in Fig. 5 where the estimation error in the simultaneous searching of three unknown parameters \(\sigma \), \(\rho \) and \(\beta \), using different values of \(\alpha \) for both Caputo–Fabrizio and Caputo derivatives, is depicted. The obtained results

5.2 Parameter estimation of a two-cart system

In [4] HNNs have been studied for on-line parameter estimation and applied to a system of two carts connected by a spring-damper mechanism with two unknown parameters k and b (see Fig. 6).

In this simulation, the system under consideration is linear in the parameters and can therefore be written in the form \(\varvec{y}=\varvec{A}(\varvec{x},\varvec{u})\varvec{\theta }\):

where \(x_i\), \(\frac{d}{dt}x_i(t)\), \(\frac{d^2}{dt^2}x_i(t)\) and \(m_i\) denote the displacement, velocity, acceleration and mass of cart i, respectively, and u(t) is the force applied to cart 1. The unknown parameters to be identified are the spring constant k and the damper constant b.

In the following simulations, it was assumed that \(m_1=m_2=2\ [\text {kg}]\), \(k=1\ [\frac{\text {N}}{\mathrm {m}}]\), \(b=0.1\ [\frac{\text {Ns}}{\mathrm {m}}]\) and two sets of initial conditions are considered:

- \(\text {IC}_1\): \(x_i(0)=0\ [\text {m}]\), \(\frac{d}{dt}\big |_{t=0}x_i(t)=0\ [\frac{\text {m}}{\mathrm {s}}]\)

- \(\text {IC}_2\): \(x_i(0)=0\ [\text {m}]\), \(\frac{d}{dt}\big |_{t=0}x_1(t)=1\), \(\frac{d}{dt}\big |_{t=0}x_2(t)=2\ [\frac{\text {m}}{\mathrm {s}}]\)

Furthermore, two forces applied to cart 1 were considered: \(u_1(t)=e^{-t}\ [\text {N}]\) and \(u_2(t)=\text {sin}(\pi t)\ [\text {N}]\); initial estimates of k and b were randomly generated in the interval [0, 5] with uniform distribution.
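The linear-in-parameters structure of this benchmark can be checked numerically with the sketch below. It assumes the usual equations of motion for two carts coupled by a spring and a damper, \(m_1\ddot{x}_1=u-k(x_1-x_2)-b(\dot{x}_1-\dot{x}_2)\) and \(m_2\ddot{x}_2=k(x_1-x_2)+b(\dot{x}_1-\dot{x}_2)\), which are an assumption and not a transcription of the model in [4]. Batch least squares is used here in place of the FOHNN, purely to confirm that k and b can be recovered from the regressor form under \(\text {IC}_2\) and \(u_2\).

```python
import numpy as np

M1, M2, K, B = 2.0, 2.0, 1.0, 0.1


def force(t):
    return np.sin(np.pi * t)  # u_2(t)


def accelerations(t, s):
    x1, v1, x2, v2 = s
    coupling = K * (x1 - x2) + B * (v1 - v2)  # spring plus damper force
    return (force(t) - coupling) / M1, coupling / M2


def rhs(t, s):
    a1, a2 = accelerations(t, s)
    return np.array([s[1], a1, s[3], a2])


def rk4_step(t, s, tau):
    k1 = rhs(t, s)
    k2 = rhs(t + 0.5 * tau, s + 0.5 * tau * k1)
    k3 = rhs(t + 0.5 * tau, s + 0.5 * tau * k2)
    k4 = rhs(t + tau, s + tau * k3)
    return s + tau / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


tau, t = 0.01, 0.0
s = np.array([0.0, 1.0, 0.0, 2.0])  # IC_2 ordered as x1, dx1/dt, x2, dx2/dt
rows, outputs = [], []
for _ in range(500):
    x1, v1, x2, v2 = s
    a1, a2 = accelerations(t, s)
    # y = A(x) theta with theta = [k, b]; accelerations are taken from the model
    rows += [[-(x1 - x2), -(v1 - v2)], [x1 - x2, v1 - v2]]
    outputs += [M1 * a1 - force(t), M2 * a2]
    s = rk4_step(t, s, tau)
    t += tau

theta_hat, *_ = np.linalg.lstsq(np.array(rows), np.array(outputs), rcond=None)
```

At a single time instant the two regressor rows are proportional, so the parameters only become identifiable once relative displacement and relative velocity have varied independently over time; this is one reason why the initial conditions and the applied force affect the settling time of an on-line estimator.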

Fig. 7 Time evolution of the parameter estimation obtained with the FOHNN using the Caputo–Fabrizio derivative, when \(\alpha =[0.05,\; 0.2,\; 0.5,\; 0.8,\; 1.2,\; 1.5]\) and for different sets of initial condition IC and forces u, where \(\text {IC}_1\): \(x_i(0)=0\ [\text {m}]\), \(\frac{d}{dt}\big |_{t=0}x_i(t)=0\ [\frac{\text {m}}{\mathrm {s}}]\), \(\text {IC}_2\): \(x_i(0)=0\ [\text {m}]\), \(\frac{d}{dt}\big |_{t=0}x_1(t)=1\), \(\frac{d}{dt}\big |_{t=0}x_2(t)=2\ [\frac{\text {m}}{\mathrm {s}}]\), \(u_1(t)=e^{-t}\ [\text {N}]\) and \(u_2(t)=\text {sin}(\pi t)\ [\text {N}]\). The solid and dashed lines represent the estimated values for parameters k and b, respectively

The time evolution of the estimated parameters is represented in Fig. 7 for the Caputo–Fabrizio derivative and in Fig. 8 for the Caputo derivative. The FOHNN behaviour is evaluated for a subset of \(\alpha \) values extracted from the considered range. All combinations of initial conditions IC and forces u(t) are reported. All simulations have been performed with the following hyperparameters: \(\tau =0.01\) and \(\delta =\frac{\tau }{200}\).

Fig. 8 Time evolution of the parameter estimation obtained with the FOHNN using the Caputo derivative, when \(\alpha =[0.05,\; 0.2,\; 0.5,\; 0.8,\; 1.2,\; 1.5]\) and for different sets of initial condition IC and forces u. The solid and dashed lines represent the estimated values for parameters k and b, respectively

In order to evaluate the improvement obtained with different fractional orders \(\alpha \), the estimation error (ER) at time t was calculated, defined as:

Tables 3 and 4 show the settling times (in \(\pi \)s) at which \(\text {ER}<5\times 10^{-3}\) for each experiment, considering both the Caputo–Fabrizio and Caputo definitions of the fractional-order derivative. In this case too, low values of \(\alpha \) correspond to better performance compared with the integer-order case reported in [4]. The effect of the derivative definition differs among the considered cases: in the first and fourth set-ups, (\(\text {IC}_1\), \(u_1\)) and (\(\text {IC}_2\), \(u_2\)), similar results are obtained, in particular for low values of the parameter \(\alpha \); in the second set-up (\(\text {IC}_1\), \(u_2\)), the Caputo–Fabrizio method outperforms the Caputo solution regardless of the selected \(\alpha \); and in the third set-up (\(\text {IC}_2\), \(u_1\)), the results obtained adopting the Caputo definition outperform the Caputo–Fabrizio solution.

6 Conclusions

In this work, an application of fractional-order Hopfield neural networks was investigated for on-line parameter estimation of nonlinear dynamical models. In particular, it was found in the simulations that the fractional order influences the convergence of the parameter estimation process. The selection of the fractional-order derivative definition is another important aspect to investigate. Furthermore, simulations have been performed using the Adomian decomposition method, which has been confirmed as a reliable algorithm for solving fractional-order differential equations.

As is known in the literature for integer-order neurons, in the proposed approach the main requirements for the application to parameter estimation are that the parameters must have a limited, known range of variation and that the dynamical system equations must be linear in the parameters. It was demonstrated for two different case studies that the proposed approach can outperform other methods available in the literature by exploiting the properties of fractional-order systems, which are more responsive than integer-order ones and are able to capture complex behaviours, such as the long-term memory effects of the dynamics. In particular, the fractional-order parameter \(\alpha \) represents a key element to be selected. The selection of \(\alpha >1\) does not lead to good results, whereas, for \(\alpha <1\), the estimation of the parameters improves both in terms of convergence time and accuracy. It is important to note that the improvements often tend to level off once an optimal value of \(\alpha \) is reached. However, this value depends on the specific case study investigated. It seems to be unnecessary to assign very small values to the parameter \(\alpha \) (i.e. below the range considered); moreover, doing so would require further optimization of the hyperparameters to avoid numerical problems. The choice of the fractional-order derivative definition is a further element to be considered for the optimization of the FOHNN. The results obtained show that when \(\alpha \) is close to the top of the considered range the Caputo–Fabrizio derivative is more efficient than the Caputo definition, while, at the bottom of the considered \(\alpha \) range, the two approaches are either equivalent or one is preferable depending on the case study under consideration. Future work will include the application of the proposed architecture in adaptive control schemes, analysing the stability of the related closed-loop systems.

References

Abadias, L., Estrada-Rodriguez, G., Estrada, E.: Fractional-order susceptible-infected model: definition and applications to the study of covid-19 main protease. Fract. Calc. Appl. Anal. 23(3), 635–655 (2020)

Abe, S.: Theories on the hopfield neural networks. In: International 1989 Joint Conference on Neural Networks, vol. 1, pp. 557–564 (1989). https://doi.org/10.1109/IJCNN.1989.118633

Adomian, G.: Solving Frontier Problems of Physics: The Decomposition Method. Fundamental Theories of Physics. Springer, Berlin (2013)

Alonso, H., Mendonça, T., Rocha, P.: Hopfield neural networks for on-line parameter estimation. Neural Netw. 22(4), 450–462 (2009). https://doi.org/10.1016/j.neunet.2009.01.015

Atanackovic, T.M., Pilipovic, S., Zorica, D.: Properties of the Caputo–Fabrizio fractional derivative and its distributional settings. Fract. Calc. Appl. Anal. 21(1), 29–44 (2018). https://doi.org/10.1515/fca-2018-0003

Atencia, M., Joya, G., Sandoval, F.: Gray box identification with hopfield neural networks. Revista Investigación Operacional 25 (2004)

Atencia, M., Joya, G., Sandoval, F.: Parametric identification of robotic systems with stable time-varying hopfield networks. Neural Comput. Appl. 13, 270–280 (2004). https://doi.org/10.1007/s00521-004-0421-4

Atencia, M., Joya, G., Sandoval, F.: Hopfield neural networks for parametric identification of dynamical systems. Neural Process. Lett. 21, 143–152 (2005). https://doi.org/10.1007/s11063-004-3424-3

Atencia, M., Joya, G., Sandoval, F.: Identification of noisy dynamical systems with parameter estimation based on hopfield neural networks. Neurocomputing 121, 14–24 (2013). https://doi.org/10.1016/j.neucom.2013.01.030, advances in Artificial Neural Networks and Machine Learning

Boroomand, A., Menhaj, M.: Fractional-order hopfield neural networks. In: ICONIP 2008 Lecture Notes in Computer Science (2009)

Buscarino, A., Caponetto, R., Graziani, S., Murgano, E.: Realization of fractional order circuits by a constant phase element. Eur. J. Control 54, 64–72 (2020). https://doi.org/10.1016/j.ejcon.2019.11.009

Cajo, R., Zhao, S., Plaza, D., Keyser, R.D., Ionescu, C.: Distributed control of second-order multi-agent systems: Fractional integral action and consensus. In: 2020 39th Chinese Control Conference (CCC), pp. 4652–4657. (2020) https://doi.org/10.23919/CCC50068.2020.9189554

Caponetto, R., Fazzino, S.: An application of adomian decomposition for analysis of fractional-order chaotic systems. Int. J. Bifurc. Chaos 23(03), 1350050 (2013). https://doi.org/10.1142/s0218127413500508

Caponetto, R., Machado, J.T., Murgano, E., Xibilia, M.G.: Model order reduction: a comparison between integer and non-integer order systems approaches. Entropy (2019). https://doi.org/10.3390/e21090876

Caputo, M., Fabrizio, M.: A new definition of fractional derivative without singular kernel. Progr. Fract. Differ. Appl. 1(2), 73–85 (2015)

Dabiri, A., Moghaddam, B., Machado, J.T.: Optimal variable-order fractional PID controllers for dynamical systems. J. Comput. Appl. Math. 339, 40–48 (2018). https://doi.org/10.1016/j.cam.2018.02.029. Modern Fractional Dynamic Systems and Applications, MFDSA 2017

Hopfield, J.J.: Neural networks and physical systems with emergent collective computational abilities. Proc. Nat. Acad. Sci. 79(8), 2554–2558 (1982). arXiv:1411.3159v1

Hopfield, J.J.: Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Nat. Acad. Sci. 81(10), 3088–3092 (1984)

Hu, H.P., Wang, J.K., Xie, F.L.: Dynamics analysis of a new fractional-order Hopfield neural network with delay and its generalized projective synchronization. Entropy (2019). https://doi.org/10.3390/e21010001

Hu, Z., Balakrishnan, S.: Online identification and control of aerospace vehicles using recurrent networks. In: Proceedings IEEE Conference on Control Applications, vol. 1, pp. 225–230 (1999). https://doi.org/10.1109/CCA.1999.806180

Hu, Z., Balakrishnan, S.: Parameter estimation in nonlinear systems using Hopfield neural networks. In: Proceedings of 7th Mediterranean Conference on Control and Automation, pp. 1896–1961 (1999)

Hunt, K., Sbarbaro, D., Żbikowski, R., Gawthrop, P.: Neural networks for control systems—a survey. Automatica 28(6), 1083–1112 (1992)

Jankowski, S., Lozowski, A., Zurada, J.M.: Complex-valued multistate neural associative memory. IEEE Trans. Neural Netw. 7(6), 1491–1496 (1996). https://doi.org/10.1109/72.548176

Jiao, Y., Yamamoto, Y., Dang, C., Hao, Y.: An aftertreatment technique for improving the accuracy of Adomian’s decomposition method. Comput. Math. Appl. 43(6), 783–798 (2002). https://doi.org/10.1016/S0898-1221(01)00321-2

Kaslik, E., Sivasundaram, S.: Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw. 32, 245–256 (2012)

Lazzús, J.A., Rivera, M., López-Caraballo, C.H.: Parameter estimation of Lorenz chaotic system using a hybrid swarm intelligence algorithm. Phys. Lett. A 380(11), 1164–1171 (2016). https://doi.org/10.1016/j.physleta.2016.01.040

Ljung, L.: System Identification: Theory for the User. Pearson, London (1997)

Lorenz, E.N.: Deterministic nonperiodic flow. J. Atmos. Sci. 20(2), 130–141 (1963)

Losada, J., Nieto, J.J.: Properties of a new fractional derivative without singular kernel. Progr. Fract. Differ. Appl. 1(2), 87–92 (2015)

MacDonald, C.L., Bhattacharya, N., Sprouse, B.P., Silva, G.A.: Efficient computation of the Grünwald-Letnikov fractional diffusion derivative using adaptive time step memory. J. Comput. Phys. 297, 221–236 (2015). arXiv:1505.03967

Podlubny, I.: Fractional-order systems and PI\(^{\lambda}\)D\(^{\mu}\)-controllers. IEEE Trans. Autom. Control 44(1), 208–214 (1999)

Pu, Y., Yi, Z., Zhou, J.: Fractional Hopfield neural networks: fractional dynamic associative recurrent neural networks. IEEE Trans. Neural Netw. Learn. Syst. 28(10), 2319–2333 (2017). https://doi.org/10.1109/TNNLS.2016.2582512

Saad, K.: Comparing the Caputo, Caputo–Fabrizio and Atangana–Baleanu derivative with fractional order: fractional cubic isothermal auto-catalytic chemical system. Eur. Phys. J. Plus 133, 94 (2018). https://doi.org/10.1140/epjp/i2018-11947-6

Sales Teodoro, G., Tenreiro Machado, J., Capelas de Oliveira, E.: A review of definitions of fractional derivatives and other operators. J. Comput. Phys. 388, 195–208 (2019)

Shah, P., Agashe, S.: Review of fractional PID controller. Mechatronics 38, 29–41 (2016). https://doi.org/10.1016/j.mechatronics.2016.06.005

Sheng, Y., Bai, W., Xie, Y.: Fractional-order PI\(^{\lambda}\)D sliding mode control for hypersonic vehicles with neural network disturbance compensator. Nonlinear Dyn 103, 849–863 (2021). https://doi.org/10.1007/s11071-020-06046-y

Tarasov, V.E.: No nonlocality. No fractional derivative. Commun. Nonlinear Sci. Numer. Simul. 62, 157–163 (2018). https://doi.org/10.1016/j.cnsns.2018.02.019

Verma, P., Kumar, M.: Analysis of a novel coronavirus (2019-ncov) system with variable Caputo–Fabrizio fractional order. Chaos Solitons Fractals 142, 110451 (2021). https://doi.org/10.1016/j.chaos.2020.110451

Wang, L., Zhou, G., Xiao, I., Wu, Q.: Hopfield neural network based identification and control of induction motor drive system. IFAC Proc. 32(2), 4488–4493 (1999). 14th IFAC World Congress 1999, Beijing, China, 5–9

Wu, J., Wang, J., You, Z.: An overview of dynamic parameter identification of robots. Robot. Comput. Integr. Manuf. 26(5), 414–419 (2010). https://doi.org/10.1016/j.rcim.2010.03.013

Yu, X., Wei, S., Guo, L.: Nonlinear system identification of bicycle robot based on adaptive neural fuzzy inference system. In: Wang, F.L., Deng, H., Gao, Y., Lei, J. (eds.) Artificial Intelligence and Computational Intelligence, pp. 381–388. Springer, Berlin (2010)

Zhang, L., Yang, Y.: Stability analysis of fractional order Hopfield neural networks with optimal discontinuous control. Neural Process. Lett. 50, 581–593 (2019)

Funding

Open access funding provided by Università degli Studi di Catania within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fazzino, S., Caponetto, R. & Patanè, L. A new model of Hopfield network with fractional-order neurons for parameter estimation. Nonlinear Dyn 104, 2671–2685 (2021). https://doi.org/10.1007/s11071-021-06398-z

DOI: https://doi.org/10.1007/s11071-021-06398-z