Abstract

In 2012, Censor et al. (Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 61(9):1119–1132, 2012b) proposed the two-subgradient extragradient method (TSEGM). This method does not require computing a projection onto the feasible (closed and convex) set; instead, both projections are made onto a half-space. However, the convergence of the TSEGM was puzzling and was hence posed as an open question. Very recently, some authors provided a partial answer to the open question by establishing a weak convergence result for the TSEGM, though under some stringent conditions. In this paper, we propose and study an inertial two-subgradient extragradient method (ITSEGM) for solving monotone variational inequality problems (VIPs). Under more relaxed conditions than the existing results in the literature, we prove that the proposed method converges strongly to a minimum-norm solution of monotone VIPs in Hilbert spaces. Unlike several of the existing methods in the literature for solving VIPs, our method does not require any linesearch technique, which could be time-consuming to implement. Rather, we employ a simple but very efficient self-adaptive step size method that generates a non-monotonic sequence of step sizes. Moreover, we present several numerical experiments to demonstrate the efficiency of our proposed method in comparison with related results in the literature. Finally, we apply our result to an image restoration problem. Our results improve and generalize several of the existing results in the literature in this direction.


1 Introduction

Let H be a real Hilbert space with inner product \(\langle \cdot ,\cdot \rangle\) and induced norm \(||\cdot ||.\) Let C be a nonempty, closed and convex subset of H, and let \(A:H\rightarrow H\) be a mapping. The variational inequality problem (VIP) is formulated as finding a point \(p\in C\) such that

$$\begin{aligned} \langle Ap, x-p\rangle \ge 0\;\;\; \text {for all}\,\, x\in C. \end{aligned}$$(1.1)

We denote the solution set of the VIP (1.1) by VI(C, A). In recent years, the VIP has received considerable research attention due to its wide range of applications, such as in structural analysis, economics, optimization theory (Alakoya and Mewomo 2022; Aubin and Ekeland 1984; Ogwo et al. 2022), operations research, sciences and engineering (see Baiocchi and Capelo 1984; Censor et al. 2012a; Godwin et al. 2023; Kinderlehrer and Stampacchia 2000 and the references therein). The VIP is an important mathematical model that has been widely used to formulate and investigate a plethora of competitive equilibrium problems in various disciplines, such as traffic network equilibrium problems, spatial price equilibrium problems, oligopolistic market equilibrium problems, financial equilibrium problems, migration equilibrium problems, environmental network and ecology problems, knowledge network problems, supply chain network equilibrium problems and internet problems; see, e.g., Geunes and Pardalos (2003), Nagurney (1999), Nagurney and Dong (2002) for further examples and details. The study of VIPs in finite dimensional spaces was initiated independently by Smith (1979) and Dafermos (1980), who formulated the traffic assignment problem as a finite dimensional VIP. On the other hand, Lawphongpanich and Hearn (1984), and Panicucci et al. (2007) studied traffic assignment problems based on the Wardrop user equilibrium principle via a variational inequality model. Since then, several other economics-related problems, such as the Nash equilibrium problem, spatial price equilibrium problems, internet problems, dynamic financial equilibrium problems and environmental network and ecology problems, have been investigated via variational inequality models (see Aussel et al. 2016; Ciarciá and Daniele 2016; Nagurney et al. 2007; Scrimali and Mirabella 2018).

There are two common approaches to solving the VIP, namely: the regularised methods and the projection methods. In this study, our interest is in the projection methods. The earliest and simplest projection method for solving the VIP is the projected gradient method (GM), which is presented as follows:

Algorithm 1.1 (Gradient Method (GM))

$$\begin{aligned} x_{n+1} = P_C(x_n-\lambda Ax_n) \end{aligned}$$(1.2)

for each \(n\ge 1,\) where \(P_C\) denotes the metric projection map. Observe that the GM requires calculating only one projection per iteration onto the feasible set C. However, the method is only known to converge when the cost operator A is \(\alpha\)-strongly monotone and L-Lipschitz continuous, with step size \(\lambda \in \Big (0,\frac{2\alpha }{L^2}\Big ).\) These stringent conditions greatly limit the scope of applications of the GM (1.2).
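For illustration only, the update \(x_{n+1}=P_C(x_n-\lambda Ax_n)\) can be sketched in Python as follows. The feasible set (the closed unit ball), the test operator \(A(x)=x-b\) and all function names are assumptions made for this sketch; they are not taken from the experiments reported later.

```python
import numpy as np

def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball {x : ||x|| <= radius}."""
    norm = np.linalg.norm(x)
    return x if norm <= radius else (radius / norm) * x

def gradient_method(A, project, x1, lam, iters=200):
    """Iterate x_{n+1} = P_C(x_n - lam * A(x_n)) a fixed number of times."""
    x = np.asarray(x1, dtype=float)
    for _ in range(iters):
        x = project(x - lam * A(x))
    return x

# Assumed test operator: A(x) = x - b is 1-strongly monotone and 1-Lipschitz,
# so any step size lam in (0, 2*alpha/L^2) = (0, 2) is admissible.
b = np.array([3.0, 4.0])
x_gm = gradient_method(lambda x: x - b, project_ball, x1=[0.0, 0.0], lam=0.5)
```

For this particular operator the solution of the VIP is the projection of b onto the ball, so the iterate can be compared with \(P_C(b)=(0.6, 0.8)\).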

In order to relax the conditions for the convergence of the GM to a solution of the VIP, Korpelevich (1976) and Antipin (1976) independently proposed the following extragradient method (EGM) in finite-dimensional Euclidean space:

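Algorithm 1.2 (Extragradient Method (EGM))

$$\begin{aligned} {\left\{ \begin{array}{ll} y_n = P_C(x_n-\lambda Ax_n),\\ x_{n+1} = P_C(x_n-\lambda Ay_n), \end{array}\right. } \end{aligned}$$(1.3)

where \(\lambda \in {(0, \frac{1}{L})}\) and \(A:\mathbb {R}^n\rightarrow \mathbb {R}^n\) is monotone and L-Lipschitz continuous. If the solution set VI(C, A) is nonempty, the EGM (1.3) generates a sequence that converges to a solution of the VIP.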
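A minimal Python sketch of the two-projection scheme just described is given below, under stated assumptions: the feasible set is taken to be the closed unit ball and the operator is a planar rotation, which is monotone and 1-Lipschitz but not strongly monotone. Both choices, and the function names, are hypothetical and serve only to illustrate the iteration.

```python
import numpy as np

def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball {x : ||x|| <= radius}."""
    norm = np.linalg.norm(x)
    return x if norm <= radius else (radius / norm) * x

def extragradient(A, project, x1, lam, iters=300):
    """Run y_n = P_C(x_n - lam*A(x_n)), x_{n+1} = P_C(x_n - lam*A(y_n))."""
    x = np.asarray(x1, dtype=float)
    for _ in range(iters):
        y = project(x - lam * A(x))   # first projection onto C
        x = project(x - lam * A(y))   # second projection onto C
    return x

# Assumed test operator: a rotation (monotone, L = 1), so lam must lie in (0, 1).
# On the unit ball the only solution of the corresponding VIP is the origin.
rotation = lambda x: np.array([x[1], -x[0]])
x_egm = extragradient(rotation, project_ball, x1=[1.0, 1.0], lam=0.5)
```

The rotation is a standard example on which the plain gradient iteration may fail to converge, which is why it is used here instead of a strongly monotone operator.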

Observe that the EGM needs to compute two projections onto the feasible set C and two evaluations of the operator A per iteration. In general, computing the projection onto an arbitrary closed and convex set C is complicated, and this limitation can affect the efficiency of the EGM. In recent years, the EGM has attracted the attention of researchers, who improved it in various ways (see, e.g., Ceng et al. 2021; Duong and Gibali 2019; Godwin et al. 2022; He et al. 2019 and the references therein). One of the major areas of improvement of the method is to minimize the number of projections onto the feasible set C per iteration (Thong et al. 2020; Uzor et al. 2022). Censor et al. (2011) initiated an attempt in this direction by modifying the EGM and replacing the second projection with a projection onto a half-space. The resulting method requires only one projection onto the feasible set C and is known as the subgradient extragradient method (SEGM). The SEGM is presented as follows:

Algorithm 1.3 (Subgradient Extragradient Method (SEGM))

$$\begin{aligned} {\left\{ \begin{array}{ll} y_n = P_C(x_n-\lambda Ax_n),\\ T_n=\{x\in H: \langle x_n-\lambda Ax_n-y_n, x-y_n\rangle \le 0\},\\ x_{n+1} = P_{T_n}(x_n-\lambda Ay_n). \end{array}\right. } \end{aligned}$$(1.4)

Censor et al. (2011) obtained a weak convergence result for the SEGM (1.4) under the same assumptions as the EGM (1.3). Since there is an explicit formula for the projection onto a half-space, the SEGM can be considered an improvement over the EGM. However, we observe that the SEGM still requires computing one projection onto the closed convex set C per iteration. This can still be a great barrier to the implementation of the SEGM.
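The following Python sketch illustrates the idea of replacing the second projection onto C by a projection onto a half-space containing C. It assumes the usual form of the half-space, \(T_n=\{x: \langle x_n-\lambda Ax_n-y_n, x-y_n\rangle \le 0\}\); the unit ball, the rotation operator and the function names are assumptions made purely for illustration.

```python
import numpy as np

def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball {x : ||x|| <= radius}."""
    norm = np.linalg.norm(x)
    return x if norm <= radius else (radius / norm) * x

def project_halfspace(u, normal, anchor):
    """Projection onto {z : <normal, z - anchor> <= 0}; identity if normal = 0."""
    nn = np.dot(normal, normal)
    if nn == 0.0:
        return u
    return u - max(0.0, np.dot(normal, u - anchor) / nn) * normal

def segm(A, project, x1, lam, iters=300):
    """One projection onto C and one explicit half-space projection per step."""
    x = np.asarray(x1, dtype=float)
    for _ in range(iters):
        v = x - lam * A(x)
        y = project(v)                                  # only projection onto C
        x = project_halfspace(x - lam * A(y), v - y, y)  # projection onto T_n
    return x

# Assumed data: unit ball and a rotation (monotone, 1-Lipschitz); solution is 0.
rotation = lambda x: np.array([x[1], -x[0]])
x_segm = segm(rotation, project_ball, x1=[1.0, 1.0], lam=0.5)
```

Note that the iterates of this scheme lie in the half-spaces \(T_n\) and need not belong to C at every step.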

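In order to address this limitation, Censor et al. (2012b) also proposed the following method, called the two-subgradient extragradient method (TSEGM):

Algorithm 1.4 (Two-Subgradient Extragradient Method (TSEGM))

$$\begin{aligned} {\left\{ \begin{array}{ll} C_n=\{x\in H: c(x_n)+\langle \zeta _n, x-x_n\rangle \le 0\},\\ y_n = P_{C_n}(x_n-\lambda Ax_n),\\ x_{n+1} = P_{C_n}(x_n-\lambda Ay_n), \end{array}\right. } \end{aligned}$$(1.5)

where \(\zeta _n\in \partial c(x_n).\) Here, \(\partial c(x)\) denotes the subdifferential of the convex function \(c(\cdot )\) at x defined in (2.2).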

The idea behind the TSEGM is that any closed and convex set C can be expressed as

$$\begin{aligned} C=\{x\in H~|~c(x)\le 0\}, \end{aligned}$$(1.6)

where \(c:H\rightarrow \mathbb {R}\) is a convex function. For instance, we can let \(c(x):=\text {dist}(x,C),\) where “\(\text {dist}\)” is the distance function. We observe that the two projections in the TSEGM (1.5) are made onto a half-space, which makes the method easier to implement. However, the convergence of the TSEGM (1.5) was puzzling and was therefore posed as an open question by Censor et al. (2012b).
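To make the two half-space projections concrete, the sketch below performs a single TSEGM-type step in Python. It assumes the half-space \(C_n=\{z: c(x_n)+\langle \zeta _n, z-x_n\rangle \le 0\}\) with \(\zeta _n\in \partial c(x_n)\); the data (\(c(x)=\Vert x\Vert ^2-1\), a rotation operator) and the function names are hypothetical. No convergence claim is made for the iteration itself, in line with the open question mentioned above.

```python
import numpy as np

def project_linearized(u, x, c_x, zeta):
    """Project u onto {z : c(x) + <zeta, z - x> <= 0} using the half-space formula."""
    value = c_x + np.dot(zeta, u - x)
    norm_sq = np.dot(zeta, zeta)
    if value <= 0.0 or norm_sq == 0.0:   # u already feasible, or no half-space to cut
        return u
    return u - (value / norm_sq) * zeta

def tsegm_step(A, c, grad_c, x, lam):
    """One pass: both projections are made onto the same half-space built at x."""
    c_x, zeta = c(x), grad_c(x)
    y = project_linearized(x - lam * A(x), x, c_x, zeta)
    return project_linearized(x - lam * A(y), x, c_x, zeta)

# Assumed data: C is the unit disc, c(x) = ||x||^2 - 1, and A is a rotation.
c = lambda x: float(np.dot(x, x) - 1.0)
grad_c = lambda x: 2.0 * x
A = lambda x: np.array([x[1], -x[0]])
x_next = tsegm_step(A, c, grad_c, np.array([2.0, 0.0]), lam=0.5)
```

With \(x_n=(2,0)\) the half-space is \(\{z: z_1\le 1.25\}\), so both intermediate points are simply clipped in their first coordinate.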

At this point, we briefly discuss the inertial technique. The inertial algorithm is based on a discrete version of a second-order dissipative dynamical system, and was first proposed by Polyak (1964). The main feature of the inertial algorithm is that it uses the previous two iterates to generate the next iterate. It is worth mentioning that this small change can greatly improve the convergence rate of an iterative method. In recent years, many researchers have constructed very fast iterative methods by employing the inertial technique, see, e.g., Alakoya and Mewomo (2022), Alakoya et al. (2022), Gibali et al. (2020), Godwin et al. (2023), Wickramasinghe et al. (2023) and the references therein.

Cao and Guo (2020) partially answered the open question posed by Censor et al. (2012b) by combining the inertial technique with the TSEGM. They obtained a weak convergence result for the proposed algorithm (Algorithm 7.1) under the assumptions that the cost operator A is monotone and Lipschitz continuous, the convex function \(c:H\rightarrow \mathbb {R}\) in (1.6) is continuously differentiable, and the Gâteaux differential \(c'(\cdot )\) is Lipschitz continuous.

We point out that none of the above methods is applicable when the Lipschitz constant of the cost operator is unknown, because the step size of each algorithm depends on prior knowledge of this constant. We also note that the ITSEGM proposed by Cao and Guo (2020) requires prior knowledge of the Lipschitz constant of the Gâteaux differential \(c'(\cdot )\) of \(c(\cdot ).\) In most cases, the Lipschitz constants of these operators are unknown or difficult to calculate. These drawbacks may hinder the implementation of these algorithms. Moreover, all the above methods only give weak convergence results under these stringent conditions.

Bauschke and Combettes (2001) pointed out that in solving optimization problems, strongly convergent iterative methods are more applicable, and hence more desirable, than their weakly convergent counterparts. Thus, it is important to develop algorithms that generate strongly convergent sequences when solving optimization problems.

Very recently, Ma and Wang (2022) tried to improve on the results of Cao and Guo (2020) by proposing a new TSEGM (Algorithm 7.2), which uses a self-adaptive step size so that the implementation of their algorithm does not require prior knowledge of the Lipschitz constant of the cost operator. However, we note that the implementation of their result also requires knowledge of the Lipschitz constant of the Gâteaux differential \(c'(\cdot )\) of \(c(\cdot ).\) Moreover, the authors were also only able to obtain a weak convergence result for their proposed algorithm.

Considering the above review, it is pertinent to ask the following research questions:

Can we construct a new inertial two-subgradient extragradient method, which is applicable when the Lipschitz constant of the cost operator A and/or when the Lipschitz constant of the Gâteaux differential \(c'(\cdot )\) of \(c(\cdot )\) are unknown? Can we obtain a strong convergence result for this method?

In this paper, we provide affirmative answers to the above questions. More precisely, we introduce a new inertial two-subgradient extragradient method which requires neither knowledge of the Lipschitz constant of the cost operator nor knowledge of the Lipschitz constant of the Gâteaux differential \(c'(\cdot )\) of \(c(\cdot ).\) This makes our results applicable to a larger class of problems. Moreover, we prove that the sequence generated by our proposed algorithm converges strongly to a minimum-norm solution of the VIP. In many practical problems, finding the minimum-norm solution is very important and useful. These properties are some of the improvements of our proposed method over the results of Ma and Wang (2022), and Cao and Guo (2020). In addition, the proof of our strong convergence theorem does not rely on the usual “two cases approach” widely employed by authors to prove strong convergence results. We also point out that, unlike several of the existing results in the literature, our method does not involve any linesearch technique, which could be computationally expensive to implement (e.g., see Cai et al. 2022; Peeyada et al. 2020; Suantai et al. 2020), nor does it require evaluating any inner product function, which is not as easily evaluated as the norm function (see Muangchoo et al. 2021, Corollary 4.4). Rather, we employ a simple but very efficient self-adaptive step size technique, which generates a non-monotonic sequence of step sizes with less dependency on the initial step size. This makes our method more efficient and less expensive to implement. Moreover, we present several numerical experiments to demonstrate the computational advantage of our proposed method over the existing methods in the literature. Finally, we apply our result to an image restoration problem. The results of the numerical experiments show that our method is more efficient than several of the existing methods in the literature. Our proposed method is therefore computationally economical, and our results improve and generalize several of the existing results in the literature in this direction.

The rest of the paper is organized as follows: In Section 2, we recall some definitions and lemmas employed in the paper. In Section 3, we present our proposed algorithm and highlight some of its features. Convergence analysis of the proposed method is discussed in Section 4. In Section 5 we present some numerical experiments and apply our result to image restoration problem. Finally, in Section 6 we give a concluding remark.

2 Preliminaries

In what follows, we assume that C is a nonempty, closed and convex subset of a real Hilbert space H. We denote the weak and strong convergence of a sequence \(\{x_n\}\) to a point \(x \in H\) by \(x_n \rightharpoonup x\) and \(x_n \rightarrow x\), respectively, and \(w_\omega (x_n)\) denotes the set of weak limits of \(\{x_n\},\) that is,

$$\begin{aligned} w_\omega (x_n)= \{x\in H: x_{n_j}\rightharpoonup x~ \text {for some subsequence}~ \{x_{n_j}\}~ \text {of}~ \{x_n\}\}. \end{aligned}$$

The metric projection \(P_C: H\rightarrow C\) (Taiwo et al. 2021) is defined, for each \(x\in H,\) as the unique element \(P_Cx\in C\) such that

$$\begin{aligned} ||x - P_Cx|| = \inf \{||x-z||: z\in C\}. \end{aligned}$$

It is known that \(P_C\) is nonexpansive and has the following properties (Alakoya and Mewomo 2022; Uzor et al. 2022):

1. \(||P_Cx - P_Cy||^2 \le \langle P_Cx - P_Cy, x -y\rangle \;\;\; \text {for all}\,\, x, y\in H;\)

2. for any \(x\in H\) and \(z\in C, z = P_Cx\) if and only if

$$\begin{aligned} \langle x - z, z - y\rangle \ge 0\;\;\; \text {for all}\,\, y\in C; \end{aligned}$$(2.1)

3. for any \(x\in H\) and \(y\in C,\)

$$\begin{aligned} ||P_Cx - y||^2 + ||x - P_Cx||^2 \le ||x - y||^2; \end{aligned}$$

4. for any \(x,y\in H\) with \(y\ne 0,\) let \(Q = \{z\in H: \langle y, z-x \rangle \le 0\}.\) Then, for all \(u\in H,\) \(P_Q(u)\) is given by

$$\begin{aligned} P_Q(u) = u - \max \Big \{0, \frac{\langle y, u-x \rangle }{||y||^2}\Big \}y, \end{aligned}$$

which gives an explicit formula for calculating the projection of any given point onto a half-space.
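The explicit half-space formula above translates directly into code. The following Python function is a minimal sketch of it; the function name and the two-dimensional test data are assumptions chosen for illustration.

```python
import numpy as np

def project_halfspace(u, y, x):
    """Project u onto Q = {z : <y, z - x> <= 0}, assuming y != 0."""
    u, y, x = (np.asarray(v, dtype=float) for v in (u, y, x))
    violation = np.dot(y, u - x)              # positive exactly when u lies outside Q
    return u - max(0.0, violation / np.dot(y, y)) * y

# Illustrative data: y = (1, 0) and x = (1, 0) give Q = {z in R^2 : z_1 <= 1}.
p_outside = project_halfspace([3.0, 2.0], [1.0, 0.0], [1.0, 0.0])  # moved onto z_1 = 1
p_inside = project_halfspace([0.5, 2.0], [1.0, 0.0], [1.0, 0.0])   # left unchanged
```

A point already in Q is returned unchanged because the maximum in the formula is then zero; this is what makes projections onto half-spaces so much cheaper than projections onto a general closed convex set.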

Lemma 2.1

Let H be a real Hilbert space. Then the following results hold for all \(x,y\in H\) and \(\delta \in \mathbb {R}:\)

(i) \(||x + y||^2 \le ||x||^2 + 2\langle y, x + y \rangle ;\)

(ii) \(||x + y||^2 = ||x||^2 + 2\langle x, y \rangle + ||y||^2;\)

(iii) \(||\delta x + (1-\delta ) y||^2 = \delta ||x||^2 + (1-\delta )||y||^2 -\delta (1-\delta )||x-y||^2.\)
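As a brief worked illustration of how these facts are related, (i) can be derived from (ii). Applying (ii) to the pair \(x+y\) and \(-y\) gives

$$\begin{aligned} ||x||^2 = ||(x+y)-y||^2 = ||x+y||^2 - 2\langle y, x+y\rangle + ||y||^2, \end{aligned}$$

so that \(||x+y||^2 = ||x||^2 + 2\langle y, x+y\rangle - ||y||^2 \le ||x||^2 + 2\langle y, x+y\rangle .\)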

Definition 2.2

An operator \(A:H\rightarrow H\) is said to be

(i) \(\alpha\)-strongly monotone, if there exists \(\alpha >0\) such that

$$\begin{aligned} \langle x-y, Ax-Ay\rangle \ge \alpha \Vert x-y\Vert ^2,~~ \forall ~x,y \in H; \end{aligned}$$

(ii) \(\alpha\)-inverse strongly monotone (\(\alpha\)-cocoercive), if there exists a positive real number \(\alpha\) such that

$$\begin{aligned} \langle x-y, Ax-Ay \rangle \ge \alpha ||Ax-Ay||^2,\quad \forall ~ x,y\in H; \end{aligned}$$

(iii) monotone, if

$$\begin{aligned} \langle x-y, Ax-Ay \rangle \ge 0,\quad \forall ~ x,y\in H; \end{aligned}$$

(iv) L-Lipschitz continuous, if there exists a constant \(L>0\) such that

$$\begin{aligned} ||Ax-Ay||\le L||x-y|| ,\quad \forall ~ x,y\in H. \end{aligned}$$

It is known that if A is \(\alpha\)-strongly monotone and L-Lipschitz continuous, then A is \(\frac{\alpha }{L^2}\)-inverse strongly monotone. Furthermore, \(\alpha\)-inverse strongly monotone operators are \(\frac{1}{\alpha }\)-Lipschitz continuous and monotone but the converse is not true.
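Both implications can be checked in one line each. If A is \(\alpha\)-strongly monotone and L-Lipschitz continuous, then for all \(x,y\in H,\)

$$\begin{aligned} \langle x-y, Ax-Ay\rangle \ge \alpha \Vert x-y\Vert ^2 \ge \frac{\alpha }{L^2}\Vert Ax-Ay\Vert ^2, \end{aligned}$$

where the second inequality uses \(\Vert Ax-Ay\Vert \le L\Vert x-y\Vert .\) Conversely, if A is \(\alpha\)-inverse strongly monotone, then the Cauchy-Schwarz inequality gives

$$\begin{aligned} \alpha \Vert Ax-Ay\Vert ^2 \le \langle x-y, Ax-Ay\rangle \le \Vert x-y\Vert \Vert Ax-Ay\Vert , \end{aligned}$$

so that \(\Vert Ax-Ay\Vert \le \frac{1}{\alpha }\Vert x-y\Vert ,\) while monotonicity is immediate from the nonnegativity of the left-hand side.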

Definition 2.3

Bauschke and Combettes (2017) A function \(c:H\rightarrow \mathbb {R}\) is said to be Gâteaux differentiable at \(x\in H,\) if there exists an element denoted by \(c^{\prime }(x) \in H\) such that

$$\begin{aligned} \lim _{h\rightarrow 0}\frac{c(x+hv)-c(x)}{h} = \langle v, c^{\prime }(x)\rangle ,\quad \forall ~v\in H, \end{aligned}$$

where \(c^{\prime }(x)\) is called the Gâteaux differential of c at x. If c is Gâteaux differentiable at each \(x\in H\), then c is said to be Gâteaux differentiable on H.

Definition 2.4

Bauschke and Combettes (2017) A convex function \(c:H\rightarrow \mathbb {R}\) is said to be subdifferentiable at a point \(x\in H\) if the set

$$\begin{aligned} \partial c(x) = \{\zeta \in H: c(y)\ge c(x)+\langle \zeta , y-x\rangle ,~~\forall ~y\in H\} \end{aligned}$$(2.2)

is nonempty. Each element in \(\partial {c}(x)\) is called a subgradient of c at x. If c is subdifferentiable at each \(x\in H\), then c is said to be subdifferentiable on H. It is also known that if c is Gâteaux differentiable at x, then c is subdifferentiable at x and \(\partial {c}(x)=\{c^{\prime }(x)\}\).

Definition 2.5

Let H be a real Hilbert space. A function \(c:H\rightarrow \mathbb {R}\cup \{+\infty \}\) is said to be weakly lower semi-continuous (w-lsc) at \(x\in H,\) if

$$\begin{aligned} c(x)\le \liminf _{n\rightarrow \infty }c(x_n) \end{aligned}$$

holds for every sequence \(\{x_n\}\) in H satisfying \(x_n\rightharpoonup x.\)

Lemma 2.6

Bauschke and Combettes (2017) Let \(c:H\rightarrow \mathbb {R}\cup \{+\infty \}\) be convex. Then the following are equivalent:

(i) c is weakly sequentially lower semi-continuous;

(ii) c is lower semi-continuous.

Lemma 2.7

He and Xu (2013) Assume that the solution set \(\text{ VI }(C,A)\) of the VIP (1.1) is nonempty, and C is defined as \(C:=\{x\in H~|~c(x)\le 0\}\), where \(c:H\rightarrow \mathbb {R}\) is a continuously differentiable convex function. Let \(p\in C\). Then \(p\in \text{ VI }(C,A)\) if and only if either

1. \(Ap=0,\) or

2. \(p\in \partial {C}\) and there exists \(\eta _p>0\) such that \(Ap=-\eta _p c^{\prime }(p),\) where \(\partial {C}\) denotes the boundary of C.

Lemma 2.8

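Dong et al. (2018) Let C be a nonempty closed and convex subset of H. Let \(A:C \rightarrow H\) be a continuous, monotone mapping and \(z\in C\), then

$$\begin{aligned} z\in VI(C,A) \iff \langle Ax, x-z\rangle \ge 0\;\;\; \text {for all}\,\, x\in C. \end{aligned}$$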

Lemma 2.9

Tan and Xu (1993) Suppose \(\{\lambda _n\}\) and \(\{\phi _n\}\) are two nonnegative real sequences such that

$$\begin{aligned} \lambda _{n+1}\le \lambda _n+\phi _n,\quad \forall ~n\ge 1. \end{aligned}$$

If \(\sum _{n=1}^{\infty }\phi _n<+\infty ,\) then \(\lim \limits _{n\rightarrow \infty }\lambda _n\) exists.

Lemma 2.10

Saejung and Yotkaew (2012) Let \(\{a_n\}\) be a sequence of nonnegative real numbers, \(\{\alpha _{n}\}\) be a sequence in (0, 1) with \(\sum _{n=1}^{\infty }\alpha _{n}=\infty ,\) and \(\{b_n\}\) be a sequence of real numbers. Assume that

$$\begin{aligned} a_{n+1}\le (1-\alpha _n)a_n+\alpha _nb_n\quad \text {for all}\,\, n\ge 1. \end{aligned}$$

If \(\limsup _{k\rightarrow \infty }b_{n_k}\le 0\) for every subsequence \(\{a_{n_k}\}\) of \(\{a_n\}\) satisfying the condition

$$\begin{aligned} \liminf _{k\rightarrow \infty }(a_{n_k+1}-a_{n_k})\ge 0, \end{aligned}$$

then \(\lim _{n\rightarrow \infty }a_n=0.\)

3 Proposed Method

In this section, we present our proposed method and discuss some of its important features. We begin with the following assumptions under which our strong convergence result is obtained.

Assumption 3.1

Suppose that the following conditions hold:

1. The set C is defined by

$$\begin{aligned} C=\{x\in H~|~c(x)\le 0\}, \end{aligned}$$(3.1)

where \(c:H\rightarrow \mathbb {R}\) is a continuously differentiable convex function such that \(c^{\prime }(\cdot )\) is \(L_1\)-Lipschitz continuous (however, prior knowledge of the Lipschitz constant is not required).

2. (a) \(A:H \rightarrow H\) is monotone and \(L_2\)-Lipschitz continuous (however, prior knowledge of the Lipschitz constant is not needed).

   (b) There exists \(K>0\) such that \(\Vert Ax\Vert \le K\Vert c'(x)\Vert\) for all \(x\in \partial C.\)

   (c) The solution set VI(C, A) is nonempty.

3. \(\{\alpha _n\}^{\infty }_{n=1},~~\{\beta _n\}^{\infty }_{n=1}\) and \(\{\xi _n\}^{\infty }_{n=1}\) are non-negative sequences satisfying the following conditions:

   (a) \(\alpha _n \in (0,1), ~~\) \(\lim \limits _{n\rightarrow \infty } \alpha _n=0,~~\sum _{n=1}^{\infty }\alpha _n= \infty , \lim \limits _{n\rightarrow \infty }\dfrac{\xi _n}{\alpha _n}=0, \theta>0,\lambda _1>0.\)

   (b) \(\{\beta _n\}\subset [a,b]\subset (0,1-\alpha _n),\delta \in \Big (0,-K+\sqrt{1+K^2}\Big ).\)

   (c) \(\{\phi _n\}\) is a nonnegative sequence such that \(\sum _{n=1}^\infty \phi _n<+\infty .\)

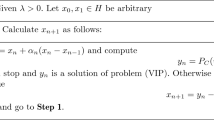

Algorithm 3.2

Step 0. Select two arbitrary initial points \(x_0, x_1\in H\) and set \(n=1.\)

Step 1. Given the \((n-1)\)th and nth iterates, choose \(\theta _n\) such that \(0\le \theta _n\le \hat{\theta }_n\) with \(\hat{\theta }_n\) defined by

$$\begin{aligned} \hat{\theta }_n = {\left\{ \begin{array}{ll} \min \Big \{\theta ,~ \frac{\xi _n}{\Vert x_n - x_{n-1}\Vert }\Big \}, \quad \text {if}~ x_n \ne x_{n-1},\\ \theta , \hspace{95pt} \text {otherwise.} \end{array}\right. } \end{aligned}$$(3.2)

Step 2. Compute

$$\begin{aligned} w_n=x_n + \theta _n(x_n-x_{n-1}). \end{aligned}$$

Step 3. Construct the half-space

$$\begin{aligned} C_n=\{x\in H: c(w_n)+\langle c'(w_n), x-w_n\rangle \le 0\}, \end{aligned}$$

and compute

$$\begin{aligned} y_n= P_{C_n}(w_n-\lambda _nAw_n), \end{aligned}$$

$$\begin{aligned} z_n= P_{C_n}(w_n-\lambda _nAy_n). \end{aligned}$$

If \(c(y_n)\le 0\) and either \(w_n-y_n=0\) or \(Ay_n=0,\) then stop; \(y_n\) is a solution of the VIP. Otherwise, go to Step 4.

Step 4. Compute

$$\begin{aligned} x_{n+1}= (1-\alpha _n-\beta _n)w_n+\beta _nz_n. \end{aligned}$$

Step 5. Compute

$$\begin{aligned} \lambda _{n+1}={\left\{ \begin{array}{ll} \min \left\{ \frac{\delta \Vert w_n-y_n\Vert }{\Vert Aw_n-Ay_n\Vert +\Vert c'(w_n)-c'(y_n)\Vert },~\lambda _n+\phi _n\right\} ,&{} \text{ if }~\Vert Aw_n-Ay_n\Vert +\Vert c'(w_n)-c'(y_n)\Vert \ne 0,\\ \lambda _n+\phi _n,&{} \text{ otherwise }. \end{array}\right. } \end{aligned}$$(3.3)

Set \(n:=n+1\) and go back to Step 1.
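The steps of Algorithm 3.2 can be sketched in Python as follows. This is an illustrative sketch under stated assumptions and not the implementation used for the numerical experiments: it always takes \(\theta _n=\hat{\theta }_n,\) uses the control sequences \(\alpha _n=\frac{2}{3n+2},\) \(\beta _n=\frac{1-\alpha _n}{2},\) \(\xi _n=\alpha _n^2,\) \(\phi _n=\frac{20}{(2n+5)^2}\) from Section 5, and is run on a hypothetical three-dimensional truncation of Example 5.2 (\(A(x)=2x,\) \(c(x)=\Vert x\Vert ^2-1\)). All function names are assumptions.

```python
import numpy as np

def project_cn(u, w, c_w, g_w):
    """Project u onto C_n = {x : c(w) + <c'(w), x - w> <= 0} (half-space formula)."""
    value = c_w + np.dot(g_w, u - w)
    gg = np.dot(g_w, g_w)
    if value <= 0.0 or gg == 0.0:      # u is already in C_n, or C_n is the whole space
        return u
    return u - (value / gg) * g_w

def itsegm(A, c, grad_c, x0, x1, delta, theta=0.87, lam=0.93, iters=300):
    x_prev, x = np.asarray(x0, dtype=float), np.asarray(x1, dtype=float)
    for n in range(1, iters + 1):
        alpha = 2.0 / (3 * n + 2)
        beta = (1.0 - alpha) / 2.0
        xi, phi = alpha ** 2, 20.0 / (2 * n + 5) ** 2
        # Step 1: inertial parameter, here chosen as theta_n = theta_hat_n from (3.2)
        gap = np.linalg.norm(x - x_prev)
        theta_n = theta if gap == 0.0 else min(theta, xi / gap)
        # Step 2: inertial extrapolation
        w = x + theta_n * (x - x_prev)
        # Step 3: two projections onto the same half-space C_n
        Aw, c_w, g_w = A(w), c(w), grad_c(w)
        y = project_cn(w - lam * Aw, w, c_w, g_w)
        Ay = A(y)
        z = project_cn(w - lam * Ay, w, c_w, g_w)
        if c(y) <= 0.0 and (not np.any(w - y) or not np.any(Ay)):
            return y                   # stopping rule of Step 3
        # Step 4: relaxation step
        x_prev, x = x, (1.0 - alpha - beta) * w + beta * z
        # Step 5: self-adaptive, non-monotonic step size (3.3)
        denom = np.linalg.norm(Aw - Ay) + np.linalg.norm(g_w - grad_c(y))
        lam_cap = lam + phi
        lam = min(delta * np.linalg.norm(w - y) / denom, lam_cap) if denom != 0.0 else lam_cap
    return x

# Assumed data: truncation of Example 5.2 to R^3, with delta = 0.4.
x_hat = itsegm(lambda x: 2.0 * x, lambda x: float(np.dot(x, x) - 1.0),
               lambda x: 2.0 * x, x0=[2.0, 1.0, 0.5], x1=[-3.0, 1.0, -1.0 / 3.0], delta=0.4)
```

For this test problem the only solution of the VIP is the origin, so the norm of the returned point serves as an error measure. Neither Lipschitz constant is passed to the routine; only \(\delta ,\) \(\theta\) and \(\lambda _1\) are supplied.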

Remark 3.3

- Observe that, unlike the results of Cao and Guo (2020) and Ma and Wang (2022), knowledge of the Lipschitz constant of the cost operator A and knowledge of the Lipschitz constant of the Gâteaux differential \(c'(\cdot )\) of \(c(\cdot )\) are not required to implement our algorithm.

- Moreover, our algorithm does not require any linesearch technique. Rather, we employ a more efficient step size rule in (3.3), which generates a non-monotonic sequence of step sizes. The step size is constructed such that it reduces the dependence of the algorithm on the initial step size \(\lambda _1.\)

- We also remark that our proposed algorithm generates a strongly convergent sequence, which converges to a minimum-norm solution of the VIP.

Remark 3.4

(i) Observe that by the definition of C in (3.1) and the construction of \(C_n,\) we have \(C\subset C_n.\)

(ii) By Assumption 3.1 3(a), it can easily be verified from (3.2) that

$$\begin{aligned} \lim _{n\rightarrow \infty }\theta _n||x_n - x_{n-1}|| = 0 ~~ \text {and}~~ \lim _{n\rightarrow \infty }\frac{\theta _n}{\alpha _n}||x_n - x_{n-1}|| = 0. \end{aligned}$$
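For completeness, both observations can be verified in a few lines. For (i), let \(x\in C.\) Since c is convex and differentiable, the subdifferential inequality (2.2) at \(w_n\) gives

$$\begin{aligned} c(w_n)+\langle c'(w_n), x-w_n\rangle \le c(x)\le 0, \end{aligned}$$

so \(x\in C_n.\) For (ii), the choice \(0\le \theta _n\le \hat{\theta }_n\) in (3.2) yields \(\theta _n\Vert x_n-x_{n-1}\Vert \le \xi _n\) for all \(n\ge 1,\) and hence

$$\begin{aligned} \frac{\theta _n}{\alpha _n}\Vert x_n-x_{n-1}\Vert \le \frac{\xi _n}{\alpha _n}\rightarrow 0 \quad \text {and}\quad \theta _n\Vert x_n-x_{n-1}\Vert \le \alpha _n\cdot \frac{\xi _n}{\alpha _n}\rightarrow 0 \quad \text {as}~ n\rightarrow \infty . \end{aligned}$$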

Remark 3.5

Observe that by (3.1) and Lemma 4.5 together with the formulation of the variational inequality problem, it is clear that if \(c(y_n)\le 0\) and either \(w_n-y_n=0\) or \(Ay_n=0,\) then \(y_n\) is a solution of the VIP.

4 Convergence Analysis

First, we establish some lemmas which will be needed to prove our strong convergence theorem for the proposed algorithm.

Lemma 4.1

Let \(\{\lambda _n\}\) be a sequence generated by Algorithm 3.2. Then, we have \(\lim \limits _{n\rightarrow \infty }\lambda _n=\lambda ,\) where \(\lambda \in \Big [\min \{\frac{\delta }{L_2+L_1},\lambda _1\},\lambda _1+\Phi \Big ]\) and \(\Phi =\sum \limits _{n=1}^{\infty }\phi _n.\)

Proof

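Since A is \(L_2\)-Lipschitz continuous and \(c'(\cdot )\) is \(L_1\)-Lipschitz continuous, whenever \(\Vert Aw_n-Ay_n\Vert +\Vert c'(w_n)-c'(y_n)\Vert \ne 0\) we have

$$\begin{aligned} \frac{\delta \Vert w_n-y_n\Vert }{\Vert Aw_n-Ay_n\Vert +\Vert c'(w_n)-c'(y_n)\Vert }\ge \frac{\delta \Vert w_n-y_n\Vert }{L_2\Vert w_n-y_n\Vert +L_1\Vert w_n-y_n\Vert }=\frac{\delta }{L_2+L_1}. \end{aligned}$$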

Thus, by the definition of \(\lambda _{n+1},\) the sequence \(\{\lambda _n\}\) has lower bound \(\min \{\frac{\delta }{L_2+L_1},\lambda _1\}\) and upper bound \(\lambda _1 + \Phi .\) By Lemma 2.9, \(\lim \limits _{n\rightarrow \infty }\lambda _n\) exists, and we denote it by \(\lambda =\lim \limits _{n\rightarrow \infty }\lambda _n.\) It is clear that \(\lambda \in \big [\min \{\frac{\delta }{L_2+L_1},\lambda _1\},\lambda _1+\Phi \big ]\).\(\square\)
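Spelled out, the two bounds follow by induction on n. Since the first entry of the minimum in (3.3) is never smaller than \(\frac{\delta }{L_2+L_1},\) and \(\phi _n\ge 0,\) we have in both cases of (3.3)

$$\begin{aligned} \lambda _{n+1}\ge \min \Big \{\frac{\delta }{L_2+L_1},~\lambda _n+\phi _n\Big \}\ge \min \Big \{\frac{\delta }{L_2+L_1},~\lambda _n\Big \}\ge \cdots \ge \min \Big \{\frac{\delta }{L_2+L_1},~\lambda _1\Big \}, \end{aligned}$$

while

$$\begin{aligned} \lambda _{n+1}\le \lambda _n+\phi _n\le \lambda _1+\sum _{k=1}^{n}\phi _k\le \lambda _1+\Phi . \end{aligned}$$

The second chain also shows that the hypothesis \(\lambda _{n+1}\le \lambda _n+\phi _n\) of Lemma 2.9 is satisfied.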

Lemma 4.2

Let \(\{x_n\}\) be a sequence generated by Algorithm 3.2 under Assumption 3.1. Then, the following inequality holds for all \(p\in VI(C,A):\)

Proof

From (3.3), we have

which implies that

Let \(p\in VI(C,A).\) For convenience, we set \(v_n=w_n-\lambda _nAy_n\) and we have \(z_n=P_{C_n}(v_n).\) Since \(p\in C\subset C_n,\) then by applying (2.1) we obtain

Observe that

Since \(y_n=P_{C_n}(w_n-\lambda _nAw_n)\) and \(z_n\in C_n,\) it follows from (2.1) that

Applying Young’s inequality and (4.2), we get

Furthermore, by the monotonicity of A, we get

Now, applying (4.4)-(4.7) in (4.3) we get

Now, we consider the following two cases:

Case 1: \(Ap=0.\) In this case, the desired inequality (4.1) follows from (4.8).

Case 2: \(Ap\ne 0.\) By Lemma 2.7, \(p\in \partial C\) and there exists \(\eta _p >0\) such that \(Ap=-\eta _p c'(p).\) Since \(p\in \partial C,\) we have \(c(p)=0.\) By the subdifferential inequality (2.2), we have

$$\begin{aligned} \begin{aligned} c(y_n)&\ge c(p)+\langle c'(p), y_n-p\rangle \\&=\frac{-1}{\eta _p}\langle Ap, y_n-p\rangle . \end{aligned} \end{aligned}$$

From the last inequality we obtain

Since \(y_n\in C_n,\) we obtain

Again, by the sub-differential inequality (2.2), we get

Adding (4.10) and (4.11), we have

Observe that by Assumption 3.1 2(b)

Hence, we have

Applying (4.2), (4.13) and (4.15) in (4.8), we obtain

which is the required inequality (4.1).\(\square\)

Since the limit of \(\{\lambda _n\}\) exists, \(\lim \limits _{n\rightarrow \infty }\lambda _n=\lim \limits _{n\rightarrow \infty }\lambda _{n+1}.\) Hence, by the conditions on the control parameters we have

Therefore, there exists \(n_0\ge 1\) such that for all \(n\ge n_0\) we have

Thus, from (4.1) we have that for all \(n\ge n_0,\)

Lemma 4.3

Let \(\{x_n\}\) be a sequence generated by Algorithm 3.2 under Assumption 3.1. Then, \(\{x_n\}\) is bounded.

Proof

Let \(p\in VI(C,A).\) Then, by the definition of \(w_n\) we have

By Remark 3.4 (ii), there exists \(M_1 > 0\) such that

Thus, it follows from (4.19) that

By the definition of \(x_{n+1},\) we have

Applying Lemma 2.1(ii) and using (4.18) we have

which implies that

Now, applying (4.20) and (4.22) in (4.21), we have for all \(n\ge n_0\)

Hence, \(\{x_n\}\) is bounded. Consequently, \(\{w_n\}, \{y_n\}\) and \(\{z_n\}\) are all bounded.\(\square\)

Lemma 4.4

Suppose \(\{x_n\}\) is a sequence generated by Algorithm 3.2 such that Assumption 3.1 holds. Then, the following inequality holds for all \(p\in VI(C,A):\)

Proof

Let \(p\in VI(C,A).\) Then, by applying Lemma 2.1(ii) together with the Cauchy-Schwarz inequality we have

where \(M_2:= \sup _{n\in \mathbb {N}}\{\Vert x_n - p\Vert , \theta _n\Vert x_n - x_{n-1}\Vert \}>0.\)

Next, by applying (4.2) together with the nonexpansiveness of \(P_{C_n}\) we have

Using (4.23) and applying Lemmas 2.1 and 4.2, we have

which is the required inequality.\(\square\)

Lemma 4.5

Let \(\{w_n\}\) and \(\{y_n\}\) be two sequences generated by Algorithm 3.2 under Assumption 3.1. If there exists a subsequence \(\{w_{n_k}\}\) of \(\{w_n\},\) which converges weakly to \(x^*\in H\) and \(\lim \limits _{k\rightarrow \infty }\Vert w_{n_k}-y_{n_k}\Vert =0,\) then \(x^*\in VI(C,A).\)

Proof

Suppose \(\{w_n\}\) and \(\{y_n\}\) are two sequences generated by Algorithm 3.2 with subsequences \(\{w_{n_k}\}\) and \(\{y_{n_k}\},\) respectively such that \(w_{n_k}\rightharpoonup x^*.\) Then by the hypothesis of the lemma we have \(y_{n_k}\rightharpoonup x^*.\) Also, since \(y_{n_k} \in C_{n_k},\) then by the definition of \(C_n\) we have

Applying the Cauchy-Schwarz inequality, we obtain from the last inequality

Since \(c'(\cdot )\) is continuous and \(\{w_{n_k}\}\) is bounded, then \(\{c'(w_{n_k})\}\) is bounded, that is, there exists a constant \(M>0\) such that \(\Vert c'(w_{n_k})\Vert \le M \; \text {for all} \; k\ge 0\). Then, from (4.25) we obtain

Since \(c(\cdot )\) is continuous, it is lower semi-continuous. Also, since \(c(\cdot )\) is convex, by Lemma 2.6 \(c(\cdot )\) is weakly lower semi-continuous. Hence, it follows from (4.26) and the definition of weak lower semi-continuity that

which implies that \(x^* \in C.\) By property (2.1) of \(P_{C_n}\), we obtain

Since A is monotone, we have

Letting \(k\rightarrow \infty\) in the last inequality, and applying \(\lim \limits _{k\rightarrow \infty }||y_{n_k} - w_{n_k}|| = 0\) and \(\lim \limits _{k\rightarrow \infty }\lambda _{n_k}=\lambda >0,\) we have

Applying Lemma 2.8, we obtain \(x^*\in VI(C, A)\).\(\square\)

At this point, we state and prove the strong convergence theorem for our proposed algorithm.

Theorem 4.6

Let \(\{x_n\}\) be a sequence generated by Algorithm 3.2 under Assumption 3.1. Then \(\{x_n\}\) converges strongly to \(\hat{x}\in VI(C,A),\) where \(\Vert \hat{x}\Vert = \min \{\Vert p\Vert :p\in VI(C,A)\}.\)

Proof

Since \(\Vert \hat{x}\Vert = \min \{\Vert p\Vert :p\in VI(C,A)\},\) we have \(\hat{x}=P_{VI(C,A)}(0).\) From Lemma 4.4, we have

where \(b_n= {3M_2 (1-\alpha _n)^2\frac{\theta _n}{\alpha _n}\Vert x_n - x_{n-1}\Vert + 2\langle \hat{x},\hat{x}-x_{n+1}\rangle .}\) Now, we claim that the sequence \(\{\Vert x_n-\hat{x}\Vert \}\) converges to zero. To establish this, by Lemma 2.10 it suffices to show that \(\limsup \limits _{k\rightarrow \infty }b_{n_k}\le 0\) for every subsequence \(\{\Vert x_{n_k}-\hat{x}\Vert \}\) of \(\{\Vert x_n-\hat{x}\Vert \}\) satisfying

Suppose that \(\{\Vert x_{n_k}-\hat{x}\Vert \}\) is a subsequence of \(\{\Vert x_n-\hat{x}\Vert \}\) such that (4.29) holds. Again, from Lemma 4.4 we obtain

Applying (4.29), Remark 3.4 and the fact that \(\lim \limits _{k\rightarrow \infty }\alpha _{n_k}=0,\) we get

By the conditions on \(\alpha _{n_k}, \beta _{n_k}\) and (4.17), we have

Consequently, from (4.24) we get

By Remark 3.4 (ii), we have

Next, applying (4.31) and (4.32) we have

Now, using (4.32), (4.33) and the fact that \(\lim \limits _{k\rightarrow \infty }\alpha _{n_k}=0\) we obtain

Since \(\{x_n\}\) is bounded, then \(w_\omega (x_n)\) is nonempty. Let \(x^*\in w_\omega (x_n)\) be an arbitrary element. Then, there exists a subsequence \(\{x_{n_k}\}\) of \(\{x_n\}\) such that \(x_{n_k}\rightharpoonup x^*\) as \(k\rightarrow \infty .\) It follows from (4.32) that \(w_{n_k}\rightharpoonup x^*\) as \(k\rightarrow \infty .\) Moreover, by Lemma 4.5 and (4.30) we have \(x^*\in VI(C,A).\) Consequently, we have \(w_\omega (x_n)\subset VI(C,A).\)

Since \(\{x_{n_k}\}\) is bounded, there exists a subsequence \(\{x_{n_{k_j}}\}\) of \(\{x_{n_k}\}\) such that \(x_{n_{k_j}}\rightharpoonup q\) and

Since \(\hat{x}=P_{VI(C,A)}(0),\) we have

Hence, it follows from the last inequality and (4.34) that

Next, by Remark 3.4 (ii), (4.31) and (4.35) we have \(\limsup \limits _{k\rightarrow \infty }b_{n_k}\le 0.\) Consequently, by invoking Lemma 2.10 it follows from (4.28) that \(\{\Vert x_n-\hat{x}\Vert \}\) converges to zero as required.\(\square\)

5 Numerical Examples

In this section, we present some numerical experiments to illustrate the performance of our method, Algorithm 3.2, in comparison with Algorithms 7.1, 7.2, 7.3, and 7.4. All numerical computations were carried out using MATLAB R2019b.

In our computations, we choose \(\alpha _n = \frac{2}{3n+2}\), \(\beta _n=\frac{1-\alpha _n}{2}\), \(\xi _n = (\frac{2}{3n+2})^2\), \(\phi _n=\frac{20}{(2n+5)^2}\), \(\theta =0.87\) and \(\lambda _1=0.93\) in our Algorithm 3.2. For the other methods, we choose \(\tau =0.0018,\rho _n=\frac{n}{4n+1}\) in Algorithm 7.1, \(\lambda _{-1}=0.0018,\varphi =0.6,\mu =0.8\) in Algorithm 7.2, \(l= 0.018\) in Algorithm 7.3, and \(f(x)=\frac{1}{3}x\) in Algorithms 7.3 and 7.4.
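As a quick sanity check, the control sequences chosen for Algorithm 3.2 can be compared numerically with the requirements in Assumption 3.1 (3). The short Python sketch below does this over a finite range only, so it illustrates the conditions rather than proving them; the function names and the cut-off N are assumptions.

```python
def alpha(n):
    return 2.0 / (3 * n + 2)

def beta(n):
    return (1.0 - alpha(n)) / 2.0

def xi(n):
    return alpha(n) ** 2

def phi(n):
    return 20.0 / (2 * n + 5) ** 2

N = 100000
ratio_tail = xi(N) / alpha(N)                          # equals alpha(N), so it tends to 0
phi_partial = sum(phi(n) for n in range(1, N + 1))     # partial sums stay bounded
alpha_partial = sum(alpha(n) for n in range(1, N + 1)) # grows like (2/3) * log(N)
beta_ok = all(0.0 < beta(n) < 1.0 - alpha(n) for n in range(1, 1001))
```

Here \(\xi _n/\alpha _n=\alpha _n\rightarrow 0,\) the series \(\sum \phi _n\) is dominated by a multiple of \(\sum 1/n^2,\) and \(\sum \alpha _n\) diverges by comparison with the harmonic series.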

Example 5.1

Let the feasible set be \(C=\{x\in \mathbb {R}^2: c(x):=x_1^2+x_2-2\le 0\}\) and define the operator \(A:\mathbb {R}^2\rightarrow \mathbb {R}^2\) by \(A(x)=(6h(x_1), 4x_1+2x_2),\) where \(x=(x_1,x_2)\in \mathbb {R}^2\) and

Then, it can easily be verified that A is monotone and \(2\sqrt{9e^2+5}\)-Lipschitz continuous. Also, c is a continuously differentiable convex function and \(c'\) is 2-Lipschitz continuous. Moreover, we have that \(K=6\sqrt{e^2+1}\) (see He et al. 2018). Hence, we choose \(\delta =0.025.\)
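The stated properties of \(c\) can be checked directly. The short computation below is included for convenience and uses only the definition of \(c\) given above:

$$\begin{aligned} c'(x)=(2x_1,\,1),\qquad \Vert c'(x)-c'(y)\Vert =2|x_1-y_1|\le 2\Vert x-y\Vert \quad \text {for all } x,y\in \mathbb {R}^2. \end{aligned}$$

Hence \(c'\) is 2-Lipschitz continuous, and \(c\) is convex because its Hessian \(\textrm{diag}(2,0)\) is positive semidefinite.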

We test the algorithms for four different initial points as follows:

Case 1: \(x_0 = (0.5,1), x_1 = (1,0.7);\)

Case 2: \(x_0 = (1.3, 0.2), x_1 = (0.3, 1.5);\)

Case 3: \(x_0 = (0.7,0.9), x_1= (0.4,0.8);\)

Case 4: \(x_0 = (1.2, 0.3), x_1 = (0.9, 1.1).\)

The stopping criterion used for this example is \(\Vert x_{n+1}-x_{n}\Vert < 10^{-2}\). We plot the graphs of errors against the number of iterations in each case. The numerical results are reported in Figs. 1, 2, 3, and 4 and Table 1.

Example 5.2

Let \(H=(\ell _2(\mathbb {R}), \Vert \cdot \Vert _2),\) where \(\ell _2(\mathbb {R}):=\{x=(x_1,x_2,\ldots ,x_n,\ldots )\), \(x_j\in \mathbb {R}:\sum _{j=1}^{\infty }|x_j|^2<+\infty \}\), \(||x||_2=(\sum _{j=1}^{\infty }|x_j|^2)^{\frac{1}{2}}\) and \(\langle x,y \rangle = \sum _{j=1}^\infty x_jy_j\) for all \(x,y\in \ell _2(\mathbb {R}).\) Let \(C=\{x \in H: c(x):=\Vert x\Vert ^2-1 \le 0\},\) and define the operator \(A:H\rightarrow H\) by \(A(x)=2x,~\forall x \in H.\) Then A is monotone and 2-Lipschitz continuous. Moreover, \(K=1\) and we choose \(\delta =0.4.\)
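For completeness, the monotonicity and Lipschitz continuity of \(A(x)=2x\) follow from a one-line computation, valid for all \(x,y\in H\):

$$\begin{aligned} \langle A(x)-A(y), x-y\rangle = 2\Vert x-y\Vert ^2\ge 0,\qquad \Vert A(x)-A(y)\Vert = 2\Vert x-y\Vert . \end{aligned}$$

In particular, A is strongly monotone, so the VIP has a unique solution. Since \(0\in C\) and \(\langle A(0), y-0\rangle =0\) for all \(y\in C\), this solution is \(x=0\), which is then trivially the minimum-norm solution.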

We choose different initial values as follows:

Case 1: \(x_0 = (2, 1, \frac{1}{2}, \cdots ),\) \(x_1 = (-3, 1, -\frac{1}{3},\cdots );\)

Case 2: \(x_0 = (-2, 1, -\frac{1}{2},\cdots ),\) \(x_1 = (-4, 1, -\frac{1}{4}, \cdots );\)

Case 3: \(x_0 = (2, 1, \frac{1}{2}, \cdots ),\) \(x_1 = (-5, 1, -\frac{1}{5}, \cdots );\)

Case 4: \(x_0 = (-2, 1, -\frac{1}{2},\cdots ),\) \(x_1 = (-3, 1, -\frac{1}{3}, \cdots ).\)

The stopping criterion used for this example is \(\Vert x_{n+1}-x_{n}\Vert < 10^{-2}\). We plot the graphs of errors against the number of iterations in each case. The numerical results are reported in Figs. 5, 6, 7, and 8 and Table 2.

Example 5.3

(Application to Image Restoration Problem)

In this last example, we apply our result to an image restoration problem. We compare the efficiency of our Algorithm 3.2 with that of Algorithms 7.1, 7.3, and 7.4.

We recall that the image restoration problem can be formulated as the following linear inverse problem:

$$\begin{aligned} v=Dx+e, \end{aligned}$$(5.1)

where \(x\in \mathbb {R}^{N}\) is the original image, \(D\in \mathbb {R}^{M\times N}\) is the blurring matrix, \(v\in \mathbb {R}^{M}\) is the observed blurred image and e is the Gaussian noise. It is known that solving Problem (5.1) is equivalent to solving the convex minimization problem

$$\begin{aligned} \min _{x\in \mathbb {R}^{N}}\Big \{\frac{1}{2}\Vert Dx-v\Vert _2^2+\lambda \Vert x\Vert _1\Big \}, \end{aligned}$$(5.2)

where \(\lambda >0\) is the regularization parameter, \(\Vert \cdot \Vert _2\) denotes the Euclidean norm and \(\Vert \cdot \Vert _1\) is the \(\ell _1\)-norm. Our task here is to restore the original image x given the data of the blurred image v. The minimization problem (5.2) can be expressed as a variational inequality problem by setting \(A(x):=D^T(Dx-v).\) It is known in this case that the operator A is monotone and \(\Vert D^TD\Vert\)-Lipschitz continuous. We consider the \(291 \times 240\) Pout, \(159 \times 191\) Cell, \(223 \times 298\) Shadow, and \(256 \times 256\) Cameraman images from the MATLAB Image Processing Toolbox. Moreover, we use a Gaussian blur of size \(7\times 7\) and standard deviation \(\sigma =4\) to create the blurred and noisy image (observed image) and use the algorithms to recover the original image from the blurred image. Also, we measure the quality of the restored image using the signal-to-noise ratio (SNR) defined by

$$\begin{aligned} \text {SNR}=20\log _{10}\frac{\Vert x\Vert _2}{\Vert x-x^*\Vert _2}, \end{aligned}$$

where x is the original image and \(x^*\) is the restored image. Note that the larger the SNR, the better the quality of the restored image. We choose the initial values as \(x_0 = {\textbf {0}} \in \mathbb {R}^{N}\) and \(x_1 = {\textbf {1}} \in \mathbb {R}^{N}.\) The results are reported in Table 3, which shows the SNR values for each algorithm, and in Figs. 9, 10, 11, 12, 13, 14, 15, and 16, which show the original, blurred and restored images. The main advantage of our proposed Algorithm 3.2 over the other algorithms compared is the higher SNR values of the recovered images.
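To make the formulation concrete, the following Python sketch builds the operator \(A(x)=D^T(Dx-v)\), estimates the Lipschitz constant \(\Vert D^TD\Vert\) by power iteration, and evaluates an SNR. It is not the MATLAB code used for the experiments: the helper names (`make_operator`, `lipschitz_estimate`, `snr`) and the tiny \(2\times 3\) matrix `D` are hypothetical, and the SNR is taken in its common form \(20\log _{10}(\Vert x\Vert _2/\Vert x-x^*\Vert _2)\), which is an assumption of this sketch.

```python
import math

def matvec(M, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

def norm(x):
    return math.sqrt(sum(t * t for t in x))

def make_operator(D, v):
    """Return the map A with A(x) = D^T (D x - v)."""
    Dt = transpose(D)
    def A(x):
        residual = [r - vi for r, vi in zip(matvec(D, x), v)]
        return matvec(Dt, residual)
    return A

def lipschitz_estimate(D, iters=200):
    """Estimate ||D^T D|| (its largest eigenvalue) by power iteration.

    Assumes the all-ones start vector is not orthogonal to the top eigenvector.
    """
    Dt = transpose(D)
    x = [1.0] * len(D[0])
    lam = 0.0
    for _ in range(iters):
        y = matvec(Dt, matvec(D, x))
        lam = norm(y)              # equals ||D^T D x|| with ||x|| = 1 after the first pass
        if lam == 0.0:
            return 0.0
        x = [t / lam for t in y]
    return lam

def snr(original, restored):
    """Assumed definition: 20 * log10(||x|| / ||x - x*||), in decibels."""
    error = norm([a - b for a, b in zip(original, restored)])
    if error == 0.0:
        return math.inf
    return 20.0 * math.log10(norm(original) / error)

if __name__ == "__main__":
    D = [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5]]   # toy averaging "blur" (hypothetical)
    x_true = [1.0, 2.0, 3.0]
    v = matvec(D, x_true)                     # noise-free observation
    A = make_operator(D, v)
    print(A(x_true), lipschitz_estimate(D), snr(x_true, [1.0, 2.0, 2.5]))
```

The monotonicity of A is visible from the identity \(\langle A(x)-A(y),x-y\rangle =\Vert D(x-y)\Vert _2^2\ge 0\). For real images one would use a sparse or convolution-based representation of D rather than nested lists.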

6 Conclusion

In this paper, we studied the monotone VIP and introduced a new inertial two-subgradient extragradient method for approximating its solution in Hilbert spaces. Unlike several of the existing methods in the literature, our method does not require any linesearch technique, which could be time-consuming to implement. Rather, we employ a more efficient self-adaptive step size technique that generates a non-monotonic sequence of step sizes. Moreover, under mild conditions, we proved that the sequence generated by our proposed algorithm converges strongly to a minimum-norm solution of the VIP. Finally, we presented several numerical experiments and applied our result to an image restoration problem. Our result complements the existing results in the literature in this direction.

Data Availability

Not applicable.

References

Alakoya TO, Mewomo OT (2022) Viscosity s-iteration method with inertial technique and self-adaptive step size for split variational inclusion, equilibrium and fixed point problems. Comput Appl Math 41(1):31–39

Alakoya TO, Mewomo OT, Shehu Y (2022) Strong convergence results for quasimonotone variational inequalities. Math Methods Oper Res 47:30

Alakoya TO, Uzor VA, Mewomo OT, Yao J-C (2022) On system of monotone variational inclusion problems with fixed-point constraint. J Inequal Appl 47:30

Antipin AS (1976) On a method for convex programs using a symmetrical modification of the Lagrange function. Ekonom Mat Metody 12(6):1164–1173

Aubin J-P, Ekeland I (1984) Applied nonlinear analysis. Wiley, New York

Aussel D, Gupta R, Mehra A (2016) Evolutionary variational inequality formulation of the generalized Nash equilibrium problem. J Optim Theory Appl 169:74–90

Bauschke HH, Combettes PL (2001) A weak-to-strong convergence principle for Fejér-monotone methods in Hilbert spaces. Math Oper Res 26(2):248–264

Bauschke HH, Combettes PL (2017) Convex analysis and monotone operator theory in Hilbert spaces, 2nd edn. Springer, New York

Baiocchi C, Capelo A (1984) Variational and quasivariational inequalities; applications to free boundary problems. Wiley, New York

Cai G, Shehu Y, Iyiola OS (2022) Inertial Tseng’s extragradient method for solving variational inequality problems of pseudo-monotone and non-Lipschitz operators. J Ind Manag Optim 18(4):2873–2902

Cao Y, Guo K (2020) On the convergence of inertial two-subgradient extragradient method for solving variational inequality problems. Optimization 69(6):1237–1253

Censor Y, Gibali A, Reich S (2012a) Algorithms for the split variational inequality problem. Numer Algorithms 59:301–323

Censor Y, Gibali A, Reich S (2012b) Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 61(9):1119–1132

Censor Y, Gibali A, Reich S (2011) The subgradient extragradient method for solving variational inequalities in Hilbert space. J Optim Theory Appl 148(2):318–335

Ceng LC, Petrusel A, Qin X, Yao JC (2021) Two inertial subgradient extragradient algorithms for variational inequalities with fixed-point constraints. Optimization 70:1337–1358

Ciarciá C, Daniele P (2016) New existence theorems for quasi-variational inequalities and applications to financial models. Eur J Oper Res 251:288–299

Dafermos S (1980) Traffic equilibrium and variational inequalities. Transport Sci 14:42–54

Dong Q, Cho Y, Zhong L, Rassias TM (2018) Inertial projection and contraction algorithms for variational inequalities. J Glob Optim 70:687–704

Duong VT, Gibali A (2019) Two strong convergence subgradient extragradient methods for solving variational inequalities in Hilbert spaces. Jpn J Indust Appl Math 36:299–321

Geunes J, Pardalos PM (2003) Network optimization in supply chain management and financial engineering: an annotated bibliography. Networks 42:66–84

Gibali A, Jolaoso LO, Mewomo OT, Taiwo A (2020) Fast and simple Bregman projection methods for solving variational inequalities and related problems in Banach spaces. Results Math 75(4):179

Godwin EC, Alakoya TO, Mewomo OT, Yao J-C (2022) Relaxed inertial Tseng extragradient method for variational inequality and fixed point problems. Appl Anal. https://doi.org/10.1080/00036811.2022.2107913

Godwin EC, Mewomo OT, Alakoya TO (2023) A strongly convergent algorithm for solving multiple set split equality equilibrium and fixed point problems in Banach spaces. Proc Edinb Math Soc 66(2):475–515

He S, Dong QL, Tian H (2019) Relaxed projection and contraction methods for solving Lipschitz continuous monotone variational inequalities. Rev R Acad Cienc Exactas Fís Nat Ser A Mat RACSAM 113:2763–2787

He S, Wu T, Gibali A, Dong QL (2018) Totally relaxed, self-adaptive algorithm for solving variational inequalities over the intersection of sub-level sets. Optimization 67(9):1487–1504

He S, Xu HK (2013) Uniqueness of supporting hyperplanes and an alternative to solutions of variational inequalities. J Global Optim 57(4):1375–1384

Kinderlehrer D, Stampacchia G (2000) An introduction to variational inequalities and their applications. Classics in Applied Mathematics, 31. Philadelphia, PA: Society for Industrial and Applied Mathematics

Korpelevich GM (1976) The extragradient method for finding saddle points and other problems. Ekonom Mat Metody 12:747–756

Lawphongpanich S, Hearn DW (1984) Simplicial decomposition of the asymmetric traffic assignment problem. Transport Res B 18:123–133

Ma B, Wang W (2022) Self-adaptive subgradient extragradient-type methods for solving variational inequalities. J Inequal Appl 54(2020):18

Muangchoo K, Rehman HU, Kumam P (2021) Two strongly convergent methods governed by pseudo-monotone bi-function in a real Hilbert space with applications. J Appl Math Comput 67:891–917

Nagurney A (1999) Network economics: a variational inequality approach, 2nd and revised edn. Kluwer Academic Publishers, Dordrecht, The Netherlands

Nagurney A, Dong J (2002) Supernetworks: Decision-making for the information age. Edward Elgar Publishing, Cheltenham, England

Nagurney A, Parkes D, Daniele P (2007) The internet, evolutionary variational inequalities, and the time-dependent Braess paradox. Comput Manag Sci 4:355–375

Ogwo GN, Izuchukwu C, Shehu Y, Mewomo OT (2022) Convergence of relaxed inertial subgradient extragradient methods for quasimonotone variational inequality problems. J Sci Comput 90(10):35

Panicucci B, Pappalardo M, Passacantando M (2007) A path-based double projection method for solving the asymmetric traffic network equilibrium problem. Optim Lett 1:171–185

Peeyada P, Cholamjiak W, Yambangwai D (2020) Solving common variational inequalities by hybrid inertial parallel subgradient extragradient-line algorithm for application to image deblurring. Authorea Preprints

Polyak BT (1964) Some methods of speeding up the convergence of iteration methods. USSR Comput Math Math Phys 4(5):1–17

Saejung S, Yotkaew P (2012) Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal 75:742–750

Scrimali L, Mirabella C (2018) Cooperation in pollution control problems via evolutionary variational inequalities. J Global Optim 70:455–476

Shehu Y, Iyiola OS (2017) Strong convergence result for monotone variational inequalities. Numer Algorithms 76(1):259–282

Smith MJ (1979) The existence, uniqueness and stability of traffic equilibria. Transport Res 13:295–304

Suantai S, Peeyada P, Yambangwai D, Cholamjiak W (2020) A parallel-viscosity-type subgradient extragradient-line method for finding the common solution of variational inequality problems applied to image restoration problems. Mathematics 8(2):248

Taiwo A, Jolaoso LO, Mewomo OT (2021) Viscosity approximation method for solving the multiple-set split equality common fixed point problems for quasi-pseudocontractive mappings in Hilbert spaces. J Ind Manag Optim 17(5):2733–2759

Tan KK, Xu HK (1993) Approximating fixed points of nonexpansive mappings by the Ishikawa iteration process. J Math Anal Appl 178:301–308

Thong DV, Hieu DV, Rassias TM (2020) Self adaptive inertial subgradient extragradient algorithms for solving pseudomonotone variational inequality problems. Optim Lett 14:115–144

Uzor VA, Alakoya TO, Mewomo OT (2022) Strong convergence of a self-adaptive inertial Tseng’s extragradient method for pseudomonotone variational inequalities and fixed point problems. Open Math 20:234–257

Wickramasinghe MU, Mewomo OT, Alakoya TO, Iyiola OS (2023) Mann-type approximation scheme for solving a new class of split inverse problems in Hilbert spaces. Appl Anal. https://doi.org/10.1080/00036811.2023.2233977

Yang J, Liu H (2019) Strong convergence result for solving monotone variational inequalities in Hilbert space. Numer Algorithms 80(3):741–752

Acknowledgements

The authors sincerely thank the Editor-in-Chief, Associate Editor and the anonymous referees for their careful reading, constructive comments and useful suggestions that improved the manuscript. The research of the first author is wholly supported by the University of KwaZulu-Natal, Durban, South Africa Postdoctoral Fellowship. He is grateful for the funding and financial support. The second author is supported by the National Research Foundation (NRF) of South Africa Incentive Funding for Rated Researchers (Grant Number 119903) and DSI-NRF Centre of Excellence in Mathematical and Statistical Sciences (CoE-MaSS), South Africa (Grant Number 2022-087-OPA). Opinions expressed and conclusions arrived at are those of the authors and are not necessarily to be attributed to CoE-MaSS and NRF.

Funding

Open access funding provided by University of KwaZulu-Natal. The first author is funded by University of KwaZulu-Natal, Durban, South Africa Postdoctoral Fellowship. The second author is supported by the National Research Foundation (NRF) of South Africa Incentive Funding for Rated Researchers (Grant Number 119903) and DSI-NRF Centre of Excellence in Mathematical and Statistical Sciences (CoE-MaSS), South Africa (Grant Number 2022-087-OPA).

Author information

Contributions

Conceptualization of the article was given by TO and OT, methodology by TO, formal analysis, investigation and writing-original draft preparation by TO, Matlab codes, software and validation by OT, writing-review and editing by OT, project administration by OT. All authors have accepted responsibility for the entire content of this manuscript and approved its submission.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Appendix

Algorithm 7.1 (Algorithm 8 in Cao and Guo 2020)

Step 0. Let \(x_0, x_1\in H\) be two arbitrary initial points and set \(n=1.\)

Step 1. Compute

$$\begin{aligned} w_n=x_n + \rho _n(x_n-x_{n-1}). \end{aligned}$$

Step 2. Construct the half-space

$$\begin{aligned} C_n=\{x\in H: c(w_n)+\langle c'(w_n), x-w_n\rangle \le 0\}, \end{aligned}$$

and compute

$$\begin{aligned} y_n= P_{C_n}(w_n-\tau Aw_n), \end{aligned}$$

$$\begin{aligned} x_{n+1}= P_{C_n}(w_n-\tau Ay_n), \end{aligned}$$

where

$$\begin{aligned} 0<\tau \le \frac{-\eta _pL_1+\sqrt{\eta _p^2L_1^2+L_2^2\nu ^2}}{L_2^2}, \end{aligned}$$

$$\begin{aligned} 0<\nu \le \frac{1-3\rho -\gamma }{1-\rho +2\rho ^2+\gamma },\quad 0<\gamma <1-3\rho , \end{aligned}$$

$$\begin{aligned} 0\le \rho _n\le \rho <\frac{1}{3}. \end{aligned}$$

Set \(n:=n+1\) and return to Step 1.

Here, \(A:\mathcal {H}\rightarrow \mathcal {H}\) is monotone and \(L_2\)-Lipschitz continuous, \(c'(\cdot )\) is \(L_1\)-Lipschitz continuous and \(\eta _p\) is the parameter in Lemma 2.7 (2).
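One iteration of Algorithm 7.1 can be sketched in a few lines, because projecting onto the half-space \(C_n\) has a closed form. The Python code below is a minimal illustration in \(\mathbb {R}^m\), not the implementation used in the experiments; the names `project_halfspace` and `tsegm_step` and the toy values of `tau` and `rho` in the usage example are assumptions made here for illustration.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def project_halfspace(z, w, c_w, grad_w):
    """Project z onto C_n = {x : c(w) + <c'(w), x - w> <= 0}."""
    violation = c_w + dot(grad_w, [zi - wi for zi, wi in zip(z, w)])
    g2 = dot(grad_w, grad_w)
    # z already lies in C_n; a zero gradient leaves no direction to move along.
    if violation <= 0.0 or g2 == 0.0:
        return list(z)
    return [zi - (violation / g2) * gi for zi, gi in zip(z, grad_w)]

def tsegm_step(x_prev, x, A, c, grad_c, tau, rho):
    """One pass of Steps 1 and 2: inertial point, then two half-space projections."""
    w = [xi + rho * (xi - xpi) for xi, xpi in zip(x, x_prev)]
    c_w, g_w = c(w), grad_c(w)
    Aw = A(w)
    y = project_halfspace([wi - tau * ai for wi, ai in zip(w, Aw)], w, c_w, g_w)
    Ay = A(y)
    return project_halfspace([wi - tau * ai for wi, ai in zip(w, Ay)], w, c_w, g_w)

if __name__ == "__main__":
    # Toy data in the spirit of Example 5.2, truncated to R^3 (hypothetical values).
    A = lambda x: [2.0 * t for t in x]
    c = lambda x: sum(t * t for t in x) - 1.0
    grad_c = lambda x: [2.0 * t for t in x]
    x_prev, x = [0.5, 0.5, 0.0], [0.3, -0.2, 0.4]
    for n in range(1, 201):
        x_prev, x = x, tsegm_step(x_prev, x, A, c, grad_c, tau=0.1, rho=0.2)
    print(math.sqrt(dot(x, x)))
```

Both projections reuse the same half-space \(C_n\), so \(c(w_n)\) and \(c'(w_n)\) are evaluated only once per iteration. The caller may pass a different `rho` at each step to realise a sequence \(\rho _n\).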

Algorithm 7.2 (Algorithm 2 in Ma and Wang 2022)

Step 0. Let \(x_{-1},x_0,y_{-1}\in H,\) \(\varphi ,\mu \in [a,b]\subset (0,1)\) and \(\lambda _{-1}\in (0,\frac{1-\varphi ^2}{2\eta _pL_1}],\) and set \(n=0.\)

Step 1. Given \(\lambda _{n-1},y_{n-1}\) and \(x_{n-1},\) let \(p_{n-1}=x_{n-1}-y_{n-1}\) and compute

$$\begin{aligned} \lambda _n={\left\{ \begin{array}{ll} \lambda _{n-1},\quad &{} \lambda _{n-1}\Vert A(x_{n-1})-A(y_{n-1})\Vert \le \varphi \Vert p_{n-1}\Vert ,\\ \lambda _{n-1}\mu ,\quad &{}\text {otherwise.} \end{array}\right. } \end{aligned}$$

Step 2. Compute

$$\begin{aligned} y_n= P_{C_n}(x_n-\lambda _n Ax_n). \end{aligned}$$

Step 3. Compute

$$\begin{aligned} x_{n+1}= P_{C_n}(y_n-\lambda _n (Ay_n-Ax_n)), \end{aligned}$$

where

$$\begin{aligned} C_n=\{x\in H: c(x_n)+\langle c'(x_n), x-x_n\rangle \le 0\}. \end{aligned}$$

Step 4. Set \(n:=n+1\) and return to Step 1.

Here, \(A:\mathcal {H}\rightarrow \mathcal {H}\) is monotone and \(L_2\)-Lipschitz continuous, \(c'(\cdot )\) is \(L_1\)-Lipschitz continuous and \(\eta _p\) is the parameter in Lemma 2.7 (2).
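The step-size rule in Step 1 of Algorithm 7.2 either keeps the previous step size or shrinks it by the factor \(\mu\). A minimal Python sketch of this rule follows; the function name `next_step_size` and the starting value `lam = 0.5` in the usage example are hypothetical and chosen only to show the shrinking behaviour.

```python
import math

def norm(u):
    return math.sqrt(sum(t * t for t in u))

def next_step_size(lam_prev, Ax_prev, Ay_prev, x_prev, y_prev, varphi, mu):
    """Keep lam_prev if lam_prev*||A(x)-A(y)|| <= varphi*||x - y||, else multiply by mu."""
    p = [a - b for a, b in zip(x_prev, y_prev)]
    dA = [a - b for a, b in zip(Ax_prev, Ay_prev)]
    if lam_prev * norm(dA) <= varphi * norm(p):
        return lam_prev
    return lam_prev * mu

if __name__ == "__main__":
    A = lambda x: [2.0 * t for t in x]   # 2-Lipschitz toy operator
    x, y = [1.0, 0.0], [0.0, 0.0]
    lam = 0.5                            # hypothetical starting value
    for _ in range(10):
        lam = next_step_size(lam, A(x), A(y), x, y, varphi=0.6, mu=0.8)
    print(lam)
```

By construction the resulting sequence \(\{\lambda _n\}\) is non-increasing, and for an \(L_2\)-Lipschitz operator it stops shrinking once \(\lambda _n L_2\le \varphi\).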

Algorithm 7.3 (Algorithm 3.1 in Shehu and Iyiola 2017)

Step 0. Given \(l\in (0,1)\) and \(\mu \in (0,1),\) let \(x_1\in \mathcal {H}\) be arbitrary.

Step 1. Compute

$$\begin{aligned} y_n=P_{\mathcal {C}}(x_n-\lambda _n Ax_n), \end{aligned}$$

where \(\lambda _n=l^{m_n}\) and \(m_n\) is the smallest nonnegative integer m such that

$$\begin{aligned} \lambda _n\Vert Ax_n-Ay_n\Vert \le \mu \Vert x_n-y_n\Vert . \end{aligned}$$

Step 2. Compute

$$\begin{aligned} z_n=P_{T_n}(x_n-\lambda _n Ay_n), \end{aligned}$$

where

$$\begin{aligned} T_n=\{x\in \mathcal {H}:\langle x_n-\lambda _n Ax_n-y_n, x-y_n\rangle \le 0\}. \end{aligned}$$

Step 3. Compute

$$\begin{aligned} x_{n+1}=\alpha _nf(x_n)+(1-\alpha _n)z_n. \end{aligned}$$

Set \(n:=n+1\) and return to Step 1.

Here, \(A:\mathcal {H}\rightarrow \mathcal {H}\) is monotone and Lipschitz continuous, and \(f:\mathcal {H}\rightarrow \mathcal {H}\) is a contraction.
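The linesearch in Step 1 of Algorithm 7.3 requires a fresh projection onto \(\mathcal {C}\) and a fresh evaluation of A for every trial value of m, which is the cost that self-adaptive step sizes avoid. The Python sketch below illustrates one full iteration in \(\mathbb {R}^m\). It is not the code used for the experiments: the names `armijo_step`, `project_T` and `viscosity_step`, the cap `max_m`, and the values `mu = 0.5` and `alpha = 0.4` in the usage example are assumptions made for illustration, and the projection onto \(\mathcal {C}\) is supplied by the caller.

```python
import math

def norm(u):
    return math.sqrt(sum(t * t for t in u))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def armijo_step(x, A, project_C, l, mu, max_m=60):
    """Return (lambda_n, y_n) with lambda_n = l**m for the smallest admissible m >= 0."""
    Ax = A(x)
    for m in range(max_m + 1):
        lam = l ** m
        y = project_C([xi - lam * ai for xi, ai in zip(x, Ax)])
        if lam * norm(sub(Ax, A(y))) <= mu * norm(sub(x, y)):
            return lam, y
    raise RuntimeError("linesearch did not terminate within max_m trials")

def project_T(u, x, Ax, y, lam):
    """Project u onto T_n = {p : <x - lam*Ax - y, p - y> <= 0}."""
    a = [xi - lam * axi - yi for xi, axi, yi in zip(x, Ax, y)]
    excess = dot(a, sub(u, y))
    a2 = dot(a, a)
    if excess <= 0.0 or a2 == 0.0:   # u already in T_n, or T_n is the whole space
        return list(u)
    return [ui - (excess / a2) * ai for ui, ai in zip(u, a)]

def viscosity_step(x, A, project_C, f, alpha, l, mu):
    """Steps 1-3: linesearch, projection onto T_n, then the viscosity update."""
    lam, y = armijo_step(x, A, project_C, l, mu)
    Ax, Ay = A(x), A(y)
    z = project_T([xi - lam * ayi for xi, ayi in zip(x, Ay)], x, Ax, y, lam)
    return [alpha * fi + (1.0 - alpha) * zi for fi, zi in zip(f(x), z)], lam

def project_ball(u):
    """Example feasible set: the closed unit ball."""
    n = norm(u)
    return list(u) if n <= 1.0 else [t / n for t in u]

if __name__ == "__main__":
    A = lambda x: [2.0 * t for t in x]
    f = lambda x: [t / 3.0 for t in x]
    print(viscosity_step([0.5, 0.5], A, project_ball, f, alpha=0.4, l=0.018, mu=0.5))
```

In this toy run the first trial \(m=0\) fails and \(m=1\) is accepted, so two projections onto \(\mathcal {C}\) are needed for a single iteration.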

Algorithm 7.4 (Algorithm 1 in Yang and Liu 2019)

Initialization: Given \(\lambda _1>0\) and \(\mu \in (0,1),\) let \(x_1\in \mathcal {H}\) be arbitrary.

Step 1. Given the current iterate \(x_n,\) compute

$$\begin{aligned} y_n=P_{\mathcal {C}}(x_n-\lambda _n Ax_n). \end{aligned}$$

If \(x_n=y_n\), then stop: \(x_n\) is a solution of the VIP. Otherwise, go to Step 2.

Step 2. Compute

$$\begin{aligned} x_{n+1}=\alpha _n f(x_n) + (1-\alpha _n)z_n \end{aligned}$$

and

$$\begin{aligned} \lambda _{n+1}= {\left\{ \begin{array}{ll} \min \{\frac{\mu \Vert x_n-y_n\Vert }{\Vert Ax_n-Ay_n\Vert },\lambda _n\},&{}\text{ if } Ax_n-Ay_n\ne 0,\\ \lambda _n, &{}\text{ otherwise, } \end{array}\right. } \end{aligned}$$

where

$$\begin{aligned} z_n=y_n+\lambda _n(Ax_n-Ay_n). \end{aligned}$$

Set \(n:=n+1\) and return to Step 1.

Here, \(A:\mathcal {H}\rightarrow \mathcal {H}\) is monotone and Lipschitz continuous, and \(f:\mathcal {H}\rightarrow \mathcal {H}\) is a contraction.
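Algorithm 7.4 replaces the linesearch by a closed-form step-size update, so each iteration costs one projection onto \(\mathcal {C}\) and two evaluations of A. The following Python sketch of a single iteration is an illustration under stated assumptions: the function names `yang_liu_step` and `project_ball`, and the values `lam = 1.0`, `mu = 0.5` and `alpha = 0.4` in the usage example, are hypothetical.

```python
import math

def norm(u):
    return math.sqrt(sum(t * t for t in u))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def yang_liu_step(x, lam, A, project_C, f, alpha, mu):
    """Return (x_next, lam_next, stop) for one pass of Steps 1 and 2."""
    Ax = A(x)
    y = project_C([xi - lam * ai for xi, ai in zip(x, Ax)])
    if norm(sub(x, y)) == 0.0:           # x_n = y_n: x_n solves the VIP
        return list(x), lam, True
    Ay = A(y)
    # z_n = y_n + lam_n (A x_n - A y_n)
    z = [yi + lam * (axi - ayi) for yi, axi, ayi in zip(y, Ax, Ay)]
    x_next = [alpha * fi + (1.0 - alpha) * zi for fi, zi in zip(f(x), z)]
    dA = norm(sub(Ax, Ay))
    lam_next = min(mu * norm(sub(x, y)) / dA, lam) if dA > 0.0 else lam
    return x_next, lam_next, False

def project_ball(u):
    """Example feasible set: the closed unit ball."""
    n = norm(u)
    return list(u) if n <= 1.0 else [t / n for t in u]

if __name__ == "__main__":
    A = lambda x: [2.0 * t for t in x]
    f = lambda x: [t / 3.0 for t in x]
    print(yang_liu_step([0.5, 0.5], 1.0, A, project_ball, f, alpha=0.4, mu=0.5))
```

Because \(\lambda _{n+1}\le \lambda _n\) by construction, this rule generates a non-increasing sequence of step sizes.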

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Opeyemi Alakoya, T., Temitope Mewomo, O. Strong Convergent Inertial Two-subgradient Extragradient Method for Finding Minimum-norm Solutions of Variational Inequality Problems. Netw Spat Econ (2024). https://doi.org/10.1007/s11067-024-09615-5

DOI: https://doi.org/10.1007/s11067-024-09615-5

Keywords

- Variational inequalities

- Two-subgradient extragradient method

- Self-adaptive step size

- Inertial technique

- Minimum-norm solutions

- Image restoration