Abstract

This paper investigates the problems of \(H_\infty \) finite-time boundedness (FTB) and finite-time stability (FTS) for discrete-time neural networks (NNs) with both leakage delay and discrete delay, as well as different generalized activation functions. To this end, we construct suitable Lyapunov–Krasovskii (L–K) functionals and apply the extended reciprocally convex approach to derive delay-dependent criteria for the addressed problems. The obtained conditions are expressed as linear matrix inequalities (LMIs), which can be efficiently solved by the LMI Toolbox in MATLAB. Two numerical examples are included to illustrate the advantages and reliability of the proposed method.

1 Introduction

The idea of finite-time stability of systems originated in the Soviet scientific literature in the mid-20th century [19] and has since been applied to various real-world systems, such as biochemical reactions and communication networks [4, 12, 27]. The main goal of this analysis is to determine whether a system can maintain its desired behavior within a given time interval, which may be very short. Using the L–K functional approach and LMI techniques, a series of results on stability, boundedness, stabilization, and \(H_\infty \) control over a finite time interval for both linear and nonlinear discrete-time systems have recently been reported; see, e.g., [3, 32, 38, 41, 44, 45].

NNs with delays are a type of artificial NNs that can model the temporal dynamics of complex systems. They capture the effects of past inputs and outputs on the current state of the network and can thus handle non-Markovian processes. Such networks can learn the patterns and trends of time series data, such as stock prices, weather, or traffic, and forecast future values from historical data; they can also be used to design adaptive controllers for nonlinear systems, such as robots, vehicles, or power plants; and they can be applied to various signal-processing tasks, such as speech recognition, image processing, or biomedical signal analysis [5, 10, 17, 25, 26, 39, 40]. For the class of discrete-time NNs in particular, a number of articles [1, 27, 37, 40] deal with stability, passivity, or boundedness over a finite time interval. The paper [14] highlighted the importance of considering both discrete and leakage delays in the analysis of NNs. A leakage delay in the negative feedback term can lead to instability and complex behaviors in NNs, so it is essential to investigate its effects on stability and performance. Accordingly, stability and passivity for several classes of continuous-time NNs with leakage delay were studied in [5, 11, 21, 31], while stability and dissipativity for some discrete-time analogs were considered in [6–8, 16, 22, 29, 34–36]. Delay-dependent stability criteria for complex-valued NNs with leakage delay were also established; see, e.g., [9, 33]. These works demonstrate the sustained interest in leakage delay among researchers in various fields. Nevertheless, for convenience of analysis, leakage delay was ignored in the study of finite-time stability, passivity, and boundedness in [27, 40]. The same holds for the \(H_\infty \) FTB results in [1, 37]. On the other hand, from [15, 20], we know that nonlinear functions satisfying the sector-bounded condition are more general than the usual class of Lipschitz functions. However, very few authors have so far investigated general NNs with activation functions satisfying the sector-bounded condition; [10, 30] are typical works among them.

To the best of our knowledge, there are no results in the literature on the problem of \(H_\infty \) FTB for discrete-time NNs with leakage delay and discrete delay as well as different generalized activation functions. This paper aims to fill that gap. More specifically, by combining appropriately constructed L–K functionals with an extended reciprocally convex matrix inequality, we first derive conditions that ensure not only FTB of the NNs under consideration but also a finite-time \(H_\infty \) performance. With the same technique, the FTS of the corresponding nominal system is also obtained. Numerical examples are given to demonstrate the validity of the obtained conditions. The main contributions of this paper are as follows:

- Delay-dependent criteria for \(H_\infty \) FTB and FTS of discrete-time NNs with a pair of time-varying delays (leakage delay and discrete delay) are proposed for the first time. These criteria contain information about the upper and lower bounds of both types of delay.

- The neuron activation functions are different and are assumed to satisfy sector-bounded conditions, which are known to be more general than the usual Lipschitz conditions.

- The extended reciprocally convex technique is fully exploited, so the number of decision variables is kept as small as possible.

The rest of this paper is organized as follows. In Sect. 2, the problem under study is stated together with some relevant definitions and technical propositions. Delay-dependent criteria in the form of matrix inequalities for \(H_\infty \) FTB and FTS, together with two illustrative examples, are given in Sect. 3. Section 4 concludes the paper.

Notation \(\mathbb Z_+\) denotes the set of all non-negative integers; \(\mathbb {R}^{n}\) and \(\mathbb {R}^{m \times n}\) denote the n-dimensional Euclidean space and the set of real matrices of size \(m\times n\), respectively; \(A^{-1}\) and \(A^{\textsf{T}}\) are the inverse and transpose of a matrix A, respectively; \(A > 0\) means that A is a positive-definite matrix and \(A > B\) means \(A - B > 0\). The symbol \(*\) stands for the entries of a symmetric matrix implied by symmetry.

2 Preliminaries

Consider the following discrete-time NNs with a pair of time-varying delays and an external disturbance

where \(x(k)\in \mathbb {R}^n\) is the state vector; n is the number of neurons; \(z(k)\in \mathbb {R}^r\) is the observation output; the diagonal matrix \(A \in \mathbb {R}^{n\times n}\) characterizes the self-feedback terms; \(B,\, B_1\in \mathbb {R}^{n\times n}\) are connection weight matrices; \(C\in \mathbb {R}^{n\times s}, C_1\in \mathbb {R}^{r\times s}\) are known matrices; \(A_1, D, D_1\in \mathbb {R}^{r\times n}\) are the observation matrices. The discrete delay function h(k) and leakage delay function \(\sigma (k)\) satisfy the condition

where \(h_1, h_2, \sigma _1\) and \(\sigma _2\) are given natural numbers; \(\rho := \max \{\sigma _2, h_2\}\) and \(\phi (k)\) is the initial function. \(\omega (k)\in \mathbb {R}^s\) is the external disturbance that is assumed to satisfy

where d is a given positive scalar. Besides, in the framework of this article, we adopt the following assumption for neuron activation functions \( f(\cdot ) \) and \( g(\cdot ) \).

Assumption 1

[10, 30] The diagonal neuron activation functions

are assumed to be continuous and satisfy \( f_i(0) = 0,\, g_i(0) = 0 \) for \(i=1,\dots , n\) as well as the sector-bounded conditions

where \( F_1, F_2, G_1 \) and \( G_2 \) are real matrices of appropriate dimensions.
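As a rough illustration of what a sector-bounded condition asks for, the sketch below assumes the form commonly used in the literature, \([f(x) - F_1x]^{\textsf{T}}[f(x) - F_2x] \leqslant 0\), with diagonal \(F_1, F_2\). The activation `f_demo` and the bounds `lower`, `upper` are hypothetical values chosen for the illustration; they are not taken from the paper.

```python
import math


def sector_gap(f, x, lower, upper):
    """Return sum_i (f(x_i) - lower_i * x_i) * (f(x_i) - upper_i * x_i).

    With F1 = diag(lower) and F2 = diag(upper) this equals
    [f(x) - F1 x]^T [f(x) - F2 x]; the assumed sector condition
    holds at the point x when the value is <= 0.
    """
    return sum((f(xi) - a * xi) * (f(xi) - b * xi)
               for xi, a, b in zip(x, lower, upper))


def f_demo(s):
    """Hypothetical activation whose ratio f(s)/s lies in (0.4, 0.5]."""
    return 0.4 * s + 0.1 * math.tanh(s)


# Hypothetical sector bounds: F1 = diag(0.2, 0.3), F2 = diag(0.6, 0.6).
lower, upper = [0.2, 0.3], [0.6, 0.6]

# Sample a few points; a non-positive gap everywhere is consistent with the sector.
samples = [[u / 10, -u / 7] for u in range(-50, 51)]
inside_sector = all(sector_gap(f_demo, x, lower, upper) <= 0 for x in samples)

# The identity map has slope 1, which is outside both sectors.
identity_gap = sector_gap(lambda s: s, [1.0, 1.0], lower, upper)
```

Sampling can only falsify the condition, not prove it; for `f_demo` the bound on the ratio `f(s)/s` gives the proof, and the sampling is a sanity check.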

Remark 1

As explained in detail in [10, 30], the sector-bounded condition covers the standard Lipschitz condition as a special case, so the NN model (1) is more general than those considered in [17, 25, 26, 36, 37, 39].

Definition 1

(FTB [2, 40]) Suppose that \(c_1, c_2, N\) are given scalars with \(0<c_1<c_2,\, N\in \mathbb {Z}_{+}\), and that \(R>0\) is a symmetric matrix. The discrete-time delayed NN with exogenous disturbance \(\omega (k)\) satisfying (3)

is FTB w.r.t. \((c_1, c_2, R, N)\) if

Remark 2

FTS w.r.t. \((c_1, c_2, R, N)\) is a special case of FTB w.r.t. \((c_1, c_2, R, N)\) which happens when \(\omega (k)=0\).
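The following sketch shows how the FTB bound could be checked on one sampled run. It assumes the usual form of the definition: if \(x^{\textsf{T}}(k)Rx(k) \leqslant c_1\) on the initial interval, then \(x^{\textsf{T}}(k)Rx(k) < c_2\) for \(k = 1,\dots ,N\). The function names and the sample states are hypothetical; only R, \(c_1\) and \(c_2\) are the values used later in Example 1.

```python
def quad(R, x):
    """Quadratic form x^T R x for a square matrix R given as nested lists."""
    n = len(x)
    return sum(x[i] * R[i][j] * x[j] for i in range(n) for j in range(n))


def satisfies_ftb_bound(history, trajectory, R, c1, c2):
    """Check the finite-time bound on one sampled run.

    history    : states x(k) for k = -rho, ..., 0 (the initial function)
    trajectory : states x(k) for k = 1, ..., N
    Returns True when the premise fails (the implication is then vacuous)
    or when x^T R x < c2 holds on the whole horizon.
    """
    if max(quad(R, x) for x in history) > c1:
        return True
    return all(quad(R, x) < c2 for x in trajectory)


R = [[2.25, 0.35], [0.35, 1.75]]          # weighting matrix of Example 1
history = [[0.5, -0.4]] * 12              # hypothetical initial function
bounded_run = [[0.3, 0.2], [0.1, 0.0]]    # hypothetical states, stay small
escaping_run = [[3.0, 0.0]]               # hypothetical state leaving the c2-level set

ok = satisfies_ftb_bound(history, bounded_run, R, 1.0, 9.0)
violated = not satisfies_ftb_bound(history, escaping_run, R, 1.0, 9.0)
```

A passing run does not establish FTB, which quantifies over all admissible initial functions and disturbances; a failing run, however, is a counterexample.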

Definition 2

(\(H_{\infty }\) FTB [32, 37]) Suppose that \(c_1, c_2, N\) are given scalars with \(0< c_1<c_2, \, N\in \mathbb {Z}_{+}\), and that \(R>0\) is a symmetric matrix. System (1) is \(H_\infty \) FTB w.r.t. \((c_1, c_2, R, N)\) if the following two conditions hold:

1. System (5) is FTB w.r.t. \((c_1, c_2, R, N)\).

2. Under zero initial condition, for any nonzero \(\omega (k)\) satisfying (3), the output z(k) satisfies the condition

$$\begin{aligned} \sum _{k=0}^Nz^{\textsf{T}}(k)z(k) \leqslant \gamma \sum _{k=0}^N\omega ^{\textsf{T}}(k)\omega (k) \end{aligned} \tag{6}$$

with a prescribed scalar \(\gamma >0\).
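Condition (6) compares the output energy with the disturbance energy over the horizon. A minimal sketch of that comparison on sampled signals is given below; the helper names and the sample signals are hypothetical, and the disturbance check assumes that (3) bounds the disturbance energy by d.

```python
def energy(signal):
    """Sum over k of s(k)^T s(k) for a list of vectors s(0), ..., s(N)."""
    return sum(v * v for s in signal for v in s)


def satisfies_gain_bound(z, w, gamma):
    """Condition (6): sum z^T z <= gamma * sum w^T w over k = 0..N."""
    return energy(z) <= gamma * energy(w)


def within_disturbance_budget(w, d):
    """Assumed form of (3): the disturbance energy over the horizon is at most d."""
    return energy(w) <= d


# Hypothetical sampled signals with s = 1 disturbance and r = 2 output channels.
w = [[1.0], [0.5], [0.0]]
z = [[0.5, 0.1], [0.2, 0.0], [0.1, 0.1]]

gain_ok = satisfies_gain_bound(z, w, gamma=1.0)        # 0.32 <= 1.25
gain_too_tight = satisfies_gain_bound(z, w, gamma=0.2)  # 0.32 <= 0.25 fails
budget_ok = within_disturbance_budget(w, d=2.0)
```

Note that (6) is required for every admissible disturbance under zero initial condition, so a check on one pair of signals is only a necessary test.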

Proposition 1

(Discrete Jensen inequality, [18]) Suppose that \(M > 0 \) is a symmetric matrix of order n, \(k_1, k_2\) are positive integers satisfying \(k_1 \leqslant k_2,\) and \(\chi : \{k_1, k_1 + 1, \dots , k_2\} \rightarrow \mathbb {R}^{n}\) is a vector function. Then
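For orientation, the discrete Jensen inequality is usually stated as \((k_2-k_1+1)\sum _{i=k_1}^{k_2}\chi ^{\textsf{T}}(i)M\chi (i) \geqslant \big (\sum _{i=k_1}^{k_2}\chi (i)\big )^{\textsf{T}}M\big (\sum _{i=k_1}^{k_2}\chi (i)\big )\). Assuming this is the form meant here, the sketch below evaluates the gap between the two sides on random sequences; the matrix M and the sequences are hypothetical test data.

```python
import random


def quad(M, v):
    """Quadratic form v^T M v for a square matrix M given as nested lists."""
    n = len(v)
    return sum(v[i] * M[i][j] * v[j] for i in range(n) for j in range(n))


def jensen_gap(M, chi):
    """Left side minus right side of the discrete Jensen inequality.

    chi is the list chi(k1), ..., chi(k2); the result is nonnegative
    whenever M is symmetric positive definite.
    """
    length = len(chi)                          # equals k2 - k1 + 1
    total = [sum(col) for col in zip(*chi)]    # sum of the chi(i)
    return length * sum(quad(M, v) for v in chi) - quad(M, total)


M = [[2.0, 0.5], [0.5, 1.0]]                   # hypothetical M > 0
rng = random.Random(0)
gaps = []
for _ in range(200):
    length = rng.randint(1, 8)
    chi = [[rng.uniform(-3, 3), rng.uniform(-3, 3)] for _ in range(length)]
    gaps.append(jensen_gap(M, chi))

# Equality case: a constant sequence makes both sides coincide.
constant_gap = jensen_gap(M, [[1.0, -2.0]] * 5)
```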

Proposition 2

(Extended reciprocally convex matrix inequality, [42]) Suppose that \(R > 0 \) is a symmetric matrix of order n. Then the following matrix inequality

holds for any matrix S (of order n) and for all \( \alpha \in (0,1) \), where \( T_1 = R- SR^{-1}S^{\textsf{T}},\; T_2 = R- S^{\textsf{T}}R^{-1}S. \)
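The inequality of [42] is usually written as \(\mathrm {diag}\big (\tfrac{1}{\alpha }R, \tfrac{1}{1-\alpha }R\big ) \geqslant \begin{bmatrix} R + (1-\alpha )T_1 & S\\ * & R + \alpha T_2 \end{bmatrix}\). Assuming this form, the sketch below checks it in the scalar case \(n = 1\), where the difference of the two sides is a 2×2 matrix whose determinant works out to \(\alpha (1-\alpha )(r - s^2/r)^2 \geqslant 0\). The function names and the grid of test values are hypothetical.

```python
def erc_gap_scalar(r, s, alpha):
    """Entries (g11, g12, g22) of LHS - RHS for n = 1.

    LHS = diag(r / alpha, r / (1 - alpha));
    RHS = [[r + (1 - alpha) * t, s], [s, r + alpha * t]] with t = r - s * s / r,
    since T1 and T2 coincide for scalars.
    """
    t = r - s * s / r
    g11 = r / alpha - (r + (1 - alpha) * t)
    g22 = r / (1 - alpha) - (r + alpha * t)
    return g11, -s, g22


def is_psd_2x2(g11, g12, g22, tol=1e-9):
    """A symmetric 2x2 matrix is PSD iff its diagonal and determinant are >= 0."""
    return g11 >= -tol and g22 >= -tol and g11 * g22 - g12 * g12 >= -tol


# Grid of r > 0, arbitrary s (including |s| > r, where t < 0) and alpha in (0, 1).
all_psd = all(
    is_psd_2x2(*erc_gap_scalar(r, j / 2, i / 20))
    for r in (0.5, 1.0, 3.0)
    for j in range(-8, 9)
    for i in range(1, 20)
)
```

The check passes even when \(T_1, T_2\) are not positive, which is consistent with the inequality holding for an unconstrained S.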

3 Main Results

Let \(y(k) = x(k+1) - x(k)\) and assume that \(\max _{k\in \{-\rho ,-\rho +1,\dots ,-1\}}y^{\textsf{T}}(k)y(k) < \tau \), where \(\tau \) is a given positive real constant. For \( p, q \in \{1,2\}\), the following notations are used to facilitate the statement of the main results.

Theorem 1

Suppose that \(c_1, c_2, \gamma , N\) are given scalars such that \(0<c_1<c_2,\, \gamma > 0,\, N\in \mathbb {Z}_{+}\), and that \(R>0\) is a symmetric matrix. If there exist positive-definite symmetric matrices \(P, Q, R_1, R_2, S_1, S_2\in \mathbb {R}^{n\times n},\) two arbitrary matrices \(Y_1, Y_2\in \mathbb {R}^{n\times n}\), positive scalars \(\lambda _i, \; i = 1,\dots ,7\), and a scalar \(\delta \geqslant 1\) such that the following matrix inequalities are satisfied

then system (1) is \(H_\infty \) FTB w.r.t. \((c_1, c_2, R, N)\).

Proof

Consider the L–K functional candidate \( V(k) = \displaystyle \sum \limits _{i=1}^{4}V_{i}(k)\), where

Denote

then a direct computation gives the following estimate

By the discrete Jensen inequality (Proposition 1),

where \(\zeta _1 = x(k-h_1)-x(k-h(k)), \; \zeta _2 = x(k-h(k))-x(k-h_2)\) and \( \alpha _1 = \frac{h(k)-h_1}{h_{12}} \). Using Proposition 2 to further bound the right-hand side of the last inequality, we obtain

where \( M_1 = R_2- Y_1 R_2^{-1}Y_1^{\textsf{T}}\) and \( M_2 = R_2- Y_1^{\textsf{T}}R_2^{-1}Y_1. \)

In an entirely similar way, we obtain

where \(\eta _1 = x(k-\sigma _1)-x(k-\sigma (k)), \; \eta _2 = x(k-\sigma (k))-x(k-\sigma _2),\; \alpha _2 = \frac{\sigma (k)-\sigma _1}{\sigma _{12}},\; N_1 = S_2- Y_2 S_2^{-1}Y_2^{\textsf{T}}\) and \( N_2 = S_2- Y_2^{\textsf{T}}S_2^{-1}Y_2. \)

Inserting (14), (16) into (12) and (15), (17) into (13), and then combining (10)–(13), we have

In addition, constraint (4) yields the following estimates

Combining (18) and (19), we get

where

and

where the entries of the last matrix are defined as

Next, the well-known Schur complement lemma gives

where

has entries defined as follows

The convex combination technique allows us to assert that \(\Theta _{h(k),\sigma (k)} < 0 \) if the following four inequalities

hold. In turn, by the Schur complement lemma again, these inequalities hold if the inequalities stated in (8) hold. Combining these observations with (20), we have

This implies that

By condition (3), it is obvious that

Moreover, according to condition (7), we can estimate

Substituting these upper bounds of \(V_i(0), i= 1,\dots , 4,\) into (21), we derive

where

Furthermore, by (7), we see that (for \(k\in \mathbb {Z}_{+}\))

Applying the Schur complement lemma to (9), we equivalently find

or

As a result of (22)–(24), it can be concluded that

According to Definition 1, system (5) is FTB w.r.t. \((c_1, c_2, R, N)\). It remains to show that system (1) has a finite-time \(l_2\)-gain \(\gamma \), i.e., that condition (6) is satisfied. This step can be carried out in the same way as in [37]. The proof is complete. \(\square \)
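The convex combination step in the proof rests on a simple fact: a symmetric matrix that depends affinely on \((h(k), \sigma (k))\) is negative definite on the whole box \([h_1, h_2]\times [\sigma _1, \sigma _2]\) as soon as it is negative definite at the four vertices \((h_p, \sigma _q)\). The sketch below illustrates this with a hypothetical 2×2 affine matrix; the coefficient matrices are made up, and only the delay bounds are those of Example 1.

```python
from itertools import product


def affine_matrix(h, sigma):
    """Hypothetical symmetric 2x2 matrix that is affine in (h, sigma)."""
    t0 = [[-10.0, 1.0], [1.0, -12.0]]
    t1 = [[0.5, 0.1], [0.1, 0.3]]
    t2 = [[0.4, -0.2], [-0.2, 0.6]]
    return [[t0[i][j] + h * t1[i][j] + sigma * t2[i][j] for j in range(2)]
            for i in range(2)]


def is_negative_definite_2x2(m):
    """Symmetric 2x2 test: negative definite iff m11 < 0 and det > 0."""
    return m[0][0] < 0 and m[0][0] * m[1][1] - m[0][1] * m[1][0] > 0


h1, h2, sigma1, sigma2 = 1, 7, 1, 4            # delay bounds of Example 1

# The four vertices (h_p, sigma_q), p, q in {1, 2}.
vertices = list(product((h1, h2), (sigma1, sigma2)))
vertices_ok = all(is_negative_definite_2x2(affine_matrix(h, s))
                  for h, s in vertices)

# Every admissible integer delay pair is a convex combination of the vertices.
interior_ok = all(is_negative_definite_2x2(affine_matrix(h, s))
                  for h in range(h1, h2 + 1)
                  for s in range(sigma1, sigma2 + 1))
```

This is why only four matrix inequalities need to be solved, regardless of how the delays vary inside their bounds.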

Corollary 2

Suppose that \(c_1, c_2, N\) are given scalars such that \(0<c_1<c_2, N\in \mathbb {Z}_{+}\), and that \(R>0\) is a symmetric matrix. Then, the nominal system, which is the system in Eq. (5) without any disturbance (\(\omega (k) = 0\)), is FTS w.r.t. \((c_1, c_2, R, N)\) if there exist positive-definite symmetric matrices \(P, Q, R_1, R_2, S_1, S_2\in \mathbb {R}^{n\times n}\), two arbitrary matrices \(Y_1, Y_2\in \mathbb {R}^{n\times n}\), positive scalars \(\lambda _i, \; i = 1,\dots ,7\), and a scalar \(\delta \geqslant 1\) that satisfy the LMIs in Eq. (7) and also the following matrix inequalities

where \(\overline{\Sigma }_{h_p,\sigma _q}\) is the submatrix of \(\Sigma _{h_p,\sigma _q}\) obtained by removing the 10th and 16th rows and columns, \(\overline{\Pi }^{11}= - c_2\delta \lambda _1, \) and \( \overline{\Pi }^{ij} = \Pi ^{ij}\) for all other \(i, j\).

Proof

The proof is omitted, since it follows the same steps as that of Theorem 1. \(\square \)

Remark 3

Theorem 1 and Corollary 2 provide a theoretical analysis of \({H}_\infty \) FTB and FTS for a class of discrete-time NNs with leakage delay, discrete delay, and sector-bounded neuron activation functions. Leakage delay is the time delay in the leakage term of the system and has a significant influence on the network dynamics. However, since the time delay in the leakage term is often not easy to deal with, it has yet to receive sufficient attention in the qualitative study of NNs. Moreover, in contrast to most of the existing literature, where the neuron activation functions are assumed to satisfy standard Lipschitz conditions [17, 25, 26, 36, 37, 39], in this paper we consider more general sector-bounded nonlinearities that include the Lipschitz case as a special one. In short, the criteria stated in Theorem 1 and Corollary 2 are new and meaningful contributions.

Remark 4

To check the feasibility of the matrix inequalities in (8), (9), (25), and (26), we can fix the value of \(\delta \), which turns them into LMIs. These LMIs can then be solved efficiently with the LMI Toolbox in MATLAB [13].
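One way to organize the procedure of fixing \(\delta \) is a scan over a grid of candidate values, each of which yields an LMI feasibility problem. The sketch below shows only that outer loop. The callable `is_feasible` is a hypothetical placeholder for a call to an LMI solver, the grid is arbitrary, and returning the smallest feasible value is a design assumption (bounds in finite-time analysis often grow with powers of \(\delta \)), not something stated in the paper.

```python
def smallest_feasible_delta(is_feasible, deltas):
    """Return the smallest delta >= 1 on the grid accepted by is_feasible, else None.

    is_feasible : callable delta -> bool, expected to solve the LMIs
                  obtained after fixing delta (hypothetical interface)
    deltas      : iterable of candidate values
    """
    for delta in sorted(deltas):
        if delta >= 1 and is_feasible(delta):
            return delta
    return None


def toy_oracle(delta):
    """Stand-in oracle for illustration only; it does not solve any LMI."""
    return delta >= 1.0001


grid = [1.0, 1.00005, 1.0001, 1.001, 1.01]
best = smallest_feasible_delta(toy_oracle, grid)
none_found = smallest_feasible_delta(lambda delta: False, grid)
```

Feasibility need not be monotone in \(\delta \), so a grid scan is safer than bisection unless monotonicity is known.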

Remark 5

The reciprocally convex combination technique, proposed in [24], keeps the number of decision variables in matrix inequalities small. In the proof of Theorem 1, we have applied the extended version of this technique (Proposition 2, [42]) to deal with double summation terms, which has the potential to give less conservative results than the widely used original method [24]. In particular, the matrix inequalities (8) and (25) contain only two free-weighting matrices each. Another advantage of Theorem 1 is that the results are derived without using any model transformations or other free-weighting matrix methods. The examples below illustrate that the criteria given in Theorem 1 and Corollary 2 are succinct, valid, and practical.

Example 1

Consider the NN (1) with the following parameters

Moreover, the nonlinear activation functions f(x(k)) and \(g(x(k-h(k)))\) are chosen as

Then we can confirm that (4) is satisfied with

Since \( \overline{F}_1 = \begin{bmatrix} 0.12 &{} 0\\ 0 &{} 0.15 \end{bmatrix},\; \overline{F}_2 = -0.4I\ne 0,\; \overline{G}_1 = 0.03I,\; \overline{G}_2 = -0.2I\ne 0 \), the neuron activation functions \(f(\cdot ), \, g(\cdot ) \) in this case are sector-bounded.

For given \(h_1=1,\; h_2=7,\; \sigma _1=1,\; \sigma _2=4,\; d=1,\; \tau =1,\; \gamma = 1,\; c_1=1,\; c_2=9,\; N=90\) and \(R = \begin{bmatrix} 2.25 &{} 0.35 \\ 0.35 &{} 1.75 \end{bmatrix}\), the LMIs (7)–(9) are feasible with \(\delta =1.0001\) and the following values of the decision variables

As a result of this, the system under consideration is \({H}_\infty \) FTB w.r.t. (1, 9, R, 90) according to Theorem 1.
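Both definitions require \(R > 0\). For the matrix R of this example, this can be confirmed with the closed-form eigenvalues of a symmetric 2×2 matrix, as in the short sketch below; the helper name is hypothetical.

```python
import math

R = [[2.25, 0.35], [0.35, 1.75]]   # weighting matrix from Example 1


def sym2_eigenvalues(m):
    """Eigenvalues of a symmetric 2x2 matrix via the closed-form formula."""
    mean = (m[0][0] + m[1][1]) / 2
    radius = math.hypot((m[0][0] - m[1][1]) / 2, m[0][1])
    return mean - radius, mean + radius


lam_min, lam_max = sym2_eigenvalues(R)
is_positive_definite = lam_min > 0
```

Both eigenvalues are positive (roughly 1.57 and 2.43), so \(x^{\textsf{T}}Rx\) is bounded between \(\lambda _{\min }\Vert x\Vert ^2\) and \(\lambda _{\max }\Vert x\Vert ^2\), which relates the levels \(c_1, c_2\) to Euclidean norms of the state.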

Example 2

Consider the nominal system with the same matrices \(A, B, B_1, F_1, F_2,\) \(G_1, G_2, R\) as in Example 1. The parameters \(h_1,\) \( \sigma _1, \sigma _2, c_1, c_2, \tau \) and N are also as in Example 1, except that \(h_2 = 11\). Solving the LMIs (7), (25) and (26) with \(\delta =1.0001\), we obtain the following solution matrices and scalars:

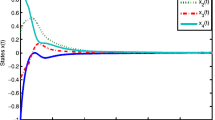

Therefore, by Corollary 2, the nominal system is FTS w.r.t. (1, 9, R, 90). With the initial condition chosen as

the trajectories of the system are described in Fig. 1.

Fig. 1 Trajectories of the system considered in Example 2
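A simulation of this kind can be sketched as follows. The model form \(x(k+1) = Ax(k-\sigma (k)) + Bf(x(k)) + B_1g(x(k-h(k)))\) is an assumption about the nominal system, and the matrices `A`, `B`, `B1`, the activations `f`, `g`, the delay patterns and the initial function are hypothetical stand-ins, not the data of the example. Only R, the delay bounds, \(c_1\), \(c_2\) and N are taken from Examples 1 and 2.

```python
import math

# Hypothetical system data (NOT the values used in the paper).
A = [[0.1, 0.0], [0.0, 0.2]]
B = [[0.2, -0.1], [0.1, 0.15]]
B1 = [[-0.1, 0.05], [0.05, -0.1]]

# Values quoted in Examples 1 and 2.
R = [[2.25, 0.35], [0.35, 1.75]]
h1, h2, s1, s2, N = 1, 11, 1, 4, 90
c1, c2 = 1.0, 9.0


def f(v):
    """Hypothetical activation acting componentwise."""
    return [0.4 * t + 0.1 * math.tanh(t) for t in v]


def g(v):
    """Hypothetical delayed activation acting componentwise."""
    return [0.2 * t + 0.1 * math.tanh(t) for t in v]


def matvec(M, v):
    return [sum(M[i][j] * v[j] for j in range(2)) for i in range(2)]


def quad(M, v):
    return sum(v[i] * M[i][j] * v[j] for i in range(2) for j in range(2))


rho = max(s2, h2)
x = {k: [0.5, -0.4] for k in range(-rho, 1)}   # constant initial function
for k in range(N):
    h = h1 + k % (h2 - h1 + 1)                 # discrete delay sweeping h1..h2
    s = s1 + k % (s2 - s1 + 1)                 # leakage delay sweeping s1..s2
    terms = [matvec(A, x[k - s]), matvec(B, f(x[k])), matvec(B1, g(x[k - h]))]
    x[k + 1] = [sum(t[i] for t in terms) for i in range(2)]

initial_level = max(quad(R, x[k]) for k in range(-rho, 1))
peak_level = max(quad(R, x[k]) for k in range(1, N + 1))
```

With these stand-in values the run starts inside the \(c_1\)-level set and stays well below \(c_2\) over the horizon; `initial_level` and `peak_level` can be compared with `c1` and `c2` to confirm this for any other choice of data.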

Remark 6

In the proof of Theorem 1, we applied the Jensen inequality rather than the Wirtinger-based summation inequality or other refined Jensen summation inequalities, although the latter are useful tools for obtaining better conditions; see [23, 28, 43] and the references therein. The reason is that system (1) is a nonlinear system with both leakage delay and discrete delay, which makes the analysis more complicated than for the linear systems or the NNs with only a discrete delay in [23, 27, 28, 42, 43]. We have therefore used the Jensen inequality and the extended reciprocally convex technique, which are more suitable for our problem. Nevertheless, using the Wirtinger-based summation inequality or refined Jensen summation inequalities in the qualitative analysis of system (1) is a potential direction for future research to reduce conservatism. Moreover, our results can also be extended to switched NNs or Markovian jump NNs such as those in [30, 40].

4 Conclusion

This paper addresses the FTB and finite-time \(H_\infty \) performance for a general class of discrete-time NNs subject to a time-varying leakage delay, a time-varying discrete delay, and different sector-bounded neuron activation functions. We have developed novel delay-dependent criteria by constructing suitable L–K functionals and applying an extended reciprocally convex technique. These criteria are expressed as LMIs and can be solved efficiently with MATLAB.

Data Availability

This article does not involve any data sharing because it did not produce or examine any data sets in the course of this study.

References

Ali MS, Meenakshi K, Gunasekaran N (2017) Finite-time \(H_\infty \) boundedness of discrete-time neural networks norm-bounded disturbances with time-varying delay. Int J Control Autom Syst 15:2681–2689

Amato F, Ariola M, Dorato P (2001) Finite-time control of linear systems subject to parametric uncertainties and disturbances. Automatica 37:1459–1463

Amato F, Ariola M, Cosentino C (2010) Finite-time control of discrete-time linear systems: analysis and design conditions. Automatica 46:919–924

Amato F, Ambrosino R, Ariola M, Cosentino C, Tommasi GD (2014) Finite-time stability and control. Springer, London

Balasubramaniam P, Vembarasan V, Rakkiyappan R (2012) Global robust asymptotic stability analysis of uncertain switched Hopfield neural networks with time delay in the leakage term. Neural Comput Appl 21:1593–1616

Balasundaram K, Raja R, Zhu Q, Chandrasekaran S, Zhou H (2016) New global asymptotic stability of discrete-time recurrent neural networks with multiple time-varying delays in the leakage term and impulsive effects. Neurocomputing 214:420–429

Banu LJ, Balasubramaniam P (2016) Robust stability analysis for discrete-time neural networks with time-varying leakage delays and random parameter uncertainties. Neurocomputing 179:126–134

Banu LJ, Balasubramaniam P, Ratnavelu K (2015) Robust stability analysis for discrete-time uncertain neural networks with leakage time-varying delay. Neurocomputing 151:808–816

Chen X, Song Q (2013) Global stability of complex-valued neural networks with both leakage time delay and discrete time delay on time scales. Neurocomputing 121:254–264

Chen Y, Zheng WX (2012) Stochastic state estimation for neural networks with distributed delays and Markovian jump. Neural Netw 25:14–20

Chen Y, Fu Z, Liu Y, Alsaadi FE (2017) Further results on passivity analysis of delayed neural networks with leakage delay. Neurocomputing 224:135–141

Dorato P (2006) An overview of finite-time stability. In: Menini L, Zaccarian L, Abdallah CT (eds) Current trends in nonlinear systems and control, in Honor of Kokotovic P and Nicosia Turi. Birkhauser, Boston, pp 185–194

Gahinet P, Nemirovskii A, Laub AJ, Chilali M (1995) LMI control toolbox for use with MATLAB. The MathWorks Inc, Massachusetts

Gopalsamy K (2007) Leakage delays in BAM. J Math Anal Appl 325:1117–1132

Han QL (2005) Absolute stability of time-delay systems with sector-bounded nonlinearity. Automatica 41:2171–2176

Hu M, Cao J, Hu A (2014) Exponential stability of discrete-time recurrent neural networks with time-varying delays in the leakage terms and linear fractional uncertainties. IMA J Math Control Inf 31:345–362

Jia T, Chen X, Zhao F, Cao J, Qiu J (2023) Adaptive fixed-time synchronization of stochastic memristor-based neural networks with discontinuous activations and mixed delays. J Frankl Inst 360:3364–3388

Jiang X, Han QL, Yu X (2005) Stability criteria for linear discrete-time systems with interval-like time-varying delay. Proc Am Control Conf 2005:2817–2822

Kamenkov G (1953) On stability of motion over a finite interval of time [in Russian]. J Appl Math Mech 17

Khalil HK (2002) Nonlinear systems, 3rd edn. Prentice Hall, New York

Li XD, Rakkiyappan R (2013) Stability results for Takagi–Sugeno fuzzy uncertain BAM neural networks with time delays in the leakage term. Neural Comput Appl 22:203–219

Ma Z, Sun G, Liu D, Xing X (2016) Dissipativity analysis for discrete-time fuzzy neural networks with leakage and time-varying delays. Neurocomputing 175:579–584

Nam PT, Trinh H, Pathirana PN (2015) Discrete inequalities based on multiple auxiliary functions and their applications to stability analysis of time-delay systems. J Frankl Inst 352:5810–5831

Park PG, Ko JW, Jeong C (2011) Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47:235–238

Rajchakit G, Sriraman R (2021) Robust passivity and stability analysis of uncertain complex-valued impulsive neural networks with time-varying delays. Neural Process Lett 53:581–606

Rajchakit G, Sriraman R, Boonsatit N, Hammachukiattikul P, Lim CP, Agarwal P (2021) Global exponential stability of Clifford-valued neural networks with time-varying delays and impulsive effects. Adv Differ Equ 208:1–21

Saravanakumar R, Stojanovic SB, Radosavljevic DD, Ahn CK, Karimi HR (2019) Finite-time passivity-based stability criteria for delayed discrete-time neural networks via new weighted summation inequalities. IEEE Trans Neural Netw Learn Syst 30:58–71

Seuret A, Gouaisbaut F, Fridman E (2015) Stability of discrete-time systems with time-varying delays via a novel summation inequality. IEEE Trans Autom Control 60:2740–2745

Shan Y, Zhong S, Cui J, Hou L, Li Y (2017) Improved criteria of delay-dependent stability for discrete-time neural networks with leakage delay. Neurocomputing 266:409–419

Shi P, Zhang Y, Agarwal RK (2015) Stochastic finite-time state estimation for discrete time-delay neural networks with Markovian jumps. Neurocomputing 151:168–174

Song Q, Cao J (2012) Passivity of uncertain neural networks with both leakage delay and time-varying delay. Nonlinear Dyn 67:1695–1707

Song H, Yu L, Zhang D, Zhang WA (2012) Finite-time \(H_\infty \) control for a class of discrete-time switched time-delay systems with quantized feedback. Commun Nonlinear Sci Numer Simul 17:4802–4814

Song Q, Shu H, Zhao Z, Liu Y, Alsaadi FE (2017) Lagrange stability analysis for complex-valued neural networks with leakage delay and mixed time-varying delays. Neurocomputing 244:33–41

Sowmiya C, Raja R, Cao J, Li X, Rajchakit G (2018) Discrete-time stochastic impulsive BAM neural networks with leakage and mixed time delays: an exponential stability problem. J Frankl Inst 355:4404–4435

Sowmiya C, Raja R, Cao J, Rajchakit G (2018) Impulsive discrete-time BAM neural networks with random parameter uncertainties and time-varying leakage delays: an asymptotic stability analysis. Nonlinear Dyn 91:2571–2592

Suntonsinsoungvon E, Udpin S (2020) Exponential stability of discrete-time uncertain neural networks with multiple time-varying leakage delays. Math Comput Simul 171:233–245

Tuan LA (2020) \(H_\infty \) finite-time boundedness for discrete-time delay neural networks via reciprocally convex approach. VNU J Sci Math Phys 36:10–23

Tuan LA, Phat VN (2016) Finite-time stability and \(H_\infty \) control of linear discrete-time delay systems with norm-bounded disturbances. Acta Math Vietnam 41:481–493

Yi Z, Tan KK (2004) Convergence analysis of recurrent neural networks. Springer, Berlin

Zhang Y, Shi P, Nguang SK, Zhang J, Karimi HR (2014) Finite-time boundedness for uncertain discrete neural networks with time-delays and Markovian jumps. Neurocomputing 140:1–7

Zhang Z, Zhang Z, Zhang H, Zheng B, Karimi HR (2014) Finite-time stability analysis and stabilization for linear discrete-time system with time-varying delay. J Frankl Inst 351:3457–3476

Zhang CK, He Y, Jiang L, Wang QG, Wu M (2017) Stability analysis of discrete-time neural networks with time-varying delay via an extended reciprocally convex matrix inequality. IEEE Trans Cybern 47:3040–3049

Zhang CK, He Y, Jiang L, Wu M, Zeng HB (2017) Summation inequalities to bounded real lemmas of discrete-time systems with time-varying delay. IEEE Trans Autom Control 62:2582–2588

Zong G, Wang R, Zheng W, Hou L (2015) Finite-time \(H_\infty \) control for discrete-time switched nonlinear systems with time delay. Int J Robust Nonlinear Control 25:914–936

Zuo Z, Li H, Wang Y (2013) New criterion for finite-time stability of linear discrete-time systems with time-varying delay. J Frankl Inst 350:2745–2756

Acknowledgements

The paper benefited greatly from the constructive feedback and insights of the editor and the anonymous reviewers. We are grateful for their time and expertise in helping us enhance the quality of our work.

Funding

The author acknowledges the financial support from Hue University under the project DHH2021-01-188.

Ethics declarations

Conflict of interest

The work reported in this paper was not influenced by any competing financial interests or personal relationships that the author is aware of.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tuan, L.A. On \({H}_\infty \) Finite-Time Boundedness and Finite-Time Stability for Discrete-Time Neural Networks with Leakage Time-Varying Delay. Neural Process Lett 56, 7 (2024). https://doi.org/10.1007/s11063-024-11489-0
