Abstract

This paper presents a joint–coordinate adjoint method for optimal control of multi-rigid-body systems. Initially formulated as a set of differential-algebraic equations, the adjoint system is brought into a minimal form by projecting the original expressions into the joint’s motion and constraint force subspaces. Consequently, cumbersome partial derivatives corresponding to joint-space equations of motion are avoided, and the approach is algorithmically more straightforward. The analogies between the formulation of Hamilton’s equations of motion in a mixed redundant-joint set of coordinates and the necessary conditions arising from the minimization of the cost functional are demonstrated in the text. The observed parallels directly lead to the definition of a joint set of adjoint variables. Through numerical studies, the performance of the proposed approach is investigated for optimal control of a double pendulum on a cart. The results demonstrate a successful application of the joint-coordinate adjoint method. The outcome can be easily generalized to optimal control of more complex systems.


1 Introduction

In the design and development of many complex multibody systems, researchers have to consider trade-offs between various system attributes such as sizing, performance, comfort, or cost. Computational optimization methods are required for most design tasks, and they rely heavily on the gradient of an objective function, i.e., on sensitivity information. To this end, a family of black-box methods can be distinguished, where the gradient computation routine is agnostic of the underlying dynamics. The main approaches of this kind are the finite difference method and the far more involved automatic differentiation [1].

Conversely, one of the most accurate and computationally efficient methods of calculating derivatives of performance measure with respect to the input variables, such as design or control variables, is based on the mathematical models of multibody system (MBS). In the optimal design and optimal control (OC) of MBS, an implicit dependency exists between state and input variables. There are two major approaches to capturing this dependency: direct differentiation [2–4] and the adjoint methods [5, 6]. Various formulations of the equations of motion (EOM) yield different adjoint systems, each characterized by different properties [7, 8]. Recent works [9–11] have demonstrated that using constrained Hamilton’s canonical equations, in which Lagrange multipliers enforce constraint equations at the velocity level, one can obtain more stable solutions for differential-algebraic equations (DAEs) as compared to acceleration-based counterparts [12, 13]. This phenomenon is partially connected with the differential index reduction of the resultant Hamilton’s equations.

The adjoint approach represents a comprehensive computational framework rather than a single method, where many authors develop this broad branch of research in various directions. For example, the adjoint method for solving the nonlinear optimal control problem has been applied in different engineering areas such as parameter identification [14], structural mechanics [15], time-optimal control problems [16], and feedforward control design [17]. In the conceptual setting of nonlinear mechanical systems, the control problem is investigated using the optimal control theory, where an approximate solution of the resulting set of differential-algebraic equations can be obtained employing a broad variety of numerical procedures. Furthermore, the integration routine may be combined with the discretized optimal control problem to come up with the discrete adjoint equations [18, 19]. The adjoint method has also been employed in hybrid systems involving discontinuities or switching modes [20, 21].

Another important aspect lies in the selection of the variables describing both the dynamic and adjoint subspaces. It is a common practice in the field of multi-rigid-body dynamic computations to employ a redundant set of coordinates to describe the underlying phenomena. As a result, the dynamic equations of motion are formulated as a set of differential-algebraic equations. Consequently, the adjoint sensitivity analysis also yields a system of DAEs that must be solved to determine the gradient of the performance measure [5–8]. On the other hand, the derivation of the adjoint system based on the joint-coordinate formulation of the underlying dynamics gives rise to very complicated expressions for the coefficients of the resultant adjoint system. The resulting quantities are much harder to establish systematically than in the case of the redundant DAE formulation [22]. Recent works investigate the relationships and analogies between the adjoint systems formulated as DAEs and ODEs [23, 24], whereas joint-coordinate adjoint formulations are also actively studied in the literature [15].

In this paper, the authors demonstrate a novel formulation of the adjoint method to efficiently compute the gradient of the cost functional that arises in optimal design or optimal control problems. Initially, a multibody system is described in terms of constrained Hamilton’s equations of motion using a redundant set of coordinates. It is well known that such an approach is attractive due to its relative simplicity. However, it leads to a mixed set of differential-algebraic equations, which can be challenging to solve numerically. An alternative is to use a minimal set of coordinates equal to the number of degrees of freedom of a multibody system. Various techniques have been proposed in the literature for coordinate reduction, e.g., [25–27]. The same apparatus can be used to establish the relationships between redundant and independent adjoint variables. Ultimately, the adjoint system is formulated as a set of first-order ODEs with right-hand sides that can be easily presented in a closed form [28, 29].

The primary importance of this research may be summarized as follows:

1. We present a novel adjoint-based method for fixed-time optimal control problems that exploits a set of independent adjoint variables incorporated in the Hamiltonian framework.
2. We show the parallels between the formulation of the equations of motion in mixed redundant-joint coordinates and the necessary conditions arising from the minimization of the cost functional.
3. We derive the adjoint system of ordinary differential equations and consistent terminal conditions expressed in a set of joint-space coordinates derived through the use of the joint’s motion and constraint force subspaces.
4. We demonstrate a successful application of the proposed method to gradient computation for the optimal design of a spatial rigid body and the optimal control of a double pendulum on a cart.

This paper is organized as follows. Section 2 presents the equations of motion for rigid multibody systems formulated in redundant and joint-space sets of coordinates. Two complementary sub-spaces are introduced, which correspond to the joint’s motion and constrained directions. Next, in Sect. 3.1, a design sensitivity problem is concisely recalled. The adjoint method tailored to constrained Hamilton’s equations is presented in Sect. 3.2. The concept of independent adjoint variables is introduced in Sect. 4. The developed method is applied to the optimal design of a spatial pendulum and optimal control of a double pendulum on a cart. The results are shown in Sect. 5, followed by a discussion presented in Sect. 6. Ultimately, the conclusions are drawn in Sect. 7.

2 Hamilton’s canonical equations

This section introduces the reader to the appropriate background concerning various formulations of Hamilton’s canonical equations of motion. Section 2.1 is focused on the equations expressed in dependent coordinates. Subsequently, joint space formulation is derived in Sect. 2.2 by projecting the equations of motion onto the joint’s motion and constraint-force subspaces, respectively. Notation and basic symbols that are used in this paper are also explained.

2.1 Constrained formulation of EOM

Let us define a set of dependent variables \(\boldsymbol{\mathrm{q}} \in \mathcal {R}^{n_{q}}\) that uniquely describe the configuration of a multibody system. Since the number of configuration variables exceeds the number of degrees of freedom, there exist \(m\) algebraic constraints that can be symbolically written as: \(\boldsymbol{\mathrm{\Upphi }}(\boldsymbol{\mathrm{q}}) = \boldsymbol{\mathrm{0}}\), \(\boldsymbol{\mathrm{\Upphi }}\in \mathcal {R}^{m}\). The time derivative of the constraint equations is equal to: \(\dot{\boldsymbol{\mathrm{\Upphi }}} = \boldsymbol{\mathrm{\Upphi }}_{ \boldsymbol{\mathrm{q}}}\dot{\boldsymbol{\mathrm{q}}}\), where \(\boldsymbol{\mathrm{\Upphi }}_{\boldsymbol{\mathrm{q}}}\in \mathcal {R}^{m \times n_{q}}\) stands for the constraint Jacobian matrix. The time derivative of generalized coordinates, \(\dot{\boldsymbol{\mathrm{q}}}\), consists of translational (Cartesian coordinates) and rotational (e.g., Euler angles or unit quaternions) components. Conversely, this paper will assume spatial velocities \(\boldsymbol{\mathrm{v}}\in \mathcal {R}^{n_{v}}\) as the primary variables used to formulate the equations of motion. The velocity of an arbitrary body \(A\) can be expressed as \(\boldsymbol{\mathrm{v}}_{A} = [\dot{\boldsymbol{\mathrm{r}}}_{A}^{T}, \, \boldsymbol{\mathrm{\upomega }}_{A}^{T}]^{T}\), where \(\dot{\boldsymbol{\mathrm{r}}}_{A} \in \mathcal{R}^{3}\) and \(\boldsymbol{\mathrm{\upomega }}_{A} \in \mathcal{R}^{3}\) refer to the linear and angular velocity of a body, respectively. Moreover, the spatial velocity is related to the time derivative of dependent coordinates via a bidirectional, configuration-dependent map, which allows one to rewrite the derivative of the constraint equations in the following way:

\[ \dot{\boldsymbol{\mathrm{\Upphi }}} = \boldsymbol{\mathrm{D}}^{T} \boldsymbol{\mathrm{v}} = \boldsymbol{\mathrm{0}}. \tag{1} \]

Here, the matrix \(\boldsymbol{\mathrm{D}}^{T}\in \mathcal{R}^{m\times n_{v}}\) plays the role of the constraint Jacobian.

Let ℒ be the system Lagrangian function defined by \(\mathcal{L}(\boldsymbol{\mathrm{q}},\boldsymbol{\mathrm{v}})=T( \boldsymbol{\mathrm{q}},\boldsymbol{\mathrm{v}})-V( \boldsymbol{\mathrm{q}}) \), where \(T=\frac{1}{2}\boldsymbol{\mathrm{v}}^{T}\boldsymbol{\mathrm{M}} \boldsymbol{\mathrm{v}}\) and \(V\) are the kinetic and potential energy of a system, respectively, and let the symbol \(\boldsymbol{\mathrm{M}} \in \mathcal {R}^{n_{v} \times n_{v}}\) denote a mass matrix. Canonical momenta \(\boldsymbol{\mathrm{p}} \in \mathcal{R}^{n_{v}}\) conjugated with the velocities \(\boldsymbol{\mathrm{v}}\) are defined as:

\[ \boldsymbol{\mathrm{p}} = \left(\frac{\partial \mathcal{L}}{\partial \boldsymbol{\mathrm{v}}}\right)^{T} = \boldsymbol{\mathrm{M}} \boldsymbol{\mathrm{v}}. \tag{2} \]

Subsequently, the Hamiltonian function of a multibody system can be expressed as \(\mathcal{H}=\boldsymbol{\mathrm{p}}^{T}\boldsymbol{\mathrm{v}}- \mathcal{L}\). Hamilton’s equations of motion for constrained multi-rigid-body system can, therefore, be formulated as follows:

where \(\boldsymbol{\mathrm{Q}}= \boldsymbol{\mathrm{Q}}_{ex} - \mathcal{H}_{ \boldsymbol{\mathrm{q}}}^{T}\) is a sum of external non-conservative and conservative forces, respectively. The term \(\boldsymbol{\mathrm{D}}\boldsymbol{\mathrm{\uplambda }}\) reflects constraint reaction forces, where the quantity \(\boldsymbol{\mathrm{\uplambda }}\in \mathcal{R}^{m}\) is a vector of Lagrange multipliers that represent constraint forces at joints distributed along the directions indicated by the rows of the Jacobian matrix \(\boldsymbol{\mathrm{D}}^{T}\in \mathcal{R}^{m\times n_{v}}\), i.e., by the columns of \(\boldsymbol{\mathrm{D}}\).

Now, let us augment the Lagrangian function with a term explicitly enforcing the kinematic velocity constraints (1): \(\mathcal{L}^{*}=\mathcal{L}+\boldsymbol{\mathrm{\upsigma }}^{T} \dot{\boldsymbol{\mathrm{\Upphi }}}\). The quantity \(\boldsymbol{\mathrm{\upsigma }}\in \mathcal{R}^{m}\) represents a vector of \(m\) new Lagrange multipliers associated with the velocity-level constraint equations. Based on this modification, we can define the augmented momenta in the following way:

\[ \boldsymbol{\mathrm{p}}^{*} = \left(\frac{\partial \mathcal{L}^{*}}{\partial \boldsymbol{\mathrm{v}}}\right)^{T} = \boldsymbol{\mathrm{M}} \boldsymbol{\mathrm{v}} + \boldsymbol{\mathrm{D}} \boldsymbol{\mathrm{\upsigma }}. \tag{4} \]

It can be easily shown that \(\dot{\boldsymbol{\mathrm{\upsigma }}}= \boldsymbol{\mathrm{\uplambda }}\). The physical interpretation of this relation is that \(\boldsymbol{\mathrm{\upsigma }}\) is a vector of constraint force impulses. Moreover, it follows from the definition of the augmented momenta that \(\boldsymbol{\mathrm{p}}^{*} = \boldsymbol{\mathrm{p}} + \boldsymbol{\mathrm{D}}\boldsymbol{\mathrm{\upsigma }}\). By differentiating this relation, invoking the property \(\dot{\boldsymbol{\mathrm{\upsigma }}}= \boldsymbol{\mathrm{\uplambda }}\), and substituting it into Eq. (3), we arrive at the following formula for the derivative of augmented momenta:

Ultimately, equations (1), (4), and (5) can be collected to form a set of constrained Hamilton’s canonical equations of motion:

Equations (6a), (6b) can be integrated numerically starting from initial configuration described by position (\({\boldsymbol{\mathrm{q}}} \rvert _{t=0}\)) and momenta (\({\boldsymbol{\mathrm{p}}^{*}} \rvert _{t=0}\)) vectors. The initial momentum vector can be obtained directly from the prescribed velocity via Eq. (4) by substituting \({\boldsymbol{\mathrm{\upsigma }}} \rvert _{t=0} = \boldsymbol{\mathrm{0}}\).

Equations of motion (6a), (6b) constitute \(2n_{v}+m\) differential-algebraic equations of index two with position, momenta, and Lagrange multipliers as unknowns. According to the literature, this approach is often more efficient and numerically stable than the acceleration-based formulation, mainly due to the lowered differential index [10, 12, 13]. The formulation based on spatial velocities is preferred to the corresponding formulation presented in ref. [30] since it seamlessly integrates with the joint space formulation presented in the following subsection. This property will be even more useful when deriving the relationships between adjoint variables in Sect. 4.
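The algebraic part of the formulation can be illustrated numerically. The relation \(\boldsymbol{\mathrm{p}}^{*} = \boldsymbol{\mathrm{M}}\boldsymbol{\mathrm{v}} + \boldsymbol{\mathrm{D}}\boldsymbol{\mathrm{\upsigma }}\) together with the velocity constraint \(\boldsymbol{\mathrm{D}}^{T}\boldsymbol{\mathrm{v}} = \boldsymbol{\mathrm{0}}\) forms a linear saddle-point system for \(\boldsymbol{\mathrm{v}}\) and \(\boldsymbol{\mathrm{\upsigma }}\). The sketch below is only an illustration under stated assumptions: the function name `velocities_from_momenta` and the toy matrices are hypothetical and are not taken from the paper.

```python
import numpy as np

def velocities_from_momenta(M, D, p_star):
    """Solve [[M, D], [D^T, 0]] [v; sigma] = [p*; 0] for v and sigma."""
    n_v, m = D.shape
    K = np.block([[M, D], [D.T, np.zeros((m, m))]])
    rhs = np.concatenate([p_star, np.zeros(m)])
    sol = np.linalg.solve(K, rhs)
    return sol[:n_v], sol[n_v:]

# Toy data (hypothetical): three velocity components, one constraint direction.
M = np.diag([2.0, 1.0, 3.0])          # mass matrix
D = np.array([[1.0], [1.0], [0.0]])   # constraint direction, D^T v = v1 + v2 = 0
p_star = np.array([1.0, -2.0, 0.5])   # augmented momenta

v, sigma = velocities_from_momenta(M, D, p_star)
print("v =", v, "sigma =", sigma)
```

For a given \(\boldsymbol{\mathrm{p}}^{*}\), a single linear solve returns velocities that satisfy the constraint and the impulses \(\boldsymbol{\mathrm{\upsigma }}\) that enforce it.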

2.2 Joint–space equations of motion

This section presents the derivation of joint-space Hamilton’s equations via the projection method. Equations of motion for open-loop systems are demonstrated first and further generalized to take into account closed-loop topologies. Two orthogonal subspaces are defined next, namely the joint’s motion subspace \(\boldsymbol{\mathrm{H}}_{j} \in \mathcal{R}^{6\times n_{\mathit{dof}}^{j}}\) and the constrained motion subspace \(\boldsymbol{\mathrm{D}}_{j} \in \mathcal{R}^{6\times (6-n_{\mathit{dof}}^{j})}\), which can be utilized to conveniently represent joints in a multibody system [31]. For example, to describe a spherical joint interconnecting two bodies, we would define these subspaces as:

where \(\boldsymbol{\mathrm{s}}_{1c}\in \mathcal {R}^{3}\) denotes a vector pointing from the joint’s origin to the origin of the body-fixed coordinate frame, typically the center of mass, as shown in Fig. 1. The tilde operator transforms a vector to a skew-symmetric matrix, which, when multiplied by another vector, can be interpreted as a cross-product of two vectors. The relative spatial velocity of a single body can be described with the aid of the allowable motion subspace as: \(\boldsymbol{\mathrm{v}}_{A}^{\text{rel}} = \boldsymbol{\mathrm{H}}_{A} \boldsymbol{\mathrm{\upbeta }}_{A}\), where \(\boldsymbol{\mathrm{\upbeta }}_{A} \in \mathcal{R}^{n_{\beta}^{A}}\) is the joint velocity vector denoting the relative velocity of body \(A\) with respect to the velocity of its parent body. Subsequently, one can show that there is a linear transformation between joint velocities \(\boldsymbol{\mathrm{\upbeta }}\) and spatial velocities \(\boldsymbol{\mathrm{v}}\), which leads to the following global expression [9, 31]:

\[ \boldsymbol{\mathrm{v}} = \boldsymbol{\mathrm{H}} \boldsymbol{\mathrm{\upbeta }}. \tag{7} \]

Here, both global and joint velocities are presented in a stacked vector format, whereas \(\boldsymbol{\mathrm{H}}(\boldsymbol{\mathrm{\upalpha }}) \in \mathcal {R}^{n_{v} \times k}\) (with subindex dropped) denotes a global allowable motion subspace. The matrix \(\boldsymbol{\mathrm{H}}\) is dependent on joint coordinates \(\boldsymbol{\mathrm{\upalpha }}\in \mathcal {R}^{n_{\upalpha}}\), whose derivative is uniquely related to joint velocities through a configuration-dependent map, similarly to the absolute-coordinate case. Nevertheless, in many practical situations, it is valid to assume that the joint velocity is equal to the derivative of joint coordinates, i.e. \(\boldsymbol{\mathrm{\upbeta }}= \dot{\boldsymbol{\mathrm{\upalpha }}}\). Let us point out that Eq. (7) is the explicit representation of the constraints \(\dot{\boldsymbol{\mathrm{\Upphi }}} = \boldsymbol{\mathrm{0}}\) [32]. Since \(\boldsymbol{\mathrm{\upbeta }}\) is a vector of unconstrained variables for open-chain systems, the following relation also holds: \(\boldsymbol{\mathrm{D}}^{T}\boldsymbol{\mathrm{H}}= \boldsymbol{\mathrm{0}}\).
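The orthogonality relation \(\boldsymbol{\mathrm{D}}^{T}\boldsymbol{\mathrm{H}}= \boldsymbol{\mathrm{0}}\) can be checked on a single spherical joint. The construction below is a minimal sketch under assumptions that may differ from the paper's sign and frame conventions: the spatial velocity is ordered as \([\dot{\boldsymbol{\mathrm{r}}}^{T}, \boldsymbol{\mathrm{\upomega }}^{T}]^{T}\), the vector `s` points from the joint origin to the body frame, and the helper names `skew` and `spherical_joint_subspaces` are hypothetical.

```python
import numpy as np

def skew(s):
    """Skew-symmetric matrix such that skew(s) @ a == np.cross(s, a)."""
    return np.array([[0.0, -s[2], s[1]],
                     [s[2], 0.0, -s[0]],
                     [-s[1], s[0], 0.0]])

def spherical_joint_subspaces(s):
    """Return (H, D) for a spherical joint, with v = [r_dot; omega]."""
    S = skew(s)
    # Allowed motion: r_dot = omega x s = -skew(s) @ omega, omega is free.
    H = np.vstack([-S, np.eye(3)])
    # Constrained directions: D^T v = r_dot + s x omega = 0.
    D = np.vstack([np.eye(3), -S])
    return H, D

s = np.array([0.3, -0.1, 0.5])      # hypothetical joint-to-frame vector
H, D = spherical_joint_subspaces(s)

beta = np.array([0.2, 1.0, -0.4])   # joint velocity (relative angular velocity)
v = H @ beta                        # relative spatial velocity of the body
print(np.abs(D.T @ H).max())
```

Every velocity generated as `H @ beta` satisfies the constraint `D.T @ v == 0` by construction, and the columns of `H` and `D` together span the whole six-dimensional space.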

At this point, Hamilton’s equations of motion can be projected onto the global allowable motion subspace \(\boldsymbol{\mathrm{H}}\). Premultiplying Eq. (6a) by \(\boldsymbol{\mathrm{H}}^{T}\) while taking into account that \(\boldsymbol{\mathrm{H}}^{T} \boldsymbol{\mathrm{D}} \boldsymbol{\mathrm{\upsigma }}= \boldsymbol{\mathrm{0}}\) leads to the first set of canonical equations:

where \(\widehat{\boldsymbol{\mathrm{p}}} \in \mathcal {R}^{k}\) denotes joint momenta in stacked vector format, and \(\widehat{\boldsymbol{\mathrm{M}}} \in \mathcal {R}^{k \times k}\) can be interpreted as joint-space mass matrix. Consequently, the time derivative of \(\widehat{\boldsymbol{\mathrm{p}}}\) can be written as: \(\dot{\widehat{\boldsymbol{\mathrm{p}}}} = \boldsymbol{\mathrm{H}}^{T} \dot{\boldsymbol{\mathrm{p}}}^{*}+ \dot{\boldsymbol{\mathrm{H}}}^{T} \boldsymbol{\mathrm{p}}^{*}\). Now, let us premultiply Eq. (6b) by \(\boldsymbol{\mathrm{H}}^{T}\) and add the term \(\dot{\boldsymbol{\mathrm{H}}}^{T} \boldsymbol{\mathrm{p}}^{*}\) to both sides of the resultant equation. This allows for the following calculation:

By recalling that \(\boldsymbol{\mathrm{H}}^{T} \dot{\boldsymbol{\mathrm{D}}}+ \dot{\boldsymbol{\mathrm{H}}}^{T} \boldsymbol{\mathrm{D}}= \frac{\mathrm{d}}{\mathrm{d}t}(\boldsymbol{\mathrm{H}}^{T} \boldsymbol{\mathrm{D}}) = \boldsymbol{\mathrm{0}}\), we arrive at the following set of governing equations for open-chain systems:

Let us note that certain terms from global formulation, such as \(\boldsymbol{\mathrm{Q}}\) or \(\boldsymbol{\mathrm{M}}\), also appear in Eqs. (10a), (10b). Although we have defined them as dependent on absolute coordinates \(\boldsymbol{\mathrm{q}}\), \(\boldsymbol{\mathrm{v}}\), it is possible to reformulate these quantities to be dependent on joint variables. Furthermore, joint coordinates can be mapped onto a vector of absolute coordinates via unique, non-linear, possibly recursive relation, that can be symbolically expressed as \(\boldsymbol{\mathrm{q}} = \boldsymbol{\mathrm{g}} ( \boldsymbol{\mathrm{\upalpha }})\).
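The projection onto the motion subspace described above can be verified numerically: premultiplying \(\boldsymbol{\mathrm{p}}^{*} = \boldsymbol{\mathrm{M}}\boldsymbol{\mathrm{v}} + \boldsymbol{\mathrm{D}}\boldsymbol{\mathrm{\upsigma }}\) by \(\boldsymbol{\mathrm{H}}^{T}\) removes the impulse term and leaves \(\widehat{\boldsymbol{\mathrm{p}}} = \boldsymbol{\mathrm{H}}^{T}\boldsymbol{\mathrm{M}}\boldsymbol{\mathrm{H}}\boldsymbol{\mathrm{\upbeta }}\). The following sketch uses randomly generated, purely hypothetical data; the function name `project_to_joint_space` and the use of an SVD to build an orthogonal complement for \(\boldsymbol{\mathrm{D}}\) are illustrative assumptions, not the authors' procedure.

```python
import numpy as np

def project_to_joint_space(M, H, p_star):
    """Return the joint-space mass matrix H^T M H and joint momenta H^T p*."""
    return H.T @ M @ H, H.T @ p_star

rng = np.random.default_rng(0)
n_v, k = 6, 3                              # absolute and joint velocity sizes

H = rng.standard_normal((n_v, k))          # stand-in motion subspace
U, _, _ = np.linalg.svd(H)                 # U is n_v x n_v (full matrices)
D = U[:, k:]                               # orthogonal complement: H^T D = 0

A = rng.standard_normal((n_v, n_v))
M = A @ A.T + n_v * np.eye(n_v)            # symmetric positive-definite mass matrix

beta = rng.standard_normal(k)              # joint velocities
sigma = rng.standard_normal(n_v - k)       # arbitrary constraint impulses
v = H @ beta                               # spatial velocities
p_star = M @ v + D @ sigma                 # augmented momenta

M_hat, p_hat = project_to_joint_space(M, H, p_star)
beta_recovered = np.linalg.solve(M_hat, p_hat)
print(np.abs(beta_recovered - beta).max())
```

Regardless of the value of `sigma`, the joint velocities are recovered from the joint momenta with a \(k \times k\) solve, which is the computational benefit of the minimal form.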

The governing equations (10a), (10b) are applicable only to open-loop MBS. If there is one or more kinematic closed-loops in a system, the joint coordinates become dependent. A cut-joint method is usually employed to transform a closed-loop topology into an open-loop mechanism [33]. Loop-closure constraint equations \(\boldsymbol{\mathrm{\Uptheta }}= \boldsymbol{\mathrm{0}}\), \(\boldsymbol{\mathrm{\Uptheta }}\in \mathcal{R}^{m_{c}}\) are imposed to reflect that the joint coordinates are dependent. The time derivative of the loop constraints can be written as: \(\dot{\boldsymbol{\mathrm{\Uptheta }}} = \boldsymbol{\mathrm{\Upgamma }}^{T} \boldsymbol{\mathrm{\upbeta }}= \boldsymbol{\mathrm{0}}\), where \(\boldsymbol{\mathrm{\Upgamma }}^{T} \in \mathcal{R}^{m_{c} \times n_{ \beta}}\) represents the constraint Jacobian matrix. It can be shown that constrained form of equations of motion reads as:

where \(\boldsymbol{\mathrm{\upsigma }}_{c} \in \mathcal{R}^{m_{c}}\) is a vector of constraint impulse loads associated with loop-closure constraints.

3 Adjoint sensitivity analysis

3.1 Introduction

A prevalent task arising in the field of optimal design or control of MBS is to minimize the following performance index:

where the integrand, denoted by \(h\), represents the expression to be minimized in a fixed time horizon \(t_{f}\), and \(\boldsymbol{\mathrm{b}}\in \mathcal {R}^{n_{b}}\) denotes a vector of design parameters or a set of discretized input functions. The second term in Eq. (12) is a terminal cost, suitable for prescribing a particular state of the system at the terminal time \(t_{f}\), which is fixed.

The most efficient local optimization algorithms that are able to minimize the performance measure (12) rely heavily on the gradient of the performance measure. This computation phase is called the sensitivity analysis, and it usually constitutes a performance bottleneck. Many approaches are available to cope with the problem of efficient gradient computation. The most straightforward is a purely numerical approach that treats the underlying dynamics as a black box and computes the gradient via finite differences. However, the list of the method’s drawbacks is relatively long: from high computational burden to evaluate a single derivative, through undesirable scaling in the case of multiplicity of design variables, to ambiguity in the choice of the perturbation step. Conversely, relying on the mathematical model of the dynamic equations is possible. This family of methods is significantly more difficult to implement; however, it yields the most accurate and computationally efficient results. A direct approach to the sensitivity analysis, known as direct differentiation method, employs a chain rule of differentiation to the performance measure (12):

The idea is to compute the implicit state derivatives \(\boldsymbol{\mathrm{q}}_{\boldsymbol{\mathrm{b}}}\), \(\boldsymbol{\mathrm{v}}_{\boldsymbol{\mathrm{b}}}\) by differentiating the underlying equation of motion (6a), (6b) with respect to the design or control variables \(\boldsymbol{\mathrm{b}}\). This method proves to be reliable and efficient when the number of constraints is relatively large [2, 3] since the state derivatives can be used for each of the constraint equations. Nevertheless, this approach requires solving \(\dim (\boldsymbol{\mathrm{b}})=n_{b}\) sub-problems of size comparable to the underlying EOM. Concurrently, it is more often the case in the field of multibody dynamics that the number of constraints is relatively low compared with the dimension of \(\boldsymbol{\mathrm{b}}\). The adjoint method focuses on solving different equations, thus avoiding the cumbersome computation of the state derivatives. Therefore, its efficiency is not affected by the number of design/control variables, and the total cost of computing the gradient is only proportional to the size of the underlying dynamic problem. This property makes the adjoint method the most viable option for efficient and reliable gradient computation, as will be briefly presented in Sect. 3.2.
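The scaling argument made for the black-box approach can be made concrete with a small sketch. A forward-difference gradient needs one evaluation of the cost per design variable plus one baseline evaluation, and each evaluation would be a full dynamic simulation in practice. The cost function below is a cheap algebraic stand-in, and the names `forward_difference_gradient` and `CountingCost` are hypothetical.

```python
import numpy as np

def forward_difference_gradient(J, b, eps=1e-6):
    """Approximate dJ/db by perturbing one entry of b at a time."""
    b = np.asarray(b, dtype=float)
    J0 = J(b)                       # baseline evaluation
    grad = np.empty_like(b)
    for i in range(b.size):
        b_pert = b.copy()
        b_pert[i] += eps            # perturbation step; its choice is problem dependent
        grad[i] = (J(b_pert) - J0) / eps
    return grad

class CountingCost:
    """Stand-in for an expensive simulation; counts how often it is called."""
    def __init__(self):
        self.calls = 0

    def __call__(self, b):
        self.calls += 1
        return float(np.sum(b ** 2) + b[0] * b[1])

cost = CountingCost()
b = np.array([1.0, 2.0, 3.0, 4.0])
grad = forward_difference_gradient(cost, b)
print(grad, cost.calls)
```

With four design variables, the cost is evaluated five times; the count grows linearly with \(n_{b}\), whereas the adjoint method requires one forward and one backward solve irrespective of \(n_{b}\).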

3.2 The adjoint method

Equations of motion must be fulfilled throughout the optimization process, hence it is valid to treat them as constraints imposed on the design variables \(\boldsymbol{\mathrm{b}}\). The key principle of the adjoint method is the inclusion of these constraints to the generic cost functional (12). For brevity, let us consider a set of DAEs describing dynamics of a multibody system, such as Eqs. (6a), (6b), in the following implicit form: \(\boldsymbol{\mathrm{F}}(\boldsymbol{\mathrm{b}}, \boldsymbol{\mathrm{y}}, \dot{\boldsymbol{\mathrm{y}}}, \boldsymbol{\mathrm{\upsigma }})=\boldsymbol{\mathrm{0}}\). The symbol \(\boldsymbol{\mathrm{y}}\) represents a vector of state variables of appropriate size. Consequently, the performance measure (12) may be extended to include the dynamic equation of motion in the following way:

where \(\boldsymbol{\mathrm{w}}(t) \in \mathcal {R}^{2n_{v}+m}\) is a vector of arbitrary, time-dependent multipliers, known as adjoint, or costate, variables. Let us investigate the variation of the extended performance measure (14):

The total variation \(\updelta \overline{J}\) is a result of variations \(\updelta \boldsymbol{\mathrm{y}}\), \(\updelta \boldsymbol{\mathrm{\upsigma }}\), \(\updelta \boldsymbol{\mathrm{b}}\), respectively. The variation \(\updelta \dot{\boldsymbol{\mathrm{y}}}\) that appears under the integral can be eliminated by integrating appropriate expression by parts:

Only the final-time component is taken into account outside of the integral in Eq. (16) since \({\updelta \boldsymbol{\mathrm{y}}} \rvert _{t=0} = \boldsymbol{\mathrm{0}}\) when the initial conditions are explicitly prescribed. Upon substituting Eq. (16) into Eq. (15), we arrive at the following expression:

Adjoint variables have at this point arbitrary values. The goal of the adjoint method is to pick such a set of adjoint variables that would simplify equation (17) to the expression involving solely variations of design or control variables \(\updelta \boldsymbol{\mathrm{b}}\):

Equation (18) exposes the gradient of the performance measure and allows us to obtain a unique expression for its computation by exploiting the adjoint variables:

- \(\nabla _{\boldsymbol{\mathrm{b}}} \overline{J} = \int _{0}^{t_{f}} \big(h_{\boldsymbol{\mathrm{b}}} + \boldsymbol{\mathrm{w}}^{T} \boldsymbol{\mathrm{F}}_{ \boldsymbol{\mathrm{b}}} \big) \, \mathrm{d}t\), when \(\boldsymbol{\mathrm{b}}\) represents design variables;
- \(\nabla _{\boldsymbol{\mathrm{b}}} \overline{J} = \big(h_{\boldsymbol{\mathrm{b}}} + \boldsymbol{\mathrm{w}}^{T} \boldsymbol{\mathrm{F}}_{ \boldsymbol{\mathrm{b}}} \big) \, \Delta t\), when \(\boldsymbol{\mathrm{b}}\) denotes discretized control input signals, and \(\Delta t\) is the discretization time-step.

To find a set of design variables or control functions that produces a stationary value of the cost functional \(J\), the adjoint variables are required to fulfill the following set of necessary conditions:

which is a general formula for the adjoint system. Equations (19a), (19b) constitute a set of DAEs that must be solved backward in time from the boundary conditions prescribed by Eqs. (19c), (19d). Equation (19d) suggests that the terminal cost \(S\) does not depend on the time derivative \(\dot{\boldsymbol{\mathrm{y}}}\), which is against the assumption presented in Eq. (14). This issue can be easily circumvented; however, it involves introducing additional components that would obscure the main point presented herein. For clarity, the details on how one can treat the boundary conditions of the adjoint system are presented in Appendix A.
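The backward-in-time structure of the adjoint system can be illustrated on a scalar problem that is much simpler than the multibody DAEs considered here. Assume, purely for illustration, the dynamics \(F = \dot{y} + b y = 0\) with \(y(0) = 1\), the integrand \(h = y^{2}\), and no terminal cost. Repeating the variation and integration-by-parts steps for this toy case gives the adjoint equation \(\dot{w} = 2y + b w\) with \(w(t_{f}) = 0\), and the gradient \(\int_{0}^{t_{f}} w\, y \, \mathrm{d}t\). The sign conventions and all function names below are assumptions of this sketch and need not match Eqs. (19a)–(19d).

```python
import numpy as np

def rk4(f, z0, t0, t1, n):
    """Classical fixed-step Runge-Kutta 4 from t0 to t1 (t1 < t0 runs backward)."""
    h = (t1 - t0) / n
    z, t = np.asarray(z0, dtype=float), t0
    for _ in range(n):
        k1 = f(t, z)
        k2 = f(t + h / 2, z + h / 2 * k1)
        k3 = f(t + h / 2, z + h / 2 * k2)
        k4 = f(t + h, z + h * k3)
        z = z + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return z

def forward(b, tf, n):
    """Integrate y' = -b*y, y(0) = 1, together with the running cost J' = y**2."""
    y_tf, J = rk4(lambda t, z: np.array([-b * z[0], z[0] ** 2]),
                  [1.0, 0.0], 0.0, tf, n)
    return y_tf, J

def adjoint_gradient(b, tf, n=400):
    """dJ/db from one forward and one backward sweep."""
    y_tf, _ = forward(b, tf, n)
    # Backward sweep on z = [y, w, g]:
    #   w' = 2*y + b*w, w(tf) = 0   (adjoint equation of the toy problem)
    #   g' = -w*y,      g(tf) = 0   (so that g(0) = integral of w*y over [0, tf])
    rhs = lambda t, z: np.array([-b * z[0], 2 * z[0] + b * z[1], -z[1] * z[0]])
    return rk4(rhs, [y_tf, 0.0, 0.0], tf, 0.0, n)[2]

b, tf = 0.7, 2.0
grad_adjoint = adjoint_gradient(b, tf)

# Reference values: central finite difference and the closed-form derivative.
eps = 1e-5
grad_fd = (forward(b + eps, tf, 400)[1] - forward(b - eps, tf, 400)[1]) / (2 * eps)
grad_exact = tf * np.exp(-2 * b * tf) / b - (1 - np.exp(-2 * b * tf)) / (2 * b ** 2)
print(grad_adjoint, grad_fd, grad_exact)
```

The state is re-integrated backward alongside the adjoint variable to avoid storing the forward trajectory; this is convenient for the scalar example, but it is a design choice of the sketch rather than a recommendation for stiff or constrained systems.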

3.3 Adjoint in redundant set of variables

Now, let us derive the necessary conditions for the case when a rigid multibody system is described by a set of constrained Hamilton’s equations. Rewriting Eq. (14) in a way that explicitly enforces the dynamic equations (6a), (6b) yields:

Here, the quantities \(\boldsymbol{\mathrm{\upeta }}=\boldsymbol{\mathrm{\upeta }}(t) \in \mathcal{R}^{n_{v}}\), \(\boldsymbol{\mathrm{\upxi }}=\boldsymbol{\mathrm{\upxi }}(t) \in \mathcal{R}^{n_{v}}\), and \(\boldsymbol{\mathrm{\upmu }}=\boldsymbol{\mathrm{\upmu }}(t) \in \mathcal{R}^{m}\) constitute the entries of the adjoint variables vector \(\boldsymbol{\mathrm{w}}\) defined in Sect. 3.2. Splitting the general dynamics formula presented in Eq. (14) into more explicit equations, such as constrained Hamilton’s EOM, will grant us more detailed insight into the properties of the resultant adjoint system; specifically, the relations that arise between absolute and joint-space adjoint variables. Applying the procedure described by Eqs. (14)–(19d) to the extended performance measure (20) yields the following set of equations:

The coefficients \(\boldsymbol{\mathrm{A}}(\boldsymbol{\mathrm{q}}, \boldsymbol{\mathrm{v}}, \boldsymbol{\mathrm{\upsigma }})\) and \(\boldsymbol{\mathrm{r}}(\boldsymbol{\mathrm{q}}, \boldsymbol{\mathrm{v}}, \dot{\boldsymbol{\mathrm{v}}}, \boldsymbol{\mathrm{\upsigma }}, \boldsymbol{\mathrm{\upxi }}, \boldsymbol{\mathrm{\upeta }})\) are quantities that arise directly from the calculations presented in Sect. 3.2. The detailed derivation, as well as the formulas for \(\boldsymbol{\mathrm{A}}\) and \(\boldsymbol{\mathrm{r}}\) (cf. Eq. (B.9)), is presented in Appendix B. Equations (21a) and (21b) follow directly from Eq. (19a), while expression (21c) is implied by Eq. (19b). Additionally, Eqs. (19c) or (A.2) provide a set of boundary conditions for the adjoint multipliers \(\boldsymbol{\mathrm{\upeta }}(t_{f})\), \(\boldsymbol{\mathrm{\upxi }}(t_{f})\), \(\boldsymbol{\mathrm{\upmu }}(t_{f})\) at the fixed terminal time. The formulas for these quantities are presented in Appendix A. The system (21a)–(21c) constitutes a set of first-order, index-2, linear DAEs with unknown adjoint variables. One of the objectives of this paper is to reduce the adjoint system of equations to a minimal set by introducing a new set of adjoint variables that closely resemble the joint coordinates used in the equations of motion. To this end, a significant computational advantage can be gained by writing Eqs. (21a)–(21c) as a set of ordinary differential equations and reducing their differentiation index. The substitution of \(\dot{\boldsymbol{\mathrm{\upxi }}}\) from Eq. (21a) into a time derivative of Eq. (21c) and into Eq. (21b) yields the following system of equations:

The coefficient matrix on the LHS of equation (22b) is the same as in Eq. (6a). Equations (22a), (22b) must be integrated backward in time from known final conditions, i.e., \(\boldsymbol{\mathrm{\upeta }}(t_{f})\), \(\boldsymbol{\mathrm{\upxi }}(t_{f})\), \(\boldsymbol{\mathrm{\upmu }}(t_{f})\) defined in equations (A.4a), (A.4c). A more detailed discussion on the approach shown herein is presented in reference [30].

4 Independent adjoint variables

The goal of this section is to show the analogies that exist between the constrained Hamiltonian formulation and the necessary conditions for the minimization of a cost functional subjected to differential-algebraic equations. As a result, a novel concept is demonstrated that allows one to introduce a set of independent adjoint variables. The key concept of this derivation lies in the relation between the absolute-coordinate and joint-coordinate formulations of the equations of motion. A closer look at these relationships allows one to define a set of independent adjoint co-states. The unknown variables \(\boldsymbol{\mathrm{\upeta }}\), \(\boldsymbol{\mathrm{\upxi }}\) introduced in equations (21a)–(21c) are dependent since Eqs. (21a)–(21c) constitute the adjoint system to the DAE (6a), (6b). Therefore, these quantities are referred to as absolute (i.e., constrained) adjoint variables. Conversely, the set of independent adjoint variables \(\hat{\boldsymbol{\mathrm{\upeta }}}\in \mathcal {R}^{n_{\upbeta}}\) and \(\hat{\boldsymbol{\mathrm{\upxi }}}\in \mathcal {R}^{n_{\upbeta}}\), analogous to their dependent counterparts, will be referred to as joint-space adjoint variables.

The joint-space dynamic equations (10a), (10b) are derived by projecting the EOM (6a), (6b) onto the global motion subspace \(\boldsymbol{\mathrm{H}}\). Let us rewrite both sets of equations in the following way:

The relation between the system of equations (23) and the set in (24) can be captured as follows:

Consequently, the augmented performance measure can be rewritten in a more informative form. Instead of augmenting the cost functional (12) with the absolute-coordinate equations (23), we utilize the joint-space equations (24) and invoke relations (25) to obtain:

The variables \(\hat{\boldsymbol{\mathrm{\upeta }}}(t) \in \mathcal {R}^{n_{\upbeta}}\) and \(\hat{\boldsymbol{\mathrm{\upxi }}}(t) \in \mathcal {R}^{n_{\upbeta}}\) denote a new set of independent adjoint variables that reside in the joint-space \(\boldsymbol{\mathrm{H}}\). By comparing equation (26) with Eq. (20), we establish the relations between joint- and absolute-coordinate co-states:

The system of equations (27a), (27b) suggests that \(\hat{\boldsymbol{\mathrm{\upeta }}}= \dot{\hat{\boldsymbol{\mathrm{\upxi }}}}\). In fact, this is the joint-space counterpart of the terse relation \(\boldsymbol{\mathrm{\upeta }}= \dot{\boldsymbol{\mathrm{\upxi }}}\) (cf. Eq. (22a)). The simplicity of these relations comes specifically from a virtue of the Hamiltonian formulation since both momenta \(\boldsymbol{\mathrm{p}}^{*}\), \(\hat{\boldsymbol{\mathrm{p}}}\) and their derivatives are not premultiplied by any state–dependent coefficients. Moreover, the second equation in (23), (24) is the derivative of the first one, which is not the case, e.g., in the Lagrangian formulation. Ultimately, let us insert Eq. (21a) into Eq. (21c) and integrate the outcome by parts to get:

The expression (28) can be interpreted as the implicit velocity–level constraint equations imposed on the adjoint variable \(\boldsymbol{\mathrm{\upxi }}\). Similarly, equation (27a) represents the same kinematic constraint formulated explicitly. Ultimately, equations (27a) and (28) reveal an interesting property of the adjoint variable \(\boldsymbol{\mathrm{\upxi }}\). Apparently, this quantity is somewhat analogous to the spatial velocity vector \(\boldsymbol{\mathrm{v}}\). The relations are detailed in Table 1.

Let us now investigate algebraic constraints imposed on the physical and adjoint systems. Table 2 presents a detailed comparison of these quantities. Specifically, one can see that the adjoint constraints are shifted toward the higher-order derivative compared to the geometric constraints imposed on a multibody system. Consequently, index–1 formulation of the adjoint system (22a), (22b) involves jerk-level constraints instead of velocity-level constraints, which is the case for EOM (6a), (6b). Equations appearing in Table 2 represent implicit characterization of the constraints. An equivalent form can be obtained with the aid of the motion subspace \(\boldsymbol{\mathrm{H}}\) whose components specify the allowable velocity range space in \(\mathcal{R}^{6}\). For example, a set of equations (27a), (27b) describes explicit velocity- and acceleration-level constraints imposed on the absolute adjoint variables. Subsequently, the differentiation of Eq. (27b) yields the explicit form of constraints that tie up redundant and independent sets of adjoint variables:

Once we plug Eq. (29) into (22b) and premultiply both sides by the term \(\boldsymbol{\mathrm{H}}^{T}\), it is possible to come up with the joint-space adjoint equations:

The system of equations (30a), (30b) may be integrated backward in time for the unknown adjoint multipliers \(\hat{\boldsymbol{\mathrm{\upeta }}}\) and \(\hat{\boldsymbol{\mathrm{\upxi }}}\). The values at \(t_{f}\), from which the backward integration starts, can be calculated by finding the values of the absolute-coordinate adjoint variables (cf. Eqs. (A.4a), (A.4c)) and projecting them onto the subspace \(\boldsymbol{\mathrm{H}}\):

Please note that the matrix \(\boldsymbol{\mathrm{H}}^{T} \boldsymbol{\mathrm{H}}\) is invertible in non-singular configurations. Let us also point out that the RHS of Eq. (30b) depends on absolute adjoint coordinates, i.e. \(\boldsymbol{\mathrm{r}}_{J} = \boldsymbol{\mathrm{r}}_{J}( \boldsymbol{\mathrm{q}}, \boldsymbol{\mathrm{v}}, \dot{\boldsymbol{\mathrm{v}}}, \boldsymbol{\mathrm{\upsigma }}, \boldsymbol{\mathrm{\uplambda }}, \boldsymbol{\mathrm{\upeta }}, \boldsymbol{\mathrm{\upxi }})\); however, its constituent terms are straightforward and relatively easy to formulate algorithmically [28]. The expressions that depend on the variables \(\boldsymbol{\mathrm{q}}\), \(\boldsymbol{\mathrm{v}}\), \(\dot{\boldsymbol{\mathrm{v}}}\), \(\boldsymbol{\mathrm{\upsigma }}\), \(\boldsymbol{\mathrm{\uplambda }}\) act as parameters of the quantity \(\boldsymbol{\mathrm{r}}_{J}\) and of Eq. (30b) since their values have already been established in the forward sweep. Moreover, the system of equations (30a), (30b) constitutes a set of ODEs since the constraints are enforced explicitly via Eq. (29), and no additional algebraic constraints must be taken into account (at least for open-loop multibody systems). Once the adjoint variables \(\hat{\boldsymbol{\mathrm{\upeta }}}\), \(\hat{\boldsymbol{\mathrm{\upxi }}}\) are evaluated for the entire time domain, their absolute-coordinate counterparts can be calculated via Eqs. (27a), (27b). Ultimately, they can be utilized to efficiently and reliably compute the gradient of the performance measure (12).
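The projection step can be illustrated with a short Python sketch. It assumes that relation (27a) has the form \(\boldsymbol{\mathrm{\upxi }} = \boldsymbol{\mathrm{H}} \hat{\boldsymbol{\mathrm{\upxi }}}\), by analogy with \(\boldsymbol{\mathrm{v}} = \boldsymbol{\mathrm{H}} \boldsymbol{\mathrm{\upbeta }}\); the function name, the matrix `H`, and all numerical values are hypothetical and serve only as an illustration.

```python
import numpy as np

def project_onto_motion_subspace(H, xi):
    """Solve (H^T H) xi_hat = H^T xi for the joint-space quantity xi_hat."""
    return np.linalg.solve(H.T @ H, H.T @ xi)

# Hypothetical motion subspace with full column rank (6 absolute, 2 joint coordinates).
H = np.array([[0.0, 0.0],
              [0.0, 0.0],
              [1.0, 1.0],
              [0.5, 0.0],
              [0.0, 0.3],
              [0.0, 0.0]])

xi_hat_true = np.array([0.2, -1.0])   # made-up joint-space adjoint values
xi = H @ xi_hat_true                  # absolute counterpart (assumed form of Eq. (27a))
xi_hat = project_onto_motion_subspace(H, xi)
```

Solving the normal equations with `np.linalg.solve` avoids forming the inverse of \(\boldsymbol{\mathrm{H}}^{T} \boldsymbol{\mathrm{H}}\) explicitly; the solve fails or becomes ill-conditioned exactly in the singular configurations mentioned above.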

Let us summarize the contents of this section. The forward solution of the equations of motion yields state variables and reaction loads recorded at discrete time instants of the temporal domain. These quantities are needed to compute the coefficients (i.e., appropriate Jacobi matrices) necessary for the adjoint sensitivity analysis. The results of almost all calculations performed in this process are reused in the adjoint gradient calculation (or recalculated on-the-fly). Subsequently, by invoking the necessary conditions for the extremum of the performance measure (12), we arrive at a set of DAEs, supplied with the relevant boundary conditions, which must be solved backward in time from the time instant \(t=t_{f}\) to the time instant \(t=0\). In parallel, we define the dependencies between absolute and joint–space Hamilton’s equations (25). These relationships allow us to derive the relations between joint–space and absolute adjoint variables (27a), (27b). By combining expressions (27a), (27b) with the projection methods, it is possible to develop a set of ordinary differential equations for the adjoint system formulated in terms of a set of independent adjoint variables, i.e., equations (30a), (30b). Ultimately, solving Eqs. (30a), (30b) for the adjoint multipliers allows one to evaluate the gradient of the cost functional (12). A possible approach for computation of the gradient at each step of the optimization procedure is summarized in Algorithm 1.
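The forward sweep, backward sweep, and gradient evaluation summarized above can be sketched on a deliberately simple problem. The Python example below is not the multibody formulation of this paper: it assumes a hypothetical scalar system \(\dot{x} = -a x + u\), an explicit Euler discretization with a piecewise-constant input, and the corresponding discrete adjoint recursion. All names and numerical values are illustrative.

```python
def forward(b, x0, a, dt):
    """Forward sweep: store the state at every time node."""
    x = [x0]
    for bk in b:
        x.append(x[-1] + dt * (-a * x[-1] + bk))
    return x

def cost(x, dt, s, x_target):
    """Running cost 0.5*x^2 plus a terminal penalty on x(t_f)."""
    running = sum(0.5 * xk**2 * dt for xk in x[:-1])
    return running + 0.5 * s * (x[-1] - x_target) ** 2

def adjoint_gradient(b, x0, a, dt, s, x_target):
    """Backward sweep from t_f to 0; returns dJ/db_k for every k."""
    x = forward(b, x0, a, dt)
    lam = s * (x[-1] - x_target)          # terminal condition for the adjoint
    grad = [0.0] * len(b)
    for k in range(len(b) - 1, -1, -1):
        grad[k] = dt * lam                # uses the adjoint at node k+1
        lam = dt * x[k] + (1.0 - a * dt) * lam   # adjoint at node k
    return grad

# Hypothetical data: 20 steps, zero initial guess for the input.
a, dt, x0, s, x_target = 1.0, 0.05, 1.0, 10.0, 0.0
b = [0.0] * 20
grad = adjoint_gradient(b, x0, a, dt, s, x_target)
```

One backward sweep delivers all components of the gradient, regardless of the number of input samples, which is the property that makes the adjoint approach attractive for densely discretized control signals.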

5 Numerical examples

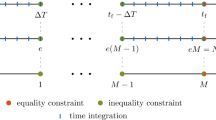

This section presents the application results that demonstrate the validity of the methodology as well as the underlying challenges encountered. We investigate the problem of open-loop control signal synthesis for a double pendulum on a cart presented in Fig. 2. Gravity acts along the negative \(y\) axis of the global frame, and viscous friction loads are introduced at each joint in the system. The mechanism is controlled by a horizontal force applied to the cart. The vector of design parameters \(\boldsymbol{\mathrm{b}}\) consists of the discretized input signal \(u(t)\), where \(\Delta t = 0.005\) s is the discretization step. Consequently, \(\boldsymbol{\mathrm{b}}= [b_{0}, b_{1}, \ldots , b_{N}]\), where \(b_{0}=u(0)\), \(b_{1} = u(\Delta t)\), \(b_{N} = u(N \Delta t) = u(t_{f})\) and \(N = \frac{t_{f}}{\Delta t}\). The input signal \(u(t)\) can be easily recreated from \(\boldsymbol{\mathrm{b}}\). When the integration procedure requests an intermediate value, a spline interpolation is performed to approximate it. The parameters of the system, such as masses, moments of inertia, lengths, etc., are gathered in Table 3. Although the analyzed multibody system moves in a plane, it has been modeled with a spatial approach following the methodology presented in the previous sections.
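The parameterization of the input signal can be sketched in a few lines of Python. For brevity, the sketch uses linear interpolation as a stand-in for the spline interpolation mentioned above; the helper name `make_control` is hypothetical, and \(t_{f} = 3\) s is taken from Sect. 5.1.

```python
def make_control(b, dt):
    """Return u(t) interpolating the samples b[k] = u(k*dt) linearly."""
    n = len(b) - 1
    def u(t):
        if t <= 0.0:
            return b[0]
        if t >= n * dt:
            return b[n]
        k = min(int(t / dt), n - 1)       # index of the left node
        w = t / dt - k                    # normalized position inside the interval
        return (1.0 - w) * b[k] + w * b[k + 1]
    return u

dt = 0.005                 # discretization step [s]
t_f = 3.0                  # final time [s]
N = round(t_f / dt)        # number of intervals
b = [0.0] * (N + 1)        # initial guess u_0(t) = 0
u = make_control(b, dt)
```

The integrator only ever calls `u(t)`, so the interpolation scheme can be exchanged (e.g., for a cubic spline) without touching the rest of the procedure.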

5.1 Swing–up maneuver

The initial conditions for the joint angles are specified as \(\alpha _{1}=-\frac{\pi}{2}\), \(\alpha _{2}=0\), which correspond to the stable equilibrium of the system. The goal of this task is to stabilize the open-loop chain in the upright configuration. Mathematically, this can be described in the following way:

where \(\gamma \) is a binary value; setting \(\gamma =1\) enforces that the pendula stop moving at the final time. The initial guess is simply no actuation (\(u_{0}(t) = 0\)) exerted on the cart. The gradient is calculated by solving Eqs. (30a), (30b) and conveyed to the optimization algorithm. The employed procedure utilizes the Matlab function \(\mathtt{ode45}\) to integrate the forward dynamics and the adjoint system, and \(\mathtt{fminunc}\) to compute the desired control input. Figure 3 presents the convergence of the optimization, specifically, how the cost and the first–order optimality measure progressed with the iteration count in the case \(\gamma =0\). The first 30 iterations were performed with the steepest descent method, which uniformly reduced the cost to a near-zero value. Subsequently, we employed the quasi-Newton approach with the BFGS Hessian update for better convergence. In the following step, the optimization was re-run for the case \(\gamma =1\) in Eq. (32), with the last result used as the initial guess. The progress of this computation is presented in Fig. 4, while the results of both processes are displayed in Fig. 5. The plotted lines represent the absolute angles (\(\upvarphi _{1}=\upalpha _{1}\), \(\upvarphi _{2}=\upalpha _{1}+\upalpha _{2}\)) of both pendula at the final iteration for two cases: \(\gamma =\{1,0\}\). Let us point out that the solid lines approach the final value with zero slope, which indicates that the final velocity is equal to zero.

In summary, the overall procedure consists of the following steps:

1. Set \(\gamma =0\) and use fminunc with the steepest descent method to significantly reduce the value of the performance measure (first 30 iterations in Fig. 3).

2. While \(\gamma =0\), use fminunc with the BFGS method for updating the Hessian and converge to a more precise solution (iteration 31 and the following ones in Fig. 3; the result is denoted by dashed lines in Fig. 5).

3. Set \(\gamma =1\) and use fminunc with the BFGS method, with the initial guess taken from the former optimization (Fig. 4). The solution is recorded in Fig. 5 as solid lines.
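The three stages listed above can be mimicked with the following Python sketch. It does not reproduce the Matlab setup: the cost is a hypothetical two-parameter quadratic (not Eq. (32)), and hand-written steepest descent and BFGS routines stand in for \(\mathtt{fminunc}\). Only the staging logic, i.e., a coarse first-order phase, a quasi-Newton refinement, and a warm-started re-run with \(\gamma =1\), follows the text.

```python
import numpy as np

def cost_and_grad(b, gamma):
    """Toy quadratic cost (hypothetical): position-like plus gamma-weighted velocity-like term."""
    pos = b[0] + b[1] - 2.0
    vel = b[0] - 3.0 * b[1]
    cost = 0.5 * pos**2 + 0.5 * gamma * vel**2
    grad = pos * np.array([1.0, 1.0]) + gamma * vel * np.array([1.0, -3.0])
    return cost, grad

def steepest_descent(fun, b0, step, iters):
    b = np.asarray(b0, dtype=float)
    for _ in range(iters):
        b = b - step * fun(b)[1]
    return b

def bfgs(fun, b0, tol=1e-10, max_iter=200):
    b = np.asarray(b0, dtype=float)
    eye = np.eye(b.size)
    h_inv = eye.copy()                       # inverse-Hessian approximation
    cost, g = fun(b)
    for _ in range(max_iter):
        if np.linalg.norm(g) < tol:
            break
        p = -h_inv @ g                       # quasi-Newton search direction
        t = 1.0
        for _ in range(60):                  # backtracking (Armijo) line search
            cost_new, g_new = fun(b + t * p)
            if cost_new <= cost + 1e-4 * t * (g @ p):
                break
            t *= 0.5
        s, y = t * p, g_new - g
        if s @ y > 1e-12 * np.linalg.norm(s) * np.linalg.norm(y):
            rho = 1.0 / (s @ y)              # BFGS update of the inverse Hessian
            h_inv = ((eye - rho * np.outer(s, y)) @ h_inv
                     @ (eye - rho * np.outer(y, s)) + rho * np.outer(s, s))
        b, cost, g = b + s, cost_new, g_new
    return b

b1 = steepest_descent(lambda b: cost_and_grad(b, 0.0), [0.0, 0.0], step=0.25, iters=30)
b2 = bfgs(lambda b: cost_and_grad(b, 0.0), b1)      # stage 2: gamma = 0, BFGS
b3 = bfgs(lambda b: cost_and_grad(b, 1.0), b2)      # stage 3: gamma = 1, warm start
```

In this toy problem the case \(\gamma =0\) has a whole line of minimizers, and the warm start from `b2` lets the final stage begin from a point that already satisfies the position-like requirement.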

The gradient of the objective function computed at the first iteration from the ODE (30a), (30b) has been compared with its DAE counterpart (cf. Eqs. (22a), (22b)). The absolute adjoint variables can be recreated from the joint-space ones using the set of equations (27a), (27b). Figure 6 shows that these quantities have almost identical values. Moreover, we append a plot with the results obtained from the complex step method [35], which also shows good agreement. Lastly, Fig. 7 displays constraint violation errors at different levels: the velocity-level constraint (28), its first time derivative (21c), and its second time derivative. The plots refer to the ODE (cf. Eqs. (30a), (30b)) and DAE (Eqs. (22a), (22b)) formulations, respectively. The latter plot reveals that the constraint equation enforced explicitly in Eq. (22b) is fulfilled up to machine accuracy. On the other hand, the lower-level constraints are characterized by larger constraint violation errors when compared against the ODE formulation. The DAE formulation exhibits the drift phenomenon due to a lack of numerical stabilization. Since the adjoint equations are solved backward in time, the most accurate results of the DAE formulation can be found at \(t_{f}=3\) s, whereas the errors accumulate as the numerical procedure approaches the initial time.
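For readers unfamiliar with the complex step method [35] used as a reference here, the following minimal Python sketch shows the idea on a scalar function. The test function and the step size are arbitrary choices made for illustration; in the setting of this paper, the role of `f` would be played by the performance measure evaluated for a perturbed input sample.

```python
import cmath
import math

def complex_step_derivative(f, x, h=1e-20):
    """Approximate f'(x) as Im(f(x + i*h)) / h; free of subtractive cancellation."""
    return f(complex(x, h)).imag / h

def f(x):
    # Arbitrary smooth test function that accepts complex arguments.
    return cmath.exp(x) * cmath.sin(x)

x0 = 0.7
exact = math.exp(x0) * (math.sin(x0) + math.cos(x0))
cs = complex_step_derivative(f, x0)

# Central finite difference for comparison (suffers from round-off for small steps).
eps = 1e-6
fd = (f(x0 + eps).real - f(x0 - eps).real) / (2.0 * eps)
```

Because no difference of nearly equal numbers is formed, the step `h` can be made extremely small, and the result is typically accurate to machine precision, which makes the method a convenient benchmark for adjoint gradients.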


5.2 Stabilization of the cart

This test case investigates the calculation of the input control signal that will stabilize the cart while the pendula are free-falling under the gravitational force. The initial configuration of the MBS can be described in the following way: \(\alpha _{0} = 0\), \(\alpha _{1}=\frac{\pi}{4}\), \(\alpha _{2}=0\), while the bodies’ velocities are equal to zero. The optimal control approach to solving this problem requires specifying a performance measure, which can be defined in the following way:

where \(x := \alpha _{0}\) denotes the \(x\)–coordinate of the cart. On the other hand, it is possible to abandon the OC approach by adding a driving constraint equation of the form \(x=0\), solving only the forward dynamics problem, and recording the Lagrange multiplier \(\uplambda _{x=0}\) associated with the appropriate reaction force. Let us note that the latter approach can be applied only to a narrow class of problems; e.g., it would not work in the case of Sect. 5.1. Nevertheless, it yields a very good approximation of the solution and can be utilized to compare and verify the outcome. Figure 8 depicts the input control forces calculated using the approach proposed in this paper and using the driving constraint force. Fig. 9 presents how the position of the cart changes in time for the different actuation signals. The response of the cart for the optimized input signal stays within about \(\pm 7\) mm of the equilibrium, which is a very good fit.
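The idea behind the driving-constraint reference can be illustrated on a strongly simplified case. The Python sketch below assumes a single frictionless point-mass pendulum on the cart (not the double pendulum with friction studied here) and evaluates in closed form the horizontal force required to keep the cart at \(x=0\); in a general multibody code this force would instead be read off as the Lagrange multiplier of the constraint. The function name and the parameter values are hypothetical.

```python
import math

def holding_force(theta, omega, m=1.0, length=1.0, g=9.81):
    """Horizontal force on the cart that keeps it at rest (x = 0).

    theta is measured from the downward vertical, so the bob is located at
    x_p = length * sin(theta); omega is the angular velocity.
    """
    # With the cart held fixed, the pendulum swings about a fixed pivot.
    alpha = -(g / length) * math.sin(theta)
    # The cart does not accelerate, so the net external horizontal force
    # equals the rate of change of the bob's horizontal momentum.
    x_p_ddot = length * (alpha * math.cos(theta) - omega**2 * math.sin(theta))
    return m * x_p_ddot

# Pendulum released from rest at 45 degrees from the downward vertical.
u_hold = holding_force(math.pi / 4.0, 0.0)
```

Evaluating `holding_force` along a simulated pendulum trajectory would give a reference actuation signal of the kind that the optimized input is compared against.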

The starting guess for the optimization is again set to zero, i.e. \(u_{0}(t)=0\). Figure 10 shows the convergence rate of the \(\mathtt{fminunc}\) Matlab function, which executes a quasi-Newton algorithm with a user-supplied gradient calculated from equations (30a), (30b). Figure 11 displays the gradients computed at the initial guess of the optimization and evaluated for the solution found by utilizing a driving constraint. One can see close agreement between the methods employed. Moreover, the value of the gradient evaluated at the solution is expected to be equal to zero. This is not precisely the case in Fig. 11; however, the magnitude of the computed gradient is significantly lower when compared with the gradient evaluated at the initial guess.

6 Discussion

The scope of this paper is relatively broad, touching on different formulations of the EOM, projection methods, and adjoint sensitivity analysis. Its main contribution lies in the novel formulation of the adjoint method based on independent adjoint variables while avoiding the computation of complex state derivatives, which is a necessity when the standard formulation of the adjoint method is combined with the joint–space equations of motion.

In this paper, absolute- (6a), (6b) and relative-coordinate (10a), (10b) formulations of Hamilton’s equations of motion are interchangeably used. By deriving the joint–space approach directly from its absolute–coordinate counterpart, the underlying dependencies between both formulations are grasped and succinctly captured by Eq. (25). These observations are further utilized to identify a set of independent adjoint variables shown in Eq. (26). The interrelations between joint–space and redundant sets of co-states are explicitly discovered in Eqs. (27a), (27b). Specifically, equation (27a) reveals a rather surprising analogy between the joint velocity \(\boldsymbol{\mathrm{\upbeta }}\) and the adjoint variable \(\hat{\boldsymbol{\mathrm{\upxi }}}\). Ultimately, it has been possible to formulate the adjoint system as a set of ODEs represented by a minimal set of variables by combining the derived relations with the projection methods and applying them to the absolute-coordinate adjoint system (22a), (22b). The resultant adjoint system (30a), (30b) can be solved backward in time with the aid of a standard ODE integration routine to efficiently evaluate the gradient of the performance measure.

Formulation (30a), (30b) possesses many advantages over the system (22a), (22b), which are directly inherited from the joint-space formulation (10a), (10b). The most obvious is that ODEs are generally easier to solve than DAEs and give more stable solutions during the numerical integration (cf. Fig. 7). This virtue is valid in the case of open-loop kinematic chains. The analysis of closed-loop systems requires additional efforts to include loop-closure constraints. Nevertheless, the number of algebraic constraints in joint-space framework would be significantly lower compared to the redundant setting. The scope of this paper is limited to tree-like topologies. Consequently, the number of variables that must be integrated numerically is proportional to the number of degrees of freedom, rather than the total number of redundant coordinates, which, in many practical applications, may provide a significant improvement over the absolute–coordinate formulation.

Furthermore, the coefficients and the right-hand sides (\(\boldsymbol{\mathrm{r}}_{A}\), \(\boldsymbol{\mathrm{r}}_{B}\), \(\boldsymbol{\mathrm{r}}_{J}\)) of the adjoint system involve state-dependent Jacobians of the EOM with respect to state variables. These matrices have a regular and relatively simple structure in the case of Eq. (22b), where a vast majority of expressions are readily available for implementation in closed form [28, 29]. Additional details can also be found in ref. [30] (specifically in equations (17), (27), and (31)). Equation (30b) benefits from this property since its RHS is a projection of the absolute-coordinate adjoint system (22a), (22b) onto the motion subspace \(\boldsymbol{\mathrm{H}}\). Figure 12 presents various pathways one can take to generate the adjoint system. For instance, path \(C\) is advocated in ref. [30], whereas path \(C\)–\(D\) is proposed in this paper. Conversely, one can derive the adjoint system by applying variational principles to the original joint-space EOM (10a), (10b) (path \(A\)–\(B\) in Fig. 12). It can be demonstrated that such an approach produces the same set of equations as shown in Eqs. (30a), (30b), albeit with a different right-hand side, i.e. \(\boldsymbol{\mathrm{r}}_{J} = \boldsymbol{\mathrm{r}}_{J}( \boldsymbol{\mathrm{\upalpha }}, \dot{\boldsymbol{\mathrm{\upalpha }}}, \ddot{\boldsymbol{\mathrm{\upalpha }}})\) [36]. Although the formulation does not involve additional Lagrange multipliers (e.g., \(\boldsymbol{\mathrm{\upsigma }}\), \(\boldsymbol{\mathrm{\uplambda }}\)), the partial derivatives required to evaluate the RHS become significantly more complex and cumbersome to compute when compared with the RHS of Eq. (30b). For example, the derivative \((\hat{\boldsymbol{\mathrm{M}}} \boldsymbol{\mathrm{\upbeta }})_{ \boldsymbol{\mathrm{\upalpha }}}\) (where \(\widehat{\boldsymbol{\mathrm{M}}} = \boldsymbol{\mathrm{H}}^{T} \boldsymbol{\mathrm{M}} \boldsymbol{\mathrm{H}}\)) is much more involved than its absolute-coordinate counterpart \((\boldsymbol{\mathrm{M}} \boldsymbol{\mathrm{v}})_{\boldsymbol{\mathrm{q}}}\), especially when one aims at implementing a systematic procedure.

The proposed approach is amenable to extensions that consider closed kinematic chains. The idea is based on the approach presented in ref. [33], where a subset of joint variables \(\boldsymbol{\mathrm{\upbeta }}^{*} \in \mathcal {R}^{\mathit{dof}}\) is picked out of a dependent set \(\boldsymbol{\mathrm{\upbeta }}\). The relation between \(\boldsymbol{\mathrm{\upbeta }}\) and \(\boldsymbol{\mathrm{\upbeta }}^{*}\) is similar to that expressed in Eq. (7), i.e. \(\boldsymbol{\mathrm{\upbeta }}= \boldsymbol{\mathrm{E}} \boldsymbol{\mathrm{\upbeta }}^{*}\), which is an explicit form of the velocity equation \(\boldsymbol{\mathrm{\Upgamma }}\boldsymbol{\mathrm{\upbeta }}= \boldsymbol{\mathrm{0}}\). The term \(\boldsymbol{\mathrm{E}} \in \mathcal {R}^{n_{\beta} \times \mathit{dof}}\) is the equivalent of the subspace \(\boldsymbol{\mathrm{H}}\) and spans the null space of \(\boldsymbol{\mathrm{\Upgamma }}\). Therefore, by projecting equations (11a), (11b) onto the subspace \(\boldsymbol{\mathrm{E}}\), we arrive at a system equivalent to the one described by Eqs. (10a), (10b). Subsequently, the relation (25), as well as all the following derivations, remains valid for \(\boldsymbol{\mathrm{H}}\leftarrow \boldsymbol{\mathrm{H}}^{*} = \boldsymbol{\mathrm{H}}\boldsymbol{\mathrm{E}}\). Specifically, the minimal–coordinate adjoint variables \(\hat{\boldsymbol{\mathrm{\upeta }}}^{*}, \hat{\boldsymbol{\mathrm{\upxi }}}^{*} \in \mathcal {R}^{\mathit{dof}}\) would map onto the absolute–coordinate counterparts \(\boldsymbol{\mathrm{\upeta }}\), \(\boldsymbol{\mathrm{\upxi }}\) via (27a), (27b) if we substitute \(\boldsymbol{\mathrm{H}}^{*}\) and \(\dot{\boldsymbol{\mathrm{H}}}^{*}\) for \(\boldsymbol{\mathrm{H}}\), \(\dot{\boldsymbol{\mathrm{H}}}\). The resultant adjoint system would look similar to that shown in Eqs. (30a), (30b). These extensions are the subject of the authors’ ongoing work.
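One common way of building a matrix \(\boldsymbol{\mathrm{E}}\) with \(\boldsymbol{\mathrm{\Upgamma }} \boldsymbol{\mathrm{E}} = \boldsymbol{\mathrm{0}}\) is coordinate partitioning, sketched below in Python. Whether ref. [33] constructs \(\boldsymbol{\mathrm{E}}\) in exactly this manner is an assumption; the constraint matrix `Gamma`, the index sets, and the function name are hypothetical.

```python
import numpy as np

def partition_basis(Gamma, dep_idx, ind_idx):
    """Build E such that beta = E @ beta_star, where beta_star = beta[ind_idx].

    Gamma[:, dep_idx] must be square and non-singular.
    """
    n_beta = Gamma.shape[1]
    E = np.zeros((n_beta, len(ind_idx)))
    E[ind_idx, :] = np.eye(len(ind_idx))          # independent velocities map to themselves
    E[dep_idx, :] = -np.linalg.solve(Gamma[:, dep_idx], Gamma[:, ind_idx])
    return E

# Hypothetical loop-closure velocity constraint with n_beta = 3 and dof = 2.
Gamma = np.array([[1.0, 1.0, -1.0]])
E = partition_basis(Gamma, dep_idx=[2], ind_idx=[0, 1])

beta_star = np.array([0.4, -0.1])     # independent joint velocities
beta = E @ beta_star                  # full set satisfying Gamma @ beta = 0
```

Since \(\boldsymbol{\mathrm{\Upgamma }}\) is configuration dependent in general, such a matrix would have to be re-evaluated along the trajectory, and the choice of dependent coordinates must keep `Gamma[:, dep_idx]` well conditioned.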

As a final comment, the discovery of the relations demonstrated in (27a), (27b), combined with the analogies between state and adjoint variables gathered in Table 1, may pave the way to set up a fully recursive formulation for solving the adjoint system. Due to the fact that the leading matrix in the forward (Eq. (6a)) and adjoint (Eq. (22b)) problem is the same, it is expected that the Hamiltonian-based divide-and-conquer parallel algorithm [9] can be applied here to make the design sensitivity analysis performant, especially for multi-degree-of-freedom systems.

7 Summary and conclusions

A novel adjoint-based method that exploits a set of independent co-state variables has been developed, verified, and compared to the existing state-of-the-art formulations (redundant coordinate adjoint method, complex-step method). The joint-coordinate adjoint method is derived as an extension of the efforts presented in [30]. Parallels that exist between the formulation of Hamilton’s equations of motion in a mixed redundant–joint set of coordinates and the necessary conditions arising from the minimization of the cost functional are introduced in the text. The proposed unified treatment allows one to reformulate the adjoint DAEs into a set of first-order ordinary differential equations by reusing the joint-space inertia matrix calculated in the forward dynamics problem. The approach is relatively simple to implement since the core partial derivatives are calculated with respect to the redundant set of coordinates and projected back onto the appropriate joint’s motion and constraint–force subspaces. The validity and properties of the approach are demonstrated based on the optimal control of a double pendulum on a cart. Through numerical studies, the performance of the proposed approach has been quantified, especially in terms of constraint violation errors resulting from backward integration of the adjoint system. The applications show that the joint–coordinate adjoint method is stable and that the accuracy of computations is higher than that of its redundant-coordinate counterpart. Although the method has been tested only in two scenarios, the proposed approach is applicable to optimal control of multibody systems larger than those presented in the text and over longer time horizons.

References

Kudruss, M., Manns, P., Kirches, C.: Efficient derivative evaluation for rigid-body dynamics based on recursive algorithms subject to kinematic and loop constraints. IEEE Control Syst. Lett. 3(3), 619–624 (2019). https://doi.org/10.1109/lcsys.2019.2914338

Callejo, A., de Jalón, J.G., Luque, P., Mántaras, D.A.: Sensitivity-based, multi-objective design of vehicle suspension systems. J. Comput. Nonlinear Dyn. 10(3), 031008 (2015). https://doi.org/10.1115/1.4028858

Bhalerao, K.D., Poursina, M., Anderson, K.S.: An efficient direct differentiation approach for sensitivity analysis of flexible multibody systems. Multibody Syst. Dyn. 23(2), 121–140 (2010). https://doi.org/10.1007/s11044-009-9176-0

Mukherjee, R.M., Bhalerao, K.D., Anderson, K.S.: A divide-and-conquer direct differentiation approach for multibody system sensitivity analysis. Struct. Multidiscip. Optim. 35(5), 413–429 (2008). https://doi.org/10.1007/s00158-007-0142-2

Pikuliński, M., Malczyk, P.: Adjoint method for optimal control of multibody systems in the Hamiltonian setting. Mech. Mach. Theory 166, 104473 (2021). https://doi.org/10.1016/j.mechmachtheory.2021.104473

Dopico, D., Sandu, A., Sandu, C.: Adjoint sensitivity index-3 augmented Lagrangian formulation with projections. Mech. Based Des. Struct. Mach., 1–31 (2021). https://doi.org/10.1080/15397734.2021.1890614

Nachbagauer, K., Oberpeilsteiner, S., Sherif, K., Steiner, W.: The use of the adjoint method for solving typical optimization problems in multibody dynamics. J. Comput. Nonlinear Dyn. 10(6) (2015). https://doi.org/10.1115/1.4028417

Serban, R., Recuero, A.: Sensitivity analysis for hybrid systems and systems with memory. J. Comput. Nonlinear Dyn. 14(9) (2019). https://doi.org/10.1115/1.4044028

Chadaj, K., Malczyk, P., Frączek, J.: A parallel Hamiltonian formulation for forward dynamics of closed-loop multibody systems. Multibody Syst. Dyn. 39(1), 51–77 (2017). https://doi.org/10.1007/s11044-016-9531-x

Chadaj, K., Malczyk, P., Frączek, J.: A parallel recursive Hamiltonian algorithm for forward dynamics of serial kinematic chains. IEEE Trans. Robot. 33(3), 647–660 (2017). https://doi.org/10.1109/TRO.2017.2654507

Chadaj, K., Malczyk, P., Frączek, J.: Efficient parallel formulation for dynamics simulation of large articulated robotic systems. In: 20th International Conference on Methods and Models in Automation and Robotics (MMAR), pp. 441–446 (2015). https://doi.org/10.1109/MMAR.2015.7283916

Lankarani, H., Nikravesh, P.: Application of the canonical equations of motion in problems of constrained multibody systems with intermittent motion. Am. Soc. Mech. Eng., Des. Eng. Div. (Publ.) DE 14, 417–423 (1988)

Borri, M., Trainelli, L., Croce, A.: The embedded projection method: a general index reduction procedure for constrained system dynamics. Comput. Methods Appl. Mech. Eng. 195(50-51), 6974–6992 (2006). https://doi.org/10.1016/j.cma.2005.03.010

Oberpeilsteiner, S., Lauss, T., Nachbagauer, K., Steiner, W.: Optimal input design for multibody systems by using an extended adjoint approach. Multibody Syst. Dyn. 40(1), 43–54 (2016). https://doi.org/10.1007/s11044-016-9541-8

Held, A., Knüfer, S., Seifried, R.: Structural sensitivity analysis of flexible multibody systems modeled with the floating frame of reference approach using the adjoint variable method. Multibody Syst. Dyn. 40(3), 287–302 (2016). https://doi.org/10.1007/s11044-016-9540-9

Eichmeir, P., Lauß, T., Oberpeilsteiner, S., Nachbagauer, K., Steiner, W.: The adjoint method for time-optimal control problems. J. Comput. Nonlinear Dyn. 16(2) (2020). https://doi.org/10.1115/1.4048808

Pappalardo, C., Guida, D.: Use of the adjoint method for controlling the mechanical vibrations of nonlinear systems. Machines 6(2), 19 (2018). https://doi.org/10.3390/machines6020019

Lauß, T., Oberpeilsteiner, S., Steiner, W., Nachbagauer, K.: The discrete adjoint gradient computation for optimization problems in multibody dynamics. J. Comput. Nonlinear Dyn. 12(3), 031016 (2017). https://doi.org/10.1115/1.4035197

Maciąg, P., Malczyk, P., Frączek, J.: The discrete Hamiltonian-based adjoint method for some optimization problems in multibody dynamics. Multibody Dyn. 2019, 359–366 (2020). https://doi.org/10.1007/978-3-030-23132-3_43

Corner, S., Sandu, A., Sandu, C.: Adjoint sensitivity analysis of hybrid multibody dynamical systems. Multibody Syst. Dyn. 49, 395–420 (2020). https://doi.org/10.1007/s11044-020-09726-0

Zhang, H., Abhyankar, S., Constantinescu, E., Anitescu, M.: Discrete adjoint sensitivity analysis of hybrid dynamical systems with switching. IEEE Trans. Circuits Syst. I, Regul. Pap. 64(5), 1247–1259 (2017). https://doi.org/10.1109/tcsi.2017.2651683

Singh, S., Russell, R.P., Wensing, P.M.: Efficient analytical derivatives of rigid-body dynamics using spatial vector algebra. IEEE Robot. Autom. Lett. 7(2), 1776–1783 (2022). https://doi.org/10.1109/lra.2022.3141194

Schneider, S., Betsch, P.: On mechanical DAE systems within the framework of optimal control. Proc. Appl. Math. Mech. 19(1) (2019). https://doi.org/10.1002/pamm.201900431

Schneider, S., Betsch, P.: On the dynamics and optimal control of constrained mechanical systems. In: Proceedings of the 10th ECCOMAS Thematic Conference on Multibody Dynamics. Budapest University of Technology and Economics (2021). https://doi.org/10.3311/eccomasmbd2021-130

Blajer, W.: Elimination of constraint violation and accuracy aspects in numerical simulation of multibody systems. Multibody Syst. Dyn. 7(3) (2002). https://doi.org/10.1023/A:1015285428885

Nikravesh, P.E.: Systematic reduction of multibody equations of motion to a minimal set. Int. J. Non-Linear Mech. 25(2), 143–151 (1990). https://doi.org/10.1016/0020-7462(90)90046-C

Bauchau, O.A., Laulusa, A.: Review of contemporary approaches for constraint enforcement in multibody systems. J. Comput. Nonlinear Dyn. 3(1), 011005 (2007). https://doi.org/10.1115/1.2803258

Haug, E.J.: Computer-Aided Kinematics and Dynamics of Mechanical Systems, Vol. II: Modern Methods. (2021). Research Gate

Serban, R., Haug, E.J.: Kinematic and kinetic derivatives in multibody system analysis. J. Struct. Mech. 26(2), 145–173 (1998)

Maciąg, P., Malczyk, P., Frączek, J.: Hamiltonian direct differentiation and adjoint approaches for multibody system sensitivity analysis. Int. J. Numer. Methods Eng. (2020). https://doi.org/10.1002/nme.6512

Featherstone, R.: Rigid Body Dynamics Algorithms. Springer, New York (2008). https://doi.org/10.1007/978-1-4899-7560-7

Jain, A.: Robot and Multibody Dynamics: Analysis and Algorithms. Springer, New York (2010)

Nikravesh, P.E.: Systematic reduction of multibody equations of motion to a minimal set. Int. J. Non-Linear Mech. 25(2-3), 143–151 (1990)

Serban, R., Hindmarsh, A.: CVODES, the sensitivity-enabled ODE solver in sundials. In: 5th International Conference on Multibody Systems, Nonlinear Dynamics, and Control, vol. 6 (2005). https://doi.org/10.1115/DETC2005-85597

Martins, J.R.R.A., Sturdza, P., Alonso, J.J.: The complex-step derivative approximation. ACM Trans. Math. Softw. 29(3), 245–262 (2003). https://doi.org/10.1145/838250.838251

Roubíček, T., Valášek, M.: Optimal control of causal differential–algebraic systems. J. Math. Anal. Appl. 269(2), 616–641 (2002)

Acknowledgements

This work has been supported by the Excellence Initiative, Research University in the field of Artificial Intelligence and Robotics under grant no. 1820/25/Z01/POB2/2021.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Appendices

Appendix A: Boundary conditions for the adjoint variables

This appendix shows the derivation of the terminal conditions that arise in the optimal control problem presented in this paper. In the first part, the formulation is general. Subsequently, it is tailored to the Hamiltonian approach. Section 3.2 contains a general formulation of the adjoint system. The necessary conditions for the adjoint variables include the DAEs (19a)–(19b) as well as the boundary conditions for the adjoint multipliers. Equations (19a)–(19d) allow one to prescribe only the terminal configuration of the system, which is dictated by the fact that \(S_{\dot{\boldsymbol{\mathrm{y}}}}\) must be equal to zero to be compatible with Eq. (19d). This implies that there is no dependency between the terminal cost and the term \(\dot{\boldsymbol{\mathrm{y}}}\), which is clearly against the assumption specified earlier in Eq. (14). This issue can be resolved by defining an additional adjoint variable \(\boldsymbol{\mathrm{x}} \in \mathcal {R}^{2n_{v}+m}\) at the terminal time \(t_{f}\) and appending the quantity \({\boldsymbol{\mathrm{x}}^{T} \boldsymbol{\mathrm{F}}} \rvert _{t_{f}} = \boldsymbol{\mathrm{0}}\) to equation (14). Its variation reads as:

which transforms equations (19c)–(19d) into the following form:

The term \({\boldsymbol{\mathrm{F}}_{\boldsymbol{\mathrm{b}}}^{T} \boldsymbol{\mathrm{x}}} \rvert _{t_{f}}\) must be added to the resultant variation of the performance measure (18); hence, the formulas for the gradient should be updated accordingly.

In the case of Hamilton’s formulation, the LHS of Eq. (A.1) expands to the following expression:

where \(\boldsymbol{\mathrm{\upnu }}\in \mathcal {R}^{n_{v}}\) and \(\boldsymbol{\mathrm{\upvarepsilon }}\in \mathcal {R}^{m}\) are subsets of the more general variable \(\boldsymbol{\mathrm{x}}\). By computing the variation (A.3) and appending it to Eq. (20), we obtain the following boundary conditions for the adjoint variables:

The term \(\boldsymbol{\mathrm{r}}_{A}^{\text{boundary}}\) involves known coefficients that arise from computing expression (A.3). Equations (A.4a), (A.4c) can be solved sequentially starting at Eq. (A.4a). On the other hand, if there is no explicit dependency of the terminal cost on the velocity (i.e. one can write \(S(\boldsymbol{\mathrm{q}})\) instead of \(S(\boldsymbol{\mathrm{q}}, \boldsymbol{\mathrm{v}})\)), only Eq. (A.4c) must be solved for \(\boldsymbol{\mathrm{\upeta }}(t_{f})\), \(\boldsymbol{\mathrm{\upmu }}(t_{f})\), while \(\boldsymbol{\mathrm{\upxi }}(t_{f})=\boldsymbol{\mathrm{0}}\).

Appendix B: The derivation of the adjoint equations

In this section, the adjoint system (21a)–(21c) is derived following the procedure presented in Sect. 3.2. The starting point is the augmented performance measure described by equation (20). Let us also assume that the design parameters \(\boldsymbol{\mathrm{b}}\) appear either in the integrand \(h\) or in the forcing terms of the EOM, e.g., as input force parameters or appropriate coefficients. Hence, the variable \(\boldsymbol{\mathrm{Q}}\) (cf. Eq. (6b)) can be expressed as \(\boldsymbol{\mathrm{Q}}(\boldsymbol{\mathrm{q}}, \boldsymbol{\mathrm{v}}, \boldsymbol{\mathrm{b}})\). Consequently, we can compute the first variation of the augmented performance measure, which is the analog of Eq. (15), as:

where the subscript denotes a partial derivative operator. In turn, the operator \(\updelta \boldsymbol{\mathrm{s}}\) refers to the virtual displacement and virtual rotation of the premultiplied expression. Variational quantities introduced in Eq. (B.5) can be written explicitly as:

The virtual rotation \(\updelta \boldsymbol{\mathrm{\uppi }}'\) is non-integrable; therefore, the Jacobian matrices calculated with respect to \(\boldsymbol{\mathrm{\uppi }}'\) (or \(\boldsymbol{\mathrm{s}}\)) should be interpreted only as coefficients of a certain variational relation, rather than as partial derivatives. Similarly, the angular velocity \(\boldsymbol{\mathrm{\upomega }}_{i}'\) is a non-integrable vector quantity. In the following step, we aim to integrate equation (B.5) by parts, which requires substituting the variation of the angular velocity with an integrable quantity. To this end, we argue that equation (B.5) can be simplified by applying the following relation: \(\updelta \boldsymbol{\mathrm{v}}= \updelta \dot{\boldsymbol{\mathrm{s}}}\). A general formula for integration by parts has been shown in Eq. (16). As an example, the simplest component in the performance measure (B.5) can be expanded as:
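As a generic illustration of this step (a sketch under stated assumptions, not the paper's own expression), let \(\boldsymbol{\mathrm{w}}(t)\) be a hypothetical placeholder for any smooth multiplier that premultiplies \(\updelta \boldsymbol{\mathrm{v}}\), and let \(t_{0}\) denote an assumed initial time. Using \(\updelta \boldsymbol{\mathrm{v}}= \updelta \dot{\boldsymbol{\mathrm{s}}}\) and assuming that the variation of the initial state vanishes, \(\updelta \boldsymbol{\mathrm{s}}(t_{0}) = \boldsymbol{\mathrm{0}}\), integration by parts gives:

```latex
\begin{aligned}
\int_{t_{0}}^{t_{f}} \boldsymbol{\mathrm{w}}^{T}\, \updelta \boldsymbol{\mathrm{v}}\, \mathrm{d}t
  &= \int_{t_{0}}^{t_{f}} \boldsymbol{\mathrm{w}}^{T}\, \updelta \dot{\boldsymbol{\mathrm{s}}}\, \mathrm{d}t \\
  &= \left. \boldsymbol{\mathrm{w}}^{T}\, \updelta \boldsymbol{\mathrm{s}} \right\rvert_{t_{f}}
   - \int_{t_{0}}^{t_{f}} \dot{\boldsymbol{\mathrm{w}}}^{T}\, \updelta \boldsymbol{\mathrm{s}}\, \mathrm{d}t .
\end{aligned}
```

The boundary term at \(t_{f}\) is the kind of contribution that enters the terminal conditions on the adjoint variables, whereas the remaining integral shifts the time derivative from the state variation onto the multiplier.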

As assumed in Sect. 3.2, the initial conditions for a multibody system are prescribed, and the variation of the initial states vanishes. Applying integration by parts to all components of Eq. (B.5) yields the explicit form of the relation (17):

where the compact and systematic structure is achieved by defining some additional quantities:

The tilde symbol in Eqs. (B.9) indicates the explicit partial derivative of the equations of motion (6a), (6b) with respect to the variable given by the subscript. Equations (21a)–(21c) constitute a set of necessary conditions for expressing the variation of the cost functional solely in terms of the variation of the design variables. Upon solving these equations and determining the unique adjoint variables, equation (B.8) simplifies significantly into the analog of Eq. (18):

where \(\widetilde{\,\dot{\boldsymbol{\mathrm{p}}}^{*}_{\boldsymbol{\mathrm{b}}}} = \boldsymbol{\mathrm{Q}}_{\boldsymbol{\mathrm{b}}}\) based on the earlier assumption. Equation (B.10) can be used to directly compute the gradient of the performance measure.
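The overall pattern (forward solve, backward adjoint solve, gradient as an integral of the adjoint variable times the explicit parameter derivative) can be sketched on a toy problem. The example below is not the multibody formulation of this paper: it assumes a scalar model \(\dot{y} = -b\,y\) with cost \(J = \int_{0}^{T} y^{2}\,\mathrm{d}t\), and all names (`forward`, `cost`, `adjoint_gradient`) are hypothetical. For this assumed model the adjoint equation is \(\dot{\lambda} = b\lambda - 2y\) with \(\lambda(T) = 0\), and the gradient is \(\mathrm{d}J/\mathrm{d}b = -\int_{0}^{T} \lambda\, y\,\mathrm{d}t\).

```python
import math


def forward(b, y0, T, n):
    """Integrate y' = -b*y with classical RK4; return y at the n+1 grid nodes."""
    h = T / n
    ys = [y0]
    for _ in range(n):
        y = ys[-1]
        k1 = -b * y
        k2 = -b * (y + 0.5 * h * k1)
        k3 = -b * (y + 0.5 * h * k2)
        k4 = -b * (y + h * k3)
        ys.append(y + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6)
    return ys


def cost(b, y0=1.0, T=2.0, n=400):
    """J = int_0^T y^2 dt, evaluated with the composite Simpson rule (n even)."""
    if n % 2:
        raise ValueError("n must be even")
    ys = forward(b, y0, T, n)
    h = T / n
    s = ys[0] ** 2 + ys[-1] ** 2
    s += 4 * sum(y * y for y in ys[1:-1:2]) + 2 * sum(y * y for y in ys[2:-1:2])
    return s * h / 3


def adjoint_gradient(b, y0=1.0, T=2.0, n=400):
    """dJ/db for the toy problem via a backward adjoint sweep.

    Backward system (assumed toy model, not the paper's DAE):
        lam' = b*lam - 2*y,  lam(T) = 0
        G'   = lam*y,        G(T)   = 0,   so that dJ/db = G(0).
    RK4 with step -2h is used so that the stored forward nodes serve as
    the start, midpoint and end of each backward step.
    """
    if n % 2:
        raise ValueError("n must be even")
    ys = forward(b, y0, T, n)
    H = -2.0 * T / n

    def rhs(lam, y):
        return b * lam - 2.0 * y, lam * y

    lam, G = 0.0, 0.0
    for i in range(n, 0, -2):
        y_a, y_m, y_b = ys[i], ys[i - 1], ys[i - 2]
        k1 = rhs(lam, y_a)
        k2 = rhs(lam + 0.5 * H * k1[0], y_m)
        k3 = rhs(lam + 0.5 * H * k2[0], y_m)
        k4 = rhs(lam + H * k3[0], y_b)
        G += H * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
        lam += H * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
    return G


if __name__ == "__main__":
    b, eps = 1.5, 1e-5
    fd = (cost(b + eps) - cost(b - eps)) / (2 * eps)  # central finite difference
    print("adjoint:", adjoint_gradient(b), "finite difference:", fd)
```

A single backward sweep yields the gradient regardless of how many parameters enter the model, whereas a finite-difference check needs additional forward solves per parameter; this is the usual motivation for adjoint-based gradients in optimal control.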

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Maciąg, P., Malczyk, P. & Frączek, J. Joint–coordinate adjoint method for optimal control of multibody systems. Multibody Syst Dyn 56, 401–425 (2022). https://doi.org/10.1007/s11044-022-09851-y

DOI: https://doi.org/10.1007/s11044-022-09851-y
